Abstract

Image dehazing is a crucial task in computer vision, aimed at restoring the clarity of images impacted by atmospheric conditions like fog, haze, or smog, which degrade image quality by reducing contrast, color fidelity, and detail. Recent advancements in deep learning, particularly convolutional neural networks (CNNs), have shown significant improvements by directly learning features from hazy images to produce clear outputs. However, color distortion remains an issue, as many methods focus on contrast and clarity without adequately addressing color restoration. To overcome this, we propose a Color-Correction Network (CCD-Net) based on dual-branch fusion of different color spaces, which simultaneously handles image dehazing and color correction. The dehazing branch uses an encoder–decoder structure to restore haze-affected images. Unlike conventional methods that primarily focus on haze removal, our approach explicitly incorporates a dedicated color-correction branch in the Lab color space, ensuring both clarity enhancement and accurate color restoration. Additionally, we integrate attention mechanisms to enhance feature extraction and introduce a novel fusion loss function that combines losses in both the RGB and Lab spaces, achieving a balance between structural preservation and color fidelity. The experimental results demonstrate that CCD-Net outperforms existing methods in both dehazing performance and color accuracy, with CIEDE reduced by 40.81% on RESIDE-indoor and 45.57% on RESIDE-6K compared to the second-best-performing model, showcasing its superior color-restoration capability.

1. Introduction

Image dehazing plays a pivotal role in computer vision tasks by restoring the clarity of images degraded by atmospheric phenomena such as fog, haze, or smog []. The presence of these environmental factors significantly hampers the visibility and perceptibility of important image details, often leading to severe reductions in contrast, color fidelity, and overall image quality. This degradation poses substantial challenges for visual recognition systems, particularly in critical applications such as autonomous driving [], target tracking [,,], and outdoor object detection [,,], where accurate and detailed scene understanding is paramount.

The atmospheric scattering model [,] provides insights into the formation of hazy images by explaining how haze affects an image through two main components: the scene radiance that is attenuated by the medium and the atmospheric light that is scattered into the camera. The observed hazy image is a combination of these two factors. The transmission map represents the extent to which the scene radiance is preserved after passing through the haze, with denser haze leading to lower transmission values. Meanwhile, the atmospheric light refers to the ambient light scattered by particles in the air, which contributes to the overall brightness and color shift in the hazy image.
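For reference, the atmospheric scattering model described above is commonly written as follows. The symbols $I$, $J$, $t$, $A$, $\beta$, and $d$ follow the usual convention in the dehazing literature and are an assumption here, since this section does not fix its own notation.

$$I(x) = J(x)\,t(x) + A\,\bigl(1 - t(x)\bigr), \qquad t(x) = e^{-\beta d(x)}$$

Here $I(x)$ is the observed hazy image at pixel $x$, $J(x)$ is the scene radiance, $t(x)$ is the transmission, $A$ is the global atmospheric light, $\beta$ is the scattering coefficient, and $d(x)$ is the scene depth. Given estimates of $t$ and $A$, the haze-free image follows by inverting the model: $J(x) = \bigl(I(x) - A\bigr)/t(x) + A$.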

Most of the previous prior-based studies realized image dehazing by predicting transmission maps and atmospheric light. For instance, He et al. [] developed the Dark Channel Prior (DCP) as a technique for estimating the transmission map. Fattal et al. [] proposed a method for estimating the transmission map by leveraging prior information on object surface-shading and transmission characteristics. These methods based on prior knowledge can produce dehazed images to some extent. However, if the assumed priors do not align well with a particular scene or the parameter estimation is imprecise, the resulting images may exhibit artifacts, color distortion, or other undesired effects.

In contrast, deep learning algorithms leverage the feature-extraction and nonlinear-mapping capabilities of convolutional neural networks (CNNs). They either improve the accuracy of parameter estimation or produce haze-free images directly, without relying on an explicit physical model, and they have driven rapid progress in image dehazing. In recent years, deep learning has led to significant breakthroughs in computer vision [,,], and many dehazing approaches built on it have shown remarkable success. Some approaches employ CNNs to predict the transmission map and atmospheric light [,,], while others directly learn various features to generate haze-free images [,,,]. Because these learning-based methods can exploit large training datasets, they effectively extract useful information and produce high-quality, clear outputs even in hazy conditions.

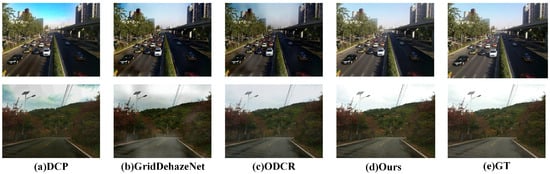

Many dehazing methods focus on enhancing image contrast and clarity while overlooking the precise restoration of color information. As a result, dehazed images may exhibit color distortion and bias, leading to suboptimal visual quality [,,]. Figure 1 shows examples of color difference in image dehazing. Color plays a pivotal role in image processing; accurate color representation not only influences the visual experience but also significantly impacts subsequent image analysis and computer vision tasks. Ancuti et al. [] proposed a local adaptive-fusion strategy that constructs a light attenuation map through the red channel to quantify local attenuation, then fuses the original image with a globally adjusted color map to dynamically correct the intensity. Zhang et al. [] first preprocessed the images using a white balance algorithm and a DCP dehazing technique, and applied a multiscale fusion strategy to correct color deviations. However, the computational efficiency of this method for high-resolution images needs to be improved.

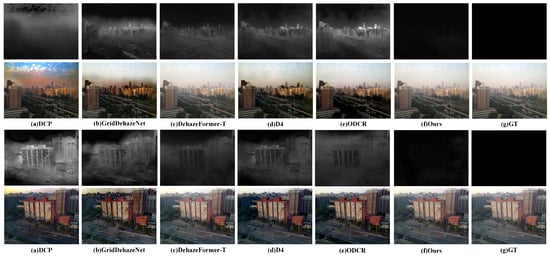

Figure 1.

Examples of color difference in image dehazing using different models.

Espinosa et al. [] developed an end-to-end deep learning architecture based on U-Net, incorporating discrete wavelet-transform jump connections to preserve high-frequency features, mitigating color distortion caused by red channel absorption. Kong et al. [] introduced a two-stage progressive network, which adjusts the pixel values of the three color channels independently and employs a channel attention mechanism for color restoration.

Deep learning-based methods have significantly advanced the field of image dehazing. These methods offer robust performance in tasks such as color restoration and image enhancement. Huang et al. [] proposed a multi-scale cascaded Transformer network with adaptive group attention to dynamically select complementary channels, addressing image color degradation caused by light absorption and scattering. Similarly, Khan et al. [] introduced a multi-domain query cascade Transformer network, which integrates local transmission and global illumination features using a multi-domain query cascade attention mechanism. However, the large number of parameters in these models often requires substantial storage space and computational resources, which limits their practicality. Therefore, it is crucial to design lightweight models for dehazing applications to address these efficiency challenges.

It is difficult to strike a balance between preserving image color and ensuring high-quality dehazing effect in image-dehazing tasks. In order to improve the comprehensive performance of the dehazing algorithm, we propose a dual-branch network that focuses on image dehazing along with color distortion correction. These two branches are the image-dehazing branch and the color-correction branch.

The dehazing branch strengthens feature processing through multi-scale feature extraction and fusion: it uses feature representations at different levels to progressively restore image details, and it enriches the multi-scale information of the image through up- and downsampling operations. The branch uses attention mechanisms (channel attention, CA, and pixel attention, PA) to dynamically reweight the important parts of the feature map. These attention mechanisms help the network focus on the regions that matter most to the task, thus enhancing the effectiveness of the model.

The color-correction branch aims to enhance the color information and details of an image. We introduce the Lab color space, an attention mechanism, and a multilayer convolutional neural network structure into the model design. To better process the color information of the image, we convert the image from the RGB color space to the Lab color space. A multilayer convolutional network is used to extract image features and generate an attention map with the same size as the input image. This attention map is concatenated with the original AB channels and fed to the forward processing module to further enhance the result. The features acquired from the two branches are then merged, resulting in high-quality, visually satisfying images.

In summary, our main contributions are as follows:

- An effective end-to-end two-branch image-dehazing network CCD-Net is proposed. It focuses on image clarity and color features to obtain high-quality images.

- A color-correction branch is proposed to utilize features from different color spaces to avoid the difficulty of feature extraction in ordinary RGB color space. In addition, we introduce the Convolutional Block Attention Module (CBAM) into the Color-Correction Network to improve the feature-extraction capability of the network and effectively recover the missing color and detail information.

- We propose a loss function based on Lab space and form a fused loss function with the loss function in RGB space, which more comprehensively considers image color recovery.

- Extensive experiments show that the proposed CCD-Net achieves a better dehazing effect, enhances the color quality of images, and consumes fewer computational resources than competing methods.

The remaining sections of this paper are structured as follows: Section 2 reviews related work, covering both prior-based and deep learning-based dehazing methods. Section 3 introduces the proposed CCD-Net, detailing its overall framework, dehazing branch, and color-correction branch, along with the design of the loss function. Section 4 describes the experimental setup, and presents a comparison with state-of-the-art dehazing methods and an ablation study to evaluate the effectiveness of different components in our approach. Finally, Section 5 provides the conclusion of the paper.

2. Related Work

Advances in image dehazing can be divided into two main phases: early techniques based on prior assumptions about the image, and deep learning-based dehazing methods that use neural network models for end-to-end mapping.

2.1. Prior-Based Methods

Traditional image-dehazing methods rely on prior assumptions to estimate the transmission map and atmospheric light. He et al.’s [] Dark Channel Prior (DCP) assumes that the minimum value of one color channel in natural images is near zero, while in foggy images, this value increases, reflecting haze intensity. The DCP method estimates the atmospheric light and transmission map, then restores the image through a recovery formula. Zhu et al. [] introduced a fast dehazing method based on the Color Attenuation Prior (CAP), which assumes the attenuation of the blue channel correlates with haze intensity. By calculating the color attenuation and transmission maps, this method quickly restores image clarity. Fattal et al. [] assumed haze exhibits spatial consistency across the image, with uniform local impact despite varying intensity. They also converted the image to HSV color space, allowing for more efficient haze removal by separately processing luminance. Berman et al. [] proposed a non-local prior, suggesting that clean images consist of color clusters in a non-local space. These clusters help recover the transmission map and restore image details by removing haze. Meng et al. [] introduced boundary-constraint priors combined with context regularization, forming an optimization model for accurate transmission map estimation, enhancing image clarity in haze-affected areas. Ancuti et al. [] proposed an enhancement method based on color-channel compensation (3C), assuming different color channels are distorted to varying degrees by haze. By recovering lost color information and adjusting channel contrast, this method improves color reproduction and image contrast. However, all these methods are highly dependent on specific priors, which may not be universally applicable. When the assumed prior fails to represent hazy images accurately, it can result in poor transmission-map estimation and color distortion.
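As an illustration of how a prior turns into a transmission estimate, the following minimal sketch computes a dark channel and the transmission rule commonly associated with DCP, t(x) = 1 − ω · dark(I/A). It is not the authors' code: the function names are hypothetical, the 3 × 3 patch is chosen only to keep the toy example small (the original DCP work uses larger patches), and ω = 0.95 is the value typically quoted for DCP.

```python
def dark_channel(img, patch=3):
    """Per-pixel minimum over RGB, then minimum over a square patch.

    img is a list of rows; each row is a list of (r, g, b) tuples in [0, 1].
    """
    h, w = len(img), len(img[0])
    r = patch // 2
    min_rgb = [[min(px) for px in row] for row in img]
    dark = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            ys = range(max(0, y - r), min(h, y + r + 1))
            xs = range(max(0, x - r), min(w, x + r + 1))
            dark[y][x] = min(min_rgb[j][i] for j in ys for i in xs)
    return dark


def estimate_transmission(img, airlight, omega=0.95, patch=3):
    """Transmission estimate t = 1 - omega * dark_channel(I / A)."""
    normalized = [
        [tuple(c / a for c, a in zip(px, airlight)) for px in row]
        for row in img
    ]
    dark = dark_channel(normalized, patch)
    return [[1.0 - omega * d for d in row] for row in dark]


# Toy 2x2 inputs (assumed values): every "clear" pixel has one channel at
# zero, while the "hazy" image is a uniform light gray.
clear = [[(0.0, 0.5, 0.9), (0.8, 0.0, 0.3)],
         [(0.2, 0.7, 0.0), (0.0, 0.4, 0.6)]]
hazy = [[(0.7, 0.7, 0.7)] * 2 for _ in range(2)]
airlight = (1.0, 1.0, 1.0)

t_clear = estimate_transmission(clear, airlight)
t_hazy = estimate_transmission(hazy, airlight)
```

In this toy case the clear image yields a transmission of 1 everywhere, whereas the uniformly gray image yields a much lower value. The same behavior explains the failure mode noted above: a bright, colorless surface such as a white wall violates the prior and is mistaken for dense haze.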

2.2. Learning-Based Methods

Deep learning applications in image dehazing are notable for their superior capabilities and flexibility. With the power of convolutional neural networks, these algorithms can automatically capture features highly correlated with haze and accurately predict scene transmittance or directly generate clear images without fog. Therefore, deep learning technology has taken a central position in the research of image dehazing, which not only revolutionizes the image-processing and computer-vision fields, but also injects new development potential into these fields.

Cai et al. [] proposed an end-to-end image-dehazing network named DehazeNet, which uses a convolutional neural network to estimate the transmission rate and atmospheric coefficients and thereby obtain the dehazed image, addressing the shortcomings of traditional image-dehazing methods. Similarly, Ren et al. [] proposed multi-scale convolutional neural networks (MSCNNs), and Zhang et al. [] proposed a joint dehazing network for transmission maps. Observing that previous networks mostly estimated the atmospheric light value and the transmittance separately, Li et al. [] proposed the All-in-one Dehazing Network (AOD-Net), which unifies the two unknown parameters into one and reduces both computation and error.

End-to-end algorithms do not need to model the causes of haze degradation or the imaging process. The network takes a hazy image as input and, after training, outputs a clear image. Qu et al. [] proposed an enhanced pix2pix dehazing network, which treats image dehazing as an image-to-image translation problem. Chen et al. [] introduced an end-to-end gated context-aggregation network for image dehazing. Qin et al. [] proposed a feature-fusion attention (FFA) network, which processes different types of feature information to realize image dehazing. Wu et al. [] proposed a compact image-dehazing method based on contrastive learning, which further constrains the dehazing problem by introducing negative samples and exploiting them to bound the solution space from above and below. These end-to-end dehazing networks, which do not rely on atmospheric degradation models, can remove haze to a certain extent, but they over-extract global multi-scale features at the input resolution and lack the ability to focus on local texture details. Table 1 lists the surveyed methods.

Table 1.

The list of the surveyed methods for image dehazing.

| Category | Method | Key Approach | Color Consideration |
|---|---|---|---|
| Prior-based methods | DCP [] | Dark channel assumption | ✗ |
| | CAP [] | Blue channel attenuation | ✓ |
| | HSV-based [] | Processes luminance in HSV space | ✓ |
| | Non-local Prior [] | Color clustering for transmission estimation | ✗ |
| | Boundary Constraint [] | Boundary constraints and regularization | ✗ |
| | 3C Color Channel Compensation [] | Adjusts color channels separately | ✓ |
| Learning-based methods | DehazeNet [] | CNN-based transmission prediction | ✗ |
| | MSCNN [] | Multi-scale CNN estimation | ✗ |
| | AOD-Net [] | Joint transmission-atmosphere model | ✗ |
| | Enhanced Pix2Pix Dehazing [] | Image-to-image translation | ✗ |
| | Gated Context Aggregation [] | Gated feature aggregation | ✗ |
| | FFA-Net [] | Feature fusion attention | ✗ |
| | Contrastive Learning Dehazing [] | Contrastive feature training | ✗ |

Dehazed images often suffer from color distortion due to a focus on contrast enhancement over color accuracy. To address this issue, we introduce a novel dual-branch framework that integrates dehazing and color correction into a unified model, ensuring effective haze removal while enhancing chromatic fidelity.

3. Our Method

This section first provides an overview of the proposed CCD-Net’s overall structure, offering a comprehensive understanding of the network architecture. It then proceeds to introduce the individual components, the dehazing branch and color-correction branch in detail, analyzing their respective functions and design objectives. Finally, the section discusses the design of the loss function and its role in the training process.

3.1. Overall Framework

Restoring a hazy image to a clear one typically involves addressing several degradation issues, such as blurring, color bias, and uneven brightness. These degradations cause a loss of image detail and reduce both the quality and the utility of the image. To address these challenges, we propose a dual-branch dehazing network, CCD-Net. It focuses on learning image clarity and color features, mitigating the aforementioned degradations to produce clear and naturally colored images.

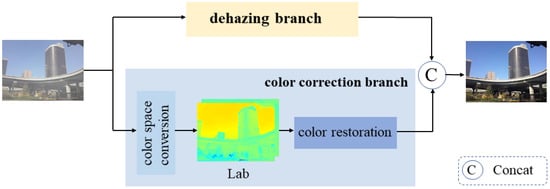

CCD-Net is composed of two main components: a dehazing branch and a color-feature learning branch, as illustrated in Figure 2. The input hazy image is processed simultaneously by both branches. The dehazing branch extracts a clarity-oriented feature map, while the color-feature learning branch generates a color feature map. These two feature maps are then combined to reconstruct a clear image.

Figure 2.

The overall architecture of CCD-Net.

The first branch focuses on restoring image clarity by employing advanced dehazing techniques to enhance blurred regions and recover fine details and edges. The second branch is dedicated to learning color features in the Lab color space, aiming to correct color bias caused by atmospheric scattering and other factors, ensuring that the restored image exhibits more natural and accurate colors.

By jointly optimizing these two objectives—clarity and color features—CCD-Net is able to effectively retain the original color and detail of the image while removing haze, avoiding the common problems of color distortion and edge blurring encountered in traditional dehazing methods. This ensures high-quality image restoration across a wide range of complex environments. Detailed descriptions of the dehazing and color-feature learning branches are provided below.

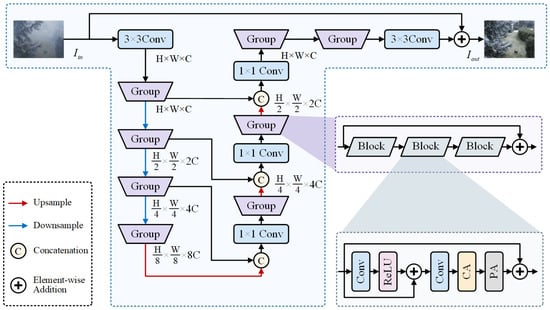

3.2. Dehazing Branch

The input image first passes through a 3 × 3 convolution for feature extraction. The encoder–decoder structure is a deep learning framework widely used for image-dehazing tasks, as shown in Figure 3; its main goal is to restore haze-affected images to a clear state through layer-by-layer feature extraction and reconstruction. The encoder extracts and downsamples the image features step by step. It consists of a convolution operation and four feature-extraction layers. The spatial size of each layer’s output feature map is half that of the preceding layer, and its number of channels is twice that of the preceding layer. The key process can be expressed as follows:

$$F_{i}^{\mathrm{enc}} = E_{i}\left(\downarrow F_{i-1}^{\mathrm{enc}}\right), \quad i = 1, \dots, 4$$

where $F_{i}^{\mathrm{enc}}$ is the output of the $i$-th feature-extraction layer $E_{i}$, $F_{0}^{\mathrm{enc}}$ is the output of the initial convolution, and $\downarrow$ indicates downsampling.

Figure 3.

The structure of the dehazing branch.

The decoder is responsible for reconstructing the features and recovering the high-resolution information of the image. Each layer uses a Group extraction module to extract image detail features. Accordingly, the decoder consists of four feature-reconstruction layers and convolution operations. Each layer produces a feature map twice the spatial size of the previous layer’s output, while the number of channels is halved. The main process can be described as follows:

$$F_{i}^{\mathrm{dec}} = G_{i}\left(\uparrow F_{i-1}^{\mathrm{dec}}\right), \quad i = 1, \dots, 4$$

where $F_{i}^{\mathrm{dec}}$ is the output of the $i$-th feature-reconstruction layer, $G_{i}$ is its Group module, and $\uparrow$ indicates upsampling.
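The shape changes described for the encoder and decoder can be traced with a few lines of bookkeeping. This is only a sketch of the halving and doubling rule stated in the text: the starting width of 32 channels and the 256 × 256 input are hypothetical values, and the function names are illustrative rather than taken from the paper.

```python
def encoder_shapes(channels, height, width, levels=4):
    """Shapes (C, H, W) after each encoder layer: halve H and W, double C."""
    shapes = []
    for _ in range(levels):
        channels, height, width = channels * 2, height // 2, width // 2
        shapes.append((channels, height, width))
    return shapes


def decoder_shapes(channels, height, width, levels=4):
    """Shapes (C, H, W) after each decoder layer: double H and W, halve C."""
    shapes = []
    for _ in range(levels):
        channels, height, width = channels // 2, height * 2, width * 2
        shapes.append((channels, height, width))
    return shapes


# Hypothetical starting point: 32 channels after the first 3x3 convolution
# on a 256x256 input. Neither number is taken from the paper.
enc = encoder_shapes(32, 256, 256)
dec = decoder_shapes(*enc[-1])
```

With four layers on each side, the decoder ends at the same shape the encoder started from, which is what allows the reconstructed features to be mapped back to a full-resolution image.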

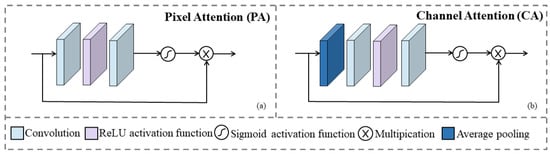

The fundamental unit of the model is the Block Module, consisting of two convolutional layers, a channel attention layer, and a pixel attention layer. Details of pixel attention and channel attention are shown in Figure 4. This module is designed to process image features at multiple levels, and by using residual connections, it fuses low-level features with higher-level ones, preventing the loss of information. The output from each Block module is further enhanced in subsequent layers, enabling the model to capture more complex feature representations and improving the dehazing result.

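$$F_{\mathrm{out}} = F_{\mathrm{in}} + \mathrm{PA}\Bigl(\mathrm{CA}\bigl(\mathrm{Conv}\bigl(\mathrm{Conv}(F_{\mathrm{in}})\bigr)\bigr)\Bigr)$$

where $F_{\mathrm{in}}$ is the input feature map, $\mathrm{Conv}$, $\mathrm{CA}$, and $\mathrm{PA}$ denote the convolutional, channel attention, and pixel attention layers, and $F_{\mathrm{out}}$ indicates the output after the Block module.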

Figure 4.

Details of pixel attention (a) and channel attention (b).

In the Group module, multiple Block modules are stacked together to form a more complex unit responsible for multi-level feature fusion. Within each Group module, features are processed through several layers to transform the input feature map into higher-level representations, allowing the model to capture information from different scales and further enhancing the dehazing effect.

3.3. Color-Correction Branch

To enhance color fidelity in dehazed images, we introduce a dedicated color-correction branch that leverages the Lab color space to decouple luminance and chromatic information, enabling more precise color restoration. The following subsections discuss the advantages of the Lab color space and the architectural design of the color-correction branch in CCD-Net.

3.3.1. Advantages of Lab Color Space

The RGB color space is the most widely used color space in color image processing. However, the red, green, and blue (R, G, B) components are highly correlated, and the space does not effectively separate brightness information from color information. As a result, it is easily affected by changes in brightness, occlusion, shadows, and other factors []. Thus, in cases where lighting conditions are inconsistent in an image, it can lead to uneven color representations, which may affect processing performance.

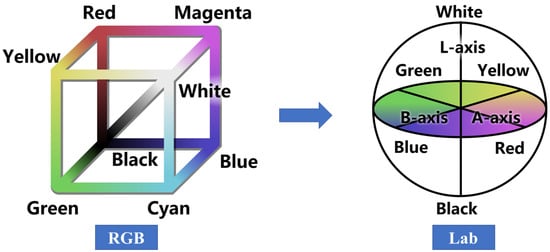

The Lab color space is a color model designed based on the opponent color theory of human visual perception. It represents colors using three independent components: L, a and b. The L component indicates lightness, with a range from 0 to 100, representing brightness from complete black to complete white [,]. The a component represents the color range between red and green, while the b component represents the color range between yellow and blue, both with a range of −128 to +127.

As shown in Figure 5, the RGB color space is represented by a cubic structure and consists of red (R), green (G) and blue (B) channels, whose colors are formed by the mixture of the three, but the coupling of luminance and color information is not conducive to color adjustment and image enhancement. Meanwhile, Lab color space adopts independent luminance (L), green-red (a), and blue-yellow (b) channels, which decouples the color information from the luminance information and is more in line with the human-eye perception characteristics. Therefore, Lab color space is more advantageous in tasks such as color correction, white balance adjustment, etc. It also improves the stability of color representation, reduces color shifts due to equipment differences, and performs better in computer-vision tasks such as image dehazing, image restoration, and super-resolution reconstruction.

Figure 5.

Difference between RGB and Lab color space.
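The decoupling of lightness and chromaticity can be checked numerically with a per-pixel conversion. The sketch below uses the standard sRGB to CIE XYZ to CIE Lab formulas with a D65 white point; the paper does not state which conversion constants it uses, so the matrix and white point here are assumptions, and `srgb_to_lab` is a hypothetical helper name.

```python
def srgb_to_lab(r, g, b):
    """Convert one sRGB pixel (components in [0, 1]) to CIE Lab, D65 white."""
    def linearize(c):
        # Undo the sRGB transfer curve.
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    rl, gl, bl = linearize(r), linearize(g), linearize(b)

    # Linear sRGB -> CIE XYZ (D65).
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl

    def f(t):
        delta = 6 / 29
        return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29

    xn, yn, zn = 0.95047, 1.0, 1.08883  # D65 reference white
    fx, fy, fz = f(x / xn), f(y / yn), f(z / zn)
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)


# Neutral grays differ only in L; a saturated red has large a and b.
lab_dark_gray = srgb_to_lab(0.2, 0.2, 0.2)
lab_mid_gray = srgb_to_lab(0.5, 0.5, 0.5)
lab_red = srgb_to_lab(1.0, 0.0, 0.0)
```

For the gray pixels, a and b stay at essentially zero while L changes with brightness, so a brightness change that alters all three RGB channels touches only one Lab channel. This is the property the color-correction branch relies on when it processes the chromatic channels separately.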

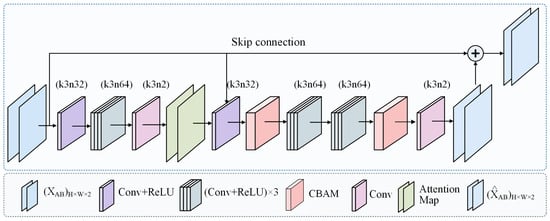

3.3.2. Architecture of Color-Correction Branch

A color-correction branch is applied to the image-dehazing task, aiming to enhance the color information and details of the image, thereby improving the visual quality of the dehazed result. The structure of the color-correction branch is shown in Figure 6. To better handle the color information, we first convert the image from the RGB color space to the Lab color space, then integrate attention mechanisms and multilayer convolutional neural network structures into the model design for enhanced performance. Unlike previous methods that adjust color distribution based on the mean values of RGB channels [] or compensate for weak color channels using attenuation matrices to correct color distortion [], our approach operates in the Lab color space. Through this color-space transformation, the model can independently process lightness and color information, allowing for more precise adjustments to the color details of the image.

Figure 6.

Illustration of color-correction branch.

The branch takes the AB channels of the image as input and enhances them by combining an attention-map-generation module with a forward processing module. To help the model focus more precisely on important regions of the image, the attention-map-generation module produces an attention map that highlights key regions, guiding the network toward critical parts of the image during training.

The attention-map-generation module uses a multilayer convolutional network to extract features from the input AB channels and produce an attention map of the same size as the input image. This attention map is then concatenated with the original AB channels and passed through the forward processing module for further feature extraction and enhancement.

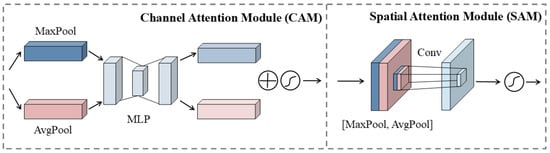

Additionally, in the second image-processing stage, we use the Convolutional Block Attention Module (CBAM) [] to enhance details; its structure is shown in Figure 7. CBAM consists of two key components: the Channel Attention Module (CAM) and the Spatial Attention Module (SAM). CAM enhances feature representation by assigning different weights to channels, emphasizing the most informative ones. SAM improves spatial perception by generating a spatial attention map that assigns higher weights to key regions, sharpening the focus on critical spatial information. Together, these modules enable adaptive feature refinement for more effective representation learning. The key idea behind the forward processing module is to stack convolutional layers, enabling the network to learn features at different levels of the image and ultimately output the enhanced AB channels.

Figure 7.

Illustration of CBAM.

Finally, the enhanced AB channels are output through the final convolutional layer. By separately handling lightness and color information and introducing attention mechanisms to focus on important regions, the model provides a more refined approach to image dehazing. This approach not only recovers the lightness information but also reconstructs more of the fine details during the dehazing process.

3.4. Loss Function

Effective image dehazing requires both structural restoration and precise color fidelity. To this end, we propose a novel dual-space loss function, optimizing reconstruction in both RGB and Lab color spaces. By leveraging RGB for structural consistency and Lab for perceptual color accuracy, our approach mitigates color distortions while preserving image integrity.

To maintain structural consistency between the dehazed output and the ground truth, we employ an L1 reconstruction loss in the RGB domain as the first component of the loss function. During training, the model takes hazy images as input and outputs dehazed images. The $\mathcal{L}_{1}$ loss measures the pixel-level differences between the dehazed image and the ground-truth (GT) image, guiding the network to minimize the absolute differences between the two. Specifically, the loss is computed as the sum of the absolute pixel-wise differences between the two images. The primary goal of this loss is to reduce reconstruction errors, making the dehazed image as close as possible to the real clear image and thereby enhancing image details. $\mathcal{L}_{1}$ can be expressed mathematically as follows:

$$\mathcal{L}_{1} = \sum_{i=1}^{N} \left| \hat{J}_{i} - J_{i} \right|$$

where $\hat{J}_{i}$ and $J_{i}$ denote the $i$-th value of the dehazed image and of the ground-truth image in RGB space, and $N$ is the number of pixel values.

To further refine color correction, we introduce an L1 loss in the Lab color space, $\mathcal{L}_{\mathrm{Lab}}$, specifically focusing on the a (red-green) and b (yellow-blue) chromatic channels. While the pixel-level reconstruction loss enhances structural clarity, it remains insufficient for precise color restoration, particularly in maintaining color fidelity. In our approach, the a and b chromatic components of both the dehazed output and the ground truth are extracted, and the L1 loss is computed between them. Given that the Lab color space inherently decouples luminance (L) from chromaticity (a, b), this loss formulation enables CCD-Net to more effectively restore natural colors and mitigate hue shifts introduced by atmospheric scattering. By incorporating this constraint, the loss function not only ensures accurate brightness restoration but also enhances color consistency, significantly reducing potential chromatic distortions in the dehazing process. $\mathcal{L}_{\mathrm{Lab}}$ can be expressed mathematically as follows:

$$\mathcal{L}_{\mathrm{Lab}} = \sum_{i=1}^{M} \Bigl( \left| \hat{a}_{i} - a_{i} \right| + \left| \hat{b}_{i} - b_{i} \right| \Bigr)$$

where $\hat{a}_{i}$, $\hat{b}_{i}$ and $a_{i}$, $b_{i}$ denote the chromatic components of the $i$-th pixel of the dehazed image and of the ground-truth image, and $M$ is the number of pixels.

To comprehensively address both brightness restoration and color restoration, this paper combines the two loss components into a unified loss function. Specifically, the loss function is a weighted sum of the pixel-level loss and the loss in the Lab color space, with each component contributing equally to the total loss by setting the weight factor to 0.5. This weighted combination enables the model to optimize both brightness restoration and color consistency during training, resulting in final outputs that are visually clear and color-accurate. The final objective function is:

$$\mathcal{L}_{\mathrm{total}} = \lambda\,\mathcal{L}_{1} + (1 - \lambda)\,\mathcal{L}_{\mathrm{Lab}}, \qquad \lambda = 0.5$$

where $\mathcal{L}_{1}$ denotes the pixel-based loss in RGB space and $\mathcal{L}_{\mathrm{Lab}}$ represents the L1 loss in the Lab color space.
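The weighted combination of the RGB term and the Lab term can be sketched as follows. This is an illustration under stated assumptions rather than the authors' training code: `mean_abs_error` and `fusion_loss` are hypothetical names, the a and b channels are assumed to have been extracted beforehand, and the mean is used instead of the sum, which differs only by a constant factor for images of fixed size.

```python
def mean_abs_error(pred, target):
    """Mean absolute difference between two equally sized flat sequences."""
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def fusion_loss(pred_rgb, gt_rgb, pred_ab, gt_ab, weight=0.5):
    """Weighted sum of an RGB L1 term and an L1 term on the Lab a/b channels.

    weight multiplies the RGB term and (1 - weight) the Lab term, so the
    default of 0.5 makes both terms contribute equally.
    """
    loss_rgb = mean_abs_error(pred_rgb, gt_rgb)
    loss_ab = mean_abs_error(pred_ab, gt_ab)
    return weight * loss_rgb + (1.0 - weight) * loss_ab


# Flattened toy values (assumed). A real pipeline would obtain pred_ab and
# gt_ab by converting both images to Lab and keeping the a and b channels.
pred_rgb, gt_rgb = [0.2, 0.4, 0.6], [0.0, 0.4, 1.0]
pred_ab, gt_ab = [10.0, -5.0], [12.0, -5.0]
loss = fusion_loss(pred_rgb, gt_rgb, pred_ab, gt_ab)
```

One practical point the sketch makes visible is scale: RGB values in [0, 1] and a/b values on the order of tens produce terms of very different magnitude, so whether the Lab channels are normalized before the loss is computed affects how "equal" the 0.5 weighting really is. The text does not specify this detail.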

4. Experiments

This section presents the experimental evaluation of CCD-Net, including the datasets, evaluation metrics, and implementation details. We conduct a comparative analysis against state-of-the-art dehazing methods, providing both quantitative and qualitative assessments to demonstrate the superiority of our approach. Additionally, we analyze the impact of the Lab color space and perform an ablation study to evaluate the contributions of individual components within the network.

4.1. Datasets and Metrics

In this work, the RESIDE-indoor and RESIDE-6K datasets are used to train the proposed CCD-Net. RESIDE (REalistic Single-Image-Dehazing Dataset) [] is a benchmark dataset designed for image-dehazing tasks, containing 13,990 hazy images paired with corresponding clear images from real-world scenes. The dataset offers a diverse set of samples from various environments, covering different lighting conditions, scenes, and visibility distances. By simulating and collecting images under various hazy weather conditions, RESIDE aims to provide a challenging benchmark for the development of dehazing algorithms. With a large scale that includes both synthetic and real image pairs, the dataset is well suited for training and testing deep learning models, particularly for evaluating the performance of dehazing algorithms in complex real-world scenarios.

We evaluated the dehazing effect of our method using three commonly used image-quality metrics: Peak Signal-to-Noise Ratio (PSNR, dB), Structural Similarity Index (SSIM), and CIEDE2000 (CIEDE). PSNR quantifies the ratio between the maximum possible signal power and the power of corrupting noise. SSIM is designed to measure the structural similarity between two images by considering luminance, contrast, and structural components. CIEDE [] is a perceptual color-difference metric proposed by the International Commission on Illumination (CIE). Unlike traditional color-difference formulas, CIEDE incorporates luminance, chroma, and hue adjustments, making it more consistent with human visual perception. It is particularly useful for evaluating color fidelity in image-processing tasks, as it quantifies the perceptual difference between two colors. The calculation formula is as follows:

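$$\Delta E_{00} = \sqrt{\left(\frac{\Delta L'}{k_L S_L}\right)^2 + \left(\frac{\Delta C'}{k_C S_C}\right)^2 + \left(\frac{\Delta H'}{k_H S_H}\right)^2 + R_T \left(\frac{\Delta C'}{k_C S_C}\right)\left(\frac{\Delta H'}{k_H S_H}\right)}$$

where $\Delta E_{00}$ indicates the perceptual difference between two colors, with a smaller value signifying a closer color match. $\Delta L'$ represents the difference in lightness, while $\Delta C'$ denotes the chroma difference, and $\Delta H'$ corresponds to the hue difference. The weighting coefficients $k_L$, $k_C$, and $k_H$ are typically set to 1 to balance the contributions of the different components. The scaling factors $S_L$, $S_C$, and $S_H$ are used to normalize the respective differences in lightness, chroma, and hue. Additionally, $R_T$ is a rotation term specifically designed to adjust color differences in the blue region, ensuring better perceptual accuracy.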
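As an illustration of how these terms combine, the following minimal Python sketch evaluates only the final combination step of CIEDE2000. It assumes that the lightness, chroma, and hue differences, the scaling factors, and the rotation term have already been computed from the two Lab colors. The function name `ciede2000_combine` and its argument names are hypothetical and are not taken from the authors' implementation.

```python
import math


def ciede2000_combine(d_l, d_c, d_h, s_l, s_c, s_h, r_t,
                      k_l=1.0, k_c=1.0, k_h=1.0):
    """Combine precomputed CIEDE2000 terms into a single color difference.

    d_l, d_c, d_h : lightness, chroma, and hue differences
    s_l, s_c, s_h : scaling factors for the three differences
    r_t           : rotation term coupling chroma and hue
    k_l, k_c, k_h : weighting coefficients (set to 1 by default)
    """
    l_term = d_l / (k_l * s_l)
    c_term = d_c / (k_c * s_c)
    h_term = d_h / (k_h * s_h)
    radicand = l_term ** 2 + c_term ** 2 + h_term ** 2 + r_t * c_term * h_term
    # Guard against tiny negative values caused by floating-point rounding.
    return math.sqrt(max(radicand, 0.0))


if __name__ == "__main__":
    # With unit scaling factors and no rotation, the formula reduces to a
    # Euclidean distance over the three differences.
    print(ciede2000_combine(3.0, 4.0, 0.0, 1.0, 1.0, 1.0, 0.0))
```

With unit scaling factors and a zero rotation term, the expression reduces to a Euclidean distance, which makes the role of each normalization term easier to see.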

A higher PSNR value, or an SSIM value closer to 1, suggests that the dehazed image preserves more structural details of the ground-truth image, while a lower CIEDE value indicates better color preservation.
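To make the PSNR definition concrete, the sketch below computes it from the mean squared error between two images. It is a simplified illustration that assumes 8-bit pixel values stored as flat sequences. The function name `psnr` and the parameter `max_value` are chosen for this example, and the evaluation code used in the experiments may differ.

```python
import math


def psnr(reference, restored, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized images.

    Both images are given as flat sequences of pixel intensities.
    """
    if len(reference) != len(restored):
        raise ValueError("images must contain the same number of pixels")
    if not reference:
        raise ValueError("images must not be empty")
    # Mean squared error acts as the "noise power" in the ratio.
    mse = sum((r - d) ** 2 for r, d in zip(reference, restored)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    ground_truth = [100, 100, 100, 100]
    dehazed = [110, 90, 110, 90]
    print(f"PSNR: {psnr(ground_truth, dehazed):.2f} dB")
```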

4.2. Implementation Details

The proposed method was implemented in the PyTorch 1.10 deep learning framework and run on a computing platform featuring an NVIDIA® GeForce RTX 3080 GPU. To ensure fairness, all experiments were conducted in the same environment, with each network trained using the same set of hyperparameters. Choosing appropriate hyperparameters is essential for accelerating convergence and improving model performance. After thorough research and testing, a suitable set of hyperparameters was determined for the final model training, as shown in Table 2.

Table 2.

Configuration of model hyperparameters.

4.3. Comparison with Other Methods

To evaluate CCD-Net’s performance, we compare it with existing methods using both quantitative metrics and visual assessments. The following sections present the results.

4.3.1. Quantitative Comparison

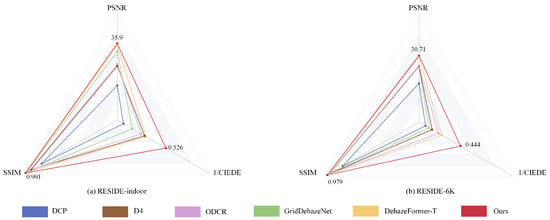

On the RESIDE-indoor and RESIDE-6K datasets [], CCD-Net is compared with other supervised, unsupervised, and unpaired dehazing methods: GridDehazeNet and DehazeFormer-T (supervised), DCP (unsupervised), and D4 and ODCR (unpaired). The results in Table 3 indicate that CCD-Net achieves the best results on the PSNR, SSIM, and CIEDE metrics. On the two datasets, CCD-Net reaches PSNR values of 35.90 dB and 30.71 dB and SSIM values of 0.991 and 0.979, respectively, all higher than the second-best values. The CIEDE values of CCD-Net are reduced by 1.31 and 1.88 relative to the second-best values, which is a more pronounced improvement.

Figure 8 uses radar plots to visualize the performance of the dehazing models on the RESIDE-indoor and RESIDE-6K datasets across three metrics: PSNR, SSIM, and 1/CIEDE. Each metric extends along its own axis, and the data points of a method are connected by lines to form a polygon, so the plot shows both the per-metric performance of each method and the comparison between methods. CIEDE is plotted as its reciprocal so that, as with PSNR and SSIM, larger values are better. Our method covers the largest area, which represents the best overall performance: its higher PSNR indicates better quality of the reconstructed image, its SSIM close to 1 indicates that the structural information of the image is well preserved after dehazing, and its higher 1/CIEDE indicates more accurate color restoration.

Figure 8.

Radar chart of comparative experimental results.

To evaluate the deployment efficiency of our method, we compare the number of parameters and inference time of CCD-Net with state-of-the-art dehazing methods, as shown in Table 3. While CCD-Net is not the most lightweight model in terms of computational complexity and runtime, it achieves an effective balance between performance and efficiency. On the RESIDE-6K dataset, CCD-Net outperforms other methods in both dehazing quality and color fidelity while maintaining a reasonable computational cost. Furthermore, the model achieves an inference speed of approximately 29 frames per second on an RTX 3080 GPU for 256 × 256 images, demonstrating its practical applicability for high-quality dehazing and color-correction tasks.
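The relationship between the reported inference time and the frame rate can be checked with a short calculation. The sketch below converts the per-image inference times listed in Table 3 into frames per second. The helper name `frames_per_second` is illustrative, and the conversion assumes that images are processed one at a time.

```python
# Inference times in milliseconds, copied from Table 3.
INFERENCE_TIME_MS = {
    "GridDehazeNet": 2.575,
    "DehazeFormer-T": 12.90,
    "D4": 75.82,
    "ODCR": 27.38,
    "CCD-Net (Ours)": 34.27,
}


def frames_per_second(time_ms):
    """Convert a per-image latency in milliseconds to frames per second."""
    if time_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / time_ms


if __name__ == "__main__":
    for method, time_ms in INFERENCE_TIME_MS.items():
        print(f"{method:>15}: {frames_per_second(time_ms):7.2f} FPS")
```

For CCD-Net, 34.27 ms per image corresponds to roughly 29 frames per second, consistent with the figure quoted above.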

Table 3.

Quantitative results of different methods on RESIDE-indoor and RESIDE-6K datasets. Best results are bolded, and the second-best results are underlined.

| Method | RESIDE-Indoor PSNR (dB) | RESIDE-Indoor SSIM | RESIDE-Indoor CIEDE | RESIDE-6K PSNR (dB) | RESIDE-6K SSIM | RESIDE-6K CIEDE | Param (M) | Inference Time (ms) |
|---|---|---|---|---|---|---|---|---|
| DCP [] | 16.20 | 0.818 | 14.86 | 17.88 | 0.816 | 14.03 | - | - |
| GridDehazeNet [] | 32.16 | 0.984 | 6.17 | 25.86 | 0.944 | 9.28 | 0.956 | 2.575 |
| DehazeFormer-T [] | 35.05 | 0.989 | 3.21 | 30.36 | 0.973 | 4.13 | 0.686 | 12.90 |
| D4 [] | 25.42 | 0.932 | 3.85 | 25.91 | 0.958 | 7.15 | 10.74 | 75.82 |
| ODCR [] | 26.32 | 0.945 | 3.46 | 26.14 | 0.957 | 4.76 | 11.38 | 27.38 |
| Ours | 35.90 | 0.991 | 1.90 | 30.71 | 0.979 | 2.25 | 16.97 | 34.27 |
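The relative CIEDE reduction with respect to the second-best method can be recomputed from the entries of Table 3. The following sketch selects the lowest CIEDE among the compared methods and reports the percentage reduction achieved by CCD-Net. The names `CIEDE` and `relative_reduction` are introduced for this example only. Because the table entries are rounded, the recomputed percentages (about 40.8% and 45.5%) can differ slightly from figures derived from unrounded values.

```python
# CIEDE values copied from Table 3: (RESIDE-indoor, RESIDE-6K).
CIEDE = {
    "DCP": (14.86, 14.03),
    "GridDehazeNet": (6.17, 9.28),
    "DehazeFormer-T": (3.21, 4.13),
    "D4": (3.85, 7.15),
    "ODCR": (3.46, 4.76),
}
OURS = (1.90, 2.25)


def relative_reduction(baseline, ours):
    """Percentage by which `ours` is lower than `baseline`."""
    return 100.0 * (baseline - ours) / baseline


if __name__ == "__main__":
    for idx, dataset in enumerate(("RESIDE-indoor", "RESIDE-6K")):
        # Lower CIEDE is better, so the strongest competitor has the minimum.
        second_best = min(values[idx] for values in CIEDE.values())
        reduction = relative_reduction(second_best, OURS[idx])
        print(f"{dataset}: {second_best} -> {OURS[idx]} ({reduction:.2f}% lower)")
```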

4.3.2. Visual Comparisons

Figure 9 and Figure 10 show the visual comparison of CCD-Net with five other methods on the RESIDE-indoor and RESIDE-6K datasets. On the RESIDE-indoor dataset, DCP produces a darker image with a more pronounced color cast. GridDehazeNet, despite its higher contrast, does not fully recover the natural tone and sharpness of the ground-truth (GT) image. DehazeFormer-T leaves some details blurred, such as the texture of the desktop and the background. D4 and ODCR produce poorly defined image edges and lose details.

Figure 9.

Visual comparison between our method and other approaches on the RESIDE-indoor dataset.

Figure 10.

Visual comparison of various methods on haze-free images. The first row shows the error maps between the resulting images and the ground truth.

As shown in Figure 9, our method effectively restores edge details, producing a sharper door outline and preserving the fine structure of the window shutters. In contrast, DCP introduces a noticeable halo effect that causes severe edge artifacts, while GridDehazeNet produces blurry results and fails to reconstruct the texture accurately. In terms of color reproduction, our method reproduces the warm tones of the ceiling light well, and the colors of the walls and furniture are natural and close to the original scene, avoiding the yellowish, dark, or skewed colors that occur with DCP and GridDehazeNet. The improvement is most apparent in the window light and the white areas of the walls, whose colors are much closer to the real distribution. The results of D4 and ODCR, by comparison, are slightly less bright and saturated and fall short of the ideal color effect.

Our method also demonstrates excellent performance in the outdoor image-dehazing task on the RESIDE-6K dataset. As shown in Figure 10, from the error maps, we can observe that traditional methods such as DCP exhibit higher error intensities, particularly in regions with heavy haze or complex textures, indicating their limitations in effectively removing haze and preserving color. The error distribution of GridDehazeNet remains relatively high, especially in challenging areas, suggesting that it still struggles with fine-grained haze removal and color consistency. The bright regions in their error maps highlight significant discrepancies from the ground truth. In contrast, other learning-based methods such as DehazeFormer-T, D4, and ODCR demonstrate reduced error levels, indicating improved performance in reconstructing clearer images. However, residual haze and color distortion remain noticeable in their results.

Conversely, CCD-Net restores the details of the images and produces more natural colors that are closer to the ground-truth (GT) images on all datasets. Additionally, CCD-Net achieves the highest PSNR and SSIM values. These results show that the images CCD-Net recovers from hazy inputs have better visual quality and that the model is effective for image dehazing.

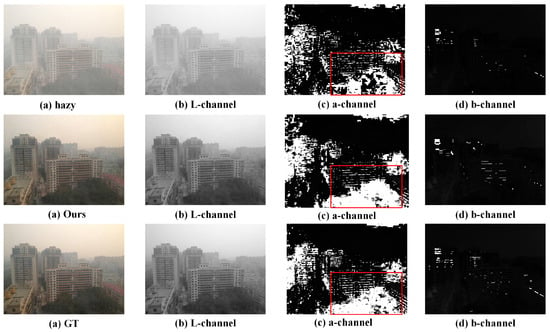

4.3.3. Lab Color-Space Analysis

From the perspective of the Lab color space, as shown in Figure 11, the a-channel represents the red-green component, while the b-channel represents the yellow-blue component. In the a-channel, the original hazy image appears relatively smooth, with much of the red-green information obscured by the haze. In contrast, the dehazed image exhibits significantly enhanced a-channel details, with clearer building edges and richer red-green contrast. Notably, in the region marked by the red box, the dehazed a-channel reveals more distinct details compared to the hazy image and closely resembles the ground truth, indicating that the model effectively restores red-green color information. The changes in the b-channel further support this observation. Overall, our method’s impact in the Lab color space is primarily reflected in restoring brightness distribution, enhancing contrast, and recovering color information, making the dehazed image visually clearer and more natural, bringing it closer to the real scene.

Figure 11.

Visualization of dehazed image and corresponding ground-truth image in the Lab color space.
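For readers who want to reproduce this kind of channel inspection, the sketch below converts a single sRGB pixel to CIE Lab using the standard sRGB-to-XYZ matrix and a D65 reference white. This is a generic conversion and an assumption of this example, since the exact conversion used in the experiments is not stated here. The function name `srgb_to_lab` is hypothetical.

```python
def srgb_to_lab(r, g, b):
    """Convert one sRGB pixel (components in [0, 1]) to CIE Lab (D65 white)."""

    def linearize(c):
        # Undo the sRGB gamma encoding.
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    rl, gl, bl = linearize(r), linearize(g), linearize(b)

    # Linear sRGB to CIE XYZ.
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl

    # Normalize by the D65 reference white.
    xn, yn, zn = 0.95047, 1.0, 1.08883

    def f(t):
        delta = 6.0 / 29.0
        if t > delta ** 3:
            return t ** (1.0 / 3.0)
        return t / (3.0 * delta ** 2) + 4.0 / 29.0

    fx, fy, fz = f(x / xn), f(y / yn), f(z / zn)
    lightness = 116.0 * fy - 16.0
    a = 500.0 * (fx - fy)      # positive toward red, negative toward green
    b_chan = 200.0 * (fy - fz)  # positive toward yellow, negative toward blue
    return lightness, a, b_chan


if __name__ == "__main__":
    for name, rgb in {"red": (1, 0, 0), "green": (0, 1, 0),
                      "blue": (0, 0, 1), "yellow": (1, 1, 0)}.items():
        L, a, b = srgb_to_lab(*rgb)
        print(f"{name:>6}: L={L:6.2f}  a={a:7.2f}  b={b:7.2f}")
```

The sign conventions match the description above: the a-channel separates red from green, and the b-channel separates yellow from blue.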

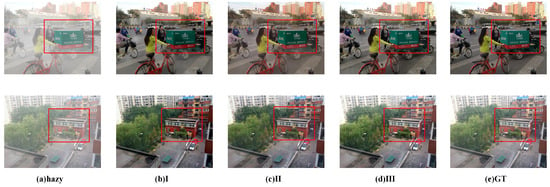

4.4. Ablation Studies

Ablation experiments are conducted to evaluate the contribution of each component of the proposed CCD-Net. The impact is analyzed by systematically removing different modules of the network: (I) CCD-Net without the color-correction branch; (II) CCD-Net without the Lab color-space loss; and (III) the full proposed CCD-Net. In the second case, only the L1 loss in the RGB space is used, in order to verify the effect of the loss in the Lab space. Table 4, Figure 12, and Figure 13 present the quantitative and visual results of the ablation experiments on the different datasets.
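The difference between variants (II) and (III) can be illustrated with a simplified loss sketch. The code below assumes that the Lab term is also an L1 distance and that the two terms are combined with a scalar weight `lab_weight`. Both are assumptions made for this example, and the precise form of the fusion loss is the one defined in the method section. Inputs are flat sequences that are assumed to have been converted to the respective color spaces beforehand.

```python
def l1_loss(prediction, target):
    """Mean absolute error between two equally sized flat sequences."""
    if len(prediction) != len(target) or not prediction:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - t) for p, t in zip(prediction, target)) / len(prediction)


def fusion_loss(pred_rgb, gt_rgb, pred_lab, gt_lab, lab_weight=1.0):
    """RGB L1 loss plus a weighted Lab L1 loss (assumed form).

    Setting lab_weight to 0 mimics ablation variant (II), which keeps only
    the L1 loss in the RGB space.
    """
    rgb_term = l1_loss(pred_rgb, gt_rgb)
    lab_term = l1_loss(pred_lab, gt_lab)
    return rgb_term + lab_weight * lab_term


if __name__ == "__main__":
    pred_rgb, gt_rgb = [0.5, 0.5], [0.0, 1.0]
    pred_lab, gt_lab = [50.0, 0.0], [52.0, 2.0]
    print("RGB only  :", fusion_loss(pred_rgb, gt_rgb, pred_lab, gt_lab, 0.0))
    print("RGB + Lab :", fusion_loss(pred_rgb, gt_rgb, pred_lab, gt_lab, 0.1))
```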

Table 4.

The ablation experiments on the RESIDE-indoor and RESIDE-6K datasets.

Figure 12.

Visualization of ablation experiments on the RESIDE-indoor dataset.

Figure 13.

Visualization of ablation experiments on the RESIDE-6K dataset.

Figure 12 shows that when only the dehazing branch is used (visualization results (a) to (b)), the haze is removed but the overall image still appears gray: the colors are cold and undersaturated, the details are not sharp enough, and a slight color bias remains in local areas, with blurred edges that are most visible in corners where the lighting is uneven. When the color-correction branch is added, the color bias is effectively reduced: the image becomes brighter, the texture of the room carpet is more prominent, and the color saturation and contrast improve. Further adding the Lab color-space loss yields the most effective color restoration. The colors of the image are more natural, and the white wall no longer shows a gray-yellow residual haze, so the overall result is remarkably close to the real image. The details are crisp and well defined, with a clear sense of layering, and the finer details in the locally zoomed-in areas are restored particularly well.

Similarly, in outdoor scenes, the dehazing branch alone shows limited performance. Visualization result (b) in Figure 13 shows that although the haze has been removed, the overall image is still grayish and the red buildings are dark, so the real colors are not accurately restored. After the color-correction branch is added, the color saturation of the image improves, the red buildings become clearer, the green advertisement is brighter, and the overall image contrast is enhanced. However, some regions still have uneven colors. After the Lab color-space loss is further introduced, the red of the building and the green of the advertisement are clearly defined and almost indistinguishable from the real image, which indicates that detail retention and color reproduction have improved significantly. In conclusion, the image recovered by the full CCD-Net is closest to the ground-truth image.

5. Conclusions

In this work, we propose a two-branch dehazing network, CCD-Net. In the image-dehazing branch, multi-scale feature extraction and fusion are used to strengthen image processing, and image details are progressively enhanced through feature representations at different levels. In addition, up- and downsampling operations are used to further enrich the multi-scale information of the image. This branch combines the channel attention (CA) and pixel attention (PA) mechanisms to dynamically reweight the important parts of the feature maps and thereby improve the restoration result. In the color-correction branch, the color information and details of the image are enhanced mainly by introducing the Lab color space, the attention mechanism, and a multilayer convolutional neural network structure. Extensive experiments demonstrate that CCD-Net outperforms five other methods in dehazing and effectively mitigates the impact of haze on image brightness and color. Most notably, CIEDE is reduced by 40.81% on RESIDE-indoor and 45.57% on RESIDE-6K compared to the second-best-performing model, highlighting CCD-Net’s superior color-restoration capabilities. Additionally, the ablation study confirms the effectiveness of our network components.

Author Contributions

Conceptualization, D.C. and H.Z.; methodology, D.C. and H.Z.; software, D.C.; validation, D.C.; formal analysis, D.C. and H.Z.; investigation, D.C. and H.Z.; resources, D.C. and H.Z.; data curation, D.C.; writing—original draft preparation, D.C.; writing—review and editing, D.C. and H.Z.; visualization, D.C.; supervision, H.Z.; project administration, H.Z.; funding acquisition, H.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (NSFC) under Grant 62173143.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

A publicly available dataset is analyzed in this study. These data can be found here: https://sites.google.com/view/reside-dehaze-datasets/reside-standard (accessed on 15 November 2024).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Lin, C.; Rong, X.; Yu, X. MSAFF-Net: Multiscale attention feature fusion networks for single image dehazing and beyond. IEEE Trans. Multimed. 2022, 25, 3089–3100.

- Mehra, A.; Mandal, M.; Narang, P.; Chamola, V. ReViewNet: A fast and resource optimized network for enabling safe autonomous driving in hazy weather conditions. IEEE Trans. Intell. Transp. Syst. 2020, 22, 4256–4266.

- Xu, Y.; Osep, A.; Ban, Y.; Horaud, R.; Leal-Taixé, L.; Alameda-Pineda, X. How to train your deep multi-object tracker. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 6787–6796.

- Cao, Z.; Huang, Z.; Pan, L.; Zhang, S.; Liu, Z.; Fu, C. TCTrack: Temporal contexts for aerial tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 14798–14808.

- Yin, J.; Wang, W.; Meng, Q.; Yang, R.; Shen, J. A unified object motion and affinity model for online multi-object tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 6768–6777.

- Pang, Y.; Xie, J.; Khan, M.H.; Anwer, R.M.; Khan, F.S.; Shao, L. Mask-Guided Attention Network for Occluded Pedestrian Detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019.

- Nie, J.; Anwer, R.M.; Cholakkal, H.; Khan, F.S.; Pang, Y.; Shao, L. Enriched feature guided refinement network for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, New Orleans, LA, USA, 19–24 June 2022; pp. 9537–9546.

- Li, Y.; Pang, Y.; Shen, J.; Cao, J.; Shao, L. NETNet: Neighbor erasing and transferring network for better single shot object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 13349–13358.

- Nayar, S.K.; Narasimhan, S.G. Vision in bad weather. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–25 September 1999; Volume 2, pp. 820–827.

- Narasimhan, S.G.; Nayar, S.K. Vision and the atmosphere. Int. J. Comput. Vis. 2002, 48, 233–254.

- He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 2341–2353.

- Fattal, R. Single Image Dehazing. ACM Trans. Graph. (TOG) 2008, 27, 1–9.

- Liu, J.; Li, S.; Liu, H.; Dian, R.; Wei, X. A lightweight pixel-level unified image fusion network. IEEE Trans. Neural Netw. Learn. Syst. 2023, 35, 18120–18132.

- Jain, J.; Li, J.; Chiu, M.T.; Hassani, A.; Orlov, N.; Shi, H. Oneformer: One transformer to rule universal image segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Waikoloa, HI, USA, 2–8 January 2023; pp. 2989–2998.

- Zhou, J.; Li, B.; Zhang, D.; Yuan, J.; Zhang, W.; Cai, Z.; Shi, J. UGIF-Net: An efficient fully guided information flow network for underwater image enhancement. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–17.

- Cai, B.; Xu, X.; Jia, K.; Qing, C.; Tao, D. Dehazenet: An end-to-end system for single image haze removal. IEEE Trans. Image Process. 2016, 25, 5187–5198.

- Ren, W.; Liu, S.; Zhang, H.; Pan, J.; Cao, X.; Yang, M.H. Single image dehazing via multi-scale convolutional neural networks. In Proceedings of the Computer Vision—ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part II 14. Springer: Berlin/Heidelberg, Germany, 2016; pp. 154–169.

- Deng, Z.; Zhu, L.; Hu, X.; Fu, C.W.; Xu, X.; Zhang, Q.; Qin, J.; Heng, P.A. Deep multi-model fusion for single-image dehazing. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, South Korea, 27 October–2 November 2019; pp. 2453–2462.

- Zheng, L.; Li, Y.; Zhang, K.; Luo, W. T-net: Deep stacked scale-iteration network for image dehazing. IEEE Trans. Multimed. 2022, 25, 6794–6807.

- Zheng, C.; Zhang, J.; Hwang, J.N.; Huang, B. Double-branch dehazing network based on self-calibrated attentional convolution. Knowl. Based Syst. 2022, 240, 108148.

- Yi, Q.; Li, J.; Fang, F.; Jiang, A.; Zhang, G. Efficient and accurate multi-scale topological network for single image dehazing. IEEE Trans. Multimed. 2021, 24, 3114–3128.

- Zhou, Y.; Chen, Z.; Li, P.; Song, H.; Chen, C.P.; Sheng, B. FSAD-Net: Feedback spatial attention dehazing network. IEEE Trans. Neural Netw. Learn. Syst. 2022, 34, 7719–7733.

- Han, M.; Lyu, Z.; Qiu, T.; Xu, M. A review on intelligence dehazing and color restoration for underwater images. IEEE Trans. Syst. Man. Cybern. Syst. 2018, 50, 1820–1832.

- Li, C.; Guo, J. Underwater image enhancement by dehazing and color correction. J. Electron. Imaging 2015, 24, 033023.

- Deng, X.; Wang, H.; Liu, X. Underwater image enhancement based on removing light source color and dehazing. IEEE Access 2019, 7, 114297–114309.

- Ancuti, C.O.; Ancuti, C.; De Vleeschouwer, C.; Garcia, R. Locally adaptive color correction for underwater image dehazing and matching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Venice, Italy, 22–29 October 2017; pp. 1–9.

- Zhang, Y.; Yang, F.; He, W. An approach for underwater image enhancement based on color correction and dehazing. Int. J. Adv. Robot. Syst. 2020, 17, 1729881420961643.

- Espinosa, A.R.; McIntosh, D.; Albu, A.B. An efficient approach for underwater image improvement: Deblurring, dehazing, and color correction. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 2–8 January 2023; pp. 206–215.

- Kong, L.; Feng, Y.; Yang, S.; Gao, X. A Two-stage Progressive Network for Underwater Image Enhancement. In Proceedings of the 2024 5th International Conference on Computer Vision, Image and Deep Learning (CVIDL), Zhuhai, China, 19–21 April 2024; pp. 1013–1017.

- Huang, Z.; Li, J.; Hua, Z.; Fan, L. Underwater image enhancement via adaptive group attention-based multiscale cascade transformer. IEEE Trans. Instrum. Meas. 2022, 71, 1–18.

- Khan, R.; Mishra, P.; Mehta, N.; Phutke, S.S.; Vipparthi, S.K.; Nandi, S.; Murala, S. Spectroformer: Multi-domain query cascaded transformer network for underwater image enhancement. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 2–8 January 2023; pp. 1454–1463.

- Zhu, Q.; Mai, J.; Shao, L. A fast single image haze removal algorithm using color attenuation prior. IEEE Trans. Image Process. 2015, 24, 3522–3533.

- Berman, D.; Treibitz, T.; Avidan, S. Non-local image dehazing. In Proceedings of the IEEE Conference on Computer Vision And Pattern Recognition, Las Vegas, NV, USA, 27 June–2 July 2016; pp. 1674–1682.

- Meng, G.; Wang, Y.; Duan, J.; Xiang, S.; Pan, C. Efficient image dehazing with boundary constraint and contextual regularization. In Proceedings of the IEEE international Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 617–624.

- Ancuti, C.O.; Ancuti, C.; De Vleeschouwer, C.; Sbert, M. Color channel compensation (3C): A fundamental pre-processing step for image enhancement. IEEE Trans. Image Process. 2019, 29, 2653–2665.

- Zhang, H.; Patel, V.M. Densely connected pyramid dehazing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 3194–3203.

- Li, B.; Peng, X.; Wang, Z.; Xu, J.; Feng, D. Aod-net: All-in-one dehazing network. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4770–4778.

- Qu, Y.; Chen, Y.; Huang, J.; Xie, Y. Enhanced Pix2pix Dehazing Network. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 8152–8160.

- Chen, D.; He, M.; Fan, Q.; Liao, J.; Zhang, L.; Hou, D.; Yuan, L.; Hua, G. Gated context aggregation network for image dehazing and deraining. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 7–11 January 2019; pp. 1375–1383.

- Qin, X.; Wang, Z.; Bai, Y.; Xie, X.; Jia, H. FFA-Net: Feature fusion attention network for single image dehazing. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11908–11915.

- Wu, H.; Qu, Y.; Lin, S.; Zhou, J.; Qiao, R.; Zhang, Z.; Xie, Y.; Ma, L. Contrastive learning for compact single image dehazing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 10551–10560.

- Li, C.; Anwar, S.; Hou, J.; Cong, R.; Guo, C.; Ren, W. Underwater image enhancement via medium transmission-guided multi-color space embedding. IEEE Trans. Image Process. 2021, 30, 4985–5000.

- Suny, A.H.; Mithila, N.H. A shadow detection and removal from a single image using LAB color space. Int. J. Comput. Sci. Issues 2013, 10, 270.

- Chung, Y.S.; Kim, N.H. Saturation-based airlight color restoration of hazy images. Appl. Sci. 2023, 13, 12186.

- Alsaeedi, A.H.; Hadi, S.M.; Alazzawi, Y. Adaptive Gamma and Color Correction for Enhancing Low-Light Images. Int. J. Intell. Eng. Syst. 2024, 17, 188.

- Zhang, W.; Wang, Y.; Li, C. Underwater image enhancement by attenuated color channel correction and detail preserved contrast enhancement. IEEE J. Ocean. Eng. 2022, 47, 718–735.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Li, B.; Ren, W.; Fu, D.; Tao, D.; Feng, D.; Zeng, W.; Wang, Z. Benchmarking single-image dehazing and beyond. IEEE Trans. Image Process. 2018, 28, 492–505.

- CIE. Commission Internationale de L’Eclairage. Colorimetry; Bureau Central de la CIE: Vienna, Austria, 1976.

- Liu, X.; Ma, Y.; Shi, Z.; Chen, J. Griddehazenet: Attention-based multi-scale network for image dehazing. In Proceedings of the IEEE/CVF international Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 7314–7323.

- Song, Y.; He, Z.; Qian, H.; Du, X. Vision transformers for single image dehazing. IEEE Trans. Image Process. 2023, 32, 1927–1941.

- Yang, Y.; Wang, C.; Liu, R.; Zhang, L.; Guo, X.; Tao, D. Self-augmented unpaired image dehazing via density and depth decomposition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 2037–2046.

- Wang, Z.; Zhao, H.; Peng, J.; Yao, L.; Zhao, K. ODCR: Orthogonal Decoupling Contrastive Regularization for Unpaired Image Dehazing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 16–20 June 2024; pp. 25479–25489.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).