Abstract

In recent years, the adverse effects of climate change have increased rapidly worldwide, driving countries to transition to clean energy sources such as solar and wind. However, these sources are affected by cloud cover, precipitation, wind speed, and temperature. These factors introduce variability and intermittency into power generation and make integration into the interconnected grid difficult. To address this challenge, we present a novel hybrid deep learning model, CEEMDAN-CNN-ATT-LSTM, for short- and medium-term solar irradiance prediction. The model uses complete ensemble empirical mode decomposition with adaptive noise (CEEMDAN) to extract intrinsic seasonal patterns in solar irradiance. It also employs a hybrid encoder-decoder framework that combines three components: convolutional neural networks (CNN) to capture spatial relationships between variables, an attention mechanism (ATT) to identify long-term patterns, and a long short-term memory (LSTM) network to capture short-term dependencies in time series data. The model was validated using meteorological data from a region more than 2400 masl in southern Ecuador with complex climatic conditions. It predicted irradiance at 1, 6, and 12 h horizons with a mean absolute error (MAE) of 99.89 W/m² in winter and 110.13 W/m² in summer, outperforming the reference methods considered in this study. These results show that the model advances solar irradiance forecasting in environments with high climatic variability and that it is applicable in real scenarios.

1. Introduction

Global heating, the depletion of fossil resources, and international efforts to reduce carbon emissions by 2050 have driven countries to adopt renewable energies, which offer sustainable and virtually unlimited alternatives for electricity generation. These include solar energy, one of the most abundant and usable resources on the planet, which presents no environmental risk [1]. Efficient integration of these energies into the electrical grid and precise scheduling of available energy are fundamental for their optimal utilization [2].

Kumar et al. [3] highlight that photovoltaic (PV) power production costs have become competitive, equaling or even surpassing those of non-renewable energy sources. As a result, the number of solar power plants is increasing considerably [4]. It is, therefore, becoming critical for utilities to have reliable and robust models that ensure the efficient operation of the power grid.

PV power generation depends directly on solar irradiance. Predicting irradiance might appear to be an easy task. In reality, however, factors such as cloud cover, precipitation, and wind speed have a significant impact [5]. These factors introduce instability, randomness, and uncertainty into irradiance, making solar power generation volatile. This variability makes it difficult to rely fully on PV generation and presents a considerable challenge to the effective integration of solar generation plants into the power grid [6]. The challenge is even greater in areas with a volatile climate [7].

Different solar forecasting methods have been developed and tested over time. According to [8], solar energy prediction techniques fall into five groups: physical methods, persistence methods, statistical methods, machine learning models, and hybrid models.

Persistence models are commonly used as a baseline against which forecasted results are compared and model performance is evaluated. According to Perez et al. [9], energy prediction models based on the persistence method are straightforward but limited. Physical methods, on the other hand, model weather patterns by describing meteorological variables with mathematical equations, which requires significant computational resources [10]. In this context, Kocifaj and Kómar [11] proposed a unified model of radiance patterns (UMRP) to simulate sky radiance under various conditions. However, physical models often fail to achieve reasonable accuracy under unstable weather conditions [12].

Statistical methods rely on mathematical relationships between arbitrary and non-arbitrary variables. Examples of this method are the autoregressive integrated moving average (ARIMA) model [13], exponential smoothing [14], and multiple linear regression (MLR) [15], which efficiently model relationships between irradiance and meteorological data. Despite their utility, these models lack robustness and can produce null or incomplete values under certain conditions [16].

Machine learning (ML) approaches have become popular in the last decade because these models can automatically learn and improve their forecasts by comparing real and predicted data. Common methods include support vector machines (SVMs) [17], multi-layer perceptron (MLP) [18], extreme learning machines (ELMs) [19], and artificial neural networks (ANNs) [20]. However, the inherent volatility and randomness of irradiance make it difficult even for more complex models to learn from these data. To address this problem, hybrid models combine different architectures, such as SVMs, MLP, ELMs, and ANNs, to improve prediction efficiency and accuracy in PV power estimation.

In addition, variable decomposition techniques are used to capture temporal and long-term features. Examples include empirical mode decomposition (EMD), ensemble empirical mode decomposition (EEMD), and the wavelet transform (WT). Monjoly et al. [21] improved a hybrid model by combining EMD and EEMD, identifying clear sky patterns using autoregression (AR) and ANN models. Lan et al. [22] proposed a hybrid SOM-BP model that applies EEMD to decompose data into subsequences, enhancing network performance. Anupong et al. [23] combined wavelet analysis with SVMs and ANNs to improve irradiance predictions.

Deep learning (DL) models are currently the state of the art for renewable energy forecasting. DL models try to efficiently extract temporal and spatial features, which makes them particularly suitable in renewable energy applications, although they rely on large datasets. For example, Wang et al. [24] proposed a hybrid model that integrates a convolutional neural network (CNN) and a long short-term memory network (LSTM) for PV forecasting, achieving high accuracy.

Fan et al. [25] combined a CNN with a bidirectional gated recurrent unit (GRU) network, obtaining an effective architecture for energy forecasting. Ullah et al. [26] built a model using a CNN and a multi-layer bidirectional LSTM (M-BLSTM), which outperformed simple LSTM and CNN models. Additionally, Agga et al. [27] introduced a hybrid CNN-LSTM model for solar irradiance forecasting that outperformed conventional machine learning and deep learning architectures. In [28], the authors presented two hybrid models, a Conv-LSTM and a hybrid CNN-LSTM, for solar energy prediction in a PV plant. Both demonstrated superior performance compared to base LSTM models.

In [29], the PV-Net DL architecture was presented to predict PV power 24 h ahead. Instead of the standard GRU, this approach uses Conv-GRU, a hybrid version of GRU that includes convolutional layers. This modification allows for a more accurate extraction of PV energy characteristics. Davo et al. [30] developed a hybrid approach that integrates principal component analysis (PCA) with an ANN and an analog ensemble (AnEn) to predict wind power and solar irradiance. In this method, PCA reduces the dimensionality of the numerical weather prediction (NWP) data. The ANN and AnEn are then used to train the model and generate the final forecasts.

Huang et al. [31] proposed a hybrid multivariate WPD-CNN-LSTM-MLP model based on a hybrid multi-frame structure with multivariate inputs combining wavelet packet decomposition (WPD), a CNN, LSTM, and MLP. These inputs include hourly solar irradiance and three climate variables, namely temperature, relative humidity, and wind speed, and their combination.

1.1. Recent Advances in Time Series Prediction for Solar Forecasting

Currently, DL architectures such as artificial neural networks (ANNs) and LSTM have shown promising results in short-term solar energy forecasting [32]. Nasiri et al. [33] demonstrated that variational mode decomposition (VMD) combined with neural networks reduces root mean square error (RMSE) by 31.8% compared to MEMD-LSTM. Similarly, the multi-functional recurrent fuzzy neural network (MFRFNN) [34] achieved a 9.4% improvement in mean absolute percentage error (MAPE) for chaotic time series. In addition, Assaf et al. [35] reviewed DL methods such as LSTM, GRU, and hybrid models, emphasizing their effectiveness for short-term predictions and the role of techniques such as wavelet decomposition. However, these approaches focus on generic systems and do not address the high variability of solar data in mountainous regions or long-term horizons like 24 h.

Our work aims to address the gaps identified in Table 1, specifically the challenges of medium-term forecasting under high variability conditions in mountainous regions. Several studies employ advanced architectures, such as transformers, hybrid LSTM models, and attention mechanisms. These studies may nonetheless lack robust preprocessing techniques such as variable decomposition or resampling. Such techniques are critical for capturing the inherent variability in solar irradiance data. Our approach integrates these preprocessing methods, allowing the model to handle complex weather conditions more effectively. Furthermore, our model incorporates additional meteorological variables and seasonal splits. These improve its applicability in real scenarios, especially in regions with high climate variability. The aim of this contribution is to support the integration of clean energy into the grid by enabling a stable energy supply and facilitating demand management, especially in complex environments.

Table 1.

Summary of key studies on AI techniques for solar forecasting.

1.2. Contribution of the Present Work

Having justified our work through the review of the advances in the literature and the limitations encountered, we present the CEEMDAN-CNN-ATT-LSTM model. This hybrid deep learning architecture is designed to predict short- and medium-term irradiance. Our model combines an encoder-decoder structure, a CNN, an LSTM, an attention mechanism (ATT), and complete ensemble empirical mode decomposition with adaptive noise (CEEMDAN). Unlike other architectures, our approach targets irradiance prediction in a harsh climate and at high altitude. Its purpose is to facilitate the integration of solar generation into the power grid, which is a major challenge for solar power distributors. In addition, our CEEMDAN-CNN-ATT-LSTM model was evaluated using a historical dataset reflecting real meteorological conditions. This study can contribute to the exploitation of PV potential in difficult areas. It also provides guidance for the future development of models that ensure the efficient integration of solar power plants into the power grid.

The highlights of this research are described below:

- A hybrid CEEMDAN-CNN-ATT-LSTM deep learning architecture for irradiance forecasting is presented. With multivariate input, it combines an attention mechanism and two neural networks (a CNN and an LSTM) to improve forecasting capability 1 h, 6 h, and 12 h in advance.

- CEEMDAN is used to increase irradiance forecasting accuracy and proves effective in both climatic seasons of the study area.

- The proposed methodology addresses the volatility and randomness of irradiance in a high altitude area in the south of Ecuador, characterized by a difficult climate, offering a robust solution for integrating solar energy into the power grid.

- Our model is analyzed and compared to existing forecasting base models, demonstrating the superiority and benefits of the proposed hybrid approach.

The remainder of this study is structured as follows. Section 2 describes the methodology, the data, and the architecture developed, and details the evaluation metrics used to measure model performance. Section 3 presents the case study and the results. Finally, Section 4 concludes the study.

2. Materials and Methods

2.1. Data Description

The data used in this research were collected by Weather Station 5001 of the National University of Loja. The station is located in the province of Loja, in southern Ecuador, in a mountainous region of the Andes, at approximately 2200 masl. The study area has two climatic seasons: winter, from December to May, and summer, from June to November. To cover both seasons, the data span two periods, from 1 January 2023 to 28 February 2024 and from 22 June to 22 August 2024. Together they yield 124,448 records sampled every 5 min. The variables included in this dataset are detailed in Table 2. The dataset used in this research is publicly available; the direct link can be found at the end of this study.

Table 2.

Statistical information of variables collected from Weather Station 5001.

2.2. General Process

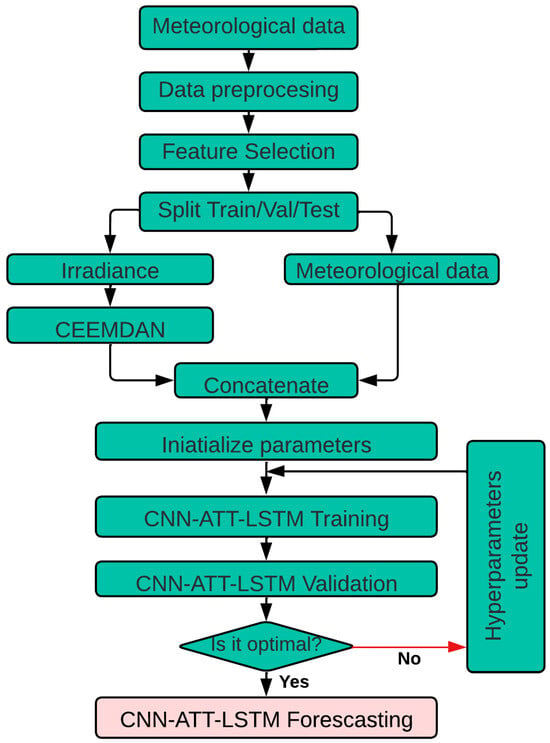

Figure 1 depicts the general process of irradiance forecasting 1 h, 6 h, and 12 h in advance. Each step of the process is described below:

Figure 1.

General flow chart of the methodological process used in this study.

- Meteorological data, including variables such as irradiance, precipitation, temperature, and wind speed, are collected from a weather station located in the south of Ecuador.

- The collected data are preprocessed to prepare the information for the model. This process includes normalization, treatment of missing values, and elimination of outliers.

- The features most strongly correlated with solar irradiance are selected as input to the model, improving its accuracy and efficiency.

- For each of the year's two seasons, winter and summer, the dataset is divided into three subsets: training, validation, and test.

- The irradiance is decomposed using CEEMDAN into several intrinsic mode functions to extract patterns from the data.

- The decomposed irradiance variables and other meteorological variables are concatenated to form the model input feature set.

- The CNN-ATT-LSTM model parameters are initialized, preparing the network for training.

- The CNN-ATT-LSTM model is trained using the training set. This process involves adjusting the model weights to minimize error.

- The model is evaluated on the validation set to verify that it generalizes to data not seen during training.

- The model performance is checked to see whether it is optimal. If not, the hyperparameters are updated, and the training and validation process is repeated.

- Once the model has been trained and validated with optimal results, the irradiance in the test set is predicted.
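The seasonal division in the steps above can be sketched with pandas. This is a minimal illustration, not the authors' code. The month-to-season mapping follows Section 2.1 (winter from December to May, summer from June to November). The chronological 70/15/15 train/validation/test ratios and the helper name `split_by_season` are assumptions made for the example.

```python
import numpy as np
import pandas as pd

# Month-to-season mapping from Section 2.1: winter is December to May.
WINTER_MONTHS = [12, 1, 2, 3, 4, 5]


def split_by_season(df, train_frac=0.70, val_frac=0.15):
    """Chronologically split a DatetimeIndex-ed frame into train/val/test per season.

    The 70/15/15 ratios are illustrative assumptions, not values reported in the study.
    """
    season = np.where(df.index.month.isin(WINTER_MONTHS), "winter", "summer")
    splits = {}
    for name, part in df.groupby(season):
        part = part.sort_index()  # keep time order; no shuffling for forecasting
        n = len(part)
        i_train = int(n * train_frac)
        i_val = int(n * (train_frac + val_frac))
        splits[name] = (part.iloc[:i_train], part.iloc[i_train:i_val], part.iloc[i_val:])
    return splits


# Usage on one year of synthetic hourly data
idx = pd.date_range("2023-01-01", periods=24 * 365, freq="60min")
df = pd.DataFrame({"irradiance": np.zeros(len(idx))}, index=idx)
splits = split_by_season(df)
train, val, test = splits["winter"]
```

The split is positional rather than random. This keeps every validation and test sample later in time than the training data, which is the usual precaution in forecasting.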

2.3. Data Preprocessing

The preprocessing steps were crucial to ensure the quality and reliability of the meteorological dataset used in this study. To address missing values, complete-case (listwise) deletion was employed: rows containing missing values were removed to maintain data consistency. This approach was deemed suitable because data are collected at high frequency, so sufficient data points remained for analysis.

Outliers were detected and handled using a domain-specific range-based method. Climatic data are highly variable, so common statistical methods (e.g., interquartile range or z-scores) could remove valuable information that contributes to the model's learning process. Instead, our approach eliminated data points outside plausible physical ranges, as determined by historical and domain knowledge. Solar irradiance values below 0 W/m² were removed because they are not physically possible. Values above 1000 W/m² were also removed, as they were treated as implausible for the study area. Similarly, wind speeds below 0 m/s or above 30 m/s were excluded. Negative speeds are not physical, and speeds above 30 m/s are inconsistent with historical observations in the region. Air temperature values below 0 °C or above 40 °C were also discarded, as such conditions have never been recorded in the study area.

This range-based filtering minimized the influence of extreme and implausible values on model training while preserving the essential variability within the dataset.
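The two cleaning steps described in this section can be sketched in pandas as follows. The bounds are the ones stated above. `Air_Temp_C` is the column name shown in Figure 3, whereas `Irradiance_Wm2` and `Wind_Speed_ms` are hypothetical placeholders for the station's real column names.

```python
import numpy as np
import pandas as pd

# Plausible ranges from Section 2.3. "Air_Temp_C" appears in Figure 3; the
# other two column names are hypothetical placeholders.
VALID_RANGES = {
    "Irradiance_Wm2": (0.0, 1000.0),
    "Wind_Speed_ms": (0.0, 30.0),
    "Air_Temp_C": (0.0, 40.0),
}


def clean(df, ranges=VALID_RANGES):
    """Drop incomplete rows, then drop rows outside the plausible ranges."""
    out = df.dropna()  # complete-case (listwise) deletion
    keep = pd.Series(True, index=out.index)
    for col, (low, high) in ranges.items():
        keep &= out[col].between(low, high)  # bounds are inclusive
    return out[keep]


raw = pd.DataFrame({
    "Irradiance_Wm2": [350.0, -5.0, 1200.0, np.nan, 800.0, 1000.0],
    "Wind_Speed_ms": [2.1, 3.0, 1.5, 2.0, 45.0, 0.0],
    "Air_Temp_C": [18.5, 15.0, 22.0, 19.0, 20.0, 40.0],
})
cleaned = clean(raw)
```

In this toy frame, rows 1 and 2 fail the irradiance bounds, row 3 has a missing value, and row 4 fails the wind bound. Row 5 sits exactly on the limits and is kept.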

2.4. Resampling and Data Filtering

The dataset was then resampled at 10 min, 20 min, 30 min, and 1 h intervals by averaging the values within each interval. This smooths the time series and reduces its randomness. The resampling operation is given in Equation (1).

$$\bar{V} = \frac{1}{N}\sum_{k=1}^{N} V(t_k) \tag{1}$$

where $N$ is the number of 5 min samples in each 10 min, 20 min, 30 min, or 1 h interval (2, 4, 6, or 12, respectively), $V$ is the variable to resample, and $t_k$ are the time instants in each interval.

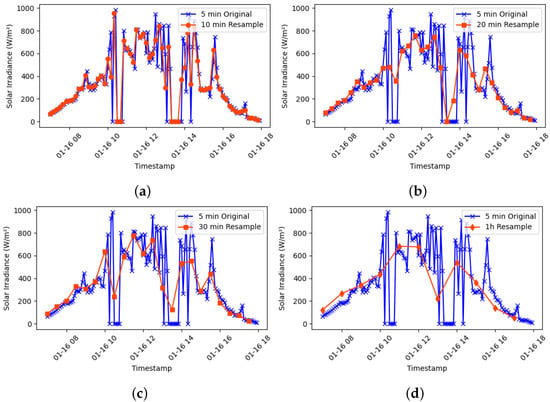

Table 3 shows that the 10 min series is very similar to the original 5 min series. It still contains noise caused by the random nature of the data in the study area. The 10 min series loses little information compared with the 20 min, 30 min, and 1 h series (see Figure 2), but the remaining randomness could reduce the model's accuracy. We therefore analyzed three resampling intervals: 10 min, 30 min, and 1 h.

Table 3.

Comparison of MAE and between different time series from January to December 2023.

Figure 2.

Temporal resampling to smooth highly variable data on 16 January 2023. (a) Resampling from 5 to 10 min. (b) Resampling from 5 to 20 min. (c) Resampling from 5 to 30 min. (d) Resampling from 5 min to 1 h.

Since the analysis focuses on solar irradiance, the data were restricted to the time range in which solar irradiance is recorded, i.e., from 6:00 to at most 18:00. The final processed dataset comprised 30,528 records, representing observations for the entire year.
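The interval averaging of Equation (1) and the daytime restriction can both be sketched with standard pandas calls. The sketch assumes a 5 min `DatetimeIndex`. The column name `irradiance` and the helper `resample_daytime` are illustrative.

```python
import numpy as np
import pandas as pd


def resample_daytime(df, rule="10min", start="06:00", end="18:00"):
    """Average each interval (Equation (1)) and keep only daytime rows."""
    resampled = df.resample(rule).mean()  # mean of the N 5-min samples per bin
    return resampled.between_time(start, end)  # both bounds included by default


# Usage: one synthetic day of 5-min data
idx = pd.date_range("2023-01-16 00:00", "2023-01-16 23:55", freq="5min")
df5 = pd.DataFrame({"irradiance": np.arange(len(idx), dtype=float)}, index=idx)
hourly = resample_daytime(df5, rule="60min")
ten_min = resample_daytime(df5, rule="10min")
```

Each bin is labeled by its left edge. For example, the 06:00 hourly value averages the twelve samples from 06:00 to 06:55.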

2.5. Feature Selection

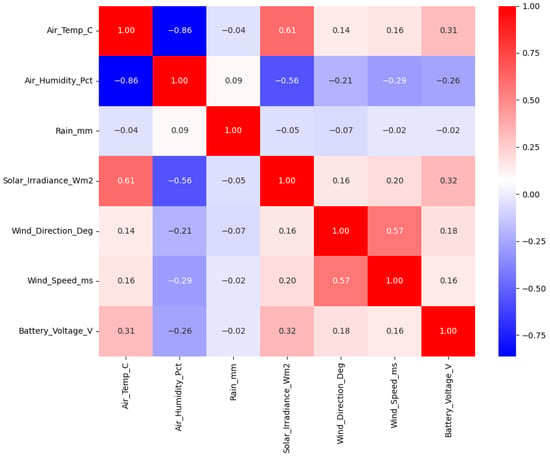

To select the relevant characteristics for the forecasting model in relation to the target variable (solar irradiance), the Pearson correlation was applied between the meteorological variables recorded in the dataset. Figure 3 presents the correlation matrix showing the relationship between the different variables and Equation (2) presents the Pearson correlation applied.

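$$r_{UV} = \frac{\sum_{j=1}^{n}\left(U_j - \bar{U}\right)\left(V_j - \bar{V}\right)}{\sqrt{\sum_{j=1}^{n}\left(U_j - \bar{U}\right)^2}\,\sqrt{\sum_{j=1}^{n}\left(V_j - \bar{V}\right)^2}} \tag{2}$$

where $U_j$ represents the j-th observation of variable U, $V_j$ represents the j-th observation of variable V, $n$ is the number of observations, and $\bar{U}$, $\bar{V}$ are the means of the respective variables.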

Figure 3.

Correlation matrix between different meteorological variables.

Figure 3 shows that air temperature (Air_Temp_C) and air humidity (Air_Humidity_Pct) are strongly correlated with solar irradiance, with coefficients of 0.60 and −0.56, respectively. This suggests that solar irradiance tends to increase with air temperature and to decrease as air humidity increases.

To select the characteristics related to the target variable (solar irradiance), those with a correlation higher than 40% were taken. This allows the model to capture temporal patterns in the variables related to the target variable, thus avoiding noise from other variables, which can improve the accuracy and speed of the model.
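The selection rule can be sketched with pandas' built-in Pearson correlation. The sketch assumes that the 40% threshold applies to the absolute value of the correlation, since humidity correlates negatively with irradiance. The data below are synthetic, and the `Irradiance` and `Noise` column names are hypothetical.

```python
import numpy as np
import pandas as pd


def select_features(df, target, threshold=0.4):
    """Return columns whose |Pearson r| with `target` exceeds `threshold` (Equation (2))."""
    r = df.corr(method="pearson")[target].drop(target)
    return r[r.abs() > threshold].index.tolist(), r


# Usage on synthetic data (not the station data)
rng = np.random.default_rng(0)
irr = rng.uniform(0, 1000, 500)
demo = pd.DataFrame({
    "Irradiance": irr,
    "Air_Temp_C": 10 + 0.01 * irr + rng.normal(0, 1, 500),
    "Air_Humidity_Pct": 90 - 0.03 * irr + rng.normal(0, 3, 500),
    "Noise": rng.normal(0, 1, 500),
})
selected, r = select_features(demo, "Irradiance")
```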

2.6. Increased Time Characteristics

Time features were added to mitigate problems caused by missing data. Time was encoded as additional variables using trigonometric transformations, such as sinusoidal functions that represent the cyclic variations of solar irradiance, which can improve the model's predictions. The transformations were applied according to Equations (3)–(6).

These temporal features may permit the model to capture the periodic variations inherent in the solar irradiance data more effectively, thus improving the forecasting accuracy.
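Equations (3)–(6) are not reproduced here. A common form of such features, and the one assumed in this sketch, is a sine/cosine pair for the time of day (24 h period) and another for the day of the year (365.25 day period). The periods and column names are assumptions, not the study's definitions.

```python
import numpy as np
import pandas as pd


def add_cyclic_time_features(df):
    """Add sine/cosine encodings of time of day and day of year.

    The two periods (24 h and 365.25 days) are assumptions; the study's
    Equations (3)-(6) may define them differently.
    """
    out = df.copy()
    hours = np.asarray(out.index.hour) + np.asarray(out.index.minute) / 60.0
    doy = np.asarray(out.index.dayofyear)
    out["hour_sin"] = np.sin(2 * np.pi * hours / 24.0)
    out["hour_cos"] = np.cos(2 * np.pi * hours / 24.0)
    out["doy_sin"] = np.sin(2 * np.pi * doy / 365.25)
    out["doy_cos"] = np.cos(2 * np.pi * doy / 365.25)
    return out


idx = pd.DatetimeIndex(["2023-01-16 06:00", "2023-01-16 12:00", "2023-01-16 18:00"])
feats = add_cyclic_time_features(pd.DataFrame({"irradiance": [50.0, 900.0, 20.0]}, index=idx))
```

Encoding each period with both sine and cosine gives every time a unique point on a circle. This way 23:55 and 00:05 end up close together, which a raw hour value cannot express.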

2.7. Data Normalization

In this study, we normalized the data using the MinMaxScaler from the Scikit-Learn library (version 1.6.1), which scales each feature to the range [0, 1] through Equation (7). This ensures that all features contribute equally to model training and that no variable dominates. At the end of the forecasting process, the predictions were rescaled to the original range using the inverse of Equation (7).

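$$M' = \frac{M - M_{\min}}{M_{\max} - M_{\min}} \tag{7}$$

where $M$ represents a given feature in the dataset, $M_{\min}$ and $M_{\max}$ are its minimum and maximum values, and $M'$ is the normalized value.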
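The forward and inverse transforms of Equation (7) are short enough to sketch in NumPy. This mirrors what Scikit-Learn's `MinMaxScaler` does with `fit`, `transform`, and `inverse_transform`. The `MinMax` class is a hypothetical stand-in, not the library class. The usage follows a common precaution: the min and max come from the training split only, so no information from later data leaks into training.

```python
import numpy as np


class MinMax:
    """Per-feature min-max scaling to [0, 1] (Equation (7)) and its inverse.

    Expects 2D arrays of shape (samples, features).
    """

    def fit(self, X):
        X = np.asarray(X, dtype=float)
        self.min_ = X.min(axis=0)
        self.range_ = X.max(axis=0) - self.min_
        self.range_[self.range_ == 0] = 1.0  # constant column: avoid division by zero
        return self

    def transform(self, X):
        return (np.asarray(X, dtype=float) - self.min_) / self.range_

    def inverse_transform(self, X_scaled):
        return np.asarray(X_scaled, dtype=float) * self.range_ + self.min_


# Fit on the training split only, then reuse the same scaler for other splits.
X_train = np.array([[0.0, 10.0], [500.0, 20.0], [1000.0, 30.0]])
X_test = np.array([[250.0, 25.0]])
scaler = MinMax().fit(X_train)
X_train_s = scaler.transform(X_train)
X_test_s = scaler.transform(X_test)
```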

2.8. Complete Ensemble Empirical Mode Decomposition with Adaptive Noise (CEEMDAN)

CEEMDAN was created as an improved version of the EEMD method. EEMD is a noise-assisted signal decomposition technique that addresses the mode mixing problem of EMD by adding white Gaussian noise to the original signal. Over several iterations, the complex signal is decomposed into high-frequency intrinsic mode functions (IMFs) and a low-frequency part called the residual (R) [46]. However, the white Gaussian noise added in EEMD must be removed by averaging over many realizations, which is time-consuming. In addition, the reconstructed signal contains residual noise, and different noise realizations can produce different numbers of IMFs. We therefore use CEEMDAN to overcome these problems.

The CEEMDAN process is described as follows:

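- White noise $w^i(t)$ with standard deviation $\varepsilon_0$ is added to the original signal $x(t)$, as expressed in Equation (8):

$$x^i(t) = x(t) + \varepsilon_0 w^i(t), \quad i = 1, 2, \dots, I \tag{8}$$

where $i$ indexes the realizations and $I$ is the total number of realizations.

- EMD is applied to each noisy realization $x^i(t)$. The first IMF is then obtained by averaging the first modes of all realizations (Equation (9)):

$$IMF_1(t) = \frac{1}{I}\sum_{i=1}^{I} E_1\left(x^i(t)\right) \tag{9}$$

where $E_k(\cdot)$ denotes the operator that returns the k-th mode obtained by the EMD algorithm.

- The residual of the first stage is $r_1(t) = x(t) - IMF_1(t)$. This residual is further decomposed by EMD to obtain the second IMF, as formulated in Equation (10):

$$IMF_2(t) = \frac{1}{I}\sum_{i=1}^{I} E_1\left(r_1(t) + \varepsilon_1 E_1\left(w^i(t)\right)\right) \tag{10}$$

- In each subsequent stage $k = 2, 3, \dots$, the residual $r_k(t)$ and the $(k+1)$-th component are calculated using Equation (11):

$$r_k(t) = r_{k-1}(t) - IMF_k(t), \qquad IMF_{k+1}(t) = \frac{1}{I}\sum_{i=1}^{I} E_1\left(r_k(t) + \varepsilon_k E_k\left(w^i(t)\right)\right) \tag{11}$$

where $IMF_{k+1}(t)$ is the $(k+1)$-th IMF obtained by the CEEMDAN algorithm.

The last two steps are repeated until the stopping criterion of Equation (12) is met:

$$SD = \sum_{t=1}^{T} \frac{\left[h_{n-1}(t) - h_n(t)\right]^2}{h_{n-1}^2(t)} \tag{12}$$

where $T$ represents the length of the sequence, $h_n(t)$ is the sequence after the n-th decomposition, and the threshold on $SD$ is set to the empirical value 0.2.

Finally, the original signal can be decomposed as in Equation (13):

$$x(t) = \sum_{k=1}^{K} IMF_k(t) + R(t) \tag{13}$$

where $K$ is the number of IMFs and $R(t)$ is the final residual.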
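The procedure in Equations (8)–(13) can be sketched compactly in NumPy/SciPy. This is an illustrative sketch, not the implementation used in the study. All function names are hypothetical, and the defaults only echo the trials, noise_scale, and num_siftings values reported for Table 4. It also relies on several simplifying assumptions:

- Spline envelopes are pinned to the signal end points.
- The SD test of Equation (12) is applied in a ratio-of-sums form to stop each sifting.
- The noise amplitude is scaled by the current residual's standard deviation.
- Decomposition stops when the residual has too few extrema to build envelopes.

For production use, a maintained library such as PyEMD is preferable.

```python
import numpy as np
from scipy.interpolate import CubicSpline
from scipy.signal import argrelextrema


def _mean_envelope(h):
    """Mean of the cubic-spline upper and lower envelopes; None if too few extrema."""
    n = len(h)
    maxima = argrelextrema(h, np.greater)[0]
    minima = argrelextrema(h, np.less)[0]
    if len(maxima) < 2 or len(minima) < 2:
        return None
    t = np.arange(n)
    # Simplified boundary handling: both envelopes are pinned to the end points.
    upper = CubicSpline(np.r_[0, maxima, n - 1], np.r_[h[0], h[maxima], h[-1]])(t)
    lower = CubicSpline(np.r_[0, minima, n - 1], np.r_[h[0], h[minima], h[-1]])(t)
    return 0.5 * (upper + lower)


def _sift(x, max_siftings=100, sd_thr=0.2):
    """First EMD mode E_1(x) by sifting; None if x has too few extrema."""
    h = np.array(x, dtype=float)
    for it in range(max_siftings):
        m = _mean_envelope(h)
        if m is None:
            return None if it == 0 else h
        h_new = h - m
        # Ratio-of-sums variant of the SD criterion in Equation (12)
        sd = np.sum((h - h_new) ** 2) / (np.sum(h ** 2) + 1e-12)
        h = h_new
        if sd < sd_thr:
            break
    return h


def emd(x, max_imfs):
    """Plain EMD: list of up to max_imfs IMFs and the final residual."""
    imfs, r = [], np.array(x, dtype=float)
    for _ in range(max_imfs):
        h = _sift(r)
        if h is None:
            break
        imfs.append(h)
        r = r - h
    return imfs, r


def ceemdan(x, trials=100, noise_scale=0.5, max_imfs=14, seed=0):
    """CEEMDAN as in Equations (8)-(11); returns (IMFs, residual) per Equation (13)."""
    rng = np.random.default_rng(seed)
    x = np.array(x, dtype=float)
    noise = rng.standard_normal((trials, len(x)))
    # E_k(w^i): EMD modes of every noise realization, computed once and reused
    noise_modes = [emd(w, max_imfs)[0] for w in noise]

    def noise_mode(i, k):  # k is 1-based; zeros if that mode does not exist
        modes = noise_modes[i]
        return modes[k - 1] if len(modes) >= k else np.zeros_like(x)

    imfs, r = [], x.copy()
    for k in range(max_imfs):
        if _mean_envelope(r) is None:  # residual too smooth to sift further
            break
        eps = noise_scale * np.std(r)  # assumed noise-amplitude scaling
        stage = []
        for i in range(trials):
            # Stage 1 adds raw noise (Eq. 8); later stages add E_k(w^i) (Eqs. 10-11)
            perturbation = noise[i] if k == 0 else noise_mode(i, k)
            mode = _sift(r + eps * perturbation)
            stage.append(mode if mode is not None else np.zeros_like(x))
        imf = np.mean(stage, axis=0)  # ensemble average of the first modes
        imfs.append(imf)
        r = r - imf
    return np.array(imfs), r


t = np.linspace(0, 1, 400)
signal = np.sin(2 * np.pi * 40 * t) + 0.5 * np.sin(2 * np.pi * 4 * t) + t
imfs, residual = ceemdan(signal, trials=10, max_imfs=6)
```

Because each residual is defined by subtracting the averaged IMF, the IMFs plus the final residual reconstruct the input exactly, as Equation (13) requires.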

In selecting the parameters in Table 4, a trade-off between decomposition accuracy and computational efficiency was considered. First, 100 realizations (trials) were selected so that the intrinsic mode functions (IMFs) would capture the features of the original signal without adding excess noise. Second, the noise amplitude $\varepsilon$ was controlled through the noise_scale parameter, which was set to 0.5 to maintain an appropriate signal-to-noise ratio. Finally, the number of siftings (num_siftings) was set to 100 so that each IMF could be extracted accurately.

Table 4.

Parameters used in the implementation of CEEMDAN with their corresponding symbols and values.

CEEMDAN helps to analyze solar irradiance data due to the complex and variable nature of these signals, especially in regions with high climatic variability. Under these conditions, solar irradiance signals exhibit rapid fluctuations and seasonal variations, which can be difficult for the model to learn directly. CEEMDAN can effectively separate high-frequency components, such as noise and rapid fluctuations, from low-frequency trends, such as seasonal and long-term variations, by decomposing the data into different IMFs, allowing the model to learn these patterns more efficiently, increasing its robustness and accuracy.

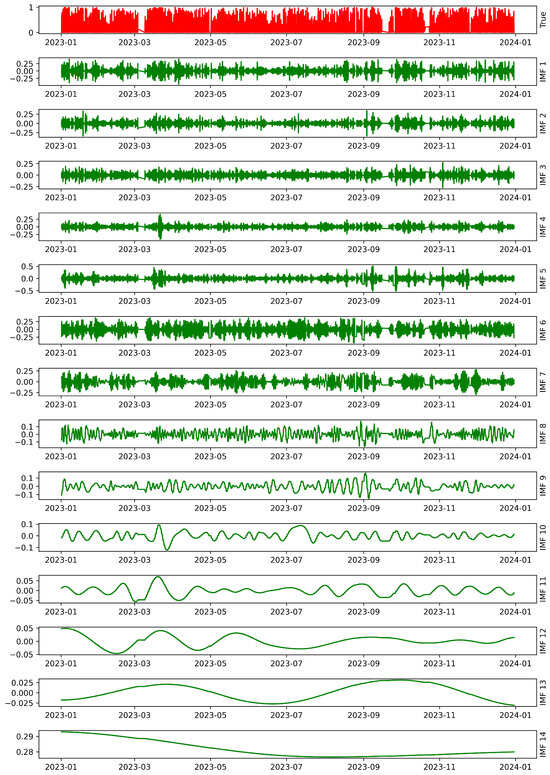

In Figure 4, the original solar irradiance (10 min resampled) time series is presented at the top (labeled “True”), followed by the different IMFs labeled 1 through 14. Each IMF can represent a different frequency component of the original signal:

Figure 4.

CEEMDAN decomposition of solar irradiance for the year 2023.

- IMF 1 to IMF 4: These may be capturing high-frequency variations, which may be associated with rapid and noisy fluctuations in solar irradiance.

- IMF 5 to IMF 8: These may be representing mid-frequency components, which could be related to more significant periodic changes throughout the day.

- IMF 9 to IMF 14: These may capture low-frequency variations, which correspond to longer trends and seasonal changes in solar irradiance.

CEEMDAN was used in this research because it decomposes the original signal (solar irradiance) into components that the model can learn more easily, facilitating the identification of specific patterns in the time series. This helps our forecasting model handle both rapid variations and long-term trends in the solar irradiance data more effectively.

To enable real-time forecasting, CEEMDAN can be applied using a sliding window approach on recent historical data (e.g., one year) to ensure the inclusion of new information while maintaining computational efficiency, minimizing IMF variations, and preserving the method’s robustness in high variability environments.

2.9. Convolutional Neural Networks (CNN)

CNNs are specialized deep learning models for processing data with a grid-like topology. Time series data can be viewed as a one-dimensional grid of samples, whereas image data form a two-dimensional grid of pixels. In at least one of their layers, CNNs replace the generic matrix multiplication used in other neural networks with convolution, a specific type of linear operation. Over time, various CNN architectures have emerged, such as multichannel and multi-head designs, although the basic structure remains essentially the same [47].

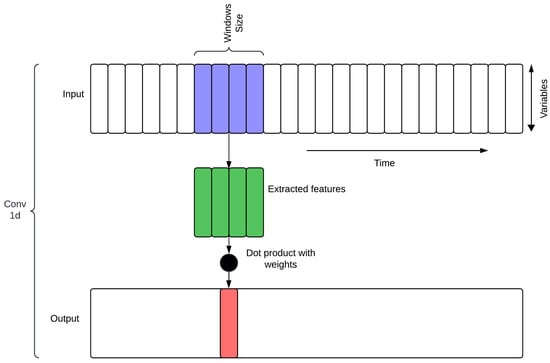

CNN models are versatile and can handle multiple input data formats, including 1D, 2D, and nD, where data typically consist of 1 to n channels [48]. This study employed a 1D input data format within the proposed hybrid model (see Figure 5). The time series data were treated as a one-dimensional grid in which each variable is represented as a distinct channel. This allows the CNN to learn the spatial relationships between variables while preserving the temporal sequence. The sliding window size must be chosen so that the model captures the relevant temporal features. Our CNN uses three convolutional layers with a kernel size of 3 and a stride of 1. This configuration was optimized to balance computational efficiency and the ability to learn hierarchical patterns. The CNN thus extracts meaningful features that are passed on to the downstream components of the hybrid encoder-decoder model, improving its overall predictive accuracy.

Figure 5.

CNN 1D input data format.
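The 1D input format of Figure 5 can be sketched as a sliding-window transformation that turns a (time, features) array into channel-first samples of shape (samples, channels, window). This is the layout expected by PyTorch's `Conv1d`, for example. The window length, target column, and helper names are hypothetical. The horizon is given in resampled steps, so at a 10 min resolution the 1, 6, and 12 h horizons correspond to 6, 36, and 72 steps. For simplicity, the sketch ignores the overnight gaps created by the 6:00 to 18:00 filter.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def make_windows(data, target_col, window, horizon):
    """Build channel-first CNN inputs and targets `horizon` steps ahead.

    data: array of shape (time, features); each feature becomes one input channel.
    Returns X of shape (samples, features, window) and y of shape (samples,).
    """
    data = np.asarray(data, dtype=float)
    n_samples = len(data) - window - horizon + 1
    # Windows over the time axis: shape (time - window + 1, features, window)
    X = sliding_window_view(data, window, axis=0)[:n_samples]
    first_target = window + horizon - 1  # index of the target for the first window
    y = data[first_target:first_target + n_samples, target_col]
    return X, y


def horizon_steps(hours, resolution_min):
    """Convert a forecast horizon in hours to a number of resampled steps."""
    return int(hours * 60 // resolution_min)


data = np.arange(20, dtype=float).reshape(10, 2)  # 10 time steps, 2 variables
X, y = make_windows(data, target_col=0, window=3, horizon=2)
```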

The use of CNNs in this research follows the cited literature, in which CNNs are used to extract and identify patterns in meteorological variables to improve solar irradiance forecasting. We aim to exploit the ability of CNNs to automatically learn hierarchical features from raw input data, so that the model can capture complex temporal dependencies and spatial correlations within the data. Within the hybrid model, the CNN complements the other components by providing feature extraction, improving the overall predictive accuracy of solar irradiance forecasts.

2.10. Long Short-Term Memory (LSTM)

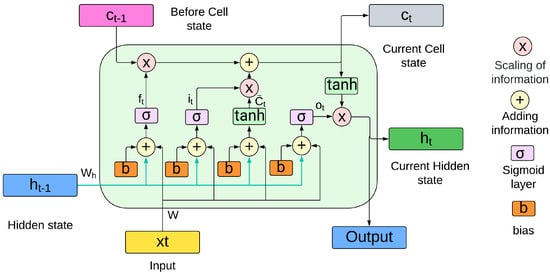

The LSTM network depicted in Figure 6 is a variant of recurrent neural networks (RNNs). RNNs can process sequential data and retain information over time. However, basic RNNs suffer from vanishing and exploding gradients, which limit their ability to capture long-term dependencies [49,50]. LSTMs were developed to address this problem; they incorporate forget, input, and output gates and an internal memory unit called the cell state. The forget gate removes irrelevant information, the input gate updates the cell state with new information, and the output gate filters the current state to transmit the most important information. Several studies have shown LSTMs to be highly effective for accurate forecasting in a variety of applications, including renewable energy.

Figure 6.

Process of LSTM network.

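The forget gate $f_t$ is calculated by applying the sigmoid function to a linear combination of the previous hidden state $h_{t-1}$ and the current input $x_t$, as shown in Equation (14).

$$f_t = \sigma\left(W_f [h_{t-1}, x_t] + b_f\right) \tag{14}$$

Next, the input gate $i_t$ is determined in a similar manner, as shown in Equation (15).

$$i_t = \sigma\left(W_i [h_{t-1}, x_t] + b_i\right) \tag{15}$$

The new candidate information $\tilde{C}_t$ for the memory cell is calculated using the hyperbolic tangent function (tanh), as shown in Equation (16).

$$\tilde{C}_t = \tanh\left(W_C [h_{t-1}, x_t] + b_C\right) \tag{16}$$

The cell state $C_t$ is updated by combining two terms: the old information, modulated by the forget gate, and the new candidate information, weighted by the input gate. This update is described in Equation (17).

$$C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t \tag{17}$$

The output gate $o_t$ determines which part of the cell state is passed to the next hidden state and is defined by Equation (18).

$$o_t = \sigma\left(W_o [h_{t-1}, x_t] + b_o\right) \tag{18}$$

Finally, the new hidden state $h_t$ is calculated by applying tanh to the cell state and multiplying the result by the output gate, as presented in Equation (19).

$$h_t = o_t \odot \tanh(C_t) \tag{19}$$

where $W_f$, $W_i$, $W_C$, and $W_o$ are the weights associated with each gate, and $b_f$, $b_i$, $b_C$, and $b_o$ are the corresponding biases. The notation $[h_{t-1}, x_t]$ denotes the concatenation of the previous hidden state and the current input, and $\odot$ denotes element-wise multiplication. The function $\sigma$ represents the sigmoid activation that regulates the flow of information through the gates. The tanh function is used to generate the new memory content because it captures nonlinear relationships efficiently and bounds values to $(-1, 1)$.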
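Equations (14)–(19) translate almost line by line into a single NumPy time step. This is a didactic sketch. The dictionary layout of the weights and the function name `lstm_step` are illustrative choices, not how deep learning frameworks store LSTM parameters.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM time step following Equations (14)-(19).

    W: dict of (hidden, hidden + inputs) matrices keyed "f", "i", "c", "o".
    b: dict of (hidden,) bias vectors with the same keys.
    """
    z = np.concatenate([h_prev, x_t])        # [h_{t-1}, x_t]
    f = sigmoid(W["f"] @ z + b["f"])          # forget gate, Eq. (14)
    i = sigmoid(W["i"] @ z + b["i"])          # input gate, Eq. (15)
    c_tilde = np.tanh(W["c"] @ z + b["c"])    # candidate memory, Eq. (16)
    c = f * c_prev + i * c_tilde              # cell state update, Eq. (17)
    o = sigmoid(W["o"] @ z + b["o"])          # output gate, Eq. (18)
    h = o * np.tanh(c)                        # hidden state, Eq. (19)
    return h, c


# Usage: run a random sequence through one cell
rng = np.random.default_rng(0)
hidden, inputs = 4, 3
W = {g: rng.normal(0, 0.5, (hidden, hidden + inputs)) for g in "fico"}
b = {g: np.zeros(hidden) for g in "fico"}
h, c = np.zeros(hidden), np.zeros(hidden)
for x_t in rng.normal(size=(5, inputs)):
    h, c = lstm_step(x_t, h, c, W, b)
```

With all weights and biases set to zero, every gate outputs 0.5 and the candidate is 0. The cell then keeps exactly half of its previous state, which makes the role of the forget gate in Equation (17) easy to verify.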

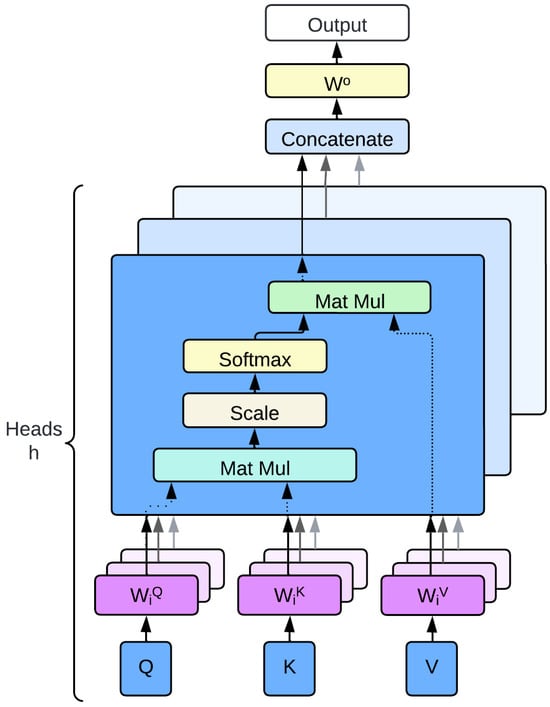

2.11. Multi-Head Attention Mechanism (ATT)

The ATT (see Figure 7), first presented in [51], was a major advance in DL architectures, especially as the core of the transformer model. It has since been used in several applications to improve learning capability [52]. The mechanism uses three main matrices:

- the query matrix Q, which represents the encoded temporal features to which attention is paid;
- the key matrix K, which represents the encoded features available for comparison;
- the value matrix V, which contains the features used to compute the attention result.

Multiple attention heads, each with its own learned projections of these matrices, attend in parallel to different parts of the input sequence, thus capturing a wide range of contextual information.

Figure 7.

Illustration of the multi-head attention mechanism, showing how multiple attention heads operate in parallel and are concatenated to form the final output.

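Mathematically, the ATT is defined by Equations (20) and (21).

$$\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \dots, \mathrm{head}_h)\, W^O \tag{20}$$

$$\mathrm{head}_i = \mathrm{Attention}\left(Q W_i^Q, K W_i^K, V W_i^V\right) \tag{21}$$

where Q, K, and V represent the query, key, and value matrices, respectively, and $h$ is the number of heads. The projection matrices $W_i^Q$, $W_i^K$, and $W_i^V$ correspond to each attention head i, and $W^O$ is the output projection matrix. The function Attention is defined as the scaled dot-product attention (see Equation (22)).

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V \tag{22}$$

where $d_k$ denotes the dimensionality of the keys. The softmax function ensures that the attention weights are positive and sum to one, so that values are weighted according to their relevance to each query.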
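Equations (20)–(22) can be sketched in NumPy for the self-attention case, with Q = K = V = X. That case is an assumption of this sketch, since the exact inputs of the attention block are not spelled out here. The 8 heads of dimension 64 follow the values reported in Section 2.13. The model width `d_model = 256` is an assumed value chosen to match the LSTM hidden size, and the weights are random placeholders.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def attention(Q, K, V):
    """Scaled dot-product attention, Equation (22)."""
    d_k = K.shape[-1]
    weights = softmax(Q @ K.T / np.sqrt(d_k), axis=-1)
    return weights @ V, weights


def multi_head_attention(X, W_q, W_k, W_v, W_o):
    """Multi-head self-attention, Equations (20)-(21), with Q = K = V = X.

    X: (seq_len, d_model); W_q, W_k, W_v: lists of (d_model, d_k) per-head matrices;
    W_o: (n_heads * d_k, d_model) output projection.
    """
    heads, weights = [], []
    for Wq, Wk, Wv in zip(W_q, W_k, W_v):
        head, w = attention(X @ Wq, X @ Wk, X @ Wv)
        heads.append(head)
        weights.append(w)
    return np.concatenate(heads, axis=-1) @ W_o, np.stack(weights)


def random_projections(rng, n_heads, d_model, d_k):
    """Hypothetical per-head projection matrices for the example."""
    return [rng.normal(0, d_model ** -0.5, (d_model, d_k)) for _ in range(n_heads)]


rng = np.random.default_rng(0)
seq_len, d_model, n_heads, d_k = 24, 256, 8, 64
X = rng.normal(size=(seq_len, d_model))
W_q, W_k, W_v = (random_projections(rng, n_heads, d_model, d_k) for _ in range(3))
W_o = rng.normal(0, (n_heads * d_k) ** -0.5, (n_heads * d_k, d_model))
out, attn = multi_head_attention(X, W_q, W_k, W_v, W_o)
```

Each of the 8 heads produces its own 24 × 24 weight matrix whose rows sum to one. Their concatenated outputs (8 × 64 = 512 columns) are projected back to `d_model` by $W^O$.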

We use this mechanism to improve the ability of our hybrid model to capture long-term dependencies and complex relationships in climate data. To this end, the ATT can identify hidden patterns and time-varying correlations. This results in more accurate and robust forecasts, especially for dynamic and seasonal features.

2.12. Positional Encoding and Embedding

Positional encoding is a technique that allows our hybrid model to incorporate temporal position information into the input sequences. This is useful for capturing the sequential nature of time series data. Without it, this order would be lost in the ATT, because attention by itself is insensitive to the order of its inputs. The embedding layer, in turn, maps the original input features (variables such as irradiance, temperature, and wind speed) into a higher-dimensional space. This allows the model to capture complex patterns and interactions between variables.

The embedding operation can be expressed as Equation (23).

$$E = W_e X + b_e \tag{23}$$

where $X$ represents the input feature vector, $W_e$ is the embedding matrix, and $b_e$ is the bias term.

Consequently, we add positional encoding so that we can add unique information about the time steps in the input sequence, with each position in the input sequence thus having a distinct time position. The positional encoding vector of each time step is represented using sinusoidal functions (see Equations (24) and (25)).

where is the position of the time step in the sequence, i represents the dimension, and is the dimensionality of the embedding space. Including of positional encoding allows the temporal structure of the data to be maintained, which is essential for the model to understand the temporal positions of the data in time-dependent problems, e.g., solar irradiance prediction.
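The following minimal pure-Python sketch shows the linear embedding of Equation (23) followed by the sinusoidal positional encoding of Equations (24) and (25). The function names, the toy weights, and the embedding width of 4 are hypothetical, chosen only for illustration. The trained model's embedding width and weights are not given here.

```python
import math

def embed(x_t, w_e, b_e):
    """Equation (23): map one input feature vector x_t (length n) to d_model values.
    w_e is a d_model x n weight matrix (list of rows), b_e a length-d_model bias."""
    return [sum(w * v for w, v in zip(row, x_t)) + b for row, b in zip(w_e, b_e)]

def sinusoidal_positional_encoding(seq_len, d_model):
    """Equations (24)-(25): sines in even columns, cosines in odd columns."""
    pe = [[0.0] * d_model for _ in range(seq_len)]
    for pos in range(seq_len):
        # `col` is the even column index, i.e. 2i in Equations (24)-(25).
        for col in range(0, d_model, 2):
            angle = pos / (10000 ** (col / d_model))
            pe[pos][col] = math.sin(angle)
            if col + 1 < d_model:
                pe[pos][col + 1] = math.cos(angle)
    return pe

def embed_with_positions(sequence, w_e, b_e):
    """Embed every time step and add its positional encoding."""
    pe = sinusoidal_positional_encoding(len(sequence), len(b_e))
    return [[e + p for e, p in zip(embed(x_t, w_e, b_e), pe_row)]
            for x_t, pe_row in zip(sequence, pe)]

# Toy example: 3 time steps with 2 features each, embedded into 4 dimensions.
w_e = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [1.0, -1.0]]
b_e = [0.0, 0.0, 0.0, 0.0]
sequence = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
encoded = embed_with_positions(sequence, w_e, b_e)
```

The encoding is added rather than concatenated, so the embedding width is unchanged. Position 0 always receives the pattern $(0, 1, 0, 1, \ldots)$.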

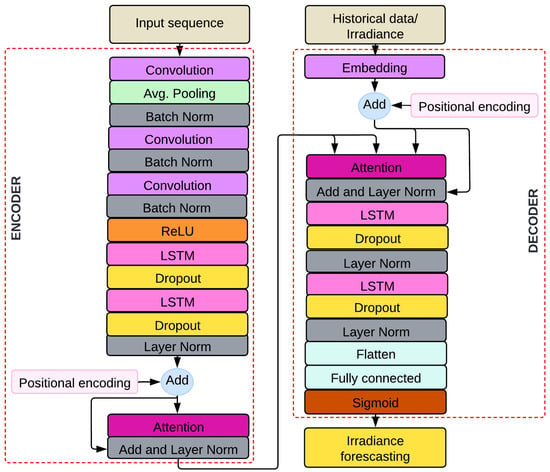

2.13. CEEMDAN-CNN-ATT-LSTM Model

Figure 8 presents a hybrid architecture for solar irradiance forecasting that combines CNNs, LSTM networks, and an ATT. This combination leverages the strengths of each component: CNNs for spatial feature extraction (i.e., relationships between the input variables), LSTMs to capture short-term temporal dependencies, and an ATT to extract long-term patterns from the input sequence.

Figure 8.

Architecture of the proposed model.

Our proposed CEEMDAN-CNN-ATT-LSTM model employs a carefully designed architecture with specific hyperparameters to optimize its performance. The CNN encoder consists of three sequential 1D convolutional layers. The first convolutional layer has 256 filters, the second 128 filters, and the third 256 filters, each with a kernel size of 3 and a padding of 1 to preserve the input size. Batch normalization is introduced after each convolutional layer to stabilize and speed up training. In addition, dropout regularization with a specific probability is applied after each layer to avoid overfitting. After the last convolutional layer, a ReLU activation function is applied to introduce nonlinearity. The goal of this setup is to extract spatial features from the input data while maintaining local temporal dependencies.

The LSTM component consists of two stacked LSTM layers with 256 hidden units each. Dropout with a rate of 0.2 is applied between the layers to mitigate overfitting and improve generalization, and the outputs of the LSTM layers are normalized with layer normalization to stabilize training.

For the ATT, eight attention heads were employed, each with a projection dimension of 64. This design allows the model to attend to different parts of the input sequence simultaneously, capturing both short- and long-term dependencies. Positional encoding is added to maintain the temporal order within the input sequence, so that the model correctly interprets the sequential nature of the data.

The final dense layer consists of 128 units and is followed by a single output unit with a sigmoid activation function, which produces a normalized forecast. The sigmoid restricts the output to the interval (0, 1), a range suitable for normalized solar irradiance values.

The model input is the sequence of irradiance data preprocessed using the CEEMDAN decomposition. In addition to irradiance, variables such as temperature and air humidity are included. Mathematically, the input is a tensor $\mathbf{x} \in \mathbb{R}^{q \times n}$, where $q$ is the sequence length (number of time points) and $n$ is the number of features (in this case, 19 features). The input shape is therefore $q \times 19$.

Three cascaded 1D convolutional layers are applied in the encoder, each using a set of filters to extract local features from the input sequence. The input of the first convolutional layer consists of the irradiance and the other meteorological variables at each time step, and each subsequent layer receives the output of the previous one. The convolution operation is expressed in Equation (26):

$$\mathbf{F}^{(l)} = \mathrm{ReLU}\left(\mathbf{W}^{(l)} * \mathbf{F}^{(l-1)} + \mathbf{b}^{(l)}\right), \qquad \mathbf{F}^{(0)} = \mathbf{x} \tag{26}$$

where $\mathbf{F}^{(l)}$ is the output of the $l$-th convolutional layer, $\mathbf{W}^{(l)} \in \mathbb{R}^{k \times n \times m}$ are the weights (with $k$ being the kernel size, $n$ the input feature dimension, and $m$ the output feature dimension), $\mathbf{b}^{(l)} \in \mathbb{R}^{m}$ are the biases, and $*$ denotes the convolution operation. The ReLU activation function introduces non-linearity, allowing the model to learn complex patterns in the data.

Following each convolutional layer, average pooling is applied to reduce the temporal dimension of the feature maps and help prevent overfitting. This operation is defined in Equation (27):

$$\mathbf{P}^{(l)}_t = \frac{1}{s} \sum_{j=0}^{s-1} \mathbf{F}^{(l)}_{s t + j} \tag{27}$$

where $\mathbf{P}^{(l)}$ is the pooled output of the $l$-th layer and $s$ is the pooling window size. After pooling, batch normalization is applied to standardize the outputs, as shown in Equation (28):

$$\hat{\mathbf{P}}^{(l)} = \frac{\mathbf{P}^{(l)} - \mu^{(l)}}{\sqrt{\left(\sigma^{(l)}\right)^2 + \epsilon}} \tag{28}$$

where $\mu^{(l)}$ and $(\sigma^{(l)})^2$ are the mean and variance of the output of the $l$-th layer, and $\epsilon$ is a small constant for numerical stability.
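The encoder operations of Equations (26)–(28) can be sketched in pure Python as follows. This is an illustration under stated assumptions, not the authors' code:

- The convolution uses stride 1 with zero padding $(k-1)/2$. For $k = 3$ this gives the padding of 1 described above and preserves the sequence length.
- Pooling uses non-overlapping windows.
- Batch normalization omits the learnable scale and shift that deep-learning libraries usually add.

```python
import math

def conv1d_same(x, weights, bias):
    """Equation (26) for one layer: stride-1 1D convolution plus ReLU.
    x: q time steps, each a list of n features.
    weights: m filters, each a k x n nested list; bias: m values.
    Zero padding of (k - 1) // 2 on both sides keeps q output steps for odd k.
    As in deep-learning libraries, the kernel is not flipped (cross-correlation)."""
    q, n = len(x), len(x[0])
    k = len(weights[0])
    pad = (k - 1) // 2
    zeros = [0.0] * n
    padded = [zeros] * pad + x + [zeros] * pad
    out = []
    for t in range(q):
        window = padded[t:t + k]
        row = []
        for filt, b in zip(weights, bias):
            s = b + sum(filt[j][c] * window[j][c] for j in range(k) for c in range(n))
            row.append(max(0.0, s))  # ReLU
        out.append(row)
    return out

def avg_pool1d(x, s):
    """Equation (27): average over non-overlapping windows of s time steps."""
    return [[sum(x[t * s + j][c] for j in range(s)) / s for c in range(len(x[0]))]
            for t in range(len(x) // s)]

def batch_norm(batch, eps=1e-5):
    """Equation (28) without learnable scale/shift: standardize each channel using
    its mean and (biased) variance over all samples and time steps in the batch."""
    m = len(batch[0][0])
    values = [[fm[t][c] for fm in batch for t in range(len(fm))] for c in range(m)]
    mean = [sum(v) / len(v) for v in values]
    var = [sum((u - mu) ** 2 for u in v) / len(v) for v, mu in zip(values, mean)]
    return [[[(fm[t][c] - mean[c]) / math.sqrt(var[c] + eps) for c in range(m)]
             for t in range(len(fm))] for fm in batch]

# Toy input: 4 time steps, 2 features; one k = 3 filter that sums feature 0.
x = [[1.0, 0.0], [2.0, 0.0], [3.0, 0.0], [4.0, 0.0]]
weights = [[[1.0, 0.0], [1.0, 0.0], [1.0, 0.0]]]
features = conv1d_same(x, weights, [0.0])   # [[3.0], [6.0], [9.0], [7.0]]
pooled = avg_pool1d(features, 2)            # [[4.5], [8.0]]
normalized = batch_norm([pooled])
```

Padding by $(k-1)/2$ is what keeps the output length equal to the input length. The subsequent pooling step is what actually shortens the sequence, here from 4 steps to 2.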

The features extracted by the CNN are then passed through two LSTM layers. The input to each LSTM layer consists of the normalized outputs of the previous stage, i.e., sequences of feature vectors over time. The LSTM operation is given in Equation (29):

$$\mathbf{h}_t = \mathrm{Dropout}\left(\mathrm{LayerNorm}\left(\mathrm{LSTM}\left(\hat{\mathbf{P}}_t, \mathbf{h}_{t-1}\right)\right)\right) \tag{29}$$

where $\hat{\mathbf{P}}_t$ is the normalized CNN feature vector at time step $t$, LayerNorm represents layer normalization, and Dropout is used to prevent overfitting. Each LSTM layer has 256 hidden units. The LSTM layers capture the temporal dependencies in the data, with $\mathbf{h}_t$ being the output at time step $t$.
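Equation (29) can be illustrated with a minimal pure-Python sketch: one standard LSTM cell, followed by layer normalization and inverted dropout. The gate equations are the textbook LSTM formulation. The following choices are assumptions made for illustration:

- toy sizes of 3 inputs and 4 hidden units (instead of 256);
- random weights;
- no learnable LayerNorm parameters;
- feeding the raw hidden state, not the normalized one, back into the recurrence.

```python
import math
import random

def sigmoid(v):
    """Numerically stable logistic function."""
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)

def matvec(w, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in w]

def lstm_step(x_t, h_prev, c_prev, p):
    """One step of a standard LSTM cell.
    For each gate name in 'ifog', p holds W<name> (H x n), U<name> (H x H), b<name> (H)."""
    def gate(name, act):
        pre = [a + b + c for a, b, c in zip(matvec(p["W" + name], x_t),
                                              matvec(p["U" + name], h_prev),
                                              p["b" + name])]
        return [act(v) for v in pre]
    i = gate("i", sigmoid)    # input gate
    f = gate("f", sigmoid)    # forget gate
    o = gate("o", sigmoid)    # output gate
    g = gate("g", math.tanh)  # candidate cell state
    c_t = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c_prev, i, g)]
    h_t = [ov * math.tanh(cv) for ov, cv in zip(o, c_t)]
    return h_t, c_t

def layer_norm(v, eps=1e-5):
    """Standardize one vector across its features (no learnable scale/shift)."""
    mu = sum(v) / len(v)
    var = sum((u - mu) ** 2 for u in v) / len(v)
    return [(u - mu) / math.sqrt(var + eps) for u in v]

def dropout(v, rate, rng, training=True):
    """Inverted dropout: zero each unit with probability `rate`, rescale the rest."""
    if not training or rate == 0.0:
        return list(v)
    return [0.0 if rng.random() < rate else u / (1.0 - rate) for u in v]

rng = random.Random(42)
n_in, hidden = 3, 4
params = {}
for name in "ifog":
    params["W" + name] = [[rng.uniform(-0.3, 0.3) for _ in range(n_in)] for _ in range(hidden)]
    params["U" + name] = [[rng.uniform(-0.3, 0.3) for _ in range(hidden)] for _ in range(hidden)]
    params["b" + name] = [0.0] * hidden

h, c = [0.0] * hidden, [0.0] * hidden
outputs = []
for _ in range(5):
    x_t = [rng.uniform(-1.0, 1.0) for _ in range(n_in)]
    h, c = lstm_step(x_t, h, c, params)
    # Equation (29): LayerNorm, then Dropout (rate 0.2 as in the text) on the emitted
    # output; training=False disables dropout, as at inference time.
    outputs.append(dropout(layer_norm(h), 0.2, rng, training=False))
```

At inference time dropout is disabled, so each emitted vector is simply the layer-normalized hidden state, with mean approximately zero.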

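ATT is then applied to the outputs of the two LSTM layers after the positional encoding has been added (see Equation (30)):

$$\mathbf{Z} = \mathbf{H} + \mathbf{PE} \tag{30}$$

where $\mathbf{H}$ stacks the LSTM outputs $\mathbf{h}_t$ over the sequence and $\mathbf{PE}$ is the positional encoding of Equations (24) and (25). The attention layer computes the importance of each time step in the sequence, helping the model focus on the most relevant features for predicting solar irradiance, as expressed in Equation (31):

$$\mathbf{A} = \mathrm{MultiHead}(\mathbf{Z}, \mathbf{Z}, \mathbf{Z}) \tag{31}$$

Residual connections are then employed (see Equation (32)) to add the original input back to the output of the attention mechanism:

$$\mathbf{R} = \mathbf{Z} + \mathbf{A} \tag{32}$$

This technique preserves the original input information, enhancing the stability and performance of the model.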

Before the ATT is applied in the decoder, the target history (i.e., historical irradiance data) is embedded to map the input features into a higher-dimensional space that can capture more complex patterns. The embedding operation is described in Equation (33):

$$\mathbf{E}_y = \mathrm{Embedding}\left(\mathbf{y}_{\text{hist}}\right) \tag{33}$$

where $\mathbf{y}_{\text{hist}}$ represents the sequence of past irradiance values, and Embedding is the operation that maps each of these values to a vector in the higher-dimensional space $\mathbb{R}^{d}$, where $d$ is the embedding dimension.

To incorporate temporal information into the sequence, positional encoding is added to the embedded target history, as shown in Equation (34):

$$\tilde{\mathbf{E}}_y = \mathbf{E}_y + \mathbf{PE} \tag{34}$$

The positional encoding allows the model to distinguish the positions of the time steps in the sequence, which is crucial for tasks involving sequential data.

A multi-head attention mechanism is then applied to the outputs of the encoder and the positionally encoded target history, as expressed in Equation (35).

Then, residual connections are employed to add the original input back to the output of the ATT (see Equation (36)).

The features extracted are then passed through two LSTM layers. The LSTM operation is given in Equation (37).

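The output of the LSTM layers is flattened into a one-dimensional vector, as shown in Equation (38):

$$\mathbf{f} = \mathrm{Flatten}(\mathbf{D}) \tag{38}$$

where $\mathbf{D}$ denotes the output sequence of the decoder LSTM layers. This vector is then passed through a fully connected layer to produce the final prediction (see Equation (39)):

$$\hat{y} = \mathbf{W}_f \mathbf{f} + b_f \tag{39}$$

where $\mathbf{W}_f$ and $b_f$ are the weights and bias of the fully connected layer, and $\hat{y}$ is the predicted solar irradiance value. The output is then passed through a sigmoid function to constrain the predictions (see Equation (40)):

$$\hat{y}_{\text{out}} = \sigma(\hat{y}) = \frac{1}{1 + e^{-\hat{y}}} \tag{40}$$

where $\sigma(\cdot)$ represents the sigmoid activation. It maps the predicted value to the interval $(0, 1)$ and therefore guarantees a non-negative output.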
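The output head of Equations (38)–(40) can be sketched as follows. For brevity, this minimal sketch collapses the 128-unit dense layer described earlier into the single fully connected layer of Equation (39). The `denormalize` helper is hypothetical: it assumes the targets were min-max scaled, which the text does not state. The 0 to 1200 W/m² range used in the test is likewise only illustrative.

```python
import math

def stable_sigmoid(z):
    """Equation (40), written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def flatten(seq):
    """Equation (38): concatenate the per-time-step output vectors."""
    return [v for step in seq for v in step]

def output_head(decoder_out, w_f, b_f):
    """Equations (38)-(40): flatten, one fully connected layer, squash to (0, 1)."""
    f = flatten(decoder_out)
    if len(f) != len(w_f):
        raise ValueError("w_f must have one weight per flattened feature")
    y_hat = sum(w * v for w, v in zip(w_f, f)) + b_f
    return stable_sigmoid(y_hat)

def denormalize(y_norm, y_min, y_max):
    """Hypothetical inverse min-max scaling from (0, 1) back to W/m^2."""
    return y_min + y_norm * (y_max - y_min)

# Toy decoder output: 2 time steps x 2 features.
pred = output_head([[0.2, -0.1], [0.4, 0.3]], [0.5, 0.5, 0.5, 0.5], -0.4)
```

Because the sigmoid output lies in (0, 1), mapping it back to physical units requires the inverse of whatever normalization was applied to the targets.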

Algorithms 1 and 2 describe the training and forecasting processes of the hybrid model. In the first phase, the model is trained iteratively on the training set, adjusting its parameters to minimize a loss function $\mathcal{L}$. After each epoch, the model is evaluated on a validation set to check that it generalizes beyond the training data. The prediction phase, which follows training, uses the optimized model to generate predictions on the test data.

Algorithm 1: Training and Prediction with CNN-LSTM-MultiHeadAttention Model

Algorithm 2: Prediction with CNN-LSTM-MultiHeadAttention Model
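The training and prediction procedure summarized in Algorithms 1 and 2 follows a common pattern: mini-batch training to minimize a loss, a validation pass after each epoch, and prediction on held-out data. The minimal pure-Python sketch below illustrates that generic pattern. A one-feature linear model and a mean squared error loss stand in for the CNN-ATT-LSTM network and its loss $\mathcal{L}$. Keeping the parameters with the lowest validation loss is an assumption, not a detail confirmed by the text.

```python
import random

def mse(params, data):
    """Mean squared error of the linear stand-in model y = a*x + b."""
    a, b = params
    return sum((a * x + b - y) ** 2 for x, y in data) / len(data)

def grad(params, data):
    """Gradient of the MSE with respect to (a, b) on one mini-batch."""
    a, b = params
    n = len(data)
    ga = sum(2.0 * (a * x + b - y) * x for x, y in data) / n
    gb = sum(2.0 * (a * x + b - y) for x, y in data) / n
    return ga, gb

def train(train_set, val_set, epochs=200, lr=0.1, batch_size=8, seed=0):
    """Mini-batch gradient descent with one validation pass per epoch."""
    rng = random.Random(seed)
    params = (0.0, 0.0)
    best_params, best_val = params, float("inf")
    data = list(train_set)
    for _ in range(epochs):
        rng.shuffle(data)  # new mini-batch order every epoch
        for start in range(0, len(data), batch_size):
            ga, gb = grad(params, data[start:start + batch_size])
            params = (params[0] - lr * ga, params[1] - lr * gb)
        val_loss = mse(params, val_set)  # evaluate on unseen validation data
        if val_loss < best_val:          # keep the best checkpoint (assumption)
            best_params, best_val = params, val_loss
    return best_params, best_val

def predict(params, xs):
    """Prediction phase: apply the selected parameters to new inputs."""
    a, b = params
    return [a * x + b for x in xs]

# Synthetic data from y = 2x + 0.5 plus small noise (illustrative only).
data_rng = random.Random(1)

def make_split(n):
    xs = [data_rng.random() for _ in range(n)]
    return [(x, 2.0 * x + 0.5 + data_rng.gauss(0.0, 0.05)) for x in xs]

train_set, val_set, test_set = make_split(64), make_split(16), make_split(16)
params, best_val = train(train_set, val_set)
test_predictions = predict(params, [x for x, _ in test_set])
```

The validation set influences only which parameters are kept, never the gradient updates. This is what makes the test set a fair check of generalization.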

2.14. Data Splitting

The dataset was divided according to the two distinct seasons of the study area, summer and winter, to account for its specific climatic conditions. Since data are available for an entire year, different periods are used for the model's training, validation, and testing.

For the winter season, data from December to May 2023 are used for training, January 2024 for validation, and February 2024 for testing the model with unseen data. As for the summer season, data from December to May 2023 are used for training, July 2024 for validation, and August 2024 for testing. This strategy considers seasonal variations, which can significantly affect the model’s accuracy.
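A chronological, month-based split like the one described above can be sketched as follows. The records are synthetic daily placeholders, not station data. The validation and test months follow the text. The text does not state the year of the December in "December to May 2023", so the example reads it as December 2022 purely as an assumption. The summer split would be built the same way, with July 2024 for validation and August 2024 for testing.

```python
from datetime import datetime, timedelta

def month_range(start, end):
    """Inclusive list of (year, month) tuples from start to end."""
    year, month = start
    months = []
    while (year, month) <= end:
        months.append((year, month))
        year, month = (year + 1, 1) if month == 12 else (year, month + 1)
    return months

def split_by_months(records, train_months, val_months, test_months):
    """Assign each (timestamp, value) record to a split according to its calendar month."""
    splits = {"train": [], "val": [], "test": []}
    lookup = {}
    for name, months in (("train", train_months), ("val", val_months), ("test", test_months)):
        for key in months:
            lookup[key] = name
    for ts, value in records:
        name = lookup.get((ts.year, ts.month))
        if name is not None:  # records from unused months are dropped
            splits[name].append((ts, value))
    return splits

# Synthetic daily records; the values are placeholders, not station data.
start = datetime(2022, 12, 1)
records = [(start + timedelta(days=d), 0.0) for d in range(460)]

# Winter split: validation in January 2024 and testing in February 2024, as in the text.
# ASSUMPTION: "December to May 2023" is read as December 2022 through May 2023.
winter = split_by_months(records,
                         month_range((2022, 12), (2023, 5)),
                         [(2024, 1)],
                         [(2024, 2)])
```

Splitting by calendar month rather than by random sampling keeps every test observation later than every training observation. This avoids leaking future information into training.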

2.15. Model Hyperparameters

Table 5 shows the hyperparameters used in the hybrid model; they were selected because they yielded the best model performance. The sequence length and the prediction length were set to 144, allowing the model to process data sequences of 144 time steps. A batch size of 128 was used to balance computational efficiency and training stability. The model includes 2 LSTM layers, which allow temporal dependencies in the data to be captured more effectively. In addition, 8 attention heads were used to improve the model's ability to focus on relevant parts of the sequence. Finally, 3 convolutional layers were included to extract spatial features from the data.

Table 5.

Hyperparameters used in CNN-ATT-LSTM.

2.16. Model Evaluation Metrics

We evaluate the proposed model with several error metrics commonly used for forecasting models: the root mean square error (RMSE), the mean absolute error (MAE), the mean absolute percentage error (MAPE), and the coefficient of determination ($R^2$). These metrics are defined in Equations (41)–(44).

- Root mean square error (RMSE): RMSE is the square root of the mean of the squared differences between the predicted values ($\hat{y}_i$) and the actual values ($y_i$). It is highly sensitive to large errors, because these are squared before averaging. A lower RMSE indicates higher predictive accuracy, and a value of zero would represent a perfect model. RMSE is calculated as in Equation (41): $\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}$.

- Mean absolute error (MAE): MAE is defined as in Equation (42): $\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right|$. This metric is the mean of the absolute differences between predicted and actual values. In contrast to RMSE, MAE does not penalize large errors disproportionately, so it is a good option when all errors should be weighted equally. A lower MAE indicates better model performance.

- Mean absolute percentage error (MAPE): MAPE is the mean of the absolute percentage errors between the forecast and actual values, calculated as in Equation (43): $\mathrm{MAPE} = \frac{100\%}{n}\sum_{i=1}^{n}\left|\frac{y_i - \hat{y}_i}{y_i}\right|$. A lower MAPE indicates that the forecast is closer to the observed values. However, when actual values approach zero (as solar irradiance does at night), the individual percentage errors become extremely large and inflate the MAPE disproportionately.

- Coefficient of determination ($R^2$): $R^2$, defined in Equation (44) as $R^2 = 1 - \frac{\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2}$, measures the proportion of the variance in the dependent variable that is predictable from the independent variables. A value close to 1 indicates that the model accurately predicts the target variable. A value close to 0 indicates that the model does no better than predicting the mean of the target values, and a negative value indicates that it performs worse than that mean.

In Equations (41)–(44), $y_i$ represents the actual values, $\hat{y}_i$ represents the predicted values, $n$ is the number of samples, and $\bar{y}$ is the mean value of $y_i$.
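Equations (41)–(44) translate directly into a few lines of pure Python. In this minimal sketch, the toy irradiance values are illustrative, not station data. The `min_actual` filter in `mape` is an assumption: it is one common workaround for night-time zeros and is not part of Equation (43).

```python
import math

def rmse(y, y_hat):
    """Equation (41)."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(y, y_hat)) / len(y))

def mae(y, y_hat):
    """Equation (42)."""
    return sum(abs(a - b) for a, b in zip(y, y_hat)) / len(y)

def mape(y, y_hat, min_actual=1e-6):
    """Equation (43), in percent. Samples with |actual| < min_actual (e.g. night-time
    zeros) are skipped; this filter is an assumption, not part of Equation (43)."""
    pairs = [(a, b) for a, b in zip(y, y_hat) if abs(a) >= min_actual]
    if not pairs:
        raise ValueError("no actual values large enough to compute MAPE")
    return 100.0 * sum(abs((a - b) / a) for a, b in pairs) / len(pairs)

def r2(y, y_hat):
    """Equation (44); negative when predictions are worse than the mean of y."""
    mean_y = sum(y) / len(y)
    ss_res = sum((a - b) ** 2 for a, b in zip(y, y_hat))
    ss_tot = sum((a - mean_y) ** 2 for a in y)
    return 1.0 - ss_res / ss_tot

# Toy irradiance values in W/m^2 (illustrative, not station data).
actual = [0.0, 200.0, 400.0, 600.0]
predicted = [10.0, 180.0, 420.0, 630.0]
scores = {
    "RMSE": rmse(actual, predicted),
    "MAE": mae(actual, predicted),
    "MAPE": mape(actual, predicted),
    "R2": r2(actual, predicted),
}
```

A constant forecast far from the data, such as `r2(actual, [600.0] * 4)`, yields a negative $R^2$. This is the situation reported for some 10 min summer configurations in Section 3.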

To conclude this section, the experiments were conducted in Python (version 3.11.11) with the PyTorch library (version 2.5.1+cu121) in a Google Colab environment. The environment comprised an Intel(R) Xeon(R) CPU @ 2.20 GHz (Intel, Santa Clara, CA, USA), 51.00 GB of RAM, and an NVIDIA Tesla K80 accelerator (NVIDIA, Santa Clara, CA, USA) with 12 GB of GDDR5 VRAM.

3. Results

This section presents the results for the winter and summer seasons at forecasting horizons of 1 h, 6 h, and 12 h, including the analyses performed for different temporal resampling intervals. The results are compared with those of the baseline models to evaluate the proposed model against traditional approaches.

Table 6 summarizes the losses obtained with various configurations of our solar radiation forecasting model, evaluated on 12 h forecasts. Our model achieves an MAE of 110.43 and an $R^2$ of 0.63, outperforming the configurations that omit the proposed components. The “Model + Wavelet” configuration achieves an MAE of 120.03 and an $R^2$ of 0.61. This is reasonable performance, but it falls short of the CEEMDAN-enhanced model, particularly in capturing the intricate variability of the solar irradiance data. The “Model without attention mechanisms” yields an MAE of 126.36 and an $R^2$ of 0.60, indicating a reduction in accuracy when the attention mechanisms are removed. The “Model without encoder-decoder and history” shows an MAE of 132.61 and an $R^2$ of 0.58, reflecting a further loss of accuracy when both the historical data and the encoder-decoder structure are excluded. Finally, the “Baseline LSTM model” yields an MAE of 135.75 and an $R^2$ of 0.55, the lowest explanatory power of all the configurations.

Table 6.

Losses and training time by epoch for each architecture component with 1 h resampling for 12 h forecast in summer.

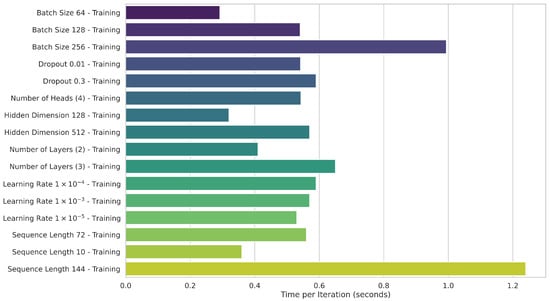

The execution time of our proposed model was evaluated for 12 h forecasts using the hyperparameters outlined in Table 5 with a 1 h resampling interval. Figure 9 shows the average time per iteration measured for different configurations. A batch size of 256 significantly increased the iteration time because more data are processed per iteration, whereas a batch size of 64 reduced it by handling fewer data points. Note, however, that larger batches require fewer iterations per epoch. In this case, therefore, a larger batch size reduces the total training time, at the cost of model accuracy. We selected a batch size of 128, as it balances computational efficiency and performance, particularly with eight attention heads. The analysis of dropout rates revealed that values of 0.01 and 0.3 produce similar iteration times (0.55 and 0.57 s, respectively). Regarding attention heads, configurations with four heads were slightly faster than those with eight. Hidden sizes larger than 128 increased the iteration time, reflecting the higher computational demands of larger representations. Similarly, increasing the number of layers led to longer training times because of the additional computations per iteration. The learning rate had minimal impact on run time, although it significantly affected model accuracy, as discussed below. Finally, longer sequence lengths increased the computational cost of the architecture and led to a sharp increase in training time.
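The apparent tension between per-iteration time and total training time comes from the number of iterations per epoch, $\lceil N / B \rceil$ for $N$ training samples and batch size $B$. The short sketch below makes this arithmetic explicit. The sample count and per-iteration times are hypothetical placeholders chosen only to show the trend; they are not values read from Figure 9.

```python
import math

def epoch_cost(n_samples, batch_size, seconds_per_iteration):
    """Return (iterations per epoch, seconds per epoch) for one pass over the data."""
    iterations = math.ceil(n_samples / batch_size)
    return iterations, iterations * seconds_per_iteration

# HYPOTHETICAL numbers for illustration only (not measurements from Figure 9).
n_samples = 10_000
per_iteration = {64: 0.40, 128: 0.55, 256: 0.90}
costs = {b: epoch_cost(n_samples, b, t) for b, t in per_iteration.items()}
for b, (iters, secs) in costs.items():
    print(f"batch {b}: {iters} iterations, {secs:.1f} s per epoch")
```

Even though each iteration with batch size 256 is the slowest here, it needs only 40 iterations per epoch against 157 for batch size 64, so its epoch is the shortest.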

Figure 9.

Average time per iteration for different configurations of the proposed model.

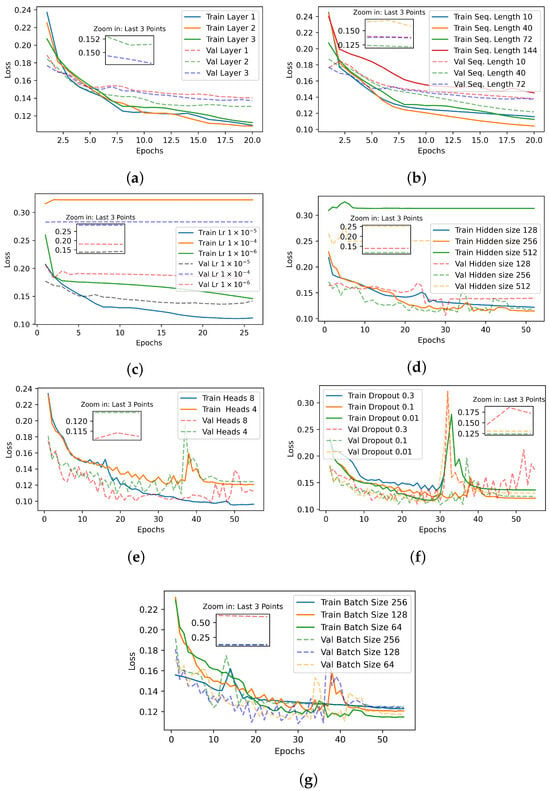

Figure 10 presents the training and validation loss curves for different hyperparameters, including the number of layers, sequence length, and learning rate. In some cases, the training loss is slightly higher than the validation loss. This suggests that the model learns the underlying patterns in the data without overfitting; dropout, which is active only during training, also tends to raise the training loss. Specifically, the model was tested with one, two, and three LSTM layers (see Figure 10a). The best performance was achieved with three layers in both the training and validation phases, so this configuration was adopted in our model. We then varied the sequence length among 10, 40, 72, and 144 time steps (see Figure 10b) and observed the best performance with sequence lengths of 40 and 72, corresponding to 40 h (about 1.7 days) and 72 h (3 days), respectively. We selected a sequence length of 72 time steps, as it represents a 3-day period. The learning rate (see Figure 10c) was also tuned, with values set at , , and . It was observed that a learning rate of yielded better performance with appreciable improvements. Figure 10d shows that increasing the number of attention heads improves the performance of the model. We therefore selected a configuration with eight attention heads, which achieved superior performance in both the training and validation phases. Figure 10e illustrates the evaluation of different dropout rates. Dropout values of 0.1 and 0.001 perform well during training and stabilize to deliver good performance, whereas a dropout rate of 0.3 results in highly erratic behavior and suboptimal results. Consequently, we selected a dropout rate of 0.1 as the most suitable value for our model. In Figure 10f, batch sizes of 128 and 64 provide stable training, although training is slower with a batch size of 64. Conversely, a batch size of 256, while faster, does not yield satisfactory accuracy. We therefore selected a batch size of 128 as a balanced choice. Additionally, Figure 10g shows that hidden sizes of 128 and 256 keep training stable. Although a hidden size of 512 shows potential for better performance, it requires significantly more time to train over 50 epochs. To balance training efficiency and performance, we chose a hidden size of 256 for our final model configuration.

Figure 10.

Loss comparison during training and validation. (a) Loss comparison with different numbers of layers. (b) Loss comparison with different sequence lengths. (c) Loss comparison with different learning rate values. (d) Loss comparison with different hidden sizes. (e) Loss comparison with different numbers of heads. (f) Loss comparison with different dropout values. (g) Loss comparison with different batch size values.

3.1. Forecasting at 1 h Ahead with Different Resampling for Winter and Summer

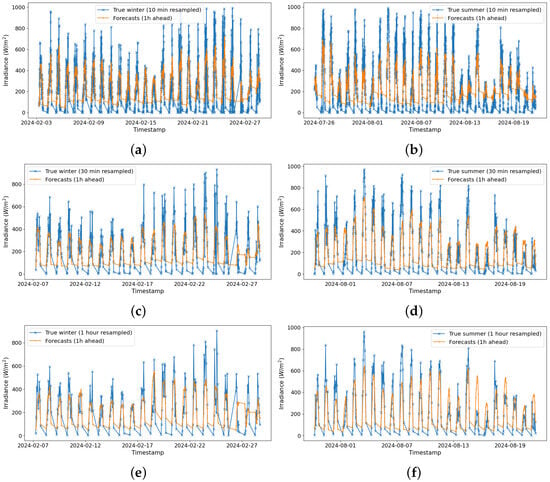

Figure 11 depicts the 1 h ahead predictions obtained with different resampling configurations in winter (left column) and summer (right column). Overall, the error metrics indicate that the model generally makes more accurate predictions in summer than in winter. For the 10 min resampling in winter (Figure 11a), the MAE is 111.31, the RMSE is 148.62, and the $R^2$ is 0.50, whereas the errors increase for the same configuration in summer (Figure 11b). For the 30 min resampling, the model achieves an MAE of 109.64, an RMSE of 147.07, and an $R^2$ of 0.44 under winter conditions (Figure 11c). Under summer conditions (Figure 11d), it yields an MAE of 114.45, an RMSE of 146.83, and an $R^2$ of 0.63. For the 1 h resampling, the MAE is 116.47, the RMSE is 148.78, and the $R^2$ is 0.59 in winter (Figure 11e), whereas in summer (Figure 11f) the MAE decreases to 100.22, the RMSE to 135.15, and the $R^2$ to 0.46. These results suggest that the model estimates solar irradiance more accurately in summer, especially at larger resampling intervals, and remains reasonably accurate in winter.

Figure 11.

Predictions 1 h ahead with different resamplings. (a) 10 min resampled to 1 h forecasts in winter. (b) 10 min resampled to 1 h forecasts in summer. (c) 30 min resampled to 1 h forecasts in winter. (d) 30 min resampled to 1 h forecasts in summer. (e) 1 h resampled to 1 h forecasts in winter. (f) 1 h resampled to 1 h forecasts in summer.

3.2. Forecasting at 6 h Ahead with Different Resampling for Winter and Summer

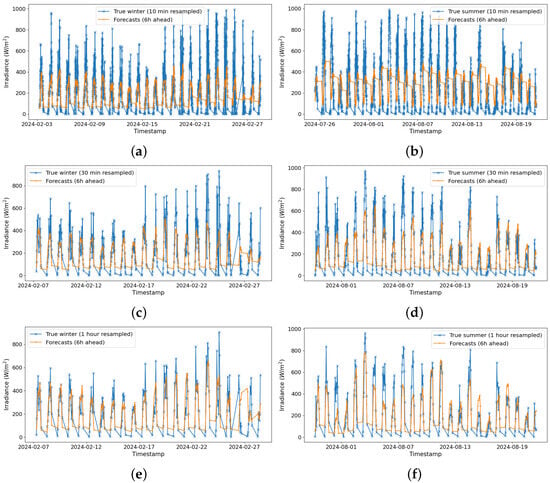

Figure 12 shows the 6 h ahead predictions with different resampling configurations; the panels on the left correspond to winter and those on the right to summer. The results show how the model performs under these resampling configurations, considering the effect of seasonal variations. For the 10 min resampling in winter (Figure 12a), the MAE is 117.39, the RMSE is 169.62, and the $R^2$ is 0.35, indicating moderate performance for a 6 h prediction. The same resampling in summer (Figure 12b) yields an MAE of 222.91, an RMSE of 275.98, and a negative $R^2$ of −0.17, reflecting lower prediction accuracy due to increased seasonal variability. For the 30 min resampling in winter (Figure 12c), the MAE is 107.95, the RMSE is 151.30, and the $R^2$ improves to 0.42, showing enhanced accuracy with a larger resampling interval. In summer (Figure 12d), the MAE is 119.14, the RMSE is 161.69, and the $R^2$ improves to 0.55, indicating better prediction accuracy. For the 1 h resampling in winter (Figure 12e), the MAE is 100.12, the RMSE is 134.13, and the $R^2$ is 0.48, which is better than with the smaller resampling intervals. In summer (Figure 12f), the MAE decreases to 110.43, the RMSE to 141.15, and the $R^2$ reaches 0.64. This reflects the model's ability to capture seasonal patterns in longer-horizon predictions with larger resampling intervals.

Figure 12.

Predictions 6 h ahead with different resamplings. (a) 10 min resampled to 6 h forecasts in winter. (b) 10 min resampled to 6 h forecasts in summer. (c) 30 min resampled to 6 h forecasts in winter. (d) 30 min resampled to 6 h forecasts in summer. (e) 1 h resampled to 6 h forecasts in winter. (f) 1 h resampled to 6 h forecasts in summer.

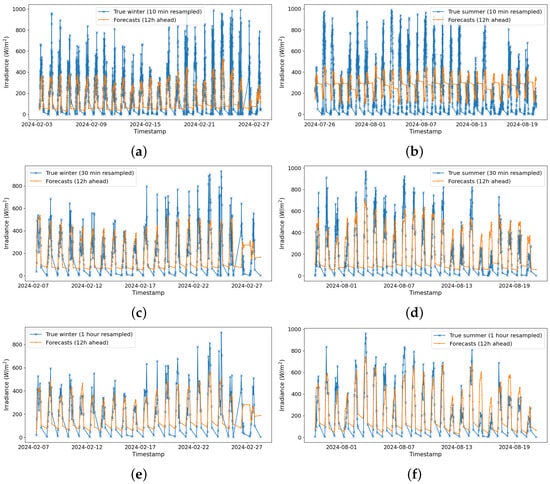

3.3. Forecasting at 12 h Ahead with Different Resampling for Winter and Summer

Figure 13 presents the results of 12 h ahead forecasts with varying resampling intervals, comparing winter (left column) and summer (right column). For the 10 min resampled forecasts in winter (Figure 13a), the model achieves an MAE of 116.46, an RMSE of 173.08, and an $R^2$ of 0.33, indicating moderate accuracy. In summer (Figure 13b), however, the errors increase markedly, with an MAE of 218.22, an RMSE of 266.95, and a negative $R^2$ of −0.09, reflecting higher seasonal variability. The 30 min resampled results in winter (Figure 13c) improve slightly, with an MAE of 112.63, an RMSE of 154.51, and an $R^2$ of 0.39, showing better accuracy with a longer resampling interval. In summer (Figure 13d), the model achieves an MAE of 128.85, an RMSE of 160.40, and an $R^2$ of 0.56, an improvement in forecast precision over the 10 min resampling. Finally, for the 1 h resampling, the winter predictions (Figure 13e) show a clear gain in accuracy, with an MAE of 99.89, an RMSE of 130.42, and an $R^2$ of 0.51. The summer predictions (Figure 13f) also improve, with an MAE of 110.43, an RMSE of 141.15, and an $R^2$ of 0.64. These results show that larger resampling intervals improve forecast accuracy, especially over longer prediction horizons such as 12 h ahead.

Figure 13.

Predictions 12 h ahead with different resamplings. (a) 10 min resampled to 12 h forecasts in winter. (b) 10 min resampled to 12 h forecasts in summer. (c) 30 min resampled to 12 h forecasts in winter. (d) 30 min resampled to 12 h forecasts in summer. (e) 1 h resampled to 12 h forecasts in winter. (f) 1 h resampled to 12 h forecasts in summer.

Table 7 summarizes the performance metrics for irradiance prediction at different resampling intervals (10 min, 30 min, and 1 h) and forecast horizons (1 h, 6 h, and 12 h) for both the winter and summer seasons. The metrics presented include the MAE, RMSE, $R^2$, and MAPE. The table shows that, in summer, the errors generally grow with the forecast horizon, with the higher seasonal variability leading to larger MAE and RMSE values. The best results are typically obtained with the 1 h resampling, particularly in winter, where the MAE and RMSE values are lowest for the 12 h ahead forecast. These results highlight the impact of both the resampling interval and the forecast horizon on the overall prediction accuracy of the model. In terms of MAE, winter predictions are more accurate than summer predictions in every configuration except the 1 h ahead forecast with 1 h resampling.

Table 7.

Comparison of metrics for irradiance prediction with different resampling and forecast horizons.

Table 8 presents the performance metrics for forecasting irradiance 12 h ahead in winter and summer with 1 h resampling intervals. The results compare the proposed model with a reference LSTM, an autoencoder, and a transformer-based model. The transformer model is configured with three encoder layers, three decoder layers, and eight attention heads. The proposed model achieves the lowest MAE in both seasons (99.89 in winter and 110.43 in summer) and an $R^2$ of 0.64 in summer. The transformer model is competitive:

- its MAE is higher (118.00 in winter and 113.50 in summer);
- its RMSE is higher in winter (148.50 versus 130.42) but marginally lower in summer (140.75 versus 141.15);
- its $R^2$ is lower in summer (0.61) but higher in winter (0.56 versus 0.51).

Both the reference LSTM and the autoencoder show higher errors in both seasons, highlighting the greater accuracy of the proposed model for irradiance forecasting under varying seasonal conditions. Overall, the RMSE and MAPE values support the robustness of the proposed model relative to the alternatives. The transformer model also performs well, albeit with slightly higher errors on most metrics.

Table 8.

Comparison of metrics for 12 h ahead irradiance prediction in winter and summer with 1 h resampling.

Figure 14 shows two box plots of the MAE distribution for solar irradiance forecasting at horizons of 1 h, 6 h, and 12 h: Figure 14a shows the summer forecast errors and Figure 14b the winter ones. Each box spans the interquartile range of the MAE, and the red line within each box marks the median MAE. The dispersion of the MAE grows as the forecast horizon increases, as the boxes for the 6 h and 12 h horizons illustrate. This is consistent with expectations: longer forecast windows imply greater uncertainty. Despite this variability, the median MAE remains reasonably stable across all horizons, including in winter, which reflects the robustness of the model for longer-horizon forecasts. The difference between the summer and winter errors can be attributed to seasonal effects, as summer tends to have a more compact error distribution than winter.

Figure 14.

Boxplot of MAE across different time horizons. (a) Summer forecast errors. (b) Winter forecast errors.

4. Conclusions

This research presents a novel methodology for forecasting solar irradiance in complex areas that combines advanced techniques, namely CEEMDAN, CNNs, attention mechanisms, and LSTM networks, in a hybrid architecture. We integrated CEEMDAN to decompose the solar irradiance variable into more interpretable components, which facilitated the identification of specific patterns at different time scales. CEEMDAN proved effective in improving the accuracy of the forecasts in our model, providing a robust solution for integrating solar energy into the power grid and outperforming traditional approaches in terms of accuracy and generalization.

Our CEEMDAN-CNN-ATT-LSTM model demonstrated its ability to handle the variability and uncertain behavior of solar irradiance by combining three strengths: CNNs capture spatial relationships between variables, LSTM networks extract short-term temporal information, and the integrated attention mechanism captures long-term spatial and temporal relationships by focusing on the most critical long-term features. This combination makes the model robust and allows it to generalize to time series with high variability, such as those of the high-altitude regions of southern Ecuador. In addition, we expect the model to be adaptable to regions with more stable conditions.

Our model achieved encouraging performance, with an MAE of 99.89 W/m2 in winter and 110 W/m2 in summer for a 12 h forecast, covering the entire diurnal solar cycle. The model performed better when the time series was less variable, highlighting the importance of the resampling approach used in this study. Although our study obtained promising results, it has some limitations that we will address in the future, such as its dependence on the quality and quantity of the data used. In addition, the computational complexity of our hybrid model requires advanced hardware, such as GPUs and sufficient RAM, which may be a problem in resource-constrained environments. Moreover, variations in the availability and quality of data across regions or time periods can also affect the generalizability and performance of the model.

In future work, we will adopt more advanced techniques, such as transformer-like architectures, to improve the speed and generalizability of the model. We will also test its robustness across both stable and highly variable geographical regions and climatic conditions. In addition, we will include other related variables, such as weather forecasts and atmospheric parameters, to increase the accuracy and reliability of the forecasts. Finally, the model could be implemented within an operational framework, interfacing with real-time solar power generation systems as part of a grid connection with various performance optimization and adaptability options. The proposed approach can thus help the solar energy research community improve the methodology for building forecasting models, supporting the development of more accurate and efficient models for unpredictable and variable weather conditions.

Author Contributions

M.C., J.M.-C. and J.T.-C. contributed to the conceptualization and methodological design of the proposal, were responsible for data preparation and analysis, and prepared the original draft; S.M.-M. contributed to the review and editing; and E.G.-L. was responsible for project supervision. All authors have read and accepted the published version of the manuscript.

Funding

This research was partially funded by the Junta de Comunidades de Castilla-La Mancha and the E.U. FEDER (SBPLY/23/180225/000226) and by the Spanish Ministry of Economy and Competitiveness and the European Union (PID2021-126082OB-C21).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available in Station 50001—Faculty of Energy of the National University of Loja at https://unlmeteorologico.com/estacion-unl-facultad-de-energia/ (accessed on 24 November 2024). For access to the base code, please contact the corresponding author via email.

Acknowledgments

The authors would like to acknowledge the assistance received from the National University of Loja through the research project 20-DI-FEIRNNR-2023.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Ahmed, R.; Sreeram, V.; Mishra, Y.; Arif, M. A review and evaluation of the state-of-the-art in PV solar power forecasting: Techniques and optimization. Renew. Sustain. Energy Rev. 2020, 124, 109792.

2. Krishnan, N.; Kumar, K.R.; Inda, C.S. How solar radiation forecasting impacts the utilization of solar energy: A critical review. J. Clean. Prod. 2023, 388, 135860.

3. Kumar, C.M.S.; Singh, S.; Gupta, M.K.; Nimdeo, Y.M.; Raushan, R.; Deorankar, A.V.; Kumar, T.A.; Rout, P.K.; Chanotiya, C.; Pakhale, V.D.; et al. Solar energy: A promising renewable source for meeting energy demand in Indian agriculture applications. Sustain. Energy Technol. Assess. 2023, 55, 102905.

4. Nijsse, F.J.; Mercure, J.F.; Ameli, N.; Larosa, F.; Kothari, S.; Rickman, J.; Vercoulen, P.; Pollitt, H. The momentum of the solar energy transition. Nat. Commun. 2023, 14, 6542.

5. Lara-Benítez, P.; Carranza-García, M.; Luna-Romera, J.M.; Riquelme, J.C. Short-term solar irradiance forecasting in streaming with deep learning. Neurocomputing 2023, 546, 126312.

6. Khare, V.; Chaturvedi, P.; Mishra, M. Solar energy system concept change from trending technology: A comprehensive review. e-Prime—Adv. Electr. Eng. Electron. Energy 2023, 4, 100183.

7. Yu, Y.; Cao, J.; Zhu, J. An LSTM Short-Term Solar Irradiance Forecasting Under Complicated Weather Conditions. IEEE Access 2019, 7, 145651–145666.

8. Yang, D.; Kleissl, J.; Gueymard, C.A.; Pedro, H.T.; Coimbra, C.F. History and trends in solar irradiance and PV power forecasting: A preliminary assessment and review using text mining. Sol. Energy 2018, 168, 60–101.

9. Perez, R.; Kivalov, S.; Schlemmer, J.; Hemker, K.; Renné, D.; Hoff, T.E. Validation of short and medium term operational solar radiation forecasts in the US. Sol. Energy 2010, 84, 2161–2172.

10. Liu, W.; Liu, Y.; Zhou, X.; Xie, Y.; Han, Y.; Yoo, S.; Sengupta, M. Use of physics to improve solar forecast: Physics-informed persistence models for simultaneously forecasting GHI, DNI, and DHI. Sol. Energy 2021, 215, 252–265.

11. Kocifaj, M.; Kómar, L. Modeling diffuse irradiance under arbitrary and homogeneous skies: Comparison and validation. Appl. Energy 2016, 166, 117–127.

12. Antonanzas, J.; Osorio, N.; Escobar, R.; Urraca, R.; de Pison, F.M.; Antonanzas-Torres, F. Review of photovoltaic power forecasting. Sol. Energy 2016, 136, 78–111.

13. Atique, S.; Noureen, S.; Roy, V.; Subburaj, V.; Bayne, S.; Macfie, J. Forecasting of total daily solar energy generation using ARIMA: A case study. In Proceedings of the 2019 IEEE 9th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 7–9 January 2019; pp. 0114–0119.

14. Liu, L.; Zhao, Y.; Chang, D.; Xie, J.; Ma, Z.; Sun, Q.; Yin, H.; Wennersten, R. Prediction of short-term PV power output and uncertainty analysis. Appl. Energy 2018, 228, 700–711.

15. AlShafeey, M.; Csáki, C. Evaluating neural network and linear regression photovoltaic power forecasting models based on different input methods. Energy Rep. 2021, 7, 7601–7614.

16. Huang, X.; Shi, J.; Gao, B.; Tai, Y.; Chen, Z.; Zhang, J. Forecasting Hourly Solar Irradiance Using Hybrid Wavelet Transformation and Elman Model in Smart Grid. IEEE Access 2019, 7, 139909–139923.

17. Fan, J.; Wu, L.; Ma, X.; Zhou, H.; Zhang, F. Hybrid support vector machines with heuristic algorithms for prediction of daily diffuse solar radiation in air-polluted regions. Renew. Energy 2020, 145, 2034–2045.

18. Mendonça de Paiva, G.; Pires Pimentel, S.; Pinheiro Alvarenga, B.; Gonçalves Marra, E.; Mussetta, M.; Leva, S. Multiple Site Intraday Solar Irradiance Forecasting by Machine Learning Algorithms: MGGP and MLP Neural Networks. Energies 2020, 13, 3005.

19. Hai, T.; Sharafati, A.; Mohammed, A.; Salih, S.Q.; Deo, R.C.; Al-Ansari, N.; Yaseen, Z.M. Global Solar Radiation Estimation and Climatic Variability Analysis Using Extreme Learning Machine Based Predictive Model. IEEE Access 2020, 8, 12026–12042.

20. Pazikadin, A.R.; Rifai, D.; Ali, K.; Malik, M.Z.; Abdalla, A.N.; Faraj, M.A. Solar irradiance measurement instrumentation and power solar generation forecasting based on Artificial Neural Networks (ANN): A review of five years research trend. Sci. Total Environ. 2020, 715, 136848.

21. Monjoly, S.; André, M.; Calif, R.; Soubdhan, T. Hourly forecasting of global solar radiation based on multiscale decomposition methods: A hybrid approach. Energy 2017, 119, 288–298.

22. Lan, H.; Yin, H.; Hong, Y.Y.; Wen, S.; Yu, D.C.; Cheng, P. Day-ahead spatio-temporal forecasting of solar irradiation along a navigation route. Appl. Energy 2018, 211, 15–27.

23. Anupong, W.; Jweeg, M.J.; Alani, S.; Al-Kharsan, I.H.; Alviz-Meza, A.; Cárdenas-Escrocia, Y. Comparison of Wavelet Artificial Neural Network, Wavelet Support Vector Machine, and Adaptive Neuro-Fuzzy Inference System Methods in Estimating Total Solar Radiation in Iraq. Energies 2023, 16, 985.

24. Wang, K.; Qi, X.; Liu, H. A comparison of day-ahead photovoltaic power forecasting models based on deep learning neural network. Appl. Energy 2019, 251, 113315.

25. Fan, C.; Wang, J.; Gang, W.; Li, S. Assessment of deep recurrent neural network-based strategies for short-term building energy predictions. Appl. Energy 2019, 236, 700–710.

26. Ullah, F.U.M.; Ullah, A.; Haq, I.U.; Rho, S.; Baik, S.W. Short-Term Prediction of Residential Power Energy Consumption via CNN and Multi-Layer Bi-Directional LSTM Networks. IEEE Access 2020, 8, 123369–123380.

27. Agga, A.; Abbou, A.; Labbadi, M.; Houm, Y.E.; Ou Ali, I.H. CNN-LSTM: An efficient hybrid deep learning architecture for predicting short-term photovoltaic power production. Electr. Power Syst. Res. 2022, 208, 107908.

28. Agga, A.; Abbou, A.; Labbadi, M.; El Houm, Y. Short-term self consumption PV plant power production forecasts based on hybrid CNN-LSTM, ConvLSTM models. Renew. Energy 2021, 177, 101–112.

29. Abdel-Basset, M.; Hawash, H.; Chakrabortty, R.K.; Ryan, M. PV-Net: An innovative deep learning approach for efficient forecasting of short-term photovoltaic energy production. J. Clean. Prod. 2021, 303, 127037.

30. Davò, F.; Alessandrini, S.; Sperati, S.; Delle Monache, L.; Airoldi, D.; Vespucci, M.T. Post-processing techniques and principal component analysis for regional wind power and solar irradiance forecasting. Sol. Energy 2016, 134, 327–338.

31. Huang, X.; Li, Q.; Tai, Y.; Chen, Z.; Zhang, J.; Shi, J.; Gao, B.; Liu, W. Hybrid deep neural model for hourly solar irradiance forecasting. Renew. Energy 2021, 171, 1041–1060.

32. Hanif, M.; Mi, J. Harnessing AI for solar energy: Emergence of transformer models. Appl. Energy 2024, 369, 123541.

33. Nasiri, H.; Ebadzadeh, M.M. Multi-step-ahead stock price prediction using recurrent fuzzy neural network and variational mode decomposition. Appl. Soft Comput. 2023, 148, 110867.

34. Nasiri, H.; Ebadzadeh, M.M. MFRFNN: Multi-Functional Recurrent Fuzzy Neural Network for Chaotic Time Series Prediction. Neurocomputing 2022, 507, 292–310.

35. Assaf, A.M.; Haron, H.; Abdull Hamed, H.N.; Ghaleb, F.A.; Qasem, S.N.; Albarrak, A.M. A Review on Neural Network Based Models for Short Term Solar Irradiance Forecasting. Appl. Sci. 2023, 13, 8332.

36. Almaghrabi, S.; Rana, M.; Hamilton, M.; Saiedur Rahaman, M. Multivariate solar power time series forecasting using multilevel data fusion and deep neural networks. Inf. Fusion 2024, 104, 102180.

37. Wai, R.J.; Lai, P.X. Design of Intelligent Solar PV Power Generation Forecasting Mechanism Combined with Weather Information under Lack of Real-Time Power Generation Data. Energies 2022, 15, 3838.

38. Kim, J.; Obregon, J.; Park, H.; Jung, J.Y. Multi-step photovoltaic power forecasting using transformer and recurrent neural networks. Renew. Sustain. Energy Rev. 2024, 200, 114479.

39. Jonathan, A.L.; Cai, D.; Ukwuoma, C.C.; Nkou, N.J.J.; Huang, Q.; Bamisile, O. A radiant shift: Attention-embedded CNNs for accurate solar irradiance forecasting and prediction from sky images. Renew. Energy 2024, 234, 121133.

40. Jacques Molu, R.J.; Tripathi, B.; Mbasso, W.F.; Dzonde Naoussi, S.R.; Bajaj, M.; Wira, P.; Blazek, V.; Prokop, L.; Misak, S. Advancing short-term solar irradiance forecasting accuracy through a hybrid deep learning approach with Bayesian optimization. Results Eng. 2024, 23, 102461.

41. Cheng, H.Y.; Yu, C.C.; Lin, C.L. Day-ahead to week-ahead solar irradiance prediction using convolutional long short-term memory networks. Renew. Energy 2021, 179, 2300–2308.

42. Liao, Z.; Coimbra, C.F. Hybrid solar irradiance nowcasting and forecasting with the SCOPE method and convolutional neural networks. Renew. Energy 2024, 232, 121055.

43. Pereira, S.; Canhoto, P.; Salgado, R. Development and assessment of artificial neural network models for direct normal solar irradiance forecasting using operational numerical weather prediction data. Energy AI 2024, 15, 100314.

44. Oliveira Santos, V.; Marinho, F.P.; Costa Rocha, P.A.; Thé, J.V.G.; Gharabaghi, B. Application of Quantum Neural Network for Solar Irradiance Forecasting: A Case Study Using the Folsom Dataset, California. Energies 2024, 17, 3580.

45. Wu, J.; Zhao, Y.; Zhang, R.; Li, X.; Wu, Y. Application of three Transformer neural networks for short-term photovoltaic power prediction: A case study. Sol. Compass 2024, 12, 100089.

46. Gao, B.; Huang, X.; Shi, J.; Tai, Y.; Zhang, J. Hourly forecasting of solar irradiance based on CEEMDAN and multi-strategy CNN-LSTM neural networks. Renew. Energy 2020, 162, 1665–1683.

47. Patel, M.; Patel, A.; Ghosh, D.R. Precipitation Nowcasting: Leveraging bidirectional LSTM and 1D CNN. arXiv 2018, arXiv:1810.10485.

48. Hatami, N.; Gavet, Y.; Debayle, J. Classification of time-series images using deep convolutional neural networks. In Proceedings of the Tenth International Conference on Machine Vision (ICMV 2017), Vienna, Austria, 13–15 November 2017; Verikas, A., Radeva, P., Nikolaev, D., Zhou, J., Eds.; International Society for Optics and Photonics. SPIE: Bellingham, WA, USA, 2018; Volume 10696, p. 106960Y.

49. Huang, W.; Liu, M.; Shang, W.; Zhu, H.; Lin, W.; Zhang, C. LSTM with compensation method for text classification. Int. J. Wirel. Mob. Comput. 2021, 20, 159–167.

50. Ghimire, S.; Deo, R.C.; Wang, H.; Al-Musaylh, M.S.; Casillas-Pérez, D.; Salcedo-Sanz, S. Stacked LSTM Sequence-to-Sequence Autoencoder with Feature Selection for Daily Solar Radiation Prediction: A Review and New Modeling Results. Energies 2022, 15, 1061.

51. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2017, arXiv:1706.03762.

52. Bu, S.J.; Cho, S.B. Time Series Forecasting with Multi-Headed Attention-Based Deep Learning for Residential Energy Consumption. Energies 2020, 13, 4722.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).