Abstract

Convolutional Neural Networks (CNNs) have achieved remarkable performance in computer vision tasks, but their deployment on resource-constrained devices remains challenging. While existing lightweight CNNs reduce FLOPs significantly, their practical inference speed is limited by memory access bottlenecks. We therefore propose CFNet, an efficient architecture that bridges the gap between theoretical efficiency and practical speed through the synergistic design of channel-focused convolution (CFConv) and a channel mixed unit (CMU). CFConv selects informative channels via learnable GroupNorm scaling factors and uses reparameterization, reducing both FLOPs and memory access, while CMU enables cross-channel communication through a split-transform-and-mix strategy to mitigate information loss. Experiments on CIFAR/ImageNet classification and MS COCO object detection demonstrate CFNet’s superior performance. On ImageNet-1K, CFNet-A achieves 35.5% and 189.4% GPU throughput improvements over MobileNetV2 and MobileViTv1-XXS, respectively, while delivering 1.76% and 4.09% accuracy gains. CFNet-E attains 83.5% top-1 accuracy, outperforming Swin-S by 0.47% with 44.6% higher GPU throughput and 43.6% lower CPU inference latency.

1. Introduction

Deep convolutional neural networks have demonstrated exceptional performance across various computer vision tasks, including image recognition [], object detection [], and semantic segmentation []. These advancements have enabled numerous applications in autonomous driving and visual search, where CNNs are essential for achieving high performance. However, such success relies heavily on intensive computational and storage resources, posing significant challenges for efficient deployment in resource-constrained environments such as mobile devices, embedded systems, edge computing platforms, and Internet of Things (IoT) devices. Consequently, recent trends in deep neural network design focus on exploring portable and efficient architectures while maintaining acceptable performance on mobile devices.

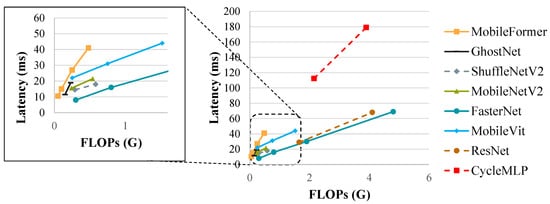

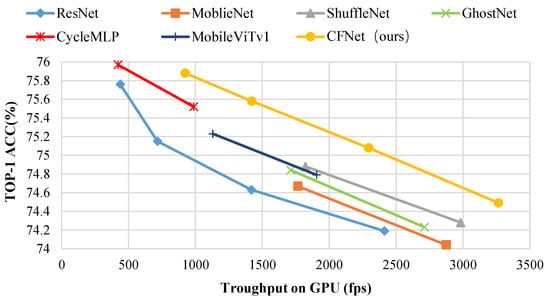

Mainstream lightweight CNNs focus on reducing FLOPs through techniques like depthwise separable convolutions, group convolutions, and channel compression [,,,,,]. However, these approaches exhibit a notable disconnect between theoretical efficiency gains and actual inference performance. As illustrated in Figure 1, FLOP reduction does not translate proportionally into inference latency reduction. Compared to the baseline ResNet series, many lightweight networks compress FLOPs by over 50%, yet their inference latency decreases by only about 20% or less. This reveals that, although current lightweight networks successfully reduce the number of parameters and FLOPs, approaches centered on theoretical computational optimization fail to deliver corresponding gains in practical inference speed. FasterNet [] has shown that frequent memory access is the main bottleneck limiting practical speedup and has proposed partial convolution to reduce both redundant computation and memory access. However, this approach leads to accuracy drops due to limited information flow across channels.

Figure 1.

The discrepancy between FLOP reduction and inference latency improvement in lightweight CNN models. Despite significant FLOPs compression, practical speedup remains limited due to memory access overhead. The enlarged view highlights the efficiency gap among low-FLOPs models.

To achieve efficient inference while maintaining accuracy, we propose a novel channel-focused convolution (CFConv). This lightweight operator selectively processes informative channels through learnable GroupNorm scaling factors, while employing reparameterization techniques to simultaneously reduce FLOPs and optimize memory access efficiency. However, the channel selection mechanism in CFConv inherently restricts cross-channel information flow, potentially causing accuracy degradation. To address this limitation, we further introduce a channel mixed unit (CMU) that implements a split-transform-and-mix strategy, explicitly facilitating efficient feature interaction across channels. By synergistically integrating CFConv and CMU, we construct CFNet—a highly efficient architecture that demonstrates superior trade-offs between computational cost, latency, and accuracy compared to existing approaches like MobileNet, ShuffleNetV2, and FasterNet, while maintaining competitive task performance.

We conduct extensive experiments on image classification and object detection to validate our approach. The results show that CFNet achieves better accuracy than existing methods with significantly lower latency. Specifically, on ImageNet-1K, CFNet-A achieves GPU throughput improvements of 35.5% and 189.4% compared to MobileNetV2 and MobileViTv1-XXS, respectively, with accuracy gains of 1.76% and 4.09%. Our contributions are as follows.

- We propose channel-focused convolution (CFConv), a lightweight convolution operator that selects informative channels using learnable Group Normalization scaling factors and incorporates reparameterization techniques to balance memory access and computational efficiency.

- We propose a channel mixed unit (CMU), which utilizes a split-transform-and-mix strategy that enables cross-channel information flow.

- We present CFNet, an architecture built on CFConv and CMU. Comprehensive experiments show that it achieves superior speed-accuracy trade-offs compared to existing lightweight networks.

2. Related Work

2.1. Lightweight CNN Architectures

The development of lightweight CNNs has focused on reducing computational complexity while maintaining competitive accuracy. SqueezeNet [] pioneered extreme model compression by introducing Fire modules that first squeeze input channels and then expand with a mix of convolutions, achieving AlexNet-level accuracy with 50 times fewer parameters. MobileNet [] introduced depthwise separable convolutions, while subsequent MobileNet versions [,] incorporated inverted residuals and hardware-aware scaling strategies to build efficient architectures for mobile devices. ShuffleNet [] explored group convolutions with channel shuffle operations and architectural optimizations, emphasizing practical guidelines for efficient design. GhostNet [] proposed generating redundant feature maps through cheap linear operations, achieving significant parameter reduction. The EfficientNets [,] developed compound scaling methods that systematically balance network depth, width, and resolution for optimal efficiency–accuracy trade-offs. Nonetheless, most of these approaches focus primarily on reducing the number of parameters and FLOPs, and they suffer from increased memory access overhead when network width is scaled up to compensate for accuracy degradation. FasterNet [] represents a shift in focus by directly targeting memory bottlenecks through partial convolution, which processes only a subset of channels. However, its content-agnostic channel selection may discard informative features. We address both efficiency dimensions by proposing CFConv with learnable channel selection to simultaneously reduce FLOPs and optimize memory access patterns.

2.2. Channel Interaction Mechanisms

Beyond optimizing FLOPs and the number of parameters, lightweight CNN design has increasingly focused on efficient channel interaction and spatial information exchange. Squeeze-and-Excitation networks [] introduced explicit channel attention mechanisms but with additional computational overhead, while ECA-Net [] proposed more efficient attention with reduced parameters through local cross-channel interactions. Beyond simple channel attention, SKNet [] introduced a selective kernel mechanism with multi-branch soft attention, enabling dynamic receptive field selection through feature-level gating. ShuffleNet [] employed channel shuffling to facilitate information exchange within group convolutions, and reparameterization techniques [] have shown promise in improving training dynamics while maintaining inference efficiency. However, existing approaches struggle to balance computational efficiency with cross-channel communication, often relying on heuristic channel selection that may discard informative features or introduce memory access overhead. Our proposed CFConv addresses these limitations by leveraging learnable Group Normalization scaling factors to intelligently select channels, while CMU facilitates information flow through an efficient Split-Transform-and-Mix strategy.

2.3. Reparameterization Techniques

Structural reparameterization has emerged as an effective technique for decoupling training-time and inference-time architectures. RepVGG [] pioneered this approach by demonstrating that multi-branch structures during training can be algebraically merged into a single convolution during inference without loss of representational capacity. This technique enables networks to benefit from rich training dynamics while maintaining deployment efficiency. Subsequent works have extended reparameterization to various contexts. RepMLP [] applies structural reparameterization to MLP-based architectures. ACNet [] explores asymmetric convolution blocks that strengthen kernel skeletons. DBB [] introduces diverse branch blocks incorporating multiple convolution types, and MobileOne [] demonstrates efficient mobile deployment through carefully designed branch structures. However, most existing reparameterization methods apply uniform transformations across all channels without considering channel importance variations. In contrast, our method incorporates reparameterization specifically into the informative channels selected through a learnable mechanism, thereby enhancing feature learning capacity while maintaining high inference efficiency.

3. Methodology

The persistent gap between theoretical efficiency gains and practical speedup in lightweight CNNs stems from overlooking memory access patterns, the true bottleneck in resource-constrained deployment. To bridge this divide, we introduce a comprehensive framework addressing efficiency from both computational and memory perspectives. We begin with the Computation-to-Memory Access Ratio (CMAR), a principled metric that reveals why conventional architectures fail to achieve proportional speedup despite dramatic FLOP reduction. Building upon this analysis, we propose Channel-Focused Convolution (CFConv), which leverages Group Normalization scaling factors to identify and process information-rich channels, reducing both computational redundancy and memory access. To address the resulting constraint on cross-channel information flow, we introduce the Channel Mixed Unit (CMU) with a split-transform-and-mix strategy that restores efficient inter-channel communication. These components are integrated into CFNet, an architecture that achieves better speed-accuracy trade-offs by addressing both computational and memory constraints. The detailed algorithmic procedures for both CFConv and CMU are provided in Appendix A.

3.1. Preliminary

Building on the observation that memory access bottlenecks limit practical speedup, we provide a mathematical framework to quantify this phenomenon. Unlike previous approaches that address memory access through architectural modifications, we analyze the fundamental trade-offs between computational intensity and memory bandwidth requirements. We introduce the Computation-to-Memory Access Ratio (CMAR) as a metric to analyze the efficiency bottlenecks in different convolution operations. This metric reflects the proportional relationship between FLOPs and memory access during model inference:

$$\mathrm{CMAR} = \frac{\mathrm{FLOPs}}{M},$$

where the memory access volume $M$ includes all data movement:

$$M = M_{\mathrm{in}} + M_{\mathrm{out}} + M_{\mathrm{kernel}},$$

where $M_{\mathrm{in}}$, $M_{\mathrm{out}}$, and $M_{\mathrm{kernel}}$ are the data volumes of the input feature maps, output feature maps, and convolution kernels, respectively.

A higher CMAR indicates computation-intensive operations, while a lower CMAR signifies memory-bound operations. Since a memory access typically takes longer than an arithmetic operation, low-CMAR scenarios significantly increase inference latency. This represents the primary bottleneck for lightweight models in practical deployment, particularly on devices running I/O-intensive tasks, where efficiency is further compromised under memory-bound conditions.

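For a regular convolution with an input of size $h \times w \times c$ and $c$ filters of size $k \times k$, the CMAR can be calculated as

$$\mathrm{CMAR}_{\mathrm{Conv}} = \frac{h w k^{2} c^{2}}{2 h w c + k^{2} c^{2}} \approx \frac{k^{2} c}{2},$$

where the approximation holds when feature-map traffic dominates kernel traffic.

Depthwise separable convolution decomposes the regular convolution into depthwise convolutions (DWConv) and pointwise convolutions (PWConv). The CMAR of depthwise separable convolution can be calculated as

$$\mathrm{CMAR}_{\mathrm{DSConv}} = \frac{h w k^{2} c + h w c^{2}}{4 h w c + k^{2} c + c^{2}} \approx \frac{k^{2} + c}{4}.$$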

Depthwise separable convolution reduces FLOPs by approximately 9× (assuming k = 3) compared to regular convolution. However, the decomposition into DWConv and PWConv significantly increases feature map accesses, with memory access volume roughly doubling due to the necessity of storing intermediate features between DWConv and PWConv. Consequently, the CMAR decreases by roughly 18× compared to regular convolution, making operations more memory-bound and explaining why theoretical efficiency gains often fail to materialize in practice.
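The comparison above can be checked numerically. The following is a minimal sketch under a simple cost model that is an assumption here, not the paper's exact accounting: FLOPs are counted as multiply-accumulates, and memory access as the number of elements in the input feature map, output feature map, and kernel weights. The function names and the layer size are hypothetical.

```python
def conv_cost(h, w, c_in, c_out, k, groups=1):
    """Return (flops, memory_access) for one stride-1 convolution layer.

    flops: multiply-accumulate count.
    memory_access: elements moved (input map + output map + kernel weights).
    """
    weights = k * k * (c_in // groups) * c_out
    flops = h * w * weights
    memory_access = h * w * c_in + h * w * c_out + weights
    return flops, memory_access


def cmar(layers):
    """Computation-to-memory access ratio of a sequence of layers."""
    total_flops = sum(f for f, _ in layers)
    total_mem = sum(m for _, m in layers)
    return total_flops / total_mem


# Hypothetical layer: 224 x 224 feature map, 256 channels, 3 x 3 kernel.
H, W, C, K = 224, 224, 256, 3

regular = [conv_cost(H, W, C, C, K)]
separable = [
    conv_cost(H, W, C, C, K, groups=C),  # depthwise 3 x 3
    conv_cost(H, W, C, C, 1),            # pointwise 1 x 1
]

flops_ratio = regular[0][0] / sum(f for f, _ in separable)
memory_ratio = sum(m for _, m in separable) / regular[0][1]
cmar_ratio = cmar(regular) / cmar(separable)

print(f"FLOPs reduction:        {flops_ratio:.2f}x")
print(f"Memory access increase: {memory_ratio:.2f}x")
print(f"CMAR reduction:         {cmar_ratio:.2f}x")
```

For this layer the three ratios come out slightly below the asymptotic 9×, 2×, and 18× figures, which are approached as the channel count grows relative to k² and feature maps dominate memory traffic.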

3.2. Channel-Focused Convolution (CFConv) as an Operator

To achieve computation-efficient inference with optimized memory usage, we propose channel-focused convolution (CFConv), which builds upon the partial convolution paradigm but introduces a principled channel selection mechanism. Unlike existing partial convolution approaches that rely on random or uniform channel selection, CFConv leverages the scaling factors in Group Normalization (GN) [] layers as indicators of channel importance, enabling intelligent identification of information-rich channels for focused computation. Additionally, CFConv incorporates reparameterization techniques to reduce inference latency while maintaining training flexibility.

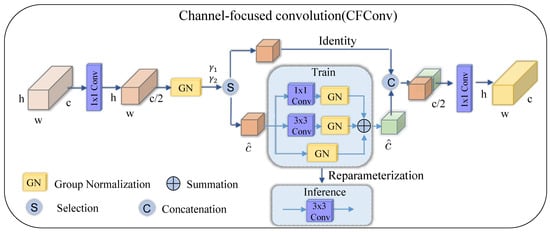

Specifically, as illustrated in Figure 2, for an input feature map with c channels, we first utilize PWConv with a channel reduction ratio (typical in our experiments) to squeeze feature channels for computational efficiency and information fusion. We then leverage Group Normalization to divide channels into r groups and normalize each group independently (group factor is a typical setting in our experiments). The normalization process for a GN layer [] is

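$$\hat{x} = \gamma \cdot \frac{x - \mu_g}{\sqrt{\sigma_g^{2} + \epsilon}} + \beta,$$

where $x$ is an activation belonging to group $g$, $\mu_g$ and $\sigma_g$ are the mean and standard deviation of group $g$, respectively, $\epsilon$ is a small constant for numerical stability, and $\gamma$ and $\beta$ are the trainable affine scale and shift parameters.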

Figure 2.

The architecture of channel-focused convolution (CFConv).

Notably, we leverage the trainable scaling parameters $\gamma$ in GN layers as indicators of channel importance. During training, larger $\gamma$ values indicate channels requiring stronger scaling to maintain discriminative power after normalization. We select the group with the highest accumulated $\gamma$ for enhanced processing through multi-branch convolutions consisting of $3 \times 3$ and $1 \times 1$ convolutions and an identity mapping. These three distinct branches significantly enhance the feature extraction capability for informative channels by capturing features at different scales and complexities. The remaining groups undergo identity transformation and are concatenated with the branch outputs. Finally, 1 × 1 PWConv operations are applied to restore the output feature maps to match the input dimensionality.
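The group selection step described above can be sketched as follows. This is an illustrative sketch, not the authors' implementation: it assumes contiguous, equally sized channel groups (as in Group Normalization) and scores each group by the sum of the magnitudes of its scaling factors. The function names and the example values are hypothetical.

```python
def select_informative_group(gamma, num_groups):
    """Pick the channel group with the largest accumulated |gamma|.

    gamma: per-channel GroupNorm scaling factors (list of floats).
    num_groups: number of contiguous, equally sized channel groups.
    Returns (group_index, channel_indices, group_scores).
    """
    if num_groups <= 0 or len(gamma) % num_groups != 0:
        raise ValueError("channel count must be divisible by num_groups")
    size = len(gamma) // num_groups
    scores = [
        sum(abs(g) for g in gamma[i * size:(i + 1) * size])
        for i in range(num_groups)
    ]
    best = max(range(num_groups), key=lambda i: scores[i])
    channels = list(range(best * size, (best + 1) * size))
    return best, channels, scores


def split_channels(feature_map, channels):
    """Split a channel-first feature map into (selected, remaining) lists."""
    chosen = set(channels)
    selected = [feature_map[i] for i in channels]
    remaining = [ch for i, ch in enumerate(feature_map) if i not in chosen]
    return selected, remaining


# Hypothetical scaling factors for 8 channels divided into 4 groups.
gamma = [0.1, 0.2, 0.9, 1.1, 0.3, 0.2, 0.05, 0.4]
group, channels, scores = select_informative_group(gamma, num_groups=4)
print(group, channels)  # → 1 [2, 3]

# Channel labels stand in for feature maps to show the split.
selected, remaining = split_channels(list("abcdefgh"), channels)
```

Only the selected channels would then go through the multi-branch convolutions, while the remaining channels pass through unchanged before concatenation.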

3.2.1. Inference Optimization

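While the multi-branch architecture effectively enhances learning capacity, it suffers from lower inference efficiency. Following RepVGG [], we employ reparameterization to merge the multi-branch convolutions into a single convolution during inference. Specifically, for the multi-branch structure with $3 \times 3$ and $1 \times 1$ convolutions and identity mapping, each followed by Batch Normalization (BN), we first fuse the BN parameters into the convolution weights as in RepVGG []:

$$W' = \frac{\gamma}{\sigma} W, \qquad b' = \beta - \frac{\mu \gamma}{\sigma},$$

where $\mu$, $\sigma$, $\gamma$, and $\beta$ are the BN parameters (running mean, standard deviation, scale, and shift). Then, leveraging the additive property of convolutions, the three branches are merged as

$$W_{\mathrm{rep}} = W'_{3 \times 3} + \mathrm{Pad}(W'_{1 \times 1}) + W'_{\mathrm{id}}, \qquad b_{\mathrm{rep}} = b'_{3 \times 3} + b'_{1 \times 1} + b'_{\mathrm{id}},$$

where $\mathrm{Pad}(\cdot)$ converts the $1 \times 1$ kernel to $3 \times 3$ by zero-padding around it, and $W'_{\mathrm{id}}$ is the BN-fused $3 \times 3$ kernel with 1 at the center that represents the identity branch. This transforms the complex training architecture into a simplified single-branch model for deployment, maintaining representational capacity while achieving computational efficiency.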
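The merging procedure can be verified numerically. The sketch below follows the standard RepVGG-style fusion and assumes 3 × 3 and 1 × 1 convolution branches plus an identity branch, each followed by inference-mode BN. It uses plain Python lists and a naive convolution for clarity, and all function names are hypothetical.

```python
import random

EPS = 1e-5

def conv2d(x, w, b):
    """Naive stride-1 'same' convolution.
    x: [C_in][H][W], w: [C_out][C_in][k][k] with odd k, b: [C_out]."""
    h, wd, k = len(x[0]), len(x[0][0]), len(w[0][0])
    pad = k // 2
    out = []
    for o in range(len(w)):
        plane = [[b[o]] * wd for _ in range(h)]
        for i in range(len(x)):
            for y in range(h):
                for c in range(wd):
                    for dy in range(k):
                        for dx in range(k):
                            sy, sx = y + dy - pad, c + dx - pad
                            if 0 <= sy < h and 0 <= sx < wd:
                                plane[y][c] += w[o][i][dy][dx] * x[i][sy][sx]
        out.append(plane)
    return out

def batch_norm(x, bn):
    """Inference-mode BN with per-channel (gamma, beta, mean, var)."""
    out = []
    for ch, (g, bt, mu, var) in zip(x, bn):
        s = g / (var + EPS) ** 0.5
        out.append([[s * (v - mu) + bt for v in row] for row in ch])
    return out

def fuse_bn(w, bn):
    """Fold BN into a bias-free conv: W' = (gamma/sigma) W, b' = beta - mu*gamma/sigma."""
    w_f, b_f = [], []
    for w_o, (g, bt, mu, var) in zip(w, bn):
        s = g / (var + EPS) ** 0.5
        w_f.append([[[s * v for v in row] for row in ker] for ker in w_o])
        b_f.append(bt - mu * s)
    return w_f, b_f

def pad_1x1_to_3x3(w):
    """Embed each 1x1 kernel at the center of a zero 3x3 kernel."""
    return [[[[0.0, 0.0, 0.0], [0.0, ker[0][0], 0.0], [0.0, 0.0, 0.0]]
             for ker in w_o] for w_o in w]

def identity_3x3(channels):
    """3x3 kernel reproducing its input: 1 at the center where in == out."""
    return [[[[0.0, 0.0, 0.0], [0.0, 1.0 if i == o else 0.0, 0.0], [0.0, 0.0, 0.0]]
             for i in range(channels)] for o in range(channels)]

def add(a, b):
    """Element-wise sum of two equally shaped nested lists."""
    if isinstance(a, list):
        return [add(p, q) for p, q in zip(a, b)]
    return a + b

def rand_tensor(rng, *shape):
    if len(shape) == 1:
        return [rng.uniform(-1.0, 1.0) for _ in range(shape[0])]
    return [rand_tensor(rng, *shape[1:]) for _ in range(shape[0])]

def random_bn(channels, rng):
    return [(rng.uniform(0.5, 1.5), rng.uniform(-0.5, 0.5),
             rng.uniform(-0.5, 0.5), rng.uniform(0.5, 1.5)) for _ in range(channels)]

def max_reparam_error(channels=2, size=5, seed=0):
    """Largest absolute difference between multi-branch and merged outputs."""
    rng = random.Random(seed)
    x = rand_tensor(rng, channels, size, size)
    w3 = rand_tensor(rng, channels, channels, 3, 3)
    w1 = rand_tensor(rng, channels, channels, 1, 1)
    bn3, bn1, bn_id = (random_bn(channels, rng) for _ in range(3))
    zero = [0.0] * channels
    # Training-time form: three branches, each followed by BN, then summed.
    y_train = add(add(batch_norm(conv2d(x, w3, zero), bn3),
                      batch_norm(conv2d(x, w1, zero), bn1)),
                  batch_norm(x, bn_id))
    # Inference-time form: one 3x3 convolution with merged weights and bias.
    k3, b3 = fuse_bn(w3, bn3)
    k1, b1 = fuse_bn(pad_1x1_to_3x3(w1), bn1)
    ki, bi = fuse_bn(identity_3x3(channels), bn_id)
    y_infer = conv2d(x, add(add(k3, k1), ki), add(add(b3, b1), bi))
    flat = lambda t: [v for ch in t for row in ch for v in row]
    return max(abs(p - q) for p, q in zip(flat(y_train), flat(y_infer)))

print(max_reparam_error())
```

The printed value should sit at floating-point rounding level, which indicates that the single merged convolution reproduces the three-branch output.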

3.2.2. Analysis on Complexities

During inference, our CFConv only considers channels as the representatives of the whole feature maps for computation. The CMAR of CFConv is given by

With a typical partial ratio , CFConv reduces FLOPs to and memory access volume to of regular convolution, demonstrating significant efficiency gains. Correspondingly, CFConv achieves a 4× lower CMAR than regular convolution. More significantly, compared to depthwise separable convolution, CFConv achieves over 4× higher CMAR, demonstrating considerable theoretical advantages in computation-to-memory access efficiency.
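The relative figures above can be illustrated with a small calculation. This sketch rests on several assumptions that are not taken from the source: a partial ratio of 1/4, a cost model that counts only the k × k convolution on the selected channels (ignoring the PWConv and GN layers), and no memory traffic for the channels that pass through unchanged. The function name and the layer size are hypothetical.

```python
def conv_cost(h, w, c_in, c_out, k, groups=1):
    """Return (flops, memory_access) for one stride-1 convolution layer."""
    weights = k * k * (c_in // groups) * c_out
    flops = h * w * weights
    memory_access = h * w * c_in + h * w * c_out + weights
    return flops, memory_access


# Hypothetical layer: 224 x 224 feature map, 256 channels, 3 x 3 kernel.
H, W, C, K = 224, 224, 256, 3
C_SELECTED = C // 4  # assumed partial ratio of 1/4

reg_flops, reg_mem = conv_cost(H, W, C, C, K)
par_flops, par_mem = conv_cost(H, W, C_SELECTED, C_SELECTED, K)

dw_flops, dw_mem = conv_cost(H, W, C, C, K, groups=C)  # depthwise
pw_flops, pw_mem = conv_cost(H, W, C, C, 1)            # pointwise
sep_flops, sep_mem = dw_flops + pw_flops, dw_mem + pw_mem

cmar_regular = reg_flops / reg_mem
cmar_partial = par_flops / par_mem
cmar_separable = sep_flops / sep_mem

print(f"FLOPs vs regular:          1/{reg_flops // par_flops}")
print(f"Memory access vs regular:  {par_mem / reg_mem:.3f}")
print(f"CMAR regular / partial:    {cmar_regular / cmar_partial:.2f}x")
print(f"CMAR partial / separable:  {cmar_partial / cmar_separable:.2f}x")
```

Under these assumptions the partial operator performs 1/16 of the FLOPs of the regular convolution and about a quarter of its memory access, so its CMAR is roughly 4× lower than that of regular convolution and a little more than 4× higher than that of depthwise separable convolution.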

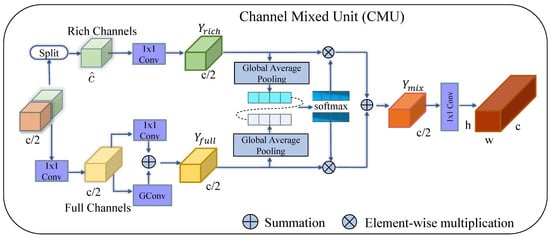

3.3. Channel Mixed Unit

Although CFConv can effectively replace various convolution operations while maintaining sufficient model accuracy, it inevitably suffers from information loss across feature channels, similar to other lightweight networks that employ partial convolution methods. To overcome the side effects of limited channel communication, we propose a novel channel mixed unit (CMU) to facilitate information flow across feature channels, as illustrated in Figure 3. CMU utilizes a Split-Transform-and-Mix strategy to enhance cross-channel information exchange while maintaining computational efficiency.

Figure 3.

The architecture of channel mixed unit (CMU).

3.3.1. Split

The CMU module receives the concatenated outputs from CFConv’s multiple branches. We first split the channels into two distinct parts with different characteristics. The first part consists of the channels that have been processed by CFConv’s selective convolution operations, serving as “Rich Feature Channels” that contain enhanced and refined feature representations. The second part comprises all the original channels before selective processing, serving as “Full Channels” that preserve the complete feature information without selective processing.

3.3.2. Transform

For “Rich Feature Channels”, we utilize PWC operations to squeeze the channels of feature maps to channels. For “Full Channels”, we first utilize PWC operations to diffuse the channel information. The obtained channels are then subjected to pointwise convolutions and grouped convolutions, respectively, followed by the operation of element-wise addition on the two obtained feature maps.

3.3.3. Mix

After the transformation, we apply global average pooling to and to collect global spatial information, yielding two weight tensors. These tensors are then concatenated along the channel dimension to form . Following the soft attention mechanism in SKNet [], we apply channel-wise soft attention operation to generate feature importance vectors as

Under the guidance of the feature importance vectors , the mixed features can be obtained by combining the rich and full features as

where and represent the transformed rich and full feature channels, respectively, and ⊙ denotes element-wise multiplication.

In brief, we adopt CMU to compensate for the limitation of CFConv, which loses part of the feature channel information flow during partial convolution operations. The rich features provide enhanced representations through selective processing, while the full features preserve complete information diversity.
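The Mix step can be sketched as follows. This is a minimal illustration assuming an SKNet-style per-channel softmax over the two branches, with the pooled channel statistics used directly as logits. The tensor layout (nested lists in channel-first order) and all function names are hypothetical.

```python
import math

def global_avg_pool(x):
    """x: [C][H][W] nested lists -> list of C channel means."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in x]

def branch_softmax(s_rich, s_full):
    """Per-channel softmax over the two branches; returns (beta_rich, beta_full)."""
    beta_rich, beta_full = [], []
    for a, b in zip(s_rich, s_full):
        m = max(a, b)  # subtract the max for numerical stability
        ea, eb = math.exp(a - m), math.exp(b - m)
        beta_rich.append(ea / (ea + eb))
        beta_full.append(eb / (ea + eb))
    return beta_rich, beta_full

def mix(rich, full):
    """Blend two equally shaped feature maps with channel-wise soft attention."""
    beta_rich, beta_full = branch_softmax(global_avg_pool(rich),
                                          global_avg_pool(full))
    return [
        [[br * r + bf * f for r, f in zip(r_row, f_row)]
         for r_row, f_row in zip(r_ch, f_ch)]
        for r_ch, f_ch, br, bf in zip(rich, full, beta_rich, beta_full)
    ]

# Two channels of 2 x 2 features; channel 0 is identical in both branches.
rich = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, 0.0], [0.0, 0.0]]]
full = [[[1.0, 2.0], [3.0, 4.0]], [[2.0, 2.0], [2.0, 2.0]]]
mixed = mix(rich, full)
print(mixed[0])
print(mixed[1])
```

Because the two importance weights of each channel sum to one, a channel that is identical in both branches is returned unchanged, while a channel that differs is pulled toward the branch with the larger pooled response.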

3.4. CFNet as a General Backbone

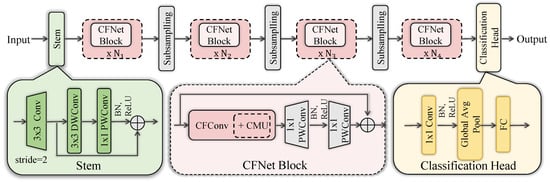

Given our novel CFConv operator and Channel Mixed Unit as the primary building module, we further propose CFNet, a lightweight neural network that achieves much lower latency and high accuracy for image classification and object detection tasks. We aim to keep the architecture as simple as possible, without bells and whistles, to make it hardware-friendly in general.

We present the overall architecture in Figure 4. It begins with a Stem module for initial feature extraction, followed by four hierarchical stages. Each stage has a stack of CFNet Blocks that incorporate our proposed CFConv operations. We distribute the CFNet Blocks across the four stages in a 1:1:3:1 ratio. Taking CFNet-A as an example with 18 convolution blocks in total, this yields [3, 3, 9, 3] CFNet Blocks per stage respectively. After each stage, a subsampling layer (a 2 × 2 Conv with stride 2) performs spatial downsampling and channel number expanding.

Figure 4.

Overall architecture of CFNet. The network comprises four stages with CFNet blocks in a 1:1:3:1 ratio. Each block integrates CFConv for selective channel processing and CMU (hybrid ratio = 1/4) for cross-channel communication. Subsampling layers perform spatial downsampling between stages.

Each CFNet Block has a CFConv layer integrated with efficient design principles. The channel dimensions are set as [32, 64, 128, 256] across the four stages, following the pyramid principle commonly adopted in modern architectures. To balance efficiency and performance, we deploy CMU selectively with a hybrid ratio (1/4 is a typical setting in our experiments) relative to CFConv operators and at each stage end. The network concludes with global average pooling and a fully-connected layer for classification.
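The stage layout described above can be written down as a small configuration helper. This is a sketch only: the function names and the dictionary layout are hypothetical, and CMU placement is left out because its exact position within a stage is not fully specified here.

```python
def stage_depths(total_blocks, ratio=(1, 1, 3, 1)):
    """Distribute blocks across stages according to the given ratio."""
    unit, remainder = divmod(total_blocks, sum(ratio))
    if remainder:
        raise ValueError("total_blocks must be a multiple of sum(ratio)")
    return [unit * r for r in ratio]


def build_stage_config(total_blocks, channels=(32, 64, 128, 256)):
    """Pair each stage's block count with its channel dimension."""
    depths = stage_depths(total_blocks)
    return [
        {"stage": i + 1, "blocks": d, "channels": c}
        for i, (d, c) in enumerate(zip(depths, channels))
    ]


# CFNet-A example from the text: 18 blocks in total.
config = build_stage_config(18)
for stage in config:
    print(stage)
```

For 18 blocks the helper yields [3, 3, 9, 3] blocks per stage, matching the CFNet-A example.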

4. Experimental Results

In this section, we evaluate the proposed neural network CFNet through experiments on image classification, object detection and instance segmentation. We provide various CFNet scales (A/B/C/D/E) with similar architectures but different depths to accommodate diverse computational budgets.

4.1. Experimental Settings

4.1.1. Tasks and Datasets

- Image Classification. The CIFAR-10, CIFAR-100 [], and ImageNet-1k datasets [] are used for image classification tasks. We train our models for 300 epochs using the AdamW optimizer. We set the batch size to 2048 for CFNet and all baseline methods. The cosine learning rate scheduler is used with a peak learning rate of 0.001 · batch size/1024 and 20-epoch linear warmup. Standard regularization and augmentation techniques are applied, including Weight Decay, Stochastic Depth, Label Smoothing, Mixup, Cutmix and RandAugment, with parameters tuned for different baselines. For ImageNet-1k, we train with 192 × 192 resolution for the first 280 epochs and 224 × 224 for the final 20 epochs to improve training efficiency.

- Object Detection and Instance Segmentation. COCO dataset [] is used for object detection and instance segmentation tasks. CFNet-D and CFNet-E were utilized as the backbone within a standard Mask R-CNN framework. The model is pre-trained on ImageNet-1k and subsequently fine-tuned on COCO. Training is conducted with the AdamW optimizer, using a batch size of 16.
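The classification training schedule can be sketched as follows. The peak learning rate rule, warmup length, epoch count, and resolution switch come from the text. Stepping once per epoch, starting warmup at peak/warmup_epochs, and decaying to zero are assumptions made for illustration, and the function names are hypothetical.

```python
import math

def peak_lr(batch_size, base_lr=0.001, reference_batch=1024):
    """Linear scaling rule: 0.001 * batch_size / 1024."""
    return base_lr * batch_size / reference_batch


def lr_at_epoch(epoch, total_epochs=300, warmup_epochs=20, batch_size=2048):
    """Linear warmup followed by cosine decay (epoch is 0-indexed)."""
    peak = peak_lr(batch_size)
    if epoch < warmup_epochs:
        # Assumed warmup shape: ramp linearly up to the peak.
        return peak * (epoch + 1) / warmup_epochs
    progress = (epoch - warmup_epochs) / (total_epochs - warmup_epochs)
    return 0.5 * peak * (1.0 + math.cos(math.pi * progress))


def train_resolution(epoch, total_epochs=300, final_epochs=20):
    """192 x 192 for the first epochs, 224 x 224 for the final ones."""
    return 192 if epoch < total_epochs - final_epochs else 224


for epoch in (0, 19, 20, 160, 279, 280, 299):
    print(epoch, round(lr_at_epoch(epoch), 6), train_resolution(epoch))
```

With a batch size of 2048 the peak learning rate is 0.002, reached at the end of warmup, and the input resolution switches from 192 to 224 at epoch 280.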

4.1.2. Hardware Configuration

A system equipped with four NVIDIA GeForce GTX 1080 Ti GPUs is used for experiments on the CIFAR-10 and CIFAR-100 datasets. A more powerful setup, featuring a 64-core Intel Xeon Gold 6226R CPU and four NVIDIA GeForce RTX 3090 GPUs with 24 GB of memory each, is used for resource-intensive experiments, including model training and evaluation on the ImageNet-1k and COCO datasets. Additionally, to evaluate the practical advantages of our method in real-world deployment scenarios, we conducted ARM Cortex-A simulation experiments using a Xilinx Zynq UltraScale+ MPSoC development board (Xilinx, Inc., San Jose, CA, USA) equipped with quad-core ARM Cortex-A53 processors running at 1.2 GHz and dual-core ARM Cortex-R5 processors operating at 500 MHz.

4.2. Image Classification on CIFAR

In the image classification task, we evaluate our CFNet against baseline methods on the CIFAR-10/100 datasets using top-1 accuracy, the number of parameters, FLOPs, and GPU throughput. The baseline approaches include MobileNet [], ShuffleNet [], GhostNet [], MobileViTv1 [], CycleMLP [], EdgeNeXt [], and Swin Transformer [].

As shown in Table 1, our CFNet variants (A/B/C) consistently outperform all baseline models on both accuracy and throughput, demonstrating the superiority of the proposed architecture. Given the consistent performance trends across both datasets, we focus our analysis on CIFAR-100 for its finer-grained 100-class classification. Specifically, CFNet-C outperforms ResNet-50 by 0.43% in accuracy and 98.5% in throughput. Notably, CFNet-D shows a remarkable 111% throughput boost relative to ResNet-101, while achieving a 0.12% accuracy improvement. Compared to the state-of-the-art ResNeXt-101, CFNet-D achieves over 3× higher throughput with only a marginal 0.09% accuracy trade-off.

Table 1.

Image Classification Results on CIFAR-10 and CIFAR-100.

Furthermore, to provide a comprehensive comparison, we plot the GPU throughput vs. Top-1 accuracy curve on CIFAR-100 in Figure 5. The results show that CFNet consistently outperforms all competing methods including ResNet, MobileNet, ShuffleNet, GhostNet, CycleMLP, and MobileViTv1 across the entire accuracy-throughput spectrum. This clearly demonstrates that CFNet achieves superior balance between accuracy and inference efficiency among all examined networks, making it a highly effective lightweight architecture for resource-constrained deployment scenarios.

Figure 5.

Top-1 accuracy vs. GPU throughput on CIFAR-100.

4.3. Image Classification on ImageNet

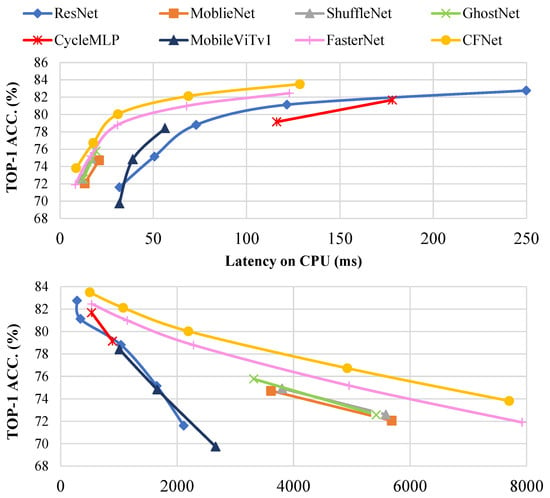

To verify CFNet’s effectiveness, we conduct experiments on ImageNet-1k classification. As shown in Table 2, CFNet achieves strong inference performance on both CPU and GPU platforms while maintaining superior accuracy compared to mainstream CNN, ViT, and MLP models. CFNet-A delivers 4× higher throughput than ResNet-18 with a 2.19% accuracy improvement, and outperforms MobileNetV2 and MobileViTv1-XXS by 35.5% and 189.4% in throughput with 1.76% and 4.09% accuracy gains, respectively. Although CFNet-A and MobileNetV2 have similar FLOPs at 0.41 G and 0.36 G, respectively, the 35.5% throughput gap indicates that MobileNetV2’s depthwise separable convolutions create memory access bottlenecks. As analyzed in Section 3.1, depthwise decomposition reduces CMAR by 18× compared to regular convolution, whereas CFNet maintains over 4× higher CMAR than depthwise separable convolution through intelligent channel selection, successfully bridging the gap between theoretical efficiency and practical hardware performance. CFNet-D achieves over 2× higher throughput than ConvNeXt-T with only a 0.03% accuracy reduction. CFNet-E achieves an accuracy of 83.50%, which is comparable to state-of-the-art models such as Swin-S and ConvNeXt-S, while delivering GPU throughput improvements of 44.6% and 33.8%, respectively, and reducing CPU inference latency by 43.6% and 23.2%, respectively. These results demonstrate CFNet’s significant acceleration on both GPU and CPU platforms while maintaining scalability from lightweight to high-performance models through network depth scaling.

Table 2.

Image Classification Results on ImageNet-1k.

Additionally, we include FasterNet, an efficient lightweight network utilizing partial convolution, as a competitive baseline. As shown in Figure 6, the proposed CFNet achieves nearly identical inference performance while delivering a significant accuracy improvement exceeding 1%. The accuracy gap reveals fundamental differences in channel selection strategies. FasterNet employs random or contiguous selection that is content-agnostic and may inadvertently discard informative features. In contrast, CFNet leverages learnable GroupNorm scaling factors to identify channels with high information content, ensuring that computational resources concentrate on semantically rich features. Furthermore, our CMU module explicitly facilitates cross-channel communication to restore information flow restricted by partial processing, enabling CFNet to preserve representational capacity without compromising efficiency.

Figure 6.

Top-1 accuracy vs. CPU latency and GPU throughput on ImageNet-1k.

4.4. Object Detection and Instance Segmentation

To further evaluate the generalization ability of CFNet, we conduct experiments on the challenging COCO dataset [] for object detection and instance segmentation.

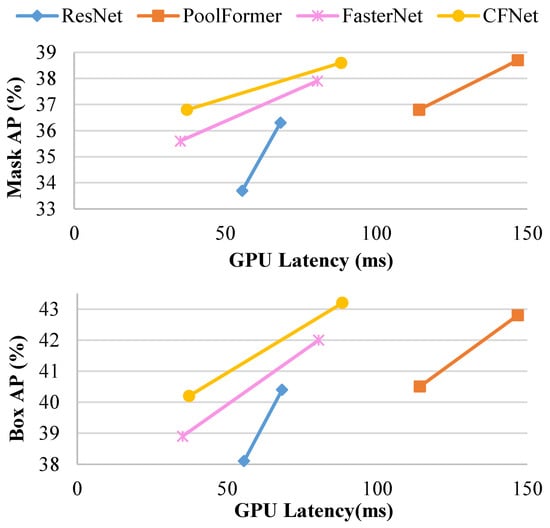

We compare CFNet against ResNet, PoolFormer, and FasterNet as baseline methods. As shown in Table 3, the and the of CFNet-D are 40.2% and 36.8%, outperforming original ResNet-50 by 2.1% and 3.1% while reducing inference latency by 33%. Compared to the high-accuracy ViT framework PoolFormer-S24, our CFNet-D achieves only 0.3% lower while reducing inference latency by 67%. Against PoolFormer-S36, our CFNet-E delivers 0.4% higher with 40% lower inference latency.

Table 3.

Object Detection and Instance Segmentation Results on COCO.

Overall, as shown in Figure 7, the curve of our proposed model lies above those of all other methods. Compared to the fast-inference FasterNet, our method achieves approximately 1% improvement in average precision with comparable inference speed. This further demonstrates that CFNet enhances model learning capability while maintaining computational efficiency.

Figure 7.

Average Precision vs. GPU Latency on COCO.

4.5. Practical Deployment Analysis

To better demonstrate the practical advantages of our method in real-world deployment scenarios, we conduct comprehensive evaluation experiments on three hardware platforms: GPU, CPU, and ARM Cortex-A simulation using the ImageNet-1k dataset. During deployment, all networks are trained with knowledge distillation methods including KD, DKD, and ReviewKD. The selected lightweight networks for comparison include the baseline ResNet18, MobileNetV2, RepVGG-A0, FasterNet-A, and our proposed CFNet-A.

As shown in Table 4, our proposed CFNet-A demonstrates superior performance across different hardware platforms. On GPU, CFNet-A achieves approximately 1.7× higher throughput than ResNet18 while delivering nearly 3% higher accuracy (using ReviewKD as the distillation method). On CPU devices, CFNet-A matches FasterNet-A’s inference latency and is 0.7 ms faster than the second-best RepVGG-A0, benefiting from reduced memory access pressure that allows our design optimizations to be more effectively utilized. On ARM platforms, CFNet-A demonstrates exceptional efficiency with 57 ms lower latency compared to the baseline ResNet18. Although CFNet-A is 3 ms slower than FasterNet-A, its substantial accuracy advantage makes it more suitable for edge deployment scenarios where both speed and accuracy are critical. These cross-platform results confirm that CFNet-A delivers a favorable balance between accuracy and inference efficiency across diverse hardware platforms, while knowledge distillation further enhances its practical applicability in real-world applications.

Table 4.

Performance Evaluation on Different Hardware Platforms.

4.6. Ablation Study

We conduct a brief ablation study on the channel reduction ratio, the hybrid ratio, and the group factor r. We compare different variants in terms of CIFAR-100 top-1 accuracy, number of parameters, FLOPs, and GPU throughput, with results detailed in Table 5. Different hyperparameter settings exhibit varying impacts on model performance.

Table 5.

Ablation on the channel reduction ratio, the hybrid ratio, and the group factor r of CFNet.

The results in Table 5 illuminate the performance trade-offs governed by these parameters. The channel reduction ratio demonstrates a clear efficiency–accuracy trade-off, where provides the optimal balance—significantly reducing computational cost while maintaining competitive accuracy compared to more aggressive compression. Similarly, the group factor r directly controls channel selection granularity, where achieves maximal throughput but with significant accuracy reduction, while improves accuracy at substantial computational cost. This establishes as a robust middle ground. Similarly, the hybrid ratio reveals that while removing CMU entirely maximizes speed, it severely degrades accuracy. The setting proves optimal, recovering most accuracy loss with minimal latency impact. These analyses provide valuable insights for different deployment scenarios, where configurations like , , offer viable high-efficiency options for extremely resource-constrained edge environments.

Our ablation study validates that the default configuration with , , achieves an optimal efficiency–accuracy balance through principled design. These choices create a robust operating point where CFNet maintains competitive advantages across various tasks and datasets, demonstrating that our architectural innovations rather than parameter tuning drive the performance gains.
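The efficiency–accuracy trade-off discussed in this ablation can be made concrete by checking which variants are not dominated on both metrics at once. The sketch below is illustrative only: the function `pareto_front` and the variant names are hypothetical, and the numbers are placeholders that are not taken from Table 5.

```python
def pareto_front(variants):
    """Return names of variants not dominated in (accuracy, throughput).

    `variants` maps a name to (top-1 accuracy, throughput); higher is
    better for both. A variant is dominated if another one is at least
    as good on both metrics and strictly better on one.
    """
    front = []
    for name, (acc, thr) in variants.items():
        dominated = any(
            a >= acc and t >= thr and (a > acc or t > thr)
            for other, (a, t) in variants.items()
            if other != name
        )
        if not dominated:
            front.append(name)
    return sorted(front)


# Placeholder numbers for illustration only; they are NOT taken from Table 5.
hypothetical = {
    "variant_a": (76.0, 9000.0),
    "variant_b": (77.5, 7000.0),
    "variant_c": (75.0, 6500.0),  # dominated by variant_a and variant_b
    "variant_d": (78.0, 4000.0),
}
front = pareto_front(hypothetical)
```

A default configuration chosen from such a front is a trade-off point rather than a universal optimum; which non-dominated variant is preferable depends on the latency budget of the deployment target.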

5. Conclusions

In this paper, we have investigated the persistent efficiency bottleneck in lightweight CNNs where reduced FLOPs fail to translate into practical speedup due to memory access overhead. To overcome this fundamental limitation, we proposed channel-focused convolution (CFConv), an intelligent operator that leverages Group Normalization scaling factors to dynamically select informative channels, and channel mixed unit (CMU) that enables efficient cross-channel communication through a Split-Transform-and-Mix strategy. Integrating these innovations, we built CFNet—a hardware-friendly architecture that optimally balances computational efficiency and memory access.

While CFNet demonstrates strong performance across various vision tasks, several aspects merit further investigation. The current architecture, though efficient, may require specialized optimization for extremely memory-constrained edge devices where every kilobyte of memory usage matters. Additionally, the scalability of our channel-focused paradigm to hybrid CNN-Transformer architectures presents an intriguing research direction. The principled channel selection mechanism in CFConv could potentially complement attention mechanisms in transformers, offering opportunities to reduce computational overhead while maintaining representational capacity.

Extensive experiments against various baseline methods on image classification, object detection, and instance segmentation indicate that CFNet strikes a better trade-off between performance and inference efficiency. We hope the proposed method provides useful insights for efficient architectural design and inspires future research towards more hardware-aware neural network architectures.

Author Contributions

Conceptualization, X.L., J.L. and H.D.; methodology, X.L. and J.L.; software, X.L. and J.L.; validation, X.L., J.L. and Q.W.; formal analysis, X.L.; investigation, X.L. and J.L.; resources, Q.W. and C.Y.; data curation, X.L. and J.L.; writing—original draft preparation, X.L. and J.L.; writing—review and editing, H.D. and C.Y.; visualization, X.L., J.L. and J.Z.; supervision, H.D.; project administration, H.D.; funding acquisition, H.D. and Q.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by National Science and Technology Major Project No. 2022ZD0117103, NSFC under Grant 62192781, 62137002, 62172326, 62302384, Research Project Funded by the State Key Laboratory of Communication Content Cognition under Grant No. A202403, and the Project of China Knowledge Centre for Engineering Science and Technology.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Acknowledgments

This article is a revised and expanded version of a paper entitled “CFNet: An Efficient Convolutional Neural Network with Channel-Focused Convolution and Cross-Channel Mixing” [], which was presented at the 8th Chinese Conference on Pattern Recognition and Computer Vision (PRCV 2025), Shanghai, China, 15–18 October 2025.

Conflicts of Interest

Author Qi Wang was employed by the company MIGU Video Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Appendix A. Algorithm Summary of CFNet Modules

This appendix provides the algorithmic details for the key components of CFNet to facilitate understanding and reproduction.

Algorithm A1 presents the forward pass of Channel-Focused Convolution (CFConv) during training. The MultiBranchConv incorporates 1 × 1 convolution, 3 × 3 convolution, and identity mapping, which are subsequently merged through element-wise addition. The notation denotes selecting channels of the selected group, while selects channels from the remaining groups.

Algorithm A1. Channel-Focused Convolution (CFConv).
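The channel selection step described for Algorithm A1 can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' listing: the scoring rule (mean absolute GroupNorm scaling factor per group, with only the top group selected), the pass-through of the remaining channels, and the names `select_group`, `cfconv_forward`, and `transform` are all hypothetical. `transform` stands in for the multi-branch convolution, and each channel is reduced to a single value to keep the example short.

```python
def select_group(gamma, num_groups):
    """Pick the channel group with the largest mean |gamma|.

    `gamma` holds one GroupNorm scaling factor per channel. The scoring
    rule (mean absolute value, top-1 group) is an assumption made for
    illustration. Returns (selected_indices, remaining_indices).
    """
    channels = len(gamma)
    if channels % num_groups != 0:
        raise ValueError("channels must be divisible by num_groups")
    size = channels // num_groups
    scores = [
        sum(abs(g) for g in gamma[k * size:(k + 1) * size]) / size
        for k in range(num_groups)
    ]
    best = max(range(num_groups), key=scores.__getitem__)
    selected = list(range(best * size, (best + 1) * size))
    remaining = [c for c in range(channels) if c not in selected]
    return selected, remaining


def cfconv_forward(x, gamma, num_groups, transform):
    """Apply `transform` to the selected channels only.

    `x` is a list with one value per channel; the remaining channels are
    passed through untouched (an assumption of this sketch).
    `transform` is a placeholder for the multi-branch convolution.
    """
    selected, _ = select_group(gamma, num_groups)
    out = list(x)
    new_values = transform([x[c] for c in selected])
    for c, value in zip(selected, new_values):
        out[c] = value
    return out


gamma = [0.1, 0.2, 0.9, 0.8, 0.3, 0.1]  # hypothetical scaling factors
selected, remaining = select_group(gamma, num_groups=3)
y = cfconv_forward([1, 2, 3, 4, 5, 6], gamma, 3, lambda v: [10 * a for a in v])
```

With these placeholder factors the middle group has the largest mean magnitude, so only channels 2 and 3 are transformed while the other four are copied unchanged.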

Algorithm A2 describes the Channel Mixed Unit (CMU) that addresses the information loss from CFConv’s selective processing. The input is split along the channel dimension. undergoes pointwise convolution, while combines pointwise and grouped convolutions. Mixing weights and are generated via global average pooling and softmax for adaptive feature fusion.

Algorithm A2. Channel Mixed Unit (CMU).
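The adaptive fusion step described for Algorithm A2 can be illustrated with a small sketch. It assumes that the two transformed branches have the same shape and that the softmax is taken per channel across the two pooled branch descriptors; both points are assumptions, since the listing itself is not reproduced here. The split and the pointwise and grouped convolutions are omitted, and the function names are hypothetical.

```python
import math


def global_avg_pool(feat):
    """feat: [C][H][W] nested lists -> list of C channel means."""
    return [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feat]


def mixing_weights(y1, y2):
    """Per-channel softmax over the pooled descriptors of the two branches."""
    w1, w2 = [], []
    for s1, s2 in zip(global_avg_pool(y1), global_avg_pool(y2)):
        m = max(s1, s2)  # subtract the max for numerical stability
        e1, e2 = math.exp(s1 - m), math.exp(s2 - m)
        w1.append(e1 / (e1 + e2))
        w2.append(e2 / (e1 + e2))
    return w1, w2


def mix(y1, y2):
    """Fuse two branch outputs of identical shape with the softmax weights."""
    w1, w2 = mixing_weights(y1, y2)
    return [
        [[a * p + b * q for p, q in zip(r1, r2)] for r1, r2 in zip(c1, c2)]
        for a, b, c1, c2 in zip(w1, w2, y1, y2)
    ]


# Two hypothetical branch outputs with 2 channels of size 1 x 2.
y1 = [[[1.0, 3.0]], [[0.0, 0.0]]]
y2 = [[[1.0, 3.0]], [[2.0, 2.0]]]
w1, w2 = mixing_weights(y1, y2)
fused = mix(y1, y2)
```

Because the two weights of each channel sum to one, the fused output is a convex combination of the branches: identical branches are returned unchanged, and a branch with a larger pooled response receives the larger weight.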

Appendix B. Architecture Specification

To facilitate reproduction of our work, we provide detailed architecture specifications for CFNet-A in Table A1. The table lists all operators, feature map dimensions, and computational costs (FLOPs and parameters) for each stage. During training, CFConv employs multi-branch convolutions (1 × 1, 3 × 3, and identity), which are reparameterized into a single 3 × 3 convolution during inference. All other CFNet variants (B/C/D/E) follow the same architectural design with proportionally scaled block repetitions across stages.
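The reparameterization mentioned above relies on the linearity of convolution: a 1 × 1 kernel can be placed at the centre of a zero 3 × 3 kernel, and an identity mapping corresponds to a centre weight of 1 on the matching channel. The sketch below checks this numerically on a tiny example. It is a simplified illustration, assuming stride 1, equal input and output channel counts, and no bias or normalization in the branches; folding normalization statistics into the kernel is omitted, and the function names are hypothetical.

```python
import random


def conv2d(x, w, pad):
    """Stride-1 convolution with zero padding.

    x: [C_in][H][W], w: [C_out][C_in][k][k] (nested lists, no bias).
    """
    h, wd, k = len(x[0]), len(x[0][0]), len(w[0][0])
    out = []
    for kernel in w:
        plane = [[0.0] * wd for _ in range(h)]
        for i in range(h):
            for j in range(wd):
                acc = 0.0
                for c, kc in enumerate(kernel):
                    for di in range(k):
                        for dj in range(k):
                            r, s = i + di - pad, j + dj - pad
                            if 0 <= r < h and 0 <= s < wd:
                                acc += kc[di][dj] * x[c][r][s]
                plane[i][j] = acc
        out.append(plane)
    return out


def merge_branches(w3, w1):
    """Fold a 1x1 branch and an identity branch into the 3x3 kernel."""
    merged = [[[row[:] for row in kc] for kc in kernel] for kernel in w3]
    for o, kernel in enumerate(merged):
        for c, kc in enumerate(kernel):
            kc[1][1] += w1[o][c][0][0]  # 1x1 kernel sits at the 3x3 centre
            if o == c:
                kc[1][1] += 1.0  # identity = centre weight 1 on channel o
    return merged


rng = random.Random(0)
C, H, W = 2, 4, 4
x = [[[rng.uniform(-1, 1) for _ in range(W)] for _ in range(H)] for _ in range(C)]
w3 = [[[[rng.uniform(-1, 1) for _ in range(3)] for _ in range(3)]
       for _ in range(C)] for _ in range(C)]
w1 = [[[[rng.uniform(-1, 1)]] for _ in range(C)] for _ in range(C)]

# Training-time form: three branches summed element-wise.
b3, b1 = conv2d(x, w3, pad=1), conv2d(x, w1, pad=0)
multi = [[[b3[o][i][j] + b1[o][i][j] + x[o][i][j] for j in range(W)]
          for i in range(H)] for o in range(C)]
# Inference-time form: one 3x3 convolution with the merged kernel.
single = conv2d(x, merge_branches(w3, w1), pad=1)
max_diff = max(abs(multi[o][i][j] - single[o][i][j])
               for o in range(C) for i in range(H) for j in range(W))
```

The two forms agree up to floating-point rounding, so the merged network computes the same function while reading the input feature map once instead of three times.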

Table A1.

Architecture Specification of CFNet-A.

| Stage | Operator | Feature Map Size | FLOPs (M) | Params (M) |
|---|---|---|---|---|
| Stem | Conv 3 × 3 | 224 × 224 × 3 | 38.4 | 0.0004 |
| | DWConv 3 × 3 (stride = 2) | 224 × 224 × 16 | 3.2 | 0.0001 |
| | PWConv 1 × 1 (stride = 2) | 112 × 112 × 16 | 6.4 | 0.0005 |
| | | 56 × 56 × 32 | | |
| Stage 1 | CFConv (, ) × 3 | 56 × 56 × 32 | 92.2 | 0.077 |
| | CMU () | 56 × 56 × 32 | 10.5 | 0.008 |
| Subsample | Conv 1 × 1 (stride = 2) | 56 × 56 × 32 | 2.0 | 0.002 |
| | | 28 × 28 × 64 | | |
| Stage 2 | CFConv (, ) × 3 | 28 × 28 × 64 | 76.5 | 0.307 |
| | CMU () | 28 × 28 × 64 | 8.4 | 0.033 |
| Subsample | Conv 1 × 1 (stride = 2) | 28 × 28 × 64 | 1.6 | 0.008 |
| | | 14 × 14 × 128 | | |
| Stage 3 | CFConv (, ) × 9 | 14 × 14 × 128 | 152.9 | 1.106 |
| | CMU () × 3 | 14 × 14 × 128 | 25.2 | 0.394 |
| Subsample | Conv 1 × 1 (stride = 2) | 14 × 14 × 128 | 3.2 | 0.033 |
| | | 7 × 7 × 256 | | |
| Stage 4 | CFConv (, ) × 3 | 7 × 7 × 256 | 57.6 | 1.475 |
| | CMU () | 7 × 7 × 256 | 8.4 | 0.525 |
| Head | Global Avg Pool | 7 × 7 × 256 | 0 | 0 |
| | FC | 1 × 1 × 1000 | 0.3 | 0.256 |
| Total | | | 410 | 3.67 |
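As a quick sanity check on the Params column, the parameter count of a dense convolution without bias is the product of input channels, output channels, and kernel area. The sketch below applies this to the 1 × 1 subsample layers and the FC layer, reading the channel counts from the feature map sizes in the table. The bias-free assumption and the helper name `conv_params` are ours; the CFConv and CMU rows are not checked because their hyperparameters are not legible here.

```python
def conv_params(c_in, c_out, kernel=1):
    """Weight count of a dense convolution without bias."""
    return c_in * c_out * kernel * kernel


# (label, input channels, output channels) read off the table above.
layers = [
    ("Subsample 1", 32, 64),
    ("Subsample 2", 64, 128),
    ("Subsample 3", 128, 256),
    ("FC", 256, 1000),  # a fully connected layer counts like a 1x1 conv
]
params_m = {name: conv_params(c_in, c_out) / 1e6 for name, c_in, c_out in layers}
```

Rounded to three decimals, these come to 0.002, 0.008, 0.033, and 0.256 million parameters, in line with the corresponding table entries.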

References

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2999–3007.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

- Howard, A.; Sandler, M.; Chen, B.; Wang, W.; Chen, L.C.; Tan, M.; Chu, G.; Vasudevan, V.; Zhu, Y.; Pang, R.; et al. Searching for MobileNetV3. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6848–6856.

- Ma, N.; Zhang, X.; Zheng, H.T.; Sun, J. ShuffleNet V2: Practical Guidelines for Efficient CNN Architecture Design. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 122–138.

- Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. GhostNet: More Features From Cheap Operations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1577–1586.

- Chen, J.; Kao, S.h.; He, H.; Zhuo, W.; Wen, S.; Lee, C.H.; Chan, S.H.G. Run, Don’t Walk: Chasing Higher FLOPS for Faster Neural Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 12021–12031.

- Iandola, F.N.; Moskewicz, M.W.; Ashraf, K.; Han, S.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level Accuracy with 50x Fewer Parameters and <1 MB Model Size. In Proceedings of the International Conference on Learning Representations, San Juan, Puerto Rico, 2–4 May 2016; pp. 1–13.

- Tan, M.; Le, Q. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; Volume 97, pp. 6105–6114.

- Tan, M.; Le, Q. EfficientNetV2: Smaller Models and Faster Training. In Proceedings of the 38th International Conference on Machine Learning, Virtual, 18–24 July 2021; Volume 139, pp. 10096–10106.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11531–11539.

- Li, X.; Wang, W.; Hu, X.; Yang, J. Selective Kernel Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 510–519.

- Ding, X.; Zhang, X.; Ma, N.; Han, J.; Ding, G.; Sun, J. RepVGG: Making VGG-style ConvNets Great Again. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13728–13737.

- Ding, X.; Xia, C.; Zhang, X.; Chu, X.; Han, J.; Ding, G. RepMLP: Re-parameterizing Convolutions into Fully-connected Layers for Image Recognition. arXiv 2021, arXiv:2105.01883.

- Ding, X.; Guo, Y.; Ding, G.; Han, J. ACNet: Strengthening the Kernel Skeletons for Powerful CNN via Asymmetric Convolution Blocks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1911–1920.

- Ding, X.; Zhang, X.; Han, J.; Ding, G. Diverse Branch Block: Building a Convolution as an Inception-like Unit. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 10881–10890.

- Vasu, P.K.A.; Gabriel, J.; Zhu, J.; Tuzel, O.; Ranjan, A. MobileOne: An Improved One millisecond Mobile Backbone. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7907–7917.

- Wu, Y.; He, K. Group Normalization. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 3–19.

- Krizhevsky, A. Learning Multiple Layers of Features from Tiny Images; Technical Report; University of Toronto: Toronto, ON, Canada, 2009.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A Large-Scale Hierarchical Image Database. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

- Mehta, S.; Rastegari, M. MobileViT: Light-weight, General-purpose, and Mobile-friendly Vision Transformer. In Proceedings of the International Conference on Learning Representations, Online, 25–29 April 2022.

- Chen, S.; Xie, E.; Ge, C.; Chen, R.; Liang, D.; Luo, P. CycleMLP: A MLP-Like Architecture for Dense Visual Predictions. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 14284–14300.

- Maaz, M.; Shaker, A.; Cholakkal, H.; Khan, S.; Zamir, S.W.; Anwer, R.M.; Shahbaz Khan, F. EdgeNeXt: Efficiently Amalgamated CNN-Transformer Architecture for Mobile Vision Applications. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 3–20.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 9992–10002.

- Lv, X.; Liang, J.; Du, H.; Xin, Y.; Zhang, Y.; Zhang, J. CFNet: An Efficient Convolutional Neural Network with Channel-Focused Convolution and Cross-Channel Mixing. In Proceedings of the 8th Chinese Conference on Pattern Recognition and Computer Vision, Shanghai, China, 15–18 October 2025.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).