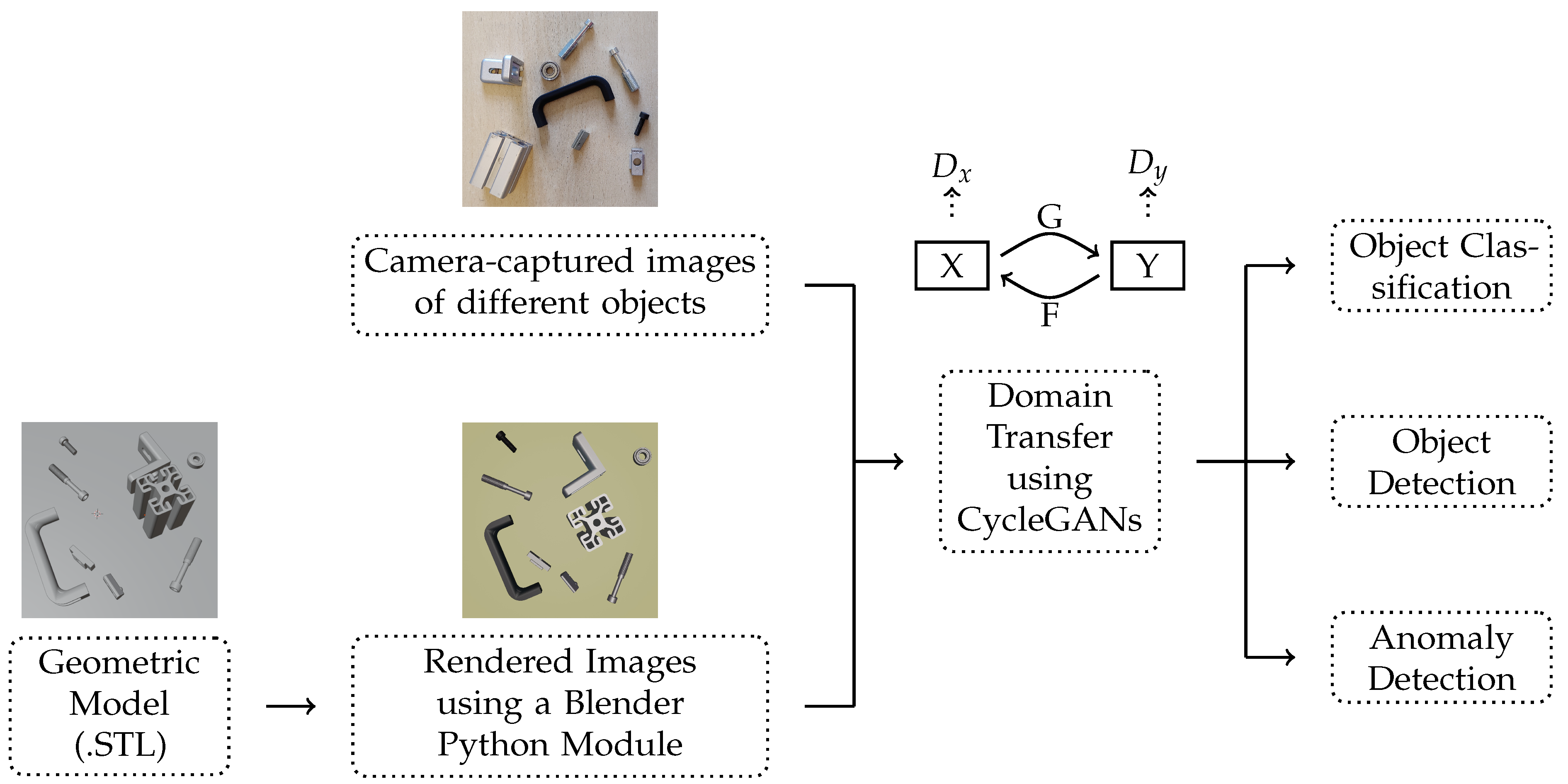

A Synthetic Image Generation Pipeline for Vision-Based AI in Industrial Applications

Abstract

1. Introduction

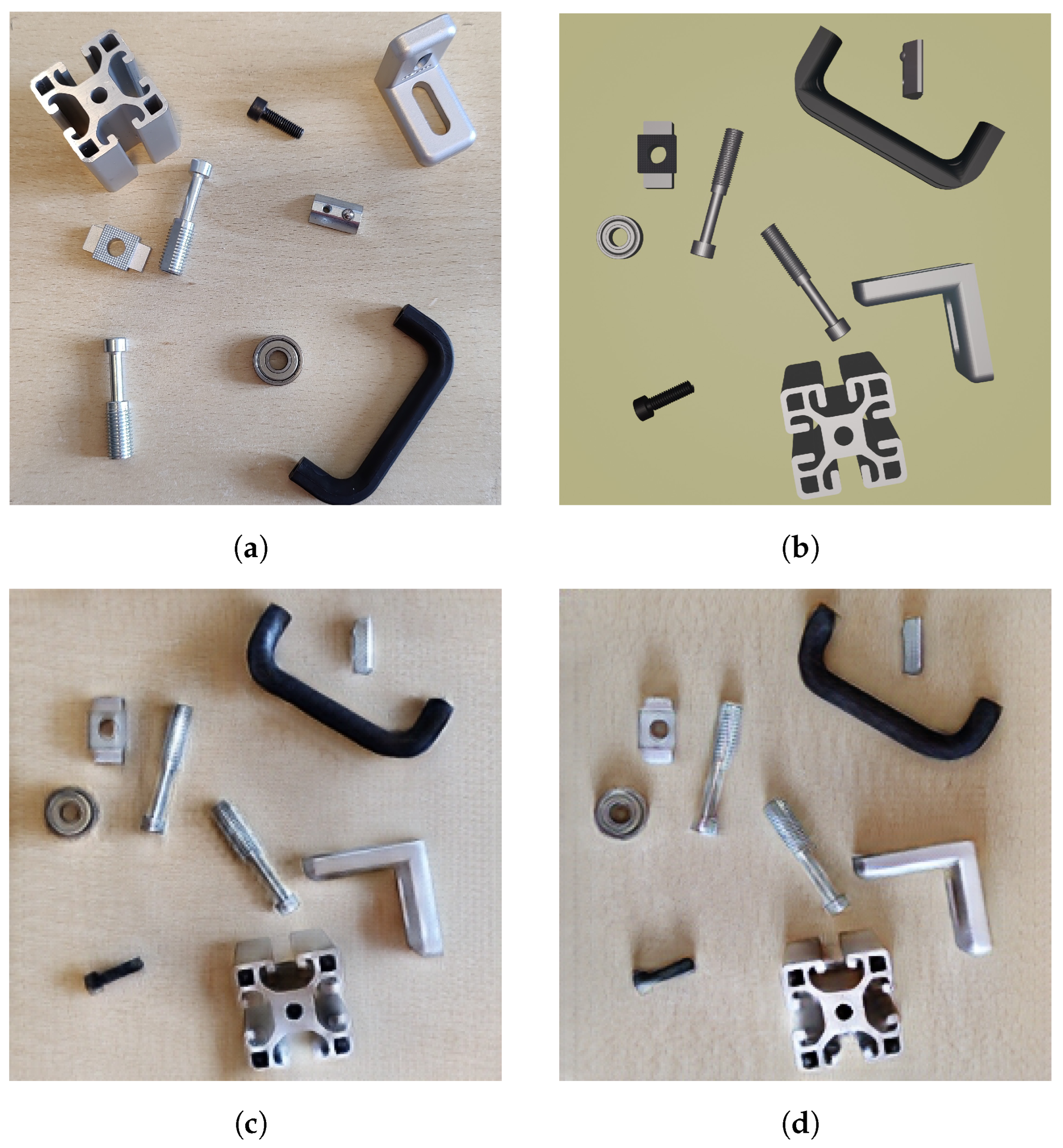

2. Materials and Methods

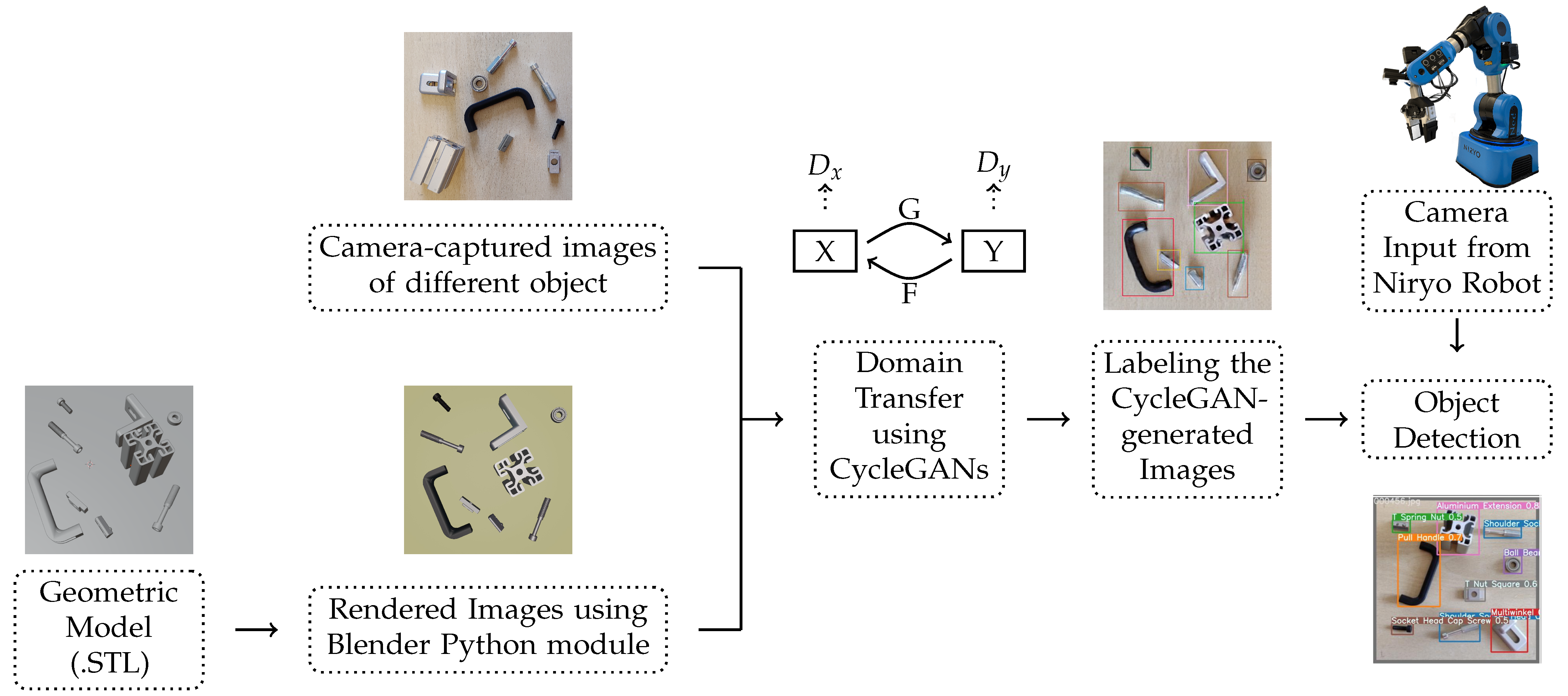

- Domain Transfer with Similar Objects: In this scenario, the images in both domains contain the same objects. The model recognizes the objects in domain A and transfers the domain-specific details of that particular object to domain B, resulting in photorealistic images that still retain the object shapes and positions.

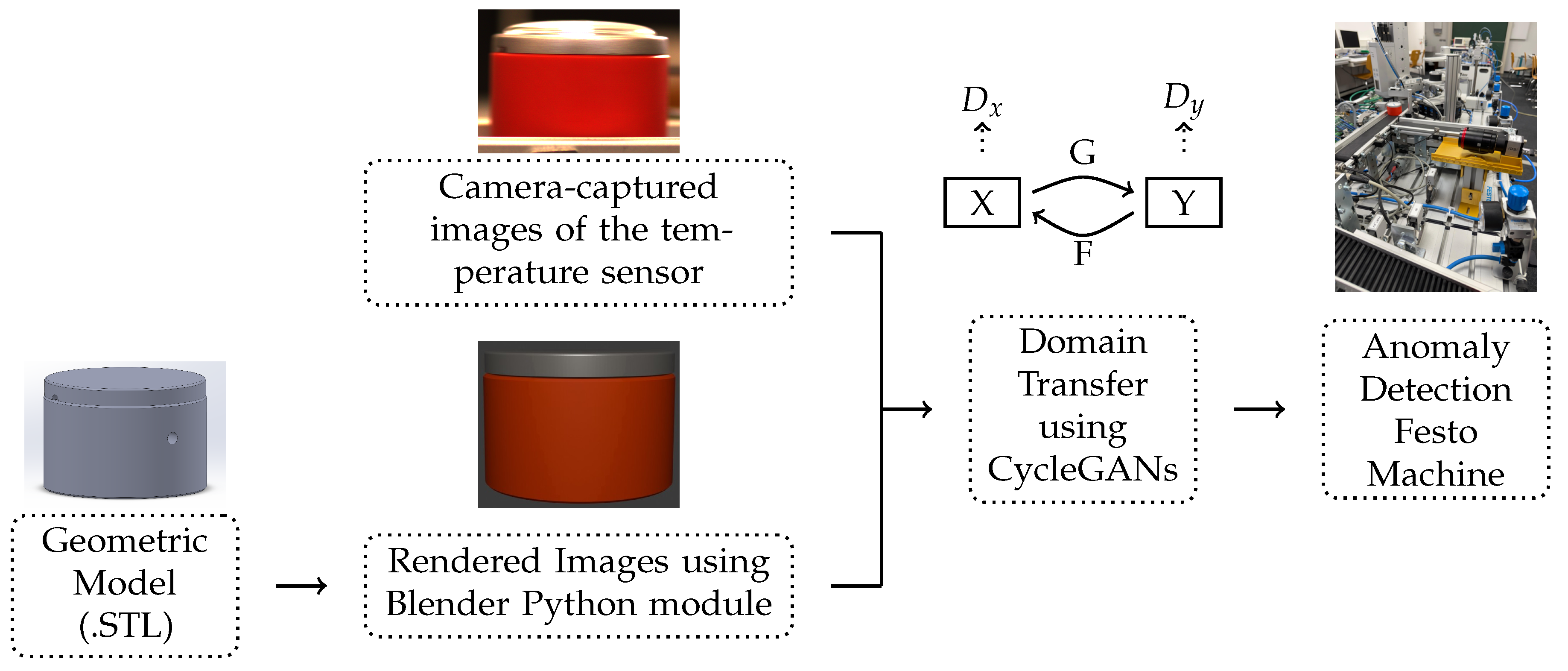

- Domain Transfer with Different Objects: In this case, the objects in the two domains are completely different. The model transfers the domain-specific appearance of one object to the other, producing a photorealistic image based purely on synthetic images. This is particularly useful because realistic images of a product can be generated before it is manufactured, which benefits batch-size-of-one (BSO) and flexible manufacturing system (FMS) production environments.
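The two scenarios above both rely on translating images between a synthetic and a real domain while keeping object shapes and positions intact. This outline does not state which translation model is used; the reference list includes the cycle-consistent unpaired translation work of Zhu et al., so the sketch below assumes a model of that family. It shows only the cycle-consistency term, which penalizes a translation that cannot be mapped back to its input. The names `cycle_consistency_loss`, `brighten`, and `darken` are hypothetical, and the two toy functions stand in for trained generator networks.

```python
from typing import Callable, List

Image = List[float]  # flattened image, pixel values in [0, 1]


def l1_distance(a: Image, b: Image) -> float:
    """Mean absolute difference between two equally sized images."""
    if len(a) != len(b):
        raise ValueError("images must have the same number of pixels")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(
    synthetic: Image,
    real: Image,
    g_syn_to_real: Callable[[Image], Image],
    g_real_to_syn: Callable[[Image], Image],
) -> float:
    """Translate each image to the other domain and back, then compare.

    A low value means the round trip preserves the input, which is the
    property that keeps object shapes and positions stable.
    """
    syn_cycle = g_real_to_syn(g_syn_to_real(synthetic))
    real_cycle = g_syn_to_real(g_real_to_syn(real))
    return l1_distance(synthetic, syn_cycle) + l1_distance(real, real_cycle)


# Toy stand-ins for trained generators (assumption: a plain brightness
# shift with clipping, chosen only so the example runs without a model).
def brighten(img: Image) -> Image:
    return [min(1.0, p + 0.1) for p in img]


def darken(img: Image) -> Image:
    return [max(0.0, p - 0.1) for p in img]


if __name__ == "__main__":
    # Mid-range pixels survive the round trip, so the loss is close to zero.
    print(cycle_consistency_loss([0.2, 0.5, 0.8], [0.3, 0.6, 0.7], brighten, darken))
    # A near-white pixel is clipped on the way out and cannot be recovered.
    print(cycle_consistency_loss([0.95], [0.5], brighten, darken))
```

In a full training setup this term would be combined with adversarial losses for both generators; that part is omitted here because it depends on the network architecture, which the outline does not specify.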

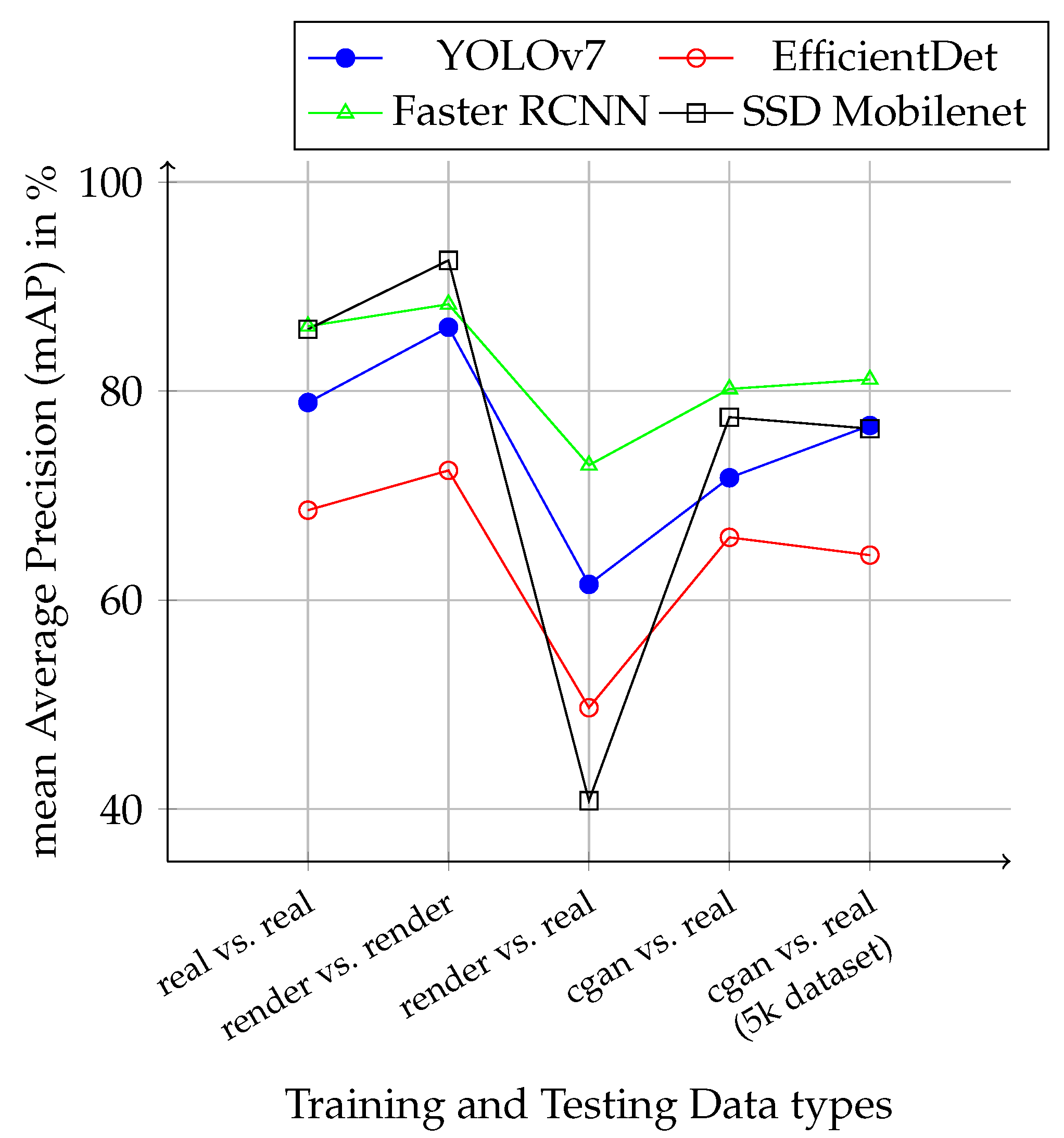

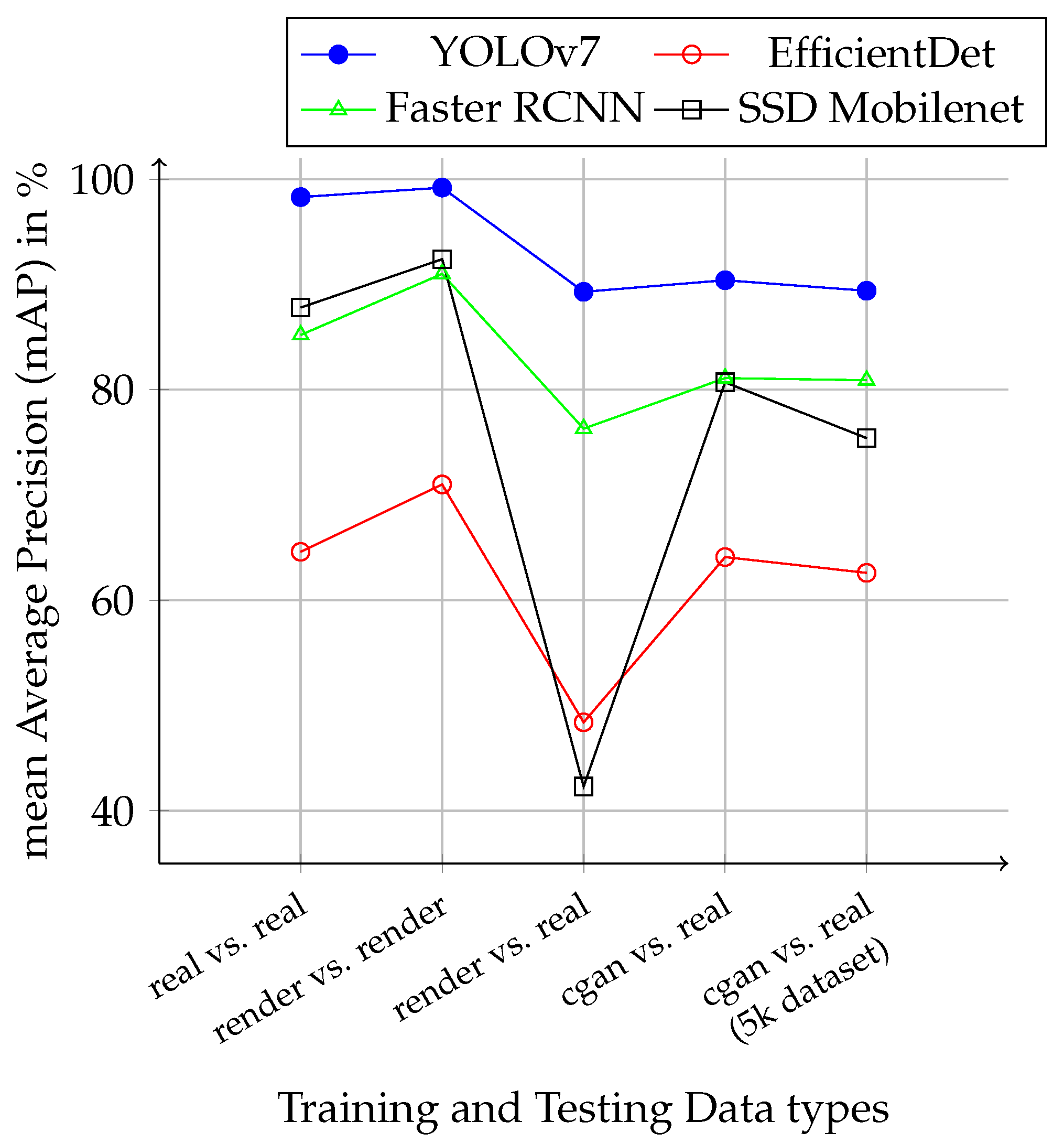

3. Results

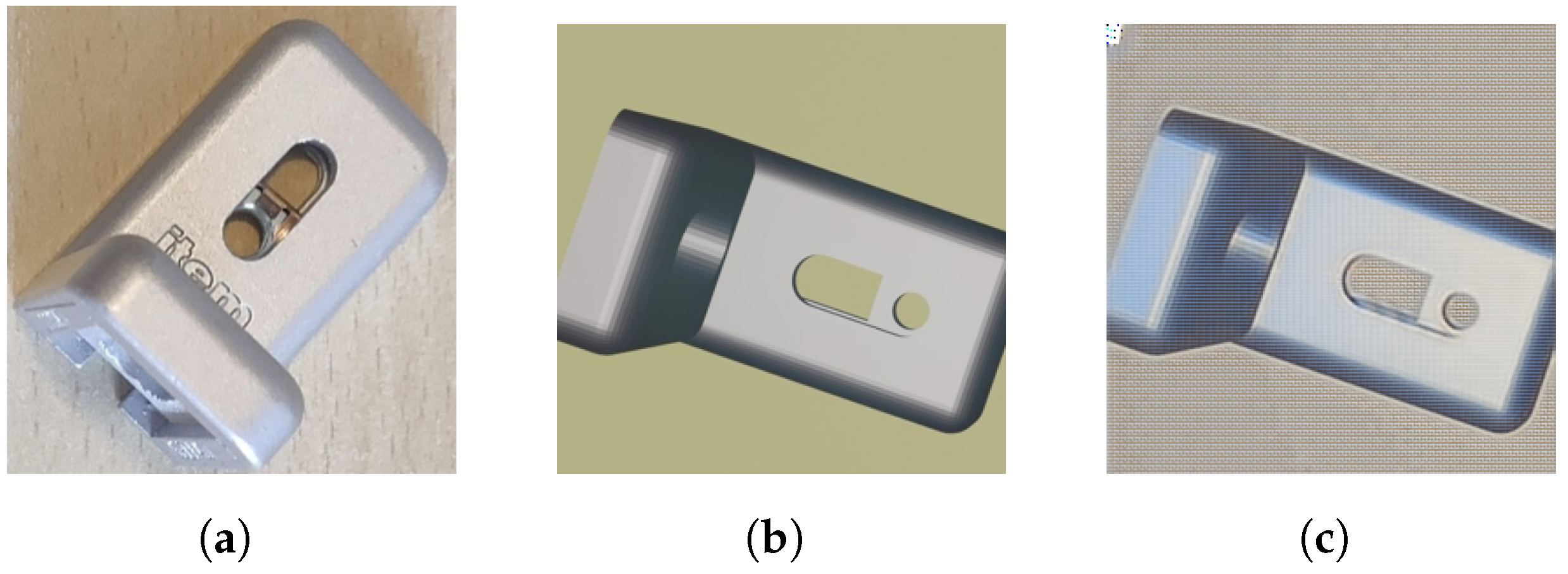

3.1. Domain Transfer with Similar Objects

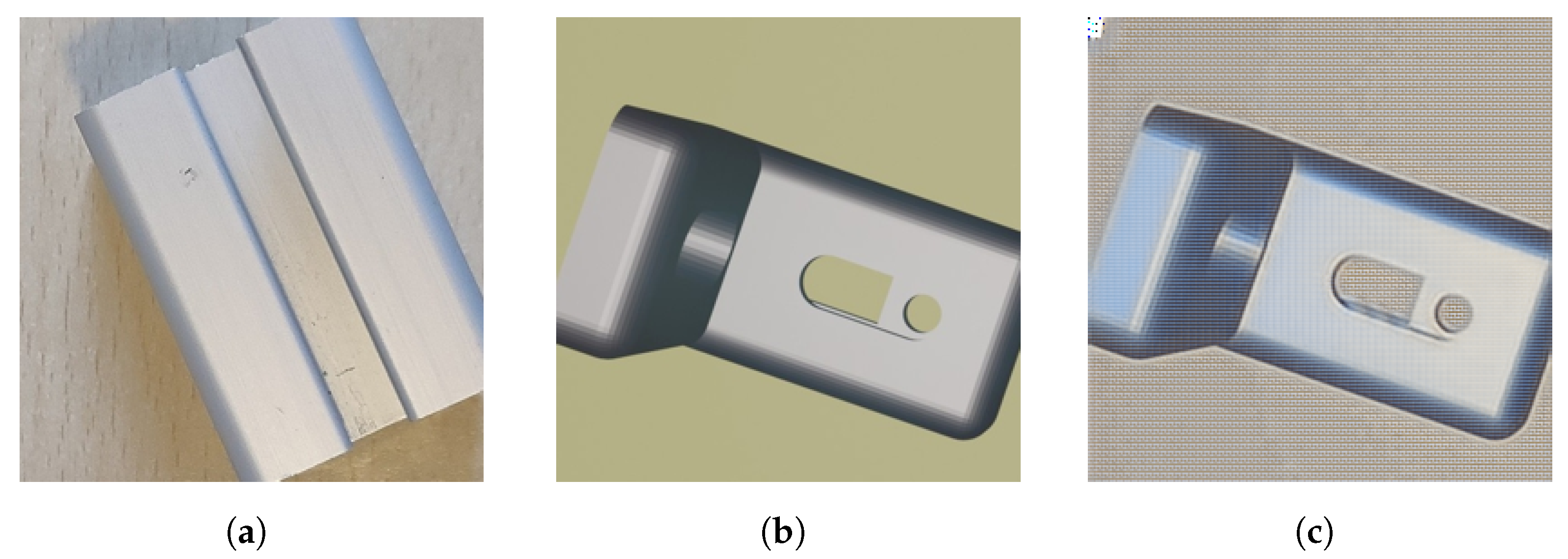

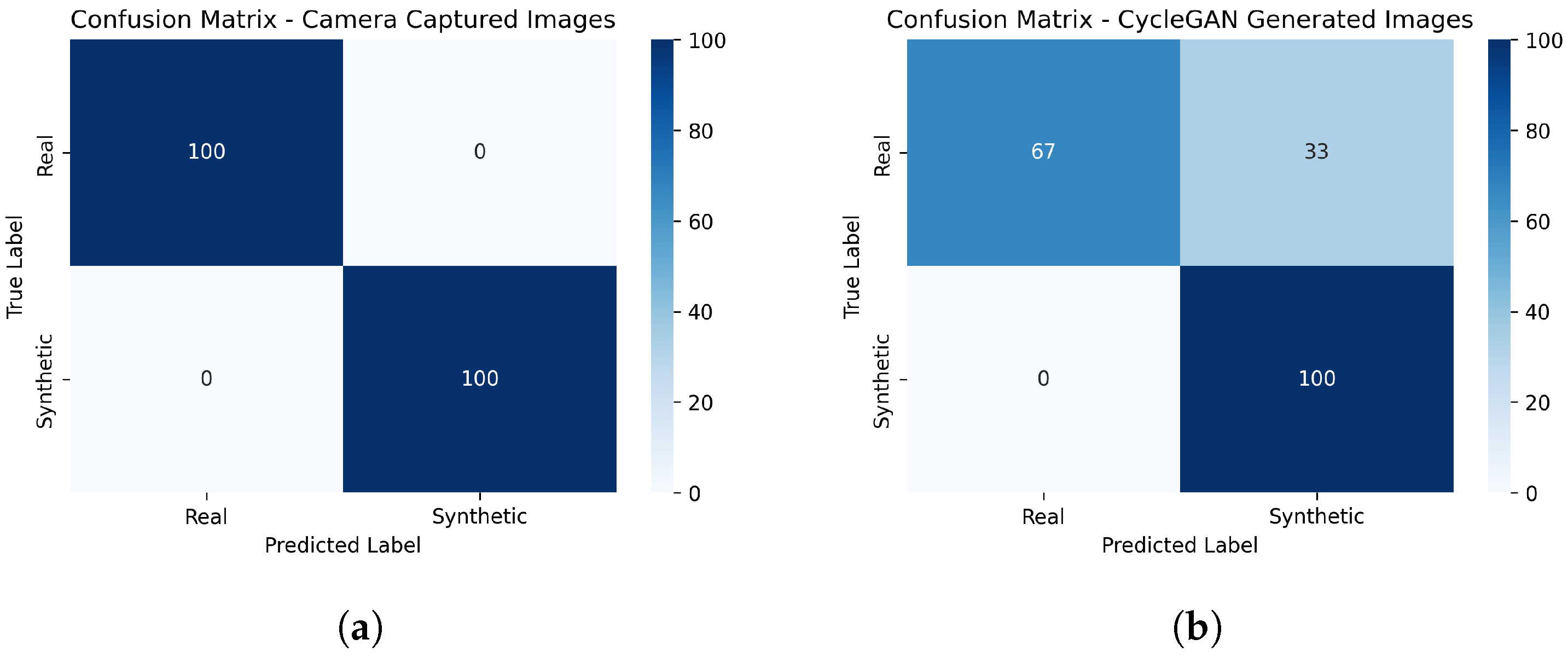

3.2. Domain Transfer with Different Objects

4. Discussion

4.1. Evaluation

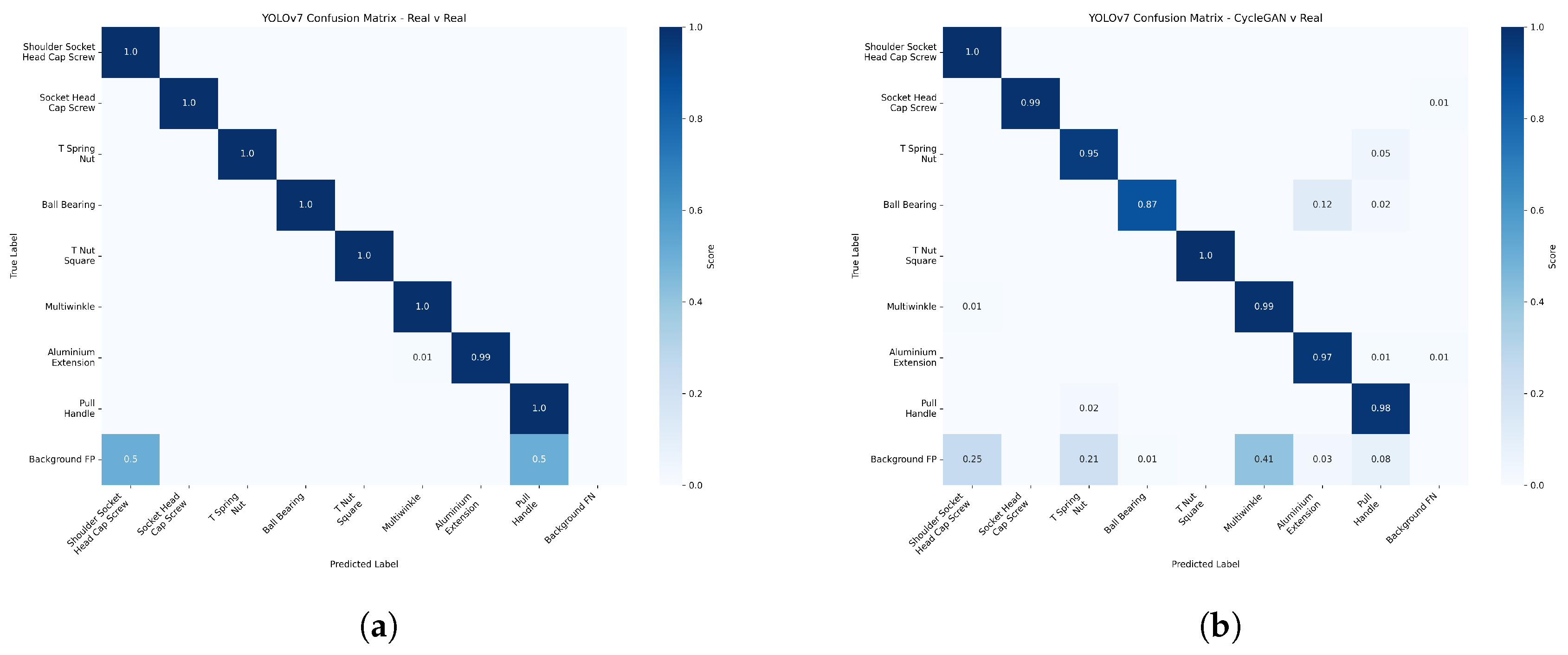

4.1.1. Domain Transfer with Similar Objects

- The architecture of the object detection (OD) models;

- The domain of the training images;

- The number of training images.

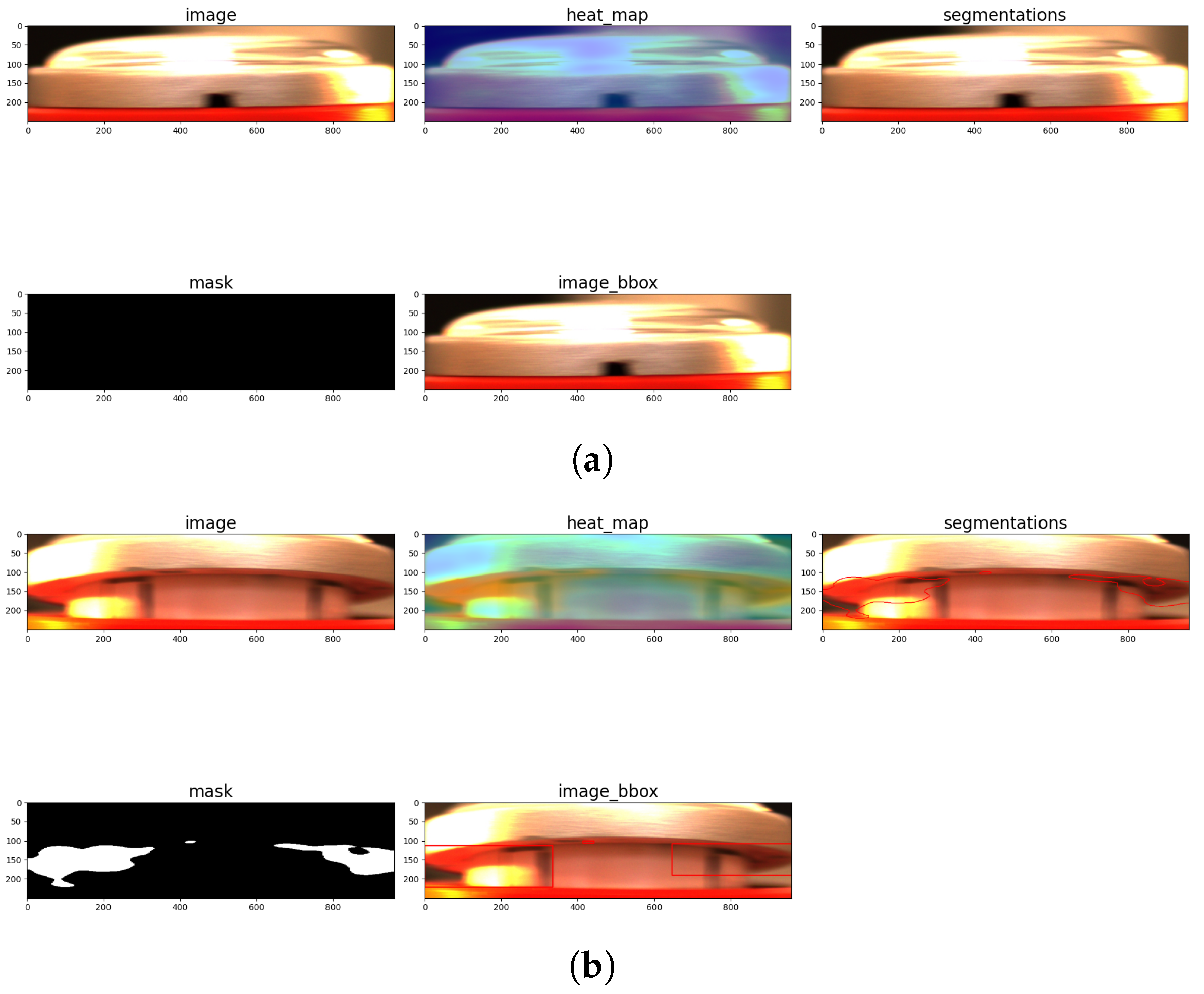

4.1.2. Domain Transfer with Different Objects

4.2. Application of the Synthetic Data Generation Pipeline

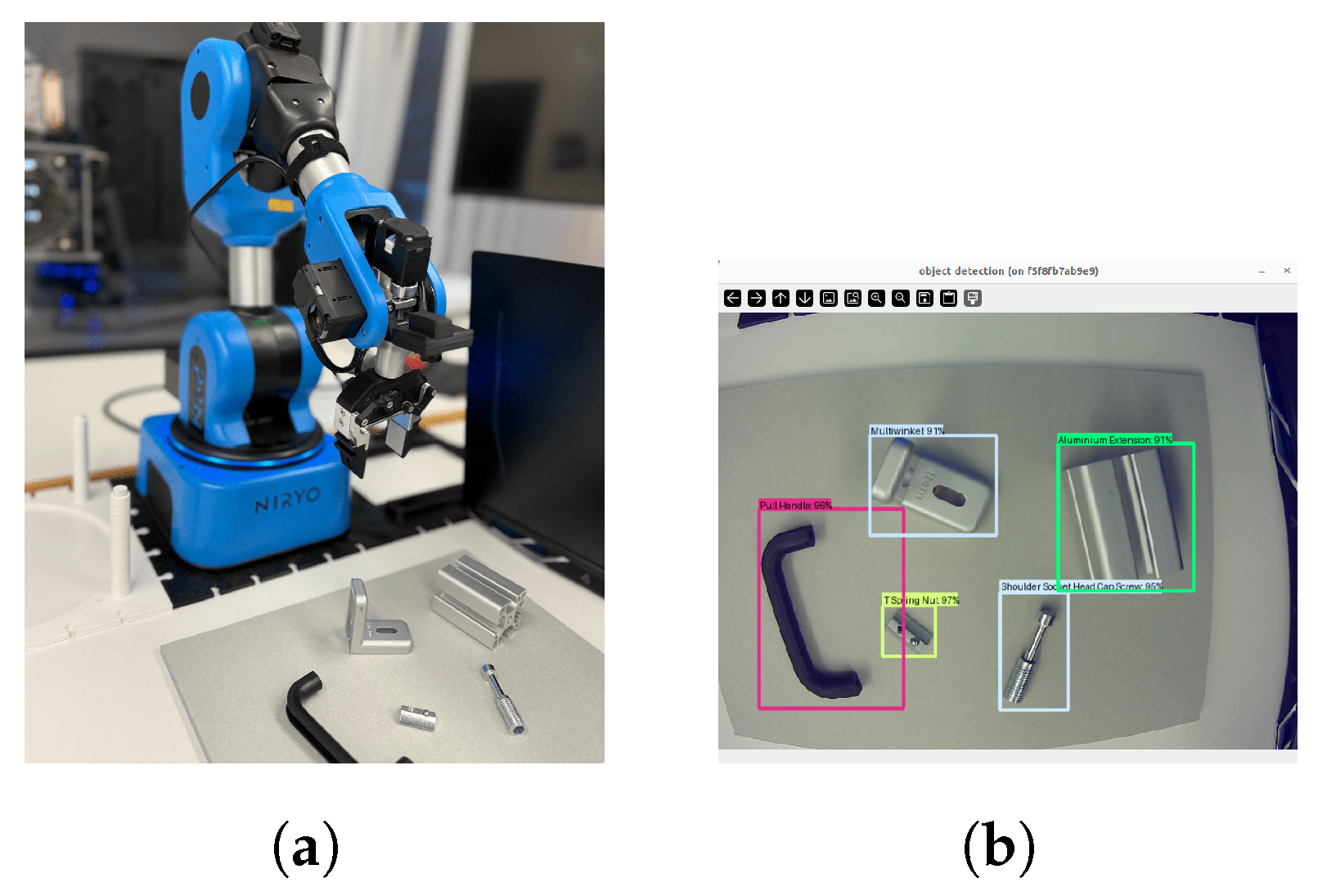

- Real-time object detection using a Niryo robot [27].

- Anomaly detection in temperature sensors assembled by an in-house Festo production system.

4.2.1. Object Detection Using Niryo Robot

4.2.2. Anomaly Detection of Temperature Sensors

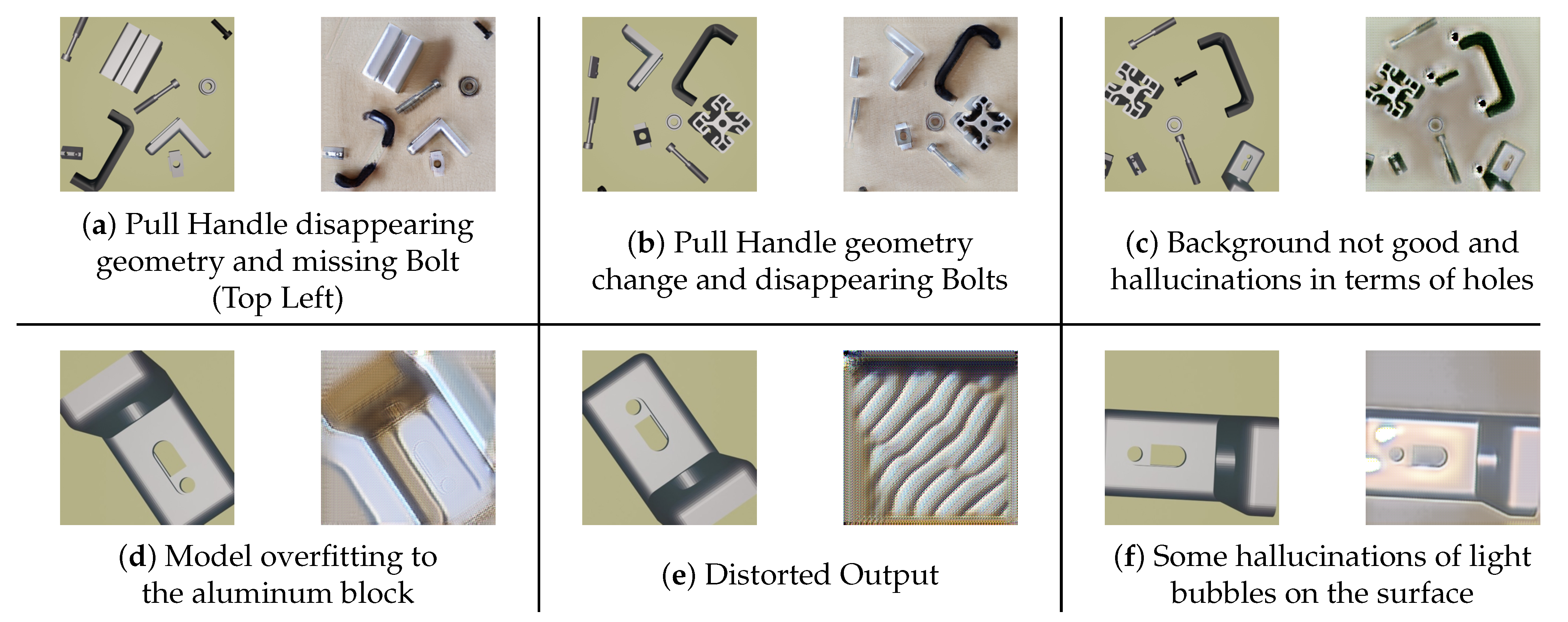

4.3. Limitations of the Pipeline

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Plathottam, S.J.; Rzonca, A.; Lakhnori, R.; Iloeje, C.O. A review of artificial intelligence applications in manufacturing operations. J. Adv. Manuf. Process. 2023, 5, e10159.

- Singh, S.A.; Desai, K.A. Automated surface defect detection framework using machine vision and convolutional neural networks. J. Intell. Manuf. 2023, 34, 1995–2011.

- Sundaram, S.; Zeid, A. Artificial intelligence-based smart quality inspection for manufacturing. Micromachines 2023, 14, 570.

- Maharana, K.; Mondal, S.; Nemade, B. A review: Data pre-processing and data augmentation techniques. Glob. Transit. Proc. 2022, 3, 91–99.

- Zhuang, F.; Qi, Z.; Duan, K.; Xi, D.; Zhu, Y.; Zhu, H.; Xiong, H.; He, Q. A comprehensive survey on transfer learning. Proc. IEEE 2020, 109, 43–76.

- Wang, Y.; Yao, Q.; Kwok, J.T.; Ni, L.M. Generalizing from a few examples: A survey on few-shot learning. ACM Comput. Surv. (CSUR) 2020, 53, 1–34.

- Safonova, A.; Ghazaryan, G.; Stiller, S.; Main-Knorn, M.; Nendel, C.; Ryo, M. Ten deep learning techniques to address small data problems with remote sensing. Int. J. Appl. Earth Obs. Geoinf. 2023, 125, 103569.

- Li, C.; Yan, H.; Qian, X.; Zhu, S.; Zhu, P.; Liao, C.; Tian, H.; Li, X.; Wang, X.; Li, X. A domain adaptation YOLOv5 model for industrial defect inspection. Measurement 2023, 213, 112725.

- Kostal, P.; Velisek, K. Flexible manufacturing system. World Acad. Sci. Eng. Technol. 2011, 77, 825–829.

- Jin, Z.; Marian, R.M.; Chahl, J.S. Achieving batch-size-of-one production model in robot flexible assembly cells. Int. J. Adv. Manuf. Technol. 2023, 126, 2097–2116.

- Boikov, A.; Payor, V.; Savelev, R.; Kolesnikov, A. Synthetic data generation for steel defect detection and classification using deep learning. Symmetry 2021, 13, 1176.

- Nandakumar, N.; Eberhardt, J. Overview of Synthetic Data Generation for Computer Vision in Industry. In Proceedings of the 2023 8th International Conference on Mechanical Engineering and Robotics Research (ICMERR), Krakow, Poland, 8–10 December 2023; pp. 31–35.

- Singh, M.; Srivastava, R.; Fuenmayor, E.; Kuts, V.; Qiao, Y.; Murray, N.; Devine, D. Applications of digital twin across industries: A review. Appl. Sci. 2022, 12, 5727.

- Schmedemann, O.; Baaß, M.; Schoepflin, D.; Schüppstuhl, T. Procedural synthetic training data generation for AI-based defect detection in industrial surface inspection. Procedia CIRP 2022, 107, 1101–1106.

- Singh, K.; Navaratnam, T.; Holmer, J.; Schaub-Meyer, S.; Roth, S. Is synthetic data all we need? benchmarking the robustness of models trained with synthetic images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 2505–2515.

- Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

- Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134.

- Sara, U.; Akter, M.; Uddin, M.S. Image quality assessment through FSIM, SSIM, MSE and PSNR—A comparative study. J. Comput. Commun. 2019, 7, 8–18.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In European Conference on Computer Vision; Springer International Publishing: Cham, Switzerland, 2016; pp. 21–37.

- Nandakumar, N. nishanthnandakumar/Synthetic-Data-Generation-Pipeline. GitHub. Available online: https://github.com/nishanthnandakumar/Synthetic-data-Generation-Pipeline- (accessed on 14 September 2025).

- Li, A.; Hamzah, R.; Rahim, S.K.N.A.; Gao, Y. YOLO algorithm with hybrid attention feature pyramid network for solder joint defect detection. IEEE Trans. Components Packag. Manuf. Technol. 2024, 14, 1493–1500.

- Dehaerne, E.; Dey, B.; Halder, S.; De Gendt, S. Optimizing YOLOv7 for semiconductor defect detection. In Proceedings of the Metrology, Inspection, and Process Control XXXVII, San Jose, CA, USA, 26 February–2 March 2023; Volume 12496, pp. 635–642.

- Turing, A.M. Computing machinery and intelligence. In Parsing the Turing Test: Philosophical and Methodological Issues in the Quest for the Thinking Computer; Springer: Dordrecht, The Netherlands, 2007; pp. 23–65.

- Ned2—Educational Desktop Robotic Arm. Niryo. Available online: https://niryo.com/product/educational-desktop-robotic-arm/ (accessed on 28 November 2024).

- Tzutalin, D. HumanSignal LabelImg. GitHub Repository. 2015. Available online: https://github.com/HumanSignal/labelImg (accessed on 25 November 2022).

- Yu, H.; Chen, C.; Du, X.; Li, Y.; Rashwan, A.; Hou, L.; Jin, P.; Yang, F.; Liu, F.; Kim, J.; et al. TensorFlow Model Garden. GitHub Repository. 2020. Available online: https://github.com/tensorflow/models (accessed on 5 July 2024).

- Deng, H.; Li, X. Anomaly detection via reverse distillation from one-class embedding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 9737–9746.

- Akcay, S.; Ameln, D.; Vaidya, A.; Lakshmanan, B.; Ahuja, N.; Genc, U. Anomalib: A deep learning library for anomaly detection. In Proceedings of the 2022 IEEE International Conference on Image Processing (ICIP), Bordeaux, France, 16–19 October 2022; pp. 1706–1710.

- He, Y.; Chen, L.; Yuan, Y.J.; Chen, S.Y.; Gao, L. Multi-level patch transformer for style transfer with single reference image. In International Conference on Computational Visual Media; Springer Nature: Singapore, 2024; pp. 221–239.

- Nguyen, A.; Yosinski, J.; Clune, J. Understanding neural networks via feature visualization: A survey. In Explainable AI: Interpreting, Explaining and Visualizing Deep Learning; Springer International Publishing: Cham, Switzerland, 2019; pp. 55–76.

- Andres, A.; Martinez-Seras, A.; Laña, I.; Del Ser, J. On the black-box explainability of object detection models for safe and trustworthy industrial applications. Results Eng. 2024, 24, 103498.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Nandakumar, N.; Eberhardt, J. A Synthetic Image Generation Pipeline for Vision-Based AI in Industrial Applications. Appl. Sci. 2025, 15, 12600. https://doi.org/10.3390/app152312600