Abstract

Achieving high accuracy in Chinese event prediction remains challenging due to the prevalence of short-text events that lack sufficient background knowledge and semantic depth. Existing approaches predominantly rely on knowledge augmentation strategies that adopt a retrieval-and-integration paradigm, which often results in suboptimal performance due to the limited scope and timeliness of knowledge graphs (KGs). Large language models (LLMs) can play a crucial role in event knowledge augmentation by serving as dynamic, context-aware knowledge generators that address the limitations of KGs. This paper introduces an enhanced method for Chinese event prediction, named CEP-PKA (Chinese Event Prediction with Prompt-driven Knowledge Augmentation). Specifically, we design a prompt engineering strategy to leverage an LLM to generate fine-grained knowledge for event elements, including triggers and arguments. We then fine-tune a pre-trained language model (PLM) to learn a joint representation of events and their associated knowledge. Experimental results on two public Chinese benchmarks demonstrate that CEP-PKA outperforms baseline models relying solely on LLMs or PLMs, achieving average accuracy improvements of 10.62% and 12.51%, respectively.

1. Introduction

Events serve as fundamental units of human knowledge and are central to human comprehension of the world, making event prediction a critical task for natural language processing (NLP). Current event prediction methods largely depend on structured data, such as event pairs [], event chains [], or event graphs [], all of which require labor-intensive efforts to construct from unstructured text []. Moreover, most human-annotated datasets are limited in scale and consist of short, ambiguous, and sparse texts, posing significant challenges for model training.

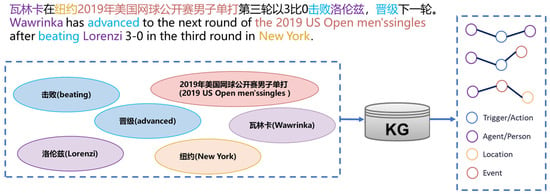

Knowledge augmentation has emerged as a promising approach for event prediction, enhancing both the interpretability of NLP models and the performance of downstream tasks. Existing methods [,] typically adopt a retrieval-and-integration paradigm, relying heavily on structured data, particularly knowledge graphs (KGs). This paradigm involves extracting event elements from text, retrieving relevant triples from KGs, and integrating them into event representations. While effective in structured knowledge scenarios, these approaches are inherently limited by the scale and timeliness of the underlying KG. As depicted in Figure 1, taking the sentence “Wawrinka has advanced to the next round of the 2019 US Open men’s singles after beating Lorenzi 3–0 in the third round in New York.” as an example, event prediction based on KGs faces three limitations.

Figure 1.

Traditional knowledge augmentation method for event prediction. This method generally has three steps: (1) extract event triggers and arguments from text-based events; (2) retrieve them in KGs; and (3) integrate retrieved triples with initial events.

- Structural Rigidity: KGs rely on pre-defined schemas that struggle to accommodate compound event elements. For instance, the phrase “2019 US Open men’s singles” represents a specific, time-bound entity that may not fit into a KG’s fixed entity schemas. Traditional KGs often lack flexible mechanisms to capture such context-specific or emerging entities, leading to an incomplete semantic representation of events.

- Coverage Gaps: Static KGs typically fail to include up-to-date information for the latest events. In this example, if the KG lacks entries for “2019 US Open men’s singles”, it cannot retrieve the relevant background knowledge, resulting in an incomplete knowledge integration. This limitation is further evident in scenarios involving newly emerging events (e.g., “2023 US Open men’s singles”) that have not been added to the KG.

- Ambiguity Challenges: KGs often struggle with entity disambiguation, particularly for ambiguous proper nouns. The name “Lorenzi” in the sentence could refer to multiple individuals, but a traditional KG may lack contextual clues to distinguish which specific “Lorenzi” is mentioned. Without additional disambiguation mechanisms, the KG might incorrectly associate the event with the wrong entity, introducing misleading knowledge into the prediction process and degrading accuracy.

These limitations of KG-based approaches highlight the need for more dynamic knowledge augmentation strategies. Recently, large language models have emerged as a revolutionary solution in knowledge engineering, with models like GPT-4 [] demonstrating exceptional capabilities in natural language generation and context-aware reasoning. Unlike static KGs, LLMs can (1) generate fine-grained knowledge for novel entities (e.g., “2019 US Open men’s singles”) by leveraging their vast pre-training data; (2) disambiguate ambiguous terms (e.g., “Lorenzi”) through contextual understanding, avoiding the pitfalls of KG-based entity confusion; and (3) dynamically adapt to evolving event schemas without relying on pre-defined structures.

However, LLMs are not without their own challenges, such as knowledge cutoffs and the propensity for hallucination, which can introduce factual inaccuracies into the knowledge augmentation process. The potential of large language models as proactive knowledge sources for event prediction has remained underexplored. To address this, we propose CEP-PKA (Chinese Event Prediction with Prompt-driven Knowledge Augmentation), a model that replaces traditional knowledge graphs with a prompt-driven framework for LLMs. CEP-PKA has two components. (1) Prompt Engineering: We design custom templates to guide LLMs in generating context-specific knowledge for event triggers and arguments, which are fundamental elements of event detection, thereby enriching their semantic depth. (2) PLM Fine-Tuning: A pre-trained language model is fine-tuned on LLM-augmented event texts, learning to integrate raw text with generated knowledge for accurate event type classification. Experimental results demonstrate that CEP-PKA significantly outperforms LLM-only and PLM-only baselines, achieving up to 12.51% accuracy improvement on Chinese event benchmarks. By addressing the structural rigidity, coverage gaps, and ambiguity challenges of KGs, CEP-PKA establishes a new paradigm for knowledge-augmented event prediction, where LLMs serve as dynamic, scalable knowledge generators rather than passive retrieval systems.

Our contributions are summarized as follows:

- Event Prediction with Fine-Grained Information: We leverage explicit fine-grained information within event texts to enable accurate event predictions, addressing the sparsity challenge in short-text scenarios.

- Prompt-Driven Augmentation: We propose a prompt-driven framework using LLMs to generate context-specific knowledge for triggers/arguments, enhancing semantic richness and addressing short-text sparsity.

- Systematic PLM Fine-Tuning: We establish a robust training pipeline through rigorous fine-tuning of PLMs on two Chinese event prediction benchmarks.

- Performance Validation: Experiments show our method significantly improves prediction accuracy over standalone LLMs/PLMs, validating the efficacy of integrated knowledge augmentation.

2. Related Works

2.1. Knowledge Augmentation

In the field of event prediction, the limited scale of most datasets and the brevity of texts bring significant challenges. Previous studies [,] have demonstrated that supplementary knowledge can enhance semantic richness, mitigate data sparsity and ambiguity, and clarify contextual meaning, making knowledge augmentation a critical approach. Traditional methods employ retrieval-based strategies to acquire knowledge from knowledge graphs [,]. Although effective in structured scenarios, they are inherently constrained by rigid schemas and incomplete domain coverage.

Recent breakthroughs in large language models offer a paradigm shift for knowledge augmentation. Unlike static KGs, LLMs, which are trained on vast and diverse corpora, possess inherent capabilities for context-aware knowledge generation. While existing studies have focused on using external knowledge to refine prompt design [,], the potential of using prompts to extract knowledge from LLMs remains underexplored. In this work, we leverage LLMs’ pre-trained knowledge and generative capabilities to generate supplementary event information on demand, addressing traditional KGs’ sparsity and ambiguity limitations via adaptive semantic enrichment. By treating LLMs as proactive knowledge sources, we aim to enhance event prediction performance through context-aware knowledge enrichment.

2.2. Event Prediction

Existing event prediction approaches predominantly rely on event graphs to model event arguments and cross-event relationships. Li et al. [] constructed a narrative event evolution graph using scaled graph neural networks (GNNs) for event prediction. Liu et al. [] proposed a directed labeled syntactic information graph to enhance GNN capabilities for event factuality prediction. Yu et al. [] developed an online neural symbolic framework for temporal knowledge graph forecasting. Mathur et al. [] created a weakly labeled event logic graph for document-level script event prediction.

While these graph-based methods have demonstrated effectiveness, their applicability is largely limited to structured event graphs. However, most real-world event data exists in unstructured text, and converting all text-based events into graph structures is both labor-intensive and impractical. To address this gap, our work focuses on direct event type prediction from raw text, eliminating the need for manual graph construction.

3. Problem Definition

Event prediction involves analyzing the information presented in a given text, identifying the underlying event, and determining its type from a predefined set of event types. Given the nature of this task, we model event prediction as a textual event classification problem. Formally, given textual inputs $X = \{x_1, x_2, \dots, x_N\}$, the task aims to assign each input $x_i$ a label from a fixed label set $\mathcal{Y} = \{y_1, y_2, \dots, y_C\}$, which contains $C$ distinct event types. Each label represents a specific type of event, and the goal is to accurately map the input text to the most appropriate event type, thereby predicting the event that the text describes.

4. Methodology

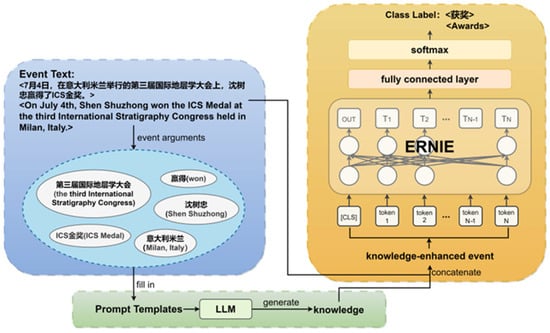

The CEP-PKA framework comprises three core modules: fine-grained event decomposition, prompt construction, and fine-tuning (Figure 2). In the fine-grained event decomposition module, events are decomposed into granular lexical units, which serve as precise targets for knowledge augmentation to tackle semantic sparsity in short-text scenarios. In the prompt construction module, a tailored prompt framework is designed to generate context-aware knowledge for both event triggers and arguments, effectively bridging semantic gaps and enhancing the representation of event components. In the fine-tuning module, a pre-trained language model is rigorously fine-tuned on two Chinese event prediction datasets to optimize model performance for event type classification.

Figure 2.

The architecture of CEP-PKA.

4.1. Fine-Grained Event Decomposition

To enhance the model’s comprehension of the explicit information within event texts, we leverage fine-grained details from the original texts. This strategy is derived from the observation that event-related texts usually contain specific linguistic elements that are crucial for capturing the semantics of the described events. Specifically, the fine-grained event information emphasizes event triggers and event arguments, as these elements represent the most prominent and informative components in the event description.

Event triggers, which mostly appear in the form of verbs or verb phrases, accurately capture the core actions related to the event and determine the basic attributes and core behaviors of the event. For example, in the sentence “On 4 July, Shen Shuzhong won the ICS Medal at the third International Stratigraphy Congress held in Milan, Italy.”, the word “won” is the core trigger word of the event, which clearly expresses the main action of the event.

Event arguments provide rich, detailed information for the event, covering key dimensions such as entities, time, and location in the event. These arguments constitute important background information for understanding the event. Taking the above sentence as an example, “Shen Shuzhong” is the main entity of the event, “ICS Medal” is the award received, “the third International Stratigraphy Congress” is the background venue of the event, and “Milan, Italy” is the geographical location of the event. These arguments are interrelated, providing a multi-dimensional basis for judging the event type and helping the model comprehensively capture the information of the event.

Formally, for each event $e_i$, $E_i$ is the set of its event triggers and event arguments:

$$E_i = \{a_{i1}, a_{i2}, \dots, a_{in}\}$$

where $n$ is the total number of event arguments for event $e_i$, and each $a_{ij}$ (1 ≤ $j$ ≤ $n$) denotes an individual argument (e.g., trigger, agent, time, or location).
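As an illustration of this decomposition, the sketch below stores an event sentence together with its fine-grained elements. The class names `EventElement` and `Event`, the role labels, and the choice to tag the congress as an entity are assumptions made for this example; the paper does not prescribe a data structure.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class EventElement:
    """One fine-grained element of an event (a trigger or an argument)."""
    role: str  # "trigger", "entity", "time", or "location" (assumed role names)
    text: str  # surface form copied from the event sentence


@dataclass
class Event:
    """A text-based event together with its set of elements."""
    text: str
    elements: List[EventElement] = field(default_factory=list)

    def by_role(self, role: str) -> List[str]:
        """Return the surface forms of all elements with the given role."""
        return [e.text for e in self.elements if e.role == role]


# The example sentence used in this section, decomposed by hand.
example = Event(
    text=("On 4 July, Shen Shuzhong won the ICS Medal at the third International "
          "Stratigraphy Congress held in Milan, Italy."),
    elements=[
        EventElement("trigger", "won"),
        EventElement("entity", "Shen Shuzhong"),
        EventElement("entity", "ICS Medal"),
        EventElement("entity", "third International Stratigraphy Congress"),
        EventElement("time", "4 July"),
        EventElement("location", "Milan, Italy"),
    ],
)
```

Keeping the role next to each surface form is what later allows a role-specific prompt to be selected for every element.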

4.2. Prompt Construction

To address the critical need for semantic expansion of original event representations and integration of background knowledge and commonsense information, we have developed a sophisticated set of prompt engineering templates. These templates are strategically designed to direct large language models to generate detailed, contextually relevant knowledge that closely aligns with fine-grained event elements. As a result, they bridge the semantic gaps and enhance the comprehensibility of event semantics.

For the acquisition of external knowledge related to event elements, we employ an open-ended question template. This approach effectively guides LLMs to generate rich, pertinent information for fine-grained event elements. Using GPT-3.5, we obtain a detailed description for each element. The specific templates used in our research are as follows:

- For Event Triggers: Please provide a detailed explanation of the meaning and function of the event trigger [trigger word] in the context.

- For Entity Arguments: Please provide background information about the entity [entity word], including its type, main characteristics, and related events.

- For Time Arguments: Please describe typical events or background related to the time expression [time words].

- For Location Arguments: Please introduce the nature and importance of the location [location words], as well as the types of events that commonly occur there.
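The four templates above can be filled mechanically once each element has a role. The following sketch copies the template wording from the list and substitutes the bracketed placeholder; the dictionary layout, the role keys, and the function name `build_prompt` are assumptions for illustration, as is the decision to insert the word without extra quoting.

```python
# Each entry maps a role to (placeholder, template); wording follows the list above.
TEMPLATES = {
    "trigger": (
        "[trigger word]",
        "Please provide a detailed explanation of the meaning and function of "
        "the event trigger [trigger word] in the context.",
    ),
    "entity": (
        "[entity word]",
        "Please provide background information about the entity [entity word], "
        "including its type, main characteristics, and related events.",
    ),
    "time": (
        "[time words]",
        "Please describe typical events or background related to the time "
        "expression [time words].",
    ),
    "location": (
        "[location words]",
        "Please introduce the nature and importance of the location "
        "[location words], as well as the types of events that commonly occur there.",
    ),
}


def build_prompt(role: str, word: str) -> str:
    """Fill the template for `role` with the surface form `word`."""
    placeholder, template = TEMPLATES[role]
    return template.replace(placeholder, word)


trigger_prompt = build_prompt("trigger", "won")
location_prompt = build_prompt("location", "Milan, Italy")
```

An unknown role raises `KeyError`, which is a deliberate choice here so that elements without a matching template are noticed rather than silently skipped.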

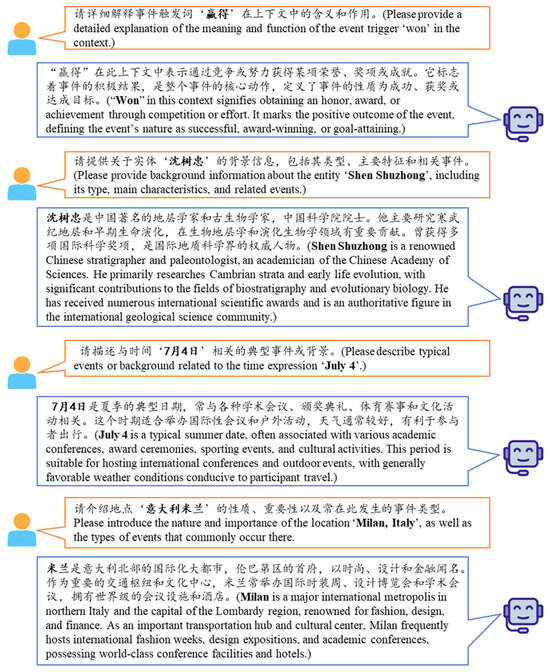

For example, by applying these templates to the sentence above, an LLM can generate rich background knowledge for each event element, as illustrated in Figure 3.

Figure 3.

The examples of prompts and answers for knowledge generation.

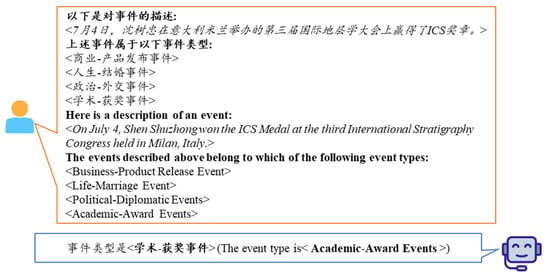

Furthermore, we design a single-choice question-based prompt template for direct event prediction by presenting an LLM with a target event and requiring it to select the most appropriate event type from a predefined label set. Essentially, the predictions made by the LLM follow a zero-shot learning paradigm (e.g., “The event type of [text] is: [Option 1, Option 2, …].”), as presented in Figure 4.

Figure 4.

The examples of prompts and answers for event prediction.
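A minimal sketch of the single-choice prompt and of reading the model's answer back is given below. The prompt string follows the pattern quoted in the text; the answer parser, the function names, and the sample labels are assumptions, since the paper does not describe how free-form LLM answers are mapped to labels.

```python
from typing import List, Optional


def build_choice_prompt(text: str, labels: List[str]) -> str:
    """Build the zero-shot prompt: the event text followed by the candidate types."""
    options = ", ".join(labels)
    return f"The event type of {text} is: [{options}]."


def parse_choice(answer: str, labels: List[str]) -> Optional[str]:
    """Return the label mentioned earliest in `answer`, or None if none appears.

    Ties at the same position are resolved in favour of the longer label, so a
    label that is a prefix of another label does not shadow it.
    """
    hits = [(answer.find(label), label) for label in labels if label in answer]
    if not hits:
        return None
    return min(hits, key=lambda hit: (hit[0], -len(hit[1])))[1]


# Hypothetical label names, used only to exercise the functions.
sample_labels = ["Life-Childbirth", "Life-Visit", "Life-Death"]
sample_prompt = build_choice_prompt("X", ["A", "B"])
```

Returning `None` for an unparseable answer keeps such cases countable as errors instead of being assigned an arbitrary class.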

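Formally, we fill in the prompt template with all the elements in the event element set $E_i = \{a_{i1}, \dots, a_{in}\}$ to generate relevant knowledge through the LLM:

$$k_{ij} = \mathrm{LLM}\big(\mathrm{Prompt}(a_{ij})\big), \qquad K_i = \{k_{i1}, k_{i2}, \dots, k_{in}\}$$

where $k_{ij}$ represents the knowledge associated with $a_{ij}$, expressed as a sentence or paragraph recording specific contextual information, and $K_i$ denotes the aggregate knowledge of $E_i$.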
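The generation step can be sketched as a loop that builds one prompt per element and collects the answers in order. The LLM is passed in as a plain callable so that the sketch stays independent of any particular API; the function name `augment`, the caching of repeated prompts, and the stand-in generator are all assumptions for illustration.

```python
from typing import Callable, Dict, List, Tuple


def augment(
    elements: List[Tuple[str, str]],
    make_prompt: Callable[[str, str], str],
    generate: Callable[[str], str],
) -> List[str]:
    """Return one knowledge string per (role, word) element, preserving order.

    `generate` stands in for a call to an LLM; identical prompts are sent only
    once and the answer is reused.
    """
    cache: Dict[str, str] = {}
    knowledge: List[str] = []
    for role, word in elements:
        prompt = make_prompt(role, word)
        if prompt not in cache:
            cache[prompt] = generate(prompt).strip()
        knowledge.append(cache[prompt])
    return knowledge


# Stand-in generator that records its calls; a real system would query an LLM here.
calls: List[str] = []


def fake_llm(prompt: str) -> str:
    calls.append(prompt)
    return f" knowledge for <{prompt}> "


demo_knowledge = augment(
    [("entity", "A"), ("entity", "A"), ("time", "B")],
    make_prompt=lambda role, word: f"{role}:{word}",
    generate=fake_llm,
)
```

Caching matters in practice because the same entity or location tends to recur across many events in a dataset, and each avoided call saves API cost.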

4.3. Fine-Tuning

We employ ERNIE (Enhanced Representation through Knowledge Integration) [] as our backbone pre-trained language model for the final event classification task.

ERNIE is a series of large-scale pre-trained models developed by Baidu, specifically designed for Chinese natural language processing. It enhances semantic representation by integrating knowledge from various sources during pre-training, making it particularly effective for understanding Chinese text. We choose ERNIE due to its proven superiority in Chinese NLP benchmarks.

We fine-tune ERNIE using the knowledge-augmented event texts generated in the preceding step. Specifically, the input text is formed by concatenating the event text $x_i$ and its corresponding knowledge $K_i$ using special tokens to clearly demarcate different information sources. The final input format is as follows:

$$\texttt{[CLS]}\;\; x_i \;\;\texttt{[SEP]}\;\; K_i \;\;\texttt{[SEP]}$$
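One way to picture this input construction is the sketch below, which assumes the usual BERT-style sentence-pair layout (`[CLS]`, `[SEP]`, `[PAD]`) and approximates tokenization by splitting into characters. Both are assumptions: the actual system would use ERNIE's own tokenizer, and the rule of truncating the knowledge before the event text is a design choice made here, not something stated in the paper.

```python
from typing import List


def build_input(event_text: str, knowledge: List[str], pad_size: int = 512) -> List[str]:
    """Build a fixed-length token sequence: [CLS] text [SEP] knowledge [SEP] [PAD]...

    Characters stand in for tokens. The event text is kept first; the knowledge
    is cut to whatever budget remains.
    """
    budget = pad_size - 3  # room left after [CLS] and the two [SEP] tokens
    text_tokens = list(event_text)[:budget]
    know_tokens = list("".join(knowledge))[: budget - len(text_tokens)]
    tokens = ["[CLS]"] + text_tokens + ["[SEP]"] + know_tokens + ["[SEP]"]
    return tokens + ["[PAD]"] * (pad_size - len(tokens))


full = build_input("ab", ["cde", "f"], pad_size=8)   # knowledge is truncated
padded = build_input("ab", [], pad_size=8)           # no knowledge, so padding is added
```

Under this truncation rule, shrinking `pad_size` removes knowledge first, so the input gradually degenerates to the original event text.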

This structured format helps the ERNIE model distinguish the original event text from the LLM-generated knowledge, learning to integrate them effectively. Subsequently, the input is tokenized and fed into the ERNIE model to generate contextualized embeddings, which are then processed by a pooling layer. The output of the pooling layer is transmitted through a fully connected layer, which maps the high-dimensional embedding to the specified number of output classes:

$$z = W h + b$$

where $h$ is the output of the pooling layer, $W$ denotes a $C \times H$ matrix, $C$ represents the number of output classes, and $H$ denotes the hidden layer size. Additionally, $b$ is a bias vector of $C$-dimension, and $z$ is the output vector, also of $C$-dimension.

To predict the final event type, the logits are passed through a softmax function as the activation function to obtain the probabilities of each class:

$$p_i = \frac{e^{z_i}}{\sum_{j=1}^{C} e^{z_j}}$$

where $p_i$ is the $i$-th (1 ≤ $i$ ≤ $C$) component of the output vector after applying the softmax function, $z_i$ is the $i$-th component of the input vector $z$, and $C$ is the number of classes.

Given that our task is essentially a multi-class classification problem, we employ cross-entropy loss, a loss function well-adapted for scenarios where each instance belongs to exactly one of the multiple classes:

$$\mathcal{L} = -\sum_{i=1}^{C} y_i \log p_i$$

where $y_i$ is the true label for class $i$ and $p_i$ is the predicted probability of class $i$.
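The classification head described above (fully connected layer, softmax, cross-entropy) can be traced on a toy example. This is a plain-Python sketch with hand-picked numbers; the function names and the tiny dimensions are assumptions for illustration and stand in for the tensor operations of an actual training framework.

```python
import math
from typing import List


def linear(W: List[List[float]], h: List[float], b: List[float]) -> List[float]:
    """Fully connected layer: one logit per class from the pooled embedding h."""
    return [sum(w * x for w, x in zip(row, h)) + bias for row, bias in zip(W, b)]


def softmax(z: List[float]) -> List[float]:
    """Turn logits into probabilities; subtracting the max avoids overflow."""
    shift = max(z)
    exps = [math.exp(v - shift) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(p: List[float], target: int) -> float:
    """Cross-entropy for a one-hot label: minus the log-probability of the true class."""
    return -math.log(p[target])


# Toy setting: hidden size 2, three classes.
W = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
b = [0.0, 0.0, -3.0]
h = [2.0, 1.0]

logits = linear(W, h, b)
probs = softmax(logits)
loss = cross_entropy(probs, target=0)
```

With a one-hot label the sum in the loss collapses to a single term, which is why `cross_entropy` only needs the index of the true class.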

Following BERT, we refrain from optimizing bias and parameters and apply the BERTAdam optimizer to the remaining parameters. A warm-up schedule is adopted, with the learning rate defined as follows:

where is the learning rate at time and is the total number of warm-up steps.

The parameter update with weight decay is defined as:

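$$\theta_t = \theta_{t-1} - \eta_t \left( \frac{\hat{m}_t}{\sqrt{\hat{v}_t} + \epsilon} + \lambda\, \theta_{t-1} \right)$$

where $\theta_t$ denotes the parameters at time step $t$, $\eta_t$ is the learning rate at time step $t$, $\hat{m}_t$ represents the mean of gradients after bias correction, $\hat{v}_t$ represents the variance of gradients after bias correction, $\epsilon$ is a small constant for numerical stability, and $\lambda$ is a fixed weight decay coefficient.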
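To make the optimization step concrete, the sketch below pairs a linear warm-up with an Adam-style update that uses bias-corrected moments and a decoupled weight decay term, matching the quantities named in the text. The exact schedule used by the authors is not reproduced here: the linear ramp, the constant rate after warm-up, the default `beta1`, `beta2`, `eps`, and `weight_decay` values, and the scalar-parameter simplification are all assumptions.

```python
import math
from typing import Tuple


def warmup_lr(step: int, base_lr: float, warmup_steps: int) -> float:
    """Linear warm-up to base_lr, then constant (post-warm-up behaviour is assumed)."""
    return base_lr * min(1.0, step / warmup_steps)


def adamw_step(
    theta: float, grad: float, m: float, v: float, t: int, lr: float,
    beta1: float = 0.9, beta2: float = 0.999, eps: float = 1e-8,
    weight_decay: float = 0.01,
) -> Tuple[float, float, float]:
    """One update of a single scalar parameter; t counts steps starting at 1."""
    m = beta1 * m + (1.0 - beta1) * grad          # running mean of gradients
    v = beta2 * v + (1.0 - beta2) * grad * grad   # running mean of squared gradients
    m_hat = m / (1.0 - beta1 ** t)                # bias-corrected mean
    v_hat = v / (1.0 - beta2 ** t)                # bias-corrected variance
    theta = theta - lr * (m_hat / (math.sqrt(v_hat) + eps) + weight_decay * theta)
    return theta, m, v


# First step with no weight decay: the normalized gradient has magnitude ~1.
theta_a, _, _ = adamw_step(1.0, 0.5, 0.0, 0.0, t=1, lr=0.1, weight_decay=0.0)
# Zero gradient: only the weight decay term moves the parameter.
theta_b, _, _ = adamw_step(1.0, 0.0, 0.0, 0.0, t=1, lr=0.1, weight_decay=0.01)
```

The second call isolates the decay term: with a zero gradient the parameter shrinks by `lr * weight_decay * theta` and nothing else.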

5. Experiment

5.1. Implementation Details

Datasets: We primarily evaluate our framework on two established Chinese event prediction benchmarks: DuEE [] and Title2Event [], and further conduct pilot experiments on the more recent LEVEN dataset [] to assess generalizability in specialized domains.

DuEE, released by Baidu, is a large-scale event extraction corpus containing 19,640 events with 65 types across domains such as finance, sports, and public safety. Each instance includes a sentence with annotated trigger and argument spans, making it suitable for evaluating both detection and classification. The dataset was constructed from news articles published before 2021.

Title2Event focuses exclusively on short-text scenarios using news headlines (average length: 23 characters) as input. Following Deng’s approach [], we group the 10 most frequent classes in Title2Event and label the rest as “Other Topics”. Notably, since the events in the datasets occurred before 2022 and GPT-3.5 (trained on data up to January 2022) is used to generate temporal knowledge, we posit that it can effectively provide accurate and context-relevant information.

To assess generalizability to specialized domains and more recent data, we also conduct pilot experiments on LEVEN [], a large-scale Chinese legal event detection dataset released in 2022. LEVEN comprises over 100,000 legal documents with 108 fine-grained event types (e.g., “Contract Dispute”, “Administrative Penalty”) and rich argument structures. Notably, all datasets used in our study, including LEVEN, predate GPT-3.5’s knowledge cutoff (early 2022), ensuring no temporal data leakage during knowledge generation. Although its annotation schema differs slightly from DuEE, we adapt our framework by mapping triggers to LEVEN’s event definitions. On a randomly sampled test subset (n = 1000), CEP-PKA improves F1 by 2.3% over vanilla ERNIE, suggesting robustness in domain-specific settings. Full integration is reserved for future work.

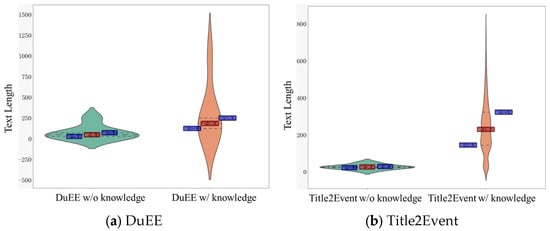

As shown in Table 1, the length distribution of event texts changes significantly after knowledge enhancement. This indicates that knowledge integration effectively enriches event content while mitigating the occurrence of excessively long texts.

Table 1.

The text length distribution of the initial events and the augmented events with knowledge in the DuEE and Title2Event.

Baselines: We compare CEP-PKA against widely used text classification models, including (1) BERT-family models: BERT, BERT + CNN/RNN/RCNN/DPCNN, RoBERTa [] and MacBERT []; (2) enhanced models: Label Confusion Model (LCM) [], Distribution Calibration (DC) [], SAtt-LSTM [], CED-EOSN [], EDLA [], miniRBT [], PMTNet []; (3) knowledge graph (KG)-enhanced models: KG-BERT + ERNIE [] and KEPLER-Chinese [].

Configuration: The padding size is set to 256 for original text-based events and adjusted to 512 for knowledge-augmented events. All experiments employ a learning rate of 0.00005 and a hidden layer size of 768, with each experiment run 10 times and the average taken as the final result; the LLM version used is gpt-3.5-turbo.
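The settings in this paragraph can be collected in a small configuration object, together with a helper that averages a metric over repeated runs. The field names and the `summarize_runs` helper are assumptions for illustration; the paper reports only the average, and the standard deviation is added here as an optional extra.

```python
from dataclasses import dataclass
from statistics import mean, stdev
from typing import Dict, List


@dataclass(frozen=True)
class ExperimentConfig:
    """Values taken from the configuration paragraph; field names are assumed."""
    pad_size_original: int = 256    # original text-based events
    pad_size_augmented: int = 512   # knowledge-augmented events
    learning_rate: float = 5e-5
    hidden_size: int = 768
    num_runs: int = 10
    llm_version: str = "gpt-3.5-turbo"


def summarize_runs(scores: List[float]) -> Dict[str, float]:
    """Average a metric over repeated runs; also report the sample standard deviation."""
    spread = stdev(scores) if len(scores) > 1 else 0.0
    return {"mean": mean(scores), "std": spread}


config = ExperimentConfig()
# Hypothetical accuracies from three runs, used only to exercise the helper.
summary = summarize_runs([0.90, 0.92, 0.94])
```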

5.2. Main Results

Comparative results (Table 2) demonstrate that CEP-PKA outperforms all baseline models across all evaluation metrics on both DuEE and Title2Event datasets, validating its effectiveness. Specifically, CEP-PKA achieves an event prediction accuracy of 0.9463 on DuEE, surpassing the best baseline by 6.76%, and 0.8820 on Title2Event, outperforming baselines with an accuracy range from 0.72 to 0.79. This superiority stems from two key factors: (1) integration of ERNIE, a PLM optimized for the Chinese corpus, which enhances language understanding; and (2) incorporation of LLM-generated knowledge, which supplements contextual information and enriches semantic representations.

CEP-PKA also maintains distinct advantages over the knowledge-enhanced baselines (K-BERT, KEPLER) in event prediction. On DuEE, it outperforms K-BERT (Macro-F1 0.8420, accuracy 0.8651) and KEPLER (Macro-F1 0.9302, accuracy 0.9407) with its Macro-F1 of 0.9355 and accuracy of 0.9463. On Title2Event (short, context-sparse texts), the gap widens: CEP-PKA’s 0.8820 accuracy exceeds K-BERT’s 0.7809 and KEPLER’s 0.8204. The key reason is that K-BERT and KEPLER use fixed, pre-built knowledge graphs, whereas CEP-PKA leverages dynamic knowledge generated by large language models. This dynamic knowledge better captures the context-specific semantics required for event prediction across diverse datasets.

Table 2.

Comparison of CEP-PKA with baseline models. † signifies the results are taken from their original papers and * means that the results are achieved with their released code under default settings.
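For readers who want to recompute the two headline metrics, the sketch below implements accuracy and Macro-F1 from lists of gold and predicted labels. It assumes the common definition of Macro-F1 as the unweighted mean of per-class F1 over every class that appears in either list; the paper does not spell out its exact averaging convention, and the toy labels are invented.

```python
from typing import List, Sequence


def accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Fraction of instances whose predicted label equals the gold label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Unweighted mean of per-class F1 over all classes seen in gold or predictions."""
    labels = sorted(set(y_true) | set(y_pred))
    scores: List[float] = []
    for c in labels:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Toy labels, not taken from either dataset.
gold = ["a", "a", "b", "c"]
pred = ["a", "b", "b", "c"]
acc = accuracy(gold, pred)
f1 = macro_f1(gold, pred)
```

Because every class counts equally in Macro-F1, rare event types influence it far more than they influence accuracy, which is why the two numbers can diverge on skewed label distributions.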

5.3. Ablation Study and Further Analysis

The impact of LLM-generated knowledge: As shown in Table 3, we evaluate the contribution of introduced knowledge to CEP-PKA on DuEE and Title2Event. Results indicate that most evaluation metrics across both datasets show significant improvements with knowledge integration, underscoring the positive impact of LLM-generated knowledge on CEP-PKA’s performance. Despite potential LLM hallucinations, findings confirm that structured knowledge from LLMs meaningfully enhances downstream task accuracy. We hypothesize that this is because the fine-tuned ERNIE model learns robust semantic representations that can align with and filter out highly relevant knowledge, making it resilient to occasional noise or inconsistencies from LLM outputs.

Table 3.

The influence of knowledge augmentation on CEP-PKA. ✓ denotes the presence of a specific component and × denotes the absence.

Comparison of Different LLMs for Knowledge Generation: To assess the generalization ability of CEP-PKA across different language models, we replaced the GPT-3.5-Turbo used in knowledge generation with two representative Chinese LLMs, Qwen-Turbo and DeepSeek-V3.2-Exp, while keeping all other experimental settings identical. As summarized in Table 4, CEP-PKA achieves consistently strong performance on both the DuEE and Title2Event (T2E) datasets under all LLMs. On DuEE, GPT-3.5-Turbo yields the highest overall accuracy (0.9463), whereas Qwen and DeepSeek remain highly competitive, with F1 scores within 1% of the baseline. On T2E, DeepSeek-V3.2-Exp slightly outperforms GPT-3.5. These findings suggest that CEP-PKA’s improvement primarily stems from its prompt-driven knowledge augmentation mechanism rather than dependence on a specific LLM, supporting the framework’s model-agnostic adaptability. Moreover, Qwen and DeepSeek achieve comparable accuracy with substantially lower inference cost, indicating their potential as cost-effective alternatives to commercial APIs. This observation also motivates further exploration of lightweight and distilled models, as discussed in Section 5.4, to enhance the scalability and practicality of LLM-augmented event prediction.

Table 4.

Performance comparison of CEP-PKA using different large language models (LLMs).

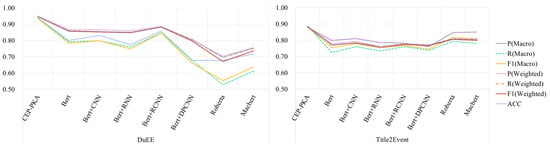

The impact of different PLMs: Figure 5 compares various PLMs using CEP-PKA’s fine-tuning pipeline. The ERNIE-based CEP-PKA consistently outperforms the variants built on other PLMs across all metrics on both datasets. This advantage is attributed to ERNIE’s training on a Chinese corpus, which enables stronger semantic representation and event prediction capabilities after fine-tuning, highlighting the critical role of language-specific pre-training in Chinese NLP tasks.

Figure 5.

The influence of different PLMs on CEP-PKA.

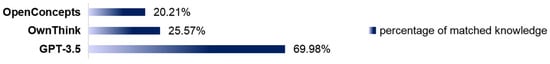

Comparison of knowledge retrieval capabilities between LLM and KGs: We assess the knowledge retrieval performance of two large-scale Chinese KGs by calculating the success rate of retrieving event argument knowledge, compared with knowledge generated by GPT-3.5, for all events in DuEE. As illustrated in Figure 6, GPT-3.5 demonstrates significantly stronger capabilities in generating knowledge for event arguments: it outperforms KGs in coverage, as the latter can only retrieve knowledge for a limited subset of arguments, primarily constrained by their dataset scale. Moreover, GPT-3.5 demonstrates superior ability to interpret compound nouns within event arguments and generate relevant knowledge, whereas KG-based methods often face challenges with such complex linguistic structures.

Figure 6.

Percentage of matched knowledge in different knowledge sources.
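The success rate plotted in Figure 6 can be thought of as the share of event arguments for which a knowledge source returns an entry. The sketch below computes that share for a KG represented as a set of entity names; exact string matching, the function name, and the toy data are assumptions, since the paper does not specify the matching rule used against the two Chinese KGs.

```python
from typing import Iterable, Set


def retrieval_success_rate(arguments: Iterable[str], kg_entities: Set[str]) -> float:
    """Share of arguments that have an exact-match entry in the KG entity set."""
    args = list(arguments)
    if not args:
        return 0.0
    hits = sum(1 for a in args if a in kg_entities)
    return hits / len(args)


# Toy data built from the running example in the Introduction.
toy_arguments = ["Wawrinka", "Lorenzi", "2019 US Open men's singles", "New York"]
toy_kg = {"Wawrinka", "New York", "US Open"}
rate = retrieval_success_rate(toy_arguments, toy_kg)
```

In this toy case the compound argument "2019 US Open men's singles" misses even though "US Open" is present, which mirrors the structural rigidity issue discussed earlier.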

Comparison of CEP-PKA with prompt-driven GPT: We conduct a comprehensive performance comparison between the prompt-driven GPT-3.5 under a zero-shot learning paradigm and our proposed CEP-PKA method. As shown in Table 5, CEP-PKA outperforms the GPT-3.5-based prompt approach on both datasets, even when GPT-3.5 incorporates its self-generated knowledge, highlighting the effectiveness of CEP-PKA in Chinese event prediction tasks.

Table 5.

Comparison of CEP-PKA and direct LLM-based prediction. ✓ denotes the presence of a specific component and × denotes the absence.

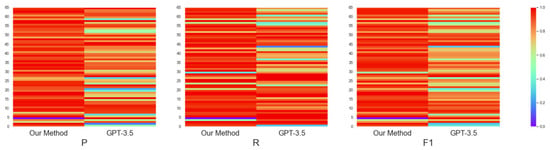

To further evaluate CEP-PKA’s stability and robustness, we tested its performance across 65 event types in DuEE. As illustrated in Figure 7, CEP-PKA consistently achieves high scores (marked by red blocks), while GPT-3.5’s performance (marked by cool-toned blocks) is less consistent and lower on average. These results confirm that CEP-PKA delivers stronger and more balanced performance across all event types, demonstrating its capability to handle diverse scenarios effectively.

Figure 7.

Heat maps of the prediction performance for different event types in DuEE. The left columns in all maps are from CEP-PKA, while the right ones are from GPT-3.5. The vertical axis is the 65 event types in DuEE.

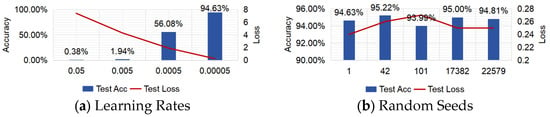

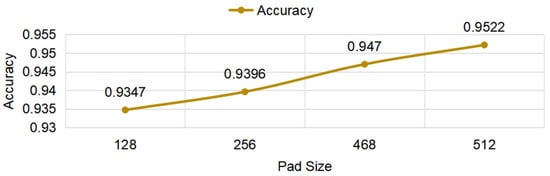

The impact of hyperparameters: We study the setting of parameters (learning rate, random seed, padding size) and their effects on the model.

On the DuEE dataset, we first investigate the impact of learning rates on the performance of CEP-PKA. Among the rates tested, a learning rate of 0.00005 yields the best results in terms of both prediction accuracy and loss minimization, as illustrated in Figure 8a.

Figure 8.

Impact of learning rates and random seeds on the performance of CEP-PKA on DuEE.

Experiments on DuEE with varying random seeds (Figure 8b) demonstrate minimal variance in accuracy, confirming CEP-PKA’s robustness to different weight initializations. This consistency underscores the model’s reliability in real-world deployments.

We also explored the influence of padding size on model performance. First, we analyzed the length distribution of original event texts and compared it with that after knowledge augmentation. As shown in Figure 9, for the DuEE and Title2Event datasets, only 1.7% and 5.0% of the data, respectively, exceed the 512-character padding limit. Considering that the maximum input length supported by the ERNIE model is 512, we strategically set the padding size to 512 to cover the vast majority of text instances.

Figure 9.

The length distribution of the initial event text and the event text after knowledge augmentation. The three dotted lines in the figure represent the first quartile (Q1, 25%), median (Q2, 50%), and the third quartile (Q3, 75%).
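The statistics behind this figure (quartiles of text length and the share of texts above the 512 limit) are straightforward to compute. The sketch below measures length in characters and uses Python's default quantile method; both are assumptions, as is the toy corpus, and the numbers it produces are unrelated to those reported for DuEE or Title2Event.

```python
from statistics import quantiles
from typing import Dict, List


def length_summary(texts: List[str], limit: int = 512) -> Dict[str, float]:
    """Quartiles of character length and the fraction of texts longer than `limit`."""
    lengths = [len(t) for t in texts]
    q1, q2, q3 = quantiles(lengths, n=4)  # needs at least two texts
    over = sum(length > limit for length in lengths) / len(lengths)
    return {"q1": q1, "median": q2, "q3": q3, "over_limit": over}


# Toy corpus with lengths 1..9; a limit of 7 leaves two texts over the limit.
toy_texts = ["x" * n for n in range(1, 10)]
toy_summary = length_summary(toy_texts, limit=7)
```

Running the same function on the original and the augmented texts gives the two distributions that the figure contrasts.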

Finally, with the random seed fixed at 42, we comprehensively evaluated the impact of different padding sizes on model performance. The experimental results in Figure 10 show that the CEP-PKA model achieves the highest prediction performance when the padding size is set to 512. As the padding size decreases, the model’s performance gradually approaches that of relying solely on the original event text.

Figure 10.

Impact of padding sizes on the performance of CEP-PKA on DuEE.

5.4. Limitations

Despite the promising performance of our CEP-PKA framework, its reliance on Large Language Models (LLMs)—particularly commercial APIs like GPT-3.5—for knowledge augmentation introduces several inherent limitations that affect both reliability and practicality.

First, LLMs are susceptible to hallucinations, i.e., generating factually inaccurate or fabricated content. When such misleading knowledge is injected into the event prediction pipeline, it risks distorting semantic representations or steering the classifier toward incorrect predictions. Second, LLMs suffer from temporal knowledge cutoffs. For instance, GPT-3.5’s training data ends in early 2022, rendering it unable to accurately describe events, organizations, or policies that emerged afterward (e.g., China’s 2024 AI regulatory framework). While this limitation does not bias our current evaluation, since all datasets (DuEE, Title2Event, and LEVEN) predate that cutoff, it poses a significant challenge for real-world deployment in dynamic environments.

Moreover, the heavy dependence on closed-source, commercial LLM APIs incurs substantial computational and financial costs, especially when scaling to large datasets. This hinders reproducibility, limits accessibility for resource-constrained researchers, and impedes practical adoption in production systems.

To address these challenges, we propose several actionable strategies for future work:

- Knowledge Verification: Integrate an automated validation layer that cross-checks LLM-generated knowledge against trusted external sources (e.g., CN-DBpedia, OwnThink) before augmentation.

- Confidence-based Filtering: Leverage the LLM’s internal confidence scores to discard low-certainty or ambiguous generations.

- Ensemble Knowledge Generation: Aggregate outputs from multiple diverse LLMs (e.g., GPT-3.5, Qwen, GLM, Baichuan) to identify consensus facts and suppress outliers.

- Knowledge Distillation: Train a lightweight student model (e.g., MiniRBT) to mimic the behavior of the full CEP-PKA pipeline, preserving performance while eliminating recurring API costs.

- Domain-specific Fine-tuning: Fine-tune LLMs on fact-checked, domain-relevant corpora to enhance factual consistency and reduce hallucination rates.
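Two of the strategies above, confidence-based filtering and ensemble knowledge generation, can be sketched in a few lines. The example below is a minimal illustration rather than part of CEP-PKA. It assumes that each generation comes with per-token log-probabilities (not every API exposes these) and that facts from different models can be matched after simple normalization. The function names, the threshold, and the toy data are hypothetical.

```python
import math
from collections import Counter


def mean_token_probability(token_logprobs):
    """Geometric-mean token probability of one generation."""
    if not token_logprobs:
        return 0.0
    return math.exp(sum(token_logprobs) / len(token_logprobs))


def filter_by_confidence(generations, threshold=0.5):
    """Keep texts whose geometric-mean token probability meets the threshold.

    `generations` is a list of (text, token_logprobs) pairs.
    """
    return [text for text, logprobs in generations
            if mean_token_probability(logprobs) >= threshold]


def consensus_facts(facts_by_model, min_votes=2):
    """Keep facts produced by at least `min_votes` distinct models.

    Matching is exact after lowercasing and stripping whitespace, which is a
    simplification; a real system would need semantic matching.
    """
    votes = Counter()
    for facts in facts_by_model.values():
        # Deduplicate within a model so each model votes once per fact.
        for fact in {f.strip().lower() for f in facts}:
            votes[fact] += 1
    return sorted(fact for fact, n in votes.items() if n >= min_votes)


# Toy data (hypothetical): model names and facts are placeholders.
outputs = {
    "model_a": ["Wawrinka is a tennis player", "The US Open is held in Paris"],
    "model_b": ["wawrinka is a tennis player "],
    "model_c": ["Wawrinka is a tennis player"],
}
print(consensus_facts(outputs, min_votes=2))
```

In this toy run only the fact that all three models agree on is kept, while the statement produced by a single model is dropped as an outlier.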

Notably, our results in Table 4 already provide empirical support for a cost-effective alternative: open-source models such as Qwen and DeepSeek achieve performance comparable to GPT-3.5 at a fraction of the cost. This suggests that strategies such as ensemble generation and distillation offer a practical, scalable pathway for deploying CEP-PKA in real-world Chinese event prediction systems.
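The distillation strategy mentioned above is commonly implemented with a soft-label objective, in which the student matches the temperature-softened output distribution of the teacher. The sketch below shows that standard formulation in plain Python. It assumes the teacher is the full CEP-PKA classifier and the student is a lightweight model, both emitting logits over the same event types; the temperature and the example logits are hypothetical, and nothing here is taken from the paper's experiments.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax, shifted by the maximum for stability."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """Soft-label loss: T^2 * KL(teacher || student) at temperature T."""
    p = softmax(teacher_logits, temperature)  # teacher distribution
    q = softmax(student_logits, temperature)  # student distribution
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    # The T^2 factor keeps gradient magnitudes comparable across temperatures.
    return temperature ** 2 * kl


# Hypothetical logits over three event types.
teacher = [2.0, 0.5, -1.0]
student = [1.0, 1.0, -0.5]
print(distillation_loss(teacher, student))
```

The loss is zero when the student reproduces the teacher's distribution exactly and grows as the two diverge. In practice it is usually combined with the ordinary cross-entropy loss on gold labels.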

Ultimately, improving both the factual reliability and computational efficiency of LLM-augmented frameworks is essential. For dynamic applications, combining CEP-PKA with retrieval-augmented generation (RAG) or using continually updated LLMs (e.g., Qwen-Max, DeepSeek-V3) represents a promising direction to mitigate knowledge decay and ensure long-term viability.

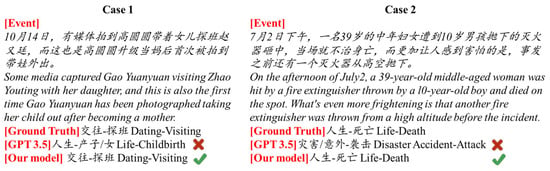

5.5. Case Study

For a qualitative analysis, we examine two cases from DuEE to compare the semantic comprehension capabilities of GPT-3.5 and CEP-PKA, as shown in Figure 11. Our observations reveal that GPT-3.5 encounters difficulties in semantic comprehension. In Case 1, GPT-3.5 misclassified the event as “Life-Childbirth” because it overemphasized the phrase “becoming a mother” while ignoring the core action “visiting”; CEP-PKA correctly identified the event type by integrating global context. In Case 2, GPT-3.5 focused narrowly on the word “hit” and incorrectly predicted “Disaster/Accident-Attack”, failing to recognize the event’s ultimate outcome of “death”. These examples demonstrate CEP-PKA’s ability to capture holistic semantics rather than relying on isolated keywords, highlighting its robustness against semantic interference.

Figure 11. Case study of the outputs from CEP-PKA and GPT-3.5.

5.6. Error Analysis

To gain deeper insights into our model’s behavior, we analyzed cases where CEP-PKA made incorrect predictions. In one instance, the event “The enterprise announces corporate layoffs.” was misclassified as “The enterprise announces a corporate merger and acquisition”. Our analysis revealed that the LLM-generated knowledge for the entity “Corporate” was overly generic, focusing on its business nature rather than the specific context of a workforce reduction. This led to semantic confusion during fine-tuning. This case highlights the importance of generating context-specific knowledge and suggests a future improvement: incorporating a relevance-checking mechanism for the generated knowledge before integration.
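A relevance check of the kind suggested here could take many forms. The sketch below is one simple possibility and not the authors' design: it scores each generated knowledge item by the Jaccard overlap of character bigrams with the event text and drops items below a threshold. Character n-grams are used because they need no word segmentation for Chinese. The function names and the threshold are assumptions, and a practical system would more likely rely on embedding similarity than on lexical overlap.

```python
def char_ngrams(text, n=2):
    """Set of character n-grams, ignoring whitespace."""
    cleaned = "".join(text.split())
    return {cleaned[i:i + n] for i in range(len(cleaned) - n + 1)}


def relevance(event_text, knowledge_text, n=2):
    """Jaccard overlap between the n-gram sets of the two texts."""
    event_grams = char_ngrams(event_text, n)
    knowledge_grams = char_ngrams(knowledge_text, n)
    if not event_grams or not knowledge_grams:
        return 0.0
    shared = event_grams & knowledge_grams
    return len(shared) / len(event_grams | knowledge_grams)


def keep_relevant(event_text, knowledge_items, threshold=0.1):
    """Drop generated knowledge whose overlap with the event is too low."""
    return [item for item in knowledge_items
            if relevance(event_text, item) >= threshold]
```

Applied to the layoff example, a filter like this would be expected to retain knowledge that mentions workforce reduction and to discard a generic description of what a corporation is, although lexical overlap alone can miss paraphrases.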

6. Conclusions

In this work, we introduce CEP-PKA to enhance the accuracy of Chinese event prediction via prompt-driven knowledge augmentation. The approach employs large language models as knowledge sources to generate fine-grained information for event elements, which is then integrated into a pre-trained language model for event type prediction via fine-tuning. Experimental results demonstrate that CEP-PKA significantly outperforms baseline models, highlighting the potential of LLMs to enrich event text semantics. In future research, we plan to integrate diverse LLMs to enhance the diversity and richness of the generated knowledge, and to extend the framework to event causality analysis and temporal event prediction, expanding its application to more complex event understanding scenarios.

Author Contributions

Conceptualization, W.L.; Data curation, W.J.; Methodology, S.L. and W.L.; Validation, S.L., X.X. and W.J.; Writing—original draft, S.L. and W.L.; Writing—review and editing, X.X. and W.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China Major Projects: grant number 61991410.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available upon request from the corresponding author. The data are not publicly available due to the need for subsequent scientific work.

Conflicts of Interest

The authors declare no conflicts of interest.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).