1. Introduction

Camouflage detection plays a crucial role in remote sensing and military surveillance, particularly in addressing the growing sophistication of artificial materials. These materials often exhibit spectral characteristics that closely resemble natural vegetation, presenting significant challenges for traditional imaging technologies [1,2]. Hyperspectral imaging (HSI), which captures detailed reflectance information across hundreds of contiguous narrow bands, is widely regarded as a powerful tool for identifying subtle material differences [3]. However, effectively utilizing such high-dimensional data remains a technical challenge.

Early studies on camouflage detection often relied on rule-based or thresholding techniques applied to spectral reflectance. Kamble and Rajarajeswari [1] adopted fixed spectral thresholds to identify artificial targets, but their method performed poorly under variable illumination and complex backgrounds. To improve robustness, Esin et al. [2] introduced support vector machines (SVMs), which performed better in small-sample, high-dimensional scenarios. However, classifiers such as SVM and random forest (RF) rely on handcrafted features and struggle to handle complex nonlinear decision boundaries [4,5].

To address these limitations, more advanced feature extraction approaches have been introduced. Jing and Hou [6] proposed a principal component analysis (PCA)–SVM pipeline to reduce redundancy while preserving informative variance. Li et al. [7] enhanced preprocessing using wavelet-based denoising and least squares filtering, which effectively suppressed high-frequency noise but failed to maintain spatial continuity. Other strategies, including PCA combined with k-nearest neighbors (PCA–KNN) and independent component analysis (ICA)-based pipelines, have also been explored to improve classification robustness [8,9].

With the advent of deep learning, convolutional neural networks (CNNs) have gained prominence for their ability to automatically extract hierarchical spectral–spatial features [3,10,11]. Chen et al. [12] applied CNNs to camouflage detection and achieved significantly improved accuracy. Hybrid 3D–2D CNN architectures further strengthened the ability to learn both local and global spectral patterns [10]. Residual networks (ResNets), which introduce shortcut connections to alleviate the vanishing gradient problem, have also demonstrated strong performance in hyperspectral classification tasks [13,14,15]. Furthermore, residual models integrated with attention mechanisms and spatial–spectral regularization have been shown to improve class discrimination [11,16]. Recent reviews have emphasized that continuous methodological benchmarking and transparent reporting are critical for reproducibility and cross-study comparability in deep hyperspectral learning [17,18].
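The shortcut idea can be made concrete with a minimal NumPy sketch of a basic residual block, y = ReLU(F(x) + x), where F is two stacked convolutions. This is a simplified, hypothetical 1D variant that convolves along the spectral axis and omits batch normalization and downsampling; it is not the ResNet-18 configuration used later in this study, and the function names are illustrative only.

```python
import numpy as np


def conv1d_same(x, w):
    """'Same'-padded 1D cross-correlation along the band axis.

    x: (C_in, L) feature map; w: (C_out, C_in, K) kernel with odd K.
    """
    c_out, _, k = w.shape
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad)))  # zero-pad both ends of the band axis
    out = np.zeros((c_out, x.shape[1]))
    for i in range(x.shape[1]):
        window = xp[:, i:i + k]  # (C_in, K) neighborhood centered on band i
        out[:, i] = np.tensordot(w, window, axes=([1, 2], [0, 1]))
    return out


def relu(z):
    return np.maximum(z, 0.0)


def basic_residual_block(x, w1, w2):
    """y = ReLU(F(x) + x) with F = conv -> ReLU -> conv (no batch norm)."""
    h = relu(conv1d_same(x, w1))
    return relu(conv1d_same(h, w2) + x)  # identity shortcut requires C_out == C_in


rng = np.random.default_rng(0)
x = rng.standard_normal((4, 36))          # 4 channels x 36 spectral positions
w1 = 0.1 * rng.standard_normal((4, 4, 3))
w2 = 0.1 * rng.standard_normal((4, 4, 3))
y = basic_residual_block(x, w1, w2)
print(y.shape)  # (4, 36)
```

Because the identity path adds x directly to the block output, the gradient of y with respect to x contains an identity term wherever the final ReLU is active, which is what helps gradients propagate through deep stacks of such blocks.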

Recently, attention mechanisms have emerged as a key direction in hyperspectral classification, enabling dynamic modeling of contextual relationships across spectral and spatial dimensions. Li et al. [19] proposed a wide-area attention-based architecture for real-time object search using unmanned aerial vehicles (UAVs), integrating spectral–spatial feature fusion for rapid camouflage detection. Qing and Liu [20] designed a multi-scale residual network with attention modules that preserves both global structure and local textures in complex backgrounds. Sarker et al. [11] developed a spectral–spatial residual attention network (SS-RAN) that introduced both channel and spatial attention blocks to enhance sensitivity to fine-grained features. Zhu et al. [21] further extended this direction with a Residual Spectral–Spatial Attention Network, which embedded attention modules within residual blocks to enhance class separability and spectral–spatial feature representation in complex scenes. Despite their high accuracy, these attention-based models often require deep architectures and introduce considerable computational overhead, making them poorly suited to resource-constrained platforms. To address this, Hupel and Stütz [22] introduced a lightweight hyperspectral anomaly detection strategy adapted for multispectral data, enabling near real-time camouflage detection on limited hardware. In parallel, AI-driven automation of systematic literature reviews has been proposed to accelerate methodological synthesis in remote sensing and hyperspectral imaging [23,24].

While classification has been the dominant paradigm, recent research has also begun to address hyperspectral object detection, extending spectral analysis to object-level spatial interpretation. He et al. [25] proposed a unified spectral–spatial feature aggregation network that bridges the gap between pixel-wise classification and object detection, demonstrating promising performance on complex scenes. Moreover, modern review methodologies such as the Rapid Literature Review (RLR) have been advocated to ensure efficient synthesis of such diverse algorithmic developments [26].

To capture sequential dependencies across spectral bands, researchers have also incorporated sequence-modeling techniques such as long short-term memory (LSTM) networks and bidirectional gated recurrent units (Bi-GRUs) into hyperspectral classification. Dash et al. [27] proposed a deep learning framework combining PCA with LSTM to retain key principal components while modeling band-wise sequential patterns, improving robustness in dynamic environments. However, LSTM-based models typically suffer from long training times and poor scalability due to their sequential nature, particularly when applied to high-dimensional hyperspectral cubes [11,19,20,28].

Beyond network design, the partitioning of training samples significantly affects model generalization. The commonly used Kennard–Stone (K-S) algorithm selects samples based solely on distances in the spectral space, which often results in sparse sampling of the central data region [9,11]. In contrast, the SPXY (sample set partitioning based on joint X–Y distances) algorithm considers distances in both the spectral (X) space and the label (Y) space, yielding more representative training subsets [8,12,16,29]. Zhao et al. [29] showed that SPXY significantly reduces the Kullback–Leibler (KL) divergence and improves spectral similarity between training and testing sets, thereby enhancing generalization.
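For readers unfamiliar with the two partitioning algorithms, the sketch below shows one way to implement them in NumPy. K-S starts from the two most distant samples and repeatedly adds the sample whose nearest already-selected sample is farthest away; SPXY runs the same max-min procedure on a combined distance, d_x/max(d_x) + d_y/max(d_y). Because the labels here are categorical, the label-space distance is assumed to be 0 for samples of the same class and 1 otherwise; the original SPXY formulation uses |y_p - y_q| for a continuous response. Function names and the synthetic data are hypothetical.

```python
import numpy as np


def pairwise_dist(a):
    """Euclidean distance matrix between the rows of a (n_samples, n_features) array."""
    diff = a[:, None, :] - a[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))


def max_min_select(d, n_select):
    """Kennard-Stone style greedy selection on a precomputed distance matrix.

    Assumes 2 <= n_select <= n and that not all samples are identical.
    """
    n = d.shape[0]
    i, j = np.unravel_index(np.argmax(d), d.shape)  # two most distant samples
    selected = [int(i), int(j)]
    remaining = [k for k in range(n) if k not in selected]
    while len(selected) < n_select:
        # distance from each remaining sample to its nearest selected sample
        nearest = d[np.ix_(remaining, selected)].min(axis=1)
        nxt = remaining[int(np.argmax(nearest))]  # the least-covered sample
        selected.append(nxt)
        remaining.remove(nxt)
    return np.array(selected), np.array(remaining)


def kennard_stone(X, n_select):
    return max_min_select(pairwise_dist(X), n_select)


def spxy(X, y, n_select):
    dx = pairwise_dist(X)
    # Assumption for categorical labels: 0 if same class, 1 otherwise.
    dy = (y[:, None] != y[None, :]).astype(float)
    dx = dx / dx.max() if dx.max() > 0 else dx
    dy = dy / dy.max() if dy.max() > 0 else dy
    return max_min_select(dx + dy, n_select)  # joint, equally weighted X-Y distance


rng = np.random.default_rng(0)
X = rng.standard_normal((400, 36))   # synthetic stand-in for 400 spectra
y = rng.integers(0, 5, size=400)     # synthetic labels for five categories
train_idx, test_idx = spxy(X, y, 280)
print(len(train_idx), len(test_idx))  # 280 120
```

With 400 samples and `n_select=280`, the split sizes match the 280/120 partition described in Section 3.2, although the data here are synthetic rather than the study's spectra.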

Data preprocessing also remains essential in hyperspectral classification workflows. Standard normal variate (SNV) normalization and Savitzky–Golay (SG) filtering have been widely adopted to correct baseline drift and suppress noise [14,30]. Spatial–spectral denoising strategies have further improved signal consistency in complex environments [7]. Abbasi and He [8] proposed a hybrid ICA–PCA–DCT (discrete cosine transform) preprocessing framework that exhibited greater spectral stability under varying illumination. However, these techniques are often applied in isolation and are not specifically optimized for camouflage detection, which requires heightened sensitivity to subtle spectral differences between artificial and natural targets.
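A minimal sketch of the SNV and SG steps, assuming spectra are stored as a (samples, bands) NumPy array and using SciPy's `savgol_filter`. The window length (11), polynomial order (2), and applying SNV before smoothing are illustrative assumptions rather than the settings used in this study; the toy spectra are synthetic.

```python
import numpy as np
from scipy.signal import savgol_filter


def snv(spectra):
    """Standard normal variate: center and scale each spectrum (row) on its own."""
    mean = spectra.mean(axis=1, keepdims=True)
    std = spectra.std(axis=1, keepdims=True)
    return (spectra - mean) / std


def preprocess(spectra, window_length=11, polyorder=2):
    """SNV followed by Savitzky-Golay smoothing along the band axis (axis=1)."""
    return savgol_filter(snv(spectra), window_length, polyorder, axis=1)


rng = np.random.default_rng(0)
bands = np.linspace(400, 1000, 200)
clean = 0.3 + 0.2 * np.tanh((bands - 715) / 20)  # toy reflectance curve
# per-spectrum multiplicative scaling plus band-wise noise
spectra = clean * rng.uniform(0.8, 1.2, size=(5, 1)) + 0.01 * rng.standard_normal((5, 200))
smoothed = preprocess(spectra)
```

Because an SG filter of order p reproduces any polynomial of degree up to p exactly, smooth low-order trends pass through unchanged while band-to-band noise is attenuated; the window length controls the trade-off between noise suppression and preservation of narrow absorption features.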

Although significant progress has been made in attention models [11,19,20,28], recurrent architectures, and residual frameworks, few studies have integrated optimized sampling strategies, residual deep networks, and comprehensive preprocessing into a unified solution for camouflage detection. A more systematic framework that balances data representativeness, model efficiency, and generalization is therefore still needed for practical hyperspectral camouflage detection. Moreover, most existing models are computationally expensive and complex, making them difficult to deploy in real-time or embedded environments. The integration of large language models (LLMs) into scientific review and analysis pipelines has also recently enhanced reproducibility and evidence aggregation in data-intensive research [31].

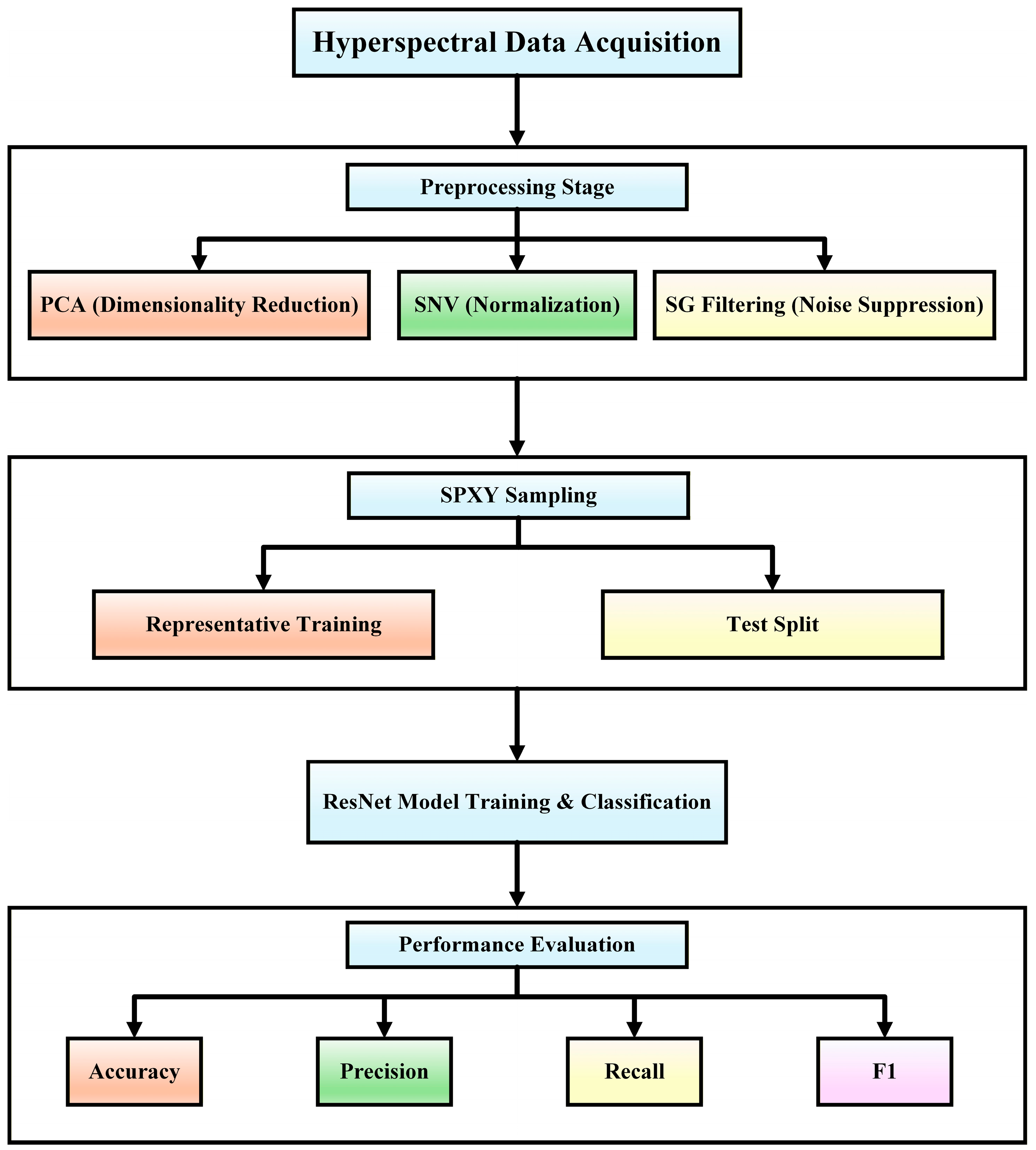

To address these challenges, this study proposes an integrated hyperspectral classification framework tailored for camouflage detection. The framework unifies SPXY-based sample partitioning, a multi-step preprocessing pipeline, and a lightweight residual CNN (ResNet-18) architecture. Unlike prior studies that focus on isolated enhancements, our unified approach is designed to maximize class separability and generalization under spectral similarity and environmental variability. Experimental results show that this end-to-end framework achieves high classification accuracy while maintaining low computational overhead, making it practical for real-time and field-deployable applications in defense, agriculture, and environmental monitoring.

In Section 1, an overview of the background and challenges in hyperspectral camouflage detection is presented, stressing the need for advanced techniques to differentiate artificial materials from natural vegetation. The main components of the proposed framework are introduced, highlighting its potential advantages over existing methods. In Section 2, the materials, experimental setup, and methods for hyperspectral data acquisition, preprocessing, and classification are described, along with the algorithms used to improve classification accuracy. In Section 3, experimental results are presented, including an evaluation of preprocessing techniques, dataset partitioning strategies, and the performance of various classification models; the proposed SPXY-ResNet model is compared with other approaches, demonstrating its superior accuracy and efficiency. In Section 4, the findings are discussed, comparing the proposed framework with other methods in terms of performance and computational efficiency, and challenges and future improvements are addressed. In Section 5, the study’s conclusions are summarized, and the practical applications of the proposed framework in camouflage detection, agriculture, and environmental monitoring are highlighted.

3. Results

3.1. Preprocessing

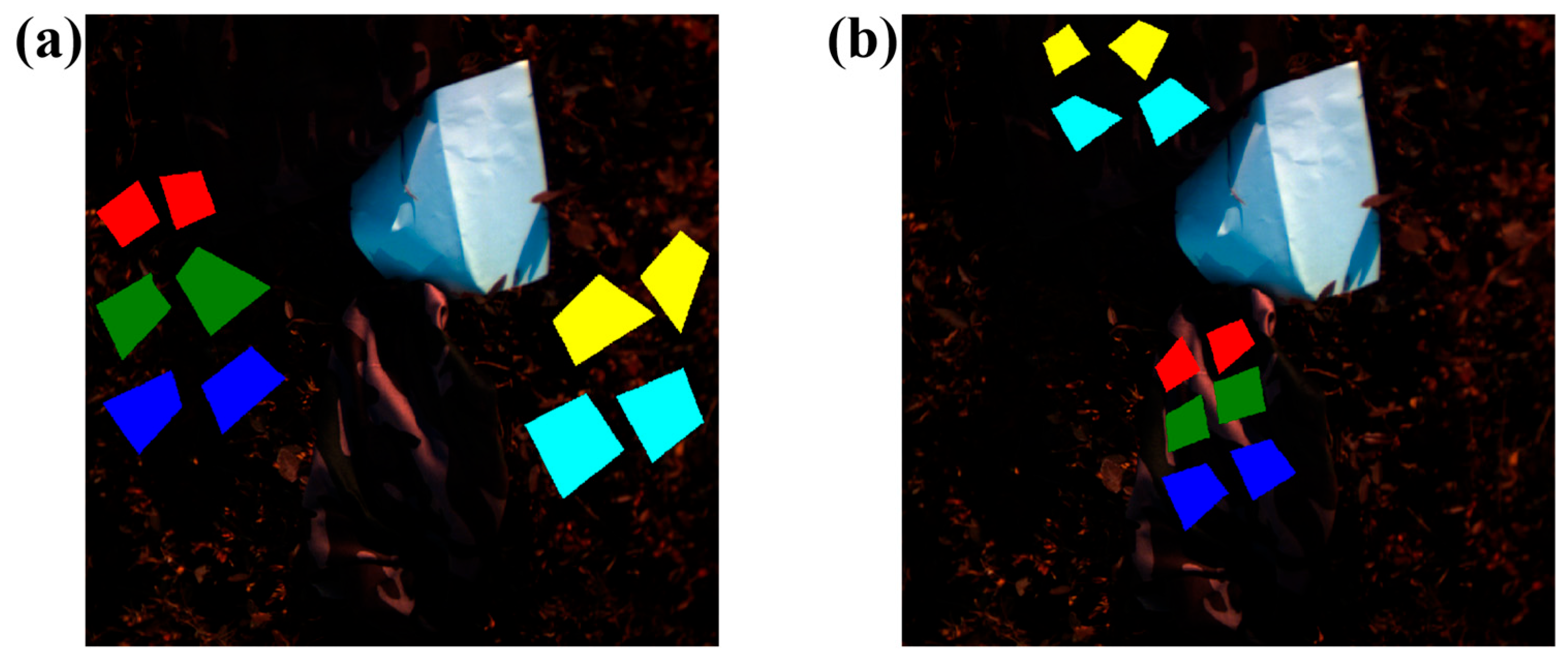

To enhance spectral data quality and suppress redundancy, PCA was performed on the original hyperspectral images before regions of interest were selected.

After PCA processing with the ENVI software module, thirty-six principal components were retained, with their cumulative explained variance remaining below the 99.95% threshold. Figure 6a shows the raw hyperspectral image, which exhibits evident spectral noise and low contrast, whereas Figure 6b shows the denoised, PCA-processed image, which exhibits improved boundary clarity and spectral separability.
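The PCA step was performed in ENVI; as an illustration only, the following NumPy sketch reproduces the underlying idea on a (rows, cols, bands) cube: center each band, obtain explained-variance ratios from a singular value decomposition, and choose the number of components from a cumulative-variance threshold. Because the text describes the 36 retained components as staying below 99.95%, the default `rule="below"` keeps the largest k whose cumulative ratio is still under the threshold, while `rule="reach"` implements the more common convention of the smallest k that reaches it. The function name is hypothetical, and ENVI's implementation may differ in details such as covariance versus correlation scaling.

```python
import numpy as np


def pca_by_cumulative_variance(cube, threshold=0.9995, rule="below"):
    """PCA on a (rows, cols, bands) cube; returns (scores, k, cumulative_ratio)."""
    rows, cols, bands = cube.shape
    X = cube.reshape(-1, bands).astype(float)  # one row per pixel
    X -= X.mean(axis=0)                        # center each band
    _, s, vt = np.linalg.svd(X, full_matrices=False)
    cumulative = np.cumsum(s ** 2) / np.sum(s ** 2)  # cumulative explained variance
    first_at_or_above = int(np.searchsorted(cumulative, threshold))
    if rule == "below":
        k = max(first_at_or_above, 1)          # largest k still under the threshold (at least 1)
    else:
        k = min(first_at_or_above + 1, bands)  # smallest k reaching the threshold
    scores = X @ vt[:k].T                      # project pixels onto the first k components
    return scores.reshape(rows, cols, k), k, cumulative


rng = np.random.default_rng(0)
cube = rng.standard_normal((20, 20, 50))       # synthetic 20 x 20 image with 50 bands
scores, k, cumulative = pca_by_cumulative_variance(cube)
```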

Preprocessing substantially enhanced spectral quality by suppressing noise and correcting baseline shifts. Figure 7a illustrates the smoothed reflectance profile of camouflage fabric, while Figure 7b shows the enhanced spectral curve of natural grass, with clear feature separation around the red-edge region. Following the ICA–PCA–DCT framework proposed by Abbasi and He [8], key spectral characteristics in the 680–750 nm red-edge and near-infrared regions became more distinct after preprocessing. This improvement in signal clarity and spectral contrast contributes to better class separability and enhances the reliability of downstream classification.
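One common way to quantify red-edge contrast, offered here as an assumption rather than the study's exact procedure, is the red-edge position (REP): the wavelength of maximum first-derivative slope between 680 and 750 nm. The sketch below estimates the derivative with SciPy's `savgol_filter(..., deriv=1)`, assuming approximately uniform band spacing; the window settings, function name, and toy spectrum are hypothetical.

```python
import numpy as np
from scipy.signal import savgol_filter


def red_edge_position(wavelengths, reflectance, lo=680.0, hi=750.0,
                      window_length=11, polyorder=2):
    """Wavelength (nm) of the steepest reflectance rise within [lo, hi]."""
    step = float(np.mean(np.diff(wavelengths)))  # assumes near-uniform band spacing
    d1 = savgol_filter(reflectance, window_length, polyorder, deriv=1, delta=step)
    mask = (wavelengths >= lo) & (wavelengths <= hi)
    idx = int(np.argmax(d1[mask]))
    return float(wavelengths[mask][idx]), d1


wl = np.arange(400.0, 1001.0, 2.0)                          # 2 nm sampling
grass = 0.05 + 0.45 / (1.0 + np.exp(-(wl - 716.0) / 10.0))  # toy vegetation edge
rep, first_derivative = red_edge_position(wl, grass)
```

For this synthetic sigmoid-shaped edge centered at 716 nm, the returned REP falls at (or within one band of) that center; a spectrally flat camouflage fabric would show a much weaker derivative peak in the same window.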

3.2. Division of Training and Test Spectral Data

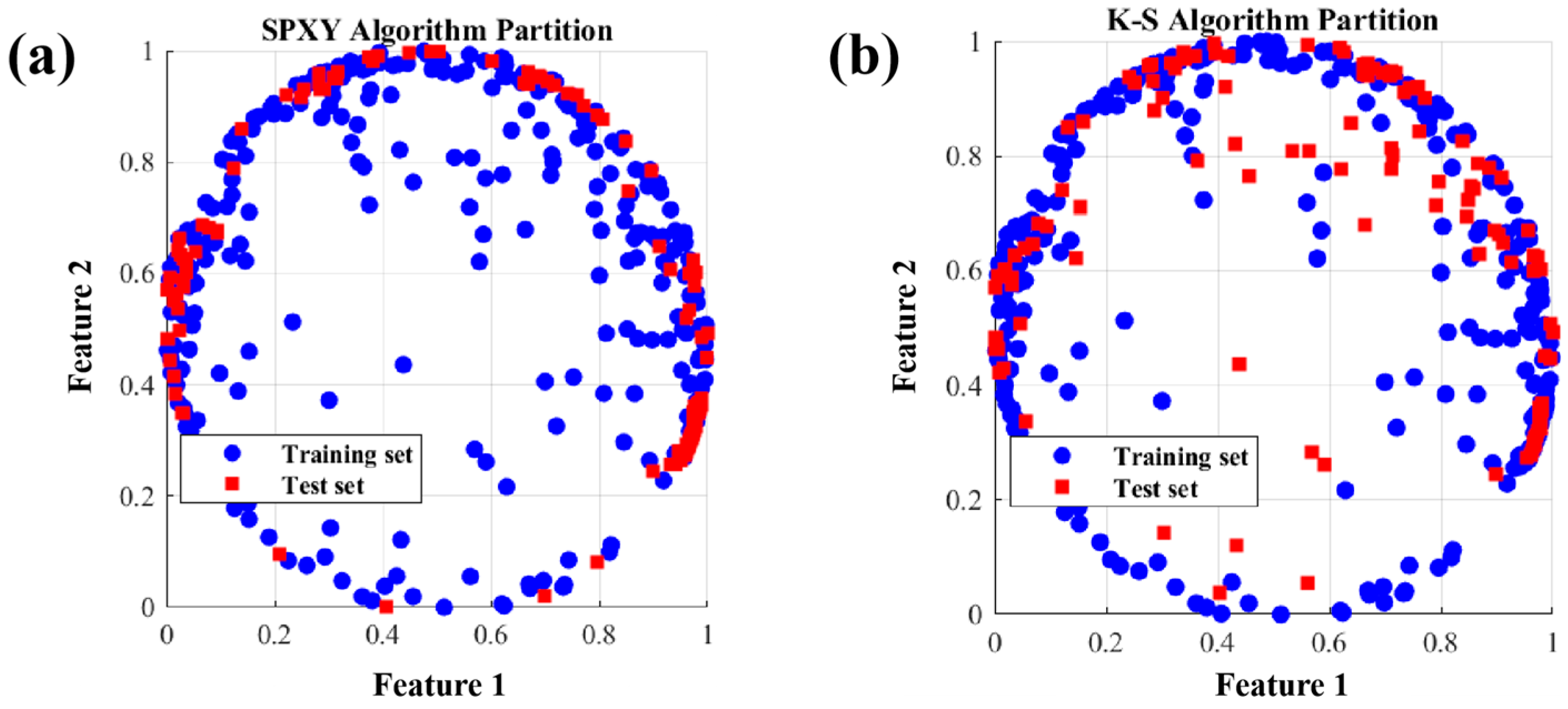

The dataset was divided into training (70%) and testing (30%) subsets to support model calibration and performance evaluation. A total of 400 spectral samples were used in this experiment, each subjected to spectral analysis and chemical verification to ensure data reliability; 280 samples were allocated for training and 120 for testing. To investigate the impact of sample selection strategies on classification performance, two commonly used partitioning algorithms, K-S and SPXY, were employed to divide the hyperspectral dataset [17]. Both algorithms were applied independently under identical class distribution conditions, and their effectiveness was evaluated using both qualitative and quantitative methods. The analysis focused on sample distribution within the spectral feature space, histogram consistency of spectral values, and statistical similarity between training and testing sets. These evaluations aimed to determine how well each method preserved the dataset’s representativeness and diversity, which are essential for model generalization in camouflage detection. To avoid relying on a single 70/30 split and to statistically validate the reliability of SPXY-based sampling, a robustness experiment was conducted using repeated resampling: 1000 independent SPXY partitions were generated, and each training subset was evaluated using 5-fold cross-validation. Performance remained highly stable, with a mean root mean square error (RMSE) of 2.8231 and a standard deviation of only 0.1264, indicating that model generalization is minimally affected by changes in data division.

Figure 8 illustrates the PCA distribution of all partitions, showing consistent coverage of the feature space by both training and test samples across repeated trials. This validation indicates that SPXY not only improves sample representativeness but also remains robust under stochastic resampling.

The corresponding results are presented in Figures 8, 9 and 10, which illustrate the differences in sampling uniformity across the feature space, distributional consistency, and statistical alignment achieved by each algorithm, providing an empirical basis for selecting the optimal data partitioning strategy.

As illustrated in Figure 9a, the SPXY algorithm achieves a more uniform and representative distribution across the feature space, with samples densely covering both central and peripheral regions. This comprehensive coverage ensures that both typical and marginal spectral patterns are well represented in the training data. In contrast, Figure 9b reveals that the K-S algorithm tends to oversample spectral extremes while underrepresenting the central distribution, which may result in biased model learning and reduced generalization performance.

Figure 10a further demonstrates the closer statistical alignment between the SPXY-based training and test sets, particularly in the high-frequency spectral regions. This alignment is crucial for hyperspectral camouflage detection, where fine spectral features such as vegetation absorption dips and textile reflectance plateaus serve as key discriminative cues. In comparison, Figure 10b shows that K-S partitioning leads to noticeable mismatches across several spectral intervals, indicating a potential risk of overfitting or reduced sensitivity to subtle class differences.

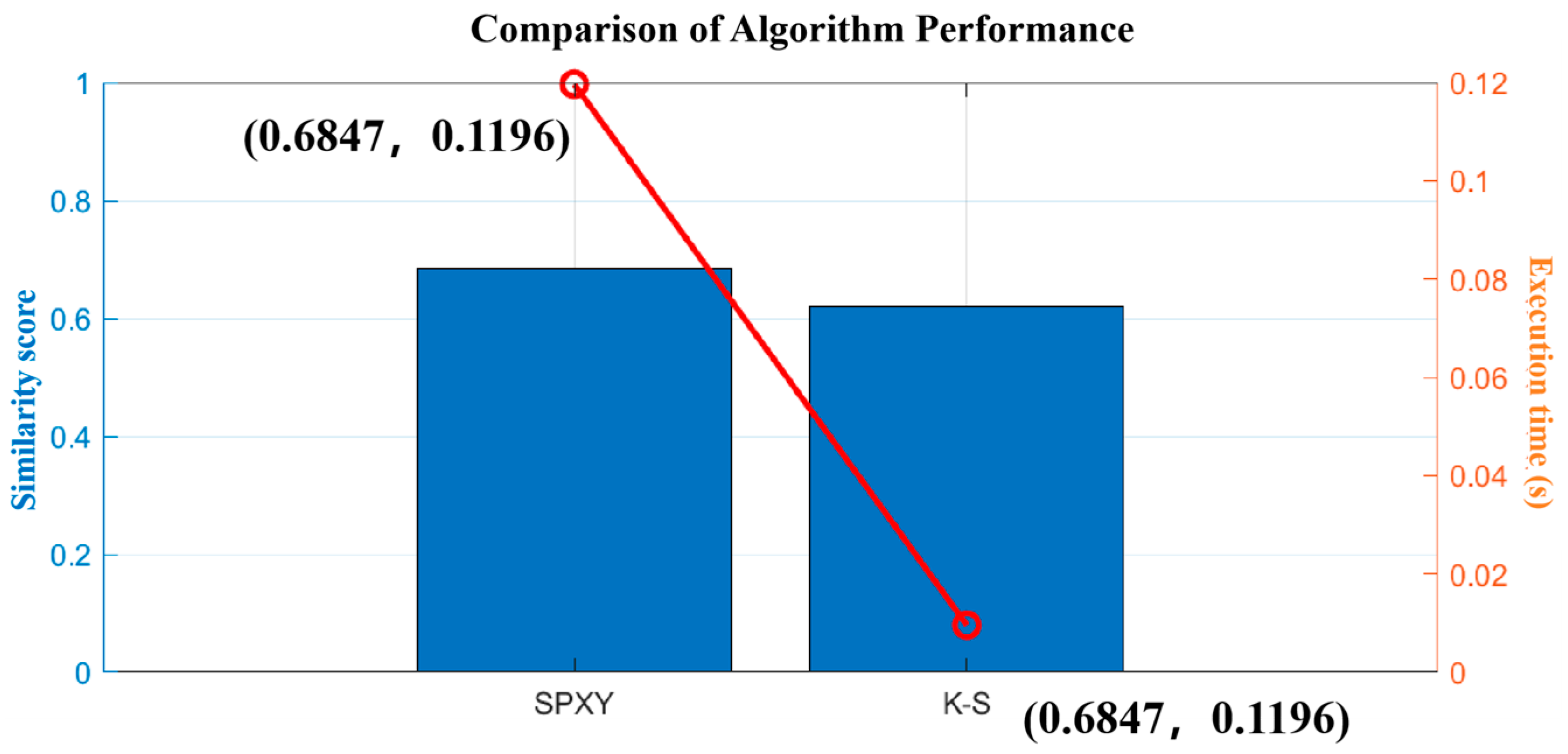

Figure 11 quantitatively confirms the advantage of SPXY in maintaining statistical consistency between training and testing subsets. SPXY achieved a KL divergence of 0.1593, lower than the 0.2485 recorded for K-S, indicating a smaller spectral distribution shift. For mean spectral similarity, SPXY reached 0.6847 compared with 0.6217 for K-S, suggesting better alignment in average spectral characteristics. For the standard deviation of similarity, SPXY achieved 0.1041 while K-S recorded 0.1382, reflecting a tighter match in spectral variability. Although SPXY took roughly an order of magnitude longer to execute than K-S (0.1196 s vs. 0.0096 s), its absolute cost remains well under one second, and the improved distributional fidelity it provides offers clear benefits for subsequent model training and evaluation.
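The text does not specify how the KL divergence in Figure 11 was computed; one plausible sketch, stated as an assumption, compares normalized histograms of the pooled spectral values of the training and testing sets over shared bin edges. The bin count, the smoothing constant `eps`, the direction KL(P_train || P_test), and the synthetic data are illustrative choices, and different choices will change the absolute values.

```python
import numpy as np


def histogram_kl(train_values, test_values, bins=50, eps=1e-10):
    """KL(P_train || P_test) between histograms on shared bin edges."""
    train_values = np.ravel(train_values)
    test_values = np.ravel(test_values)
    lo = min(train_values.min(), test_values.min())
    hi = max(train_values.max(), test_values.max())
    edges = np.linspace(lo, hi, bins + 1)  # identical bins for both subsets
    p, _ = np.histogram(train_values, bins=edges)
    q, _ = np.histogram(test_values, bins=edges)
    p = p.astype(float) + eps              # smoothing avoids log(0) and division by zero
    q = q.astype(float) + eps
    p /= p.sum()
    q /= q.sum()
    return float(np.sum(p * np.log(p / q)))


rng = np.random.default_rng(0)
train = rng.normal(0.40, 0.10, size=(280, 36))       # synthetic reflectance values
test_close = rng.normal(0.40, 0.10, size=(120, 36))  # same distribution
test_shifted = rng.normal(0.45, 0.10, size=(120, 36))  # shifted distribution
kl_close = histogram_kl(train, test_close)
kl_shifted = histogram_kl(train, test_shifted)
```

A test set drawn from the same distribution as the training set yields a value near zero, while a shifted test set yields a clearly larger divergence, which is the behavior the comparison in Figure 11 relies on.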

Overall, SPXY demonstrates superior performance in constructing representative and balanced training sets, a factor that directly contributes to improved model robustness in the presence of spectral variability and distributional shift. The observed improvements in similarity metrics and classification outcomes further confirm the effectiveness of SPXY in supporting high-performance hyperspectral modeling.

3.3. Classification Result

To assess classification performance under the SPXY sample selection strategy, six models were built: SPXY-PCA, SPXY-SVM, SPXY-RF, SPXY-CNN, SPXY-KNN, and SPXY-ResNet. All models were evaluated using accuracy, precision, recall, and F1-score as standard benchmarks, and training time was recorded to assess efficiency.
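For reference, the four metrics can be computed from a confusion matrix as in the sketch below. Macro-averaging over classes is an assumption here, since the averaging scheme is not stated; note that with macro-averaging the F1-score is the mean of per-class F1 values and need not equal the harmonic mean of macro precision and macro recall. The function name and toy labels are hypothetical.

```python
import numpy as np


def macro_metrics(y_true, y_pred, n_classes):
    """Accuracy plus macro-averaged precision, recall, and F1 from a confusion matrix."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)  # rows: true class, cols: predicted class
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp            # predicted as class c but wrong
    fn = cm.sum(axis=1) - tp            # truly class c but missed
    with np.errstate(divide="ignore", invalid="ignore"):
        precision = np.where(tp + fp > 0, tp / (tp + fp), 0.0)
        recall = np.where(tp + fn > 0, tp / (tp + fn), 0.0)
        f1 = np.where(precision + recall > 0,
                      2 * precision * recall / (precision + recall), 0.0)
    return {
        "accuracy": tp.sum() / cm.sum(),
        "precision": precision.mean(),
        "recall": recall.mean(),
        "f1": f1.mean(),
        "confusion": cm,
    }


m = macro_metrics([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], n_classes=3)
```

In this toy example, four of six predictions are correct (accuracy 4/6), macro precision is (1/2 + 2/3 + 1)/3, and macro recall is (1/2 + 1 + 1/2)/3 = 2/3.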

Table 1 summarizes the classification performance of all SPXY-based models. The Mean Accuracy (%) column reports the average accuracy over 100 independent runs with randomly partitioned datasets, and the standard deviation (SD) of these runs indicates the stability and robustness of each model. The remaining metrics (precision, recall, F1-score, and training time) correspond to the best single run, to facilitate direct comparison.

Among all evaluated methods, SPXY-ResNet performed best, achieving the highest mean accuracy (99.17%) with a small standard deviation (0.79%), along with the top precision (98.89%), recall (98.82%), and F1-score (98.82%). This indicates a strong capability to extract and generalize complex spectral–spatial features, while its competitive training time of 0.14 s suggests that the residual structure facilitates efficient convergence without introducing significant computational overhead.

SPXY-SVM and SPXY-RF also delivered high mean accuracies of 97.5% and 96.67%, respectively. SPXY-SVM showed slightly better recall, while SPXY-RF was more balanced across metrics and required less training time (0.37 s). SPXY-CNN and SPXY-KNN offered solid performance (95–96% accuracy); the CNN trained faster (0.07 s) but had a slightly lower F1-score than ResNet and SVM. Across repeated runs, all models exhibited small variations in accuracy, with standard deviations below 1%, indicating high repeatability and robust generalization. The superior performance and low fluctuation of SPXY-ResNet suggest that the proposed deep residual feature fusion framework can learn fine-grained spectral–spatial representations and deliver stable classification outcomes across repeated experiments. These results collectively highlight the advantage of deep learning architectures, particularly ResNet-based models, in capturing nuanced hyperspectral patterns for camouflage detection.

3.4. Validation of a ResNet-Based Model for Discriminating Natural Grass and Camouflage Fabrics

To validate the ResNet model, unseen samples across five spectral categories were randomly selected for testing. The resulting confusion matrix, showing only two misclassifications and confirming high classification accuracy, is shown in Figure 12a. The training and validation accuracy over iterations, indicating stable learning behavior, is shown in Figure 12b, while the corresponding loss curves demonstrating effective convergence without overfitting are shown in Figure 12c.

The ResNet model was trained for a total of 800 iterations (100 epochs). The model’s validation accuracy remained consistently above 85%, with a final accuracy of 99.17%, demonstrating excellent predictive performance. At the same time, the validation loss converged and remained stable below 0.4, indicating good model generalization.

The performance of six classifiers in identifying spectral differences between natural grass and camouflage fabrics was compared, with SPXY-ResNet demonstrating the best overall accuracy and consistency across all categories. Its ability to integrate spatial and spectral features contributed to better generalization on the validation set.

4. Discussion

The proposed SPXY-ResNet framework achieved a classification accuracy of 99.17% using limited training data, indicating strong generalization capability and robustness. Compared with existing deep residual or attention-based models that rely on large datasets and complex architectures [19,20], comparable performance was attained with substantially reduced computational overhead. This improvement is primarily attributed to the integration of SPXY-based sample partitioning, which enhances representativeness in both spectral and label space [29], and a multi-step preprocessing pipeline that improves class separability.

Although attention-based networks such as SS-RAN and wide-field attention models have demonstrated high classification accuracy in hyperspectral imaging tasks [11,19], their reliance on multiple attention modules and deeper architectures leads to high computational cost, limiting their applicability to real-time or embedded systems. Similarly, sequential architectures such as Bi-GRU effectively capture spectral dependencies [28], but their long training times and poor scalability reduce their practicality in high-dimensional applications. In contrast, the SPXY-ResNet framework avoids explicit sequence modeling while preserving spectral continuity through spatial–spectral preprocessing and residual connections, enabling a more lightweight and deployable solution.

Recent advances in multibranch 3D–2D convolutional neural networks have shown excellent performance by capturing both local and global spectral–spatial features [13]. However, such architectures often involve high model complexity and resource consumption, posing challenges for training and deployment in hardware-constrained environments. In comparison, the 2D ResNet-based approach, combined with efficient preprocessing and optimized sampling, delivers similar accuracy with significantly lower computational demand, making it better suited for real-world applications requiring fast and portable solutions.

Previous residual network designs have focused primarily on spectral denoising [33], often neglecting the integration of spatial context. By combining residual learning with spatial–spectral preprocessing, the proposed framework achieves enhanced feature representation, particularly when camouflage materials and natural vegetation are spectrally similar. The framework also enhances reflectance contrast in the red-edge region (680–750 nm), which is critical for vegetation-related material discrimination [34].

Consistent improvements were observed across all evaluation metrics, including accuracy, precision, recall, and F1-score, when compared with baseline classifiers such as PCA, SVM, RF, KNN, and conventional CNNs. Minimal overfitting and stable convergence further support the robustness of the proposed approach. The repeated SPXY + 5-fold cross-validation experiments demonstrated consistent model performance across 1000 stochastic resampling trials, reducing the potential selection bias associated with a single 70/30 split. By jointly optimizing data partitioning, spectral preprocessing, and network architecture, the framework provides an efficient and scalable solution for hyperspectral camouflage detection.

Although the SPXY–ResNet framework achieved high accuracy in distinguishing camouflage fabric from natural vegetation, it should be noted that the model was trained and evaluated solely on a self-collected hyperspectral dataset. No publicly available camouflage or benchmark hyperspectral datasets were used in this study. Consequently, the generalizability and external validity of the proposed approach may be limited.

Future work will focus on extending the model evaluation to publicly available datasets and cross-scene validation to further verify its robustness and transferability. In addition, exploring the integration of transformer-based architectures or lightweight 3D CNN modules could enhance spatial–spectral modeling capabilities in more complex scenarios. Large-scale annotated datasets, such as BihoT [35], also provide opportunities to extend static classification frameworks toward spatiotemporal object detection and tracking.

5. Conclusions

This study developed a hyperspectral classification framework for distinguishing natural grass from camouflage fabrics. Among the tested models, SPXY-ResNet achieved the best performance, with an accuracy of 99.17%, benefiting from its deep residual structure and ability to capture subtle spectral–spatial features. The SPXY algorithm outperformed the traditional Kennard–Stone method by providing more representative training sets, improving model generalization. Applying SNV normalization, SG filtering, and derivative-based enhancements successfully minimized spectral noise and improved the representation of informative features. The proposed method offers a fast, accurate, and non-destructive solution for camouflage detection based on hyperspectral data, with potential applications in agriculture, ecology, and defense.

In addition to its low computational overhead, the framework incorporates several methodological innovations. The integration of SPXY-based sampling with residual deep learning establishes a joint optimization chain that maintains spectral label balance and strengthens model generalization under distributional variations. The sequential preprocessing pipeline maximizes informative variance while suppressing redundant noise, which is crucial for hyperspectral camouflage detection. As illustrated in Figure 4, the stepwise architecture unifies data acquisition, preprocessing, and classification into a lightweight and reproducible workflow. Validation across multiple acquisition distances (3 m, 5 m, and 10 m) demonstrates the model’s robustness and adaptability for real-world deployment.

Despite its high performance, the framework has certain limitations. Experiments were conducted only in a single grassland environment, and the camouflage materials consisted of a single fabric type under controlled illumination. These conditions may not fully capture the spectral and textural complexity of real-world camouflage targets.

Future work will address these limitations while further improving the framework’s interpretability and scalability. Planned efforts include expanding the dataset to cover forested and mixed-vegetation environments, incorporating multiple camouflage fabric types with distinct spectral characteristics, and conducting outdoor measurements under varying illumination and weather conditions to evaluate model robustness and transferability. Concurrently, explainable AI (XAI) techniques such as SHAP and Grad-CAM will be applied to visualize the contribution of individual spectral bands and enhance model interpretability. Additionally, large-scale deployment, automated hyperparameter optimization, and implementation on portable hyperspectral devices will be explored to improve operational efficiency and practical adaptability. Collectively, these developments aim to extend the robustness, transparency, and real-time applicability of the SPXY–ResNet framework across diverse environmental and operational conditions.