Abstract

Light scattering and attenuation in water degrade underwater images, causing low visibility and color distortion that often interfere with the high-level visual tasks of underwater autonomous robots. Most existing deep learning methods for underwater image enhancement supervise only the final output of the network and ignore the promotion effect of intermediate results on the final feature representation. This supervision scheme limits the feature representation ability and the efficiency of the network. In this paper, we present a novel idea of multiple-stage supervision to guide the network to learn useful features correctly and progressively. With this idea, we propose a pixel-wise Progressive Guided Network (PGN) for underwater image enhancement that takes advantage of the network’s intermediate results to promote the final enhancement effect. A Pixel-Wise Attention Module introduces supervision in each stage to progressively promote the representation ability of the features and the quality of the recovered image. The experimental results on several datasets demonstrate that our method outperforms recent state-of-the-art underwater image enhancement methods.

1. Introduction

With the development of underwater exploration technology, remotely operated vehicles (ROVs) and autonomous underwater vehicles (AUVs) are widely applied in underwater tasks. Underwater vision systems are increasingly used to help underwater robots complete close-range operations autonomously, because the data acquired by them have the advantages of low noise, rich details, and strong real-time performance compared with underwater sonar systems. Clear underwater images provide rich visual information that is important for underwater vision-based tasks, such as navigation and target and terrain detection [1,2,3,4]. However, due to the light attenuation and scattering in water, the images taken by underwater vision systems are often degraded by low visibility and color distortion, which seriously affect the performance of underwater visual perception tasks. Thus, it is critical to recover clear images from degraded underwater images for vision-guided underwater robots and ocean engineering.

Underwater image enhancement and restoration methods can be mainly divided into traditional methods [5,6] and deep learning-based methods [7,8]. Traditional data-based methods enhance the visual effect of underwater images through denoising and contrast adjustment. Because these methods ignore the degradation mechanism of images in water, they often cause over-enhancement, under-enhancement, and even artifacts. Traditional physical model-based methods restore degraded images by estimating scene transmission maps and ambient light through underwater imaging models. Although these methods can enhance underwater images effectively, they often produce less-than-ideal visual results because they depend heavily on the precision of the model and on manually designed prior knowledge.

In recent years, deep learning-based methods have achieved better performance than most traditional methods. Some researchers [9,10] combine physical models with deep learning to improve enhancement and restoration by learning imaging parameters or prior knowledge. However, these methods assume that all the color channels of an image have the same transmittance. In the case of complex degradation, this leads to unpleasant visual results, such as color distortion and artifacts. In contrast, model-free deep learning methods [10] directly learn the mapping between a degraded image and its corresponding clear image without an underwater imaging model or prior conditions. However, existing model-based [11,12] and model-free deep learning methods [7,13,14,15] supervise only the final output of a network, so the intermediate feature learning process of the network is uncontrollable. These supervision methods ignore the guiding effect of intermediate results on the network learning process, which is not conducive to the progressive learning of useful features. In fact, the intermediate results play an important role in promoting the network’s feature representation. Introducing reasonable supervision to the intermediate process of the network can guide the network to learn useful features progressively and stably. Following this idea of supervising the network at multiple stages rather than only at its end, we design an underwater image enhancement network that effectively uses the intermediate results to improve the enhancement effect. Our experiments also show that this design helps the network learn useful features more accurately and efficiently and obtain better underwater image enhancement results, especially when the illumination is uneven (Figure 1).

Figure 1.

Visual comparisons of the enhancement: (a) Input, (b) UGAN [16], (c) Uresnet [17], (d) FUnIE_GAN [10], (e) Water-Net [15] and (f) Ours. Our model recovers more natural colors with almost no artifacts compared with the other methods.

Our model is based on progressively guided pixel-wise attention, which decomposes the entire underwater image enhancement process into supervised stages. Specifically, we use clear underwater images at each stage to generate a guidance map through a lateral Pixel-Wise Attention Module to guide network learning. This strategy can progressively learn more accurate information and deepen the network’s image understanding and thus improve the network’s enhancement performance. The main contributions of this paper are summarized as follows:

- A Progressively Guided Network (PGN) for underwater image enhancement is proposed, which can progressively deepen the network’s understanding of image structures and details through a multiple-stage supervision strategy.

- A Pixel-Wise Attention Module (PAM) is proposed. The PAM extracts features from clear images and serves as a guide to further enhance feature representation at each stage.

- Experiments on publicly available datasets and real-world underwater images show that our method outperforms state-of-the-art methods in terms of both visual quality and quantitative metrics.

2. Related Works

2.1. Traditional Methods

Traditional data-based methods enhance the visual effect by denoising and contrast adjusting, such as histogram-based [5] and color correction methods [6,18]. Huang et al. [5] proposed a Relative Global Histogram Stretching (RGHS) based on adaptive parameter acquisition to complete contrast and color correction. Ancuti et al. [18] fused color-compensated and white-balanced versions of an underwater image with a multi-scale strategy to promote the edges and color contrast of the enhanced image. Zhang et al. [6] adjusted colors and details according to a minimum color loss principle and a maximum attenuation map-guided fusion strategy, and then utilized the mean and variance of image patches to adjust the contrast adaptively. Although these methods can enhance the visual quality, they ignore imaging principles and usually cause color deviation, noise, oversaturation or undersaturation.

Physical-based methods [19,20,21,22] reconstruct images by modeling the degradation process and inverting the model to recover the image. Some researchers designed a series of underwater image enhancement methods based on the Jaffe–McGlamery imaging model [23,24]. Peng et al. [19] proposed a model-based restoration method that estimates the depth map of underwater scenes from blurriness and light absorption. Song et al. [20] estimated the background light and transmission maps from a scene depth map obtained by a linear model, and achieved underwater image restoration. Some researchers have applied the dark channel prior (DCP) [25] to underwater image enhancement and obtained better performance [21,22]. Akkaynak et al. [21] estimated backscatter using dark pixels and known range information for image enhancement. However, DCP is not suitable for the red channel of an underwater image because red light is severely absorbed in water, so the color is not corrected well.

2.2. Deep Learning-Based Methods

With the rapid development of deep learning, some researchers utilize deep learning networks to more accurately estimate the parameters of physical models for underwater image recovery and enhancement. J. Li et al. [11] designed a WaterGAN to generate a large dataset of realistic underwater images and corresponding in-air and depth images, and proposed a restoration network for color correction. Wang et al. [12] proposed UIE-net, which consists of two parallel branches to estimate the parameters of an underwater imaging model for enhancement. C. Li et al. [8] proposed a network encoding multiple color spaces to diversify the feature representation by fusing features of RGB, Lab, and HSV color spaces into a unified structure. Based on the characteristics of underwater image attenuation, some researchers proposed transmittance estimation for different channels [26]. Their networks can effectively improve visual quality. However, due to the simplified imaging model and heavy reliance on hand-crafted priors, they are not robust in heavy scattering or insufficient-light conditions.

Model-free deep learning methods [7,13,14,15,16,17] directly learn the mapping between a degraded image and its corresponding clear image without an imaging model or prior conditions. Fabbri et al. [16] utilized CycleGAN to improve the visual quality of underwater images and generated a dataset containing a large number of image pairs. Liu et al. [17] generated synthetic underwater training data with CycleGAN, introduced the very-deep super-resolution reconstruction model (VDSR) [27] for underwater image enhancement, and proposed the underwater resnet (Uresnet). C. Li et al. [14] designed a fully data-driven UWCNN model based on the underwater scene prior, which does not need to estimate the parameters of the imaging model. They further [15] proposed the Water-Net model based on a CNN and constructed an underwater image enhancement benchmark dataset with 950 underwater images. Islam et al. [10] proposed a real-time underwater image enhancement model and used global content, color, local texture, and style information to supervise the GAN. Yang et al. [28] proposed a lightweight multiple adaptive feature fusion network that reduces the number of parameters by about 94%. Chen et al. [13] proposed an underwater image enhancement method via self-attention and contrastive learning (UIESC). They designed a lightweight self-attention module that can effectively deal with underwater degradation problems, such as haze, color distortion, and uneven illumination, by separating local features and adaptively learning different weights. Wang et al. [7] integrated the RGB and HSV color spaces into a unified network and obtained good results. Ref. [29] treated underwater image enhancement as two sub-problems solved by a two-stage network architecture, addressing the issues progressively. Ref. [30] also proposed a multi-stage method to address different degrees of unbalanced attenuation.

Although several of these model-free deep learning methods tackle underwater image enhancement progressively in multiple stages, they supervise only the final output of the network and ignore the promotion effect of intermediate results on the final enhanced results. Therefore, we propose the PGN, which introduces supervision at multiple stages to guide the network to learn features progressively and improves the performance of underwater image enhancement.

3. The Proposed Method

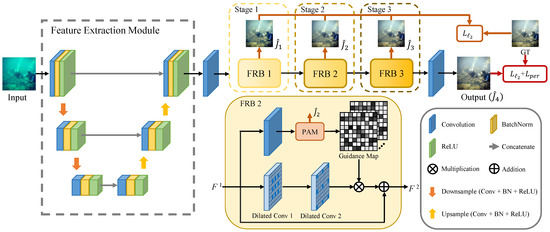

Our network consists of a Feature Extraction Module and multi-stage Feature Representation Blocks (FRBs), each of which contains a Pixel-Wise Attention Module (PAM) for supervision. To extract rich multi-scale features including the detail and structure of the input images, we choose a U-Net as the Feature Extraction Module as shown in the gray dashed box of Figure 2. To further improve the representational ability of the network, we feed the extracted features into three cascaded FRBs with dilated convolutions as the backbone. In order to enable FRBs to progressively and steadily learn more useful features, we introduce supervision in each FRB to control the feature learning process. To ensure reasonable supervision during the feature learning process, we design an effective supervisory strategy that uses PAM as a lateral structure in each FRB. PAM processes the information obtained from clear images into a pixel-level guidance map. Then, the guidance map is fed back to the backbone network.

Figure 2.

Overview of our network. We use three Feature Representation Blocks (FRBs) after the Feature Extraction Module. The input features of each FRB are fed into a convolution layer and a Pixel-Wise Attention Module (PAM), which uses clear images to supervise the features and generates a guidance map. The guidance map is then multiplied pixel-wise with the backbone features to enhance the features of important regions, and the result is sent to the next stage.

3.1. Feature Representation Block

In our network, we use several FRBs to progressively complete the underwater image enhancement task. This structure decomposes the whole enhancement process into several controllable stages. At each stage, we introduce supervision from clear images to the network. We aim to split the image restoration procedure into a series of sequential sub-processes, decomposing the intricate recovery of a severely degraded image into several less demanding restoration steps. The FRB consists of two parts: dilated convolution layers and a Pixel-Wise Attention Module (PAM). Several dilated convolution layers with different dilation factors are utilized to extract rich contextual features, and the PAM provides supervision for network learning at each stage, enhancing these features by generating guidance maps from clear images.

For the nth FRB, the block takes the output feature $F_{n-1}$ of the previous FRB as input. We first feed the input feature into the dilated convolution layers and obtain a feature map $F_n^{d}$. Meanwhile, the features learned in the previous stage are fed to a convolution layer and we obtain an intermediate image $\hat{I}_n$. The PAM uses clear images to supervise $\hat{I}_n$ and generates a guidance map $G_n$ with the same size as $F_n^{d}$. Then, the guidance map is pixel-wise multiplied by the feature $F_n^{d}$ to enhance the features of important regions. To better preserve the learned features, we add the multiplied feature and the input feature as the output of the FRB. The output feature map $F_n$ of the FRB is formulated as follows:

$$F_n = F_{n-1} + G_n \odot F_n^{d},$$

where $\odot$ denotes pixel-wise multiplication.
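The fusion step described above (the guidance map multiplied pixel-wise with the dilated-convolution feature, then added to the block input) can be sketched in a few lines. This is only an illustration on flattened toy feature maps, not the authors' implementation; the function name `frb_output` and its argument names are hypothetical.

```python
def frb_output(f_in, f_dilated, guidance):
    """Fuse one FRB stage: output = input + guidance * dilated feature.

    All three arguments are flattened feature maps of equal length
    (a hypothetical layout; a real network would use 4-D tensors).
    """
    if not (len(f_in) == len(f_dilated) == len(guidance)):
        raise ValueError("feature maps must have the same size")
    return [x + g * d for x, d, g in zip(f_in, f_dilated, guidance)]


# Toy example: a guidance value of 0 leaves the input unchanged at that
# position, while a value of 1 adds the full dilated-convolution response.
f_in = [1.0, 2.0, 3.0]
f_dilated = [0.5, 0.5, 0.5]
guidance = [0.0, 1.0, 0.5]
fused = frb_output(f_in, f_dilated, guidance)
```

The additive skip path means that a guidance map close to zero passes the input feature through almost unchanged, which matches the stated goal of preserving the features already learned.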

To learn rich contextual information, we utilize dilated convolution layers with different dilation factors to progressively expand the receptive field of our network. Dilated convolution enlarges the receptive field by inserting gaps between the elements of the convolution kernel, as set by the dilation factor, without increasing the number of parameters. Compared with standard convolution, this structure can greatly expand the receptive field when the number of convolution layers is the same. As the network deepens, dilated convolution layers can obtain more context information and deepen the network’s understanding of image structural features.

In our model, each FRB has 2 dilated convolution layers that apply 3 × 3 convolutions with different dilation factors. As the number of FRBs increases, the dilation factor starts from 1 and increases layer by layer. The dilation factors are 1, 1, 2, 4, 8 and 10.
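As a rough worked example of how these dilation factors enlarge the receptive field, the sketch below applies the standard rule that each stride-1 convolution with kernel size k and dilation d adds (k - 1) · d pixels per axis. It counts only the six dilated layers of the FRBs and ignores the U-Net and the other convolution layers, so it is an illustration rather than the receptive field of the full network; the function name is hypothetical.

```python
def receptive_field(dilations, kernel_size=3):
    """Receptive field (pixels per axis) of stacked stride-1 convolutions."""
    rf = 1
    for d in dilations:
        # Each layer widens the field by (kernel_size - 1) * dilation.
        rf += (kernel_size - 1) * d
    return rf


# Dilation factors listed in the text versus six standard 3 x 3 layers.
dilated = receptive_field([1, 1, 2, 4, 8, 10])
standard = receptive_field([1] * 6)
```

Under these assumptions the six dilated layers cover 53 pixels per axis, compared with 13 pixels for six standard 3 × 3 layers.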

3.2. Pixel-Wise Attention Module

We design a PAM as shown in Figure 3 to provide supervision at each stage of the network, enabling the network to learn useful features progressively. As a lateral structure, the PAM generates a guidance map to enhance the features obtained by the dilated convolution layers. In contrast to the existing attention strategies for image enhancement, we utilize information learned from the ground truth (GT) to provide pixel-wise guidance for feature learning. Enhancing features with a guidance map in this way provides a simple and effective pixel-wise attention mechanism that lets the network focus on key regions of an image. This strategy introduces supervision at every stage rather than only at the final output, and makes the stage-wise learning process of the network controllable and reasonable. With this module, the network progressively deepens its understanding of image details and structure as the number of FRBs increases.

Figure 3.

Overview of the architecture of the Pixel-Wise Attention Module. We utilize the intermediate recovery results to learn the pixel-wise attention, which progressively enhances the representation ability of the proposed model.

The input of the PAM is the output feature map of the previous FRB. The feature map is first fed to a convolution layer to generate an intermediate image $\hat{I}_n$. We calculate the loss between $\hat{I}_n$ and the GT to make $\hat{I}_n$ close to the corresponding clear image. Then, we learn features from $\hat{I}_n$ using 2 standard convolution layers. Finally, we apply a squeeze-and-excitation (SE) block [31] to further improve understanding of the features in the lateral structure. The SE block can exploit the inter-dependencies among channels and enhance the representational power of features. The output feature of the SE block is the guidance map $G_n$, which provides pixel-wise guidance for the dilated-convolution feature $F_n^{d}$ and thus has the same size as $F_n^{d}$.
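The channel re-weighting performed by the SE block [31] at the end of the PAM can be illustrated with a minimal sketch. It shows only the squeeze (global average pooling) and excitation (two fully connected layers with ReLU and sigmoid) steps on a tiny feature stored as channels × pixels; the convolution layers and the intermediate image prediction of the PAM are omitted, biases are dropped, and the function name and the weights in the example are made up for illustration.

```python
import math


def se_gate(feature, w1, w2):
    """Squeeze-and-excitation on a feature stored as channels x pixels.

    feature: list of C channels, each a list of pixel values.
    w1: R x C weights of the reduction layer.
    w2: C x R weights of the expansion layer (biases omitted for brevity).
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    squeezed = [sum(ch) / len(ch) for ch in feature]
    # Excitation: FC -> ReLU -> FC -> sigmoid gives a weight in (0, 1) per channel.
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed))) for row in w1]
    scale = [
        1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
        for row in w2
    ]
    # Rescale every pixel of each channel by that channel's weight.
    return [[v * s for v in ch] for ch, s in zip(feature, scale)]


# Two channels, two pixels each, with a reduction to a single hidden unit.
feature = [[1.0, 3.0], [2.0, 2.0]]
gated = se_gate(feature, w1=[[0.5, 0.5]], w2=[[0.0], [1.0]])
```

Because the sigmoid keeps every channel weight between 0 and 1, the block can only attenuate or retain channels; what is multiplied into the backbone feature afterwards is the rescaled feature itself, which serves as the guidance map.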

3.3. Training

To utilize the intermediate results to guide network learning, a reconstruction loss is used to supervise the intermediate image generated in the PAM of each stage of the network. To achieve a proper result, we additionally use a combination of a reconstruction loss and a perceptual loss between the final output and its corresponding clear image J. The final loss of our network combines the stage-wise supervision terms with these two terms on the final output.

The reconstruction loss measures the difference between the enhanced result and its corresponding J.

The perceptual loss is computed from the VGG-19 network pre-trained on ImageNet. We measure the distance between the feature representations of the enhanced result and J, where the features are taken from the relu5_4 layer of the VGG-19 network.
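How the stage-wise supervision and the final-output terms could be combined is sketched below. Several details are assumptions made only for illustration, since they cannot be read from the text: the reconstruction loss is taken to be a mean absolute error, the perceptual distance a mean squared error, all stage terms are weighted equally, and `lambda_per` is a hypothetical weight. The `features` argument is a stand-in for the VGG-19 relu5_4 feature extractor.

```python
def l1_loss(pred, target):
    """Mean absolute error between two flattened images (assumed pixel loss)."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def mse(a, b):
    """Mean squared distance (assumed feature-space distance)."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def total_loss(stage_images, final_image, clear_image, features, lambda_per=1.0):
    """Combine stage supervision with the losses on the final output.

    stage_images: intermediate images predicted inside the PAM of each stage.
    features: callable standing in for the VGG-19 relu5_4 extractor.
    lambda_per: hypothetical weight of the perceptual term.
    """
    stage_term = sum(l1_loss(img, clear_image) for img in stage_images)
    final_term = l1_loss(final_image, clear_image)
    perceptual = mse(features(final_image), features(clear_image))
    return stage_term + final_term + lambda_per * perceptual


# Toy example with three stages and an identity "feature extractor".
clear = [1.0, 1.0]
stages = [[0.0, 1.0], [1.0, 1.0], [1.0, 1.0]]
final = [1.0, 0.5]
loss = total_loss(stages, final, clear, features=lambda img: img)
```

In the toy example only the first stage and the final output deviate from the clear image, so the total is 0.5 (stage term) + 0.25 (final reconstruction) + 0.125 (perceptual stand-in) = 0.875.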

4. Experiments

In this section, we compare PGN with state-of-the-art methods and show the quantitative and visual results. We also provide a series of ablation studies to verify each component of the PGN. In the training process, our network is trained for 200 epochs on an Nvidia TITAN RTX GPU (Nvidia, Santa Clara, CA, USA) with the TensorFlow framework. We use the Adam optimizer for training; the learning rate settings are given in Section 4.1.2.

4.1. Experiment Settings

4.1.1. Datasets

To evaluate our method, we conducted experiments on 5 public datasets: EUVP [10], UGAN [16], UIEB [15], C60 [15], and U45 [32]. In addition, to verify the enhancement capability of our PGN in a real underwater environment, we tested the PGN on real-world underwater images captured by an AUV. These images are more representative of real-world underwater exploration applications than currently publicly available underwater datasets.

The EUVP dataset is divided into three subsets according to differences in scene, light source, and degree of degradation: Underwater Dark, Underwater ImageNet, and Underwater Scenes. Underwater Dark has 5550 pairs of images for training and 570 images for testing. Underwater ImageNet contains 3700 training pairs and 1270 test pairs. Underwater Scenes contains 2185 training pairs and 130 test pairs. The UGAN dataset contains 5000 pairs of images for training and 1128 pairs for testing.

To verify the enhancement capability of our model on real underwater data, we tested PGN on three widely used real-world underwater datasets: UIEB, C60 (Challenging-60), and U45. The UIEB dataset contains 950 real underwater images, 890 of which have corresponding reference images. We randomly selected 760 pairs of images for training and used the remaining 130 pairs for testing. C60 [15] contains the 60 real-world underwater images from the UIEB dataset that have no satisfactory reference images. U45 [32] collects 45 images exhibiting color casts, low contrast, and haze-like degradation.

4.1.2. Training Settings

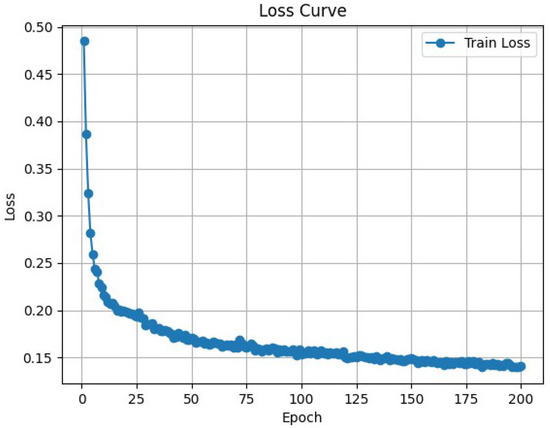

The training and all experimental procedures were performed on a single NVIDIA GeForce RTX 3090 GPU using the TensorFlow framework. The input images of the model were uniformly resized to 128 × 128 pixels. For each dataset, we randomly selected 90% of the image pairs for training and reserved the remaining 10% for testing. Our Progressively Guided Network (PGN) was trained for 200 epochs on each dataset with a batch size of 32, using the Adam optimizer with a momentum factor of 0.9. The initial learning rate was set to 0.00035 and decayed with a cosine annealing schedule. The training loss curves of the proposed model are shown in Figure 4.

Figure 4.

The training loss curves of the proposed model.
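The cosine annealing schedule mentioned in the training settings can be written out as a short function. The initial learning rate (0.00035) and the 200 epochs come from the text; that the rate is updated once per epoch, decays to a minimum of zero over the whole run, and uses no warm restarts are assumptions made for this sketch.

```python
import math


def cosine_annealing_lr(epoch, total_epochs=200, lr_init=0.00035, lr_min=0.0):
    """Learning rate after `epoch` epochs under single-cycle cosine annealing."""
    # Goes from 1 at epoch 0 to 0 at total_epochs along half a cosine period.
    cos_term = 0.5 * (1.0 + math.cos(math.pi * epoch / total_epochs))
    return lr_min + (lr_init - lr_min) * cos_term


# Learning rate at the start, the midpoint, and the end of training.
schedule = [cosine_annealing_lr(e) for e in (0, 100, 200)]
```

Under these assumptions the rate starts at 0.00035, passes through half that value at epoch 100, and reaches zero at epoch 200.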

4.1.3. Evaluation Metrics

We apply the Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index (SSIM) to the full-reference evaluations of the enhanced images. Due to the particularity of the underwater environment, we also employ three no-reference image quality assessment methods, Underwater Image Sharpness Measure (UISM), Multi-Scale Image Quality (MUSIQ) and cosine similarity (COSINE), to evaluate the performance of different methods. Higher UISM and MUSIQ scores represent better human visual perception of an image. We adopt the VGG network to extract features from images and then calculate the cosine similarity between them. The closer the cosine similarity value to 1, the more similar the two images.
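Two of the metrics above have simple closed forms, sketched here on flattened inputs. The PSNR function assumes 8-bit images with a peak value of 255, and the cosine similarity operates on feature vectors that would come from the VGG network in the paper; both function names are illustrative, and SSIM, UISM, and MUSIQ are not reproduced.

```python
import math


def psnr(pred, target, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two flattened images."""
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Vectors pointing in the same direction give a similarity of 1,
# orthogonal vectors give 0.
same_direction = cosine_similarity([3.0, 4.0], [6.0, 8.0])
orthogonal = cosine_similarity([1.0, 0.0], [0.0, 1.0])
```

Cosine similarity ignores the magnitude of the feature vectors, so it compares the pattern of feature activations rather than overall brightness or contrast.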

4.1.4. Compared Methods

To evaluate the effectiveness of our method, we compare it with existing underwater image enhancement methods, including 4 traditional methods (IBLA [19], RGHS [5], ULAP [20] and MLLE [6]) and 6 deep learning-based methods (UGAN [16], Uresnet [17], FUnIE_GAN [10], Water-Net [15], Ucolor [8] and TACL [33]).

4.2. Quantitative Comparisons

Table 1 shows the results on the EUVP and UGAN datasets. Our method obtains the best PSNR and SSIM results on all three subsets of the EUVP dataset and on the UGAN dataset. For UISM and MUSIQ, our approach also achieves the best or second-best results on almost all subsets of the EUVP dataset. This shows that our method produces the enhancement results closest to the reference images while also meeting the requirements of human visual perception. On the UGAN dataset, our method obtains the second-best UISM and MUSIQ values, while MLLE obtains the best. Compared with our results, the underwater images enhanced by MLLE show higher contrast, and images with higher contrast tend to obtain higher no-reference scores, which explains why MLLE obtains the best UISM and MUSIQ values on the UGAN dataset. For PSNR, SSIM, and MUSIQ, our method is best on Underwater Dark and Underwater Scenes. Most of the images in Underwater Dark have weak light and serious degradation. Our model also achieves a 0.5 percentage point increase in the PSNR metric. This shows that, when the degradation is serious or the scene is complex, our method is still able to obtain satisfactory results. It also indicates that the PAM can provide beneficial information, enrich the feature representation, and accurately enhance the underwater images. The images in the UGAN dataset are the closest to real underwater environments. On both the SSIM and PSNR metrics, our model surpasses the second-best algorithm by 2 points.

Table 1.

Quantitative evaluation of different methods on the EUVP [10] and UGAN [16] datasets. The best results are highlighted in bold, and the second-best results are underlined. ↑ indicates that larger values are better.

Table 2 shows the quantitative results of different methods on the real-world underwater image datasets UIEB, C60, and U45. The PSNR and SSIM values of our method are the best on the real underwater scene dataset UIEB. Our method improves the PSNR and SSIM values by 3.84% and 1.91% on average compared with the second-best methods, Water-Net and Ucolor, respectively. Combined with Figure 5, it can be seen that some methods, such as IBLA, RGHS, and ULAP, clearly do not remove color distortion well but still obtain high UISM and MUSIQ values. Although our method did not achieve the best no-reference metrics on UIEB, it achieved the highest PSNR and SSIM, and its visual results were outstanding. The C60 dataset is composed of 60 challenging images from UIEB without satisfactory reference images. PGN obtains the highest UISM and MUSIQ on the C60 dataset, which indicates strong enhancement performance on challenging images. PGN also obtained the best UISM value and the second-best MUSIQ value on the U45 dataset. These results indicate that our supervision strategy helps the network progressively learn accurate features and finally obtain good results. The experiments demonstrate that our algorithm not only restores severely degraded images effectively but also performs well on real-world images.

Table 2.

Quantitative evaluation of different methods on the UIEB [15], C60 [15], and U45 [32] datasets. The best results are highlighted in bold, and the second-best results are underlined. ↑ indicates that larger values are better.

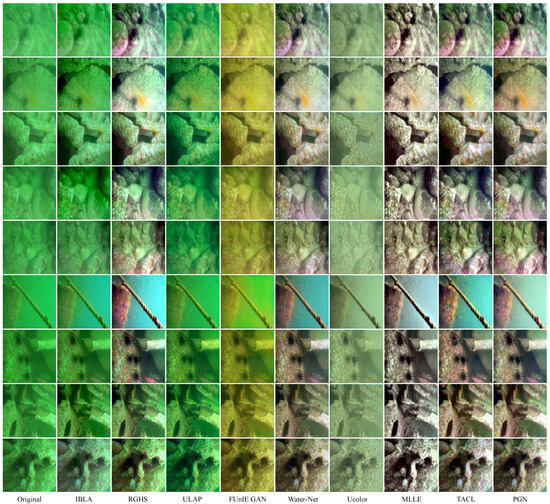

Figure 5.

Visual comparison on the UIEB, C60, and U45 datasets. The compared methods are arranged from left to right: Original images, IBLA [19], RGHS [5], ULAP [20], FUnIE_GAN [10], Water-Net [15], Ucolor [8], MLLE [6], TACL [33] and PGN. Our method performs best on color correction and is closest to the ground truth, especially when the image is rich in color.

4.3. Visual Comparisons

We randomly selected images from the real-world underwater datasets UIEB, C60, and U45, and present them in Figure 5. The images recovered by the PGN have clearer texture and look more natural. In the sample from the UIEB dataset, our method recovers both background and foreground well when the illumination is complex. At the positions with light spots, the image enhanced by PGN shows neither overbrightness nor artifacts. Most traditional methods only enhance the contrast of the image but fail to correct the color distortion. Traditional physical-based methods depend on the limited parameters of simplified imaging models and cannot remove the degradation completely. Although deep learning-based methods can sometimes remove color distortion well, their restored images have low contrast, so the entire image appears relatively dark, with colors that are not realistic or vivid. Although the image enhanced by MLLE [6] appears relatively bright, its colors exhibit noticeable distortion and lack realism.

The task of enhancing the images in C60 is very challenging. Traditional methods including IBLA, RGHS, and ULAP are almost completely ineffective in this difficult situation. In the sample from the C60 dataset, the result of FUnIE_GAN shows obvious distortion, with poor visibility of the shapes of the fish and severe blurring of the edges. The other deep learning-based methods improve the color distortion problem, but overall, the visual effects of the results are not satisfactory enough. In the second sample, our method can effectively remove the color distortion and obtain more natural enhanced foreground and background results. The results from almost all methods except MLLE and PGN have noticeable blue or green color distortion. The MLLE [6] model can eliminate the obvious green color distortion, but it also generates more artificial details. Only our PGN effectively removes color distortion while restoring a more natural enhanced image.

For the first sample from U45, our PGN and TACL obtain the best enhanced results. The other methods either do not remove the color distortion completely or produce enhanced images that are not natural enough. In the second sample from U45, the results from almost all methods except MLLE and PGN have noticeable color distortion. Although MLLE is better at removing color distortion, its results have less color richness. PGN recovers the original rich color of the shells well, although some color distortion remains in the upper left corner of the image.

We randomly selected an image from each of the three subsets of the EUVP dataset, as shown in Figure 6. The degradation of the images in the Underwater Dark subset is relatively severe, in which case the existing methods cannot recover natural results, especially for the degraded colors. The images from Underwater ImageNet recovered by FUnIE_GAN [10] are too bright, so the restored images lack realism. Water-Net [15] and Ucolor [8] cannot remove the color distortion. For the image from Underwater Scenes, the underwater light is uneven; our method better recovers the effect of the light changes, while the other methods show obvious artifacts.

Figure 6.

Visual comparisons on the EUVP dataset. Images from top to bottom are from Underwater Dark, Underwater ImageNet, and Underwater Scenes, respectively. The compared methods are arranged from left to right: Original images, IBLA [19], RGHS [5], ULAP [20], FUnIE_GAN [10], Water-Net [15], Ucolor [8], MLLE [6], PGN and the Reference. Our method performs best on color correction and detail recovery. Especially in complex lighting conditions, our method preserves the most detail, with no artifacts, and the images are neither too light nor too dark.

We also show the results on the UGAN dataset in Figure 7. Compared with the state-of-the-art methods, our method not only restores the color closest to reference images but also preserves the structure and details. It can be seen that the results of IBLA and RGHS still have significant color distortion. When the scene is colorful and rich, our method can accurately recover the color features of images. Most results from the other methods are too vivid or too dim, and the image enhanced by our method is very clear for richly textured areas.

Figure 7.

Visual comparison on the UGAN dataset. The compared methods are arranged from left to right: Original images, IBLA [19], RGHS [5], ULAP [20], FUnIE_GAN [10], Water-Net [15], Ucolor [8], MLLE [6], PGN and the Reference. Our method performs best on detail recovery and color correction, and especially in the sample from UIEB, our method preserves the most detail in complex lighting conditions.

Figure 8 shows the enhancement results of PGN on real underwater images that we collected. Many methods, such as IBLA, RGHS, ULAP, and FUnIE_GAN, cannot recover the degraded images obtained by the vision equipment of underwater vehicles. Although Water-Net, Ucolor, and MLLE are able to correct color distortion to a certain extent, their results are unsatisfactory. Only TACL and PGN produce natural visual results, and our PGN restores these images with remarkable improvements in both detail and color, resulting in a highly natural effect.

Figure 8.

PGN’s enhancement of underwater images captured by the vision sensor on the AUV. The compared methods are arranged from left to right: Original images, IBLA [19], RGHS [5], ULAP [20], FUnIE_GAN [10], Water-Net [15], Ucolor [8], MLLE [6], TACL [33] and PGN. In an underwater environment with very severe color distortion and even turbidity, our PGN can still recover satisfactory results.

4.4. Ablation Study

In this section, we present ablation studies on UIEB to analyze two core components of the PGN: the Pixel-Wise Attention Module (PAM) and the Feature Representation Block (FRB).

4.4.1. Pixel-Wise Attention Module

To verify the effect of the PAM, we remove it and keep only the dilated convolutions of the network. As shown in Table 3, the network’s performance decreases without the PAM. When the PAM is introduced, both the quantitative results and the visual quality improve, with the most significant gains in texture and detail, because the attention mechanism provides strong guidance. Next, we keep the PAMs but remove the supervision inside them, applying the loss only to the final output. Without this supervision, the performance is even worse than when only the dilated convolutions are retained. Figure 9 also shows that, without the PAM or its supervision, the colors of the enhanced image are not realistic enough and the background is noisy. In addition, we replace the dilated convolution layers of the backbone with standard convolution layers; the texture of the enhanced image is then not as sharp as that of the full model. The full model achieves the best results. This validates that our supervision strategy can effectively guide the lateral structure to learn useful information, correct the features learned by the backbone, and improve the performance.
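The difference between supervising only the final output and supervising every stage can be illustrated with a small, framework-free sketch. It is not the authors' implementation: the L1 loss, the equal stage weights, and the names `l1_loss`, `final_only_loss`, and `multi_stage_loss` are all assumptions made for illustration, and images are represented as flattened lists of pixel values in [0, 1].

```python
from typing import List, Optional, Sequence

Image = Sequence[float]  # flattened image, pixel values assumed in [0, 1]


def l1_loss(pred: Image, target: Image) -> float:
    """Mean absolute error between two flattened images of equal size."""
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same number of pixels")
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def final_only_loss(stage_outputs: List[Image], target: Image) -> float:
    """Ablation variant: only the last stage is compared with the reference."""
    return l1_loss(stage_outputs[-1], target)


def multi_stage_loss(
    stage_outputs: List[Image],
    target: Image,
    stage_weights: Optional[List[float]] = None,
) -> float:
    """Multi-stage variant: every intermediate output is supervised as well."""
    if stage_weights is None:
        stage_weights = [1.0] * len(stage_outputs)  # assumption: equal weights
    if len(stage_weights) != len(stage_outputs):
        raise ValueError("one weight per stage is required")
    return sum(
        w * l1_loss(out, target) for w, out in zip(stage_weights, stage_outputs)
    )


# Toy example: three stages that move progressively closer to the reference.
reference = [0.2, 0.4, 0.6, 0.8]
stages = [
    [0.4, 0.6, 0.8, 1.0],  # stage 1: every pixel off by 0.2
    [0.3, 0.5, 0.7, 0.9],  # stage 2: every pixel off by 0.1
    [0.2, 0.4, 0.6, 0.8],  # stage 3: matches the reference
]
print(final_only_loss(stages, reference))   # zero: early-stage errors are invisible
print(multi_stage_loss(stages, reference))  # about 0.3: early stages still contribute
```

In the final-only variant, the early stages receive no direct error signal of their own, whereas the multi-stage loss penalizes each intermediate result directly. This is one plausible reading of why removing the supervision hurts in the ablation.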

Table 3.

Ablation study of our method. w/o means the variant model without the related module.

Figure 9.

Visual comparisons with different structures, where our method achieves the most accurate reconstruction compared to the others.

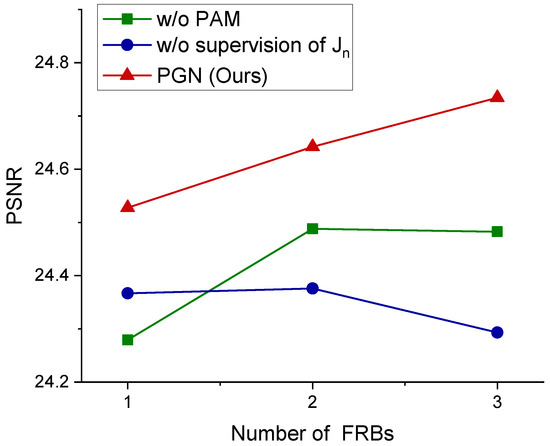

4.4.2. Feature Representation Block

To verify the progressive ability of our supervision strategy, we train the network separately with different numbers (1, 2, 3) of FRBs under different structures. With our supervision strategy, as the number of FRBs increases, the network deepens progressively and the dilation factors of the convolutions increase layer by layer, so the network learns more useful features and achieves better performance. In Figure 10, our full model conforms to this expectation: the PSNR improves as the number of FRBs increases. However, when the supervision or the PAMs are removed, the performance does not improve substantially as FRBs are added, and it even begins to deteriorate when the number of FRBs exceeds 2. This shows that our supervision strategy can continuously guide the network to learn useful features as the network deepens. We also train our network with more FRBs. Unfortunately, the performance of the model deteriorates heavily when the number of FRBs exceeds 4. This may be due to two factors. First, as the number of FRBs increases, the model learns more effective features but also introduces redundancy and noise, which harms performance. Second, an excessive number of parameters causes the model to fit overly complex features of the training set, which in turn reduces its generalization ability.
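To make the effect of layer-by-layer dilation growth concrete, the sketch below computes the one-dimensional receptive field of a stack of stride-1 convolutions, where each layer with kernel size k and dilation d widens the field by (k - 1) * d. The dilation schedule (1, 2, 3, ...), the 3 × 3 kernel, and the single convolution per block are hypothetical choices for illustration; the actual FRB configuration is not stated in this section.

```python
from typing import List


def receptive_field(dilations: List[int], kernel_size: int = 3) -> int:
    """Receptive field along one axis of stacked stride-1 convolutions.

    Each layer with kernel size k and dilation d widens the field by (k - 1) * d.
    """
    field = 1
    for d in dilations:
        field += (kernel_size - 1) * d
    return field


# Hypothetical schedule: the i-th block uses dilation i (1, 2, 3, ...).
for num_blocks in (1, 2, 3):
    dilated = receptive_field(list(range(1, num_blocks + 1)))
    standard = receptive_field([1] * num_blocks)
    print(num_blocks, dilated, standard)
# prints:
# 1 3 3
# 2 7 5
# 3 13 7
```

Under these assumptions, three dilated blocks cover 13 pixels per axis versus 7 for standard convolutions at the same parameter count, which is consistent with the observation that replacing dilated convolutions with standard ones yields less sharp texture.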

Figure 10.

Variation trend of PSNR with an increasing number of FRBs under different network structures. With our effective supervision strategy, as the number of FRBs increases, the network deepens progressively, learns more useful features, and obtains better performance.
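For reference, the PSNR plotted in Figure 10 follows the standard definition, 10 · log10(MAX² / MSE). The sketch below is a minimal pure-Python version; the function name `psnr`, the flattened-list image representation, and the peak value of 1.0 (images normalized to [0, 1]) are assumptions for illustration, not details taken from the paper.

```python
import math
from typing import Sequence


def psnr(pred: Sequence[float], ref: Sequence[float], max_value: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB between two flattened images."""
    if len(pred) != len(ref) or len(pred) == 0:
        raise ValueError("pred and ref must be non-empty and of equal length")
    # Mean squared error over all pixels.
    mse = sum((p - r) ** 2 for p, r in zip(pred, ref)) / len(pred)
    if mse == 0.0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


reference = [0.2, 0.4, 0.6, 0.8]
coarse = [0.3, 0.5, 0.7, 0.9]      # every pixel off by 0.1
fine = [0.21, 0.41, 0.61, 0.81]    # every pixel off by 0.01
print(round(psnr(coarse, reference), 2))  # roughly 20 dB
print(round(psnr(fine, reference), 2))    # roughly 40 dB
```

A tenfold reduction in per-pixel error raises the PSNR by about 20 dB, so even small vertical differences between the curves in a PSNR plot correspond to noticeable changes in reconstruction error.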

5. Conclusions

We propose an underwater image enhancement method that uses a Pixel-Wise Attention Module to introduce supervision at intermediate stages, progressively improving the network’s feature representation ability and, in turn, the final enhancement result. First, the proposed method breaks down the image restoration process into several controllable sub-processes; by supervising and utilizing multiple intermediate results, it restores degraded underwater images progressively. Second, the Pixel-Wise Attention Module weights the image features with fine granularity to optimize the features used for restoration. Experiments indicate that the proposed method can effectively recover the distorted colors and texture details of underwater images. The idea of introducing supervision in the intermediate process of the network and guiding the network to learn useful features progressively has potential applications in other image recovery tasks, such as denoising and inpainting.

Author Contributions

Conceptualization, H.J.; data curation, B.F. and Z.Z.; formal analysis, H.J. and Q.W.; funding acquisition, Y.T.; investigation, Q.W.; methodology, Q.W.; project administration, Q.W. and Y.T.; resources, Y.T.; software, H.J. and Z.Z.; supervision, Y.T.; validation, B.F.; visualization, H.J. and Z.Z.; writing (review and editing), Y.T. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the National Natural Science Foundation of China (Grant No. 62073205) and the Project of the Education Department of Liaoning Province (Grant No. LJKMZ20221825).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The EUVP dataset is an open dataset accessed on 18 February 2020 and can be downloaded at https://doi.org/10.1109/LRA.2020.2974710. The UGAN dataset is an open dataset accessed on 28 May 2018 and can be downloaded at https://doi.org/10.1109/ICRA.2018.8460552. The UIEB dataset is an open dataset accessed on 11 January 2019 and can be downloaded at https://doi.org/10.1109/TIP.2019.2955241. The C60 dataset is an open dataset accessed on 28 November 2019 and can be downloaded at https://doi.org/10.1109/TIP.2019.2955241. The U45 dataset is an open dataset accessed on 17 June 2019 and can be downloaded at https://arxiv.org/abs/1906.06819.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Ren, W.; Luo, J.; Jiang, W.; Qu, L.; Han, Z.; Tian, J.; Liu, H. Learning Self- and Cross-Triplet Context Clues for Human-Object Interaction Detection. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 9760–9773.

2. Wang, Y.; Ma, X.; Wang, J.; Hou, S.; Dai, J.; Gu, D.; Wang, H. Robust AUV Visual Loop-Closure Detection Based on Variational Autoencoder Network. IEEE Trans. Ind. Inform. 2022, 18, 8829–8838.

3. Yuan, X.; Li, W.; Chen, G.; Yin, X.; Li, X.; Liu, J.; Zhao, J.; Zhao, J. Visual and Intelligent Identification Methods for Defects in Underwater Structure Using Alternating Current Field Measurement Technique. IEEE Trans. Ind. Inform. 2022, 18, 3853–3862.

4. Wang, Q.; Huang, Z.; Fan, H.; Fu, S.; Tang, Y. Unsupervised person re-identification based on adaptive information supplementation and foreground enhancement. IET Image Process. 2024, 18, 4680–4694.

5. Huang, D.; Wang, Y.; Song, W.; Sequeira, J.; Mavromatis, S. Shallow-Water Image Enhancement Using Relative Global Histogram Stretching Based on Adaptive Parameter Acquisition. In Proceedings of the MultiMedia Modeling, Bangkok, Thailand, 5–7 February 2018; pp. 453–465.

6. Zhang, W.; Zhuang, P.; Sun, H.H.; Li, G.; Kwong, S.; Li, C. Underwater Image Enhancement via Minimal Color Loss and Locally Adaptive Contrast Enhancement. IEEE Trans. Image Process. 2022, 31, 3997–4010.

7. Wang, Y.; Guo, J.; Gao, H.; Yue, H. UIEC^2-Net: CNN-based Underwater Image Enhancement using Two Color Space. Signal Process. Image Commun. 2021, 96, 116250.

8. Li, C.; Anwar, S.; Hou, J.; Cong, R.; Guo, C.; Ren, W. Underwater Image Enhancement via Medium Transmission-Guided Multi-Color Space Embedding. IEEE Trans. Image Process. 2021, 30, 4985–5000.

9. Lin, Y.; Shen, L.; Wang, Z.; Wang, K.; Zhang, X. Attenuation Coefficient Guided Two-Stage Network for Underwater Image Restoration. IEEE Signal Process. Lett. 2021, 28, 199–203.

10. Islam, M.J.; Xia, Y.; Sattar, J. Fast Underwater Image Enhancement for Improved Visual Perception. IEEE Robot. Autom. Lett. 2020, 5, 3227–3234.

11. Li, J.; Skinner, K.A.; Eustice, R.M.; Johnson-Roberson, M. WaterGAN: Unsupervised Generative Network to Enable Real-time Color Correction of Monocular Underwater Images. IEEE Robot. Autom. Lett. 2018, 3, 387–394.

12. Wang, K.; Hu, Y.; Chen, J.; Wu, X.; Zhao, X.; Li, Y. Underwater Image Restoration based on a Parallel Convolutional Neural Network. Remote Sens. 2019, 11, 1591.

13. Chen, R.; Cai, Z.; Yuan, J. UIESC: An Underwater Image Enhancement Framework via Self-Attention and Contrastive Learning. IEEE Trans. Ind. Inform. 2023, 19, 11701–11711.

14. Li, C.; Anwar, S.; Porikli, F. Underwater Scene Prior Inspired Deep Underwater Image and Video Enhancement. Pattern Recognit. 2020, 98, 107038.

15. Li, C.; Guo, C.; Ren, W.; Cong, R.; Hou, J.; Kwong, S.; Tao, D. An Underwater Image Enhancement Benchmark Dataset and Beyond. IEEE Trans. Image Process. 2020, 29, 4376–4389.

16. Fabbri, C.; Islam, M.J.; Sattar, J. Enhancing Underwater Imagery using Generative Adversarial Networks. In Proceedings of the IEEE International Conference on Robotics and Automation, Brisbane, Australia, 21–25 May 2018; pp. 7159–7165.

17. Liu, P.; Wang, G.; Qi, H.; Zhang, C.; Zheng, H.; Yu, Z. Underwater Image Enhancement with a Deep Residual Framework. IEEE Access 2019, 7, 94614–94629.

18. Ancuti, C.O.; Ancuti, C.; De Vleeschouwer, C.; Bekaert, P. Color Balance and Fusion for Underwater Image Enhancement. IEEE Trans. Image Process. 2018, 27, 379–393.

19. Peng, Y.T.; Cosman, P.C. Underwater Image Restoration Based on Image Blurriness and Light Absorption. IEEE Trans. Image Process. 2017, 26, 1579–1594.

20. Song, W.; Wang, Y.; Huang, D.; Tjondronegoro, D. A Rapid Scene Depth Estimation Model Based on Underwater Light Attenuation Prior for Underwater Image Restoration. In Proceedings of the Advances in Multimedia Information Processing, Hefei, China, 21–22 September 2018; pp. 678–688.

21. Akkaynak, D.; Treibitz, T. Sea-Thru: A Method for Removing Water From Underwater Images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 1682–1691.

22. Liang, Z.; Ding, X.; Wang, Y.; Yan, X.; Fu, X. GUDCP: Generalization of Underwater Dark Channel Prior for Underwater Image Restoration. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 4879–4884.

23. McGlamery, B.L. A Computer Model For Underwater Camera Systems. In Proceedings of the Ocean Optics VI, Monterey, CA, USA, 23 October 1980; Volume 0208, pp. 221–231.

24. Jaffe, J. Computer modeling and the design of optimal underwater imaging systems. IEEE J. Ocean. Eng. 1990, 15, 101–111.

25. He, K.; Sun, J.; Tang, X. Single Image Haze Removal using Dark Channel Prior. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 2341–2353.

26. Wang, Y.; Liu, H.; Chau, L.P. Single Underwater Image Restoration Using Adaptive Attenuation-Curve Prior. IEEE Trans. Circuits Syst. I Regul. Pap. 2018, 65, 992–1002.

27. Kim, J.; Lee, J.K.; Lee, K.M. Accurate Image Super-Resolution Using Very Deep Convolutional Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1646–1654.

28. Yang, H.H.; Huang, K.C.; Chen, W.T. LAFFNet: A Lightweight Adaptive Feature Fusion Network for Underwater Image Enhancement. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 685–692.

29. Wu, J.; Liu, X.; Qin, N.; Lu, Q.; Zhu, X. Two-Stage Progressive Underwater Image Enhancement. IEEE Trans. Instrum. Meas. 2024, 73, 1–18.

30. Liu, J.; Liu, Z.; Wei, Y.; Ouyang, W. Recovery for underwater image degradation with multi-stage progressive enhancement. Opt. Express 2022, 30, 11704–11725.

31. Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

32. Li, H.; Li, J.; Wang, W. A Fusion Adversarial Underwater Image Enhancement Network with a Public Test Dataset. arXiv 2019, arXiv:1906.06819.

33. Liu, R.; Jiang, Z.; Yang, S.; Fan, X. Twin Adversarial Contrastive Learning for Underwater Image Enhancement and Beyond. IEEE Trans. Image Process. 2022, 31, 4922–4936.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).