Abstract

Artificial Intelligence, especially Convolutional Neural Networks (CNN), is gaining importance in health sciences, including forensic odontology. This study aimed to systematically analyze elements for automated dental status registration on OPGs using CNNs, on different image segments and resolutions. A dataset of 1400 manually annotated digital OPGs was divided into train, test, and validation sets (75%–12.5%–12.5%). Pre-trained and from-scratch models were developed and evaluated on images from full OPGs to individual and segmented teeth and sizes from 256 px to 1820 px. Performance was measured by Sørensen–Dice coefficient for segmentation and mean average precision (mAP) for detection. For segmentation, the UNet Big model was the most successful, using segmented or individual images, achieving 89.14% for crown and 84.90% for fillings, and UNet with 79.09% for root canal fillings. Caries presented a significant challenge, with the UNet model achieving the highest score of 64.68%. In detection, YOLOv5x6, trained from scratch, achieved the highest mAP of 98.02% on 1820 px images. Larger image resolutions and individual tooth inputs significantly improved performance. This study confirms the success of CNN models in specific tasks on OPGs. Image quality and input (individual tooth, resolutions above 640 px) critically influenced model competence. Further research with from-scratch models, higher resolutions, and smaller image segments is recommended.

1. Introduction

Artificial Intelligence (AI), specifically Convolutional Neural Networks (CNNs), is gaining prominence in health sciences; however, its application in Forensic Odontology for medico-legal identification through dental evidence like orthopantomograms (OPGs) remains in an early stage.

Dental identification is one of the three primary methods of human identification, with the same level of significance as DNA and fingerprints. It relies on key features present in human dental structures. The specific dental morphology, together with different dental treatments during lifetime, ensures the uniqueness of dentition in each person, on which dental identification is based [1].

This process involves the analysis of both antemortem (AM) and postmortem (PM) data. Comparing AM and PM dental status is a critical step in the identification process. Therefore, recording dental status is a fundamental aspect of dental identification in forensics. However, traditional methods of dental status recording are time-consuming, particularly in scenarios involving mass casualties and large volumes of antemortem data to process [2]. Automating this procedure by using artificial intelligence would represent a significant contribution in the field of forensic dental medicine, streamlining and enhancing the efficiency of dental identification processes.

Dental status involves documenting the condition of each tooth in the upper and lower jaws within an individual’s oral cavity. This process is a basic practice in clinical dentistry, serving as a key for diagnosis, treatment planning, and patient care. The registration of dental status is a process used across all clinical branches of dentistry. When registering dental status, the clinician records various details, including the number of present teeth, extracted teeth, teeth affected by caries, filled teeth, and those supported by prosthetic devices such as crowns, bridges, or dentures. Additionally, dental status includes the registration of all other characteristics specific to the condition of each tooth and the stomatognathic system as a whole. In everyday practice, an intraoral examination is crucial for registering dental status, which may be supplemented by radiological examinations (e.g., X-rays, computed tomography—CT, cone beam computed tomography—CBCT, and others) [3]. The main advantage of registering dental status using an orthopantomogram (OPG) is its non-invasive nature. It also allows the identification of features that are not visible through clinical examination, e.g., endodontic treatment and implants. Additionally, this technique is applicable to both living and deceased individuals [2].

AI is an overarching domain that encompasses a wide range of techniques and methodologies designed to create intelligent solutions capable of performing tasks that typically require human intelligence. Deep learning, a subfield of AI, uses neural networks, inspired by the human brain’s structure, to acquire knowledge from vast datasets by automatically identifying and extracting features from raw data such as images, sounds, and text. In forensic dentistry, specific AI-based technologies include deep neural networks, artificial neural networks, machine learning, and computer technology [4,5]. CNNs are a type of deep neural network frequently used in image analysis problems and have proven successful in various other fields [6]. The main advantage of convolutional networks is their specific adaptation to the detection of significant patterns in images, unlike fully connected neural networks [7]. In dental medicine, different AI models are being developed for tooth recognition, caries detection, evaluation of periodontal bone loss, detection of periapical pathologies, treatment, and prognosis assessment [8,9,10,11,12,13]. In dental forensic science, specific algorithms can be trained to predict age and sex by analyzing distinct dental markers [14,15,16].

The purpose of our work is to systematically analyze all the elements required for automated dental status registration on OPGs using CNNs, in order to identify the method that achieves the highest level of accuracy and reliability.

Deep learning for automated dental status registration on panoramic radiographs involves several steps, including preprocessing, segmentation, and classification. The first step is to preprocess the image to improve its quality and remove any noise (unwanted or irrelevant information in the image that can interfere with the accurate analysis of the data), which may obstruct further analysis. Segmentation involves isolating the teeth or dental structures of interest from the rest of the image, and classification is the process of identifying specific characteristics. Deep learning algorithms can extract and learn features from large datasets of labelled panoramic radiographs. In the dental field, various image recognition architectures are employed to address different scenarios, including YOLOv4, SSR-Net, VGG16, and ResNet101 [17]. Basic elements for automated dental status registration are tooth detection and segmentation of dental procedures. Segmentation and identification of teeth are basic parts of dental image analysis, which enable the correct distinction and classification of individual teeth and dental interventions. Basic tasks like tooth recognition and morphological structure in radiographic images, including OPGs, have been automated with advanced deep learning models, such as CNNs [18]. Recognition emphasizes the identification and classification of teeth according to their morphology and spatial arrangement, while segmentation focuses on the extraction of individual teeth from adjacent tissues and dental restorations for comprehensive examination. These methodologies are critical in both clinical dentistry and forensic analysis to enhance diagnostic precision, facilitate treatment strategies, and minimize the potential for human error. For example, the size, shape, and wear patterns of teeth can provide valuable information for forensic identification [2]. 
The training process involves feeding the algorithm with large amounts of labelled data, allowing it to learn and recognize patterns. Once trained, the algorithm can be applied to new, unseen radiographs to make predictions. CNNs are commonly used in this context, as they are well-suited for image processing tasks and have demonstrated high accuracy in detecting dental features [18]. Both pre-trained and trained-from-scratch models have distinct advantages and disadvantages when applied to dental radiographic analysis (Table 1).

Table 1.

Comparison between pre-trained and trained from scratch models.

Training a model from scratch requires learning all feature representations without prior knowledge. While this approach offers maximum flexibility for novel tasks, it needs significantly larger datasets, extended training times, and substantial computational resources. Without robust data augmentation and regularization techniques, models trained from scratch are prone to overfitting and often underperform compared to pre-trained counterparts. A comparative analysis highlights the advantages and limitations of both approaches [19]. Pre-trained models typically achieve higher accuracy due to their learned representations, while models trained from scratch require extensive tuning and data to match their performance. Training time is significantly reduced when using pre-trained models, as they converge within fewer epochs, whereas models trained from scratch demand extensive training. Data requirements further differentiate the two approaches, with pre-trained models generalizing effectively with limited labeled data, while training from scratch requires a large dataset. Additionally, computational costs are lower for pre-trained models due to reduced training demands, whereas training from scratch incurs a higher computational burden. While pre-trained models may be constrained by their initial training dataset, models trained from scratch offer full adaptability to novel tasks if sufficient data are available [19].

Despite the progress made, challenges remain in the detection and segmentation of teeth and other dental features. A primary concern is the size of the database, which plays a crucial role in developing effective deep learning models. Additionally, image quality can significantly affect the results, particularly when overlaps, distortions, and other factors compromise the clarity of the OPGs. Data processing and labelling also present specific challenges that must be addressed and optimized for effective model learning [20]. Nevertheless, convolutional networks have great potential to contribute to dental identification tasks in forensic dentistry, making it vital to continue research in this field. This study aimed to comprehensively evaluate state-of-the-art detection and segmentation methods using one of the largest datasets in the literature, allowing for valid cross-examination and statistical analysis of a wide array of architectures and their hyperparameter values. The specific aims of this study were to (1) develop, evaluate, and compare the performance of both pre-trained and scratch-trained CNN models in detecting teeth and segmenting dental interventions, and (2) analyze the impact of image quality on overall model performance.

2. Materials and Methods

The research was conducted on 1400 digital OPGs randomly selected from the collection of the Department of Dental Anthropology at the School of Dentistry, University of Zagreb. Inclusion criteria required medical records with at least one associated OPG from individuals older than 18 years at the time of the OPG. Exclusion criteria included edentulous patients, the presence of radiopacities caused by intra- or extraoral materials (e.g., orthodontic treatments and metallic accessories such as earrings or piercings), and significant angulation or exposure errors in the OPG. To protect personal data confidentiality, all identifying information was removed from the orthopantomograms. No new orthopantomograms needed to be captured for this study. The dataset was divided into three distinct sets: the train set, the validation set, and the test set (75%–12.5%–12.5%; 1050–175–175). The train and validation sets were used during training and for tracking validation metrics to detect any overfitting (which did not occur), while the test set was used to report the final results. The images were randomly assigned to one of the three sets, and this assignment was kept consistent across all registration tasks to facilitate comparability. All testing was performed by comparing the model results to the expected results, i.e., the manual annotations from the test set, which had no influence on model creation. The input images varied in size, from 256 pixels to 1820 pixels.
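The random 75%–12.5%–12.5% assignment described above can be sketched as follows. This is a minimal illustration, not the authors' code: the identifiers `opg_ids`, the function name `split_dataset`, and the fixed seed are assumptions introduced here.

```python
import random


def split_dataset(items, train_frac=0.75, val_frac=0.125, seed=0):
    """Randomly assign items to train, validation, and test subsets."""
    shuffled = list(items)
    # A fixed seed (an assumption) keeps the same split across all tasks.
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # the remainder forms the test set
    return train, val, test


# Hypothetical file identifiers standing in for the 1400 OPGs.
opg_ids = [f"opg_{i:04d}" for i in range(1400)]
train, val, test = split_dataset(opg_ids)
print(len(train), len(val), len(test))  # 1050 175 175
```

Reusing one seeded split for every task is one simple way to keep the three sets consistent across segmentation and detection experiments.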

The computer program GIMP 2.10.22, a free and open-source image editor, was used to process the OPGs. Its editing capabilities gave the annotators a robust toolbox for adjusting image parameters, helping them better discern the boundaries of the target regions. Importantly, the original OPGs were not modified during this process; everything except the annotated masks was discarded. Additionally, GIMP’s open format facilitated easy handling of per-pixel annotation data. Marking an entire tooth took about 10 min, while the segmentation time depended on the number of interventions the tooth had undergone. For validation, we conducted multiple rounds of annotation and review. Annotations were saved as XML files in the PASCAL VOC format for further use in neural networks. Every annotation step was conducted manually by a human expert; no annotations were automatically generated. Within the program, each tooth was outlined, covering the entire extent of the tooth, including the crown and root (Figure 1). Teeth were labelled using the two-digit FDI notation.

Figure 1.

Segmentation of teeth on OPG in the GIMP program.

As a second layer, after outlining the teeth, each filling, crown, bridge, implant, endodontic filling, and tooth build-up was marked (Figure 2).

Figure 2.

Segmentation of dental interventions on OPG in the GIMP program.

All orthopantomograms were processed by the same experienced dentist (A.Z.C.), a specialist in dental pathology and endodontics with 16 years of clinical dental experience, and reviewed independently by another experienced dentist (I.S.P.), a specialist in dental pathology and endodontics with 20 years of working experience; automated heuristic consistency checks were additionally applied. Inter- and intra-rater reliability analyses were not performed due to the substantial annotation time required. While minor annotation variability is possible, convolutional neural networks have been shown to be robust to small random errors in training labels.

Once an OPG was annotated, it was automatically analyzed by a set of heuristics, which could flag the annotation for further manual review. Once flagged for review, the annotation was manually reviewed, notes were written, and the task was returned to the initial annotator for corrections.

The validation heuristics are designed to prioritize recall—the goal is to find and correct every annotation mistake, at the cost of additional review time. Four heuristics were used: position validation, size ratio checks, overlap analysis, and connectivity analysis. Position validation checks if an annotation is too far away from the average position for an FDI label across the entire dataset. Size ratio checks verify that an annotation mask is not too dissimilar in the size ratio compared to the average of the entire dataset; the assumption is that teeth and dental conditions of the same FDI label have roughly the same width-to-height ratio (e.g., X1 teeth are long and narrow, while X8 teeth are squarer). Overlap analysis ensures consistency of annotations for a single tooth, as we expect a tooth condition to be contained within (i.e., have a very high overlap with) the tooth mask. The last heuristic is a connectivity analysis. We assumed that one dental condition can be annotated with a singular, fully connected, gapless mask. If a mask contained more than one component, it was flagged for review. The thresholds for these heuristics were made stricter over time to ensure high data quality. Most annotations passed the automated validation check on the first try, and no annotation was returned more than 3 times.
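Two of the four heuristics described above, connectivity analysis and overlap analysis, can be sketched in plain Python. This is an illustrative sketch under stated assumptions: masks are represented as nested lists of 0/1 values, 4-connectivity is used, and the `min_containment` threshold of 0.95 is a hypothetical value (the paper does not report its thresholds). The check for gaps inside a mask is not covered here.

```python
from collections import deque


def count_components(mask):
    """Count 4-connected foreground components in a binary mask."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    components = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and not seen[r][c]:
                components += 1
                seen[r][c] = True
                queue = deque([(r, c)])
                while queue:  # breadth-first flood fill of one component
                    y, x = queue.popleft()
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                        if (0 <= ny < rows and 0 <= nx < cols
                                and mask[ny][nx] and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
    return components


def containment(condition_mask, tooth_mask):
    """Fraction of condition pixels that lie inside the tooth mask."""
    inside = total = 0
    for cond_row, tooth_row in zip(condition_mask, tooth_mask):
        for cond, tooth in zip(cond_row, tooth_row):
            if cond:
                total += 1
                inside += 1 if tooth else 0
    return inside / total if total else 1.0


def needs_review(condition_mask, tooth_mask, min_containment=0.95):
    """Flag an annotation with several components or poor tooth overlap."""
    return (count_components(condition_mask) > 1
            or containment(condition_mask, tooth_mask) < min_containment)


tooth = [[0, 1, 1, 0], [0, 1, 1, 0], [0, 1, 1, 0], [0, 1, 1, 0]]
filling_ok = [[0, 1, 1, 0], [0, 1, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
filling_split = [[0, 1, 0, 0], [0, 0, 0, 0], [0, 0, 1, 0], [0, 0, 0, 0]]
filling_outside = [[1, 1, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
print(needs_review(filling_ok, tooth))       # False
print(needs_review(filling_split, tooth))    # True (two components)
print(needs_review(filling_outside, tooth))  # True (half lies outside the tooth)
```

Because the heuristics prioritize recall, a strict threshold that produces some false alarms is acceptable: a flagged annotation only costs additional review time.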

Both pre-trained models that were subsequently fine-tuned and models trained “from scratch” were evaluated. The pre-trained models had been trained on the established large-scale vision dataset “Common Objects in Context” (COCO) [21] and can, therefore, recognize general image patterns. Given that COCO consists of natural images, while we were analyzing medical images, the convolutional neural networks had to bridge a substantial domain gap during training. Subsequently, they were fine-tuned for specific tasks. The models trained “from scratch” used the standard Kaiming initialization method; thus, they learned to recognize patterns specifically connected with teeth and dental procedures, having never seen anything else before. Part of the analysis in this study focuses on whether having learned general natural image features (from pre-training) allowed the models to achieve higher overall performance.

For segmentation, the three most used and successful models in the literature are Fully Convolutional Networks (FCN) [22], DeepLab v3 [23], and UNet [24]. Those models can be constructed in different ways via adjustments to their hyperparameters. For UNet, different capacity scales were evaluated, with MicroUNet, MiniUNet, and BiggerUNet being 1/16, 1/4, and 2x the scale of baseline UNet, respectively. DeepLab v3 was evaluated with MobileNet, HRNet V2 W32, ResNet50, ResNet101, and HRNet V2 W48 as the backbone feature extractor. FCN was evaluated with different sampling rates; specifically, FCN8, FCN16, and FCN32 were evaluated. The model code for every variant is published and freely available at https://github.com/denmil/dental-cnn-status-reg (accessed on 19 September 2025).

All segmentation models were trained following well-established practice, using Cross-Entropy loss and Adam [25] as the optimizer (learning rate = $1.0 \times 10^{-3}$, $\beta_1 = 0.9$, $\beta_2 = 0.999$, $\epsilon = 1.0 \times 10^{-8}$, $\lambda = 0$). All models were trained for 150 epochs; the best epoch was selected via the validation set, and all reported results and analyses were performed on the test set. A batch size of 64 was used.
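To make the role of the listed optimizer settings concrete, the following is a minimal sketch of a single Adam update for one scalar parameter, written in plain Python rather than with the training framework used in the study. It assumes that lambda denotes the weight decay (L2 penalty) coefficient; the function name `adam_step` and the example values are hypothetical.

```python
import math


def adam_step(param, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999,
              eps=1e-8, weight_decay=0.0):
    """Apply one Adam update to a scalar parameter; returns (param, m, v)."""
    grad = grad + weight_decay * param         # L2 term, zero when lambda = 0
    m = beta1 * m + (1 - beta1) * grad         # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad * grad  # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)               # bias correction for step t
    v_hat = v / (1 - beta2 ** t)
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v


# Hypothetical example: one parameter, one gradient, first step (t = 1).
w, m, v = 0.5, 0.0, 0.0
w, m, v = adam_step(w, grad=2.0, m=m, v=v, t=1)
print(w)  # close to 0.499: the first step has a size of roughly lr
```

On the first step the bias-corrected ratio is close to the sign of the gradient, so the parameter moves by about the learning rate regardless of the gradient's magnitude.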

For detection, due to the limited variation in object sizes within our dataset, the YOLOv5 architecture was selected for its superior computational efficiency and speed [26]. In contrast, traditional two-stage detectors like Faster R-CNN, although robust, would introduce unnecessary computational overhead without significant gains for our specific use case. We have evaluated all model variants: s, s6, m, m6, l, l6, x, and x6. The models were trained per the specifications of the authors (including all model and training hyperparameters, as well as image preprocessing), with the code available at https://github.com/ultralytics/yolov5 (accessed on 19 September 2025).

We also evaluated different image sizes for detection, which the architecture supported due to its fully convolutional nature. A particularly important aspect in the design of deep learning-based image analysis experiments is the image preprocessing pipeline. Different architectures are designed with different preprocessing methods in mind, and as we were evaluating a wide array of architectures, the preprocessing pipeline needed to be tailored to each evaluated architecture. We strictly followed the preprocessing procedures as described in the original papers (UNet [24], DeepLab v3 [23], FCN [22], YOLOv5 [26]), with special attention paid to image size. All described preprocessing pipelines follow the standard structure of size adjustment (resize, crop), pixel value normalization, data augmentation, and padding (as necessary).

The image size has a significant impact on prediction performance. In order to process the entire image and have full control over image size (and therefore insight into the impact), the resizing step has been standardized across all evaluated models. We resized the larger dimension of the image to the desired size using standard bilinear resizing and padded the image with zero values with the goal of generating a square image. This way, we preserved all image information and directly observed the impact of image size on the performance of the models.
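The geometry of this resize-and-pad step can be sketched as follows. The sketch only computes the resized dimensions and the amount of zero padding, and pads a small 2D list as a demonstration; the bilinear interpolation itself is omitted. Placing the padding on the right and bottom is an assumption, as are the function names and the example dimensions.

```python
def resize_geometry(width, height, target):
    """Size after scaling the longer side to `target`, plus padding needed."""
    longest = max(width, height)
    new_w = max(1, round(width * target / longest))
    new_h = max(1, round(height * target / longest))
    return (new_w, new_h), (target - new_w, target - new_h)


def pad_to_square(rows, target, fill=0):
    """Zero-pad a 2D list on the right and bottom (assumed placement)."""
    padded = [list(row) + [fill] * (target - len(row)) for row in rows]
    padded += [[fill] * target for _ in range(target - len(rows))]
    return padded


# Hypothetical OPG of 2000 x 1000 pixels resized for a 640 px model.
size, padding = resize_geometry(2000, 1000, 640)
print(size, padding)                     # (640, 320) (0, 320)
print(pad_to_square([[5, 6, 7]], 3))     # [[5, 6, 7], [0, 0, 0], [0, 0, 0]]
```

Because the aspect ratio is preserved and the remainder is filled with zeros, no image content is cropped away, which is what allows the effect of image size to be observed in isolation.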

Models were trained for only one image size. For each image resolution (256 px, 640 px, 1280 px, and 1820 px), we trained separate models using that fixed input size—no model processes images of multiple input sizes. This allowed us to directly compare model performance across different but consistent input resolutions, rather than trying to handle variable resolutions within a single model.

For pre-trained models, which are typically designed for specific input dimensions, we maintained this same preprocessing approach during fine-tuning, allowing us to directly compare their performance with models trained from scratch across different resolutions. It is important to acknowledge that the difference in image sizes (what the model was pre-trained on, compared to the image size we were using for fine-tuning) resulted in a mismatch for the learned features. While the best case would be to first pre-train the models on the COCO dataset resized to our targeted image size, this is unfeasible for most researchers. Our study evaluated this case as it is the most commonly used scenario in practice.

Furthermore, the findings were assessed across the complete OPGs, as well as in relation to segmented teeth (teeth separated from adjacent structures) and individual teeth. Individual teeth were enclosed in bounding boxes whose margins were defined by the outermost pixels of the segmented tooth (Figure 3).

Figure 3.

Bounding box generated based on the segmented tooth marginal pixels.
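Deriving a bounding box from the marginal pixels of a tooth mask, and cropping the corresponding region, can be sketched as below. This is a minimal illustration assuming masks stored as nested lists of 0/1 values and inclusive box coordinates; the function names are hypothetical.

```python
def mask_bounding_box(mask):
    """Return (x_min, y_min, x_max, y_max) of foreground pixels, or None."""
    ys = [r for r, row in enumerate(mask) if any(row)]
    xs = [c for row in mask for c, value in enumerate(row) if value]
    if not ys:
        return None  # empty mask: no tooth pixels present
    return min(xs), ys[0], max(xs), ys[-1]


def crop(image, box):
    """Crop a 2D list to an inclusive (x_min, y_min, x_max, y_max) box."""
    x_min, y_min, x_max, y_max = box
    return [row[x_min:x_max + 1] for row in image[y_min:y_max + 1]]


tooth_mask = [
    [0, 0, 0, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 0, 0, 0],
]
box = mask_bounding_box(tooth_mask)
print(box)                    # (1, 1, 3, 3)
print(crop(tooth_mask, box))  # [[0, 1, 0], [1, 1, 1], [0, 1, 0]]
```

Cropping the OPG with this box yields the individual tooth input, which still contains neighbouring structures inside the box; applying the mask as well would give the segmented tooth input.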

A comparative analysis of these distinct methodologies was conducted to ascertain which approach produced the highest precision in tooth detection and segmentation. OPGs with manually marked teeth and dental interventions were used to train a CNN with the aim of automating tooth recognition and segmentation of dental procedures.

The models were trained on in-house GPU workstations that contain a Nvidia RTX A6000 (48 GB VRAM) GPU, AMD Ryzen Threadripper 3960X CPU, 64 GB of RAM, running Ubuntu 22.04 LTS, using PyTorch 2.3.

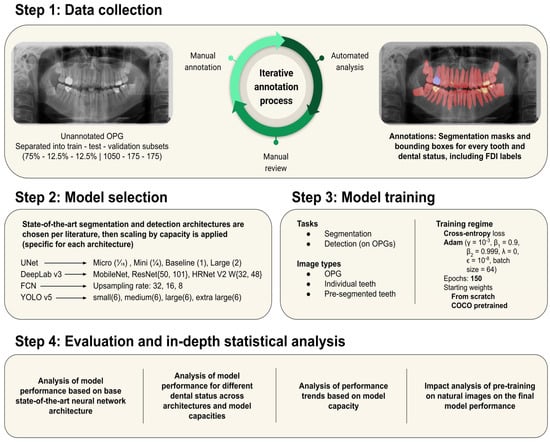

The workflow chart illustrates the development process of the CNN models, from data collection to the final model establishment and evaluation (Figure 4).

Figure 4.

Workflow chart of the study design.

The primary metric used to evaluate segmentation performance is the Sørensen–Dice coefficient, which quantifies the overlap between the ground truth and the estimated areas. Formally, the Sørensen–Dice coefficient is defined as follows:

$$\mathrm{DSC}(X, Y) = \frac{2\,|X \cap Y|}{|X| + |Y|} = \frac{2\,TP}{2\,TP + FP + FN}$$

In this equation, X and Y are the ground truth and estimated regions, respectively. TP is the number of true positives, FP is the number of false positives, and FN is the number of false negatives (all counted per pixel). Segmentation studies in medical applications, and especially in forensic odontology, commonly report results using the Sørensen–Dice coefficient.

Detection was used to locate individual teeth in OPG images. While teeth are in a similar position in relation to each other, their absolute position and size in the image vary between people [19,24]. Many object detection approaches exist in the literature, including traditional ones such as the Viola-Jones detector [27].

Precision and recall are key metrics in classification and are essential for evaluating object detection models. Precision measures the accuracy of positive classifications, defined as the ratio of true positives (TP) to all predicted positives (TP + FP). It indicates how reliable the model is when identifying positive instances. In contrast, recall assesses how well the model identifies all true positive instances, calculated as TP/(TP + FN). In object detection, these metrics serve complementary roles: recall evaluates the proportion of objects correctly detected, while precision determines the likelihood that a detected object is correct. The mean average precision (mAP) is often used to summarize the balance between these metrics. mAP represents the average precision across all object classes at a given Intersection over Union (IoU) threshold, which measures the overlap between the predicted and actual object boundaries [21].
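The metrics described in this section can be computed from per-pixel counts and box coordinates as sketched below. This is an illustrative implementation, not the evaluation code used in the study: masks are nested lists of 0/1 values, boxes are `(x_min, y_min, x_max, y_max)` tuples, and the handling of empty inputs is an assumption.

```python
def confusion_counts(truth, pred):
    """Per-pixel true positives, false positives, and false negatives."""
    tp = fp = fn = 0
    for truth_row, pred_row in zip(truth, pred):
        for t, p in zip(truth_row, pred_row):
            if t and p:
                tp += 1
            elif p:
                fp += 1
            elif t:
                fn += 1
    return tp, fp, fn


def dice(tp, fp, fn):
    """Sørensen–Dice coefficient, 2TP / (2TP + FP + FN)."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 1.0  # both masks empty: assumed 1.0


def precision(tp, fp):
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp, fn):
    return tp / (tp + fn) if tp + fn else 0.0


def box_iou(a, b):
    """Intersection over Union of two axis-aligned boxes."""
    inter_w = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union else 0.0


truth = [[1, 1, 0], [1, 1, 0], [0, 0, 0]]
pred = [[0, 1, 1], [0, 1, 1], [0, 0, 0]]
tp, fp, fn = confusion_counts(truth, pred)
print(tp, fp, fn)                            # 2 2 2
print(dice(tp, fp, fn))                      # 0.5
print(precision(tp, fp), recall(tp, fn))     # 0.5 0.5
print(box_iou((0, 0, 2, 2), (1, 0, 3, 2)))   # one third
```

In detection, a predicted box typically counts as a true positive only when its IoU with a ground-truth box exceeds the chosen threshold; precision and recall at that threshold then feed into the average precision.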

This study was approved by the Ethics Committee (05-PA-30-XXX-10/2021) of the School of Dentistry, University of Zagreb. All subjects whose orthopantomograms were used in this study signed an informed consent regarding the use of their medical records for the purpose of this scientific research.

3. Statistical Analysis

The normality of the distribution of numerical data was assessed using the Kolmogorov–Smirnov test, and appropriate parametric and/or non-parametric statistical analyses and methods of data presentation were applied based on the results. The Kolmogorov–Smirnov test for normality yielded a non-significant result (p > 0.05).

Quantitative data were presented through ranges, arithmetic means, and standard deviations, or medians with interquartile ranges for non-parametric distributions. Categorical data were presented through absolute frequencies and corresponding proportions. All p-values less than 0.05 were considered significant. ANOVA was used to test the differences between and within groups of models. The licensed statistical program MedCalc® Statistical Software version 20.015 (MedCalc Software Ltd., Ostend, Belgium; https://www.medcalc.org; 2021; accessed on 28 October 2024) was used for statistical analysis.
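For readers unfamiliar with the between-group and within-group terms reported later, a one-way ANOVA F-statistic can be sketched as follows. This is a plain-Python illustration, not a reproduction of the MedCalc analysis; the function name and the example values are made up and are not data from this study.

```python
def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of numeric groups."""
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, means))
    # Within-group sum of squares: spread of values around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between = len(groups) - 1
    df_within = n_total - len(groups)
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within


# Made-up scores for three hypothetical groups (e.g., three image sizes).
example_groups = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
f_value, df_between, df_within = one_way_anova_f(example_groups)
print(round(f_value, 6), df_between, df_within)  # 13.0 2 6
```

A large F-value arises when the group means differ strongly relative to the variation inside each group, which is why a small within-group mean square inflates the statistic.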

4. Results

In total, there were 39,441 segmented teeth, with 420 crowns, 828 bridges, 2172 root canals, 9869 fillings, and 795 teeth with caries.

In tooth segmentation, the most successful were UNet Big and DeepLabV3-HRNet V2 W32, with the same result of 95.94% using the individual tooth image as input, while UNet Big showed the highest score of 95.25% on the OPG as input. The segmented tooth option was not applicable to this task (Table 2).

Table 2.

Comparative strengths of different models for specific dental segmentation tasks using different image inputs: OPG, individual tooth, and segmented tooth. The highest performances (Dice score [%]) of models are highlighted in red.

In crown segmentation, the UNet Big model showed superior performance on segmented tooth input, achieving the highest score of 89.14% (Table 2). Similarly, for filling segmentation, the UNet Big model on the individual tooth achieved the highest value of 84.90%, closely followed by the segmented tooth input, with a comparable score of 83.49%. For root canal filling segmentation, the performance of the UNet on the segmented tooth was very close to that of UNet Big on the individual tooth, with scores of 79.09% and 79.53%, respectively, showing their robustness in this category.

In contrast, caries segmentation posed a significant challenge, with the UNet model achieving the highest score of 64.68%, surpassing other models in this task. Across all experiments, the UNet family of models, including the Micro, Mini, UNet, and Big variants, exhibited consistently impressive performance, particularly in crown and filling segmentation. The performance of FCN models, however, varied considerably. The FCN32 model demonstrated limited efficacy, especially in caries detection, with a score of 19.92%. Conversely, the FCN8 model achieved improved results in crown segmentation on the segmented tooth (83.39%) and filling segmentation on individual teeth (83.66%). DeepLab variants also performed well across categories, with HRNet V2 W48 achieving a high score of 86.76% in crown segmentation and maintaining robust performance overall. ResNet-based models, including ResNet50 and ResNet101, showed consistent efficacy, particularly in crown and filling segmentation, with scores in the range of 85–86%. Overall, crown segmentation appeared as the most well-performing category across the models, while caries segmentation consistently proved to be the most challenging, as shown by its lower scores. The UNet Big and UNet variants exhibited superior generalization among all tested models. They ranked consistently as top performers across all dental segmentation tasks, especially on individual teeth as image input.

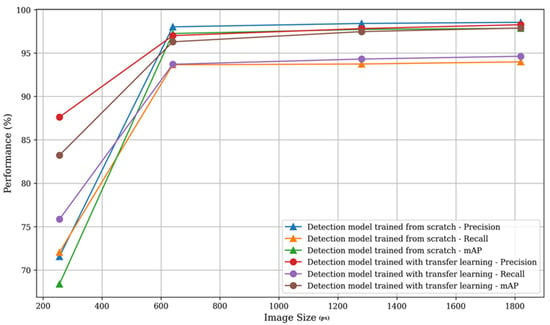

All variants of the YOLOv5 model architecture family (YOLOv5s, YOLOv5s6, YOLOv5m, YOLOv5m6, YOLOv5l, YOLOv5l6, YOLOv5x, and YOLOv5x6), both trained from scratch and pre-trained, were tested for detection on OPGs. Their performances are presented in Table 3. The evaluation was conducted across multiple image resolutions: 256 pixels, 640 pixels, 1280 pixels, and 1820 pixels. The best result, highlighted in red, was achieved by the YOLOv5x6 model trained from scratch, with a mAP of 98.02%, compared with a mAP of 97.98% achieved by the pre-trained YOLOv5m6 model. In both cases, the best results were achieved on the highest resolution image, 1820 px.

Table 3.

Precision, recall, and mAP (%) of trained from scratch and pre-trained detection models showing the best results (in red) on the highest resolution image (px).

The statistical analysis included all models at each resolution (image size, px). The results showed differences in performance based on image size (Figure 5). In all cases, larger image sizes led to more successful detection models. The lowest values were generally associated with the 256 px resolution, with the minimum being a mAP of 68.39% when trained from scratch, which was still satisfactory. At higher resolutions, values exceeded 90%, providing a strong indication of model effectiveness.

Figure 5.

Model performance by image resolution (px). Larger image sizes resulted in better model performance.

Between-group comparisons showed that, for the detection models trained from scratch, precision, recall, and mAP yielded highly significant F-values of 7,556,478, 23,307,591, and 15,095,629, respectively (all p < 0.001). For the pre-trained models, precision, recall, and mAP also showed highly significant results, with F-values of 822,789, 13,272,348, and 1,305,793, respectively (all p < 0.001). The within-group sum of squares and mean square values were considerably lower; they gave insight into the variance within image sizes and pointed to model consistency (Table 4).

Table 4.

The comparison in precision, recall, and mAP (%) of detection models with different image resolution (px) based on ANOVA shows significant differences in all parameters within and between groups.

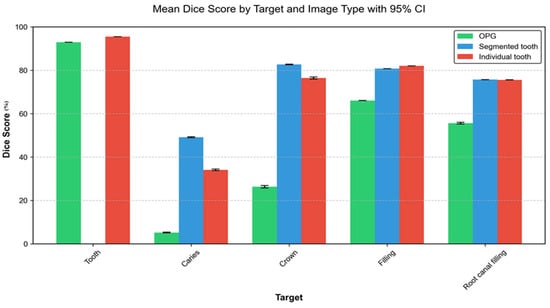

There was a significant difference in tooth segmentation between individual teeth and OPGs as image input. In both cases, the success rate of tooth segmentation was greater than 90%, but results were significantly better when using individual teeth than when using the entire OPG (95.5% vs. 92.9%, p < 0.001). For caries, the differences between inputs were much larger: recognition was weakest on the OPGs (5.21%), better on the individual tooth (34.10%), and best on the segmented tooth (49.16%). The models performed best in crown segmentation on segmented teeth (82.10%), followed by individual teeth (76.45%), with the OPG the least successful input (26.33%). For filling and root canal filling segmentation, the results showed no significant difference between the individual tooth and the segmented tooth (82.04% and 80.79% for fillings; 75.58% and 75.71% for root canal fillings), while the performances on the OPG were significantly lower (66.07% and 55.66%, respectively) (Figure 6, Table 5).

Figure 6.

Segmentation of tooth, caries, crowns, fillings, and root canal fillings (Dice score [%]) on different image inputs: orthopantomogram, segmented and individual tooth; all models together.

Table 5.

ANOVA of all models together in the segmentation of tooth, caries, crowns, fillings, and root canal fillings, showing significant differences in all parameters within and between groups.
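The Dice scores reported for segmentation compare a predicted mask with the annotated mask as twice the overlap divided by the sum of both mask areas. A minimal sketch of that calculation follows; the function name `dice_coefficient`, the nested-list mask format, and the toy masks are assumptions made for illustration, not the evaluation code used in the study.

```python
def dice_coefficient(pred, target):
    """Sørensen–Dice coefficient for two binary masks given as nested lists of 0/1."""
    pred_flat = [p for row in pred for p in row]
    target_flat = [t for row in target for t in row]
    if len(pred_flat) != len(target_flat):
        raise ValueError("masks must have the same number of pixels")

    # Pixels marked as foreground in both masks.
    intersection = sum(1 for p, t in zip(pred_flat, target_flat) if p and t)
    # Total foreground pixels in prediction plus total in annotation.
    total = sum(1 for p in pred_flat if p) + sum(1 for t in target_flat if t)
    if total == 0:
        return 1.0  # both masks empty: treated as perfect agreement (a convention)
    return 2.0 * intersection / total


# Toy 3x4 masks (hypothetical): 4 predicted pixels, 4 annotated pixels, 3 shared.
pred_mask = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
true_mask = [
    [0, 1, 1, 0],
    [0, 1, 0, 0],
    [0, 1, 0, 0],
]
dice = dice_coefficient(pred_mask, true_mask)
print(f"Dice = {dice:.2%}")
```

Because the denominator is the sum of both areas, small structures such as caries lesions are penalized heavily for every misplaced pixel, which is consistent with the lower caries scores reported above.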

Overall, the most successful model for teeth detection was YOLOv5x6 (trained from scratch), with a mean average precision (mAP) of 98.02%, vs. YOLOv5m6 (pre-trained), with an mAP of 97.98%. Using the YOLOv5x6 model, mAP for teeth in the upper jaw was 96.41%, while for the lower jaw it was 99.82%. In the segmentation of caries, crowns, tooth fillings, and root canal fillings, the most successful model was UNet Big applied to individual teeth from the OPG with surrounding structures removed, with Sørensen–Dice coefficients of 59.53%, 88.78%, 84.9%, and 79.53%, respectively.
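For readers less familiar with the detection metric, the sketch below shows one common way to compute average precision for a single class on a single image: detections are ranked by confidence, matched to annotated boxes by intersection over union (IoU), and the area under the precision envelope is summed. The IoU threshold of 0.5, the all-point interpolation, the function names, and the boxes are assumptions for illustration; detection toolkits typically average over classes and sometimes over several IoU thresholds to obtain mAP, and the exact variant used in this study is not restated here.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def average_precision(detections, ground_truth, iou_threshold=0.5):
    """AP for one class; detections are (confidence, box), ground_truth are boxes."""
    if not ground_truth:
        return 0.0
    matched = set()
    hits = []  # 1 = true positive, 0 = false positive, in confidence order
    for _, box in sorted(detections, key=lambda d: d[0], reverse=True):
        best_iou, best_idx = 0.0, None
        for idx, gt in enumerate(ground_truth):
            if idx in matched:
                continue  # each annotated box can be matched only once
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_idx = overlap, idx
        if best_idx is not None and best_iou >= iou_threshold:
            matched.add(best_idx)
            hits.append(1)
        else:
            hits.append(0)

    # Precision and recall after each ranked detection.
    precisions, recalls, tp = [], [], 0
    for rank, hit in enumerate(hits, start=1):
        tp += hit
        precisions.append(tp / rank)
        recalls.append(tp / len(ground_truth))

    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])

    # Sum the rectangles under the envelope.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


# Hypothetical example: two annotated teeth, three detections (one spurious).
teeth = [(0, 0, 10, 10), (20, 0, 30, 10)]
found = [(0.9, (0, 0, 10, 10)), (0.8, (50, 50, 60, 60)), (0.7, (21, 0, 31, 10))]
ap = average_precision(found, teeth)
print(f"AP = {ap:.4f}")
```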

5. Discussion

Research on the automated identification and delineation of dental structures and procedures in orthopantomograms shares themes with the many studies on artificial intelligence and machine learning in medical imaging and dentistry. For a specific task such as dental identification, several algorithms have been described in the literature, with an accuracy of up to 85%. In both cited studies, the authors used pre-trained models because of their general advantages and the limited OPG databases available [1,28].

Pre-trained and trained from scratch models both have their advantages and disadvantages in dental image feature analysis. Pre-trained models use prior knowledge from large datasets and can be further fine-tuned for medical and dental tasks, reducing computational costs and training time, and often achieving high accuracy with limited labeled medical data. In contrast, scratch-trained models require large, labelled datasets and computational resources, but can lead to the extraction of domain-specific features tailored to dental images. While pre-trained models excel in generalization and efficiency, custom models can outperform them in specialized tasks where unique image features differ significantly from natural images. In this study, we aimed to determine which group of models is more suitable and gives more accurate results in the analysis of dental characteristics with different parameters of the input image. The results make a significant contribution to finding the properties of the best model and input image size, facilitating the automated application of dental status registration for forensic purposes.

High accuracy and precision of deep learning in dental forensics, if achieved in identification, would offer several advantages over traditional methods. Firstly, it reduces the need for human intervention and can process large volumes of data quickly and accurately. Furthermore, an immense amount of data needs to be analyzed in mass disasters when time is often of the essence and impacts the potential identification success [16,29]. Secondly, deep learning algorithms are not subject to human biases, ensuring more consistent and objective results [4]. Finally, the use of CNNs allows for the automatic extraction of features from panoramic radiographs, reducing the need for manual segmentation and feature selection.

It is not only the type of convolutional network used that is responsible for the final success of the algorithm. The type and quality of the input image (OPG) on which the analysis is performed are also of crucial importance. Therefore, in our study, we included images of different resolutions to determine the lower limit below which images are not adequate for the automated procedure. In addition to the image quality (px), we modified the type of input image (whole OPG, individualized tooth in the box, and segmented tooth without surrounding structures) to determine whether there is a difference in the success of the procedure based on the input image. Significantly better results were achieved on individual and segmented teeth compared to whole OPG. The superposition of adjacent structures and the presence of numerous and variable anatomical structures on the OPG clearly hinder the operation of the algorithms.
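The three input types can be illustrated with a small sketch: the whole image, an individual tooth cropped by its bounding box, and a segmented tooth in which everything outside the tooth mask is blanked before cropping. The helper names `crop_box` and `apply_mask`, the toy pixel values, the box, and the mask are all hypothetical; the study's actual preprocessing pipeline may differ.

```python
def crop_box(image, box):
    """Return the sub-image inside box = (x1, y1, x2, y2), end-exclusive."""
    x1, y1, x2, y2 = box
    return [row[x1:x2] for row in image[y1:y2]]


def apply_mask(image, mask, background=0):
    """Keep pixels where mask is 1 and replace all others with background."""
    return [
        [pixel if keep else background for pixel, keep in zip(img_row, mask_row)]
        for img_row, mask_row in zip(image, mask)
    ]


# Toy 4x6 grayscale "radiograph"; values are arbitrary intensities.
opg = [
    [10, 11, 12, 13, 14, 15],
    [20, 21, 22, 23, 24, 25],
    [30, 31, 32, 33, 34, 35],
    [40, 41, 42, 43, 44, 45],
]
tooth_box = (1, 0, 4, 3)  # hypothetical bounding box from a detector
tooth_mask = [            # hypothetical tooth mask in full-image coordinates
    [0, 0, 1, 0, 0, 0],
    [0, 1, 1, 1, 0, 0],
    [0, 0, 1, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
]

# Input type 2: individual tooth in its box, neighbours still partly visible.
individual_tooth = crop_box(opg, tooth_box)
# Input type 3: segmented tooth, surrounding structures removed before cropping.
segmented_tooth = crop_box(apply_mask(opg, tooth_mask), tooth_box)

print(individual_tooth)
print(segmented_tooth)
```

Both smaller inputs remove most of the superimposed anatomy that a segmentation model would otherwise have to learn to ignore, which matches the better results reported for them.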

Research on the targeted application of CNN for the purpose of dental identification is rare. However, research on the application of CNN for clinical purposes, primarily diagnostic, is more frequent [30,31]. Similar features have been analyzed, and it is possible to compare the results obtained regardless of the specific end purpose, clinical or forensic.

Studies have reported varying accuracy in using CNNs for tooth detection and segmentation [32,33,34]. Leite et al.’s model achieved a sensitivity of 98.9% and a precision of 99.6% for tooth detection, reducing time consumption by 67% compared to manual methods. However, their study was limited by a small dataset of 153 radiographs for training and testing [35]. A significant contribution of our work is precisely the extensive experimentation and analysis of the applicability and performance of state-of-the-art convolutional neural network architectures for the detection and segmentation of teeth and dental procedures on one of the largest datasets in the literature, allowing for an in-depth analysis across a wide variety of use cases.

One of the more frequent elements of research in the literature is caries diagnostics. Abu Tareq et al. demonstrated the effectiveness of deep learning models in predicting dental cavitations [36]. These findings reinforce the diagnostic potential of CNNs in clinical dentistry. Research has consistently shown that CNN-based models excel in caries detection, often surpassing traditional methods and junior dentists in diagnostic accuracy. For instance, Ying et al. showed that models like YOLOv5 achieved F1-scores up to 0.87, outperforming younger dentists. Moreover, combining AI with expert input enhanced diagnostic accuracy and inter-observer agreement, highlighting AI’s potential to augment dental radiology workflows effectively [37]. A novel diagnostic model named ResNet + SAM achieved superior performance in caries detection, with an F1 score of 0.886, an accuracy of 0.885, and an AUC of 0.954. The results enhanced diagnostic accuracy and consistency compared to dentists alone [38]. In our study, the segmentation of caries proved challenging, with the UNet model achieving the highest score of 64.68% on the segmented teeth.

However, other reports describe CNN models consistently achieving high diagnostic accuracy for caries detection, with AUCs often exceeding 0.90. These models often outperform or match dentists, enhancing accuracy and inter-observer agreement [39].

Simonyan et al.’s work on VGG networks highlights CNNs’ capabilities in accurate image processing. Similarly, CNN architectures excel in detecting and segmenting dental structures, using their strength in feature extraction for complex images, making them ideal for dental imaging and broader medical applications [40].

In extensive research on automatic segmentation of teeth and dental interventions on 8138 OPGs, Dice similarity coefficient and accuracy values across all OPGs were 0.85 and 0.95 for the tooth segmentation, 0.88 and 0.99 for dental caries, 0.87 and 0.99 for dental restorations, 0.93 and 0.99 for crown–bridge restorations, 0.94 and 0.99 for dental implants, 0.78 and 0.99 for root canal fillings, and 0.78 and 0.99 for residual roots, respectively. However, the authors concluded that many pitfalls still limit automated procedures in routine clinical applications [41]. As already presented by the authors of this paper in 2024, automated tooth recording from orthopantomograms exhibits high precision and recall, indicating its strong potential for effective implementation in clinical and forensic applications [42].

Furthermore, a general overview of the possible application of AI in forensic medicine and specifically forensic dentistry is presented in the work of Vodanović et al. [4]. However, there are also challenges associated with the use of deep learning in this field. One of the main limitations is the need for large amounts of labelled data to train the algorithms effectively [29]. In addition, the quality of the input data can significantly affect the model’s performance, which is also confirmed in our study [9,43]. We observed a plateau in performance (Figure 6) at higher resolutions (beyond 640 px), which can be attributed to several factors. At lower resolutions (256 px), significant dental features and details may be lost due to down-sampling, explaining the lower performance. The substantial improvement seen when moving to 640 px suggests this resolution captures most diagnostically relevant features, such as tooth boundaries, dental caries, root canal fillings, and restoration margins, with sufficient clarity. The minimal gains beyond this point, while statistically significant, indicate that additional resolution provides diminishing returns: most features critical for dental analysis are already well preserved at 640 px resolution. This finding aligns with the spatial scale of dental structures and common dental conditions in OPGs, where features tend to manifest at scales well captured by moderate resolutions. These results have important practical implications, suggesting that 640 px resolution might represent an optimal balance between diagnostic accuracy and computational efficiency for automated dental image analysis systems, particularly in resource-constrained environments or when processing large volumes of images. Poor-quality radiographs or those with significant noise may lead to inaccurate predictions.
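The down-sampling argument can be made tangible with simple arithmetic: when an image is resized, every structure shrinks by the same factor as the image's long side. The sketch below assumes a native OPG width of 2800 px and a lesion 30 px across at native resolution; both numbers are hypothetical and chosen only to illustrate the scaling, and they are not taken from the study.

```python
def scaled_feature_size(feature_px, original_long_side, target_long_side):
    """Approximate size in pixels of a feature after resizing the long side."""
    return feature_px * target_long_side / original_long_side


ORIGINAL_LONG_SIDE = 2800  # assumed native OPG width in px (hypothetical)
LESION_PX = 30             # assumed lesion diameter at native size (hypothetical)

# Lesion diameter at each of the input resolutions discussed in the text.
sizes = {
    target: round(scaled_feature_size(LESION_PX, ORIGINAL_LONG_SIDE, target), 2)
    for target in (256, 640, 1280, 1820)
}
for target, size in sizes.items():
    print(f"{target:>4} px input -> lesion about {size} px wide")
```

Under these assumptions the lesion spans fewer than 3 px at 256 px input but almost 7 px at 640 px, which is one plausible reading of why the largest gain occurs between those two resolutions.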

In our study, the performance achieved across all segmentation models gives insight into the trade-off between performance and model capacity and into how suitable different architectures are for dental X-ray images. Performance increases for all tasks as model capacity increases, but a saturation point is reached for the larger models. This can be seen with UNet: the 4× difference in capacity between UNet mini and the baseline UNet results in a 1.41 p.p. difference, while a further 2× difference in capacity between the largest and the baseline UNet results in only a 0.11 p.p. difference.
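One way to compare the two capacity steps on an equal footing is to express each gain per doubling of capacity. The normalization by the base-2 logarithm of the capacity ratio is an assumption introduced here for illustration and is not a measure used in the study; the gains and ratios are the ones quoted above.

```python
import math


def gain_per_doubling(gain_pp, capacity_ratio):
    """Performance gain in percentage points per doubling of model capacity."""
    return gain_pp / math.log2(capacity_ratio)


# UNet mini -> baseline UNet: 4x capacity, +1.41 p.p.
mini_to_base = gain_per_doubling(1.41, 4)
# Baseline UNet -> largest UNet: 2x capacity, +0.11 p.p.
base_to_big = gain_per_doubling(0.11, 2)

print(f"mini -> baseline: {mini_to_base:.3f} p.p. per doubling")
print(f"baseline -> big:  {base_to_big:.3f} p.p. per doubling")
print(f"ratio: {mini_to_base / base_to_big:.1f}x")
```

Measured this way, each doubling of capacity below the baseline is worth roughly six times more than the doubling above it, which is the saturation effect described in the text.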

With regard to segmentation model architectures, we can see that UNet models vastly outperform FCNs when it comes to the performance/capacity ratio, and that they outperform DeepLab v3, given the performance/capacity ratio for any of the tested backbone networks. This indicates that the copy-and-crop operation introduced with UNet carries useful information more efficiently than DeepLab’s atrous separable convolutions, and much more efficiently than FCN’s simple feed-forward approach. This is especially visible with the full OPG—caries case, where non-UNet architectures fail to achieve any meaningful results.
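The copy-and-crop operation mentioned above passes encoder feature maps directly to the decoder: the encoder features are center-cropped to the decoder's spatial size and concatenated along the channel axis. The sketch below shows only that data movement on nested lists shaped `[channels][height][width]`; the function names and toy values are assumptions, and in implementations that use padded convolutions the crop is usually a no-op.

```python
def center_crop(feature_map, out_h, out_w):
    """Center-crop every channel of a [C][H][W] nested-list feature map."""
    h, w = len(feature_map[0]), len(feature_map[0][0])
    top, left = (h - out_h) // 2, (w - out_w) // 2
    return [
        [row[left:left + out_w] for row in channel[top:top + out_h]]
        for channel in feature_map
    ]


def copy_and_crop(encoder_features, decoder_features):
    """Crop encoder features to the decoder size, then concatenate channels."""
    out_h, out_w = len(decoder_features[0]), len(decoder_features[0][0])
    return center_crop(encoder_features, out_h, out_w) + decoder_features


# One 4x4 encoder channel (fine detail) and two 2x2 decoder channels (context).
encoder = [[
    [0, 1, 2, 3],
    [4, 5, 6, 7],
    [8, 9, 10, 11],
    [12, 13, 14, 15],
]]
decoder = [
    [[1, 1], [1, 1]],
    [[2, 2], [2, 2]],
]
merged = copy_and_crop(encoder, decoder)
print(len(merged), "channels of size", len(merged[0]), "x", len(merged[0][0]))
```

The merged tensor gives the following convolution access to both high-resolution encoder detail and the decoder's coarser context, which is the information path that the plain feed-forward FCN design lacks.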

Detection performance for smaller image sizes is coupled with model capacity. However, with the increase of image size, the gap between pre-trained and from-scratch models decreases, with from-scratch models achieving a small advantage vs pre-trained models at the largest image size. Given this behavior, we can see that at lower image sizes the general image features learned during pre-training provide a benefit, but as the image size increases, those features become less relevant, as there is enough data to learn domain-specific features that ultimately outperform the pre-trained variants. Effectively, the general learned image features bridge the issues caused by small image sizes to some degree, but given enough data, models are ultimately able to achieve better performance with learned features tailored to the specific task and data. These performance trends also show that we have sufficient data to use the capabilities of the higher capacity models.

Based on our results, the recommended approach would be to use images larger than 640 px, regardless of the model, due to the improved quality. In the detection model trained from scratch, the most pronounced differences were seen between the smallest and largest image sizes, specifically 256 pixels and 1820 pixels. These differences accounted for mean variations of approximately 26.97 in precision, 21.94 in recall, and 29.47 in mAP. These results were statistically significant, with narrow confidence intervals indicating high reliability. Conversely, comparisons between closer image sizes, such as 1280 pixels and 1820 pixels, yielded minor differences. For instance, the mean differences in precision and mAP were −0.13 and −0.14, respectively, both significant at p < 0.001.

A similar trend was seen in the detection model trained using transfer learning with COCO weights (pre-trained). The most significant mean differences occurred between 256 pixels and 1820 pixels, with differences of 10.64 for precision, 18.77 for recall, and 14.64 for mAP, all highly significant. Minor differences were noted between 1280 pixels and 1820 pixels, with mean differences of −0.45 for precision and −0.41 for mAP, both statistically significant at p < 0.001. These findings highlight that the most substantial performance improvements are associated with increases in image resolution, particularly when comparing extreme resolutions. The results of this study align closely with findings from related research, which also underscore the effectiveness of artificial intelligence models in dental diagnostics and segmentation [38,39]. One systematic review reported a mean sensitivity of 0.75, specificity of 0.90, accuracy of 0.89, and AUC of 0.92, reflecting strong diagnostic ability for AI models in caries detection. These studies underline the promise of AI in dentistry and call for more standardized datasets and evaluation metrics to improve reliability and clinical applicability [10].

A notable 2022 paper successfully applied six pre-trained CNN models to CBCT images, achieving a tooth segmentation accuracy of 93.3% and classifying missing tooth regions with an accuracy of 89% [18]. Although CBCT is three-dimensional and therefore a more detailed record of the teeth and surrounding structures, it is interesting that our results were better on two-dimensional images. Despite the existence of high-quality CBCT X-ray techniques that are now widespread in dental clinical practice, classic two-dimensional images are still the most commonly used for dental identification.

Recently, innovative ideas and techniques have emerged in the analysis of medical images. Transformers, originally developed for natural language processing, analyze images by dividing them into small parts (patches) and applying a self-attention mechanism to capture the global context of the image, making them potentially more flexible than convolutional neural networks (CNNs) [44]. Hybrid architectures combine key components of both CNNs and Transformers with the aim of combining their strengths, detecting fine details, and capturing global context, making them promising for medical image analysis [45].
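The patch step described above can be sketched as follows: the image is cut into non-overlapping square tiles, and each tile is flattened into one vector that later serves as a token for self-attention. The function name `split_into_patches` and the toy image are assumptions for illustration; the attention computation itself is omitted.

```python
def split_into_patches(image, patch_size):
    """Split a 2D nested-list image into flattened, non-overlapping patches."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image sides must be multiples of patch_size")

    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            # Flatten one patch_size x patch_size tile row by row.
            patch = [
                value
                for row in image[top:top + patch_size]
                for value in row[left:left + patch_size]
            ]
            patches.append(patch)
    return patches


# Toy 4x4 image split into four 2x2 patches.
toy_image = [
    [0, 1, 2, 3],
    [4, 5, 6, 7],
    [8, 9, 10, 11],
    [12, 13, 14, 15],
]
patches = split_into_patches(toy_image, 2)
print(patches)
```

Unlike a convolution, which only mixes neighbouring pixels at each layer, attention over these tokens can relate any patch to any other in a single step, which is the global-context property referred to in the text.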

Still, the research of Schneider et al. showed that CNNs significantly outperformed Transformer-based architectures and their hybrids across all three dental segmentation tasks evaluated in their study (tooth segmentation, tooth structure, and caries segmentation) [46].

The systematic review by Hung et al. encompasses all AI applications in dental and maxillofacial radiology and, thus, discusses the use of AI technologies, including CNNs, in performing such tasks as segmentation and detection. This especially applies to those AI models created for dental radiograph analysis that need exact delineation of dental features [47]. Khanagar et al. focused on the development and application of AI in dentistry, discussing the usage of AI and deep learning for dental disease recognition and automation of processes. Their results show the increased success of AI-based systems for dental condition detection, similar to other AI models that provide tooth segmentation and dental status recognition on radiographs [48].

This study has several noteworthy limitations. Firstly, all OPGs were acquired from a single institution using the same imaging device. While this ensured consistency in image acquisition, it limited variability in image characteristics, thereby reducing the external validity and generalizability of the proposed models to other clinical settings or equipment. To enhance robustness across diverse forensic environments, future datasets should incorporate images from multiple centers and devices.

Secondly, although the dataset used in this study was of considerable size, it originated from a narrow clinical context, leading to potential imbalances and limited heterogeneity across patient subgroups and dental conditions. This uniformity may affect the models’ performance in more diverse populations. Future research should prioritize the inclusion of multi-institutional data with more balanced subgroups to enhance performance stability.

A further limitation is the lack of formal calibration and inter-/intra-observer reliability assessment for manual annotations. While all annotations were performed by an experienced dentist and independently reviewed by another expert, the absence of structured calibration protocols introduces the risk of subjective bias, particularly in borderline or diagnostically challenging cases such as caries detection. Future studies should incorporate standardized annotation protocols, inter-rater agreement analysis, and consensus-driven labeling strategies to strengthen the validity of the reference standards.

Additionally, the models—particularly the larger architectures such as UNet Big and YOLOv5x6 at high resolutions—were trained using high-end GPU workstations. Such computational resources are often unavailable in typical clinical or forensic environments, posing challenges for real-world implementation. Future work should explore model optimization techniques such as pruning, quantization, or deployment via cloud-based platforms to improve accessibility and cost-efficiency.

Another methodological concern relates to the interpretability of convolutional neural networks (CNNs). These models inherently function as black boxes, providing limited insight into feature attribution or decision-making processes. While this does not necessarily impact classification accuracy, it may undermine their utility in medico-legal contexts, where explainability is a prerequisite for evidentiary admissibility. Enhancing model transparency through explainable AI (XAI) techniques is, therefore, a crucial direction for future research.

Finally, this study focused exclusively on panoramic radiographs. Although OPGs offer a broad overview of the dentition and are commonly used in forensic practice, they do not capture the finer anatomical details available in other modalities such as bitewing, periapical, or CBCT imaging. The exclusion of these modalities may limit the general applicability of the proposed approach. Future research should consider incorporating multimodal imaging data to improve diagnostic precision and expand the clinical and forensic relevance of CNN-based dental analysis.

Despite these limitations, current studies, including ours, establish the effectiveness of both pre-trained and from-scratch CNN models in medical image segmentation, detection, and classification. This is part of a wider trend of using AI to enhance precision and improve outcomes in patient care, and it indicates the possibility of application in forensic dentistry.

6. Conclusions

Our study found a high level of success of the models used in tooth detection and segmentation of dental interventions on OPGs. There were statistically significant differences between transfer learning groups and groups trained from scratch for all metrics and models studied. The best performing architecture for segmenting dental interventions was UNet Big. Image quality significantly influenced the models’ competence. Larger image sizes (1280 px and 1820 px) produced better precision, recall, and mAP, whereas smaller image sizes, such as 256 px, performed worse on all metrics, especially for models trained from scratch. Significantly better results were achieved using individual teeth as input images, compared to the entire OPGs.

Recommendations for further research, based on our results, would be to use from-scratch models for specific tasks such as segmentation of teeth and dental interventions, on images with a resolution higher than 640 px. When segmenting dental interventions, it is better to use smaller segments of the image, such as a box with an individual tooth or a segmented tooth, instead of the entire OPG. By automating the process of analyzing panoramic radiographs, these algorithms cannot yet completely replace humans, but could assist forensic experts in facilitating and improving dental identification tasks. With continued advancements in deep learning technology, it is likely that its use in forensic dentistry will become more widespread and refined. However, further research is required to address the challenges associated with data quality and the need for large training datasets.

Author Contributions

Conceptualization, M.S. and M.V.; methodology, M.V., M.S. and I.S.P.; software, M.S. and D.M.; validation, A.Z.Ç., D.M. and L.B.; formal analysis, D.M. and A.Z.Ç.; investigation, D.M., I.S.P. and A.Z.Ç.; resources, I.S.P. and M.V.; data curation, D.M.; writing—original draft preparation, A.Z.Ç., L.B. and I.S.P.; writing—reviewing and editing, I.S.P., A.Z.Ç., D.M. and L.B.; visualization, D.M.; supervision, M.V. and M.S.; project administration, I.S.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research received funding from the University of Zagreb, Croatia, within the project “Analysis of teeth and jaws—anthropological and forensic aspects”.

Institutional Review Board Statement

This study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Ethics Committee (05-PA-30-XXX-10/2021) of the School of Dentistry, University of Zagreb.

Informed Consent Statement

Patients signed a consent for the images (OPGs) to be used for scientific purposes. All personal data were removed from the radiological images used before further use in the research.

Data Availability Statement

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Pereira, C.P.; Carvalho, R.; Augusto, D.; Almeida, T.; Francisco, A.P.; Salvado, E.; Silva, F.; Santos, R. Development of artificial intelligence-based algorithms for the process of human identification through dental evidence. Int. J. Legal Med. 2025, 139, 1835–1850. [Google Scholar] [CrossRef] [PubMed]

- Brkić, H. (Ed.) Textbook of Forensic Odonto-Stomatology by IOFOS; Naklada Slap: Jastrebarsko, Croatia, 2021. [Google Scholar]

- Hadden, A.M.; FGDP(UK). Clinical Examination and Record-Keeping Working Group. Clinical examination & record-keeping:Part 3: Electronic records. Br. Dent. J. 2017, 223, 873–876. [Google Scholar] [CrossRef] [PubMed]

- Vodanović, M.; Subašić, M.; Milošević, D.P.; Galić, I.; Brkić, H. Artificial intelligence in forensic medicine and forensic dentistry. J. Forensic Odontostomatol. 2023, 41, 30–41. [Google Scholar] [PubMed]

- Zhou, S.K.; Greenspan, H.; Shen, D. Deep Learning for Medical Image Analysis, 2nd ed.; Academic Press: London, UK, 2023; 518p. [Google Scholar]

- Vodanović, M.; Subašić, M.; Milošević, D.; Savić Pavičin, I. Artificial Intelligence in Medicine and Dentistry. Acta Stomatol. Croat. 2023, 57, 70–84. [Google Scholar] [CrossRef]

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef]

- Lin, S.Y.; Chang, H.Y. Tooth numbering and condition recognition on dental panoramic radiograph images using CNNs. IEEE Access 2021, 9, 166008–166026. [Google Scholar] [CrossRef]

- Lee, J.H.; Kim, D.H.; Jeong, S.N.; Choi, S.H. Detection and diagnosis of dental caries using a deep learning-based convolutional neural network algorithm. J. Dent. 2018, 77, 106–111. [Google Scholar] [CrossRef]

- Albano, D.; Galiano, V.; Basile, M.; Di Luca, F.; Gitto, S.; Messina, C.; Cagetti, M.G.; Del Fabbro, M.; Tartaglia, G.M.; Sconfienza, L.M. Artificial intelligence for radiographic imaging detection of caries lesions: A systematic review. Eur. J. Oral Sci. 2023, 131, e12963. [Google Scholar] [CrossRef]

- Chen, C.C.; Wu, Y.F.; Aung, L.M.; Lin, J.C.; Ngo, S.T.; Su, J.N.; Lin, Y.M.; Chang, W.J. Automatic recognition of teeth and periodontal bone loss measurement in digital radiographs using deep-learning artificial intelligence. J. Formos. Med. Assoc. 2022, 121, 603–613. [Google Scholar] [CrossRef]

- Li, S.; Liu, J.; Zhou, Z.; Zhou, Z.; Wu, X.; Li, Y.; Wang, S.; Liao, W.; Ying, S.; Zhao, Z. Artificial intelligence for caries and periapical periodontitis detection. J. Dent. 2022, 122, 104107. [Google Scholar] [CrossRef] [PubMed]

- Tanikawa, C.; Yamashiro, T. Development of novel artificial intelligence systems to predict facial morphology after orthognathic surgery and orthodontic treatment in Japanese patients. Sci. Rep. 2021, 11, 15853. [Google Scholar] [CrossRef] [PubMed] [PubMed Central]

- Wang, X.; Liu, Y.; Miao, X.; Chen, Y.; Cao, X.; Zhang, Y.; Li, S.; Zhou, Q. Correction: DENSEN: A convolutional neural network for estimating chronological ages from panoramic radiographs. BMC Oral Health 2023, 23, 256. [Google Scholar] [CrossRef]

- De Tobel, J.; Jacobs, R.; Vandermeulen, D.; Thevissen, P. Automated technique for visualizing the development of third molars on panoramic radiographs for forensic age estimation. Forensic Sci. Int. 2019, 294, 54–62. [Google Scholar]

- Wang, J.; Dou, J.; Han, J.; Li, G.; Tao, J. A population-based study to assess two convolutional neural networks for dental age estimation. BMC Oral Health 2023, 23, 38. [Google Scholar] [CrossRef] [PubMed]

- Milošević, D. Deep Learning-Based Analysis of Dental X-Ray Images for Forensic Estimation of Age and Sex. Ph.D. Thesis, University of Zagreb, Faculty of Electrical Engineering and Computing, Zagreb, Croatia, 2022. Available online: https://repozitorij.fer.unizg.hr/islandora/object/fer:7744 (accessed on 28 April 2025).

- Al-Sarem, M.; Al-Asali, M.; Alqutaibi, A.Y.; Saeed, F. Enhanced tooth region detection using pretrained deep learning models. Int. J. Environ. Res. Public Health 2022, 19, 15414. [Google Scholar] [CrossRef] [PubMed] [PubMed Central]

- Sabha, S.U.; Assad, A.; Din, N.M.; Bhat, M.R. From scratch or pretrained? An in-depth analysis of deep learning ap-proaches with limited data. Int. J. Syst. Assur. Eng. Manag. 2024, 1–10. [Google Scholar] [CrossRef]

- Brahmi, W.; Jdey, I.; Drira, F. Exploring the role of convolutional neural networks in dental radiography segmentation: A comprehensive systematic literature review. Eng. Appl. Artif. Intell. 2024, 133, 108510. [Google Scholar] [CrossRef]

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; IEEE: New York, NY, USA, 2014; pp. 740–755. [Google Scholar]

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3431–3440. [Google Scholar]

- Chen, L.-C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking atrous convolution for semantic image segmentation. arXiv 2017, arXiv:1706.05587. [Google Scholar] [CrossRef]

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention—MICCAI; Springer International Publishing: Cham, Switzerland, 2015; pp. 234–241. [Google Scholar]

- Kingma, D.P.; Ba, L.J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar] [CrossRef]

- Viola, P.; Jones, M. Rapid object detection using a boosted cascade of simple features. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Kauai, HI, USA, 8–14 December 2001; Volume 1, p. I. [Google Scholar]

- Choi, H.R.; Siadari, T.S.; Ko, D.Y.; Kim, J.E.; Huh, K.H.; Yi, W.J.; Lee, S.-S.; Heo, M.-S. Can deep learning identify humans by automatically constructing a database with dental panoramic radiographs? PLoS ONE 2024, 19, e0312537. [Google Scholar] [CrossRef]

- Stavrianos, C.; Dietrich, E.; Stavrianos, I.; Petalotis, N. The role of dentistry in the management of mass disasters and bioterrorism. Acta Stomatol. Croat. 2010, 44, 110–119. Available online: https://hrcak.srce.hr/file/84582 (accessed on 22 June 2025).

- Çeshko, Z.; Subašić, M.; Milošević, D.; Savić Pavičin, I.; Vodanović, M. Automated recording of dental status using convolutional neural networks. Acta Stomatol. Croat. 2022, 56, 187. [Google Scholar]

- Küçük, D.B.; Imak, A.; Özçelik, S.T.A.; Çelebi, A.; Türkoğlu, M.; Sengur, A.; Koundal, D. Hybrid CNN-Transformer model for accurate impacted tooth detection in panoramic radiographs. Diagnostics 2025, 15, 244. [Google Scholar] [CrossRef]

- Brahmi, W.; Jdey, I. Automatic tooth instance segmentation and identification from panoramic X-ray images using deep CNN. Multimed. Tools Appl. 2024, 83, 55565–55585. [Google Scholar] [CrossRef]

- Görürgöz, C.; Orhan, K.; Bayrakdar, I.S.; Çelik, Ö.; Bilgir, E.; Odabaş, A.; Aslan, A.F.; Jagtap, R. Performance of a convolutional neural network algorithm for tooth detection and numbering on periapical radiographs. Dentomaxillofac. Radiol. 2022, 51, 20210246. [Google Scholar] [CrossRef] [PubMed] [PubMed Central]

- Rubiu, G.; Bologna, M.; Cellina, M.; Cè, M.; Sala, D.; Pagani, R.; Mattavelli, E.; Fazzini, D.; Ibba, S.; Papa, S.; et al. Teeth segmentation in panoramic dental X-ray using Mask regional convolutional neural network. Appl. Sci. 2023, 13, 7947. [Google Scholar] [CrossRef]

- Leite, A.F.; van Gerven, A.; Willems, H.; Beznik, T.; Lahoud, P.; Gaêta-Araujo, H.; Vranckx, M.; Jacobs, R. Artificial intelligence-driven novel tool for tooth detection and segmentation on panoramic radiographs. Clin. Oral Investig. 2021, 25, 2257–2267. [Google Scholar] [CrossRef] [PubMed]

- Abu Tareq, A.; Faisal, M.I.; Islam, M.S.; Rafa, N.S.; Chowdhury, T.; Ahmed, S.; Farook, T.H.; Mohammed, N.; Dudley, J. Visual Diagnostics of Dental Caries through Deep Learning of Non-Standardised Photographs Using a Hybrid YOLO Ensemble and Transfer Learning Model. Int. J. Environ. Res. Public Health 2023, 20, 5351. [Google Scholar] [CrossRef]

- Ying, S.; Huang, F.; Shen, X.; Liu, W.; He, F. Performance comparison of multifarious deep networks on caries detection with tooth X-ray images. Dentomaxillofac. Radiol. 2023, 52, 20230107. [Google Scholar] [CrossRef]

- Liu, Y.; Xia, K.; Cen, Y.; Ying, S.; Zhao, Z. Artificial intelligence for caries detection: A novel diagnostic tool using deep learning algorithms. J. Dent. Sci. 2023, 18, 509–516. [Google Scholar] [CrossRef]

- Thrall, J.H.; Li, X.; Li, Q.; Cruz, C.; Do, S.; Dreyer, K.; Brink, J.A. Artificial intelligence and machine learning in radiology: Opportunities, challenges, pitfalls, and criteria for success. J. Am. Coll. Radiol. 2018, 15 Pt B, 504–508.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. CoRR 2015, arXiv:1409.1556.

- Gardiyanoğlu, E.; Ünsal, G.; Akkaya, N.; Aksoy, S.; Orhan, K. Automatic segmentation of teeth, crown–bridge restorations, dental implants, restorative fillings, dental caries, residual roots, and root canal fillings on orthopantomographs: Convenience and pitfalls. Diagnostics 2023, 13, 1487.

- Savić Pavičin, I.; Zymber Çeshko, A.; Milošević, D.; Subašić, M.; Vodanović, M. Automated detection and segmentation of teeth and dental procedures from orthopantomograms. Abstract presented at: 28th Annual European Association of Dental Public Health (EADPH) Congress; Heraklion, Crete; 2024 Oct 4. Community Dent. Health 2024, 29, 1.

- Hatvani, J.; Horváth, A.; Michetti, J.; Basarab, A.; Kouamé, D.; Gyöngy, M. Deep learning-based super-resolution applied to dental computed tomography. IEEE Trans. Radiat. Plasma Med. Sci. 2018, 3, 120–128.

- Takahashi, S.; Sakaguchi, Y.; Kouno, N.; Takasawa, K.; Ishizu, K.; Akagi, Y.; Aoyama, R.; Teraya, N.; Bolatkan, A.; Shinkai, N.; et al. Comparison of vision transformers and convolutional neural networks in medical image analysis: A systematic review. J. Med. Syst. 2024, 48, 84.

- Chen, Y.; Wang, T.; Tang, H.; Zhao, L.; Zhang, X.; Tan, T.; Gao, Q.; Du, M.; Tong, T. CoTrFuse: A novel framework by fusing CNN and transformer for medical image segmentation. Phys. Med. Biol. 2023, 68, 175027.

- Schneider, L.; Krasowski, A.; Pitchika, V.; Bombeck, L.; Schwendicke, F.; Büttner, M. Assessment of CNNs, transformers, and hybrid architectures in dental image segmentation. J. Dent. 2025, 156, 105668.

- Hung, K.M.; Montalvao, C.; Tanaka, R.; Kawai, T.B.; Bornstein, M. The use and performance of artificial intelligence applications in dental and maxillofacial radiology: A systematic review. Dentomaxillofac. Radiol. 2020, 49, 20190107.

- Khanagar, S.B.; Al-Ehaideb, A.; Maganur, P.C.; Vishwanathaiah, S.; Patil, S.; Baeshen, H.A.; Sarode, S.C.; Bhandi, S. Developments, application, and performance of artificial intelligence in dentistry—A systematic review. J. Dent. Sci. 2021, 16, 508–522.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).