Abstract

The classification of political inquiry messages is a crucial task in government affairs. However, with the increasing number of inquiry messages on platforms, it is difficult for government departments to accurately and efficiently categorize these messages solely through manual labor. Existing methods find it challenging to achieve excellent performance due to the sparsity of the features and the imbalanced data. In this paper, we propose a new framework (BTCAM) for automatically classifying political inquiry messages. Specifically, we first propose a topic-based data augmentation algorithm (TDA) to improve the diversity and quality of data. In addition, in order to enable the model to focus on the key information in the text, we propose an applicable streamlined convolutional block attention module (SCBAM), which can highlight the salient features on the channel and spatial axes. Extensive experiments show that the accuracy and recall of BTCAM reach 0.939 and 0.931, respectively, outperforming the state-of-the-art methods.

1. Introduction

With the development of information technology, political inquiry platforms have emerged as a crucial channel for citizen–government department communication, particularly during the COVID-19 pandemic. Citizens typically submit inquiry messages through various information services. These messages are then collected, classified, and forwarded to the relevant government departments for response [1]. However, due to the sheer volume and complexity of inquiry messages, manual classification based on expertise is inefficient and error-prone [2]. This will inevitably lead to a decrease in public satisfaction, which is harmful to the establishment of a positive government image. Therefore, the message-processing efficiency must be improved. With the emergence of natural language processing (NLP) technology, it has become feasible to automate the classification of these political inquiry messages, enabling the advancement towards building a smart government.

Machine learning algorithms can automatically learn features and patterns by training large amounts of text data, thereby building a model that can process natural language. Therefore, more and more researchers have begun to introduce these algorithms into government affairs management to promote the construction of smart government affairs and improve service levels. For example, rule-based algorithms were first proposed to predict governance indexes or classify emails [3,4]. Hong et al. [5] utilized text-mining technology to analyze extensive data on civil complaints to identify social problems and develop solutions automatically. Considering each document covers a different topic, Hagen [6] utilized the latent Dirichlet allocation (LDA) method to automatically identify the topics of electronic petitions, demonstrating that the discovered topics were closely related to social events. Ryu et al. [7] analyzed data on citizen petitions and looked into the variety of these requests by assessing the relevance of bus-related keywords. LDA performs well in some text analysis and clustering tasks, but it may encounter challenges in classification tasks. Therefore, Fu and Lee [8] selected support vector machines (SVMs) to improve the classification performance on official messages. Subsequently, the introduction and use of convolutional neural networks (CNNs) designed for text processing quickly outperformed the benchmarks. For example, Dieng et al. [9] introduced Topic-RNN, which can more accurately identify topics hidden in official documents. In response to the need to capture contextual information over time, researchers have utilized LSTM [10,11,12] in government affairs text processing with time-series analysis, resulting in remarkable advancements. Paul et al. 
[13] proposed a cyberbullying detection method based on the BERT pre-trained model and achieved excellent performance, confirming the strong generalization ability of BERT in complex social media text classification tasks. However, Chinese words often carry multiple meanings, and BERT with word-level embeddings cannot adapt well to this characteristic.

Despite the effectiveness of machine learning algorithms in dealing with government affairs, there is limited research on the automatic classification of government inquiry messages. The automatic classification of political inquiry messages still faces two serious challenges:

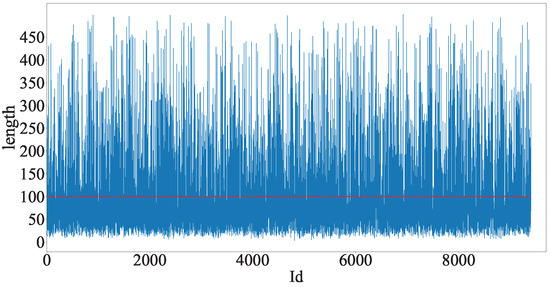

- As illustrated in Figure 1, political inquiry messages are usually short, with most messages shorter than 100 words. Because the text is so short, the associations and contextual information between different elements are incomplete, which can make classification ambiguous. For example, a message such as “There is construction at night near the community. The sound is very loud and affects rest. I hope it can be solved.” may involve categories such as “Urban and rural construction” and “Transportation”. Determining the correct category requires more contextual information: if the message concerns road repair or expansion, it leans toward “Transportation”; if it concerns the construction of facilities within the community, it may fall under “Urban and rural construction”.

Figure 1. The length of political inquiry messages. Each blue vertical line represents the length of a single message, while the red dashed line indicates the threshold of 100 characters.

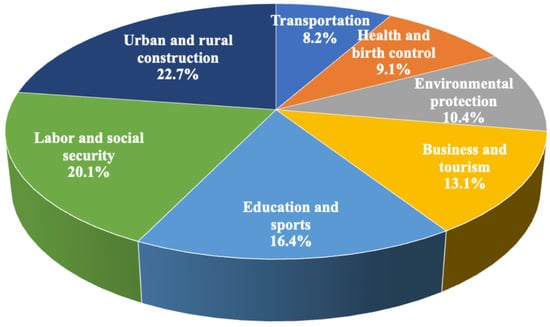

- Another problem is data imbalance. Figure 2 shows the proportion of each category of political inquiry messages. “Urban and rural construction” is the largest category, with a proportion of 22.7%, followed by “Labor and social security”. “Transportation” is the smallest, with 8.2%. This indicates a significant difference in the distribution of categories in the dataset, which negatively impacts model performance.

Figure 2. The proportions of different categories.


To address the above issues, we propose an attention-based BiLSTM for the classification of imbalanced political inquiry messages, named BTCAM. To address the data sparsity and data imbalance problems, we first propose a topic-based data augmentation algorithm to increase the number of high-quality messages. In addition, we design a dual-gated convolution mechanism that utilizes skip connections to preserve the semantics in the original input while extracting important features through a streamlined convolutional block attention module (SCBAM). The main contributions of this paper can be summarized as follows:

- We propose a topic-based data augmentation algorithm (TDA). Experiments demonstrate that TDA not only enhances the linguistic quality of sentences but also markedly elevates the operational efficiency of data processing.

- We design a streamlined convolutional block attention mechanism (SCBAM) and demonstrate its effectiveness in short-text classification. This plug-and-play module can be seamlessly integrated into diverse model architectures with minimal computational overhead.

- We propose an attention-based BiLSTM for the imbalanced classification of political inquiry messages. Extensive experiments show that BTCAM outperforms the state-of-the-art baseline.

2. Related Work

2.1. Text Classification

Text classification is a common NLP task [14,15]. Initially, machine learning (ML) algorithms dominated practical applications, and many studies have utilized ML technology to improve government efficiency. For example, Duan and Yao [16] used SVM to classify messages on an online political platform. Wang et al. [17] took messages from an online political platform, and then used naive Bayes, decision trees, random forests, and BP neural networks to classify messages. Since traditional neural networks find it difficult to process long-sequence data, Li et al. [18] used the CBOW model to vectorize the message text in the Hunan Provincial Government Questioning Platform, and built classification models such as TextCNN and TextRNN to compare and classify the message problem, successfully increasing the accuracy rate to 91%. However, collecting multi-category data is often more challenging in practice. This can easily result in significant disparities in the amount of data collected from different categories, leading to prediction bias.

2.2. Data Augmentation

Data augmentation, which is extensively utilized in NLP [19,20], attempts to provide a more effective technique to collect and label data for increasing the volume and diversity of the dataset. Zhang et al. [21] generated adversarial samples by randomly sampling real-world samples near a specific training sample for linear interpolation. The generated samples are similar to the training samples, but the labels are different. Many few-shot learning methods [22] exploit feature-space augmentation to obtain better feature extractors that augment new categories. The bottleneck of these approaches, also known as mixed-sample data augmentation, is the continuous input demand, which has been solved by Chen and Yang [23]. Despite the empirical success, data augmentation techniques continue to have serious drawbacks. Therefore, Sennrich et al. [24] developed a well-known back-translation technology to increase the diversity of data. Following that, pre-training techniques and neural network models, such as transformers [25], were successfully used for data augmentation. The meaning of complex expressions is constructed from their subparts. As a result, Zhang and Yang [21] proposed a combinatorial data augmentation technique for natural language comprehension that makes use of constituency parse trees to decompose sentences into their component substructures and then recombine them to produce new sentences. Model-based data augmentation techniques work well, but they have high implementation costs relative to performance gains. Wei and Zou [26] presented an Easy Data Augmentation (EDA) method that incorporates a collection of token-level random perturbation operations such as synonym replacement (SR), random insertion (RI), random swap (RS), and random deletion (RD). Karimi et al. [27] presented an Easier Data Augmentation (AEDA) strategy that only requires the random addition of punctuation from {“.”, “;”, “?”, “:”, “!”, “,”}. 
Rule-based data augmentation techniques are easier to use and more cost-effective than model-based techniques, and their transparency is important when handling government data. However, random perturbation may lead to semantic distortion and fail to generate high-quality data for minority categories.

2.3. Attention Mechanism

An attention mechanism is a technique used in neural networks to selectively focus or pay attention to important parts of the input data. The basic idea of the attention mechanism is to dynamically calculate the importance score of each input element based on certain features or the context of the input, and then perform a weighted summation of the input based on these scores. In this way, the model can pay more attention to the key information related to the task and ignore other less important parts when processing large amounts of input data. The utilization of the attention mechanism has been successful in fields such as image restoration, sentiment classification, and automatic speech recognition (ASR) [28,29,30]. For example, Hu et al. [31] designed a Squeeze-and-Excitation (SE) network that enhances performance by reinforcing important features between channels. Subsequently, a convolutional block attention mechanism (CBAM) [32] was proposed, which is a lightweight module that improves performance while minimizing overhead. It has two consecutive sub-modules: the channel attention module and spatial attention module. These two consecutive attention modules are used to infer which information the model should learn to focus on or ignore during training.
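The scoring-and-weighting idea described above can be sketched in a few lines of plain Python. This is a generic illustration of attention as a softmax-weighted sum, not the SCBAM module proposed later in this paper; the function names and the dot-product scoring rule are assumptions made for the example.

```python
import math


def softmax(scores):
    """Turn raw importance scores into weights that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, elements):
    """Score each input element against a query and return the weighted sum."""
    # Assumed scoring rule: dot product between the query and each element.
    scores = [sum(q * e for q, e in zip(query, element)) for element in elements]
    weights = softmax(scores)
    dim = len(elements[0])
    context = [
        sum(w * element[k] for w, element in zip(weights, elements))
        for k in range(dim)
    ]
    return weights, context


# Three 2-dimensional input elements; the query is most similar to the second one.
elements = [[1.0, 0.0], [0.0, 2.0], [0.5, 0.5]]
weights, context = attend([0.0, 1.0], elements)
```

The element with the highest score receives the largest weight, so it dominates the weighted sum, which is the sense in which the model "pays more attention" to it.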

3. Methodology

3.1. Preliminaries

3.1.1. Notation

For quick reference, we provide a comprehensive summary of notation in Table 1.

Table 1.

Summary of notation.

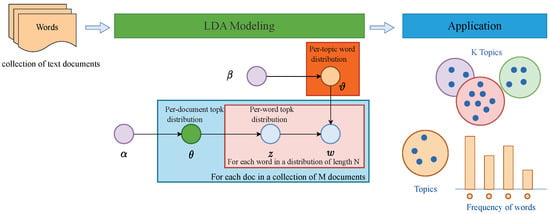

3.1.2. Topic Modeling

Topic modeling is the process of learning from unlabeled data to uncover latent topics and their similarities, which is often accomplished using probabilistic models. Latent semantic analysis (LSA), which was the first attempt to mine semantic structure in text collections, was developed by Deerwester et al. [33] to tackle the issue of polysemy in semantic retrieval. Probabilistic latent semantic analysis [34] is the first unsupervised learning technique to employ probabilistic generative models for topic analysis of texts. However, the PLSI algorithm has a tendency toward overfitting since the number of parameters increases linearly with topics. As a result, Blei [35] proposed latent Dirichlet allocation (LDA), which is now one of the most widely used topic modeling methods. As shown in Figure 3, LDA is a three-layer Bayesian model, including a document layer, topic layer, and word layer. Among them, the document layer represents the entire document collection, and each document is a collection of a series of words. The topic layer represents the underlying topics in a collection of documents. Each topic is a probability distribution of a set of words, indicating the likelihood of each word appearing under this topic. Each document is considered to be generated from a mixture of these latent topics. The word layer represents each word in the document. Each word is drawn from a specific topic, which is drawn from the topic distribution of documents.

where $\alpha_i > 0$, and $\Gamma(\cdot)$ is the gamma function.
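For reference, the Dirichlet prior that LDA [35] places on the topic proportions of a document has the standard density below. The symbols $k$ (number of topics), $\theta$ (topic proportions), and $\alpha$ (Dirichlet parameter) are assumed here for illustration and may differ from the notation in Table 1.

```latex
p(\theta \mid \alpha)
  = \frac{\Gamma\left(\sum_{i=1}^{k} \alpha_i\right)}{\prod_{i=1}^{k} \Gamma(\alpha_i)}
    \, \theta_1^{\alpha_1 - 1} \cdots \theta_k^{\alpha_k - 1},
  \qquad \alpha_i > 0
```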

Figure 3.

LDA modeling and applications. Below is a graphical model of LDA. Its application describes clustering words by topic.

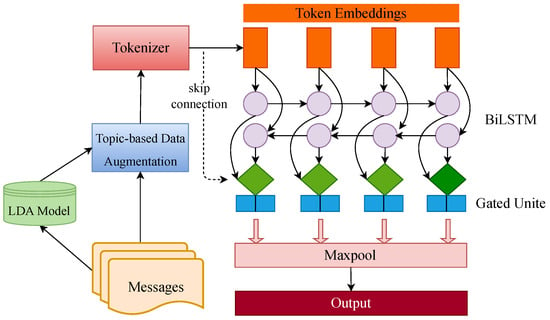

3.2. Framework Overview of BTCAM

The overall framework of BTCAM is illustrated in Figure 4. In this framework, we first introduce LDA to obtain the topic terms for each category, and then use the proposed topic-based data augmentation algorithm (TDA) to generate more high-quality samples. Subsequently, the embedding layer is used to obtain a vectorized representation, which is then fed into the BiLSTM to extract contextual information. The information flows into the dual-gated convolution mechanism, which utilizes skip connections to retain the details and semantic information in the original input and emphasizes key information through the streamlined convolutional block attention module (SCBAM). Finally, we use the dropout layer to prevent overfitting, and the output is obtained using the softmax function.

Figure 4.

The architecture of the BTCAM model.

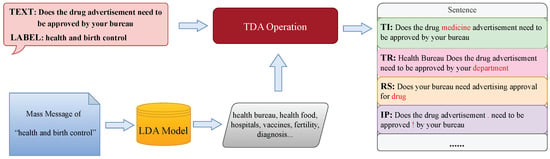

3.2.1. Topic-Based Data Augmentation

Deep learning is a data-driven strategy that is prone to overfitting when only a few examples are available. Manually labeling data is costly, so using data augmentation techniques to add high-quality samples is an effective solution. In this paper, considering the cost of augmentation, we adopt a rule-based data augmentation method. Specifically, we use the topic-term sets extracted by LDA to increase the number of samples in the minority categories and so promote balance between categories. First, we extend the text by inserting synonyms of the topic terms into the message. Then, topic-term replacement (TR), random swap (RS), and punctuation insertion (IP) operations are used to increase the number of samples in the minority categories. Table 2 shows the operational guidelines of TDA.

Table 2.

Topic-based data augmentation operation.

Take the sentence “Does the drug advertisement need to be approved by your bureau”, whose label is “health and birth control”, as an example (Figure 5). First, we merge all messages that belong to “health and birth control” to obtain a long-text corpus for this category. Then, we feed the corpus into the LDA model to extract the category topic terms: health food, vaccines, fertility, diagnosis... Finally, we randomly select topic terms and apply the operations in the TDA algorithm to generate more high-quality samples.

Figure 5.

The implementation process of the topic-based data augmentation algorithm, taking “Does the drug advertisement need to be approved by your bureau” as an example.
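The augmentation steps described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the exact rules of each operation are not given here, so the behaviour of `topic_term_replacement`, the `topic_synonyms` dictionary (topic term mapped to a list of synonyms), and all function names are hypothetical. The punctuation set is the one quoted for AEDA in Section 2.2.

```python
import random

PUNCTUATION = [".", ";", "?", ":", "!", ","]  # punctuation set quoted for AEDA


def insert_topic_synonym(tokens, topic_synonyms, rng):
    """Extend the message with a synonym of a randomly chosen topic term."""
    term = rng.choice(sorted(topic_synonyms))
    synonym = rng.choice(topic_synonyms[term])
    position = rng.randint(0, len(tokens))
    return tokens[:position] + [synonym] + tokens[position:]


def topic_term_replacement(tokens, topic_synonyms, rng):
    """TR (assumed behaviour): swap one topic term in the message for a synonym."""
    candidates = [i for i, tok in enumerate(tokens) if tok in topic_synonyms]
    out = list(tokens)
    if candidates:
        i = rng.choice(candidates)
        out[i] = rng.choice(topic_synonyms[tokens[i]])
    return out


def random_swap(tokens, rng):
    """RS: exchange the positions of two randomly chosen tokens."""
    out = list(tokens)
    if len(out) >= 2:
        i, j = rng.sample(range(len(out)), 2)
        out[i], out[j] = out[j], out[i]
    return out


def insert_punctuation(tokens, rng):
    """IP: insert one random punctuation mark at a random position."""
    position = rng.randint(0, len(tokens))
    return tokens[:position] + [rng.choice(PUNCTUATION)] + tokens[position:]


def tda_augment(tokens, topic_synonyms, n_aug, seed=0):
    """Generate n_aug variants of one tokenized minority-category message."""
    rng = random.Random(seed)
    operations = [
        lambda t: topic_term_replacement(t, topic_synonyms, rng),
        lambda t: random_swap(t, rng),
        lambda t: insert_punctuation(t, rng),
    ]
    augmented = []
    for _ in range(n_aug):
        extended = insert_topic_synonym(tokens, topic_synonyms, rng)
        augmented.append(rng.choice(operations)(extended))
    return augmented


# Hypothetical topic terms and synonyms for the "health and birth control" example.
message = "does the drug advertisement need to be approved by your bureau".split()
topic_synonyms = {"drug": ["medicine"], "vaccines": ["immunization"]}
samples = tda_augment(message, topic_synonyms, n_aug=3)
```

Because every operation is a simple list manipulation driven by a precomputed topic-term set, the cost per augmented sentence stays small, which is in line with the efficiency argument made for rule-based augmentation.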

3.2.2. Embedding Layer

Given n messages, where n represents the data size, each message carries one label from {“Urban and rural construction”, “Environmental protection”, “Transportation”, “Labor and social security”, “Education and sports”, “Business and tourism”, “Health and birth control”}. To obtain a vectorized representation of each token, we use the embedding function found in the torch.nn package. Specifically, the embedding layer returns the embedding vector associated with the index of each token, and these vectors capture the semantic relationships between the tokens that the indices represent. In other words, the embedding function is a straightforward lookup table that stores the embedding vectors of a fixed-size dictionary. In this paper, we transform each message into its embedded representation X.

3.2.3. Context-Information-Extraction Layer

The embedding layer obtains the representation of each token but ignores the meaning of the word and its relationship to other words in the context. Therefore, we use bidirectional long short-term memory (BiLSTM), which consists of a series of memory units, to extract contextual information. Each memory block has three gates and one storage unit. The input gate and output gate are used to update the cell state and obtain the next hidden state, respectively, while the forget gate employs the sigmoid function to determine whether information should be retained or discarded. The LSTM memory cell is computed as follows:

where $x_t$ represents the input at time step $t$, $h_t$ is the hidden-layer representation at time step $t$, and $c_t$ represents the cell state at time step $t$. $W_f$, $W_i$, and $W_o$ represent the weight coefficients of the forget gate, input gate, and output gate for $x_t$, respectively. $U_f$, $U_i$, $U_c$, and $U_o$ represent the weight coefficients of the forget gate, input gate, cell state, and output gate for $h_{t-1}$, respectively. $b_f$, $b_i$, $b_c$, and $b_o$ represent the bias values of the forget gate, input gate, cell state, and output gate in the feature extraction process, respectively.
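For reference, a standard LSTM cell consistent with the gate descriptions above can be written as follows. The symbol names are assumptions chosen for this sketch: $W_\ast$ and $U_\ast$ are the weights applied to the input $x_t$ and the previous hidden state $h_{t-1}$, $b_\ast$ are the biases, $\tilde{c}_t$ is the candidate cell state, $\sigma$ is the sigmoid function, and $\odot$ is element-wise multiplication.

```latex
\begin{aligned}
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f), \\
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i), \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o), \\
\tilde{c}_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c), \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t, \\
h_t &= o_t \odot \tanh(c_t).
\end{aligned}
```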

In this paper, we employ bidirectional LSTM as in [36], which combines forward LSTM with backward LSTM and preserves data information in both directions. The output can be portrayed as follows:

Concatenate each $\overrightarrow{h_t}$ and $\overleftarrow{h_t}$ to obtain a hidden state $h_t$. Let the hidden unit number for each unidirectional LSTM be $u$. For simplicity, denote all the $h_t$ as $H$:

where ⊕ denotes the concatenation operation.
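To make the bidirectional concatenation concrete, the following framework-free sketch runs a toy LSTM forward and backward over a sequence and joins the two hidden states at each position. It is an illustration only: the parameter names (`Wf`, `Uf`, `bf`, and so on), the random initialization, and the toy sizes are assumptions, and a practical implementation would use a trained BiLSTM from a deep learning library instead.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, U, b, x, h):
    """Compute W x + U h + b with list-based matrices and vectors."""
    return [
        sum(w * xi for w, xi in zip(w_row, x))
        + sum(v * hi for v, hi in zip(u_row, h))
        + bias
        for w_row, u_row, bias in zip(W, U, b)
    ]


def lstm_step(x, h_prev, c_prev, p):
    """One LSTM update: gates, candidate state, new cell state, new hidden state."""
    f = [sigmoid(z) for z in affine(p["Wf"], p["Uf"], p["bf"], x, h_prev)]
    i = [sigmoid(z) for z in affine(p["Wi"], p["Ui"], p["bi"], x, h_prev)]
    o = [sigmoid(z) for z in affine(p["Wo"], p["Uo"], p["bo"], x, h_prev)]
    g = [math.tanh(z) for z in affine(p["Wc"], p["Uc"], p["bc"], x, h_prev)]
    c = [ft * cp + it * gt for ft, cp, it, gt in zip(f, c_prev, i, g)]
    h = [ot * math.tanh(ct) for ot, ct in zip(o, c)]
    return h, c


def run_lstm(xs, p, u):
    """Run a unidirectional LSTM over a sequence and collect every hidden state."""
    h, c = [0.0] * u, [0.0] * u
    states = []
    for x in xs:
        h, c = lstm_step(x, h, c, p)
        states.append(h)
    return states


def bilstm(xs, p_fwd, p_bwd, u):
    """Concatenate forward and backward hidden states at each position."""
    forward = run_lstm(xs, p_fwd, u)
    backward = run_lstm(xs[::-1], p_bwd, u)[::-1]  # re-align to the original order
    return [hf + hb for hf, hb in zip(forward, backward)]  # each row has length 2u


def init_params(d, u, rng):
    """Small random weights; a trained model would learn these instead."""
    p = {}
    for gate in "fioc":
        p["W" + gate] = [[rng.uniform(-0.1, 0.1) for _ in range(d)] for _ in range(u)]
        p["U" + gate] = [[rng.uniform(-0.1, 0.1) for _ in range(u)] for _ in range(u)]
        p["b" + gate] = [0.0] * u
    return p


rng = random.Random(0)
d, u = 3, 2  # toy embedding size and hidden size
xs = [[rng.uniform(-1, 1) for _ in range(d)] for _ in range(4)]  # 4 embedded tokens
H = bilstm(xs, init_params(d, u, rng), init_params(d, u, rng), u)
```

Here `H` has one row per token and 2u entries per row, so each position carries context from both the left and the right of the sequence.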

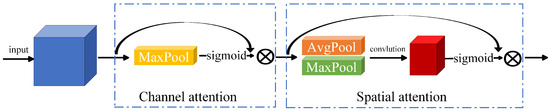

3.2.4. Dual-Gated Convolution Mechanism Layer

If each word in the input text is considered equally relevant to each word in the output, the semantic vector cannot accurately depict the entire information sequence. Therefore, we propose dual-gated convolutional layers to extract important information. Dual-gated convolution layers include a convolutional block attention gate and information fusion gate. The convolutional attention mechanism gate extracts important information by SCBAM and controls the contribution ratio of different information to the prediction results, while the information fusion gate retains the important semantics of the original input through shortcut connection and max pooling operations.

(1) Attention mechanism gate.

The convolutional block attention mechanism employs channel and spatial attention modules sequentially to highlight significant features along the channel and spatial axes, producing positive results in computer vision tasks. Unlike image data, each channel in text data represents the relationship features of adjacent words in the text. For the reasons stated above, we propose a convolutional block attention mechanism adapted to text (named SCBAM), as shown in Figure 6. Max-pooling on the channel axis is used to first capture keyword context information, and the spatial attention mechanism is then applied to gather more details about the keywords. The implementation details of SCBAM are as follows:

where denotes the sigmoid function and represents a convolution operation with a filter size of . ⊗ denotes element-wise multiplication.

Figure 6.

The structure of SCBAM, including channel and spatial attention modules.
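One plausible way to write the two attention steps, following the CBAM formulation in [32] with the average-pooling branch of the channel attention removed (as Section 4.4.3 describes), is sketched below. The symbols $F$ (input feature map), $M_c$ and $M_s$ (channel and spatial attention maps), the shared $\mathrm{MLP}$, and the convolution $f$ are assumptions borrowed from CBAM. In particular, whether the spatial branch of SCBAM keeps both pooling operations is not stated here, so the second line should be read as the CBAM default rather than a confirmed detail.

```latex
\begin{aligned}
M_c(F) &= \sigma\big(\mathrm{MLP}(\mathrm{MaxPool}(F))\big), & F' &= M_c(F) \otimes F, \\
M_s(F') &= \sigma\big(f([\mathrm{AvgPool}(F');\, \mathrm{MaxPool}(F')])\big), & F'' &= M_s(F') \otimes F'.
\end{aligned}
```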

(2) Information fusion gate.

To prevent the loss of important information, we use a shortcut connection that combines X and H to represent the fused information. Then, max pooling is used to reduce the risk of overfitting and improve the generalization ability of the model.

where ⊕ denotes the concatenation operation.

Finally, the outputs of the attention mechanism gate and the information fusion gate are concatenated.

where ⊕ denotes the concatenation operation.

3.2.5. Output Layer

Finally, we use the dropout layer to prevent overfitting and obtain the output through the softmax function. The pseudocode of the proposed BTCAM is shown in Algorithm 1.

Algorithm 1. The pseudocode of BTCAM.

Input: A set of messages, where each element is an individual message.

Output: Predicted labels for the input messages.

4. Experiment

In this section, we conduct experiments to demonstrate the effectiveness of the proposed model. These experiments focus on answering four key questions:

- RQ1: Does BTCAM outperform other baseline models?

- RQ2: Is the combination of topic-based data augmentation and the streamlined convolutional block attention mechanism crucial for BTCAM?

- RQ3: What is the impact of different attention mechanisms?

- RQ4: What is the efficiency and quality of the TDA algorithm?

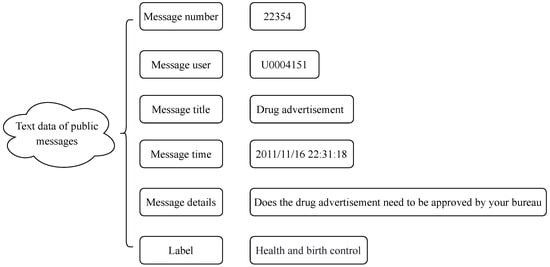

4.1. Datasets

In this paper, we collected public messages on government affairs posted on the Internet in various regions of a province from November 2010 to January 2020 (named POData). The dataset has a total of 9832 messages, each with the following attributes: “Message number”, “Message user”, “Message title”, “Message time”, “Message details”, and “Label”. “Label” includes “Urban and rural construction”, “Environmental protection”, “Transportation”, “Labor and social security”, “Education and sports”, “Business and tourism”, and “Health and birth control”. The description of a single sample is shown in Figure 7. Since important information is stored in both “Message title” and “Message details”, we first merge “Message title” and “Message details” and then use the merged text as the input to train the classification model.

Figure 7.

The description of a single sample.

To evaluate the cross-domain generalization ability of the model on Chinese text, we conducted experiments on the THUCNews dataset [37]. This large-scale Chinese text classification dataset contains approximately 740,000 news documents covering 14 categories and is constructed by screening the historical RSS subscription content of Sina News.

4.2. Evaluation Metrics

The confusion matrix provides a detailed evaluation of the classification model’s performance by illustrating the relationship between the sample data’s actual properties and the predicted results. Taking binary classification as an example, predicted values and real values are shown in turn in the rows and columns. According to the combination of the actual category and the predicted result, the samples can be divided into true positive (TP), false positive (FP), false negative (FN), and true negative (TN). The following is a description of each parameter:

- TP (true positive): The number of samples correctly predicted as positive.

- TN (true negative): The number of samples that are correctly predicted as negative.

- FP (false positive): The number of samples incorrectly predicted as positive.

- FN (false negative): The number of samples incorrectly predicted as negative.

Three indicators are drawn from the confusion matrix to assess the effectiveness of the classification model:

- Accuracy: Accuracy is the proportion of all samples that are predicted correctly:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

- Precision: Precision is the proportion of samples predicted as positive that are actually positive:

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

- Recall: Recall is the proportion of actual positive samples that are correctly predicted as positive:

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$
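The three indicators can be computed directly from the confusion-matrix counts, as in the short sketch below. The function names are illustrative, and the macro-averaging used for the multi-class case is an assumption, since the averaging scheme behind the reported precision and recall is not specified here.

```python
def binary_metrics(tp, tn, fp, fn):
    """Accuracy, precision, and recall from the four confusion-matrix counts."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return accuracy, precision, recall


def macro_metrics(y_true, y_pred):
    """One-vs-rest precision and recall per label, averaged over labels."""
    labels = sorted(set(y_true) | set(y_pred))
    precisions, recalls = [], []
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        tn = len(y_true) - tp - fp - fn
        _, precision, recall = binary_metrics(tp, tn, fp, fn)
        precisions.append(precision)
        recalls.append(recall)
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    return accuracy, sum(precisions) / len(labels), sum(recalls) / len(labels)


# Binary example with made-up counts.
accuracy, precision, recall = binary_metrics(tp=40, tn=45, fp=5, fn=10)

# Multi-class example with made-up labels.
macro = macro_metrics(["a", "a", "b", "b"], ["a", "b", "b", "b"])
```

Macro-averaging weights every category equally, so it exposes poor performance on minority categories that overall accuracy can hide on an imbalanced dataset.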

4.3. Baselines and Experiment Setup

We compare the following methods with our proposed model. The experiments were conducted on a 64-bit Windows 10 operating system, with a 12th Gen Intel(R) Core(TM) i5-12400, 2.50 GHz processor (Intel, Santa Clara, CA, USA), and an NVIDIA RTX 4060Ti GPU (Nvidia, Santa Clara, CA, USA). In the experiments, we used Python version 3.10.11, PyTorch version 2.1.1, and CUDA API version 12.2. In addition, we tuned the model parameters and determined the optimal settings through grid search, as shown in Table 3.

Table 3.

Parameter settings.

- TextCNN [38]: This model is a classic baseline for text classification.

- TextRNN [39]: This method uses RNNs, usually bidirectional LSTM or GRU, to solve text classification problems.

- FastText [40]: This model extends word2vec to handle sentence and document classification, with a very fast training speed.

- BiLSTM_ATT [41]: This integrates RNN and an attention mechanism. Crucial information is captured using the attention mechanism.

- DPCNN [42]: By continuously deepening the network, this technique extracts long-distance text dependencies to produce better classification outcomes.

- BERT [13]: This method is a pre-trained deep bidirectional language model based on the Transformer architecture, which improves model performance by learning context-related word embeddings from large-scale text.

4.4. Results and Discussion

4.4.1. Comparison of Baseline Models (RQ1)

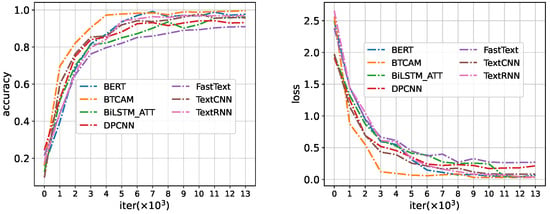

A comparison of the results of BTCAM on POData with six baselines is shown in Table 4. In the table, FastText, DPCNN, TextCNN, TextRNN, BiLSTM_ATT, and BERT represent the performance before data augmentation. TDA+BiLSTM_ATT, TDA+DPCNN, TDA+FastText, TDA+TextCNN, TDA+TextRNN, and TDA+BERT represent the performance after data augmentation using the proposed TDA algorithm. Figure 8 shows the accuracy and loss during the iterative process of the training of each model. Based on a comprehensive analysis of the figures and tables, it is easy to find the following:

Table 4.

The performance of different models on POData.

Figure 8.

The training of BTCAM on POData.

- Without data augmentation, the FastText, DPCNN, and TextCNN models have poor performance on the training dataset and validation dataset. Although TextRNN, BiLSTM_ATT, and BERT improve the accuracy of the model on the training dataset, the recall was only 0.632 and 0.651.

- After using the proposed TDA algorithm to increase the number of minority categories, the accuracy of the model on the training dataset is greater than 0.9, and the accuracy and recall on the validation dataset are both greater than 0.8. The performance of the models with TDA has improved greatly.

- Compared with the baseline, the model converges faster and achieves the best performance. On the training dataset, the accuracy of BTCAM reached 0.996, and the loss value dropped to 0.03. On the validation dataset, the precision and recall of BTCAM were both greater than 0.93.

- To further verify the generalizability of BTCAM, additional experiments were conducted on the public THUCNews dataset, a widely used benchmark for Chinese short-text classification. As shown in Table 5, BTCAM outperforms all baselines by a significant margin, thus confirming its adaptability beyond government-specific data.

Table 5. The performance of different models on THUCNews.


Table 6 is the classification report of the BTCAM model on the validation dataset. The table shows the precision, recall, and F1 values of different categories. It is easy to find that the model’s prediction performance for all categories is above 90%, which is the best performance so far.

Table 6.

Precision, recall, and F1 of different categories on POData.

4.4.2. Ablation Study (RQ2)

The proposed TDA and SCBAM form the basis of the classification network in BTCAM, so we conduct two groups of ablation tests to confirm their efficacy. Table 7 shows the experimental results, where the TDA row reports BTCAM with the TDA algorithm removed and the SCBAM row reports BTCAM with SCBAM removed. The following can be observed from the table:

Table 7.

The performance of different components on POData.

- When the TDA component is removed, the accuracy of the model on the training dataset is only 0.882, and the accuracy and recall on the validation dataset are reduced to 0.601 and 0.581, respectively. This shows that the TDA algorithm has a great impact on the performance of BTCAM, especially on recall, which indicates that the TDA component is essential for BTCAM.

- When the SCBAM component is removed, the accuracy on the training dataset is 0.983, and the accuracy and recall on the validation dataset are 0.922 and 0.913, respectively. The performance of the model dropped by varying degrees, and the drop was more obvious on the validation dataset. This shows that SCBAM can effectively improve the generalization ability of the model, which proves that the SCBAM component is necessary for BTCAM.

4.4.3. Analysis of Attention Mechanism (RQ3)

In this paper, we compared the CBAM in [32] with its streamlined version. Among the compared modules, SE [31] is a channel attention module with average pooling, and SCBAM is a streamlined version of CBAM that eliminates the average-pooling operation in channel attention. In addition, we study the influence of different connections between the channel attention module and the spatial attention module: SCBAM& denotes the channel and spatial attention modules connected in parallel, while SCBAM+ denotes the two modules connected in series. The results are shown in Table 8. SCBAM performs well in terms of accuracy and generalization, and the serial connection is superior to the parallel connection.

Table 8.

The performance of different attention modules on POData.

4.4.4. Analysis of Efficiency and Quality (RQ4)

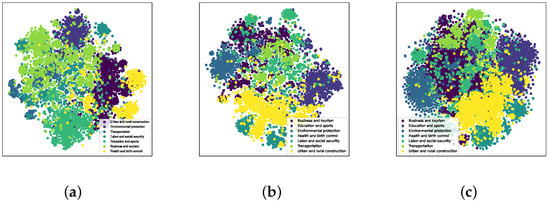

To further demonstrate the efficiency of the proposed TDA algorithm and the quality of the augmented data, we used the EDA, AEDA, and TDA algorithms to generate more samples for minority categories and measured their running time. Then, we used the augmented data to train FastText, TextCNN, BiLSTM_ATT, TextRNN, and BTCAM. The results are shown in Table 9. In addition, we used t-SNE to visualize the embeddings obtained using the different methods, as shown in Figure 9. Combining Table 9 and Figure 9, the following conclusions can be drawn.

Table 9.

Execution time and performance on POData. Execution time is compared for adding 15 augmented sentences per message using EDA, AEDA, and TDA, together with the average accuracy of the classification models trained on the augmented data.

Figure 9.

Visual analysis of embeddings on POData augmented by (a) EDA, (b) AEDA, and (c) TDA.

- Compared with EDA, the execution time of TDA is reduced from 10,488 s to 129 s, and the efficiency of the algorithm has been greatly improved.

- The model performs best on the data obtained by the TDA algorithm. The reason is that the TDA algorithm can generate higher-quality samples, and the boundaries between different categories are clearer. This proves the superiority of the TDA algorithm.

5. Conclusions and Future Work

In this study, we developed a deep learning framework (BTCAM), based on an improved bidirectional LSTM, that automatically classifies imbalanced political inquiry messages. Within BTCAM, we first proposed a topic-based data augmentation algorithm (TDA). Unlike existing algorithms, TDA focuses on topic information when generating augmented data, which improves the quality of the augmented data, greatly reduces the augmentation time, and alleviates the class imbalance problem. Second, in the dual-gated convolutional mechanism module, we developed a streamlined convolutional block attention module (SCBAM), which effectively improves the generalization ability of the model. Extensive experiments show that BTCAM achieves superior accuracy and recall in the automatic classification of political inquiry messages.

Although the proposed model exhibits excellent prediction capabilities, it currently relies solely on traditional vectorization methods. In future work, we will explore pre-trained language models to extract word vectors with rich contextual semantics. These context-aware embeddings will serve as supplementary features, fused with the conventional vector representations before being fed into the model. This integrated approach aims to combine the linguistic understanding of pre-trained architectures with the established strengths of traditional vectorization, thereby further improving performance on political inquiry message classification.

Author Contributions

Conceptualization, H.H. and D.Z.; methodology, H.H., C.W. and D.Z.; validation, H.H. and C.W.; formal analysis, H.H. and C.W.; investigation, H.H.; resources, D.Z.; data curation, H.H.; writing—original draft preparation, H.H.; writing—review and editing, H.H.; visualization, H.H.; supervision, D.Z.; project administration, D.Z.; All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Artificial Intelligence Robot Industry College of South China Normal University (Guangdong Provincial Model Industry College) and the Scientific Research Innovation Project of the Graduate School of South China Normal University (2025KYLX068).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The POData dataset supporting the conclusions of this article will be made available on GitHub.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Jeong, H.; Lee, T.H.; Hong, S.-G. A corpus analysis of electronic petitions for improving the responsiveness of public services: Focusing on Busan petition. Korean J. Local Gov. Stud. 2017, 21, 423–436.

- Kim, N.; Hong, S. Automatic classification of citizen requests for transportation using deep learning: Case study from Boston city. Inf. Process. Manag. 2021, 58, 102410.

- Dalianis, H.; Sjöbergh, J.; Sneiders, E. Comparing manual text patterns and machine learning for classification of e-mails for automatic answering by a government agency. In Computational Linguistics and Intelligent Text Processing; Springer: Berlin/Heidelberg, Germany, 2011; pp. 234–243.

- Wirtz, B.W.; Müller, W.M. An integrated artificial intelligence framework for public management. Public Manag. Rev. 2018, 21, 1076–1100.

- Hong, S.G.; Kim, H.J.; Choi, H.R. An analysis of civil traffic complaints using text mining. Int. Inf. Inst. (Tokyo). Inf. 2016, 19, 4995.

- Hagen, L. Content analysis of e-petitions with topic modeling: How to train and evaluate LDA models? Inf. Process. Manag. 2018, 54, 1292–1307.

- Ryu, S.; Hong, S.; Lee, T.; Kim, N. A pattern analysis of bus civil complaint in Busan city using the text network analysis. Korean Assoc. Comput. Account. 2018, 16, 19–43.

- Fu, J.; Lee, S. A multi-class SVM classification system based on learning methods from indistinguishable Chinese official documents. Expert Syst. Appl. 2012, 39, 3127–3134.

- Dieng, A.B.; Wang, C.; Gao, J.; Paisley, J. TopicRNN: A recurrent neural network with long-range semantic dependency. arXiv 2016, arXiv:1611.01702.

- Hadi, M.U.; Qureshi, R.; Ahmed, A.; Iftikhar, N. A lightweight CORONA-NET for COVID-19 detection in X-ray images. Expert Syst. Appl. 2023, 225, 120023.

- Ozbek, A. Prediction of daily average seawater temperature using data-driven and deep learning algorithms. Neural Comput. Appl. 2023, 36, 365–383.

- Wang, Y.; Li, X.; Cheng, Y.; Du, Y.; Huang, D.; Chen, X.; Fan, Y. A neural probabilistic bounded confidence model for opinion dynamics on social networks. Expert Syst. Appl. 2024, 247, 123315.

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; Volume 1 (Long and Short Papers), pp. 4171–4186.

- Alves, A.L.F.; de Souza Baptista, C.; Firmino, A.A.; de Oliveira, M.G.; de Paiva, A.C. A spatial and temporal sentiment analysis approach applied to Twitter microtexts. J. Inf. Data Manag. 2015, 6, 118.

- Lai, S.; Xu, L.; Liu, K.; Zhao, J. Recurrent convolutional neural networks for text classification. Proc. AAAI Conf. Artif. Intell. 2015, 29, 1.

- Duan, Y.; Yao, L. The automatic classification and matching of informal texts on political media integration platform. Intell. Theory Pract. 2020, 43, 7.

- Sidi, W.; Guangwei, H.; Siyu, Y.; Yun, S. Research on automatic forwarding method of government website mailbox based on text classification. Data Anal. Knowl. Discov. 2020, 4, 51–59.

- Li, M.; Yin, K.; Wu, Y.; Guo, C.; Li, X. Research on government message text classification based on natural language processing. Comput. Knowl. Technol. 2021, 17, 160–161.

- Chen, J.; Tam, D.; Raffel, C.; Bansal, M.; Yang, D. An empirical survey of data augmentation for limited data learning in NLP. Trans. Assoc. Comput. Linguist. 2023, 11, 191–211.

- Feng, S.Y.; Gangal, V.; Wei, J.; Chandar, S.; Vosoughi, S.; Mitamura, T.; Hovy, E. A survey of data augmentation approaches for NLP. arXiv 2021, arXiv:2105.03075.

- Zhang, L.; Yang, Z.; Yang, D. TreeMix: Compositional Constituency-based Data Augmentation for Natural Language Understanding. arXiv 2022, arXiv:2205.06153.

- Hariharan, B.; Girshick, R. Low-shot visual recognition by shrinking and hallucinating features. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3018–3027.

- Chen, J.; Yang, Z.; Yang, D. MixText: Linguistically-informed interpolation of hidden space for semi-supervised text classification. arXiv 2020, arXiv:2004.12239.

- Sennrich, R.; Haddow, B.; Birch, A. Improving neural machine translation models with monolingual data. arXiv 2015, arXiv:1511.06709.

- Yang, Y.; Malaviya, C.; Fernandez, J.; Swayamdipta, S.; Bras, R.L.; Wang, J.P.; Bhagavatula, C.; Choi, Y.; Downey, D. Generative data augmentation for commonsense reasoning. arXiv 2020, arXiv:2004.11546.

- Wei, J.; Zou, K. EDA: Easy data augmentation techniques for boosting performance on text classification tasks. arXiv 2019, arXiv:1901.11196.

- Karimi, A.; Rossi, L.; Prati, A. AEDA: An easier data augmentation technique for text classification. arXiv 2021, arXiv:2108.13230.

- Fu, Y.; Xu, D.; He, K.; Li, H.; Zhang, T. Image Inpainting Based on Edge Features and Attention Mechanism. In Proceedings of the 2022 5th International Conference on Image and Graphics Processing (ICIGP), Beijing, China, 7–9 January 2022; pp. 64–71.

- Jia, K. Sentiment classification of microblog: A framework based on BERT and CNN with attention mechanism. Comput. Electr. Eng. 2022, 101, 108032.

- Zheng, Y.; Shao, Z.; Gao, Z.; Deng, M.; Zhai, X. Optimizing the Online Learners’ Verbal Intention Classification Efficiency Based on the Multi-Head Attention Mechanism Algorithm. Int. J. Found. Comput. Sci. 2022, 33, 717–733.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Deerwester, S.; Dumais, S.T.; Furnas, G.W.; Landauer, T.K.; Harshman, R. Indexing by latent semantic analysis. J. Am. Soc. Inf. Sci. 1990, 41, 391–407.

- Hofmann, T. Probabilistic latent semantic analysis. arXiv 2013, arXiv:1301.6705.

- Blei, D.M.; Ng, A.Y.; Jordan, M.I. Latent Dirichlet allocation. J. Mach. Learn. Res. 2003, 3, 993–1022.

- Khan, S.; Durrani, S.; Shahab, M.B.; Johnson, S.J.; Camtepe, S. Joint User and Data Detection in Grant-Free NOMA with Attention-based BiLSTM Network. arXiv 2022, arXiv:2209.06392.

- Li, J.; Sun, M. Scalable term selection for text categorization. In Proceedings of the 2007 Joint Conference on Empirical Methods in Natural Language Processing and Computational Natural Language Learning (EMNLP-CoNLL), Prague, Czech Republic, 28–30 June 2007; pp. 774–782.

- Kim, Y. Convolutional Neural Networks for Sentence Classification. arXiv 2014, arXiv:1408.5882.

- Liu, P.; Qiu, X.; Huang, X. Recurrent neural network for text classification with multi-task learning. arXiv 2016, arXiv:1605.05101.

- Joulin, A.; Grave, E.; Bojanowski, P.; Mikolov, T. Bag of tricks for efficient text classification. arXiv 2016, arXiv:1607.01759.

- Zhou, P.; Shi, W.; Tian, J.; Qi, Z.; Li, B.; Hao, H.; Xu, B. Attention-based bidirectional long short-term memory networks for relation classification. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics, Berlin, Germany, 7–12 August 2016; Volume 2, pp. 207–212.

- Salerno, M.; Sargeni, F.; Bonaiuto, V. DPCNN: A modular chip for large CNN arrays. In Proceedings of the ISCAS’95-International Symposium on Circuits and Systems, Seattle, WA, USA, 30 April–3 May 1995; Volume 1, pp. 417–420.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).