Abstract

The linguistic summarization of data is an active research direction in data mining because it has many useful practical applications. A linguistic summarization of data aims to extract an optimal set of linguistic summaries from numeric data. The blended learning format is now popular in higher education at both undergraduate and graduate levels. Many machine learning techniques, such as classification, regression, clustering, and forecasting, have been applied to evaluate learning activities or predict the learning outcomes of students. However, few studies have examined how to transform the data of blended learning courses into knowledge represented as linguistic summaries. This paper proposes a method of linguistic summarization of blended learning data collected from a learning management system. By utilizing enlarged hedge algebras, the method extracts compact sets of interpretable linguistic summaries for understanding the common rules of blended learning courses. The extracted linguistic summaries take the form of sentences in natural language and are therefore easy for humans to understand. Furthermore, a method of detecting the exceptional cases or outliers of the learning courses based on linguistic summaries expressing common rules in different scenarios is also proposed. The experimental results on two real-world datasets from the courses Discrete Mathematics and Introduction to Computer Science show that the proposed methods have promising practical applications. They can help students and lecturers improve their learning methods and teaching styles.

1. Introduction

The linguistic summarization of data is a research direction in the field of data mining that extracts useful knowledge, represented as summary sentences, from numeric data [1,2,3]. Each summary sentence with a prespecified sentence structure in natural language, the so-called linguistic summary (LS), expresses real-world knowledge of objects stored in numeric datasets. Knowledge expressed in the form of summary sentences is understandable to all users. This kind of knowledge can help human experts in their decision-making in the areas of network flow statistics [4], medical treatment [5,6,7], elderly people health care [8], ferritic steel design [9,10,11], process mining [12], sport [13], explanation of black-box outputs of AI models [14], social and economic analysis [15,16], weather analysis [17], and agriculture [18]. The commonly used structure of the linguistic summary proposed by Yager is a sentence with quantifiers such as “Q y are S” or “Q F y are S” [2]. For instance, “Many (Q) students (y) are with high mid-term score (S)”, “Very many (Q) students (y) with very high average practice score and very many times in direct learning classes (F) obtain very high final exam score (S)”. In these two sentences, the semantics of words such as “many”, “very many”, “high”, and “very high” help readers easily understand the knowledge extracted from the dataset. Q is the linguistic quantifier representing the proportion of objects satisfying the conclusion S among all objects in the dataset (first structure) or among the objects satisfying the filtering condition F (second structure). These sentence structures have since been used in many studies on the linguistic summarization of data [2,3,4,5,7,9,10,11,12,15,16,17,19], so we also adopt them in this study.

In the existing studies utilizing fuzzy set theory, the word semantics in the linguistic summaries, such as “very few”, “most”, “high”, etc., are represented by their associated fuzzy sets. The extracted linguistic summaries are considered fuzzy propositions expressing useful knowledge or information about objects in the designated dataset. The validity measure, or truth value measure, is calculated from the membership degrees of the fuzzy sets representing the computational semantics of linguistic words and is used to evaluate a linguistic summary. To obtain high-quality linguistic summaries, only those with a validity value greater than a sufficiently high threshold are extracted. However, a large number of candidate linguistic summaries need to be considered. Therefore, the genetic algorithm is applied to evaluate and choose an optimal set of linguistic summaries [5,9,20,21]. To do so, some constraints and quality evaluation measures are defined to establish an objective function. Furthermore, the authors in [9,20] defined two special genetic operators, the so-called Propositions Improver operator and Cleaning operator, to improve the quality of the extracted linguistic summary set. The interval type-2 fuzzy set has also been applied to linguistic summarization instead of the traditional fuzzy set [22].

Although some additional genetic operators are applied, among the 30 linguistic summaries extracted by the enhanced genetic algorithm in [9,20], one still has a validity value equal to 0, and three still have a validity value of less than 0.8. These poor results may be due to the number of linguistic quantifier words being only 5 and to no record in the dataset satisfying the filtering condition F. Moreover, the fuzzy partitions of the attribute value domains are intuitively designed by human users, so they depend on the designer’s subjective viewpoints. To overcome these limitations of the existing methods, Nguyen et al. utilized the enlarged hedge algebras (EHAs) [23] to automatically induce the linguistic value domains of linguistic variables and then establish the fuzzy set-based multi-level semantic structures of linguistic words, which ensures the interpretability of the content of the extracted linguistic summaries [10]. To eliminate the linguistic summaries with a validity value equal to 0 and improve their quality, Lan et al. proposed an enhanced algorithm model that combines a genetic algorithm with a greedy strategy to extract an optimal set of linguistic summaries [24]. We take this algorithm model as the basis, enhance it, and apply it to extract linguistic summaries from blended learning data collected from a learning management system (LMS) to support educators and lecturers in their course evaluation and management.

Blended learning is increasingly popular in the digital transformation process, especially in universities in Vietnam. Therefore, considerable data about students’ learning behaviors and evaluation scores are generated from those courses and stored in LMSs. These data can be analyzed using traditional machine learning techniques, such as classification, regression, clustering, and forecasting, as well as deep learning techniques. However, each of those techniques is only applied to a specific analysis aspect, such as discovering learning style [25], evaluating learning performance [26], predicting learning outcomes [27,28], etc. Moreover, the explainability of artificial intelligence (AI) models in education has also been examined [29]. Nevertheless, one of the difficulties of studying explainable AI models is that the measures of explainability are not yet defined, so different metrics for evaluating the explainability of AI models have been introduced. So far, few studies have examined how to transform the data of blended learning courses into knowledge represented as linguistic summaries. This kind of knowledge is easy for everyone to read and understand. The extraction model is based on soft computing and knowledge-based systems [3,5,9], so it can complement and even outperform traditional machine learning techniques in educational analytics by enhancing interpretability and explainability, making it easier for educators to understand student performance trends. In contrast to machine learning models that produce complex numerical outputs that are difficult to comprehend, linguistic summarization of data transforms numerical data into meaningful summary sentences, allowing educators to make informed decisions. In addition, linguistic summaries capture qualitative aspects such as learning behaviors and study patterns rather than only quantitative metrics. This enables educators to tailor interventions based on detailed student performance descriptions instead of only numerical predictions.

In this study, the algorithm model of linguistic summarization of data proposed in [24] was enhanced and applied to extract compact sets of interpretable linguistic summaries from blended learning data to detect the common rules of blended learning courses. In addition, a method of detecting exceptional cases or outliers of the courses based on a set of linguistic summaries expressing common rules in different scenarios is also proposed by utilizing the order relation property of words induced by enlarged hedge algebras. Outliers can be students’ learning outcomes that differ substantially from the common outcomes. For example, students did not actively take part in the learning activities during the course, but they obtained very high exam scores. In the opposite case, students actively took part in the learning activities, but they obtained poor learning outcomes. To achieve this, we define new summary sentence structures adapted to these two problems. The experimental results on two real-world datasets from the courses Discrete Mathematics and Introduction to Computer Science show the practical applications of the proposed methods. Based on the knowledge represented as linguistic summaries, especially the ones expressing outliers, university educators and lecturers can make informed decisions to adjust teaching and learning activities to enhance students’ overall learning outcomes.

The rest of this paper is structured as follows. Section 2 presents some background knowledge related to our research. Section 3 presents the proposed methods of extracting linguistic summaries from blended learning data. Section 4 presents the experimental results and discussion. Some conclusions are presented in Section 5.

2. Background Knowledge

2.1. Linguistic Summaries with Quantifier Word

In 1982, Yager proposed a method of extracting summary sentences in natural language from numeric data using fuzzy propositions in the form of structures with quantifier words [2]. In this subsection, some concepts and notations related to the problem of extracting a set of compact and optimal linguistic summaries with quantifier words are briefly presented.

Let X = {x1, x2, …, xn} be the set of data patterns representing data objects in a given dataset, and let A = {A1, A2, …, Am} be the set of attributes of X under consideration. The linguistic summary sentence structure used in this paper is one of the following:

Q students are with <S> score, (1)

Q students with <F> obtain <S> score, (2)

where S is the summarizer, which includes a linguistic word in the linguistic domain of the linguistic variable associated with the summarizer attribute and represents the degree of the conclusion: for example, “extremely high mid-term score”, “very low final exam score”, etc.; Q is the quantifier word expressing the proportion of data patterns satisfying the summarizer S in relation to all patterns in the dataset, as in the sentence structure (1), or in relation to the number of patterns satisfying the filter criterion F, as in the sentence structure (2): for example, “few”, “a half”, “very many”, etc.

The validity value, or truth value, T takes a numeric value in the normalized interval [0, 1]. It evaluates the truth degree of a linguistic summary and is treated as the truth degree of a fuzzy proposition with a quantifier word.

In the general form, the components F and S are combinations of linguistic predicates joined by AND/OR, and each linguistic predicate is determined by a pair of an attribute and a linguistic word: for example, “very high average quiz score and very many slide views”, “few times doing quizzes and long average practice time”, etc. In these filter criteria, the average quiz score, the number of slide views, the number of times doing quizzes, and the average practice time are the filter attributes. To compute the truth value of the fuzzy propositions of the structures (1) and (2), the fuzzy set-based computational semantics of the linguistic words in those fuzzy propositions should be designed. Let μQ, μF, and μS denote the membership functions of the computational fuzzy set-based semantics of the words in Q, F, and S, respectively. The truth value T is the fundamental measure applied to evaluate the quality of a linguistic summary and is computed using Zadeh’s formula [30] for fuzzy predicates with quantifier words [9] as follows:
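For reference, Zadeh's calculus of linguistically quantified propositions is usually written as below. This is the standard textbook form, given on the assumption that it corresponds to the truth values of the structures (1) and (2); the equation numbers (3) and (4) and the use of the minimum to combine F and S are likewise assumptions.

$$T(Q\ y\ \text{are}\ S) = \mu_Q\left(\frac{1}{n}\sum_{i=1}^{n}\mu_S(x_i)\right) \tag{3}$$

$$T(Q\ F\ y\ \text{are}\ S) = \mu_Q\left(\frac{\sum_{i=1}^{n}\min\big(\mu_F(x_i),\,\mu_S(x_i)\big)}{\sum_{i=1}^{n}\mu_F(x_i)}\right) \tag{4}$$

In this form, the argument of μQ is a proportion in [0, 1]: the share of all patterns satisfying S in (3), and the share of the patterns satisfying F that also satisfy S in (4).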

The linguistic summaries with a truth value greater than a given threshold δ are considered to represent the information and useful knowledge hidden in the given numeric dataset. Therefore, only such linguistic summaries are extracted. To ensure the high quality of the extracted linguistic summary set, the value δ should be greater than or equal to 0.8. Even with such a threshold δ, the number of summary sentences in the extracted set is still very large. Therefore, genetic algorithm models are applied to obtain the optimal set of extracted linguistic summaries [5,9,20,21]. Furthermore, some additional quality measures, such as imprecision, appropriateness, covering, and focus, are also applied to evaluate and select the best summary set. They are also computed based on the membership degrees of the computational fuzzy set-based semantics of words in the summary sentences [19,31].
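As a minimal sketch of how the truth value and the threshold δ interact, the following Python code evaluates both sentence structures with trapezoidal membership functions. The function names, the trapezoid parameters, the toy records, and the use of the minimum for combining F and S are illustrative assumptions, not the configuration used in the cited works.

```python
def trapezoid(a, b, c, d):
    """Return a trapezoidal membership function with support [a, d] and core [b, c]."""
    def mu(x):
        if x < a or x > d:
            return 0.0
        if b <= x <= c:
            return 1.0
        if x < b:
            return (x - a) / (b - a)
        return (d - x) / (d - c)
    return mu


def truth_value(records, mu_q, mu_s, mu_f=None):
    """Truth value of 'Q y are S' (mu_f is None) or 'Q F y are S' (mu_f given)."""
    if mu_f is None:
        proportion = sum(mu_s(r) for r in records) / len(records)
    else:
        covered = sum(mu_f(r) for r in records)
        if covered == 0:
            # Assumed convention: no record satisfies F, so the summary is not valid.
            return 0.0
        proportion = sum(min(mu_f(r), mu_s(r)) for r in records) / covered
    return mu_q(proportion)


# Hypothetical semantics: "high" on a 0-10 score scale, "most" on proportions.
high = trapezoid(6, 8, 10, 10)
most = trapezoid(0.6, 0.8, 1.0, 1.0)

# Toy records: (average practice score, final exam score).
records = [(9.0, 9.0), (8.0, 9.5), (2.0, 3.0), (9.0, 8.5)]

# "Most students are with high final exam score"
t1 = truth_value(records, most, lambda r: high(r[1]))
# "Most students with high practice score obtain high final exam score"
t2 = truth_value(records, most, lambda r: high(r[1]), lambda r: high(r[0]))

DELTA = 0.8  # threshold on the truth value
kept = [name for name, t in (("structure (1)", t1), ("structure (2)", t2)) if t > DELTA]
print(t1, t2, kept)
```

With these toy values, only the summary with the filter criterion passes the threshold, which illustrates why structure (2) can reveal rules that hold for a subgroup but not for the whole dataset.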

2.2. Generating Interpretable Multi-Level Semantic Structures Based on Enlarged Hedge Algebras

In the existing algorithm models of the problem of linguistic summarization of data such as Yager’s [2,3], Diaz’s [9,20], Wilbik’s [31], etc., the number of linguistic words used from the domain of the linguistic variable linked with an attribute of the designated dataset is limited to 7 ± 2. Therefore, the ability to detect special characteristics of data is limited. Moreover, the fuzzy partitions associated with attributes of the dataset are strong and uniform, and they are designed based on the intuitive cognition of human experts. The algorithm models directly manipulate the membership functions of fuzzy sets instead of the inherent semantics of words, so the fuzzy sets determine the quality of the algorithm’s output. Because there is no formal bridge between the inherent semantics of words and their associated fuzzy sets, the words are only linguistic labels assigned to fuzzy sets. The manipulations on fuzzy sets may not correspond to the manipulations on the inherent semantics of words if the relations between them are not isomorphic. The direct manipulation of linguistic words is very important because the inherent semantics of words in natural language reflect the knowledge of the real world, and humans understand real-world objects through the medium of natural language. The semantic order of linguistic words determines the weak structure of natural language, and it is formalized using a mathematical structure, the so-called hedge algebra (HA) [32,33]. Then, to represent the interval semantic cores of words and adapt to real-world problems, Nguyen et al. extended HA by adding an artificial hedge h0 [23], yielding the so-called enlarged hedge algebras (EHAs). By utilizing EHA, the linguistic value domains of linguistic variables are automatically induced, and then the fuzzy set-based multi-level semantic structures of linguistic words, which ensure the interpretability of the content of the extracted linguistic summaries, are established [10]. The mathematical formalism for generating such multi-level semantic structures of linguistic words is presented below.

Assume that A is a linguistic variable connected with an attribute of a given dataset. Each word has its own semantics that is assigned by humans, and it only has full meaning in relation to all words in the linguistic domain Dom(A) of A. For example, the word “hot” only has full meaning when compared to the word “cold” in the context of the considering problem. Therefore, the semantics of linguistic words should be specified in the context of the entire variable’s domain Dom(A). The authors in [34] proposed a new concept, the so-called linguistic frame of cognition (LFoC) of A, by applying hedge algebras [32,33]. At a given period, the word set taken from an LFoC for describing the universe of discourse of a linguistic variable is finite. In the early days, it commonly consisted of a few words, was short in length, and had general semantics. It means that in HA’s formalism, the value of k, which specifies the maximal length of words in the LFoC, is small, commonly . When human users need to expand LFoCs to describe more specific cases or to obtain more specific characteristics of data, they only need to add new words with more specific semantics and greater length at level k + 1 by increasing the value of k. By doing so, the semantic order relation, as well as the generality–specificity of the existing words, are still preserved. It also means that the semantics of the existing words remain unchanged when LFoCs are expanded [10,35] by adding new words. This scalable ability is suitable for practical requirements when human users use word sets of LFoCs of attributes of a given dataset in real-world recognition.

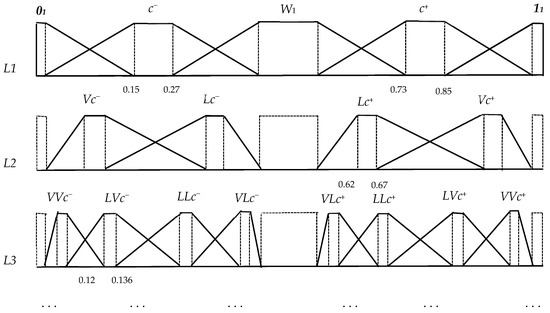

Trapezoidal fuzzy sets are commonly used to represent the computational semantics of words in the existing research on the linguistic summarization of data. In the EHA approach [10,24], the interval semantically quantifying mapping (SQM) is a mapping f: Dom(A) → P[0, 1], where P[0, 1] denotes the set of all sub-intervals of [0, 1]. It assigns to each word an interval semantic core, which is considered the numerical semantics of that word. Therefore, the interval semantic core can be used to design the small base of the trapezoidal computational fuzzy set-based semantics of the word. In [23], based on the interval semantic cores (interval SQM values), a procedure to automatically produce such computational semantics of words from a set of given fuzziness parameter values of EHA was proposed. As stated in [23], the fuzziness parameter values of EHA comprise the fuzziness measure value of one of the two primary words c− (e.g., “low”, “few”, “short”, etc.) and c+ (e.g., “high”, “many”, “long”, etc.), i.e., m(c−) or m(c+); the fuzziness measure values of the least word 0, neutral word W, and greatest word 1, i.e., m(0), m(W), and m(1), respectively; and the fuzziness measure values of the linguistic hedges, denoted by μ(hj), j = 0, …, n, where hj is a linguistic hedge (h0 being the artificial hedge), and n is the number of non-artificial linguistic hedges. For example, when k is set to 3, the two primary words are c− (e.g., “low”) and c+ (e.g., “high”), and the linguistic hedge set is {L (Little), V (Very)}, the steps for producing linguistic words are as follows. First, five words {0, c−, W, c+, 1} at level 1 are specified. Second, the hedges L, h0, and V act on the two primary words, c− and c+, to produce new words at level 2, including {Vc−, h0c−, Lc−, Lc+, h0c+, Vc+}. Two words, h0c− and h0c+, become the semantic cores of c− and c+, respectively. Therefore, all words of levels 1 and 2 are {0, Vc−, c−, Lc−, W, Lc+, c+, Vc+, 1}. Last, the hedges L, h0, and V act on the words at level 2, including {Vc−, Lc−, Lc+, Vc+}, to produce new words at level 3, including {VVc−, h0Vc−, LVc−, LLc−, h0Lc−, VLc−, VLc+, h0Lc+, LLc+, LVc+, h0Vc+, VVc+}. Four words, h0Vc−, h0Lc−, h0Lc+, and h0Vc+, become the semantic cores of Vc−, Lc−, Lc+, and Vc+, respectively. Consequently, all linguistic words at levels 1, 2, and 3 in the LFoC generated by acting two linguistic hedges on two primary words are {0, VVc−, Vc−, LVc−, c−, LLc−, Lc−, VLc−, W, VLc+, Lc+, LLc+, c+, LVc+, Vc+, VVc+, 1} and their semantic cores. These words are arranged by their semantic order. In [10,35], a method of producing the trapezoidal fuzzy sets that constitute an interpretable multi-level semantic structure of a given LFoC of A satisfying the concept of interpretability of Tarski [36] was also proposed. Therefore, when the fuzziness parameter values are given, the fuzziness measure values and interval SQM values of those words are computed. Consequently, an interpretable fuzzy set-based multi-level semantic structure is automatically constructed as in Figure 1 [10,35]. As can be seen in Figure 1, for example, the word Vc− is produced from the word c− by acting the hedge V on c−; the semantics of Vc− is more specific than that of c−, but it still conveys the original semantics of c−. Therefore, the support of the fuzzy set of Vc− must be within the support of c−. The same holds for all other words at levels greater than 1.

Figure 1. An automatically generated interpretable fuzzy set-based multi-level semantic structure of a linguistic variable [10,35].
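The word-generation example with hedges L and V can be reproduced with a short recursive sketch in Python. It only lists the words in their semantic order and leaves out the h0 semantic cores and all fuzziness parameters. The function names and the ASCII spellings `c-` and `c+` are assumptions made for illustration; the ordering rule encoded here (V pushes a word further in its current direction, L pulls it back and flips that direction for the next level) is inferred from the ordered word list given in the example.

```python
def ordered_words(word, v_dir, depth):
    """List `word` and its hedged descendants in semantic order.

    v_dir is +1 if applying V moves the word upward in the order, -1 if downward.
    depth is the number of further hedge applications allowed.
    """
    if depth == 0:
        return [word]
    very = ordered_words("V" + word, v_dir, depth - 1)      # V keeps the direction
    little = ordered_words("L" + word, -v_dir, depth - 1)   # L reverses it
    if v_dir > 0:
        return little + [word] + very
    return very + [word] + little


def lfoc_words(k):
    """All words of length at most k (without h0 cores), in semantic order."""
    negative = ordered_words("c-", -1, k - 1)  # Vc- lies below c-
    positive = ordered_words("c+", +1, k - 1)  # Vc+ lies above c+
    return ["0"] + negative + ["W"] + positive + ["1"]


for level in (1, 2, 3):
    print(level, lfoc_words(level))
```

Increasing k only inserts new, more specific words between the existing ones, so the relative order of the words already present at lower levels is unchanged.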

To implement the proposed method of detecting linguistic summaries expressing outliers based on a set of linguistic summaries expressing common rules, the concept of opposite semantics of two words should be defined. In the word set of the given LFoC of A, the word constant W (i.e., “medium”) has neutral semantics, and the two word constants 0 and 1 have the least and greatest semantics, respectively. Two primary words, c− and c+, for example, “low” and “high”, have opposite semantics. Assume that there is a hedge h1 (e.g., “very”) that acts on c− and c+; these actions induce two new words h1c− (e.g., “very low”) and h1c+ (e.g., “very high”), which have opposite semantics. The words h1c− and c+ also have opposite semantics. The same is true for the two words c− and h1c+. Generally, two words have opposite semantics if and only if they have different primary words.
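The rule that two words are opposite exactly when their primary words differ can be expressed as a small Python check. The string encoding of words (hedge letters followed by `c-` or `c+`) and the function names are assumptions for illustration; the constants 0, W, and 1 are treated here as having no primary word, so they are never reported as opposite to anything.

```python
NEGATIVE, POSITIVE = "c-", "c+"


def primary_word(word):
    """Return the primary word a hedged word is built on, or None for 0, W, 1."""
    for primary in (NEGATIVE, POSITIVE):
        if word.endswith(primary):
            return primary
    return None


def have_opposite_semantics(word1, word2):
    """True if both words have a primary word and the primary words differ."""
    p1, p2 = primary_word(word1), primary_word(word2)
    return p1 is not None and p2 is not None and p1 != p2


print(have_opposite_semantics("Vc-", "Vc+"))  # "very low" vs "very high"
print(have_opposite_semantics("Vc-", "c+"))   # "very low" vs "high"
print(have_opposite_semantics("c-", "Vc-"))   # same primary word
```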

2.3. Blended Learning Courses on Learning Management Systems

Blended learning is a learning method in which students learn partly face-to-face in traditional classrooms and partly online using an LMS. Each learning course has its own learning plan that determines which time slots and learning contents are online and which are offline. This learning plan is set by lecturers based on the characteristics of the learning courses and the pedagogical ideas of the lecturers.

LMSs store the learning activities of students, such as system login, document (slide) views, video views, homework submissions, quizzes, and practice exercises. The numeric evaluation results of homework, quizzes, and practice exercises are also stored. Therefore, the data on learning progress and frequent evaluation results can be extracted for analysis.

3. Materials and Methods

This section presents our proposed methods for extracting an optimal set of linguistic summaries from blended learning data. Both kinds of linguistic summaries, expressing common rules and expressing outliers, are examined. The scores that students obtain in tests and exams are measures of their learning outcomes. In this study, the attributes in summarizer S of the extracted linguistic summaries are the mid-term test scores, final exam scores, and course final scores. The attributes in the filter criterion F are the attributes of the learning activities of students in the learning process on the LMS.

3.1. A Method of Extracting Linguistic Summaries Expressing Common Rules

A linguistic summary of the structure (2) with quantifier Q expressing a common rule is a true statement for a group of objects that has a significant cardinality in the set of objects under consideration. For example, the linguistic summary “Most students who practice with high results and many times will have high practical test scores” expresses a common rule when the students who “practice with high results and many times” form a group of significant cardinality. To evaluate the cardinality referred to in a linguistic summary of the structure (2), we used the focus measure computed using the following formula [31]:
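In the literature on quality measures for linguistic summaries, the degree of focus is commonly defined as the average membership of the data patterns in the filter criterion F. Assuming that this is the form intended here as Equation (5), and writing it with the notation introduced earlier, it reads:

$$d_{foc}(Q\ F\ y\ \text{are}\ S) = \frac{1}{n}\sum_{i=1}^{n}\mu_F(x_i) \tag{5}$$

A value close to 1 means that almost all patterns in the dataset satisfy F, whereas a value close to 0 means that the summary speaks about a very small group.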

The focus measure value of a linguistic summary should be greater than a given threshold α. The focus threshold value should be chosen according to the application requirements of the field so that the linguistic summary expresses a common rule extracted from the given dataset. A linguistic summary expressing a common rule should satisfy the following conditions:

- Q has a linguistic word with its semantics from “many” to “almost all”;

- The value of the focus measure computed using Equation (5) is greater than a given threshold α.
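The two conditions can be checked with a few lines of Python. This is a sketch under stated assumptions: the focus is taken to be the average membership of the records in F, the quantifier words are assumed to be available as a list sorted by semantic order, and the names `focus`, `is_common_rule`, and the sample quantifier list are hypothetical.

```python
def focus(records, mu_f):
    """Average membership of the records in the filter criterion F (assumed form)."""
    return sum(mu_f(r) for r in records) / len(records)


def is_common_rule(quantifier, ordered_quantifiers, focus_value, alpha, at_least="many"):
    """Check both conditions: Q is at least `at_least` and focus exceeds alpha."""
    rank = ordered_quantifiers.index
    return rank(quantifier) >= rank(at_least) and focus_value > alpha


# Hypothetical quantifier domain, sorted by semantic order.
quantifiers = ["very few", "few", "a half", "many", "very many", "almost all"]

# Toy records: average practice scores; F = "high practice score" (crisp for brevity).
scores = [9.0, 8.5, 3.0, 9.5]
foc = focus(scores, lambda s: 1.0 if s >= 8 else 0.0)

print(foc)
print(is_common_rule("very many", quantifiers, foc, alpha=0.3))
print(is_common_rule("few", quantifiers, foc, alpha=0.3))
```

Raising α restricts the output to rules about larger groups of students, which is the role the threshold plays in the conditions above.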

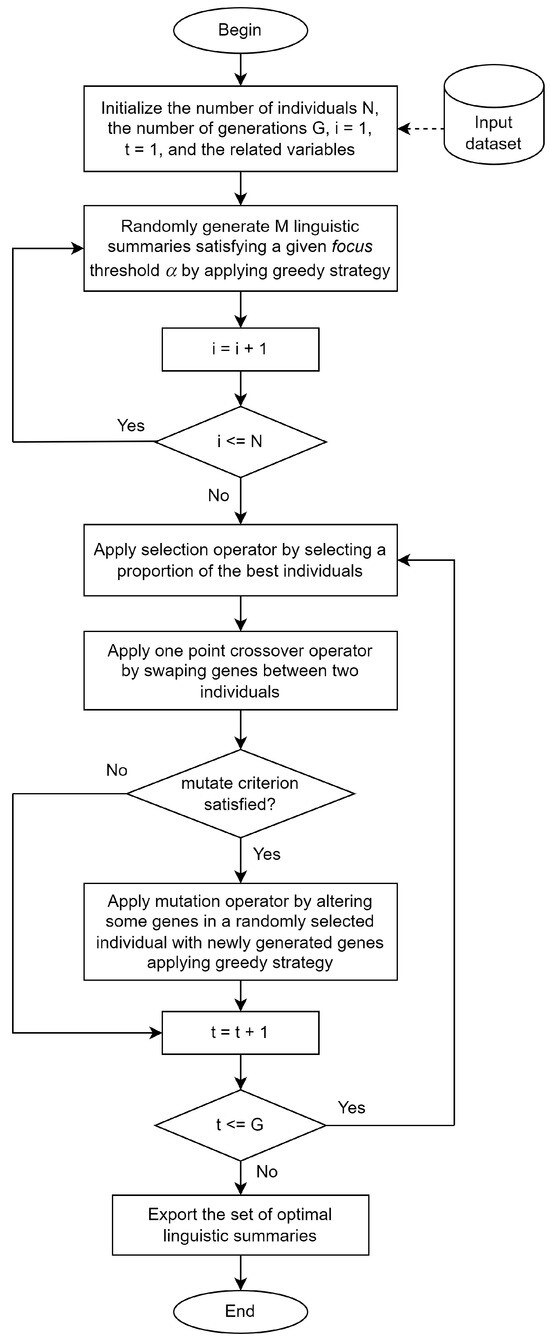

In this subsection, we enhance the genetic algorithm combined with the greedy strategy, Random-Greedy-LS, proposed in [24] to find a set of common rules with a high diversity of learning processes and learning outcomes. The modifications and enhancements of this algorithm are as follows, and its main flow is visualized in Figure 2:

Figure 2. An algorithm model for extracting linguistic summaries expressing common rules.

- The set of attributes in the filter criterion F are the attributes reflecting the learning behaviors or activities of students, such as the number of times they view lecture slides, the number of times they do tests, the number of times they practice, the number of times they submit assignments, the total practice time, average practice time, average time of doing quizzes, the scores of practices, the scores of tests, etc.

- The attributes in summarizer S are the attributes reflecting the learning results of students, such as mid-term test scores, final exam scores, and course final scores.

- The threshold α is the minimum value of the focus measure of linguistic summaries.

- Only a linguistic summary with the semantics of the quantifier word Q greater than or equal to the semantics of “many” is included as a gene of an individual in our new algorithm model.

- The maximum specificity of the linguistic words in the LFoCs of all attributes and the quantifier Q is set to 3 (k = 3), so the linguistic word domain is rich enough for the truth value T to be approximately 1. Thus, the fitness function of the genetic algorithm in our proposed model is only the diversity value instead of the weighted sum of the goodness value and the diversity value as in [24]. Therefore, the linguistic strength concept is not applied, and no weight is assigned to the quantifier words. This means that the genetic algorithm in our new algorithm model solves a single-objective optimization problem.

- The output of the modified algorithm model described above is a set of summary sentences in the form of linguistic rules used to express the learning activities of students and their relations, as well as students’ learning outcomes. In particular, this set of summary sentences also reflects diverse groups of students at different levels of behavior. Therefore, those linguistic rules help students adjust and choose their suitable learning styles to achieve better learning outcomes.

3.2. A Method of Extracting Linguistic Summaries Expressing Outliers

In a practical management context, there is usually a need to detect exceptional cases that differ substantially from the common cases. Such cases are so-called outliers. Some methodologies have been proposed to detect outliers, such as the use of non-monotonic quantifiers and interval-valued fuzzy sets [37], linguistically quantified statements [38], and non-monotonic quantifiers [39]. In the actual context of monitoring students’ learning activities and their learning outcomes, outliers can be students’ learning outcomes that differ substantially from the common outcomes. For example, students did not actively take part in the learning activities during the learning course, but they obtained very high exam scores, or students obtained good scores on mid-term tests and practice tests but obtained bad final exam scores. Outliers are rare, but they can help educators and lecturers detect abnormalities in students’ learning outcomes.

In this paper, we propose a method of extracting linguistic summaries expressing outliers based on a set of linguistic summaries expressing common rules by utilizing the semantic order relation and semantic symmetry properties of words in the linguistic domains induced by enlarged hedge algebras. As stated above, two words have opposite semantics if and only if they have different primary words. This concept comes from the fact that, when linguistic hedges act on the two primary words, the words generated from the negative primary word c− have opposite semantics to the words generated from the positive primary word c+. Specifically, in the word set of a given LFoC of a linguistic variable A, the word constant W (i.e., “medium”) has neutral semantics. Two primary words, c− and c+, for example, “low” and “high”, have opposite semantics. Assume that there is a hedge h1 (e.g., “very”) that acts on c− and c+; these actions induce two new words h1c− (e.g., “very low”) and h1c+ (e.g., “very high”), which also have opposite semantics. The words h1c− and c+ also have opposite semantics. The same holds for the two words c− and h1c+.

The concept of opposite semantics of two words is applied to our proposed algorithm model to detect outliers as follows. In a linguistic summary of the structure (2), if its summarizer S gives a conclusion that deviates too much from the conclusion in the common rules for a group of objects, there exists an outlier. If a linguistic summary of the structure Q1 F1 y are S1 (r*) expresses common rules, a linguistic summary of the structure Q2 F1 y are S2 (o*) expresses an outlier, provided that the following is true:

- S2 has the opposite semantics to S1. For example, the semantics of the word “low” is opposite to the semantics of the word “high”;

- Q2 is a linguistic word that is not “none”, and its semantics represents a very small cardinality, usually “very few”, “very very few”, “extremely few”, or similar.

Thus, compared with the linguistic summaries expressing the common rules (r*) extracted from the dataset, the linguistic summaries (o*) have the same filter criterion F1, i.e., they reflect the same group of objects, but have two opposite summarizers (S2 and S1 have opposite semantics). Q1 is a word indicating a large quantity, whereas Q2 indicates next to nothing, which shows that there is an outlier in this group of objects. Outliers of learning behaviors are rare. However, they should be considered to help detect anomalies in learning outcomes or to detect cheating on tests and exams in blended learning courses. To specify the cardinality of objects satisfying summarizer S among the set of objects satisfying filter criterion F, we propose to use the confidence measure β computed using Equation (6).

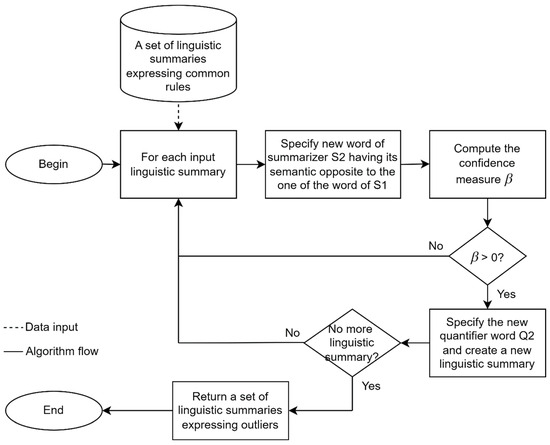

The steps to detect outliers based on a set of linguistic summaries expressing common rules are as follows and visualized in Figure 3:

Figure 3. An algorithm model for outlier detection.

Step 1: With the summarizer S1 of the form “B is y” of each linguistic summary expressing common rules, if y is not “medium” (“medium” is the word that has neutral semantics), replace the word y with the word in the LFoC of attribute B whose semantics is opposite to y to form a new summarizer S2.

Step 2: Compute the cardinality β of objects satisfying the new summarizer S2 in the set of objects satisfying filter criterion F by Equation (6).

Step 3: If β > 0 (an outlier is detected), specify the new quantifier word Q2 and create a new linguistic summary expressing the outlier with new summarizer S2 and new quantifier word Q2.

Step 4: If all linguistic summaries expressing common rules are examined, go to Step 5; otherwise, go to Step 1.

Step 5: Return a set of linguistic summaries expressing outliers.
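Steps 1 to 5 can be sketched in Python as follows. This is a minimal illustration under stated assumptions rather than the implementation used in the experiments: β is taken to be the ratio of the summed min(μF, μS2) to the summed μF, which is one plausible reading of the confidence measure; opposite words are obtained by swapping the primary words “low” and “high”; and `pick_quantifier`, the crisp membership functions, and the toy records are hypothetical.

```python
def opposite_word(word):
    """Mirror a word by swapping its primary word; None for neutral words."""
    if word.endswith("low"):
        return word[:-len("low")] + "high"
    if word.endswith("high"):
        return word[:-len("high")] + "low"
    return None


def confidence(records, mu_f, mu_s):
    """Assumed form of beta: share of the objects satisfying F that also satisfy S."""
    covered = sum(mu_f(r) for r in records)
    if covered == 0:
        return 0.0
    return sum(min(mu_f(r), mu_s(r)) for r in records) / covered


def detect_outliers(rules, records, semantics, pick_quantifier):
    """rules: list of (mu_f, filter_text, attribute, summarizer_word)."""
    outliers = []
    for mu_f, filter_text, attr, word in rules:          # Step 4: loop over all rules
        opposite = opposite_word(word)                   # Step 1
        if opposite is None:
            continue                                     # neutral word, nothing to mirror
        mu_s2 = lambda r, a=attr, w=opposite: semantics[a][w](r[a])
        beta = confidence(records, mu_f, mu_s2)          # Step 2
        if beta > 0:                                     # Step 3
            outliers.append((pick_quantifier(beta), filter_text, attr, opposite, beta))
    return outliers                                      # Step 5


# Toy data: 10 students practise well; 9 of them score high, 1 scores low.
records = ([{"practice": 9, "final": 9}] * 9
           + [{"practice": 9, "final": 2}]
           + [{"practice": 3, "final": 3}] * 5)
semantics = {"final": {"low": lambda v: 1.0 if v < 4 else 0.0,
                       "high": lambda v: 1.0 if v >= 8 else 0.0}}
high_practice = lambda r: 1.0 if r["practice"] >= 8 else 0.0
rules = [(high_practice, "high practice score", "final", "high"),
         (high_practice, "high practice score", "final", "medium")]

outliers = detect_outliers(rules, records, semantics,
                           lambda beta: "very few" if beta <= 0.15 else "few")
for q, f_text, attr, word, beta in outliers:
    print(f"{q} students with {f_text} obtain {word} {attr} score (beta = {beta})")
```

The rule with the neutral word “medium” is skipped, and the single student who practised well but obtained a low final score produces one outlier summary with the same filter criterion as the common rule.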

To perform the evaluation of the extracted outliers, we used the similarity function L(p1, p2) proposed in [9] to check the similarity between the normal linguistic summary p1 and the outlier p2. The similarity value is calculated as follows [9]:

where p10 and p20 are the indexes of the linguistic quantifier Q in Dom(Q) of p1 and p2, respectively; p1m and p2m are the indexes of the summarizer words of p1 and p2, respectively; and p1k and p2k, k = 1, …, m−1, are the indexes of the words in the Dom(Ak) of the qualifiers of p1 and p2, respectively. If the function L returns Yes, the two linguistic summaries are similar. The function H(p1k, p2k), which determines the semantic distance between two words in the linguistic domain Dom(Ak) of the linguistic variable Ak, is calculated as follows [9]:

For example, if Dom(Ak) = {“very few”, “few”, “little few”, “medium”, “little many”, “many”, “very many”}, the word “few” at position 2 and the word “many” at position 6 have their distance |2 − 6| > round(20% × 7) = 1. Therefore, the two words “few” and “many” are considered distinct.
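The word-level check in this example can be sketched as below. The rule used here, that two words are distinct when their position gap exceeds round(20% × |Dom(Ak)|), is inferred from the worked example only; the function name `words_distinct` and the `ratio` parameter are illustrative assumptions rather than the definition given in [9].

```python
def words_distinct(domain, w1, w2, ratio=0.2):
    """Return True when the position gap of two words exceeds round(ratio * |domain|).

    The threshold rule is inferred from the worked example in the text (an assumption).
    """
    threshold = round(ratio * len(domain))
    gap = abs(domain.index(w1) - domain.index(w2))
    return gap > threshold


dom = ["very few", "few", "little few", "medium", "little many", "many", "very many"]
few_vs_many = words_distinct(dom, "few", "many")               # gap 4, threshold 1
few_vs_little_few = words_distinct(dom, "few", "little few")   # gap 1, threshold 1
```

Under this reading, "few" and "many" are distinct, while neighbouring words such as "few" and "little few" are treated as semantically close.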

4. Results and Analysis

This section presents the experimental results of the proposed algorithm models. Both kinds of linguistic summaries, the ones expressing common rules and the ones expressing outliers, are extracted from blended learning data stored in an LMS.

4.1. Experimented Datasets

First, the creep dataset [9] was used to compare the proposed model with the existing ones. Then, two datasets of two actual blended learning courses were used in our experiments, i.e., the Discrete Mathematics and Introduction to Computer Science courses, which took place at Hanoi National University of Education in the first and second semesters of the 2023–2024 academic year, respectively. The numbers of students enrolled in these courses were 255 and 228, respectively. The weekly learning plans for these two courses included the following: online learning activities, comprising learning the course’s theory by viewing lecture slides, watching lecture videos, and doing quizzes; in-class learning activities, comprising theory summarization, doing exercises, etc.; and after-class activities, comprising doing homework, doing practice exercises, etc. However, the activities of the two courses differ, so the data collected from the courses’ activities are split into two datasets. To obtain high-quality and accurate data, a data cleaning procedure was applied to eliminate inconsistent records with missing data or errors.

The Discrete Mathematics course does not include practice activities; therefore, that information is not included in the dataset. The attributes of its dataset are described in Table 1.

Table 1. Dataset of the Discrete Mathematics course.

The Introduction to Computer Science course includes practice activities, so its dataset has attributes reflecting those learning activities. The attributes of the Introduction to Computer Science dataset are described in Table 2.

Table 2. Dataset of the Introduction to Computer Science course.

4.2. Experiment Setups

The proposed algorithm models in this paper were implemented in the C# programming language and run on a laptop with an Intel Core i7-1360P 2.2 GHz processor (Intel, Santa Clara, CA, USA), 16 GB RAM, and Windows 11 64-bit. The parameter values of the algorithm models are set as follows:

- The maximum number of attributes in the filter criterion F is 6. The number of attributes in the summarizer S is 1. The focus threshold α is 0.01.

- For genetic algorithm models, the number of generations is 100, the number of individuals per generation is 20, the size of the chromosome (the number of summary sentences) is 20, the selection rate is 0.15, the crossover rate is 0.8, and the mutation rate is 0.1.

- The syntactical semantics of EHAs associated with attributes of the experimental datasets are as follows:

  - The negative primary word (c−) and positive primary word (c+) of score attributes (e.g., quiz score, practice score, mid-term score, etc.) are low and high, respectively; those of time attributes (e.g., video watching time, practice time, quiz time, etc.) are short and long, respectively; and those of counting attributes (e.g., the number of slide views, the number of video views, the number of times doing quizzes, the number of times doing exercises, etc.) are few and many, respectively;

  - Two linguistic hedges used in our proposed model are Little (L) and Very (V).

- The fuzziness parameter values of EHAs associated with attributes of the experimented datasets are as follows:

  - The maximum specificity of the linguistic words in the LFoCs of all attributes and the quantifier Q is k = 3. Therefore, the number of words used in each LFoC is 17. With a high specificity level (k = 3), many highly specific words are available to describe more special cases;

  - For the attributes related to scores of students, the following is true: m(0) = m(W) = m(1) = 0.1, m(c−) = m(c+) = 0.35, μ(L) = μ(V) = 0.4, μ(h0) = 0.2;

  - For the quantifier Q and other attributes, the following is true: m(0) = m(1) = 0.05, m(W) = 0.1, m(c−) = m(c+) = 0.4, μ(L) = μ(V) = 0.4, μ(h0) = 0.2.
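The word count of 17 and the parameter values listed above can be cross-checked with a short sketch. It assumes that an LFoC consists of the three constant words (0, W, 1) plus every string of at most k − 1 hedges applied to a primary word. The helper `lfoc_words` and the dictionary names are illustrative and are not taken from the authors' implementation.

```python
from itertools import product


def lfoc_words(hedges=("L", "V"), primaries=("c-", "c+"), k=3, constants=("0", "W", "1")):
    """Enumerate constants plus all hedge strings of word length <= k (assumed LFoC structure)."""
    words = list(constants)
    for length in range(1, k + 1):
        for prefix in product(hedges, repeat=length - 1):
            for primary in primaries:
                words.append(" ".join(prefix + (primary,)))
    return words


words = lfoc_words()

# Fuzziness parameter values copied from the settings above.
score_m = {"0": 0.1, "W": 0.1, "1": 0.1, "c-": 0.35, "c+": 0.35}
other_m = {"0": 0.05, "W": 0.1, "1": 0.05, "c-": 0.4, "c+": 0.4}
hedge_mu = {"L": 0.4, "V": 0.4, "h0": 0.2}

score_total = sum(score_m.values())
other_total = sum(other_m.values())
hedge_total = sum(hedge_mu.values())
```

With two hedges and k = 3 there are 2 + 4 + 8 = 14 hedge strings, which together with the three constants give 17 words. Each group of listed parameter values also sums to 1, up to floating-point rounding.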

4.3. Experimental Results and Discussion

First, to show the efficiency of our proposed algorithm model, this subsection compares its experimental results with those of the existing models on the creep dataset [9]. Then, it presents the application of the proposed linguistic summarization model to extract linguistic summaries from datasets of blended learning courses in order to detect the common rules and outliers of the courses. The proposed model is an enhanced version of the Random-Greedy-LS algorithm proposed in [24], whose performance was demonstrated in [11,24]. Linguistic summaries of the form (2) are extracted. To examine each assessment measure of the given learning courses, the summarizer attribute is set, in turn, to MidTermScore, FinalExamScore, and FinalCourseScore.

4.3.1. Experimental Results on the Creep Dataset

To show the efficacy of the proposed model in comparison with the existing ones, its experimental results on the creep dataset are compared to those of the Hybrid-GA [9] and ACO-LDS [40] models. Specifically, the proposed model was experimentally run 10 times. The results of the 10 runs are averaged, and the comparison results are shown in Table 3.

Table 3. Comparison of the performance results of the proposed model in this study with the Hybrid-GA [9] and ACO-LDS [40] models.

As shown in Table 3, the proposed method achieves an average fitness function value (Fitness) of 0.8, an average truth value (Truth) of 0.9957, and an average goodness value (Goodness) of 0.75324, which are much better than those of Hybrid-GA [9] and ACO-LDS [40]. The average diversity value (Diversity) of the proposed model is 0.91, which is better than that of ACO-LDS (0.8233) but worse than that of Hybrid-GA (1.0). The average number of linguistic summaries with Q greater than a half is 18.8 for the proposed model, which is better than that of Hybrid-GA (17.8) but worse than that of ACO-LDS (21.0). The number of linguistic summaries with a truth value (T) greater than 0.8 is 30 for the proposed model, better than that of Hybrid-GA (27). Overall, the comparison results show the effectiveness of our proposed model.

4.3.2. Extraction of Linguistic Summaries Expressing Common Rules

- For the dataset of the Discrete Mathematics course

The samples of extracted linguistic summaries expressing common rules of this learning course are shown in Table 4. Only the linguistic summaries with a truth value of 1.0 are put into Table 4.

Table 4. Linguistic summaries extracted from the dataset of Discrete Mathematics.

It can be seen in the first part of Table 4 that all five linguistic summaries include quiz score information in the filter criteria and have the same summarizer, extremely high mid-term score. In addition, the semantics of the linguistic words expressing the quiz scores in those linguistic summaries vary from very high to extremely high. Therefore, the quiz score is one of the most important factors and has a strong effect on the mid-term score. The other factors that directly or indirectly affect the mid-term score can be the number of lecture slide views, the number of times doing exercises, and the number of full-time lectures in direct learning classes. Therefore, to obtain a high mid-term score, students should improve those activities during the learning course.

As shown in the second part of Table 4, the final exam scores of students are quite low because students did not actively take part in the learning activities during the learning course. Specifically, the number of times doing quizzes and the quiz score are the two most important factors that directly affect the final exam score. Moreover, the number of full-time lectures in direct learning classes and the number of video views are also two factors affecting the final exam score.

The course learning outcome that a student obtains is evaluated by the course final score computed based on the mid-term score, final exam score, and attendance score. Their weights are 0.3, 0.6, and 0.1, respectively. By analyzing the semantics of linguistic words in the filter criteria of linguistic summaries shown in the last part of Table 4, we can see that the quiz score and the number of times doing exercises have a big effect on the learning outcomes of students. The number of slide views does not have much effect because students can download the lecture slides to read offline. In addition, the number of video views and the times in direct learning classes are the other factors that affect the course learning outcome.
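As a small worked illustration of these weights, the sketch below assumes that the course final score is a plain weighted sum of the three component scores on a common scale. The function name and the sample scores on a 10-point scale are hypothetical.

```python
def course_final_score(mid_term, final_exam, attendance, weights=(0.3, 0.6, 0.1)):
    """Weighted sum of the component scores (assumes all three share the same scale)."""
    w_mid, w_final, w_att = weights
    return w_mid * mid_term + w_final * final_exam + w_att * attendance


# Hypothetical student on a 10-point scale: 0.3*8 + 0.6*7 + 0.1*10
example = round(course_final_score(8.0, 7.0, 10.0), 2)
```

Because the final exam carries a weight of 0.6, any factor that shifts the final exam score moves the course final score twice as much as the same shift in the mid-term score.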

- For the dataset of the Introduction to Computer Science course

The samples of extracted linguistic summaries expressing common rules of this learning course are shown in Table 5. As in Table 4, only the linguistic summaries with a truth value of 1.0 are put into Table 5.

Table 5. Linguistic summaries extracted from the dataset of Introduction to Computer Science.

As we can see in part 1 of Table 5, the linguistic summaries in rows 1, 2, and 3 indicate that extremely high average quiz scores and many times in direct learning classes are the important factors in helping extremely many students obtain very high mid-term scores. Combining the short average practice time in rows 1, 4, and 5 with the few times of doing practice and long average practice time in row 3, we can see that if the average practice time is short, then the number of times doing practice should be many, whereas if the number of times doing practice is few, the average practice time should be long.

By analyzing the second part of Table 5, we found that high quiz scores and high practice scores are the factors that make many students obtain very high final exam scores. Because they appear with the linguistic words few and short, the number of times doing practice, the number of times doing quizzes, quiz time, and practice time are not important factors contributing to a high final exam score. This is also true for the video and slide views. Based on those analyses, we can state that there may be some problems with the final exam results because, although students did not actively take part in learning activities, they still obtained high final exam scores (rows 6 and 7). Thus, educators and lecturers need to take action to find out the causes of the problems.

Based on the linguistic summaries shown in the last part of Table 5, very many students who have a long average practice time or little long total practice time and obtain high average practice scores, high self-learning scores, or extremely high mid-term scores obtain extremely high course final scores. In addition, the number of times doing practice is only few, which suggests that long practice sessions are efficient. Many students who have few times doing quizzes and short average quiz times or short total quiz times obtain very high course final scores. This indicates that short quiz time is efficient, so only a few quiz attempts are needed.

In summary, based on the linguistic summaries shown in Table 5, there may be some problems with exam scores that need to be investigated. For example, the mid-term tests and/or final exams may be so easy that students can readily obtain good scores. It is clear that the learning outcomes of a course depend heavily on learning activities such as doing practice, quiz time, and self-learning. In general, a set of extracted summary sentences in the form of linguistic rules can express the learning activities of students and the relations between those activities and students’ learning outcomes. It also reflects diverse groups of students at different levels of behavior. Thus, those linguistic rules can help students adjust and choose suitable learning activities or learning styles to achieve better learning outcomes. Moreover, they also help lecturers and educators discover the shortcomings in the curriculum and teaching activities so that they can adjust them and find an efficient way to improve the learning outcomes of students.

4.3.3. Extraction of Linguistic Summaries Expressing Outliers

The extracted linguistic summaries expressing exceptional cases or outliers of both learning courses are shown in Table 6. We can see that most outliers express cases in which, although the students’ learning activities during the learning process are remarkable, their exam scores or learning outcomes are poor and not commensurate with their efforts. The linguistic summaries that deserve particular attention are in rows 5 and 6 of the table. The linguistic summary in row 5 shows that extremely few students with trivial (low and few) participation in learning activities (quizzes, videos, slides) achieve high final exam scores. It provides educators with valuable insights into student engagement and performance patterns. In practical terms, it highlights the importance of continuous participation throughout the course, suggesting that students who engage minimally with the course activities are unlikely to achieve high scores on the final exam. This suggests that educators should intervene early to encourage participation. Moreover, it represents an exceptional case that deviates from common student performance patterns, and it has practical significance for educators as follows:

Table 6. Linguistic summaries expressing outliers.

- Students have exceptional learning strategies: It may indicate that some students have good learning outcomes despite minimal engagement, possibly due to alternative study methods, prior knowledge, or external resources. Therefore, educators can explore those strategies and consider integrating them into the course design and try to apply them to the next learning courses.

- There may be some curriculum gaps or limitations: In case a student achieves a high final exam score without participating much in the course activities, it might suggest that the course assessments do not fully reflect engagement-based learning, signaling a need to redesign the course’s curriculum.

- Students have personalized learning abilities: Educators can investigate whether exceptional students have different backgrounds, learning preferences, or unique cognitive abilities. Understanding these factors may help them with tailoring support for diverse learners.

The linguistic summary in row 6 shows that the final exam scores are remarkable (high) and consistent with the students’ learning activities. However, the quantifier word in this sentence is “extremely few”. This means that extremely few students with remarkable learning activities obtain high final exam scores. It also means that extremely many students with remarkable learning activities obtain low final exam scores. Therefore, this linguistic summary suggests an interesting, possibly anomalous pattern in student performance. The practical significance of this observation for educators includes the following:

- There may be assessment misalignment: If students achieve a high maximum quiz score but struggle in the final exam, it could indicate that the quizzes do not accurately reflect the depth of knowledge required for the exam. It means that some quizzes are too easy. Therefore, educators need to review quiz difficulty and consistency to align quizzes more closely with final assessment expectations.

- Domination of memorization rather than deep understanding: Frequent quiz attempts and high scores might indicate that students rely on memorization rather than deep comprehension. This could signal a need to redesign quizzes to incorporate more critical thinking and problem-solving questions.

- Students may face difficulties in exam conditions: Students may excel in quizzes, which are often at lower difficulty levels, but struggle in high-pressure exam environments. Educators might consider strategies to reduce stress or use alternative assessment methods to support students in achieving better learning outcomes.

- Students may face test-taking challenges: Test anxiety, fatigue, and unfamiliarity with the exam format can be difficulties that students may face. Educators can consider offering practice exams or techniques to help students transition from quiz success to exam success.

Therefore, there must be a problem with learning and teaching quality that educators should investigate.

No outlier is extracted when the mid-term score attribute of the Introduction to Computer Science dataset is selected as the summarizer. This suggests that there may be no abnormality in this case.

To validate the linguistic summaries expressing outliers, the functions H and L proposed in [9] can be used to calculate the distance between two linguistic words and the similarity of two linguistic summaries, respectively. For example, the words in the linguistic domain of the linguistic variable are {“extremely few”, “very very few”, “very few”, “little very few”, “few”, “little little few”, “little few”, “very little few”, “medium”, “very little many”, “little many”, “little little many”, “many”, “little very many”, “very many”, “very very many”, “extremely many”}, so the number of words is 17. For the linguistic summary expressing the common rule “Extremely many students with very high average quiz score, little many video views and little little few times doing quizzes obtain extremely high mid-term score” and the linguistic summary expressing the outlier “Extremely few students with very high average quiz score, little many video views and little little few times doing quizzes obtain extremely low mid-term score”, the semantic distance of both the quantifier and the summarizer is |1 − 17| = 16 > round(0.2 × 17) = 3. Therefore, the value of the function L expressing the similarity between the normal linguistic summary and the outlier is No. Similar calculations show that, for every outlier shown in Table 6, the value of the similarity function between the outlier and its corresponding normal linguistic summary is No.
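The comparison of the two example summaries can be replayed with the sketch below. It rests on two assumptions that the text does not state explicitly: the summary-level function L is read as returning Yes only when every pair of corresponding words lies within the threshold round(0.2 × |Dom|), and the score domain is obtained from the listed 17-word domain by renaming few/many to low/high. The dictionary keys are hypothetical attribute names.

```python
COUNT_DOMAIN = [
    "extremely few", "very very few", "very few", "little very few", "few",
    "little little few", "little few", "very little few", "medium",
    "very little many", "little many", "little little many", "many",
    "little very many", "very many", "very very many", "extremely many",
]
# Assumed analogue of the count domain for score attributes.
SCORE_DOMAIN = [w.replace("few", "low").replace("many", "high") for w in COUNT_DOMAIN]


def distinct(domain, w1, w2, ratio=0.2):
    """True when the position gap of two words exceeds round(ratio * |domain|)."""
    return abs(domain.index(w1) - domain.index(w2)) > round(ratio * len(domain))


def summaries_similar(p1, p2, domains):
    """Assumed reading of L: 'Yes' (True) only if no component pair is distinct."""
    return all(not distinct(domains[key], p1[key], p2[key]) for key in p1)


domains = {
    "Q": COUNT_DOMAIN, "AvgQuizScore": SCORE_DOMAIN, "VideoViews": COUNT_DOMAIN,
    "QuizTimes": COUNT_DOMAIN, "MidTermScore": SCORE_DOMAIN,
}
rule = {
    "Q": "extremely many", "AvgQuizScore": "very high", "VideoViews": "little many",
    "QuizTimes": "little little few", "MidTermScore": "extremely high",
}
outlier = dict(rule, Q="extremely few", MidTermScore="extremely low")

quantifier_gap = abs(COUNT_DOMAIN.index(rule["Q"]) - COUNT_DOMAIN.index(outlier["Q"]))
is_similar = summaries_similar(rule, outlier, domains)
```

Here the quantifier gap is 16, which exceeds the threshold of 3, so under the assumed reading of L the outlier is not similar to its corresponding common rule.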

In summary, outliers arise mainly from unsuitable learning activities and learning styles of students and from an inappropriate curriculum. Based on the outliers, students can notice inconsistencies in their learning process and make timely adjustments. Lecturers and educators can identify tangible shortcomings in the curriculum and teaching activities and plan for improvement. Moreover, they can detect cheating by students on tests and exams based on the questionable linguistic summaries among the outliers. Based on the results of this methodological study, an LMS plug-in application platform will be developed in the next stage.

5. Conclusions

Algorithm models of the linguistic summarization of data provide us with discovered knowledge represented by summary sentences in natural language. This kind of knowledge is easy for people to understand. The blended learning format is now popular in higher education at both undergraduate and graduate levels. Many machine learning techniques, such as classification, regression, clustering, and forecasting, have been applied to discover learning styles, evaluate learning performance, and predict the learning outcomes of students. However, few studies have examined how to transform the data of blended learning courses into knowledge represented as linguistic summaries. This paper presents the application of an interpretable linguistic data summarization algorithm model to extract compact sets of linguistic summaries from blended learning course data collected from a learning management system. A method of detecting exceptional cases or outliers based on a set of linguistic summaries expressing common rules is also proposed. The experiments were carried out on two real-world datasets of blended learning courses, and the experimental results have shown the practical applicability of the proposed methods. Based on the contents of the extracted linguistic summaries, university educators and lecturers can evaluate the learning activities during the learning courses to find any abnormal cases so that they can help students adjust and choose suitable learning styles to improve their learning results.

Author Contributions

Conceptualization, P.D.P. and P.T.L.; methodology, P.D.P.; software, P.D.P.; validation, T.X.T., P.D.P. and P.T.L.; formal analysis, P.D.P.; investigation, T.X.T.; resources, P.T.L.; data curation, P.T.L. and T.X.T.; writing—original draft preparation, P.D.P.; writing—review and editing, P.T.L. and T.X.T.; visualization, T.X.T.; supervision, P.T.L.; project administration, P.D.P.; funding acquisition, P.D.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research is funded by the University of Transport and Communications (UTC) under grant number T2023-CN-008TD.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data in the case study are not publicly available due to the confidentiality requirement of the project.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Mitra, S.; Pal, S.K.; Mitra, P. Data mining in soft computing framework: A survey. IEEE Trans. Neural Netw. 2002, 13, 3–14.

2. Yager, R.R. A new approach to the summarization of data. Inf. Sci. 1982, 28, 69–86.

3. Kacprzyk, J.; Yager, R.R. Linguistic summaries of data using fuzzy logic. Int. J. Gen. Syst. 2001, 30, 133–154.

4. Federico, M.P.; Angel, B.; Diego, R.L.; Santiago, S.S. Linguistic Summarization of Network Traffic Flows. In Proceedings of the IEEE International Conference on Fuzzy Systems, Hong Kong, China, 1–6 June 2008; pp. 619–624.

5. Altintop, T.; Yager, R.R.; Akay, D.; Boran, F.E.; Ünal, M. Fuzzy Linguistic Summarization with Genetic Algorithm: An Application with Operational and Financial Healthcare Data. Int. J. Uncertain. Fuzziness Knowl.-Based Syst. 2017, 25, 599–620.

6. Aguilera, M.D.P.; Espinilla, M.; Olmo, M.R.F.; Medina, J. Fuzzy linguistic protoforms to summarize heart rate streams of patients with ischemic heart disease. Complexity 2019, 2019, 2694126.

7. Katarzyna, K.M.; Gabriella, C.; Giovanna, C.; Olgierd, H.; Monika, D. Explaining smartphone-based acoustic data in bipolar disorder: Semi-supervised fuzzy clustering and relative linguistic summaries. Inf. Sci. 2022, 588, 174–195.

8. Jain, A.; Popescu, M.; Keller, J.; Rantz, M.; Markway, B. Linguistic summarization of in-home sensor data. J. Biomed. Inform. 2019, 96, 103240.

9. Diaz, C.D.; Muro, A.; Pérez, R.B.; Morales, E.V. A hybrid model of genetic algorithm with local search to discover linguistic data summaries from creep data. Expert Syst. Appl. 2014, 41, 2035–2042.

10. Nguyen, C.H.; Pham, T.L.; Nguyen, T.N.; Ho, C.H.; Nguyen, T.A. The linguistic summarization and the interpretability, scalability of fuzzy representations of multilevel semantic structures of word-domains. Microprocess. Microsyst. 2021, 81, 103641.

11. Pham, D.P.; Nguyen, D.D. A design of computational fuzzy set-based semantics for extracting linguistic summaries. Transp. Commun. Sci. J. 2024, 75, 2081–2092.

12. Remco, D.; Anna, W. Linguistic summarization of event logs—A practical approach. Inf. Syst. 2017, 67, 114–125.

13. Calderón, C.A.; Pupo, I.P.; Herrera, R.Y.; Pérez, P.Y.P.; Pulgarón, R.P.; Acuña, L.A. Sport Customized Training Plan Assisted by Linguistic Data Summarization. In Computational Intelligence Applied to Decision-Making in Uncertain Environments; Studies in Computational Intelligence; Springer: Cham, Switzerland, 2025; Volume 1195, pp. 283–309.

14. Majer, K.K.; Casalino, G.; Castellano, G.; Dominiak, M.; Hryniewicz, O.; Kamińska, O.; Vessio, G.; Rodríguez, N.D. PLENARY: Explaining black-box models in natural language through fuzzy linguistic summaries. Inf. Sci. 2022, 614, 374–399.

15. Serkan, G.; Akay, D.; Boran, F.E.; Yager, R.R. Linguistic summarization of fuzzy social and economic networks: An application on the international trade network. Soft Comput. 2020, 24, 1511–1527.

16. Sena, A.; Gül, E.O.K.; Diyar, A. Linguistic summarization to support supply network decisions. J. Intell. Manuf. 2021, 32, 1573–1586.

17. Andrea, C.F.; Alejandro, R.S.; Alberto, B. Meta-heuristics for generation of linguistic descriptions of weather data: Experimental comparison of two approaches. Fuzzy Sets Syst. 2022, 443, 173–202.

18. Wilbik, A.; Barreto, D.; Backus, G. On Relevance of Linguistic Summaries—A Case Study from the Agro-Food Domain. In Information Processing and Management of Uncertainty in Knowledge-Based Systems, Proceedings of the 18th International Conference, IPMU 2020, Lisbon, Portugal, 15–19 June 2020; Communications in Computer and Information Science; Springer: Cham, Switzerland, 2020; Volume 1237, pp. 289–300.

19. Kacprzyk, J.; Zadrożny, S. Linguistic database summaries and their protoforms: Towards natural language based knowledge discovery tools. Inf. Sci. 2005, 173, 281–304.

20. Diaz, C.A.D.; Bello, R.; Kacprzyk, J. Linguistic data summarization using an enhanced genetic algorithm. Czas. Tech. 2014, 2013, 3–12.

21. Ortega, R.C.; Marín, N.; Sánchez, D.; Tettamanzi, A.G. Linguistic summarization of time series data using genetic algorithms. EUSFLAT 2011, 1, 416–423.

22. Sena, A. Interval type-2 fuzzy linguistic summarization using restriction levels. Neural Comput. Appl. 2023, 35, 24947–24957.

23. Nguyen, C.H.; Tran, T.S.; Pham, D.P. Modeling of a semantics core of linguistic terms based on an extension of hedge algebra semantics and its application. Knowl.-Based Syst. 2014, 67, 244–262.

24. Lan, P.T.; Hồ, N.C.; Phong, P.Đ. Extracting an optimal set of linguistic summaries using genetic algorithm combined with greedy strategy. J. Inf. Technol. Commun. 2020, 2020, 75–87.

25. Rashid, A.B.; Ikram, R.R.R.; Thamilarasan, Y.; Salahuddin, L.; Yusof, N.F.A.; Rashid, Z. A Student Learning Style Auto-Detection Model in a Learning Management System. Eng. Technol. Appl. Sci. Res. 2023, 13, 11000–11005.

26. Alsubhi, B.; Aljojo, N.; Banjar, A.; Tashkandi, A.; Alghoson, A.; Al-Tirawi, A. Effective Feature Prediction Models for Student Performance. Eng. Technol. Appl. Sci. Res. 2023, 13, 11937–11944.

27. Luo, Y.; Han, X.; Zhang, C. Prediction of learning outcomes with a machine learning algorithm based on online learning behavior data in blended courses. Asia Pac. Educ. Rev. 2024, 25, 267–285.

28. Abdulaziz, S.A.; Diaa, M.U.; Adel, A.; Magdy, A.E.; Azizah, F.M.A.; Yaser, M.A. A Deep Learning Model to Predict Student Learning Outcomes in LMS Using CNN and LSTM. IEEE Access 2022, 10, 85255–85265.

29. Sachini, G.; Mirka, S. Explainable AI in Education: Techniques and Qualitative Assessment. Appl. Sci. 2025, 15, 1239.

30. Zadeh, L.A. A computational approach to fuzzy quantifiers in natural languages. Comput. Math. Appl. 1983, 9, 149–184.

31. Wilbik, A. Linguistic Summaries of Time Series Using Fuzzy Sets and Their Application for Performance Analysis of Investment Funds. Ph.D. Dissertation, Systems Research Institute, Polish Academy of Sciences, Warsaw, Poland, 2010.

32. Nguyen, C.H.; Wechler, W. Hedge algebras: An algebraic approach to structures of sets of linguistic domains of linguistic truth values. Fuzzy Sets Syst. 1990, 35, 281–293.

33. Nguyen, C.H.; Wechler, W. Extended algebra and their application to fuzzy logic. Fuzzy Sets Syst. 1992, 52, 259–281.

34. Nguyen, C.H.; Hoang, V.T.; Nguyen, V.L. A discussion on interpretability of linguistic rule based systems and its application to solve regression problems. Knowl.-Based Syst. 2015, 88, 107–133.

35. Hoang, V.T.; Nguyen, C.H.; Nguyen, D.D.; Pham, D.P.; Nguyen, V.L. The interpretability and scalability of linguistic-rule-based systems for solving regression problems. Int. J. Approx. Reason. 2022, 149, 131–160.

36. Tarski, A.; Mostowski, A.; Robinson, R. Undecidable Theories; Elsevier: North-Holland, The Netherlands, 1953.

37. Agnieszka, D.; Piotr, S.S. Linguistic summaries using interval-valued fuzzy representation of imprecise information—An innovative tool for detecting outliers. Lect. Notes Comput. Sci. 2021, 12747, 500–513.

38. Agnieszka, D.; Piotr, S. Detection of outlier information using linguistically quantified statements—The state of the art. Procedia Comput. Sci. 2022, 207, 1953–1958.

39. Duraj, A.; Szczepaniak, P.S.; Chomatek, L. Intelligent Detection of Information Outliers Using Linguistic Summaries with Non-monotonic Quantifiers. In Proceedings of the International Conference on Information Processing and Management of Uncertainty in Knowledge-Based Systems, Lisbon, Portugal, 15–19 June 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 787–799.

40. Diaz, C.D.; Bello, R.; Kacprzyk, J. Using Ant Colony Optimization and Genetic Algorithms for the Linguistic Summarization of Creep Data. Adv. Intell. Syst. Comput. 2015, 322, 81–92.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).