Abstract

Lossless coding is a compression mode of the Versatile Video Coding (VVC) standard that compresses video without any distortion. It has broad application prospects in fields with strict requirements on video quality. However, because the current VVC standard is designed mainly for lossy coding, the compression efficiency of VVC lossless coding falls short of practical needs. To improve the performance of VVC lossless coding, this paper proposes a sample-based intra-gradient edge detection and angular prediction (SGAP) method. Exploiting the characteristics of lossless intra-coding, SGAP uses the samples adjacent to the current sample as reference samples and performs prediction iteratively, sample by sample. SGAP aims to improve the prediction accuracy in edge regions, smooth regions and directional texture regions of images. Experimental results on the VVC Test Model (VTM) 12.3 show that SGAP achieves an average bit-rate saving of 7.31% in VVC lossless intra-coding, while increasing the encoding time by only 5.4%. Compared with existing advanced sample-based intra-prediction methods, SGAP provides a significantly higher coding performance gain.

1. Introduction

With the development of video capture and information transmission technologies, video has gradually become the main way for people to obtain information in daily life. To meet the ever-growing need for improved video compression, the Joint Video Experts Team (JVET) of the International Telecommunication Union-Telecommunication Standardization Sector (ITU-T) Video Coding Experts Group (VCEG) and the International Organization for Standardization/International Electrotechnical Commission (ISO/IEC) Moving Picture Experts Group (MPEG) developed the most recent international video coding standard, Versatile Video Coding (VVC) [1]. Compared to its predecessor, the High-Efficiency Video Coding (HEVC) standard [2], VVC can reduce the bit rate by about 50% at the same video quality. Compared to the Advanced Video Coding (AVC) standard [3], currently the most widely used video coding standard, VVC can reduce the bit rate by about 75% at the same video quality. Depending on whether distortion is introduced, video coding can be divided into lossy coding and lossless coding. Lossless coding, one of the coding modes of VVC, compresses video without distortion at the cost of part of the compression efficiency. It is widely used in fields that require high video quality, such as satellite remote sensing image processing, medical image transmission, fingerprint image storage, digital archiving [4] and remote desktop sharing.

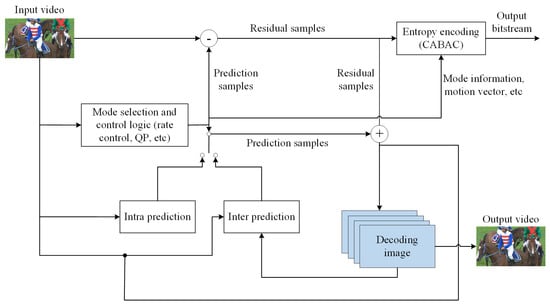

Figure 1 shows the encoding framework of VVC lossless coding. VVC achieves lossless coding by skipping the transform [5], quantization and other steps that can introduce distortion. Without transform and quantization, the energy of the residual samples is not compacted and remains high, which lowers the compression efficiency of lossless intra-coding. Although lossless coding reconstructs the video exactly, its compression efficiency is hard to make satisfactory, which greatly limits its practical application. Since the coding tools of VVC are designed mainly for lossy coding, there is considerable room for optimizing lossless coding. To increase the practical value of lossless coding, it is necessary to explore optimization methods for it.

Figure 1.

The encoding framework of VVC lossless coding.

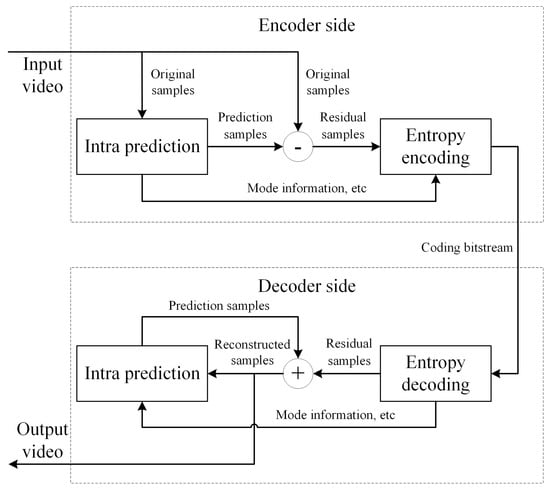

Existing optimization methods improve lossless coding mainly through block partitioning, prediction, transform and other steps. Among them, improving intra-prediction is the most common approach. In conventional intra-prediction, the reconstructed samples of the prediction blocks adjacent to the current prediction block serve as reference samples. These reference samples lie outside the current prediction block and are referred to as out-block reference samples. Because this method uses the prediction block as the basic prediction unit, it is referred to as block-based intra-prediction. In lossless intra-coding, the reconstructed samples on the decoder side are identical to the original samples on the encoder side, so samples inside the current block can also be used as reference samples. This kind of prediction uses the sample as the basic prediction unit and proceeds iteratively, sample by sample. The main encoding and decoding framework of sample-based lossless intra-coding is shown in Figure 2. On the encoder side, the original samples adjacent to the current sample are used as reference samples for prediction, and the prediction samples are subtracted from the original samples to obtain the residual samples. On the decoder side, the reconstructed samples adjacent to the current sample serve as reference samples, and the residual samples are added to the prediction samples to obtain the reconstructed samples, which then take part in the prediction of subsequent samples.

Figure 2.

The main framework of sample-based lossless intra-coding.
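The encoder/decoder symmetry described above can be illustrated with a minimal Python sketch. It uses a hypothetical toy predictor (left neighbour, falling back to the top neighbour and then to a fixed value of 128 for the first sample) instead of SGAP's actual predictors; the point is only that the decoder rebuilds the block exactly because it predicts from reconstructed samples that equal the originals.

```python
from typing import Callable, List

Block = List[List[int]]
# A predictor sees the samples available so far and the (row, col) of the
# current sample; it must only read causal (already processed) neighbours.
Predictor = Callable[[Block, int, int], int]


def left_predictor(samples: Block, r: int, c: int) -> int:
    """Hypothetical toy predictor: left neighbour, else top neighbour, else 128."""
    if c > 0:
        return samples[r][c - 1]
    if r > 0:
        return samples[r - 1][c]
    return 128


def encode(original: Block, predict: Predictor) -> Block:
    """Encoder side: predict from adjacent *original* samples."""
    return [
        [original[r][c] - predict(original, r, c) for c in range(len(original[r]))]
        for r in range(len(original))
    ]


def decode(residual: Block, predict: Predictor) -> Block:
    """Decoder side: predict from adjacent *reconstructed* samples, in raster order."""
    height, width = len(residual), len(residual[0])
    recon = [[0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            # Each reconstructed sample immediately becomes a reference
            # for the samples that follow it.
            recon[r][c] = predict(recon, r, c) + residual[r][c]
    return recon


block = [[100, 102, 104], [101, 103, 110], [99, 98, 97]]
residual = encode(block, left_predictor)
assert decode(residual, left_predictor) == block  # lossless round trip
```

Any predictor that reads only causal neighbours can be swapped in for `left_predictor` without breaking the round trip.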

In the VVC standard, Block Differential Pulse Code Modulation (BDPCM) [6] is a sample-based prediction tool that improves lossless coding performance. However, BDPCM has only two prediction modes, horizontal and vertical, so the coding gain it brings is limited. Therefore, this paper analyzes the characteristics of different types of image regions and proposes a new sample-based intra-prediction method to replace the conventional intra-prediction method in VVC. The proposed method aims to predict frequently occurring image regions accurately and thereby improve the performance of VVC lossless intra-coding.
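Why BDPCM counts as sample-based can be shown with a short sketch. It assumes the usual description of VVC BDPCM (horizontal intra prediction from the left reference column, followed by differencing of the residuals along the row) with quantization bypassed; the function names are hypothetical. Under these assumptions, horizontal BDPCM codes exactly the difference between each sample and its left neighbour, and vertical BDPCM does the same along columns, so BDPCM offers only these two fixed predictors.

```python
from typing import List

Block = List[List[int]]


def bdpcm_horizontal_lossless(block: Block, left_ref: List[int]) -> Block:
    """Values coded by horizontal BDPCM when quantization is bypassed."""
    coded = []
    for row, ref in zip(block, left_ref):
        # Step 1: horizontal intra prediction copies the left reference across the row.
        residual = [x - ref for x in row]
        # Step 2: each residual is coded as its difference from the residual on its left.
        coded.append([residual[0]] + [residual[j] - residual[j - 1] for j in range(1, len(row))])
    return coded


def left_neighbour_dpcm(block: Block, left_ref: List[int]) -> Block:
    """Sample-based prediction: each sample is predicted by its left neighbour."""
    coded = []
    for row, ref in zip(block, left_ref):
        previous = [ref] + row[:-1]  # left neighbour of every sample in the row
        coded.append([x - p for x, p in zip(row, previous)])
    return coded


block = [[10, 12, 15], [20, 19, 19]]
left_ref = [9, 21]
assert bdpcm_horizontal_lossless(block, left_ref) == left_neighbour_dpcm(block, left_ref)
```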

The rest of this paper is organized as follows. Section 2 reviews existing lossless coding optimization methods. Section 3 describes the proposed SGAP method in detail, including its principle and implementation. Section 4 presents the experimental results together with analysis and discussion. Finally, Section 5 concludes the paper.

2. Related Works

Researchers in the field of video coding have carried out a series of studies on optimizing the performance of lossless coding. Lee et al. [7,8] proposed a Differential Pulse Code Modulation (DPCM) method within the framework of the AVC standard. First, conventional horizontal or vertical prediction was performed on the current Prediction Unit (PU). Then, the adjacent residual samples were subtracted from the residual samples to obtain secondary residual samples. This method is also known as Residual DPCM (RDPCM). In RDPCM coding, all samples within the current PU share the same prediction mode. References [9,10] proposed enhanced sample-based intra-prediction, which allows a different prediction mode to be selected for each sample within the current PU. The HEVC standard retained RDPCM to improve the performance of lossless intra-coding by reprocessing residual samples [11,12]. Hong et al. [13] proposed cross-residual prediction (CRP), which applies DPCM to residual samples in both the horizontal and vertical directions. References [14,15] used the gradient information between the reference samples adjacent to the current sample to improve the prediction accuracy of RDPCM. In the VVC standard, RDPCM motivated the development of the BDPCM coding tool [16]. Zhou et al. [17] extended DPCM to all angular intra-prediction modes and proposed a sample-based angular intra-prediction (SAP) method. SAP-Horizontal and Vertical (SAP-HV) [18] applied SAP only in the horizontal and vertical directions. SAP1 [19] adopted an equidistant prediction method for all angular prediction modes in SAP; this method is better suited to short prediction distances. Building on prediction from adjacent reference samples, Sanchez et al. [20,21,22] introduced median edge detection (MED) [23] as the planar mode to strengthen the ability of the intra-prediction module to handle edge regions.

References [24,25] analyzed the gradient information between adjacent reference samples to detect edges around the current sample. Wige et al. [26,27] proposed a Sample-based Weighted Prediction (SWP) method with Directional Template Matching (DTM), which predicts the current sample as a weighted combination of the surrounding reference samples. Sanchez et al. [28] proposed a Sample-based Edge and Angle Prediction (SEAP) method for lossless intra-coding of screen content. Weng et al. [29] proposed an L-shape-based Iterative Prediction (LIP) method and a Residual Median Edge Detection (RMED) method. LIP divided the current coding unit (CU) into adjacent one-dimensional sub-regions and allowed each sub-region to have its own prediction mode. RMED applied MED to residual blocks to remove the spatial redundancy between residual samples around edges.
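Because MED [23] underlies several of the edge-aware sample predictors reviewed above, a short Python sketch of the LOCO-I/JPEG-LS MED predictor may help. The neighbour names are ours; the decision rule is the one defined in [23].

```python
def med_predict(left: int, top: int, top_left: int) -> int:
    """Median edge detector (MED) from LOCO-I / JPEG-LS."""
    if top_left >= max(left, top):
        return min(left, top)        # edge detected: take the smaller neighbour
    if top_left <= min(left, top):
        return max(left, top)        # edge detected: take the larger neighbour
    return left + top - top_left     # no edge: planar (gradient) estimate


print(med_predict(10, 20, 25))  # 10
print(med_predict(10, 20, 15))  # 15
```

The result is always the median of `left`, `top` and `left + top - top_left`, which is where the detector gets its name.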

Because the energy of residual values in lossless coding is relatively dispersed, some studies transformed the residual samples to compact their energy. Such transforms must not introduce any distortion. Chen et al. [30] designed reversible transforms for the residual samples of different prediction modes, which do not affect the quality of the reconstructed video. Cai et al. [31] performed transform and quantization in lossless coding: on the encoder side, the quantized residual values were encoded together with quantization error information, which the decoder then used to eliminate the distortion. Kamisli [32] proposed an integer-to-integer discrete sine transform that transforms residual samples without distortion and without increasing the dynamic range of the values.
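The key requirement here is exact invertibility in integer arithmetic. As a generic illustration, not the specific transforms of [30,31,32], the lifting-based S-transform (an integer version of the two-point Haar transform) maps two integer samples to an integer average and difference and can be inverted without rounding loss:

```python
from typing import Tuple


def s_transform_forward(x0: int, x1: int) -> Tuple[int, int]:
    """Integer Haar (S-transform) via lifting: returns (average, difference)."""
    h = x0 - x1          # predict step: difference, needs one extra bit
    l = x1 + (h >> 1)    # update step: equals floor((x0 + x1) / 2)
    return l, h


def s_transform_inverse(l: int, h: int) -> Tuple[int, int]:
    """Undo the lifting steps in reverse order; no information is lost."""
    x1 = l - (h >> 1)
    x0 = h + x1
    return x0, x1


# Exhaustive check on 8-bit sample pairs: the transform is perfectly reversible.
assert all(
    s_transform_inverse(*s_transform_forward(a, b)) == (a, b)
    for a in range(256) for b in range(256)
)
```

Note that the difference term `h` needs one more bit than the input samples; this kind of dynamic-range growth is what the transform of [32] is designed to avoid.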

The above residual-transform methods can improve the performance of lossless coding to some extent, but their gains are relatively limited. In addition, images contain many complex types of regions, and existing sample-based prediction methods struggle to predict all of them accurately. It is therefore necessary to explore a method that can accurately predict the frequently occurring image regions.

3. Proposed Method

The regions that occur frequently in images mainly include sharp edge regions, smooth regions with little noise and directional texture regions. The proposed SGAP contains three prediction algorithms, each suited to one of these region types. This section analyzes the characteristics of these regions, introduces the three prediction algorithms in SGAP and then describes the flowchart of SGAP in VVC lossless intra-coding.

3.1. Sample-Based Intra-Gradient Edge Detection and Angular Prediction

The following discussion and calculations are based on a prediction block. In addition to the in-block reference samples, two rows and two columns of out-block reference samples are used to predict the samples near the top and left boundaries of the block. The coordinate of the top-left-corner out-block reference sample is defined as . The intra-prediction described below is performed by iterating over the samples.

3.1.1. Edge Regions: Gradient Edge Detection

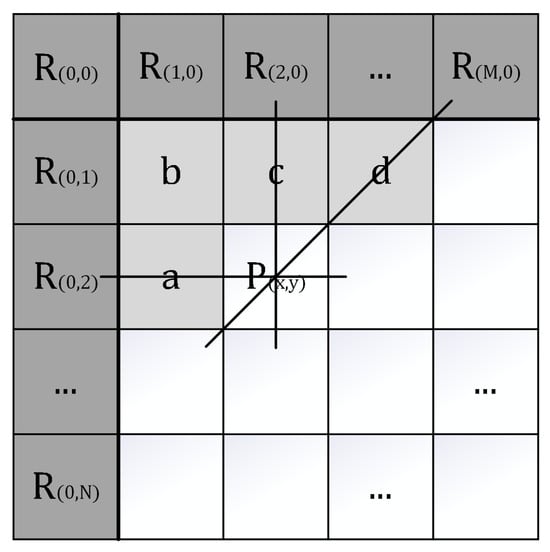

Edges are the boundaries between regions with different image attributes, and the values of samples on either side of an edge differ significantly. Since images generally contain many different attributes, edges occur very frequently. In conventional intra-prediction, all samples in a prediction block share the same predictor; however, samples located at edges in different directions within a block are suited to different predictors. To address this, a gradient edge detection (GED) algorithm is proposed. GED detects edges near the current sample by analyzing the gradient information between adjacent reference samples, and then selects the best predictor for the current sample based on the detection result. The prediction process of GED and the positional relationship between the reference samples and the current sample are shown in Figure 3. Because reference sample d is used in the prediction, the prediction has to be performed line by line. When reference sample d is unavailable, it is filled with the value of the closest available sample.

Figure 3.

The prediction process of GED and the positional relationship between the reference samples and the current sample.

GED can detect edges in the horizontal and vertical directions, as well as in the top-right diagonal direction. It uses four reference samples around the current sample, a, b, c and d, for prediction. The prediction sample is calculated as follows.

Here, m is the maximum and n the minimum of a and c. When b is greater than m, an edge in the b-to-m direction is expected near the current sample, so n is an appropriate value for the prediction sample. In this case, if the difference between b and m is greater than the difference between m and n (b - m > m - n) and d is less than n, the current sample is expected to move from n toward d. An extrapolated value is then obtained by subtracting the difference between b and m from n, that is, n - (b - m). When this value is less than d, a top-right diagonal edge is expected near the current sample, so d is selected as the prediction sample. If the condition b - m > m - n and d < n is not met, n is still used as the prediction sample. Conversely, when b is less than n, an edge in the b-to-n direction is expected near the current sample, so m is an appropriate value for the prediction sample. In this case, if the difference between n and b is greater than the difference between m and n (n - b > m - n) and d is greater than m, the current sample is expected to move from m toward d. The extrapolated value is then obtained by adding the difference between n and b to m, that is, m + (n - b). When this value is greater than d, a top-right diagonal edge is expected, so d is again selected as the prediction sample. If the condition n - b > m - n and d > m is not met, m is still used as the prediction sample. In all other cases, following the trend of change among a, b and c, a + c - b is selected as the prediction sample.
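The GED decision rule can be summarized in a short Python function. This is our reading of the prose, not the authors' code, and it rests on two assumptions: a is the left, b the top-left, c the top and d the top-right neighbour of the current sample (the text names a and c explicitly; b and d are inferred from the edge directions and Figure 3), and when the extrapolated value does not pass d, the extrapolated value itself is used as the prediction, a case the text does not spell out.

```python
def ged_predict(a: int, b: int, c: int, d: int) -> int:
    """Gradient edge detection (GED) predictor, reconstructed from the prose.

    Assumed layout: a = left, b = top-left, c = top, d = top-right neighbour.
    """
    m, n = max(a, c), min(a, c)
    if b > m:                                  # edge in the b-to-m direction
        if b - m > m - n and d < n:            # strong edge and d lies below n
            t = n - (b - m)                    # extrapolate from n toward d
            return d if t < d else t           # assumption: clamp at d, else use t
        return n
    if b < n:                                  # edge in the b-to-n direction
        if n - b > m - n and d > m:            # strong edge and d lies above m
            t = m + (n - b)                    # extrapolate from m toward d
            return d if t > d else t           # assumption: clamp at d, else use t
        return m
    return a + c - b                           # no edge: follow the a, b, c gradient


print(ged_predict(10, 30, 12, 5))  # 5: top-right diagonal edge, d selected
```

When the d-based branches are never triggered, the function reduces to the MED rule of LOCO-I/JPEG-LS, which is the intended baseline behaviour.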

3.1.2. Smooth Regions: Average Prediction

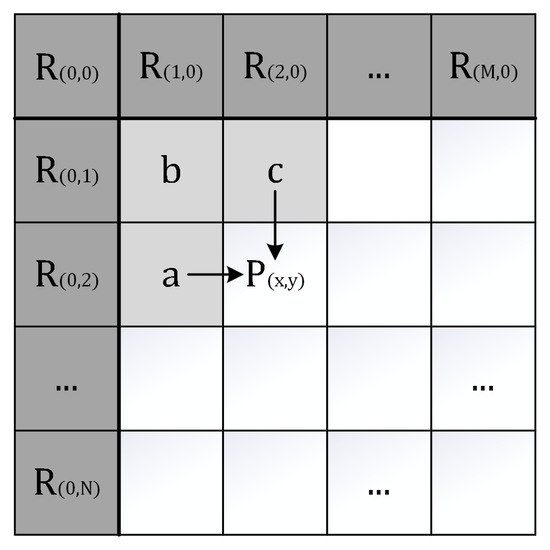

Smooth regions are the parts of an image that contain little noise, where sample values fluctuate gently. Within a region of a single image attribute, sharp fluctuations between samples are unlikely, so smooth regions occur frequently. In this paper, an average predictor is used for smooth regions. The process of average prediction and the positional relationship between the reference samples and the current sample are shown in Figure 4.

Figure 4.

The process of average prediction and the positional relationship between the reference samples and the current sample.

The adjacent samples a and c are used as reference samples, and the prediction sample is the average of these two reference samples, (a + c)/2, where a is the left reference sample and c is the top reference sample of the current sample. Since the regions to be handled are smooth, the values of adjacent samples are expected to be close. In sample-based prediction, the samples at positions a and c are the closest reference samples available for the current sample. Therefore, the average of a and c is expected to be the best value for the prediction sample.
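A minimal sketch of the average predictor applied sample by sample in a lossless encoder, where reconstructed and original samples coincide, so the original neighbours can be used directly. The integer rounding `(a + c + 1) >> 1` and the one-sample reference border are our assumptions; the text only specifies the average of a and c, and the function names are hypothetical.

```python
from typing import List


def average_predict(a: int, c: int) -> int:
    """Average of the left (a) and top (c) neighbours, rounded half up (assumed)."""
    return (a + c + 1) >> 1


def average_residuals(padded: List[List[int]]) -> List[List[int]]:
    """Residuals of the interior samples of `padded`; its first row and first
    column act as out-block references (hypothetical one-sample border)."""
    return [
        [padded[row][col] - average_predict(padded[row][col - 1], padded[row - 1][col])
         for col in range(1, len(padded[0]))]
        for row in range(1, len(padded))
    ]


patch = [
    [100, 101, 100],
    [99, 100, 101],
    [101, 100, 100],
]
print(average_residuals(patch))  # [[0, 1], [-1, -1]]
```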

3.1.3. Directional Texture Regions: Angular Prediction

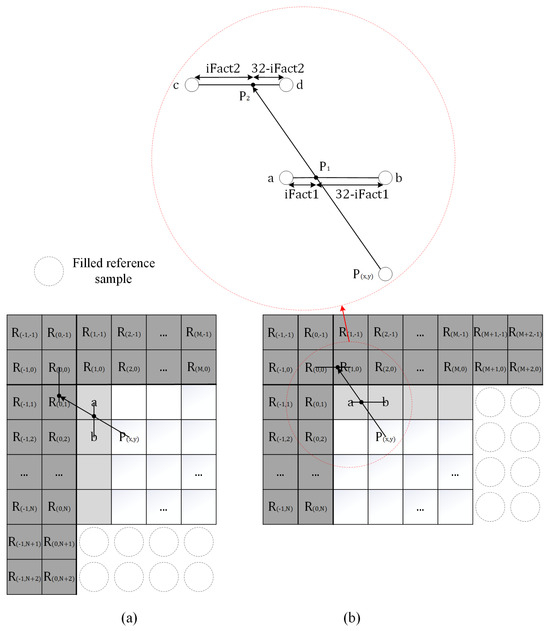

Video images contain a large amount of texture in different directions, and the values of samples along the same texture are typically close. In conventional VVC intra-prediction, angular modes are used to predict these directional texture regions. Since the spatial correlation between image samples increases as the distance between them decreases, reference [17] proposed SAP, which performs angular prediction from adjacent reference samples. SAP has been shown to bring significant performance gains for lossless coding. We introduce an additional adjacent reference line to extend and improve SAP. The process of the improved SAP and the positional relationship between the reference samples and the current sample are shown in Figure 5. The improved SAP projects the current sample onto two adjacent reference lines to obtain two projection points and generates the prediction sample from them. Moreover, because the reference lines are adjacent to the current sample, the projection points of adjacent angular modes on the same reference line lie very close together. Sample-based angular prediction therefore does not need as many prediction modes as block-based prediction. The improved SAP halves the number of VVC angular modes and retains only 33 of them. In addition, it adopts the same equidistant prediction method as SAP1.

Figure 5.

The process of improved SAP and the positional relationship between the reference samples and the current sample: (a) Prediction in the horizontal direction. (b) Prediction in the vertical direction.
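Locating the two projection points can be sketched with the angle convention VVC uses for block-based angular prediction, where the angle parameter is a displacement in 1/32-sample units per line. The near and far reference lines are assumed to lie 1 and 2 lines from the current sample, SAP1's equidistant adjustment is not modelled, and the function name is hypothetical.

```python
from typing import Tuple


def projection(angle: int, distance: int) -> Tuple[int, int]:
    """Project the current sample onto the reference line `distance` lines away.

    `angle` is a displacement in 1/32-sample units per line (VVC convention).
    Returns (integer sample offset, fractional weight in 0..31).
    """
    delta = distance * angle
    # >> and & give floor division and a non-negative remainder, also for negative angles.
    return delta >> 5, delta & 31


for angle in (14, 16):
    near = projection(angle, 1)  # nearer reference line (assumed distance 1)
    far = projection(angle, 2)   # farther reference line (assumed distance 2)
    print(angle, near, far)
# 14 (0, 14) (0, 28)
# 16 (0, 16) (1, 0)
```

For these two angles the near projection points differ by only 2/32 of a sample, which illustrates why halving the number of angular modes costs little in sample-based prediction.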

The improved SAP combines the prediction values of the two projection points according to a given weight allocation to generate the prediction sample. The projection point closer to the current sample is called the near projection point, and the one farther from the current sample is called the far projection point. The prediction value of each projection point is obtained by linear interpolation between the two reference samples closest to it.

Here, a and b are the two reference samples closest to the near projection point, and c and d are the two reference samples closest to the far projection point. The interpolation weight of the near projection point is determined by its distances to a and b: it is 0 when the point is located at a and 32 when it is located at b. Likewise, the interpolation weight of the far projection point is 0 when the point is located at c and 32 when it is located at d. The improved SAP has three types of weight allocation, named type 0, type 1 and type 2. Type 0 uses the prediction value of the near projection point directly as the prediction sample.

Type 0 is consistent with the conventional SAP. Type 1 adds half of the difference between the prediction values of the near and far projection points to the prediction value of the near projection point.

Image samples change continuously, so the difference between the current sample and the near projection value is correlated with the difference between the near and far projection values. Type 1 uses the latter difference to compensate the near projection value, which widens the range of textures that angular prediction can handle. Type 2 uses the average of the near and far projection values as the prediction sample.

If there are significant fluctuations between adjacent samples on the same texture, type 2 is expected to increase the accuracy of prediction.

The different weight allocation types of one prediction direction share the same prediction mode, and the encoder signals the selected type with additional information written into the bitstream. Because type 0 is used frequently, it is tested for all angular modes. Types 1 and 2 are used less often, so, to avoid a significant increase in coding complexity, they are tested only for specific angular modes.
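The three weight allocations can be sketched as follows. The two-tap interpolation with weights from 0 to 32 follows the description above, but the rounding (an offset of 16 before the 5-bit shift, floor halving in type 1, and an offset of 1 in the type 2 average) is our assumption modelled on VVC-style integer arithmetic, and the function names are hypothetical.

```python
def interpolate(left: int, right: int, weight: int) -> int:
    """Two-tap interpolation, weight in 0..32 (0 -> left sample, 32 -> right sample)."""
    return ((32 - weight) * left + weight * right + 16) >> 5


def improved_sap_predict(a: int, b: int, w_near: int,
                         c: int, d: int, w_far: int, pred_type: int) -> int:
    """Prediction sample for weight-allocation types 0, 1 and 2 (sketch)."""
    p_near = interpolate(a, b, w_near)  # near projection point from a and b
    p_far = interpolate(c, d, w_far)    # far projection point from c and d
    if pred_type == 0:                  # conventional SAP: near point only
        return p_near
    if pred_type == 1:                  # continue the near-minus-far trend by half
        return p_near + ((p_near - p_far) >> 1)
    if pred_type == 2:                  # average of the two projection points
        return (p_near + p_far + 1) >> 1
    raise ValueError("pred_type must be 0, 1 or 2")


for t in (0, 1, 2):
    print(t, improved_sap_predict(100, 100, 0, 90, 90, 0, t))
# 0 100
# 1 105
# 2 95
```

With a near value of 100 and a far value of 90, type 1 extrapolates the rising trend to 105, while type 2 smooths it to 95.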

3.2. Intra-Prediction Flowchart

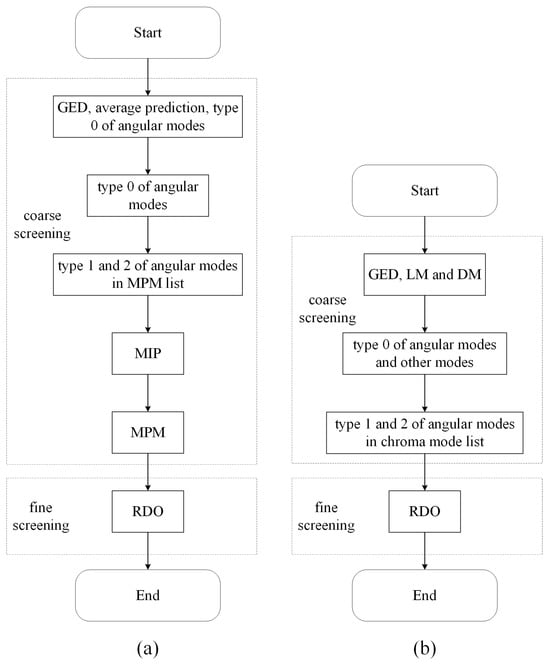

In SGAP coding, the PLANAR and DC modes are not retained. The GED mode is assigned mode index 0, the average prediction mode is assigned mode index 1, and the angular modes in SGAP are assigned mode indices 2–34. Figure 6 shows the intra-prediction flowchart of SGAP.

Figure 6.

The flowchart of the proposed SGAP in VVC lossless intra-coding: (a) Luma component. (b) Chroma component.
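The SGAP mode numbering can be written down as a small table. The sketch below also lists the modes whose type 0 prediction is evaluated in the first luma coarse-screening round (GED, average and the even angular modes); modes 2–34 contain exactly 17 even modes, which matches the count used in that step. The constant and function names are hypothetical.

```python
from typing import List

GED_MODE = 0
AVERAGE_MODE = 1
ANGULAR_MODES = list(range(2, 35))  # 33 angular modes, indices 2..34


def first_round_luma_modes() -> List[int]:
    """Modes whose type 0 prediction enters the first coarse-screening round."""
    even_angular = [mode for mode in ANGULAR_MODES if mode % 2 == 0]
    return [GED_MODE, AVERAGE_MODE] + even_angular


assert len(ANGULAR_MODES) == 33
assert len(first_round_luma_modes()) == 2 + 17
```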

The flowchart for the luma component is shown in Figure 6a. The optimal luma mode of the current Coding Unit (CU) is determined in two stages: coarse screening and fine screening. In the first round of coarse screening, the Sum of Absolute Transformed Differences (SATD) is calculated for the prediction results of the GED mode, the average prediction mode and type 0 of the 17 even angular modes, and a certain number of modes with the smallest SATD are placed in the mode candidate list. In the second round of coarse screening, the SATD is calculated for type 0 of the angular modes adjacent to the angular modes in the candidate list, and the candidate list is updated according to SATD. In the subsequent coarse screening steps, the SATD is calculated for types 1 and 2 of the angular modes in the Most Probable Modes (MPM) list, as well as for the modes in the Matrix-based Intra-Prediction (MIP) list and the MPM list, and the candidate list is again updated according to SATD. Fine screening is implemented by Rate-Distortion Optimization (RDO): the Rate-Distortion Cost (RD cost) of every mode in the candidate list is calculated, and the mode with the smallest RD cost is selected as the optimal luma mode.
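SATD is the sum of the absolute values of the Hadamard-transformed prediction error, a cheap cost estimate computed before full RDO. A minimal 4×4 version and a top-k selection for coarse screening are sketched below. VTM applies its own block sizes and normalization, so absolute values will differ, and the helper names are hypothetical.

```python
from typing import Dict, List

Block4 = List[List[int]]

# 4x4 Hadamard matrix; it is symmetric, so it is its own transpose.
H4 = [[1, 1, 1, 1],
      [1, -1, 1, -1],
      [1, 1, -1, -1],
      [1, -1, -1, 1]]


def satd4x4(original: Block4, prediction: Block4) -> int:
    """Unnormalized SATD: sum of |H * D * H^T| for the 4x4 difference D."""
    d = [[o - p for o, p in zip(ro, rp)] for ro, rp in zip(original, prediction)]
    hd = [[sum(H4[i][k] * d[k][j] for k in range(4)) for j in range(4)] for i in range(4)]
    t = [[sum(hd[i][k] * H4[j][k] for k in range(4)) for j in range(4)] for i in range(4)]
    return sum(abs(v) for row in t for v in row)


def coarse_screen(costs: Dict[int, int], keep: int) -> List[int]:
    """Keep the `keep` modes with the smallest SATD (the mode candidate list)."""
    return sorted(costs, key=lambda mode: costs[mode])[:keep]


print(coarse_screen({0: 40, 1: 10, 2: 25, 4: 5}, keep=2))  # [4, 1]
```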

The flowchart for the chroma component is shown in Figure 6b. The optimal chroma mode is also selected through coarse screening and fine screening. In coarse screening, the SATD is calculated for type 0 of the angular modes and for the other modes in the chroma mode list. Since the GED mode, the Linear Model (LM) mode and the Derived Mode (DM) must always be tested, their SATD values are set to 0. A certain number of modes with the smallest SATD are placed in the mode candidate list. Then, the SATD is calculated for types 1 and 2 of the angular modes in the candidate list, and the candidate list is updated. Finally, in the RDO step, the RD cost of every mode in the candidate list is calculated, and the mode with the smallest RD cost is selected as the optimal chroma mode.
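Setting the SATD of the mandatory chroma modes to 0 guarantees that they reach RDO, as long as the candidate list holds at least as many entries as there are mandatory modes. The sketch below assumes that, on a tie at 0, mandatory modes are ranked first; the mode labels and SATD values are hypothetical.

```python
from typing import Dict, List, Set


def chroma_candidates(satd: Dict[str, int], forced: Set[str], keep: int) -> List[str]:
    """Coarse screening for chroma: forced modes get SATD 0 so they always survive."""
    costs = {mode: (0 if mode in forced else cost) for mode, cost in satd.items()}
    # Sort by cost; on ties, forced modes (False < True) come first. sorted() is
    # stable, so otherwise ties keep the insertion order of `satd`.
    return sorted(costs, key=lambda mode: (costs[mode], mode not in forced))[:keep]


# Hypothetical SATD values; mode labels are illustrative only.
satd = {"GED": 50, "LM": 70, "DM": 90, "ANG_10": 20, "ANG_26": 60}
print(chroma_candidates(satd, {"GED", "LM", "DM"}, keep=4))
# ['GED', 'LM', 'DM', 'ANG_10']
```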

4. Results and Analysis

To evaluate the performance of SGAP, we implemented it on the VTM12.3 platform and tested 26 video sequences (six natural-scene classes and one screen-content class) in the 4:2:0 format specified by the VVC standard. The parameters of these sequences are listed in Table 1. The tests were run in the lossless All Intra (AI) configuration under the common test conditions [33]. Several existing lossless coding optimization methods were also tested on the VTM12.3 platform. In coding schemes that do not retain block-based intra-prediction, the multiple reference lines (MRL) tool is disabled; in coding schemes that retain block-based intra-prediction, and in the basic VVC coding used for comparison, the MRL tool is enabled. Apart from the MRL tool, all coding schemes use identical configurations. We compare and analyze the experimental results of the proposed SGAP and the other optimization methods.

Table 1.

The parameter information of VVC test sequences.

Table 2 shows the bit-rate differences of several coding schemes relative to basic VVC coding; negative numbers indicate a lower bit rate than basic VVC coding. BDPCM achieves the lowest average bit-rate saving because it applies sample-based angular prediction only in the horizontal and vertical directions, which limits its coding gain. SAP and SAP1 both replace all conventional angular predictions with sample-based angular prediction and achieve similar average bit-rate savings; owing to its equidistant prediction method, SAP1 saves slightly more than SAP. LIP-RMED and SAP-E introduce edge detection into coding and achieve higher coding gains than the schemes that apply sample-based prediction only in angular modes. Because SGAP predicts frequently occurring image regions accurately, it saves 7.31% of the bit rate on average in VVC lossless intra-coding, more than any of the other tested schemes. Moreover, SGAP provides the highest bit-rate saving for every video sequence except Campfire.

Table 2.

Experimental results of bit-rate differences (%) of optimization coding schemes compared to basic VVC coding.
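For reference, the bit-rate difference of a lossless coding scheme is simply the relative change in coded size against the anchor. The sketch below assumes that the average over sequences is an equal-weight mean of the per-sequence percentages; the numbers in the example are hypothetical, not data from Table 2.

```python
from typing import Sequence


def bitrate_difference(anchor_bits: float, test_bits: float) -> float:
    """Bit-rate difference in percent; negative values mean the test scheme saves bits."""
    return (test_bits - anchor_bits) / anchor_bits * 100.0


def average_difference(anchors: Sequence[float], tests: Sequence[float]) -> float:
    """Equal-weight average of per-sequence differences (assumed averaging rule)."""
    diffs = [bitrate_difference(a, t) for a, t in zip(anchors, tests)]
    return sum(diffs) / len(diffs)


# Hypothetical bit counts, not data from Table 2.
print(round(bitrate_difference(1000.0, 927.0), 2))  # -7.3
```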

Table 3 shows the average encoding and decoding times of several coding schemes relative to basic VVC coding. Each sequence is given equal weight when these averages are computed. SAP, SAP1, SAP-E and SGAP replace the conventional prediction modes with their own prediction modes in the intra-prediction step rather than adding extra mode tests, so their encoding times are close to that of basic VVC coding. BDPCM and LIP-RMED add tests for their prediction modes while retaining the tests of the conventional prediction modes, so their encoding times increase significantly compared to basic VVC coding. On the decoder side, sample-based intra-prediction improves prediction accuracy, reduces the energy of the prediction residuals and thus speeds up entropy decoding. Consequently, the decoding times of all tested schemes are lower than that of basic VVC coding, to varying degrees.

Table 3.

Experimental results of average encoding and decoding time (%) of optimization coding schemes compared to basic VVC coding.
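Giving each sequence equal weight means averaging per-sequence time ratios rather than dividing total times, so long sequences do not dominate the result. A minimal sketch under that reading (the arithmetic mean is our assumption; the timings are hypothetical):

```python
from typing import Sequence


def average_time_ratio(anchor_times: Sequence[float], test_times: Sequence[float]) -> float:
    """Average of per-sequence time ratios in percent, each sequence weighted equally."""
    ratios = [t / a for a, t in zip(anchor_times, test_times)]
    return 100.0 * sum(ratios) / len(ratios)


# Hypothetical timings in seconds: one long and one short sequence.
anchor = [1000.0, 10.0]
test = [1100.0, 10.0]
print(average_time_ratio(anchor, test))  # equal weight per sequence
print(100.0 * sum(test) / sum(anchor))   # total-time ratio, dominated by the long sequence
```

Here the equal-weight average is about 105%, whereas the total-time ratio is about 110% because the long sequence dominates it.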

Compared with several existing lossless coding optimization methods, SGAP provides a clearly larger coding gain, while its encoding time remains close to that of basic VVC lossless intra-coding and of the other schemes with short encoding times. SGAP thus brings a significant coding gain at the cost of only a small increase in encoding time, which gives it high practical value.

5. Conclusions

Lossless coding has broad application prospects in fields that require high video quality. However, the low compression efficiency of current VVC lossless coding greatly limits its practical application. To improve the coding performance of VVC lossless coding, this paper analyzes the characteristics of several types of frequently occurring image regions and proposes the SGAP method within the framework of sample-based prediction. In lossless intra-coding, SGAP accurately predicts edge regions, smooth regions with little noise and directional texture regions, and provides a significant coding gain. Experimental results show that SGAP saves 7.31% of the bit rate on average in VVC lossless intra-coding, at the cost of a 5.4% increase in encoding time. Compared with existing lossless coding optimization methods, SGAP offers a significant advantage in coding performance.

Author Contributions

Conceptualization, G.C. and M.L.; methodology, G.C. and M.L.; software, G.C.; validation, G.C. and M.L.; formal analysis, G.C. and M.L.; investigation, G.C.; resources, G.C.; data curation, G.C.; writing—original draft preparation, G.C.; writing—review and editing, G.C. and M.L.; visualization, G.C.; supervision, M.L.; project administration, M.L.; funding acquisition, M.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Ministry of Science and Technology of China through the National Key Research and Development Program of China, under Grant 2019YFB2204500.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available in the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Bross, B.; Wang, Y.-K.; Ye, Y.; Liu, S.; Chen, J.; Sullivan, G.J.; Ohm, J.-R. Overview of the versatile video coding (VVC) standard and its applications. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 3736–3764. [Google Scholar] [CrossRef]

- Sullivan, G.J.; Ohm, J.-R.; Han, W.-J.; Wiegand, T. Overview of the high efficiency video coding (HEVC) standard. IEEE Trans. Circuits Syst. Video Technol. 2012, 22, 1649–1668. [Google Scholar] [CrossRef]

- Wiegand, T.; Sullivan, G.J.; Bjontegaard, G.; Luthra, A. Overview of the H.264/AVC video coding standard. IEEE Trans. Circuits Syst. Video Technol. 2003, 13, 560–576. [Google Scholar] [CrossRef]

- Unno, K.; Kameda, Y.; Kita, Y.; Matsuda, I.; Itoh, S.; Kawamura, K. Lossless video coding based on probability model optimization with improved adaptive prediction. In Proceedings of the 2021 IEEE International Conference on Image Processing (ICIP), Anchorage, AK, USA, 19–22 September 2021; pp. 2044–2048. [Google Scholar]

- Zhao, L.; An, J.; Ma, S.; Zhao, D.; Fan, X. Improved intra transform skip mode in HEVC. In Proceedings of the 2013 IEEE International Conference on Image Processing (ICIP), Melbourne, Australia, 15–18 September 2013; pp. 2010–2014. [Google Scholar]

- Abdoli, M.; Henry, F.; Brault, P.; Dufaux, F.; Duhamel, P.; Philippe, P. Intra block-DPCM with layer separation of screen content in VVC. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 3162–3166. [Google Scholar]

- Lee, Y.L.; Han, K.H.; Lim, S.C. Lossless intra coding for improved 4: 4: 4 coding in H. 264/MPEG-4 AVC. Joint Video Team (JVT). IEEE Trans. Image Process. 2005. [Google Scholar]

- Lee, Y.-L.; Han, K.-H.; Sullivan, G.J. Improved lossless intra coding for H.264/MPEG-4 AVC. IEEE Trans. Image Process. 2006, 15, 2610–2615. [Google Scholar] [CrossRef] [PubMed]

- Song, L.; Luo, Z.; Xiong, C. Improving lossless intra coding of H.264/AVC by pixel-wise spatial interleave prediction. IEEE Trans. Circuits Syst. Video Technol. 2011, 21, 1924–1928. [Google Scholar] [CrossRef]

- Wang, L.-L.; Siu, W.-C. Improved lossless coding algorithm in H.264/AVC based on hierarchical intra prediction. In Proceedings of the 2011 18th IEEE International Conference on Image Processing (ICIP), Brussels, Belgium, 11–14 September 2011; pp. 2009–2012. [Google Scholar]

- Lee, S.; Kim, I.K.; Kim, C. RCE2: Test 1 Residual DPCM for HEVC lossless Coding; Doc. JCTVC-M0079; Joint Collaborative Team on Video Coding (JCT-VC): Geneva, Switzerland, 2013. [Google Scholar]

- Xu, J.; Joshi, R.; Cohen, R.A. Overview of the emerging HEVC screen content coding extension. IEEE Trans. Circuits Syst. Video Technol. 2016, 26, 50–62. [Google Scholar] [CrossRef]

- Hong, S.-W.; Kwak, J.-H.; Lee, Y.-L. Cross residual transform for lossless intra-coding for HEVC. Signal Process. Image Commun. 2013, 28, 1335–1341. [Google Scholar] [CrossRef]

- Jeon, G.; Kim, K.; Jeong, J. Improved residual DPCM for HEVC lossless coding. In Proceedings of the 2014 27th SIBGRAPI Conference on Graphics, Patterns and Images, Rio de Janeiro, Brazil, 27–30 August 2014; pp. 95–102. [Google Scholar]

- Kim, K.; Jeon, G.; Jeong, J. Improvement of implicit residual DPCM for HEVC. In Proceedings of the 2014 Tenth International Conference on Signal-Image Technology and Internet-Based Systems, Marrakech, Morocco, 23–27 November 2014; pp. 652–658. [Google Scholar]

- Nguyen, T.; Xu, X.; Henry, F.; Liao, R.L.; Sarwer, M.G.; Karczewicz, M.; Chao, Y.H.; Xu, J.; Liu, S.; Marpe, D.; et al. Overview of the screen content support in VVC: Applications, coding tools, and performance. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 3801–3817. [Google Scholar] [CrossRef]

- Zhou, M.; Gao, W.; Jiang, M.; Yu, H. HEVC lossless coding and improvements. IEEE Trans. Circuits Syst. Video Technol. 2012, 22, 1839–1843. [Google Scholar] [CrossRef]

- Zhou, M.; Budagavi, M. RCE2: Experimental Results on Test 3 and Test 4; Doc. JCTVC-M0056; Joint Collaborative Team on Video Coding (JCT-VC): Geneva, Switzerland, 2013.

- Sanchez, V.; Bartrina-Rapesta, J. Lossless compression of medical images based on HEVC intra coding. In Proceedings of the 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014; pp. 6622–6626.

- Sanchez, V.; Aulí-Llinàs, F.; Bartrina-Rapesta, J.; Serra-Sagristà, J. HEVC-based lossless compression of whole slide pathology images. In Proceedings of the 2014 IEEE Global Conference on Signal and Information Processing (GlobalSIP), Atlanta, GA, USA, 3–5 December 2014; pp. 297–301.

- Sanchez, V. Lossless screen content coding in HEVC based on sample-wise median and edge prediction. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015; pp. 4604–4608.

- Sanchez, V.; Aulí-Llinàs, F.; Serra-Sagristà, J. Piecewise mapping in HEVC lossless intra-prediction coding. IEEE Trans. Image Process. 2016, 25, 4004–4017.

- Weinberger, M.J.; Seroussi, G.; Sapiro, G. The LOCO-I lossless image compression algorithm: Principles and standardization into JPEG-LS. IEEE Trans. Image Process. 2000, 9, 1309–1324.

- Sanchez, V. Sample-based edge prediction based on gradients for lossless screen content coding in HEVC. In Proceedings of the 2015 Picture Coding Symposium (PCS), Cairns, Australia, 31 May–3 June 2015; pp. 134–138.

- Sanchez, V.; Hernandez-Cabronero, M.; Aulí-Llinàs, F.; Serra-Sagristà, J. Fast lossless compression of whole slide pathology images using HEVC intra-prediction. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 1456–1460.

- Wige, E.; Yammine, G.; Amon, P.; Hutter, A.; Kaup, A. Pixel-based averaging predictor for HEVC lossless coding. In Proceedings of the 2013 IEEE International Conference on Image Processing (ICIP), Melbourne, VIC, Australia, 15–18 September 2013; pp. 1806–1810.

- Wige, E.; Yammine, G.; Amon, P.; Hutter, A.; Kaup, A. Sample-based weighted prediction with directional template matching for HEVC lossless coding. In Proceedings of the 2013 Picture Coding Symposium (PCS), San Jose, CA, USA, 8–11 December 2013; pp. 305–308.

- Sanchez, V.; Aulí-Llinàs, F.; Serra-Sagristà, J. DPCM-based edge prediction for lossless screen content coding in HEVC. IEEE J. Emerg. Sel. Top. Circuits Syst. 2016, 6, 497–507.

- Weng, X.; Lin, M.; Lin, Q.; Chen, G. L-shape-based iterative prediction and residual median edge detection (LIP-RMED) algorithm for VVC intra-frame lossless compression. IEEE Signal Process. Lett. 2022, 29, 1227–1231.

- Chen, F.; Zhang, J.; Li, H. Hybrid transform for HEVC-based lossless coding. In Proceedings of the 2014 IEEE International Symposium on Circuits and Systems (ISCAS), Melbourne, Australia, 1–5 June 2014; pp. 550–553.

- Cai, X.; Gu, Q. Improved HEVC lossless compression using two-stage coding with sub-frame level optimal quantization values. In Proceedings of the 2014 IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014; pp. 5651–5655.

- Kamisli, F. Lossless image and intra-frame compression with integer-to-integer DST. IEEE Trans. Circuits Syst. Video Technol. 2019, 29, 502–516.

- Bossen, F.; Boyce, J.; Li, X.; Seregin, V.; Sühring, K. JVET Common Test Conditions and Software Reference Configurations for SDR Video; Doc. JVET-N1010; Joint Video Experts Team (JVET): Geneva, Switzerland, 2019.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).