Abstract

Accurately classifying degraded images is challenging because it relies on domain expertise to devise effective image processing techniques for different levels of degradation. Genetic Programming (GP) has proven to be an effective approach to image classification. However, the program structures designed in current GP-based methods are not effective at classifying images with quality degradation. During the iterative process of GP algorithms, high similarity between individuals often causes convergence to local optima, hindering the discovery of the best solutions. Moreover, varying degrees of image quality degradation often lead to overfitting in the solutions derived by GP. Therefore, this research introduces a new program structure, distinct from the traditional one, that automates the creation of new features by transmitting information learned across multiple nodes, thus improving the ability of GP individuals to construct discriminative features. An accompanying evolution strategy addresses the high similarity among GP individuals by retaining promising ones, thereby helping the algorithm develop more effective GP solutions. To counter potential overfitting of the best GP individual, a multi-generational individual ensemble strategy is proposed to construct an ensemble GP individual with enhanced generalization capability. The new method is evaluated on six different types of datasets in original, blurry, low-contrast, noisy, and occlusion scenarios, and it is compared with a range of effective methods. The results show that the new method achieves better classification performance on degraded images than the comparison methods.

1. Introduction

Image classification, one of the core tasks in computer vision, aims to analyze image content and assign correct category labels. It has been widely applied in fields such as image retrieval, face recognition, autonomous driving, and medical image analysis [1,2,3,4]. Adequate, high-quality training samples allow the key features needed for classification to be captured precisely. In the real world, however, many acquired images are of low quality and often contain considerable blur and noise. Analyzing such degraded images and classifying them accurately is therefore a more challenging task.

Extracting effective features is crucial for image classification. Classic manual feature extraction methods such as Local Binary Patterns (LBP) [5], Scale-Invariant Feature Transform (SIFT) [6], and Histogram of Oriented Gradients (HOG) [7] aim to capture key image information and thereby achieve efficient classification. However, although these methods perform well on specific datasets, their classification performance is not guaranteed on unfamiliar types of classification tasks.

Compared with manual feature extraction methods, feature learning approaches are usually more effective for image classification because they automatically extract distinctive features from raw images. Convolutional Neural Networks (CNNs), currently the mainstream feature learning method, perform outstandingly in many tasks. However, their performance depends on expert-designed model architectures, and understanding and interpreting their decision-making process remains a challenge.

As a type of evolutionary algorithm, Genetic Programming (GP) [8] has attracted widespread attention due to its strong learning performance and good interpretability. It has been applied to various tasks, such as workshop scheduling [9], symbolic regression [10], and image classification [11]. GP-based image classification methods generally use a tree representation. In tree-structured GP, the original image and the parameters required by internal nodes form the leaf nodes, or terminals, which serve as inputs to the program. Image filters, feature descriptors, and other image processing operators serve as the internal nodes, or functions [12]. Under this definition, each individual in the GP population can be viewed as a candidate solution to the classification task.

Image classification methods based on GP have made significant progress. Some approaches directly use raw images as inputs to the GP program. A common strategy among them is for the GP program to output learned high-level features, which are then fed into a classification algorithm [13,14]. Another approach integrates a variety of classifiers into the internal nodes of the GP program, allowing GP to automatically choose the most suitable classifier and output the predicted class labels [15].

However, the aforementioned GP methods mainly handle a limited range of image types with relatively high quality and have not thoroughly explored degraded image classification. Although EFLGP [16] was proposed for degraded image tasks, limitations in its program structure and internal node design may lead to poor classification performance. Furthermore, these GP methods share a common issue: in the middle and later stages of evolution, the individuals in the population become overly homogeneous, which can cause the fitness of the best GP individual/program to stagnate. In addition, existing research typically selects the single best individual as the solution to the classification task, which easily leads to overfitting.

To confront the challenges previously mentioned, this research introduces a new program structure based on information transmission and develops a novel function set and terminal set for it. The design of the structure enables nodes in specific layers to transmit the key information they learn to nodes in subsequent layers. This promotes the construction of more effective new features by GP individuals, thereby enhancing their performance as solutions for classification tasks. Furthermore, this research devises an accompanying evolution strategy that enhances population diversity by replacing redundant individuals after stagnation, thereby facilitating the search for superior GP individuals. Moreover, the proposed multi-generational individual ensemble strategy aims to combine several efficient GP individuals to construct an ensemble of GP individuals with enhanced generalization capabilities, serving as an effective solution for classification tasks. The proposed new method is abbreviated as ITACIE-GP.

The contributions of this paper lie in the following aspects.

- (1) Existing GP methods are limited in program structure and node design, which restricts the performance of GP individuals. To address this, this study develops a new program structure based on information transmission, which allows nodes in specific layers to transmit effective information to subsequent layers. This design helps GP individuals construct distinctive features, thereby enhancing their performance as solutions for classification tasks.

- (2) To address the stagnation in fitness of the best GP individual during the iterative process, this study proposes an accompanying evolution strategy. When the fitness of the best GP individual is detected to stagnate, the strategy guides the population to explore toward better GP individuals.

- (3) To address the potential overfitting that arises when a single GP individual is used as the solution, this study proposes a multi-generational individual ensemble strategy. By combining several efficient GP individuals, this strategy constructs an ensemble GP individual with stronger generalization capability, effectively reducing overfitting.

- (4) ITACIE-GP is an effective method for degraded image classification tasks. Its performance is evaluated on six different types of datasets in original, blurry, low-contrast, noisy, and occlusion scenarios and compared with several benchmark methods. Moreover, detailed program examples are analyzed to explain the reasons behind the method's high performance.

2. Related Work

2.1. GP and Strongly Typed GP

As an evolutionary algorithm, GP models candidate solutions to a problem as individuals in a population. Each individual is assigned a fitness score determined by a predefined fitness function. The population then evolves over several generations, during which the fitness scores improve. At the end of the run, the individual with the highest fitness score is selected as the best solution to the problem. In image classification, tree-based Strongly Typed Genetic Programming (STGP) [17] is widely used because it specifies the input and output types of internal nodes and the output types of leaf nodes. These type constraints restrict how the program tree is constructed, so that only nodes with matching types can be connected. For example, image processing operations such as filtering or feature extraction require an input of a specific type, such as an image, and produce an output of a specific type, such as an image or a feature value [12]. STGP therefore ensures that generated programs strictly follow the preset steps and requirements for processing images and extracting features.
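The type constraint that STGP enforces can be illustrated with a small checker. This is a minimal sketch, not the implementation used by any particular GP library: each primitive declares its argument types and return type, and a tree is valid only if every child's return type matches the argument slot it fills. The primitive names (`Gau`, `HOG`, `SVM`) and the type names are hypothetical placeholders.

```python
from dataclasses import dataclass, field

@dataclass(frozen=True)
class Primitive:
    name: str
    arg_types: tuple  # types of the inputs this node accepts
    ret_type: str     # type of the value this node produces

@dataclass
class Node:
    prim: Primitive
    children: list = field(default_factory=list)

def is_well_typed(node, expected):
    """Check that `node` returns `expected` and every child matches its argument slot."""
    if node.prim.ret_type != expected or len(node.children) != len(node.prim.arg_types):
        return False
    return all(is_well_typed(child, t) for child, t in zip(node.children, node.prim.arg_types))

# Hypothetical primitives, for illustration only.
IMAGE = Primitive("Image", (), "Image")
GAUSS = Primitive("Gau", ("Image",), "Image")
HOG = Primitive("HOG", ("Image",), "Vector")
SVM = Primitive("SVM", ("Vector",), "Label")

good = Node(SVM, [Node(HOG, [Node(GAUSS, [Node(IMAGE)])])])
bad = Node(SVM, [Node(GAUSS, [Node(IMAGE)])])  # filtered image fed straight into the classifier
print(is_well_typed(good, "Label"), is_well_typed(bad, "Label"))  # True False
```

In STGP systems the same constraint is typically applied during tree generation, crossover, and mutation, so ill-typed trees like `bad` are never produced in the first place.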

2.2. Degraded Image

Degraded images are typically those affected by factors such as low contrast, occlusion, noise, or blur [16,18]. The low quality of these images may arise for a variety of reasons, including suboptimal shooting conditions, the limitations of the equipment’s performance, or interference from external environmental factors. Currently, researchers have proposed various methods to deal with degraded images. For example, some studies use image enhancement techniques to improve image quality, while others focus on reconstructing degraded images. These representative research works are displayed in Table 1.

Table 1.

Methods for processing degraded images.

However, the methods above all rely on problem-specific domain knowledge to design new solutions for different classification problems. Moreover, in real-world settings, image quality is often degraded by several adverse factors at once rather than by a single one. The field of degraded image classification therefore needs a new method that does not rely heavily on domain knowledge, adapts to a variety of task requirements, and effectively handles images affected by multiple adverse factors.

2.3. Image Classification Methods

In recent years, to more effectively carry out image classification, researchers have proposed various technical solutions and strategies. The existing image classification methods can be summarized into the following categories: manual feature-based image classification methods, CNN-based image classification methods, and GP-based image classification methods.

2.3.1. Manual Feature-Based Image Classification Methods

Image classification methods based on manual feature extraction are designed by experts based on an understanding of images and domain knowledge. In specific application scenarios, these methods often demonstrate good stability and effectiveness. Table 2 displays image classification methods based on manual features.

Table 2.

Manual feature-based image classification methods.

Although image classification methods based on manual features can perform well on specific tasks and are easy to understand, their effectiveness may drop on unfamiliar or complex classification tasks.

2.3.2. CNN-Based Image Classification Methods

CNNs are capable of automatically learning and extracting features, and they usually achieve better performance for large-scale and complex tasks. Table 3 displays image classification methods based on CNNs.

Table 3.

CNN-based image classification methods.

CNN-based methods have achieved significant success in image classification tasks. However, these methods typically require substantial computational resources to train parameters and often rely on expert-designed efficient architectures.

2.3.3. GP-Based Image Classification Methods

Tree-based GP has been successfully and widely applied in image classification. This method predefines certain operators and, during runtime, combines these operators within the problem space through an evolutionary algorithm to learn the best solution or program. This means that GP does not require extensive domain knowledge to adjust the model or tune parameters to obtain solutions for classification tasks, and these solutions often have a high level of interpretability.

In current research on GP-based image classification, one line of work uses GP to construct classifiers for binary classification tasks. These methods take images directly as inputs to the GP program, which performs feature extraction and feature construction simultaneously. The GP program ultimately outputs a single high-level feature on which the classification decision is made. Table 4 displays the GP-based binary image classification methods.
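The idea of a GP program that maps an image to a single value for a binary decision can be sketched as follows. This is a minimal illustration under assumptions: trees are nested tuples, the operator set (`mean`, `std`, `add`, `sub`, `const`) is hypothetical, and the common convention of assigning one class to non-negative outputs and the other to negative outputs is assumed rather than taken from the methods in Table 4.

```python
import statistics

def evaluate(tree, image):
    """Recursively evaluate a nested-tuple GP tree on a grayscale image (list of rows)."""
    op = tree[0]
    if op == "image":
        return image
    if op == "const":
        return tree[1]
    if op in ("mean", "std"):
        pixels = [p for row in evaluate(tree[1], image) for p in row]
        return statistics.fmean(pixels) if op == "mean" else statistics.pstdev(pixels)
    a, b = evaluate(tree[1], image), evaluate(tree[2], image)
    if op == "add":
        return a + b
    if op == "sub":
        return a - b
    raise ValueError(f"unknown operator {op!r}")

def classify(tree, image):
    """Binary decision: class 1 if the program output is non-negative, else class 0."""
    return 1 if evaluate(tree, image) >= 0 else 0

# Hypothetical evolved program: "is the mean intensity above 0.5?"
program = ("sub", ("mean", ("image",)), ("const", 0.5))
print(classify(program, [[0.9, 0.8], [0.7, 0.9]]), classify(program, [[0.1, 0.2], [0.0, 0.3]]))  # 1 0
```

In an actual GP run, the tree would be evolved rather than written by hand, and its fitness would be the accuracy of `classify` on the training images.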

Table 4.

GP-based binary image classification methods.

These methods excel at binary image classification, but their performance on multiclass image classification has not yet been studied extensively. Another type of GP method targets multiclass classification: it processes the original images and either outputs predicted class labels directly at the root node or extracts high-level features at the root node, which are then fed into a fixed, predefined classification algorithm. Table 5 displays the GP-based multiclass image classification methods.

Table 5.

GP-based multiclass image classification methods.

Furthermore, some studies focus on improving genetic operations with the goal of obtaining GP individuals with excellent classification performance during the iterative evolution process. Table 6 displays the methods of improvement on GP genetic operators.

Table 6.

Improvement methods for GP genetic operators.

GP-based image classification methods have achieved notable progress. As shown in Table 4 and Table 5, some studies have achieved success in limited types of image classification tasks by designing new program structures and function sets. Others have proposed new GP methods for classifying degraded images affected by blurriness, low contrast, or noise. However, the designed program structures of these methods are not sufficiently effective, limiting the classification performance of the algorithm. As shown in Table 6, some studies have focused on improvements in genetic operators, with the aim of obtaining GP individuals with excellent classification performance. Nonetheless, these enhancements are mainly limited to a few datasets and may still face fitness stagnation in broader or more complex scenarios due to the high similarity among individuals. In cases with fewer image samples and adverse factors like blurriness and noise, the best individuals or solutions derived by GP algorithms are prone to overfitting, a problem that has not received adequate attention in current research.

Therefore, this study proposes a new GP-based method for degraded image classification. The method employs a new program structure with an information transmission mechanism that passes the information acquired by nodes across layers, thereby evolving efficient individuals that construct discriminative features. In addition, the proposed accompanying evolution strategy maintains a set of accompanying GP individuals during evolution and guides the algorithm toward more effective GP individuals when the fitness of the best individual stagnates. Finally, this paper introduces a multi-generational individual ensemble strategy, which selects efficient individuals based on the frequency with which different functions appear in each individual and integrates them into a single solution for the classification task.

3. The Proposed Approach

This section introduces the proposed ITACIE-GP method in detail, covering the algorithmic framework, the program structure based on information transmission, the new function set and terminal set, the accompanying evolution strategy, and the multi-generational individual ensemble strategy.

3.1. Algorithmic Framework

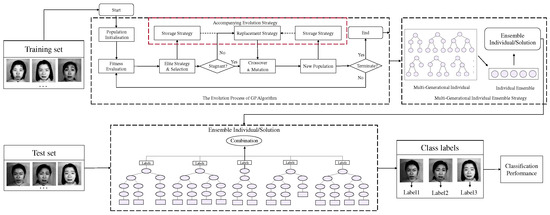

To address image classification effectively, this study develops the ITACIE-GP method, which automates the construction of efficient image classification solutions using a predefined program structure, function set, and terminal set. Figure 1 displays the training and testing processes of ITACIE-GP. ITACIE-GP takes the training dataset as input and initializes the population based on the newly defined function set and terminal set. Each individual/solution in the population is then evaluated with a fitness function. In each generation, an elitism strategy copies several of the best individuals from the current population directly to the next generation. The algorithm uses tournament selection to choose individuals with superior fitness values and, following the storage strategy of the accompanying evolution strategy, stores the first type of accompanying individuals. During the iterative process, if the fitness of the best individual stagnates for a number of generations that reaches a predetermined threshold, the replacement strategy of the accompanying evolution strategy is activated. This breaks the fitness stagnation and helps the algorithm search for better individuals. The algorithm then applies genetic operators (i.e., crossover and mutation) to generate a new population. If the population has been updated through the accompanying evolution strategy, the algorithm stores the successfully updated individuals as the second type of accompanying individuals. This process continues until the predefined termination conditions are met. Finally, a set of GP individuals is selected and ensembled according to the multi-generational individual ensemble strategy, and the ensembled individual/solution is returned.

Figure 1.

The training process and testing process of the proposed ITACIE-GP method.

To validate the performance of the ensembled individual/solution obtained by the ITACIE-GP method, a test set is used as the input for the ensembled individual/solution. Then, the predicted class labels of the test set are output, and finally, the performance of this solution is evaluated based on the actual labels of the test set.
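The control flow of the training loop in Figure 1 can be sketched on a toy problem. This is a minimal sketch under several assumptions, not the ITACIE-GP implementation: individuals are plain numbers rather than GP trees, the genetic operators are placeholders supplied by the caller, "redundant" individuals are taken to be duplicates among the selected parents, and the archive keeps the two fittest non-selected individuals per generation. The second type of accompanying individuals and the final ensemble step are omitted.

```python
import random

def evolve(pop, fitness, crossover, mutate, generations=30, elite=2,
           tour_size=3, patience=3, archive_size=10, seed=0):
    """Toy loop: elitism, tournament selection, stagnation-triggered replacement."""
    rng = random.Random(seed)
    archive, best_history, stall = [], [], 0
    for _ in range(generations):
        ranked = sorted(pop, key=fitness, reverse=True)
        best_history.append(fitness(ranked[0]))
        # Count consecutive generations in which the best fitness did not improve.
        improved = len(best_history) == 1 or best_history[-1] > best_history[-2]
        stall = 0 if improved else stall + 1
        elites = ranked[:elite]  # copied unchanged into the next generation
        parents = [max(rng.sample(pop, tour_size), key=fitness)
                   for _ in range(len(pop) - elite)]
        # Storage (first type): keep the fittest individuals that were not selected.
        eliminated = [ind for ind in ranked if ind not in parents]
        archive = (archive + eliminated[:2])[-archive_size:]
        # Replacement: after `patience` stalled generations, swap duplicate parents out.
        if stall >= patience and archive:
            seen, updated = set(), []
            for ind in parents:
                if ind in seen and archive:
                    updated.append(archive.pop(0))
                else:
                    seen.add(ind)
                    updated.append(ind)
            parents, stall = updated, 0
        # Genetic operators: pairwise crossover followed by mutation.
        offspring = [mutate(crossover(parents[i], parents[(i + 1) % len(parents)], rng), rng)
                     for i in range(len(parents))]
        pop = elites + offspring
    return max(pop, key=fitness), best_history

# Usage on a toy objective: maximize -(x - 3)^2.
rng = random.Random(1)
start = [rng.uniform(-10, 10) for _ in range(20)]
best, history = evolve(
    start,
    fitness=lambda x: -(x - 3.0) ** 2,
    crossover=lambda a, b, r: (a + b) / 2,
    mutate=lambda x, r: x + r.gauss(0, 0.3),
)
```

Because the elites are carried over unchanged, the best fitness in `history` never decreases; the replacement step only changes which parents feed the genetic operators.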

3.2. New Program Structure Based on Information Transmission

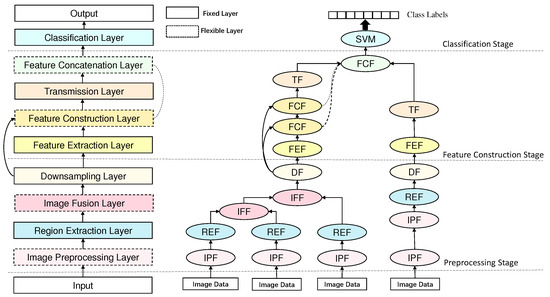

To enhance the performance of GP programs in classification tasks, this study proposes a program structure based on information transmission. This structure incorporates 11 distinct functional layers, of which 7 are designated as fixed and 4 as flexible. The fixed layers ensure the consistent inclusion of predefined functions within these layers in the GP program. In contrast, the flexible layers allow for the functions from these layers to appear in the generated GP program zero times, once, or multiple times.

By introducing the concept of information transmission, the GP program selects specific functional layers and selectively passes the information obtained from these layers to multiple subsequent layers.

Figure 2 displays this program structure and provides an example of a potential GP program. In this figure, layers connected by solid lines signify that information transmission necessarily occurs at these layers. Conversely, layers connected by dashed lines indicate that information transmission happens only in specific scenarios. Each node in the GP program example represents a function included in various layers.

Figure 2.

The proposed ITACIE-GP program structure (IPF: Image Preprocessing Layer Function; REF: Region Extraction Layer Function; IFF: Image Fusion Layer Function; DF: Downsampling Layer Function; FEF: Feature Extraction Layer Function; FCF: Feature Construction Layer Function; TF: Transmission Layer Function; FCF: Feature Concatenation Layer Function).

The image preprocessing layer preliminarily processes the raw image data, including noise reduction and smoothing. The region extraction layer extracts key information-containing image regions from the original or filtered image data. The image fusion layer performs pixel-level fusion of different image regions to obtain a new region rich in discriminative content.

The downsampling (dimension reduction) layer downsamples image regions to reduce the dimensionality of features, producing new, smaller images.

Notably, following the concept of information transmission, this layer also preserves the original image region and passes it to subsequent layers as needed.

The feature extraction layer extracts features from the image region. The feature construction layer constructs new features from multiple types of features. The transmission layer decides, based on the type of its input, whether to pass the effective information generated by the feature construction layer to subsequent layers. The feature concatenation layer combines its input data into a feature vector. The classification layer applies a fixed classification algorithm to the input features and outputs the predicted class labels.

3.2.1. New Function and Terminal Set

To meet the functional requirements of each layer in the new GP program structure, this study designs a corresponding terminal set and function set for each layer. The function set includes the image processing functions needed to construct GP programs.

The terminal set includes original image data and parameters for image processing functions, with a detailed list provided in Table 7. Notably, the terminal m represents three different manual feature extraction methods. When the value of m is 0, the SIFT method is used for feature extraction, and the input image region is treated as a key point [37]. When the value of m is 1, the HOG method is employed to extract features, calculating the average of a 10 × 10 grid from the image area with a step size of 10 [7]. When the value of m is 2, the uniform LBP method is selected to extract histogram features, with a radius of 1.5 and a neighbor count of 8 [5].
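The LBP option selected when m = 2 can be sketched in pure Python. This is a minimal sketch that assumes the rotation-invariant uniform variant with P + 2 histogram bins (the variant scikit-image calls `method='uniform'`). The text specifies only "uniform LBP" with radius 1.5 and 8 neighbors, so the exact variant, the bilinear sampling of circular neighbors, and the histogram normalization are assumptions.

```python
import math

def bilinear(img, y, x):
    """Bilinearly interpolate a list-of-rows image at real-valued coordinates (y, x)."""
    y0, x0 = int(math.floor(y)), int(math.floor(x))
    y1, x1 = min(y0 + 1, len(img) - 1), min(x0 + 1, len(img[0]) - 1)
    dy, dx = y - y0, x - x0
    top = img[y0][x0] * (1 - dx) + img[y0][x1] * dx
    bottom = img[y1][x0] * (1 - dx) + img[y1][x1] * dx
    return top * (1 - dy) + bottom * dy

def uniform_lbp_histogram(img, radius=1.5, points=8):
    """Normalized rotation-invariant uniform LBP histogram with points + 2 bins."""
    margin = math.ceil(radius)  # keep every circular neighbour inside the image
    hist = [0] * (points + 2)
    for cy in range(margin, len(img) - margin):
        for cx in range(margin, len(img[0]) - margin):
            center = img[cy][cx]
            bits = []
            for k in range(points):
                angle = 2 * math.pi * k / points
                value = bilinear(img, cy - radius * math.sin(angle), cx + radius * math.cos(angle))
                # Small tolerance so interpolation round-off does not flip equal neighbours to 0.
                bits.append(1 if value - center >= -1e-9 else 0)
            transitions = sum(bits[k] != bits[(k + 1) % points] for k in range(points))
            # Uniform patterns (<= 2 transitions) are labelled by their number of 1-bits;
            # all non-uniform patterns share the last bin.
            label = sum(bits) if transitions <= 2 else points + 1
            hist[label] += 1
    total = sum(hist)
    return [count / total for count in hist] if total else hist

flat = [[0.5] * 6 for _ in range(6)]
print(uniform_lbp_histogram(flat))  # all mass in bin 8: every neighbour equals the centre
```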

Table 7.

Terminal set.

The parameter values required by these functions are selected automatically from predefined ranges during evolution. The new method thus evolves GP programs with a multi-layer structure that automatically select the appropriate functions and parameters for each layer, tailored to different image classification tasks.

Table 8 lists the function set used in the image preprocessing stage, from the image preprocessing layer to the downsampling (pooling) layer.

Table 8.

Image preprocessing stage function set.

Table 9 displays the function set used from the feature extraction layer to the classification layer in the feature construction and classification stages.

Table 9.

Feature construction and classification stage function set.

Functions of the image preprocessing layer: The image preprocessing layer integrates a variety of image filtering and enhancement functions that improve image quality. Each function takes either a raw image or a previously processed image as input, together with any required parameters, and outputs a processed image of the same size as the input.
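The same-size contract of the preprocessing functions can be illustrated with a mean filter. This is a hypothetical example of one such filter, not necessarily a member of Table 8: replicating edge pixels at the border keeps the output dimensions equal to the input dimensions, so any preprocessing function can feed another one.

```python
def mean_filter(image, size=3):
    """Mean filter with edge replication; output has the same height and width as the input."""
    h, w, r = len(image), len(image[0]), size // 2
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            # Clamp neighbour coordinates to the image so border pixels reuse edge values.
            window = [image[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                      for dy in range(-r, r + 1) for dx in range(-r, r + 1)]
            row.append(sum(window) / len(window))
        out.append(row)
    return out

impulse = [[0.0, 0.0, 0.0], [0.0, 9.0, 0.0], [0.0, 0.0, 0.0]]
smoothed = mean_filter(impulse)  # 3 x 3 output; the centre value becomes 9 / 9 = 1.0
```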

Functions of the region extraction layer: The region extraction layer extracts a significant region from the image data, reducing the computation spent on irrelevant information. It does so through two region extraction functions, whose inputs are raw or preprocessed image data together with the region parameters from the terminal set (Table 7). Based on these parameters, the functions extract rectangular or square regions from the image data to serve as inputs to subsequent layers.
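A region extraction function of this kind can be sketched as a clipped crop. The parameterization below (top-left corner `x`, `y` plus `width` and `height`, or a single `size` for squares) is a hypothetical choice for illustration; the actual parameter names and ranges are those given in Table 7.

```python
def region_rect(image, x, y, width, height):
    """Crop a width x height rectangle whose top-left corner is (x, y), clipped to the image."""
    h, w = len(image), len(image[0])
    x, y = min(max(x, 0), w - 1), min(max(y, 0), h - 1)
    return [row[x:min(x + width, w)] for row in image[y:min(y + height, h)]]

def region_square(image, x, y, size):
    """Square regions are the special case width == height."""
    return region_rect(image, x, y, size, size)

img = [[r * 10 + c for c in range(5)] for r in range(5)]
print(region_rect(img, 1, 2, 3, 2))  # [[21, 22, 23], [31, 32, 33]]
print(region_square(img, 3, 3, 4))   # clipped at the border: [[33, 34], [43, 44]]
```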

Functions of the image fusion layer: The image fusion layer merges information from multiple image regions to create a new image region with more comprehensive information. Its functions process two image regions, possibly of different sizes: they take the size of the larger region as the reference, unify the sizes with bilinear interpolation, and then perform pixel-level fusion to generate a new, more informative region. The three fusion functions differ in how corresponding pixels are combined: one takes the minimum of the two pixel values, one takes their median, and one takes their average.
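The resize-then-fuse procedure can be sketched as follows. This is a minimal sketch under stated assumptions: "the larger region" is taken to mean the larger height and the larger width, and bilinear interpolation uses the align-corners convention. With only two inputs per pixel, a median combiner reduces to the mean, so the usage shows only the minimum and average combiners.

```python
def resize_bilinear(img, out_h, out_w):
    """Align-corners bilinear resize of a list-of-rows image."""
    in_h, in_w = len(img), len(img[0])
    out = []
    for i in range(out_h):
        sy = i * (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(sy)
        y1, dy = min(y0 + 1, in_h - 1), sy - y0
        row = []
        for j in range(out_w):
            sx = j * (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(sx)
            x1, dx = min(x0 + 1, in_w - 1), sx - x0
            top = img[y0][x0] * (1 - dx) + img[y0][x1] * dx
            bottom = img[y1][x0] * (1 - dx) + img[y1][x1] * dx
            row.append(top * (1 - dy) + bottom * dy)
        out.append(row)
    return out

def fuse(a, b, combine):
    """Resize both regions to the larger height and width, then combine pixel by pixel."""
    h, w = max(len(a), len(b)), max(len(a[0]), len(b[0]))
    a, b = resize_bilinear(a, h, w), resize_bilinear(b, h, w)
    return [[combine(p, q) for p, q in zip(ra, rb)] for ra, rb in zip(a, b)]

small = [[1.0, 3.0], [5.0, 7.0]]         # upsampled to 3 x 3; its centre becomes 4.0
large = [[2.0] * 3 for _ in range(3)]
fused_min = fuse(small, large, min)
fused_avg = fuse(small, large, lambda p, q: (p + q) / 2)
```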

Functions of the downsampling layer: The downsampling layer reduces the size of input image regions, thereby lessening the computational burden. To adapt to various classification tasks, this layer provides three downsampling functions. Each takes an image region and a sliding window size, specified by two integer parameters, as input. The first retains the maximum value in each window, emphasizing the most significant features. The second computes the average of all values in each window, preserving more information. The third uses bilinear interpolation to reduce the image size while preserving image details. Although the downsampled region generally retains key features, useful information can still be lost, which may reduce the performance of manual feature extraction methods. Hence, this layer applies the concept of information transmission and passes the original region to subsequent layers, so that the information-rich original region can be used for manual feature extraction when necessary. Accordingly, the layer's output is a tuple containing both the original and the downsampled region.
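The tuple output of the downsampling layer can be sketched with max and average pooling. This sketch assumes non-overlapping windows (stride equal to the window size), which the text does not specify, and omits the bilinear variant. The key point it illustrates is that each function returns the untouched original region alongside the pooled one.

```python
def pool(region, kh, kw, reduce):
    """Non-overlapping kh x kw pooling; returns (original region, pooled region)."""
    h, w = len(region), len(region[0])
    pooled = [[reduce([region[y + dy][x + dx] for dy in range(kh) for dx in range(kw)])
               for x in range(0, w - kw + 1, kw)]
              for y in range(0, h - kh + 1, kh)]
    # Information transmission: the original region travels with the downsampled one.
    return region, pooled

def max_pool(region, kh, kw):
    return pool(region, kh, kw, max)

def avg_pool(region, kh, kw):
    return pool(region, kh, kw, lambda values: sum(values) / len(values))

r = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
original, pooled = max_pool(r, 2, 2)  # pooled == [[6, 8], [14, 16]]; original is r
```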

Functions of the feature extraction layer: The feature extraction layer extracts features from the input image region. The feature extraction function takes the tuple produced by the downsampling layer as input. For the original image region, it selects a manual feature extraction method according to the terminal parameter m. For the downsampled region, it concatenates the rows of pixels into a vector to form pixel features. The two types of features are then concatenated into fused features and output. Integrating different types of features helps capture image information comprehensively. Because the original image region passed from the downsampling layer may still be useful in subsequent layers, the function's output also includes the original image region. The output further includes a temporary variable t, initialized within the function, which is key to further information transmission and is discussed with the transmission layer.
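The data flow through the feature extraction function can be sketched as follows. This is a hypothetical sketch: the tuple order `(original, fused, t)`, the `manual_extractors` mapping, and the stand-in descriptor are assumptions for illustration, and `t` is only a placeholder because its exact role is not defined in this section.

```python
def extract_features(region_tuple, m, manual_extractors):
    """Hypothetical feature extraction node.

    region_tuple: (original_region, downsampled_region) from the downsampling layer.
    manual_extractors: mapping from m to a callable returning handcrafted features
    (SIFT, HOG, or LBP in the method; any callable here).
    """
    original, downsampled = region_tuple
    manual = list(manual_extractors[m](original))      # handcrafted features from the original region
    pixels = [p for row in downsampled for p in row]   # rows concatenated into pixel features
    fused = manual + pixels                            # concatenated ("fused") feature vector
    t = None  # placeholder for the temporary variable t
    return original, fused, t

# Hypothetical stand-in for the descriptor chosen by m = 2: the region's intensity range.
extractors = {2: lambda region: [min(map(min, region)), max(map(max, region))]}
orig, fused, t = extract_features(([[1, 2], [3, 4]], [[2.5]]), 2, extractors)
```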

Functions of the feature construction layer: To construct distinctive new features, a new feature construction layer is designed. The feature construction function accepts a Tuple 2 input and generates new handcrafted features according to the value of m from the terminal set. These new features are multiplied by the terminal parameter i, while the second element of Tuple 2 is multiplied by (1 − i); the two parts are then added to form the constructed features. Because the function has the same input and output type, its output may be fed back into the same layer for further processing. During this process, the original image region keeps producing new handcrafted features, which are preserved in the output, while the already constructed features are combined with the newly generated features for further feature construction.
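The weighted combination performed by the feature construction function is new = i · (handcrafted features) + (1 − i) · (previous features). A minimal sketch follows. It assumes the two vectors have equal length, because how the method aligns vectors of different lengths is not described in this section. The nested call mirrors the case in which the function's output is fed back into the same layer.

```python
def construct_features(manual_new, previous, i):
    """Return i * manual_new + (1 - i) * previous, element by element (equal lengths assumed)."""
    if len(manual_new) != len(previous):
        raise ValueError("feature vectors must have equal length in this sketch")
    return [i * a + (1 - i) * b for a, b in zip(manual_new, previous)]

handcrafted = [2.0, 4.0]
once = construct_features(handcrafted, [0.0, 0.0], 0.5)  # [1.0, 2.0]
twice = construct_features(handcrafted, once, 0.5)       # fed back into the same layer: [1.5, 3.0]
```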

Functions of the transmission layer: The transmission layer decides, based on the type of its input, whether to pass information to subsequent layers. When the transmission function receives input from the feature extraction layer, it checks whether the third element of the tuple is a feature vector. If so, the extracted features are returned directly as a vector. If not, the parameter p determines whether the new handcrafted features from the feature construction layer are passed to subsequent layers. Furthermore, because the program structure uses strongly typed GP, the transmission layer also serves as a transitional layer that converts input and output types, which allows the feature concatenation layer to be defined as a flexible layer.

Functions of the feature concatenation layer: The feature concatenation function concatenates the feature data from two transmission layers and outputs the result. Owing to the design of the transmission layers, the input may include handcrafted features generated by the feature construction layer. The function integrates all input features into a single feature vector. As a flexible layer, it can combine different features from the same region or similar features from different regions.

Functions of the classification layer: The classification function receives the learned features, feeds them into a Support Vector Machine (SVM) classifier, and outputs the predicted class labels.

3.2.2. Fitness Function

The function set and terminal set are used to generate GP individuals/programs. Since ITACIE-GP addresses image classification tasks, classification accuracy is adopted as the fitness function for the individuals, which is defined as

$$\mathrm{Fitness} = \frac{N_{\mathrm{correct}}}{N_{\mathrm{total}}} \qquad (1)$$

where $N_{\mathrm{correct}}$ represents the number of instances correctly classified and $N_{\mathrm{total}}$ denotes the total number of instances in the training set. The ITACIE-GP programs/individuals are evaluated using stratified 5-fold cross-validation (5-fold CV).
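Fitness evaluation with stratified 5-fold cross-validation can be sketched as follows. The sketch makes three assumptions. It uses a nearest-centroid classifier as a stand-in for the SVM of the classification layer, so that it runs with the standard library only. It stratifies by dealing each class's samples round-robin across folds, which is one simple choice and not necessarily the authors' procedure. It pools correct predictions over all folds and divides by the number of training instances, following the accuracy definition above.

```python
from collections import defaultdict

def stratified_folds(labels, k=5):
    """Deal sample indices into k folds class by class so each fold keeps the class mix."""
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    folds, pos = [[] for _ in range(k)], 0
    for y in sorted(by_class):
        for idx in by_class[y]:
            folds[pos % k].append(idx)
            pos += 1
    return folds

def centroid_fit(X, y):
    """Stand-in classifier: one mean feature vector per class."""
    sums, counts = {}, defaultdict(int)
    for x, label in zip(X, y):
        sums[label] = [s + v for s, v in zip(sums.get(label, [0.0] * len(x)), x)]
        counts[label] += 1
    return {label: [s / counts[label] for s in vec] for label, vec in sums.items()}

def centroid_predict(model, x):
    return min(model, key=lambda c: sum((a - b) ** 2 for a, b in zip(model[c], x)))

def cv_fitness(X, y, k=5):
    """Correctly classified held-out instances divided by all training instances."""
    correct = 0
    for fold in stratified_folds(y, k):
        held_out = set(fold)
        train = [i for i in range(len(y)) if i not in held_out]
        model = centroid_fit([X[i] for i in train], [y[i] for i in train])
        correct += sum(centroid_predict(model, X[i]) == y[i] for i in fold)
    return correct / len(y)

# Two well-separated classes of feature vectors (e.g. produced by a GP individual).
X = [[0.1 * j, 0.0] for j in range(10)] + [[10.0 + 0.1 * j, 10.0] for j in range(10)]
y = [0] * 10 + [1] * 10
```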

3.3. Accompanying Evolution

To address the fitness stagnation of the best individual during the iterative process of the GP algorithm, this paper introduces the accompanying evolution strategy, which consists of two sub-strategies: a storage strategy and a replacement strategy. The storage strategy identifies and preserves promising individuals, referred to as accompanying individuals, which are retained rather than immediately reintroduced into the population. When the algorithm detects fitness stagnation of the best individual, the replacement strategy reintroduces these accompanying individuals into the population, thereby increasing the probability of finding better individuals.

3.3.1. Storage Strategy

The storage strategy introduces two methods for storing accompanying individuals, aimed at preserving promising individuals at different evolutionary stages. The first method targets high-fitness individuals that are eliminated during evolution. The second targets individuals in the new population whose fitness has improved after updating.

The first storage method preserves individuals that have high fitness but are eliminated during evolution. This avoids reintroducing lower-quality individuals that could degrade the overall population, and it does not add extra computational cost. At the end of the selection process in each generation, the eliminated individuals are ranked by fitness. A proportion of the top-ranked ones, given by a generation-dependent ratio, is stored as accompanying individuals in the first archive, A1. This ratio is defined in Equation (2),

where the ratio takes different values depending on the evolutionary generation g. Population diversity is usually high in the early stages of the algorithm. As evolution progresses, the population may converge and lose diversity. To adapt to this transition, the algorithm retains fewer accompanying individuals in later generations.

The second storage method preserves individuals that have updated successfully. Specifically, first-type accompanying individuals replace some redundant individuals in the population, and crossover and mutation then generate a new population. An individual in this new population that updates successfully (i.e., improves its fitness) is considered to have development potential. It is stored in the second archive as a second-type accompanying individual.
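The two storage methods can be sketched as follows. This is a minimal sketch that assumes individuals carry their current fitness and, for offspring, the fitness before crossover/mutation. Equation (2) is not reproduced in this text, so `storage_ratio` is a hypothetical step schedule that only mimics the described behaviour: the stored share shrinks as g grows. The class, helper names and archive variables (`archive_a1`, and `archive_a2` for the second archive) are illustrative.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Individual:
    program: str                            # placeholder for a GP tree
    fitness: float                          # e.g. 5-fold CV accuracy
    parent_fitness: Optional[float] = None  # fitness before crossover/mutation

def storage_ratio(g: int) -> float:
    """HYPOTHETICAL stand-in for Equation (2): the stored fraction shrinks
    as the generation g grows and population diversity drops."""
    if g < 10:
        return 0.2
    if g < 30:
        return 0.1
    return 0.05

def store_eliminated(eliminated: List[Individual], g: int,
                     archive_a1: List[Individual]) -> List[Individual]:
    """Method 1: rank eliminated individuals by fitness and keep the top share."""
    ranked = sorted(eliminated, key=lambda ind: ind.fitness, reverse=True)
    n_keep = int(len(ranked) * storage_ratio(g))
    archive_a1.extend(ranked[:n_keep])
    return archive_a1

def store_improved(new_population: List[Individual],
                   archive_a2: List[Individual]) -> List[Individual]:
    """Method 2: keep offspring whose fitness improved on their parent's (assumed baseline)."""
    for ind in new_population:
        if ind.parent_fitness is not None and ind.fitness > ind.parent_fitness:
            archive_a2.append(ind)
    return archive_a2

eliminated = [Individual(f"p{i}", i / 10) for i in range(10)]
a1 = store_eliminated(eliminated, g=5, archive_a1=[])
print([ind.program for ind in a1])  # ['p9', 'p8'] (20% of 10 at g = 5)
```

The sketch treats the parent's fitness as the baseline for "improves its fitness" because that is the simplest reading. The method may instead compare against another reference.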

3.3.2. Replacement Strategy

In the replacement strategy, the parameter q determines when to substitute some redundant individuals with accompanying individuals. It is defined in Equation (3),

$$q = \begin{cases} 0, & \text{if } f_{\text{best}}^{g} > f_{\text{best}}^{g-1} \text{ or } q = T \\ q + 1, & \text{otherwise} \end{cases} \quad (3)$$

where $f_{\text{best}}^{g}$ denotes the fitness value of the best solution in the population at the g-th generation, and q represents the number of times the best individual in the population fails to update.

During evolution, if the fitness of the population's best individual fails to improve, the algorithm concludes that evolution has stagnated and increments q. When the best fitness improves, or when q reaches the predetermined threshold T, the algorithm resets q to zero.
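The update rule for the stagnation counter can be sketched as a small function. This sketch assumes fitness is maximized and that "fails to update" means the best fitness did not strictly increase. T = 5 follows the text. The function name and the returned trigger flag are illustrative.

```python
T = 5  # stagnation threshold used in this study

def update_stagnation(q, best_prev, best_curr, threshold=T):
    """Return (new_q, trigger) after one generation.

    trigger is True when q has just reached the threshold, i.e. when the
    replacement strategy should run; q is then reset to zero.
    """
    if best_curr > best_prev:
        return 0, False          # best individual improved: reset the counter
    q += 1                       # best individual failed to update
    if q >= threshold:
        return 0, True           # threshold reached: run replacement, then reset
    return q, False

# Best-fitness history over eight generations; it stalls from g = 2 to g = 6.
history = [0.80, 0.82, 0.82, 0.82, 0.82, 0.82, 0.82, 0.85]
q, triggers = 0, []
for g in range(1, len(history)):
    q, fired = update_stagnation(q, history[g - 1], history[g])
    if fired:
        triggers.append(g)
print(triggers)  # [6]
```

Returning a flag rather than performing the replacement inside the function separates the bookkeeping from the replacement logic.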

The first time q reaches the threshold T (set to 5 in this study), the algorithm removes a proportion of the redundant individuals from the population and replaces them with individuals selected from A1. The number of selected individuals follows Equation (4). This equation sets the number of individuals taken from A1 in proportion to the number of redundant individuals identified in the current population. It uses a fixed proportionality coefficient, set to 0.25 in this study.

When q reaches T again, the algorithm evaluates whether the previous replacement was effective. If replacing individuals with those from A1 improved the fitness of the best individual, the algorithm continues with this strategy. Otherwise, individuals are selected from the second archive. In this case, the number of selected individuals follows Equation (5). This equation derives the number from the count of individuals in the population whose fitness has successfully improved. It also ensures that the number does not exceed the upper limit on the accompanying individuals stored in the second archive. Together, Equations (4) and (5) determine how many redundant individuals are substituted. Whenever the fitness growth of the best individual slows down or stagnates, these substitutions help the algorithm explore better individuals.
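The archive choice and replacement counts can be sketched as follows. Equations (4) and (5) are not reproduced in this text, so both count formulas are assumptions. Equation (4) is taken as floor(0.25 × number of redundant individuals). Equation (5) is taken as the number of improved individuals capped by the second archive's size. The sketch also leaves open how redundant individuals are identified and what happens on later triggers after a switch to the second archive. Treat it as an illustration of the control flow only; all names are hypothetical.

```python
import math
from typing import Tuple

ALPHA = 0.25  # fixed proportionality coefficient given in the text

def count_from_a1(n_redundant: int, alpha: float = ALPHA) -> int:
    """ASSUMED form of Equation (4): a fixed fraction of the redundant individuals."""
    return math.floor(alpha * n_redundant)

def count_from_a2(n_improved: int, a2_limit: int) -> int:
    """ASSUMED form of Equation (5): capped by the second archive's upper limit."""
    return min(n_improved, a2_limit)

def choose_replacements(trigger_count: int, a1_helped_last_time: bool,
                        n_redundant: int, n_improved: int,
                        archive_a1: list, archive_a2: list) -> Tuple[str, list]:
    """Return which archive to draw from and the individuals to insert.

    trigger_count is how many times q has reached T so far (1 = first time).
    Both archives are assumed to be sorted best-first.
    """
    if trigger_count == 1 or a1_helped_last_time:
        n = min(count_from_a1(n_redundant), len(archive_a1))
        return "A1", archive_a1[:n]
    # Here the archive's current size stands in for its upper limit.
    n = count_from_a2(n_improved, len(archive_a2))
    return "A2", archive_a2[:n]

a1 = [f"a1_{i}" for i in range(10)]
a2 = ["a2_0", "a2_1", "a2_2"]
print(choose_replacements(1, False, 20, 8, a1, a2))
# ('A1', ['a1_0', 'a1_1', 'a1_2', 'a1_3', 'a1_4'])
print(choose_replacements(2, False, 20, 8, a1, a2))
# ('A2', ['a2_0', 'a2_1', 'a2_2'])
```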

3.4. Multi-Generation Individual Ensemble Strategy

A single GP program may overfit, so a multi-generation individual ensemble strategy is proposed to reduce this risk. Starting from the 10th generation, the best GP program of each generation is saved. When the GP run finishes, the algorithm returns a collection containing the best GP program found over the entire run, together with the best GP program of each generation (the n-th element being the best program of the n-th generation). Then, based on the function set defined in Section 3.2.1, the frequency of each function in every GP program of this collection is counted. From these frequencies, the difference between each program in the collection and the overall best GP program is calculated, as shown in Equation (6),

where the function frequencies of the algorithm's best GP program are compared with those of each GP program in the collection. This gives, for every per-generation best program, its difference in function frequency from the overall best program. The three GP programs with the largest differences are combined with the two best GP programs into an ensemble GP program. For example, suppose the three programs with the largest differences predict the labels 0, 0, and 1 for an image, and the two best programs both predict 1. These five predictions form the combined output 00111. A majority vote then yields the final predicted label, which is 1 in this example.
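The ensemble step can be sketched as follows. This minimal sketch assumes each program is summarized by a vector of function counts over the function set. Equation (6) is not reproduced in this text, so the difference is assumed to be the L1 distance (sum of absolute frequency differences). The toy programs, helper names and frequency values are hypothetical, and the predictions of the two best programs are assumed to be supplied by the caller. The final vote reproduces the 00111 → 1 example from the text.

```python
from collections import Counter
import numpy as np

def function_frequencies(program_functions, function_set):
    """Count how often each primitive of the function set occurs in a program."""
    counts = Counter(program_functions)
    return np.array([counts[f] for f in function_set], dtype=float)

def select_diverse(best_freq, generation_freqs, k=3):
    """ASSUMED form of Equation (6): L1 distance between frequency vectors.
    Returns the indices of the k per-generation programs most unlike the best."""
    diffs = np.abs(generation_freqs - best_freq).sum(axis=1)
    order = np.argsort(-diffs, kind="stable")      # largest difference first
    return [int(i) for i in order[:k]]

def majority_vote(predictions):
    """predictions: one list of labels per ensemble member, all the same length."""
    votes = np.array(predictions)                  # shape (n_members, n_samples)
    final = []
    for column in votes.T:
        labels, counts = np.unique(column, return_counts=True)
        final.append(labels[counts.argmax()].item())  # ties -> smallest label
    return final

function_set = ["Med_F", "Max_P", "Feature_E", "Concat2"]
best = function_frequencies(["Med_F", "Max_P", "Feature_E", "Concat2"], function_set)
per_generation = np.stack([function_frequencies(p, function_set) for p in (
    ["Med_F", "Max_P", "Feature_E", "Concat2"],
    ["Max_P", "Max_P", "Feature_E", "Concat2"],
    ["Feature_E", "Feature_E", "Feature_E", "Concat2"],
    ["Med_F", "Med_F", "Concat2", "Concat2"],
)])
print(select_diverse(best, per_generation))        # [2, 3, 1]
# Worked example: three diverse programs say 0, 0, 1; the two best say 1, 1.
print(majority_vote([[0], [0], [1], [1], [1]]))    # [1]
```

With five voters and binary labels a tie cannot occur. The smallest-label tie rule only matters for even-sized or multi-class votes.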

4. Experimental Design

This section describes the experimental design, including the benchmark datasets, the benchmark methods used for comparison, and the parameter settings.

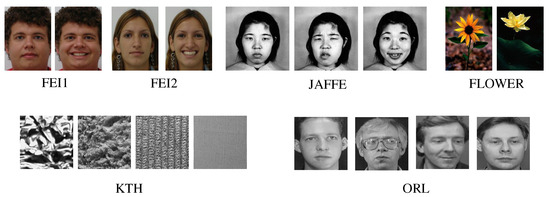

4.1. Benchmark Datasets

The proposed ITACIE-GP method is evaluated on six image datasets of varying difficulty: FEI1 [38], FEI2 [38], JAFFE [39], KTH [40], Flower [41], and ORL [42]. Figure 3 shows example images from these datasets.

Figure 3.

Example images from the FEI_1, FEI_2, JAFFE, FLOWER, KTH, and ORL datasets.

FEI1 and FEI2 are binary facial expression classification datasets that distinguish between smiling and neutral expressions. The JAFFE dataset contains images of seven facial emotions: anger, fear, disgust, neutral, sadness, surprise, and happiness. The KTH dataset is designed for texture classification and contains images from 10 categories that differ in lighting, viewing angle, and scale. The Flower dataset targets flower classification and contains images of two flower types. ORL, commonly used in face recognition research, contains images of 40 individuals. Each individual contributes 10 facial photos that vary in facial expression and in details such as the presence or absence of glasses. Table 10 gives the details of each dataset, including image size, the split between training and test sets, and the number of classes.

Table 10.

Image dataset parameters.

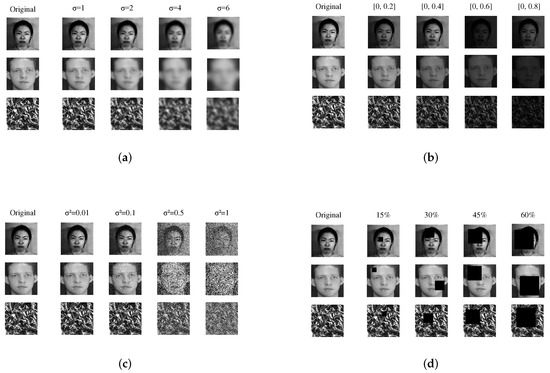

Degraded versions of these datasets were constructed using the parameters proposed in [16,18]. Specifically, different levels of blur, low contrast, and noise, as well as square occlusions of various sizes at different positions, were introduced separately into each dataset. This yielded datasets for the blurred, low-contrast, noisy, and occluded scenarios.

Table 11 lists the parameters used to create these datasets, including the blur standard deviation, contrast level, noise variance, and size of the occluding squares. Figure 4 shows examples of degraded images in the four scenarios.
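The four degradations can be sketched with NumPy. Table 11's actual parameter values are not reproduced in this text, so any sigma, contrast factor, noise variance or occluder size passed to these functions is a placeholder. The specific operations are common choices assumed for illustration, not necessarily the exact procedure of [16,18]:

- a separable Gaussian blur;
- contrast reduction by scaling intensities toward the image mean;
- additive zero-mean Gaussian noise;
- a black square occluder.

Images are assumed to be grayscale float arrays in [0, 1], and all function names are hypothetical.

```python
import numpy as np

def gaussian_blur(img, sigma):
    """Separable Gaussian blur with reflected borders (kernel radius = 3 sigma)."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2 * sigma**2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="reflect")

    def blur_1d(v):
        return np.convolve(v, kernel, mode="valid")

    rows = np.apply_along_axis(blur_1d, 1, padded)  # blur along each row
    return np.apply_along_axis(blur_1d, 0, rows)    # then along each column

def reduce_contrast(img, factor):
    """Shrink intensities toward the image mean; factor in (0, 1]."""
    mean = img.mean()
    return mean + factor * (img - mean)

def add_gaussian_noise(img, variance, rng):
    """Additive zero-mean Gaussian noise, clipped back to [0, 1]."""
    noisy = img + rng.normal(0.0, np.sqrt(variance), img.shape)
    return np.clip(noisy, 0.0, 1.0)

def occlude(img, size, top, left):
    """Paint a black size x size square whose top-left corner is at (top, left)."""
    out = img.copy()
    out[top:top + size, left:left + size] = 0.0
    return out
```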

Table 11.

Degraded image dataset parameters.

Figure 4.

Some examples from the image datasets in four different scenarios: (a) blurred images with different standard deviations; (b) low-light images with different contrasts; (c) noisy images with different variances; (d) occluded images with obstructions of different sizes.

4.2. Benchmark Methods

To verify the performance of the ITACIE-GP method, this paper compares it with several benchmark methods. These benchmark methods include manual feature-based methods, CNN-based methods, deep feature-based methods, and a GP-based method.

For manual feature-based methods, this paper combines several handcrafted feature extraction methods with the Support Vector Machine (SVM) classifier: SVM + Histogram, SVM + DWT [43], SVM + Gabor [44], SVM + SIFT [6], SVM + HOG [7], and SVM + uLBP [5]. These methods have relatively low computational complexity and perform well across multiple datasets.

For CNN-based methods, two widely used network architectures in computer vision tasks are selected for experimentation: CNN-5 and LeNet-5 [45].

For deep feature-based methods, this paper selects several deep models pre-trained on the ImageNet dataset [46], namely ResNet [47], VGG [48], AlexNet [49], MnasNet [50], and SqueezeNet [51], for performance evaluation. The ResNet, VGG, and MnasNet models have their last fully connected layer removed, while AlexNet has all its fully connected layers removed, thereby obtaining network structures for feature extraction. The extracted features are then fed into the SVM classification algorithm to complete the final classification task.

For the GP-based method, EFLGP [16] is selected as a representative method in this field. Its GP programs generate a set of feature vectors that are used as input to an SVM. This method has shown strong performance on datasets affected by blur, low contrast, and noise.

These benchmark methods were chosen because their performance has been widely validated on various publicly available datasets and they are commonly used in this domain. The experiments follow the parameter configurations reported in the existing literature and assess the classification performance of all methods on the selected datasets.

4.3. Parameter Settings

The proposed ITACIE-GP method was implemented with the DEAP (Distributed Evolutionary Algorithms in Python) library [52]. The detailed parameter settings of ITACIE-GP are given in Table 12. The experiments were run on a personal computer with a 3.2 GHz Intel Core i5 CPU and 32 GB of memory.

Table 12.

GP parameters.

The SVM classifier was implemented with scikit-learn [53], with the penalty factor C set to 1. The CNN-based and deep feature-based methods were implemented with PyTorch [54]. CNN-5 uses the same structure as described in [28], while LeNet-5 follows the parameter settings of its original paper. For both the CNN-based and deep feature-based methods, the learning rate was set to 0.05.

5. Results and Discussion

5.1. Classification Performance

To ensure fairness and minimize potential bias, each method was run independently 30 times on each dataset with different random seeds. The analysis relies mainly on the mean and standard deviation of the classification accuracy on the test sets. Tables 13 to 17 list these values for ITACIE-GP and the comparison methods on the FEI_1, FEI_2, FLOWER, JAFFE, KTH, and ORL datasets in the five scenarios. The non-parametric Wilcoxon signed-rank test, which does not assume normally distributed results, is used to assess whether the differences between methods are significant. The result of comparing ITACIE-GP with each competitor at the chosen significance level is reported in the W-test column. The symbols in this column indicate that ITACIE-GP is significantly better than, similar to, or worse than the comparison method on the given dataset. The best result for each dataset is highlighted in bold and marked in red, the second-best in green, and the third-best in blue. The last row summarizes the overall results of the significance tests.
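For readers who want to reproduce the significance markers, the following is a self-contained sketch of the two-sided Wilcoxon signed-rank test using the normal approximation, which is reasonable for 30 paired runs. The sketch assumes runs are paired, for example by random seed. It drops zero differences, averages ranks over ties, and applies the tie correction to the variance, but no continuity correction. Library routines such as SciPy's `scipy.stats.wilcoxon` offer exact and corrected variants. The caller compares the returned p-value with the significance level, and the function name is illustrative.

```python
import math

def wilcoxon_signed_rank(a, b):
    """Two-sided Wilcoxon signed-rank test (normal approximation).

    Returns (w_plus, z, p_value) for paired samples a and b.
    """
    diffs = [x - y for x, y in zip(a, b) if x != y]   # drop zero differences
    n = len(diffs)
    if n == 0:
        return 0.0, 0.0, 1.0
    # Rank absolute differences, averaging ranks within tied groups.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j + 2) / 2          # positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        t = j - i + 1
        tie_term += t**3 - t
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48   # tie-corrected
    if var <= 0:
        return w_plus, 0.0, 1.0
    z = (w_plus - mean) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2))    # two-sided p-value
    return w_plus, z, p
```

For example, if one method beats another in all 30 paired runs, w_plus reaches its maximum of 465 and the p-value falls far below common significance levels.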

Table 13.

Classification accuracy (%) of ITACIE-GP and 14 comparison methods across 6 datasets in original scenarios.

Table 14.

Classification accuracy (%) of ITACIE-GP and 14 comparison methods across 6 datasets in blur scenarios.

Table 15.

Classification accuracy (%) of ITACIE-GP and 14 comparison methods across 6 datasets in low-contrast scenarios.

Table 16.

Classification accuracy (%) of ITACIE-GP and 14 comparison methods across 6 datasets in noise scenarios.

Table 17.

Classification accuracy (%) of ITACIE-GP and 14 comparison methods across 6 datasets in occlusion scenarios.

In 180 comparisons with SVM + Gabor, SVM + Histogram, SVM + HOG, SVM + SIFT, SVM + DWT, and SVM + uLBP, ITACIE-GP was significantly better in 178 and similar in 2. The only similar results are those of SVM + SIFT on the ORL dataset in the original and low-contrast scenarios. In all other cases, ITACIE-GP holds a clear advantage. This indicates that simply extracting handcrafted features and then classifying them is less effective, and that its performance degrades under various adverse scenarios. In contrast, thanks to its program structure based on information transmission, ITACIE-GP can evolve effective features tailored to the classification problem. Its performance also does not decline significantly under adverse scenarios.

In 60 comparisons with LeNet-5 and CNN-5, ITACIE-GP was significantly better in 59 and similar in 1, outperforming these methods on almost all datasets. This may be because LeNet-5 and CNN-5 have relatively simple architectures, whereas ITACIE-GP can evolve solutions of different scales according to the problem.

In 150 comparisons with MnasNet, VGG, AlexNet, ResNet, and SqueezeNet, ITACIE-GP was significantly better in 136, similar in 5, and worse in 9. On the facial expression classification datasets (FEI_1, FEI_2, and JAFFE) and the face recognition dataset (ORL), ITACIE-GP significantly outperformed all five deep feature-based methods in every scenario.

On the Flower dataset, however, ITACIE-GP performed comparably to or slightly worse than MnasNet, VGG, and ResNet in the original, blurred, and occlusion scenarios. This may be because deep feature-based methods learn distinctive flower features that are not easily affected by blur or occlusion. In contrast, low contrast and noise significantly impair these methods. Thus, although ITACIE-GP does not outperform them in the original, blurred, and occlusion scenarios, it remains comparable there and significantly surpasses them in the other two, more challenging scenarios, which shows its robustness.

On the KTH dataset, ITACIE-GP performed worse than all five deep feature-based methods in the original scenario, and comparably to or slightly worse than some of them in the blurred and noisy scenarios. Notably, under the low-contrast and occlusion scenarios, ITACIE-GP significantly outperformed all five deep feature-based methods, highlighting the potential instability of deep features on degraded images. These results also show that ITACIE-GP meets its design goal of maintaining good performance across challenging scenarios.

In the 30 comparisons with EFLGP, ITACIE-GP was significantly better in 28 and similar in the remaining 2, both under low-contrast scenarios. This advantage mainly stems from the proposed program structure based on information transmission, which gives the initial solutions higher performance. Moreover, the accompanying evolution strategy allows classification accuracy to keep improving even in the later stages of evolution.

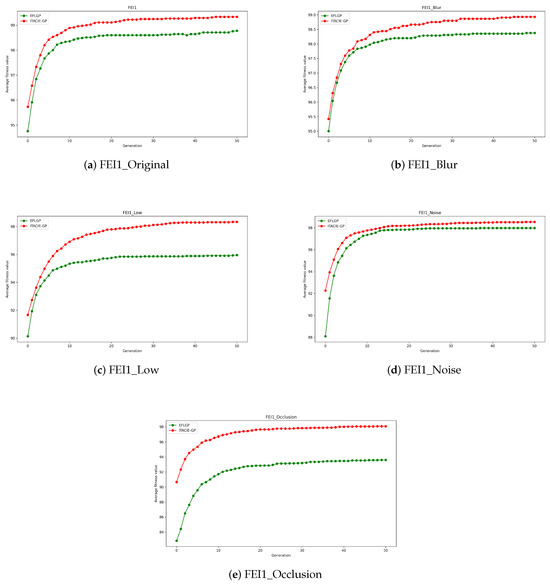

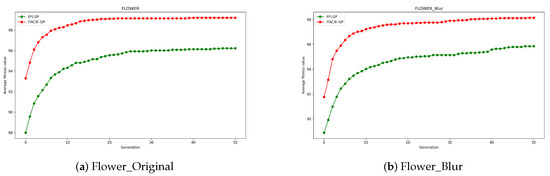

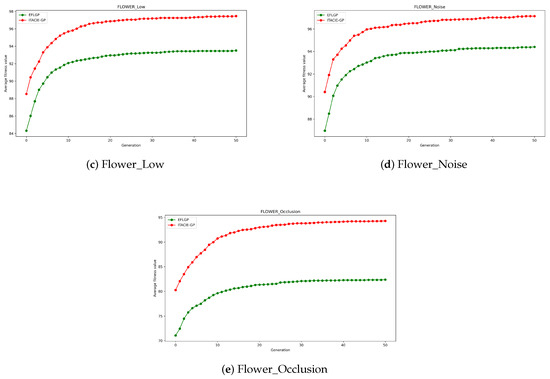

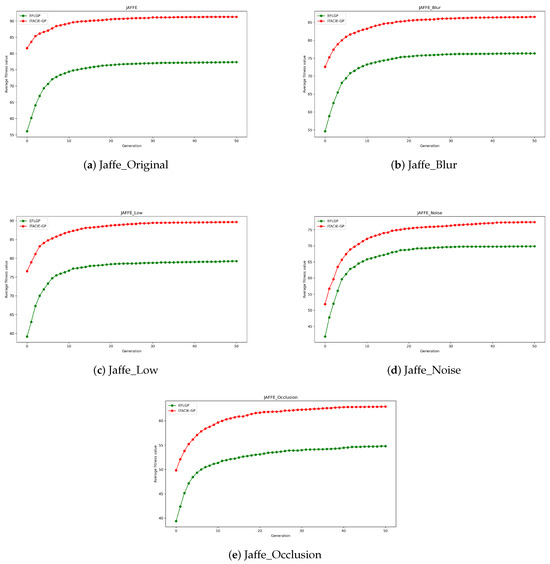

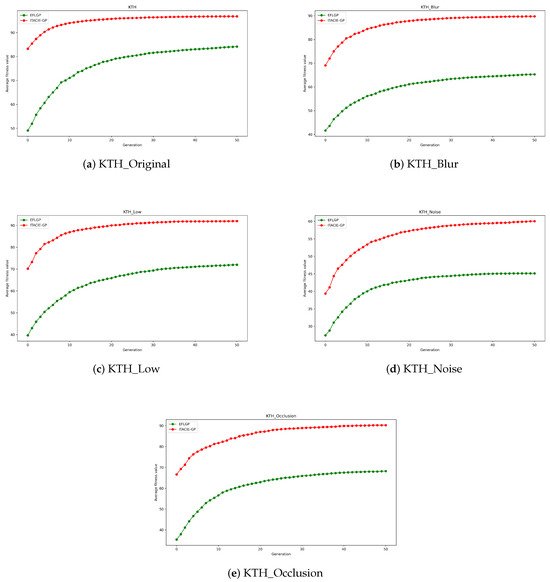

Figures 5 to 9 show convergence curves for EFLGP and ITACIE-GP on five datasets (FEI_1, FLOWER, JAFFE, KTH, and ORL) under five scenarios. Each curve plots the average classification accuracy of the best individual in each generation over 30 runs. The figures show that the program structure of ITACIE-GP enables the best individuals to achieve high classification performance on the training set from the very beginning. As evolution progresses, especially in the middle and later stages, the accompanying evolution strategy allows ITACIE-GP to keep improving the training-set performance of the best individuals. In contrast, the training-set performance of EFLGP's best individuals may slow down or even stagnate after a certain generation. This suggests that EFLGP is less effective at continuous optimization than ITACIE-GP. Overall, ITACIE-GP attains higher initial training-set performance through its program structure and continues to improve it through its evolution strategy.

Figure 5.

Convergence curves of EFLGP and ITACIE-GP on the FEI_1 dataset in five different scenarios.

Figure 6.

Convergence curves of EFLGP and ITACIE-GP on the Flower dataset in five different scenarios.

Figure 7.

Convergence curves of EFLGP and ITACIE-GP on the JAFFE dataset in five different scenarios.

Figure 8.

Convergence curves of EFLGP and ITACIE-GP on the KTH dataset in five different scenarios.

Figure 9.

Convergence curves of EFLGP and ITACIE-GP on the ORL dataset in five different scenarios.

5.2. Ablation Experiments

To verify the effectiveness of the strategies proposed in ITACIE-GP, ablation experiments were conducted with four methods:

- ITACIE-GP itself;
- ACIE-GP, which lacks the program structure based on information transmission;
- ITIE-GP, which uses the same program structure as ITACIE-GP but not the accompanying evolution strategy;
- ITAC-GP, which uses the same program structure as ITACIE-GP but not the multi-generational individual ensemble strategy.

Each of the four methods was run independently five times on each dataset, using the same random seeds across methods. The results are analyzed using the mean and standard deviation of the classification accuracy on the test sets.

Table 18 includes a row summarizing how the mean accuracy of ITACIE-GP compares with that of ACIE-GP, ITAC-GP, and ITIE-GP. In this row, "+" indicates that ITACIE-GP outperforms the compared method, "=" that the two perform equally, and "-" that ITACIE-GP underperforms the compared method.

Table 18.

Ablation experiment.

ITACIE-GP outperformed ACIE-GP in all 16 comparisons. ITACIE-GP builds effective feature combinations from the predefined feature types, delivering strong classification performance on datasets in complex scenarios. Conversely, ACIE-GP relies mainly on predefined handcrafted features, which weakens its classification ability on images from diverse categories or complex scenarios.

In 16 comparisons with ITIE-GP, ITACIE-GP performed better in 15 and equally in 1. This indicates that classification performance declines markedly without the accompanying evolution strategy for continuous optimization. Without this strategy, the best individuals of successive generations improve little. The individual ensemble strategy may then combine solutions that are too similar and not clearly distinguished in classification performance. Such an ensemble fails to improve classification and can even reduce it.

In 16 comparisons with ITAC-GP, ITACIE-GP performed better in 10, equally in 4, and slightly worse in 2. Overall, removing the individual ensemble strategy reduces classification performance. Forming the final solution from individuals of multiple generations mitigates the overfitting that relying on a single solution may cause.

5.3. Performance Analysis

To clarify why ITACIE-GP achieves good classification performance, this section analyzes individual programs evolved by ITACIE-GP on the FEI1 dataset under occlusion scenarios and on the JAFFE dataset under original scenarios.

5.3.1. Example Program of FEI1 Dataset under Occlusion Scenarios

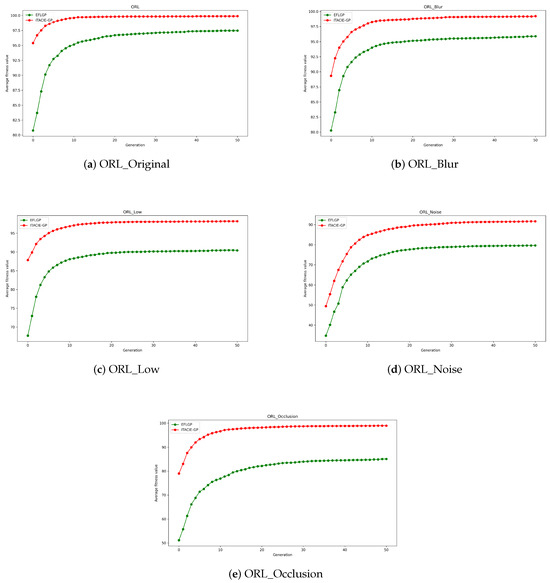

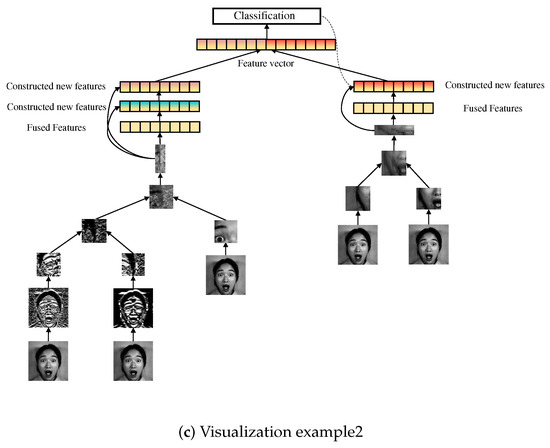

Figure 10 displays an example program evolved by ITACIE-GP for the FEI1 dataset in occlusion scenarios, along with its two visualization examples. This program achieves an accuracy of 99.33% on the training set and can reach an accuracy of 98% on the test set.

Figure 10.

Example program evolved from the FEI1 dataset through ITACIE-GP in occlusion scenarios, along with its two visualization examples. (a) Example program, (b) Visualization example1, (c) Visualization example2.

In the left branch of the example program, an edge-enhancing operator is first applied to the image to highlight edge information. The highly discriminative mouth region is then extracted from the result and downsampled. Next, fused features are generated by combining the pixel features of the downsampled region with the LBP features of the original region. New features are then constructed from these fused features, together with the information transmitted from the downsampling layer.

In the right branch, median fusion is applied to two different extracted image regions to construct a new, more discriminative image region. This region is then downsampled, and fused features are generated from its pixel and SIFT features. Finally, the features of the left and right branches are concatenated for the final classification decision.

Consider first the occluded example images of one category (visualized on the left side of Figure 10). For these images, ITACIE-GP effectively extracts key regions and performs efficient feature extraction and construction. This enables correct classification of images occluded at the same location. For occluded example images of the other category (visualized on the right side of Figure 10), the occlusion may cause the region extracted by the left branch to yield ineffective features. Even so, the fused region obtained by the right branch still contains valid information, so the classification decision based on these features remains effective. This illustrates the generalization ability of ITACIE-GP: it can extract multiple sets of discriminative features, enabling effective classification even in occluded or complex scenarios.

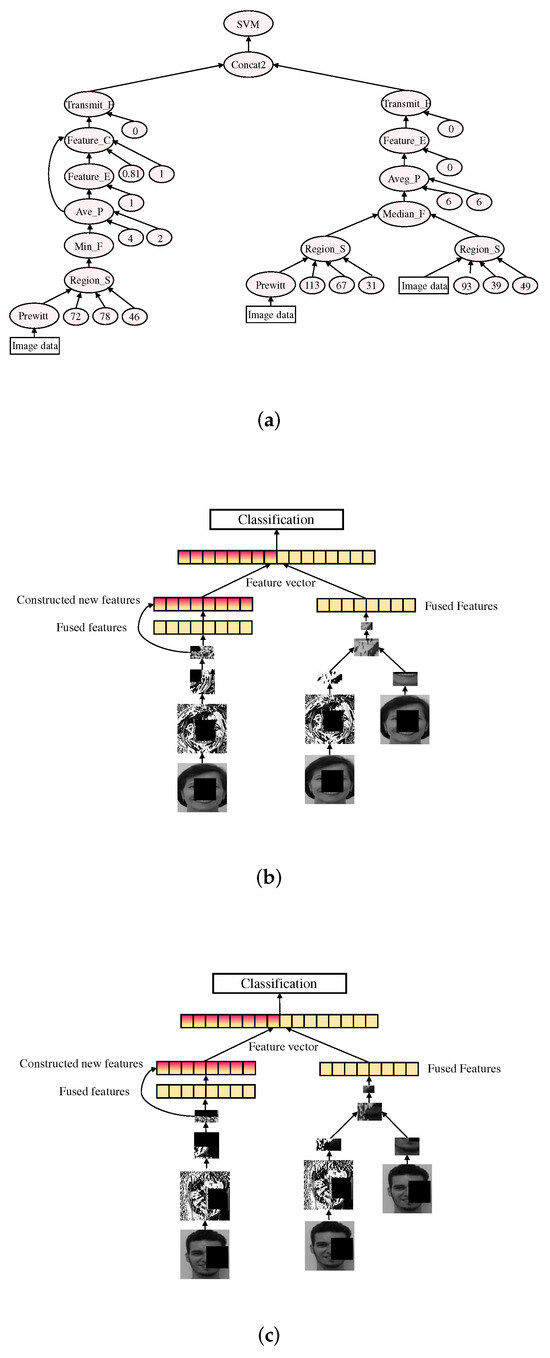

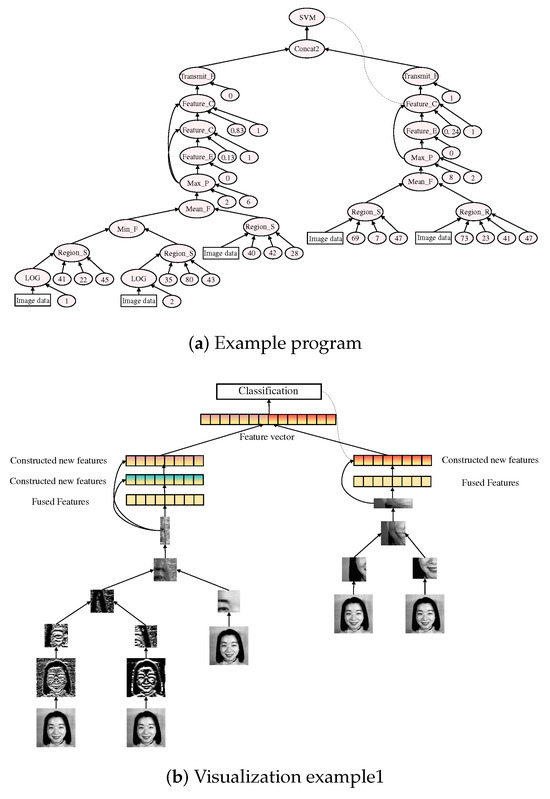

5.3.2. Example Program of the JAFFE Dataset in the Original Scenarios

Figure 11 shows an example program evolved by ITACIE-GP for the JAFFE dataset in the original scenarios, along with its two visualization examples. This program achieves an accuracy of 92.85% on the training set and can reach an accuracy of 89.04% on the test set.

Figure 11.

Example program evolved from the JAFFE dataset through ITACIE-GP in the original scenarios, along with its two visualization examples.

In the left branch of the example program, two different image fusion operations are executed to generate a new, information-rich image region. Pixel features and LBP features are then extracted from this region and concatenated to obtain fused features. In the feature construction layer, new features are constructed by combining these fused features with the information transmitted from the downsampling layer.

In the right branch, an image fusion operation creates a new image region, and its pixel and SIFT features are extracted to obtain fused features. In the feature construction layer, new features are again constructed by integrating the information transmitted from the downsampling layer. Finally, the features from the left and right branches are concatenated. During this step, the handcrafted features generated in the feature construction layer are also passed to the feature concatenation layer to support the classification decision.

Overall, the analysis of these two example programs shows that, thanks to the program structure based on information transmission, the solutions generated by ITACIE-GP are easy to interpret and achieve good generalization and classification accuracy. Moreover, ITACIE-GP can construct features tailored to the needs of different classification tasks, supporting effective classification decisions even in occluded or other complex scenarios.

6. Conclusions

In this paper, a new GP-based method is introduced for the classification of degraded images. The proposed program structure based on information transmission substantially strengthens GP individuals. Under this structure, nodes in specific layers automatically transmit useful information to nodes in subsequent layers, enabling the automatic construction of effective features and improving the individuals' performance as classification solutions.

The accompanying evolution strategy addresses fitness stagnation during the iterative process through its storage and replacement strategies. When population fitness stagnates, it substitutes redundant individuals with individuals that have development potential, thereby guiding the algorithm toward better individuals. In addition, the multi-generational individual ensemble strategy uses the differences between individuals to combine several effective GP individuals into an ensemble solution, mitigating overfitting.

With these three strategies, ITACIE-GP achieves strong classification performance on original, blurred, low-contrast, noisy, and occluded images. Experimental results show that ITACIE-GP consistently achieves similar or superior results compared with the benchmark methods, particularly on degraded images. Ablation studies further confirm the effectiveness and synergy of the three strategies, and the analysis of example programs explains why ITACIE-GP evolves high-performing solutions.

In future work, we will focus on classification tasks that are more closely aligned with real-world applications and develop more advanced techniques to further improve classification performance on degraded images.

Author Contributions

Conceptualization, Y.S. and Z.Z.; methodology, Z.Z.; validation, Y.S. and Z.Z.; formal analysis, Z.Z.; investigation, Z.Z.; resources, Z.Z.; data curation, Z.Z.; writing—original draft preparation, Z.Z.; writing—review and editing, Y.S. and Z.Z.; visualization, Z.Z.; project administration, Y.S.; funding acquisition, Y.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the National Natural Science Foundation of China (Grant Nos. 61763002 and 62072124), the Guangxi Major Projects of Science and Technology (Grant No. 2020AA21077021), and the Foundation of Guangxi Experiment Center of Information Science (Grant No. KF1401).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets used in this paper are available at the following URLs: FEI Face Database (accessed on 29 January 2024) (https://fei.edu.br/~cet/facedatabase.html), Cambridge ORL Face Database (accessed on 29 January 2024) (http://cam-orl.co.uk/facedatabase.html), JAFFE Database (accessed on 28 January 2024) (https://www.kasrl.org/jaffe_download.html), and KTH-TIPS Material Database (accessed on 6 February 2024) (https://www.csc.kth.se/cvap/databases/kth-tips/credits.html). The code for this manuscript has been uploaded to GitHub, available at https://github.com/zzq12-zzq/Automatic-Feature-Construction-Based-Genetic-Programming (accessed on 29 January 2024).

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| GP | Genetic Programming |
| LBP | Local Binary Patterns |
| SIFT | Scale-Invariant Feature Transform |
| HOG | Histogram of Oriented Gradients |
| CNNs | Convolutional Neural Networks |
| STGP | Strongly Typed Genetic Programming |
| SVM | Support Vector Machine |
| BoW | Bag of Words |
| RF | Random Forest |
| IPF | Image Preprocessing Layer Function |
| REF | Region Extraction Layer Function |
| IFF | Image Fusion Layer Function |
| DF | Downsampling Layer Function |
| FEF | Feature Extraction Layer Function |
| FCF | Feature Construction Layer Function |
| TF | Transmission Layer Function |
| FCF | Feature Concatenation Layer Function |
| Min_F | Minimum Fusion Function |
| Med_F | Median Fusion Function |
| Mean_F | Mean Fusion Function |
| Max_P | Max Pooling Function |
| Ave_P | Average Pooling Function |
| Bilin_D | Bilinear Downsampling Function |
| Feature_E | Feature Extraction Function |
| Feature_C | Feature Construction Function |
| Transmit_F | Transmission Layer Function |
| Concat2 | Feature Concatenation Function |
| DWT | Discrete Wavelet Transform |
| LeNet-5 | LeNet-5 Convolutional Neural Network |
| CNN-5 | 5-Layer Convolutional Neural Network |
| MnasNet | MnasNet Convolutional Neural Network |
| AlexNet | AlexNet Convolutional Neural Network |
| VGG | Visual Geometry Group Neural Network |
| ResNet | Residual Neural Network |
| SqueezeNet | SqueezeNet Convolutional Neural Network |
| IT | Information Transmission |
| AC | Accompanying Evolution |
| IE | Individual Ensemble |

References

1. Naeem, A.; Anees, T.; Ahmed, K.T.; Naqvi, R.A.; Ahmad, S.; Whangbo, T. Deep learned vectors’ formation using auto-correlation, scaling, and derivations with CNN for complex and huge image retrieval. Complex Intell. Syst. 2023, 9, 1729–1751.

2. Nguyen, H.D.; Kim, S.H.; Lee, G.S.; Yang, H.J.; Na, I.S.; Kim, S.H. Facial expression recognition using a temporal ensemble of multi-level convolutional neural networks. IEEE Trans. Affect. Comput. 2019, 13, 226–237.

3. Ni, J.; Shen, K.; Chen, Y.; Cao, W.; Yang, S.X. An improved deep network-based scene classification method for self-driving cars. IEEE Trans. Instrum. Meas. 2022, 71, 1–14.

4. Abdar, M.; Fahami, M.A.; Rundo, L.; Radeva, P.; Frangi, A.F.; Acharya, U.R.; Khosravi, A.; Lam, H.K.; Jung, A.; Nahavandi, S. Hercules: Deep hierarchical attentive multilevel fusion model with uncertainty quantification for medical image classification. IEEE Trans. Ind. Inform. 2022, 19, 274–285.

5. Ojala, T.; Pietikainen, M.; Maenpaa, T. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 971–987.

6. Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

7. Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 886–893.

8. Koza, J.R. Genetic programming as a means for programming computers by natural selection. Stat. Comput. 1994, 4, 87–112.

9. Qin, M.; Wang, R.; Shi, Z.; Liu, L.; Shi, L. A genetic programming-based scheduling approach for hybrid flow shop with a batch processor and waiting time constraint. IEEE Trans. Autom. Sci. Eng. 2019, 18, 94–105.

10. Chen, Q.; Xue, B.; Zhang, M. Genetic programming for instance transfer learning in symbolic regression. IEEE Trans. Cybern. 2020, 52, 25–38.

11. Bi, Y.; Xue, B.; Zhang, M. A Gaussian filter-based feature learning approach using genetic programming to image classification. In Proceedings of the AI 2018: Advances in Artificial Intelligence: 31st Australasian Joint Conference, Wellington, New Zealand, 11–14 December 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 251–257.

12. Bi, Y.; Xue, B.; Zhang, M. Genetic Programming for Image Classification: An Automated Approach to Feature Learning; Springer Nature: Cham, Switzerland, 2021; Volume 24.

13. Wu, M.; Li, M.; He, C.; Chen, H.; Wang, Y.; Li, Z. Facial Expression Recognition Based on Genetic Programming Learning CCA Fusion. In Proceedings of the 2022 5th International Conference on Pattern Recognition and Artificial Intelligence (PRAI), Chengdu, China, 19–21 August 2022; pp. 526–532.

14. Bi, Y.; Xue, B.; Zhang, M. Genetic programming with image-related operators and a flexible program structure for feature learning in image classification. IEEE Trans. Evol. Comput. 2020, 25, 87–101.

15. Yang, L.; He, F.; Dai, L.; Zhang, L. An Automatical And Efficient Image Classification Based On Improved Genetic Programming. In Proceedings of the 2022 IEEE 25th International Conference on Computer Supported Cooperative Work in Design (CSCWD), Hangzhou, China, 4–6 May 2022; pp. 477–483.

16. Bi, Y.; Xue, B.; Zhang, M. Genetic programming-based discriminative feature learning for low-quality image classification. IEEE Trans. Cybern. 2021, 52, 8272–8285.

17. Montana, D.J. Strongly typed genetic programming. Evol. Comput. 1995, 3, 199–230.

18. Yang, S.; Zhang, L.; He, L.; Wen, Y. Sparse low-rank component-based representation for face recognition with low-quality images. IEEE Trans. Inf. Forensics Secur. 2018, 14, 251–261.

19. Abayomi-Alli, O.O.; Damaševičius, R.; Misra, S.; Maskeliūnas, R. Cassava disease recognition from low-quality images using enhanced data augmentation model and deep learning. Expert Syst. 2021, 38, e12746.

20. Gao, Y.; Gao, L.; Li, X. A generative adversarial network based deep learning method for low-quality defect image reconstruction and recognition. IEEE Trans. Ind. Inform. 2020, 17, 3231–3240.

21. Yadav, A.K.; Gupta, N.; Khan, A.; Jalal, A.S. Robust face recognition under partial occlusion based on local generic features. Int. J. Cogn. Inform. Nat. Intell. (IJCINI) 2021, 15, 47–57.

22. Attarmoghaddam, N.; Li, K.F. An area-efficient FPGA implementation of a real-time multi-class classifier for binary images. IEEE Trans. Circuits Syst. II Express Briefs 2022, 69, 2306–2310.

23. Quoc, T.N.; Hoang, V.T. A new local image descriptor based on local and global color features for medicinal plant images classification. In Proceedings of the 2021 International Conference on Decision Aid Sciences and Application (DASA), Virtual, 7–8 December 2021; pp. 409–413.

24. Wu, X.; Feng, Y.; Xu, H.; Lin, Z.; Chen, T.; Li, S.; Qiu, S.; Liu, Q.; Ma, Y.; Zhang, S. CTransCNN: Combining transformer and CNN in multilabel medical image classification. Knowl.-Based Syst. 2023, 281, 111030.

25. Han, Q.; Qian, X.; Xu, H.; Wu, K.; Meng, L.; Qiu, Z.; Weng, T.; Zhou, B.; Gao, X. DM-CNN: Dynamic Multi-scale Convolutional Neural Network with uncertainty quantification for medical image classification. Comput. Biol. Med. 2023, 168, 107758.

26. Shi, C.; Wu, H.; Wang, L. CEGAT: A CNN and enhanced-GAT based on key sample selection strategy for hyperspectral image classification. Neural Netw. 2023, 168, 105–122.

27. Atkins, D.; Neshatian, K.; Zhang, M. A domain independent genetic programming approach to automatic feature extraction for image classification. In Proceedings of the 2011 IEEE Congress of Evolutionary Computation (CEC), New Orleans, LA, USA, 5–8 June 2011; pp. 238–245.

28. Evans, B.; Al-Sahaf, H.; Xue, B.; Zhang, M. Evolutionary deep learning: A genetic programming approach to image classification. In Proceedings of the 2018 IEEE Congress on Evolutionary Computation (CEC), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–6.

29. Bi, Y.; Zhang, M.; Xue, B. An automatic region detection and processing approach in genetic programming for binary image classification. In Proceedings of the 2017 International Conference on Image and Vision Computing New Zealand (IVCNZ), Christchurch, New Zealand, 4–6 December 2017; pp. 1–6.

30. Shao, L.; Liu, L.; Li, X. Feature learning for image classification via multiobjective genetic programming. IEEE Trans. Neural Netw. Learn. Syst. 2013, 25, 1359–1371.

31. Bi, Y.; Xue, B.; Zhang, M. An effective feature learning approach using genetic programming with image descriptors for image classification [research frontier]. IEEE Comput. Intell. Mag. 2020, 15, 65–77.

32. Yan, Z.; Bi, Y.; Xue, B.; Zhang, M. Automatically extracting features using genetic programming for low-quality fish image classification. In Proceedings of the 2021 IEEE Congress on Evolutionary Computation (CEC), Krakow, Poland, 28 June–1 July 2021; pp. 2015–2022.

33. Fan, Q.; Bi, Y.; Xue, B.; Zhang, M. Genetic programming for image classification: A new program representation with flexible feature reuse. IEEE Trans. Evol. Comput. 2023, 27, 460–474.

34. Bi, Y.; Xue, B.; Zhang, M. Genetic programming with a new representation to automatically learn features and evolve ensembles for image classification. IEEE Trans. Cybern. 2020, 51, 1769–1783.

35. Price, S.R.; Anderson, D.T.; Price, S.R. Goofed: Extracting advanced features for image classification via improved genetic programming. In Proceedings of the 2019 IEEE Congress on Evolutionary Computation (CEC), Wellington, New Zealand, 10–13 June 2019; pp. 1596–1603.

36. Fan, Q.; Bi, Y.; Xue, B.; Zhang, M. Genetic programming for feature extraction and construction in image classification. Appl. Soft Comput. 2022, 118, 108509.

37. Vedaldi, A.; Fulkerson, B. VLFeat: An open and portable library of computer vision algorithms. In Proceedings of the 18th ACM International Conference on Multimedia, Firenze, Italy, 25–29 October 2010; pp. 1469–1472.

38. Thomaz, C.E. Fei face database. FEI Face DatabaseAvailable 2012, 11, 46–57.

39. Lyons, M.; Akamatsu, S.; Kamachi, M.; Gyoba, J. Coding facial expressions with gabor wavelets. In Proceedings of the Third IEEE International Conference on Automatic Face and Gesture Recognition, Nara, Japan, 14–16 April 1998; pp. 200–205.

40. Mallikarjuna, P.; Targhi, A.T.; Fritz, M.; Hayman, E.; Caputo, B.; Eklundh, J.O. The kth-tips2 database. Comput. Vis. Act. Percept. Lab. Stock. Swed. 2006, 11, 12.

41. Fei-Fei, L.; Fergus, R.; Perona, P. Learning generative visual models from few training examples: An incremental bayesian approach tested on 101 object categories. In Proceedings of the 2004 Conference on Computer Vision and Pattern Recognition Workshop, Washington, DC, USA, 27 June–2 July 2004; p. 178.

42. Samaria, F.S.; Harter, A.C. Parameterisation of a stochastic model for human face identification. In Proceedings of the 1994 IEEE Workshop on Applications of Computer Vision, Sarasota, FL, USA, 5–7 December 1994; pp. 138–142.

43. Lee, G.; Gommers, R.; Waselewski, F.; Wohlfahrt, K.; O’Leary, A. PyWavelets: A Python package for wavelet analysis. J. Open Source Softw. 2019, 4, 1237.

44. Tao, D.; Li, X.; Wu, X.; Maybank, S.J. General tensor discriminant analysis and gabor features for gait recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 1700–1715.

45. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

46. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

47. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

48. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

49. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25.

50. Tan, M.; Chen, B.; Pang, R.; Vasudevan, V.; Sandler, M.; Howard, A.; Le, Q.V. Mnasnet: Platform-aware neural architecture search for mobile. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2820–2828.

51. Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5 MB model size. arXiv 2016, arXiv:1602.07360.

52. Fortin, F.A.; De Rainville, F.M.; Gardner, M.A.G.; Parizeau, M.; Gagné, C. DEAP: Evolutionary algorithms made easy. J. Mach. Learn. Res. 2012, 13, 2171–2175.

53. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

54. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. Pytorch: An imperative style, high-performance deep learning library. Adv. Neural Inf. Process. Syst. 2019, 32.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).