Abstract

This paper proposes an efficient encoding framework based on Scalable High Efficiency Video Coding (SHVC) that simultaneously supports low- and high-resolution 2D video as well as stereoscopic 3D (S3D) video. Previous studies introduced Cross-View SHVC, which encodes two videos with different viewpoints and resolutions by coding the low-resolution video as the base layer and the other video as the enhancement layer. This structure provides resolution diversity and allows the decoder to combine the two videos into a 3D video service. Even when the left and right videos of a 3D service have different resolutions, viewers tend to perceive the quality of the higher-resolution video because of the binocular suppression effect, in which the brain favors the higher-quality image and suppresses the lower-quality one. However, recent experiments have shown that when the quality difference between the two views exceeds a certain threshold, the perceived quality of the 3D video degrades. To address this issue, a conditional replenishment algorithm has been studied, which replaces selected blocks of the upscaled right view with blocks from a disparity-compensated left-view image based on a rate–distortion cost. This algorithm, also referred to as VEI technology, effectively reduces the quality difference between the base layer and enhancement layer videos, but on its own it cannot fully compensate for the quality difference between the left and right videos. In this paper, we propose a novel encoding framework that resolves this asymmetry in 3D video services and achieves symmetric video quality. The framework improves the quality of the right-view video by combining the conditional replenishment algorithm with Cross-View SHVC.
Specifically, the framework uses the non-HEVC option of the SHVC encoder, taking the VEI (Video Enhancement Information) restored image as the base layer to provide higher-quality prediction signals and to reduce encoding complexity. Experimental results on animation and live-action UHD sequences show that the proposed method achieves BD-rate reductions of 57.78% and 45.10% compared with HEVC and Cross-View SHVC, respectively.

1. Introduction

Three-dimensional video services have a long history, with their roots primarily in the film industry. Since the early 20th century, 3D films have evolved as a technology that displays two images from different angles simultaneously, providing audiences with a sense of depth and immersion. Early 3D films relied on simple analog technology, using two projectors to create a stereoscopic effect, offering viewers a new visual experience. However, due to technological limitations and high costs at the time, 3D films were not widely popular and were mostly reserved for special events or limited screenings.

In the 1950s, 3D video services enjoyed their first boom, but it faded because of technical challenges and the complexity of the viewing environment. By the 1980s, large-format films such as IMAX brought renewed attention to 3D technology by offering audiences a more immersive viewing experience. However, many viewers experienced eye strain and discomfort during 3D viewing, which limited its widespread adoption. In the late 2000s, advances in digital technology led to a resurgence of 3D video services [1]. The release of Avatar in 2009 marked a turning point, prompting the production of more 3D content and the upgrade of theaters with digital equipment for 3D screenings. Nevertheless, public interest in 3D video services gradually waned because of the inconvenience of wearing 3D glasses, the limited availability of 3D content, and the eye strain experienced by some viewers. In addition, many viewers felt that 3D did not offer significantly more immersion than 2D and therefore preferred 2D video. Consequently, after a period of popularity, 3D video services fell out of favor.

However, 3D technology is now finding new applications beyond the film industry by merging with emerging technologies such as virtual reality (VR) and augmented reality (AR). VR and AR maximize immersion and interactivity, and combining them with 3D video services can greatly amplify their potential. In line with this trend, research and development in 3D technology is actively progressing in various application fields [2,3,4]. Delivering such hyper-realistic 3D services, however, requires two separate video streams, one for each eye, which must be received and combined into a 3D image [5]; this demands significant computational power and bandwidth. Receiving high-definition video over two channels roughly doubles the bandwidth required compared with traditional 2D video, and receiving and combining two high-definition streams within limited bandwidth has been a significant technical challenge. To address this bandwidth issue, a hybrid 3DTV system was proposed [6].

The hybrid 3DTV system is fully compatible with existing broadcasting systems and uses a method that combines high-definition DTV video with mobile-resolution video for the left and right images to create a 3D video. This allows for the delivery of 3D services without additional bandwidth consumption and has been standardized by the ATSC (Advanced Television Systems Committee) under the name SC-MMH (Service Compatible-Main and Mobile Hybrid Delivery) [7]. This system is an innovative technology that provides 3D services while maintaining compatibility with existing 2D-HD or -UHD broadcasts, and it is designed to simultaneously offer mobile SD, terrestrial HD, and stereoscopic 3D services in various user environments. As a result, users can enjoy an optimal 3D viewing experience on a range of devices, from mobile devices to large displays. Moreover, it has the advantage of providing 3D video and broadband services by utilizing existing 2D-HD (right view) and 2D-UHD (left view) broadcasts without the need for additional equipment.

One of the core technologies of the hybrid 3DTV system is Scalable High Efficiency Video Coding (SHVC) [8]. SHVC supports video services at multiple resolutions, enabling high-resolution video for fixed receivers (TVs) and low-resolution video for mobile receivers (e.g., smartphones) to be encoded simultaneously. This optimizes bandwidth usage while meeting diverse resolution requirements. In the hybrid 3DTV system, SHVC can exploit the strong correlation between the left and right images by coding the right-view video as the base layer (BL) and the left-view video as the enhancement layer (EL) at the same time, which makes it highly efficient for this application [9]. This approach is referred to as Cross-View SHVC.

However, hybrid 3DTV systems also have potential drawbacks. When the resolution difference is within an allowable range, a binocular suppression effect (BSE) can occur, in which the lower-resolution or distorted image seen by one eye is suppressed [10]. As a result, viewers perceive the higher-resolution image more dominantly, and the overall quality degradation is less noticeable. However, if the resolution difference is excessively large or the distortion and quality degradation are severe, the quality of experience (QoE) of the synthesized 3D video may deteriorate. To address these issues, various methods for ensuring symmetric image quality have been proposed, such as improvements to the conventional Cross-View SHVC structure and adjustments of camera distance, field of view (FOV), and screen distance to reduce geometric distortion [11,12,13,14]. In addition, a conditional replenishment algorithm that exploits the high correlation between binocular videos has been developed [15]. For each block, this algorithm compares the upscaled right image and the high-resolution left image with the original right image and selects the better candidate, thereby improving the right image and mitigating the asymmetry between the two views. It is also easy to implement and fast, because the decoder simply copies blocks according to the signaled mode without additional computation.

In this paper, we propose a new structure that combines the conditional replenishment algorithm with the SHVC codec to overcome these limitations and raise the quality of the right-view video to the level of the left-view video. In Section 2, we review SHVC, Cross-View SHVC for symmetric quality, and the conditional replenishment algorithm, which serve as the baselines and building blocks of the proposed method. Section 3 describes the proposed method, and Section 4 presents the test sequences, experimental conditions, and results. Finally, Section 5 presents the main conclusions.

2. Related Work

2.1. Hybrid 3DTV Utilizing Cross-View SHVC

HEVC (High Efficiency Video Coding) [16] is a video compression standard designed to maximize compression efficiency; it achieves roughly a 50% bitrate reduction at comparable visual quality relative to its predecessor, AVC (Advanced Video Coding, H.264). High-quality video can therefore be delivered with less data. HEVC achieves this with more sophisticated prediction tools and a flexible quadtree-based coding tree structure, making it suitable for transmitting high-resolution content such as 4K and 8K at lower bitrates.

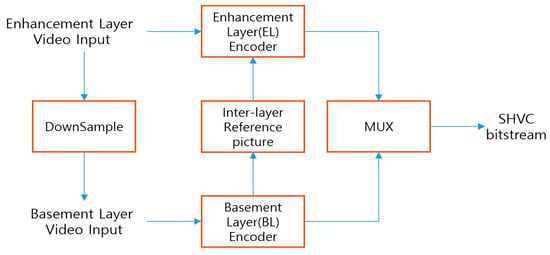

SHVC (Scalable High Efficiency Video Coding) [8] is an extension of HEVC designed to efficiently encode video streams at various resolutions and quality levels. As shown in Figure 1, SHVC uses a layered structure consisting of a base layer and one or more enhancement layers to adapt flexibly to different network conditions and device capabilities. The base layer contains a lower-resolution or lower-quality version of the video, while the enhancement layers reference the base layer to progressively improve resolution and quality. For instance, under slow network conditions, only the base layer may be transmitted to guarantee a minimum quality, and as network conditions improve, enhancement layers can be transmitted to gradually enhance the video quality.

Figure 1.

SHVC encoder.

The main difference between HEVC and SHVC is that SHVC introduces a hierarchical encoding structure that supports scalable video transmission, whereas HEVC focuses on maximizing efficiency through single-layer encoding. SHVC optimizes content delivery across a range of devices, from high-resolution TV displays to mobile streaming services, and adapts to varying network environments to enhance video quality and transmission efficiency. This layered approach provides more flexibility and efficient use of network bandwidth compared with the single encoding method used by HEVC.

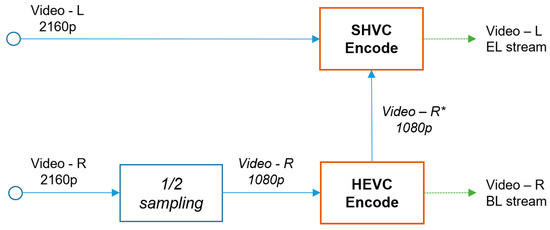

A method has been proposed to deliver 3D video services using the SHVC structure [9]. As illustrated in Figure 2, the low-resolution right-view video is fed into the base layer and the high-resolution left-view video into the enhancement layer of the SHVC encoder. Because the two views are highly correlated and differ only in viewpoint, inter-layer prediction allows both the high- and low-resolution videos to be transmitted efficiently, so 3D video services can be provided without additional bandwidth. We refer to this approach as Cross-View SHVC.

Figure 2.

Cross-View SHVC encoder for the hybrid 3DTV system.

Figure 2 and Figure 3 depict schematic diagrams of video encoders and decoders using Cross-View SHVC in a hybrid 3DTV system, which provides 3D video services while maintaining full compatibility with existing 2D broadcasting systems. In the transmission stage, the high-resolution left-view video is transmitted via a fixed-reception channel, while the low-resolution right-view video is transmitted via a mobile-reception channel. In the reception stage, each channel provides a 2D video service, and to deliver 3D video services, the received right-view video is first upscaled to match the resolution of the left-view video and then combined with it.

Figure 3.

Cross-View SHVC decoder for the hybrid 3DTV system.

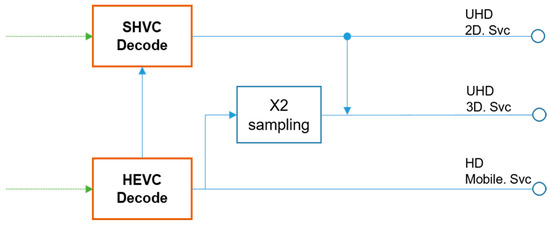

As demand for high-resolution video continues to grow, the high computational complexity of SHVC has been identified as a problem, and research on reducing it is ongoing [17,18]. Cross-View SHVC also has aspects that require improvement. Figure 4 shows an enhanced version of the structure in Figure 2 [11], which encodes a second enhancement layer while sharing the same base layer. This enhanced Cross-View SHVC provides, on average, 16% higher encoding efficiency than independent HEVC encoding of the left and right views. It also reduces frame-to-frame fluctuations in the Peak Signal-to-Noise Ratio (PSNR) and improves the PSNR of the lowest-quality frame by 0.6 dB. These improvements increase bandwidth efficiency as well as the overall quality of 3D video [11].
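PSNR, the objective quality metric used throughout the comparisons in this paper, is conventionally defined as PSNR = 10·log10(MAX²/MSE), where MAX is the peak sample value and MSE is the mean squared error between the reference and reconstructed frames. The sketch below computes it for one frame, assuming 8-bit samples (MAX = 255) and a single plane such as luma; the plane selection and bit depth used in the experiments are not stated here.

```python
import numpy as np


def psnr(reference: np.ndarray, test: np.ndarray, max_value: float = 255.0) -> float:
    """PSNR in dB between two equally sized frames (e.g. 8-bit luma planes)."""
    if reference.shape != test.shape:
        raise ValueError("frames must have the same shape")
    # Work in float64 so that uint8 subtraction cannot wrap around.
    diff = reference.astype(np.float64) - test.astype(np.float64)
    mse = float(np.mean(diff ** 2))
    if mse == 0.0:
        return float("inf")  # identical frames: distortion is zero
    return float(10.0 * np.log10(max_value ** 2 / mse))
```

Per-frame values like these are what the PSNR fluctuation and worst-frame figures above refer to; note that tools differ in whether a sequence PSNR is the mean of per-frame PSNRs or the PSNR of the mean MSE.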

Figure 4.

Improved Cross-View SHVC.

2.2. Conditional Replenishment Algorithm

In the hybrid 3DTV system, the resolution of the decoded right-view video is generally lower than that of the decoded left-view video. Specifically, the right-view video is assumed to have at most one quarter of the pixel count of the left-view video (for example, HD versus UHD, i.e., half the width and half the height). Therefore, to form a high-quality stereoscopic image in 3D video services, the right-view video must be upscaled before the left and right videos are combined. However, simple upscaling cannot recover the detail lost relative to the original video, which may result in asymmetric picture quality between the left and right views in the combined 3D video.
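The following sketch illustrates the resolution relationship and the upscaling step, assuming an HD (1920x1080) right view and a UHD (3840x2160) left view, which gives exactly a 1/4 pixel ratio. It uses nearest-neighbour pixel replication purely for simplicity; the upscaler is not specified here, and SHVC's own inter-layer resampling uses multi-tap interpolation filters that produce smoother results.

```python
import numpy as np


def upscale_2x_nearest(frame: np.ndarray) -> np.ndarray:
    """Double width and height by pixel replication (nearest neighbour)."""
    # Repeat every row, then every column: (H, W) -> (2H, 2W).
    return np.repeat(np.repeat(frame, 2, axis=0), 2, axis=1)


hd = np.zeros((1080, 1920), dtype=np.uint8)   # decoded right view (luma)
uhd = upscale_2x_nearest(hd)                  # now matches the left view size
print(uhd.shape)
print(hd.size / uhd.size)                     # pixel-count ratio of the two views
```

Whatever filter is used, the upscaled picture only interpolates the HD samples, which is why its PSNR against the UHD original stays below that of the natively coded left view.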

It has long been questioned whether a difference in image quality between the left and right views of S3D video causes discomfort during viewing. In practice, however, the discomfort experienced by users has generally been less severe than expected, owing to the binocular suppression effect (BSE) [10]. The BSE is a property of the human visual system whereby, when the two eyes receive images of different quality, the perceived quality is dominated by the higher-quality image. This effect has been shown to reduce the perceived impact of quality degradation in one view of the combined 3D video, making the reduced resolution less noticeable [19].

However, recent studies have shown that when the quality difference between the left and right images becomes too large, the binocular suppression effect no longer works effectively. This was confirmed with the DSIS (Double Stimulus Impairment Scale) method, one of the standard subjective video quality assessment methods [20]. In DSIS, the evaluator is first shown the unimpaired reference video and then a version containing some degradation, and is asked to rate the impairment of the second video relative to the first. The method is similar to the DCR (Degradation Category Rating) method described in ITU-T P.910 and uses the same 5-point impairment scale. DCR plays the reference and test videos in sequence once and asks the evaluator to rate the difference in one of five categories, whereas DSIS may repeat the pair once or twice according to a specified timing pattern.

These experiments showed that when the PSNR (Peak Signal-to-Noise Ratio) difference between the left- and right-view videos exceeds 1.9 dB on average, viewers readily notice a degradation in the quality of the S3D video [20]. This result supports the technical need to keep the image quality of S3D video symmetric.

Therefore, to ensure symmetry between the left and right images, we identified the need for a technology that minimizes the PSNR difference between the two videos. To address this, we developed the conditional replenishment algorithm [15]. This algorithm takes advantage of the fact that although there are differences between the left- and right-view images due to disparity, they still share a very high correlation. By comparing the images at a block level, the algorithm uses left-view image blocks to supplement and enhance the quality of the right-view image.

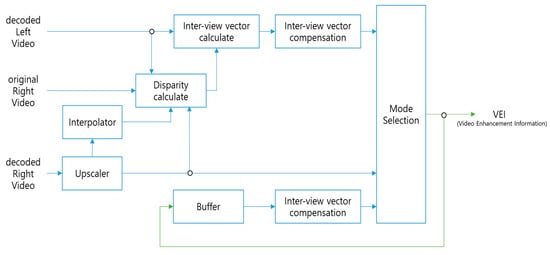

Figure 5 is a schematic diagram of the conditional replenishment algorithm. Block searching is performed using the high-resolution left-view video to improve the decoded low-resolution right-view video. During this process, replacement blocks are found in the higher-resolution left-view video, and the corresponding position is indicated by calculating the Inter-View Vector (IVV). This calculation is performed on blocks of various sizes based on a quad-tree structure. The quad-tree structure efficiently partitions and searches the blocks to find the optimal replacement blocks. As a result, Video Enhancement Information (VEI) is generated, which contains compressed data such as the mode, size of each block, and IVV.
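A minimal sketch of the block-level decision, under several assumptions: the original right view, the upscaled decoded right view, and the decoded left view are same-size 2-D arrays; the IVV is restricted to a horizontal shift, as for rectified stereo; distortion is measured as SSE; and the rate term uses fixed, hypothetical bit costs (`MODE_BITS`, `IVV_BITS`) with a hypothetical Lagrange multiplier `lam`. The actual search range, rate model and multiplier of the conditional replenishment algorithm are not given in this section, so this illustrates a quad-tree rate–distortion decision rather than the authors' implementation.

```python
import numpy as np

# Hypothetical rate model in bits; not taken from the paper.
MODE_BITS = 2
IVV_BITS = 8


def sse(a: np.ndarray, b: np.ndarray) -> float:
    """Sum of squared errors between two blocks."""
    return float(np.sum((a.astype(np.float64) - b.astype(np.float64)) ** 2))


def decide(original, upscaled, left, y, x, size, min_size=8, search=16, lam=10.0):
    """Return (rd_cost, node) for the square block at (y, x) with side `size`.

    node is ("up",), ("rep", dx) or ("split", [four child nodes]).
    """
    org = original[y:y + size, x:x + size]

    # Upscale mode: keep the upscaled decoded right-view block.
    best = (sse(org, upscaled[y:y + size, x:x + size]) + lam * MODE_BITS, ("up",))

    # Replenishment mode: horizontal-only disparity search in the left view.
    width = left.shape[1]
    for dx in range(-search, search + 1):
        if 0 <= x + dx and x + dx + size <= width:
            cand = left[y:y + size, x + dx:x + dx + size]
            cost = sse(org, cand) + lam * (MODE_BITS + IVV_BITS)
            if cost < best[0]:
                best = (cost, ("rep", dx))

    # Split mode: recurse into four quadrants (Z order) while the block can be divided.
    if size > min_size:
        half = size // 2
        children, total = [], lam * MODE_BITS
        for cy, cx in ((y, x), (y, x + half), (y + half, x), (y + half, x + half)):
            child_cost, child_node = decide(original, upscaled, left, cy, cx, half,
                                            min_size, search, lam)
            total += child_cost
            children.append(child_node)
        if total < best[0]:
            best = (total, ("split", children))
    return best
```

Each block keeps the cheapest of three options: the upscaled block, the best disparity-compensated left-view block together with its shift, or a split into four quadrants. A larger `lam` favors cheaper signaling (fewer replenished and split blocks); a smaller one favors lower distortion.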

Figure 5.

Conditional replenishment algorithm.

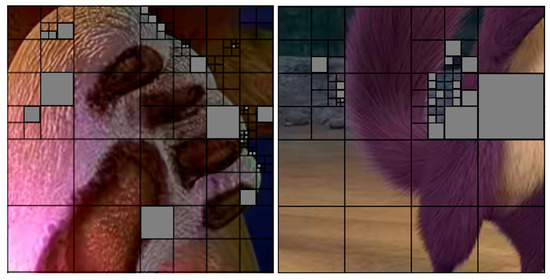

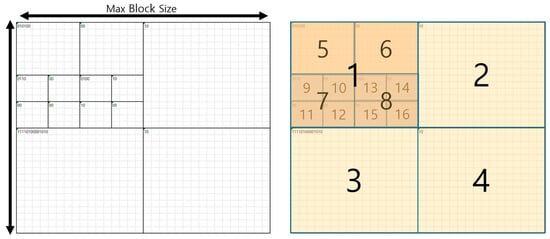

Figure 6 illustrates the application of the conditional replenishment algorithm, in which low-resolution blocks of the right-view video are replaced with high-quality substitute blocks extracted from the left-view video. Blocks selected for conditional replacement are marked in gray, and each block is assigned a specific mode. Figure 7 shows an example of the bits allocated according to these block modes; the stream is constructed by traversing the blocks sequentially in the order shown on the right. If the mode of a block is 01, the block is further divided into smaller blocks. If the mode is 10, the upscale mode is applied, and if it is 11, the replenishment mode is applied and IVV information is attached. For blocks that cannot be divided further, only the upscale and replenishment modes are possible, and VEI distinguishes them with the codes 00 and 01, respectively.
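The mode-coding rule described above can be sketched as a depth-first serializer. The block tree is written as nested tuples, `("up",)`, `("rep", dx)` or `("split", [four children])`, which is a hypothetical representation; the IVV is kept as a symbolic token because its binarization is not described, and it is assumed that a replenished block at the minimum size also carries an IVV.

```python
def serialize(node, size, min_size, out=None):
    """Append VEI mode codes (and IVV tokens) for `node` in depth-first order."""
    if out is None:
        out = []
    kind = node[0]
    at_min = size <= min_size  # block cannot be divided further
    if kind == "split":
        if at_min:
            raise ValueError("a minimum-size block cannot be split")
        out.append("01")
        for child in node[1]:
            serialize(child, size // 2, min_size, out)
    elif kind == "up":
        out.append("00" if at_min else "10")
    elif kind == "rep":
        out.append("01" if at_min else "11")
        out.append(f"ivv={node[1]}")  # IVV binarization not specified; kept symbolic
    else:
        raise ValueError(f"unknown mode {kind!r}")
    return out
```

For example, a 16x16 block split into four 8x8 minimum-size blocks (8 being an assumed minimum) with modes upscale, replenish (shift 3), upscale, upscale is written as `01`, `00`, `01`, `ivv=3`, `00`, `00`. Because code `01` means "split" above the minimum size but "replenish" at it, the decoder must track the block size to interpret each code.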

Figure 6.

Conditional replenishment algorithm application results.

Figure 7.

VEI stream construction method.

Figure 8 illustrates the SHVC codec integrated with the aforementioned technology. The conditional replenishment algorithm improves video quality by 2 to 5 dB in terms of PSNR and enhances RD performance through inter-frame encoding of disparity information [21]. This helps resolve the issue of asymmetric image quality between the left and right views, providing a more comfortable 3D viewing experience.

Figure 8.

SHVC codec incorporated with VEI.

3. Proposed Solution

As mentioned above, differences in resolution or quality between the left and right videos can cause discomfort when viewing 3D content. Resolving this asymmetry and ensuring symmetric quality requires a high-quality, high-resolution right-view video. We therefore propose a new encoding framework that combines the conditional replenishment algorithm with Cross-View SHVC. The framework is built on the non-HEVC option of the SHVC encoder. With this option, the SHVC encoder does not encode the base layer itself; instead, an already encoded and reconstructed base layer image is supplied and used as the inter-layer prediction signal for generating the enhancement layer stream. Because the base layer does not need to be re-encoded, encoding complexity is reduced and efficiency is improved, while high-quality video can still be delivered with modest bandwidth.

The Cross-View SHVC shown in Figure 4 uses the decoded base layer video as the inter-layer prediction signal; that is, the base layer, which contains the down-sampled right-view video, serves as the prediction signal when the enhancement layers are generated. However, this conventional structure does not fully resolve the quality difference between the left and right videos. The proposed method therefore departs from it in one respect: the base layer used to predict the left-view enhancement layer is kept unchanged, but the base layer used to predict the right-view enhancement layer is replaced with a high-quality image restored by the conditional replenishment algorithm. This image provides a better prediction signal during enhancement layer encoding and thus yields a higher-quality right-view video than the conventional method, which uses only the decoded base layer image, effectively addressing the asymmetry between the left and right videos.

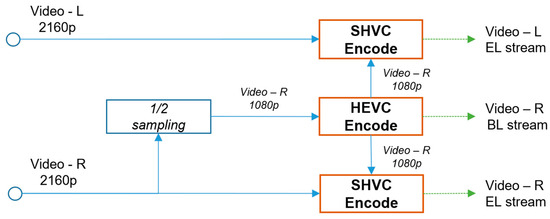

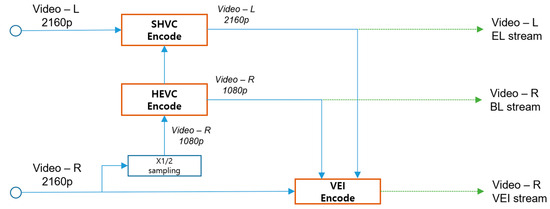

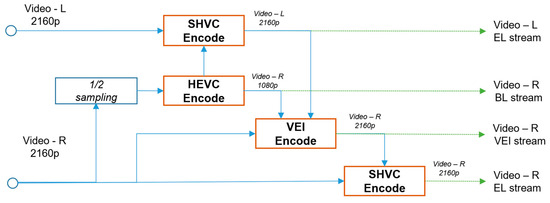

Figure 9 illustrates the overall flow of the proposed encoding framework, which includes step-by-step processes designed to ensure symmetrical quality between the left and right videos and achieve efficient 3D video encoding.

Figure 9.

Proposed encoding framework schematic diagram.

In the first step, the HD right-view video is encoded with HEVC. This produces the right-view base layer stream and the reconstructed (HEVC-decoded) right-view video. The base layer stream can be played back directly on mobile devices and similar low-resolution environments.

In the second step, the UHD left-view video is fed into the Cross-View SHVC encoder with the non-HEVC option, so that the reconstructed right-view base layer from the first step serves as the inter-layer reference. This produces the left-view enhancement layer stream and the reconstructed left-view video. Cross-View SHVC exploits the correlation between the left and right views to improve encoding efficiency and plays a crucial role in achieving high quality for the left-view video.

The third step applies VEI (Video Enhancement Information) encoding, i.e., the conditional replenishment algorithm. The HEVC-encoded right-view video, the Cross-View SHVC-encoded left-view video, and the original right-view video are used as inputs. The result is a VEI-applied right-view video in which low-quality blocks of the upscaled right view are replaced with disparity-compensated blocks from the left view, significantly improving overall quality. This step reduces the quality asymmetry between the two views and provides an enhanced right-view video.

In the final step, the VEI-applied right-view video is used as the base layer, and the original UHD right-view video is encoded as an enhancement layer with Cross-View SHVC. This produces the right-view enhancement layer stream, which provides the high-resolution right-view video.
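The four steps can be summarized as a data-flow sketch. Every codec stage is passed in as a callable; `hevc_encode`, `shvc_el_encode` and `vei_encode` are hypothetical names and do not correspond to interfaces of the HM or SHM reference software. The sketch only shows which reconstructed pictures feed which stage.

```python
from typing import Any, Callable, Dict, Tuple

Picture = Any  # placeholder for a (reconstructed) picture or video
Stream = Any   # placeholder for a coded bitstream


def proposed_encoder(
    right_hd: Picture,
    left_uhd: Picture,
    right_uhd: Picture,
    hevc_encode: Callable[[Picture], Tuple[Stream, Picture]],
    shvc_el_encode: Callable[[Picture, Picture], Tuple[Stream, Picture]],
    vei_encode: Callable[[Picture, Picture, Picture], Tuple[Stream, Picture]],
) -> Dict[str, Stream]:
    """Wire the four encoding steps together using injected (hypothetical) codecs."""
    # Step 1: HEVC base layer for the HD right view, plus its reconstruction.
    right_bl, right_bl_rec = hevc_encode(right_hd)
    # Step 2: left-view EL with the non-HEVC option; the reconstructed BL is the reference.
    left_el, left_rec = shvc_el_encode(left_uhd, right_bl_rec)
    # Step 3: VEI from the decoded right view, decoded left view and original right view.
    vei, right_vei_rec = vei_encode(right_bl_rec, left_rec, right_uhd)
    # Step 4: right-view EL with the non-HEVC option, predicted from the VEI-restored picture.
    right_el, _ = shvc_el_encode(right_uhd, right_vei_rec)
    return {"right_bl": right_bl, "left_el": left_el, "vei": vei, "right_el": right_el}
```

Passing stub functions that merely tag their inputs makes the dependencies visible: the left-view enhancement layer depends only on the HEVC base layer, whereas the right-view enhancement layer depends on the VEI-restored picture and therefore on all three earlier outputs.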

By following these steps, the proposed encoding framework effectively addresses the issue of asymmetry between the left and right videos while maintaining bandwidth efficiency and delivering high-resolution video quality. In particular, by combining VEI and Cross-View SHVC, the quality of the right-view video is improved to the same level as the left-view video, minimizing discomfort for viewers during binocular viewing. Additionally, depending on bandwidth conditions, there is the advantage of selectively providing right-view videos ranging from low to high quality. This allows for an optimized user experience by delivering the appropriate video quality based on network conditions or the performance of the user’s device. Moreover, by reducing encoding complexity and improving efficiency, the framework optimizes resource usage for service providers and enhances the overall system performance.

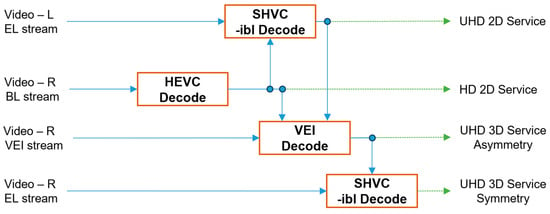

Figure 10 illustrates the overall structure of the decoding framework, visually representing the step-by-step process of decoding based on the streams generated using the proposed method. This figure systematically explains the flexible decoding process designed to adapt to various network environments and bandwidth conditions. The proposed encoding and decoding frameworks offer significant advantages by providing users with various options depending on network conditions and available bandwidth. Users can choose between high-quality 2D service and low-quality 2D service based on the current network environment. For 3D services, they can select between asymmetric quality and symmetric quality options. This optimizes the user experience, allowing for satisfactory content consumption depending on network performance and device capabilities. Notably, this decoding framework is distinguished by its ability to deliver high-quality services while minimizing bandwidth consumption through the efficient hierarchical coding method and the conditional replenishment algorithm. For instance, during the provision of 3D services, the framework addresses the quality asymmetry between left and right views by incorporating a process to restore the right view with high quality. These restored images are then used as high-quality predictive signals in the enhancement layer generation. Furthermore, the decoding process is designed to maintain service consistency even under deteriorating network conditions. For example, in situations where bandwidth is limited, lower-quality streams are selectively received and decoded. Conversely, when sufficient bandwidth is available, higher-quality streams are received to provide superior visual quality. This ensures that the proposed framework not only delivers personalized services but also efficiently utilizes network resources, contributing to the reduction in operational costs for service providers.
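A minimal sketch of bandwidth-driven service selection at the receiver. The stream names, the per-service stream sets, and the bitrates in the usage example are hypothetical; the stream dependencies are an assumption based on the layer structure described above (both enhancement layers are predicted from the right-view base layer, and the right-view enhancement layer is predicted from the VEI-restored picture).

```python
from typing import Dict, List, Optional, Tuple

# Services per viewing mode, listed from lowest to highest quality (assumed).
SERVICES: Dict[str, List[Tuple[str, List[str]]]] = {
    "2d": [
        ("2D low quality (right HD)", ["right_bl"]),
        ("2D high quality (left UHD)", ["right_bl", "left_el"]),
    ],
    "3d": [
        ("3D asymmetric", ["right_bl", "left_el"]),
        ("3D symmetric", ["right_bl", "left_el", "vei", "right_el"]),
    ],
}


def select_service(mode: str, bandwidth_kbps: float,
                   stream_kbps: Dict[str, float]) -> Optional[Tuple[str, List[str]]]:
    """Return the highest-quality service whose streams fit the bandwidth, or None."""
    chosen = None
    for name, streams in SERVICES[mode]:
        if sum(stream_kbps[s] for s in streams) <= bandwidth_kbps:
            chosen = (name, streams)  # later entries are higher quality
    return chosen


# Hypothetical bitrates; the VEI rate is 1/5 of the base layer rate, as in Section 4.1.
rates = {"right_bl": 3000, "left_el": 9000, "vei": 600, "right_el": 4000}
print(select_service("3d", 13000, rates))
print(select_service("3d", 20000, rates))
```

With 13 Mbps available, only the asymmetric 3D service fits in this example; with 20 Mbps, the receiver can also fetch the VEI and right-view enhancement layer streams for symmetric 3D.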

Figure 10.

Proposed decoding framework schematic diagram.

In conclusion, the decoding framework presented in Figure 10 visually demonstrates the entire process of optimized content delivery that reflects user preferences and network conditions. It highlights the key technical strengths of the proposed methodology and its contributions to enhancing the user experience.

4. Results

4.1. Test Condition and Experimental Method

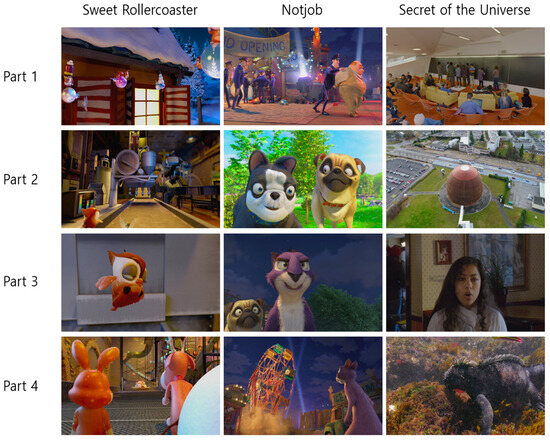

The test set consists of two UHD animation sequences and one UHD live-action sequence, each divided into four sections. Each section is chosen to have an SI (Spatial perceptual Information) value of 50, 100, 150, or 200, computed in accordance with ITU-T P.910 [22]. SI indicates the spatial complexity of a video frame, reflecting the amount of edge and texture detail it contains. Following P.910, each luminance frame is filtered with a Sobel filter, the standard deviation of the filtered frame is computed over all pixels, and the maximum of these values over all frames is taken as the SI value. A low SI value (50) indicates a relatively simple scene with few textures or details, whereas a high SI value (200) indicates a scene with highly complex textures and details. Therefore, as SI increases, the spatial complexity of the video increases and the content becomes harder to compress.
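A minimal sketch of the SI measure defined in ITU-T P.910, SI = max over time of the spatial standard deviation of the Sobel-filtered luminance frame. It assumes frames are given as 2-D luma arrays and simply drops the one-pixel border where the 3x3 kernel does not fit; implementations differ in how they treat borders, so values may differ slightly from other tools.

```python
import numpy as np


def sobel_magnitude(y: np.ndarray) -> np.ndarray:
    """Sobel gradient magnitude on the interior pixels of a luma plane."""
    y = y.astype(np.float64)
    # Horizontal response: right column minus left column, weights 1, 2, 1.
    gx = (y[:-2, 2:] + 2 * y[1:-1, 2:] + y[2:, 2:]
          - y[:-2, :-2] - 2 * y[1:-1, :-2] - y[2:, :-2])
    # Vertical response: bottom row minus top row, weights 1, 2, 1.
    gy = (y[2:, :-2] + 2 * y[2:, 1:-1] + y[2:, 2:]
          - y[:-2, :-2] - 2 * y[:-2, 1:-1] - y[:-2, 2:])
    return np.hypot(gx, gy)


def spatial_information(frames) -> float:
    """SI = maximum over frames of the spatial std of the Sobel-filtered luma."""
    return max(float(np.std(sobel_magnitude(f))) for f in frames)
```

A flat frame yields SI = 0, and a uniform gradient also yields SI = 0 because its Sobel magnitude is constant; SI grows only when edge strength varies across the frame, which is why it tracks texture and detail.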

The evaluation compares the reconstructed right-view video produced by the proposed coding method with HEVC and Cross-View SHVC codecs in terms of PSNR, and the sequences used for this evaluation are illustrated in Figure 11 and Table 1.

Figure 11.

Sequence snapshots used in the experiment.

Table 1.

Test sequence.

The encoding process for the experiment proceeds as follows:

- As a reference, the UHD right-view video is encoded with HEVC using QPs of 30, 32, 34, and 36.

- As a second reference, the UHD right-view video is encoded with SHVC using the non-HEVC option, with the same QPs as for HEVC.

- For the proposed coding structure, the bitrate of the Video Enhancement Information (VEI) is set to 1/5 of the base layer bitrate used in Cross-View SHVC. SHVC encoding with the non-HEVC option is then performed, using the VEI-reconstructed video as the base layer to generate the enhancement layer stream.

The QP range of 30 to 36 was chosen because a data rate of 15 Mbps is recommended for receiving 4K video, and when the content is compressed to a target bitrate of 15 Mbps, most frames fall within the QP range of 30 to 36.

The performance of the proposed method is evaluated using BDBR and BDSNR based on the videos obtained through the three methods [23,24].

BDBR (Bjøntegaard Delta Bitrate) measures the average bitrate difference, in percent, between two compression methods over the range of quality (PSNR) values covered by both. In other words, it indicates how much less (or more) bitrate one method requires than another to achieve the same quality.

BDSNR (Bjøntegaard Delta SNR), on the other hand, measures the average quality difference, in dB of PSNR, between two compression methods at the same bitrate, averaged over the bitrate range covered by both. It is used to compare video quality after compression.

These two metrics complement each other. BDBR measures compression efficiency in terms of bitrate, while BDSNR reflects quality differences in terms of the Signal-to-Noise Ratio. By using these two metrics together, one can clearly understand how much a new compression technique improves both in terms of bitrate and quality.
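Both metrics can be computed with the classic Bjøntegaard procedure: fit a cubic polynomial through the four RD points of each codec, integrate the difference between the two fits over the interval where both curves overlap, and divide by the interval length. For BDBR the fit is log-bitrate as a function of PSNR; for BDSNR it is PSNR as a function of log-bitrate. This is a sketch of that classic method; some later tools use piecewise cubic interpolation instead of a single cubic fit, so results can differ slightly from published tables.

```python
import numpy as np


def _avg_diff(x_a, y_a, x_b, y_b):
    """Mean of (fit_b - fit_a) over the overlapping x range, using cubic fits y(x)."""
    p_a = np.polyfit(x_a, y_a, 3)
    p_b = np.polyfit(x_b, y_b, 3)
    lo = max(min(x_a), min(x_b))
    hi = min(max(x_a), max(x_b))
    if hi <= lo:
        raise ValueError("RD curves do not overlap")
    int_a = np.polyval(np.polyint(p_a), hi) - np.polyval(np.polyint(p_a), lo)
    int_b = np.polyval(np.polyint(p_b), hi) - np.polyval(np.polyint(p_b), lo)
    return (int_b - int_a) / (hi - lo)


def bd_rate(rate_a, psnr_a, rate_b, psnr_b):
    """Average bitrate change of B versus A in percent at equal PSNR (negative = savings)."""
    d = _avg_diff(psnr_a, np.log(rate_a), psnr_b, np.log(rate_b))
    return (np.exp(d) - 1.0) * 100.0


def bd_psnr(rate_a, psnr_a, rate_b, psnr_b):
    """Average PSNR change of B versus A in dB at equal bitrate."""
    return _avg_diff(np.log(rate_a), psnr_a, np.log(rate_b), psnr_b)
```

Here A is the anchor (e.g., HEVC) and B the tested codec. Because only ratios of bitrates matter, using the natural logarithm instead of log10 gives the same BDBR, and the average-over-interval form makes BDSNR independent of the logarithm base as well.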

4.2. Test Results and Discussions

As discussed in Section 2.2, the PSNR of the right-view video should, on average, be no more than 1.9 dB below that of the left-view video to avoid subjective quality degradation [20]. The experimental results are analyzed with this criterion in mind.

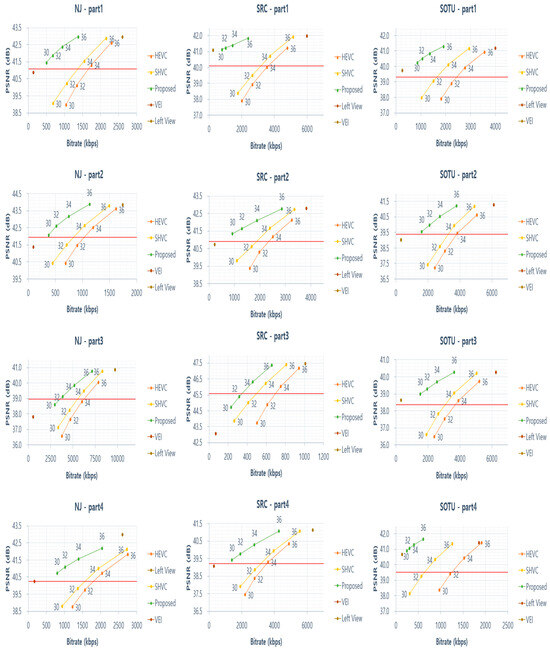

Figure 12 shows the RD (rate–distortion) curve for each sequence. An RD curve plots bitrate against reconstruction quality: rate is the bitrate in bits per second, and distortion is the quality degradation caused by compression. In this experiment, PSNR is used as the quality measure. Figure 12 shows the bitrate and PSNR values obtained with the proposed method, HEVC, and SHVC at QPs of 30, 32, 34, and 36.

Figure 12.

RD curve.

In Figure 12, the PSNR values of the left-view video for the NJ sequence’s Part 1 to Part 4 are 42.99 dB, 43.84 dB, 40.87 dB, and 42.15 dB, respectively. Therefore, for the right-view video to maintain subjective quality without degradation, its PSNR must reach at least 41.09 dB, 41.94 dB, 38.97 dB, and 40.25 dB for Part 1 to Part 4, respectively. These target PSNR values are indicated by red lines in Figure 12.
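The target values follow directly from subtracting the 1.9 dB threshold from each left-view PSNR, as the short check below reproduces. The helper names are illustrative, and the absolute-difference test in `is_symmetric` (with a small tolerance for floating-point rounding) is an assumption about how the criterion is applied.

```python
THRESHOLD_DB = 1.9  # maximum tolerable left/right PSNR gap reported in [20]

# Left-view PSNR (dB) of the NJ sequence, Parts 1 to 4, as given in the text.
left_psnr = {"Part 1": 42.99, "Part 2": 43.84, "Part 3": 40.87, "Part 4": 42.15}


def target_psnr(left_db: float, threshold_db: float = THRESHOLD_DB) -> float:
    """Minimum right-view PSNR that keeps the gap within the threshold."""
    return round(left_db - threshold_db, 2)


def is_symmetric(left_db: float, right_db: float, threshold_db: float = THRESHOLD_DB) -> bool:
    """True if the two views differ by at most the threshold (tolerance for rounding)."""
    return abs(left_db - right_db) <= threshold_db + 1e-9


for part, db in left_psnr.items():
    print(part, target_psnr(db))
```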

As shown by the RD curves in Figure 12, the proposed algorithm reaches the target PSNR at QP 30 for all parts except Part 3 of the NJ sequence, where it reaches the target PSNR at QP 32. In contrast, conventional HEVC and Cross-View SHVC require QP 34 to reach the target PSNR. This indicates that the proposed method can achieve the target PSNR at a lower bitrate than the existing methods.

Thus, the proposed framework demonstrates superior performance in terms of compression efficiency and video quality, as it achieves the target PSNR for the right-view video with a lower bitrate while avoiding subjective quality degradation.

To assess symmetric-quality performance, all test videos were evaluated with BDBR (Bjøntegaard Delta Bit Rate) and BDSNR (Bjøntegaard Delta Peak Signal-to-Noise Ratio). BDBR is the average bitrate change at equal quality and is the primary measure of compression efficiency; BDSNR is the average PSNR gain at equal bitrate and is used to compare video quality after compression.
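The Bjøntegaard calculation [23] behind these metrics can be sketched as follows: fit each RD curve with a cubic polynomial (log10 bitrate as a function of PSNR for BDBR, PSNR as a function of log10 bitrate for BDSNR), integrate both fits over the overlapping interval, and average the difference. This is a minimal pure-Python sketch of the original VCEG-M33 formulation, not the authors' tool; some later tools use piecewise cubic interpolation instead, so their values can differ slightly. All function names are illustrative, and each curve is assumed to have at least four points with distinct x values.

```python
import math
from typing import List, Sequence, Tuple

Fit = Tuple[List[float], float, float]  # (coefficients c0..c3, center, scale)


def _solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[piv] = a[piv], a[col]
        b[col], b[piv] = b[piv], b[col]
        for r in range(col + 1, n):
            f = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= f * a[col][c]
            b[r] -= f * b[col]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (b[r] - sum(a[r][c] * x[c] for c in range(r + 1, n))) / a[r][r]
    return x


def _fit_cubic(x: Sequence[float], y: Sequence[float]) -> Fit:
    """Least-squares cubic fit of y on x; x is centered and scaled for stability."""
    center = sum(x) / len(x)
    scale = max(abs(v - center) for v in x) or 1.0
    u = [(v - center) / scale for v in x]
    a = [[sum(t ** (i + j) for t in u) for j in range(4)] for i in range(4)]
    b = [sum(yv * t ** i for t, yv in zip(u, y)) for i in range(4)]
    return _solve(a, b), center, scale


def _integrate(fit: Fit, lo: float, hi: float) -> float:
    """Integral of the fitted cubic over [lo, hi] in the original x units."""
    coeffs, center, scale = fit
    prim = lambda t: sum(c * t ** (k + 1) / (k + 1) for k, c in enumerate(coeffs))
    return scale * (prim((hi - center) / scale) - prim((lo - center) / scale))


def _avg_gap(x_ref, y_ref, x_test, y_test) -> float:
    """Mean vertical gap (test minus reference) over the overlapping x range."""
    lo, hi = max(min(x_ref), min(x_test)), min(max(x_ref), max(x_test))
    if hi <= lo:
        raise ValueError("RD curves do not overlap")
    f_ref, f_test = _fit_cubic(x_ref, y_ref), _fit_cubic(x_test, y_test)
    return (_integrate(f_test, lo, hi) - _integrate(f_ref, lo, hi)) / (hi - lo)


def bd_rate(rate_ref, psnr_ref, rate_test, psnr_test) -> float:
    """BDBR (%): average bitrate change of test vs. reference at equal PSNR."""
    log_ref = [math.log10(r) for r in rate_ref]
    log_test = [math.log10(r) for r in rate_test]
    return (10 ** _avg_gap(psnr_ref, log_ref, psnr_test, log_test) - 1) * 100


def bd_psnr(rate_ref, psnr_ref, rate_test, psnr_test) -> float:
    """BDSNR (dB): average PSNR change of test vs. reference at equal bitrate."""
    log_ref = [math.log10(r) for r in rate_ref]
    log_test = [math.log10(r) for r in rate_test]
    return _avg_gap(log_ref, psnr_ref, log_test, psnr_test)


# Synthetic check: halving every bitrate at the same PSNR gives BDBR = -50%.
rates = [1000.0, 1500.0, 2300.0, 3500.0]
psnrs = [36.0, 38.0, 40.0, 42.0]
print(round(bd_rate(rates, psnrs, [r / 2 for r in rates], psnrs), 2))  # -50.0
```

A negative BDBR means the tested codec needs fewer bits than the reference for the same PSNR; a positive BDSNR means it delivers higher PSNR at the same bitrate.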

Table 2 compares the proposed method with encoding the right-view video using HEVC and Cross-View SHVC, listing the bitrate reduction and quality gain achieved by the proposed framework.

Table 2.

BDBR and BDSNR of the proposed method (excludes base layer bitrate).

The proposed method significantly outperforms both HEVC and Cross-View SHVC. In terms of BDBR, it reduces the bitrate by 57.78% on average compared with HEVC and by 45.10% compared with Cross-View SHVC; relative to HEVC, this more than halves the bandwidth needed for the same quality. Such bitrate reduction can be crucial in bandwidth-constrained settings such as real-time 3D streaming, low-bandwidth networks, and mobile devices. The BDSNR results likewise show that the proposed method provides higher video quality at the same bitrate: the average PSNR gain is 2.62 dB over HEVC and 1.81 dB over Cross-View SHVC. A PSNR difference of more than 1 dB is generally regarded as a visually noticeable difference in quality.
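To make the bandwidth reading concrete: in the Bjøntegaard method [23], the BD-rate $\Delta R$ is obtained from the average difference of log10 bitrates over the common PSNR range, so $1 + \Delta R/100$ can be read as the average (geometric-mean) bitrate ratio $\bar{\rho}$ of the proposed method to the anchor. Plugging in the reported averages gives the following worked arithmetic, which is an interpretation of the table averages rather than a separately measured result:

$$
\begin{aligned}
\bar{\rho} &= 10^{\,\overline{\Delta \log_{10} R}} = 1 + \frac{\Delta R}{100},\\
\text{vs. HEVC:}\quad \bar{\rho} &= 1 - 0.5778 = 0.4222 \;(\approx 42\%\ \text{of the HEVC bitrate}),\\
\text{vs. Cross-View SHVC:}\quad \bar{\rho} &= 1 - 0.4510 = 0.5490 \;(\approx 55\%\ \text{of the Cross-View SHVC bitrate}).
\end{aligned}
$$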

Table 3 compares BDBR and BDSNR computed over all encoded videos, that is, over the total bitrate of the hybrid 3DTV system. It shows how the proposed structure performs against standard codecs such as HEVC and Cross-View SHVC when the left and right videos are encoded at UHD resolution, and therefore reflects overall 3D video encoding performance.

Table 3.

BDBR and BDSNR of the proposed method (includes total bitrate of hybrid 3DTV system).

Across all sequences, the proposed structure reduced the total bitrate by 10.53% on average compared with the HEVC standard and by 15.24% compared with Cross-View SHVC. At the same bitrate, it improved PSNR by 1.04 dB over HEVC and by 1.76 dB over Cross-View SHVC.

5. Conclusions

In this paper, we propose and analyze a new encoding framework that addresses asymmetric image quality between the left and right views in 3D video services while encoding 3D video more efficiently. The framework combines the conditional replenishment algorithm with Cross-View SHVC and uses Video Enhancement Information (VEI) technology to significantly improve the quality of the right-view video over conventional HEVC and Cross-View SHVC while maximizing encoding efficiency.

The experimental results demonstrate that the proposed method outperforms HEVC and Cross-View SHVC in both bitrate reduction and quality improvement. Compared with encoding the right-view video using HEVC or Cross-View SHVC, the proposed framework delivers higher PSNR at lower bitrates without subjective quality degradation. On average, it reduces the bitrate by 57.78% and improves PSNR by 2.62 dB relative to HEVC, and reduces the bitrate by 45.10% and improves PSNR by 1.81 dB relative to Cross-View SHVC. These gains translate into bandwidth savings and more efficient network transmission.

Moreover, the proposed framework is effective in ensuring quality in 3D video services as a whole. When encoding left and right videos at UHD resolution, the proposed structure achieved an average bitrate reduction of 10.53% compared with HEVC and 15.24% compared with Cross-View SHVC, while improving PSNR at the same bitrate by 1.04 dB over HEVC and by 1.76 dB over Cross-View SHVC.

The proposed framework outputs streams at multiple resolutions, allowing users to choose among different quality levels. This enables high-quality service even in low-bandwidth environments, making the framework well suited to OTT and broadcast environments. However, the framework requires additional bandwidth, and the left and right views cannot be assumed to have identical quality. As decoder processing power advances, future research should focus on enhancing video quality at the decoder side.

Author Contributions

Conceptualization, J.L., S.L. and D.K.; methodology, J.L., S.L. and D.K.; software, J.L. and S.L.; validation, J.L., S.L. and D.K.; formal analysis, J.L. and S.L.; investigation, J.L. and S.L.; resources, J.L., S.L., S.K. and D.K.; data curation, J.L. and S.L.; writing—original draft preparation, J.L. and S.L.; writing—review and editing, J.L. and D.K.; visualization, J.L. and S.L.; supervision, D.K.; project administration, D.K. and S.K.; funding acquisition, D.K. and S.K. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by the Electronics and Telecommunications Research Institute (ETRI) [Project Number: 24ZC1100, Research on Original Technologies for Hyper-realistic Stereoscopic Spatial Media and Content].

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Triantafyllidis, G.A.; Enis Çetin, A.; Smolic, A.; Onural, L.; Sikora, T.; Watson, J. 3DTV: Capture, transmission, and display of 3D video. EURASIP J. Adv. Signal Process. 2008, 2009, 585216.

2. Kryvoshei, O.; Kamencay, P.; Stech, A. Server Implementation Design for Adaptive 3D Video Streaming. In Proceedings of the 2024 34th International Conference Radioelektronika (RADIOELEKTRONIKA), Zilina, Slovakia, 17–18 April 2024; pp. 1–6.

3. Chakareski, J.; Khan, M. Live 360° Video Streaming to Heterogeneous Clients in 5G Networks. IEEE Trans. Multimed. 2024, 26, 8860–8873.

4. Singla, S.; Saini, P.; Malhotra, K.; Kukreja, M.; Kiran, G.; Bhandari, R. Exploration and Analysis of Various Techniques used in 2D to 3D Image and Video Reconstruction. In Proceedings of the 2024 International Conference on Emerging Innovations and Advanced Computing (INNOCOMP), Sonipat, India, 25–26 May 2024; pp. 8–15.

5. Fehn, C.; de la Barre, R.; Pastoor, S. Interactive 3-DTV-Concepts and Key Technologies. Proc. IEEE 2006, 94, 524–538.

6. Doc. S12-237r6; Candidate Standard: Revision of 3D-TV Terrestrial Broadcasting, Part 5—Service Compatible 3D-TV Using Main and Mobile Hybrid Delivery. ATSC: Washington, DC, USA, 2015.

7. ATSC A/104; 3D-TV Terrestrial Broadcasting, Part 5-Service Compatible 3D-TV Using Main and Mobile Hybrid Delivery. Advanced Television Systems Committee Standards: Washington, DC, USA, 2014.

8. Boyce, J.M.; Ye, Y.; Chen, J.; Ramasubramonian, A.K. Overview of SHVC: Scalable Extensions of the High Efficiency Video Coding Standard. IEEE Trans. Circuits Syst. Video Technol. 2015, 26, 20–34.

9. Kim, S.; Lee, J.; Choi, J.; Kim, J. Technology and Standardization Trend of Fixed & Mobile Hybrid 3DTV Broadcasting. Commun. Korean Inst. Inf. Sci. Eng. 2011, 29, 38–44.

10. Asher, H. Suppression theory of binocular vision. Brit. J. Ophthalmol. 1953, 37, 37–49.

11. Kang, D.; Jung, K.; Kim, J.; Kim, J.H. Hybrid 3DTV Systems Based on the CrossView SHVC. J. Broadcast Eng. 2018, 23, 316–319.

12. Gao, Z.; Hwang, A.; Zhai, G.; Peli, E. Correcting geometric distortions in stereoscopic 3D imaging. PLoS ONE 2018, 13, e0205032.

13. Fezza, S.A.; Larabi, M.-C. Perceptually Driven Nonuniform Asymmetric Coding of Stereoscopic 3D Video. IEEE Trans. Circuits Syst. Video Technol. 2016, 27, 2231–2245.

14. Farid, M.S.; Babar, B.U.Z.; Khan, M.H. Efficient representation of disoccluded regions in 3D video coding. Ann. Telecommun. 2024.

15. Jung, K.-H.; Bang, M.-S.; Kim, S.-H.; Choo, H.-G.; Kang, D.-W. Visual quality improvement for hybrid 3DTV with mixed resolution using conditional replenishment algorithm. In Proceedings of the 2013 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 11–14 January 2013; pp. 264–265.

16. Sullivan, G.J.; Ohm, J.-R.; Han, W.-J.; Wiegand, T. Overview of the High Efficiency Video Coding (HEVC) Standard. IEEE Trans. Circuits Syst. Video Technol. 2012, 22, 1649–1668.

17. Parois, R.; Hamidouche, W.; Vieron, J.; Raulet, M.; Deforges, O. Efficient parallel architecture for a real-time UHD scalable HEVC encoder. In Proceedings of the 2017 25th European Signal Processing Conference (EUSIPCO), Kos, Greece, 28 August–2 September 2017; pp. 1465–1469.

18. Wang, D.; Sun, Y.; Zhu, C.; Li, W.; Dufaux, F.; Luo, J. Fast Depth and Mode Decision in Intra Prediction for Quality SHVC. IEEE Trans. Image Process. 2020, 29, 6136–6150.

19. Stelmach, L.; Tam, W.J.; Meegan, D.; Vincent, A. Stereo image quality: Effects of mixed spatio-temporal resolution. IEEE Trans. Circuits Syst. Video Technol. 2000, 10, 188–193.

20. Son, I.; Lee, S.; Kim, S.; Jung, S.; Jung, K.; Kang, D. Binocular suppression analysis for efficient compression of UHD stereo images. In Proceedings of the 2022 37th International Technical Conference on Circuits/Systems, Computers and Communications (ITC-CSCC), Phuket, Thailand, 5–8 July 2022; pp. 796–798.

21. Lee, S.-J.; Son, I.; Kim, S.-H.; Jung, S.-W.; Jung, K.-H.; Kang, D.-W. Performance Improvement of Conditional Replenishment Algorithm Using Temporal Correlation. J. Korean Inst. Commun. Inf. Sci. 2023, 48, 275–281.

22. ITU-T Recommendation P.910. Subjective Video Quality Assessment Methods for Multimedia Applications. 2008. Available online: https://www.itu.int/rec/t-rec-p.910 (accessed on 29 October 2023).

23. Bjontegaard, G. Calculation of average PSNR differences between RD-curves (VCEG-M33). In Proceedings of the VCEG Meeting (ITU-T SG16 Q. 6), Pattaya, Thailand, 4–6 December 2001.

24. Pateux, S.; Jung, J. An excel add-in for computing Bjontegaard metric and its evolution. In Proceedings of the VCEG Meeting, Marrakech, Morocco, 15–16 January 2007.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).