Abstract

This work aims to provide a health and safety monitoring system for elderly people living at home. Two fall detection schemes are proposed, selected according to whether the target is visible. When the target is visible, a vision-based fall detection algorithm is used, in which an image of the target captured by a camera is passed to an improved You Only Look Once version 5s (YOLOv5s) model for posture detection. When the target is not visible, a WiFi-based fall detection algorithm is used, in which channel state information (CSI) signals are used to estimate the target’s posture with an improved long short-term memory (LSTM) model. In the improved YOLOv5s model, an adaptive picture scaling technique named Letterbox is used to preserve the aspect ratio of images in the dataset, the squeeze-and-excitation (SE) and coordinate attention (CA) modules are added to the Backbone network, and the weighted bidirectional feature pyramid network (BiFPN) is added to the Neck network. In the improved LSTM model, a Hampel filter removes noise from the CSI signals and a convolutional neural network (CNN) is combined with the LSTM to process the images built from CSI signals; at each point in time, the model therefore analyzes the amplitude of 90 CSI signals. The final health status of the target is obtained by combining the fall detection results of the improved YOLOv5s and LSTM models with the target’s physiological information. 
Experimental results show the following: (1) the detection precision, recall rate, and average precision of the improved YOLOv5s model are increased by 7.2%, 9%, and 7.6%, respectively, compared with the original model, and there is almost no missed detection of the target; (2) the detection accuracy of the improved LSTM model is improved by 15.61%, 29.36%, and 52.39% compared with the original LSTM, CNN, and neural network (NN) models, respectively, while the convergence speed is improved by 90% compared with the original LSTM model; and (3) the proposed algorithm can meet the requirements of accurate, real-time, and stable applications of health monitoring.

1. Introduction

The rapid development of science and technology has led to a general improvement in medical care and living standards. As a result, people are living longer, and the aging of the world population has become a global trend. According to the World Health Organization (WHO), the number of elderly people aged 60 and above is expected to rise to 2 billion globally by the year 2050. The increase in the total number of elderly people will bring challenges to public health and medical systems worldwide.

According to WHO statistics, an estimated 684,000 people worldwide die from falls each year, with adults over the age of 60 accounting for the largest proportion of fatal falls. The global incidence of falls is 28% to 35% among people over 65 years old and 32% to 42% among people over 70 years old. According to the Chinese Center for Disease Control and Prevention, falls have become the leading cause of injury and death among people over 65 years old in China.

Therefore, the health and safety of the elderly has become an important issue of concern. Many electronic products related to elderly health monitoring have been promoted, such as smart bracelets [1,2] and intelligent monitoring systems [3]. At the same time, to improve the accuracy of fall detection algorithms, many related studies have been conducted in recent years. These have mainly used sensors, cameras, and WiFi signals to realize fall detection.

The main principle of sensor-based fall detection algorithms is collecting data through one or more sensors (accelerometer, gyroscope, pressure sensors, etc.) that are placed on the human body or within the detection environment. These data are then processed by intelligent algorithms and the corresponding target’s posture is revealed.

Fall detection based on machine vision or video uses a camera to capture movement images of the human body and analyzes the human posture and movement in these images through computer vision algorithms to determine whether a fall has occurred.

The principle of fall detection algorithms based on WiFi signals mainly relies on the analysis and processing of CSI. During the propagation of WiFi signals, different propagation paths will be generated due to phenomena such as reflection, diffraction, and scattering when encountering objects. The signal strength and phase information along these paths is transmitted to the receiver, forming the CSI data. Through the analysis and processing of these CSI data, features related to human activities can be extracted to realize fall detection.

2. Related Works

2.1. Fall Detection Based on Sensors

For the elderly, falls are a severe issue, since they can result in life-threatening injuries or even death. The probability of a successful recovery can be considerably increased by prompt intervention following a fall, yet it may be difficult for caregivers to be aware of a fall as soon as it occurs. To address this issue, a wearable device that uses several sensors to detect falls and alert designated caregivers via a mobile app is described. The Internet of Things (IoT) design of this system is built on sensors that measure human acceleration and angular velocity and then transmit to the cloud, where machine learning algorithms analyze the information to determine if a fall has occurred. If a fall is detected, the system sends relevant information to the caregiver, enabling a timely and informed response [4]. The authors of [5] proposed a fall detection method based on a wearable pressure sensor. Firstly, an analysis of the principle and characteristics of the effect of the wearable pressure sensor on fall detection was undertaken based on the physiological structure of the human foot. Secondly, based on the framework of the fall detection system, the settings of pressure sensors and system integration methods, special methods of processing pressure data, and different judgment methods of the fall detection model were adopted. At the same time, in order to investigate the problems of fall detection methods (poor robustness and sparse audio data), the authors of [6] constructed a noisy dataset with background sound and designed a one-dimensional residual neural network model with the original audio waveform as an input of the convolutional network, 1D-Re CNN. Then, a fall detection framework based on heterogeneous sensor data fusion was designed. By analyzing the heterogeneity of acceleration data and audio data, D-S evidence theory was used to integrate acceleration output and audio output at the decision level.

To further improve the accuracy of fall detection methods, the authors of [7] have proposed a human fall detection method based on multi-sensor fusion, mainly aiming to tackle the problem of single optical sensors or millimeter-wave radar sensor accuracy in human fall detection applications, which are affected by the environment. In this work, CCD optical sensors and millimeter-wave radar sensors were used to simultaneously detect the target, with the obtained multi-sensor data being used for decision-level fusion detection. The authors of [8] proposed a deep classifier for pre-impact fall detection. Convolutional Neural Networks (CNNs) and bidirectional gated recurrent units (BiGRUs) with residual connections were combined to detect a fall in the pre-impact phase. In this work, the sensors are used to collect information on posture, and raw sensor data underwent z-score normalization followed by magnitude calculation; this was then fed into the proposed deep learner for fall classification.

The sensor-based fall detection algorithm has good real-time performance but shows certain problems in adaptability to dynamic scenes and is sensitive to irrelevant external objects. Using cameras or combining sensors with cameras for fall detection can ameliorate these deficiencies.

2.2. Fall Detection Based on Video

The work in [9] employed a camera, sensors, positioning module, heart rate module, and narrowband Internet of Things (NB-IoT) module to collect and transmit information, achieving outdoor and indoor fall detection of elderly people. Threshold detection and support vector machine (SVM) classification were used to improve the fall detection accuracy. The monitoring app was used to query status and location information on the elderly, and this method can effectively detect elderly people’s falling behavior and send alarm information and positioning information to the guardian, realizing the intelligent care of the elderly.

The authors of [10] designed an innovative architecture using consumer webcams to detect typical abnormal behaviors in the elderly, such as falling, attacking, and wandering. A deep learning model was designed to detect and classify abnormal behaviors through robust skeleton joint extraction that takes the spatio-temporal context into account. Images were taken with a web camera fixed at the typical activity points of the elderly and then fed to a local server, equipped with a Graphics Processing Unit (GPU), for online monitoring and alarm. In addition, a camera-based human posture analysis method using an Intel RealSense Depth Camera D435 was introduced in [11]. This method integrated the OpenCV, MediaPipe, and NumPy Python libraries to analyze human posture in three-dimensional space. This depth-based analysis included calculating the depth of the pose landmarks, estimating the depth of the body’s center of mass, and tracking the speed of motion.

With the development of fast target detection algorithms, some researchers have recently investigated human posture detection based on YOLO [12], achieving some impressive results. However, the YOLO model’s performance is not yet sufficient for fall detection applications, so improvements to its detection accuracy are necessary. For example, the multi-target detection layer of YOLOv5s was improved to make the model more accurate on small-sized datasets. Further, the loss function can be replaced or improved to obtain faster and higher-quality detection results [13]. Alternatively, the performance of YOLOv5 can be improved by changing some of the modules [14], such as by adding attention models [15], among others [16].

The video-based fall detection method has high precision and strong anti-interference ability, but it often makes mistakes when the target is occluded. Passive monitoring systems based on wireless signals handle this case well, and human pose estimation based on the WiFi channel state has become one application of human pose estimation algorithms.

2.3. Fall Detection Based on WiFi Signals

Early WiFi-based wireless perception studies mainly focused on the received signal strength indicator (RSSI), a coarse-grained signal that cannot be applied to fall detection tasks. With the open-source release of the CSI tool, researchers began to extract CSI from specific network cards and apply it to fall detection research [17]. Wang, Y. et al. proposed WiFall [18], a CSI-based fall detection method that applies a moving average to reduce data noise. The work in [19] describes a low-cost, accurate, and non-invasive wireless fall detection system using a commercial off-the-shelf 802.11n Wireless Local Area Network (WLAN) interface card. The system uses the phase difference between two receiving antennae to detect human falls. In [20], Principal Component Analysis (PCA) was applied to the CSI amplitude to extract the main part of the data stream, a short-time Fourier transform extracted data features in the frequency domain, and several frequency components were extracted simultaneously to avoid sparsity in the feature vector. The resulting feature vector was used as the signal for fall detection. Recently, machine learning and its combination with other methods to process CSI signals and achieve fall detection have been attractive topics [21,22,23,24,25,26,27]. Ding, J. et al. introduced a smart home passive device-free system based on a commodity WiFi framework named FDS [21], which collected WiFi signals in real time and transmitted them to a data analysis platform, adopting the Discrete Wavelet Transform (DWT) method to eliminate the influence of random data noise. Finally, a recurrent neural network (RNN) model was used to classify human motion and automatically identify the fall state. Additionally, a novel deep learning-based fall detection technique was proposed in [22]. First, different WiFi CSI collection tools were implemented and their potential for fall detection was evaluated. 
Then, to develop a high-precision fall detection technique, a comprehensive dataset consisting of more than 700 CSI samples was constructed, including different fall types and other daily activities, performed in four different indoor environments. Finally, a deep learning-based classifier with an image classification algorithm was developed. In [23], a new method for intelligent real-time fall detection using a fine-grained Wi-Fi signal CSI was proposed. The proposed deep CNN model, FallCNN, was trained on a Wi-Fi CSI dataset that collected seven everyday human activities in an indoor environment. FallCNN used time series data enhancement and it benefited from raw Wi-Fi CSI data and output binary classifications for fall detection, improving its performance and generalization ability.

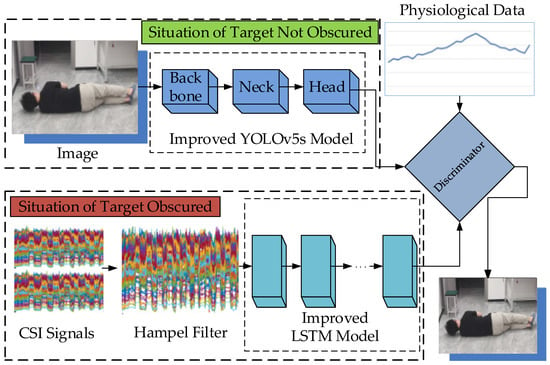

In this study, to improve the fall detection accuracy and make the detection model more lightweight, a dual model of elderly fall detection was designed, as shown in Figure 1.

Figure 1.

A dual model of human posture detection in this work.

- (1) When the monitoring target is visible, the camera can capture an image of the target. An improved YOLOv5s model then processes the image data and detects the target posture. The following improvements to the YOLOv5s model are made:

- Firstly, the adaptive picture scaling technique (Letterbox) is applied to the input images to improve the detection effect.

- Secondly, we add the SE and CA attention mechanisms to the Backbone network of the YOLOv5s model and change their activation functions, so that the model pays more attention to the detected target and better understands the spatial structure of the input data.

- Finally, we introduce the BiFPN into the Neck network of the YOLOv5s model to fuse feature maps of different scales and strengthen the feature information.

- (2) When the monitoring target is invisible, the camera cannot capture an image of the target. In this case, an improved LSTM model processes the collected CSI data and outputs the target posture. The following improvements to the LSTM model are made:

- CSI data noise is eliminated by using the Hampel filter.

- This paper first combines the CNN model with the long short-term memory (LSTM) neural network architecture to improve the LSTM model’s prediction accuracy and reduce the algorithm’s complexity.

- The input of the improved LSTM model is changed to the amplitudes of 90 CSI signals in the dataset, and its output is changed to the target posture determined for the corresponding signal.

- (3) When the improved YOLOv5s or improved LSTM model detects a fall posture, a discriminator designed to improve the accuracy of judgment combines the models’ detection result with the physiological data of the target to deliver the final target posture.

The key contributions of this work are as follows:

- The proposed dual fall detection model can detect the target’s posture whether it is visible or not, simultaneously solving the problem of fall detection in different scenarios.

- A small-scale database suitable for the home environment is established, and YOLOv5s, the most lightweight of the YOLOv5 series, is improved and applied to human fall detection in a home environment, improving detection accuracy and reducing the detection system’s complexity.

- The CNN model and LSTM neural network architecture are combined to process images from CSI human posture data, capturing long-term dependencies in sequence data and effectively extracting local CSI data features, providing a more accurate and stable fall detection model.

- Image data, CSI data, and target physiological data are combined to further improve the fall judgment accuracy and detect the target’s health status in real time, providing elderly people with a healthy and comfortable living environment.

The overall composition of this article is as follows. The proposed YOLOv5s-based fall detection algorithm is presented in Section 3, with an introduction of the YOLOv5s model and the improved YOLOv5s model. Section 4 describes the general framework of the proposed fall detection with WiFi signal based on the LSTM model, exploring WiFi signal sensing, the fall prediction principle based on WiFi signal, CSI data acquisition, CSI data preprocessing, and the principle of the proposed WiFi signal-based fall detection algorithm. Section 5 presents the structure and principle of the discriminator. The experiment deployment and experiment results are given in Section 6. Section 7 presents the conclusion.

3. Fall Detection Based on the Improved YOLOv5s

3.1. Introduction of the YOLOv5s Model

YOLOv5 is a widely used object detection algorithm with good accuracy and speed. It belongs to the YOLO family of detectors initiated by Joseph Redmon and continued by Alexey Bochkovskiy and other authors [28]; YOLOv5 itself was released by Ultralytics. It adopts an anchor-based detection method and uses a series of optimization strategies, such as feature pyramid network and adaptive incremental training, to improve detection accuracy and speed. YOLOv5s is the smallest version in the YOLOv5 series, with a fast detection speed and small model size, making it suitable for object detection in resource-constrained environments such as mobile devices. The YOLOv5s network’s structure is introduced in detail below.

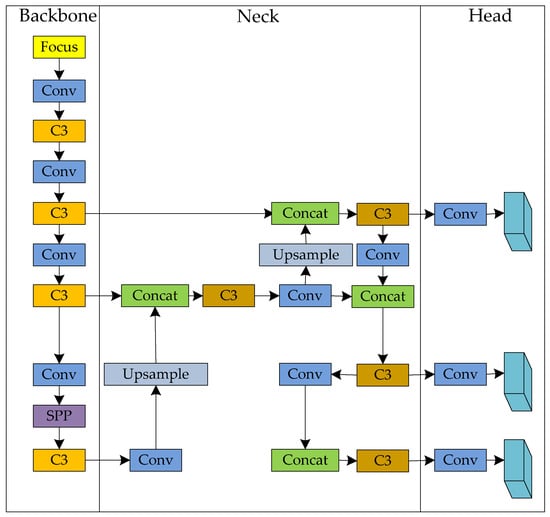

As shown in Figure 2, the YOLOv5s network structure can be divided into three parts: Backbone, Neck, and Head. Among them, the Backbone is used to extract features from the image, the Neck is used to fuse features of different resolutions, and the Head is used to predict the category and position of objects. In detail, each part functions as follows.

Figure 2.

YOLOv5s network structure.

Backbone: The Backbone network mainly extracts image feature points. It converts original input images into multi-layer feature maps for subsequent target detection tasks. The Backbone mainly comprises Conv modules, C3 modules, and Spatial Pyramid Pooling—Fast (SPPF) modules.

Neck: In the target detection algorithm, the Neck usually combines feature maps of different levels and generates feature maps with multi-scale information to improve the target detection accuracy.

Head: The Head performs object detection on the feature pyramid; it includes some convolutional layers, pooling layers, and fully connected layers.

3.2. Improved YOLOv5s Model

3.2.1. Letterbox

The images in the training dataset are not uniform in size, which may affect the model’s accuracy, so they must be brought to a uniform size. A plain resize, however, is likely to distort the images and lose information. Hence, this paper uses the Letterbox technique of YOLOv5s to process the images and improve detection accuracy.

Letterbox mainly aims to make the best use of the information within the network’s receptive field while keeping the overall scaling ratio of the image consistent. A consistent ratio means that the width and height are shrunk by the same factor. Effective use of receptive field information means that, after shrinking, the side that falls short of the target size is padded with gray until its length is evenly divisible by the receptive field.
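The scaling and padding arithmetic described above can be sketched as follows. This is a minimal illustration rather than the authors’ implementation: the function name `letterbox_geometry`, the 640-pixel target size, and the divisor of 32 (standing in for the receptive field) are assumptions chosen for the example, and the sketch only computes sizes instead of resampling pixels.

```python
def letterbox_geometry(width, height, target=640, stride=32):
    """Return (new_w, new_h, pad_w, pad_h) for aspect-preserving scaling.

    target and stride are illustrative assumptions, not values from the paper.
    """
    # One shared scale factor keeps the aspect ratio unchanged.
    scale = min(target / width, target / height)
    new_w, new_h = round(width * scale), round(height * scale)
    # Pad only as much as needed for each side to be divisible by the stride.
    pad_w = (target - new_w) % stride
    pad_h = (target - new_h) % stride
    return new_w, new_h, pad_w, pad_h


new_w, new_h, pad_w, pad_h = letterbox_geometry(1280, 720)
print(new_w + pad_w, new_h + pad_h)  # final padded size
```

Padding only up to the next multiple of the divisor, rather than to a full square, keeps the number of padded pixels small, which is the "effective use" the paragraph refers to.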

3.2.2. Attention Mechanism

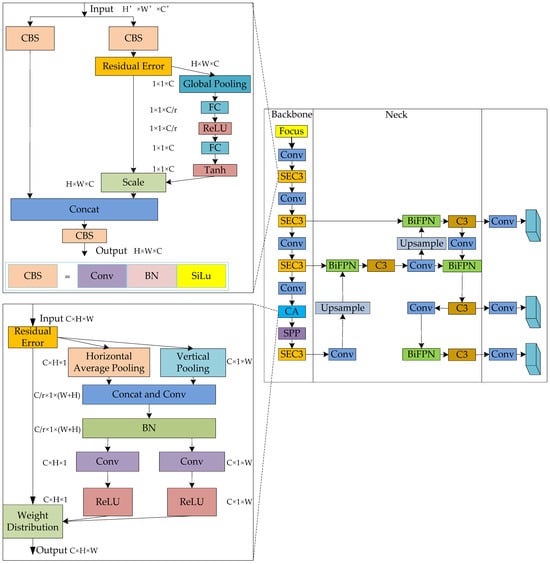

This work introduces the SE channel attention mechanism into the original YOLOv5s model’s Backbone network so that the network pays more attention to the detected target, improving the detection effect. At the same time, to enhance the YOLOv5s model’s understanding of the input data’s spatial structure, a CA module is introduced. The detection accuracy of the model can be improved by adding an attention mechanism.

- 1. SE and Tanh activation function are introduced

The SE module aims to improve the model’s performance by squeezing and exciting the input features. Specifically, the SE attention mechanism consists of two steps: squeeze and excitation [29]. In the squeeze step, the input feature map is compressed into a vector by a global average pooling operation, which is then mapped to a smaller vector through a fully connected layer. By shrinking the output $U$ of the convolution operator through its spatial dimensions $H \times W$, the statistic $z \in \mathbb{R}^{C}$ is generated, where the $c$-th element of $z$ is calculated as follows:

$$z_c = F_{sq}(u_c) = \frac{1}{H \times W}\sum_{i=1}^{H}\sum_{j=1}^{W} u_c(i, j)$$

where

$$u_c = v_c * X = \sum_{s=1}^{C'} v_c^{s} * x^{s}$$

Here, $V = [v_1, v_2, \ldots, v_C]$ is the learned set of filter kernels, with $v_c$ referring to the parameters of the $c$-th filter, and $v_c^{s}$ is a 2D spatial kernel denoting a single channel of $v_c$ that acts on the corresponding channel of $X$.

In the excitation step, the Sigmoid activation function is replaced with the Tanh activation function. One disadvantage of the Sigmoid function is that its output is not centered on 0, which slows convergence; Tanh solves this problem. Hence, the Tanh function is used to compress each element of the vector from the squeeze step into a range between −1 and 1:

$$s = F_{ex}(z, W) = \tanh\left(W_2\,\delta(W_1 z)\right)$$

The result is then multiplied with the original input feature map to obtain the weighted feature map:

$$\tilde{x}_c = F_{scale}(u_c, s_c) = s_c\, u_c$$

where $r$ is the reduction ratio, $\delta$ denotes the ReLU function, $W_1 \in \mathbb{R}^{\frac{C}{r} \times C}$, $W_2 \in \mathbb{R}^{C \times \frac{C}{r}}$, and $F_{scale}(u_c, s_c)$ refers to channel-wise multiplication between the feature map $u_c$ and the scalar $s_c$.

Through the SE attention mechanism, the model can adaptively learn the importance of each channel, thus improving the model’s performance. In practical applications, the SE attention mechanism is widely used in various deep learning models, achieving good results.
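The squeeze and excitation steps, with Tanh used as the gate, can be sketched in NumPy as follows. This is only an illustration under stated assumptions: the function name `se_tanh`, the random weights, and the sizes `C`, `H`, `W`, and `r` are hypothetical, the two fully connected layers are written as plain matrix products without bias, and nothing here is taken from the authors’ code.

```python
import numpy as np


def se_tanh(u, w1, w2):
    """Squeeze-and-excitation with a Tanh gate on a (C, H, W) feature map."""
    z = u.mean(axis=(1, 2))            # squeeze: global average pooling -> (C,)
    hidden = np.maximum(w1 @ z, 0.0)   # reduction layer followed by ReLU -> (C // r,)
    s = np.tanh(w2 @ hidden)           # expansion layer followed by Tanh -> (C,)
    return u * s[:, None, None], s     # channel-wise rescaling of the input


# Hypothetical sizes and random weights, for illustration only.
rng = np.random.default_rng(0)
C, H, W, r = 8, 4, 4, 4
u = rng.standard_normal((C, H, W))
w1 = rng.standard_normal((C // r, C))
w2 = rng.standard_normal((C, C // r))
weighted, s = se_tanh(u, w1, w2)
```

Because Tanh outputs values in (−1, 1), a channel weight can be negative, unlike with the Sigmoid gate, whose weights lie in (0, 1).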

- 2. CA attention module and ReLU activation function are introduced

To suppress irrelevant information, a CA attention module is used to embed position information in the channel. This mechanism effectively combines location information and channel information to improve the model’s ability to understand the input data, thus improving the model’s accuracy. In addition, CA can encode horizontal and vertical position information into channel attention, so that the mobile network pays attention to a large range of position information without too much computation [30].

In the coordinate embedding part, for the input feature $X$ with $C$ channels, CA obtains the tensors $z^h$ and $z^w$ by encoding the feature map of each channel along the horizontal and vertical directions, respectively, using average pooling kernels:

$$z_c^h(h) = \frac{1}{W}\sum_{0 \le i < W} x_c(h, i), \qquad z_c^w(w) = \frac{1}{H}\sum_{0 \le j < H} x_c(j, w)$$

where $z_c^h(h)$ and $z_c^w(w)$ represent the horizontal feature at height $h$ and the vertical feature at width $w$ of the feature map of channel $c$, respectively.

In the coordinate attention generation part, CA first concatenates the two aggregated feature maps and then passes the result through a convolution layer, a BN layer, and a nonlinear layer to obtain the intermediate feature $f$, which encodes information in both the vertical and horizontal directions:

$$f = \delta\left(F_1\left([z^h, z^w]\right)\right)$$

where $[\cdot, \cdot]$ denotes concatenation, $F_1$ is the convolution transform, and $\delta$ is the nonlinear activation.

The intermediate feature $f$ is split into two independent feature tensors $f^h$ and $f^w$, whose channel dimension is then adjusted through the convolution layers $F_h$ and $F_w$. Finally, the attention weights $g^h$ and $g^w$ in the horizontal and vertical directions are obtained through the Rectified Linear Unit (ReLU) activation function:

$$g^h = \mathrm{ReLU}\left(F_h(f^h)\right), \qquad g^w = \mathrm{ReLU}\left(F_w(f^w)\right)$$

ReLU produces sparse activations and involves no exponential operation, so it is simple to compute, efficient, and converges quickly in practice. Therefore, this work replaces CA’s original Sigmoid function with the ReLU function.

The output feature $y$ of CA is obtained by multiplying the input feature of each channel with the attention weights at the corresponding coordinate position:

$$y_c(i, j) = x_c(i, j) \times g_c^h(i) \times g_c^w(j)$$
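The coordinate embedding and attention generation steps can be sketched in NumPy as follows. This is a simplified illustration, not the authors’ module: the function name `coordinate_attention_relu` and the random weights are hypothetical, the 1 × 1 convolutions are written as matrix products, and the BN layer is omitted for brevity.

```python
import numpy as np


def coordinate_attention_relu(x, w1, wh, ww):
    """Coordinate attention with ReLU gates.

    x: (C, H, W) input; w1: (C_mid, C); wh, ww: (C, C_mid).
    BN is omitted and 1x1 convolutions are matrix products (assumptions).
    """
    C, H, W = x.shape
    z_h = x.mean(axis=2)   # (C, H): pool each row over the width
    z_w = x.mean(axis=1)   # (C, W): pool each column over the height
    # Concatenate along the spatial axis, reduce channels, apply the nonlinearity.
    f = np.maximum(w1 @ np.concatenate([z_h, z_w], axis=1), 0.0)  # (C_mid, H + W)
    f_h, f_w = f[:, :H], f[:, H:]
    g_h = np.maximum(wh @ f_h, 0.0)   # (C, H) attention weights per row
    g_w = np.maximum(ww @ f_w, 0.0)   # (C, W) attention weights per column
    return x * g_h[:, :, None] * g_w[:, None, :]


# Hypothetical sizes and random weights, for illustration only.
rng = np.random.default_rng(0)
x = rng.standard_normal((8, 5, 6))
w1 = rng.standard_normal((2, 8))
wh = rng.standard_normal((8, 2))
ww = rng.standard_normal((8, 2))
y = coordinate_attention_relu(x, w1, wh, ww)
```

With ReLU gates the weights are non-negative and can be exactly zero, so a position is either suppressed entirely or scaled without a sign change.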

- 3. BiFPN module and SiLU activation function are introduced

Beginning with the Neck feature fusion, this work introduces the BiFPN to strengthen the underlying feature map information, so that feature maps of different scales can be fused. The specific BiFPN implementation process occurs as follows: Firstly, the input feature map is processed through a series of convolution and pooling operations to obtain a series of feature maps in different scales. Then, the following operations are performed at each scale: a set of 1 × 1 convolutional layers is used to unify the number of channels in the feature map to the same value; the weight that fuses the features of that scale with those of other scales is learned at each scale; a set of 3 × 3 convolutional layers fuse the feature of each scale with the feature of other scales; a set of 1 × 1 convolutional layers obtain the final feature map.

In addition, BiFPN also introduces techniques [31], such as dynamic weight updating and cross-level linking, to further improve the feature expression ability and detection performance.
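The learned per-scale fusion weights mentioned above can be illustrated with the fast normalized fusion rule commonly used with BiFPN. The text does not state which fusion rule this work uses, so the rule itself, the function name `fast_normalized_fusion`, and the `eps` value are assumptions made for this sketch; the inputs are assumed to be already resized to a common shape.

```python
import numpy as np


def fast_normalized_fusion(features, weights, eps=1e-4):
    """Fuse same-shape feature maps with non-negative, normalized weights."""
    w = np.maximum(np.asarray(weights, dtype=float), 0.0)  # ReLU keeps weights >= 0
    w = w / (eps + w.sum())                                 # normalize to sum to about 1
    return sum(wi * f for wi, f in zip(w, features))


# Two hypothetical feature maps with equal learned weights.
features = [np.ones((2, 2)), 3.0 * np.ones((2, 2))]
fused = fast_normalized_fusion(features, weights=[1.0, 1.0])
```

Normalizing by the sum instead of using a softmax avoids exponentials, which is the usual motivation for this rule.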

The Sigmoid Linear Unit (SiLU) activation function, also known as the Swish activation function, is an adaptive activation function. It is an improvement on the Sigmoid function, which can better adapt to different data distributions. The role of the SiLU activation function is to convert the input signal in the neural network into the output signal, thus activating the neuron. Compared with other activation functions, the SiLU activation function has a faster convergence speed and better performance. SiLU has an attractive self-stabilizing feature. The global minimum with a derivative of zero acts as a “soft floor” on the weights and implicitly regularizes and inhibits the learning of a large number of weights. Therefore, here, we add the SiLU function to BiFPN.
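For reference, SiLU is defined as $\mathrm{SiLU}(x) = x \cdot \sigma(x)$, where $\sigma$ is the Sigmoid function. The short sketch below evaluates it and locates the global minimum that acts as the “soft floor”; the grid range and step size are arbitrary choices made for illustration.

```python
import math


def silu(x: float) -> float:
    """SiLU (Swish): x * sigmoid(x), written as x / (1 + exp(-x))."""
    return x / (1.0 + math.exp(-x))


# Scan a grid on [-5, 5] to locate the global minimum numerically.
grid = [i / 1000.0 for i in range(-5000, 5001)]
x_min = min(grid, key=silu)
print(x_min, silu(x_min))
```

The minimum lies near x ≈ −1.28 with a value of about −0.28, so negative inputs are damped but not cut off abruptly as they are with ReLU.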

As shown in Figure 3, the SE attention is integrated into the C3 module, the CA attention module is added before the Spatial Pyramid Pooling (SPP) module, and the concat module is replaced by the BiFPN module. With these improvements, the YOLOv5s model’s detection accuracy and other performance parameters can be greatly improved.

Figure 3.

Improved YOLOv5s network structure.

4. Fall Detection with WiFi Signal Based on LSTM Model

4.1. The Sensing of WiFi Signal

CSI is currently the main signal used in WiFi-based perception tasks; it represents the channel attributes of the communication link on each subcarrier. The Orthogonal Frequency Division Multiplexing (OFDM) technique is commonly used in WiFi devices, and Multiple Input Multiple Output (MIMO) technology regulates the multipath transmission effect. With OFDM, the spatial channel is divided into multiple subcarriers with different frequencies, and with MIMO, multiple antenna pairs can transmit WiFi data simultaneously. CSI records each subcarrier’s data information, describes the signal characteristics in the propagation process, and also reflects the influence of environmental factors and the propagation path on the signal. The received signal can be expressed as follows:

$$y = Hx + n$$

where $y$ represents the received signal vector, $H$ is the channel state matrix of the wireless signal, $x$ denotes the signal vector of the wireless transmitter, and $n$ represents Gaussian white noise. CSI is currently collected by extracting information from the physical layer through special network cards, with the mainstream network cards being the Intel 5300 chipset and the Atheros 9k series. In this study, an Intel 5300 network adapter is mainly used to obtain CSI, which is extracted from the network adapter through the 802.11n CSI tool. Each antenna pair generates 30 groups of subcarrier information, and the obtained CSI data, that is, the wireless signal channel state matrix, are expressed as follows:

$$H = [H_1, H_2, \ldots, H_N]$$

where $N$ represents the number of subcarriers, and the CSI signal of the $i$-th subcarrier can be expressed as follows:

$$H_i = |H_i|\, e^{j \angle H_i}$$

where $|H_i|$ and $\angle H_i$ represent the amplitude and phase of the $i$-th subcarrier, respectively. CSI can effectively respond to multipath effects and record the information in the propagation process. As a kind of fine-grained channel information, CSI can be effectively applied to activity recognition and perception tasks such as fall detection [32].

4.2. Fall Prediction Principle Based on WiFi Signal

This study explores fall detection through WiFi signals under occlusion, mainly using CSI amplitude data. In general, WiFi signal data are first collected and the CSI amplitude is extracted; the mapping between amplitude and human posture (falling, standing, or sitting) is then established to complete the fall detection task. When the environment is static, that is, there is no human activity, the channel is barely affected and the CSI amplitude is relatively stable. When there is movement in the environment, however, the human body disturbs the channel and the amplitude variation increases sharply. The change in the current CSI amplitude can therefore serve as the basis for judging whether the human posture in the environment has changed. The overall WiFi-based fall detection method is as follows. First, a threshold on the CSI amplitude change is determined and taken as the benchmark for judging whether there is activity. When the CSI amplitude change exceeds this threshold, the human posture is considered to have changed, and an activity interception algorithm extracts the active fragments in the window. Next, a screening algorithm keeps the valid activity fragments and discards the invalid ones. Finally, the trained fall detection model classifies the valid activity fragments.
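The thresholding and interception steps can be sketched as follows. The text does not specify how the amplitude change is measured, so this sketch assumes the variance inside a sliding window; the function name `active_segments`, the window length, and the threshold are hypothetical values chosen for illustration, and the subsequent screening of valid fragments is not covered.

```python
from statistics import pvariance


def active_segments(amplitude, window=5, threshold=1.0):
    """Return merged (start, end) index pairs, end exclusive, of active fragments.

    A window counts as active when its amplitude variance exceeds the threshold.
    """
    flags = [pvariance(amplitude[i:i + window]) > threshold
             for i in range(len(amplitude) - window + 1)]
    segments, start = [], None
    for i, active in enumerate(flags):
        if active and start is None:
            start = i                                  # first active window
        elif not active and start is not None:
            segments.append((start, i + window - 1))   # end of the last active window
            start = None
    if start is not None:                              # activity runs to the end
        segments.append((start, len(amplitude)))
    return segments


# Stable amplitude, a short burst of motion, then stable again.
signal = [10.0] * 10 + [10, 14, 6, 15, 5, 13] + [10.0] * 10
print(active_segments(signal))
```

In practice the threshold would be calibrated on recordings of the empty room, in line with the benchmark described above.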

4.3. CSI Data Acquisition

This part of the paper mainly studies fall detection under occlusion; hence, the data were also collected under occlusion. We set up an enclosed space in a small room, with a wooden door between the WiFi router and the receiving computer to block the direct path. Data were collected in AP mode on a computer running Ubuntu 14.04 LTS and equipped with an Intel 5300 network card. The transmitting router had two antennae and the receiving end had three antennae.

4.4. CSI Data Preprocessing

Data noise reduction is an important process in WiFi-based fall detection tasks because the original CSI signal data contain high-frequency ambient noise, which affects the final detection accuracy. Appropriate filtering can effectively reduce the influence of noise, retain the main features of the signal data, and improve the detection accuracy.

The first step is unpacking the collected data; the result is shown in Table 1. The required channel state information is a 2 × 3 × 30 complex CSI matrix. In this matrix, “2” indicates the number of transmitting antennae, “3” indicates the number of receiving antennae, and “30” indicates the number of subcarriers for each antenna pair (the Intel 5300 network card used with the CSI tool reports 30 subcarriers per antenna pair). The 2 × 3 = 6 antenna links therefore form 180 subcarriers, that is, each timestamp’s data are composed of 180 subcarriers. The matrix is made up of complex numbers that describe the characteristics of the wireless signal. Signals are generally represented as complex numbers in wireless communication, where the modulus represents the amplitude (or intensity) and the argument represents the phase (or angle).
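As a small illustration of this layout, the sketch below builds a synthetic 2 × 3 × 30 complex matrix and extracts amplitude and phase as the modulus and argument of each entry. The random data merely stand in for a real CSI packet, and the variable names are hypothetical; parsing actual output of the CSI tool is not shown.

```python
import numpy as np

rng = np.random.default_rng(0)
# Synthetic stand-in for one CSI packet: 2 transmit x 3 receive antennae x 30 subcarriers.
csi = rng.standard_normal((2, 3, 30)) + 1j * rng.standard_normal((2, 3, 30))

flat = csi.reshape(-1)       # 2 * 3 * 30 = 180 complex values per timestamp
amplitude = np.abs(flat)     # modulus of each entry
phase = np.angle(flat)       # argument of each entry, in radians

# The polar form recovers the original complex values.
restored = amplitude * np.exp(1j * phase)
```

Only `amplitude` is needed for the amplitude-based detection described in this section.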

Table 1.

Main contents of the CSI packet.

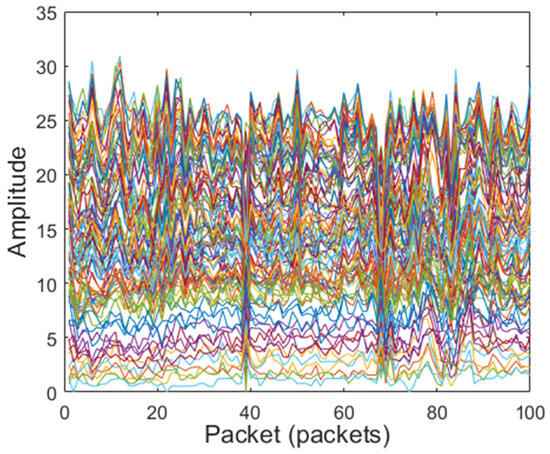

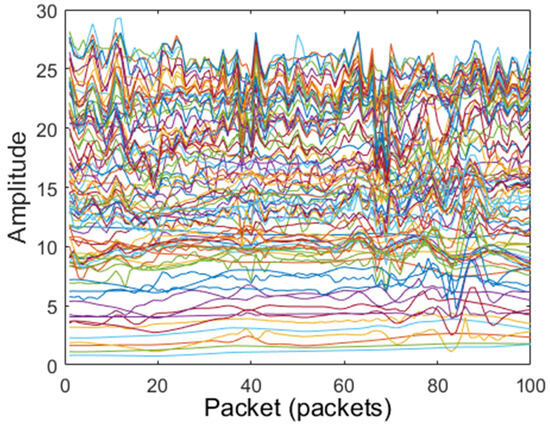

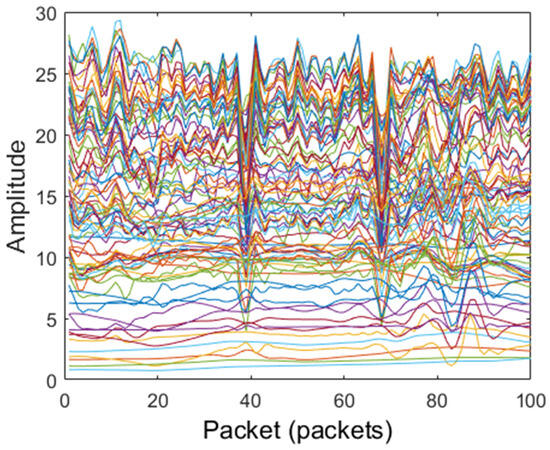

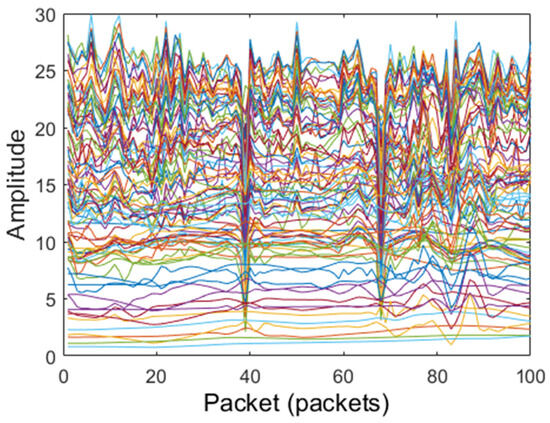

After dimensionality reduction and amplitude extraction of the CSI data, low-pass filtering, Hampel filtering, and median filtering were each used to preprocess the CSI amplitude. The amplitudes of the original and the filtered CSI signals are shown in Figure 4, Figure 5, Figure 6 and Figure 7. The original CSI signals contain many noise components, which cause their amplitude to fluctuate greatly, as shown in Figure 4. Figure 5, Figure 6 and Figure 7 show that filtering smooths the CSI amplitude and removes the noise components; compared with the other filters, the Hampel filter handles outliers well and better preserves the amplitude while reducing noise. Therefore, the Hampel filter was selected to process the CSI data in this paper.

Figure 4.

Original CSI signals.

Figure 5.

Filtered CSI signals with Hampel filter.

Figure 6.

Filtered CSI signals with low pass filter.

Figure 7.

Filtered CSI signals with wavelet transform filter.
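To make the outlier handling of the Hampel filter concrete, the following is a minimal sketch of the standard algorithm: each sample is compared with the median of its neighborhood and replaced by that median when it deviates by more than a multiple of the scaled median absolute deviation (MAD). The window half-width and the three-sigma rule are common defaults assumed here; the paper does not state the settings it used.

```python
from statistics import median


def hampel_filter(signal, half_window=3, n_sigmas=3.0):
    """Replace outliers in a 1-D sequence with the local median.

    half_window: samples considered on each side of the current one (assumed).
    n_sigmas: rejection threshold in units of the estimated deviation (assumed).
    """
    k = 1.4826  # scales the MAD to the standard deviation for Gaussian data
    filtered = list(signal)
    for i in range(len(signal)):
        lo = max(0, i - half_window)
        hi = min(len(signal), i + half_window + 1)
        window = signal[lo:hi]                       # always taken from the raw signal
        med = median(window)
        mad = median([abs(x - med) for x in window])
        if abs(signal[i] - med) > n_sigmas * k * mad:
            filtered[i] = med                        # outlier: substitute the median
    return filtered


if __name__ == "__main__":
    noisy = [1.0, 1.1, 0.9, 1.0, 9.0, 1.0, 1.1, 0.9, 1.0]
    print(hampel_filter(noisy))
```

Unlike a moving average, this filter leaves samples that are not flagged as outliers unchanged, so sharp amplitude changes caused by real motion are not smeared.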

4.5. Fall Detection

4.5.1. Selection of Detection Model

The collected CSI data were fed into a suitable deep learning network for training, a fall detection classification model was established, and the test data were then evaluated with the model. With CSI amplitude as the input, the Multilayer Perceptron (MLP) model has many parameters, converges slowly, and has a high computing cost. The CNN model suffers from vanishing gradients, which easily leads to performance degradation. The traditional RNN model suffers from vanishing and exploding gradients during backpropagation and cannot capture the long-term dependence of CSI. The Transformer, an architecture for Natural Language Processing (NLP) and other sequence-to-sequence tasks, requires too many parameters, a high training cost, and a large amount of labeled CSI data. Therefore, after considering the cost and the computing power of the terminal, we chose the LSTM deep learning model and combined it with a CNN for training and fall detection.

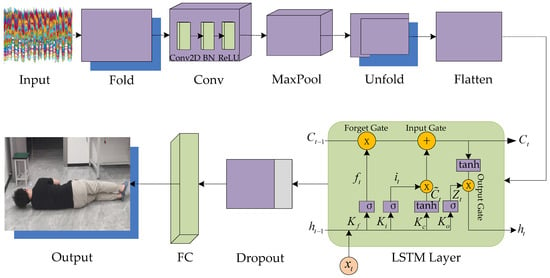

4.5.2. Improved LSTM Model

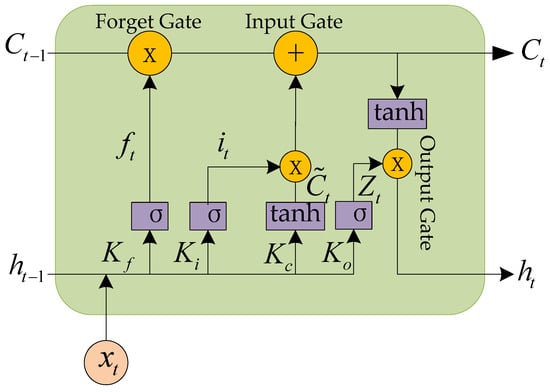

- LSTM model

LSTM is a special recurrent neural network (RNN) that was proposed to solve the vanishing and exploding gradient problems that arise when a traditional RNN processes long sequence data [33]. The inspiration comes from the human memory mechanism: the brain decides whether to retain or forget information according to its importance, which enables it to deal with complex long-term dependencies. LSTM introduces a “gate” structure and a “cell state”. Gate structures control the inflow and outflow of information, and cell states can store states for a long time. This allows LSTM to better capture dependencies in long sequences. The overall processing flow of LSTM is shown in Figure 8.

Figure 8.

Processing flow of LSTM.

Input layer: Consistent with RNN, the input sequence needs to be converted into numerical form.

Hidden layer: In the hidden LSTM layer, each time step receives an input and the cell state of the previous time step. Then, the cell state and hidden state of the current time step are calculated through a series of gating mechanisms (including forget gate, input gate, and output gate) and cell state updates and finally output. These gating mechanisms control the flow of information, deciding what information to forget, what new information to add, and what information to output.

There are two lines at each LSTM time point $t$: the cell state $C_t$ and the output $h_t$. The forget gate determines how much of the previous cell state information to forget, calculated from the current input $x_t$ and the hidden state of the previous time step $h_{t-1}$. The two pass through a fully connected layer and the Sigmoid function is applied to give the forget gate value $f_t$. This value ranges from 0 to 1, where 0 means completely forgotten and 1 means completely retained. It is assumed that $W_f$, $W_i$, $W_C$, and $W_o$ are the weight matrices; $b_f$, $b_i$, $b_C$, and $b_o$ are bias terms; and $\sigma$ is the Sigmoid function.

For the cell state of the previous time step $C_{t-1}$, the model takes the output of the last stage $h_{t-1}$ and the input of the current stage $x_t$ into the Sigmoid function to determine how much of $C_{t-1}$ to forget, as shown in the following formula:

$$f_t = \sigma\left(W_f \cdot [h_{t-1}, x_t] + b_f\right)$$

The input gate compares the output of the previous step $h_{t-1}$ with the input of the current step $x_t$ and then selects how much to add to the current cell state $C_t$. This consists of two parts: one is the input gate, which determines which parts of the memory cell will be updated; the other is a Tanh layer, which creates a new candidate value vector $\tilde{C}_t$ to add to the memory cell. Both the input gate value and the candidate value are calculated from the current input and the hidden state of the previous time step. The candidate value is computed as follows:

$$\tilde{C}_t = \tanh\left(W_C \cdot [h_{t-1}, x_t] + b_C\right)$$

The input gate value is as follows:

$$i_t = \sigma\left(W_i \cdot [h_{t-1}, x_t] + b_i\right)$$

Then, the new information that needs to be updated is generated according to the decisions of the forget gate and the input gate. The previous cell state $C_{t-1}$ is multiplied by the forget gate value $f_t$, which discards the forgotten part of the state information. The candidate value $\tilde{C}_t$ is multiplied by the input gate value $i_t$ and added to the result, which introduces the new state information. The formula of the updated cell state is as follows:

$$C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t$$

Finally, $C_t$ is put into the Tanh function and multiplied by the output gate value $o_t$, giving the final output $h_t$:

$$h_t = o_t \odot \tanh\left(C_t\right)$$

where

$$o_t = \sigma\left(W_o \cdot [h_{t-1}, x_t] + b_o\right)$$
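The gate computations described above can be traced in a single time step with plain Python lists. This is a sketch of the standard LSTM cell, not the authors' implementation; the dictionary keys `W_f`, `W_i`, `W_C`, `W_o` and `b_f`, `b_i`, `b_C`, `b_o` simply mirror the usual weight and bias names, and the helper names are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, v, b):
    """Return W @ v + b, with W given as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]


def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM time step; returns the new hidden state and cell state."""
    z = h_prev + x_t  # list concatenation: [h_{t-1}, x_t]
    f_t = [sigmoid(a) for a in affine(params["W_f"], z, params["b_f"])]        # forget gate
    i_t = [sigmoid(a) for a in affine(params["W_i"], z, params["b_i"])]        # input gate
    c_tilde = [math.tanh(a) for a in affine(params["W_C"], z, params["b_C"])]  # candidate
    o_t = [sigmoid(a) for a in affine(params["W_o"], z, params["b_o"])]        # output gate
    # Keep part of the old state, add part of the candidate.
    c_t = [f * c + i * g for f, c, i, g in zip(f_t, c_prev, i_t, c_tilde)]
    h_t = [o * math.tanh(c) for o, c in zip(o_t, c_t)]
    return h_t, c_t


def zero_params(hidden_size, input_size):
    """All-zero weights and biases, useful only for checking the arithmetic."""
    width = hidden_size + input_size
    params = {}
    for gate in ("f", "i", "C", "o"):
        params["W_" + gate] = [[0.0] * width for _ in range(hidden_size)]
        params["b_" + gate] = [0.0] * hidden_size
    return params


if __name__ == "__main__":
    h, c = lstm_step([0.3, -0.1, 0.7], [0.0, 0.0], [2.0, -2.0], zero_params(2, 3))
    print(h, c)
```

With all-zero parameters every gate evaluates to 0.5 and the candidate to 0, so the cell state is simply halved; this makes the role of the forget gate easy to verify by hand.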

- Improved LSTM model

The LSTM network can capture long-term dependencies in sequence data, allowing the model to better adapt to the temporal dynamics in data, while CNNs are a very effective deep learning method, good at extracting local features in image data, and have achieved remarkable results in image recognition, classification, and detection. However, CNNs have some limitations when dealing with long-term dependencies and time series data. To effectively extract the local features of CSI data and capture their long-term dependence, this paper combines the convolution feature of a CNN model with LSTM neural network architecture to process the CSI human posture data and provide more accurate fall estimation. The fusion structure is shown in Figure 9, and the fused model’s related parameters are shown in Table 2.

Figure 9.

Improved LSTM model.

Table 2.

The improved LSTM model network.

The CSI data in each packet were converted into an image and fed to the CNN to extract features of the different postures. The LSTM model was then trained on these features. The input to the deep learning model at each time point is thus the amplitudes of 90 CSI signals (the data received by the three receiving antennae from one transmitting antenna), and the output is the human posture corresponding to those signals. In this way, the computational load of the algorithm is reduced, more computing power is available where it is needed, the training efficiency of the model is improved, the forgetting mechanism of the LSTM model is exploited to a greater extent, and the influence of earlier points on later points is reduced.
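The paper does not describe how a packet is turned into an image. One plausible arrangement, shown here purely as an assumption, is to place the 90 amplitudes on a 3 × 30 grid (one row per receiving antenna, one column per subcarrier) and scale them to 8-bit gray levels. The function name `csi_to_image` and the min-max normalization are illustrative choices.

```python
def csi_to_image(amplitudes, rows=3, cols=30):
    """Arrange rows * cols amplitudes as a grayscale image (list of pixel rows).

    Values are min-max scaled to integers in 0..255; a constant input maps to 0.
    """
    if len(amplitudes) != rows * cols:
        raise ValueError("expected %d amplitudes, got %d" % (rows * cols, len(amplitudes)))
    lo, hi = min(amplitudes), max(amplitudes)
    span = (hi - lo) or 1.0                      # avoid division by zero
    pixels = [round(255 * (a - lo) / span) for a in amplitudes]
    return [pixels[r * cols:(r + 1) * cols] for r in range(rows)]


if __name__ == "__main__":
    packet = [float(v) for v in range(90)]       # toy amplitudes
    image = csi_to_image(packet)
    print(len(image), len(image[0]), image[0][0], image[2][29])
```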

In this study, the LSTM function and the convolution function are combined to train short- and long-term memory. At the input of the LSTM layer, the ReLU function is used as the activation; the data of the current time and of the previous time are fed to the forget gate through the Sigmoid function, together with a bias term.

Finally, the output of the improved LSTM model is obtained by mapping the features to the data labels through the fully connected layer.

5. Structure and Principle of the Discriminator

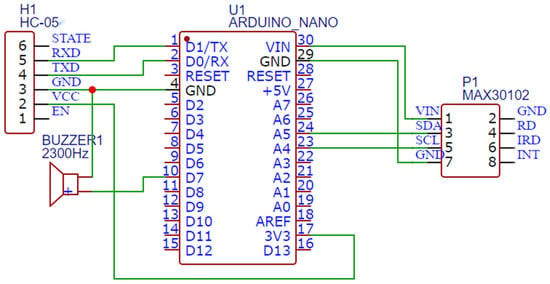

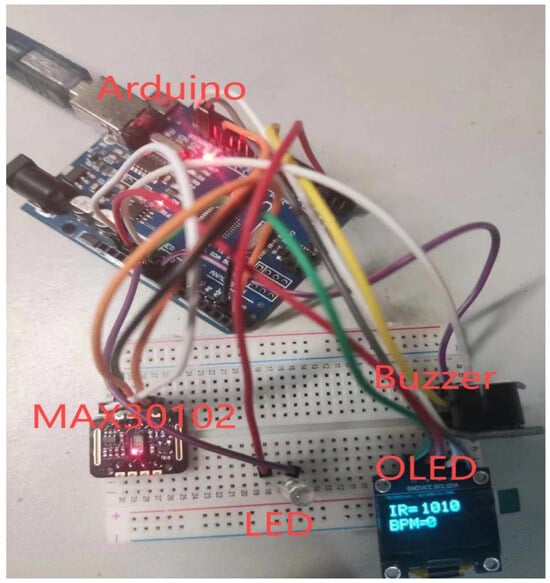

If only the neural network is used for fall detection, misjudgment is highly likely. For example, when the target lies down to rest, the model also recognizes this posture as a fall. Therefore, this paper designs a discriminator that combines physiological data with the posture results of the neural models to improve judgment accuracy and monitor the health of elderly people in real time.

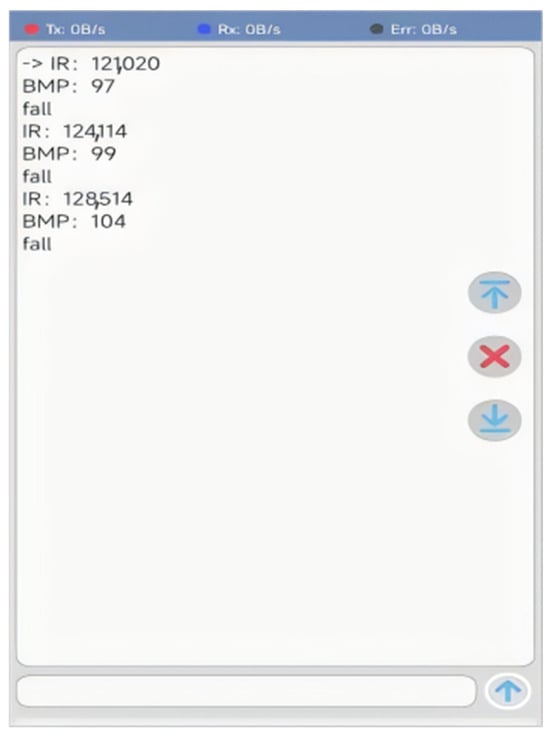

Figure 10 and Figure 11 show the working principle and the structure of the discriminator, respectively. The discriminator mainly includes an Arduino series Microcontroller Unit (MCU), an Organic Light-Emitting Diode (OLED) module, a MAX30102 module, a buzzer, and an indicator light. The Arduino is the core controller of the system and coordinates the whole operation. As the main module for heart rate detection, the MAX30102 measures the heart rate in real time (heart rates in different situations are listed in Table 3) and feeds the measurements to the Arduino through the I2C port, so that the Arduino can respond appropriately. The Arduino makes a comprehensive judgment based on the physiological data and on the target’s posture, which it receives from the neural network models via the Bluetooth module and serial port. Finally, the buzzer sounds an alarm when the output of the MCU is the fall state. At this time, the monitoring system relays the target’s situation to the mobile terminal of the guardian, as shown in Figure 12. The system can be made into a wearable bracelet. The MAX30102 module is located at the bottom of the bracelet and measures the heart rate when the bracelet is worn on the wrist. A battery of between 3.3 V and 5 V inside the bracelet supplies power, which improves the usability and convenience of the bracelet.

Figure 10.

Working principle diagram of discriminator.

Figure 11.

Structure of discriminator.

Table 3.

Heart rates of people in different situations.

Figure 12.

The data received on the phone.

6. Experimental Results and Analysis

6.1. Performance of Fall Detection Algorithm Based on Improved YOLOv5s

6.1.1. Dataset

We collected a large number of images of people in a fall posture in different scenes. The dataset contains more than 6000 images and covers people of different occupations, countries, and races. The age span is also relatively large, and the overall data distribution is uniform. A part of the dataset is shown in Figure 13.

Figure 13.

A part of the images in the dataset.

6.1.2. Partitioning and Labeling of Dataset

The pictures were cropped to a suitable size for training. Labeling software was used to label the target person in the fall position in each image, as shown in Figure 14. After labeling, we divided the dataset into a training set and a testing set, with a ratio of 99:1. In line with the project requirements, the dataset was labeled with only one class, fall, which reduces the complexity of training.

Figure 14.

The process diagram of fall labeling.

6.1.3. Experimental Settings

Table 4.

Experimental hardware environment.

Table 5.

Experimental software environment.

6.1.4. Experimental Results

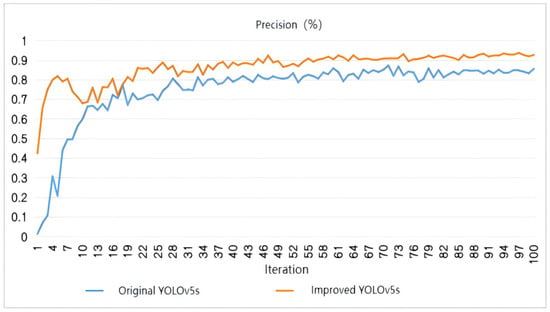

Figure 15, Figure 16 and Figure 17 show that the detection precision, recall rate, and average precision of the improved model are 92.7%, 91.4%, and 95.1%, respectively, which are 7.2%, 9%, and 7.6% higher than those of the original model. Figure 18 and Figure 19 compare the actual fall detection results. The original model missed distant targets because of environmental occlusion, excessive distance, and other problems, and its overall detection accuracy was low, as shown in Figure 18. In contrast, the proposed model detects more targets in the same image or frame under severe occlusion and at long distance, with high overall detection accuracy, as shown in Figure 19. These results demonstrate that the improvements enhance the expressive ability of the model and its understanding of the spatial structure of the input data, helping the network focus on the key parts of the target and thereby increasing the fall detection accuracy.

Figure 15.

Comparison of detection precision between original YOLOv5s and improved YOLOv5s.

Figure 16.

Comparison of recall between original YOLOv5s and improved YOLOv5s.

Figure 17.

Comparison of average precision between original YOLOv5s and improved YOLOv5s.

Figure 18.

The fall detection effect of the original YOLOv5s.

Figure 19.

The fall detection effect of the improved YOLOv5s.

6.2. Performance of CSI Fall Detection Algorithm Based on Improved LSTM Model

In this study, the changes in CSI amplitude recorded as the target went from standing to falling down were annotated as class 1, and those recorded as the target went from standing to sitting down were annotated as class 2. Deep learning methods were then applied to the data to judge the current posture of the target.

The dataset was split 8:2, with 80% of the data used for iterative training and 20% used for testing. The dataset formed by the CSI amplitude data and the corresponding labels was then fed into the model for training. After training, the deep learning model was verified on the previously held-out test data to estimate its accuracy.
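An 8:2 split of labeled activity fragments can be sketched as follows. Whether the authors shuffled the fragments before splitting is not stated, so the seeded shuffle, the function name `split_dataset`, and the default values are assumptions.

```python
import random


def split_dataset(samples, train_fraction=0.8, seed=0):
    """Shuffle indices with a fixed seed and split samples into train and test lists."""
    indices = list(range(len(samples)))
    random.Random(seed).shuffle(indices)         # reproducible shuffle
    cut = int(len(samples) * train_fraction)
    train = [samples[i] for i in indices[:cut]]
    test = [samples[i] for i in indices[cut:]]
    return train, test


if __name__ == "__main__":
    # Each toy sample pairs a fragment id with a class label (1 = fall, 2 = sit).
    fragments = [(i, 1 if i % 2 == 0 else 2) for i in range(100)]
    train, test = split_dataset(fragments)
    print(len(train), len(test))
```

Fixing the seed keeps the held-out fragments identical across runs, so accuracy figures from different models remain comparable.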

In grid training, the initial learning rate was 0.012 and the forgetting factor was set to 0.1. The forgetting layer was pushed forward to reduce the influence of volatility and improve the learning rate to make the model learning more effective.

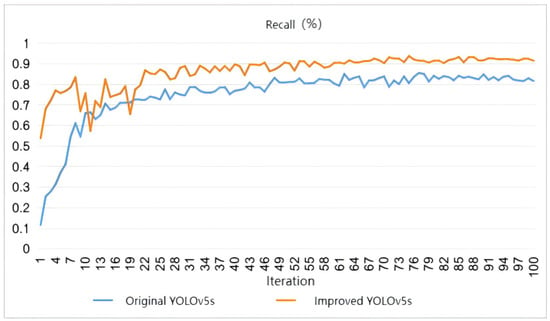

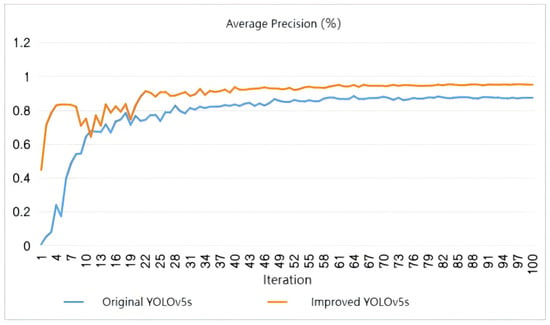

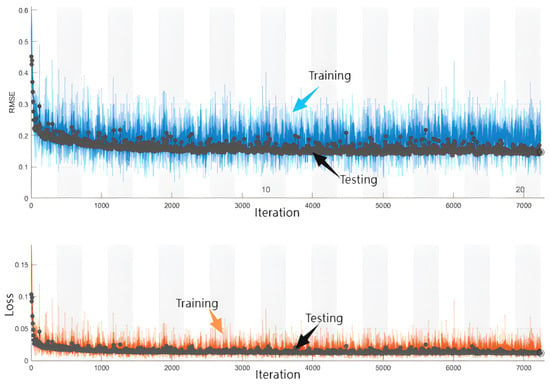

6.2.1. Comparison of Training Performance

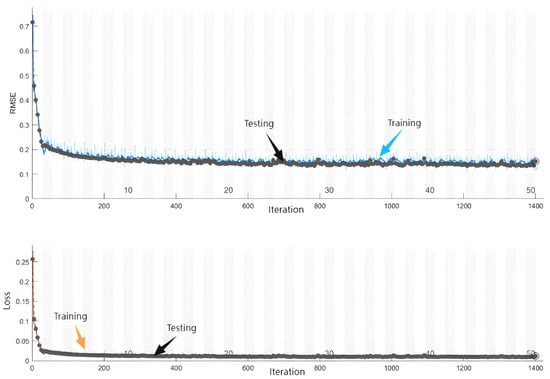

The CSI data were fed into the original LSTM model for training, and the training performance is shown in Figure 20. The performance of the model begins to stabilize only after the first 2000 training iterations, and the root mean square error (RMSE) and loss fluctuate strongly, at about 0.2. The CSI data were then fed into the improved LSTM model for training, and its training performance is shown in Figure 21. The improved model begins to stabilize after 200 training iterations and becomes stable after about 1000 training iterations, with less fluctuation. These results show that the improved model is more stable, is more accurate, has a faster convergence speed, and shows lower computational complexity than the original model.

Figure 20.

The training performance of the original LSTM model.

Figure 21.

The training performance of the improved LSTM model.

6.2.2. Comparison of Performance of Posture Estimation

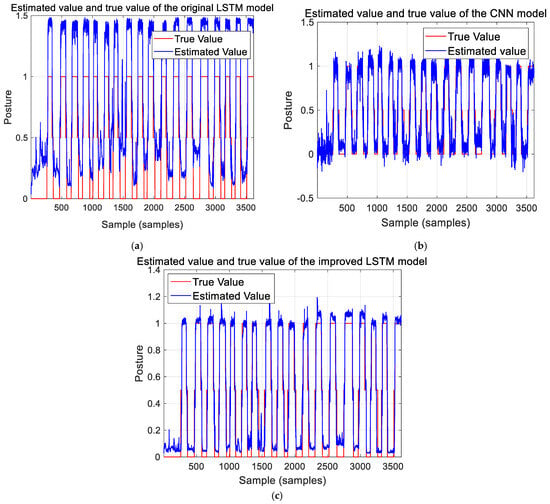

The results estimated with the original LSTM, CNN, and improved LSTM models and the true target posture data were compared using the training dataset, as shown in Figure 22. Here, we set the standing posture, lying posture, and process state to 1, 0, and 0.5, respectively. As we can see from Figure 22a,b, the results of the original LSTM model are more stable but the estimated error is bigger than that of the CNN model. On the contrary, the CNN model achieves a higher estimated accuracy as it can effectively capture local features in the CSI images, but the estimated results jump sharply, making it difficult to judge whether the target’s posture has changed. The combination of these two models takes into account both temporal and spatial information, thus improving the accuracy and stability of the model, as shown in the results of the improved LSTM model in Figure 22c, where the target’s posture changes and the differentiation in various postures can be clearly seen. This indicates that the estimated posture error and stability of the improved LSTM model are significantly improved compared to the original LSTM model and CNN model.

Figure 22.

Posture prediction performance of the models on the training data set: (a) original LSTM model; (b) CNN model; (c) improved LSTM model.

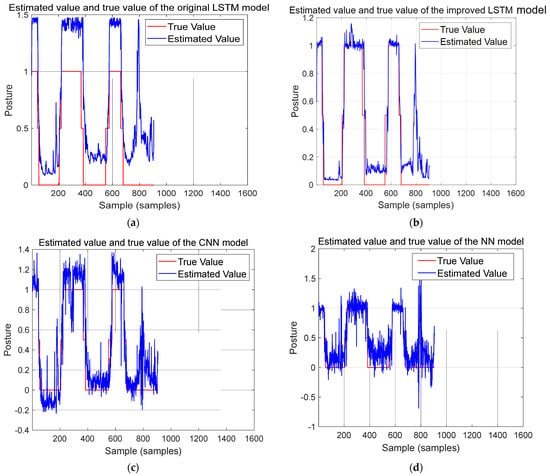

Similarly, the target postures estimated with the original LSTM, the improved LSTM, and the CNN and NN models were compared to the true target posture data from the testing dataset, and the results are shown in Figure 23a–d. From the results, we can conclude that the improved LSTM model achieved a good fitting between the estimated value and the true values, and the model can accurately estimate and judge changes in the target body’s posture through changes in the WiFi signal. As can be seen from Figure 23c, the CNN model is unstable in the direction of the estimated results and presents the problem of large errors in the judgment process of posture change. The NN model not only has the problems of unstable estimated results and inability to output effective estimated values but also shows an insufficient processing of outliers, as shown in Figure 23d.

Figure 23.

Posture prediction performance of the models on testing data set: (a) original LSTM model; (b) improved LSTM model; (c) CNN model; (d) NN model.

For greater clarity, we used a statistical index, the coefficient of determination $R^2$, to quantify how well the models explain the variation in the data and thus to evaluate their goodness of fit. $R^2$ is calculated as follows:

$$R^2 = 1 - \frac{SS_{res}}{SS_{tot}}$$

where $SS_{res}$ is the residual sum of squares and $SS_{tot}$ is the total sum of squares. The value of $R^2$ normally lies in [0, 1]. If the result is 0, the model is poorly fitted; the closer the value is to 1, the better the model fits the data, that is, the higher the posture estimation accuracy. As can be seen in Table 6, the $R^2$ of the improved LSTM model increased significantly compared to the original LSTM and other models. The $R^2$ of the improved LSTM model reaches 0.7581, an increase of 15.61%, 29.36%, and 52.39% compared with the original LSTM, CNN, and NN models, respectively. Therefore, we believe that the improved LSTM model can accurately estimate changes in the target body’s posture, including fall states, through changes in the WiFi signal in complex environments.
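As a worked illustration of the coefficient of determination, the sketch below computes it as one minus the ratio of the residual sum of squares to the total sum of squares. The toy values reuse the posture encoding given earlier (1 for standing, 0 for lying, 0.5 for the transition); they are not taken from the experiments, and the function name `r_squared` is hypothetical.

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))   # residual sum of squares
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)           # total sum of squares
    return 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    truth = [1.0, 1.0, 0.5, 0.0, 0.0]            # standing, standing, transition, lying, lying
    estimate = [0.9, 1.0, 0.5, 0.1, 0.0]
    print(r_squared(truth, estimate))
```

A model that always predicts the mean of the true values scores 0, and a model worse than that would score below 0, which is why values inside [0, 1] are described as the normal range.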

Table 6.

Determination coefficients of the models.

6.3. The Result of Combining Posture Detection with Physiological Data

The MAX30102 module monitors the heart rate of the target in real time, and the data obtained are sent to the Arduino. When the neural network models detect that the target state is fall, they send data to the Arduino through the serial port. The Arduino combines the received posture information with the heart rate in the judgment statement if (heart_rate > 90 && flag == 1) for further detection. If the heart rate is >90 at this time, that is, the heart rate is in the abnormal range, it is judged that the target’s posture is fall. Otherwise, if the heart rate is ≤90, that is, the heart rate is in the normal range, it is judged that the target’s posture is not fall, as shown in Table 7.

Table 7.

The combination results of MAX30102 and the neural models.
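The decision rule of the discriminator can be written out as a small truth-table check. The sketch below is in Python for illustration only (the device itself runs Arduino code); the threshold of 90 comes from the judgment statement in the text, while the function name `confirm_fall` and the constant name are hypothetical.

```python
HEART_RATE_THRESHOLD = 90  # beats per minute, taken from the judgment statement


def confirm_fall(heart_rate, flag):
    """Return True only when the models flag a fall (flag == 1)
    and the heart rate is above the threshold."""
    return flag == 1 and heart_rate > HEART_RATE_THRESHOLD


if __name__ == "__main__":
    for heart_rate, flag in [(110, 1), (70, 1), (110, 0), (70, 0)]:
        print(heart_rate, flag, confirm_fall(heart_rate, flag))
```

Requiring both conditions is what filters out the lying-down-to-rest case: the posture models may raise the flag, but a heart rate in the normal range suppresses the alarm.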

7. Conclusions

In this study, the YOLOv5s and LSTM models were improved and trained on image data and CSI data, respectively; then, physiological information was combined with the target posture estimated by these models to judge whether the target’s posture was fall. Information about the target posture was sent to the mobile phone of the guardian, achieving real-time health monitoring of elderly people. The experimental results show that, in a complex environment, the proposed method can accurately, stably, and quickly judge whether the target person is in an emergency situation.

Although pose estimation technology based on CSI faces great challenges in monitoring the displacement of multiple targets, it is very suitable for the application scenario of a solitary elderly target. Further, the technology is low in cost, and it is expected to become a part of the auxiliary smart home medical system.

In future work, we will study more lightweight and effective models that can accurately and quickly estimate the 3D posture of the human body and combine more physiological information, allowing us to monitor the health of a target in real time, initially predict some diseases, and provide more abundant and effective health protection mechanisms for people in a home environment.

Author Contributions

Conceptualization, T.B., J.L. and J.C.; methodology, T.B., J.L., J.C. and G.W.; software, T.B., J.L. and J.C.; validation, T.B., J.L., J.C. and G.W.; formal analysis, T.B., J.L., J.C. and G.W.; investigation, T.B., J.L., J.C., G.W. and Q.Z.; resources, T.B., J.L. and J.C.; data curation, T.B., J.L. and J.C.; writing—original draft preparation, T.B., J.L. and J.C.; writing—review and editing, T.B., J.L., J.C., G.W. and Q.Z.; visualization, T.B., J.L. and J.C.; supervision, T.B.; project administration, T.B.; funding acquisition, T.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Guangxi Science and Technology Program (grant. no. GuikeAD21238038) and the National Natural Science Foundation of China (grant. no. 62161031).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data that support the findings of this study are available from Dr. Bui at oanhbui@163.com upon reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Sivaprasad, T.C.; Surendhar, R.; Heiner, A.J. Wearable Smart Health Monitoring Transmitter and Portable Receiver Systems for Enhanced Wellness Insights. In Proceedings of the 2023 2nd International Conference on Automation, Computing and Renewable Systems (ICACRS), Pudukkottai, India, 11–13 December 2023; pp. 1558–1563.

- Rasheed, A.; Iranmanesh, E.; Li, W.; Ou, H.; Andrenko, A.S.; Wang, K. A wearable autonomous heart rate sensor based on piezoelectric-charge-gated thin-film transistor for continuous multi-point monitoring. In Proceedings of the 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Jeju, Republic of Korea, 11–15 July 2017; pp. 3281–3284.

- Coviello, G.; Florio, A.; Avitabile, G.; Talarico, C.; Wang-Roveda, J.M. Distributed Full Synchronized System for Global Health Monitoring Based on FLSA. IEEE Trans. Biomed. Circuits Syst. 2022, 16, 600–608.

- Nafil, K.; Kobbane, A.; Mohamadou, A.-B.; Saidi, A.; Yahya, B.; Oussama, L. Fall Detection System for Elderly People using IoT and Machine Learning technology. In Proceedings of the 2023 3rd International Conference on Electrical, Computer, Communications and Mechatronics Engineering (ICECCME), Tenerife, Canary Islands, Spain, 19–21 July 2023; pp. 1–5.

- Guo, T.; Wan, F.; Shi, Y. Review of Fall Detection Research Based on Wearable Pressure Sensor. Softw. Eng. 2023, 26, 1–7.

- Yan, B. Research on Fall Detection Method Based on Acceleration and Audio. Master’s Thesis, Yanshan University, Qinhuangdao, China, 2023.

- Zhou, L.; Chen, Y.; Liu, M.; Zhu, C. Human fall detection system based on multi-sensor fusion. J. Air Space Early Warn. Res. 2023, 37, 129–135.

- Kiran, S.; Riaz, Q.; Hussain, M.; Zeeshan, M.; Krüger, B. Unveiling Fall Origins: Leveraging Wearable Sensors to Detect Pre-Impact Fall Causes. IEEE Sens. J. 2024, 24, 24086–24095.

- Cheng, S.; Zhang, L.; Chu, Z.; Yu, Y.; Li, X.; Liu, Z. Fall detection system design based on visual recognition and multi-sensor. Transducer Microsyst. Technol. 2024, 43, 91–94.

- Zhang, Y.; Liang, W.; Yuan, X.; Zhang, S.; Yang, G.; Zeng, Z. Deep Learning-Based Abnormal Behavior Detection for Elderly Healthcare Using Consumer Network Cameras. IEEE Trans. Consum. Electron. 2024, 70, 2414–2422.

- Zhang, C.C.; Wang, C.; Dai, X.; Liu, S. Camera-Based Analysis of Human Pose for Fall Detection. In Proceedings of the 2023 Congress in Computer Science, Computer Engineering, & Applied Computing (CSCE), Las Vegas, NV, USA, 24–27 July 2023; pp. 1779–1782.

- Yin, Y.; Lei, L.; Liang, M.; Li, X.; He, Y.; Qin, L. Research on Fall Detection Algorithm for the Elderly Living Alone Based on YOLO. In Proceedings of the 2021 IEEE International Conference on Emergency Science and Information Technology (ICESIT), Chongqing, China, 22–24 November 2021; pp. 403–408.

- Chen, T.; Ding, Z.; Li, B. Elderly Fall Detection Based on Improved YOLOv5s Network. IEEE Access 2022, 10, 91273–91282.

- Zhu, S.; Qian, C.; Kan, X. High-Precision Fall Detection Algorithm with Improved YOLOv5. Comput. Eng. Appl. 2024, 60, 105–114.

- Song, X.; Luo, Q. Pedestrian fall detection algorithm based on improved YOLOv5. In Proceedings of the 2024 5th International Conference on Computer Vision, Image and Deep Learning (CVIDL), Zhuhai, China, 19–21 April 2024; pp. 1302–1306.

- Chen, Y.; Du, R.; Luo, K.; Xiao, Y. Fall detection system based on real-time pose estimation and SVM. In Proceedings of the 2021 IEEE 2nd International Conference on Big Data, Artificial Intelligence and Internet of Things Engineering (ICBAIE), Nanchang, China, 26–28 March 2021; pp. 990–993.

- Seifeldin, M.A.; El-keyi, A.F.; Youssef, M.A. Kalman Filter-Based Tracking of a Device-Free Passive Entity in Wireless Environments. In Proceedings of the WiNTECH’11, Las Vegas, NV, USA, 19–23 September 2011; ACM: New York, NY, USA, 2011; pp. 43–50.

- Wang, Y.; Wu, K.; Ni, L.M. Wifall: Device-free fall detection by wireless networks. IEEE Trans. Mob. Comput. 2016, 16, 581–594.

- Halperin, D.; Hu, W.; Sheth, A.; Wetherall, D. Predictable 802.11 packet delivery from wireless channel measurements. ACM SIGCOMM Comput. Commun. Rev. 2010, 40, 159–170.

- Keenan, R.M.; Tran, L.-N. Fall Detection using Wi-Fi Signals and Threshold-Based Activity Segmentation. In Proceedings of the 2020 IEEE 31st Annual International Symposium on Personal, Indoor and Mobile Radio Communications, London, UK, 31 August–3 September 2020; pp. 1–6.

- Ding, J.; Wang, Y.A. WiFi-based smart home fall detection system using recurrent neural network. IEEE Trans. Consum. Electron. 2020, 66, 308–317.

- Chu, Y.; Cumanan, K.; Sankarpandi, S.K.; Smith, S.; Dobre, O.A. Deep Learning-Based Fall Detection Using WiFi Channel State Information. IEEE Access 2023, 11, 83763–83780.

- Zein, H.E.; Mourad-Chehade, F.; Amoud, H. Leveraging Wi-Fi CSI Data for Fall Detection: A Deep Learning Approach. In Proceedings of the 2023 5th International Conference on Bio-engineering for Smart Technologies (BioSMART), Paris, France, 7–9 June 2023; pp. 1–4.

- Wang, C.; Tang, L.; Zhou, M.; Ding, Y.; Zhuang, X.; Wu, J. Indoor Human Fall Detection Algorithm Based on Wireless Sensing. Tsinghua Sci. Technol. 2022, 27, 1002–1015.

- El Zein, H.; Mourad-Chehade, F.; Amoud, H. Intelligent Real-time Human Activity Recognition Using Wi-Fi Signals. In Proceedings of the 2023 International Conference on Control, Automation and Diagnosis (ICCAD), Rome, Italy, 10–12 May 2023; pp. 1–5.

- He, J.; Zhu, W.; Qiu, L.; Zhang, Q.; Wang, C. An indoor fall detection system based on WiFi signals and genetic algorithm optimized random forest. Wirel. Netw. 2024, 30, 1753–1771.

- Ma, L. Elderly fall monitoring system based on WiFi human behaviour recognition. Electron. Test 2022, 36, 9–11+23.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.

- Hou, Q.; Zhou, D.; Feng, J. Coordinate Attention for Efficient Mobile Network Design. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 13708–13717.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10778–10787.

- Luo, Y. Research and Implementation of Fall Detection Based on Ubiquitous Wireless Signals. Master’s Thesis, University of Electronic Science and Technology of China, Xi’an, China, 2023.

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).