Abstract

Automated guided vehicles (AGVs) play a critical role in indoor environments, where battery endurance and reliable recharging are essential. This study proposes a multi-sensor fusion approach that integrates LiDAR, depth cameras, and infrared sensors to address challenges in autonomous navigation and automatic recharging. The proposed system overcomes LiDAR's blind spots in near-field detection and the restricted range of vision-based navigation. LiDAR provides precise long-range measurements, depth cameras improve close-range visual positioning, and infrared sensors enable accurate docking. Together they significantly improve the AGV's ability to locate and autonomously connect to its charging station. Experimental results show a 25-percentage-point increase in docking success rate (from 70% with LiDAR only to 95%) and a 70% reduction in docking error (from 10 cm to 3 cm). These results demonstrate the effectiveness of the proposed sensor fusion method for reliable, efficient, and precise AGV operation in complex indoor environments.

1. Introduction

Automatic recharging [1] is now an essential function for mobile robots such as AGVs [2]. Docking a robot with its charging station safely and efficiently requires integrating data from multiple sensors [3,4,5,6]. Traditional AGVs rely on infrared sensors alone for automatic recharging. They infer the robot's position relative to the charging station from which infrared receivers pick up a signal and how many do [7], or they use the received signal strength to determine the robot's heading. Other methods exploit infrared reflection to judge the relative position of the robot and the charging station. Some approaches combine infrared sensors with a monocular camera, but monocular vision relies on image processing [8] and is strongly affected by ambient lighting: with too much or too little light, image quality degrades, reducing target recognition and positioning accuracy. Monocular vision also has limited depth perception, making it difficult to obtain accurate 3D information, which can impair the robot's obstacle avoidance in complex environments. Infrared sensors, in turn, have limited effective range and accuracy, particularly in environments with obstacles or reflective interference, which can make signal transmission unstable or cause it to fail. Another approach combines laser and camera sensing, but its detection range is small; if the target cannot be located at the outset, the robot must spend additional time searching for it. A further common method uses LiDAR [9,10]: the robot scans its surroundings, identifies the charging station's position and orientation from the scan data, and then approaches and docks with the charging port. The key to this approach is identifying the charging station in the LiDAR scan data and estimating its pose.
LiDAR-based identification of the charging station relies mainly on accentuating the station's features, for example with regular convex and concave structures, and on covering its surface in a regular pattern with materials of different reflectivity, so that the station can be detected quickly. As a result, such charging stations are usually relatively large. In this paper, LiDAR and cameras are used for autonomous navigation [11,12,13,14] to find the charging station from a distance, and infrared sensors are used for close-range docking to complete automatic recharging. LiDAR provides high-precision distance and angle measurements for detecting and avoiding obstacles, giving the robot strong autonomous navigation capabilities in complex environments. The camera supplements the LiDAR with visual information, recognizing and locating feature objects in the environment for more comprehensive perception. Infrared sensors handle the final stage of recharging, guiding the robot over the last stretch to the charging station and addressing the precise close-range docking that is difficult to achieve with vision and LiDAR alone [15,16,17]. Moreover, infrared sensors are largely insensitive to changes in ambient lighting, so they operate stably under different lighting conditions.

Given these issues, this work focuses on the limitations of traditional LiDAR systems, particularly their blind spots in near-field detection, and on the restricted range of vision-based navigation. It employs a multi-sensor fusion approach that combines LiDAR and cameras to improve the robot's ability to locate the charging station. The LiDAR builds a detailed map by scanning the environment, while the camera provides complementary visual information that enables the robot to recognize feature objects and accurately determine the position and orientation of the charging station.

The mapping and navigation algorithm leverages this integrated information to plan the optimal path, effectively guiding the robot to the charging station. Throughout the navigation process, the LiDAR continuously monitors the environment in real time, identifying and avoiding obstacles to ensure safe passage. As the robot approaches the charging station, it transitions to a close-range infrared docking mode. Infrared transmitters installed on the charging station create multiple signal zones that the robot’s infrared receivers can detect. Based on the received signal strength and direction, the robot adjusts its posture to achieve precise docking.

By integrating these advanced sensing capabilities, the robot can efficiently execute autonomous navigation and automatic recharging tasks, significantly improving the reliability and operational duration of the robotic system. This research demonstrates a novel approach to enhancing the functionality of AGVs in complex indoor environments, making them more adaptable and effective in real-world applications.

The structure of this paper is organized as follows: Section 2 presents the hardware components of the multi-sensor fusion-based autonomous recharging system, detailing the roles of LiDAR, cameras, and infrared sensors. Section 3 describes the software components required for the automatic recharging system, including the algorithms used for navigation and docking. Section 4 presents the experiments conducted to validate the proposed system, covering environment mapping, autonomous navigation, and infrared docking. Finally, Section 5 concludes the paper and outlines potential future work in this area.

2. Multi-Sensor Fusion-Based Autonomous Recharging System for AGVs

2.1. Hardware Components of the Multi-Sensor Fusion-Based Autonomous Recharging System

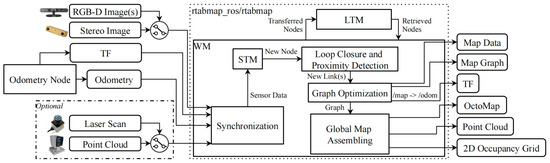

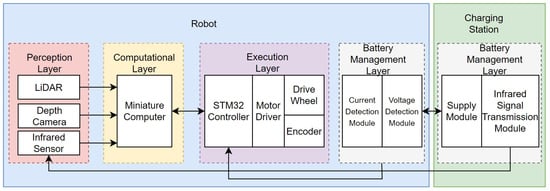

The multi-sensor fusion-based autonomous recharging system for unmanned vehicles consists of several key components: LiDAR, cameras, infrared sensors, the control system, and the charging station. The LiDAR scans the environment in real time to build a high-precision map. This map is combined with the visual information captured by the camera to achieve long-range navigation and obstacle avoidance, and the camera's additional perception data covers the LiDAR's blind spots. When the vehicle approaches the charging station, the infrared sensors take over: infrared transmitters on the charging station emit multiple infrared signal zones that are detected by the infrared receivers on the vehicle, and these signals determine the vehicle's position and orientation relative to the station, enabling precise docking. The control system integrates data from the LiDAR, camera, and infrared sensors and, through multi-sensor fusion algorithms, continuously estimates the vehicle's position and plans its path so that the vehicle can locate the charging station and complete the autonomous recharging process. The system diagram of the autonomous recharging system is shown in Figure 1.
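The paper does not specify how the camera data fills the LiDAR's near-field blind spots. A minimal sketch, assuming both sensors have already been resampled into the same angular bins, discards LiDAR returns outside the sensor's valid range and falls back to the depth-camera range in those bins. The function name `fuse_ranges` and the range limits used in the example are hypothetical.

```python
import math
from typing import List, Optional


def fuse_ranges(lidar: List[float], camera: List[Optional[float]],
                lidar_min: float, lidar_max: float) -> List[float]:
    """Merge per-bin ranges (metres) from a LiDAR scan and a depth camera.

    Both inputs are assumed to share the same angular bins. A LiDAR reading
    outside [lidar_min, lidar_max] (for example inside its near-field blind
    zone, or NaN) is treated as invalid, and the camera value fills the gap.
    When both are valid, the closer and therefore more conservative range wins.
    """
    fused: List[float] = []
    for r_lidar, r_cam in zip(lidar, camera):
        candidates = []
        if lidar_min <= r_lidar <= lidar_max:  # also False for NaN
            candidates.append(r_lidar)
        if r_cam is not None:
            candidates.append(r_cam)
        # math.inf marks a bin in which neither sensor returned anything.
        fused.append(min(candidates) if candidates else math.inf)
    return fused


# Hypothetical 0.15 m LiDAR minimum range; the camera covers the first bin.
print(fuse_ranges([0.05, 2.0, 8.0], [0.12, None, 3.5], 0.15, 12.0))  # [0.12, 2.0, 3.5]
```

Keeping the smaller of two valid ranges is a deliberately cautious choice for obstacle avoidance: an object seen by the camera but below the LiDAR's scan plane is not discarded.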

Figure 1.

Hardware components of the multi-sensor fusion-based autonomous recharging system.

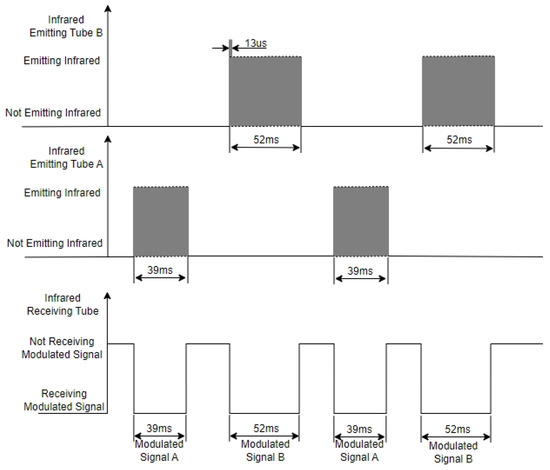

The infrared docking part is a crucial component of the autonomous charging system for the unmanned vehicle, with the hardware mainly comprising infrared transmitting tubes and infrared receiving tubes. Two or more infrared transmitting tubes are installed on the charging station, which create multiple infrared signal zones centered around the charging station. The unmanned vehicle is equipped with two or more infrared receiving tubes that detect these infrared signals to determine the vehicle’s position within the signal zones and its relative orientation to the charging station. By processing and analyzing the received signals, the vehicle can accurately adjust its position and orientation to achieve precise docking with the charging station.

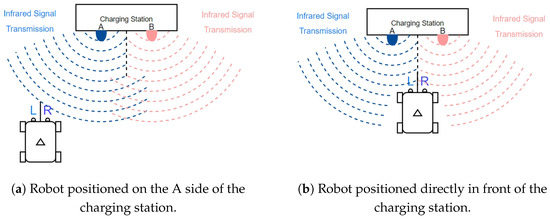

Two infrared transmitting tubes (A and B) are installed on the charging station, separated by a partition that splits their signals into two zones centered on the charging station (zones A and B). Figure 2 shows the robot's position relative to the charging station in side view (Figure 2a) and front view (Figure 2b). The front of the vehicle carries two infrared receiving tubes (L for left and R for right), also separated by a partition, which divides the vehicle's receiving area into two zones. From which emitters' signals the L and R receivers detect, the vehicle can determine the bearing of the charging station relative to itself and the heading deviation between the vehicle's front and the front of the charging station.

Figure 2.

Robot positioning relative to the charging station: side vs. front view.

2.2. Software Components of Multi-Sensor Fusion for Automatic Recharging Systems

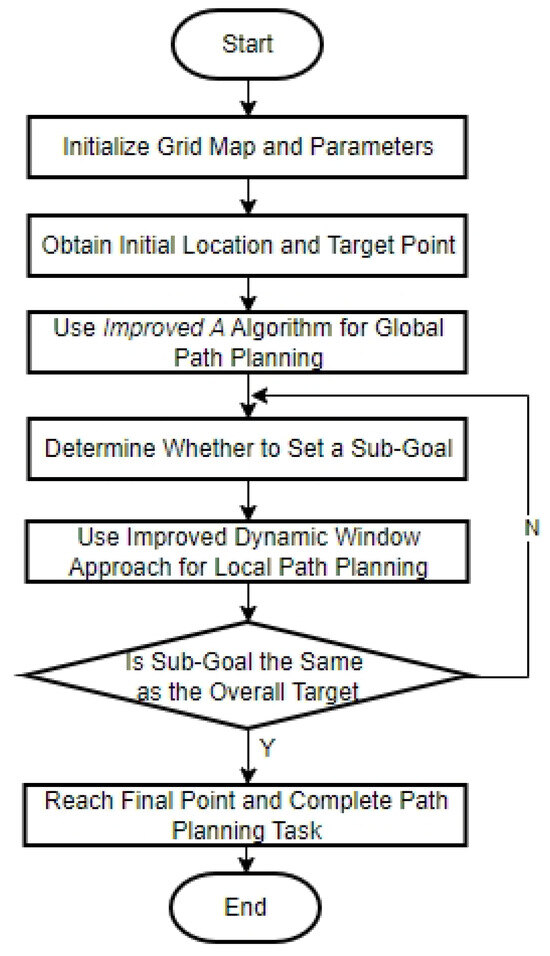

Several steps are required for a robot to complete the autonomous recharging task over longer indoor distances. First, a map must be created. Next, the positions of both the robot and the charging station on the map must be determined. Navigation algorithms then plan the optimal path for the robot to reach the charging station and, during the journey, automatically control the robot to avoid obstacles along the way. Once the robot reaches the vicinity of the charging station and receives the infrared signal emitted by the station, the reception of this signal indicates that the robot has located the charging station.
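The sequence of steps above can be read as a simple state machine. The sketch below is illustrative only: the stage names and the boolean flags (`map_ready`, `localized`, `path_found`, `ir_signal`, `contact`) are hypothetical stand-ins for whatever the mapping, localization, planning, and docking modules actually report.

```python
from enum import Enum, auto


class Stage(Enum):
    MAPPING = auto()
    LOCALIZING = auto()
    PLANNING = auto()
    NAVIGATING = auto()
    IR_DOCKING = auto()
    CHARGING = auto()


def next_stage(stage: Stage, *, map_ready: bool = False, localized: bool = False,
               path_found: bool = False, ir_signal: bool = False,
               contact: bool = False) -> Stage:
    """Advance the hypothetical recharging pipeline by at most one stage."""
    if stage is Stage.MAPPING and map_ready:
        return Stage.LOCALIZING   # map built: locate robot and station on it
    if stage is Stage.LOCALIZING and localized:
        return Stage.PLANNING     # both poses known: plan a path
    if stage is Stage.PLANNING and path_found:
        return Stage.NAVIGATING   # follow the path while avoiding obstacles
    if stage is Stage.NAVIGATING and ir_signal:
        return Stage.IR_DOCKING   # station's infrared signal received: station found
    if stage is Stage.IR_DOCKING and contact:
        return Stage.CHARGING     # charging contacts closed
    return stage                  # otherwise stay in the current stage


s = Stage.MAPPING
for flags in ({"map_ready": True}, {"localized": True}, {"path_found": True},
              {}, {"ir_signal": True}, {"contact": True}):
    s = next_stage(s, **flags)
print(s.name)  # CHARGING
```

Keeping each transition conditional on a single event makes it easy to log where a failed recharging attempt stalled.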

4. Experiments

4.1. Hardware

The robot is driven by a heavy-duty motor intended for high-load applications, providing high torque and operational reliability. The motor is part of an overall design that prioritizes demanding tasks such as transporting heavy loads or traversing rough terrain. The computing setup uses a Jetson Nano as the core processing unit, together with sensors such as the Orbbec Dabai depth camera for vision-based tasks. The robot is also equipped with a microphone array module and an N100 9-axis gyroscope module, which provide additional sensing and stabilization functions during operation. The physical system is shown in Figure 9.

Figure 9.

Illustration of the system components: (a) the robot and (b) the charging station.

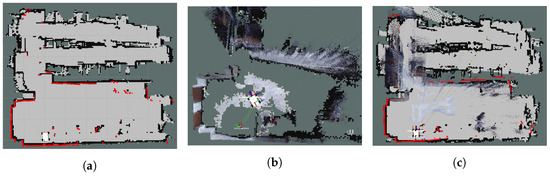

The experimental scene measures approximately 10 m × 8 m and contains distinct open areas, obstacle regions, and clear boundaries, allowing the mapping results to be compared directly. The robot mapped the environment with three methods: gmapping for LiDAR-based mapping, RTAB-Map using only the visual point cloud for purely visual mapping, and RTAB-Map for LiDAR-visual fusion mapping.

For LiDAR-only mapping, the gmapping algorithm was used. It builds indoor maps in real time with high accuracy and low computational cost, especially in small environments. Unlike Hector SLAM, which requires a high LiDAR scan rate and is prone to errors during rapid robot motion, gmapping needs relatively few particles and no separate loop-closure detection, which keeps the computational load low while maintaining high mapping accuracy. In addition, gmapping uses wheel odometry as prior pose information, reducing its dependence on high-frequency LiDAR data.
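To illustrate how odometry supplies a pose prior, the sketch below propagates a particle set with the standard odometry motion model (an initial rotation, a translation, and a final rotation, each perturbed by Gaussian noise). It is a simplified stand-in, not gmapping's implementation: gmapping additionally refines each particle by scan matching and resamples selectively, only when the effective sample size (computed here by `effective_sample_size`) falls below a threshold. The noise values are hypothetical.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Pose = Tuple[float, float, float]  # x [m], y [m], heading [rad]


def odom_delta(prev: Pose, curr: Pose) -> Tuple[float, float, float]:
    """Decompose an odometry step into (rot1, trans, rot2) in the robot frame."""
    dx, dy = curr[0] - prev[0], curr[1] - prev[1]
    trans = math.hypot(dx, dy)
    rot1 = math.atan2(dy, dx) - prev[2] if trans > 1e-9 else 0.0
    rot2 = curr[2] - prev[2] - rot1
    return rot1, trans, rot2


def propagate(particles: List[Pose], prev: Pose, curr: Pose,
              sigma_rot: float = 0.01, sigma_trans: float = 0.02,
              rng: Optional[random.Random] = None) -> List[Pose]:
    """Sample each particle's new pose from the noisy odometry prior."""
    if rng is None:
        rng = random.Random()
    rot1, trans, rot2 = odom_delta(prev, curr)
    moved: List[Pose] = []
    for x, y, th in particles:
        r1 = rot1 + rng.gauss(0.0, sigma_rot)
        t = trans + rng.gauss(0.0, sigma_trans)
        r2 = rot2 + rng.gauss(0.0, sigma_rot)
        heading = th + r1 + r2
        moved.append((x + t * math.cos(th + r1),
                      y + t * math.sin(th + r1),
                      math.atan2(math.sin(heading), math.cos(heading))))  # wrap to [-pi, pi]
    return moved


def effective_sample_size(weights: Sequence[float]) -> float:
    """N_eff = 1 / sum(w_i^2) over normalized weights; low values signal degeneracy."""
    total = sum(weights)
    return 1.0 / sum((w / total) ** 2 for w in weights)
```

Because the odometry step is expressed in the robot frame, a particle with a different heading moves in its own forward direction; passing a seeded `random.Random` makes runs reproducible.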

For purely visual mapping, RTAB-Map was used with only the visual point cloud from the RGB-D camera, and the map was built from camera data as the robot moved through the environment. Although the visual system captures detailed environmental features and can produce fairly accurate maps, it is sensitive to lighting: in areas with insufficient or excessive light, or with few features, it can miss key structures, leaving gaps or inaccuracies that make the map less accurate than LiDAR-based results. In narrow or confined spaces, the camera's limited field of view often prevents a complete map, because important environmental details are not captured. These limitations show the difficulty of relying solely on visual mapping, especially in complex or poorly lit environments.

For LiDAR-visual fusion mapping, RTAB-Map was used to integrate LiDAR data with visual information from the camera. LiDAR provides precise distance measurements and obstacle detection, while the camera contributes detailed visual features, improving the overall resolution and accuracy of the map, particularly in environments with complex textures. By projecting the depth camera's 3D points onto a 2D plane, the camera-derived map updates remain consistent with those generated by LiDAR. The experimental results show that this fusion produces a more complete and reliable map than either single sensor, giving a more realistic and robust depiction of the experimental scene.
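The projection of depth-camera points onto the 2D plane can be sketched as follows. The sketch assumes a pinhole camera with intrinsics `fx`, `fy`, `cx`, `cy`, a level, forward-facing camera mounted at height `cam_height`, and the usual optical-frame convention (x right, y down, z forward). Points within a hypothetical obstacle-height band `[z_min, z_max]` mark grid cells as occupied; RTAB-Map's own grid generation is configured through its parameters and is not reproduced here.

```python
import math
from typing import List, Set, Tuple


def depth_to_grid(depth: List[List[float]], fx: float, fy: float, cx: float, cy: float,
                  cam_height: float, z_min: float, z_max: float,
                  resolution: float) -> Set[Tuple[int, int]]:
    """Project a depth image (metres, row-major) onto 2D grid cells.

    Returns the (forward_index, left_index) cells containing at least one point
    whose height above the floor lies in [z_min, z_max].
    """
    occupied: Set[Tuple[int, int]] = set()
    for v, row in enumerate(depth):
        for u, d in enumerate(row):
            if not (math.isfinite(d) and d > 0.0):
                continue  # 0 or NaN marks a missing depth reading
            # Pinhole back-projection into the optical frame.
            x_opt = (u - cx) * d / fx
            y_opt = (v - cy) * d / fy
            # Level camera: forward = z, left = -x, height = mount height - y.
            forward, left, height = d, -x_opt, cam_height - y_opt
            if z_min <= height <= z_max:  # drop floor points and overhead points
                occupied.add((math.floor(forward / resolution),
                              math.floor(left / resolution)))
    return occupied


depth = [[0.0, 0.0, 0.0],
         [1.0, 2.0, 0.0],
         [0.0, 2.0, 0.0]]
print(sorted(depth_to_grid(depth, 1.0, 1.0, 1.0, 1.0, 0.3, 0.05, 1.0, 0.5)))
# [(2, 2), (4, 0)]
```

The height band is what keeps the floor itself out of the obstacle layer, so the camera-derived cells can be merged with a planar LiDAR grid of the same resolution.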

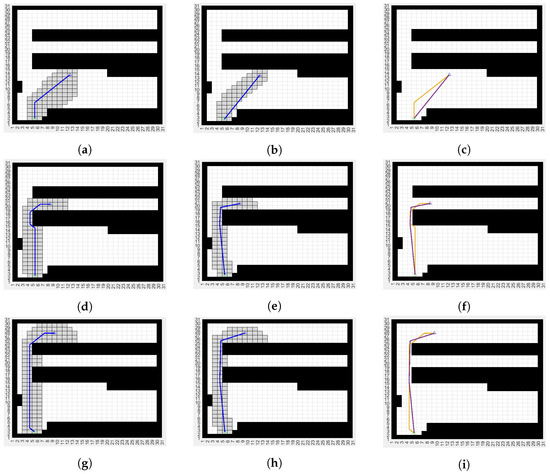

During the mapping process, the robot explored the environment systematically. It first traversed smaller loops to achieve quick loop closure and then gradually expanded its exploration to cover the entire environment. This strategy limited the accumulation of localization errors and allowed the robot to close larger loops without significant drift. After loop closure, the robot revisited certain areas to refine the map, so that both the global structure and local features were captured accurately. In this way, the robot fully explored the environment and produced high-quality maps that supported its navigation and task performance, including the critical sub-task of automatic recharging. The LiDAR mapping, visual mapping, and LiDAR-vision fusion mapping results are shown in Figure 10.

Figure 10.

Comparison of different mapping methods. (a) LiDAR mapping. (b) Visual mapping. (c) LiDAR and visual mapping.

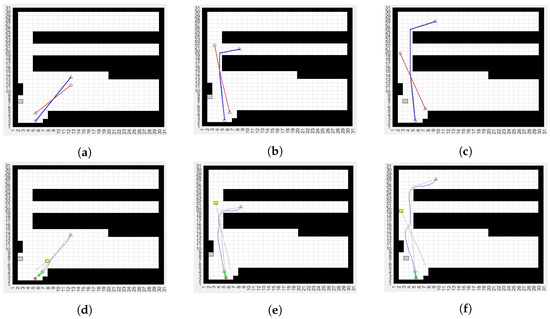

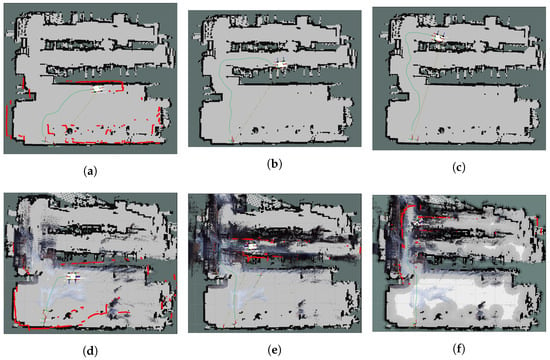

Figure 11 compares the robot's navigation performance with two sensor configurations: LiDAR-only mapping (top row) and LiDAR-visual fusion mapping (bottom row). The six panels show the planned paths from three different starting positions to the charging station. With the LiDAR-only map, the robot detects random obstacles and adjusts its path using the improved A* algorithm combined with the dynamic window approach, reaching the target smoothly. This configuration is effective for obstacle avoidance and path optimization because LiDAR provides precise environmental mapping.
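For reference, the global-planning step can be illustrated with plain A* on an 8-connected occupancy grid using the octile-distance heuristic. This is the textbook algorithm, not the improved A* variant used in the experiments, and the local dynamic-window stage is omitted; the grid encoding (0 free, 1 occupied) is an assumption.

```python
import heapq
import math
from typing import Dict, List, Optional, Set, Tuple

Cell = Tuple[int, int]  # (row, col)


def octile(a: Cell, b: Cell) -> float:
    """Admissible heuristic for 8-connected moves with unit / sqrt(2) costs."""
    dr, dc = abs(a[0] - b[0]), abs(a[1] - b[1])
    return max(dr, dc) + (math.sqrt(2) - 1) * min(dr, dc)


def astar(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Plain A* on an occupancy grid (0 = free, 1 = occupied), 8-connected."""
    rows, cols = len(grid), len(grid[0])

    def free(r: int, c: int) -> bool:
        return 0 <= r < rows and 0 <= c < cols and grid[r][c] == 0

    open_heap = [(octile(start, goal), 0.0, start)]
    g: Dict[Cell, float] = {start: 0.0}
    parent: Dict[Cell, Cell] = {}
    closed: Set[Cell] = set()
    while open_heap:
        _, g_cur, cur = heapq.heappop(open_heap)
        if cur in closed:
            continue  # stale heap entry
        if cur == goal:
            path = [cur]
            while cur in parent:
                cur = parent[cur]
                path.append(cur)
            return path[::-1]
        closed.add(cur)
        r, c = cur
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                if (dr, dc) == (0, 0) or not free(r + dr, c + dc):
                    continue
                # Forbid diagonal moves that cut past an occupied corner.
                if dr and dc and not (free(r + dr, c) and free(r, c + dc)):
                    continue
                nxt = (r + dr, c + dc)
                cand = g_cur + (math.sqrt(2) if dr and dc else 1.0)
                if cand < g.get(nxt, math.inf):
                    g[nxt] = cand
                    parent[nxt] = cur
                    heapq.heappush(open_heap, (cand + octile(nxt, goal), cand, nxt))
    return None  # goal unreachable


grid = [[0, 1, 0],
        [0, 1, 0],
        [0, 0, 0]]
print(astar(grid, (0, 0), (0, 2)))
# [(0, 0), (1, 0), (2, 0), (2, 1), (2, 2), (1, 2), (0, 2)]
```

In a combined planner of the kind described in the text, a global path like this would serve as the reference that a local method such as the dynamic window approach tracks while reacting to newly detected obstacles.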

Figure 11.

Navigation paths starting from three different positions: (Top row) LiDAR-only mapping, (Bottom row) LiDAR and visual mapping. (a) LiDAR mapping (Start 1). (b) LiDAR mapping (Start 2). (c) LiDAR mapping (Start 3). (d) LiDAR and visual mapping (Start 1). (e) LiDAR and visual mapping (Start 2). (f) LiDAR and visual mapping (Start 3).

By contrast, the visual-only mapping system struggled to complete the navigation task because of its sensitivity to lighting variations and its difficulty recognizing environmental features in low-light or cluttered areas. Purely visual data often produced incomplete maps, so the robot failed to plan a reliable path. Fusing LiDAR and visual sensors significantly improved mapping quality and navigation accuracy. The bottom row of Figure 11 shows how combining LiDAR's accurate distance measurements with the visual system's environmental detail gives the robot a more robust and comprehensive map, allowing it to navigate complex environments more effectively and reliably than with either sensor alone.

4.2. Infrared Docking

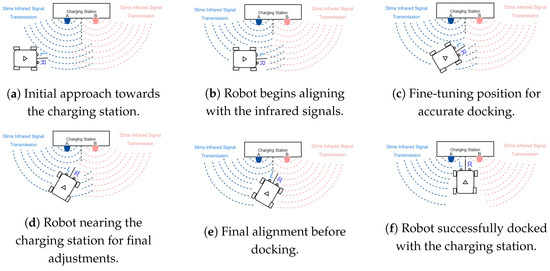

When the robot approaches the charging station and enters close-range docking mode, the infrared emitters on the charging station begin emitting 38 kHz infrared carrier signals of different durations. The robot's infrared receivers identify these signals to determine its position and orientation relative to the charging station. The docking process is shown in Figure 12.
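The text states only that the two emitters use 38 kHz carriers of different durations. Assuming the receivers output the demodulated burst length in microseconds, that emitters A and B transmit in turn rather than simultaneously, and that their nominal burst lengths are a hypothetical 1000 µs and 2000 µs, each burst can be attributed to an emitter by matching its duration within a tolerance.

```python
from typing import Dict, Iterable, Optional, Set

# Hypothetical nominal burst lengths in microseconds; the paper gives no values.
NOMINAL_US: Dict[str, float] = {"A": 1000.0, "B": 2000.0}


def classify_burst(duration_us: float, tolerance: float = 0.2) -> Optional[str]:
    """Return the emitter whose nominal burst length is within +/- tolerance."""
    for emitter, nominal in NOMINAL_US.items():
        if abs(duration_us - nominal) <= tolerance * nominal:
            return emitter
    return None  # unrecognized burst, e.g. ambient infrared noise


def emitters_seen(bursts_us: Iterable[float]) -> Set[str]:
    """Collect the emitters identified in one receiver's recent bursts."""
    return {e for e in map(classify_burst, bursts_us) if e is not None}


print(sorted(emitters_seen([980.0, 2050.0, 1500.0])))  # ['A', 'B']
```

With a 20% tolerance the acceptance windows (800 to 1200 µs and 1600 to 2400 µs) do not overlap, so a burst can never be attributed to both emitters.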

Figure 12.

Real infrared docking process.

If the left-side receiver receives a signal from emitter A and the right-side receiver receives a signal from emitter B, the robot adjusts its movement according to the predefined logic to ensure it faces the charging interface of the charging station. Once the robot is aligned with the charging station, it will continue moving forward and make contact with the charging port to complete the docking. Throughout the process, the robot continuously monitors the changes in infrared signals and adjusts its direction and position to ensure successful docking.
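A minimal sketch of such predefined logic maps the set of emitters seen by each receiver to a motion command. Treating "L sees only A, R sees only B" as the aligned state follows one reading of the text; the remaining branches and all command names are hypothetical and would depend on the actual emitter and receiver geometry.

```python
from typing import Set


def docking_command(left: Set[str], right: Set[str]) -> str:
    """Choose a motion command from the emitters seen by receivers L and R.

    `left` and `right` hold the emitter IDs ("A", "B") each receiver detected.
    """
    if not left and not right:
        return "search"            # no signal: rotate in place to reacquire the station
    if left and not right:
        return "turn_left"         # only L sees the station: it lies to the left
    if right and not left:
        return "turn_right"        # only R sees the station: it lies to the right
    if left == {"A"} and right == {"B"}:
        return "forward"           # aligned with the charging interface: approach
    if left | right == {"A"}:
        return "correct_toward_B"  # robot sits entirely in zone A: offset laterally
    if left | right == {"B"}:
        return "correct_toward_A"  # robot sits entirely in zone B
    return "fine_adjust"           # near the centre line: small heading correction


print(docking_command({"A"}, {"B"}))  # forward
```

Calling this function on every new set of receiver readings gives the continuous monitoring described above: the command changes as soon as the robot drifts out of the aligned pattern.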

The experiments were conducted to evaluate the performance of the AGV in terms of map construction, path planning, navigation, and automatic docking under different sensor configurations. The tested configurations include infrared sensors, LiDAR, visual systems, and a combination of these sensors. The AGV was tasked with autonomously navigating from various starting positions to a charging station, avoiding obstacles along the way, and performing an automatic docking maneuver at the station. Table 3 summarizes the results of the tests, showing the advantages of sensor fusion over single-sensor methods.

Table 3.

Results comparing different sensor configurations.

The mapping process was initiated using three different sensor configurations: LiDAR with the gmapping algorithm, a visual system with the RTAB-Map, and a fusion of LiDAR and visual data with the RTAB-Map. LiDAR-based mapping using gmapping resulted in accurate and robust maps, but visual-only mapping struggled in low-light conditions and narrow spaces, leading to incomplete maps. The fusion of LiDAR and visual systems provided superior map quality, with fewer blind spots and more detailed environmental information. Path planning and navigation tests showed that the AGV using sensor fusion navigated more efficiently, as reflected in the reduced path planning distance and time to reach the target. Additionally, the AGV was able to avoid obstacles more effectively compared to using either LiDAR or visual systems alone. Finally, the automatic docking task demonstrated that relying solely on infrared or visual sensors led to higher docking errors, while the multi-sensor fusion system significantly improved docking precision and success rate. The experimental results clearly demonstrate the benefits of sensor fusion, particularly when operating in complex indoor environments where lighting conditions and obstacle configurations vary.
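The docking figures reported in the abstract can be checked with simple arithmetic. Raising the success rate from 70% to 95% is an absolute gain of 25 percentage points, or about 36% relative to the LiDAR-only baseline, while reducing the docking error from 10 cm to 3 cm is a 70% relative reduction.

```python
def change(before: float, after: float) -> tuple:
    """Return (absolute change, relative change as a percentage of `before`)."""
    delta = after - before
    return delta, 100.0 * delta / before


# Docking figures from the abstract (LiDAR only vs. multi-sensor fusion).
success_abs, success_rel = change(70.0, 95.0)  # success rate, %
error_abs, error_rel = change(10.0, 3.0)       # docking error, cm

print(f"success rate: {success_abs:+.0f} pp ({success_rel:+.1f}% relative)")
# success rate: +25 pp (+35.7% relative)
print(f"docking error: {error_abs:+.0f} cm ({error_rel:+.0f}% relative)")
# docking error: -7 cm (-70% relative)
```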

5. Conclusions

This paper investigates automatic recharging technology for unmanned vehicles based on multi-sensor fusion and proposes a method that integrates LiDAR, depth cameras, and infrared sensors for autonomous navigation and automatic recharging. LiDAR enables accurate environmental perception and obstacle avoidance through long-range scanning. The combination of the improved A* algorithm and the dynamic window approach allows the robot to avoid random obstacles effectively while preserving global path optimality. Infrared sensors are used for precise docking in the final stage, ensuring accurate alignment with the charging station under various lighting conditions.

However, the sensors may be sensitive to environmental conditions such as lighting variations and surface reflectivity, which may limit how well the experimental results carry over to practical deployments. Future studies should conduct experiments under a variety of real-world conditions to evaluate and improve the robustness of the system, and should assess how different environmental factors affect sensor performance.

Furthermore, the cost and complexity of the proposed sensors must be carefully evaluated against their benefits in enhancing navigation. Future work should explore more cost-effective alternatives or strategies for sensor integration that maintain performance while reducing overall system complexity.

Moreover, the multi-sensor fusion approach requires real-time processing, raising questions about computational demand and latency. Addressing these challenges will be essential for ensuring that the system operates efficiently and effectively in dynamic environments. Future research should investigate optimization techniques to enhance processing speed and reduce latency in sensor data fusion.

In conclusion, the fusion of multiple sensors in the automatic recharging system fully leverages the advantages of each sensor, significantly enhancing the stability and adaptability of the robotic system. The experimental results demonstrate that the proposed method can achieve efficient autonomous navigation and automatic recharging in complex indoor environments, greatly improving system efficiency and reliability. By implementing these suggested improvements, future research can further refine the system’s practicality and performance, making it more suitable for real-world applications.

Author Contributions

Conceptualization, Y.X. and L.W.; methodology, Y.X.; software, L.L.; validation, Y.X., L.W. and L.L.; formal analysis, Y.X.; investigation, Y.X.; resources, L.L.; data curation, L.W.; writing—original draft preparation, Y.X.; writing—review and editing, L.L.; visualization, Y.X.; supervision, L.W.; project administration, Y.X.; funding acquisition, L.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant number 41771487, and the Hubei Provincial Outstanding Young Scientist Fund, grant number 2019CFA086.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Su, K.L.; Liao, Y.L.; Lin, S.P.; Lin, S.F. An interactive auto-recharging system for mobile robots. Int. J. Autom. Smart Technol. 2014, 4, 43–53.

- Moshayedi, A.J.; Jinsong, L.; Liao, L. AGV (automated guided vehicle) robot: Mission and obstacles in design and performance. J. Simul. Anal. Novel Technol. Mech. Eng. 2019, 12, 5–18.

- Song, G.; Wang, H.; Zhang, J.; Meng, T. Automatic docking system for recharging home surveillance robots. IEEE Trans. Consum. Electron. 2011, 57, 428–435.

- Hao, B.; Du, H.; Dai, X.; Liang, H. Automatic recharging path planning for cleaning robots. Mobile Inf. Syst. 2021, 2021, 5558096.

- Meena, M.; Thilagavathi, P. Automatic docking system with recharging and battery replacement for surveillance robot. Int. J. Electron. Comput. Sci. Eng. 2012, 1148–1154.

- Niu, Y.; Habeeb, F.A.; Mansoor, M.S.G.; Gheni, H.M.; Ahmed, S.R.; Radhi, A.D. A photovoltaic electric vehicle automatic charging and monitoring system. In Proceedings of the 2022 International Symposium on Multidisciplinary Studies and Innovative Technologies (ISMSIT), Ankara, Turkey, 20–22 October 2022; pp. 241–246.

- Rao, M.V.S.; Shivakumar, M. IR based auto-recharging system for autonomous mobile robot. J. Robot. Control (JRC) 2021, 2, 244–251.

- Ding, G.; Lu, H.; Bai, J.; Qin, X. Development of a high precision UWB/vision-based AGV and control system. In Proceedings of the 2020 5th International Conference on Control and Robotics Engineering (ICCRE), Osaka, Japan, 24–26 April 2020; pp. 99–103.

- Liu, Y.; Piao, Y.; Zhang, L. Research on the positioning of AGV based on Lidar. J. Phys. Conf. Ser. 2021, 1920, 012087.

- Yan, L.; Dai, J.; Tan, J.; Liu, H.; Chen, C. Global fine registration of point cloud in LiDAR SLAM based on pose graph. J. Geod. Geoinf. Sci. 2020, 3, 26–35.

- Gao, H.; Ma, Z.; Zhao, Y. A fusion approach for mobile robot path planning based on improved A* algorithm and adaptive dynamic window approach. In Proceedings of the 2021 IEEE 4th International Conference on Electronics Technology (ICET), Chengdu, China, 7–10 May 2021; pp. 882–886.

- Tang, G.; Tang, C.; Claramunt, C.; Hu, X.; Zhou, P. Geometric A-star algorithm: An improved A-star algorithm for AGV path planning in a port environment. IEEE Access 2021, 9, 59196–59210.

- Sun, Y.; Zhao, X.; Yu, Y. Research on a random route-planning method based on the fusion of the A* algorithm and dynamic window method. Electronics 2022, 11, 2683.

- Zhou, S.; Cheng, G.; Meng, Q.; Lin, H.; Du, Z.; Wang, F. Development of multi-sensor information fusion and AGV navigation system. In Proceedings of the 2020 IEEE 4th Information Technology, Networking, Electronic and Automation Control Conference (ITNEC), Chongqing, China, 12–14 June 2020; pp. 2043–2046.

- Zhang, J.; Singh, S. Laser–visual–inertial odometry and mapping with high robustness and low drift. J. Field Robot. 2018, 35, 1242–1264.

- Jiang, Y.; Leach, M.; Yu, L.; Sun, J. Mapping, navigation, dynamic collision avoidance and tracking with LiDAR and vision fusion for AGV systems. In Proceedings of the 2023 28th International Conference on Automation and Computing (ICAC), Birmingham, UK, 30 August–1 September 2023; pp. 1–6.

- Dai, Z.; Guan, Z.; Chen, Q.; Xu, Y.; Sun, F. Enhanced object detection in autonomous vehicles through LiDAR—Camera sensor fusion. World Electr. Vehicle J. 2024, 15, 297.

- Labbé, M.; Michaud, F. RTAB-Map as an open-source lidar and visual simultaneous localization and mapping library for large-scale and long-term online operation. J. Field Robot. 2019, 36, 416–446.

- Das, S. Simultaneous localization and mapping (SLAM) using RTAB-MAP. arXiv 2018, arXiv:1809.02989.

- Gomez-Ojeda, R.; Moreno, F.A.; Zuniga-Noël, D.; Scaramuzza, D.; Gonzalez-Jimenez, J. PL-SLAM: A stereo SLAM system through the combination of points and line segments. IEEE Trans. Robot. 2019, 35, 734–746.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).