Abstract

Recent advancements in mobile neural networks, such as the squeeze-and-excitation (SE) attention mechanism, have significantly improved model performance. However, they often overlook the crucial interaction between location information and channels. The interaction of multiple dimensions in feature engineering is of paramount importance for achieving high-quality results. The Transformer model and its successors, such as Mamba and Vision Mamba, have effectively combined features and linked location information. This approach has transitioned from natural language processing (NLP) to computer vision (CV). This paper introduces a novel attention mechanism for mobile neural networks inspired by the structure of Vision Mamba (Vim). It adopts a “1 + 3” architecture to embed multi-dimensional information into channel attention and is termed the “Multi-Dimensional Vim-like Attention Mechanism”. The proposed method splits the input into two major branches: the left branch retains the original information for subsequent feature screening, while the right branch divides the channel attention into three one-dimensional feature encoding processes. These processes aggregate features along one channel direction and two spatial directions, simultaneously capturing long-range dependencies and preserving precise location information. The resulting feature maps are then combined with the left branch to produce direction-aware, location-sensitive, and channel-aware attention maps. The multi-dimensional Vim-like attention module is simple and can be seamlessly integrated into classical mobile neural networks such as MobileNetV2 and ShuffleNetV2 with minimal computational overhead. Experimental results demonstrate that this attention module adapts well to mobile neural networks with a low parameter count, delivering excellent performance on the CIFAR-100 and MS COCO datasets.

1. Introduction

Attention mechanisms are pivotal techniques in deep learning, enabling models to focus on specific parts of the input data when making predictions. By selectively emphasizing the most pertinent segments of the input, these mechanisms significantly enhance model performance across a wide range of tasks, including natural language processing (NLP) [1,2,3,4] and computer vision (CV) [5,6,7,8,9,10,11,12]. They allow models to determine “what” and “where” to attend, thereby reducing the influence of less relevant information. Consequently, attention mechanisms have garnered substantial interest within the computer vision research community [13,14,15,16]. Traditional methods for calculating channel attention typically involve strategies for aggregating spatial information and then reweighting channels accordingly. Building on the ideas of Mamba [17] and taking into account the findings of MambaOut [18], we redefine multidimensional attention as a “1 + 3”-branch module to design a new attention module.

In recent years, attention mechanisms have achieved significant advancements in deep learning, particularly in the domain of NLP (natural language processing), becoming indispensable tools for technological progress. Various attention mechanisms have been proposed and applied to diverse tasks, demonstrating exceptional performance and versatility. Soft attention [2] focuses on critical parts of a sequence by calculating context vectors as weighted sums, allowing models to assign different weights to various parts of the input. Hard attention [3] utilizes discontinuous selection, focusing on a single input element; despite its training challenges, it excels in specific tasks. The self-attention mechanism, a core component of the Transformer model [1,19], enables models to consider all positions of a sequence simultaneously, effectively capturing long-range dependencies. Multi-head attention enhances model expressiveness by independently computing attention in multiple subspaces and aggregating the results.

Moreover, hierarchical attention [20] processes hierarchical data by computing attention at both low and high levels, allowing models to focus on salient information across multiple layers. Convolutional attention, leveraging the strengths of convolutional neural networks, is well-suited for processing images or time series data. Channel-wise dimensionality reduction convolution is one of the most effective methods for managing model complexity. Existing attention mechanisms, such as the squeeze-and-excitation (SE) module [6] and the convolutional block attention module (CBAM) [8], have made significant strides in enhancing feature representation. The SE module, though simple and computationally efficient, is limited to channel-wise attention and fails to capture spatial information. CBAM improves upon this by sequentially applying channel and spatial attention, yet it does not fully exploit the interdependencies between these dimensions and can increase computational complexity. Compared to SE and CBAM, coordinate attention (CA) [21] embeds direction-specific information along the spatial dimension into the channel attention and selects an appropriate channel dimensionality reduction ratio to achieve performance equivalent to SE attention.

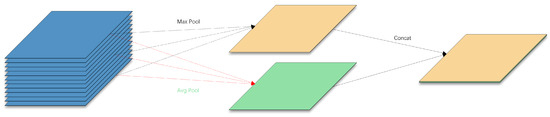

Inspired by the success of Mamba [17] in language modeling and Vision Mamba [22] in visual modeling, we propose a lightweight attention module based on Vision Mamba’s architecture. The proposed multi-dimensional Vim-like attention (MDVA) module integrates spatial attention, channel attention, channel pooling, dimensionality reduction pooling (DR-pooling, shown in Figure 1), and convolution operations across multiple dimensions within a unified framework. This strategy not only mitigates computational redundancy but also captures interdependencies in a more comprehensive and efficient manner, thereby offering a more potent mechanism for feature enhancement than existing approaches. This research’s primary contributions can be summarized as follows:

Figure 1.

Explains dimensionality reduction pooling (DR-pooling).

- We propose multi-dimensional Vim-like attention (MDVA), adopting the structure of Vim [22] and, following MambaOut [18], eliminating the SSM (state space model) [23] from the data stream. We redefine multidimensional attention as a “1 + 3” branch module that processes an input tensor and outputs a tensor of the same shape.

- The proposed attention module has only 0.0026 M parameters while achieving performance equivalent or superior to that of SE [6] and CBAM [8].

- Extensive experiments on CIFAR-100 classification and dense prediction downstream tasks demonstrate that MDVA outperforms SE, the well-established, high-performance traditional channel attention mechanism.

2. Background and Related Work

To provide context for the proposed multi-dimensional Vim-like attention (MDVA) mechanism, we briefly review the related literature, focusing on feature grouping in convolutional neural networks, the evolution of attention mechanisms, and the Mamba system.

2.1. Feature Grouping

Feature grouping in convolutional neural networks (CNNs) [24,25,26,27,28,29,30] is a critical area of research focused on enhancing the efficiency and effectiveness of feature extraction and representation. To address computational constraints, AlexNet [31] introduced the concept of splitting feature maps into two separate GPU (graphics processing unit) pathways, thereby reducing computational complexity. Building on this idea, ResNeXt [26,32] employs grouped convolutions to create cardinality, referring to the number of groups in convolutions. This approach increases the diversity of learned features while maintaining manageable computational costs.

Xception [33] further advances this methodology by utilizing depthwise separable convolutions, which divide the convolution operation into depthwise convolutions (applying a single convolutional filter per input channel) and pointwise convolutions (1 × 1 convolutions that combine the outputs) [34]. Graph convolutional networks (GCNs) [35,36,37] extend convolutional operations to graph-structured data, modeling relationships and interactions within feature groups in CNNs for enhanced contextual understanding. BaGFN [38] models high-order feature interactions in a flexible and explicit manner and designs a new generalized attention mechanism to dynamically learn the importance weights of crossing features.
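To make the saving from depthwise separable convolution concrete, the following minimal sketch counts the weights of a standard convolution and of its depthwise separable counterpart. The layer sizes are hypothetical values chosen only for illustration, and biases are ignored.

```python
def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Number of weights in a standard k x k convolution (bias omitted)."""
    return c_in * c_out * k * k


def depthwise_separable_params(c_in: int, c_out: int, k: int) -> int:
    """Depthwise k x k (one filter per input channel) plus pointwise 1 x 1."""
    depthwise = c_in * k * k   # one k x k filter for each input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes the channels
    return depthwise + pointwise


# Hypothetical layer sizes, not taken from the paper.
c_in, c_out, k = 64, 128, 3
standard = standard_conv_params(c_in, c_out, k)
separable = depthwise_separable_params(c_in, c_out, k)
ratio = separable / standard   # equals 1 / c_out + 1 / k**2

print(standard, separable, round(ratio, 4))
```

For these sizes the separable variant needs roughly 12% of the weights of the standard convolution, which is why the technique is common in mobile architectures.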

Despite these advancements, traditional modules often consider only a subset of channels, focusing on exploiting the inter-relationships of channels. While they aim to construct informative features by fusing both spatial and channel-wise information, there remains room for improvement in capturing comprehensive channel-wise dependencies.

2.2. Attention Mechanisms

Attention mechanisms have become a cornerstone in modern deep learning, significantly enhancing the performance of neural networks across various tasks by enabling models to focus on relevant parts of the input data. A prominent example is SENet [6], introduced by Hu et al., which recalibrates channel-wise feature responses by explicitly modeling interdependencies between channels. CBAM (convolutional block attention module) [8] combines both channel and spatial attention, sequentially applying channel attention to capture the importance of different feature channels and spatial attention to focus on critical regions in the spatial dimensions of the feature maps. Subsequent works, such as non-local neural networks [39], GAT (graph attention network) [40], GALA (global attention local aggregation) [41], and EMAttention [42], expand upon this concept by incorporating various spatial attention mechanisms or developing sophisticated attention blocks. EAPT [43] introduced deformable attention, which learns the offset of each position within a patch, enabling the acquisition of non-fixed attention information despite segmenting a fixed-sized patch. DaViT [44] uses two self-attention mechanisms, “spatial token” and “channel token”, to address the growth of computational complexity as channel dimensions increase.

In contrast to methods that rely on computationally expensive and heavy non-local or self-attention blocks, we have designed a more efficient approach to capturing location information and channel interdependencies, thereby enhancing the feature representation of mobile networks. The method proposed in this research employs a “1 + 3” structure, which consists of a main body and three one-dimensional encoding processes. This approach not only captures detailed spatial and channel-wise dependencies but also maintains computational efficiency. Consequently, it outperforms other lightweight attention mechanisms, such as CBAM [8] and TA (Transform attention) [1].

2.3. The Mamba System

The advent of Mamba [17] has led to significant progress in deep learning models. The authors propose a new selective state space model (SSM) [23], which improves upon previous work in several key aspects, thereby achieving the modeling capabilities of Transformers [1] while scaling linearly with sequence length.

Firstly, the authors enhance the parameterized matrix by making the SSM parameters dependent on the input data, thereby rendering the model data-driven. Secondly, they design a selective scanning algorithm, a simple selection mechanism that enables the model to filter out irrelevant information and retain relevant data, addressing previous models’ inefficiencies in data selection. Additionally, to tackle the challenge of computational efficiency, the authors develop a recursive hardware-aware algorithm that optimizes computational performance on GPUs. This algorithm minimizes frequent I/O access between different memory levels, reduces memory access time, and increases processing speed linearly with sequence length, making it three times faster than convolution-based SSM models.
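The idea of input-dependent SSM parameters can be illustrated with a heavily simplified scalar recurrence. This is only a sketch under stated assumptions: the state is a single number, the projections `w_delta`, `w_b`, and `w_c` are hypothetical scalar weights, and the plain Python loop stands in for the hardware-aware scan, which it does not attempt to reproduce.

```python
import math


def softplus(v: float) -> float:
    """Smooth positive function, used here to keep the step size positive."""
    return math.log1p(math.exp(v))


def selective_scan(xs, a=-1.0, w_delta=1.0, w_b=1.0, w_c=1.0):
    """Scalar selective state space recurrence (illustrative only).

    The step size, input gate, and output gate all depend on the current
    input x, which is what makes the recurrence 'selective'.
    """
    h = 0.0
    ys = []
    for x in xs:                      # one pass: cost grows linearly with len(xs)
        delta = softplus(w_delta * x)  # input-dependent step size
        b = w_b * x                    # input-dependent input projection
        c = w_c * x                    # input-dependent output projection
        h = math.exp(delta * a) * h + delta * b * x  # discretized state update
        ys.append(c * h)
    return ys


outputs = selective_scan([1.0, 0.0, 2.0])
print(outputs)
```

Because the decay factor `exp(delta * a)` changes with each input, the recurrence can forget or retain past state depending on the data, which is the filtering behavior described above.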

In terms of model architecture, the authors combine the previous SSM architecture with the MLP module of Transformers into a streamlined module, resulting in a simplified end-to-end neural network architecture devoid of attention and MLP blocks. This new architecture, named Mamba, is simpler and more homogeneous, featuring a selective state space design.

Subsequently, Lianghui Zhu et al. applied Mamba to the field of computer vision, introducing Vision Mamba (Vim) [22]. Soon after, Weihao Yu (MambaOut) [18] suggested that the SSM might not be necessary for some visual tasks. Based on this finding, we examined Vim’s architecture and incorporated the MambaOut recommendations to develop this new model.

This work highlights the continuous evolution and refinement of deep learning models, emphasizing the importance of adapting architectures to specific tasks to enhance performance and efficiency.

3. Materials and Methods

In this section, we first review coordinate attention blocks and analyze the position encoding of the coordinate attention (CA) module [21]. We then explore the Mamba architecture, which was previously undocumented, followed by the introduction of the multi-dimensional Vim-like attention module. Inspired by the Mamba framework [17], this module demonstrates the importance of cross-dimensional dependencies and cross-scale fusion. We further compare the complexity of the new method with other standard attention mechanisms.

3.1. Improved Coordinate Attention Blocks Method

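The coordinate attention (CA) block [21] is a computational unit designed to enhance the expressive power of feature learning in mobile networks. It can take any intermediate feature tensor x as input and output a transformed tensor y with augmented representations, where y has the same dimensions as x. Unlike traditional channel attention, which converts feature tensors into a single feature vector through 2D global pooling, coordinate attention decomposes channel attention into two one-dimensional feature encoding processes that aggregate features along two spatial directions. Following [21], for the c-th channel of an input with height H and width W, this can be represented as:

$$z_c^h(h) = \frac{1}{W} \sum_{0 \le i < W} x_c(h, i), \qquad z_c^w(w) = \frac{1}{H} \sum_{0 \le j < H} x_c(j, w)$$

This approach captures long-range dependencies along one spatial direction while preserving precise positional information along the other spatial direction. The resulting feature maps are then encoded into a pair of direction-aware and position-sensitive attention maps.

To generate these attention maps, which can be applied to the input feature map to enhance the representation of the objects of interest, the two pooled descriptors are concatenated and passed through a shared 1 × 1 convolution F_1 and a non-linear activation δ, which can be formulated as:

$$f = \delta\left(F_1\left(\left[z^h, z^w\right]\right)\right)$$

Then, f is divided into two tensors along the spatial dimension, f^h and f^w, each of which is transformed by a 1 × 1 convolution (F_h and F_w) and a sigmoid function σ:

$$g^h = \sigma\left(F_h\left(f^h\right)\right), \qquad g^w = \sigma\left(F_w\left(f^w\right)\right)$$

Finally, the coordinates modulate the output of the block as:

$$y_c(i, j) = x_c(i, j) \times g_c^h(i) \times g_c^w(j)$$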

This encoding process allows the coordinate attention to more accurately locate the exact position of the object of interest, thereby helping the entire model to better recognize the image.
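The two directional pooling steps of coordinate attention described above can be sketched in plain Python for a single channel. This is a minimal illustration and not the implementation from [21]: the learned 1 × 1 convolutions are replaced by the identity (an assumption made to keep the example short), so only the pooling and the gating structure are shown.

```python
import math


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def pool_along_width(x):
    """Average each row of an H x W map: one value per height index."""
    return [sum(row) / len(row) for row in x]


def pool_along_height(x):
    """Average each column of an H x W map: one value per width index."""
    height = len(x)
    return [sum(row[j] for row in x) / height for j in range(len(x[0]))]


def coordinate_gate(x):
    """Gate one channel with direction-aware weights.

    Assumption: the learned convolutions are omitted (identity), so the
    gates come directly from the pooled descriptors.
    """
    g_h = [sigmoid(v) for v in pool_along_width(x)]    # one gate per row
    g_w = [sigmoid(v) for v in pool_along_height(x)]   # one gate per column
    return [[x[i][j] * g_h[i] * g_w[j] for j in range(len(x[0]))]
            for i in range(len(x))]


channel = [[1.0, 3.0],
           [5.0, 7.0]]
z_h = pool_along_width(channel)    # [2.0, 6.0]
z_w = pool_along_height(channel)   # [3.0, 5.0]
gated = coordinate_gate(channel)
```

Each output position is scaled by one weight that depends only on its row and one that depends only on its column, which is how the block keeps positional information that 2D global pooling would discard.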

3.2. MDVA Method

As mentioned above, the goal of this research is to investigate how to model channel attention and spatial attention efficiently and cost-effectively, borrowing the structure of Vision Mamba [22], without increasing the number of parameters. In this section, we introduce a novel attention mechanism that differs from CBAM [8] and SENet [6], which rely on a significant number of learnable parameters to establish interdependencies between channels. Drawing inspiration from the structure of Vision Mamba, we propose an attention mechanism that integrates channel and spatial attention with minimal parameter usage, named the multidimensional Vim-like attention.

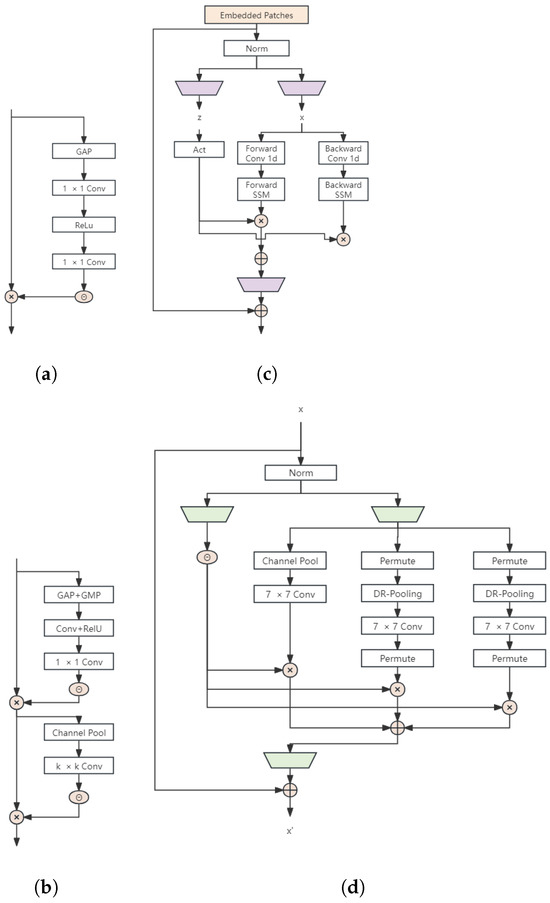

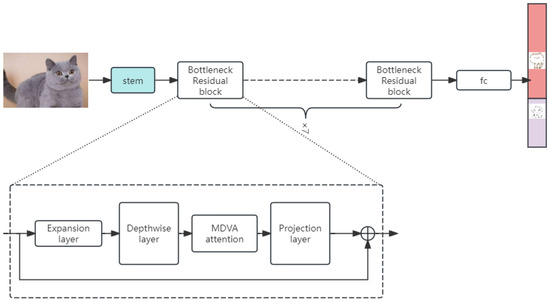

The multidimensional Vim-like attention module is depicted in Figure 2. As the name implies, an input tensor x is processed by the multidimensional fused Vim-like attention module. It first passes through the left branch, which retains the original information. The input x then passes through a structure comprising three parallel branches. Each branch is responsible for capturing one of the dimensions of the tensor: channels (C), height (H), or width (W). The two branches on the right capture and fuse channel attention, while the left branch builds spatial attention, forming a “1 + 3” configuration. The outputs of all three branches are then aggregated using a simple average after multiplying by the result of the left branch.

Figure 2.

Comparisons with different attention modules: (a) squeeze-and-excitation (SE) module; (b) convolutional block attention module (CBAM); (c) Vision Mamba (Vim) module; (d) multi-dimensional Vim-like attention module. ⊖ denotes “nn.ReLU”, ⊗ denotes broadcast element-wise multiplication, and ⨁ denotes broadcast element-wise addition.

This design aims to build interdimensional interactions to compensate for the independence of channels in traditional attention mechanisms. By integrating these interactions with minimal additional parameters, the Vim-like attention module effectively enhances feature representation without the computational overhead typically associated with other attention mechanisms.

3.2.1. Multi-Dimension Interaction

Traditional methods for calculating channel attention typically involve strategies for aggregating spatial information and then reweighting channels accordingly. Although these methods have achieved remarkable success, particularly the convolutional block attention module (CBAM) [8], which effectively integrates channel attention and spatial attention, they also have certain limitations. CBAM has demonstrated superior performance in various visual tasks and is widely used in deep learning applications. However, traditional attention modules primarily extract global context information through global average pooling (GAP) [45], which can result in the loss of important details. In CBAM, the channel attention mechanism derives the importance weight of each channel through both global average pooling and global maximum pooling. The spatial attention mechanism, on the other hand, determines the importance weight of each spatial position by performing average pooling and maximum pooling across the channel dimensions of the feature map. Despite their efficacy, these methods overlook the connections between channels and spatial dimensions and fail to capture the interdependence between these dimensions, thus ignoring the relationship between the two.

Inspired by the construction of multi-dimensional attention and Mamba’s linear complexity operations, we propose using the concept of multi-dimensional fusion interaction. By employing three branches to capture dependencies between the (C, H), (C, W), and (H, W) dimensions of the input tensor, and interacting with the left branch akin to Vision Mamba [22], we introduce multi-dimensional interaction in the new attention mechanism. This approach allows us to more comprehensively capture the interrelationship between channels and spatial dimensions, thereby enhancing the overall efficacy of the attention mechanism.

3.2.2. Multi-Dimensional Attention

Weihao Yu (MambaOut) [18] empirically demonstrated that the state space model (SSM) is not essential for image classification tasks due to the absence of long sequences or autoregressive properties. However, for detection and segmentation tasks, where long sequence properties are present, the SSM may offer potential benefits. Based on these findings and the MambaOut recommendation, we have eliminated the SSM module from Mamba, like MambaOut, and redefined multidimensional attention as a “1 + 3”-branch module that takes an input tensor and outputs a tensor of equivalent shape.

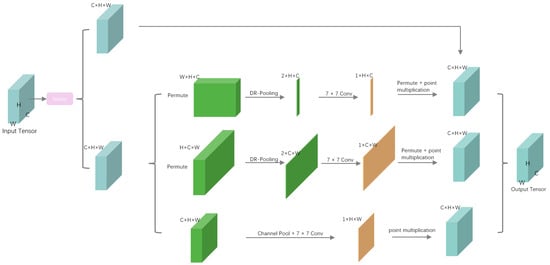

In the left branch, we pass the input tensor through a sigmoid activation layer to retain the original channel information. Meanwhile, in the right branch, we feed the input tensor to each of the three components of the proposed multidimensional attention module.

In the first component, we establish interaction between the height dimension and the channel dimension by rotating the input 90 degrees counterclockwise along the height axis. The rotated tensor is reduced via DR-pooling and then undergoes convolutional processing with a 7 × 7 kernel followed by batch normalization. The attention weights are generated through a sigmoid activation layer and are then rotated back 90 degrees clockwise along the height axis to restore the original input shape.

In the second component, the input is rotated 90 degrees counterclockwise along the width axis. The rotated tensor is reduced via DR-pooling and, after convolutional processing and batch normalization, is passed through a sigmoid activation layer to generate the attention weights, which are rotated back 90 degrees clockwise along the width axis to return to the original input shape.

In the third component, the channels of the input tensor are reduced directly using dimensionality reduction pooling (DR-pooling). The reduced tensor is then passed through a convolutional layer followed by a batch normalization layer.

The attention weights generated by each component are independently applied to the corresponding tensors from the left branch. Finally, the outputs of the components are averaged to produce the result. The MDVA attention flow is shown in Figure 3.

Figure 3.

Multi-dimensional Vim-like attention module.
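The “1 + 3” data flow described above can be sketched in plain Python on nested lists. This is a rough illustration under several loudly stated assumptions rather than the authors’ implementation: DR-pooling is assumed to be a mean over the leading axis, each rotation is modeled as a swap of two axes, and the convolution and batch normalization layers are omitted (treated as the identity). All function names are hypothetical.

```python
import math


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def shape(t):
    return (len(t), len(t[0]), len(t[0][0]))


def permute(t, order):
    """Reorder the axes of a 3-D nested list, like a tensor permute."""
    dims = shape(t)
    a, b, c = (dims[o] for o in order)
    out = [[[0.0] * c for _ in range(b)] for _ in range(a)]
    for i in range(dims[0]):
        for j in range(dims[1]):
            for k in range(dims[2]):
                src = (i, j, k)
                p, q, r = (src[o] for o in order)
                out[p][q][r] = t[i][j][k]
    return out


def dr_pool_mean(t):
    """ASSUMED stand-in for DR-pooling: average over the leading axis."""
    a, b, c = shape(t)
    return [[sum(t[i][j][k] for i in range(a)) / a for k in range(c)]
            for j in range(b)]


def branch_weights(x, order):
    """One right-hand component: rotate, pool, gate, rotate back."""
    rotated = permute(x, order)             # bring the axis to squeeze to the front
    pooled = dr_pool_mean(rotated)          # 2-D map over the two remaining axes
    gate = [[sigmoid(v) for v in row] for row in pooled]  # conv + BN omitted
    broadcast = [gate for _ in range(len(rotated))]       # repeat along leading axis
    return permute(broadcast, order)        # each axis swap is its own inverse


def mdva_sketch(x):
    """Left branch sigmoid(x) times the average of three component weights."""
    left = [[[sigmoid(v) for v in row] for row in plane] for plane in x]
    orders = [(0, 1, 2), (1, 0, 2), (2, 1, 0)]  # squeeze C, H, and W in turn
    weights = [branch_weights(x, o) for o in orders]
    c, h, w = shape(x)
    return [[[left[i][j][k] * sum(wt[i][j][k] for wt in weights) / 3.0
              for k in range(w)] for j in range(h)] for i in range(c)]


x = [[[0.0] * 4 for _ in range(3)] for _ in range(2)]  # C=2, H=3, W=4
y = mdva_sketch(x)
```

The output has the same shape as the input, matching the description of the module. In a real module each component would apply its own learned convolution before the sigmoid, so the three weight maps would differ even for simple inputs.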

By incorporating the multi-dimensional reduction module, the system can effectively integrate spatial and channel-wise information, enhancing the overall performance of the attention mechanism without increasing computational complexity.

By redefining the multidimensional attention mechanism in this way, we can efficiently capture cross-dimensional dependencies and enhance the representational capacity of the model without increasing the number of parameters. This approach is particularly beneficial for tasks requiring detailed spatial and channel interdependencies.

To summarize, the process can be represented by the following equation:

where x represents the input tensor; ∗ represents DR-pooling; σ represents the sigmoid activation function; and the three convolution operators represent the standard convolutional layers defined by kernel size k in the three branches of multidimensional attention.
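One plausible way to write the process described in this section is given below. It is a reading of the prose description, not the authors’ exact formula. The symbols $\hat{x}_1$ and $\hat{x}_2$ (the input rotated along the height and width axes), $\psi_1$, $\psi_2$, $\psi_3$ (the convolutional layers of the three components, with batch normalization folded in), and the overline (rotation back to the original shape) are notational assumptions introduced here.

```latex
y = \frac{1}{3}\,\sigma(x) \otimes \Big(
      \overline{\sigma\big(\psi_1(\hat{x}_1^{*})\big)}
    + \overline{\sigma\big(\psi_2(\hat{x}_2^{*})\big)}
    + \sigma\big(\psi_3(x^{*})\big)
\Big)
```

Here $\sigma(x)$ is the output of the left branch, $\otimes$ is broadcast element-wise multiplication, and the factor $1/3$ is the simple average over the three components.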

4. Experiment

4.1. Experiment Setup

We present detailed experiments and results to demonstrate the performance and efficiency of the proposed multi-dimensional Vim-like attention module (MDVA). We evaluate MDVA on challenging vision tasks, including classification on the CIFAR-100 dataset and object detection on the MS COCO dataset. To thoroughly assess the effectiveness of the final module, we conducted extensive ablation experiments to analyze the contribution of each component to the overall performance of the proposed multidimensional attention mechanism.

CIFAR-100 is a dataset used in machine learning and computer vision, containing 60,000 color images of 32 × 32 pixels. It has 100 classes, each with 600 images, split into 50,000 training and 10,000 test images. The 100 classes are grouped into 20 superclasses, each with 5 classes. For example, the superclass “vehicles” includes bicycle, bus, motorcycle, pickup truck, and train.

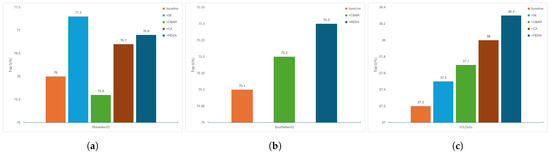

The MS COCO dataset is a large-scale dataset used in computer vision, containing over 330,000 images with 80 object categories. It includes detailed annotations like object segmentation masks, bounding boxes, key points, and captions. MS COCO is widely used for tasks such as object detection, segmentation, key point detection, and image captioning. Results can be found in Figure 4.

Figure 4.

Performance of different attention methods: (a) MobileNetV2; (b) ShuffleNetV2; (c) YOLOv5s. The y-axis labels from left to right are top-1 accuracy. Our approach achieves close to the best result in CIFAR-100 classification compared with the SE block and CBAM, and performs even better in COCO object detection.

We conducted the experiments using PyTorch. During training, we utilized an adaptive moment estimation with weight decay (AdamW) optimizer with a learning rate of and a weight decay set to . The learning rate followed a cosine schedule, starting with an initial value of . The models were trained on an NVIDIA RTX 2080ti GPU with a batch size of 32. We used MobileNetV2 [46] and ShuffleNetV2 [47] as the baseline model, training all models for 100 epochs. Figure 5 shows how to integrate the MDVA attention mechanism into MobileNetV2. For data augmentation, we applied RandomResizedCrop, RandomHorizontalFlip, and Normalize, using a mean of [0.485, 0.456, 0.406] and a standard deviation of [0.229, 0.224, 0.225].

Figure 5.

This image shows how the MDVA attention mechanism can be integrated into MobileNetV2 while showing the flow of the entire model.

4.2. Ablation Studies

4.2.1. Importance of Multidimensional Vim-like Attention

To demonstrate the performance of the proposed multidimensional attention mechanism, we conducted a series of ablation experiments. The results of these experiments are listed in Table 1. We systematically removed horizontal attention, vertical attention, or channel attention from the multidimensional attention mechanism to evaluate the importance of encoding information across multiple dimensions. As shown in Table 1, a model incorporating attention in all three dimensions performs comparably to a model with attention in only a single dimension. However, the best results are achieved when horizontal, vertical, and channel attention are combined. These experimental results indicate that embedding multidimensional information is more beneficial for image classification, especially when the number of learnable parameters and computational cost remain equivalent.

Table 1.

Ablation studies for MDVA on MobileNetV2.

4.2.2. Different Weight Multipliers

In this study, we compare the performance of the proposed approach with CBAM using different weight multipliers on one classic mobile network, ShuffleNetV2 [47], which uses depthwise separable convolution and channel shuffle to facilitate information exchange and feature fusion while reducing the number of parameters and the computational effort. We adopt three typical weight multipliers: 1.5, 1.0, and 0.5. As shown in Table 2, when using ShuffleNetV2 as the baseline, models with MDVA yield results similar to those with CBAM attention [8] while consuming fewer parameters. Models incorporating the proposed MDVA perform particularly well under the 1.5 multiplier.

Table 2.

Comparisons of different attention methods under different multipliers when taking ShuffleNetV2 [47] as the baseline.

4.3. Image Classification

4.3.1. CIFAR-100 Classification Experiment

To rigorously evaluate the new module, we conducted classification experiments on the CIFAR-100 dataset. The results reveal that the overhead of the Vim-like multidimensional attention module is minimal in terms of both parameters and computation. This efficiency prompted us to integrate the proposed module, MDVA (multi-dimensional Vim-like attention), into the lightweight MobileNet and ShuffleNet networks. Table 3 summarizes the experimental results based on the MobileNet and ShuffleNet architectures.

Table 3.

Comparison of various attention methods on CIFAR-100 in terms of network parameters (in millions), FLOPs, and top-1 validation accuracy (%).

Using MobileNetV2 [46] as the baseline, we compared the multidimensional attention module with other lightweight attention modules for mobile networks, including the widely used SE attention [6], CBAM [8], and CA [21], as detailed in Table 3.

As indicated in Table 3, incorporating these attention mechanisms improved classification performance by approximately 1%. Notably, the spatial attention module of CBAM did not significantly enhance mobile network performance. However, the proposed multidimensional Vim-like attention yielded the best results.

4.3.2. Stronger Baseline Evaluation

To further demonstrate the advantages of the proposed MDVA over existing attention mechanisms in more powerful mobile networks, we used ShuffleNetV2 [47] as the benchmark model. ShuffleNetV2 is a lightweight convolutional neural network architecture, proposed by the Megvii technology team in 2018, that is designed for efficient computation on mobile and edge devices. We replaced the SE attention in ShuffleNetV2 with the newly designed multidimensional Vim-like attention module, adhering to the original model specifications outlined in the ShuffleNetV2 paper.

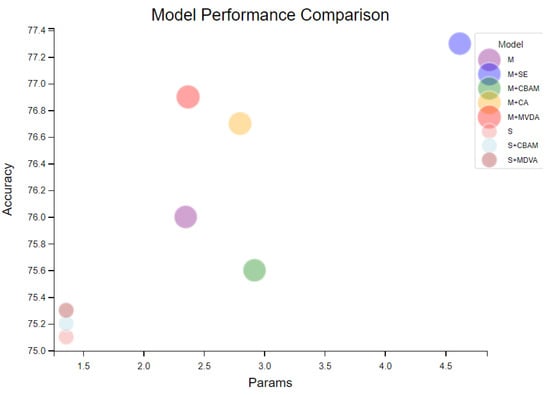

The results, shown in Table 3, indicate that the new multidimensional Vim-like attention outperforms the original efficient network with CBAM attention. This demonstrates that the proposed multidimensional Vim-like attention can effectively enhance performance in powerful mobile networks. According to this experiment, we observe significant improvements compared to models with other attention mechanisms as shown in Figure 6.

Figure 6.

Trade-offs between accuracy and training throughput. Measured with a batch size of 32 on an RTX 2080 Ti GPU (graphics processing unit).

4.4. Object Detection

4.4.1. Implementation Details

To demonstrate the superiority of the proposed method in object detection, we evaluated MDVA on the COCO dataset. The COCO dataset’s training set consists of 118,000 images, and the standard preprocessing requires resizing images to a uniform size of . The implementation is based on PyTorch and YOLOv5s.

During the training, we set the size of the input image to , and we trained the model on an RTX 2080ti GPU, setting the batch size to 5 and using synchronized batch normalization. Cosine annealing learning rate scheduling was employed, with an initial learning rate set to 0.01. The model was trained for a total of 300 epochs.
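The cosine annealing schedule mentioned above can be sketched as a closed-form function of the epoch. The initial learning rate of 0.01 and the 300 epochs come from the text. The minimum learning rate of 0 and the per-epoch (rather than per-iteration) update are assumptions, since the text does not state them.

```python
import math


def cosine_lr(epoch: int,
              total_epochs: int = 300,
              lr_init: float = 0.01,
              lr_min: float = 0.0) -> float:
    """Cosine-annealed learning rate at a given epoch.

    lr_min = 0.0 is an assumed floor; the paper does not specify one.
    """
    progress = epoch / total_epochs
    return lr_min + 0.5 * (lr_init - lr_min) * (1.0 + math.cos(math.pi * progress))


# Learning rate at the start, midpoint, and end of training.
schedule = [cosine_lr(e) for e in (0, 150, 300)]
print(schedule)
```

The rate starts at the initial value, falls to half of it at the midpoint, and reaches the floor at the final epoch.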

These experimental settings allowed us to thoroughly evaluate the performance of MDVA in object detection and compare it with other methods. The experimental results show that the new multi-dimensional attention mechanism significantly outperforms traditional attention mechanisms in terms of performance. Notably, in object detection tasks, MDVA demonstrates superior localization and recognition capabilities.

4.4.2. Results on COCO

In this experiment, we integrated various attention strategies into the standard YOLOv5s [48] backbone and applied the same default settings across all implementations to evaluate their impact on object detection performance, with results summarized in Table 4. The baseline model, YOLOv5s, achieved a mean average precision (mAP) of 37.2 with 7.23 million parameters and 16.5 GFLOPs. Enhancements to the baseline model using different attention mechanisms yielded notable improvements. Incorporating the squeeze-and-excitation (SE) module slightly increased the parameter count to 7.27 million and the computation to 16.6 GFLOPs, resulting in a mAP of 37.5, an improvement of 0.3. Adding the convolutional block attention module (CBAM) further improved the mAP to 37.7, with similar parameter and FLOP increments. The coordinate attention (CA) mechanism increased the mAP more significantly to 38.0 while maintaining nearly the same parameter and FLOP counts. Finally, the introduction of the multi-dimensional Vim-like attention (MDVA) mechanism achieved the highest mAP of 38.3, again with no additional parameter or FLOP cost compared to the baseline. These results indicate that integrating attention mechanisms can effectively enhance the detection performance of YOLOv5s on the COCO dataset without significantly impacting computational efficiency.

Table 4.

Results on MS COCO dataset.
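The gains quoted in the text can be recomputed directly from the reported mAP values. The short script below uses only numbers stated in Section 4.4.2 and rounds to one decimal place to avoid floating-point noise. The dictionary keys are labels chosen here for readability.

```python
# mAP values as reported in the text for YOLOv5s on MS COCO.
reported_map = {
    "YOLOv5s": 37.2,
    "+ SE": 37.5,
    "+ CBAM": 37.7,
    "+ CA": 38.0,
    "+ MDVA": 38.3,
}

baseline = reported_map["YOLOv5s"]
gains = {name: round(value - baseline, 1)
         for name, value in reported_map.items()
         if name != "YOLOv5s"}

# Relative parameter overhead of the SE variant (7.27 M vs. 7.23 M).
se_param_overhead_pct = round((7.27 - 7.23) / 7.23 * 100, 2)

print(gains)
print(se_param_overhead_pct)
```

On these figures MDVA improves the baseline by 1.1 mAP, compared with 0.3 for SE, 0.5 for CBAM, and 0.8 for CA.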

5. Conclusions

This paper systematically explored attention mechanisms and introduced the multi-dimensional Vim-like attention (MDVA) mechanism, successfully integrating it into MobileNetV2 and ShuffleNetV2 backbones. The experiments demonstrated that MDVA significantly improved classification and detection accuracy compared to other attention mechanisms such as SE, CBAM, and CA, while maintaining a compact model size suitable for mobile devices with minimal additional computational overhead. According to the ablation experiment, simultaneously processing and fusing information from the three dimensions helps extract feature information to the greatest extent. However, this study has limitations, including a narrow scope of experiments and a lack of in-depth theoretical analysis. Future work will explore MDVA’s integration into a broader range of architectures, apply it to diverse computer vision tasks, investigate hardware efficiency optimizations, conduct in-depth theoretical analyses, and leverage automated mechanism search techniques to further improve and demonstrate its potential in real-world scenarios. Additionally, the current model performs linear operations on the input’s three dimensions and then combines them through another branch. In the future, we can explore pre-combination techniques and conduct a series of experiments to verify their efficacy in feature extraction and downstream image tasks.

Author Contributions

Conceptualization, J.S.; methodology, J.S.; software, J.S.; validation, J.S.; formal analysis, P.R.; investigation, Z.L.; resources, J.S.; data curation, J.S.; writing—original draft preparation, J.S.; writing—review and editing, J.S.; visualization, J.S.; supervision, R.Z.; project administration, R.Z.; funding acquisition, R.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key R&D Plan under grant numbers 2021YFF0601200 and 2021YFF0601204.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

More information about the datasets is available at https://www.cs.toronto.edu/~kriz/cifar.html (accessed on 8 April 2009) and https://cocodataset.org/#download (accessed on 21 February 2015).

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30.

- Bahdanau, D.; Cho, K.; Bengio, Y. Neural Machine Translation by Jointly Learning to Align and Translate. arXiv 2014, arXiv:1409.0473.

- Xu, K.; Ba, J.; Kiros, R.; Cho, K.; Courville, A.; Salakhudinov, R.; Zemel, R.; Bengio, Y. Show, Attend and Tell: Neural Image Caption Generation with Visual Attention. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 7–9 July 2015.

- Shaw, P.; Uszkoreit, J.; Vaswani, A. Self-Attention with Relative Position Representations. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, New Orleans, LA, USA, 1–6 June 2018; Volume 2 (Short Papers).

- Howard, A.; Sandler, M.; Chen, B.; Wang, W.; Chen, L.C.; Tan, M.; Chu, G.; Vasudevan, V.; Zhu, Y.; Pang, R.; et al. Searching for MobileNetV3. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. arXiv 2018, arXiv:1709.01507.

- Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level Accuracy with 50x Fewer Parameters and <0.5 MB Model Size. arXiv 2016, arXiv:1602.07360.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Computer Vision—ECCV 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2018; Volume 11211, pp. 3–19.

- Bello, I.; Zoph, B.; Le, Q.; Vaswani, A.; Shlens, J. Attention Augmented Convolutional Networks. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019.

- Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual Attention Network for Scene Segmentation. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

- Wang, F.; Jiang, M.; Qian, C.; Yang, S.; Li, C.; Zhang, H.; Wang, X.; Tang, X. Residual Attention Network for Image Classification. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Chen, Z.; Qiu, G.; Li, P.; Zhu, L.; Yang, X.; Sheng, B. MNGNAS: Distilling Adaptive Combination of Multiple Searched Networks for One-Shot Neural Architecture Search. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 13489–13508.

- Cao, Y.; Xu, J.; Lin, S.; Wei, F.; Hu, H. GCNet: Non-Local Networks Meet Squeeze-Excitation Networks and Beyond. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV) Workshops, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1971–1980.

- Liu, Y.; Shao, Z.; Hoffmann, N. Global Attention Mechanism: Retain Information to Enhance Channel-Spatial Interactions. arXiv 2021, arXiv:2112.05561.

- Brauwers, G.; Frasincar, F. A General Survey on Attention Mechanisms in Deep Learning. IEEE Trans. Knowl. Data Eng. 2023, 35, 3279–3298.

- Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Vedaldi, A. Gather-Excite: Exploiting Feature Context in Convolutional Neural Networks. arXiv 2018, arXiv:1810.12348.

- Gu, A.; Dao, T. Mamba: Linear-Time Sequence Modeling with Selective State Spaces. arXiv 2023, arXiv:2312.00752.

- Yu, W.; Wang, X. MambaOut: Do We Really Need Mamba for Vision? arXiv 2024, arXiv:2405.07992.

- Mehta, S.; Rastegari, M. MobileViT: Light-weight, General-purpose, and Mobile-friendly Vision Transformer. arXiv 2022, arXiv:2110.02178.

- Yang, Z.; Yang, D.; Dyer, C.; He, X.; Smola, A.; Hovy, E. Hierarchical Attention Networks for Document Classification. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, San Diego, CA, USA, 12–17 June 2016.

- Hou, Q.; Zhou, D.; Feng, J. Coordinate Attention for Efficient Mobile Network Design. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021.

- Zhu, L.; Liao, B.; Zhang, Q.; Wang, X.; Liu, W.; Wang, X. Vision mamba: Efficient visual representation learning with bidirectional state space model. arXiv 2024, arXiv:2401.09417.

- Gu, A.; Goel, K.; Ré, C. Efficiently Modeling Long Sequences with Structured State Spaces. arXiv 2021, arXiv:2111.00396.

- Gao, H.; Wang, Z.; Cai, L.; Ji, S. ChannelNets: Compact and Efficient Convolutional Neural Networks via Channel-Wise Convolutions. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 2570–2581.

- Gholami, A.; Kwon, K.; Wu, B.; Tai, Z.; Yue, X.; Jin, P.; Zhao, S.; Keutzer, K. SqueezeNext: Hardware-Aware Neural Network Design. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 1719–1728.

- Xie, S.; Girshick, R.; Dollar, P.; Tu, Z.; He, K. Aggregated Residual Transformations for Deep Neural Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Zhang, H.; Wu, C.; Zhang, Z.; Zhu, Y.; Lin, H.; Zhang, Z.; Sun, Y.; He, T.; Mueller, J.; Manmatha, R.; et al. ResNeSt: Split-Attention Networks. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), New Orleans, LA, USA, 10–20 June 2022.

- Tan, M.; Le, Q. EfficientNetV2: Smaller Models and Faster Training. In Proceedings of the 38th International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 10096–10106.

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6848–6856.

- Luo, Q.; Shao, J.; Dang, W.; Geng, L.; Zheng, H.; Liu, C. An efficient multi-scale channel attention network for person re-identification. Vis. Comput. 2024, 40, 3515–3527.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Commun. ACM 2017, 60, 84–90.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. arXiv 2015, arXiv:1512.03385.

- Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

- Shi, L.; Zhang, Y.; Cheng, J.; Lu, H. Two-Stream Adaptive Graph Convolutional Networks for Skeleton-Based Action Recognition. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

- Wu, F.; Zhang, T.; Souza, A.; Fifty, C.; Yu, T.; Weinberger, K. Simplifying Graph Convolutional Networks. arXiv 2019, arXiv:1902.07153.

- Li, Q.; Han, Z.; Wu, X.M. Deeper Insights Into Graph Convolutional Networks for Semi-Supervised Learning. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; AAAI Press: Palo Alto, CA, USA, 2018.

- Xie, Z.; Zhang, W.; Sheng, B.; Li, P.; Chen, C.L.P. BaGFN: Broad Attentive Graph Fusion Network for High-Order Feature Interactions. IEEE Trans. Neural Netw. Learn. Syst. 2023, 34, 4499–4513.

- Wang, X.; Girshick, R.; Gupta, A.; He, K. Non-local Neural Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

- Liu, Z.; Zhou, J. Graph Attention Networks. In Synthesis Lectures on Artificial Intelligence and Machine Learning, Introduction to Graph Neural Networks; Springer: Cham, Switzerland, 2020; pp. 39–41.

- Linsley, D.; Shiebler, D.; Eberhardt, S.; Serre, T. Learning what and where to attend. In Proceedings of the International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019.

- Li, H.; Zhang, Q.; Wang, Y.; Zhang, J. Efficient Multi-Scale Attention Module with Cross-Spatial Learning. Pattern Recognit. 2021, 113, 107848.

- Lin, X.; Sun, S.; Huang, W.; Sheng, B.; Li, P.; Feng, D.D. EAPT: Efficient Attention Pyramid Transformer for Image Processing. IEEE Trans. Multimed. 2023, 25, 50–61.

- Ding, M.; Xiao, B.; Codella, N.; Luo, P.; Wang, J.; Yuan, L. DaViT: Dual Attention Vision Transformers. arXiv 2022, arXiv:2204.03645.

- Lin, M.; Chen, Q.; Yan, S. Network In Network. arXiv 2013, arXiv:1312.4400.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

- Ma, N.; Zhang, X.; Zheng, H.T.; Sun, J. ShuffleNet V2: Practical Guidelines for Efficient CNN Architecture Design. In Computer Vision—ECCV 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2018; Volume 11218, pp. 122–138.

- Zhu, X.; Lyu, S.; Wang, X.; Zhao, Q. TPH YOLOv5: Improved YOLOv5 Based on Transformer Prediction Head for Object Detection on Drone-captured Scenarios. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Montreal, BC, Canada, 11–17 October 2021.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).