Vision-Based Hand Rotation Recognition Technique with Ground-Truth Dataset

Abstract

1. Introduction

2. Related Works

2.1. Cognitive Ability Assessment Based on Behavior Data

2.2. Behavioral Data Measurement Technologies

2.3. Image-Processing-Based Hand Recognition

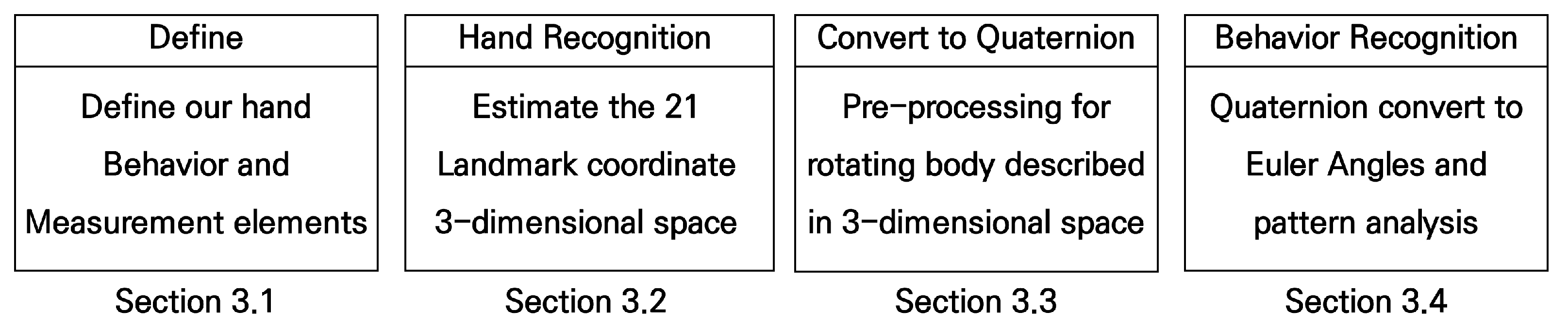

3. Methods

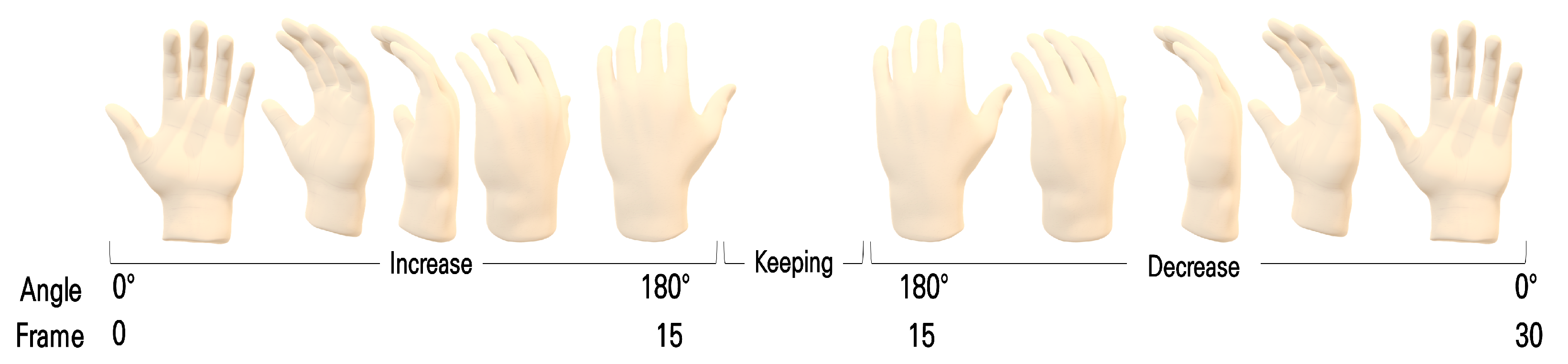

3.1. Defining Hand Behavior and Measurement Elements

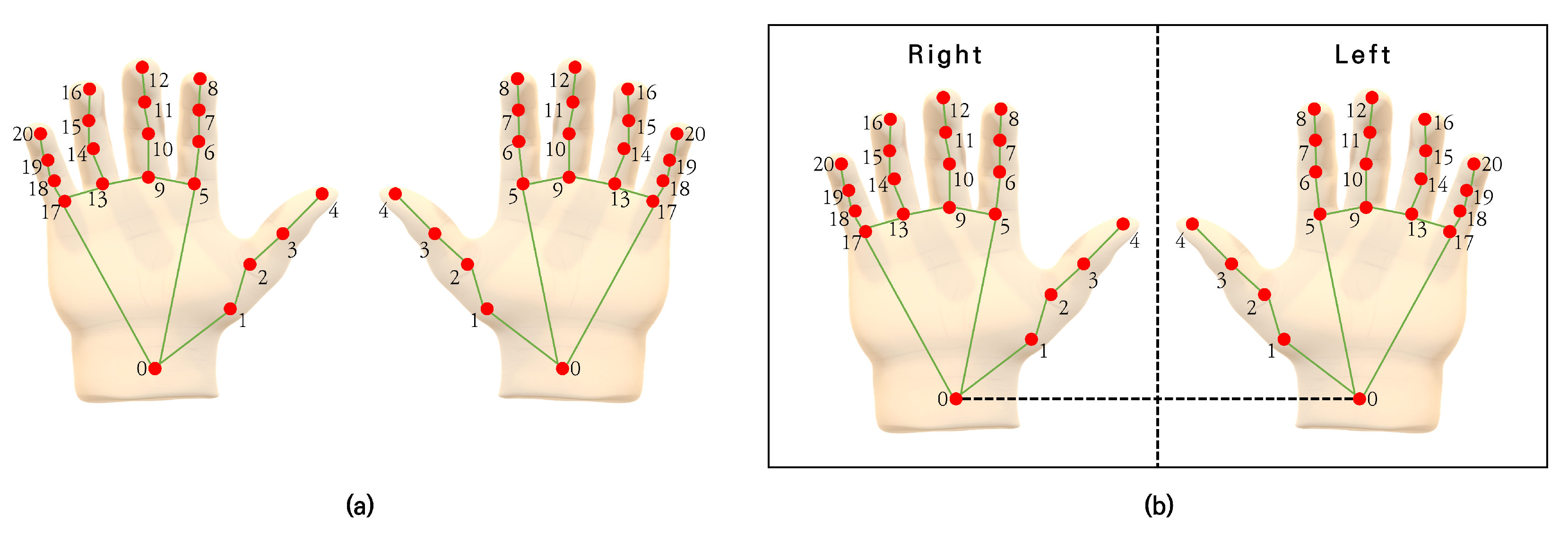

3.2. Hand Recognition

3.3. Converting to Quaternion

3.4. Behavior Recognition

3.4.1. Quaternion to Euler Angles

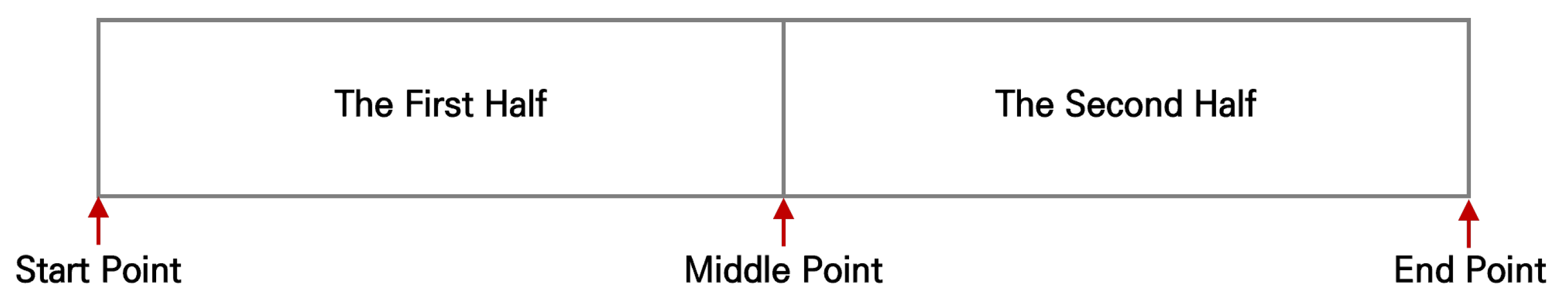
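This excerpt does not spell out the conversion. The sketch below converts a unit quaternion (w, x, y, z) into roll, pitch, and yaw angles in degrees using the widely used ZYX (yaw-pitch-roll) convention. Two things are assumptions: that the authors used this axis order, and which of the three angles corresponds to the hand's rotation axis. The function name `quaternion_to_euler` is illustrative only.

```python
import math
from typing import Tuple


def quaternion_to_euler(w: float, x: float, y: float, z: float) -> Tuple[float, float, float]:
    """Convert a quaternion to (roll, pitch, yaw) in degrees.

    Assumes the common ZYX (yaw-pitch-roll) convention; the paper's actual
    axis order is not stated in this excerpt.
    """
    # Normalize to guard against small numerical drift in the estimated quaternion.
    n = math.sqrt(w * w + x * x + y * y + z * z)
    if n == 0.0:
        raise ValueError("zero-norm quaternion")
    w, x, y, z = w / n, x / n, y / n, z / n

    # Rotation about the x-axis (roll).
    roll = math.atan2(2.0 * (w * x + y * z), 1.0 - 2.0 * (x * x + y * y))
    # Rotation about the y-axis (pitch); clamp to avoid asin domain errors near +/-90 deg.
    s = max(-1.0, min(1.0, 2.0 * (w * y - z * x)))
    pitch = math.asin(s)
    # Rotation about the z-axis (yaw).
    yaw = math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))
    return math.degrees(roll), math.degrees(pitch), math.degrees(yaw)
```

For example, a 90° rotation about the x-axis, `quaternion_to_euler(math.cos(math.radians(45)), math.sin(math.radians(45)), 0.0, 0.0)`, yields a roll of about 90° with zero pitch and yaw. Because `atan2` returns angles within ±180°, large rotations (such as the 180° tasks) may cross that boundary and produce a jump in the angle series. Unwrapping the sequence first is one option, for example with NumPy's `numpy.unwrap` on radian values, before any gradient-based analysis.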

3.4.2. Pattern Analysis

1. Input the continuous data: load the continuous data of both hands.
2. Slice the data: clip the data as shown in Figure 5, setting the initial start point, middle point, and end point to 1, 5, and 10, respectively.
3. Calculate the gradient: compute the gradient of the first half (start point to middle point) and of the second half (middle point to end point). If the absolute value of a gradient is less than 0.1, treat it as 0.
4. Compare the gradients: compare the sign of the first-half gradient with that of the second-half gradient.
   - (a) First-half gradient = 0: add 5 to every point and return to process 2.
   - (b) Same sign: add 5 to the middle point and the end point and return to process 2.
   - (c) Different sign: go to process 5.
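The loop in processes 2 to 4 can be sketched in Python as follows. This is a minimal sketch that rests on several assumptions:

- The gradient of each half is taken as the average slope between its endpoint samples. The text does not define the gradient.
- The 1-based points 1, 5, and 10 become the 0-based indices 0, 4, and 9.
- Process 5 is not described here, so the function simply returns the middle point at which the sign changes.

The names `find_turning_point` and `find_all_turning_points`, and the restart rule in the latter, are hypothetical.

```python
from typing import List, Optional, Sequence

GRADIENT_THRESHOLD = 0.1  # |gradient| < 0.1 is treated as 0 (from the text)
STEP = 5                  # window shift used in cases (a) and (b)


def _gradient(angles: Sequence[float], i: int, j: int) -> float:
    """Average slope between samples i and j (assumed definition)."""
    g = (angles[j] - angles[i]) / (j - i)
    return 0.0 if abs(g) < GRADIENT_THRESHOLD else g


def _sign(x: float) -> int:
    return (x > 0) - (x < 0)


def find_turning_point(angles: Sequence[float], start: int = 0) -> Optional[int]:
    """Return the middle index where the gradient sign changes, or None."""
    middle, end = start + 4, start + 9  # 0-based version of points 1, 5, 10
    while end < len(angles):
        g1 = _gradient(angles, start, middle)
        g2 = _gradient(angles, middle, end)
        if g1 == 0.0:
            # Case (a): flat first half, shift the whole window by STEP.
            start, middle, end = start + STEP, middle + STEP, end + STEP
        elif _sign(g1) == _sign(g2):
            # Case (b): same direction, extend the window (start stays fixed).
            middle, end = middle + STEP, end + STEP
        else:
            # Case (c): sign differs (including a flat second half).
            return middle
    return None


def find_all_turning_points(angles: Sequence[float]) -> List[int]:
    """Hypothetical driver: restart the scan at each detected turning point."""
    points: List[int] = []
    start = 0
    while True:
        tp = find_turning_point(angles, start)
        if tp is None:
            return points
        points.append(tp)
        start = tp  # tp >= start + 4, so the scan always advances
```

For a triangular signal that rises by 2° per sample up to index 14 and then falls, `find_turning_point` reports index 14. Because the window advances in steps of 5 samples, a turning point is located only to within that step. A smaller step would trade speed for resolution.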

4. Experiment

- Difference in hand rotation angle between individuals.

- Change over time in the interval between rotations while performing the rotational action.

- Change in rotational speed while performing the rotational action.

- Change in rotation angle while performing the rotational action.

- Change in synchronization between the two hands during rotation, e.g., the hands becoming progressively less synchronized.

4.1. Experimental Apparatus

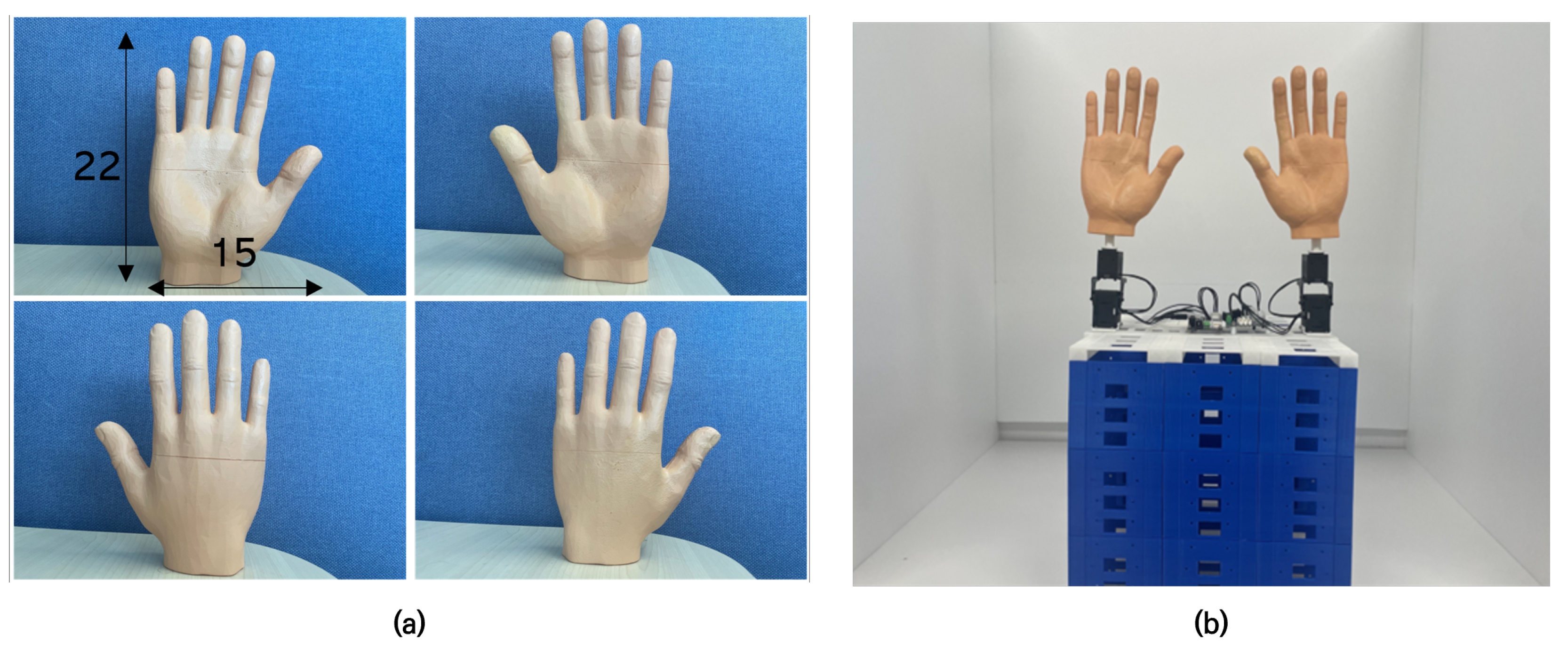

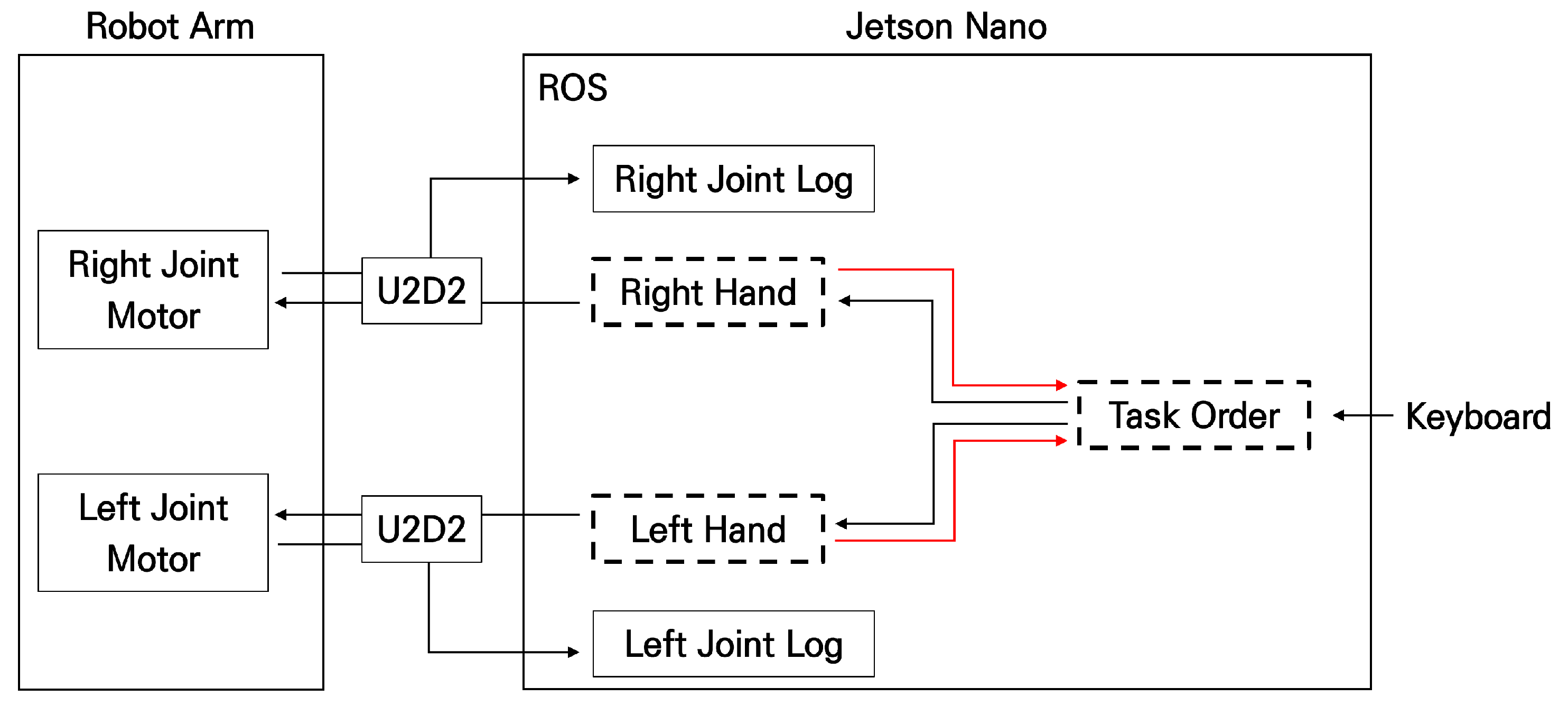

4.1.1. Hardware Configuration

4.1.2. Software Configuration

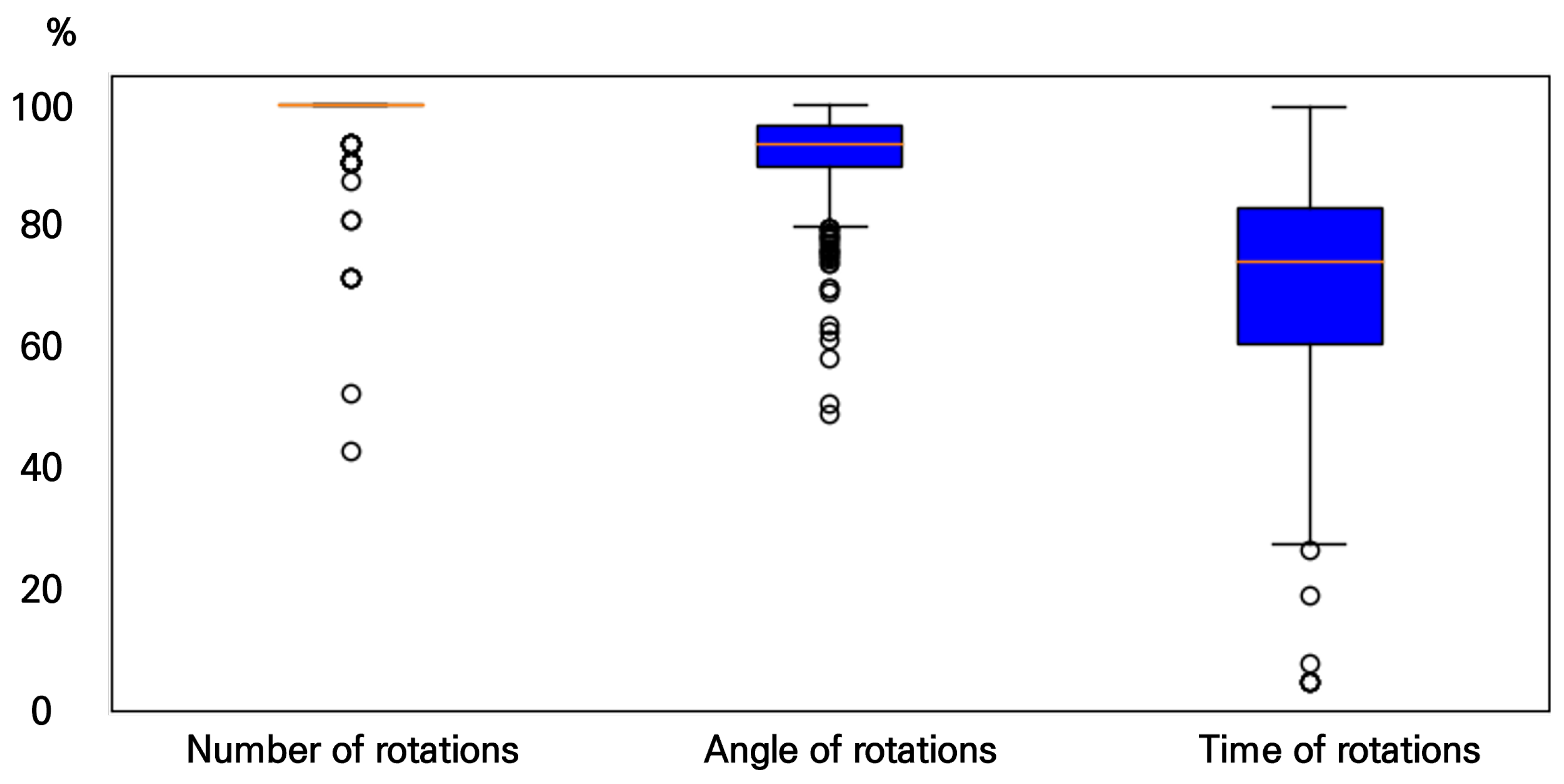

5. Results

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Lu, K.; Xiong, X.; Li, M.; Yuan, J.; Luo, Y.; Friedman, D.B. Trends in prevalence, health disparities, and early detection of dementia: A 10-year nationally representative serial cross-sectional and cohort study. Front. Public Health 2023, 10, 1021010.

- Halonen, P.; Enroth, L.; Jämsen, E.; Vargese, S.; Jylhä, M. Dementia and related comorbidities in the population aged 90 and over in the Vitality 90+ study, Finland: Patterns and trends from 2001 to 2018. J. Aging Health 2023, 35, 370–382.

- Woodford, H.J.; George, J. Cognitive assessment in the elderly: A review of clinical methods. QJM Int. J. Med. 2007, 100, 469–484.

- van Belle, G.; Uhlmann, R.F.; Hughes, J.P.; Larson, E.B. Reliability of estimates of changes in mental status test performance in senile dementia of the Alzheimer type. J. Clin. Epidemiol. 1990, 43, 589–595.

- Morris, J.; Heyman, A.; Mohs, R.; Hughes, J.; van Belle, G.; Fillenbaum, G.; Mellits, E.; Clark, C. The Consortium to Establish a Registry for Alzheimer’s Disease (CERAD). Part I. Clinical and neuropsychological assessment of Alzheimer’s disease. Neurology 1989, 39, 1159.

- Peters, C.A.; Potter, J.F.; Scholer, S.G. Hearing impairment as a predictor of cognitive decline in dementia. J. Am. Geriatr. Soc. 1988, 36, 981–986.

- Murden, R.A.; McRae, T.D.; Kaner, S.; Bucknam, M.E. Mini-Mental State Exam scores vary with education in blacks and whites. J. Am. Geriatr. Soc. 1991, 39, 149–155.

- Brayne, C.; Calloway, P. The association of education and socioeconomic status with the Mini Mental State Examination and the clinical diagnosis of dementia in elderly people. Age Ageing 1990, 19, 91–96.

- O’Connor, D.; Pollitt, P.; Treasure, F. The influence of education and social class on the diagnosis of dementia in a community population. Psychol. Med. 1991, 21, 219–224.

- Folstein, M.; Anthony, J.C.; Parhad, I.; Duffy, B.; Gruenberg, E.M. The meaning of cognitive impairment in the elderly. J. Am. Geriatr. Soc. 1985, 33, 228–235.

- Magaziner, J.; Bassett, S.S.; Rebel, J.R. Predicting performance on the Mini-Mental State Examination: Use of age- and education-specific equations. J. Am. Geriatr. Soc. 1987, 35, 996–1000.

- Kay, D.; Henderson, A.; Scott, R.; Wilson, J.; Rickwood, D.; Grayson, D. Dementia and depression among the elderly living in the Hobart community: The effect of the diagnostic criteria on the prevalence rates. Psychol. Med. 1985, 15, 771–788.

- Cavanaugh, S.A.; Wettstein, R.M. The relationship between severity of depression, cognitive dysfunction, and age in medical inpatients. Am. J. Psychiatry 1983, 140, 495–496.

- Uhlmann, R.F.; Larson, E.B. Effect of education on the Mini-Mental State Examination as a screening test for dementia. J. Am. Geriatr. Soc. 1991, 39, 876–880.

- Yoon, E.; Bae, S.; Park, H. Gait speed and sleep duration is associated with increased risk of MCI in older community-dwelling adults. Int. J. Environ. Res. Public Health 2022, 19, 7625.

- Huang, G.; Tran, S.N.; Bai, Q.; Alty, J. Hand gesture detection in tests performed by older adults. arXiv 2021, arXiv:2110.14461.

- Li, X.; Shen, M.; Han, Z.; Jiao, J.; Tong, X. The gesture imitation test in dementia with Lewy bodies and Alzheimer’s disease dementia. Front. Neurol. 2022, 13, 950730.

- Kharb, A.; Saini, V.; Jain, Y.; Dhiman, S. A review of gait cycle and its parameters. IJCEM Int. J. Comput. Eng. Manag. 2011, 13, 78–83.

- Callisaya, M.L.; Launay, C.P.; Srikanth, V.K.; Verghese, J.; Allali, G.; Beauchet, O. Cognitive status, fast walking speed and walking speed reserve—the Gait and Alzheimer Interactions Tracking (GAIT) study. Geroscience 2017, 39, 231–239.

- Buracchio, T.; Dodge, H.H.; Howieson, D.; Wasserman, D.; Kaye, J. The trajectory of gait speed preceding mild cognitive impairment. Arch. Neurol. 2010, 67, 980–986.

- Yamaguchi, H.; Maki, Y.; Yamagami, T. Yamaguchi fox-pigeon imitation test: A rapid test for dementia. Dement. Geriatr. Cogn. Disord. 2010, 29, 254–258.

- Nagahama, Y.; Okina, T.; Suzuki, N. Impaired imitation of gestures in mild dementia: Comparison of dementia with Lewy bodies, Alzheimer’s disease and vascular dementia. J. Neurol. Neurosurg. Psychiatry 2015, 86, 1248–1252.

- Negin, F.; Rodriguez, P.; Koperski, M.; Kerboua, A.; Gonzàlez, J.; Bourgeois, J.; Chapoulie, E.; Robert, P.; Bremond, F. PRAXIS: Towards automatic cognitive assessment using gesture recognition. Expert Syst. Appl. 2018, 106, 21–35.

- Park, J.; Seo, K.; Kim, S.E.; Ryu, H.; Choi, H. Early Screening of Mild Cognitive Impairment through Hand Movement Analysis in Virtual Reality Based on Machine Learning: Screening of MCI Through Hand Movement in VR. J. Cogn. Interv. Digit. Health 2022, 1, 1.

- Chua, S.I.L.; Tan, N.C.; Wong, W.T.; Allen, J.C., Jr.; Quah, J.H.M.; Malhotra, R.; Østbye, T. Virtual reality for screening of cognitive function in older persons: Comparative study. J. Med. Internet Res. 2019, 21, e14821.

- Zhong, Q.; Ali, N.; Gao, Y.; Wu, H.; Wu, X.; Sun, C.; Ma, J.; Thabane, L.; Xiao, M.; Zhou, Q.; et al. Gait kinematic and kinetic characteristics of older adults with mild cognitive impairment and subjective cognitive decline: A cross-sectional study. Front. Aging Neurosci. 2021, 13, 664558.

- Baumard, J.; Lesourd, M.; Remigereau, C.; Lucas, C.; Jarry, C.; Osiurak, F.; Le Gall, D. Imitation of meaningless gestures in normal aging. Aging Neuropsychol. Cogn. 2020, 27, 729–747.

- Curreri, C.; Trevisan, C.; Carrer, P.; Facchini, S.; Giantin, V.; Maggi, S.; Noale, M.; De Rui, M.; Perissinotto, E.; Zambon, S.; et al. Difficulties with fine motor skills and cognitive impairment in an elderly population: The Progetto Veneto Anziani. J. Am. Geriatr. Soc. 2018, 66, 350–356.

- Lindh-Rengifo, M.; Jonasson, S.B.; Ullen, S.; Stomrud, E.; Palmqvist, S.; Mattsson-Carlgren, N.; Hansson, O.; Nilsson, M.H. Components of gait in people with and without mild cognitive impairment. Gait Posture 2022, 93, 83–89.

- Liang, X.; Kapetanios, E.; Woll, B.; Angelopoulou, A. Real time hand movement trajectory tracking for enhancing dementia screening in ageing deaf signers of British sign language. In Proceedings of the Machine Learning and Knowledge Extraction: Third IFIP TC 5, TC 12, WG 8.4, WG 8.9, WG 12.9 International Cross-Domain Conference, CD-MAKE 2019, Canterbury, UK, 26–29 August 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 377–394.

- Amprimo, G.; Masi, G.; Pettiti, G.; Olmo, G.; Priano, L.; Ferraris, C. Hand tracking for clinical applications: Validation of the Google MediaPipe Hand (GMH) and the depth-enhanced GMH-D frameworks. arXiv 2023, arXiv:2308.01088.

- Oikonomidis, I.; Kyriazis, N.; Argyros, A.A. Efficient model-based 3D tracking of hand articulations using Kinect. In Proceedings of the BMVC, Dundee, UK, 29 August–2 September 2011; Volume 1, p. 3.

- Zimmermann, C.; Brox, T. Learning to estimate 3D hand pose from single RGB images. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4903–4911.

- Deng, X.; Zhang, Y.; Yang, S.; Tan, P.; Chang, L.; Yuan, Y.; Wang, H. Joint hand detection and rotation estimation using CNN. IEEE Trans. Image Process. 2017, 27, 1888–1900.

- Zhang, F.; Bazarevsky, V.; Vakunov, A.; Tkachenka, A.; Sung, G.; Chang, C.L.; Grundmann, M. MediaPipe Hands: On-device real-time hand tracking. arXiv 2020, arXiv:2006.10214.

- Watanabe, T.; Maniruzzaman, M.; Hasan, M.A.M.; Lee, H.S.; Jang, S.W.; Shin, J. 2D Camera-Based Air-Writing Recognition Using Hand Pose Estimation and Hybrid Deep Learning Model. Electronics 2023, 12, 995.

- Kim, S.; Kim, M. Rotation Representations and Their Conversions. IEEE Access 2023, 11, 6682–6699.

| Task | Hand | Angle of Rotation | Number of Rotations | TBR (ms) | TOK (ms) | TCA (ms) | ACA |
|---|---|---|---|---|---|---|---|
| Task 1 | Both | 180° | 10 | 0 | 0 | 0 | 0° |
| Task 2 | Both | 120° | 10 | 0 | 0 | 0 | 0° |
| Task 3 | Both | 100° | 10 | 0 | 0 | 0 | 0° |
| Task 4 | Both | 80° | 10 | 0 | 0 | 0 | 0° |
| Task 5 | Both | 60° | 10 | 0 | 0 | 0 | 0° |
| Task 6 | Both | 40° | 10 | 0 | 0 | 0 | 0° |
| Task 7 | Both | 180° | 10 | 0 | 50 | 0 | 0° |
| Task 8 | Both | 120° | 10 | 0 | 50 | 0 | 0° |
| Task 9 | Both | 100° | 10 | 0 | 50 | 0 | 0° |
| Task 10 | Both | 80° | 10 | 0 | 50 | 0 | 0° |
| Task 11 | Both | 60° | 10 | 0 | 50 | 0 | 0° |
| Task 12 | Both | 40° | 10 | 0 | 50 | 0 | 0° |
| Task 13 | Both | 180° | 10 | 0 | 0 | 50 | 0° |
| Task 14 | Both | 120° | 10 | 0 | 0 | 50 | 0° |
| Task 15 | Both | 100° | 10 | 0 | 0 | 50 | 0° |
| Task 16 | Both | 80° | 10 | 0 | 0 | 50 | 0° |
| Task 17 | Both | 60° | 10 | 0 | 0 | 50 | 0° |
| Task 18 | Both | 40° | 10 | 0 | 0 | 50 | 0° |
| Task 19 | Both | 180° | 10 | 0 | 0 | 0 | −4.4° |
| Task 20 | Both | 120° | 10 | 0 | 0 | 0 | −2.64° |
| Task 21 | Both | 100° | 10 | 0 | 0 | 0 | −0.88° |
| Task 22 | Both | 80° | 10 | 0 | 0 | 0 | 0.88° |
| Task 23 | Both | 60° | 10 | 0 | 0 | 0 | 2.64° |
| Task 24 | Both | 40° | 10 | 0 | 0 | 0 | 4.4° |
| Task 25 | Left | 180° | 15 | 50 | 50 | 50 | −4.4° |
| | Right | 180° | 10 | 30 | 100 | 30 | −4.84° |
| Task 26 | Left | 120° | 15 | 50 | 50 | 50 | −2.64° |
| | Right | 120° | 10 | 30 | 100 | 30 | −3.08° |
| Task 27 | Left | 100° | 15 | 50 | 50 | 50 | −0.88° |
| | Right | 100° | 10 | 30 | 100 | 30 | −1.32° |
| Task 28 | Left | 80° | 15 | 50 | 50 | 50 | 0.88° |
| | Right | 80° | 10 | 30 | 100 | 30 | 1.32° |
| Task 29 | Left | 60° | 15 | 50 | 50 | 50 | 2.64° |
| | Right | 60° | 10 | 30 | 100 | 30 | 3.08° |
| Task 30 | Left | 40° | 15 | 50 | 50 | 50 | 4.4° |
| | Right | 40° | 10 | 30 | 100 | 30 | 4.84° |

| Measurement Factor | N | Average (std) | Minimum | Maximum |
|---|---|---|---|---|
| Number of rotations | 540 | 99.21 (4.64)% | 40.00% | 100.00% |
| Angle of rotations | 540 | 91.90 (6.98)% | 46.29% | 99.97% |
| Time of rotations | 540 | 68.67 (16.59)% | 0.00% | 99.77% |

| Task (N) | Evaluation Metric | Number of Rotations | Angle of Rotation | Time of Rotation |
|---|---|---|---|---|
| Task 1 (18) | Accuracy | 100.00 (0.00)% | 94.88 (3.49)% | 85.13 (1.29)% |
| | MAE | 0.00 | 9.21° | 4.52 ms |
| Task 2 (18) | Accuracy | 100.00 (0.00)% | 94.16 (3.15)% | 74.06 (2.84)% |
| | MAE | 0.00 | 7.13° | 5.47 ms |
| Task 3 (18) | Accuracy | 100.00 (0.00)% | 94.88 (2.50)% | 69.51 (4.69)% |
| | MAE | 0.00 | 5.26° | 5.65 ms |
| Task 4 (18) | Accuracy | 100.00 (0.00)% | 93.80 (5.26)% | 85.13 (9.75)% |
| | MAE | 0.00 | 5.16° | 4.83 ms |
| Task 5 (18) | Accuracy | 100.00 (0.00)% | 91.54 (3.73)% | 74.06 (6.94)% |
| | MAE | 0.00 | 5.41° | 4.53 ms |
| Task 6 (18) | Accuracy | 100.00 (0.00)% | 80.60 (4.52)% | 69.51 (7.88)% |
| | MAE | 0.00 | 8.66° | 3.82 ms |
| Task 7 (18) | Accuracy | 100.00 (0.00)% | 94.93 (3.45)% | 76.12 (1.46)% |
| | MAE | 0.00 | 9.13° | 7.25 ms |
| Task 8 (18) | Accuracy | 100.00 (0.00)% | 89.76 (2.71)% | 64.08 (2.65)% |
| | MAE | 0.00 | 12.50° | 7.68 ms |
| Task 9 (18) | Accuracy | 100.00 (0.00)% | 93.72 (2.03)% | 59.46 (3.75)% |
| | MAE | 0.00 | 6.57° | 7.57 ms |
| Task 10 (18) | Accuracy | 100.00 (0.00)% | 93.12 (4.43)% | 51.73 (5.54)% |
| | MAE | 0.00 | 5.73° | 7.55 ms |
| Task 11 (18) | Accuracy | 100.00 (0.00)% | 92.37 (4.02)% | 43.43 (5.25)% |
| | MAE | 0.00 | 4.88° | 7.24 ms |
| Task 12 (18) | Accuracy | 93.33 (10.28)% | 80.37 (5.39)% | 21.43 (14.36)% |
| | MAE | 0.67 | 8.77° | 7.95 ms |
| Task 13 (18) | Accuracy | 100.00 (0.00)% | 94.22 (3.70)% | 87.04 (0.85)% |
| | MAE | 0.00 | 10.42° | 4.60 ms |
| Task 14 (18) | Accuracy | 100.00 (0.00)% | 93.09 (2.79)% | 83.67 (2.60)% |
| | MAE | 0.00 | 8.44° | 4.98 ms |
| Task 15 (18) | Accuracy | 100.00 (0.00)% | 94.56 (1.67)% | 83.10 (2.45)% |
| | MAE | 0.00 | 5.70° | 4.97 ms |
| Task 16 (18) | Accuracy | 100.00 (0.00)% | 93.74 (5.17)% | 82.99 (3.38)% |
| | MAE | 0.00 | 5.22° | 4.82 ms |
| Task 17 (18) | Accuracy | 97.77 (7.32)% | 93.24 (4.36)% | 85.55 (3.48)% |
| | MAE | 0.22 | 4.33° | 3.98 ms |
| Task 18 (18) | Accuracy | 90.00 (18.47)% | 69.18 (11.73)% | 86.37 (8.68)% |
| | MAE | 1.00 | 13.76° | 3.62 ms |
| Task 19 (18) | Accuracy | 100.00 (0.00)% | 97.10 (2.19)% | 84.32 (0.52)% |
| | MAE | 0.00 | 4.65° | 4.30 ms |
| Task 20 (18) | Accuracy | 100.00 (0.00)% | 94.47 (1.59)% | 76.92 (2.32)% |
| | MAE | 0.00 | 6.10° | 4.50 ms |
| Task 21 (18) | Accuracy | 100.00 (0.00)% | 94.71 (2.48)% | 76.10 (2.36)% |
| | MAE | 0.00 | 5.36° | 4.29 ms |
| Task 22 (18) | Accuracy | 100.00 (0.00)% | 93.42 (4.51)% | 68.25 (3.36)% |
| | MAE | 0.00 | 5.74° | 5.06 ms |
| Task 23 (18) | Accuracy | 99.44 (2.35)% | 92.74 (4.26)% | 63.33 (5.12)% |
| | MAE | 0.06 | 5.51° | 5.15 ms |
| Task 24 (18) | Accuracy | 99.44 (3.35)% | 95.16 (3.49)% | 56.05 (8.75)% |
| | MAE | 0.06 | 3.12° | 5.38 ms |
| Task 25 (18) | Accuracy | 99.62 (1.57)% | 95.72 (3.06)% | 72.53 (9.05)% |
| | MAE | 0.06 | 6.61° | 9.42 ms |
| Task 26 (18) | Accuracy | 100.00 (0.00)% | 89.22 (4.35)% | 67.07 (12.84)% |
| | MAE | 0.00 | 11.47° | 9.52 ms |
| Task 27 (18) | Accuracy | 99.62 (1.57)% | 92.65 (3.86)% | 67.53 (13.68)% |
| | MAE | 0.06 | 7.28° | 9.05 ms |
| Task 28 (18) | Accuracy | 100.00 (0.00)% | 92.31 (5.12)% | 61.09 (16.08)% |
| | MAE | 0.00 | 6.85° | 10.39 ms |
| Task 29 (18) | Accuracy | 99.25 (2.15)% | 92.97 (5.11)% | 60.72 (19.51)% |
| | MAE | 0.11 | 5.50° | 9.83 ms |
| Task 30 (18) | Accuracy | 97.96 (4.14)% | 94.41 (4.30)% | 59.14 (21.73)% |
| | MAE | 0.28 | 3.88° | 9.80 ms |
| Total (540) | Accuracy | 99.21 (4.64)% | 91.91 (6.98)% | 68.68 (16.60)% |
| | MAE | 0.08 | 6.95° | 6.26 ms |
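The accuracy and MAE entries in the per-task results summarize per-trial comparisons between recognized values and the ground truth. This excerpt does not state their exact formulas. The sketch below assumes that accuracy is the complement of the relative error, 100 × (1 − |estimate − truth| / |truth|), clipped at 0% and reported as mean (population SD) alongside the mean absolute error. The clipping rule, the choice of population rather than sample SD, and the names `relative_accuracy` and `summarize` are all hypothetical.

```python
from statistics import mean, pstdev
from typing import Sequence, Tuple


def relative_accuracy(pred: float, truth: float) -> float:
    """Hypothetical per-trial accuracy in percent.

    100 * (1 - |pred - truth| / |truth|), clipped at 0; it cannot exceed 100.
    """
    if truth == 0:
        raise ValueError("ground-truth value must be non-zero")
    return max(0.0, 100.0 * (1.0 - abs(pred - truth) / abs(truth)))


def summarize(preds: Sequence[float], truths: Sequence[float]) -> Tuple[float, float, float]:
    """Return (mean accuracy %, population SD of accuracy, MAE) over paired trials."""
    if len(preds) != len(truths) or len(preds) == 0:
        raise ValueError("need equally sized, non-empty sequences")
    accs = [relative_accuracy(p, t) for p, t in zip(preds, truths)]
    mae = mean(abs(p - t) for p, t in zip(preds, truths))
    return mean(accs), pstdev(accs), mae
```

For example, two 180° trials recognized as 170° and 180° give per-trial accuracies of about 94.44% and 100%, a mean accuracy of about 97.22%, and an MAE of (10 + 0) / 2 = 5°.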

| Variable | Value (N) | Number of Rotations | Angle of Rotation | Time of Rotation |
|---|---|---|---|---|
| Hand | Left (270) | 99.17 (3.64)% | 93.33 (6.93)% | 71.10 (16.07)% |
| | Right (270) | 99.26 (5.47)% | 90.48 (6.75)% | 66.26 (16.79)% |
| Number of rotations | 10 (486) | 99.26 (4.81)% | 91.44 (7.18)% | 67.49 (17.04)% |
| | 15 (54) | 99.01 (2.72)% | 96.08 (2.18)% | 79.39 (3.62)% |
| TOK (ms) | 0 (360) | 99.11 (5.41)% | 91.72 (7.80)% | 72.94 (14.28)% |
| | 50 (126) | 99.26 (2.79)% | 93.39 (4.78)% | 64.52 (18.55)% |
| | 100 (54) | 99.81 (1.36)% | 89.68 (4.37)% | 49.97 (9.34)% |
| TBR (ms) | 0 (324) | 99.26 (5.17)% | 91.98 (7.78)% | 75.33 (11.00)% |
| | 30 (54) | 99.81 (1.36)% | 89.68 (4.37)% | 49.97 (9.34)% |
| | 50 (162) | 98.93 (4.21)% | 92.50 (5.77)% | 61.60 (19.84)% |
| TCA (ms) | 0 (324) | 99.57 (2.91)% | 92.33 (5.72)% | 64.64 (15.98)% |
| | 30 (54) | 99.81 (1.36)% | 89.68 (4.37)% | 49.97 (9.34)% |
| | 50 (162) | 98.31 (7.30)% | 91.81 (9.44)% | 82.99 (4.94)% |
| ACA | −4.84° (9) | 100.00 (0.00)% | 95.16 (3.65)% | 63.91 (0.71)% |
| | −4.4° (24) | 99.72 (1.36)% | 97.03 (2.30)% | 83.08 (2.15)% |
| | −3.08° (9) | 100.00 (0.00)% | 85.46 (2.60)% | 54.69 (2.15)% |
| | −2.6° (24) | 100.00 (0.00)% | 93.98 (1.71)% | 77.95 (2.37)% |
| | −1.32° (9) | 100.00 (0.00)% | 89.19 (1.06)% | 54.45 (1.90)% |
| | −0.88° (24) | 99.72 (1.36)% | 94.92 (2.36)% | 78.08 (3.23)% |
| | 0° (342) | 98.98 (5.66)% | 90.94 (7.98)% | 69.42 (17.72)% |
| | 0.88° (24) | 100.00 (0.00)% | 94.25 (4.12)% | 71.82 (4.59)% |
| | 1.32° (9) | 100.00 (0.00)% | 87.42 (1.04)% | 45.59 (2.60)% |
| | 2.6° (24) | 99.44 (1.88)% | 94.30 (4.51)% | 68.58 (9.48)% |
| | 3.08° (9) | 100.00 (0.00)% | 88.11 (1.15)% | 42.13 (3.43)% |
| | 4.4° (24) | 98.47 (3.68)% | 95.70 (3.05)% | 64.00 (14.21)% |
| | 4.84° (9) | 98.89 (3.33)% | 92.75 (5.47)% | 39.04 (7.71)% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, H.-J.; Kim, J.-S.; Kim, S.-H. Vision-Based Hand Rotation Recognition Technique with Ground-Truth Dataset. Appl. Sci. 2024, 14, 422. https://doi.org/10.3390/app14010422
