A Comparative Study of Effective Domain Adaptation Approaches for Arabic Sentiment Classification

Abstract

1. Introduction

- We employ two public multi-domain sentiment datasets, one in Modern Standard Arabic (MSA) and one in Dialectal Arabic (DA), in a unified configuration that follows previous research on sentiment domain adaptation in English (i.e., binary classification, balanced datasets and equal domain sizes), allowing a consistent comparison of methods and supporting future research;

- We replicate the existing domain adaptation methods for Arabic and compare their performance on both MSA and DA;

- We test two of the best-known domain adaptation methods on Arabic and compare their performance with the performance reported for English.

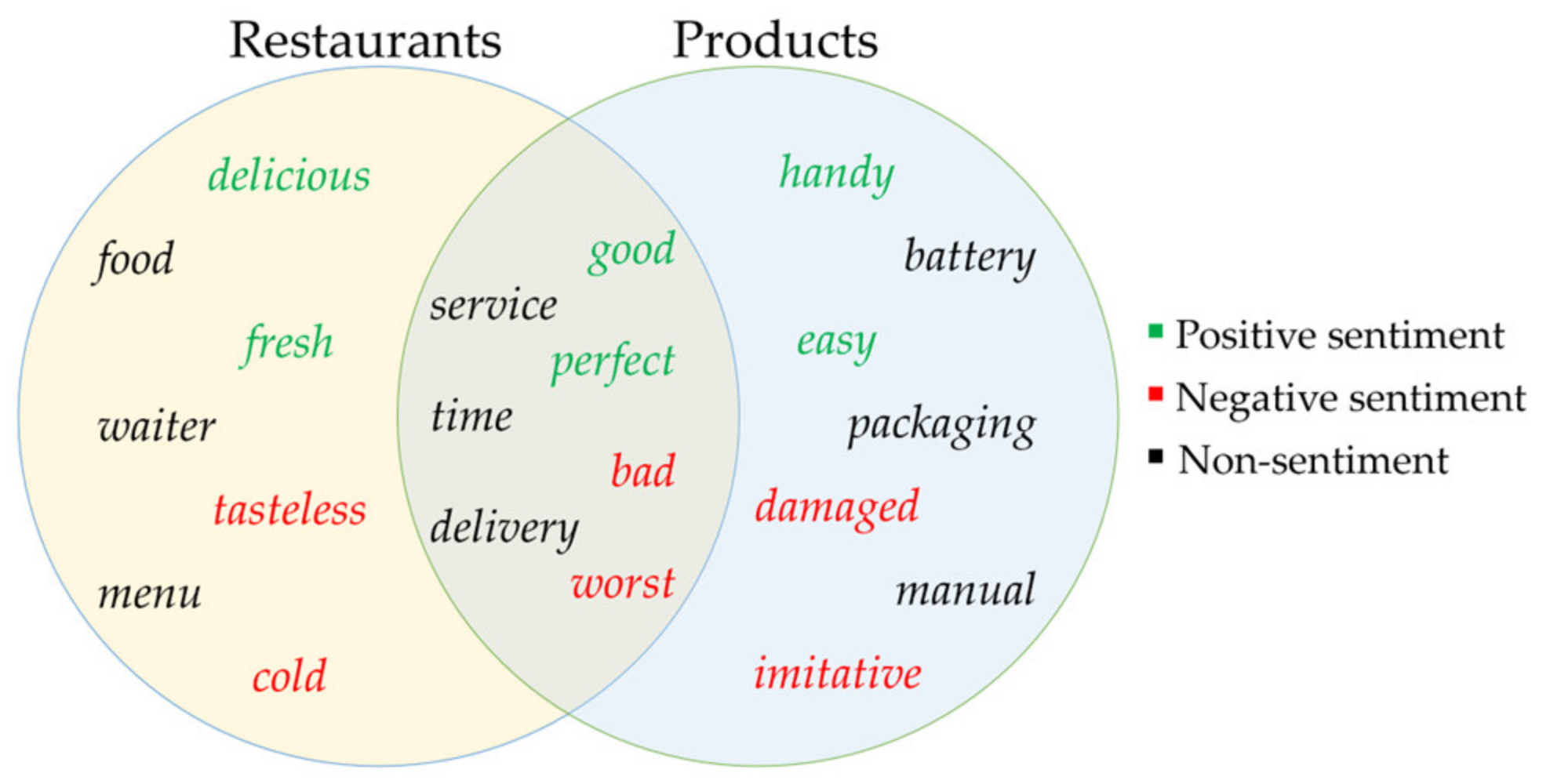

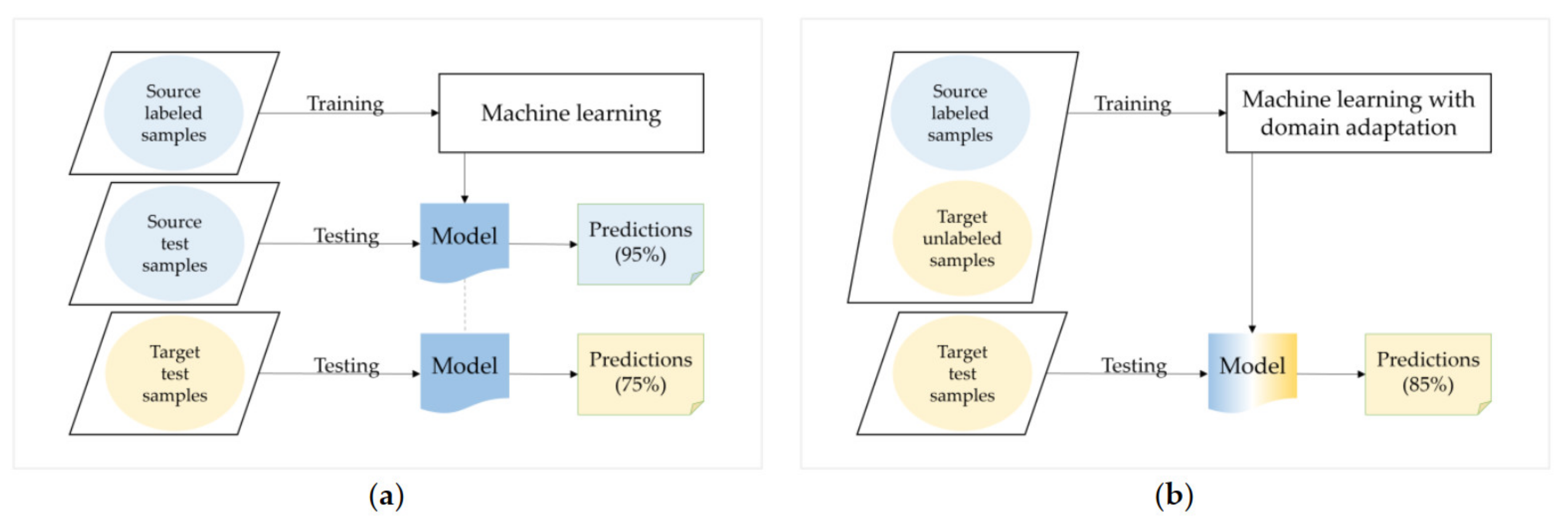

2. Literature Review

3. Materials and Methods

3.1. Datasets

- MSA dataset: The large, public Arabic multi-domain (LAMD) dataset [12] for sentiment analysis includes 33K annotated reviews collected from several websites covering hotels, restaurants, products and movies. The movies domain was excluded because of its small size, and the products domain was slightly oversampled to balance its samples;

- DA dataset: The public multi-domain Arabic resources for sentiment analysis (MARSA) dataset [13] consists of 61,353 manually labeled tweets collected from trending hashtags in the Kingdom of Saudi Arabia (KSA) across four domains: political, social, sports and technology. We excluded the technology domain because of its small size. MARSA covers a single country-level Arabic dialect (i.e., the Saudi dialect), which itself comprises several local subdialects that are not labeled in the dataset.
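The unified configuration described above (binary labels, balanced classes and equal domain sizes, with 2000 samples per domain according to the dataset statistics table) can be illustrated with a minimal sketch. It assumes each domain is a list of `(text, label)` pairs and that "balanced" means 1000 examples per class; the helper name `balance_domain` and the random undersampling/oversampling strategy are illustrative assumptions, not the authors' exact procedure.

```python
import random
from collections import Counter, defaultdict


def balance_domain(samples, per_class=1000, seed=0):
    """Return a class-balanced list of (text, label) pairs for one domain.

    Hypothetical helper: classes with at least `per_class` examples are randomly
    undersampled; smaller classes keep every example and are topped up by
    random oversampling (drawing duplicates with replacement).
    """
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for text, label in samples:
        by_label[label].append((text, label))

    balanced = []
    for label in sorted(by_label):
        items = by_label[label]
        if len(items) >= per_class:
            balanced.extend(rng.sample(items, per_class))  # undersample without replacement
        else:
            balanced.extend(items)  # keep all originals, then oversample the shortfall
            balanced.extend(rng.choice(items) for _ in range(per_class - len(items)))
    rng.shuffle(balanced)
    return balanced


if __name__ == "__main__":
    # Toy domain: 1500 negative examples but only 900 positive ones.
    toy = [(f"neg {i}", 0) for i in range(1500)] + [(f"pos {i}", 1) for i in range(900)]
    domain = balance_domain(toy, per_class=1000)
    print(len(domain), Counter(label for _, label in domain))
```

Oversampling by duplication is only one way to make a small domain reach the target size; it keeps every original example, at the cost of repeating some of them in training.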

3.2. Preprocessing

- Normalization: to unify different forms of some letters, we replaced آ (|), أ (>) and إ (<) with ا (A), and ة (p) with ه (h), where the symbols in parentheses are Buckwalter transliterations;

- Elongation removal: we collapsed any letter repeated more than twice in a row (a device used on social media to express strong feelings) to two occurrences;

- Stop-words removal: we removed the stop-words listed in both the NLTK and Arabic stop-words libraries (we tested both removing and keeping stop-words and chose the better-performing option);

- Cleaning: we removed numbers, URLs, punctuation, diacritics and non-Arabic letters.
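The preprocessing steps listed above can be sketched in pure Python with the standard `re` module. The hypothetical `preprocess` helper, the Unicode ranges chosen for diacritics and Arabic letters, and the step order are assumptions: diacritics and the tatweel (U+0640) are deleted before the other cleaning so that they do not split words, and the stop-word set is supplied by the caller (for instance, one built from NLTK's Arabic stop-word list) and defaults to empty, which corresponds to the option of keeping stop-words.

```python
import re

# Normalization map (Buckwalter in comments): آ (|), أ (>), إ (<) -> ا (A); ة (p) -> ه (h).
NORMALIZE = str.maketrans({"\u0622": "\u0627", "\u0623": "\u0627",
                           "\u0625": "\u0627", "\u0629": "\u0647"})
URLS = re.compile(r"https?://\S+|www\.\S+")
# Diacritics (tanween, short vowels, shadda, sukun: U+064B-U+0652), dagger alef
# (U+0670) and tatweel (U+0640): deleted outright so words stay intact.
MARKS = re.compile(r"[\u064B-\u0652\u0670\u0640]")
# Everything except basic Arabic letters and whitespace: digits, punctuation,
# Latin letters, emoji and so on.
NON_ARABIC = re.compile(r"[^\u0621-\u063A\u0641-\u064A\s]")
# Any character repeated three or more times in a row.
ELONGATION = re.compile(r"(.)\1{2,}")


def preprocess(text, stop_words=frozenset()):
    """Clean, normalize and de-elongate one review or tweet (hypothetical helper)."""
    text = URLS.sub(" ", text)            # cleaning: URLs
    text = MARKS.sub("", text)            # cleaning: diacritics and tatweel
    text = text.translate(NORMALIZE)      # normalization of alef forms and ta marbuta
    text = NON_ARABIC.sub(" ", text)      # cleaning: numbers, punctuation, non-Arabic
    text = ELONGATION.sub(r"\1\1", text)  # elongation: keep at most two repeats
    tokens = [tok for tok in text.split() if tok not in stop_words]
    return " ".join(tokens)


if __name__ == "__main__":
    print(preprocess("\u0631" + "\u0627" * 5 + "\u0626\u0639 123 http://t.co/x !!!"))
```

Because stop-words are matched after normalization, any stop-word list passed in should be normalized with the same mapping first.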

3.3. Domain Adaptation Methods

3.4. Experimental Setup

- DANN [10]: we followed the original implementation, with a hidden layer of size 50, a learning rate of 10⁻³ and an adaptation parameter of 1;

- mSDA [11]: we trained a linear SVM classifier on the representation learned by a five-layer mSDA with a corruption probability of 50%;

- DARL [6]: to achieve DARL's goal of combining adversarial training and denoising reconstruction, we combined mSDA and DANN (with a learning rate of 10⁻⁴);

- AraBERT [45]: we fine-tuned AraBERT for five epochs with the Adam optimizer and a learning rate of 2 × 10⁻⁵, using the Hugging Face Transformers library;

- AraBERT-ALDA [7]: we used the implementation provided by its authors;

- AraBERT-DANN: we used an implementation provided in [7].
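For reference, the DANN objective of Ganin et al. [10] trades off the label-prediction loss on the n labeled source examples against the domain-classification loss on both the source examples and the n′ unlabeled target examples (N = n + n′). Here θ_f, θ_y and θ_d are the parameters of the feature extractor, label predictor and domain classifier, and L_y and L_d are the corresponding per-example losses. We assume that the "adaptation parameter" in the setup above is the weight λ in this saddle-point formulation, so λ = 1 in the reported runs.

```latex
E(\theta_f,\theta_y,\theta_d) = \frac{1}{n}\sum_{i=1}^{n}\mathcal{L}_y^{i}(\theta_f,\theta_y)
  - \lambda\left(\frac{1}{n}\sum_{i=1}^{n}\mathcal{L}_d^{i}(\theta_f,\theta_d)
  + \frac{1}{n'}\sum_{i=n+1}^{N}\mathcal{L}_d^{i}(\theta_f,\theta_d)\right)

(\hat\theta_f,\hat\theta_y) = \arg\min_{\theta_f,\theta_y} E(\theta_f,\theta_y,\hat\theta_d),
\qquad
\hat\theta_d = \arg\max_{\theta_d} E(\hat\theta_f,\hat\theta_y,\theta_d)
```

In practice the saddle point is found with a gradient reversal layer between the feature extractor and the domain classifier: the forward pass is the identity, and the backward pass multiplies the incoming gradient by −λ, so ordinary backpropagation pushes the features toward being domain-invariant.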
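The mSDA configuration above (five layers, corruption probability p = 0.5) can be sketched in pure Python using the closed-form layer of Chen et al. [11]. With the input X (features × documents) extended by a constant bias row, S = XXᵀ, survival probabilities q = (1 − p, ..., 1 − p, 1), Q_ij = S_ij q_i q_j off the diagonal and Q_ii = S_ii q_i, and P_ij = S_ij q_j, each layer's mapping is W = PQ⁻¹ (bias row of P dropped) and its output is tanh(WX). The helper names, the small ridge term (1e-5) added to Q's diagonal for numerical stability, and the plain-list matrix arithmetic are assumptions for a self-contained illustration; a real TF-IDF vocabulary would call for a numerical library, and the linear SVM trained on the output is not shown.

```python
import math


def solve(A, B):
    """Solve A @ X = B for X (A: m x m, B: m x k) by Gauss-Jordan elimination."""
    m = len(A)
    M = [list(a) + list(b) for a, b in zip(A, B)]  # augmented matrix [A | B]
    for col in range(m):
        pivot = max(range(col, m), key=lambda r: abs(M[r][col]))  # partial pivoting
        M[col], M[pivot] = M[pivot], M[col]
        scale = M[col][col]
        M[col] = [v / scale for v in M[col]]
        for r in range(m):
            if r != col:
                f = M[r][col]
                M[r] = [a - f * b for a, b in zip(M[r], M[col])]
    return [row[m:] for row in M]


def mda_layer(X, p, ridge=1e-5):
    """One marginalized denoising layer. X is d x n (features x documents)."""
    d, n = len(X), len(X[0])
    Xb = [list(row) for row in X] + [[1.0] * n]  # append a constant bias feature
    D = d + 1
    q = [1.0 - p] * d + [1.0]                    # survival probabilities; bias never corrupted
    S = [[sum(Xb[i][k] * Xb[j][k] for k in range(n)) for j in range(D)] for i in range(D)]
    Q = [[S[i][j] * q[i] * q[j] for j in range(D)] for i in range(D)]
    for i in range(D):
        Q[i][i] = S[i][i] * q[i] + ridge         # diagonal scales by q_i only
    P = [[S[i][j] * q[j] for j in range(D)] for i in range(d)]  # bias row dropped
    # W Q = P with Q symmetric  =>  Q W^T = P^T: solve for W^T, then transpose.
    Wt = solve(Q, [[P[i][j] for i in range(d)] for j in range(D)])
    W = [[Wt[j][i] for j in range(D)] for i in range(d)]
    H = [[math.tanh(sum(W[i][j] * Xb[j][k] for j in range(D))) for k in range(n)]
         for i in range(d)]
    return W, H


def msda(X, p=0.5, layers=5):
    """Stack `layers` mDA layers and return [X; h1; ...; h_layers] (rows = features)."""
    representation = [list(row) for row in X]
    H = X
    for _ in range(layers):
        _, H = mda_layer(H, p)
        representation.extend(H)
    return representation
```

Because each layer is solved in closed form rather than by gradient descent, mSDA has no learning rate or epoch count to tune; the corruption probability and the number of layers are its main hyperparameters.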

3.5. Evaluation Metrics

4. Results

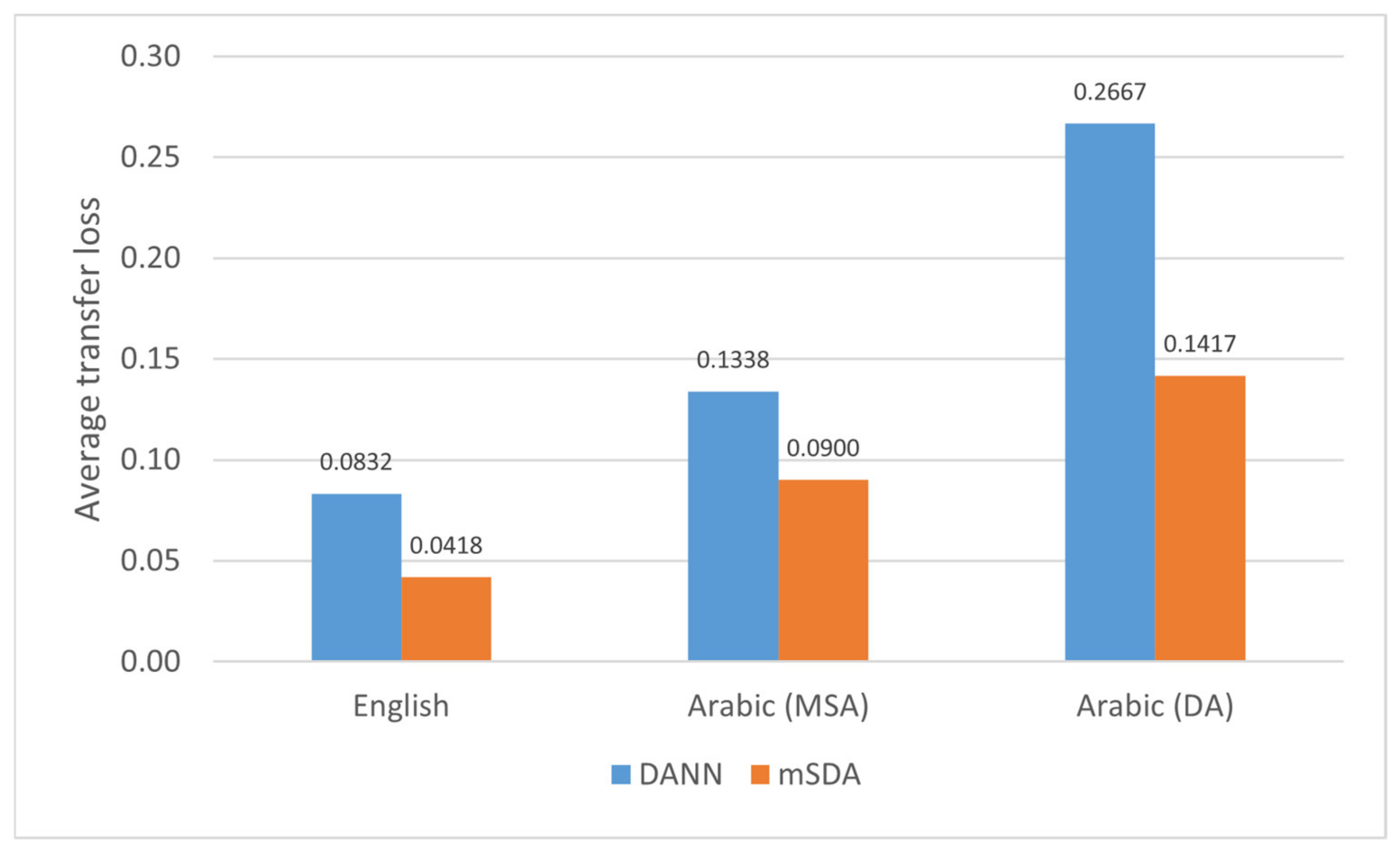

5. Discussion

5.1. MSA vs. DA

5.2. Arabic vs. English

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Pang, B.; Lee, L.; Vaithyanathan, S. Thumbs up? Sentiment Classification using Machine Learning Techniques. Proc. ACL Conf. EMNLP 2002, 10, 79–86.

- Liu, B. Sentiment analysis and opinion mining. Synth. Lect. Hum. Lang. Technol. 2012, 5, 1–167.

- Ben-David, S.; Blitzer, J.; Crammer, K.; Kulesza, A.; Pereira, F.; Vaughan, J.W. A theory of learning from different domains. Mach. Learn. 2010, 79, 151–175.

- Blitzer, J.; McDonald, R.; Pereira, F. Domain Adaptation with Structural Correspondence Learning. In Proceedings of the 2006 Conference on Empirical Methods in Natural Language Processing (EMNLP 2006), Sydney, Australia, 22–23 July 2006; pp. 120–128.

- Ramponi, A.; Plank, B. Neural Unsupervised Domain Adaptation in NLP—A Survey. In Proceedings of the 28th International Conference on Computational Linguistics, COLING, Barcelona, Spain, 8–13 December 2020; pp. 6838–6855.

- Khaddaj, A.; Hajj, H. Improved Generalization of Arabic Text Classifiers. In Proceedings of the Fourth Arabic Natural Language Processing Workshop, Florence, Italy, 1 August 2019; pp. 167–174.

- el Mekki, A.; el Mahdaouy, A.; Berrada, I.; Khoumsi, A. Domain Adaptation for Arabic Cross-Domain and Cross-Dialect Sentiment Analysis from Contextualized Word Embedding. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Online, 6–11 June 2021; pp. 2824–2837.

- Blitzer, J.; Dredze, M.; Pereira, F. Biographies, Bollywood, Boom-Boxes and Blenders: Domain Adaptation for Sentiment Classification. In Proceedings of the 45th Annual Meeting of the Association of Computational Linguistics, Prague, Czech Republic, 23–30 June 2007; pp. 440–447.

- Ponomareva, N.; Thelwall, M. Biographies or Blenders: Which Resource is Best for Cross-Domain Sentiment Analysis? In Proceedings of the International Conference on Intelligent Text Processing and Computational Linguistics, New Delhi, India, 11–17 March 2012; pp. 488–499.

- Ganin, Y.; Ustinova, E.; Ajakan, H.; Germain, P.; Larochelle, H.; Laviolette, F.; Marchand, M.; Lempitsky, V. Domain-Adversarial Training of Neural Networks. J. Mach. Learn. Res. 2016, 17, 2030–2096.

- Chen, M.; Xu, Z.; Weinberger, K.Q.; Sha, F. Marginalized Denoising Autoencoders for Domain Adaptation. In Proceedings of the 29th International Conference on Machine Learning, Edinburgh, UK, 26 June–1 July 2012; pp. 1627–1634.

- ElSahar, H.; El-Beltagy, S.R. Building Large Arabic Multi-domain Resources for Sentiment Analysis. In Proceedings of the International Conference on Intelligent Text Processing and Computational Linguistics, Cairo, Egypt, 14–20 April 2015; pp. 23–34.

- Alowisheq, A.; Al-Twairesh, N.; Altuwaijri, M.; Almoammar, A.; Alsuwailem, A.; Albuhairi, T.; Alahaideb, W.; Alhumoud, S. MARSA: Multi-Domain Arabic Resources for Sentiment Analysis. IEEE Access 2021, 9, 142718–142728.

- Cui, X.; Al-Bazzaz, N.; Bollegala, D.; Coenen, F. A comparative study of pivot selection strategies for unsupervised cross-domain sentiment classification. Knowl. Eng. Rev. 2018, 33, 1–12.

- Pan, S.J.; Ni, X.; Sun, J.T.; Yang, Q.; Chen, Z. Cross-domain sentiment classification via spectral feature alignment. In Proceedings of the 19th International Conference on World Wide Web, Raleigh, NC, USA, 26–30 April 2010; pp. 751–760.

- Lin, C.; He, Y. Joint Sentiment/Topic Model for Sentiment Analysis. In Proceedings of the 18th ACM conference on Information and knowledge management, Hong Kong, China, 2–6 November 2009; pp. 375–384.

- He, Y.; Lin, C.; Alani, H. Automatically Extracting Polarity-Bearing Topics for Cross-Domain Sentiment Classification. In Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics, Portland, OR, USA, 19–24 June 2011; Volume 1, pp. 123–131.

- Bollegala, D.; Weir, D.; Carroll, J. Cross-Domain Sentiment Classification Using a Sentiment Sensitive Thesaurus. IEEE Trans. Knowl. Data Eng. 2012, 25, 1719–1731.

- Cui, X.; Kojaku, S.; Masuda, N.; Bollegala, D. Solving Feature Sparseness in Text Classification using Core-Periphery Decomposition. In Proceedings of the Seventh Joint Conference on Lexical and Computational Semantics, New Orleans, LA, USA, 5–6 June 2018; pp. 255–264.

- Vincent, P.; Larochelle, H. Extracting and composing robust features with denoising autoencoders. In Proceedings of the 25th International Conference on Machine Learning, Helsinki, Finland, 5–9 July 2008; pp. 1096–1103.

- Glorot, X.; Bordes, A.; Bengio, Y. Domain Adaptation for Large-Scale Sentiment Classification: A Deep Learning Approach. In Proceedings of the 28th International Conference on International Conference on Machine Learning, Bellevue, WA, USA, 28 June–2 July 2011; pp. 513–520.

- Ziser, Y.; Reichart, R. Neural Structural Correspondence Learning for Domain Adaptation. In Proceedings of the International Conference on Computational Natural Language Learning, Vancouver, BC, Canada, 3–4 August 2017; pp. 400–410.

- Ziser, Y.; Reichart, R. Pivot Based Language Modeling for Improved Neural Domain Adaptation. In Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, New Orleans, LA, USA, 1–6 June 2018; pp. 1241–1251.

- Li, Z.; Wei, Y.; Zhang, Y.; Yang, Q. Hierarchical Attention Transfer Network for Cross-Domain Sentiment Classification. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; pp. 5852–5859.

- Jiang, J.; Zhai, C. Instance Weighting for Domain Adaptation in NLP. In Proceedings of the 45th Annual Meeting of the Association of Computational Linguistics, Prague, Czech Republic, 23–30 June 2007; pp. 264–271.

- Remus, R. Domain adaptation using domain similarity- and domain complexity-based instance selection for cross-domain sentiment analysis. In Proceedings of the IEEE 12th International Conference on Data Mining Workshops, Brussels, Belgium, 10 December 2012; pp. 717–723.

- Xia, R.; Hu, X.; Lu, J.; Yang, J.; Zong, C. Instance Selection and Instance Weighting for Cross-Domain Sentiment Classification via PU Learning. In Proceedings of the Twenty-Third International Joint Conference on Artificial Intelligence, Beijing, China, 3–9 August 2013; pp. 2176–2182.

- Xia, R.; Zong, C.; Hu, X.; Cambria, E. Feature ensemble plus sample selection: Domain adaptation for sentiment classification. In Proceedings of the 24th International Joint Conference on Artificial Intelligence, Buenos Aires, Argentina, 25 July 2015; pp. 4229–4233.

- Ruder, S. Neural Transfer Learning for Natural Language Processing. Ph.D. Thesis, National University of Ireland, Galway, Ireland, 2019.

- Tan, S.; Cheng, X.; Wang, Y.; Xu, H. Adapting naive bayes to domain adaptation for sentiment analysis. In Proceedings of the European Conference on Information Retrieval, Toulouse, France, 6–9 April 2009; pp. 337–349.

- Yu, N.; Kübler, S. Filling the Gap: Semi-Supervised Learning for Opinion Detection Across Domains. In Proceedings of the Fifteenth Conference on Computational Natural Language Learning, Portland, OR, USA, 23–24 June 2011; pp. 200–209.

- Chen, M.; Weinberger, K.Q.; Blitzer, J.C. Co-training for domain adaptation. In Proceedings of the 24th International Conference on Neural Information Processing Systems, Granada, Spain, 12–15 December 2011; pp. 2456–2464.

- Saito, K.; Ushiku, Y.; Harada, T. Asymmetric tri-training for unsupervised domain adaptation. In Proceedings of the 34th International Conference on Machine Learning, Sydney, NSW, Australia, 6–11 August 2017; Volume 6, pp. 4573–4585.

- Ruder, S.; Plank, B. Strong Baselines for Neural Semi-supervised Learning under Domain Shift. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics, Melbourne, Australia, 15–20 July 2018; pp. 1044–1054.

- He, R.; Lee, W.S.; Ng, H.T.; Dahlmeier, D. Adaptive semi-supervised learning for cross-domain sentiment classification. In Proceedings of the 2018 Conference on EMNLP, Brussels, Belgium, 31 October–4 November 2018; pp. 3467–3476.

- Cui, X.; Bollegala, D. Self-Adaptation for Unsupervised Domain Adaptation. In Proceedings of the International Conference on Recent Advances in Natural Language Processing, Varna, Bulgaria, 2–4 September 2019; pp. 213–222.

- Wu, Q.; Tan, S.; Cheng, X. Graph Ranking for Sentiment Transfer. In Proceedings of the ACL-IJCNLP Conference, Singapore, 4 August 2009; pp. 317–320.

- Zhu, X.; Ghahramani, Z. Learning from Labeled and Unlabeled Data with Label Propagation; Rep. No. CMU-CALD-02-107; Carnegie Mellon University: Pittsburgh, PA, USA, 2002.

- Li, Z.; Zhang, Y.; Wei, Y.; Wu, Y.; Yang, Q. End-to-End Adversarial Memory Network for Cross-domain Sentiment Classification. In Proceedings of the 26th International Joint Conference on Artificial Intelligence, Melbourne, Australia, 19–25 August 2017; pp. 2237–2243.

- Ryu, M.; Lee, G.; Lee, K. Knowledge distillation for BERT unsupervised domain adaptation. Knowl. Inf. Syst. 2022, 64, 3113–3128.

- Long, Q.; Luo, T.; Wang, W.; Pan, S.J. Domain Confused Contrastive Learning for Unsupervised Domain Adaptation. In Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Seattle, WA, USA, 10–15 July 2022; pp. 2982–2995.

- Fu, Y.; Liu, Y. Contrastive transformer based domain adaptation for multi-source cross-domain sentiment classification. Knowl.-Based Syst. 2022, 245, 108649.

- Baly, R.; Khaddaj, A.; Hajj, H.; El-Hajj, W.; Shaban, K.B. ArSentD-LEV: A multi-topic corpus for target-based sentiment analysis in Arabic Levantine tweets. In Proceedings of the 3rd Workshop on Open-Source Arabic Corpora and Processing Tools, Miyazaki, Japan, 8 May 2018; p. 37.

- Chen, M.; Zhao, S.; Liu, H.; Cai, D. Adversarial-Learned Loss for Domain Adaptation. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 3521–3528.

- Antoun, W.; Baly, F.; Hajj, H. AraBERT: Transformer-based Model for Arabic Language Understanding. In Proceedings of the 4th Workshop on Open-Source Arabic Corpora and Processing Tools, Marseille, France, 12 May 2020; pp. 9–15.

- Habash, N. Introduction to Arabic Natural Language Processing. Synth. Lect. Hum. Lang. Technol. 2010, 3, 1–187.

- Oueslati, O.; Cambria, E.; Ben HajHmida, M.; Ounelli, H. A review of sentiment analysis research in Arabic language. Future Gener. Comput. Syst. 2020, 112, 408–430.

| | LAMD | MARSA |
|---|---|---|
| Arabic type | MSA | DA (Saudi) |
| Text type | Reviews | Tweets |
| Domains | Hotels, restaurants, products | Political, social, sports |
| Size | 6000 reviews (2000 per domain) | 6000 tweets (2000 per domain) |
| Vocabulary | 61,602 | 29,999 |
| Stems ¹ | 24,299 | 11,731 |
| Roots ¹ | 10,929 | 5220 |

| Source | Target | In-Domain (SVM) | No-Adapt (SVM) | DANN (TF-IDF) | mSDA (TF-IDF) | DARL (TF-IDF) | Fine-tuning (AraBERT) | ALDA (AraBERT) | DANN (AraBERT) |
|---|---|---|---|---|---|---|---|---|---|
| HOT | RES | 83.75 | 77.75 | 75.75 | 79.25 | 79.25 | 84.60 | 81.25 | 83.75 |
| HOT | PRO | 81.00 | 73.00 | 70.75 | 74.25 | 69.00 | 81.25 | 80.75 | 78.75 |
| RES | HOT | 89.50 | 85.50 | 78.00 | 83.00 | 74.75 | 92.38 | 92.25 | 91.75 |
| RES | PRO | 81.00 | 76.75 | 69.50 | 74.00 | 65.75 | 80.50 | 81.25 | 80.00 |
| PRO | HOT | 89.50 | 83.25 | 71.75 | 77.75 | 76.50 | 87.00 | 86.25 | 92.00 |
| PRO | RES | 83.75 | 71.50 | 62.50 | 66.25 | 76.75 | 83.16 | 76.00 | 81.75 |
| Average | | 84.75 | 77.96 | 71.38 | 75.75 | 73.67 | 84.82 | 82.96 | 84.67 |

| Source | Target | In-Domain (SVM) | No-Adapt (SVM) | DANN (TF-IDF) | mSDA (TF-IDF) | DARL (TF-IDF) | Fine-tuning (AraBERT) | ALDA (AraBERT) | DANN (AraBERT) |
|---|---|---|---|---|---|---|---|---|---|
| POL | SOC | 76.25 | 67.25 | 64.75 | 71.50 | 71.25 | 86.00 | 83.50 | 85.25 |
| POL | SPO | 87.25 | 61.00 | 55.75 | 61.25 | 55.25 | 89.40 | 80.75 | 78.25 |
| SOC | POL | 85.75 | 72.50 | 60.00 | 73.50 | 80.75 | 90.25 | 90.75 | 90.75 |
| SOC | SPO | 87.25 | 67.00 | 50.00 | 70.00 | 68.25 | 85.50 | 82.00 | 88.50 |
| SPO | POL | 85.75 | 57.50 | 51.50 | 72.25 | 60.00 | 90.60 | 85.75 | 90.25 |
| SPO | SOC | 76.25 | 63.00 | 56.50 | 65.00 | 61.75 | 82.20 | 85.00 | 81.75 |
| Average | | 83.08 | 64.71 | 56.42 | 68.92 | 66.21 | 87.33 | 84.63 | 85.79 |
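The Average rows of the two results tables (LAMD/MSA with HOT, RES and PRO; MARSA/DA with POL, SOC and SPO) can be checked directly from the per-pair scores. The sketch below copies the six source→target rows of each table, recomputes every method's mean, and computes the gap between the in-domain and no-adaptation SVM averages. The names `column_means` and `no_adapt_gap` are illustrative, and the scores are used as reported, in whatever metric Section 3.5 defines.

```python
from statistics import mean

METHODS = ["In-Domain SVM", "No-Adapt SVM", "DANN", "mSDA", "DARL",
           "AraBERT fine-tuning", "AraBERT-ALDA", "AraBERT-DANN"]

# (source, target) -> one score per method, copied from the results tables.
MSA_ROWS = {
    ("HOT", "RES"): [83.75, 77.75, 75.75, 79.25, 79.25, 84.60, 81.25, 83.75],
    ("HOT", "PRO"): [81.00, 73.00, 70.75, 74.25, 69.00, 81.25, 80.75, 78.75],
    ("RES", "HOT"): [89.50, 85.50, 78.00, 83.00, 74.75, 92.38, 92.25, 91.75],
    ("RES", "PRO"): [81.00, 76.75, 69.50, 74.00, 65.75, 80.50, 81.25, 80.00],
    ("PRO", "HOT"): [89.50, 83.25, 71.75, 77.75, 76.50, 87.00, 86.25, 92.00],
    ("PRO", "RES"): [83.75, 71.50, 62.50, 66.25, 76.75, 83.16, 76.00, 81.75],
}
MSA_REPORTED_AVG = [84.75, 77.96, 71.38, 75.75, 73.67, 84.82, 82.96, 84.67]

DA_ROWS = {
    ("POL", "SOC"): [76.25, 67.25, 64.75, 71.50, 71.25, 86.00, 83.50, 85.25],
    ("POL", "SPO"): [87.25, 61.00, 55.75, 61.25, 55.25, 89.40, 80.75, 78.25],
    ("SOC", "POL"): [85.75, 72.50, 60.00, 73.50, 80.75, 90.25, 90.75, 90.75],
    ("SOC", "SPO"): [87.25, 67.00, 50.00, 70.00, 68.25, 85.50, 82.00, 88.50],
    ("SPO", "POL"): [85.75, 57.50, 51.50, 72.25, 60.00, 90.60, 85.75, 90.25],
    ("SPO", "SOC"): [76.25, 63.00, 56.50, 65.00, 61.75, 82.20, 85.00, 81.75],
}
DA_REPORTED_AVG = [83.08, 64.71, 56.42, 68.92, 66.21, 87.33, 84.63, 85.79]


def column_means(rows):
    """Average each method's score over all source->target pairs."""
    return [mean(scores) for scores in zip(*rows.values())]


def no_adapt_gap(rows):
    """Mean in-domain score minus mean no-adaptation score (first two columns)."""
    means = column_means(rows)
    return means[0] - means[1]


if __name__ == "__main__":
    for name, rows, reported in [("MSA", MSA_ROWS, MSA_REPORTED_AVG),
                                 ("DA", DA_ROWS, DA_REPORTED_AVG)]:
        for method, recomputed, stated in zip(METHODS, column_means(rows), reported):
            print(f"{name:3} {method:20} recomputed={recomputed:7.3f} reported={stated:.2f}")
        print(f"{name} in-domain minus no-adapt gap: {no_adapt_gap(rows):.2f}")
```

Every recomputed mean matches the reported Average to within rounding (at most 0.005). The in-domain vs. no-adaptation gap is roughly 6.8 points on MSA versus roughly 18.4 points on DA, so unadapted cross-domain transfer loses considerably more on the dialectal tweets.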

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Alqahtani, Y.; Al-Twairesh, N.; Alsanad, A. A Comparative Study of Effective Domain Adaptation Approaches for Arabic Sentiment Classification. Appl. Sci. 2023, 13, 1387. https://doi.org/10.3390/app13031387