Abstract

Large-class few-shot learning has a wide range of applications in many fields, such as medicine, power systems, security, and remote sensing. Many few-shot learning methods have been proposed for fewer-class scenarios, but little research has addressed large-class scenarios. In this paper, we propose a large-class few-shot learning method based on high-dimensional features, called HF-FSL. Recent theoretical research shows that if the distribution of samples in a high-dimensional feature space satisfies intraclass compactness and interclass dispersion, a large-class few-shot learner has better generalization ability. Inspired by this theory, the basic idea is to use a deep neural network to extract high-dimensional features and normalize them to unit length, projecting the samples onto a hypersphere. A global orthogonal regularization strategy is then used to make samples of different classes on the hypersphere as orthogonal as possible, thereby achieving intraclass compactness and interclass dispersion in the high-dimensional feature space. Experiments on Omniglot, Fungi, and ImageNet demonstrate that the proposed method can effectively improve recognition accuracy in large-class FSL problems.

1. Introduction

The problem of few-shot learning (FSL) was studied by E. G. Miller et al. as early as 2000 [1]. Since 2015, with the development of deep learning [2,3,4], several few-shot learning methods based on deep features have been proposed and have achieved remarkable results in fewer-class scenarios. In 2016, the matching network [5] proposed by O. Vinyals et al. achieved 98.9% recognition accuracy in the 5-way 5-shot setting on Omniglot; in 2017, the prototypical network [6] proposed by J. Snell et al. achieved 99.7% accuracy on the same dataset and setting. These methods target scenarios with very few target classes (5 or 20), and their recognition accuracy declines significantly as the number of target classes increases. In the 2636-way 5-shot setting on Omniglot, the accuracy of the matching network and the prototypical network drops to 68.04% and 69.90%, respectively [7]. The real world, however, contains a large number of categories, and existing few-shot learning methods struggle to reach a practical level of accuracy in large-class scenarios. Compared with a traditional few-shot learning task, a large-class few-shot learning task contains hundreds or thousands of target classes and is therefore more challenging. This paper proposes a large-class few-shot learning method for image recognition. We use a global orthogonal regularization strategy to make samples of different classes as orthogonal as possible, and we add AM-Softmax Loss to the final cost function to describe the relationships between samples distributed on a hypersphere.

At present, little research has been performed on large-class few-shot learning; to our knowledge, only a few studies exist [7,8,9,10,11]. Recently, theoretical research on few-shot learning in high-dimensional feature spaces has been carried out [11,12,13,14,15]. As reported in [11], under certain conditions, the precision of few-shot classification can improve as the number of classes grows. Ref. [15] studied high-dimensional spaces theoretically and proved that, as long as samples of different classes are dispersed and samples of the same class are compactly distributed in a high-dimensional space, a large-class FSL learner can achieve better generalization. Inspired by this theory, this paper proposes a large-class few-shot learning method based on high-dimensional features. The basic idea is to use convolutional neural networks to extract high-dimensional features and normalize them to unit length, projecting the samples onto a feature hypersphere. The global orthogonal regularization strategy is then used to make samples of different categories as orthogonal as possible on the hypersphere, thereby achieving intraclass compactness and interclass dispersion in the high-dimensional space. To describe the relationships between samples distributed on the sphere, we employ an angular metric-based loss function. Our source code is available at: https://github.com/jwdang/HF-FSL (accessed on 30 October 2023).

The main contributions of this paper are twofold:

- (1) A large-class few-shot learning method based on high-dimensional features, HF-FSL, is proposed. On the high-dimensional feature sphere, HF-FSL uses a regularization strategy to make samples of different classes as orthogonal as possible, so as to achieve intraclass compactness and interclass dispersion. In addition, HF-FSL uses AM-Softmax Loss to describe the relationships between samples distributed on a hypersphere.

- (2) Experimental results on three public large-class few-shot datasets, Omniglot [16], Fungi, and ImageNet [17], show that HF-FSL can effectively improve the recognition accuracy of large-class FSL tasks.

2. Related Work

Few-shot learning: In recent years, many few-shot learning methods have been proposed and have achieved excellent results in fewer-class classification tasks. These methods fall mainly into three categories: data-augmentation methods, metric learning-based methods, and meta-learning methods based on optimization strategies.

Data augmentation is an intuitive way to increase the number of training samples and enhance data diversity. In computer vision, basic augmentation operations such as rotating, flipping, cropping, translating, and adding noise to an image are widely applied [18,19,20]. For few-shot learning tasks, however, these naive augmentation methods do little to improve the generalization of FSL methods. Recently, data augmentation based on generative models has attracted interest because such models can learn the data distribution. Ref. [21] built a model that jointly trains a generator and a classifier, using a meta-learning idea to generate additional samples so that the whole pipeline is optimized end to end by meta-training. This greatly improved the usefulness of the generated images for the classification task. Ref. [22] proposed f-DAGAN, a GAN-based data-augmentation method that can generate high-quality training data. Ref. [23] proposed an orthogonal regularizer for convolutional kernels based on doubly block Toeplitz (DBT) matrices and combined this DBT-based regularizer with a data-augmentation strategy to improve the recognition accuracy of few-shot learning.

Metric learning [24] aims to measure the similarity between samples by learning an optimal distance metric for the task. Metric learning-based methods are comparatively simple and efficient: by learning a mapping from samples to features, the samples are embedded in a feature space, where classification is performed by finding nearest neighbors. The goal is to learn a pairwise similarity metric under which pairs from the same class obtain higher similarity scores and pairs from different classes obtain lower ones. Ref. [5] proposed matching networks, which predict the category of a query sample by measuring the cosine similarity between the query sample and each support sample. Ref. [6] proposed prototypical networks, which project samples into a metric space, compute the center (prototype) of each category, and classify a query sample by comparing its distance to each category center.
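The nearest-prototype rule of prototypical networks [6] can be illustrated with a minimal pure-Python sketch. It assumes the embeddings have already been produced by some feature extractor, represents them as plain lists of floats, and uses squared Euclidean distance; the function names `prototypes`, `squared_distance`, and `classify` are hypothetical and not taken from any library.

```python
from collections import defaultdict


def prototypes(support):
    """Return {label: mean embedding} from a list of (embedding, label) pairs."""
    sums = {}
    counts = defaultdict(int)
    for emb, label in support:
        if label not in sums:
            sums[label] = [0.0] * len(emb)
        sums[label] = [s + e for s, e in zip(sums[label], emb)]
        counts[label] += 1
    return {c: [s / counts[c] for s in sums[c]] for c in sums}


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def classify(query, protos):
    """Predict the label whose prototype is closest to the query embedding."""
    return min(protos, key=lambda c: squared_distance(query, protos[c]))


support = [([0.0, 0.0], "a"), ([0.0, 2.0], "a"), ([5.0, 5.0], "b"), ([7.0, 5.0], "b")]
protos = prototypes(support)
print(protos)                        # {'a': [0.0, 1.0], 'b': [6.0, 5.0]}
print(classify([1.0, 1.0], protos))  # a
```

Each class is summarized by a single mean vector, so classifying a query costs one distance computation per class; this is one reason metric-based methods are considered efficient.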

The goal of meta-learning is to train a model on a variety of learning tasks so that it can solve new learning tasks using only a small number of training samples. Ref. [25] proposed model-agnostic meta-learning (MAML), a general optimization algorithm that can be applied to any model trained by gradient descent. Ref. [26] proved that MAML with over-parameterized DNNs converges to the global optimum at a linear rate.
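The two-level optimization behind MAML [25] can be made concrete on a toy problem. The sketch below runs exact MAML on hypothetical one-dimensional tasks whose losses are $(w - c_t)^2$; the quadratic tasks, step sizes, and the names `inner_update`, `meta_gradient`, and `maml` are illustrative assumptions, not the setup of [25]. Because the task loss is quadratic, the meta-gradient, including its second-order term, has a closed form.

```python
def inner_update(w, c, alpha):
    """One gradient step on the task loss (w - c)^2, whose gradient is 2(w - c)."""
    return w - alpha * 2.0 * (w - c)


def meta_gradient(w, c, alpha):
    """Exact d/dw of the post-update loss (inner_update(w) - c)^2.

    Since inner_update(w) - c = (1 - 2*alpha) * (w - c), the chain rule
    (including the second-order term) gives 2 * (1 - 2*alpha)^2 * (w - c).
    """
    return 2.0 * (1.0 - 2.0 * alpha) ** 2 * (w - c)


def maml(task_centers, alpha=0.1, beta=0.5, steps=200, w=0.0):
    """Outer loop: average meta-gradients over tasks and update the shared initialization."""
    for _ in range(steps):
        g = sum(meta_gradient(w, c, alpha) for c in task_centers) / len(task_centers)
        w -= beta * g
    return w


w0 = maml([1.0, 2.0, 3.0])
print(round(w0, 6))                          # 2.0
print(round(inner_update(w0, 3.0, 0.1), 6))  # 2.2
```

In this toy case, the learned initialization settles at the mean of the task optima, from which a single inner step moves toward any individual task.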

Each of these three types of methods has its own advantages; this paper adopts a metric learning-based approach. HF-FSL projects samples onto a hypersphere and applies AM-Softmax Loss, which is based on an angular distance metric, to describe the relationships between samples distributed on the hypersphere.

Large-class few-shot learning: The above methods perform well in fewer-class few-shot learning tasks, but they are difficult to extend to large-class scenarios, and the literature on large-class few-shot learning remains sparse. Facebook AI Research (FAIR) [10] proposed a large-class few-shot learning method based on shrinking and hallucinating features: it augments the data by hallucinating additional novel-class examples, and it introduces a squared gradient magnitude loss in the representation learning stage to improve the representation ability of the model. Ref. [8] put forward a class hierarchy that uses the semantic relationships between classes to guide the network to learn more transferable features, thereby helping the nearest neighbor algorithm obtain more accurate classification results. Ref. [9] proposed a multimodal knowledge-discovery method: the foreground and background of a sample are obtained through saliency detection; the original image, foreground image, and background image are each fed into a corresponding network to extract features; the three resulting feature maps are concatenated into a single visual feature; and class-level semantic information is used as weak supervision. Ref. [7] introduced a confusion matrix to analyze easily confused categories in a dataset, so as to improve the recognition accuracy of neural networks for large-class few-shot learning. These methods improve recognition accuracy in large-class few-shot learning tasks to a certain extent; however, there is still much room for improvement in practical applications.

Continual learning: Continual learning [27] refers to the ability of a system to keep learning and adapting as it processes new data, without forgetting what it has already learned. A representative scenario is few-shot continual learning (FSCL), which requires CNN models to incrementally learn new classes from very few labeled samples without forgetting previously learned ones. Ref. [28] represents previously learned knowledge using a neural gas (NG) network, which can learn and preserve the topology of the feature manifold formed by different classes; the model is first initialized on base classes with many training samples and then learns a sequence of novel classes, each with only a few training samples. Ref. [29] introduces a data-free replay method based on entropy regularization for FSCL. It shows that data replay is effective for FSCL and proposes a data-free variant that avoids the privacy issues of storing real samples.

High-dimensional feature space learning: Recently, several studies have developed FSL theory based on high-dimensional feature spaces [11,12,13,14,15]. Ref. [11] showed that, under certain conditions, the precision of few-shot classification can improve as the number of classes grows. Ref. [15] presented a theoretical study of high-dimensional spaces, proving that, as long as samples from the same class are compactly distributed and samples from different classes are fully dispersed in the high-dimensional space, a large-class FSL model can achieve better generalization. These results suggest that the large-class FSL problem can be addressed in a high-dimensional feature space. Accordingly, HF-FSL first normalizes the feature embeddings to unit length, projecting the samples onto the unit hypersphere, and then uses the global orthogonal regularization strategy to make samples from different categories as orthogonal as possible on the hypersphere, thereby achieving intraclass compactness and interclass dispersion in the high-dimensional space.

Classification loss functions: Softmax loss is the most commonly used classification loss. It maps the flattened output of the network to values in (0, 1) that sum to one, giving the probability of each class. Many variants of the softmax loss have been proposed to enlarge interclass distances and reduce intraclass distances. Ref. [30] proposed center loss, which constrains the intraclass compactness of features. With the development of face recognition, A-Softmax Loss [31], CosFace Loss [32], AM-Softmax Loss [33], and other loss functions based on the angular distance metric in a high-dimensional feature space have been proposed and have achieved remarkable classification performance. Because HF-FSL distributes the samples on a hypersphere, a loss function based on the angular distance metric is more appropriate; hence, AM-Softmax Loss was adopted in this study.
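The two baseline losses mentioned above can be sketched in plain Python: softmax cross-entropy, and the center loss of [30] in the form $\frac{1}{2}\sum_i \lVert x_i - c_{y_i}\rVert^2$. This is a minimal illustration that assumes the class centers are given; in [30] the centers are updated during training, which is omitted here. The function names are hypothetical.

```python
import math


def softmax(logits):
    """Map logits to probabilities in (0, 1) that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def softmax_cross_entropy(logits, label):
    """Softmax loss for one sample with integer class index `label`."""
    return -math.log(softmax(logits)[label])


def center_loss(features, labels, centers):
    """0.5 * sum_i ||x_i - c_{y_i}||^2, with `centers` mapping label -> center vector."""
    return 0.5 * sum(
        sum((x - c) ** 2 for x, c in zip(feat, centers[y]))
        for feat, y in zip(features, labels)
    )


print(softmax_cross_entropy([0.0, 0.0], 0))  # log 2, about 0.693147
print(center_loss([[1.0, 0.0], [0.0, 3.0]], [0, 1], {0: [0.0, 0.0], 1: [0.0, 1.0]}))  # 2.5
```

Softmax cross-entropy only asks that the correct class score highest, whereas center loss explicitly pulls each feature toward its class center; angular losses such as AM-Softmax pursue a similar compactness goal on the hypersphere.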

3. Methodology

3.1. The Large-Class FSL Definition and Symbol Representation

Formally, we use x to represent input data and y to represent the supervision target. A few-shot classification task, T, usually consists of a T-independent auxiliary dataset, $\mathcal{D}_{aux}$, and a T-dependent dataset, $\mathcal{D}_T$. The goal of few-shot learning is to transfer knowledge learned from $\mathcal{D}_{aux}$ to $\mathcal{D}_T$, where $\mathcal{D}_T$ contains only a limited amount of labeled data per category. $\mathcal{D}_T$ consists of a support set, $\mathcal{S}$, and a query set, $\mathcal{Q}$, i.e., $\mathcal{D}_T = \mathcal{S} \cup \mathcal{Q}$, where $\mathcal{S} = \{(x_i, y_i)\}_{i=1}^{C \times K}$. If $\mathcal{S}$ contains C categories and each category contains K samples, T is called a C-way K-shot task. Common settings for traditional few-shot learning are 5-way 1-shot and 20-way 1-shot; when C reaches hundreds or thousands, the task is called a large-class few-shot learning task. In this paper, $f(x)$ denotes the feature embedding of sample x, and $(x, x^-)$ denotes a pair of samples drawn from different categories.
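As an illustration of the C-way K-shot definition, the following sketch samples one task from a hypothetical dictionary that maps each class to its samples. The function name `sample_task` and the per-class query count `n_query` are assumptions for illustration only; in a large-class task, `n_way` is simply in the hundreds or thousands.

```python
import random


def sample_task(data_by_class, n_way, k_shot, n_query, seed=None):
    """Sample a C-way K-shot task.

    data_by_class: dict mapping class label -> list of samples (hypothetical format).
    Returns (support, query), each a list of (sample, label) pairs; the support set
    has n_way * k_shot items and no sample appears in both sets.
    """
    rng = random.Random(seed)
    classes = rng.sample(sorted(data_by_class), n_way)
    support, query = [], []
    for c in classes:
        picked = rng.sample(data_by_class[c], k_shot + n_query)
        support += [(x, c) for x in picked[:k_shot]]
        query += [(x, c) for x in picked[k_shot:]]
    return support, query


# A synthetic pool of 3000 classes, sampled as a 2636-way 5-shot task.
data = {c: [f"img_{c}_{i}" for i in range(10)] for c in range(3000)}
support, query = sample_task(data, n_way=2636, k_shot=5, n_query=1, seed=0)
print(len(support), len(query))  # 13180 2636
```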

3.2. The Basic Idea of Model Building

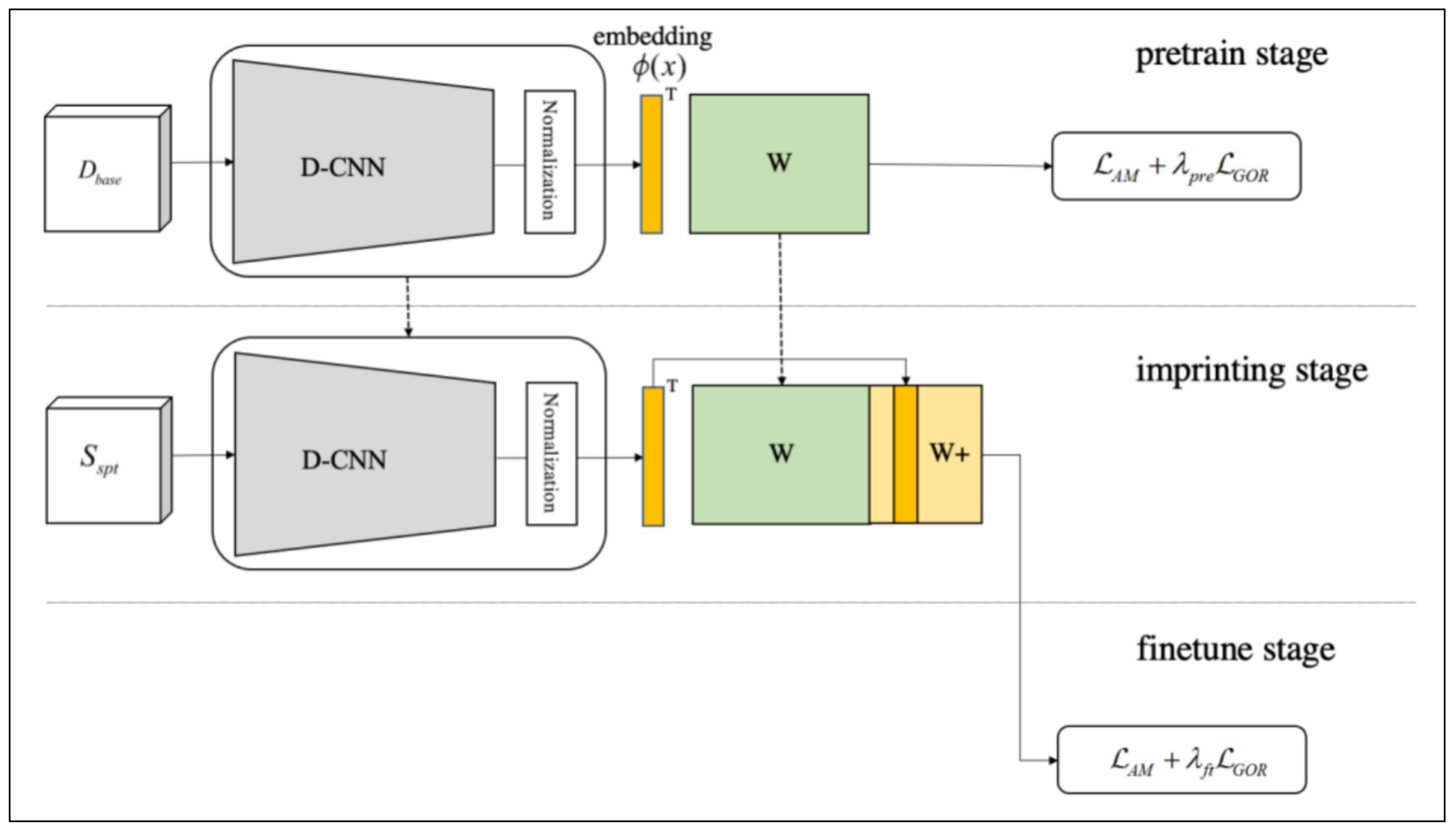

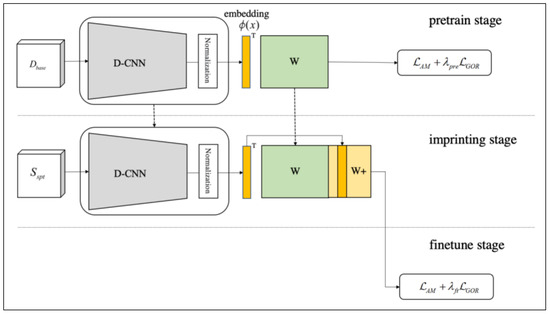

Ref. [15] proved that, as long as samples from the same class are compactly distributed in the high-dimensional space and samples from different classes are sufficiently dispersed, a large-class FSL model can achieve better generalization. Drawing on this theory, we propose a large-class FSL method based on high-dimensional features. As shown in Figure 1, we first use a deep convolutional neural network to extract high-dimensional features, then normalize these features to unit length to project the samples onto a hypersphere, and finally use the global orthogonal regularization strategy to make samples of different categories as orthogonal as possible on the hypersphere, thereby achieving intraclass compactness and interclass dispersion.

Figure 1.

HF-FSL architecture. HF-FSL uses a task-independent auxiliary dataset, $\mathcal{D}_{aux}$, for pretraining. In the imprinting stage, HF-FSL uses only the support set, $\mathcal{S}$: the feature extractor trained in the pretraining stage extracts the feature embeddings, which, after normalization to unit length, are appended to the pretrained classifier weight matrix as classifier weight vectors. Finally, the model is fine-tuned.

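Ref. [34] shows that two points sampled independently and uniformly from the unit sphere are close to orthogonal with high probability when the dimension is high. Let $p_1$ and $p_2$ be two points from different classes sampled in this way from the unit sphere in d-dimensional space. The mean and the second moment of their inner product, $p_1^\top p_2$, are, respectively,

$$\mathbb{E}\left[p_1^\top p_2\right] = 0, \qquad \mathbb{E}\left[\left(p_1^\top p_2\right)^2\right] = \frac{1}{d}. \tag{1}$$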
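Equation (1) can be checked numerically. The Monte Carlo sketch below draws points uniformly from the unit sphere by normalizing standard Gaussian vectors (a standard construction) and should report a mean near 0 and a second moment near $1/d$. The function names and the choice d = 64 are hypothetical, chosen only for illustration.

```python
import math
import random


def random_unit_vector(d, rng):
    """Uniform sample on the unit sphere in R^d: normalize a standard Gaussian vector."""
    v = [rng.gauss(0.0, 1.0) for _ in range(d)]
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def inner_product_moments(d, n_pairs=5000, seed=0):
    """Monte Carlo estimates of E[p1^T p2] and E[(p1^T p2)^2] for independent p1, p2."""
    rng = random.Random(seed)
    first = second = 0.0
    for _ in range(n_pairs):
        p1 = random_unit_vector(d, rng)
        p2 = random_unit_vector(d, rng)
        ip = sum(a * b for a, b in zip(p1, p2))
        first += ip
        second += ip * ip
    return first / n_pairs, second / n_pairs


d = 64
m1, m2 = inner_product_moments(d)
print(f"mean ~ {m1:.4f}, second moment ~ {m2:.4f}, 1/d = {1 / d:.4f}")
```

Because the second moment shrinks as $1/d$, typical inner products become small in high dimensions; this is why random points on a high-dimensional sphere are nearly orthogonal.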

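This paper draws on this idea and characterizes the orthogonality of two samples on the sphere through Equation (1). We define a global orthogonal regularization term as follows:

$$\mathcal{L}_{gor} = M_1^2 + \max\left(0,\; M_2 - \frac{1}{d}\right), \tag{2}$$

where $M_1 = \frac{1}{N}\sum_{i=1}^{N} f(x_i)^\top f(x_i^-)$ and $M_2 = \frac{1}{N}\sum_{i=1}^{N}\left(f(x_i)^\top f(x_i^-)\right)^2$ are the sample estimates of the first and second moments over $N$ pairs $(x_i, x_i^-)$ of samples from different classes, and the embeddings $f(\cdot)$ are normalized to unit length.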

The regularization term drives the mean of $f(x)^\top f(x^-)$ toward zero and pushes its second moment toward the reciprocal of the feature dimension, $1/d$, which are the values given by Equation (1) for independent, uniformly distributed points. In other words, it encourages the feature embeddings of different classes to be mutually orthogonal. Combined with the classification loss, this disperses samples from different classes while keeping samples from the same class compactly distributed on the feature sphere.
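The regularizer can be sketched in a few lines. This sketch assumes the form $M_1^2 + \max(0, M_2 - 1/d)$ used for global orthogonal regularization in [34], computed over pairs of embeddings from different classes; HF-FSL's own implementation may differ in detail, and the function names are hypothetical.

```python
import math


def l2_normalize(v):
    """Project a vector onto the unit sphere."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def gor_loss(neg_pairs):
    """GOR over pairs (f(x), f(x^-)) of embeddings from different classes.

    Assumed form (from [34]): M1^2 + max(0, M2 - 1/d), where M1 and M2 are the
    sample mean and second moment of the inner products of the normalized pairs.
    """
    d = len(neg_pairs[0][0])
    ips = [sum(a * b for a, b in zip(l2_normalize(u), l2_normalize(v)))
           for u, v in neg_pairs]
    m1 = sum(ips) / len(ips)
    m2 = sum(ip * ip for ip in ips) / len(ips)
    return m1 ** 2 + max(0.0, m2 - 1.0 / d)


print(gor_loss([([1.0, 0.0], [0.0, 1.0]), ([0.0, 2.0], [3.0, 0.0])]))  # 0.0 (orthogonal pairs)
print(round(gor_loss([([1.0, 1.0], [2.0, 2.0])]), 6))  # 1.5 (aligned pair: 1 + (1 - 1/2))
```

Orthogonal cross-class pairs incur no penalty, while aligned or opposite pairs are penalized through both the mean and the second-moment terms.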

Since the normalized feature embeddings are distributed on a hypersphere, we adopt AM-Softmax Loss [33], which is based on the angular distance metric, to describe the relationships between the samples distributed on the sphere. The AM-Softmax Loss function can be formalized as follows:

$$\mathcal{L}_{ams} = -\frac{1}{n}\sum_{i=1}^{n}\log\frac{e^{s(\cos\theta_{y_i}-m)}}{e^{s(\cos\theta_{y_i}-m)}+\sum_{j\neq y_i}e^{s\cos\theta_j}}, \tag{3}$$

where $\theta_{y_i}$ is the angle between the normalized feature vector of the i-th training sample and the normalized weight vector of its ground-truth class, $y_i$, in the classifier weight matrix; $\theta_j$ is the corresponding angle for class j; and n is the number of samples. The hyperparameter m is the additive margin, and the scaling factor, s, is a learnable parameter. Combining Equations (2) and (3), the loss function of HF-FSL is

$$\mathcal{L} = \mathcal{L}_{ams} + \lambda\,\mathcal{L}_{gor}, \tag{4}$$

where the hyperparameter λ is the weight coefficient of the regularization term.
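The following sketch computes the AM-Softmax term and adds a weighted regularization value, following the structure of Equations (3) and (4). It assumes the matrix of cosines between normalized features and normalized class weights is already available. The defaults m = 0.35 and s = 30 are illustrative only (in HF-FSL, s is learnable), and the function names are hypothetical.

```python
import math


def am_softmax_loss(cosines, labels, m=0.35, s=30.0):
    """AM-Softmax loss in the form of Equation (3).

    cosines[i][j] is cos(theta_j) between the normalized feature of sample i and the
    normalized weight vector of class j; the additive margin m is subtracted only
    from the ground-truth class before scaling by s.
    """
    total = 0.0
    for row, y in zip(cosines, labels):
        logits = [s * (c - m) if j == y else s * c for j, c in enumerate(row)]
        top = max(logits)  # log-sum-exp with max subtraction for stability
        log_denominator = top + math.log(sum(math.exp(z - top) for z in logits))
        total += log_denominator - logits[y]
    return total / len(labels)


def hf_fsl_loss(cosines, labels, gor_value, lam, m=0.35, s=30.0):
    """Equation (4) structure: AM-Softmax term plus lam times a GOR value."""
    return am_softmax_loss(cosines, labels, m, s) + lam * gor_value


cos_matrix = [[0.9, 0.1, -0.2], [0.0, 0.8, 0.3]]
print(am_softmax_loss(cos_matrix, [0, 1], m=0.0, s=1.0)
      < am_softmax_loss(cos_matrix, [0, 1], m=0.35, s=1.0))  # True
```

With m = 0 the loss reduces to ordinary softmax cross-entropy on scaled cosines; a positive margin raises the loss for the same features, forcing larger angular separation from the other classes.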

3.3. Model Training Strategy

As shown in Algorithm 1, this paper adopts the imprinting training strategy [35], which consists of three stages: a pretraining stage, an imprinting stage, and a fine-tuning stage. The three stages are described below.

- (1) Pretraining stage.

This stage uses the task-independent auxiliary dataset, $\mathcal{D}_{aux}$, for pretraining. For an input image, x, a CNN feature extractor first extracts a d-dimensional embedding, f(x); then f(x) and the classifier weight vectors are each normalized to unit length. The loss is computed according to Equation (4), using the margin m and the regularization weight λ chosen for the pretraining stage.

- (2) Imprinting stage.

The imprinting stage uses only the support set, $\mathcal{S}$. The feature extractor trained in the pretraining stage extracts the feature embeddings of the support samples; after normalization to unit length, they are appended to the pretrained classifier weight matrix as classifier weight vectors for the novel classes.

- (3) Fine-tuning stage.

In this stage, the model is fine-tuned with a computation process similar to that of the pretraining stage. Both stages compute the loss with Equation (4); however, they do not necessarily use the same hyperparameter values, so the margin m and the regularization weight λ are set separately for the fine-tuning stage.
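The imprinting stage described above can be sketched as follows, assuming embeddings are plain lists of floats. Following [35], when a novel class has several support samples, their normalized embeddings are averaged and the average is renormalized before being appended as a new weight row. The function names and the sorted order in which novel classes are appended are assumptions for illustration.

```python
import math


def l2_normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def imprint(weights, support_embeddings_by_class):
    """Return a new weight matrix with one imprinted unit-norm row per novel class.

    weights: existing (pretrained) rows, assumed already unit-normalized.
    support_embeddings_by_class: dict novel label -> list of support embeddings.
    Rows are appended in sorted label order (an assumption for reproducibility).
    """
    new_weights = [list(w) for w in weights]  # copy; the input is not modified
    for label in sorted(support_embeddings_by_class):
        embs = [l2_normalize(e) for e in support_embeddings_by_class[label]]
        mean = [sum(col) / len(embs) for col in zip(*embs)]
        new_weights.append(l2_normalize(mean))
    return new_weights


base_w = [[1.0, 0.0]]
print(imprint(base_w, {"novel": [[0.0, 2.0], [0.0, 5.0]]}))  # [[1.0, 0.0], [0.0, 1.0]]
```

Because both the imprinted rows and the embeddings have unit norm, the classifier's dot products are cosines, which is the quantity AM-Softmax operates on during fine-tuning.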

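Algorithm 1 HF-FSL.

Input: auxiliary dataset $\mathcal{D}_{aux}$, support set $\mathcal{S}$, query set $\mathcal{Q}$. Parameters: feature extractor $f$, classifier. Hyperparameters: margin $m$ and regularization weight $\lambda$ for the pretraining and fine-tuning stages.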

4. Experimental Results and Analysis

This section verifies the effectiveness of HF-FSL on three public large-class few-shot datasets. We describe, in turn, the datasets and experimental setup, the comparative results, the ablation study, and the parameter sensitivity analysis.

4.1. Dataset and Experimental Setup

To demonstrate the effectiveness of HF-FSL on large-class FSL tasks, we performed experiments on three public large-scale datasets: Omniglot, Fungi, and ImageNet. The number of novel classes in these experiments ranged from hundreds to thousands. Table 1 lists the numbers of base classes and novel classes in the three datasets.

Table 1.

Number of base classes and novel classes in each dataset.

The Omniglot dataset is often used for few-shot learning. It contains 1623 different handwritten characters from 50 different alphabets, each drawn by 20 different people. To increase the number of classes, all images were rotated by 90, 180, and 270 degrees, and each rotation was treated as an extra class, so the total number of classes became 6492 (1623 × 4). We adopted 3856 classes as base classes and the remaining 2636 classes as novel classes.
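The class counts are consistent: 1623 × 4 = 6492 and 3856 + 2636 = 6492. Both split sizes are multiples of four (964 and 659 characters), which suggests that all rotations of a character fall on the same side of the split. The sketch below assumes this character-level split (the text does not state how the split was drawn). The random shuffle and the names `expand_rotations` and `split_by_character` are hypothetical.

```python
import random

ANGLES = (0, 90, 180, 270)


def expand_rotations(characters):
    """Treat every (character, rotation angle) pair as a separate class."""
    return [(ch, angle) for ch in characters for angle in ANGLES]


def split_by_character(characters, n_base_chars, seed=0):
    """Hypothetical split: shuffle characters, then expand rotations on each side,
    so all four rotations of a character land in the same split."""
    chars = list(characters)
    random.Random(seed).shuffle(chars)
    return expand_rotations(chars[:n_base_chars]), expand_rotations(chars[n_base_chars:])


base, novel = split_by_character(range(1623), n_base_chars=964)
print(len(base) + len(novel), len(base), len(novel))  # 6492 3856 2636
```

Splitting by character rather than by rotated class prevents a rotated copy of a base character from appearing among the novel classes.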

Fungi is a fine-grained fungal image dataset that was originally introduced for the 2018 FGVCx Fungi Classification Challenge. We randomly sampled 632 classes as base classes, 674 classes as novel classes, and 88 classes for the validation set.

ImageNet is a widely used image dataset. We conducted experiments on ImageNet 64 × 64, a downsampled version of the original ImageNet used in ILSVRC in which images are resized to 64 × 64 pixels. We used WordNet to divide the original 1000 classes into three groups, namely Living Thing, Artifact, and Rest: the 522 classes classified as Living Thing were used as base classes, the 410 classes classified as Artifact were used as novel classes, and the remaining 68 classes were used for the validation set.

4.2. Analysis of Comparative Results

This study used ResNet-50 as the backbone network for extracting high-dimensional features and adopted the 5-shot setting on Omniglot, Fungi, and ImageNet. HF-FSL was compared with several few-shot learning methods, namely the Prototypical Network (PN), the Matching Network (MN), the Prototype Matching Network (PMN), and the Ridge Regression Differentiable Discriminator (R2D2), as well as their variants combined with the confusable learning method [7] (denoted by “w/CL”). Table 2 shows the Top-1 accuracy of these methods on the three large-class datasets. HF-FSL obtained the highest accuracy of all compared methods and significantly improved recognition accuracy over them.

Table 2.

Top-1 accuracy of different methods on the three large-class datasets.

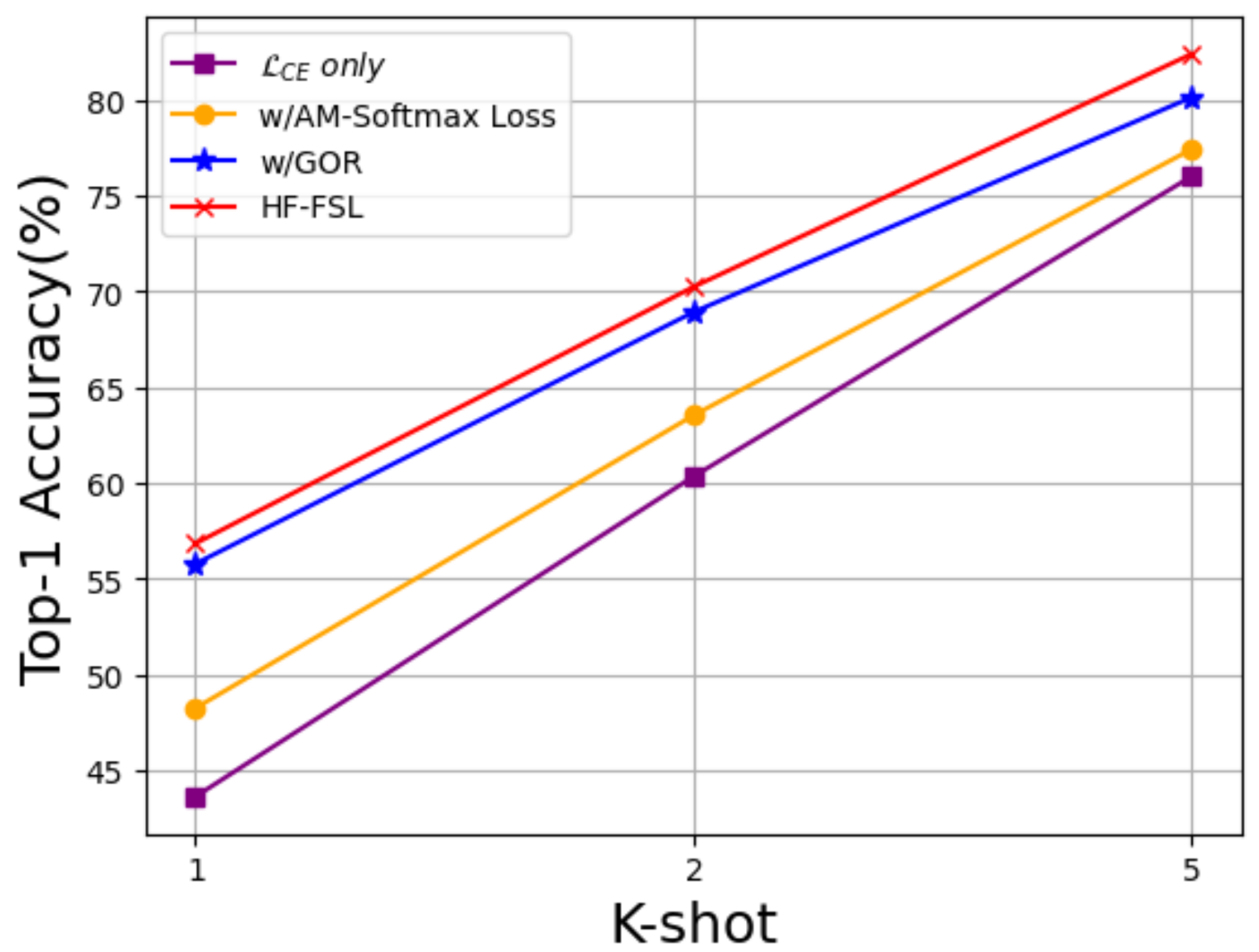

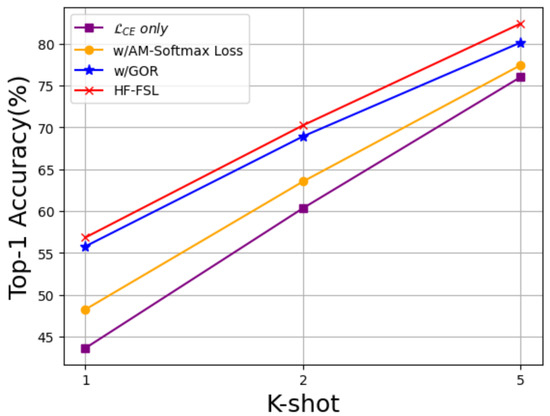

4.3. Ablation Study

To verify the effectiveness of the two core modules of HF-FSL, AM-Softmax Loss and GOR, we conducted ablation experiments on Omniglot in the 2636-way K-shot setting with K = 1, 2, and 5 (see Figure 2 and Table 3). The first row of Table 3 gives the results obtained with the traditional cross-entropy loss function alone. The second row gives the results obtained with the AM-Softmax Loss based on the angular distance metric, denoted by AM-Softmax. The third row gives the results obtained with the traditional cross-entropy loss function plus the global orthogonal regularization term proposed in this paper, denoted by w/GOR. Comparing the first row with the second and third rows shows that AM-Softmax Loss and GOR each substantially improve on the plain cross-entropy loss. Comparing these rows with the fourth row shows that, relative to the cross-entropy baseline, HF-FSL improves recognition accuracy by 13.21%, 9.90%, and 6.39% for K = 1, 2, and 5, respectively.

Figure 2.

Ablation study on Omniglot.

Table 3.

Ablation results on the Omniglot dataset.

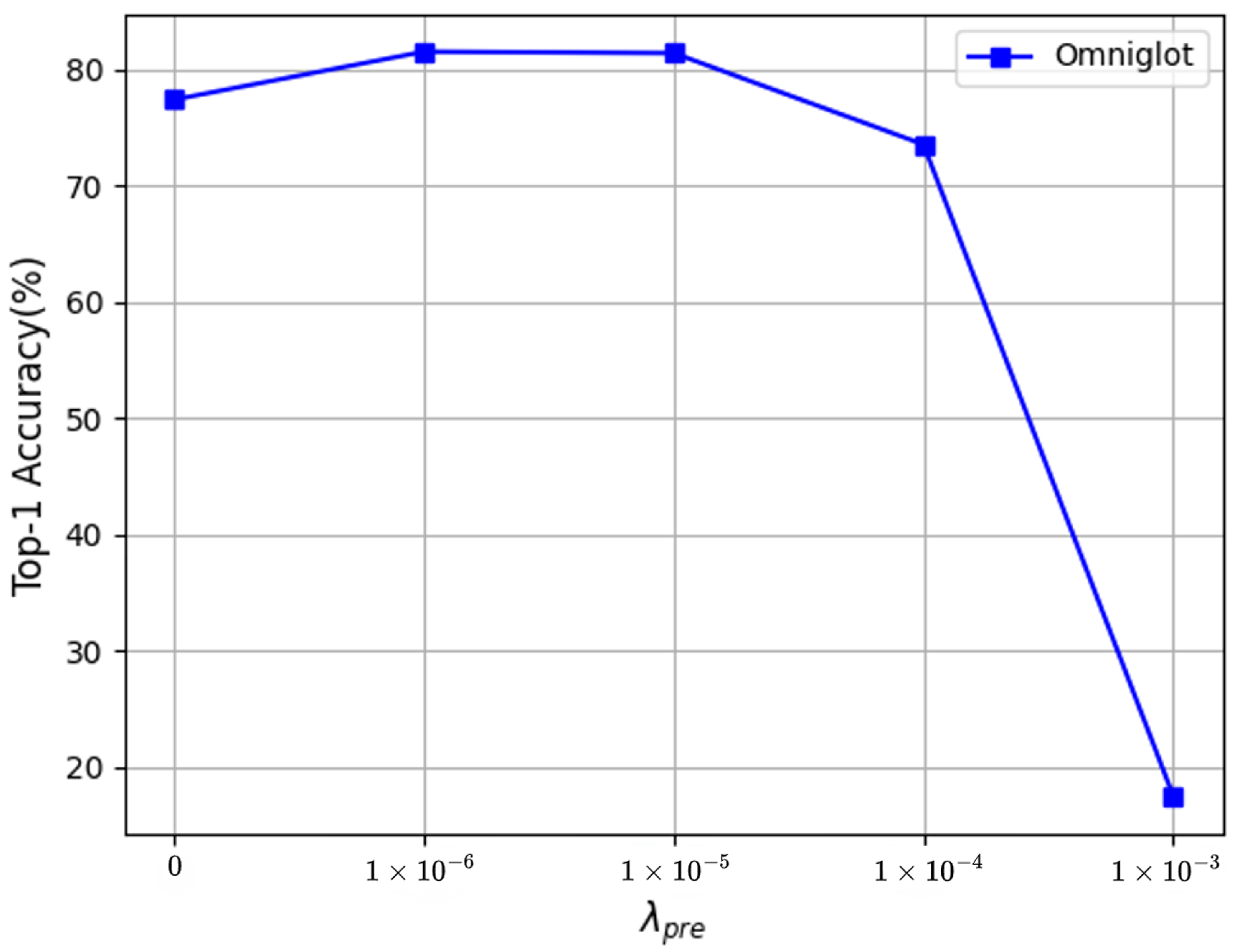

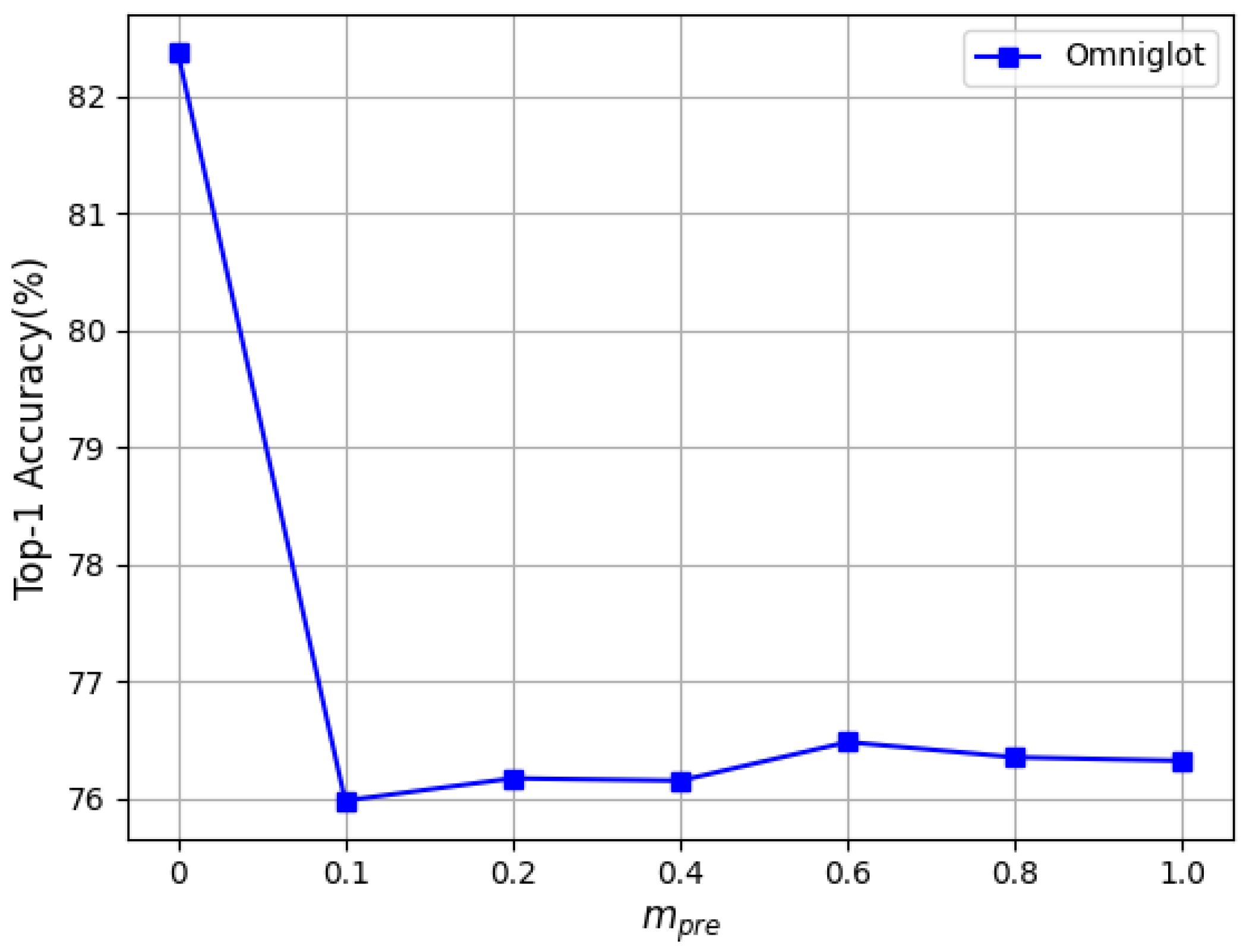

4.4. Parameter Sensitivity Analysis

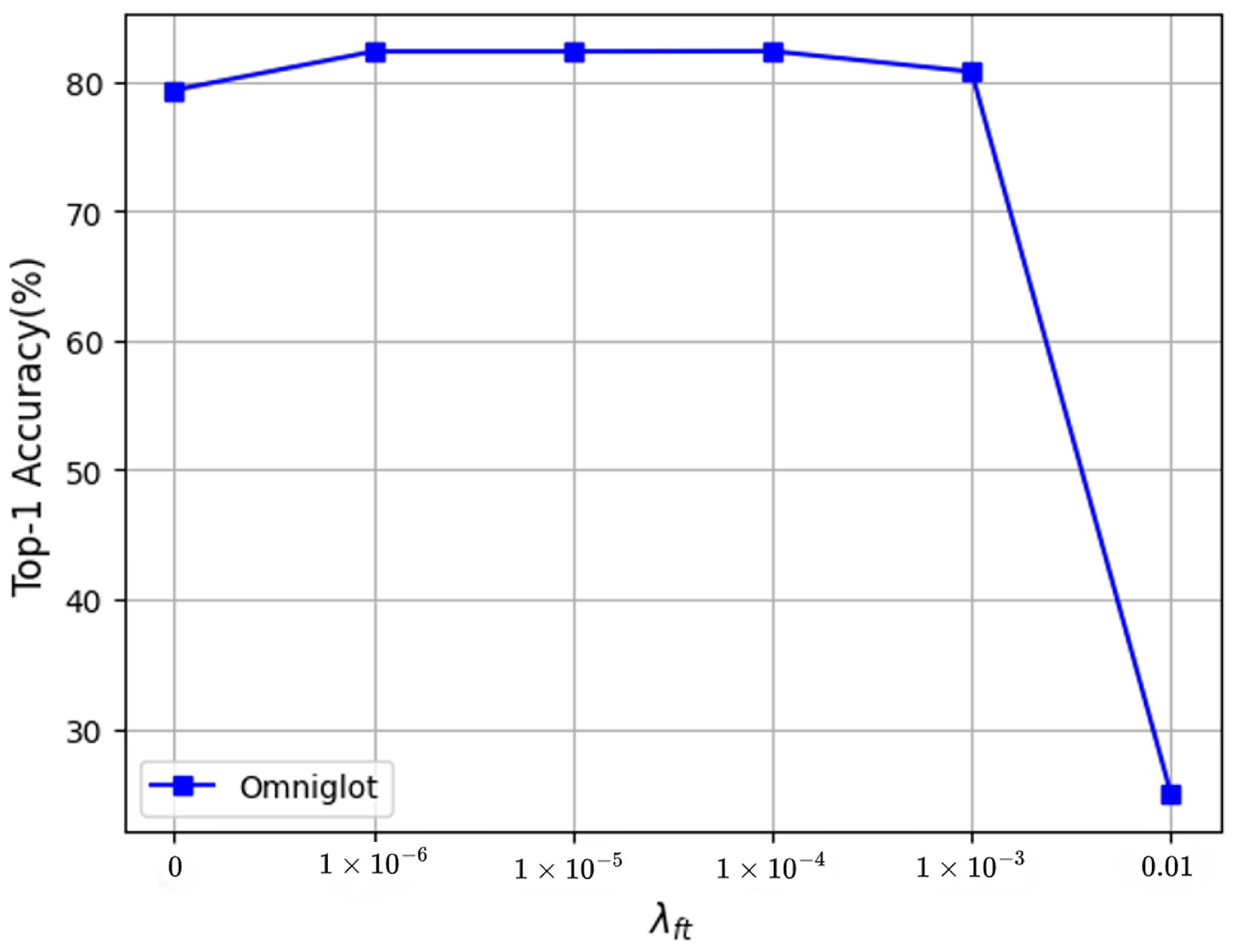

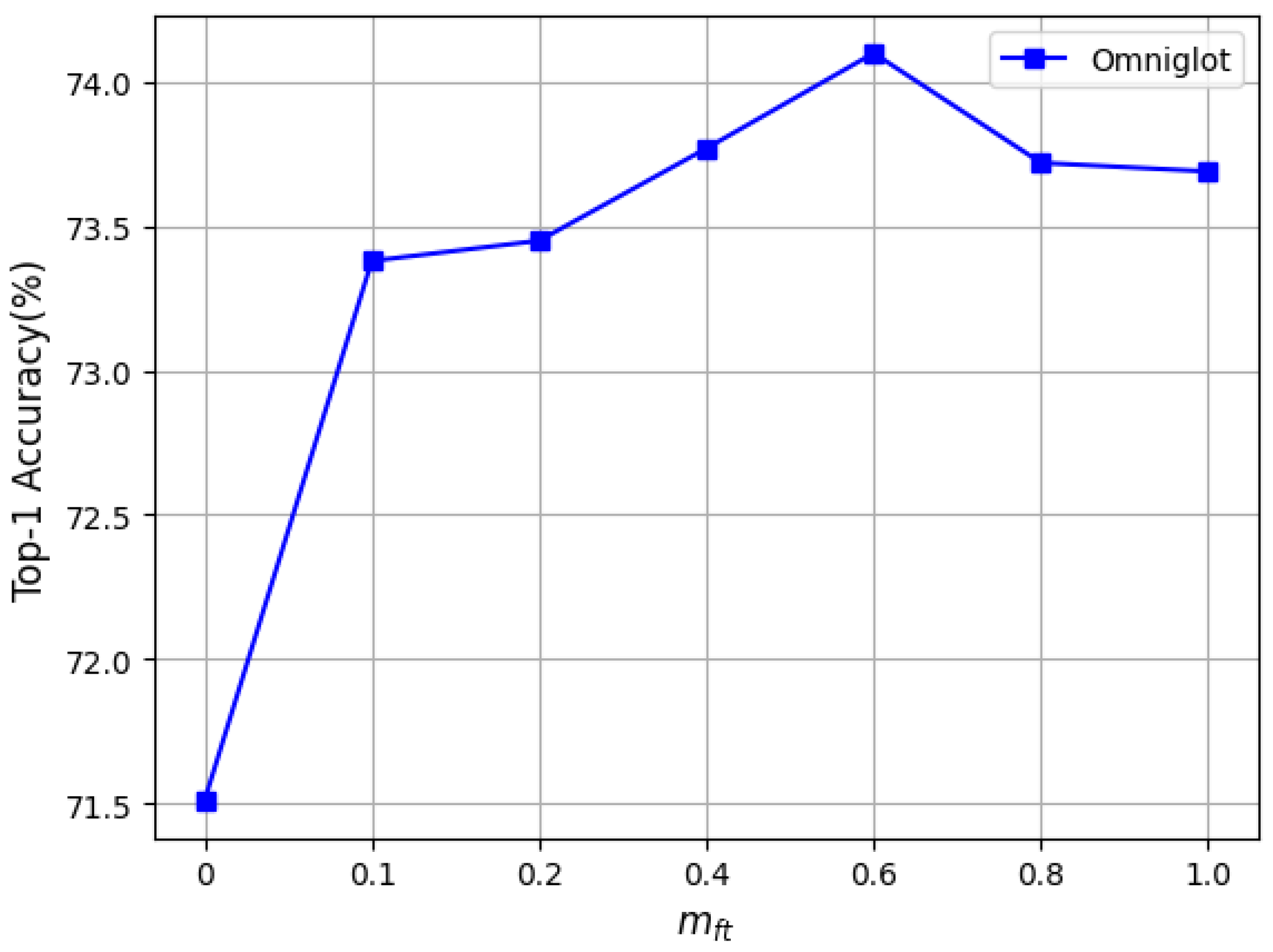

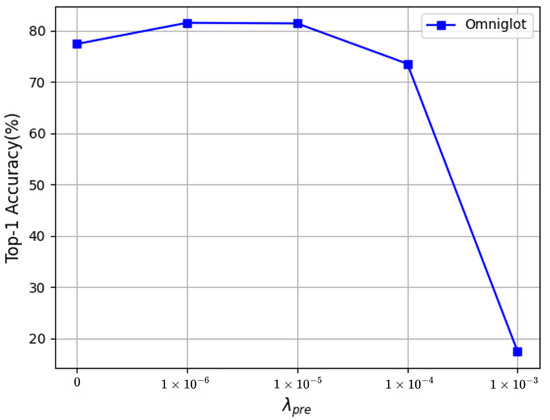

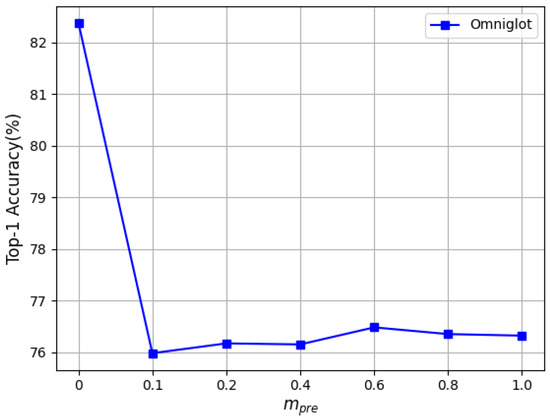

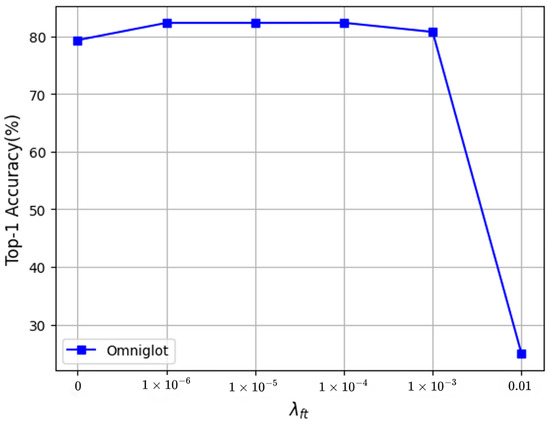

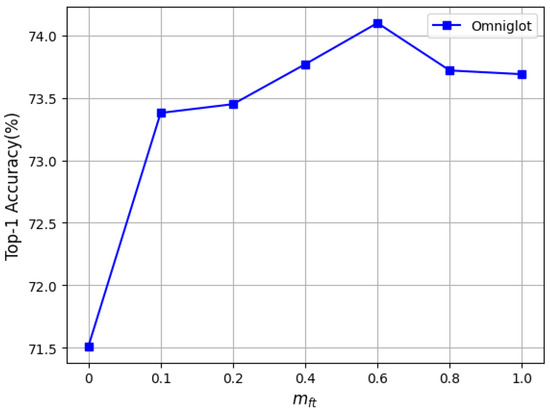

We tuned the four hyperparameters on Omniglot one at a time, keeping the remaining three fixed. As shown in Figure 3, when the tuned hyperparameter increased from 0 to 1, recognition accuracy improved significantly, whereas values greater than 1 caused accuracy to drop sharply. Figure 4 shows that varying the corresponding hyperparameter in the pretraining stage did not improve recognition accuracy; hence, we recommend setting it to 0. Figure 5 shows that as the corresponding hyperparameter in the fine-tuning stage increased from 0 up to a certain value, recognition accuracy improved to varying degrees, and it dropped sharply beyond that value; we therefore recommend choosing this hyperparameter between 0 and that value. In Figure 6, varying the remaining fine-tuning hyperparameter steadily improved accuracy compared with setting it to 0. These results suggest that HF-FSL can achieve good recognition accuracy without extensive hyperparameter tuning.

Figure 3.

Tuning .

Figure 4.

Tuning .

Figure 5.

Tuning .

Figure 6.

Tuning .

5. Conclusions

This paper proposes a large-class few-shot learning method called HF-FSL. HF-FSL first projects samples onto a hypersphere and then uses the global orthogonal regularization strategy to make samples from different categories as orthogonal as possible, thereby achieving intraclass compactness and interclass dispersion. Moreover, an angular metric-based loss function is applied to characterize the relationships between the samples distributed on the hypersphere. Experimental results on three public large-class few-shot datasets show that HF-FSL can effectively improve the recognition accuracy of large-class few-shot learning.

Author Contributions

Conceptualization, J.H.; methodology, J.H.; software, J.D.; validation, J.D.; formal analysis, J.D.; investigation, Y.Z.; resources, Y.Z.; data curation, R.Z.; writing—original draft preparation, J.D.; writing—review and editing, J.D.; visualization, Y.Z.; supervision, Y.Z.; project administration, R.Z.; funding acquisition, Y.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (62102062), the Humanities and Social Science Research Project of Ministry of Education (21YJCZH037), and the Natural Science Foundation of Liaoning Province (2020-MS-134, 2020-MZLH-29).

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to privacy concerns.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Miller, E.G.; Matsakis, N.E.; Viola, P.A. Learning from one example through shared densities on transforms. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2000 (Cat. No. PR00662), Hilton Head Island, SC, USA, 15 June 2000; Volume 1, pp. 464–471.

- Tran, T.O.; Vo, T.H.; Le, N.Q.K. Omics-based deep learning approaches for lung cancer decision-making and therapeutics development. Briefings Funct. Genom. 2023, elad031.

- Kha, Q.H.; Ho, Q.T.; Le, N.Q.K. Identifying SNARE proteins using an alignment-free method based on multiscan convolutional neural network and PSSM profiles. J. Chem. Inf. Model. 2022, 62, 4820–4826.

- Morris, M.X.; Rajesh, A.; Asaad, M.; Hassan, A.; Saadoun, R.; Butler, C.E. Deep learning applications in surgery: Current uses and future directions. Am. Surg. 2023, 89, 36–42.

- Vinyals, O.; Blundell, C.; Lillicrap, T.; Kavukcuoglu, K.; Wierstra, D. Matching networks for one shot learning. In Proceedings of the 30th Conference on Neural Information Processing Systems (NIPS 2016), Barcelona, Spain, 5–10 December 2016; Volume 29.

- Snell, J.; Swersky, K.; Zemel, R. Prototypical networks for few-shot learning. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; Volume 30.

- Li, B.; Han, B.; Wang, Z.; Jiang, J.; Long, G. Confusable learning for large-class few-shot classification. In Machine Learning and Knowledge Discovery in Databases, Proceedings of the European Conference, ECML PKDD 2020, Ghent, Belgium, 14–18 September 2020; Proceedings, Part II; Springer: Cham, Switzerland, 2021; pp. 707–723.

- Li, A.; Luo, T.; Lu, Z.; Xiang, T.; Wang, L. Large-scale few-shot learning: Knowledge transfer with class hierarchy. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7212–7220.

- Wang, S.; Yue, J.; Liu, J.; Tian, Q.; Wang, M. Large-scale few-shot learning via multi-modal knowledge discovery. In Computer Vision, Proceedings of the ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part X 16; Springer: Cham, Switzerland, 2020; pp. 718–734.

- Hariharan, B.; Girshick, R. Low-shot visual recognition by shrinking and hallucinating features. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3018–3027.

- Abramovich, F.; Pensky, M. Classification with many classes: Challenges and pluses. J. Multivar. Anal. 2019, 174, 104536.

- Tyukin, I.Y.; Gorban, A.N.; McEwan, A.A.; Meshkinfamfard, S.; Tang, L. Blessing of dimensionality at the edge and geometry of few-shot learning. Inf. Sci. 2021, 564, 124–143.

- Gorban, A.N.; Tyukin, I.Y. Blessing of dimensionality: Mathematical foundations of the statistical physics of data. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 2018, 376, 20170237.

- Gorban, A.N.; Grechuk, B.; Mirkes, E.M.; Stasenko, S.V.; Tyukin, I.Y. High-dimensional separability for one- and few-shot learning. Entropy 2021, 23, 1090.

- Tyukin, I.Y.; Gorban, A.N.; Alkhudaydi, M.H.; Zhou, Q. Demystification of few-shot and one-shot learning. In Proceedings of the International Joint Conference on Neural Networks (IJCNN), Shenzhen, China, 18–22 July 2021; pp. 1–7.

- Lake, B.M.; Salakhutdinov, R.; Tenenbaum, J.B. The Omniglot challenge: A 3-year progress report. Curr. Opin. Behav. Sci. 2019, 29, 97–104.

- Chrabaszcz, P.; Loshchilov, I.; Hutter, F. A downsampled variant of imagenet as an alternative to the cifar datasets. arXiv 2017, arXiv:1707.08819.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Proceedings of the 25th International Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; Volume 25.

- Chatfield, K.; Simonyan, K.; Vedaldi, A.; Zisserman, A. Return of the devil in the details: Delving deep into convolutional nets. arXiv 2014, arXiv:1405.3531.

- Zeiler, M.D.; Fergus, R. Visualizing and understanding convolutional networks. In Computer Vision, Proceedings of the ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part I 13; Springer: Cham, Switzerland, 2014; pp. 818–833.

- Wang, Y.X.; Girshick, R.; Hebert, M.; Hariharan, B. Low-shot learning from imaginary data. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7278–7286.

- Subedi, B.; Sathishkumar, V.; Maheshwari, V.; Kumar, M.S.; Jayagopal, P.; Allayear, S.M. Feature learning-based generative adversarial network data augmentation for class-based few-shot learning. Math. Probl. Eng. 2022, 2022, 9710667.

- Osahor, U.; Nasrabadi, N.M. Ortho-shot: Low displacement rank regularization with data augmentation for few-shot learning. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 2200–2209.

- Kaya, M.; Bilge, H.Ş. Deep metric learning: A survey. Symmetry 2019, 11, 1066.

- Finn, C.; Abbeel, P.; Levine, S. Model-agnostic meta-learning for fast adaptation of deep networks. In Proceedings of the International Conference on Machine Learning, PMLR, Sydney, Australia, 6–11 August 2017; pp. 1126–1135.

- Wang, H.; Wang, Y.; Sun, R.; Li, B. Global convergence of maml and theory-inspired neural architecture search for few-shot learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 9797–9808.

- Wang, L.; Zhang, X.; Su, H.; Zhu, J. A comprehensive survey of continual learning: Theory, method and application. arXiv 2023, arXiv:2302.00487.

- Tao, X.; Hong, X.; Chang, X.; Dong, S.; Wei, X.; Gong, Y. Few-shot class-incremental learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 12183–12192.

- Liu, H.; Gu, L.; Chi, Z.; Wang, Y.; Yu, Y.; Chen, J.; Tang, J. Few-shot class-incremental learning via entropy-regularized data-free replay. In Computer Vision, Proceedings of ECCV 2022: 17th European Conference, Tel Aviv, Israel, 23–27 October 2022; Springer: Cham, Switzerland, 2022; pp. 146–162.

- Wen, Y.; Zhang, K.; Li, Z.; Qiao, Y. A discriminative feature learning approach for deep face recognition. In Computer Vision, Proceedings of the ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part VII 14; Springer: Cham, Switzerland, 2016; pp. 499–515.

- Liu, W.; Wen, Y.; Yu, Z.; Li, M.; Raj, B.; Song, L. Sphereface: Deep hypersphere embedding for face recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 212–220.

- Wang, H.; Wang, Y.; Zhou, Z.; Ji, X.; Gong, D.; Zhou, J.; Li, Z.; Liu, W. Cosface: Large margin cosine loss for deep face recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5265–5274.

- Wang, F.; Cheng, J.; Liu, W.; Liu, H. Additive margin softmax for face verification. IEEE Signal Process. Lett. 2018, 25, 926–930.

- Zhang, X.; Yu, F.X.; Kumar, S.; Chang, S.F. Learning spread-out local feature descriptors. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4595–4603.

- Qi, H.; Brown, M.; Lowe, D.G. Low-shot learning with imprinted weights. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5822–5830.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).