Skin Cancer Classification Framework Using Enhanced Super Resolution Generative Adversarial Network and Custom Convolutional Neural Network

Abstract

Simple Summary


1. Introduction

2. Related Work

3. Materials and Methods

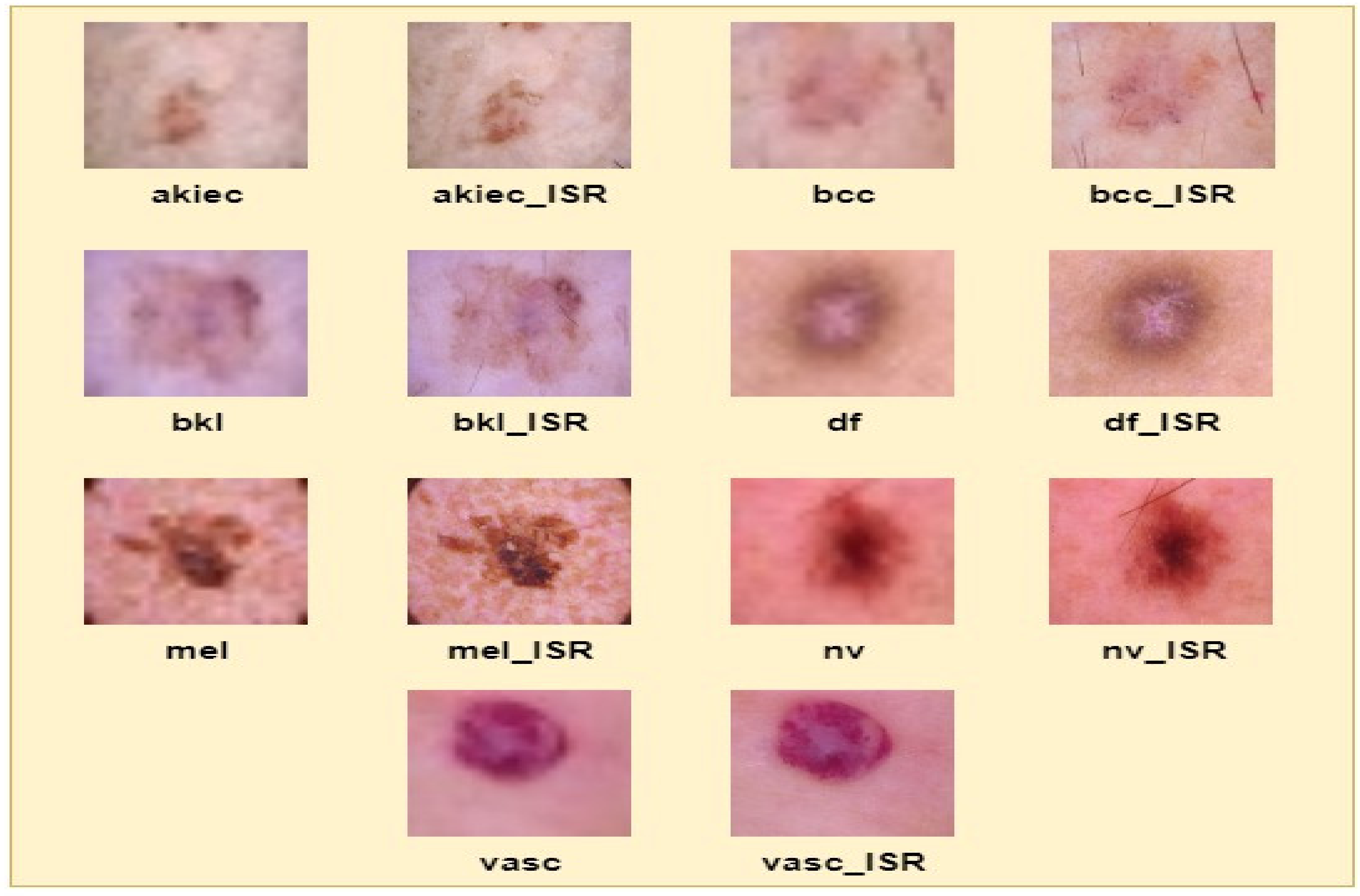

3.1. HAM10000 Dataset

3.1.1. Actinic Keratosis (akiec)

3.1.2. Basal Cell Carcinoma (bcc)

3.1.3. Benign Keratosis-Like Lesions (bkl)

3.1.4. Dermatofibroma (df)

3.1.5. Melanocytic Nevi (nv)

3.1.6. Vascular Lesions (vasc)

3.1.7. Melanoma (mel)

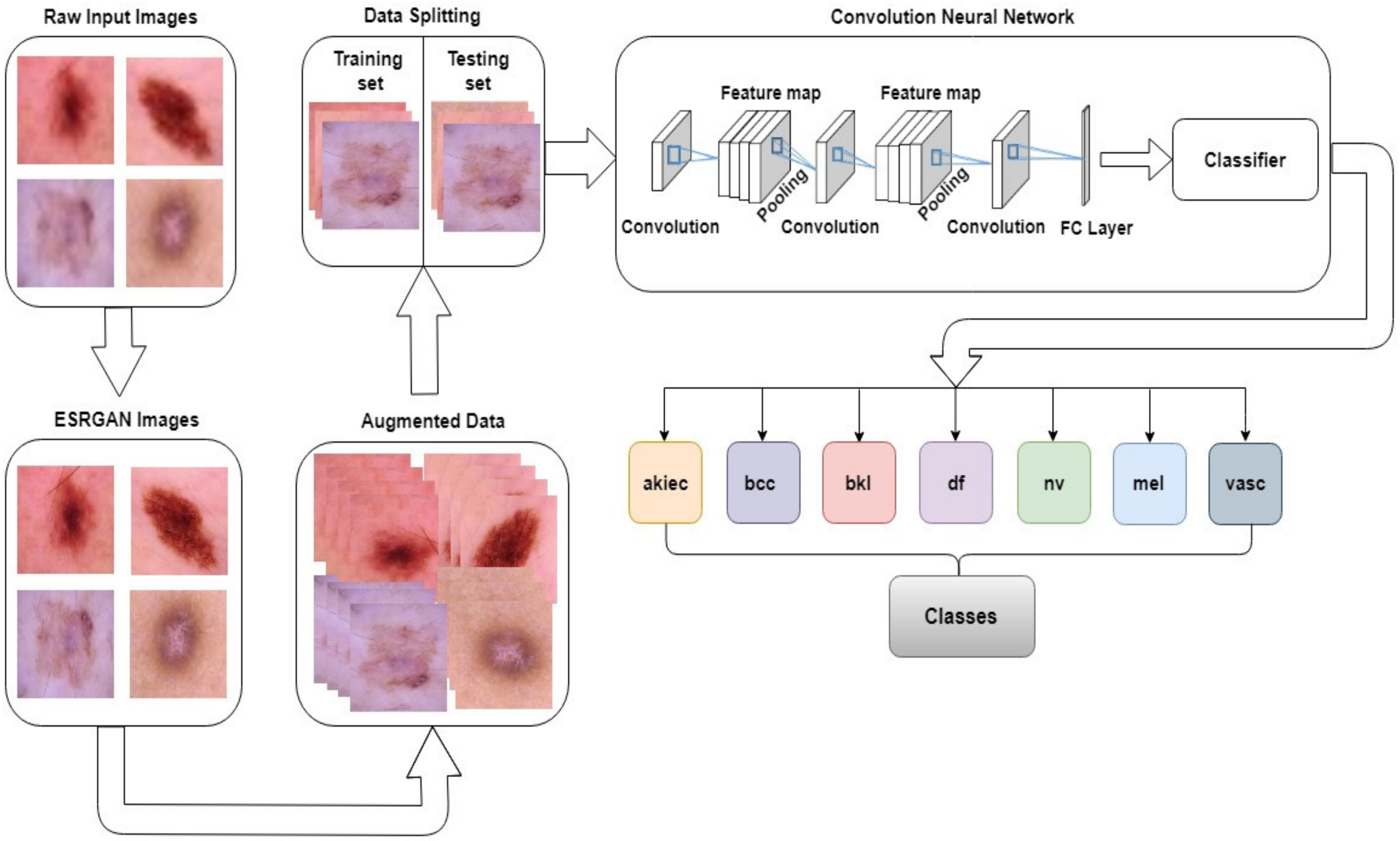

4. Proposed Methodology

4.1. Pre-Processing

4.1.1. Enhanced Super-Resolution Generative Adversarial Network (ESRGAN)

- a. Local Feature Fusion (LFF): It adaptively fuses the state passed on by the preceding RRDB with the outputs of all convolution layers in the current RRDB, as given by Equation (1).

- b. Local Residual Learning (LRL): It improves the overall information flow by adding the fused local features to the block input. This yields the final output of the d-th RRDB, as shown in Equation (2).
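The two operations can be written in the residual-dense-block notation that ESRGAN's RRDB builds on [28]. Because the original Equations (1) and (2) are not reproduced here, the symbols below are assumptions. $F_{d-1}$ is the input to the $d$-th block, and $F_{d,1}, \ldots, F_{d,C}$ are the outputs of its $C$ convolution layers. $[\cdot]$ denotes channel-wise concatenation, $H^{d}_{\mathrm{LFF}}$ is the fusing convolution, and $\beta$ is ESRGAN's residual scaling factor. Setting $\beta = 1$ gives plain local residual learning.

```latex
\begin{align}
F_{d,\mathrm{LF}} &= H^{d}_{\mathrm{LFF}}\left(\left[F_{d-1}, F_{d,1}, \ldots, F_{d,C}\right]\right) \tag{1} \\
F_{d} &= F_{d-1} + \beta \, F_{d,\mathrm{LF}} \tag{2}
\end{align}
```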

- (i) Discriminator loss: It penalizes the discriminator for misclassifying real and fake instances. The fake instances are the super-resolved images produced by the generator. The loss is given by Equation (3).

- (ii) Generator loss: It is computed from the discriminator's predictions and decreases when the discriminator mistakes the generated images for real ones, which drives the generator to improve. It is given by Equation (4).

- (iii) Perceptual loss: ESRGAN improves on the perceptual loss of SRGAN by computing it on features before activation rather than after activation. It is given by Equation (5).

- (iv) Content loss: The element-wise mean squared error (MSE) between the super-resolved image and the high-resolution reference. It is the most widely used objective for super-resolution and is given by Equation (6).
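The adversarial and content losses can be sketched in plain Python. This sketch assumes the relativistic average discriminator used by ESRGAN [28], $D_{Ra}(x_r, x_f) = \sigma(C(x_r) - \mathbb{E}_{x_f}[C(x_f)])$, where $C$ is the raw (pre-sigmoid) discriminator output. The function names, the `eps` guard, and the weights `lam=5e-3` and `eta=1e-2` are assumptions; the weights are the values reported in the ESRGAN paper, and the document does not state its own. ESRGAN's reference formulation uses an L1 content term, whereas this sketch follows the MSE described above. The perceptual term would apply the same squared error to pre-activation feature maps, which the caller supplies from a pretrained network.

```python
import math
from statistics import fmean


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def relativistic_losses(real_logits, fake_logits, eps=1e-12):
    """Relativistic average GAN losses (hypothetical helper).

    real_logits: raw discriminator outputs C(x_r) for high-resolution images
    fake_logits: raw discriminator outputs C(x_f) for super-resolved images
    Returns (discriminator_loss, generator_adversarial_loss).
    """
    mean_real = fmean(real_logits)
    mean_fake = fmean(fake_logits)
    # D_Ra(x_r, x_f): chance a real image looks more realistic than the average fake
    d_real = [sigmoid(c - mean_fake) for c in real_logits]
    # D_Ra(x_f, x_r): chance a fake image looks more realistic than the average real
    d_fake = [sigmoid(c - mean_real) for c in fake_logits]
    loss_d = (-fmean([math.log(p + eps) for p in d_real])
              - fmean([math.log(1.0 - p + eps) for p in d_fake]))
    # The generator loss swaps the targets, so it falls when the discriminator is fooled
    loss_g = (-fmean([math.log(1.0 - p + eps) for p in d_real])
              - fmean([math.log(p + eps) for p in d_fake]))
    return loss_d, loss_g


def mse(a, b):
    """Element-wise mean squared error between two equally sized flat sequences."""
    if len(a) != len(b):
        raise ValueError("inputs must have the same length")
    return fmean([(x - y) ** 2 for x, y in zip(a, b)])


def generator_total(perceptual, adversarial, content, lam=5e-3, eta=1e-2):
    # Weighted sum in the ESRGAN form: L_percep + lam * L_G^Ra + eta * L_content
    return perceptual + lam * adversarial + eta * content
```

For example, `relativistic_losses([0.0], [0.0])` returns about 1.386 ($2 \ln 2$) for both losses, because an undecided discriminator assigns probability 0.5 to every comparison.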

4.1.2. Data Augmentation

4.2. Custom Convolutional Neural Network

4.3. Building a Custom CNN Model

Algorithm 1: Proposed algorithm for classification of skin lesions

Step 1: Pre-processing

Step 2: Training custom CNN model

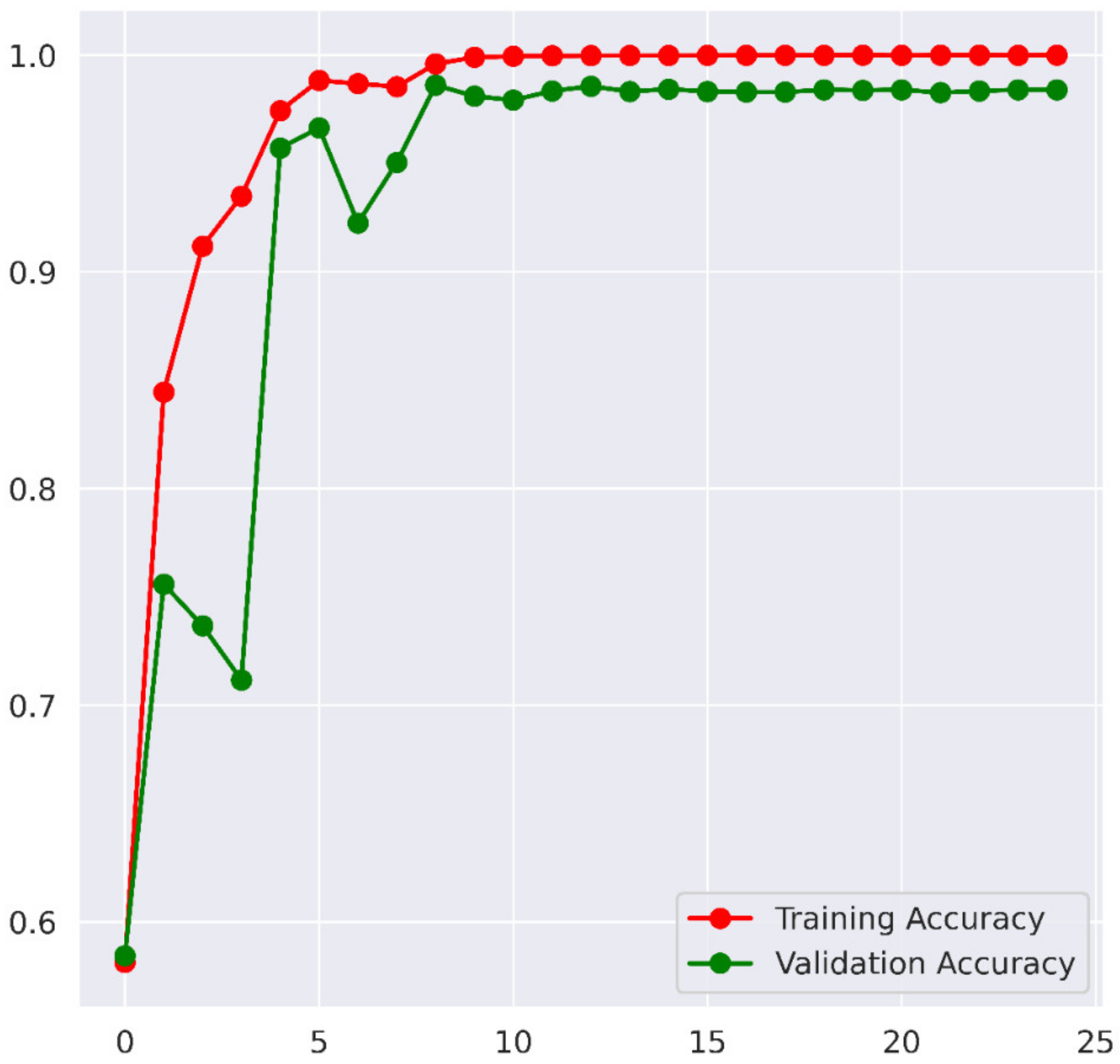

5. Results and Discussion

5.1. Performance Metrics

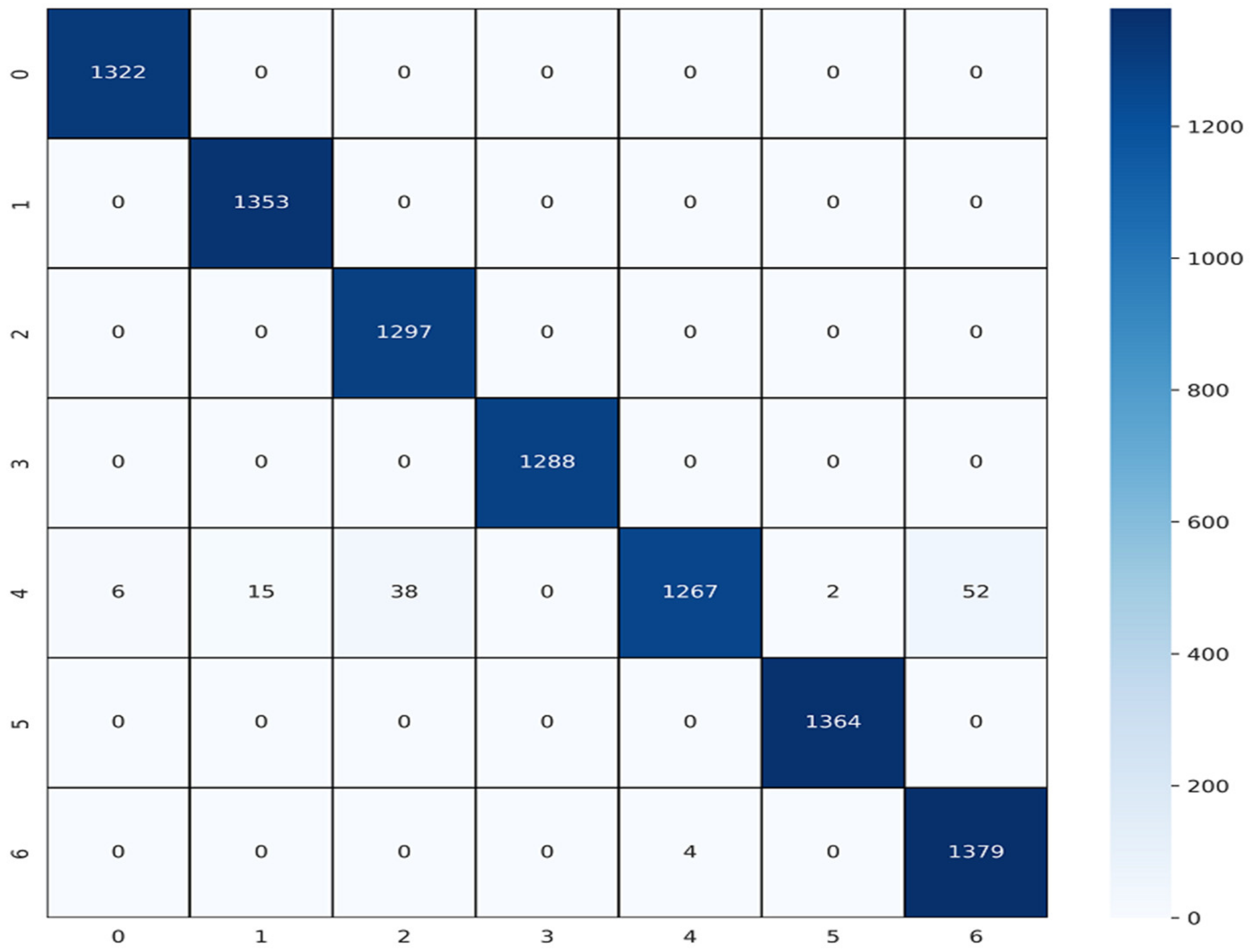

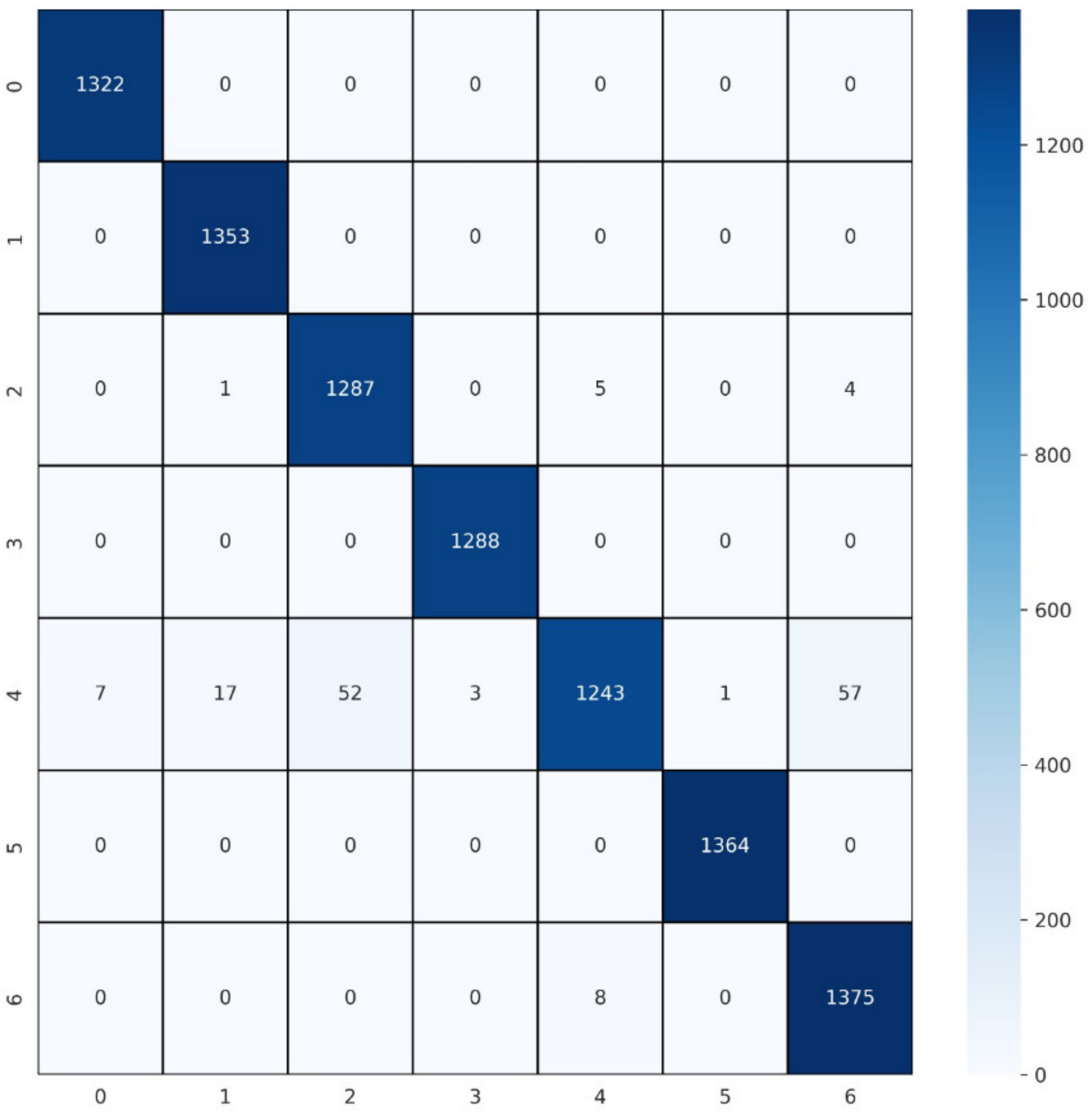

5.2. Protocol-I (Train:Test = 80:20 Ratio)

Various Approaches That Follow Protocol-I

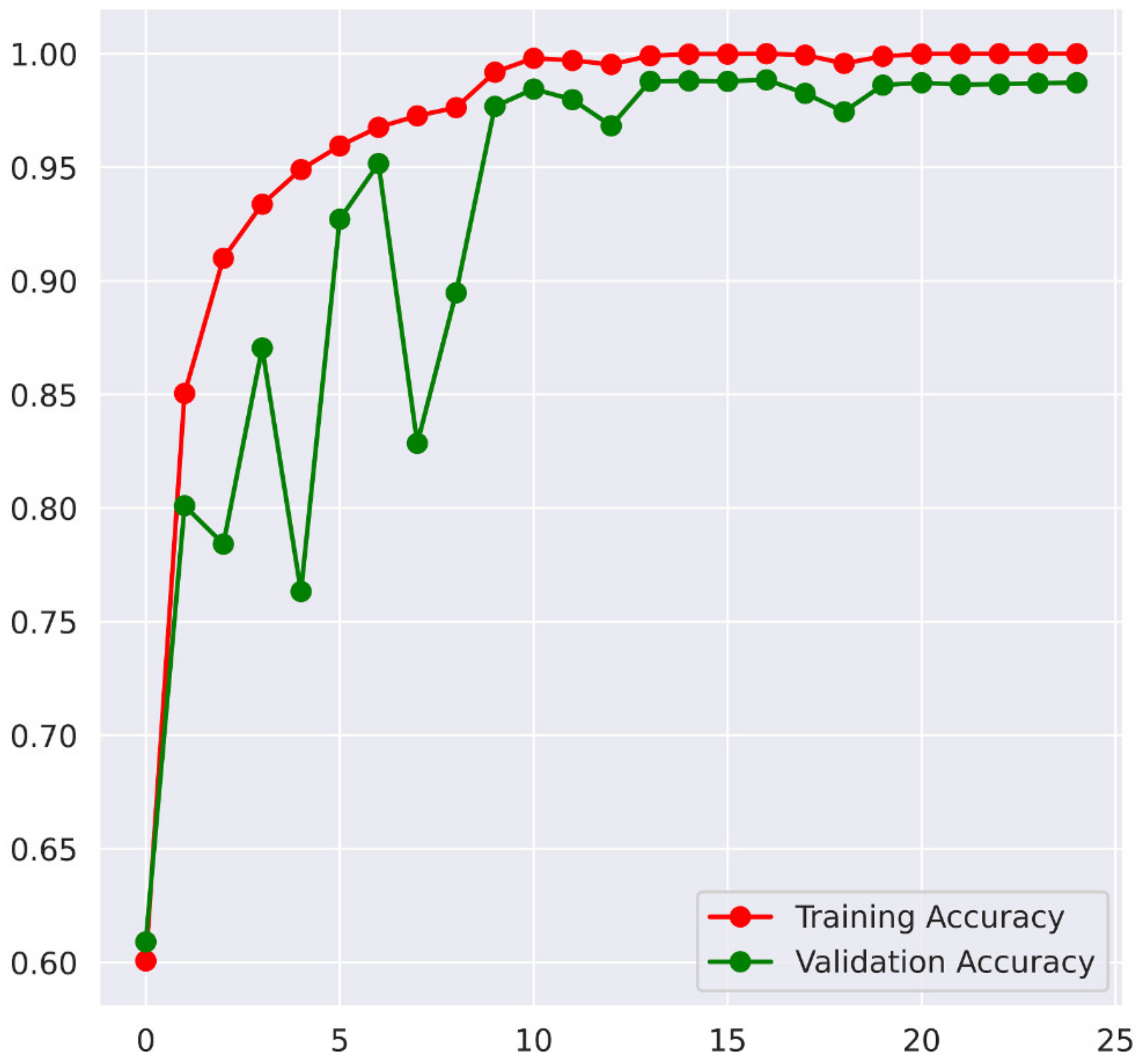

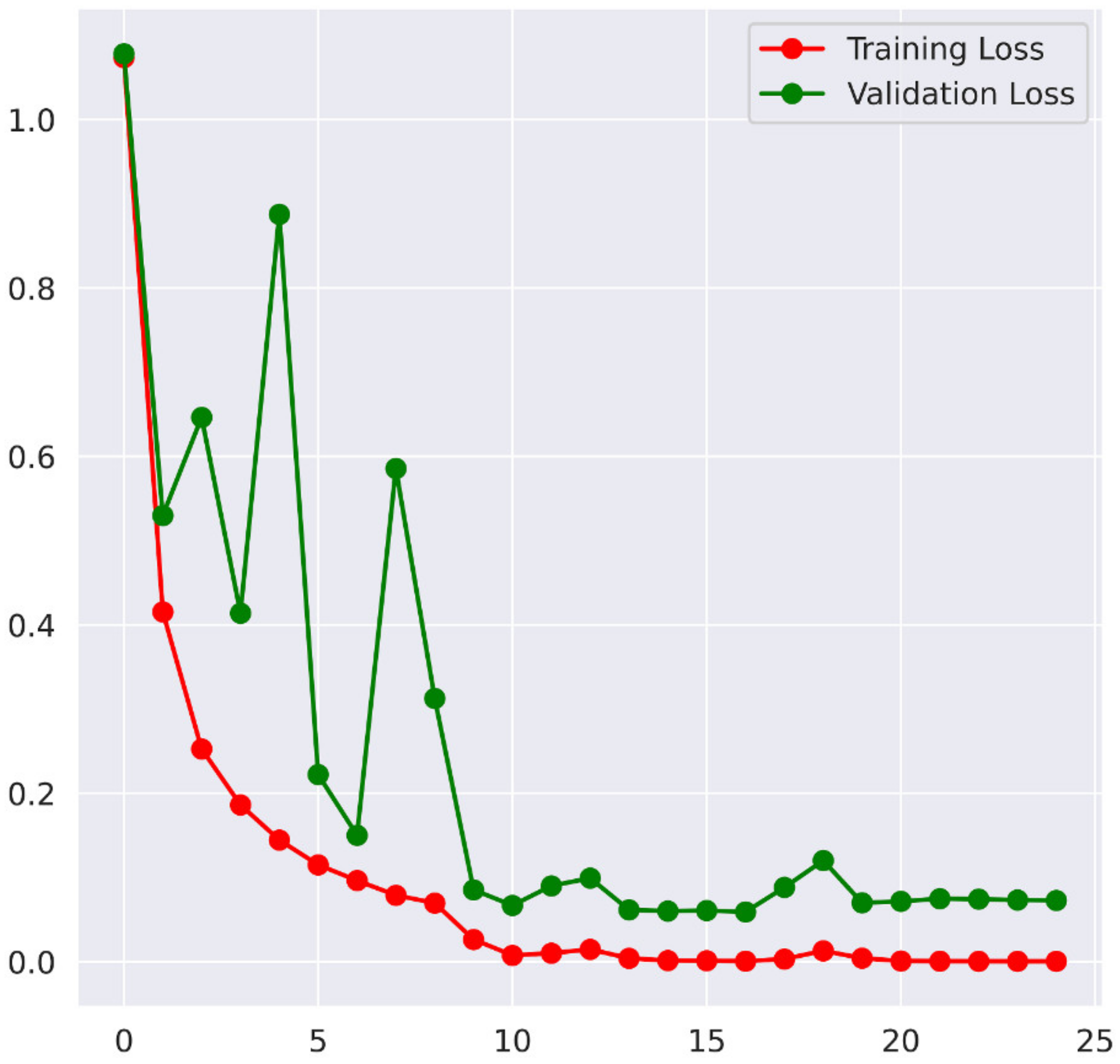

5.3. Protocol-II

Various Approaches That Follow Protocol-II

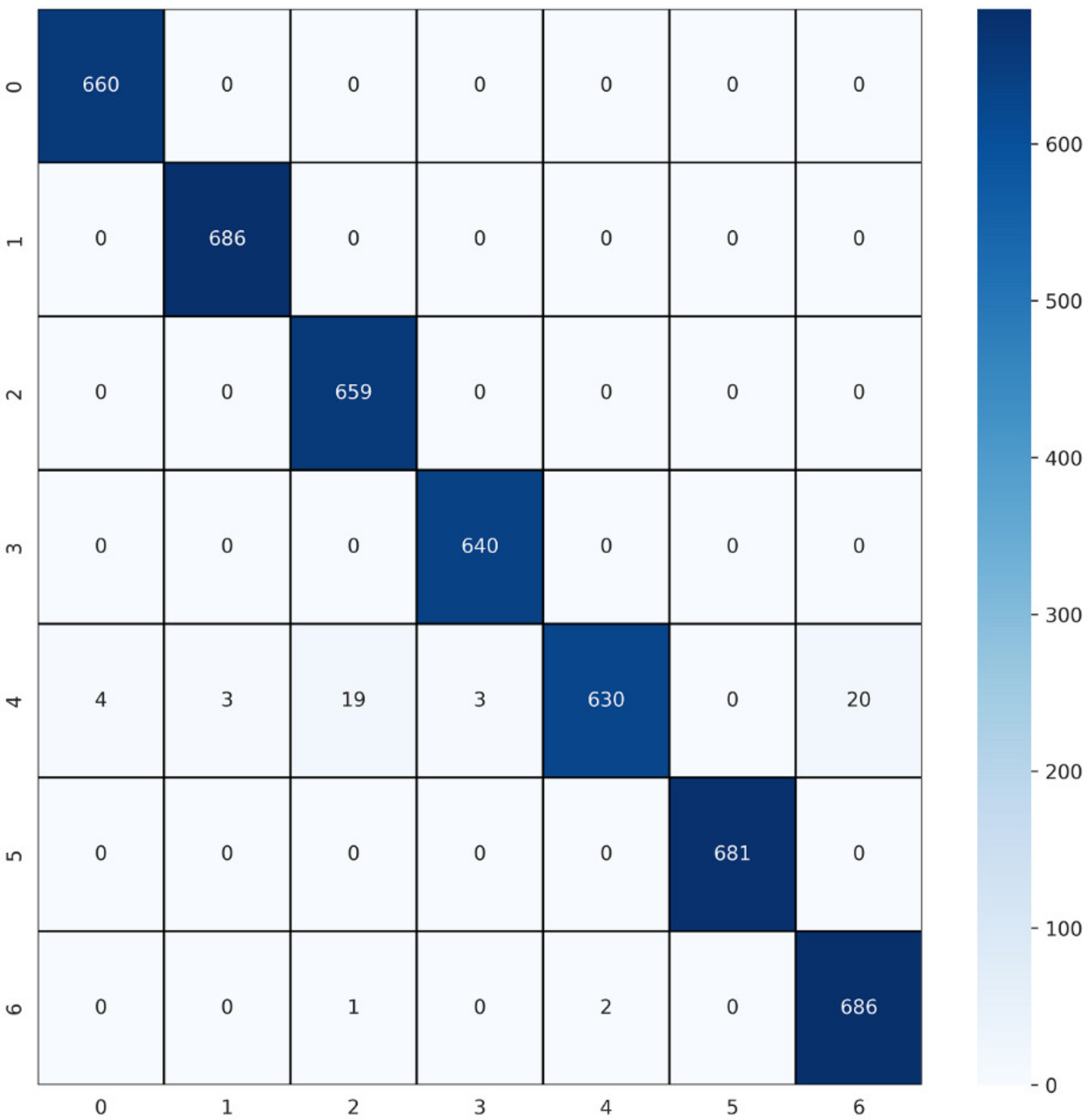

5.4. Protocol-III

Various Approaches That Follow Protocol-III

6. Conclusions and Future Scope

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Afza, F.; Sharif, M.; Khan, M.A.; Tariq, U.; Yong, H.S.; Cha, J. Multiclass Skin Lesion Classification Using Hybrid Deep Features Selection and Extreme Learning Machine. Sensors 2022, 22, 799.

2. Aldhyani, T.H.H.; Verma, A.; Al-Adhaileh, M.H.; Koundal, D. Multi-Class Skin Lesion Classification Using a Lightweight Dynamic Kernel Deep-Learning-Based Convolutional Neural Network. Diagnostics 2022, 12, 2048.

3. World Health Organization. Radiation: Ultraviolet (UV) Radiation and Skin Cancer—How Common Is Skin Cancer. Available online: https://www.who.int/news-room/questions-and-answers/item/radiation-ultraviolet-(uv)-radiation-and-skin-cancer (accessed on 12 October 2022).

4. Jeyakumar, J.P.; Jude, A.; Priya Henry, A.G.; Hemanth, J. Comparative Analysis of Melanoma Classification Using Deep Learning Techniques on Dermoscopy Images. Electronics 2022, 11, 2918.

5. Ali, K.; Shaikh, Z.A.; Khan, A.A.; Laghari, A.A. Multiclass Skin Cancer Classification Using EfficientNets—A First Step towards Preventing Skin Cancer. Neurosci. Inform. 2022, 2, 100034.

6. Hebbar, N.; Patil, H.Y.; Agarwal, K. Web Powered CT Scan Diagnosis for Brain Hemorrhage Using Deep Learning. In Proceedings of the 2020 IEEE 4th Conference on Information & Communication Technology (CICT), Chennai, India, 3 December 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–5.

7. Aladhadh, S.; Alsanea, M.; Aloraini, M.; Khan, T.; Habib, S.; Islam, M. An Effective Skin Cancer Classification Mechanism via Medical Vision Transformer. Sensors 2022, 22, 4008.

8. Shetty, B.; Fernandes, R.; Rodrigues, A.P. Skin Lesion Classification of Dermoscopic Images Using Machine Learning and Convolutional Neural Network. Sci. Rep. 2022, 12, 18134.

9. Tschandl, P.; Rosendahl, C.; Kittler, H. The HAM10000 Dataset, a Large Collection of Multi-Source Dermatoscopic Images of Common Pigmented Skin Lesions. Sci. Data 2018, 5, 180161.

10. Bansal, P.; Garg, R.; Soni, P. Detection of Melanoma in Dermoscopic Images by Integrating Features Extracted Using Handcrafted and Deep Learning Models. Comput. Ind. Eng. 2022, 168, 108060.

11. Basak, H.; Kundu, R.; Sarkar, R. MFSNet: A Multi Focus Segmentation Network for Skin Lesion Segmentation. Pattern Recognit. 2022, 128, 108673.

12. Nakai, K.; Chen, Y.W.; Han, X.H. Enhanced Deep Bottleneck Transformer Model for Skin Lesion Classification. Biomed. Signal Process. Control 2022, 78, 103997.

13. Popescu, D.; El-Khatib, M.; Ichim, L. Skin Lesion Classification Using Collective Intelligence of Multiple Neural Networks. Sensors 2022, 22, 4399.

14. Qian, S.; Ren, K.; Zhang, W.; Ning, H. Skin Lesion Classification Using CNNs with Grouping of Multi-Scale Attention and Class-Specific Loss Weighting. Comput. Methods Programs Biomed. 2022, 226, 107166.

15. Mahbod, A.; Schaefer, G.; Wang, C.; Dorffner, G.; Ecker, R.; Ellinger, I. Transfer Learning Using a Multi-Scale and Multi-Network Ensemble for Skin Lesion Classification. Comput. Methods Programs Biomed. 2020, 193, 105475.

16. Panthakkan, A.; Anzar, S.M.; Jamal, S.; Mansoor, W. Concatenated Xception-ResNet50—A Novel Hybrid Approach for Accurate Skin Cancer Prediction. Comput. Biol. Med. 2022, 150, 106170.

17. Almaraz-Damian, J.A.; Ponomaryov, V.; Sadovnychiy, S.; Castillejos-Fernandez, H. Melanoma and Nevus Skin Lesion Classification Using Handcraft and Deep Learning Feature Fusion via Mutual Information Measures. Entropy 2020, 22, 484.

18. Zalaudek, I.; Giacomel, J.; Schmid, K.; Bondino, S.; Rosendahl, C.; Cavicchini, S.; Tourlaki, A.; Gasparini, S.; Bourne, P.; Keir, J.; et al. Dermatoscopy of Facial Actinic Keratosis, Intraepidermal Carcinoma, and Invasive Squamous Cell Carcinoma: A Progression Model. J. Am. Acad. Dermatol. 2012, 66, 589–597.

19. Sevli, O. A Deep Convolutional Neural Network-Based Pigmented Skin Lesion Classification Application and Experts Evaluation. Neural Comput. Appl. 2021, 33, 12039–12050.

20. Lallas, A.; Apalla, Z.; Argenziano, G.; Longo, C.; Moscarella, E.; Specchio, F.; Raucci, M.; Zalaudek, I. The Dermatoscopic Universe of Basal Cell Carcinoma. Dermatol. Pract. Concept. 2014, 4, 11–24.

21. BinJadeed, H.; Aljomah, N.; Alsubait, N.; Alsaif, F.; AlHumidi, A. Lichenoid Keratosis Successfully Treated with Topical Imiquimod. JAAD Case Rep. 2020, 6, 1353–1355.

22. Ortonne, J.P.; Pandya, A.G.; Lui, H.; Hexsel, D. Treatment of Solar Lentigines. J. Am. Acad. Dermatol. 2006, 54, 262–271.

23. Zaballos, P.; Salsench, E.; Serrano, P.; Cuellar, F.; Puig, S.; Malvehy, J. Studying Regression of Seborrheic Keratosis in Lichenoid Keratosis with Sequential Dermoscopy Imaging. Dermatology 2010, 220, 103–109.

24. Zaballos, P.; Puig, S.; Llambrich, A.; Malvehy, J. Dermoscopy of Dermatofibromas. Arch. Dermatol. 2008, 144, 75–83.

25. Sarkar, R.; Chatterjee, C.C.; Hazra, A. Diagnosis of Melanoma from Dermoscopic Images Using a Deep Depthwise Separable Residual Convolutional Network. IET Image Process. 2019, 13, 2130–2142.

26. Teja, K.U.V.R.; Reddy, B.P.V.; Likith Preetham, A.; Patil, H.Y.; Poorna Chandra, T. Prediction of Diabetes at Early Stage with Supplementary Polynomial Features. In Proceedings of the 2021 Smart Technologies, Communication and Robotics (STCR), Sathyamangalam, India, 9–10 October 2021; pp. 7–11.

27. Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.P.; Tejani, A.; Totz, J.; Wang, Z.; et al. Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 2017; pp. 4681–4690.

28. Wang, X.; Yu, K.; Wu, S.; Gu, J.; Liu, Y.; Dong, C.; Qiao, Y.; Loy, C.C. ESRGAN: Enhanced Super-Resolution Generative Adversarial Networks. In Computer Vision – ECCV 2018 Workshops; Lecture Notes in Computer Science (LNCS), including its subseries Lecture Notes in Artificial Intelligence (LNAI) and Lecture Notes in Bioinformatics (LNBI); Springer: Cham, Switzerland, 2019; Volume 11133, pp. 63–79.

29. Le-Tien, T.; Nguyen-Thanh, T.; Xuan, H.P.; Nguyen-Truong, G.; Ta-Quoc, V. Deep Learning Based Approach Implemented to Image Super-Resolution. J. Adv. Inf. Technol. 2020, 11, 209–216.

30. Milton, M.A.A. Automated Skin Lesion Classification Using Ensemble of Deep Neural Networks in ISIC 2018: Skin Lesion Analysis Towards Melanoma Detection Challenge. arXiv 2019, arXiv:1901.10802.

31. Naeem, A.; Farooq, M.S.; Khelifi, A.; Abid, A. Malignant Melanoma Classification Using Deep Learning: Datasets, Performance Measurements, Challenges and Opportunities. IEEE Access 2020, 8, 110575–110597.

32. Hu, Z.; Tang, J.; Wang, Z.; Zhang, K.; Zhang, L.; Sun, Q. Deep Learning for Image-Based Cancer Detection and Diagnosis − A Survey. Pattern Recognit. 2018, 83, 134–149.

33. Srivastava, V.; Kumar, D.; Roy, S. A Median Based Quadrilateral Local Quantized Ternary Pattern Technique for the Classification of Dermatoscopic Images of Skin Cancer. Comput. Electr. Eng. 2022, 102, 108259.

34. Patil, P.; Ranganathan, M.; Patil, H. Ship Image Classification Using Deep Learning Method. In Applied Computer Vision and Image Processing; Iyer, B., Rajurkar, A.M., Gudivada, V., Eds.; Springer: Singapore, 2020; pp. 220–227.

35. Barua, S.; Patil, H.; Desai, P.; Manoharan, A. Deep Learning-Based Smart Colored Fabric Defect Detection System; Springer: Berlin/Heidelberg, Germany, 2020; pp. 212–219. ISBN 978-981-15-4028-8.

36. Sarkar, A.; Maniruzzaman, M.; Ahsan, M.S.; Ahmad, M.; Kadir, M.I.; Taohidul Islam, S.M. Identification and Classification of Brain Tumor from MRI with Feature Extraction by Support Vector Machine. In Proceedings of the 2020 International Conference for Emerging Technology (INCET), Belgaum, India, 5–7 June 2020; Volume 2, pp. 9–12.

37. Agyenta, C.; Akanzawon, M. Skin Lesion Classification Based on Convolutional Neural Network. J. Appl. Sci. Technol. Trends 2022, 3, 14–19.

38. Saarela, M.; Georgieva, L. Robustness, Stability, and Fidelity of Explanations for a Deep Skin Cancer Classification Model. Appl. Sci. 2022, 12, 9545.

39. Alam, M.J.; Mohammad, M.S.; Hossain, M.A.F.; Showmik, I.A.; Raihan, M.S.; Ahmed, S.; Mahmud, T.I. S2C-DeLeNet: A Parameter Transfer Based Segmentation-Classification Integration for Detecting Skin Cancer Lesions from Dermoscopic Images. Comput. Biol. Med. 2022, 150, 106148.

| Class | akiec | bcc | bkl | df | nv | vasc | mel |
|---|---|---|---|---|---|---|---|
| Images | 327 | 514 | 1099 | 115 | 6705 | 142 | 1113 |

| Class | akiec | bcc | bkl | df | nv | vasc | mel |
|---|---|---|---|---|---|---|---|
| Label | 0 | 1 | 2 | 3 | 4 | 5 | 6 |

| Layer | Output Shape | Parameters |
|---|---|---|
| Input Layer | [(None, 28, 28, 3)] | 0 |
| Convolution 2D_1 | (None, 28, 28, 32) | 896 |
| MaxPooling2D_1 | (None, 14, 14, 32) | 0 |
| Batch Normalization_1 | (None, 14, 14, 32) | 128 |
| Convolution 2D_2 | (None, 14, 14, 64) | 18,496 |
| Convolution 2D_3 | (None, 14, 14, 64) | 36,928 |
| MaxPooling2D_2 | (None, 7, 7, 64) | 0 |
| Batch Normalization_2 | (None, 7, 7, 64) | 256 |
| Convolution 2D_4 | (None, 7, 7, 128) | 73,856 |
| Convolution 2D_5 | (None, 7, 7, 128) | 147,584 |
| MaxPooling2D_3 | (None, 3, 3, 128) | 0 |
| Batch Normalization_3 | (None, 3, 3, 128) | 512 |
| Convolution 2D_6 | (None, 3, 3, 256) | 295,168 |
| Convolution 2D_7 | (None, 3, 3, 256) | 590,080 |
| MaxPooling2D_4 | (None, 1, 1, 256) | 0 |
| Flatten | (None, 256) | 0 |
| Dropout | (None, 256) | 0 |
| Dense_1 | (None, 256) | 65,792 |
| Batch Normalization_5 | (None, 256) | 1024 |
| Dense_2 | (None, 128) | 32,896 |
| Batch Normalization_6 | (None, 128) | 512 |
| Dense_3 | (None, 64) | 8256 |
| Batch Normalization_7 | (None, 64) | 256 |
| Dense_4 | (None, 32) | 2080 |
| Batch Normalization_8 | (None, 32) | 128 |
| Classifier | (None, 7) | 231 |
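The output shapes and parameter counts in the table can be reproduced with a short standard-library calculation. This sketch assumes 3×3 kernels with "same" padding and 2×2 max pooling with stride 2, so 7×7 becomes 3×3 and 3×3 becomes 1×1. It also assumes four values per channel for batch normalization (scale, offset, moving mean, moving variance). These settings are inferred from the numbers and are not stated in the table. The parameter-free row with output (None, 1, 1, 256) is treated as a fourth pooling step, because a batch-normalization layer on 256 channels would carry 1024 parameters and would not change the spatial size. The function `layer_table` is hypothetical.

```python
def layer_table(input_shape=(28, 28, 3), num_classes=7):
    """Recompute (name, output_shape, params) rows for the custom CNN (hypothetical helper)."""
    shape = tuple(input_shape)
    rows = []

    def add(name, new_shape, params):
        nonlocal shape
        shape = new_shape
        rows.append((name, shape, params))

    def conv(name, filters, k=3):
        h, w, c = shape
        # k*k*c weights per filter plus one bias; "same" padding keeps h and w
        add(name, (h, w, filters), k * k * c * filters + filters)

    def pool(name):
        h, w, c = shape
        # 2x2 pooling with stride 2 floors odd sizes (7 -> 3, 3 -> 1)
        add(name, (h // 2, w // 2, c), 0)

    def batch_norm(name):
        # gamma, beta, moving mean and moving variance for each channel or unit
        add(name, shape, 4 * shape[-1])

    def dense(name, units):
        add(name, (units,), shape[-1] * units + units)

    conv("Convolution 2D_1", 32)
    pool("MaxPooling2D_1")
    batch_norm("Batch Normalization_1")
    conv("Convolution 2D_2", 64)
    conv("Convolution 2D_3", 64)
    pool("MaxPooling2D_2")
    batch_norm("Batch Normalization_2")
    conv("Convolution 2D_4", 128)
    conv("Convolution 2D_5", 128)
    pool("MaxPooling2D_3")
    batch_norm("Batch Normalization_3")
    conv("Convolution 2D_6", 256)
    conv("Convolution 2D_7", 256)
    pool("MaxPooling2D_4")
    h, w, c = shape
    add("Flatten", (h * w * c,), 0)
    add("Dropout", shape, 0)  # dropout changes neither the shape nor the parameter count
    for i, units in enumerate((256, 128, 64, 32), start=1):
        dense(f"Dense_{i}", units)
        batch_norm(f"Batch Normalization_{i + 4}")
    dense("Classifier", num_classes)
    return rows


rows = layer_table()
for name, out_shape, params in rows:
    print(f"{name:<24}{str((None, *out_shape)):<22}{params:>9,}")
print("Total parameters:", sum(p for _, _, p in rows))  # 1275079
```

Every per-layer count then matches the table, for a total of 1,275,079 parameters, including the non-trainable batch-normalization statistics.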

| Parameter | Value |
|---|---|
| Batch size | 128 |
| Number of epochs | 25 |
| Number of iterations | 294 |
| Optimizer | Adam |
| Optimizer parameters | Learning rate = 0.00001 |
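One reading consistent with the table is that 294 counts mini-batches per epoch for the Protocol-I training set. That set has 37,548 images, the sum of the per-class training samples in the Protocol-I split table. Under this reading, the final partial batch counts as a full iteration. This interpretation is an assumption, and the helper `iterations_per_epoch` is hypothetical.

```python
import math


def iterations_per_epoch(num_samples: int, batch_size: int) -> int:
    # A final partial batch still costs one optimizer step, hence the ceiling
    return math.ceil(num_samples / batch_size)


# Per-class training samples for Protocol-I (akiec, bcc, bkl, df, nv, vasc, mel)
protocol_1_train = [5383, 5352, 5408, 5417, 5325, 5341, 5322]

steps = iterations_per_epoch(sum(protocol_1_train), batch_size=128)
total_updates = steps * 25  # 25 epochs
print(steps, total_updates)  # 294 7350
```

Under the same reading, the Protocol-II training set (33,793 images) would give 265 iterations per epoch and Protocol-III (38,017 images) would give 298.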

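| Performance Metrics | Formula | Equation |
|---|---|---|
| Accuracy | $\frac{TP + TN}{TP + TN + FP + FN}$ | (7) |
| F1-Score | $\frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$ | (8) |
| Recall | $\frac{TP}{TP + FN}$ | (9) |
| Precision | $\frac{TP}{TP + FP}$ | (10) |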
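The following minimal sketch computes Equations (7) to (10) from a multi-class confusion matrix, using one-vs-rest counts for each class. For the seven-class problem, overall accuracy is taken as the fraction of correctly classified images, that is, the trace of the matrix divided by its total. This is the usual multi-class reading. That choice and the function name `classification_metrics` are assumptions.

```python
def classification_metrics(cm):
    """cm[i][j] = number of images of true class i predicted as class j.

    Returns (overall_accuracy, [(precision, recall, f1) for each class]).
    """
    n = len(cm)
    total = sum(map(sum, cm))
    correct = sum(cm[i][i] for i in range(n))
    per_class = []
    for k in range(n):
        tp = cm[k][k]
        fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, truly another class
        fn = sum(cm[k]) - tp  # truly k, predicted as another class
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        per_class.append((precision, recall, f1))
    return correct / total, per_class
```

For example, with `cm = [[5, 1], [2, 2]]` the accuracy is 0.7, and class 0 has a precision of 5/7, a recall of 5/6 and an F1-score of 10/13.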

| Class | akiec | bcc | bkl | df | nv | vasc | mel |
|---|---|---|---|---|---|---|---|
| Training Samples | 5383 | 5352 | 5408 | 5417 | 5325 | 5341 | 5322 |
| Testing Samples | 1322 | 1353 | 1297 | 1288 | 1380 | 1364 | 1383 |
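The Protocol-I split can be checked arithmetically. Each class totals 6,705 images, which equals the number of nv images in the original dataset. The test set is exactly 20% of the 46,935 images. This suggests the minority classes were augmented up to the majority-class count, although that reading is an inference from the numbers.

```python
classes = ["akiec", "bcc", "bkl", "df", "nv", "vasc", "mel"]
train = [5383, 5352, 5408, 5417, 5325, 5341, 5322]
test = [1322, 1353, 1297, 1288, 1380, 1364, 1383]

# Training plus testing images for each lesion class
per_class_total = {c: tr + te for c, tr, te in zip(classes, train, test)}
grand_total = sum(train) + sum(test)
test_share = sum(test) / grand_total

print(per_class_total)  # each value is 6705
print(grand_total, round(test_share, 4))  # 46935 0.2
```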

| Lesion Class | Precision | Recall | F1-Score |
|---|---|---|---|
| 0-akiec | 1.00 | 1.00 | 1.00 |
| 1-bcc | 0.99 | 1.00 | 0.99 |
| 2-bkl | 0.97 | 1.00 | 0.99 |
| 3-df | 1.00 | 1.00 | 1.00 |
| 4-nv | 1.00 | 0.92 | 0.96 |
| 5-vasc | 1.00 | 1.00 | 1.00 |
| 6-mel | 0.96 | 1.00 | 0.98 |
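As a consistency check, the F1-scores can be recomputed from the reported precision and recall using F1 = 2PR/(P + R). The inputs are already rounded to two decimals, so a recomputed value can differ from the table in the last digit. For 2-bkl, 0.97 and 1.00 give 0.98 rather than 0.99, which is consistent with an unrounded precision slightly above 0.97. The helper `f1_from` is hypothetical.

```python
def f1_from(precision: float, recall: float) -> float:
    # Harmonic mean of precision and recall
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) per lesion class, copied from the table
reported = {
    "0-akiec": (1.00, 1.00, 1.00),
    "1-bcc": (0.99, 1.00, 0.99),
    "2-bkl": (0.97, 1.00, 0.99),
    "3-df": (1.00, 1.00, 1.00),
    "4-nv": (1.00, 0.92, 0.96),
    "5-vasc": (1.00, 1.00, 1.00),
    "6-mel": (0.96, 1.00, 0.98),
}

for label, (p, r, f1) in reported.items():
    recomputed = round(f1_from(p, r), 2)
    note = "" if recomputed == f1 else "  (differs because inputs are rounded)"
    print(f"{label:<8} recomputed={recomputed:.2f} reported={f1:.2f}{note}")
```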

| Sr. No. | Work | Data Augmentation/Balancing (Yes/No); Total Images After Augmentation/Balancing | Methodology | Accuracy (%) |
|---|---|---|---|---|
| 1 | Agyenta et al. [37] | Yes, 7283 | InceptionV3 | 85.80 |
| | | | ResNet50 | 86.69 |
| | | | DenseNet201 | 86.91 |
| 2 | Qian et al. [14] | Yes, not mentioned | Grouping of Multi-scale Attention Blocks (GMAB) | 91.6 |
| 3 | Shetty et al. [8] | Yes, 1400 | Convolutional neural network (CNN) | 95.18 |
| 4 | Panthakkan et al. [16] | No | Concatenated Xception-ResNet50 | 97.8 |
| 5 | Proposed algorithm | Yes, 46,935 | ESRGAN-CNN | 98.77 |

| Class | akiec | bcc | bkl | df | nv | vasc | mel |
|---|---|---|---|---|---|---|---|
| Training Samples | 4845 | 4817 | 4867 | 4875 | 4792 | 4807 | 4790 |
| Validation Samples | 538 | 535 | 541 | 542 | 533 | 534 | 532 |
| Testing Samples | 1322 | 1353 | 1297 | 1288 | 1380 | 1364 | 1383 |

| Sr. No. | Work | Data Augmentation/Balancing (Yes/No); Total Images After Augmentation/Balancing | Methodology | Accuracy (%) |
|---|---|---|---|---|
| 1 | Sevli [19] | Yes, not mentioned | Convolutional neural network (CNN) | 91.51 |
| 2 | Saarela et al. [38] | No | Deep convolutional neural network (CNN) | 80 |
| 3 | Proposed algorithm | Yes, 46,935 | ESRGAN-CNN | 98.36 |

| Class | akiec | bcc | bkl | df | nv | vasc | mel |
|---|---|---|---|---|---|---|---|
| Training Samples | 5346 | 5557 | 5338 | 5184 | 5500 | 5513 | 5579 |
| Validation Samples | 594 | 617 | 593 | 576 | 611 | 613 | 620 |
| Testing Samples | 660 | 686 | 659 | 640 | 679 | 681 | 689 |
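The train/validation/test proportions implied by the Protocol-II and Protocol-III tables can be computed directly from the sample counts. Protocol-II keeps the 80:20 train/test split of Protocol-I and holds out about 10% of the training images for validation, which gives roughly 72:8:20 overall. Protocol-III works out to roughly 81:9:10. Both pools total 46,935 images. These ratios are derived from the counts and are not stated in the tables. The helper `split_shares` is hypothetical.

```python
def split_shares(train, val, test):
    """Return the pool size and the train, validation and test shares (2 d.p.)."""
    totals = (sum(train), sum(val), sum(test))
    pool = sum(totals)
    return pool, tuple(round(t / pool, 2) for t in totals)


# Per-class counts in the order akiec, bcc, bkl, df, nv, vasc, mel
protocol_2 = (
    [4845, 4817, 4867, 4875, 4792, 4807, 4790],  # training
    [538, 535, 541, 542, 533, 534, 532],  # validation
    [1322, 1353, 1297, 1288, 1380, 1364, 1383],  # testing
)
protocol_3 = (
    [5346, 5557, 5338, 5184, 5500, 5513, 5579],  # training
    [594, 617, 593, 576, 611, 613, 620],  # validation
    [660, 686, 659, 640, 679, 681, 689],  # testing
)

print(split_shares(*protocol_2))  # (46935, (0.72, 0.08, 0.2))
print(split_shares(*protocol_3))  # (46935, (0.81, 0.09, 0.1))
```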

| Sr. No. | Work | Data Augmentation/Balancing (Yes/No); Total Images After Augmentation/Balancing | Methodology | Accuracy (%) |
|---|---|---|---|---|
| 1 | Aldhyani et al. [2] | Yes, 54,907 | Lightweight Dynamic Kernel Deep-Learning-Based Convolutional Neural Network | 97.8 |
| 2 | Alam et al. [39] | No | S2C-DeLeNet | 90.58 |
| 3 | Proposed algorithm | Yes, 46,935 | ESRGAN-CNN | 98.89 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mukadam, S.B.; Patil, H.Y. Skin Cancer Classification Framework Using Enhanced Super Resolution Generative Adversarial Network and Custom Convolutional Neural Network. Appl. Sci. 2023, 13, 1210. https://doi.org/10.3390/app13021210
