Abstract

Hyperspectral imaging (HSI) has been used in a wide range of applications in recent years. However, hyperspectral images are corrupted by various types of noise during acquisition. Noise reduction improves image quality and makes features of interest easier to detect and analyze. To achieve better image recovery, we propose a weighted group sparsity-regularized low-rank tensor ring decomposition (LRTRDGS) method for hyperspectral image recovery. The method uses tensor ring decomposition to exploit self-similarity and global spectral correlation. Weighted group sparsity regularization is used to describe the group sparse structure of the spatial difference image along the spectral dimension. We solve the proposed model using a symmetric alternating direction method of multipliers with an added proximity term. Experimental results verify the effectiveness of the proposed method.

1. Introduction

Hyperspectral imaging (HSI) is a powerful tool for studying and analyzing data for various applications. HSI uses a combination of visible, infrared and ultraviolet wavelengths to capture images in a way that traditional imaging methods cannot. HSI manifests two essential attributes: spatial and spectral resolutions. Specifically, it possesses two spatial dimensions and one spectral dimension. As a result, it generates an almost uninterrupted reflectance spectrum for each pixel within the scene. This, in turn, facilitates a comprehensive spectral analysis of scene attributes that would otherwise prove challenging to identify using a broader range of multispectral scanners [1]. The Flying Laboratory of Imaging Systems (FLIS) provides a single platform that combines hyperspectral, thermal and laser scanning to produce high-quality remote sensing data [2].

HSI has many applications, such as mining [3], crop analysis in agriculture [4,5], planetary exploration [6], medical imaging [7], mineral exploration [8], the detection of soil-borne viruses [9] and the measurement of water quality [10]. Hyperspectral images often contain noise, which can significantly reduce the performance and accuracy of subsequent analysis. Noise reduction is therefore an important step in processing hyperspectral images: it improves image quality and thereby facilitates the identification and examination of features of interest. With noise reduction algorithms, HSI becomes more useful and accurate for these applications.

Tensors are a powerful mathematical tool for extracting information from hyperspectral images. They reveal patterns and relationships between different parts of an image that are not visible with traditional imaging techniques, and they make it possible to analyze and interpret the data in a much more sophisticated way. Many researchers use tensor methods, such as Tucker decomposition [11,12], canonical polyadic (CP) decomposition [13,14] and tensor singular-value decomposition (t-SVD) [15], for hyperspectral image analysis. For example, Chen et al. proposed a weighted group sparsity-regularized low-rank tensor decomposition (LRTDGS) model based on Tucker decomposition [16]. Using Tucker decomposition, Wang et al. developed a hybrid noise removal method for HSI [17]. Zhao et al. introduced a nonlocal low-rank regularized canonical polyadic tensor decomposition method (NLR-CPTD) that relies on two priors: global correlation across spectrum (GCS) and nonlocal self-similarity [18]. Xie et al. developed the weighted Schatten p-norm low-rank matrix approximation (WSN-LRMA) method, in which the eigenvalues are obtained through t-SVD [19]. Although these techniques obtain satisfactory denoising results, there is still significant potential for improvement in hyperspectral image denoising.

Recently, many scholars have proposed decomposition methods based on tensor rings (TRs), which represent a higher-order tensor by cyclically contracted third-order tensors [20,21]. The tensor ring factors can be circularly shifted while still representing the same tensor, which effectively balances the correlation between the modes. TR decomposition can therefore better approximate higher-order tensors. For tensor completion, refs. [22,23] verified that the TR method obtains better results than the Tucker and CP decomposition methods. TR is able to improve accuracy and enhance security. It can store and process data with greater accuracy than traditional methods, as the three-dimensional structure of the ring allows for a more precise representation of the data. Data stored in a tensor ring are also more secure than data processed by traditional methods, as the three-dimensional structure of the ring makes it difficult to hack or access the data without authorization.

The total variation (TV) regularization method was first proposed by Rudin et al. [24]. It effectively preserves the spatial sparsity and smoothness of images, making it applicable to various image processing tasks, such as denoising, magnetic resonance imaging and super-resolution. He et al. proposed total variation regularized low-rank matrix decomposition (LRTV) [25]. Wang et al. proposed a spectral–spatial total variation regularization (SSTV) method to model the smooth structure in the spectral and spatial domains [17]. He et al. also presented a local low-rank matrix recovery method with spatial–spectral total variation regularization (LLRSSTV), which exploits the total variation of hyperspectral images in each direction when recovering the local low-rank patches [26]. However, most of these methods impose an $\ell_1$-norm constraint on the spatial difference images to promote piecewise smoothness. Because the $\ell_1$-norm cannot effectively describe the group sparse structure of the spatial difference image along the spectral dimension, Chen et al. introduced weighted group sparsity-regularized low-rank tensor decomposition for hyperspectral image recovery [16].

In light of the preceding discussion, we present a weighted group sparsity-regularized low-rank tensor ring decomposition (LRTRDGS) approach for improving hyperspectral image quality. The method combines the advantages of tensor ring decomposition and weighted group sparse regularization. A symmetric alternating direction method of multipliers with a proximity term is used to solve the proposed model. The main contributions of this paper are as follows:

- (1) Exploiting the global spatial and spectral correlation of hyperspectral images, tensor ring decomposition is employed to separate the clean hyperspectral image from raw observations corrupted by complex noise.

- (2) The gradient components of hyperspectral images are typically zero along the spectral dimension in smooth areas and non-zero in edge regions. To exploit this structure, we incorporate a weighted group sparsity regularization term into the tensor ring decomposition framework. This term explores the group structure of the spatial difference images along the spectral dimension.

- (3) A symmetric alternating direction method of multipliers is employed to solve the low-rank tensor ring decomposition model with weighted group sparsity regularization. To enhance the efficiency of this method, a proximity term is incorporated. Numerical experiments show that this approach outperforms other commonly used methods in both quantitative evaluation and visual comparison.

The structure of this paper is as follows. Several notations are presented, and the tensor ring is defined in Section 2. Section 3 proposes a hyperspectral image denoising model based on weighted group sparse regularized tensor ring decomposition and presents the model solving method. In Section 4, we present the experimental results for the simulated data and discuss the parametric analysis and convergence analysis. We summarize the proposed method in Section 5.

2. Notations and Tensor Ring

This section describes the notations used throughout this paper and introduces the tensor ring approach presented in [20].

2.1. Notations

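Following the nomenclature in [27], we summarize the notations used in this paper as follows. $x$ is a scalar, $\mathbf{x}$ is a vector, $\mathbf{X}$ is a matrix and $\mathcal{X}$ is a tensor. $x_{i_1 i_2 \cdots i_N}$ or $\mathcal{X}(i_1, i_2, \ldots, i_N)$ is the $(i_1, i_2, \ldots, i_N)$th element of $\mathcal{X}$. $\mathcal{X}(:, j, k)$ or $\mathbf{x}_{:jk}$ is the $(j, k)$th column of a third-order tensor $\mathcal{X}$. $\mathcal{X}(i, :, k)$ or $\mathbf{x}_{i:k}$ is the $(i, k)$th row of a third-order tensor $\mathcal{X}$. $\mathcal{X}(i, j, :)$ or $\mathbf{x}_{ij:}$ is the $(i, j)$th tube of a third-order tensor $\mathcal{X}$. $\|\mathcal{X}\|_F$ is the Frobenius norm. $\|\mathcal{X}\|_1$ is the $\ell_1$-norm. $\mathbf{X}_{(k)}$ is the mode-$k$ unfolding of $\mathcal{X}$. $\langle \mathcal{X}, \mathcal{Y} \rangle$ is the inner product. ⨂ is the Kronecker product. ⨀ is componentwise multiplication. $\times_k$ is the mode-$k$ tensor matrix product.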
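As a minimal illustration of these notations, the following NumPy sketch computes a mode-k unfolding, the inner product and the Frobenius norm of a small tensor. It assumes the column ordering in which earlier modes vary fastest; the helper names `unfold`, `inner` and `frobenius_norm` are hypothetical and are not taken from the authors' code.

```python
import numpy as np

def unfold(X, k):
    """Mode-k unfolding (zero-based k); earlier modes vary fastest (assumed ordering)."""
    # Move mode k to the front, then flatten the remaining modes in Fortran order.
    return np.reshape(np.moveaxis(X, k, 0), (X.shape[k], -1), order="F")

def inner(X, Y):
    """Inner product of two tensors of the same size."""
    return float(np.sum(X * Y))

def frobenius_norm(X):
    """Frobenius norm, i.e. the square root of <X, X>."""
    return float(np.sqrt(inner(X, X)))

# Small example tensor of size 2 x 3 x 4.
X = np.arange(24, dtype=float).reshape(2, 3, 4)
X1 = unfold(X, 1)  # matrix of size 3 x 8
```

Under this ordering, the entry `X[i0, i1, i2]` is found in row `i1` and column `i0 + 2 * i2` of `X1`, and the unfolding leaves the Frobenius norm unchanged.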

To improve readability, the abbreviations used in this article are summarized in the Abbreviations section.

2.2. Tensor Ring

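In this section, we present the tensor ring and its related definitions. The tensor ring structure is an effective form of decomposition in comparison to other types of tensor decomposition. TR decomposition represents a higher-order tensor as a sequence of cyclically multiplied third-order tensors. The $N$th-order tensor is denoted as $\mathcal{X} \in \mathbb{R}^{I_1 \times I_2 \times \cdots \times I_N}$. In the tensor ring representation, $\mathcal{X}$ is decomposed into a series of latent tensors $\mathcal{G}^{(k)} \in \mathbb{R}^{r_k \times I_k \times r_{k+1}}$, $k = 1, \ldots, N$:

$$\mathcal{X}(i_1, i_2, \ldots, i_N) = \mathrm{Tr}\left\{\mathbf{G}^{(1)}(i_1)\,\mathbf{G}^{(2)}(i_2) \cdots \mathbf{G}^{(N)}(i_N)\right\}, \tag{1}$$

where $\mathcal{X}(i_1, i_2, \ldots, i_N)$ is the $(i_1, i_2, \ldots, i_N)$th element of the tensor. $\mathbf{G}^{(k)}(i_k)$ represents the $i_k$th lateral slice matrix of the latent tensor $\mathcal{G}^{(k)}$. It has a size of $r_k \times r_{k+1}$. Any two adjacent latent tensors $\mathcal{G}^{(k)}$ and $\mathcal{G}^{(k+1)}$ have the equivalent dimension $r_{k+1}$ on their corresponding modes. The last latent tensor $\mathcal{G}^{(N)}$ has a size of $r_N \times I_N \times r_1$, i.e., $r_{N+1} = r_1$, which ensures that the product of these matrices is a square matrix. The latent tensor $\mathcal{G}^{(k)}$ is also called the $k$th core. The sizes of the cores, $r_1, r_2, \ldots, r_N$, are known as the TR ranks. According to (1), the trace of a sequential product of matrices is equivalent to $\mathcal{X}(i_1, i_2, \ldots, i_N)$. In addition, (1) can be rewritten as follows:

$$\mathcal{X}(i_1, i_2, \ldots, i_N) = \sum_{\alpha_1, \ldots, \alpha_N = 1}^{r_1, \ldots, r_N} \prod_{k=1}^{N} \mathcal{G}^{(k)}(\alpha_k, i_k, \alpha_{k+1}), \tag{2}$$

where $k$ represents the tensor modes, $i_k$ represents the data dimension index and $\alpha_k$ represents the latent dimension index, with $\alpha_{N+1} = \alpha_1$. For $k = 1, \ldots, N$, $1 \le \alpha_k \le r_k$ and $1 \le i_k \le I_k$, Equation (2) can also be written as follows:

$$\mathcal{X} = \sum_{\alpha_1, \ldots, \alpha_N = 1}^{r_1, \ldots, r_N} \mathbf{g}^{(1)}(\alpha_1, \alpha_2) \circ \mathbf{g}^{(2)}(\alpha_2, \alpha_3) \circ \cdots \circ \mathbf{g}^{(N)}(\alpha_N, \alpha_1), \tag{3}$$

where ‘∘’ is the outer product of vectors. $\mathbf{g}^{(k)}(\alpha_k, \alpha_{k+1})$ is the mode-2 fiber of tensor $\mathcal{G}^{(k)}$.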
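The trace form of the tensor ring can be checked numerically. The sketch below is only an illustration, not the authors' implementation: it stores each core as an array of size r_k × I_k × r_{k+1}, contracts the shared rank indices to rebuild the full tensor, and compares one entry against the trace of the product of the corresponding lateral slices. The function names `tr_element` and `tr_to_full`, and the example sizes and ranks, are hypothetical.

```python
import numpy as np

def tr_element(cores, index):
    """One entry of the tensor as the trace of a product of lateral slices."""
    prod = np.eye(cores[0].shape[0])
    for core, i in zip(cores, index):
        prod = prod @ core[:, i, :]  # slice of size r_k x r_{k+1}
    return np.trace(prod)

def tr_to_full(cores):
    """Contract all cores along the shared rank indices and close the ring."""
    full = cores[0]  # size r_1 x I_1 x r_2
    for core in cores[1:]:
        # Contract the trailing rank index of `full` with the leading one of `core`.
        full = np.tensordot(full, core, axes=([full.ndim - 1], [0]))
    # `full` now has size r_1 x I_1 x ... x I_N x r_1; the trace closes the ring.
    return np.trace(full, axis1=0, axis2=full.ndim - 1)

rng = np.random.default_rng(0)
dims, ranks = (4, 5, 6), (2, 3, 4)  # illustrative sizes and TR ranks
cores = [rng.standard_normal((ranks[k], dims[k], ranks[(k + 1) % 3]))
         for k in range(3)]
X = tr_to_full(cores)  # full tensor of size 4 x 5 x 6
```

Here `X[1, 2, 3]` agrees with `tr_element(cores, (1, 2, 3))` up to floating-point error, which is the equivalence between the trace form and the index form of the decomposition.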

Figure 1 graphically represents the tensor ring using a linear tensor network. Every node represents a tensor, whose order is determined by the number of its edges. The number on each edge indicates the size of the corresponding mode. Two tensors connected on a specific mode are contracted along that mode, which is also known as the multilinear product operator. For a more comprehensive explanation, see [20].

Figure 1.

A graphical representation of tensor ring decomposition.

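Definition 1.

(Multilinear Product). For two adjacent cores of TR decomposition, $\mathcal{G}^{(k)}$ and $\mathcal{G}^{(k+1)}$, $\mathcal{G}^{(k,k+1)} \in \mathbb{R}^{r_k \times I_k I_{k+1} \times r_{k+2}}$ is the multilinear product of the two cores:

$$\mathbf{G}^{(k,k+1)}\big((j_k - 1) I_k + i_k\big) = \mathbf{G}^{(k)}(i_k)\,\mathbf{G}^{(k+1)}(j_k), \tag{4}$$

where $i_k = 1, \ldots, I_k$ and $j_k = 1, \ldots, I_{k+1}$.

According to Definition 1, the tensor $\mathcal{X}$ can be expressed as the multilinear product of a series of third-order core tensors, known as TR decomposition, i.e.,

$$\mathcal{X} = \Phi([\mathcal{G}]), \tag{5}$$

where $\Phi$ is the reconfiguration operator of TR and $[\mathcal{G}] = \{\mathcal{G}^{(1)}, \mathcal{G}^{(2)}, \ldots, \mathcal{G}^{(N)}\}$.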
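The multilinear product of two adjacent cores can be sketched as follows. The example assumes that the merged middle index runs over the first core's mode fastest, i.e. the zero-based merged index is j * I_k + i; the function name `merge_cores` and the example sizes are hypothetical.

```python
import numpy as np

def merge_cores(Gk, Gk1):
    """Multilinear product of two adjacent TR cores.

    Gk has size r_k x I_k x r_{k+1}; Gk1 has size r_{k+1} x I_{k+1} x r_{k+2}.
    The result has size r_k x (I_k * I_{k+1}) x r_{k+2}.
    """
    rk, Ik, _ = Gk.shape
    _, Ik1, rk2 = Gk1.shape
    # merged[a, i, j, c] = sum_b Gk[a, i, b] * Gk1[b, j, c]
    merged = np.einsum("aib,bjc->aijc", Gk, Gk1)
    # Flatten (i, j) so that i varies fastest: merged index = j * I_k + i.
    return merged.transpose(0, 2, 1, 3).reshape(rk, Ik1 * Ik, rk2)

rng = np.random.default_rng(1)
G1 = rng.standard_normal((2, 4, 3))
G2 = rng.standard_normal((3, 5, 2))
G12 = merge_cores(G1, G2)  # size 2 x 20 x 2
```

Each lateral slice `G12[:, j * 4 + i, :]` equals the matrix product `G1[:, i, :] @ G2[:, j, :]`, so merging all cores in sequence and taking the trace recovers the full tensor.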

3. Proposed Method

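Assuming that the noise components are independent, we consider the following degradation model:

$$\mathcal{Y} = \mathcal{X} + \mathcal{N} + \mathcal{S}, \tag{6}$$

where $\mathcal{Y} \in \mathbb{R}^{h \times w \times b}$ is the observed hyperspectral image; $\mathcal{X}$ is the clean hyperspectral image; $\mathcal{N}$ is Gaussian noise; $\mathcal{S}$ is sparse noise; $h \times w$ is the spatial size of the hyperspectral image; and $b$ is the number of spectral bands.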

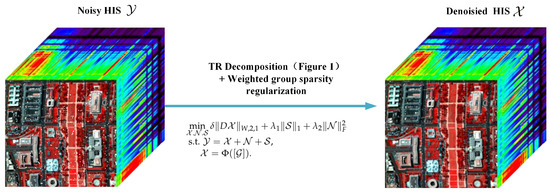

Based on this degradation model, we propose LRTRDGS, which recovers the clean image under a tensor ring constraint and preserves fine details through group sparse regularization. The framework of the proposed LRTRDGS method is depicted in Figure 2. The LRTRDGS model is represented as follows:

where $D$ is composed of $D_x$ and $D_y$, which are two differential operators in the two spatial dimensions. The weighted $\ell_{2,1}$-norm of $D\mathcal{X}$ is as follows:

Figure 2.

Framework of the proposed HSI denoising method.
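To make the group sparsity regularizer concrete, the following sketch evaluates a weighted group norm of the spatial difference images: each spectral tube of the horizontal and vertical difference images forms one group, and the group ℓ2 norms are summed with spatial weights. Periodic boundary conditions, the weight arrays `Wx` and `Wy`, and the function names are assumptions made for illustration only.

```python
import numpy as np

def spatial_differences(X):
    """Forward differences along the two spatial axes (periodic boundary assumed)."""
    dx = np.roll(X, -1, axis=0) - X
    dy = np.roll(X, -1, axis=1) - X
    return dx, dy

def weighted_group_norm(X, Wx, Wy):
    """Weighted sum of the l2 norms of the spectral tubes of the difference images."""
    dx, dy = spatial_differences(X)
    gx = np.linalg.norm(dx, axis=2)  # one l2 norm per spatial location
    gy = np.linalg.norm(dy, axis=2)
    return float(np.sum(Wx * gx) + np.sum(Wy * gy))

# Toy image of size 3 x 3 with 4 bands and a single bright pixel.
X = np.zeros((3, 3, 4))
X[0, 0, :] = 1.0
W = np.ones((3, 3))
value = weighted_group_norm(X, W, W)
```

For a constant image the value is zero, since every difference tube vanishes. In the toy example each difference image has two non-zero tubes of ℓ2 norm 2, so with unit weights `value` is 8.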

To solve the LRTRDGS model (7), we use a symmetric alternating direction method of multipliers (ADMM) that incorporates proximity terms. By introducing two auxiliary variables, model (7) can be rewritten as follows:

Under the constraint of tensor ring decomposition , this problem has the following augmented Lagrangian function:

where , and are the Lagrange multipliers and is a positive scalar. The variables in the augmented Lagrangian function (9) are decoupled, so we optimize it with respect to one variable at a time while keeping the others fixed. In addition, we use the symmetric ADMM with an added proximity term to improve the convergence speed of the algorithm. The specific algorithm framework is as follows:

In the th iteration, we update the variables involved in model (10) as follows:

(1) Subproblem :

(11) can be expressed as

Through tensor ring decomposition, we can easily obtain , and can be updated as follows:

(2) Subproblem :

This problem can be optimized with the following linear system:

where $D^{*}$ is the adjoint operator of $D$. The matrix corresponding to the operator $D^{*}D$ has a block-circulant structure, which can be diagonalized by the 3-D fast Fourier transform (FFT) matrix. Thus, we have

where $\mathcal{F}$ and $\mathcal{F}^{-1}$ denote the 3-D fast Fourier transform and its inverse, respectively, $|\cdot|^2$ denotes the element-wise square, and the division is performed element-wise.
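The FFT-based solution of such a linear system can be sketched as follows. The example assumes a system of the generic form (a·I + b·D*D)X = R with periodic forward differences along the two spatial axes. The scalars `a` and `b`, the right-hand side `R` and all function names are placeholders, and the exact coefficients of the subproblem in this paper may differ.

```python
import numpy as np

def diff(X, axis):
    """Periodic forward difference along one axis."""
    return np.roll(X, -1, axis=axis) - X

def diff_adjoint(Y, axis):
    """Adjoint of the periodic forward difference."""
    return np.roll(Y, 1, axis=axis) - Y

def dtd_eigenvalues(shape):
    """Eigenvalues of D*D under the 3-D FFT, from the response to a unit impulse."""
    delta = np.zeros(shape)
    delta[0, 0, 0] = 1.0
    eig = np.zeros(shape)
    for axis in (0, 1):  # the two spatial axes
        eig += np.abs(np.fft.fftn(diff(delta, axis))) ** 2
    return eig

def solve_fft(R, a, b):
    """Solve (a*I + b*D*D) X = R by element-wise division in the Fourier domain."""
    denom = a + b * dtd_eigenvalues(R.shape)
    return np.real(np.fft.ifftn(np.fft.fftn(R) / denom))

# Check on a random tensor: build R from a known X and recover it.
rng = np.random.default_rng(2)
X_true = rng.standard_normal((6, 7, 5))
a, b = 1.0, 0.5
R = a * X_true + b * sum(diff_adjoint(diff(X_true, ax), ax) for ax in (0, 1))
X_hat = solve_fft(R, a, b)
```

Because the difference operators are circular convolutions, the system matrix is diagonal in the Fourier domain, and the solve reduces to a forward transform, an element-wise division and an inverse transform.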

(3) Subproblem :

Using the soft threshold operator,

where , and , we have:

(4) Subproblem :

By applying the aforementioned soft threshold operator, one can acquire the solution to the described problem:

(5) Subproblem :

By using a simple calculation, we can find the following solution:

After solving these subproblems, we summarize those steps in Algorithm 1.

| Algorithm 1 ADMM for HSI Denoising. |

|
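The thresholding steps used in the subproblems can be illustrated with a short sketch. The element-wise soft threshold below is the standard operator for an ℓ1 term. The group version shrinks each spectral tube as a whole and is shown here as the usual counterpart for a weighted ℓ2,1 term. The threshold `tau`, the weight array `W` and the function names are hypothetical.

```python
import numpy as np

def soft_threshold(x, tau):
    """Element-wise soft threshold: sign(x) * max(|x| - tau, 0)."""
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)

def group_shrink(V, W, tau, eps=1e-12):
    """Shrink each spectral tube V[i, j, :] by W[i, j] * tau in l2 norm."""
    norms = np.linalg.norm(V, axis=2, keepdims=True)
    scale = np.maximum(norms - tau * W[..., None], 0.0) / (norms + eps)
    return scale * V

s = soft_threshold(np.array([-2.0, 0.5, 3.0]), 1.0)

V = np.zeros((1, 2, 2))
V[0, 0] = [3.0, 4.0]   # tube with l2 norm 5
V[0, 1] = [0.3, 0.4]   # tube with l2 norm 0.5
G = group_shrink(V, np.ones((1, 2)), 1.0)
```

In the example, `s` is `[-1, 0, 2]`. The first tube of `V` is scaled by 4/5 and the second is set to zero, which shows how group shrinkage removes a whole spectral tube at once.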

4. Experimental Results

In this section, we present simulation results to assess the recovery performance of the proposed LRTRDGS method. The first dataset is the Washington DC Mall dataset, an aerial hyperspectral image of Washington, DC, acquired by the HYDICE sensor, with a size of (256, 256, 160). The second dataset is the Pavia City Center dataset, an image of the city of Pavia in northern Italy captured by the Reflective Optics System Imaging Spectrometer (ROSIS), with a size of (200, 200, 80) [28]. For comparison, we implement four typical HSI denoising methods, namely, total variation regularized low-rank matrix factorization (LRTV) [25], patchwise low-rank matrix approximation (LRMR) [29], weighted group sparsity-regularized low-rank tensor decomposition (LRTDGS) [16] and a spatial–spectral total variation regularized local low-rank tensor recovery model (TLR-SSTV) [30]. The parameters of the comparison methods follow the referenced articles or the code released by their authors.

In this paper, three quantitative image quality indexes are used to evaluate the performance of the comparison methods: the peak signal-to-noise ratio based on the characteristics of the human visual system (PSNR-HVS), the mean structural similarity index measure (MSSIM) and the erreur relative globale adimensionnelle de synthèse (ERGAS). PSNR-HVS is a full-reference quality metric that takes the characteristics of the human visual system into account. MSSIM assesses structural consistency to determine the similarity between the original hyperspectral image and the denoised hyperspectral image. ERGAS evaluates the fidelity of the denoised hyperspectral image by combining the MSE of each band with appropriate weights. The three metrics are defined as follows:

The PSNR-HVS can be adapted to different block sizes and is not computationally intensive. In this context, the height and width of the image are denoted as I and J, respectively, and an image block with the upper left corner at has as its DCT coefficient. The DCT coefficient of the corresponding block in the original image is denoted as . The JPEG quantization table specified in the JPEG standard is represented as , and K is calculated as [31].

where the constant represents , while the value is equivalent to the value. The standard deviations of x and y are symbolized as and correspondingly. The initial image and the altered image are denoted as X and Y, respectively. The composition of the local window corresponding to the jth instance is indicated by and , whereas the total number of local windows is denoted as .

where and represent the reference image and recovered image of the ith band, respectively. P represents the number of bands.
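As an illustration of the band-wise fidelity index, the following sketch computes ERGAS in one commonly used form, 100 times the square root of the band-averaged ratio between the MSE and the squared mean of the reference band. This form, which omits any resolution-ratio factor, is an assumption and may differ from the exact definition used in this paper. The function name `ergas` is hypothetical.

```python
import numpy as np

def ergas(reference, recovered):
    """ERGAS for images of size h x w x P (assumed form, no resolution-ratio factor)."""
    P = reference.shape[2]
    ref = reference.reshape(-1, P)
    rec = recovered.reshape(-1, P)
    mse = np.mean((ref - rec) ** 2, axis=0)   # MSE of each band
    mean_sq = np.mean(ref, axis=0) ** 2       # squared mean of each reference band
    return float(100.0 * np.sqrt(np.mean(mse / mean_sq)))

reference = np.full((2, 2, 3), 0.5)
recovered = reference + 0.05
score = ergas(reference, recovered)
```

Lower values indicate better recovery, and a perfect reconstruction gives zero. In the toy example every band has an MSE of 0.0025 and a squared mean of 0.25, so `score` is 10.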

In the experiments, all reflectance values of the hyperspectral images are normalized to [0,1] for numerical calculation and visualization. To verify the performance of the method under different noise conditions, we add simulated noise to the HSI data. Three types of noise are considered: Gaussian noise, impulse noise and dead-line noise. Dead-line noise is caused by the uneven distribution of the sensor dark current and dark voltage, which produces dead lines in the image and degrades image quality. We consider five noise cases:

Case 1 (i.i.d. Gaussian Noise): Every band is corrupted by independent and identically distributed (i.i.d.) zero-mean Gaussian noise.

Case 2 (i.i.d. Gaussian Noise + Dead-Line Noise): In addition to the noise of case 1, bands 60–80 are corrupted by dead-line noise. The width of each stripe is chosen randomly from 1 to 3, and the number of stripes is chosen randomly from 3 to 10.

Case 3 (i.i.d. Gaussian Noise + i.i.d. Impulse Noise): All bands are corrupted by i.i.d. zero-mean Gaussian noise and by i.i.d. impulse noise.

Case 4 (i.i.d. Gaussian Noise + i.i.d. Impulse Noise + Dead-Line Noise): In addition to the noise of case 3, bands 60–80 are corrupted by dead-line noise. The width of each stripe is chosen randomly from 1 to 3, and the number of stripes is chosen randomly from 3 to 10.

Case 5 (non-i.i.d. Gaussian Noise + non-i.i.d. Impulse Noise + Dead-Line Noise): Every band is corrupted by non-i.i.d. zero-mean Gaussian noise and non-i.i.d. impulse noise. In addition, bands 60–80 are corrupted by dead-line noise. The width of each stripe is chosen randomly from 1 to 3, and the number of stripes is chosen randomly from 3 to 10.
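The noise cases can be simulated along the following lines. This is a sketch under stated assumptions rather than the authors' experimental code: impulse noise is modeled as salt-and-pepper noise, dead lines are zero-valued columns, and the noise levels `sigma` and `ratio` are placeholders because the levels used in the paper are not reproduced here.

```python
import numpy as np

def add_gaussian(X, sigma, rng):
    """Zero-mean Gaussian noise; `sigma` may be a scalar or one value per band."""
    return X + sigma * rng.standard_normal(X.shape)

def add_impulse(X, ratio, rng):
    """Salt-and-pepper noise: a fraction `ratio` of entries is set to 0 or 1."""
    Y = X.copy()
    mask = rng.random(X.shape) < ratio
    Y[mask] = rng.integers(0, 2, size=int(mask.sum())).astype(X.dtype)
    return Y

def add_dead_lines(X, bands, rng):
    """Zero out 3 to 10 stripes of width 1 to 3 in each listed band (zero-based)."""
    Y = X.copy()
    n_cols = X.shape[1]
    for b in bands:
        for _ in range(int(rng.integers(3, 11))):      # number of stripes: 3..10
            width = int(rng.integers(1, 4))            # stripe width: 1..3
            start = int(rng.integers(0, n_cols - width + 1))
            Y[:, start:start + width, b] = 0.0
    return Y

# A case-4-like observation on a synthetic image normalized to [0, 1].
rng = np.random.default_rng(3)
clean = np.full((32, 32, 80), 0.5)
noisy = add_gaussian(clean, sigma=0.1, rng=rng)           # placeholder noise level
noisy = add_impulse(noisy, ratio=0.1, rng=rng)            # placeholder impulse ratio
noisy = add_dead_lines(noisy, bands=range(59, 80), rng=rng)  # bands 60-80, one-based
```

Passing an array with one standard deviation per band as `sigma` gives band-dependent Gaussian noise of the kind described in case 5.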

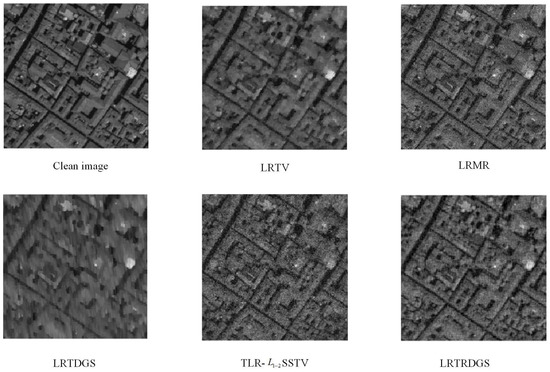

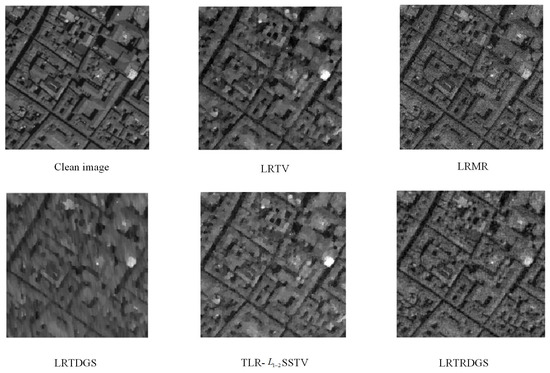

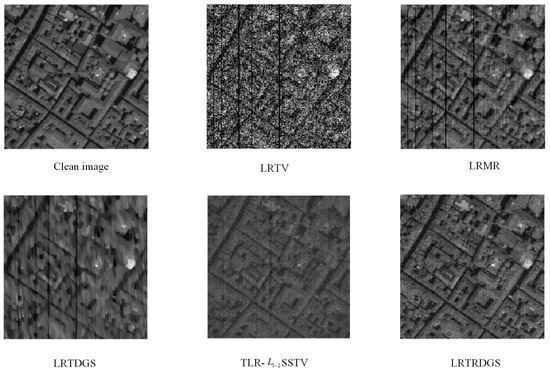

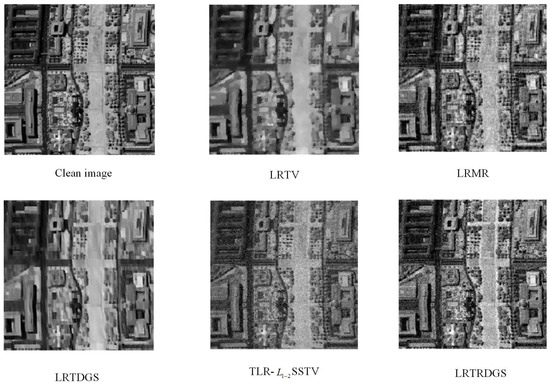

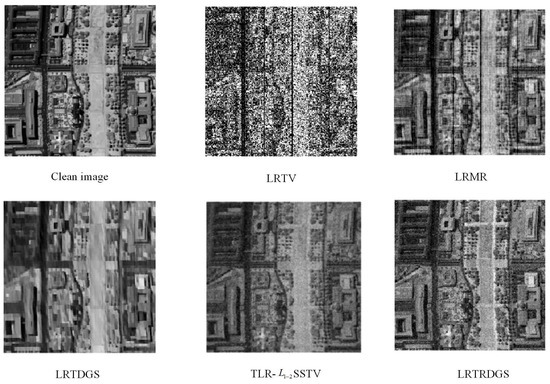

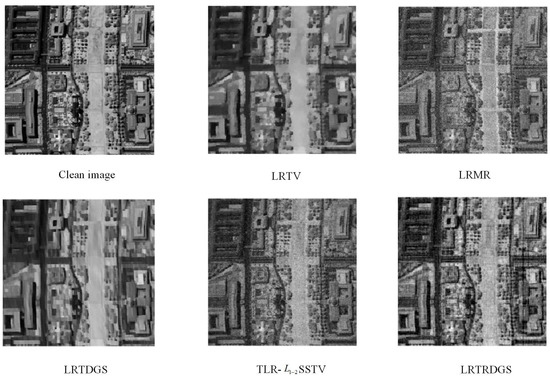

We now report the quantitative results of the comparison methods. Table 1 lists the results of the five HSI denoising methods on the Pavia City Center dataset, and Table 2 lists the results of the same five methods on the Washington DC Mall dataset. The best results are highlighted in bold in the tables. In the tables, the Gaussian noise is denoted as , while the impulse noise is represented as . For a visual comparison of the HSI denoising methods, the denoising results at band 68 of the Pavia City Center dataset are shown in Figure 3, Figure 4 and Figure 5, and the denoising results at band 61 of the Washington DC Mall dataset are shown in Figure 6, Figure 7 and Figure 8.

Table 1.

Results of removing noise from the Pavia City Center dataset in different cases.

Table 2.

Results of removing noise from the Washington DC Mall dataset in different cases.

Figure 3.

Denoising results of case 3-1 on the Pavia City Center dataset.

Figure 4.

Denoising results of case 4-2 on the Pavia City Center dataset.

Figure 5.

Denoising results of case 5-1 on the Pavia City Center dataset.

Figure 6.

Denoising results of case 1-2 on the Washington DC Mall dataset.

Figure 7.

Denoising results of case 4-1 on the Washington DC Mall dataset.

Figure 8.

Denoising results of case 5-2 on the Washington DC Mall dataset.

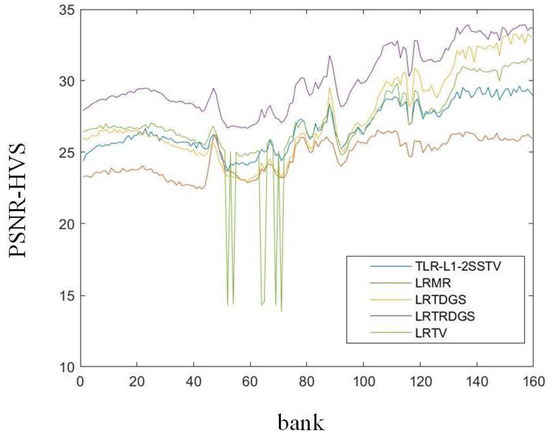

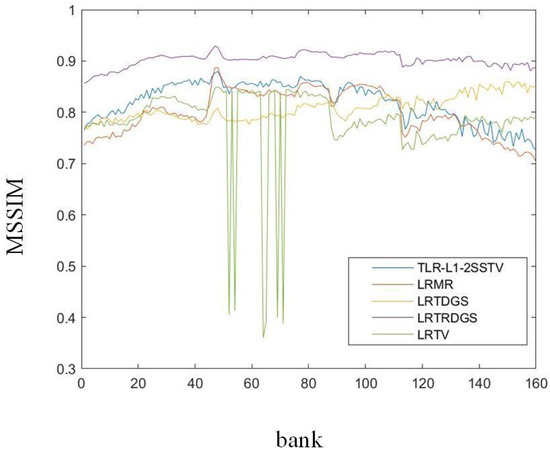

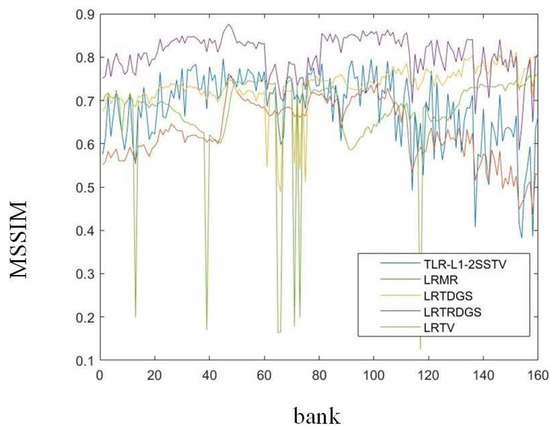

In addition, Figure 3 and Figure 6 show the denoising results of the test HSIs for case 3 and case 1, respectively. The proposed LRTRDGS method achieves better visual quality than the alternative methods. It removes both Gaussian noise and impulse noise while maintaining the fundamental structure of the HSI. LRMR and TLR-SSTV produce results with some residual noise. LRTV and LRTDGS can remove the noise, but some details are lost in the process. For cases 2, 4 and 5, the focus is on evaluating the removal of dead-line noise. Figure 4 and Figure 5 show the denoising results on the Pavia City Center dataset, and Figure 7 and Figure 8 show the denoising results on the Washington DC Mall dataset. The proposed LRTRDGS method effectively removes the dead-line noise while preserving the underlying HSI details, whereas the results of LRTV and LRTDGS still show a small amount of dead-line noise. Additionally, we present the values of PSNR-HVS and MSSIM for each band of the Washington DC Mall dataset in case 3-1 (Figure 9 and Figure 10) and case 5-1 (Figure 11 and Figure 12). The results show that the proposed LRTRDGS method outperforms the other methods in terms of both PSNR-HVS and MSSIM. On both hyperspectral images, LRTRDGS achieves better results than the four comparison methods. These findings suggest that tensor ring decomposition plays a positive role in denoising hyperspectral images.

Figure 9.

PSNR-HVS of the different methods for each band of case 3-1 on the Washington DC Mall dataset.

Figure 10.

MSSIM of the different methods for each band of case 3-1 on the Washington DC Mall dataset.

Figure 11.

PSNR-HVS of the different methods for each band of case 5-1 on the Washington DC Mall dataset.

Figure 12.

MSSIM of the different methods for each band of case 5-1 on the Washington DC Mall dataset.

Our proposed LRTRDGS does not have an advantage in CPU time, because the tensor ring structure is somewhat more complex and results in longer computation times. However, owing to the use of tensor rings, our proposed method achieves better results in both the quantitative evaluation (Table 1 and Table 2) and the visual comparison (Figure 6, Figure 7, Figure 8, Figure 9, Figure 10, Figure 11 and Figure 12).

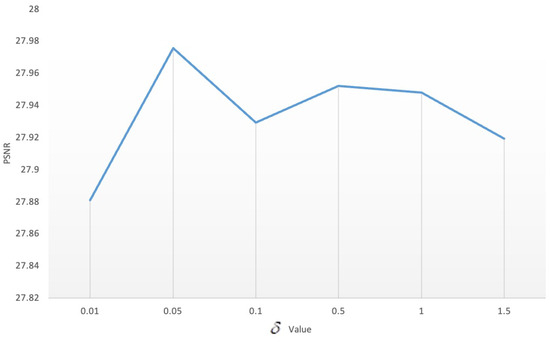

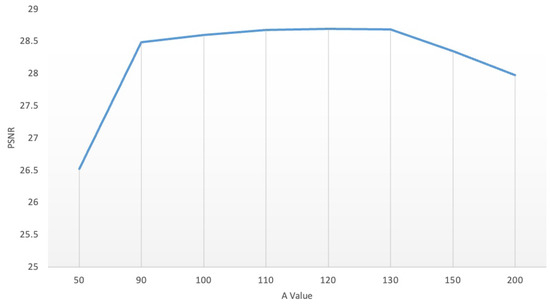

In the LRTRDGS method, the selection of parameters determines the denoising results of the hyperspectral images. The parameters include the total variation regularization parameter , sparse noise regularization parameter , Gaussian noise regularization parameter , the rank of the tensor ring , adjacent operator parameter and other parameters and . Based on the simulation data of case 3 in the Washington DC Mall dataset, the influence of the parameters is discussed.

The influence of the total variation regularization parameter is shown below. Figure 13 shows the PSNR value for values of 0.01, 0.05, 0.1, 0.5, 1 and 1.5. The results show that has the best effect.

Figure 13.

Influence of parameter .

Next, we examine the influence of the sparse noise regularization parameter , which controls the sparsity of the sparse noise. We set , where M is the height of each hyperspectral band and N is its width. Figure 14 shows the PSNR values when A is set to 1, 2, 5, 8, 10, 15, 20, 25, 30, 40 and 50. The results show that achieves the best PSNR value.

Figure 14.

Influence of parameter A.

Here, we consider the influence of the Gaussian noise regularization parameter . This parameter limits the magnitude of the Gaussian noise. Figure 15 shows the PSNR values when is set to 100, 200, 210, 220, 230, 240, 250, 260, 270, 280, 290 and 300. The results show that yields the best PSNR value.

Figure 15.

Influence of parameter .

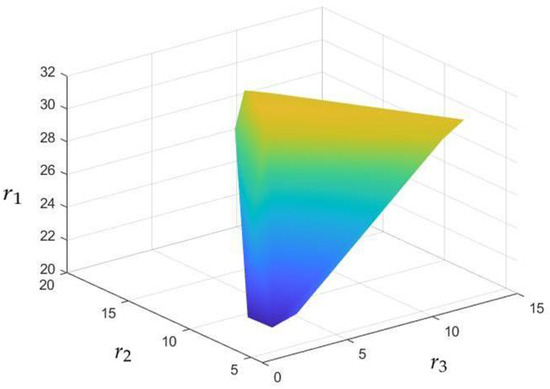

The influence of the rank of the tensor ring , which is an important parameter to control the correlation of tensors, is discussed. In order to streamline the determination of the tensor ring’s rank, we make the assumption that the ranks of the second and third dimensions are identical, denoted as . The PSNR values vary under different tensor ring ranks, as shown in Figure 16. In order to achieve a balance between the robustness of the tensor ring rank and the effectiveness of the denoising results, we set the tensor ring rank differently in different noise environments. For the previously discussed case, we set the tensor ring to .

Figure 16.

Influence of parameter r.

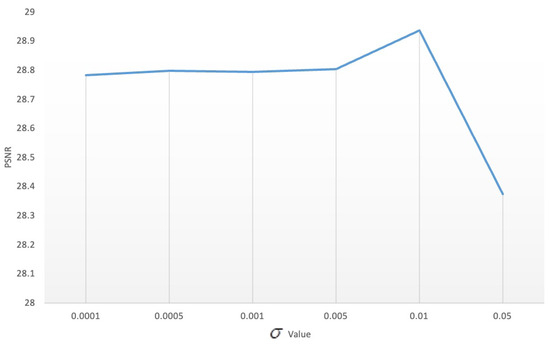

We consider the influence of the proximity operator parameter , which is used to limit the step size of the proximity operator. Here, we select the values 0.0001, 0.0005, 0.001, 0.005, 0.01 and 0.05 as the test data. Figure 17 shows the test results. Finally, is selected as the optimal value.

Figure 17.

Influence of parameter .

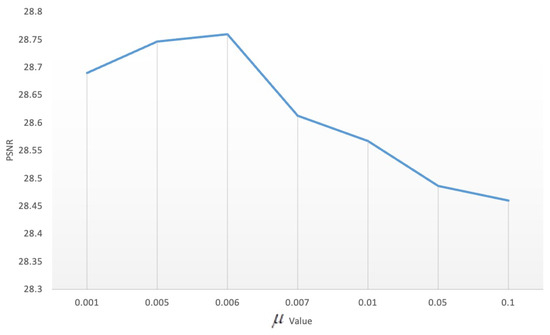

Regarding the influence of parameter , Figure 18 shows the recovery results for the values 0.001, 0.005, 0.006, 0.007, 0.01, 0.05 and 0.1. The results show that has the best effect.

Figure 18.

Influence of parameter .

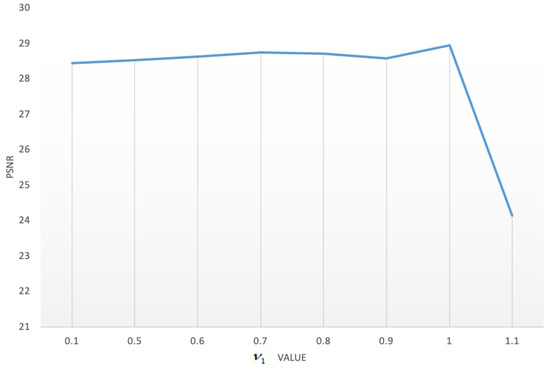

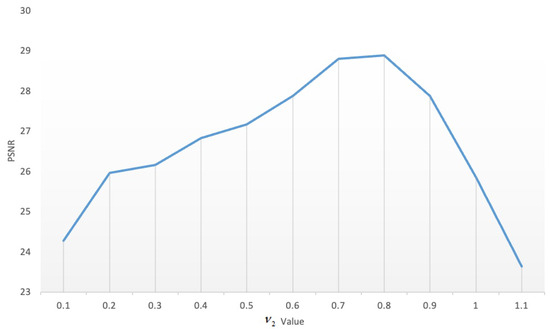

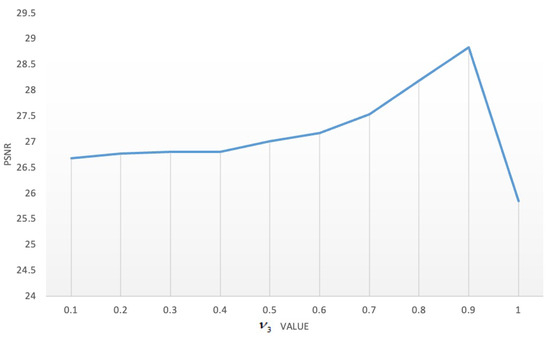

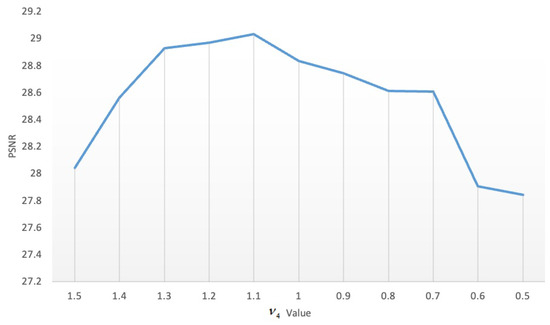

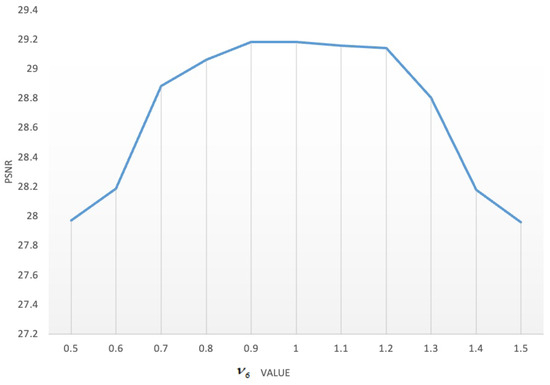

Regarding the influence of the parameters and , Figure 19, Figure 20, Figure 21, Figure 22, Figure 23 and Figure 24 show the data analyzed. Finally, we chose and as the best results.

Figure 19.

Influence of parameter .

Figure 20.

Influence of parameter .

Figure 21.

Influence of parameter .

Figure 22.

Influence of parameters .

Figure 23.

Influence of parameter .

Figure 24.

Influence of parameter .

Because the parameters , , and others are solved using simple algebraic calculations, our main focus is on the time spent on and . is the size of the hyperspectral image affected by noise. A tensor ring representation is used to estimate for updating . Assuming that the size is and the TR rank is set as , there is a cost of O( + + ) to in each iteration. refers to the computational complexity of the FFT, where is the size of the data. Consequently, the cost of updating the subproblem of is denoted as . According to the above, the proposed algorithm has a complexity of O( + + + h w b log(h w b)).

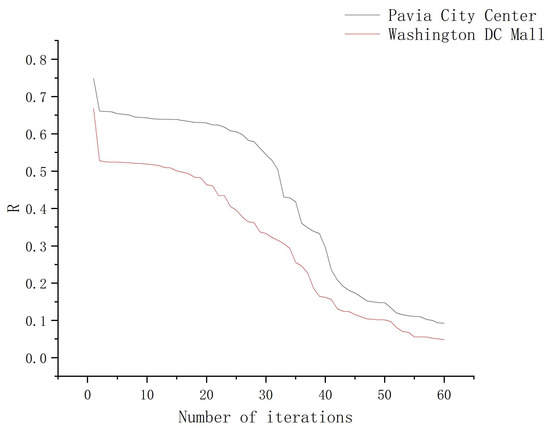

Finally, numerical experiments are conducted to verify the convergence of the proposed algorithm. Figure 25 shows how R changes with the number of LRTRDGS iterations for the hyperspectral reconstructions, where . As the number of iterations increases, R approaches zero.

Figure 25.

Change in the value R for the hyperspectral reconstructed images versus the number of iterations.

5. Conclusions

A weighted group sparsity-regularized tensor ring decomposition method for hyperspectral image restoration is proposed. Tensor ring decomposition is used to model the global spatial–spectral correlation of hyperspectral images. Using weighted group sparsity to constrain the spatial difference images along the spectral dimension is more reasonable than using total variation regularization. The experimental results demonstrate the effectiveness of the proposed LRTRDGS method, which mitigates noise while preserving the fine textural features of HSI. To further exploit the spatial domain of HSI, future work could consider representation-based subspace low-rank learning methods that provide more accurate regularization of nonlocal self-similarity. Additionally, it is worth investigating low-rank image compression techniques.

Author Contributions

Conceptualization, Y.L.; Methodology, S.W., Y.L. and B.Z.; Software, S.W.; Resources, B.Z.; Writing—Review and Editing, S.W. and Z.Z.; Supervision, Z.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the National Natural Science Foundation of China (61967004, 11901137, 11961011, 72061007 and 62171147), the Guangxi Key Laboratory of Automatic Detection Technology and Instruments (YQ23105, YQ20113 and YQ20114) and the Guangxi Key Laboratory of Cryptography and Information Security (GCIS201621 and GCIS201927).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used to support the findings of this study are available from the corresponding author upon request.

Conflicts of Interest

The authors declare that they have no conflict of interest.

Abbreviations

| Abbreviation | Name |
| --- | --- |
| FLIS | Flying Laboratory of Imaging Systems |
| HSI | Hyperspectral Imaging |
| CP | Canonical Polyadic |
| t-SVD | Tensor Singular-Value Decomposition |
| LRTDGS | Weighted Group Sparsity-Regularized Low-Rank Tensor Decomposition Model |
| GCS | Global Correlation Across Spectrum |
| NLR-CPTD | Nonlocal Low-Rank Regularized CP Tensor Decomposition Method |
| WSN-LRMA | Weighted Schatten p-Norm Low-Rank Matrix Approximation |
| TR | Tensor Ring |
| TV | Total Variation |
| LRTV | Total Variation Regularized Low-Rank Matrix Decomposition |
| SSTV | Spectral–Spatial Total Variation Regularization |
| LLRSSTV | Spatial–Spectral Total Variation Regularized Local Low-Rank Matrix Recovery Method |
| LRMR | Patchwise Low-Rank Matrix Approximation |
| TLR-SSTV | Spatial–Spectral Total Variation Regularized Local Low-Rank Tensor Recovery Model |
| FFT | Fast Fourier Transform |
| PSNR-HVS | Peak Signal-to-Noise Ratio Based on the Characteristics of the Human Visual System |
| MSSIM | Mean Structural Similarity Index Measure |
| ERGAS | Erreur Relative Globale Adimensionnelle de Synthèse |

References

- Stuart, M.B.; McGonigle, A.J.S.; Willmott, J.R. Hyperspectral Imaging in Environmental Monitoring: A Review of Recent Developments and Technological Advances in Compact Field Deployable Systems. Sensors 2019, 19, 3071. [Google Scholar] [CrossRef]

- Hanuš, J.; Slezák, L.; Fabiánek, T.; Fajmon, L.; Hanousek, T.; Janoutová, R.; Kopkáně, D.; Novotný, J.; Pavelka, K.; Pikl, M.; et al. Flying Laboratory of Imaging Systems: Fusion of Airborne Hyperspectral and Laser Scanning for Ecosystem Research. Remote Sens. 2023, 15, 3130. [Google Scholar] [CrossRef]

- Schodlok, M.C.; Frei1, M.; Segl, K. Implications of new hyperspectral satellites for raw materials exploration. Miner. Econ. 2022, 35, 495–502. [Google Scholar] [CrossRef]

- Avola, G.; Matese, A.; Riggi, E. Precision Agriculture Using Hyperspectral Images. Remote Sens. 2023, 15, 1917.

- Moncholi-Estornell, A.; Cendrero-Mateo, M.P.; Antala, M.; Cogliati, S.; Moreno, J.; Van Wittenberghe, S. Enhancing Solar-Induced Fluorescence Interpretation: Quantifying Fractional Sunlit Vegetation Cover Using Linear Spectral Unmixing. Remote Sens. 2023, 15, 4274.

- Naß, A.; van Gasselt, S. A Cartographic Perspective on the Planetary Geologic Mapping Investigation of Ceres. Remote Sens. 2023, 15, 4209.

- Sharma, S.R.; Singh, B.; Kaur, M. A hybrid encryption model for the hyperspectral images: Application to hyperspectral medical images. Multimed. Tools Appl. 2023.

- Bedini, E. The use of hyperspectral remote sensing for mineral exploration: A review. J. Hyperspectral Remote Sens. 2017, 7, 189–211.

- Haagsma, M.; Hagerty, C.H.; Kroese, D.R.; Selker, J.S. Detection of soil-borne wheat mosaic virus using hyperspectral imaging: From lab to field scans and from hyperspectral to multispectral data. Precis. Agric. 2023, 24, 1030–1048.

- Adjovu, G.E.; Stephen, H.; James, D.; Ahmad, S. Measurement of Total Dissolved Solids and Total Suspended Solids in Water Systems: A Review of the Issues, Conventional, and Remote Sensing Techniques. Remote Sens. 2023, 15, 3534.

- Renard, N.; Bourennane, S.; Blanc-Talon, J. Denoising and dimensionality reduction using multilinear tools for hyperspectral images. IEEE Geosci. Remote Sens. Lett. 2008, 5, 138–142.

- Chen, Y.; Huang, T.Z.; Zhao, X.L. Destriping of multispectral remote sensing image using low-rank tensor decomposition. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 4950–4967.

- Liu, X.; Bourennane, S.; Fossati, C. Denoising of hyperspectral images using the PARAFAC model and statistical performance analysis. IEEE Trans. Geosci. Remote Sens. 2012, 50, 3717–3724.

- Guo, X.; Huang, X.; Zhang, L.; Zhang, L. Hyperspectral image noise reduction based on rank-1 tensor decomposition. ISPRS J. Photogramm. Remote Sens. 2013, 83, 50–63.

- Fan, H.; Li, C.; Guo, Y.; Kuang, G.; Ma, J. Spatial–spectral total variation regularized low-rank tensor decomposition for hyperspectral image denoising. IEEE Trans. Geosci. Remote Sens. 2018, 56, 6196–6213.

- Chen, Y.; He, W.; Yokoya, N.; Huang, T.Z. Hyperspectral Image Restoration Using Weighted Group Sparsity-Regularized Low-Rank Tensor Decomposition. IEEE Trans. Cybern. 2020, 50, 3556–3570.

- Wang, Y.; Peng, J.; Zhao, Q.; Meng, D.; Leung, Y.; Zhao, X.-L. Hyperspectral image restoration via total variation regularized low-rank tensor decomposition. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 1227–1243.

- Xue, J.; Zhao, Y.; Liao, W.; Chan, J.C. Nonlocal low-rank regularized tensor decomposition for hyperspectral image denoising. IEEE Trans. Geosci. Remote Sens. 2019, 57, 5174–5189.

- Xie, Y.; Qu, Y.; Tao, D.; Wu, W.; Yuan, Q.; Zhang, W. Hyperspectral Image Restoration via Iteratively Regularized Weighted Schatten p-Norm Minimization. IEEE Trans. Geosci. Remote Sens. 2016, 54, 4642–4659.

- Zhao, Q.; Zhou, G.; Xie, S.; Zhang, L.; Cichocki, A. Tensor Ring Decomposition. arXiv 2016, arXiv:1606.05535.

- Zhao, Q.; Sugiyama, M.; Yuan, L.; Cichocki, A. Learning Efficient Tensor Representations with Ring-structured Networks. In Proceedings of the ICASSP 2019—2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019.

- Wang, W.; Aggarwal, V.; Aeron, S. Efficient low rank tensor ring completion. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

- He, W.; Yokoya, N.; Yuan, L.; Zhao, Q. Remote Sensing Image Reconstruction Using Tensor Ring Completion and Total Variation. IEEE Trans. Geosci. Remote Sens. 2019, 57, 8998–9009.

- Rudin, L.I.; Osher, S.; Fatemi, E. Nonlinear total variation based noise removal algorithms. Phys. D Nonlinear Phenom. 1992, 60, 259–268.

- He, W.; Zhang, H.; Zhang, L.; Shen, H. Total-variation-regularized low-rank matrix factorization for hyperspectral image restoration. IEEE Trans. Geosci. Remote Sens. 2016, 54, 178–188.

- Wei, H.; Zhang, H.; Shen, H.; Zhang, L. Hyperspectral Image Denoising Using Local Low-Rank Matrix Recovery and Global Spatial–Spectral Total Variation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 1–17.

- Kolda, T.G.; Bader, B.W. Tensor decompositions and applications. SIAM Rev. 2009, 51, 455–500.

- Hyperspectral Images. Available online: https://engineering.purdue.edu/~biehl/MultiSpec/hyperspectral.html (accessed on 3 July 2023).

- Zhang, H.; He, W.; Zhang, L.; Shen, H.; Yuan, Q. Hyperspectral image restoration using low-rank matrix recovery. IEEE Trans. Geosci. Remote Sens. 2014, 52, 4729–4743.

- Zeng, H.; Xie, X.; Cui, H.; Yin, H.; Ning, J. Hyperspectral Image Restoration via Global L1-2 Spatial-Spectral Total Variation Regularized Local Low-Rank Tensor Recovery. IEEE Trans. Geosci. Remote Sens. 2021, 59, 3309–3325.

- Valizadeh, S.; Nasiopoulos, P.; Ward, R. Perceptual rate distortion optimization of 3D–HEVC using PSNR-HVS. Multimed. Tools Appl. 2018, 77, 22985–23008.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).