Abstract

Dogs’ displacement behaviours and some facial expressions have been suggested to function as appeasement signals, reducing the occurrences of aggressive interactions. The present study had the objectives of using naturalistic videos, including their auditory stimuli, to expose a population of dogs to a standardised conflict (threatening dog) and non-conflict (neutral dog) situation and to measure the occurrence of displacement behaviours and facial expressions under the two conditions. Video stimuli were recorded in an ecologically valid situation: two different female pet dogs barking at a stranger dog passing by (threatening behaviour) or panting for thermoregulation (neutral behaviour). Video stimuli were then paired either with their natural sound or an artificial one (pink noise) matching the auditory characteristics. Fifty-six dogs were exposed repeatedly to the threatening and neutral stimuli paired with the natural or artificial sound. Regardless of the paired auditory stimuli, dogs looked significantly more at the threatening than the neutral videos (χ²(56, 1) = 138.867, p < 0.001). They kept their ears forward more in the threatening condition whereas ears were rotated more in the neutral condition. Contrary to the hypotheses, displacement behaviours of sniffing, yawning, blinking, lip-wiping (the tongue wipes the lips from the mouth midpoint to the mouth corner), and nose-licking were expressed more in the neutral than the threatening condition. The dogs tested showed socially relevant cues, suggesting that the experimental paradigm is a promising method to study dogs’ intraspecific communication. Results suggest that displacement behaviours are not used as appeasement signals to interrupt an aggressive encounter but rather in potentially ambiguous contexts where the behaviour of the social partner is difficult to predict.

1. Introduction

In the last twenty years, there has been increasing interest in dog cognition and behaviour within different disciplines, including ethology, comparative cognition and animal welfare science [1,2]. Within these disciplines, dog–human communication has received considerable scientific interest [3,4,5,6], whereas dogs’ intraspecific communication has received less systematic attention from researchers [7].

Domestic dogs are highly sensitive to visual communicative signals, and their species-specific repertoire includes postures and movements of the body and tail, facial cues and displacement behaviours [8]. Movements of the facial regions, i.e., facial expressions, can indicate different affective states in dogs [9] and can be successfully recognised by humans [10]. For example, blinking, nose licking and ear flattening have been associated with frustration, whereas the ears adductor action has been associated with positive anticipation [11,12]. Traditionally, facial expressions such as ear flattening and lip licking have been considered submissive/appeasement signals, used by both wild canids and domestic dogs to appease the receiver and reduce the probability of aggressive behaviour [13]. Recent studies that analysed facial expressions with the DogFACS system (an anatomically based system allowing the objective measurement of facial cues [14]) confirmed that facial expressions such as ear movements and mouth-related actions (i.e., nose licking and lip wiping) depend on the presence of a social audience and are thus possibly used as visual communicative signals (Behavioural Ecology view [15]) by dogs during dog–human [16,17] and dog–dog interactions. In the dog training literature, displacement behaviours, including self-directed behaviours such as lip licking, yawning, scratching and auto-grooming, and environment-directed behaviours such as sniffing the environment and drinking, have been suggested to function as de-escalation/appeasement signals in dog–dog as well as dog–human interactions [18,19] (see [20] for a summary of the classification of displacement behaviours in dogs).

One prediction based on the appeasement function of the displacement behaviours hypothesis is that these behaviours should be exhibited more in conflict-ridden compared to non-conflict-ridden situations. However, the few scientific studies that have been carried out have shown mixed results. At the interspecific level, Firnkes and colleagues [21] investigated whether the display of lip licking was more frequent in dogs when exposed to a human approaching with a highly threatening, mild threatening or friendly attitude as well as different environmental stressors. They found that lip licking was associated with an active submissive attitude in dogs (e.g., ears flattened, tail wagging, crouched posture, greeting behaviour), but was not displayed in highly threatening situations [21]. In a previous study conducted by our research team [20], the threatening approach test (TAT) was adopted to test the dogs’ responsiveness to unfamiliar humans approaching them in either a threatening (bent posture and staring) or a neutral (relaxed posture, not staring at the dog) way. In general, the dogs’ attitudes in both conditions could be categorised as either reactive or non-reactive (i.e., presence or absence of barking and lunging). Results showed that displacement behaviours were not exhibited more in the threatening compared to the neutral condition but were rather associated with the attitude of the dog toward the unfamiliar human. Regardless of condition, “blinking”, “nose licking” and “lip wiping” were more likely to occur when dogs did not bark or lunge towards the human, whilst “head turning” was associated with a non-reactive attitude only when the dogs were approached in a threatening way [20].

At the intraspecific level, Mariti and colleagues [6] showed that dogs never exhibited putative appeasement signals (including the displacement behaviours of head turning, nose licking and paw lifting) before aggressive episodes but that, if an aggressive interaction occurred, the probability of re-aggression decreased when these behaviours were exhibited. However, that study did not consider instances in which threatening interactions did not turn into aggression, so it was not possible to evaluate whether putative appeasement signals could prevent the escalation from threat to aggression. Applying a TAT paradigm to dog–dog interactions would make it possible to test, with a standardised procedure, whether these behaviours are exhibited more towards a threatening conspecific before an aggressive interaction occurs. However, it is very difficult to standardise the behaviour of an “actor/stooge” dog approaching different unfamiliar dogs so that its approach is reliably either threatening or neutral. Exposing dogs to moving visual representations (videos) of conspecifics could therefore provide standardised conspecific stimuli with which to study dogs’ intraspecific visual communication.

The use of videos depicting different conspecific communication signals has already been adopted in studies of other species with promising results: juvenile bonnet macaques (Macaca radiata) behave in a socially appropriate manner, acting submissively and seeking contact with mates when viewing videos of threatening males, whereas they approach videos of a passive female [22]. Furthermore, they are more attentive to videos of conspecifics scratching than to videos of conspecifics performing a neutral behaviour, suggesting a signalling function of scratching [23].

Several studies have investigated aspects of dogs’ visual and social cognition using experimental paradigms involving the presentation of images or videos. Dogs are skilful at discriminating pictures of conspecific faces from those of humans or other animals [24,25] and can match different dog vocalizations to coherent pictorial representations [26,27,28]. Video stimuli have also been used successfully in domestic dog cognition research; for example, dogs performed above chance level in a classic pointing task when a projection of an experimenter performing the pointing gestures was used, implying that dogs could perceive the projected experimenter as a human being [29]. Evidence suggests that dogs process videos in a “confusion mode”, confusing the image with its referent and thus reacting to an image in roughly the same way as to the real object [30]. Finally, in an expectancy violation paradigm in which dogs were presented with videos of conspecifics and heterospecifics paired with coherent and incoherent auditory stimuli, dogs looked longer at the non-matching stimuli, suggesting that they recognised the visual (video) and auditory stimuli as belonging to a conspecific rather than to another species [31].

Given the evidence that dogs are sensitive to video stimuli of conspecifics and may respond to them in a socially appropriate manner, the current study had two objectives. The primary aim was to investigate whether displacement behaviours may have an “appeasing” function. To this end, naturalistic videos, including their auditory stimuli, were used to expose dogs to a standardised conflict-ridden (threatening dog) and non-conflict-ridden (neutral dog) situation, and the occurrence of displacement behaviours and facial expressions was measured under the two conditions. Although in our previous study with human strangers [20] dogs did not exhibit these behaviours more frequently in a threatening than in a neutral condition, the predictions for this study were based on the “appeasement signal hypothesis”, given the few studies carried out with conspecifics. Hence, it was predicted that dogs would exhibit displacement behaviours more frequently when exposed to videos of conspecifics showing threatening rather than neutral behaviour.

Previous results have shown that dogs’ behavioural reactions can be elicited solely by socially relevant conspecific auditory cues [27]; however, it is not clear to what extent visual cues alone may be sufficient. The secondary aim of this study was therefore to assess whether the visual component of the stimuli would elicit appropriate responses in dogs, thereby providing a tool for future studies. To this end, we included a condition in which the same videos were presented paired with artificial, non-species-relevant auditory cues (i.e., pink noise). If the auditory component was the major eliciting factor, we expected signals to be displayed more frequently in the conditions with natural sounds; if animals were responding predominantly to the visual stimuli, we expected no effect of audio type (natural vs. artificial).

2. Materials and Methods

2.1. Ethical Statement

All the procedures were approved by the ethical committee of the University of Parma (approval number PROT.N.6/CESA/2022). The owners were informed about the experimental procedure and signed a consent form.

2.2. Subjects

Fifty-six dogs, 30 females (6 intact, 24 neutered) and 26 males (7 neutered, 19 intact), aged between 1 and 12 years (mean = 3.86 years), were tested in a within-subject design. Medium- to large-sized purebred and mixed-breed dogs were recruited (see Table S1 in the Supplemental Material for further details). Only mesocephalic dogs were included (brachycephalic and dolichocephalic breeds were excluded) in order to control for the influence of morphology on the facial expressions exhibited. Only dogs whose owners reported that they had no fear of loud noises were included in the test. Subjects were recruited from our laboratory’s database and through adverts on social media.

2.3. Setup

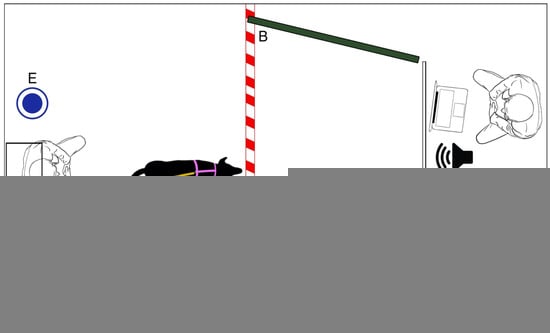

The dogs were tested in a room measuring 3 m × 4 m. The room was divided by a wooden apparatus to which a white projector screen was attached. A computer and two speakers (model: Maestro–SPKA 16) were placed behind the wooden apparatus as well as a chair for the experimenter. To the sides of the white projector screen, two dark green panels (1.50 m × 1.50 m) were present, covering the walls and creating a corridor to facilitate the focus of the dog on the video.

The projector (Epson, model: H435B) was placed facing the white screen (3.2 m from the back wall to the white screen), attached at a height of 1.65 m. The projector was placed on a support against the wall and the leash to which the dog was attached was fixed to the wall. Below the projector, a chair was placed for the owner; water was always available in the room (see Figure 1).

Figure 1.

Schematic representation of the room and experimental set up, (A) projector, (B) dark green panels and white dashed line on the floor, visually delimiting the space inaccessible for the dog, (C) projector screen, (D) speaker, (E) water.

2.4. Video and Audio Stimuli

The video stimuli were recorded (with an iPhone 12, video setting 4K, 60 fps) from two female dogs: Dog 1, an 8-year-old neutered Weimaraner, and Dog 2, a 2-year-old Rhodesian Ridgeback. The two dogs were comparable in size and morphology. The videos were recorded in an outdoor fenced yard, where each dog was held on a leash in front of a black sheet (placed to hide the human handler) and a camera on a tripod was placed in front of the dog. Using two spontaneous reactions in a natural context, and choosing not to train the tested dogs to look at the screen during the test, were intended to maximise the ecological validity of the stimuli presented and the spontaneity of the subjects’ reactions. Four videos were recorded:


- Threatening videos in which both Dog 1 and Dog 2 (individually) were recorded on a front-facing camera while standing and barking at another unfamiliar dog walking by a fence: Dog 1 Threatening (D1T) and Dog 2 Threatening (D2T).


- Neutral videos in which both Dog 1 and Dog 2 (individually) were recorded using a front-facing camera while standing and panting without showing any threatening behaviour: Dog 1 Neutral (D1N) and Dog 2 Neutral (D2N).

The two types of video recreated a natural context in which dogs showed either threatening/aggressive behaviour and vocalization (barking) or neutral behaviour and vocalization (panting). Because both video types were intended to include an auditory cue, panting for thermoregulation on a warm day was chosen as the neutral auditory cue. The dogs panting in the neutral videos had not previously been subjected to physical exercise or any type of distress.

From the videos, 7 s segments were extracted (Final Cut Pro 10.6.2 optimised for M1) (see Video study in the Supplemental Material). The audio was extracted and filtered to eliminate background noise (edited with Adobe Audition, version 2022). To reproduce the barking and the panting realistically during the experiment, we measured the sound level (with a sound level meter, model SLM-25, range 30–130 dB) of the same dogs (Dog 1 and Dog 2) barking and panting inside the testing room. The barking measured ~90–105 dB and the panting ~70–85 dB. The playback volume of the videos was then set so that the sound level meter registered the same level as the real dogs barking and panting in the room.
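The volume calibration above rests on the decibel scale being logarithmic. As a minimal sketch, assuming a linear playback chain (so that a change in digital gain maps directly onto sound pressure level), the amplitude factor needed to move a measured level to a target level can be computed as below. The function names and example levels are hypothetical illustrations; in the study the volume was set empirically with the sound level meter.

```python
def db_to_amplitude_ratio(delta_db: float) -> float:
    """Convert a level difference in dB into a sound-pressure (amplitude) ratio."""
    # Sound pressure level is 20*log10(p/p_ref), hence the division by 20.
    return 10 ** (delta_db / 20)


def playback_gain(measured_db: float, target_db: float) -> float:
    """Hypothetical helper: linear gain that moves `measured_db` to `target_db`.

    Assumes the playback chain is linear, which real speakers only approximate.
    """
    return db_to_amplitude_ratio(target_db - measured_db)


if __name__ == "__main__":
    # Illustrative numbers only: playback measured at 84 dB, live bark at 90 dB.
    gain = playback_gain(measured_db=84.0, target_db=90.0)
    print(f"scale the audio by about {gain:.2f}x")
```

A 6 dB increase roughly doubles the sound pressure, which is why even small level differences between a playback and a live dog can matter.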

The dogs tested might have reacted only to the sounds of panting and barking, independently of the visual stimuli [27]. To control for this possible effect of sound on the dogs’ reactions, the same videos were paired with artificial sounds (pink noise, generated with Adobe Audition, version 2022) with the same characteristics (frequency, amplitude and temporal pattern) as the original sounds.
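The artificial sounds in the study were generated with Adobe Audition; the sketch below is only an illustrative Python approximation of the general idea, assuming that “same amplitude and pattern” can be approximated by giving pink noise the frame-by-frame RMS envelope of the original bark or pant. The pink noise is produced with the Voss–McCartney algorithm (a swapped-in technique, not necessarily what Audition uses), the function names and the 10 ms frame length are hypothetical, and no matching of spectral content beyond the 1/f shape is attempted.

```python
import math
import random


def voss_pink_noise(n_samples, n_rows=16, seed=0):
    """Approximate pink (1/f) noise in [-1, 1] with the Voss-McCartney algorithm."""
    rng = random.Random(seed)
    rows = [rng.uniform(-1.0, 1.0) for _ in range(n_rows)]
    total = sum(rows)
    out = []
    for i in range(n_samples):
        if i > 0:
            # Refresh the row given by the number of trailing zero bits of i,
            # so row k changes only once every 2**(k + 1) samples.
            k = (i & -i).bit_length() - 1
            if k < n_rows:
                total -= rows[k]
                rows[k] = rng.uniform(-1.0, 1.0)
                total += rows[k]
        # Add one white-noise term and normalise so the output stays in [-1, 1].
        out.append((total + rng.uniform(-1.0, 1.0)) / (n_rows + 1))
    return out


def rms(frame):
    """Root-mean-square amplitude of a list of samples (0.0 for an empty list)."""
    return math.sqrt(sum(x * x for x in frame) / len(frame)) if frame else 0.0


def match_envelope(original, noise, frame_len=441):
    """Scale `noise` frame by frame so each frame's RMS equals that of `original`.

    `noise` must be at least as long as `original`; 441 samples is 10 ms at 44.1 kHz.
    """
    out = []
    for start in range(0, len(original), frame_len):
        target = original[start:start + frame_len]
        chunk = noise[start:start + len(target)]
        level = rms(chunk)
        gain = rms(target) / level if level > 0 else 0.0
        out.extend(x * gain for x in chunk)
    return out


if __name__ == "__main__":
    # Hypothetical 1 s test signal at 44.1 kHz: 100 ms tone bursts separated by silence.
    sr = 44100
    original = [
        0.5 * math.sin(2 * math.pi * 440 * t / sr) if (t // 4410) % 2 == 0 else 0.0
        for t in range(sr)
    ]
    artificial = match_envelope(original, voss_pink_noise(len(original)))
    print(len(artificial), max(abs(x) for x in artificial))
```

Matching the envelope frame by frame keeps the on/off rhythm of a bark sequence or pant while removing its species-specific acoustic structure.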

2.5. Experimental Procedure

When the dog and the owner arrived at the lab, the dog was allowed to roam freely inside the room for about 5 min to familiarise itself with the new environment. The owner was then asked to sit on a chair placed against the back wall (directly below the video projector), while the experimenter replaced the dog’s harness with an H-shaped one (size M/L, the same for all subjects) and attached the dog to a 2 m leash fixed to the back wall, before leaving the room. Owners were asked to ignore their dogs for the whole duration of the video projections to avoid giving any involuntary cues. The whole procedure was carried out in a single visit and lasted about 30 min.

In session 1, the dogs were exposed to a series of 4 videos with natural sounds (2 threatening and 2 neutral; Figure 2) and to the same series of 4 videos paired with the artificial sounds; whether the natural or the artificial sounds came first was counterbalanced between subjects. The first session comprised a total of 43 s of video projection. The same procedure was repeated (session 2) after a 5 min break, during which the dog was kept on the leash and the owner sat on the chair. Overall, each dog was exposed to 86 s of video. Within each session, threatening and neutral stimuli were always alternated to avoid a habituation effect. The order of (1) dog identity (D1/D2), (2) condition (threatening/neutral) and (3) audio stimulus (natural/artificial) was counterbalanced between subjects using 4 possible orders (see Figure 3 and Table S1 in the Supplementary Material).
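The ordering constraints described above (threatening and neutral videos always alternating, one natural-audio and one artificial-audio block per session, four orders rotated across subjects) can be sketched as follows. This is a hypothetical scheme for illustration only: the orders actually used are those given in Figure 3 and Table S1, and in this sketch dog identity is not counterbalanced independently of the other factors.

```python
from collections import Counter
from itertools import product


def audio_block(first_condition):
    """One block of the four videos (D1T, D1N, D2T, D2N) with T and N alternating."""
    other = "N" if first_condition == "T" else "T"
    conditions = [first_condition, other, first_condition, other]
    identities = ["D1", "D1", "D2", "D2"]  # hypothetical identity sequence
    return [dog + cond for dog, cond in zip(identities, conditions)]


def session(first_condition, first_audio):
    """A session: the same block played with one audio type, then with the other."""
    second_audio = "artificial" if first_audio == "natural" else "natural"
    block = audio_block(first_condition)
    return [(video, first_audio) for video in block] + [
        (video, second_audio) for video in block
    ]


# Four hypothetical orders: first condition (T/N) x first audio type.
ORDERS = [session(c, a) for c, a in product("TN", ["natural", "artificial"])]


def assign_orders(subject_ids):
    """Rotate subjects through the orders so that each order is used equally often."""
    return {subject: i % len(ORDERS) for i, subject in enumerate(subject_ids)}


if __name__ == "__main__":
    for order in ORDERS:
        print(order)
    # With 56 dogs and 4 orders, each order is assigned to 14 dogs.
    print(Counter(assign_orders(range(56)).values()))
```

Because each block ends on the opposite condition to the one it started with, the alternation of threatening and neutral videos also holds across the switch from one audio type to the other.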

Figure 2.

Frames of the 4 video stimuli.

Figure 3.

Time dogs spent looking at the video in the two conditions.

2.6. Behavioural Coding

The video recordings were analysed using the software BORIS (“Behavioral Observation Research Interactive Software”, v. 7.13.9, developed at the University of Torino, Italy) [32], based on two ethograms: a selection of facial actions from the DogFACS manual and a general ethogram (including time spent looking at the video, posture of the dog and other displacement activities) (see Tables S2 and S3 in the Supplemental Material). The DogFACS ethogram included the action unit blinking (AU145), the action descriptors panting (AD126), lip wiping (AD37) and nose licking (AD137), and all the ear action descriptors: ears forward (EAD101), ears adductor (EAD102), ears flattener (EAD103), ears rotator (EAD104) and ears downward (EAD105). Both coders, GP and AR, were certified DogFACS coders, and inter-coder reliability was assessed with intraclass correlation coefficients (ICCs; Rousson, 2011) on 20% of the videos (ICCs ranged from 0.73 to 0.97).
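The text does not state which ICC form was computed. As a minimal sketch, assuming a two-way layout with videos as targets (rows) and the two coders as raters (columns), the single-rater ICC for absolute agreement, ICC(2,1), and for consistency, ICC(3,1), can be obtained from two-way ANOVA mean squares as below. The function names and example ratings are hypothetical; this is not the authors’ analysis code.

```python
def _anova_mean_squares(ratings):
    """Two-way ANOVA mean squares for an n-targets x k-raters table of scores."""
    n, k = len(ratings), len(ratings[0])
    grand = sum(map(sum, ratings)) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)  # between targets
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)  # between raters
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return n, k, ms_rows, ms_cols, ms_error


def icc_agreement(ratings):
    """ICC(2,1): two-way random effects, absolute agreement, single rater."""
    n, k, msr, msc, mse = _anova_mean_squares(ratings)
    return (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)


def icc_consistency(ratings):
    """ICC(3,1): two-way mixed effects, consistency, single rater."""
    n, k, msr, _, mse = _anova_mean_squares(ratings)
    return (msr - mse) / (msr + (k - 1) * mse)


if __name__ == "__main__":
    # Hypothetical durations (s) coded by two coders; one row per video.
    ratings = [[12.1, 11.8], [3.4, 3.9], [7.0, 6.5], [0.0, 0.4], [9.8, 10.2]]
    print(round(icc_agreement(ratings), 3), round(icc_consistency(ratings), 3))
```

The agreement form also penalises a systematic offset between coders (one coder consistently scoring longer durations), whereas the consistency form ignores such offsets.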

2.7. Statistical Analyses

First, to assess whether the condition (threatening/neutral), the stimulus identity (D1/D2) and the audio (natural/artificial) influenced the amount of time the dogs spent looking at the videos, a model was run with the three-way interaction between condition, stimulus identity and audio as the test predictor, and with session (1/2), age and sex of the subjects as fixed-effect control variables. Dog ID was included as a random effect to account for repeated observations of the same subjects (model = lmer(duration of behaviour ~ condition*audio*stimuli ID + sex + age + session + (1|subject), data)). Neither the three-way interaction between condition, stimulus identity and audio nor the two-way interaction between condition and stimulus identity was significant, indicating that the time the dogs spent looking at the stimuli under the two experimental conditions (threatening/neutral) did not differ by stimulus identity (D1/D2). Given this negligible influence of stimulus identity on the dogs’ attention towards the videos, stimulus identity was subsequently included as a control predictor in the models for the displacement behaviours and facial expressions.

For the remaining statistical analyses, only behavioural variables expressed by at least 10% of the dogs during the test were selected. To test whether the display of the selected variables depended on the experimental condition (threatening/neutral), their duration or frequency was modelled as a function of the interaction between condition (threatening/neutral) and audio (natural/artificial). Stimulus identity (D1/D2), session (1/2), age and sex of the subjects were included as fixed-effect control variables, and Dog ID was included as a random effect. Mixed models with a Gaussian error distribution (function “lmer” of the package “lme4”) were fitted for duration variables, and generalised linear mixed models (GLMMs) with a Poisson error distribution (function “glmmTMB” of the package “glmmTMB”) for frequency variables (example model = lmer/glmmTMB(duration/frequency ~ condition*audio + stimuli ID + sex + age + session + (1|subject), data)).
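The 10% inclusion criterion amounts to a simple threshold on the number of dogs that showed each behaviour. A minimal sketch follows, using integer cross-multiplication so that a behaviour shown by exactly 10% of the dogs is not lost to floating-point rounding; the function name and the behaviour counts in the example are hypothetical and are not the study’s data.

```python
def frequent_behaviours(dogs_expressing, n_dogs, min_percent=10):
    """Return behaviours shown by at least `min_percent`% of the `n_dogs` tested.

    `dogs_expressing` maps a behaviour name to the number of dogs that showed it.
    Comparing 100 * count with min_percent * n_dogs keeps the test in integers.
    """
    return sorted(
        behaviour
        for behaviour, count in dogs_expressing.items()
        if 100 * count >= min_percent * n_dogs
    )


if __name__ == "__main__":
    # Hypothetical counts for 56 dogs: 10% is 5.6 dogs, so at least 6 are needed.
    counts = {"yawning": 6, "paw lifting": 5, "sniffing": 30}
    print(frequent_behaviours(counts, n_dogs=56))  # ['sniffing', 'yawning']
```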

A likelihood ratio test comparing the full model with a null model lacking the main test predictors and their interaction was performed to keep the type I error rate at 5%. If the interaction between the predictors was not significant, the model was refitted with both test predictors but without their interaction. The same full–null model comparison was then used to assess the influence of each predictor of interest (condition and audio) on the response variable. Model stability was assessed at the level of the estimated coefficients and standard deviations by excluding the levels of the random effect one at a time [33], which indicated that the models were of good stability (see Supplemental Materials for detailed results). Parametric bootstrapping was performed to obtain confidence intervals.
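The full–null comparison rests on the likelihood ratio statistic, twice the difference in log-likelihood between the full and the null model, referred to a chi-square distribution whose degrees of freedom equal the number of parameters dropped. The sketch below computes that statistic and its p-value with the standard library only; the function names and the log-likelihoods in the example are hypothetical, and in practice the fitted models’ own comparison tools would be used. As a consistency check, a statistic of 0.500 with 1 degree of freedom gives p ≈ 0.479, the value reported for the three-way interaction in Section 3.1.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability P(X >= x) of a chi-square distribution with `df` d.f.

    Uses the power series of the regularised lower incomplete gamma function,
    which is adequate for the moderate statistics typical of LRTs (x below ~1000).
    """
    if x <= 0:
        return 1.0
    a, z = df / 2.0, x / 2.0
    term = 1.0 / a
    series = term
    n = 1
    while term > series * 1e-15:
        term *= z / (a + n)
        series += term
        n += 1
    lower = series * math.exp(a * math.log(z) - z - math.lgamma(a))
    return max(0.0, 1.0 - lower)


def likelihood_ratio_test(loglik_full, loglik_null, df_diff):
    """Return the LRT statistic 2 * (logLik_full - logLik_null) and its p-value."""
    stat = 2.0 * (loglik_full - loglik_null)
    return stat, chi2_sf(stat, df_diff)


if __name__ == "__main__":
    # Hypothetical log-likelihoods differing by 2.921 (one extra parameter).
    stat, p = likelihood_ratio_test(-100.0, -102.921, 1)
    print(f"chi2(1) = {stat:.3f}, p = {p:.3f}")
```

For one degree of freedom the same p-value is given in closed form by `math.erfc(math.sqrt(x / 2))`, which is a convenient way to check the series.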

Results were considered statistically significant if p ≤ 0.05.

3. Results

3.1. Time Spent Looking at the Videos

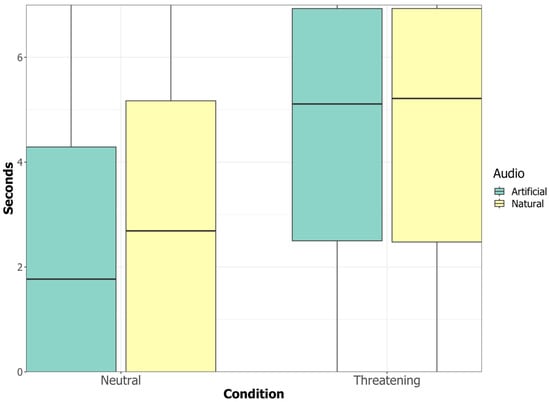

The three-way interaction between condition (threatening/neutral), stimulus identity (D1/D2) and audio (natural/artificial) had no significant influence on the time that the dogs spent looking at the video (χ²(56, 1) = 0.500, p = 0.479); neither did the interaction between condition and audio (χ²(56, 1) = 3.021, p = 0.082) nor the interaction between condition and stimulus identity (D1/D2) (χ²(56, 1) = 1.058, p = 0.304). Condition had a significant effect on the time dogs spent looking at the videos (χ²(56, 1) = 138.867, p < 0.001), with dogs looking more at the threatening than at the neutral videos (Es = 1.716 ± 0.140, 95% CI [2.718, 4.272]). Independently of condition, audio (natural/artificial) had a significant effect on the time dogs spent looking at the video (χ²(56, 1) = 5.842, p = 0.016), with dogs looking more at videos with natural than with artificial audio (Es = 0.338 ± 0.140, 95% CI [0.067, 0.616]). Furthermore, dogs looked more at the videos during the first than during the second session (Es = −1.034 ± 0.140, p < 0.001, 95% CI [−1.305, −0.759]).

3.2. Facial Expressions

There was no significant interaction between condition (threatening/neutral) and audio (natural/artificial) for any of the facial expressions analysed. Regardless of audio type, dogs held “Ears forward” (EAD101) for longer in the threatening than in the neutral condition (Es = 0.670 ± 0.131, p < 0.001, 95% CI [0.338, 0.929]) and, conversely, held the “Ears rotator” (EAD104) position for longer in the neutral than in the threatening condition (Es = −0.399 ± 0.131, p = 0.002, 95% CI [−0.645, −0.154]). The duration of “Ears flattener” (EAD103) was longer when the videos were paired with the artificial rather than the natural sound (Es = −0.200 ± 0.072, p = 0.006, 95% CI [−0.341, −0.057]).

3.3. Displacement Behaviours

The displacement behaviours performed by at least 10% of the dogs during the test were “head-turning”, “blinking” (AU145), “sniffing the environment”, “yawning” (AU27), “lip-wiping” (AD37) and “nose-licking” (AD137).

The “blinking” (AU145) behaviour was the only one showing a significant interaction between the condition and the audio: dogs blinked more when neutral videos with natural sounds were played (Es = −0.497 ± 0.214, p = 0.020, 95% CI [−0.907, −0.085]) but there was no difference in blink frequency between the threatening videos with natural or artificial sounds.

All other displacement behaviours were exhibited for a longer duration or with a higher frequency in the neutral than in the threatening condition, independently of the accompanying audio (natural/artificial) (see Table 1 for statistical results and Figure 4). Specifically, dogs sniffed the environment for longer in the neutral than in the threatening condition (Es = −0.227 ± 0.072, p = 0.002, 95% CI [−0.370, −0.085]), and “Lip-Wiping” (AD37), “Nose-Licking” (AD137) and “Yawning” (AU27) occurred more frequently in the neutral than in the threatening condition (AD37: Es = −0.987 ± 0.353, p = 0.005, 95% CI [−1.907, −0.324]; AD137: Es = −0.949 ± 0.340, p = 0.005, 95% CI [−1.796, −0.306]; AU27: Es = −2.303 ± 1.049, p = 0.003, 95% CI [1.451 −1.989]).

Table 1.

Generalised linear mixed model (GLMM) output for the effect of the factor condition (threatening/neutral) on the exhibition of displacement behaviours. Reference level for the factor stimuli: threatening.

Figure 4.

Graphical representations of the visual signals expressed with higher duration and/or higher frequency in the neutral condition compared to the threatening condition.

4. Discussion

The present study aimed to test the reactions of dogs exposed to videos of conspecifics with either a threatening or a neutral attitude; in particular, the analyses focused on the exhibition of displacement behaviours and facial expressions (putative communicative appeasement signals). The underlying hypothesis was that facial expressions and displacement behaviours previously identified as putative appeasement signals in the dog training literature would be exhibited more during a conflict-ridden situation (threatening condition) than during a non-conflict-ridden situation (neutral condition). Contrary to predictions, dogs exhibited more displacement behaviours, specifically sniffing the environment, blinking, nose licking, lip wiping and yawning, when exposed to videos of dogs exhibiting neutral behaviour (i.e., standing without staring at the camera and panting with an open mouth) than when exposed to videos of dogs with threatening behaviour (i.e., barking and lunging towards the camera and hence towards the observing dog).

There may be several reasons for this. First, the threatening condition may have been too intense for a de-escalation/avoidance strategy to be a viable and efficient choice. This would be in line with previous findings that, during dog–human interactions, dogs exhibit more displacement behaviours (lip licking and head turning) when a human approaches with a mildly threatening or a friendly attitude than when the human approaches with a highly threatening attitude (vocal and physical threat), which instead elicited a defensive/aggressive coping strategy [21]. Considering that the presentation of the video stimuli elicited differential responses (see discussion point below), an avenue for future studies would be to use this method to present conditions with varying levels of threat, as well as more overtly friendly conspecific behaviours. This would allow researchers to assess in greater detail whether putative appeasement signals are negatively correlated with the intensity of the threatening behaviour observed.

A second possibility, closely linked to the first, is that because dogs in the threatening condition were highly attentive and alert towards the threatening unfamiliar conspecific, they had not yet engaged in any other behavioural strategy aimed at coping with the potential threat (avoidance, defensive aggression, etc.). Indeed, in the present study, no dog showed aggressive behaviour towards the video stimuli, but dogs were more alert in the threatening than in the neutral condition (looking for longer and holding their ears forward more, i.e., showing signs of attention [17]). This interpretation would suggest that these signals do not function to interrupt an ongoing threatening–aggressive encounter but may still be used during ambiguous social encounters, when the outcome of the interaction is not easily “predicted” by the dogs. Accordingly, future studies could test whether these behaviours are more likely to be elicited by an ambiguous situation, for example, when encountering an unfamiliar vs. a familiar social stimulus (in which case they could serve the proposed function of negotiation signals [19] (p. 163)). This would be consistent with Mariti et al. [6], who found that displacement behaviours (especially head turning, nose licking and paw lifting) were exhibited more often when dogs encountered unfamiliar than familiar conspecifics.

In both our previous study with actual human experimenters [20] and the present study, the neutral stimuli (an unfamiliar human approaching with a relaxed posture, and a video of an unfamiliar dog standing and panting) elicited more displacement behaviours than the threatening ones. This may support the idea that it is the uncertainty of the situation that elicits these behaviours, which would then serve to convey a “non-conflict/peaceful” intent. If so, these behaviours should be exhibited more in such ambiguous scenarios than, for example, during an overtly friendly approach. Future studies are required to investigate the role of context further, for example, by changing the identity of the actor (familiar/unfamiliar partner) and the social context (threatening, neutral, friendly and affiliative).

One limitation of this study is the absence of physiological measures to monitor the stress response of the dogs tested. Previous research has identified displacement behaviours and facial expressions (putative appeasement signals) as indicators of the physiological stress response: yawning [34,35,36], lip licking [34,35] and manipulation/sniffing of the environment [17,37]. The results of the current study do not align with this interpretation since, if these behaviours reflected a stress response, we would have expected them to occur more in the threatening than in the neutral condition. Nevertheless, to test this hypothesis further, future studies should include a condition presenting a non-socially relevant video/audio that may elicit a stress response in dogs, together with physiological measures of arousal such as heart rate or cortisol (the latter reflecting hypothalamic–pituitary–adrenal axis activation), to assess their association with the behavioural variables [34].

The secondary aim of the present work was to investigate whether the visual component of the stimuli would elicit behavioural responses in dogs, thereby providing a tool for future studies. To control for the potential effect of the auditory component on the dogs’ reactions, the same visual stimuli (threatening and neutral) were presented either paired with their natural audio (barking and panting) or paired with a socially non-relevant audio with the same sound features (frequency, amplitude and temporal pattern). If behavioural responses were driven predominantly by the social auditory stimulus (see [27]), a higher frequency/duration of behaviours was expected with the original videos (natural sound pairing) than with the videos paired with artificial audio. Dogs looked more at videos paired with natural sounds, suggesting that they were more interested in a socially coherent than in a non-coherent stimulus. Apart from this, however, the audio type had only a very limited effect on the behavioural responses. An interaction between condition and audio type was found for only one behaviour, blinking (AU145): dogs blinked more when the neutral videos were paired with the natural sound, but there was no difference in blink frequency between natural and artificial sounds in the threatening condition. Furthermore, independently of condition, dogs kept their ears in a flattened position (EAD103) for longer when the artificial audio was played than when the natural audio was played. Taken together, the results suggest that, although the natural pairing elicited more attention, dogs were not simply responding to the socially relevant auditory stimuli (barking and panting). The use of videos, especially paired with natural auditory stimuli, may therefore be a promising method to investigate intraspecific visual communicative behaviour in dogs.
However, one limitation of the present experimental paradigm is the absence of a control condition in which only the visual stimuli was present, to exclude the potential behavioural reactions elicited by any auditory stimuli per se. In fact, dogs can be sensitive to artificial stimuli. Thus, even though dogs were selected based on the fact that they had no noise-sensitivity issues (based on owners’ report), we cannot exclude this issue entirely.

A further limitation of the current study relates to the potential effect of breed/morphology on intraspecific communication. A previous study by our group carefully recruited subjects to balance two morphological types. Shepherd-type dogs had erect ears, a triangular head shape and thin lips, whereas hunting-type dogs had floppy ears, a square face and soft lips. That study indicated that breed morphology may affect the use of specific facial expressions and tail wagging in a communicative interaction with a human [16]. However, none of the facial actions with significant effects in the current study (blinking, yawning, lip-wiping, nose-licking) had previously been found to be influenced by shepherd/hunting breed morphology. Nevertheless, the effect of breed/morphology type on intraspecific communication is of great interest from both a theoretical and an applied perspective, and it was not directly investigated in the current study. The methodology outlined here may allow this question to be investigated more thoroughly and in a standardised manner.

From an applied perspective, a better understanding of dogs’ intraspecific communication in different contexts may help prevent aggressive encounters between pet dogs. The dog training literature is increasingly based on scientific data. However, practices in many domains, such as dog intraspecific communication, are often still guided by subjective interpretation and personal experience. Studies such as the present one can inform professionals (ethologists, veterinarians, dog trainers), who in turn may transfer this knowledge to the general dog-owning public, thereby promoting pet dogs’ welfare.

In conclusion, the current study outlines a promising methodology for investigating intraspecific communication in dogs. It showed that dogs can pay attention to videos of conspecifics exhibiting neutral and threatening behaviours and that such stimuli can elicit socially relevant cues. Furthermore, dogs showed more displacement behaviours (putative appeasement signals) in a neutral than in a conflict-ridden context. This is in line with previous studies using “real” social stimuli (human experimenters and conspecifics). These results suggest that displacement behaviours are not used as appeasement signals to interrupt threatening behaviours. Instead, they may be exhibited in ambiguous contexts, when the behaviour of the social partner (dog or person) is difficult to predict.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/app13169254/s1.

Author Contributions

The study concept and design were developed by G.P. (Giulia Pedretti), S.M.-P. and P.V. The audio stimuli were manipulated and created by G.P. (Gianni Pavan). C.C. and G.P. (Giulia Pedretti) conducted the experiments. G.P. (Giulia Pedretti) coded the videos. G.P. (Giulia Pedretti) conducted the statistical analysis and interpreted the data. G.P. (Giulia Pedretti) and C.C. drafted the initial manuscript. C.C. prepared all figures with original drawings and pictures. P.V. and S.M.-P. contributed to the structure and content of the introduction and the discussion. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by a doctoral grant to Giulia Pedretti from the University of Parma.

Institutional Review Board Statement

The animal study protocol was approved by the ethical committee of the University of Parma (approval number PROT.N.6/CESA/2022). The owners were informed about the experimental procedure and signed a consent form.

Data Availability Statement

The raw data and analysis of this study are available from the corresponding author on request.

Acknowledgments

We thank all the owners who took part in the studies with their dogs and Alessia Ranucci (AR) who helped in the data collection and behavioural coding.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Bensky, M.K.; Gosling, S.D.; Sinn, D.L. Chapter Five—The World from a Dog’s Point of View: A Review and Synthesis of Dog Cognition Research. In Advances in the Study of Behavior; Brockmann, H.J., Roper, T.J., Naguib, M., Mitani, J.C., Simmons, L.W., Barrett, L., Eds.; Academic Press: Cambridge, MA, USA, 2013; Volume 45, pp. 209–406.

2. Lea, S.E.G.; Osthaus, B. In what sense are dogs special? Canine cognition in comparative context. Learn. Behav. 2018, 46, 335–363.

3. Kaminski, J.; Nitzschner, M. Do dogs get the point? A review of dog–human communication ability. Learn. Motiv. 2013, 44, 294–302.

4. Riedel, J.; Schumann, K.; Kaminski, J.; Call, J.; Tomasello, M. The early ontogeny of human–dog communication. Anim. Behav. 2008, 75, 1003–1014.

5. Lakatos, G.; Gácsi, M.; Topál, J.; Miklósi, Á. Comprehension and utilisation of pointing gestures and gazing in dog–human communication in relatively complex situations. Anim. Cogn. 2012, 15, 201–213.

6. Kaminski, J.; Piotti, P. Current trends in dog-human communication: Do dogs inform? Curr. Dir. Psychol. Sci. 2016, 25, 322–326.

7. Mariti, C.; Falaschi, C.; Zilocchi, M.; Fatjó, J.; Sighieri, C.; Ogi, A.; Gazzano, A. Analysis of the intraspecific visual communication in the domestic dog (Canis familiaris): A pilot study on the case of calming signals. J. Veter. Behav. 2017, 18, 49–55.

8. Siniscalchi, M.; D’ingeo, S.; Minunno, M.; Quaranta, A. Communication in Dogs. Animals 2018, 8, 131.

9. Mota-Rojas, D.; Marcet-Rius, M.; Ogi, A.; Hernández-Ávalos, I.; Mariti, C.; Martínez-Burnes, J.; Mora-Medina, P.; Casas, A.; Domínguez, A.; Reyes, B.; et al. Current Advances in Assessment of Dog’s Emotions, Facial Expressions, and Their Use for Clinical Recognition of Pain. Animals 2021, 11, 3334.

10. Bloom, T.; Friedman, H. Classifying dogs’ (Canis familiaris) facial expressions from photographs. Behav. Process. 2013, 96, 1–10.

11. Bremhorst, A.; Sutter, N.A.; Würbel, H.; Mills, D.S.; Riemer, S. Differences in facial expressions during positive anticipation and frustration in dogs awaiting a reward. Sci. Rep. 2019, 9, 19312.

12. Bremhorst, A.; Mills, D.S.; Würbel, H.; Riemer, S. Evaluating the accuracy of facial expressions as emotion indicators across contexts in dogs. Anim. Cogn. 2021, 25, 121–136.

13. Fox, M.W. Socio-infantile and Socio-sexual signals in Canids: A Comparative and Ontogenetic Study. Z. Für Tierpsychol. 1971, 28, 185–210.

14. Waller, B.M.; Peirce, K.; Caeiro, C.C.; Scheider, L.; Burrows, A.M.; McCune, S.; Kaminski, J. Paedomorphic Facial Expressions Give Dogs a Selective Advantage. PLoS ONE 2013, 8, e82686.

15. Crivelli, C.; Fridlund, A.J. Facial Displays Are Tools for Social Influence. Trends Cogn. Sci. 2018, 22, 388–399.

16. Kaminski, J.; Hynds, J.; Morris, P.; Waller, B.M. Human attention affects facial expressions in domestic dogs. Sci. Rep. 2017, 7, 12914.

17. Pedretti, G.; Canori, C.; Marshall-Pescini, S.; Palme, R.; Pelosi, A.; Valsecchi, P. Audience effect on domestic dogs’ behavioural displays and facial expressions. Sci. Rep. 2022, 12, 9747.

18. Rugaas, T. On Talking Terms with Dogs: Calming Signals, 2nd ed.; Dogwise Publishing: Wenatchee, WA, USA, 2006.

19. Aloff, B. Canine Body Language: A Photographic Guide: Interpreting the Native Language of the Domestic Dog; Dogwise Publishing: Wenatchee, WA, USA, 2005.

20. Pedretti, G.; Canori, C.; Biffi, E.; Marshall-Pescini, S.; Valsecchi, P. Appeasement function of displacement behaviours? Dogs’ behavioural displays exhibited towards threatening and neutral humans. Anim. Cogn. 2023, 26, 943–952.

21. Firnkes, A.; Bartels, A.; Bidoli, E.; Erhard, M. Appeasement signals used by dogs during dog–human communication. J. Veter. Behav. 2017, 19, 35–44.

22. Plimpton, E.H.; Swartz, K.B.; Rosenblum, L.A. Responses of juvenile bonnet macaques to social stimuli presented through color videotapes. Dev. Psychobiol. 1981, 14, 109–115.

23. Whitehouse, J.; Micheletta, J.; Kaminski, J.; Waller, B.M. Macaques attend to scratching in others. Anim. Behav. 2016, 122, 169–175.

24. Range, F.; Aust, U.; Steurer, M.; Huber, L. Visual categorization of natural stimuli by domestic dogs. Anim. Cogn. 2008, 11, 339–347.

25. Autier-Dérian, D.; Deputte, B.L.; Chalvet-Monfray, K.; Coulon, M.; Mounier, L. Visual discrimination of species in dogs (Canis familiaris). Anim. Cogn. 2013, 16, 637–651.

26. Albuquerque, N.; Guo, K.; Wilkinson, A.; Savalli, C.; Otta, E.; Mills, D. Dogs recognize dog and human emotions. Biol. Lett. 2016, 12, 20150883.

27. Faragó, T.; Pongrácz, P.; Miklósi, Á.; Huber, L.; Virányi, Z.; Range, F. Dogs’ Expectation about Signalers’ Body Size by Virtue of Their Growls. PLoS ONE 2010, 5, e15175.

28. Gergely, A.; Petró, E.; Oláh, K.; Topál, J. Auditory–Visual Matching of Conspecifics and Non-Conspecifics by Dogs and Human Infants. Animals 2019, 9, 17.

29. Pongrácz, P.; Miklósi, Á.; Dóka, A.; Csányi, V. Successful Application of Video-Projected Human Images for Signalling to Dogs: Signalling to Dogs via Video-Projector. Ethology 2003, 109, 809–821.

30. Pongrácz, P.; Péter, A.; Miklósi, Á. Familiarity with images affects how dogs (Canis familiaris) process life-size video projections of humans. Q. J. Exp. Psychol. 2018, 71, 1457–1468.

31. Mongillo, P.; Eatherington, C.; Lõoke, M.; Marinelli, L. I know a dog when I see one: Dogs (Canis familiaris) recognize dogs from videos. Anim. Cogn. 2021, 24, 969–979.

32. Friard, O.; Gamba, M. BORIS: A Free, Versatile Open-Source Event-Logging Software for Video/Audio Coding and Live Observations. Methods Ecol. Evol. 2016, 7, 1325–1330.

33. Nieuwenhuis, R.; Grotenhuis, M.T.; Pelzer, B. influence.ME: Tools for Detecting Influential Data in Mixed Effects Models. R J. 2012, 4, 38–47.

34. Beerda, B.; Schilder, M.B.H.; van Hooff, J.A.R.A.M.; de Vries, H.W.; Mol, J.A. Behavioural, saliva cortisol and heart rate responses to different types of stimuli in dogs. Appl. Anim. Behav. Sci. 1998, 58, 365–381.

35. Quervel-Chaumette, M.; Faerber, V.; Faragó, T.; Marshall-Pescini, S.; Range, F. Investigating Empathy-Like Responding to Conspecifics’ Distress in Pet Dogs. PLoS ONE 2016, 11, e0152920.

36. Kuhne, F.; Hößler, J.C.; Struwe, R. Emotions in dogs being petted by a familiar or unfamiliar person: Validating behavioural indicators of emotional states using heart rate variability. Appl. Anim. Behav. Sci. 2014, 161, 113–120.

37. Howell, A.; Feyrecilde, M. Cooperative Veterinary Care; Wiley: Hoboken, NJ, USA, 2018.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).