Abstract

Human action recognition algorithms have garnered significant research interest due to their vast potential for applications. Existing human behavior recognition algorithms primarily focus on recognizing general behaviors using a large number of datasets. However, in industrial applications, there are typically constraints such as limited sample sizes and high accuracy requirements, necessitating algorithmic improvements. This article proposes a graph convolution neural network model that combines prior knowledge supervision and attention mechanisms, designed to fulfill the specific action recognition requirements for workers installing solar panels. The model extracts prior knowledge from training data, improving the training effectiveness of action recognition models and enhancing the recognition reliability of special actions. The experimental results demonstrate that the method proposed in this paper surpasses traditional models in terms of recognizing solar panel installation actions accurately. The proposed method satisfies the need for highly accurate recognition of designated person behavior in industrial applications, showing promising application prospects.

1. Introduction and Related Works

In many industrial application scenarios, people and robots need to work together [1]. In the present era of Industry 4.0, manufacturing automation is moving toward mass production and mass customization through human–robot collaboration [2,3]. One example is the installation of solar panels. The traditional manual installation method faces the safety issues of multiple-person operation, the problem of excessive material load, and a lack of human resources, while using only robots involves too much mechanical customization and intelligent transformation, and the cost is too high. With current technology, installing solar panels via human–robot collaboration is a good choice: people engage in some sophisticated and agile operations, while robots are responsible for cooperating with people to support heavy loads and other collaborative work. There is a lot of technology involved, and one key is how to recognize and predict human actions in order to provide assistance in a safe and collaborative manner [4,5]. In other words, making the robot automatically identify the current operational tasks and workflow of the worker is very important.

Using human action recognition (HAR) technology [6] to identify the current operation of workers and to guide the work of robots has therefore become necessary for human–robot collaboration. HAR refers to identifying the motion category of a motion sequence by analyzing continuous time-series input data. HAR can be achieved with different sensors, including cameras, the Internet of Things [7], surface electromyography devices [8], RFID [9], etc. In this paper, we mainly focus on HAR using cameras as the main sensor, taking RGB or RGB-D image sequences [10] as input. Camera-based HAR has important application prospects in human–computer interaction, intelligent security, and video censorship, and it has long been a research hot spot in computer vision. In recent years, with the rapid development of image processing technology and computer hardware, it has received widespread research attention.

1.1. Feature-Based Methods

To achieve HAR through input videos, various artificial features or feature extraction methods are commonly used. They are mainly divided into two steps: feature representation and action classification [11]. Feature representation refers to representing the action information in the original video data as feature vectors, while action classification refers to using action feature vectors to achieve the classification of different action categories.

For example, Bobick et al. [12] used Motion Energy Images (MEI) and Motion History Images (MHI) to encode motion information into monochromatic images, where the MEI describes the location of the action and the MHI describes how the action moves by recording the changes in pixels at the same location within a certain period of time. To remove the influence of the viewing angle on the feature representation, Weinland et al. [13] proposed a 3D Motion History Volume, which extracts voxels from multiple camera views and then uses a Fourier transform to generate motion representations that are not affected by position and angle. These feature representations capture the motion information of the entire moving subject, so they easily introduce irrelevant information such as the appearance of the subject and a cluttered background. To alleviate this problem, researchers turned to feature representations of local regions with significant motion information. Laptev et al. [14] proposed a local feature representation method based on spatio-temporal interest points, using a Harris corner detector to detect regions with the largest gradient changes in each direction. Dollar et al. [15] proposed applying a 2D Gaussian smoothing kernel along the spatial dimensions and 1D Gabor filters along the temporal dimension to detect points of interest, then extracting the original pixel values, gradients, and optical flow features around each point of interest and concatenating them into a feature vector. Laptev et al. [16] aggregated the optical flow features in the local region around each point of interest into a histogram to obtain a Histogram of Optical Flow (HOF), and combined it with the HOG feature [17] to characterize complex actions. Spatio-temporal interest points can capture the most significant features in a local area, but they capture little of how the motion evolves over time.

To capture the temporal information of actions, Wang et al. [18] proposed a feature trajectory method, which tracks points of interest and describes the changes in their motion characteristics. Sun et al. [19] searched for trajectories by matching corresponding feature points across consecutive video frames, and used average descriptors to generate trajectory representations. Zelnik-Manor et al. [20] designed a simple statistical distance measure between video sequences that captures the similarities in their behavioral content.

In summary, although traditional artificial feature methods rely too heavily on human experience and have unsatisfactory recognition accuracy for arbitrary actions, there are still advantages in the recognition of worker actions involved in this article, as worker operations typically follow various standards, which are different from arbitrary action recognition. Therefore, the method design in this paper combines some traditional methods of statistical extraction of artificial features.

1.2. Deep Learning-Based Methods

In recent years, with the progress of deep learning technology, the accuracy of human action recognition that takes RGB video directly as input has improved rapidly.

One important direction is to use RGB video streams containing humans as input. The mainstream approach is to learn different video features through two or more backbones, which is known as the 2D two-stream (dual-flow) network [21,22]. In [21], the two inputs are RGB frames and multiple optical flow frames, while in [22], low-resolution and high-resolution RGB frames are the two inputs, in order to reduce computational complexity. Acquiring accurate optical flow usually requires a high computational cost, so obtaining an approximation of or substitute for optical flow at a lower computational cost is also a research focus of such methods. In [16], a framework based on knowledge distillation is proposed to transfer knowledge from a teacher network trained using optical flow to a student network using motion vectors as input. A disadvantage of 2D two-stream networks is their insufficient modeling of long-term temporal dependencies. Networks with temporal modeling, such as LSTM (Long Short-Term Memory), can compensate for this [23,24,25]. In recent years, more complicated models, such as 3D CNN-based approaches [26,27,28,29,30], have been developed to process the original image sequences directly instead of using hand-crafted features, which makes them closer to end-to-end methods than previous approaches.

Instead of simply feeding image sequences into a deep neural network for training, another approach specific to human action recognition is to analyze and recognize the posture of the human body and limbs based on accurate segmentation and positioning [31,32]. A skeleton sequence records the trajectories of human joints, which can be used to characterize human action. Skeleton data provide body structure and posture information and have two obvious advantages: (1) they are scale invariant, and (2) they are robust to clothing textures and backgrounds. Therefore, the skeleton data modality is well suited to HAR tasks.

Skeleton data have also become easy to obtain: with the emergence of inexpensive depth cameras such as Microsoft Kinect and Intel RealSense, as well as excellent pose estimation algorithms such as OpenPose [33,34,35,36,37], researchers can quickly and accurately obtain depth information and skeleton information of human motion.

To use the skeleton data, a direct approach is to feed the skeleton into recurrent networks or to convert it into images and then use a mature image-processing deep neural network for recognition. For example, Ref. [38] divides the human skeleton into five parts, inputs them into multiple bidirectional RNNs, and then hierarchically fuses their outputs to generate high-level representations of actions; Ref. [39] proposed a local sensing LSTM that models the relationship between different body parts within the LSTM unit; and the idea behind [40,41] is to encode skeleton sequence data into images and then send them to a CNN for action recognition, using skeleton spectral maps and joint trajectory maps, respectively. In addition, some work focuses on solving specific issues, such as viewpoint changes and high computing costs.

Although the above methods have achieved considerable results, the skeleton data can be used more efficiently. Because the skeleton of the human body is itself a ‘graph’, it is more intuitive and appropriate to process it directly with graph-based methods. In recent years, methods based on graph neural networks (GNNs) and graph convolution neural networks (GCNs) have been proposed and have achieved good results in the field of HAR. In [42], the human skeleton is represented as a directed acyclic graph to effectively merge bone and joint information. In [43,44], spatial-temporal GCNs were designed to learn both spatial and temporal features from skeleton data. Considering that different human joints have different importance for a human action, attention mechanisms have been introduced into GCNs to improve training and recognition [45,46,47]; these methods differ in how they determine which nodes deserve attention and in how the attention mechanism is introduced.

1.3. Contributions of This Paper

Currently, several public datasets are available for human action recognition [48,49]. The commonly studied actions are simple movements, such as walking, running, jumping, and writing, or regular movements, such as playing ball, swimming, and roller skating. However, given both the difficulties in feature extraction and classification mentioned above and the large gap between current research progress and the advanced action recognition we expect, advanced action recognition still requires a higher level of computer vision understanding and representation capability.

On the other hand, in some special industrial application scenarios, human action recognition could be difficult because of a lack of training data or low action discrimination. For the actions of operational tasks in industrial operations, there are particularities mainly reflected in the facts that:

- There could be standard actions for the operations;

- The overall pose may not vary significantly with different operations, but the distinction is reflected in the details of the hands or other parts;

- The amount of data available for training is relatively small and narrow.

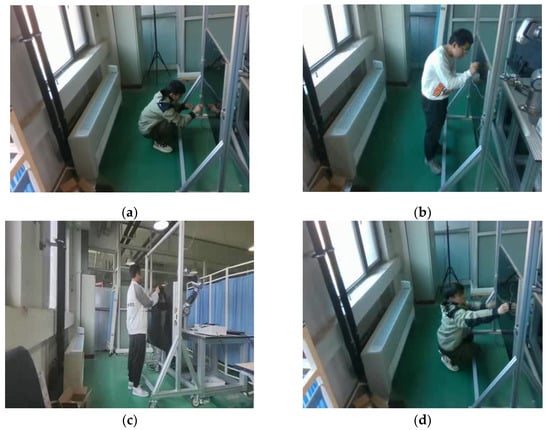

Figure 1 shows several image examples of workers’ actions while installing a solar panel, wherein sub-figures (a) and (b) come from the same type of action, ‘tie’, and (c) and (d) come from the same type of action, ‘pendulum’. The two types of actions differ only in small hand movements, regardless of whether the person is standing or not. From the perspective of the overall movement, however, whether the person stands or not is the most obvious difference between frames.

Figure 1.

Action image frames of workers installing solar panels. (a,b) From the same type of action: ‘tie’; (c,d) from the same type of action: ‘pendulum’.

This can lead to a problem: when the number of training samples is small, if we simply and directly use the full body motion to train or calculate the action recognition framework, this will be difficult due to the large intra-class distance and the small inter-class distance, resulting in eventual training failures or recognition errors.

In this paper, aiming at the application requirements of action recognition for workers installing solar panels, a graph convolution neural network model combining prior knowledge supervision and attention mechanisms is proposed, which extracts prior knowledge from training data and is used to improve the training effect of action recognition models and improve the recognition reliability of special actions. The main contributions of this article are as follows:

- A method based on training set statistics is proposed to achieve the correlation representation of different human joint points for a specific action, which can be used as a priori knowledge of the action;

- A graph convolution neural network model using the aforementioned prior knowledge to train attention mechanisms is proposed, which improves the training and recognition effect of the model for specific actions.

2. Method

2.1. System Overview

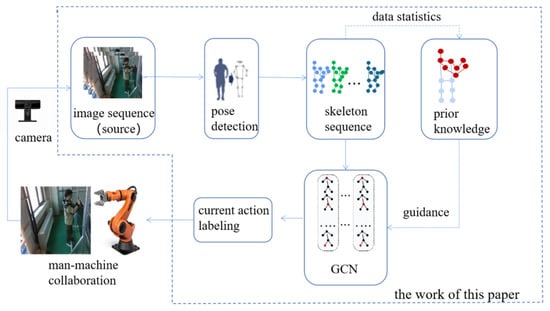

Figure 2 shows the system overview of the method proposed in this paper. For the sequence of worker motion images captured using the camera, firstly, the skeleton of the human body is extracted using the pose detection approach in [30]. Secondly, statistical methods are used to extract the skeleton prior knowledge of different actions. Furthermore, combining this prior knowledge, a GCN network guided by prior knowledge is designed and trained. Finally, the network is used to identify the actions of workers corresponding to the captured image sequences online, providing guidance for robot and human collaborative work.

Figure 2.

System overview.

2.2. Joint Modeling

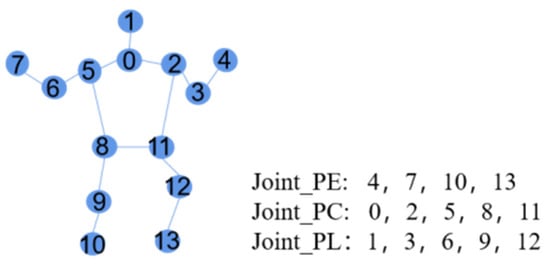

Aiming at the particularity of industrial operation tasks, this paper proposes a method for obtaining prior knowledge based on the importance of action joint points. In this method, the joint points of the human body are simplified to 14 points, as shown in Figure 3. For input, in each RGB image in the video sequences, the human joint points are obtained through the method in [30].

Figure 3.

Joint modeling.

These joints are divided into three categories: the end joints, Joint_PE; body joints, Joint_PC; and link joints, Joint_PL. According to this, the human body can be modeled as directed links from Joint_PC to Joint_PL to Joint_PE.

Let F(*) denote the basic pose feature of a joint. For different kinds of joints, they can be expressed as

where X denotes the 2D/3D spatial coordinates of the joint point, and α (together with β, where present) corresponds to the angles of the link edges to that joint point. For example, in Figure 3, joint 4 has an angle α determined by link edges 2–3 and 3–4. Joint 3 has an angle α and an angle β, determined by link edges 0–2 and 2–3 and by link edges 11–2 and 2–3, respectively.

For the corresponding body joints on two skeletons, the distance of features between them can be defined as

where L denotes a length factor determined by the size of body. Furthermore, we also define the average feature of the corresponding body joints on different skeletons:
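As an illustration of the joint features described in this section, the following minimal Python sketch computes a link-edge angle, assembles a pose feature from coordinates and angles, and evaluates a length-normalized feature distance and an average feature. The function names, the way the position and angle terms are combined in the distance, and the example values are assumptions made for illustration; they are not the exact formulas of this paper.

```python
import math

def link_angle(a, b, c):
    """Angle (radians) between link edges a-b and b-c, given 2D points."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1, n2 = math.hypot(*v1), math.hypot(*v2)
    if n1 == 0.0 or n2 == 0.0:
        return 0.0  # degenerate link: angle undefined, use 0 by convention
    cos_t = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    return math.acos(max(-1.0, min(1.0, cos_t)))

def pose_feature(x, angles=()):
    """Hypothetical basic pose feature: coordinates X followed by link angles."""
    return tuple(x) + tuple(angles)

def feature_distance(f1, f2, n_coords, body_len):
    """Assumed distance: position part divided by length factor L, plus angle gaps."""
    pos = math.dist(f1[:n_coords], f2[:n_coords]) / body_len
    ang = sum(abs(p - q) for p, q in zip(f1[n_coords:], f2[n_coords:]))
    return pos + ang

def average_feature(features):
    """Component-wise mean of corresponding joint features on several skeletons."""
    n = len(features)
    return tuple(sum(f[i] for f in features) / n for i in range(len(features[0])))

# Example: a right angle formed by two link edges meeting at the origin
alpha = link_angle((1.0, 0.0), (0.0, 0.0), (0.0, 1.0))
feature = pose_feature((0.0, 0.0), [alpha])
```

Dividing the position term by the length factor L makes skeletons of differently sized bodies comparable, which is the role the text assigns to L.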

2.3. Prior Knowledge Extraction

Let an action have K samples, and each sample can obtain N skeleton frames. Based on the above definition of joint features, we can calculate the irrelevant and significant expressions of all the joint points of the action.

The irrelevance factor: This is a measurement of how much a joint J should not be used as a characteristic criterion of an action. It is represented by the deviation of the corresponding joint of different samples.

For multiple samples of an action, the average deviation statistic of human joint J at time t is defined as follows:

In the actual calculation process, considering that different samples may not be completely aligned in time t, the statistics can be computed over multiple adjacent time frames:

Furthermore, we can obtain a more intuitive metric for joint J, which is the irrelevance factor:

The significance factor: This represents the saliency of joint J when performing this action, which is represented by the deviation of the corresponding nodes of the same action sample at different times. It can also be considered as a form of expression of joint feature ‘velocity’.

For multiple samples of an action and human joint J at time t, the average ‘velocity’ of the basic pose feature is defined as follows:

Similarly, the significance factor of joint J is represented as

It should be noted that in general action recognition, the size of the significance factor does not by itself indicate whether a joint is related to the action. For example, the action of making a phone call is identified by the long-term, relatively static relationship between the hand or elbow and the head. However, in industrial production operations, actions and the motion process are often directly related, which is one of the reasons why this paper uses the significance factor as a prominent feature.
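The two factors can be sketched in Python as follows. This is a minimal illustration under stated assumptions: `samples[k][t]` is taken to be the feature vector of one joint J in sample k at frame t, all K samples are assumed to have the same number of frames N, the deviation is measured against the cross-sample mean, and temporal misalignment is handled by taking the best match within a hypothetical `window` of adjacent frames. The exact statistics used in this paper may differ.

```python
import math

def mean_vec(vectors):
    """Component-wise mean of equally sized vectors."""
    n = len(vectors)
    return tuple(sum(v[i] for v in vectors) / n for i in range(len(vectors[0])))

def irrelevance_factor(samples, window=0):
    """Average cross-sample deviation of one joint (larger = less consistent)."""
    n_frames = len(samples[0])
    total = 0.0
    for t in range(n_frames):
        center = mean_vec([s[t] for s in samples])
        lo, hi = max(0, t - window), min(n_frames, t + window + 1)
        # Tolerate small misalignment: best match within adjacent frames
        total += sum(
            min(math.dist(center, s[u]) for u in range(lo, hi)) for s in samples
        ) / len(samples)
    return total / n_frames

def significance_factor(samples):
    """Average frame-to-frame 'velocity' of one joint's feature."""
    n_frames = len(samples[0])
    total = 0.0
    for s in samples:
        total += sum(math.dist(s[t + 1], s[t]) for t in range(n_frames - 1))
    return total / (len(samples) * (n_frames - 1))

# A joint that moves identically in both samples: consistent and salient
moving = [[(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]] * 2
# A joint that is static but placed differently in each sample
static = [[(0.0, 0.0)] * 3, [(2.0, 0.0)] * 3]
```

In this toy example the moving joint has zero irrelevance and a significance of one unit per frame, while the static joint shows the opposite pattern.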

2.4. Graph Convolution Networks

In this section, we introduce how to use prior knowledge to guide GCN networks. Inspired by ST-GCN [43], the network in this paper uses temporal and spatial skeleton data for modeling.

Firstly, based on a skeleton sequence with N joints and T frames featuring both intra-body and inter-frame connection, a graph model is constructed:

where V denotes the node set, which includes all the joint points of a skeleton sequence:

E denotes the edge set:

where Es denotes the intra-skeleton joint connection of a body and Et denotes the connection of same body joint at adjacent time t and t + 1. In this paper, we only consider the connection between physically adjacent joints and the connection between the same, temporally adjacent body joints.
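The graph construction described above can be sketched as follows. The function name and the three-joint chain in the example are hypothetical; in practice the edge list would follow the 14-joint skeleton of Figure 3.

```python
def build_st_graph(num_joints, num_frames, skeleton_edges):
    """Build the node set and both edge sets of a spatio-temporal skeleton graph.

    A node is a pair (t, i): joint i at frame t.
    """
    nodes = [(t, i) for t in range(num_frames) for i in range(num_joints)]
    # Intra-skeleton edges: physically adjacent joints within the same frame
    spatial = [((t, i), (t, j)) for t in range(num_frames) for i, j in skeleton_edges]
    # Inter-frame edges: the same joint at adjacent times t and t + 1
    temporal = [
        ((t, i), (t + 1, i)) for t in range(num_frames - 1) for i in range(num_joints)
    ]
    return nodes, spatial, temporal

# Example with a hypothetical 3-joint chain observed over 4 frames
nodes, e_s, e_t = build_st_graph(3, 4, [(0, 1), (1, 2)])
```

For N joints and T frames this yields N·T nodes, T spatial copies of the skeleton edges, and N·(T − 1) temporal edges.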

Based on the above basic graph structure, we can construct a multi-layer graph convolution neural network. Specifically, for a node at time t of layer m + 1, the calculation method for its graph convolution features is

Here, the aggregation runs over the set of nodes in the graph that are connected to the node (including the node itself); a normalizing term is added to balance the contributions of different subsets to the output; and the weights are learned by the network.

In the first layer of the network, the features of each joint and the connections in the skeleton sequence obtained from the image sequence are used as the initial input. The most commonly used joint features in this step are the spatial position coordinates and the motion speed.
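A simplified version of one graph convolution step is sketched below in plain Python. It assumes a single neighbor subset with one shared weight matrix and uses the neighbor count as the normalizing term; ST-GCN-style networks partition the neighbors into several subsets with separate weights, so this is an illustration of the aggregation idea rather than the layer used in this paper.

```python
def graph_conv(features, neighbors, weight):
    """One simplified graph-convolution step.

    features:  {node: [c_in floats]}
    neighbors: {node: [connected nodes, including the node itself]}
    weight:    c_in x c_out matrix as nested lists (assumed shared by all nodes)
    """
    c_in, c_out = len(weight), len(weight[0])
    out = {}
    for v, nbrs in neighbors.items():
        z = len(nbrs)  # normalizing term: size of the neighbor set
        agg = [sum(features[u][c] for u in nbrs) / z for c in range(c_in)]
        out[v] = [
            sum(agg[c] * weight[c][o] for c in range(c_in)) for o in range(c_out)
        ]
    return out

# Two nodes with 2-channel input features and a 2 x 1 weight matrix
feats = {0: [1.0, 0.0], 1: [3.0, 2.0]}
nbrs = {0: [0, 1], 1: [1]}
result = graph_conv(feats, nbrs, [[1.0], [1.0]])
```

Stacking such steps, with the output of layer m used as the input of layer m + 1, gives the multi-layer structure described in the text.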

In this paper, prior knowledge is applied to the GCN in the feature setup procedure at the beginning. For the feature setup step, we encode the following features as feature vectors for a certain joint:

- The basic pose feature introduced in Section 2.2;

- The irrelevance and significance features introduced in Section 2.3;

- The Euclidean distances from the body’s center of gravity to the joint.

In the training step, an attention mechanism is also introduced, based on prior knowledge:

Before the input of the network, joints are divided into two categories based on prior knowledge. One type is marked as 1, for joint points that significantly reflect the action, and the other type is marked as 0, indicating joints unrelated to the action. For a certain action, this classification is based on the following method:

Firstly, divide the human skeleton into orderly connections from body joints to limb joints. One example of this is listed in Table 1.

Table 1.

Body parts of joints.

Then, for each body part P, based on the irrelevant and significant expressions of each joint, the significant feature of the body part is calculated as:

where is a weight factor, which varies depending on the type of joint.

For a certain action, the joints on the body parts with larger significant feature values are marked as 1, while the remaining joints are marked as 0. Only the features of joints marked 1 are used as network input for training; joints marked 0 do not take part in training. By introducing prior knowledge and setting the inputs in a form similar to supervision, this approach can improve efficiency.
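The labeling procedure can be sketched as follows. The scoring rule (a type-weighted mean of significance minus irrelevance), the body-part names, the weight values, and the `top_k` parameter are all assumptions introduced for illustration; the paper's own body-part formula and the contents of Table 1 are not reproduced here.

```python
def part_scores(parts, significance, irrelevance, joint_type, type_weight):
    """Assumed body-part score: weighted mean of (significance - irrelevance)."""
    scores = {}
    for name, joints in parts.items():
        total = sum(
            type_weight[joint_type[j]] * (significance[j] - irrelevance[j])
            for j in joints
        )
        scores[name] = total / len(joints)
    return scores

def joint_mask(parts, scores, num_joints, top_k=1):
    """Mark joints on the top_k highest-scoring body parts as 1, the rest as 0."""
    keep = sorted(scores, key=scores.get, reverse=True)[:top_k]
    mask = [0] * num_joints
    for name in keep:
        for j in parts[name]:
            mask[j] = 1
    return mask

# Hypothetical two-part skeleton with six joints
parts = {"arm": [0, 1, 2], "leg": [3, 4, 5]}
joint_type = {0: "PC", 1: "PL", 2: "PE", 3: "PC", 4: "PL", 5: "PE"}
type_weight = {"PC": 0.5, "PL": 1.0, "PE": 2.0}  # assumed weights per joint type
significance = [0.1, 0.5, 1.0, 0.1, 0.2, 0.2]
irrelevance = [0.1, 0.1, 0.2, 0.1, 0.3, 0.6]

scores = part_scores(parts, significance, irrelevance, joint_type, type_weight)
mask = joint_mask(parts, scores, num_joints=6)
```

Here the arm joints move consistently across samples and are selected, while the leg joints vary between samples and are masked out.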

3. Experiment Results

3.1. Datasets of the Action of Solar Panel Installation

In order to verify the effectiveness of the algorithm in this paper, we collected 2460 videos of the operation of workers during the installation process of solar panels. These videos are standard actions performed by different workers from different camera perspectives.

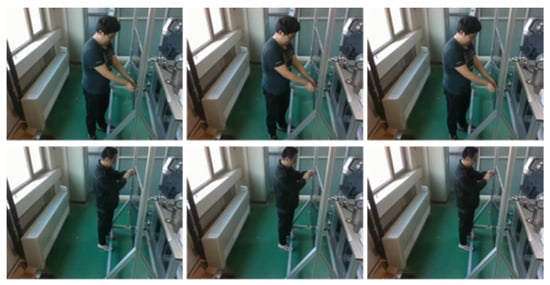

There are four main types of actions: ‘pendulum’ (Figure 4), ‘stay wire’ (Figure 5), ‘threading’ (Figure 6) and ‘tie’ (Figure 7). They correspond to four different processes in the installation process. The purpose of the application algorithms studied in this paper is to correctly distinguish these four types of actions from video, providing guidance for the automatic operation and control of robotic arms that cooperate with humans.

Figure 4.

Example of ‘pendulum’ step.

Figure 5.

Example of ‘stay wire’ step.

Figure 6.

Example of ‘threading’ step.

Figure 7.

Example of ‘tie’ step.

Before training, in order to prevent over-fitting, data augmentation was used to pre-process all video data: the video frames were resized to 256 × 340 and then cropped to 224 × 224, with horizontal flipping, corner cropping, and multi-angle cropping applied. It should be noted that many image-based action recognition methods are limited by GPU performance and have difficulty supporting high-resolution video data, so to facilitate comparison with the relevant methods, we unified all videos to this lower resolution.
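The cropping and flipping steps can be sketched in plain Python as follows. A frame is represented as a nested list of pixel rows, the frame is assumed to have already been resized to 256 rows by 340 columns, and the helper names are hypothetical. Resizing and multi-angle cropping are omitted.

```python
def crop(frame, top, left, size=224):
    """Cut a size x size patch whose top-left corner is (top, left)."""
    return [row[left:left + size] for row in frame[top:top + size]]

def hflip(frame):
    """Mirror a frame horizontally."""
    return [row[::-1] for row in frame]

def corner_and_center_crops(frame, size=224):
    """Four corner crops followed by the center crop."""
    h, w = len(frame), len(frame[0])
    offsets = [
        (0, 0), (0, w - size), (h - size, 0), (h - size, w - size),
        ((h - size) // 2, (w - size) // 2),
    ]
    return [crop(frame, t, l, size) for t, l in offsets]

def augment(frame, size=224):
    """Return the five crops plus their horizontally flipped copies."""
    crops = corner_and_center_crops(frame, size)
    return crops + [hflip(c) for c in crops]

# Synthetic 256 x 340 frame whose pixels store their own (row, column) position
frame = [[(r, c) for c in range(340)] for r in range(256)]
views = augment(frame)
```

When applied to video, the same crop offset and flip decision would normally be used for every frame of a clip so that the motion stays consistent.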

3.2. Experiment Setup

The hardware used for the experiments in this article is an x86-architecture computer with an Intel Core™ i7-12700 CPU, 32 GB of RAM, and an Nvidia GeForce RTX 3090 graphics card. The software system is Ubuntu Linux 18.04, equipped with a Python deep learning framework and CUDA 10.0.

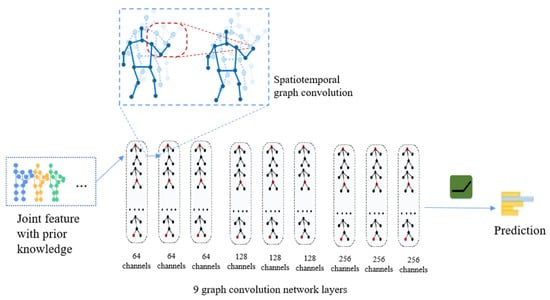

In the implementation, a human skeleton recognition algorithm is first used to extract the human skeleton from videos containing humans. Subsequently, the skeleton data of all actions are processed according to the method in this article to obtain the prior knowledge, the features, and the node regions labeled as significant for each action; the skeleton data are then input into a deep neural network for training. The deep neural network used in this article is similar to ST-GCN [43] and consists of nine layers of spatio-temporal graph convolution operators. The first three layers have 64 output channels, the next three layers have 128 output channels, and the last three layers have 256 output channels. The applied structure of the graph convolution layers is shown in Figure 8. The model adopts a batch size of 64, stochastic gradient descent as the optimization strategy, and cross entropy as the loss function for back-propagation, and the initial weight decay is 0.0005. The algorithms compared in this article include the original ST-GCN [43] and SlowFast [41], both of which use default parameters.

Figure 8.

The applied structure of the graph convolution layers.
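The layer widths and training settings stated above can be collected in a small configuration sketch. The input feature size `IN_FEATURES` is an assumption (the text does not give the length of the per-joint feature vector), and the dictionary keys are hypothetical names rather than options of a particular framework.

```python
IN_FEATURES = 3  # assumption: length of the per-joint input feature vector
OUT_CHANNELS = [64] * 3 + [128] * 3 + [256] * 3  # nine graph-convolution layers

def layer_plan(in_features, out_channels):
    """Return (in_channels, out_channels) for each consecutive layer."""
    plan, c_in = [], in_features
    for c_out in out_channels:
        plan.append((c_in, c_out))
        c_in = c_out
    return plan

TRAIN_CONFIG = {
    "batch_size": 64,
    "optimizer": "sgd",        # stochastic gradient descent
    "loss": "cross_entropy",
    "weight_decay": 0.0005,
    "num_classes": 4,          # 'pendulum', 'stay wire', 'threading', 'tie'
}

plan = layer_plan(IN_FEATURES, OUT_CHANNELS)
```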

Detected significant joints for each action: For the four actions involved in the datasets collected in this paper, the significant joints identified for each action are shown in Table 2. The second column shows significant joints (body parts) obtained through the prior detection and statistics method in Section 2. The third column shows manually designed significant joints of the specific action. It can be seen that all movements are closely related to the hand joints, which is also closely related to the standard operation design of each process, because in terms of process production, the effective operation of humans is mainly with the hands, while the extension or bending of legs is mainly used to move the position of human operations. Meanwhile, the prior detection and statistics method relies on diverse datasets, and it can also discover hidden relationships with other joints that have not been found manually. In order to better compare the effects based on statistics with those specified manually, we also tested the recognition results using our method in the case of manually specifying significant joints.

Table 2.

The significant joints.

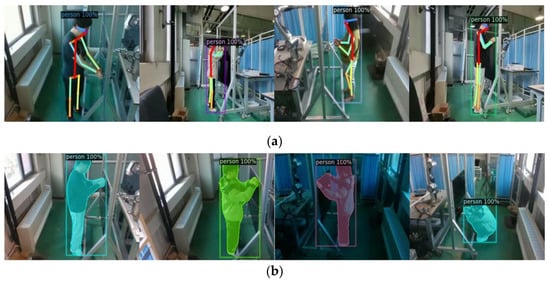

Pre-detection of human skeleton and body area: Figure 9 shows some intermediate results of human skeleton detection and human body area detection using methods provided by Detectron2 [30] from raw image sequences, called the pre-detection step. Then, the human skeleton data are used as inputs for the GCN in this paper and in [36]. The human body area data are used as the inputs of the method in [34].

Figure 9.

The intermediate results. (a) Human skeleton detection; (b) human body area detection.

It should be noted that due to the limitations of perspective, image quality, and the algorithms used for human area detection and skeleton detection, there are certain errors in extracting human skeletons and mask regions from the original video. On the dataset collected in this paper, the accuracy of the skeleton recognition algorithm is about 93.6% (defined as all skeleton joints being correctly recognized). The accuracy of human body recognition is 98.7% (defined as the human body being correctly extracted, with non-human or missing areas less than 5%). The main interference comes from the reflective area of the solar panel. As this paper does not consider errors caused by failed pre-detection, we first manually removed all data with erroneous pre-detection.

The experiment randomly selects 70% of the dataset, which is then expanded by augmentation and used to train the model; the remaining 30% of the dataset is used for validation. The top-1 and top-2 recognition accuracies were calculated, and the final experimental results are shown in Table 3. As mentioned earlier, our method is evaluated in two variants: one trains with manually defined significant joints for the different actions, and the other trains with statistically detected significant joints. Overall, the method proposed in this paper outperforms the comparison methods in terms of accuracy in identifying specific actions, especially for the difficult action ‘tie’, where recognition accuracy is significantly improved.

Table 3.

The experiment results.
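The evaluation protocol described above (a random 70/30 split followed by top-1 and top-2 accuracy) can be sketched as follows. The function names, the fixed random seed, and the toy score matrix are assumptions for illustration and do not reproduce the reported results.

```python
import random

def split_dataset(items, train_ratio=0.7, seed=0):
    """Randomly split items into a training part and a validation part."""
    rng = random.Random(seed)
    shuffled = list(items)
    rng.shuffle(shuffled)
    cut = int(round(train_ratio * len(shuffled)))
    return shuffled[:cut], shuffled[cut:]

def top_k_accuracy(scores, labels, k):
    """Fraction of samples whose true label is among the k highest scores."""
    hits = 0
    for row, label in zip(scores, labels):
        ranked = sorted(range(len(row)), key=row.__getitem__, reverse=True)
        hits += label in ranked[:k]
    return hits / len(labels)

# 2460 video indices, as in the collected dataset
train_ids, val_ids = split_dataset(range(2460))

# Toy class scores for three samples over the four action classes
scores = [[0.1, 0.7, 0.2, 0.0], [0.4, 0.3, 0.2, 0.1], [0.1, 0.2, 0.3, 0.4]]
labels = [1, 1, 0]
top1 = top_k_accuracy(scores, labels, k=1)
top2 = top_k_accuracy(scores, labels, k=2)
```

Augmentation is applied only after the split, so that augmented copies of a validation video cannot leak into the training set.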

Time consumption: Because most behavior recognition algorithms are designed for fixed-length image sequence datasets, they cannot be directly applied to online action recognition. To verify the feasibility of the proposed algorithm in engineering applications, we also designed experiments to test its execution time. Specifically, for continuously collected image sequences, a human pose recognition algorithm is first run to detect and extract skeleton poses. Then, a sliding window is used to package N consecutive skeleton poses (N can be taken as 120 frames, i.e., 5 s) and extract features as input to the proposed network. Finally, the proposed GCN prediction thread processes this input to obtain the output result. We calculated the average execution time of each of these steps, and the results are shown in Table 4.

Table 4.

The time consumption of each step.
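The sliding-window packaging step can be sketched as follows. The class name and the `stride` parameter (how many new frames arrive between two consecutive windows) are assumptions; the text only specifies the window length N.

```python
from collections import deque

class SlidingWindow:
    """Buffer the latest `size` skeleton frames and emit a window every `stride` frames."""

    def __init__(self, size=120, stride=12):
        self.size = size
        self.stride = stride  # assumed value; not specified in the text
        self.buffer = deque(maxlen=size)  # oldest frames are dropped automatically
        self.count = 0

    def push(self, skeleton):
        """Add one skeleton frame; return a full window when one is due, else None."""
        self.buffer.append(skeleton)
        self.count += 1
        if self.count >= self.size and (self.count - self.size) % self.stride == 0:
            return list(self.buffer)
        return None

# Small example: windows of 4 frames, emitted every 2 new frames
window = SlidingWindow(size=4, stride=2)
outputs = [window.push(i) for i in range(8)]
```

No window is produced until the buffer has filled once, which corresponds to the initial buffering delay of about 5 s when N is 120 frames.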

It should be noted that even without detailed optimization of the algorithm in this paper, after a buffering time of about 5 s, it can output action recognition results at an average of more than two times per second continuously, which can essentially meet the needs of online applications.

4. Discussion

For the application of recognizing the actions in workers’ operation steps, the algorithm that directly uses RGB image input [34] without a skeleton performs relatively poorly, especially for the ‘tie’ and ‘pendulum’ actions. This is mainly due to the lack of diversity in the training data. On many occasions, we can only find a few people to produce training data, and their body shapes and clothing show little variety, which leads to over-fitting. When different people appear in the test set, recognition methods that directly use the entire RGB image of the human body fail easily. From this point of view, approaches based on skeleton data can significantly improve performance.

However, it must be noted that in the pre-detection stage, compared to the mask detection of the human body in RGB images, the accuracy of human skeleton detection is several percentage points lower because it is more difficult. In other words, when using action recognition algorithms based on the human skeleton, it is also necessary to balance the accuracy of its pre-detection. On the other hand, in our current work, we did not consider the problem of occlusion [50], which will happen in many real scenes.

Overall, compared to the representative schemes for general action recognition, the accuracy of the method proposed in this article is higher. This is mainly because the installation of solar panels involves some actions in which the overall range of body movement is small and the difference lies only in small hand movements, which are difficult to distinguish using general action recognition methods. The prior knowledge-based attention enhancement mechanism proposed in this article mitigates this problem by amplifying small changes. The comparison between manually designed and automatically detected significant joints shows that the automatic detection method achieves better recognition results. This may be because, although a person performs a certain action with one part of the body, other body parts still participate, and automatic detection can discover key information that a manual definition does not capture.

5. Conclusions and Future Work

In some special industrial application scenarios, human action recognition is needed for collaboration between humans and robots. This may be difficult because of high accuracy requirements, a lack of training data, or low action discrimination. In this paper, we propose a new method for extracting prior knowledge and integrating it into a GCN to improve recognition reliability. The experiments show that the proposed method achieves better action recognition results on a solar panel installation action dataset.

Our work still has room for improvement. In the follow-up work, we plan to improve on the following aspects: (1) using multi-view image input to improve the inaccurate motion recognition caused by inaccurate skeleton recognition due to partial occlusion and (2) thoroughly combining close-range hand motion recognition with overall human motion recognition to enhance motion discrimination and improve accuracy.

Author Contributions

Software, X.Z.; formal analysis, C.W.; resources, Y.Z. and J.L.; writing—original draft preparation, J.W.; writing—review and editing, J.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the 2021 Tianjin Applied Basic Research Multiple Investment Fund (Grant Nos. 21JCQNJC00830 and 21JCZDJC00820).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are available upon request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zhang, J.; Wang, P.; Gao, R.X. Hybrid machine learning for human action recognition and prediction in assembly. Robot. Comput. Integr. Manuf. 2021, 72, 102184.

- Inkulu, A.K.; Raju Bahubalendruni, M.V.A.; Dara, A.; SankaranarayanaSamy, K. Challenges and opportunities in human robot collaboration context of Industry 4.0—A state of the art review. Ind. Robot 2022, 49, 226–239.

- Garcia, P.P.; Santos, T.G.; Machado, M.A.; Mendes, N. Deep Learning Framework for Controlling Work Sequence in Collaborative Human–Robot Assembly Processes. Sensors 2023, 23, 553.

- Chen, C.; Wang, T.; Li, D.; Hong, J. Repetitive assembly action recognition based on object detection and pose estimation. J. Manuf. Syst. 2020, 55, 325–333.

- Ramanathan, M.; Yau, W.-Y.; Teoh, E.K. Human action recognition with video data: Research and evaluation challenges. IEEE Trans. Hum. Mach. Syst. 2014, 44, 650–663.

- Gupta, N.; Gupta, S.K.; Pathak, R.K.; Jain, V.; Rashidi, P.; Suri, J.S. Human activity recognition in artificial intelligence framework: A narrative review. Artif. Intell. Rev. 2022, 55, 4755–4808.

- Zhou, X.; Liang, W.; Wang, K.I.-K.; Wang, H.; Yang, L.T.; Jin, Q. Deep-Learning-Enhanced Human Activity Recognition for Internet of Healthcare Things. IEEE Internet Things J. 2020, 7, 6429–6438.

- Mendes, N. Surface Electromyography Signal Recognition Based on Deep Learning for Human-Robot Interaction and Collaboration. J. Intell. Robot. Syst. 2022, 105, 42.

- Yao, L.; Sheng, Q.Z.; Li, X.; Gu, T.; Tan, M.; Wang, X.; Wang, S.; Ruan, W. Compressive Representation for Device-Free Activity Recognition with Passive RFID Signal Strength. IEEE Trans. Mob. Comput. 2017, 17, 293–306.

- Zhang, H.; Parker, L.E. CoDe4D: Color-Depth Local Spatio-Temporal Features for Human Activity Recognition From RGB-D Videos. IEEE Trans. Circuits Syst. Video Technol. 2014, 26, 541–555.

- Chen, C.; Jafari, R.; Kehtarnavaz, N. A survey of depth and inertial sensor fusion for human action recognition. Multimedia Tools Appl. 2017, 76, 4405–4425.

- Bobick, A.; Davis, J. The recognition of human movement using temporal templates. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 257–267.

- Weinland, D.; Ronfard, R.; Boyer, E. Free viewpoint action recognition using motion history volumes. Comput. Vis. Image Underst. 2006, 104, 249–257.

- Laptev, I. On space-time interest points. Int. J. Comput. Vis. 2005, 64, 107–123.

- Dollár, P.; Rabaud, V.; Cottrell, G.; Belongie, S. Behavior recognition via sparse spatio-temporal features. In Proceedings of the IEEE International Workshop on Visual Surveillance and Performance Evaluation of Tracking and Surveillance, Beijing, China, 15–16 October 2005; pp. 65–72.

- Laptev, I.; Marszalek, M.; Schmid, C.; Rozenfeld, B. Learning realistic human actions from movies. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

- Kläser, A.; Marszałek, M.; Schmid, C. A spatio-temporal descriptor based on 3d-gradients. In Proceedings of the 19th British Machine Vision Conference, Leeds, UK, 1–4 September 2008; pp. 275:1–275:10.

- Wang, H.; Kläser, A.; Schmid, C.; Liu, C.-L. Action Recognition by Dense Trajectories. In Proceedings of the 2011 IEEE Conference on Computer Vision and Pattern Recognition, Colorado Springs, CO, USA, 20–25 June 2011; pp. 3169–3176.

- Sun, J.; Wu, X.; Yan, S.; Cheong, L.-F.; Chua, T.-S.; Li, J. Hierarchical spatio-temporal context modeling for action recognition. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 2004–2011.

- Zelnik-Manor, L.; Irani, M. Statistical analysis of dynamic actions. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 1530–1535.

- Simonyan, K.; Zisserman, A. Two-stream convolutional networks for action recognition in videos. In Advances in Neural Information Processing Systems; Ghahramani, Z., Welling, M., Cortes, C., Lawrence, N., Weinberger, K.Q., Eds.; MIT Press: Cambridge, MA, USA, 2014; Volume 27, pp. 1345–1367.

- Karpathy, A.; Toderici, G.; Shetty, S.; Leung, T.; Sukthankar, R.; Li, F.-F. Large-scale video classification with convolutional neural networks. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1725–1732.

- Zhang, B.; Wang, L.; Wang, Z.; Qiao, Y.; Wang, H. Real-time action recognition with enhanced motion vector CNNs. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2718–2726.

- Donahue, J.; Hendricks, L.A.; Guadarrama, S.; Rohrbach, M.; Venugopalan, S.; Saenko, K.; Darrell, T. Long-term recurrent convolutional networks for visual recognition and description. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 2625–2634.

- Sharma, S.; Kiros, R.; Salakhutdinov, R. Action recognition using visual attention. arXiv 2015, arXiv:1511.04119.

- Wu, Z.; Wang, X.; Jiang, Y.-G.; Ye, H.; Xue, X. Modeling spatial-temporal clues in a hybrid deep learning framework for video classification. In Proceedings of the 23rd ACM International Conference on Multimedia, Brisbane, Australia, 26–30 October 2015; pp. 461–470.

- Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning spatiotemporal features with 3d convolutional networks. In Proceedings of the 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 4489–4497.

- Varol, G.; Laptev, I.; Schmid, C. Long-term temporal convolutions for action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 1510–1517.

- Qiu, Z.; Yao, T.; Mei, T. Learning spatio-temporal representation with pseudo-3d residual networks. In Proceedings of the 2017 IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4489–4497.

- Zhou, Y.; Sun, X.; Luo, C.; Zha, Z.; Zeng, W. Spatiotemporal fusion in 3d CNNs: A probabilistic view. In Proceedings of the 2020 IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1725–1732.

- Kim, J.; Cha, S.; Wee, D.; Bae, S.; Kim, J. Regularization on spatio-temporally smoothed feature for action recognition. In Proceedings of the 2020 IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 12103–12112.

- Wang, L.; Huynh, D.Q.; Koniusz, P. A comparative review of recent kinect-based action recognition algorithms. IEEE Trans. Image Process. 2019, 29, 15–28.

- Mehta, D.; Sridhar, S.; Sotnychenko, O.; Rhodin, H.; Shafiei, M.; Seidel, H.-P.; Xu, W.; Casas, D.; Theobalt, C. Vnect: Real-time 3d human pose estimation with a single rgb camera. ACM Trans. Graph. 2017, 36, 1–14.

- Pouyanfar, S.; Sadiq, S.; Yan, Y.; Tian, H.; Tao, Y.; Reyes, M.P.; Shyu, M.-L.; Chen, S.-C.; Iyengar, S.S. A survey on deep learning: Algorithms, techniques, and applications. ACM Comput. Surv. 2018, 51, 1–36.

- Cao, Z.; Simon, T.; Wei, S.-E.; Sheikh, Y. Realtime multi-person 2D pose estimation using part affinity fields. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7291–7299.

- Girshick, R.; Radosavovic, I.; Gkioxari, G.; Dollár, P.; He, K. Detectron. 2018. Available online: https://github.com/facebookresearch/detectron (accessed on 20 June 2023).

- Wu, Y.; Kirillov, A.; Massa, F.; Lo, W.-Y.; Girshick, R. Detectron2. 2019. Available online: https://github.com/facebookresearch/detectron2 (accessed on 20 June 2023).

- Du, Y.; Wang, W.; Wang, L. Hierarchical recurrent neural network for skeleton based action recognition. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1110–1118.

- Si, C.; Chen, W.; Wang, W.; Wang, L.; Tan, T. An attention enhanced graph convolutional LSTM network for skeleton-based action recognition. In Proceedings of the 2019 IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1227–1236.

- Hou, Y.; Li, Z.; Wang, P.; Li, W. Skeleton optical spectra-based action recognition using convolutional neural networks. IEEE Trans. Circuits Syst. Video Technol. 2016, 28, 807–811.

- Feichtenhofer, C.; Fan, H.; Malik, J.; He, K. SlowFast Networks for Video Recognition. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6201–6210.

- Shi, L.; Zhang, Y.; Cheng, J.; Lu, H. Skeleton-based action recognition with directed graph neural networks. In Proceedings of the 2019 IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7912–7921.

- Yan, S.; Xiong, Y.; Lin, D. Spatial temporal graph convolutional networks for skeleton-based action recognition. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018.

- Zhang, Y.; Wu, B.; Li, W.; Duan, L.; Gan, C. STST: Spatial-temporal specialized transformer for skeleton-based action recognition. In Proceedings of the 29th ACM International Conference on Multimedia, Chengdu, China, 20–24 October 2021; pp. 3229–3237.

- Ahmad, T.; Mao, H.; Lin, L.; Tang, G. Action recognition using attention-joints graph convolutional neural networks. IEEE Access 2019, 8, 305–313.

- Chen, Y.; Ma, G.; Yuan, C.; Li, B.; Zhang, H.; Wang, F.; Hu, W. Graph convolutional network with structure pooling and joint-wise channel attention for action recognition. Pattern Recognit. 2020, 103, 107321.

- Tunga, A.; Nuthalapati, S.V.; Wachs, J. Pose-based Sign Language Recognition using GCN and BERT. In Proceedings of the 2021 IEEE Winter Conference on Applications of Computer Vision Workshops (WACVW), Waikoloa, HI, USA, 5–9 January 2021; pp. 31–40.

- Shahroudy, A.; Liu, J.; Ng, T.-T.; Wang, G. NTU RGB+D: A large scale dataset for 3d human activity analysis. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1010–1019.

- Kay, W.; Carreira, J.; Simonyan, K.; Zhang, B.; Hillier, C.; Vijayanarasimhan, S.; Viola, F.; Green, T.; Back, T.; Natsev, P. The kinetics human action video dataset. arXiv 2017, arXiv:1705.06950.

- Sahoo, S.P.; Modalavalasa, S.; Ari, S. A sequential learning framework to handle occlusion in human action recognition with video acquisition sensors. Digit. Signal Process. 2022, 131, 103763.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).