Abstract

Digital twin technologies are still developing and are being increasingly leveraged to facilitate daily life activities. This study presents a novel approach for leveraging the capability of mobile devices for photo collection, cloud processing, and deep learning-based 3D generation, with seamless display in virtual reality (VR) wearables. The purpose of our study is to provide a system that makes use of cloud computing resources to offload the resource-intensive activities of 3D reconstruction and deep-learning-based scene interpretation. We establish an end-to-end pipeline from 2D to 3D reconstruction, which automatically builds accurate 3D models from collected photographs using sophisticated deep-learning techniques. These models are then converted to a VR-compatible format, allowing for immersive and interactive experiences on wearable devices. Our findings attest to the completion of 3D entities regenerated by the CAP–UDF model using ShapeNetCars and Deep Fashion 3D datasets with a discrepancy in L2 Chamfer distance of only 0.089 and 0.129, respectively. Furthermore, the demonstration of the end-to-end process from 2D capture to 3D visualization on VR occurs continuously.

1. Introduction

Digital twins have undergone rapid development. The concept originated in 2003 from the work of Professor Michael Grieves for use in a variety of fields [1], such as smart cities, smart homes, smart farms, hospitals, aerospace, electric power, intelligent construction, and manufacturing [2,3]. Some of the ideas used in the field of digital twins come from the industrial Internet of Things (IoT), which is the discipline of devising highly capable simulation models, particularly ones that consume data from streams for enhancing performance [4].

In the past few decades, the 3D modeling of real-world objects has been a difficult task, with several applications in computer graphics, gaming, virtual reality (VR), augmented reality (AR), and e-commerce [5,6]. Traditionally, 3D models were made manually using time-consuming and costly procedures, such as 3D scanning or CAD modeling [7]. In general, point cloud data are used to generate the CAD models. Modeling software is used to create a CAD model of the apparel structure from the point cloud [8]; online public repositories are also used to obtain models of real-world apparel items. It should be emphasized that the automatic transformation of 3D scans into CAD is still a work in progress [9].

The surfaces of 3D point clouds, 3D vision, robotics, and graphics all rely on 3D modeling. It bridges the gap between raw point clouds acquired by 3D sensors and editable surfaces for a variety of downstream applications [10]. As a workaround, state-of-the-art techniques use Unsigned Distance Functions (UDFs) [11,12,13] as a more general representation to reconstruct surfaces from point clouds. However, due to the non-continuous nature of point clouds, these approaches cannot develop UDFs with smooth distance fields near surfaces, even when employing ground truth distance values or large-scale meshes during training. Most Unsigned Distance Functions (UDFs) techniques fail to extract surfaces directly from unsigned distance variables.

Deep-learning technology has been a very useful method for solving problems, and deep-learning approaches have shown considerable success in producing accurate 3D models from images. These technologies have the potential to alter the way 3D models are generated and used since they can automatically infer 3D structures from 2D photos. Convolutional neural networks (CNNs) [14] have been used successfully for image identification, object recognition, audio recognition, and classification challenges [15]. CNNs are usually made up of three layers: convolution, pooling, and fully connected layers. CNNs used in conjunction with multi-view stereo (MVS) techniques [16] represent one of the most promising approaches for producing 3D models from images. CNNs can learn to predict depth maps from a single, whereas MVS algorithms can refine depth estimations and construct a complete 3D model from numerous photos. This method produced cutting-edge outcomes in terms of the precision and completeness of the generated 3D models [17].

Even though various studies in 3D object reconstruction have been proposed, especially in the garment industry for virtual trying on, existing studies still lack the feasibility of instant end-to-end transference of 2D image capturing to 3D object reconstructed visualization in the virtual world. To facilitate this, the aim of this study is to accelerate the adaptability of multiple perspectives to the use of mobile devices, for the above-mentioned garment task in particular, which is more accessibly achieved via apparel photographs that use deep-learning techniques to assist in providing details for people’s decision making in terms of making purchases through virtual reality glasses using digital twins technology. Hence, the explicit aim of our study is to establish a framework that can receive real-world 2D image data and process this input into 3D objects rendered in the virtual world seamlessly and perfectly.

In order to facilitate smooth integration into real-world testing, particularly within augmented reality (AR) installations, we focus on the following two research questions. Firstly, what strategies can be employed to overcome the challenges associated with implementing virtual reality (VR) devices for garment trying-on technology? Secondly, what strategies can be employed to harness user-generated content via smartphones in order to empower individuals to establish their own virtual stores within the virtual reality (VR) realm, integrating customized fashion designs and augmenting the overall shopping experience?

This study builds upon the preceding questions by incorporating the notion of user-generated content and its significance in establishing customized virtual stores within the virtual reality (VR) milieu. We investigate the potential of smartphone technology in enabling individuals to create and personalize virtual shops, thereby providing a platform for showcasing their distinctive fashion designs. The objective is to examine the influence of user-generated content on the overall shopping experience in the virtual reality (VR) domain and to explore its potential in addressing the limitations outlined in the conclusion.

The remainder of this study is organized as follows: Section 2 presents work related to digital twins, virtual reality (VR), augmented reality (AR), and 3D reconstruction. Our methodology is presented in Section 3. In Section 4, we present the experimental results from the reconstruction phase, visualization phase, and designed end-to-end framework. Finally, in Section 5, we offer our conclusions and suggestions for further research.

2. Related Work

Many studies have shown that digital twins are already part of human life [18,19]. For example, healthcare patient digital twins help doctors with therapeutic prescriptions and minimally invasive interventional procedures via machine-learning techniques. Digital twin technology in this research is able to be used in smart homes in collaboration with mobile assistive robots for the development of a smart home system [20]. In smart farming research, digital twin technologies are able to create a cyber-physical system (CPS) so that farmers can better understand the state of their farms in terms of the usage of resources and equipment [21].

Research on digital twins and the Metaverse offers a paradigm for a cultural heritage metaverse, focusing on key features and characterizing the mapping between real and virtual cultural heritage worlds. Most research has focused on the development of the Metaverse as a digital world [22,23] and offers three-layer architecture that connects the real world to the Metaverse via a user interface. It also looks into the security and privacy issues that come with using digital twins (DTs) in the Metaverse. [24] The research discusses digitization and the impact of DTs on the advancement of modern cities, as well as the primary contents and essential technologies of Smart Cities (SCs) backed by DTs. Urban construction is divided into four parts: vision, IoT perception, application update, and simulation [25]. In a study of the Metaverse with DTs and Healthcare, the author employed mixed Machine Learning-Enabled Digital Twins of Cancer to assist patients [26]. To develop real-time and reliable digital twins of cancer for diagnostic and therapeutic reasons, the case study focuses on breast cancer (BC), the world’s second most common type of cancer [27]. BlockNet [28] revealed the difficulties encountered in multiscale spatial data processing, a non-mutagenic multidimensional Hash Geocoding approach [29].

Next, research on digital twin design and execution strategy for equipment battle damage test evaluation was assessed, which is significant for the development of digital twin battlefield construction and battle damage assessment [30].

There are a number of important factors in regard to virtual reality (VR) with 3D Modeling and Computer Graphics. In VR, the computer graphics effect and visual realism are frequently trade-offs for real-time and realistic interaction [31]. The work explained the overall flow of the VR software development process, as well as the most contemporary 3D modeling and texture painting techniques utilized in VR. We will also discuss some of the most important 3D modeling and computer graphics approaches that can be used and focus on some of the primary 3D modeling and computer graphics techniques that can be used in VR to improve interaction speed [32].

Augmented reality (AR) applications have been further developed by using 3D and 4D modeling. Professional surveying and computer graphics tools, such as Leica’s Cyclone, Trimble’s SketchUp, and Autodesk 3ds Max, are used for 3D and 4D modeling [33]. The texture of historical images is used to provide the fourth dimension, time, to modern 3D creations. After homogenizing all 3D models in Autodesk 3ds Max, they are exported to the game engine Unity, where they are used to create the reference surface and, finally, the 3D urban model. The storyboard provides the programmer with an outline of which features and functionalities must be fulfilled [34].

Recent research has focused on 3D Reconstructed from 3D point clouds (surface) [35]. Deep neural networks, “Neural Implicit Functions,” have been trained to learn Signed Distance Functions (SDFs) or occupancies, and then the marching cubes algorithm is used to extract a polygon mesh of a continuous iso-surface from a discrete scalar field [36]. Earlier approaches embedded the shape into a global latent code using an encoder or an optimization-based algorithm, and then utilized a decoder to recreate the shape [37]. Some approaches proposed using more latent codes to capture local shapes prior to generating more precise geometry [38]. To complete this, the point cloud is first divided into uniform grids or local patches, and then a neural network is utilized to extract latent coding for each grid/patch.

Unsigned Distance Functions (UDFs) are capable of extracting surfaces directly from gradients. Some research uses UDFs as a normal estimation or semantic segmentation. Nevertheless, due to the noncontinuous nature of point clouds, these approaches require ground truth distance values or even large-scale meshes during training, and it is difficult to obtain smooth distance fields near the surface [39].

However, it is more difficult to learn implicit functions straight from raw point clouds without ground truth signed/unsigned distance or occupancy data. Current work presents agnostic learning for learning SDFs from raw data using a specifically designed network initialization [40], gradient constraints [41], or geometric regularization [42]. Neural-Pull [43] learns SDFs in a novel way by drawing neighboring space onto the surface. However, because they are designed to learn signed distances, they are unable to recreate complicated forms with open or multi-layer surfaces. Our technique, on the other hand, can learn a continuous unsigned distance function from point clouds, allowing us to recreate surfaces for forms and sceneries of any typology. We also compare previous studies with our developed framework, as shown in Table 1. Thus, the major contributions of our proposed method can be highlighted as follows:

Table 1.

The comparative summary of recent studies and our proposed method.

- We proposed an end-to-end 2D-to-3D framework including 2D-to-3D point cloud via PIFu processing and regenerating 3D-point cloud-to-3D fully regenerated instances via the CAP–UDF model, in which these two steps are integrated as one mainstream task;

- Comparisons from multiple perspectives were conducted using one benchmark dataset and one actual implementation for a garment task;

- Garment visualization has been further demonstrated in a virtual world after mapping from the regenerating task.

3. Methodology

In this section, the proposed method is divided into three subsections. Firstly, the reconstruction phase includes a 2D-to-point cloud Pixel-Aligned Implicit Function (PIFu) and point cloud-to-3D Unsigned Distance Function-based and Consistency-Aware Progression (CAP–DF). Secondly, the Visualization Phase describes Physical-to-Virtual Transformative Topology (P2V). Thirdly, we show our designed End-to-End Framework.

- A.

- Fundamental: Deep-Learning in regard to 3D Deep Reconstruction and Cloud Computing Infrastructure

Deep learning focuses on the development of neural networks and has been used in many areas, such as classification [45], segmentation [46], and object detection [47]. The field of transitional learning is growing exponentially, whether it be in transfer learning, using the virtual world to describe the real world [48], model compression [49], or deep generative modeling [50], which is the focus of this work. In general, for image regeneration, a regular generative model, such as a generative adversarial network (GAN) or a variational autoencoder (VAE), is used to capture the underlying distribution of the training data. Once trained, the model can generate new samples that exhibit similar characteristics to the original dataset. One of the methods that we find interesting is the unsigned distance field (UDF). Basically, UDF, which is represented as voxel grids or 3D textures where each voxel or texel stores the distance value, is a representation commonly used in computer graphics and geometric modeling to describe shapes or objects in three-dimensional space to render new objects. It represents surfaces as voxel grids or 3D textures, where each voxel or texel stores the distance value. Thus, the existing generative model should be capable of learning the underlying distribution of UDFs and generating from random input to yield new UDF samples.

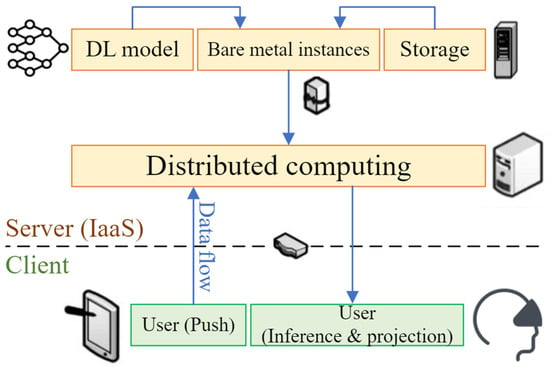

In general, three kinds of infrastructure have been presented via software, platform, and infrastructure as a service (SaaS, PaaS, and IaaS, respectively) to access transparency, scalability, intelligent monitoring, and security, which is significant for on-cloud implementation. Thus, cloud computing is used in our framework to enable scalable and on-demand access to computing resources with its demanding computational requirements by the computation of a deep-learning approach, as shown in Figure 1. The basis is a type of calculation, whose framework involves machine-to-machine collaboration, whether in storage or distributed computing, to drive computation from the bottom up based on client data and display visual results to the client from the top down. The cloud computing infrastructure is presented in Figure 1.

Figure 1.

The cloud computing infrastructure as employed in the exemplary learning system.

- B.

- Reconstruction phase: 2D-to-point cloud Pixel-Aligned Implicit Function (PIFu) and point cloud-to-3D Unsigned Distance Function-based Consistency-Aware Progression (CAP-UDF).

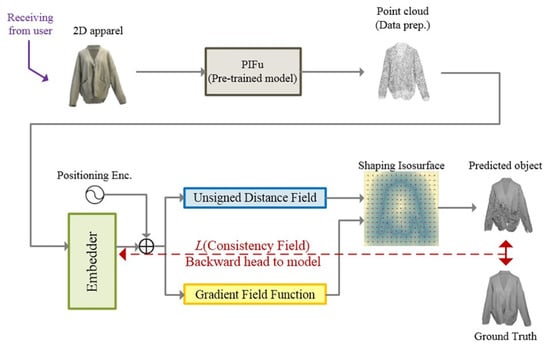

Initially, the two-dimensional data were captured from an external environment and were shaped as a point cloud through the Pixel-Aligned Implicit Function (PIFu) [51], which pins and anchors the point cloud as a three-dimensional point cloud object. Other existing studies rely on majority pinning and anchoring or use field aids. This causes a reduction in training time and wastes training resources. On the other hand, some studies present an approach to extract surfaces directly by using the gradient field directly to improve model learning and object reconstruction, as shown in Figure 2. CAP–UDF [52] is an end-to-end remarkable model in a one-stage batch run for reconstructing 3D objects where there are two main parts of the learning point for cloud surface regeneration, i.e., UDFs-based cloud rearrangement and consistency-aware gradient fields .

Figure 2.

Two principles (Gradient Field and Unsigned Distance Field; UDF) of surface reconstruction under the three stages of the CAP–UDF approach.

Whereas 3D query data are processed to query pre-processed data, the model over neural processing will shape the unsigned distance of the query information and move the query vector against the direction of the gradient in the query field. The second part is the shape metrics under the field consistency loss over Chamfer distance, as shown in Equation (1). As the optimization objective, the distance between the moved queries and the target point cloud is determined. This relies on consistency-aware field learning with a Neural-pulling model that leverages a mean squared error (MSE) to further diminish the distance between the moved query and the nearest neighbor of the query in the point cloud. After the model converged in the present iteration, the model was updated for the point cloud with an exclusive subset of queries in advance to train more local information in the following stage. Eventually, the gradient field is implemented for the learned UDFs as before to model the interaction between various 3D grids and explicitly shape a three-dimensional analog of an isoline to become a surface. Finally, we can evaluate the completion of the garment-regenerated objects through Equation (2) for the L2 normalization Chamfer distance measurement.

- C.

- Visualization Phase: Physical-to-Virtual Transformative Topology (P2V)

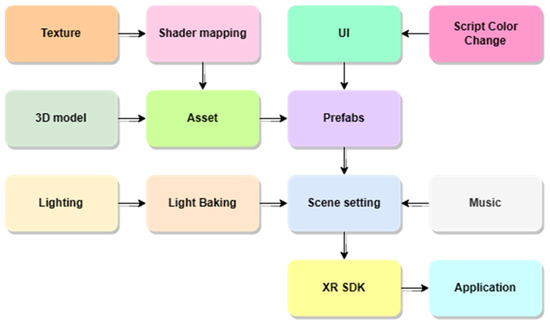

In Figure 3, the topology of mapping instances into the virtual world is shown, and we are able to see the 3D-regenerated object seamlessly. The software APIs, which act as an end-to-end Unity process, render objects and directly import them into the virtual space as object-virtualized triable. Generally, a three-dimensional item must be converted into an asset before it can be placed in the scene via the pre-fabrication process. We then selected the default choice for all music and lighting in the scenario.

Figure 3.

The topology of the mapping instance into the virtual world.

Finally, the XR SDK package can be turned into an application. Script Color is part of SDK as an alternative method to add skin color into an object immediately after the pre-fabrication process after completing the 3D-reconstruction process via the aforementioned CAP–UDF framework.

- D.

- Designed End-to-End Framework

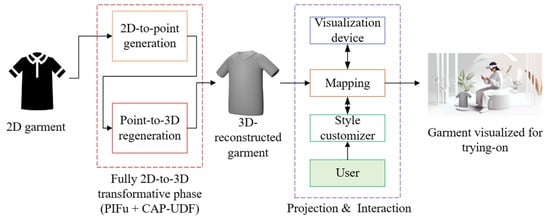

Our framework is established by an end-to-end service by capturing data on mobile devices to visualize in a virtual reality world. By combining 2D-to-point cloud instance generation via PIFu with point-to-3D object reconstruction over CAP–UDF, it becomes one mainstream pipeline regarded as the 2D-to-3D transformative phase. Moreover, we have also established the process of mapping and projection through visualization devices with multiple factors in control, such as real-time style preferences from mapped objects, with which users freely customize the objects, as illustrated in Figure 4. Additionally, the procedure is explained in Algorithm 1. The framework consists of two parties which are the cloud server and the client side. Firstly, instance I from the client side will be sent from mobile device M, pushing into the server to proceed with computing via distributed processing. When there is an event of processing for generating a 3D point cloud, it will first go through the PIFu function and then apply CAP–UDF for reconstructing fully formed 3D garment objects, which marks the completion of processing. After that, the newly regenerated 3D instances will be sent back from the server to the client side to be projected in a virtual world via the transformative function of P2V. Finally, the instances are ready for visualization and verification instantly through the use of virtual field-generating equipment.

| Algorithm 1: An end-to-end visualization for virtual trying-on. |

| Notation I: input or pre-processed data Ntrain: Num_trainings to generate 3D entities M: mobile device Φ: 3D-reconstruction model B: virtual reality equipment U(Phy/Vir); UP/UV: physical/virtual world via user interaction perspective S: server #---User side— #1 to transmit data from mobile device to server side S ← UP ⸰ M(I) #---Server side— #2 to generate 2D instances so as to be 3D-reconstruction entities OnEvent Reconstruction Phase do def pifu_pretrained: point_cloud_gen = PIFu_model(pretrained=True) def cap_udf_training: for n in training_loop: | Compute UDFs-based cloud rearrangement f ← ∇f | Compute Consistency-aware surface reconstruction L | Backward Total L objective function to model Φ End for Execute IPC = point_cloud_gen (I) While Iteration1 < Ntrain-raw3D: Iteration1++ Update ΦTr ← cap_udf_training(Φ(IPC_train)) End while Reconstruct I3D = ΦTr(IPC_test) Return Reconstructed 3D Objects I3D End OnEvent #---User side— #3 to transmit reconstructed instance and render over virtual world with user interaction UP ← S(I3D) OnEvent Projection Phase do import I3D if I3D. is_available() then Execute mapping entities into virtual space I3D-VR = P2V(I3D); Perform I3D-VR projecting over B virtual reality device with U interaction UV ⸰ B(I3D-VR); end if End OnEvent |

| Ensure Garment(s) for virtual try-on via VR devices |

Figure 4.

The end-to-end visualization pipelines.

4. Results

In this study, we have divided the experimental results into four parts: A. Datasets, B. System Configuration and Implementation details, C. Experiment findings under benchmark evaluation for the ShapeNet cars dataset and real-implementation garment reconstructions for the deep Fashion 3D dataset, and D. Object-Reconstructed Mapping and the Projection phase.

- A.

- Datasets

Our studies have established tests on the benchmark dataset and real-world implementations on the deployment and mapping into Virtual Reality equipment. The benchmark dataset is brought from ShapeNet cars data available on the original CAP–UDF study [53], which demonstrates the raw point cloud generating to the fully formed rigid reconstructed car model. Further, another implementation dataset in the activity of on-cloud visualization, such as the garment dataset from Deep Fashion 3D [54], which includes 2078 models reconstructed from genuine outfits, divided into 10 categories and 563 apparel examples. We consider pre-processed data for model training and assessment, which follows PIFu‘s data-generating methods [55]. The number of instances and different object classes for all datasets has been tabulated in Table 2. Some examples from both datasets are illustrated in Figure 5.

Table 2.

The number of instances for ShapeNet cars and DeepFashion 3D datasets.

Figure 5.

The exemplary ShapeNet cars and Deep Fashion 3D datasets.

- B.

- System Configuration and Implementation

We set up the batch training and testing systems on a system that included a CPU i7-10870H with 32 GB installed physical memory and a GPU Nvidia RTX 3080 Laptop with 8 GB dedicated memory, which served as our local cloud server. The software package that is compatible with our system includes a parallel computing platform (CUDA) version 11.5 and deep-learning acceleration (cuDNN) version 8.2., and we ran batch training on the deep-learning framework with PyTorch version 1.11, Torchvision 0.13.0, Numpy 1.23.5, and Chamferdist 1.0.0.

When pre-processing data, we considered PIFu pre-processed data for the garment dataset with 3000 anchor points. According to our training procedure, we set up benchmarks with three approaches: raw input without a regularization term, Anchor-UDF, and CAP–UDF for recruiting methods that produce flawlessly consistent results or completeness of the result object. During training, we used the Adam optimizer with a 0.001 starting learning rate and a cosine learning rate schedule with 1000 warm-up steps. We set for full training iterations at 80,000 steps for all approaches for the fairness of examination. After the previous level converged, we began training for the following step. With mutual understanding or fair findings, we measured the discrepancy or completeness of the 3D-regenerated work to ground truth with the Chamferdist measure, as previously mentioned, which is an evaluation metric for two-point clouds. It considers the distance between each point. CD selects the nearest point in the other point set to each point in the cloud and adds the square of distance up to test the performance of the workpiece. We measured both L1 and L2 normalization distances as seen in Equation (1) from the training phase and Equation (2) from the testing phase, respectively, which represents how to measure the L1 Chamfer distance. Moreover, we also evaluated the traditional metrics like F-score with thresholds of 0.005 and 0.01.

- C.

- Experimental Results

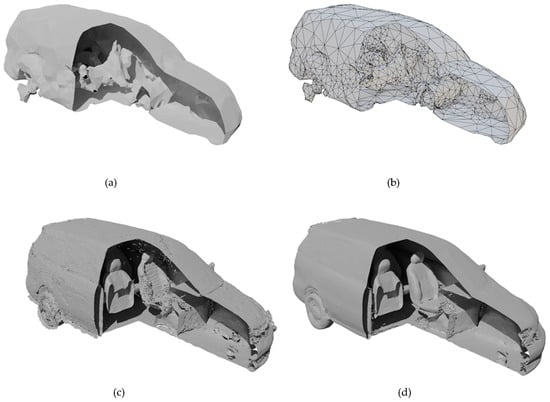

The test results shown in Figure 6, and the Chamfer distance quantitative test results for both L1 and L2 normalization, including the F1-score for two thresholds as tabulated in Table 3, demonstrate that CAP–UDF is able to smoothly reconstruct the 3D point to mesh in both exterior and interior objects with 0.389 of L1-norm Chamfer distances from point cloud-to-mesh generation batch run and L2-norm Chamfer distances from mesh evaluation whereas Anchor-UDF generated a quite unpolished surface on the whole body. Furthermore, the F-score, which is a metric used to evaluate performance, was also boosted to 94.7% and 99.8% for the 0.005 and 0.01-based thresholds, respectively.

Figure 6.

Visual comparison of reconstructed objects from three perspectives over ShapeNet cars. (a) Raw input; (b) Raw input (Wireframe); (c) Anchor-UDF; and (d) CAP–UDF.

Table 3.

Evaluation metrics from three perspectives over ShapeNet cars.

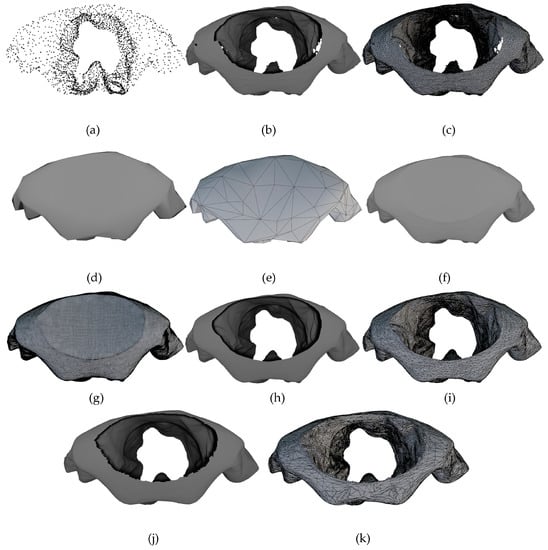

The samples shown above in Figure 7 and Figure 8 show the visual comparison of reconstructed objects from three perspectives over Deep Fashion 3D (PIFu pre-processed data). Each sample is represented as (a) 2D original garment, (b) ground truth, (c) ground truth (wireframe), (d) Pixel2Mesh, (e) Pixel2Mesh (wireframe), (f) PIFu, (g) PIFu (wireframe), (h) Anchor-UDF, (i) Anchor-UDF (wireframe), (j) CAP-UDF, and (k) CAP–UDF (wireframe), respectively.

Figure 7.

Visual comparison (Front view) of reconstructed objects from three perspectives over Deep Fashion 3D (PIFu pre-processed data) from (a–k) sample. The samples shown above in Figure 7 show the visual comparison of reconstructed objects from three perspectives over Deep Fashion 3D (PIFu pre-processed data). Each sample is represented as (a) 2D original garment, (b) ground truth, (c) ground truth (wireframe), (d) Pixel2Mesh, (e) Pixel2Mesh (wireframe), (f) PIFu, (g) PIFu (wireframe), (h) Anchor-UDF, (i) Anchor-UDF (wireframe), (j) CAP–UDF, and (k) CAP–UDF (wireframe), respectively.

Figure 8.

Visual comparison (Top view) of reconstructed objects from three perspectives over Deep Fashion 3D (PIFu pre-processed data) from (a–k) sample. Each sample represented is shown in Figure 8 as (a) point cloud PIFu-generating garment, (b) ground truth, (c) ground truth (wireframe), (d) Pixel2Mesh, (e) Pix-el2Mesh (wireframe), (f) PIFu, (g) PIFu (wireframe), (h) Anchor-UDF, (i) Anchor-UDF (wireframe), (j) CAP–UDF, and (k) CAP–UDF (wireframe).

As our purpose is for mobile visualization in actual implementation, we established the visual benchmark of garment data in the same L1- and L2-norm Chamfer distance measures. The quantitative results, as indicated in Table 4, show the Chamfer distance measured by point cloud during ground truth and predicted objects that CAP–UDF is uppermost improved by down to 0.586 and 0.129 for L1 and L2 normalization, respectively. In addition, the illustration results shown in Table 4 prove that the smoothness of the point-to-mesh regenerated object connection is much better, although some points are missing when compared to previous studies. Further investigation is needed to obtain in-depth information about whether it is on the inside or outside surfaces.

Table 4.

Evaluation metrics from three perspectives over Deep Fashion 3D (PIFu pre-processed data).

- D.

- Analysis

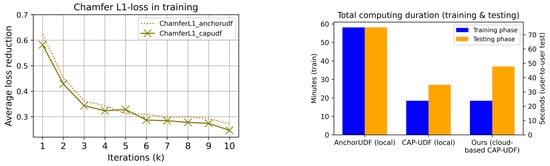

We further analyzed the improvement capability and speed of our assessments while working on both cloud and local computing for both assessment types, as illustrated in Figure 9. For this evaluation, we collected the results starting from the beginning of training up to 10,000 iterations and assessed the improvement every 1000 iterations. The observations on the CAP–UDF method are shown below.

Figure 9.

The comparison of Chamfer L1-loss and time consumption of training and testing time between local and cloud-based computing.

There was a slightly better improvement compared to the AnchorUDF method during this period at 5000 iterations. However, at the end of each evaluation period, it was evident that our adopted method increased the likelihood of creating objects more effectively.

In addition to the assessment of improvement, we also considered the training and testing time. The estimation is based on a batch size of 5000 epochs. We observed that our learning time frame was similar to that of the standard CAP–UDF method, as both rely on training resources. The main difference lies in the required AnchorUDF, which affects the process of creating an object and expanding its resolution. This process takes twice as long to complete compared to the standard CAP–UDF method, whereas for the evaluation of deployment to the client user, we observed that it took more than 10 s. This increase in time was primarily due to transportation costs, which included uploading files and transferring objects to the projector, subject to the mode of transportation. However, the evaluation process itself takes less time compared to the evaluation of Anchor-UDF. This is because pinning the object requires a higher resolution for object translation, which contributes to faster evaluation times. We can witness this capability by employing cloud computing, and we can thus achieve evaluation speeds that are comparable to the local computing of CAP–UDF and even surpass the evaluation speed of the local Anchor-UDF.

- E.

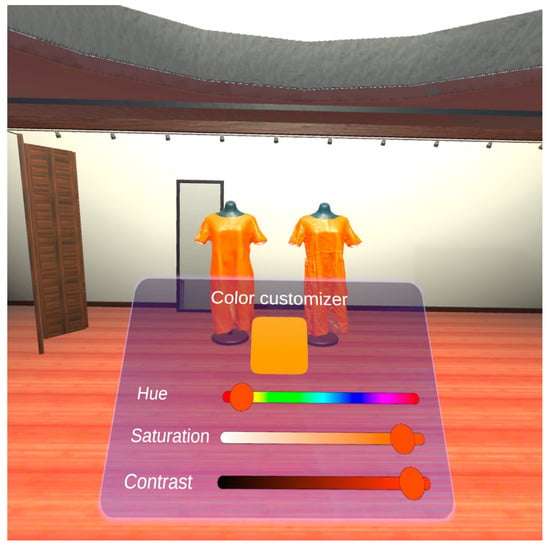

- Instance-Reconstructed Mapping and Projection

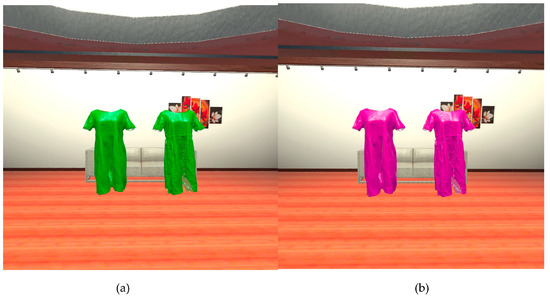

To demonstrate in a virtual space with a client-side device, we have configured mapping entities onto Oculus/Meta Quest 2 VR glasses with 64 GB of memory during the virtual-space object-mapping phase. While in the projection phase, we can access real-time customization, such as color preference for the objects, as illustrated in Figure 10.

Figure 10.

The color customizing after mapping and projecting inner devices.

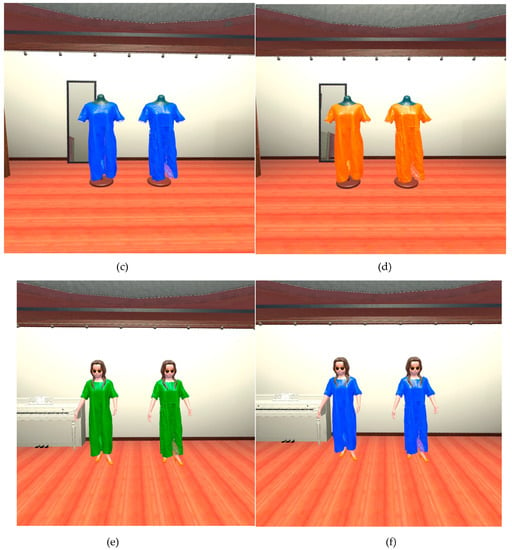

The findings revealed the pre-rendering and post-rendering of the final shapes, which are shown in Figure 11. Visualization demonstration is made possible with reality devices that allow people to access the surrounding objects. Thus, people can view and detach the puppet within to see the details of the object’s interior. The Anchor-UDF approach is used to create objects with genuine detail on the inside and outside.

Figure 11.

Comparative visualization of pure-projected garment and prompt-customized garment on a virtual reality device of ground truth and CAP–UDF-regenerating objects through perspectives in multiple environments, such as (a,b) object viewing, (c,d) virtual trying-on mannequin, and (e,f) virtual trying-on avatar.

In comparative demonstrations, we showed objects created in all three environments, as shown above. For example, when showing clothing objects, displaying the garment on a mannequin as well as deploying it in real-world scenarios and avatars by testing the similarity between ground truth and newly created shirts based on CAP–UDF, there is nice and smooth adaptability.

5. Conclusions

Recently, the garment trying-on industry has grown exponentially along with technological trends of visualization through devices. However, the inevitable problem is that the deployment of 2D captures to 3D visualization projection over the virtual world immediately remains a dilemma due to the current lack of framework availability. To this end, we have provided an end-to-end model establishment for the immediate adjustment of trying-on technology. Through 2D-to-point cloud conversion with PIFu and model remodeling with CAP–UDF, it achieves minimal object heterogeneity and is also ready to project into the virtual world.

Nevertheless, the limitation of this evaluation is its implementation on virtual reality devices; for instance, VR glasses in this study make it troublesome to adapt to actual people in real-world testing, where AR installations are more fluidly near to items. Furthermore, the developed material resolution is currently incomplete, which may result in certain items not being as attractive as expected.

In the near future, our novel study envisages fashion design by merging demand from many sources and assembling garments using the Neural Sewing Machine (NSW) perspective. Virtual entities may be able to be embedded into the real world immediately, such as AR technology. Another option is that the proposed VR shopping application can be extended to other domains beyond fashion, such as furniture, and automobiles. By generating 3D models from photographs of these products and incorporating them into a VR environment, users can visualize and interact with the products in a realistic and immersive way.

Author Contributions

Conceptualization, S.D. and J.-H.W.; methodology, J.P., V.P. and S.D.; software, S.D. and J.P.; formal analysis, J.P., V.P. and S.D.; investigation, V.P.; resources, S.D. and J.P.; data curation, J.P., V.P. and S.D.; writing—original draft preparation, J.P., V.P. and S.D.; writing—review and editing, J.P., V.P., J.-H.W. and S.D.; visualization, J.P., V.P. and S.D.; supervision, J.-H.W.; project administration V.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Ministry of Science and Technology, Taiwan, under grant numbers MOST-111-2221-E-027-121 and MOST-111-2221-E-027-131.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data in this work were obtained with the official GitHub of Anchor-UDF and CAP–UDF site for batch training.

Acknowledgments

The authors would like to thank Web Information Retrieval Lab, National Taipei University of Technology, Taipei, Taiwan, for providing equipment and software for this work, and also for providing funding for this study to be successful.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Grieves, M.; Vickers, J. Digital twin: Mitigating unpredictable, undesirable emergent behavior in complex systems. In Transdisciplinary Perspectives on Complex Systems: New Findings and Approaches; Springer International Publishing: Berlin/Heidelberg, Germany, 2017; pp. 85–113. [Google Scholar] [CrossRef]

- Van Der Horn, E.; Mahadevan, S. Digital Twin: Generalization, characterization and implementation. Decis. Support Syst. 2021, 145, 113524. [Google Scholar] [CrossRef]

- Hamzaoui, M.A.; Julien, N. Social Cyber-Physical System\s and Digital Twins Networks: A perspective about the future digital twin ecosystems. IFAC-PapersOnLine 2022, 55, 31–36. [Google Scholar] [CrossRef]

- Liu, M.; Fang, S.; Dong, H.; Xu, C. Review of digital twin about concepts, technologies, and industrial applications. J. Manuf. Syst. 2021, 58, 346–361. [Google Scholar] [CrossRef]

- Collins, J.; Goel, S.; Deng, K.; Luthra, A.; Xu, L.; Gundogdu, E.; Zhang, X.; Vicente, T.F.Y.; Dideriksen, T.; Arora, H.; et al. Abo: Dataset and benchmarks for real-world 3d object understanding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 21126–21136. [Google Scholar]

- Jurado, J.M.; Padrón, E.J.; Jiménez, J.R.; Ortega, L. An out-of-core method for GPU image mapping on large 3D scenarios of the real world. Future Gener. Comput. Syst. 2022, 134, 66–77. [Google Scholar] [CrossRef]

- Špelic, I. The current status on 3D scanning and CAD/CAM applications in textile research. Int. J. Cloth. Sci. Technol. 2020, 32, 891–907. [Google Scholar] [CrossRef]

- Helle, R.H.; Lemu, H.G. A case study on use of 3D scanning for reverse engineering and quality control. Mater. Today Proc. 2021, 45, 5255–5262. [Google Scholar] [CrossRef]

- Son, K.; Lee, K.B. Effect of tooth types on the accuracy of dental 3d scanners: An in vitro study. Materials 2020, 13, 1744. [Google Scholar] [CrossRef]

- Guo, Y.; Wang, H.; Hu, Q.; Liu, H.; Liu, L.; Bennamoun, M. Deep learning for 3d point clouds: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 4338–4364. [Google Scholar] [CrossRef]

- Chibane, J.; Pons-Moll, G. Neural unsigned distance fields for implicit function learning. Adv. Neural. Inf. Process Syst. 2020, 33, 21638–21652. [Google Scholar] [CrossRef]

- Venkatesh, R.; Karmali, T.; Sharma, S.; Ghosh, A.; Babu, R.V.; Jeni, L.A.; Singh, M. Deep implicit surface point prediction networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 12653–12662. [Google Scholar]

- Zhao, F.; Wang, W.; Liao, S.; Shao, L. Learning anchored unsigned distance functions with gradient direction alignment for single-view garment reconstruction. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 12674–12683. [Google Scholar]

- Alzubaidi, L.; Zhang, J.; Humaidi, A.J.; Al-Dujaili, A.; Duan, Y.; Al-Shamma, O.; Farhan, L. Review of deep learning: Concepts, CNN architectures, challenges, applications, future directions. J. Big Data 2021, 8, 53. [Google Scholar] [CrossRef]

- Phanichraksaphong, V.; Tsai, W.H. Automatic Assessment of Piano Performances Using Timbre and Pitch Features. Electronics 2023, 12, 1791. [Google Scholar] [CrossRef]

- Liu, J.; Ji, P.; Bansal, N.; Cai, C.; Yan, Q.; Huang, X.; Xu, Y. Planemvs: 3d plane reconstruction from multi-view stereo. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 8665–8675. [Google Scholar]

- Cernica, D.; Benedek, I.; Polexa, S.; Tolescu, C.; Benedek, T. 3D Printing—A Cutting Edge Technology for Treating Post-Infarction Patients. Life 2021, 11, 910. [Google Scholar] [CrossRef]

- Barricelli, B.R.; Casiraghi, E.; Gliozzo, J.; Petrini, A.; Valtolina, S. Human digital twin for fitness management. IEEE Access 2020, 8, 26637–26664. [Google Scholar] [CrossRef]

- Shengli, W. Is human digital twin possible? Comput. Methods Programs Biomed. 2021, 1, 100014. [Google Scholar] [CrossRef]

- Li, X.; Cao, J.; Liu, Z.; Luo, X. Sustainable business model based on digital twin platform network: The inspiration from Haier’s case study in China. Sustainability 2021, 12, 936. [Google Scholar] [CrossRef]

- Al-Ali, A.R.; Gupta, R.; Zaman Batool, T.; Landolsi, T.; Aloul, F.; Al Nabulsi, A. Digital twin conceptual model within the context of internet of things. Future Internet 2020, 12, 163. [Google Scholar] [CrossRef]

- Štroner, M.; Křemen, T.; Urban, R. Progressive Dilution of Point Clouds Considering the Local Relief for Creation and Storage of Digital Twins of Cultural Heritage. Appl. Sci. 2022, 12, 11540. [Google Scholar] [CrossRef]

- Niccolucci, F.; Felicetti, A.; Hermon, S. Populating the Data Space for Cultural Heritage with Heritage Digital Twins. Data 2022, 7, 105. [Google Scholar] [CrossRef]

- Lv, Z.; Shang, W.L.; Guizani, M. Impact of Digital Twins and Metaverse on Cities: History, Current Situation, and Application Perspectives. Appl. Sci. 2022, 12, 12820. [Google Scholar] [CrossRef]

- Ashraf, S. A proactive role of IoT devices in building smart cities. Internet Things Cyber-Phys. Syst. 2021, 1, 8–13. [Google Scholar] [CrossRef]

- Hsu, C.H.; Chen, X.; Lin, W.; Jiang, C.; Zhang, Y.; Hao, Z.; Chung, Y.C. Effective multiple cancer disease diagnosis frameworks for improved healthcare using machine learning. Measurement 2021, 175, 109145. [Google Scholar] [CrossRef]

- Jamil, D.; Palaniappan, S.; Lokman, A.; Naseem, M.; Zia, S. Diagnosis of Gastric Cancer Using Machine Learning Techniques in Healthcare Sector: A Survey. Informatica 2022, 45, 7. [Google Scholar] [CrossRef]

- Meraghni, S.; Benaggoune, K.; Al Masry, Z.; Terrissa, L.S.; Devalland, C.; Zerhouni, N. Towards digital twins driven breast cancer detection. In Intelligent Computing: Proceedings of the 2021 Computing Conference, 1st ed.; Springer International Publishing: Berlin/Heidelberg, Germany, 2021. [Google Scholar]

- Lv, Z.; Qiao, L.; Li, Y.; Yuan, Y.; Wang, F.Y. Blocknet: Beyond reliable spatial digital twins to parallel metaverse. Patterns 2022, 3, 100468. [Google Scholar] [CrossRef]

- Song, M.; Shi, Q.; Hu, Q.; You, Z.; Chen, L. On the Architecture and Key Technology for Digital Twin Oriented to Equipment Battle Damage Test Assessment. Electronics 2020, 12, 128. [Google Scholar] [CrossRef]

- Tang, Y.M.; Au, K.M.; Lau, H.C.; Ho, G.T.; Wu, C.H. Evaluating the effectiveness of learning design with mixed reality (MR) in higher education. Virtual Real. 2020, 24, 797–807. [Google Scholar] [CrossRef]

- Livesu, M.; Ellero, S.; Martínez, J.; Lefebvre, S.; Attene, M. From 3D models to 3D prints: An overview of the processing pipeline. Comput. Graph. Forum 2017, 36, 537–564. [Google Scholar] [CrossRef]

- Fritsch, D.; Klein, M. 3D and 4D modeling for AR and VR app developments. In Proceedings of the 23rd International Conference on Virtual System & Multimedia (VSMM), Dublin, Ireland, 31 October–4 November 2017; pp. 1–8. [Google Scholar]

- Garcia-Dorado, I.; Aliaga, D.G.; Bhalachandran, S.; Schmid, P.; Niyogi, D. Fast weather simulation for inverse procedural design of 3d urban models. ACM Trans. Graph. (TOG) 2017, 36, 1–19. [Google Scholar] [CrossRef]

- Kazhdan, M.; Hoppe, H. Screened poisson surface reconstruction. ACM Trans. Graph. (TOG) 2013, 32, 1–13. [Google Scholar] [CrossRef]

- Chabra, R.; Lenssen, J.E.; Ilg, E.; Schmidt, T.; Straub, J.; Lovegrove, S.; Newcombe, R. Deep local shapes: Learning local sdf priors for detailed 3d reconstruction. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; pp. 608–625. [Google Scholar]

- Ding, C.; Zhao, M.; Lin, J.; Jiao, J.; Liang, K. Sparsity-based algorithm for condition assessment of rotating machinery using internal encoder data. IEEE Trans. Ind. Electron. 2019, 67, 7982–7993. [Google Scholar] [CrossRef]

- Li, T.; Wen, X.; Liu, Y.S.; Su, H.; Han, Z. Learning deep implicit functions for 3D shapes with dynamic code clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 21–24 June 2022; pp. 12840–12850. [Google Scholar]

- Guillard, B.; Stella, F.; Fua, P. Meshudf: Fast and differentiable meshing of unsigned distance field networks. In Proceedings of the Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, 23–27 October 2022; pp. 576–592. [Google Scholar]

- Atzmon, M.; Lipman, Y. Sal: Sign agnostic learning of shapes from raw data. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 2565–2574. [Google Scholar]

- Atzmon, M.; Lipman, Y. Sald: Sign agnostic learning with derivatives. arXiv 2020, arXiv:2006.05400. [Google Scholar]

- Gropp, A.; Yariv, L.; Haim, N.; Atzmon, M.; Lipman, Y. Implicit geometric regularization for learning shapes. arXiv 2020, arXiv:2002.10099. [Google Scholar]

- Ma, B.; Han, Z.; Liu, Y.S.; Zwicker, M. Neural-pull: Learning signed distance functions from point clouds by learning to pull space onto surfaces. arXiv 2020, arXiv:2011.13495. [Google Scholar]

- Tang, Y.M.; Ho, H.L. 3D modeling and computer graphics in virtual reality. In Mixed Reality and Three-Dimensional Computer Graphics; IntechOpen: London, UK, 2020. [Google Scholar] [CrossRef]

- Van Holland, L.; Stotko, P.; Krumpen, S.; Klein, R.; Weinmann, M. Efficient 3D Reconstruction, Streaming and Visualization of Static and Dynamic Scene Parts for Multi-client Live-telepresence in Large-scale Environments. arXiv 2020, arXiv:2211.14310. [Google Scholar]

- Saito, S.; Huang, Z.; Natsume, R.; Morishima, S.; Kanazawa, A.; Li, H. Pifu: Pixel-aligned implicit function for high-resolution clothed human digitization. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 2304–2314. [Google Scholar]

- Yang, X.; Lin, G.; Chen, Z.; Zhou, L. Neural Vector Fields: Implicit Representation by Explicit Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 16727–16738. [Google Scholar]

- Hong, F.; Chen, Z.; Lan, Y.; Pan, L.; Liu, Z. Eva3d: Compositional 3d human generation from 2d image collections. arXiv 2022, arXiv:2210.04888. [Google Scholar]

- Dong, Z.; Xu, K.; Duan, Z.; Bao, H.; Xu, W.; Lau, R. Geometry-aware Two-scale PIFu Representation for Human Reconstruction. Adv. Neural Inf. Process. Syst. 2022, 35, 31130–31144. [Google Scholar]

- Linse, C.; Alshazly, H.; Martinetz, T. A walk in the black-box: 3D visualization of large neural networks in virtual reality. Neural Comput. Appl. 2022, 34, 21237–21252. [Google Scholar] [CrossRef]

- Klingenberg, S.; Fischer, R.; Zettler, I.; Makransky, G. Facilitating learning in immersive virtual reality: Segmentation, summarizing, both or none? J. Comput. Assist. Learn. 2023, 39, 218–230. [Google Scholar] [CrossRef]

- Ghasemi, Y.; Jeong, H.; Choi, S.H.; Park, K.B.; Lee, J.Y. Deep learning-based object detection in augmented reality: A systematic review. Comput. Ind. 2022, 139, 103661. [Google Scholar] [CrossRef]

- Xu, Y.; Sun, Y.; Liu, X.; Zheng, Y. A digital-twin-assisted fault diagnosis using deep transfer learning. IEEE Access 2019, 7, 19990–19999. [Google Scholar] [CrossRef]

- Wei, Y.; Wei, Z.; Rao, Y.; Li, J.; Zhou, J.; Lu, J. Lidar distillation: Bridging the beam-induced domain gap for 3d object detection. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2022; Springer Nature: Cham, Switzerland, 2022; pp. 179–195. [Google Scholar]

- Regenwetter, L.; Nobari, A.H.; Ahmed, F. Deep generative models in engineering design: A review. J. Mech. Des. 2022, 144, 071704. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).