Abstract

The number of algorithms for embedding watermarks into digital images is growing rapidly, for example for image copyright protection. However, when a watermarking algorithm is applied, preserving the image’s quality is of utmost importance; in medical images, for example, improper embedding of the watermark could change the patient’s diagnosis. At the same time, the owners of digital images distributed over the Internet must also be protected. In this work, an imperceptible, robust, secure, hybrid watermarking algorithm is presented for copyright protection. It is based on the Hermite Transform (HT) and the Discrete Cosine Transform (DCT) as a spatial–frequency representation of a grayscale image. It also uses a block-based strategy and a perceptibility analysis of the best embedding regions, inspired by the Human Vision System (HVS), to make the watermark imperceptible, together with a Singular-Value Decomposition (SVD) approach that improves robustness against attacks. In addition, the proposed method can embed two watermarks: a digital binary image (LOGO) and text-format information about the owner and the technical data of the original image (MetaData). To secure both watermarks, the proposed method uses the Jigsaw Transform (JST) and the Elementary Cellular Automaton (ECA) to encrypt the image LOGO, and a random sequence generator with the XOR operation to encrypt the image MetaData. The proposed method was tested on a public dataset of 49 grayscale images to assess the effectiveness of the watermark embedding and extraction procedures. Furthermore, the proposed watermarking algorithm was evaluated under several image-processing and geometric attacks to demonstrate its robustness to the majority of intentional or unintentional attacks, and it was compared with several state-of-the-art techniques.
The proposed method obtained average values of PSNR = 40.2051 dB, NCC = 0.9987, SSIM = 0.9999, and MSSIM = 0.9994 for the watermarked image. In the case of the extraction of the LOGO, the proposal gave MSE = 0, PSNR ≫ 60 dB, NCC = 1, SSIM = 1, and MSSIM = 1, whereas, for the image MetaData extracted, it gave BER = 0% and . Finally, the proposed encryption method presented a large key space () for the LOGO image.

1. Introduction

Digital watermarking has become a way to embed information into an image and protect it from unauthorized access and manipulation. Depending on the type of digital content (video, image, audio, or text) and the application, algorithms can be developed for authentication, material security, trademark protection, and the tracking of digital content. The objective is to insert a watermark (digital image or text) into the digital content. There are important requirements to take into account when a watermarking algorithm is designed: imperceptibility, robustness, security, capacity, and computational cost. It is difficult to design an algorithm that satisfies all of these requirements, because improving robustness, i.e., the ability to withstand image distortions, may compromise the imperceptibility of the watermark. For this reason, different techniques have been developed to improve robustness without compromising the original content. The state-of-the-art suggests that these algorithms can be designed in the spatial, transform, or hybrid domain. In the spatial domain, the algorithms alter selected pixels to embed the watermark directly in the intensity values of the image. The advantage of this is low computational complexity; however, the image suffers visible alterations, and the algorithm is not robust against geometric transformations. In the transform domain, the watermark is embedded within specific transform coefficients to ensure enhanced resilience. Furthermore, transforms can be combined to obtain hybrid-domain watermarking, which increases the performance of the watermarking technique.

Therefore, in an image watermarking method, the watermark must be robust and either imperceptible or perceptible, depending on the application. In this paper, we propose a hybrid, robust, and imperceptible watermarking approach using the Hermite Transform (HT), Singular-Value Decomposition (SVD), the Human Vision System (HVS), and the Discrete Cosine Transform (DCT) to protect digital images. As watermarks, we used a digital image LOGO and image MetaData (with information about the original image or the owner), so we inserted two different digital contents into a digital image. The Hermite transform is based on the derivatives of the Gaussian function; it incorporates properties of the human visual system and allows a perfect reconstruction of the image. For additional security, the LOGO is encrypted using the Jigsaw Transform (JST) before insertion. In addition, the indexes needed to decrypt the LOGO are secured using the Elementary Cellular Automaton (ECA), increasing the security of the proposal. The image MetaData are secured using a random sequence generator and the XOR operation. Finally, a Hamming error-correcting code was applied to the image MetaData to reduce the effect of channel distortion.

The rest of the paper is divided as follows: Section 2 presents the related work describing the image watermarking methods using the DCT and SVD techniques and other space–frequency decomposition methods similar to the HT. We describe all the elements used to design the watermark algorithm such as the public dataset, JST, SVD, HT, DCT, HVS, and Elementary Cellular Automata (ECA) in Section 3. Section 4 details the proposed watermarking algorithm for the insertion/extraction of the watermarks. In Section 5, we report the experiments and results obtained in the insertion and extraction stages of the watermarks, including the computational complexity of the algorithm. In addition, we report the robustness analysis of the proposed method against the most-common processing and geometric attacks using the public image datasets, and we compare the algorithm with other methods of the state-of-the-art. Section 6 includes an analysis of the achieved results in this study, along with a comparison to other related works. Finally, Section 7 presents the conclusions and future work.

2. Related Work

There are many methods for watermarking images presented in the literature, and depending on the application, the requirements of the methodologies vary. The algorithms designed for watermarking have advantages and disadvantages. The most-representative work is in the transformation domain. For example, in Mokashi et al. [1], a strategy for watermarking images was introduced, which combines the Discrete Wavelet Transform (DWT), Discrete Cosine Transform, and Singular-Value Decomposition. The watermarks utilized in this approach are the users’ biometrics and their signature. During the embedding process, the biometrics acts as the host image, while the signature serves as the watermark. In contrast, for a second embedding process, the resulting watermark of the first process is embedded into the primary host image. In both embedding processes, the host image undergoes decomposition using the DWT, and the watermark is inserted inside the low-frequency coefficient by means of SVD.

In [2], Dharmika et al. preserved medical records by incorporating them into Magnetic Resonance Imaging (MRI) patient scans. The authors used the Advanced Encryption Standard (AES) to secure the medical records and SVD to compress the MRI scan reports. Then, the DCT was applied to embed the encrypted medical health record over the compressed MRI scan.

Sharma et al. [3] presented a combined approach for watermarking images using a resilient watermark (frequency domain) through the DWT and DCT and a fragile watermark (spatial domain). For the robust watermark, the Fisher–Yates shuffle method was used to scramble the watermark, and the LH and HL sub-bands were used to embed it. For the fragile watermark, a bitwise approach was used, including a halftoning operation in conjunction with the XOR and concatenation operations. In addition, the fragile watermarking method was used to detect and locate the manipulated regions through the XOR operator in the extraction stage.

Nguyen [4] proposed a fragile-watermarking-based approach using the DWT, DCT, and SVD techniques. The watermark was inserted into the low-frequency coefficient of the DWT using the Quantization Index Modulation (QIM) technique, and the feature coefficients were adjusted using the Gram–Schmidt procedure. Besides, a tamper detection process under different attacks was incorporated.

In [5], Li et al. introduced an encryption/watermarking algorithm using the Fractional Fourier Transform (FRFT) in a hybrid domain. The Redistributed Invariant Wavelet Transform (RIDWT) and Discrete Cosine Transform (DCT) were applied to the enlarged host image. The resulting low-frequency and high-frequency components underwent SVD, and the watermark image was subjected to double-encryption using the Arnold Transform (AT). To achieve adaptive embedding, multi-parameter Particle Swarm Optimization (PSO) was utilized.

Alam et al. [6] reported a frequency-domain-based approach using the DWT and DCT and applying a two-level singular-value decomposition and a three-dimensional discrete hyper-chaotic map. The HH sub-band of the DWT was used to incorporate the watermark, which contains some image parameters, and it was encrypted through the Rivest–Shamir–Adleman (RSA), AT, and SHA-1 techniques.

Sharma and Chandrasekaran [7] investigated the robustness of popular image watermarking schemes using combinations of the DCT, DWT, and SVD, as well as their hybrid variations. These approaches were evaluated against traditional image-processing attacks and an adversarial attack utilizing a Deep Convolutional Neural Network (CNN) and an Autoencoder (CAE) technique.

In [8], Garg and Kishore analyzed various watermarking techniques to test robustness, imperceptibility, security, capacity, transparency, computational cost, and the false positive rate. The methods studied were classified according to multiple categories of watermarking: perceptibility (visible and invisible watermark), accessibility (private and public), document type (text, audio, image, video), application (copyright protection, image authentication, fingerprinting, copy and device control, fraud and tamper detection), domain (spatial domain, transform/frequency domain), type of scheme (blind and non-blind), and cover image. The techniques analyzed were tested against several attacks (image-processing, geometric, cryptographic, and protocol attacks) using the most representative evaluation measures, for example the PSNR, NCC, BER, and SSIM.

Zheng and Zhang [9] proposed a DWT-, DCT-, and SVD-based watermarking method to address common watermarking and rotation attacks. The scrambled watermark was inserted into the LL sub-band. In addition, the authors signed the U and V matrices to avoid the false positive problem.

In [10], Kang et al. reported a hybrid watermarking method of grayscale images based on DWT, DCT, and SVD for later embedding the watermark into the LH and HL sub-bands. Multi-dimensional PSO and an intertwining logistic map were used as the optimization algorithms and encryption models for watermarking robustness enhancement.

Taha et al. [11] evaluated two watermarking methods, one based on the DWT and one based on the Lifting Wavelet Transform (LWT), using the same watermark and embedding it into the middle-frequency band. The results showed that, in terms of objective image quality, the LWT method outperformed the DWT method, whereas the DWT watermarking technique exhibited superior resilience against various attacks compared to the LWT approach.

Thanki and Kothari [12] proposed a watermarking technique using human speech signals as the watermark. For this, the watermark’s hybrid coefficients were derived using the DCT and subsequently subjected to SVD. Then, these coefficients were inserted into the coefficients of the host image, which were generated by a DWT followed by a Fast Discrete Curvelet Transform (FDCuT).

In [13], Kumar et al. presented a DWT-, DCT-, and SVD-based watermarking method. In addition, security was accomplished through a Set Partitioning in a Hierarchical Tree (SPIHT) and by the AT.

Zheng et al. [14] proposed a zero-watermarking approach applied to color images using the DWT, DCT, and SVD, taking advantage of the multi-level decomposition of the DWT, the energy concentration of the DCT, and the robustness of SVD. Because all three color channels were used to embed the watermark, it was extracted using a voting strategy.

In [15], Yadav and Goel presented a composite watermarking proposal that involved DWT and DCT analysis and an SVD approach to insert binary watermarks. The approach was image-adaptive: it identified blocks with high entropy to determine where the watermark should be embedded.

Takore et al. [16] reported a hybrid watermarking approach for digital images using LWT and DCT analysis and an SVD technique. Their proposal applied the Canny filter to identify regions with a higher number of edges, which were used to create two sub-images. These sub-images served as the reference points for both the embedding and extracting stages. Moreover, during the marking stage, the method used Multiple Scaling Factors (MSFs) to adjust various ranges of the singular-value coefficients.

Kang et al. [17] reported a watermarking scheme for digital images through a combined method applying DCT and DWT analysis and an SVD approach. In addition, the method used a logistic chaotic map.

Sridhar [18] proposed a scheme that protected the information with an adjustable balance between image quality and watermark resilience against image-processing and geometric attacks. The method was based on the DWT, DCT, and SVD techniques and provided an adaptive PSNR for the imperceptibility of the watermarks.

Madhavi et al. [19] investigated different digital watermarking schemes, comparing the protection and sensible limit. Moreover, the authors introduced a combined watermarking technique that leveraged the advantages of multiple spatial–frequency decomposition approaches such as the DWT and DCT, robust insertion analysis such as SVD, and security such as the AT.

Gupta et al. [20] used a cryptographic technique called Elliptic Curve Cryptography (ECC) in a semi-blind strategy of digital image watermarking. The proposed watermarking method was implemented within the DWT and SVD domain. Furthermore, the parameters of the entropy based on the HVS were calculated on a blockwise basis to determine the most-appropriate spatial locations.

Rosales et al. [21] presented a spectral domain watermarking technique that utilized QR codes and QIM in the YCbCr color domain, and the luminance channel underwent processing through SVD, the DWT, and the DCT to insert a binary watermark using QIM.

In [22], El-Shafai et al. presented two hybrid watermarking schemes for securing 3D video transmission. The first one was based on SVD in the DWT domain, and the second was based on the three-level discrete stationary wavelet transform in the DCT domain. In addition, El-Shafai et al. [23] proposed a wavelet-based fusion technique to combine two depth watermark frames into a unified one. The resulting fused watermark was subsequently secured using a chaotic Baker map before being embedded in the color frames of 3D-High-Efficiency Video Coding (HEVC).

Xu et al. [24] introduced a robust and imperceptible watermarking technique for RGB images in the combined DWT-DCT-SVD domain. Initially, the luminance component undergoes decomposition using DWT and DCT. The feature matrix is generated by extracting the low and middle frequencies of the DCT from each region, which is subsequently subjected to SVD for watermark embedding.

In [25], Ravi Kumar et al. reported an image watermarking algorithm using hybrid transforms. In this approach, using SVD analysis, the decomposition of the image watermark was embedded in the decomposition of the cover image using the Normalized Block Processing (NBP) to obtain the invariant features. Then, the integer wavelet transform was applied, followed by the DCT and SVD.

In [26], Magdy et al. provided an overview of the watermarking techniques used in medical image security. The authors described the elements to design a watermarking algorithm. Furthermore, they presented a brief explanation of cryptography, steganography, and watermarking. Regarding watermarking, they took as an example different algorithms such as that in [27], where Kahlessenane et al. presented a watermarking algorithm to ensure the copyright protection of medical images. They used as the watermark patient information and used the DWT. The results showed high PSNR values (147 dB), demonstrating the imperceptibility of the watermark and the robustness of the method against attacks. However, they did not present any results about the extraction process.

In [28], Dixit et al. described a watermarking algorithm evaluated on thirty different images and using two watermarks: one for authentication (fragile) and the other focused on robustness (information watermark). To insert the authentication watermark, they used the DCT, and for the information watermark, the process included the DWT and SVD. The results showed robustness to Salt and Pepper (SP) noise, rotation, translation, and cropping (even though the PSNR of the recovered watermark was low). The same authors proposed another watermarking algorithm in [29]. This algorithm was non-blind and applied the LWT to the cover image to decompose it into four coefficient matrices; with this transform, the image had better reconstruction. Furthermore, the authors employed the DCT and SVD. The authors reported better robustness and mentioned that they reduced the time complexity of traditional watermarking techniques. The results showed high PSNR values (about 200 dB) without attacks. They applied different attacks, compared their technique with other techniques, and reported that their technique had better robustness. They did not include the extracted watermarks.

To evaluate and compare different techniques, some papers have focused on describing different watermarking algorithms. For example, Gupta et al. [30] explained that, to achieve the security of digital data, it is necessary to improve the watermarking techniques and to provide better robustness. The authors clarified that several algorithms utilize SVD to enhance the quality of the embedded image, aiming to increase its resilience against various signal-processing attacks. The authors also presented different metrics that can be used to evaluate the techniques and the transformations that researchers commonly use.

In [31], Mahbuba Begum et al. presented a combined blind digital image watermarking method using the DCT and DWT as the spatial–frequency decomposition and SVD analysis to satisfy all the requirements that, according to the authors, a watermarking algorithm must meet, for example imperceptibility, security, resilience, and payload capacity. As a watermark, they used a digital image and encrypted it with the Arnold map. They presented results using only one image and only one watermark.

D. Rajani et al. [32] proposed a new technique called the Porcellio Scaber Algorithm (PSA). They explained that, with this algorithm, the visual quality of the extracted watermark was good while robustness was maintained. Their proposal was a blind watermarking method that used a redundant version of the DWT (RDWT), the DCT, and SVD. In addition, they embedded a LOGO into the host image. They reported a high PSNR value of dB in the watermarked image (Lena).

Other hybrid algorithms were developed by Wu, J.Y. et al. [33,34]. On the one hand, in [33], they presented a scheme using SVD (to improve robustness), the DWT, and the DCT. Their proposal included a process to encrypt the watermark by an SVD ghost imaging system. As a watermark, they used a digital image with a size of . The authors did not indicate the parameters of the attacks that they employed to evaluate their method. On the other hand, in [34], a watermarking method using a decomposition by the DWT of four levels in conjunction with an SVD analysis was presented. They proposed four levels of the DWT to significantly enhance the imperceptibility and the robustness of the method. The evaluation of the algorithm showed good results using the PSNR, NCC, and SSIM. As a watermark, they used a digital image with a size of .

Seif Eddine Naffou et al. [35] described a hybrid SVD-DWT method. They explained that the Human Visual System (HVS) is less sensitive to high-frequency coefficients, so they chose these coefficients to insert the watermark; to avoid poor results when extracting the watermark, they added SVD.

As we can see, different watermarking algorithms for digital images have been developed for copyright protection, and the majority focus on the principal problem, which is robustness. In this paper, a watermarking method for copyright protection that addresses imperceptibility, robustness, watermark capacity, and computational cost is presented.

3. Materials and Methods

3.1. Description of the Dataset

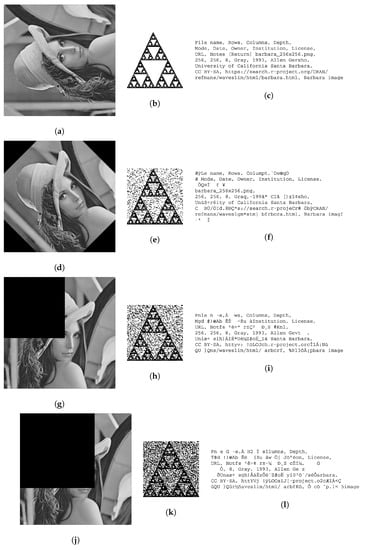

To evaluate the watermarking proposal, we selected 49 grayscale images of px (Figure 1) from public datasets: the USC-SIPI Image Database [36], the Waterloo Fractal Coding and Analysis Group [37], Fabien Petitcolas [38], the Computer Vision Group of University of Granada [39], and Gonzalez and Woods [40]. The collection of 49 grayscale images utilized in this study can be accessed publicly through our website: https://sites.google.com/up.edu.mx/invico-en/resources/image-dataset-watermarking.

Figure 1.

Complete image dataset; 49 grayscale images of px.

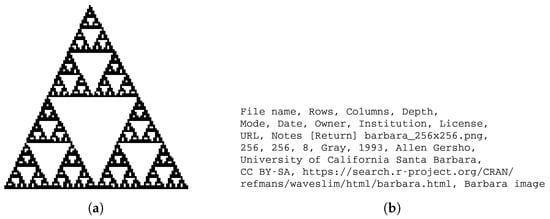

As a watermark, we used a digital image (LOGO) of px, as is shown in Figure 2a. In addition, we used the image MetaData of the Barbara image in plaintext, as we show in Figure 2b.

Figure 2.

(a) Watermark image LOGO. (b) Image MetaData of the Barbara image in plaintext.

3.2. Jigsaw Transform and Cellular Automata

Image encryption is currently of great importance for protecting sensitive information against unauthorized access. Cryptographic techniques used for image encryption are required to hide the visual information of the image, to have a large key space to resist brute-force attacks, and to have high key sensitivity to prevent differential attacks [41]. A recent example of an algorithm that meets these requirements is that proposed by Sambas et al. [42], who developed a three-dimensional chaotic system with line equilibrium and used it along with Josephus traversal to implement an image encryption scheme based on pixel and bit scrambling and pixel value diffusion, resulting in an encryption method secure against brute-force and differential attacks. In addition, the authors implemented the proposed chaotic system in an electronic circuit. In contrast, in the present work, we used the Jigsaw transform and a cellular automaton to encrypt the watermark image, which enlarges the key space compared with using the Jigsaw transform alone.

3.2.1. Jigsaw Transform

The Jigsaw transform is a popular scrambling technique to hide visual information in digital images. Its name is reminiscent of an image cut into pieces of different sizes that must be joined correctly to form the picture again. It is considered a nonlinear operator, which rearranges sections of an image following a random ordering [43]. The direct JST breaks a grayscale image into non-overlapping blocks of pixels, each one of which is moved to a location following a random order. In the same way as the direct JST (), the inverse Jigsaw transform () uses the initial order of the sections to recover the original image. The JST holds the energy of a grayscale image () and is, therefore, considered a unitary transform (Equation (1)).

Figure 3 shows a grayscale image of px and the corresponding results for the JST, giving non-overlapping blocks of px for , px for , and px for .

Figure 3.

Examples of the JST using a grayscale image of px. (a) Barbara image. (b) JST result using blocks of (). (c) JST result using blocks of (). (d) JST result using blocks of ().

The set of each possible combination of the security keys used in the encryption of a digital image is called the key space. Thus, for the JST, the key space is related to the number of blocks , i.e., .
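The block scrambling and its key space can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names, the seeded `random.Random` shuffle standing in for the random ordering, and the toy 8 × 8 image are all assumptions made for illustration. The sketch moves non-overlapping square blocks according to a permutation, inverts the move with the stored order, and counts the possible orderings as the factorial of the number of blocks.

```python
import math
import random


def _move_tiles(image, block, order, inverse):
    """Copy tiles so that destination d receives source tile order[d] (or undo that move)."""
    rows, cols = len(image), len(image[0])
    tiles_per_row = cols // block
    out = [[0] * cols for _ in range(rows)]
    for dst, src in enumerate(order):
        if inverse:
            dst, src = src, dst
        dr, dc = divmod(dst, tiles_per_row)
        sr, sc = divmod(src, tiles_per_row)
        for i in range(block):
            for j in range(block):
                out[dr * block + i][dc * block + j] = image[sr * block + i][sc * block + j]
    return out


def jigsaw(image, block, seed):
    """Direct JST (hypothetical helper): scramble block x block tiles with a seeded permutation."""
    rows, cols = len(image), len(image[0])
    if rows % block or cols % block:
        raise ValueError("image size must be a multiple of the block size")
    order = list(range((rows // block) * (cols // block)))
    random.Random(seed).shuffle(order)  # the permutation plays the role of the key
    return _move_tiles(image, block, order, inverse=False), order


def inverse_jigsaw(scrambled, block, order):
    """Inverse JST: put every tile back using the stored order."""
    return _move_tiles(scrambled, block, order, inverse=True)


def energy(image):
    return sum(v * v for row in image for v in row)


image = [[8 * r + c for c in range(8)] for r in range(8)]  # toy 8x8 "image"
scrambled, order = jigsaw(image, block=2, seed=7)
restored = inverse_jigsaw(scrambled, 2, order)
key_space = math.factorial(len(order))  # number of possible block orderings
print(restored == image, energy(scrambled) == energy(image), key_space)
```

Because the transform only relocates pixels, the sum of squared intensities is unchanged, which is the energy-preservation property mentioned above.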

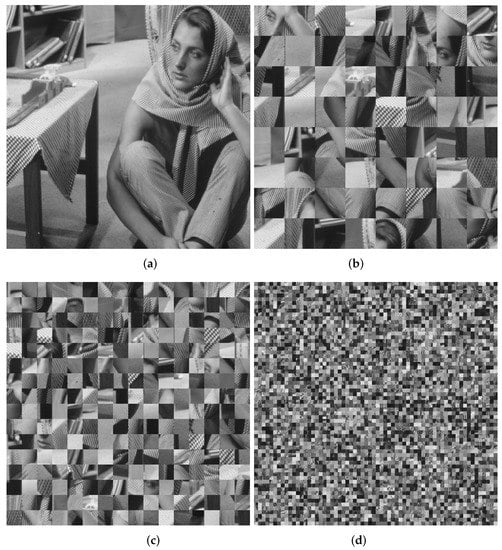

3.2.2. Elementary Cellular Automata

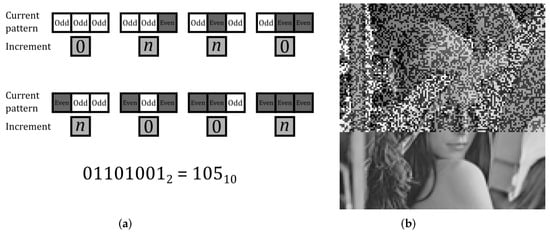

An elementary cellular automaton consists of a grid of cells of width X and height Y. Each cell has two possible states: ON and OFF. Initially, all cells are OFF, except for those in the first row, which has an arbitrary configuration. The grid evolves through a series of iterations. In iteration i, the $(i+1)$th row is modified. The new value of a cell is determined by the neighborhood of the cell in the same column in the row above (the parent row). The neighborhood of a cell consists of three cells: the cell itself and its two horizontal neighbors (edge cases are handled with modular arithmetic). Therefore, there are $2^3 = 8$ possible neighborhoods and, consequently, $2^8 = 256$ possible rules for the next iteration of the automaton. In this paper, we focused on Rule 105, named according to the Wolfram code used to classify the rules of the ECA [44] and depicted in Figure 4.

Figure 4.

Elementary cellular automaton with Rule 105. (a) The new state of a cell is based on each possible neighborhood of the cell in the same column in its parent row. (b) ECA is applied on a grid using 34 iterations, with the first row starting with all cells in the OFF state, except for the cell at the center.
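As an illustration of the standard automaton described above, the following Python sketch builds the lookup table of a rule from its Wolfram code and evolves a grid row by row with wrap-around neighbors. The function names and the five-cell starting row are assumptions chosen for the example, not part of the original work.

```python
def rule_table(rule_number):
    """Map each neighborhood (left, center, right) to the next state given by the Wolfram code."""
    return {
        ((n >> 2) & 1, (n >> 1) & 1, n & 1): (rule_number >> n) & 1
        for n in range(8)
    }


def evolve(first_row, iterations, rule_number=105):
    """Return the grid whose row i+1 is computed from row i (its parent row)."""
    table = rule_table(rule_number)
    width = len(first_row)
    grid = [list(first_row)]
    for _ in range(iterations):
        parent = grid[-1]
        grid.append([
            # modular indexing handles the left and right edges
            table[(parent[(x - 1) % width], parent[x], parent[(x + 1) % width])]
            for x in range(width)
        ])
    return grid


table = rule_table(105)
grid = evolve([0, 0, 1, 0, 0], iterations=2)
for row in grid:
    print("".join("#" if cell else "." for cell in row))
```

For Rule 105, the table coincides with the negated XOR of the three neighborhood cells, which gives a compact way to evaluate the rule.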

For the purposes of our work, we considered a variant of the ECA in which cells can take values ranging from 0 to an integer k. Instead of using Rule 105 to determine whether a cell should be ON or OFF, we used it to determine whether a cell should increase its value by an odd number n (changing its parity), based on the parity of the values in the corresponding neighborhood, with even cells treated as OFF states and odd cells as ON states. If the new value is greater than k, we used modular arithmetic to return it to the desired range of values. We also considered a grid that is finite in the vertical direction: if the number of iterations exceeds the number of rows and there are no rows left to modify, we continue with the top row, taking the bottom row as its parent row. This allowed us to start the first iteration in the first row and to easily apply elementary cellular automata to gray images. In Figure 5, we show this variation of Rule 105 on a gray image. The process is reversible by applying the algorithm to the rows in reverse order and subtracting by k. The exact size of the key space of our ECA is unknown; its upper bound must be since that is the total number of configurations the matrix could have considering all possible values of the matrix and all possible rows that can be modified in a given iteration of the automaton. Evolving the ECA beyond that number of iterations would lead to a repeated state. Since it is likely that a repeated state will occur before that number of iterations, the key space of our ECA was .

Figure 5.

Variation of the ECA with Rule 105. (a) Increment on the value of a cell based on each possible neighborhood of the cell in the same column in its parent row. (b) Our modified ECA was applied on a gray image using 80 iterations, with .
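The parity-based variant can be sketched as follows. This is only one possible reading of the description and rests on several assumptions that are not stated in the text: values are reduced modulo k + 1, the inverse subtracts the same odd increment n that the forward pass adds, the grid has at least two rows, and the function names and the 5 × 5 test matrix are hypothetical.

```python
def rule105(left, center, right):
    """Rule 105 on binary states: the negated XOR of the three neighborhood cells."""
    return 1 ^ left ^ center ^ right


def _step(grid, row, n, k, sign):
    """Add (sign=+1) or subtract (sign=-1) n in one row, driven by the parities of its parent row."""
    height, width = len(grid), len(grid[0])
    parent = grid[(row - 1) % height]  # the top row uses the bottom row as its parent
    for x in range(width):
        fire = rule105(parent[(x - 1) % width] & 1, parent[x] & 1, parent[(x + 1) % width] & 1)
        grid[row][x] = (grid[row][x] + sign * n * fire) % (k + 1)


def eca_encrypt(grid, iterations, n, k):
    if len(grid) < 2:
        raise ValueError("this sketch needs at least two rows")
    out = [list(r) for r in grid]
    for i in range(iterations):  # iteration i modifies row i, wrapping around vertically
        _step(out, i % len(out), n, k, +1)
    return out


def eca_decrypt(grid, iterations, n, k):
    out = [list(r) for r in grid]
    for i in reversed(range(iterations)):  # undo the iterations in reverse order
        _step(out, i % len(out), n, k, -1)
    return out


index_like = [[5 * r + c for c in range(5)] for r in range(5)]  # toy matrix with values 0..24
encrypted = eca_encrypt(index_like, iterations=5, n=3, k=25)
decrypted = eca_decrypt(encrypted, iterations=5, n=3, k=25)
print(decrypted == index_like)
```

Under these assumptions, the inverse works because the parent row of an iteration is not modified during that iteration, so the same parities are available when the iteration is undone.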

3.2.3. ECA Applied on Jigsaw Transform

For our work, we used the Jigsaw transform in combination with the elementary cellular automaton described in Section 3.2.2. Given that the JST has a limited key space, the ECA previously described was used to help achieve a broader key space. We used the JST with subsections, storing the index of each subsection in a matrix to be able to reverse the algorithm. We can encrypt this matrix using our ECA with an arbitrary number of iterations and considering values in the range from 1 to 25. We chose a value of for our work. The key space of the ECA applied on the Jigsaw transform would be in general; substituting for the variables we chose for our work, we obtained a key space of . We used this algorithm to encrypt our watermark image, as described later in Section 4; given that we used subsections, we can achieve full image encryption of the watermark image by using the JST along with 5 iterations of the ECA to modify all the rows of the JST index matrix. Since the main theme of this work was watermarking, a thorough analysis of the JST with the ECA for image encryption is beyond the scope of this article but can be studied in future work.

3.3. SVD Analysis

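Singular-value decomposition, a tool from linear algebra, expands a rectangular matrix $A \in \mathbb{R}^{M \times N}$, where M and N are the dimensions of A, in a coordinate system in which the covariance matrix is diagonal. Equation (2) shows the SVD theorem:

$$A = U S V^{T} \qquad (2)$$

where $U \in \mathbb{R}^{M \times M}$ and $V \in \mathbb{R}^{N \times N}$ are orthogonal matrices defined by:

$$U^{T} U = I_{M}, \qquad V^{T} V = I_{N}$$

$S \in \mathbb{R}^{M \times N}$ is a diagonal matrix, and $\sigma_i$ with $i = 1, \ldots, \min(M, N)$ are the singular values that satisfy $\sigma_1 \geq \sigma_2 \geq \cdots \geq \sigma_r > \sigma_{r+1} = \cdots = 0$, with r representing the rank of the matrix A.

Thus, the eigenvectors of $A^{T}A$ form the columns of V, the eigenvectors of $AA^{T}$ form the columns of U, and the nonzero singular values in S are the square roots of the nonzero eigenvalues shared by $A^{T}A$ and $AA^{T}$.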

SVD has been successfully used for a variety of applications. In particular, in the signal- and image-processing areas, SVD has been applied to image compression and image completion [45], dimensionality reduction, facial recognition, background video removal, image noise reduction, cryptography, and digital watermarking [46].

In the following, we describe some properties of SVD:

- A few singular values contain the majority of the signal’s energy, which has been exploited in compression applications.

- The decomposition/reconstruction could be applied to both square and non-square images.

- When a slight interference, e.g., noise, alters the values of the image, the singular values remain relatively stable.

- Singular values of an image represent its intrinsic algebraic properties.

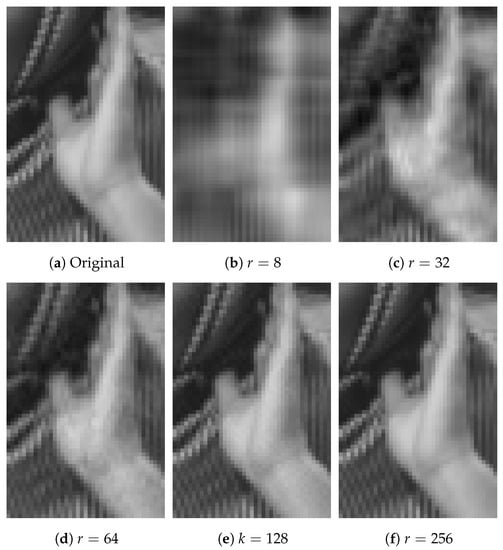

SVD returns the matrices U, S, and V with their components sorted by how much they contribute to the matrix A. Thus, an approximation of the input image is obtained when only the r largest singular values are used, and the quality of the reconstructed image increases as r approaches the total number of singular values. From Equation (2), the image approximation is obtained by taking the first r columns of U and V and the upper-left $r \times r$ square of S.
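The truncated reconstruction can be illustrated with a short NumPy sketch. The synthetic 64 × 64 test image, the helper names, and the chosen values of r are assumptions for illustration only and do not reproduce the experiment reported in this section.

```python
import numpy as np


def rank_r_approximation(image, r):
    """Rebuild the image from the first r columns of U and V and the r largest singular values."""
    U, s, Vt = np.linalg.svd(image, full_matrices=False)
    return U[:, :r] @ np.diag(s[:r]) @ Vt[:r, :]


def correlation(a, b):
    """Pearson correlation coefficient between two images of the same shape."""
    return float(np.corrcoef(a.ravel(), b.ravel())[0, 1])


# Synthetic test image: a smooth pattern plus a small amount of noise.
rng = np.random.default_rng(0)
x = np.linspace(0, 1, 64)
image = np.outer(np.sin(4 * x), np.cos(3 * x)) + 0.05 * rng.standard_normal((64, 64))

errors = {}
for r in (1, 4, 16, 64):
    approx = rank_r_approximation(image, r)
    errors[r] = float(np.linalg.norm(image - approx))  # Frobenius norm of the residual
    print(r, round(correlation(image, approx), 4), round(errors[r], 4))
```

The residual norm cannot increase as r grows, and with all singular values the image is recovered up to floating-point error.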

Figure 6 shows the image approximation using SVD over a grayscale image of for , and Table 1 reports the correlation coefficient (R), which is calculated using the original image and the reconstructed image, where using only of the singular values ( of 256), a correlation value of is achieved. In addition, Figure 7 shows the zooming of a region of the original image, where both homogeneous and texture regions are presented.

Figure 6.

Image approximation using a different number of singular values (r) of SVD.

Table 1.

Examples showing the relation between the number of singular values (r) used to reconstruct the image and the correlation coefficient value obtained.

Figure 7.

Zoom of the image approximation using a different number of singular values (r) of SVD.

3.4. Hamming Code

Linear block codes, defined in coding theory, are a kind of error-correcting code, where a linear combination of codewords is also a codeword. Hamming codes are efficient error-correcting binary linear block codes used to detect and correct errors when data are stored or transmitted.

For a Hamming code, the encoding operation is performed by the generator matrix shown in Equation (3) [47]:

Here, a Hamming code refers to a code that generates seven bits for every four input bits. The codeword for each input message is obtained through a linear combination of the rows of G computed modulo 2, where the code length corresponds to the length of a row of the matrix G. Thus, is the codeword for as the input message [47].

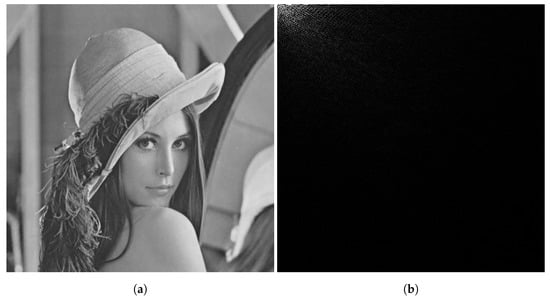
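As a minimal sketch of the encoding and single-error correction described in this subsection, the following uses one common systematic generator matrix for the seven-bit code with four data bits. The matrices `G` and `H` below are an assumption for illustration; the generator matrix of Equation (3) may order its columns differently.

```python
# Systematic generator matrix G = [I4 | P] (assumed layout, see lead-in).
G = [
    [1, 0, 0, 0, 1, 1, 0],
    [0, 1, 0, 0, 1, 0, 1],
    [0, 0, 1, 0, 0, 1, 1],
    [0, 0, 0, 1, 1, 1, 1],
]
# Matching parity-check matrix H = [P^T | I3].
H = [
    [1, 1, 0, 1, 1, 0, 0],
    [1, 0, 1, 1, 0, 1, 0],
    [0, 1, 1, 1, 0, 0, 1],
]


def encode(message):
    """Multiply the 4-bit message by G modulo 2 to get a 7-bit codeword."""
    return [sum(m * g for m, g in zip(message, column)) % 2 for column in zip(*G)]


def decode(word):
    """Correct at most one flipped bit and return the 4 data bits."""
    word = list(word)
    syndrome = [sum(h * w for h, w in zip(row, word)) % 2 for row in H]
    if any(syndrome):
        # A single-bit error produces the H column of the flipped position.
        columns = [list(column) for column in zip(*H)]
        word[columns.index(syndrome)] ^= 1
    return word[:4]


codeword = encode([1, 0, 1, 1])
corrupted = codeword[:]
corrupted[2] ^= 1  # simulate one bit error in the channel
print(codeword, decode(corrupted))
```

Because every column of `H` is distinct and nonzero, any single-bit error maps to a unique syndrome, which is what allows the correction step.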

3.5. Discrete Cosine Transform

The DCT serves as the foundation for numerous lossy digital image compression systems, such as Joint Photographic Experts Group (JPEG) for still images and Moving Picture Experts Group (MPEG) for video [48]. It is a mathematical tool to perform frequency analysis without complex numbers and to approximate a typical signal using fewer coefficients (low-, middle-, and high-frequency components), i.e., it packs the most information into the fewest coefficients and concentrates the energy in the low-frequency regions [49,50]. One of its most common applications is lossy compression in signal and image processing because of its strong energy compaction property. Besides, as mentioned in [51], this transform offers entropy retention, decorrelation, and energy concentration, among which energy concentration is of great significance to digital image encryption. Therefore, the most important DCT advantages, such as a high compression ratio and low error rates [52], are exploited in different applications, such as digital image encryption. When the DCT is applied to an image (matrix), the result is a DCT coefficient matrix that contains one DC coefficient and the AC coefficients, with the energy concentrated mainly in the DC coefficient. As an example, the Lena image and its DCT are shown in Figure 8.

Figure 8.

Discrete cosine transform. (a) Original Lena image; (b) DCT of Lena image.

Another application is in steganography systems and watermark systems, which embed the information of a signal in the transform domain. These systems are more robust if they operate in the transform domain (Discrete Fourier Transform (DFT), Discrete Cosine Transform (DCT), Discrete Wavelet Transform (DWT), Contourlet Transform (CT), etc.). Specifically, in the DCT domain, the algorithms are more robust against common processing operations (JPEG and MPEG compression) compared with spatial domain techniques, and also, the DCT offers the possibility of directly realizing the embedding operator in the compressed domain (i.e., inside a JPEG or MPEG encoder) to minimize the computation time [53].

The 2D DCT of a grayscale image is as follows (Equation (5)):

where X represents the number of columns and Y the number of rows of the image , its spatial coordinates, the corresponding frequency coordinates, and

where is a weight factor, and [54].

The 2D Inverse Discrete Cosine Transform (IDCT) is given as follows (Equation (6)):
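To make the forward and inverse transforms concrete, here is a small pure-Python sketch for a single block. It assumes the orthonormal DCT-II with a weight factor of sqrt(1/N) for the zero frequency and sqrt(2/N) otherwise; the normalization used in Equations (5) and (6) may differ, and the function names are hypothetical.

```python
import math


def alpha(k, n):
    """Weight factor of the orthonormal DCT-II (assumed normalization)."""
    return math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)


def basis(pos, freq, n):
    """Cosine basis sample for spatial position pos and frequency freq."""
    return math.cos((2 * pos + 1) * freq * math.pi / (2 * n))


def dct2(block):
    """2D DCT of a block given as a list of rows."""
    rows, cols = len(block), len(block[0])
    return [[alpha(u, rows) * alpha(v, cols) * sum(
                block[y][x] * basis(y, u, rows) * basis(x, v, cols)
                for y in range(rows) for x in range(cols))
             for v in range(cols)] for u in range(rows)]


def idct2(coeffs):
    """2D inverse DCT, recovering the block from its coefficients."""
    rows, cols = len(coeffs), len(coeffs[0])
    return [[sum(alpha(u, rows) * alpha(v, cols) * coeffs[u][v]
                 * basis(y, u, rows) * basis(x, v, cols)
                 for u in range(rows) for v in range(cols))
             for x in range(cols)] for y in range(rows)]


# A constant 4 x 4 block: all the energy ends up in the DC coefficient.
flat = [[10.0] * 4 for _ in range(4)]
print(round(dct2(flat)[0][0], 6))
```

This direct evaluation costs O(N^4) per N × N block, which is acceptable for the small blocks used in block-based watermarking; practical implementations normally rely on fast separable algorithms instead.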

3.6. Hermite Transform

The Cartesian Hermite transform is a technique of signal decomposition. To analyze the visual information, it is necessary to use a Gaussian window function .

The information within the window is expanded to a family of polynomials . These polynomials are orthogonal with respect to the Gaussian window function and are defined in terms of the Hermite polynomials as in Equation (7):

where o and indicate the analysis order in the spatial directions x and y, respectively, and , are the generalized Hermite polynomials, and represents the variance of the Gaussian window.

Equation (8) defines the Hermite polynomials.

Convolving the image with the Hermite analysis filters, followed by sub-sampling (T) as in Equation (9), yields the HT.

where are the Cartesian Hermite coefficients:

and is the spatial position in the sampling lattice S.

The Hermite filters are obtained by Equation (10):

with .

On the other hand, the original image could be reconstructed through Equation (11):

where are the Hermite synthesis filters of Equation (12) and is the weight function of Equation (13).

For the discrete implementation, we used the binomial window function to approximate the Gaussian window function (Equation (14)):

where represents the order of the binomial window (Equation (15)).

Thus, the Krawtchouk polynomials, defined in Equation (16), are the orthogonal polynomials associated with the binomial window.

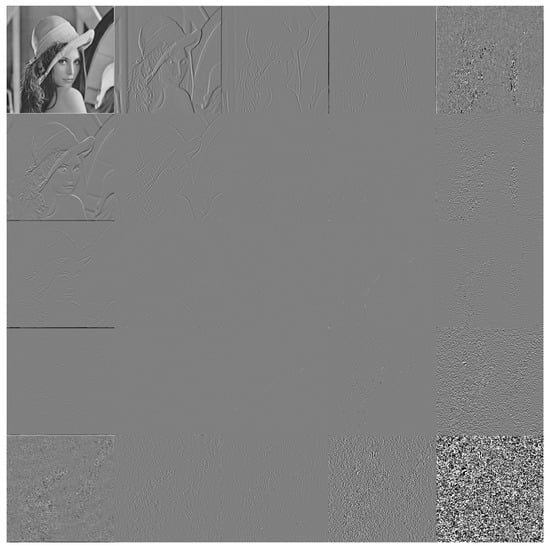

In the discrete implementation, the signal reconstruction from the expansion coefficients is perfect because the window function support is finite () and the expansion with the Krawtchouk polynomials is also finite. To implement the Hermite transform, it is necessary to select the size of the Gaussian window spread , the order for binomial windows, and the subsampling factor that defines the sampling lattice S. The resulting Hermite coefficients are arranged as a set of () equally sized sub-bands: one coarse sub-band representing a Gaussian-weighted image average and detail sub-bands corresponding to higher-order Hermite coefficients, as we can see in Figure 9.

Figure 9.

Hermite transform coefficients ( with ) of Lena image and the spatial order representation: .
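The relation between the binomial window and the Krawtchouk polynomials in the discrete implementation can be checked numerically. The sketch below assumes the usual normalized form of the Krawtchouk polynomials for a symmetric binomial distribution; the exact notation of Equations (14) to (16) is not reproduced here, and the function names are hypothetical.

```python
import math


def binomial_weights(N):
    """Binomial weights C(N, x) / 2^N for x = 0..N (they sum to 1)."""
    return [math.comb(N, x) / 2 ** N for x in range(N + 1)]


def krawtchouk(n, x, N):
    """Normalized Krawtchouk polynomial of order n evaluated at x (assumed form)."""
    total = sum((-1) ** (n - k) * math.comb(N - x, n - k) * math.comb(x, k)
                for k in range(n + 1))
    return total / math.sqrt(math.comb(N, n))


def inner_product(m, n, N):
    """Weighted inner product of two Krawtchouk polynomials."""
    weights = binomial_weights(N)
    return sum(weights[x] * krawtchouk(m, x, N) * krawtchouk(n, x, N)
               for x in range(N + 1))


N = 4  # order of the binomial window, chosen only for this example
for m in range(N + 1):
    print([round(inner_product(m, n, N), 6) for n in range(N + 1)])
```

The printed matrix is the identity up to rounding, which illustrates why a finite expansion on a finite window support can be inverted exactly.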

3.7. Human Vision System

For several years, some characteristics of the HVS have been applied to address various image-processing challenges. For example, in [55], the authors proposed a watermarking approach based on the observation that certain mechanisms of the HVS are less sensitive to the redundancy of image information. Thus, the entropy was used to determine the regions with more redundant image information and to select the visually significant embedding regions.

On the other hand, entropy is a metric widely used to measure the spatial correlation of a local region of the image, for example a pixel neighborhood. It could be defined for an N-state, as is shown in Equation (17) [55]:

where defines the probability of the appearance of the pixel in the pixel neighborhood, N is the number of elements within the neighborhood, and is a small constant value to avoid .

In addition, image edges contain relevant information about the image characteristics. Thus, the edge entropy of an image block is taken into consideration to identify the specific areas in the image where the watermark will be inserted. It is calculated by means of Equation (18) [55]:

where represents the uncertainty of the j-th pixel value in the block.

In [55], the combination between the entropy and edge entropy was used to determine the suitable insertion locations, as is shown in Equation (19):
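A block-level score of this kind could be computed as sketched below. The exact expressions of Equations (17) to (19) are not reproduced here, so the forms used are assumptions: a Shannon entropy with a small constant inside the logarithm, an edge entropy of the form sum of p·exp(1 − p), and a plain sum as the combination. All function names are hypothetical.

```python
import math
from collections import Counter

EPS = 1e-12  # small constant to avoid log(0)


def probabilities(block):
    """Relative frequency of each pixel value inside the block."""
    pixels = [p for row in block for p in row]
    return [count / len(pixels) for count in Counter(pixels).values()]


def entropy(block):
    """Shannon entropy of the block (assumed form of the entropy)."""
    return -sum(p * math.log2(p + EPS) for p in probabilities(block))


def edge_entropy(block):
    """Edge entropy with uncertainty 1 - p (assumed form)."""
    return sum(p * math.exp(1.0 - p) for p in probabilities(block))


def hvs_score(block):
    """Combined score; a plain sum is an assumption of this sketch."""
    return entropy(block) + edge_entropy(block)


def rank_blocks(blocks):
    """Block indices sorted by ascending score."""
    return sorted(range(len(blocks)), key=lambda i: hvs_score(blocks[i]))


flat = [[7] * 4 for _ in range(4)]                # homogeneous block
half = [[0, 0, 255, 255] for _ in range(4)]       # block with an edge
print(rank_blocks([half, flat]))
```

Sorting indices rather than the blocks themselves keeps the original block positions available, which is needed later to place and recover the watermark bits.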

4. Proposed HT, DCT, and SVD and Block-Based Watermarking Method

The present work reports a blockwise image watermarking method to insert two watermarks, a digital image (LOGO) and information about the owner or the host image (MetaData). The proposed method is based on the HT and DCT as a spatial–frequency representation of the cover image with the HVS characteristics to add imperceptibility to the watermark. In addition, an SVD strategy adds robustness against attacks.

4.1. Watermarking Insertion Process

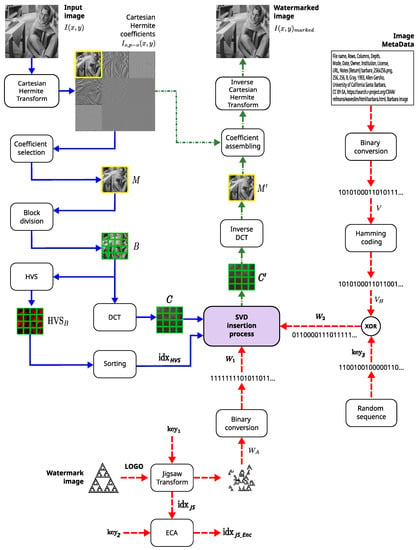

Figure 10 shows a schema of the proposed watermarking insertion process.

Figure 10.

Watermarking insertion process.

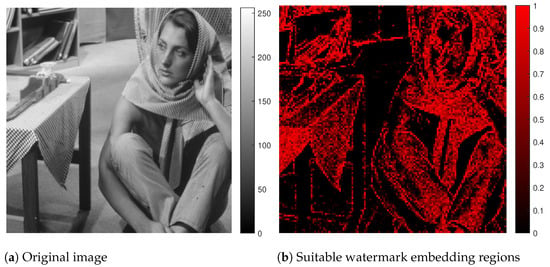

The reason to use DCT is that, according to Dowling et al. [56], block-based watermarking is more effective because we have smaller block sizes and the DCT concentrates the energy. After decomposing the original image into space–frequency bands using the HT, the selected sub-bands were partitioned into 4 × 4 blocks. Subsequently, each block was transformed into its DCT representation. On the one hand, an SVD analysis allows high robustness against attacks. On the other hand, the entropy values of the cover image are used to choose the suitable regions for embedding the watermarks, giving an adaptive approach that identifies blocks with high entropy. Thus, values (Equation (19)) in each block are sorted in ascending order, where the lowest values correspond to the best embedding regions. Figure 11a shows a grayscale image of px, and Figure 11b represents through a color bar, with descending and normalized values, those regions where a watermark, in this case of px, could be inserted, where high values (light color) correspond to the most-suitable regions and low values (dark color) are the worst regions.

Figure 11.

Embedding regions to insert a watermark using the HVS values. (a) Grayscale original image. (b) The suitable regions to insert a watermark in descending order. Best locations (light color) and worst ones (dark color).

In addition, to increase the performance of the image MetaData against attacks and reduce channel distortion, a Hamming error-correcting code was applied to the MetaData. Finally, the LOGO image was encrypted using the Jigsaw transform in combination with elementary cellular automata to increase the security of the proposal.

The steps of embedding both an image watermark (LOGO) () and the image MetaData () are explained next regarding the block diagram of Figure 10:

- Image watermark (LOGO) :

  - Input the binary image watermark LOGO of size .

  - Apply the Jigsaw transform to the LOGO with as the first secret key, obtaining the watermark matrix of size , where corresponds to the number of non-overlapping subsections of px.

  - Convert to binary, obtaining the sequence .

  - The Jigsaw transform generates the indexes , which represent the original locations of each subsection of px.

  - The ECA algorithm encrypts through , obtaining the encrypted indexes , where is the second secret key, with representing the number of iterations, and k is an odd number.

- Image MetaData :

  - Enter the image MetaData as a character string.

  - Convert each alphanumeric character of the image MetaData into a binary string, obtaining the vector V.

  - Calculate the Hamming code over V, obtaining the vector of length P.

  - Generate a pseudo-random binary string of length P with a uniform distribution; will be the third secret key.

  - Perform the bitwise operation to obtain the watermark :

  - Since the image MetaData size is small compared to the LOGO image size, the dimensions of are adjusted by adding binary zeros so that they correspond to the dimensions of .

- Input host image to watermark:

  - Input the host image. For an RGB image, convert it to grayscale, obtaining a matrix of size .

  - Perform the Hermite transform decomposition to , to obtain nine coefficients. Each one is a matrix of size , where and , where T is a sub-sampling factor, e.g., .

  - Select the low-spatial-frequency Hermite coefficient to embed the watermarks and .

  - Divide M into blocks of size , obtaining the multidimensional array B composed of blocks.

  - Apply the HVS analysis to each block of matrix B through Equation (19), obtaining .

  - Sort each value of in ascending order, storing the position of each ordered block, where the lowest values of correspond to the best embedding regions.

  - Apply the DCT to each block of B, obtaining , where and L is the number of blocks of size .

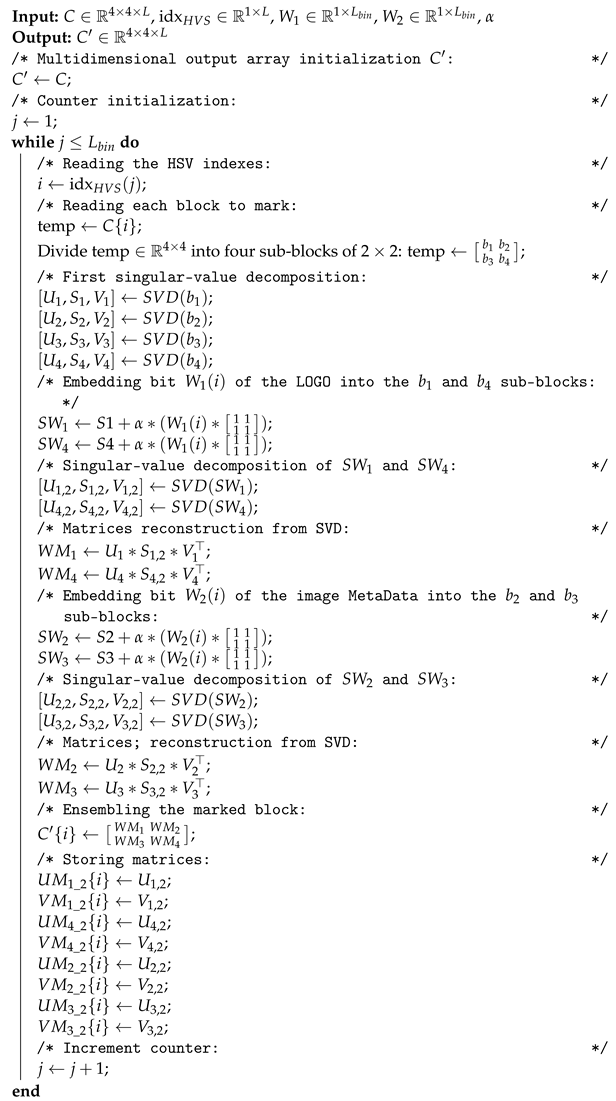

- SVD-based insertion process:

  - Embed the watermarks and using the SVD-based Algorithm 1, with blocks of , obtaining the multidimensional output array .

  - To fully embed the LOGO and image MetaData within the host image, the following relation must be satisfied:

- Making the watermarked image:

  - Apply the inverse DCT to each block of , obtaining .

  - Substitute the low-spatial-frequency Hermite coefficient of : .

  - Perform the inverse Hermite transform of to obtain .

Algorithm 1. SVD-based insertion algorithm.

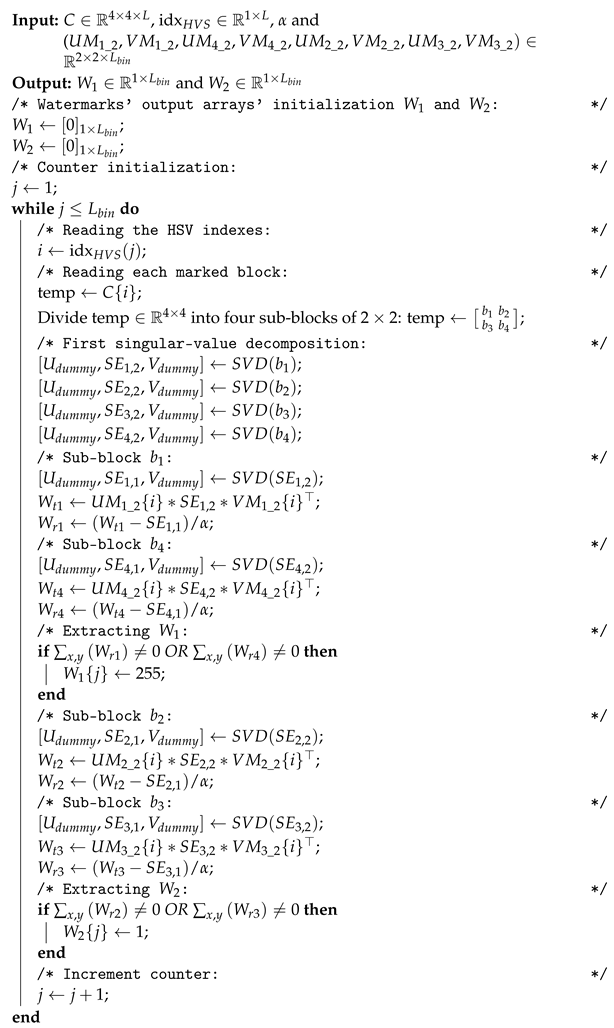
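The block scrambling performed by the Jigsaw transform on the LOGO can be illustrated with the following sketch. It assumes that the transform permutes non-overlapping square subsections according to a keyed pseudo-random order; the use of Python's `random.Random` as the key-driven generator, the function names, and the tiny test image are all illustrative choices, and the ECA encryption of the indexes is not included.

```python
import random


def tile_origins(rows, cols, s):
    """Top-left corners of the non-overlapping s x s subsections."""
    return [(r, c) for r in range(0, rows, s) for c in range(0, cols, s)]


def jigsaw(image, s, key):
    """Scramble s x s subsections; returns the scrambled image and the indexes."""
    rows, cols = len(image), len(image[0])  # assumed to be multiples of s
    tiles = tile_origins(rows, cols, s)
    order = list(range(len(tiles)))
    random.Random(key).shuffle(order)  # keyed permutation (illustrative)
    out = [[0] * cols for _ in range(rows)]
    for dst, src in enumerate(order):
        (dr, dc), (sr, sc) = tiles[dst], tiles[src]
        for i in range(s):
            for j in range(s):
                out[dr + i][dc + j] = image[sr + i][sc + j]
    return out, order


def inverse_jigsaw(scrambled, s, order):
    """Put every subsection back in its original location."""
    rows, cols = len(scrambled), len(scrambled[0])
    tiles = tile_origins(rows, cols, s)
    out = [[0] * cols for _ in range(rows)]
    for dst, src in enumerate(order):
        (dr, dc), (sr, sc) = tiles[dst], tiles[src]
        for i in range(s):
            for j in range(s):
                out[sr + i][sc + j] = scrambled[dr + i][dc + j]
    return out


logo = [[r * 4 + c for c in range(4)] for r in range(4)]  # toy 4 x 4 "image"
scrambled, indexes = jigsaw(logo, s=2, key=1234)
print(inverse_jigsaw(scrambled, 2, indexes) == logo)
```

Only the positions of the subsections change, so the pixel values themselves are preserved; the security of the scheme then rests on keeping the indexes secret, which is why they are encrypted separately.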

4.2. Watermarking Extraction Process

Since the watermarking approach is symmetric, the extraction stage is similar to the process shown in Figure 10, with the only change that the inverse operations are applied. Thus, the steps of extracting both the LOGO and the plaintext MetaData from the watermarked image are explained next:

- SVD-based extraction process:

  - Perform the Hermite transform decomposition to .

  - Select the low-spatial-frequency Hermite coefficient .

  - Divide M into blocks of size , obtaining the multidimensional array B composed of L blocks.

  - Apply the DCT to each block of B, obtaining .

  - Extract the matrices and using the SVD-based Algorithm 2.

- LOGO image extraction:

  - Convert array into a matrix .

  - Decrypt through the inverse ECA using , obtaining indexes.

  - Apply the inverse JST to using indexes to obtain the LOGO image.

- Image MetaData extraction:

  - Remove the extra zeros of to obtain the array .

  - Perform the bitwise operation between and to obtain :

  - Decode using the Hamming code to obtain the binary array V.

  - Convert the binary array V to an alphanumeric array, obtaining the image MetaData as a character string.

Algorithm 2. SVD-based extraction algorithm.
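The MetaData path (text to bits, XOR with a pseudo-random key, zero padding, and the inverse steps at extraction) can be sketched as follows. This is a simplified illustration: the Hamming coding step is omitted, Python's `random.Random` stands in for the random sequence generator (it is not cryptographically secure), and the metadata string, seed, and capacity are made-up example values.

```python
import random


def text_to_bits(text):
    """Convert each ASCII character into 8 bits."""
    return [int(bit) for byte in text.encode("ascii") for bit in format(byte, "08b")]


def bits_to_text(bits):
    """Inverse of text_to_bits."""
    values = [int("".join(map(str, bits[i:i + 8])), 2) for i in range(0, len(bits), 8)]
    return bytes(values).decode("ascii")


def keystream(length, seed):
    """Pseudo-random binary string used as the secret key (illustrative generator)."""
    rng = random.Random(seed)
    return [rng.randint(0, 1) for _ in range(length)]


def xor_bits(a, b):
    """Bitwise XOR of two equally long bit lists."""
    return [x ^ y for x, y in zip(a, b)]


def pad(bits, length):
    """Append binary zeros until the watermark reaches the required length."""
    return bits + [0] * (length - len(bits))


metadata = "Owner: Example Lab"          # hypothetical MetaData string
bits = text_to_bits(metadata)
key = keystream(len(bits), seed=2023)    # hypothetical secret key
watermark = pad(xor_bits(bits, key), 256)

# Extraction side: drop the padding, then undo the XOR with the same key.
recovered = bits_to_text(xor_bits(watermark[:len(bits)], key))
print(recovered)
```

Since XOR is its own inverse, the same key recovers the plaintext exactly when no bits were altered; in the full method, the Hamming decoding step would sit between the XOR and the conversion back to characters.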

5. Experiments and Results

The proposed watermarking method was run on a laptop computer with an Intel Core i7 @ 1.6 GHz, 16 GB of RAM, and without a GPU card. The watermarking algorithm had a time consumption of 2.971 s for the insertion stage and 2.640 s for the extraction process. The method was implemented in a non-optimized and non-parallelized script in MATLAB using images of px. The parameters used were for the JST, , maximum order decomposition and for the HT, and for the ECA, for the HVS analysis, and for the SVD-based insertion.

We evaluated our algorithm in different experiments: the insertion and extraction processes, robustness against attacks, and a comparison with other methods.

All the experiments were carried out using the 49 publicly available grayscale images of px (see Section 3.1).

5.1. Performance Measures

To assess the performance of our watermarking algorithm, we employed the following metrics [53]: Mean-Squared Error (MSE), Peak Signal-to-Noise Ratio (PSNR), Structural Similarity Index (SSIM), Mean Structural Similarity Index (MSSIM), Normalized Cross-Correlation (NCC), Bit Error (), and Bit Error Rate (BER). In the cases of the MSE, PSNR, SSIM, MSSIM, and NCC, the images were px, where x and y represent the spatial coordinates:

- The MSE refers to a statistical metric used to measure the image’s quality. It evaluates the squared difference between a pixel in the original image and the watermarked image . After calculating this result for all pixels in the image, it returns the average result, as is shown in Equation (23): If the MSE = 0, this indicates that there is no error between the original image and the watermarked image.

- The PSNR is defined in Equation (24): A higher PSNR value indicates a higher image quality, and the PSNR approaches infinity as the two images become identical. Conversely, low PSNR values indicate large differences between the images [57].

- The Mean Structural Similarity Index (MSSIM) has a value determined by Equation (25): where I and correspond to the original and the distorted image, respectively, and represent their j-th local window, and M stands for the number of local windows of the image. In the case that and have no negative values, the value of the SSIM is calculated as shown in Equation (26): where the averages of I and are given by and , respectively, their standard deviations are given by and , the covariance between both images is represented by , and the constants and are used to prevent instability if the denominator happens to have a value close to zero [58]. The SSIM is a metric that quantifies the similarity between two images and is believed to correlate with the quality perception of the human visual system [57]. The SSIM ranges in the interval . Thus, values close to zero indicate uncorrelated images, and values close to 1 indicate nearly identical images.

- The Normalized Cross-Correlation coefficient (NCC) measures the amount of similarity between two images ( and ) given their gray level intensities, as illustrated by Equation (27): where represents the average value of .

- The Bit error () denotes the number of wrong bits extracted from the binary string , regarding the total bits (N) embedded in the binary string [53].

- The Bit Error Rate (BER) is similar to the bit error, but it measures the ratio between the number of wrong bits extracted from the binary string , regarding the total bits (N) embedded in the binary string [53] (see Equation (28)):
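The simpler metrics in this list can be sketched in plain Python as follows. The formulas are the standard textbook definitions, assumed here to match Equations (23), (24), (27), and (28): an 8-bit peak value of 255 for the PSNR, a mean-subtracted NCC, and a BER expressed as a percentage. The function names are hypothetical.

```python
import math


def mse(a, b):
    """Mean-squared error between two equally sized images (lists of rows)."""
    count = len(a) * len(a[0])
    return sum((p - q) ** 2 for ra, rb in zip(a, b) for p, q in zip(ra, rb)) / count


def psnr(a, b, peak=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    error = mse(a, b)
    return math.inf if error == 0 else 10.0 * math.log10(peak ** 2 / error)


def ncc(a, b):
    """Mean-subtracted normalized cross-correlation (undefined for constant images)."""
    fa = [p for row in a for p in row]
    fb = [q for row in b for q in row]
    ma, mb = sum(fa) / len(fa), sum(fb) / len(fb)
    num = sum((p - ma) * (q - mb) for p, q in zip(fa, fb))
    den = math.sqrt(sum((p - ma) ** 2 for p in fa) * sum((q - mb) ** 2 for q in fb))
    return num / den


def bit_errors(sent, received):
    """Number of wrong bits and the bit error rate in percent."""
    wrong = sum(s != r for s, r in zip(sent, received))
    return wrong, 100.0 * wrong / len(sent)


original = [[10, 20], [30, 40]]
marked = [[11, 20], [30, 39]]
print(mse(original, marked), round(psnr(original, marked), 2))
print(bit_errors([1, 0, 1, 1], [1, 1, 1, 1]))
```

Returning infinity for identical images mirrors the convention used in the results, where a perfectly recovered LOGO is reported with MSE = 0 and a PSNR far above 60 dB.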

5.2. Sensitivity Analysis of the Scaling Factor

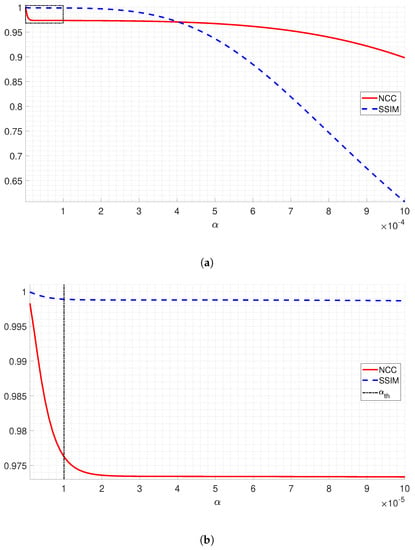

A critical parameter in the proposed SVD-based watermarking method (see Algorithm 1) corresponds to the scaling factor . This parameter defines the imperceptibility, on the one hand, and robustness, on the other, of the proposed watermarking method. A low value ensures imperceptibility, but minimizes robustness, while a high value gives strong robustness, but neglects the imperceptibility of the watermarks. Thus, to fix a suitable value of , we performed a sensitivity analysis of the scaling factor by varying from 10 to 10 with steps of 10, obtaining a set of one hundred different values. Then, we embedded the watermarks into the Lena image and calculated the performance metrics. Thus, for the watermarked image, we computed the NCC and SSIM values; for the extracted LOGO image, the NCC values; and for the image MetaData recovered, the BER values. We obtained an NCC = 1 for the LOGO watermark and a BER = 0 for the image MetaData extracted using the one hundred values of , which showed that did not affect the extraction process. However, the NCC and SSIM metrics for the watermarked image showed a behavior dependent on . In Figure 12a, we show a plot of the NCC (solid red line) and SSIM (dashed blue line) values of the watermarked image as a function of , where both metrics decreased when increased. Then, Figure 12b shows an enlargement of the left-upper rectangle region (black dotted line) of Figure 12a, showing that, for values greater than 10 (vertical black dotted line), both the NCC and SSIM values decreased considerably. For this reason, we fixed the scaling factor to 10 to ensure, on the one hand, the quality of the marked image and, on the other hand, the correct extraction of both watermarks.

Figure 12.

Sensitivity analysis of the scaling factor. (a) NCC (solid red line) and SSIM (dashed blue line) values of the watermarked image versus . (b) Enlargement of the left-upper rectangle region (black dotted line) of (a) showing the defined limit value of .

5.3. Watermarking Insertion and Extraction Performance Analysis

We tested our algorithm on the 49 grayscale images shown in Section 3.1 to insert and extract the LOGO watermark (Figure 2a) and the image MetaData (Figure 2b).

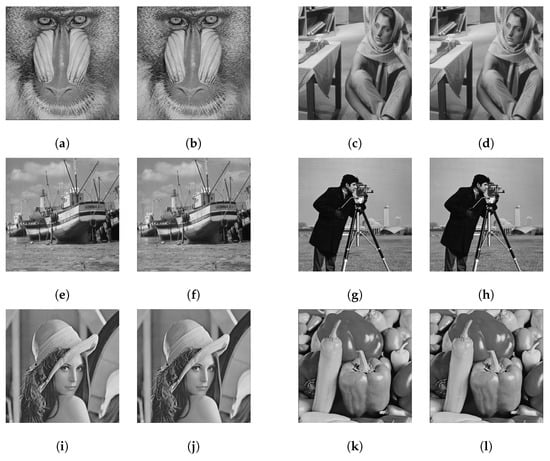

In Table 2, we present the metrics’ averages by applying the algorithm to the 49 grayscale images. In addition, in Table 3, we show only the results using six representative images. However, the results were similar for the other images. As representative images, we selected the following images commonly used to test image-processing algorithms, and at the same time, they represent the variability of both low and high spatial frequencies: Baboon, Barbara, Boat, Cameraman, Lena, and Peppers. The experimental results using these representative images are shown in Figure 13, where each pair shows the original image on the left and the watermarked image on the right.

Table 2.

Metrics’ averages using 49 grayscale images, showing the metrics over the watermarked images and the metrics of the LOGO and MetaData extracted.

Table 3.

Metrics’ averages using only six representative images, showing the metrics over the watermarked images and the metrics of the LOGO (MSE, PSNR, NCC, SSIM, MSSIM) and MetaData (BER) extracted.

Figure 13.

Results of original images and their watermarked images without attack. (a,c,e,g,i,k) correspond to the original images Baboon, Barbara, Boat, Cameraman, Lena, and Peppers, respectively. (b,d,f,h,j,l) correspond to the watermarked images.

Table 2 and Table 3 show, through the values of the metrics (PSNR, MSSIM), that the watermarks are visually imperceptible, so embedding the LOGO and the image MetaData did not introduce perceptible changes. In relation to the extracted watermark and the MetaData, all metrics demonstrated that we can recover them perfectly. Among the six representative images, the best results were for the Peppers image; after inserting the watermark, the quantity of the pixels modified was 5.8020, and the rest of the metrics demonstrated that there were no visual changes. The Cameraman image presented the highest MSE value and the lowest PSNR value, but even these values indicated that the watermark was not perceptible.

5.4. Watermarking Robustness against Attacks

To evaluate the robustness against attacks of the proposed watermarking method, we applied the most-common processing and geometric attacks to the 49 grayscale watermarked images of Section 3.1.

Processing operations: Gaussian filter and median filter; Gaussian noise and salt and pepper (SP) noise. Gaussian filter: window of and varying N from 2 to 11; median filter: window of , with N varying between 2 and 9; Gaussian noise: with and increments of 0.05; SP noise: with noise density varying between 0 and 1 and increments of 0.1. Additionally, we applied image compression and image scaling. JPEG compression: with a variation of the quality factor between 0% and 100% and steps of 10%; scale: with a scale factor varying between 0.25 and 4 and steps of 0.25. Furthermore, we applied histogram equalization and contrast enhancement. Equalization: varying the number of discrete equalization levels 2^n, where n is from 2 to 8. Contrast enhancement: varying f from 0.01 to 0.45 with increments of 0.05, i.e., saturating the bottom and the top of all pixel values using the histogram information.

Geometric attacks: rotation, varying the rotation angle from 1° to 180° with steps of 5°; translation, varying the displacement from 10 to 100 px with increments of 10. Finally, we cropped the watermarked image, substituting the original pixels with black pixels, with the cropping percentage p ranging from 0 to 95%. Table 4 shows the metrics’ averages using the 49 grayscale watermarked images for all attacks with parameter variation, including the metrics of the LOGO and MetaData recovered. As we can see, only with rotation and cropping was it difficult to recover the LOGO and the MetaData. For both cases, the bits modified in the MetaData were significant, and the LOGO images had visual modifications, as indicated by the PSNR and SSIM values. With Gaussian noise, SP noise, scale, and contrast enhancement, = 0, , NCC = 1, SSIM = 1, MSSIM = 1, and MSE = 0, indicating that the recovered watermark was equal to the inserted watermark. In the rest of the attacks (Gaussian filter, median filter, translation, JPEG compression, and histogram equalization), the values of the PSNR, NCC, SSIM, and MSSIM indicated that there was a high similarity between the original watermark and the extracted watermark. In the case of the LOGO image, it could present visual changes, but it was still visible, and the MetaData changed in some characters. It is important to note that, in this table, we included the (number of modified bits) and (BER ratio) to identify if a LOGO had modified bits. For example, with the Gaussian filter, = 0 indicates that the watermark did not have modified bits, but if we calculated the BER ratio (), it was clear that it had few modifications.
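Two of the attacks listed above are simple enough to sketch directly. The code below is an illustration under stated assumptions, not the evaluation code: salt and pepper noise replaces each pixel with 0 or 255 with probability equal to the noise density, and cropping blacks out a horizontal band at the top of the image whose height is p percent of the rows (the text does not state which region was cropped). The function names and the seed are hypothetical.

```python
import random


def salt_and_pepper(image, density, seed=0):
    """Replace a fraction `density` of the pixels with 0 or 255 at random."""
    rng = random.Random(seed)
    noisy = [row[:] for row in image]
    for r in range(len(noisy)):
        for c in range(len(noisy[0])):
            if rng.random() < density:
                noisy[r][c] = 255 if rng.random() < 0.5 else 0
    return noisy


def crop_with_black(image, percent):
    """Substitute the top `percent` of the rows with black pixels (assumed region)."""
    cut = round(len(image) * percent / 100)
    return [[0] * len(row) if r < cut else row[:] for r, row in enumerate(image)]


image = [[100 + r + c for c in range(4)] for r in range(4)]
print(salt_and_pepper(image, density=0.5, seed=1))
print(crop_with_black(image, percent=50))
```

Fixing the seed makes a noisy test case reproducible, which is convenient when the same attacked image must be fed to several extraction runs.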

Table 4.

Metrics’ averages using 49 grayscale images for all attacks with parameter variation, showing the metrics of the LOGO (MSE, PSNR, NCC, SSIM, MSSIM) and MetaData (, BER) extracted.

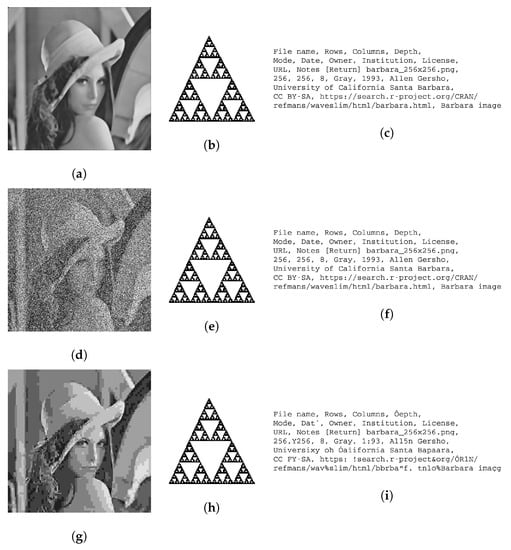

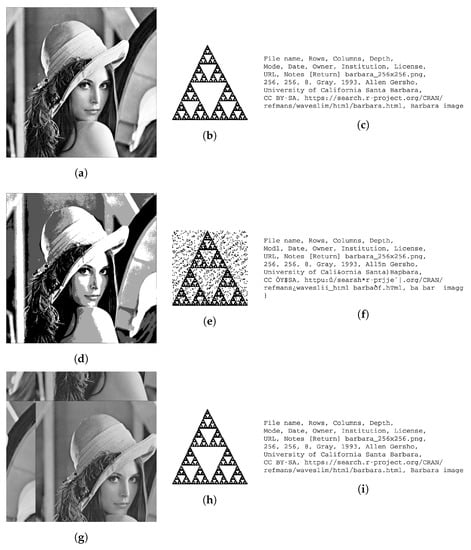

To analyze in detail the results of the robustness against attacks, in Table 5, we show the metrics’ averages for all attacks with parameter variation, showing the metrics for the recovery of the LOGO and MetaData extracted after applying the algorithm using only the Lena image. The results for each metric demonstrated that our proposal is robust and imperceptible. In Figure 14, Figure 15 and Figure 16, we present the attacked Lena image, the recovered watermark, and the recovered MetaData after applying some attacks. In Figure 14, we can observe that, after applying the median filter, SP noise, and JPEG compression, it was possible to recover the watermark and MetaData. The same result was obtained when we applied histogram equalization and translation (Figure 15). Finally, in Figure 16, we can see that, if we rotate or crop the watermarked image, in some cases, it was not possible to recover the watermark and the MetaData without modifications, but in the majority of the cases, we had good results.

Table 5.

Metrics obtained from all attacks, with parameter variation, after applying the watermarking algorithm over Lena’s image and showing the metrics of the LOGO (MSE, PSNR, NCC, SSIM, MSSIM) and MetaData (, BER) extracted.

Figure 14.

Median filter (9): (a) Filtered Lena image. (b) Recovered watermark (LOGO). (c) Recovered MetaData. SP noise (0.5): (d) Noisy Lena image. (e) Recovered watermark (LOGO). (f) Recovered MetaData. JPEG compression (0): (g) Compressed Lena image. (h) Recovered watermark (LOGO). (i) Recovered MetaData.

Figure 15.

Histogram equalization (128): (a) Equalized Lena image. (b) Recovered watermark (LOGO). (c) Recovered MetaData. Histogram equalization (4): (d) Equalized Lena image. (e) Recovered watermark (LOGO). (f) Recovered MetaData. Translation (100): (g) Translated Lena image. (h) Recovered watermark (LOGO). (i) Recovered MetaData.

Figure 16.

Rotation (90): (a) Rotated Lena image. (b) Recovered watermark (LOGO). (c) Recovered MetaData. Rotation (45): (d) Rotated Lena image. (e) Recovered watermark (LOGO). (f) Recovered MetaData. Cropping (30): (g) Cropped Lena image. (h) Recovered watermark (LOGO). (i) Recovered MetaData. Cropping (45): (j) Cropped Lena image. (k) Recovered watermark (LOGO). (l) Recovered MetaData.

5.5. Computational Complexity

Since the watermarking insertion/extraction processes are composed of several stages, we give the complexity for those stages that involve the host image in the insertion process, and we did not include the pre-processing of either the LOGO watermark or the image MetaData because of their small dimensions compared to the host image. Thus, the computational complexity for a grayscale image of px is given as follows:

- Hermite transform (for both the decomposition and reconstruction stages): , where is the number of coefficients and represents the size of the binomial window.

- HVS stage: , where is the size of each block.

- DCT and inverse DCT: .

- SVD, which is applied several times in the insertion process: , which could be simplified considering that .

- SVD reconstruction: , for .

Finally, fixing , , and , the simplified computational complexity is given by: , and resolving it, we obtain the following total computational complexity: .

5.6. Comparison with other Methods

In order to evaluate our proposed scheme, we compared it to other similar approaches. To have a valid comparison, it is important to have elements in common, such as the database, watermark type, the metrics to evaluate each algorithm, and the attacks applied. After reviewing the state-of-the-art, we decided to compare our algorithm to algorithms that use typical images for this application (Baboon, Barbara, Boat, Cameraman, Lena, and Peppers) and that report at least the PSNR, SSIM, and 2D correlation as metrics. Implementing the algorithms selected for the comparison was difficult because, in some cases, the authors did not describe their proposals in sufficient detail, so we took their published results. In Table 6, we present the results of our proposal compared with [1,5,6,9,13,17,29,31,32,33,34,35]. In all cases, the original image used was Lena. The metrics shown are of the watermarked image.

Table 6.

Comparison between different types of watermarking systems [1,5,6,9,13,17,29,31,32,33,34,35] with our algorithm using the Lena image. The best result for each parameter is indicated by the values highlighted in bold in each column.

As we can see from Table 6, the proposals reported good results for all metrics, including ours, so even small differences are relevant when ranking them. Concerning the PSNRs, even though [29] reported the highest PSNR value, success against attacks was not guaranteed. Furthermore, we can assume that, when two images are equal, their PSNR dB, so any algorithm that reported PSNR values or ≫60 indicated that both the watermarked and the original image were equal. In this situation, our algorithm and the algorithms [6,29,31,32] had PSNR values ≫60. Regarding the SSIM and NCC, the best results were obtained by our proposal and [6,29,35], so we can assume that the watermarked image did not have perceptual modifications. Finally, with our algorithm, there was no error (MSE = 0) between the original and the marked image. Only two techniques [32,35] reported MSE values, and they were different from zero. Therefore, our method did not have errors in the insertion process and extraction process. Finally, after reviewing each result of [6,29] and our proposal, the three methods had the same values for the SSIM and NCC, in addition to reported values of PSNR ≫ 60 dB; there was no difference between them in these metrics, so we can conclude that the three methods are good watermarking techniques. However, there was a difference between them: the watermark. In [29], the authors used an image (); in [6], they used a LOGO (); with our proposal, it is possible to insert both an image () and information about the owner or the technical data of the original image in text format. Therefore, we can conclude that our proposal is competitive concerning other state-of-the-art works, giving similar performance evaluations, but with the advantage of a higher loading capacity.

To have more statistical significance, we included a comparison with [1,5,9,13,17,33,34,35] using other images (Barbara, Baboon, Peppers, and Pirate). In Table 7, we present the obtained results for each method after inserting the watermark. It is important to see that the methods did not use all the indicated images and the metrics, but we included them because it is important to compare our technique and demonstrate its effectiveness.

Table 7.

Comparison between different types of watermarking systems [1,5,9,13,17,33,34,35] with our algorithm using the Barbara, Peppers, and Pirate images. The best results for each proposal are indicated by the values highlighted in bold in each column.

Once again, we demonstrated that our proposal is competitive compared to other techniques using different images (Table 7), with optimal results for the metrics used.

To determine the effectiveness (robustness) of the proposed method, it was necessary to have a valid comparison, so we chose the proposals [1,13,17,29,31,32,34,35], which reported their results with the same attacks and the same parameters. In addition, we took into account the metrics employed by each one. Table 8 presents the results obtained after applying the Gaussian filter to the watermarked image. Table 9 shows the metrics’ values after applying the median filter. The comparison of the JPEG compression is presented in Table 10. Finally, Table 11 shows the results after applying the scale attack, and Table 12 the results for the rotation attack.

Table 8.

Comparison between different types of watermarking systems [1,29] with our algorithm, after applying the Gaussian filter using the Lena image. The best result for each parameter is indicated by the values highlighted in bold in each column.

Table 9.

Comparison between different types of watermarking systems [17,29,31,32] with our algorithm, after applying the median filter using the Lena image. The best result for each parameter is indicated by the values highlighted in bold in each column.

Table 10.

Comparison between different types of watermarking systems [13,17,29,34] with our algorithm, after applying JPEG compression using the Lena image. The best result for each parameter is indicated by the values highlighted in bold in each column.

Table 11.

Comparison between different types of watermarking systems [13,17,35] with our algorithm, after applying the scale attack using the Lena image. The best result for each parameter is indicated by the values highlighted in bold in each column.

Table 12.

Comparison between different types of watermarking systems [29,31] with our algorithm, after applying the rotation attack using the Lena image. The best result for each parameter is indicated by the values highlighted in bold in each column.

From Table 8, Table 9 and Table 10, it is clear that our algorithm had the best results for all metrics, demonstrating that the recovered watermarks (LOGO and MetaData) did not suffer alterations after applying the Gaussian filter, median filter, and JPEG compression. Regarding the scale attack (Table 11), we can determine that the watermark extracted with our algorithm was the same as the original watermark. Finally, with the rotation attack (Table 12), the proposal [31] had the highest NCC values (very close to 1), and it is clear that our proposal did not overcome this attack.

Regarding Gaussian noise, only the algorithm from [34] was tested with the same noise density parameter as ours. Table 13 shows the results. Meanwhile, Table 14 presents the results for SP noise compared with [13,34].

Table 13.

Comparison between watermarking systems [34] with our algorithm, after applying Gaussian noise using the Lena image. The best result for each parameter is indicated by the values highlighted in bold in each column.

Table 14.

Comparison between different types of watermarking systems [13,34] with our algorithm, after applying SP noise using the Lena image. The best result for each parameter is indicated by the values highlighted in bold in each column.

From the results shown in Table 13 and Table 14, we can confirm that, when we applied Gaussian noise or SP noise to the marked image, we could fully extract the watermarks. The metrics’ values for SSIM and NCC were equal to 1 using our algorithm, and BER = 0.
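As an illustration of how the values BER = 0 and NCC = 1 arise for a perfectly recovered watermark, the following sketch computes both metrics. NCC has several definitions in the literature; the normalized form used here is an assumption and may differ from the one applied in this work. The function names are hypothetical.

```python
import numpy as np

def ber_percent(original_bits, extracted_bits):
    """Bit error rate as the percentage of differing bits."""
    a = np.asarray(original_bits, dtype=np.uint8).ravel()
    b = np.asarray(extracted_bits, dtype=np.uint8).ravel()
    if a.size != b.size:
        raise ValueError("bit sequences must have the same length")
    return 100.0 * np.count_nonzero(a != b) / a.size

def ncc(original, extracted):
    """Normalized cross-correlation (assumed form): <a, b> / (||a|| * ||b||)."""
    a = np.asarray(original, dtype=np.float64).ravel()
    b = np.asarray(extracted, dtype=np.float64).ravel()
    denom = np.sqrt(np.sum(a ** 2)) * np.sqrt(np.sum(b ** 2))
    if denom == 0.0:
        raise ValueError("NCC is undefined for an all-zero watermark")
    return float(np.sum(a * b) / denom)
```

For a binary LOGO, a single flipped pixel already lowers the NCC below 1 and raises the BER above 0, so the reported values correspond to an extraction without any bit errors.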

Finally, we decided to include a comparison with other techniques using different images. The comparison of Gaussian noise with [33] is presented in Table 15 using the Barbara image. Table 16 shows the metrics’ values after applying SP noise compared with [34], using the Pirate image, and Table 17 presents the comparison of JPEG compression, using the Baboon image, with [17,34].

Table 15.

Comparison between watermarking systems [33] with our algorithm, after applying SP noise using the Barbara image. The best result for each parameter is indicated by the values highlighted in bold in each column.

Table 16.

Comparison between watermarking systems [34] with our algorithm, after applying SP noise using the Pirate image. The best result for each parameter is indicated by the values highlighted in bold in each column.

Table 17.

Comparison between different types of watermarking systems [17,34] with our algorithm, after applying JPEG compression using the Baboon image. The best result for each parameter is indicated by the values highlighted in bold in each column.

6. Discussion

The experiments and results demonstrated that the image watermarking method based on SVD, the HVS, the HT, and the DCT is a robust and secure technique with the capacity to insert two different watermarks: the image LOGO and the image MetaData, the latter in plaintext format containing information about the cover image or the image’s owner. Compared with the majority of the state-of-the-art proposals, we had an advantage because they only used one watermark.

We evaluated the algorithm on 49 digital images by inserting both watermarks at the same time and applying different attacks (processing and geometric operations). We used four different metrics to demonstrate that the watermarked images did not suffer visual alterations and that the extracted watermark, in the majority of cases, was recovered perfectly.

To obtain an imperceptible and robust algorithm, we adopted a hybrid approach that combines the Hermite Transform (HT), Singular-Value Decomposition (SVD), the Human Vision System (HVS), and the Discrete Cosine Transform (DCT), and to achieve greater security, we encrypted the watermark. On the one hand, we encrypted the watermark (LOGO) by combining the Jigsaw transform and the ECA. On the other hand, we applied a Hamming error-correcting code to the MetaData to reduce channel distortion.
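The protection of the MetaData can be sketched as follows. The sketch assumes a Hamming(7,4) code with single-error correction and a seeded NumPy generator as the random sequence for the XOR step. The code length, the generator, the order of the two operations, and all function names are assumptions made for illustration, since they are not specified in this section. NumPy's generator is not cryptographically secure and only stands in for the random sequence generator.

```python
import numpy as np

# Systematic Hamming(7,4): codeword = [4 data bits | 3 parity bits].
P = np.array([[1, 1, 0], [1, 0, 1], [0, 1, 1], [1, 1, 1]], dtype=np.uint8)
G = np.hstack([np.eye(4, dtype=np.uint8), P])    # generator matrix, 4 x 7
H = np.hstack([P.T, np.eye(3, dtype=np.uint8)])  # parity-check matrix, 3 x 7

def text_to_bits(text):
    return np.unpackbits(np.frombuffer(text.encode("utf-8"), dtype=np.uint8))

def bits_to_text(bits):
    return np.packbits(bits).tobytes().decode("utf-8")

def xor_with_keystream(bits, seed):
    """XOR the bits with a seeded pseudo-random binary sequence (self-inverse)."""
    rng = np.random.default_rng(seed)
    keystream = rng.integers(0, 2, size=bits.size, dtype=np.uint8)
    return bits ^ keystream

def hamming74_encode(bits):
    """Encode groups of 4 bits into 7-bit codewords (one codeword per row)."""
    data = np.asarray(bits, dtype=np.uint8).reshape(-1, 4)
    return (data @ G) % 2

def hamming74_decode(codewords):
    """Correct up to one bit error per codeword and return the data bits."""
    code = np.array(codewords, dtype=np.uint8).reshape(-1, 7)  # working copy
    syndromes = (code @ H.T) % 2
    for row, syndrome in zip(code, syndromes):
        if syndrome.any():
            # The syndrome equals the column of H at the error position.
            pos = int(np.flatnonzero((H.T == syndrome).all(axis=1))[0])
            row[pos] ^= 1
    return code[:, :4].reshape(-1)
```

In this arrangement, the text is converted to bits, XORed with the keystream, and then Hamming-encoded before insertion; extraction decodes first and then applies the same XOR with the same seed, which acts as the key.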

The insertion process (Figure 10) shows all the elements we considered. First, we applied the HT to the original image, because this transform guarantees imperceptibility. We chose the low-frequency coefficient and divided it into blocks of size 4 × 4. Then, to determine the best regions (those with more redundant information) to insert the watermark, we used a combination of entropy and edge entropy (HVS analysis). Figure 11b shows an example highlighting the most-suitable regions to insert the watermark. This HVS analysis was applied to each block, and then, we used the DCT (this transformation demonstrated greater effectiveness when applied to smaller block sizes). To insert the watermark, we used SVD because, as we explained, the singular values of a digital image are, in the majority of cases, barely affected by various attacks. We inserted the watermark into the S coefficients (the singular values). Finally, we applied the IDCT, and the blocks were joined to calculate the inverse HT. An important element to take into account is the scaling factor, because it defines the trade-off between the imperceptibility and the robustness of the watermarking method. The insertion and extraction processes were similar: the proposed method is symmetric, and the extraction stage applied the inverse operations to those used in the insertion.
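The DCT and SVD steps on a single 4 × 4 block can be sketched as below. This is a simplified illustration under stated assumptions, not the embedding rule of the proposed method: the HT and the HVS block selection are omitted, one bit is added to the largest singular value with a hypothetical scaling factor `alpha`, and extraction is non-blind (it uses the original singular value). The orthonormal DCT-II is built as an explicit matrix, and all names are illustrative.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II matrix C, so that the 2D DCT of X is C @ X @ C.T."""
    k = np.arange(n).reshape(-1, 1)
    i = np.arange(n).reshape(1, -1)
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] = np.sqrt(1.0 / n)
    return c

C = dct_matrix(4)

def embed_bit(block, bit, alpha):
    """Embed one bit in the largest singular value of the block's DCT."""
    coeffs = C @ np.asarray(block, dtype=np.float64) @ C.T
    u, s, vt = np.linalg.svd(coeffs)
    s_marked = s.copy()
    s_marked[0] += alpha * bit              # assumed additive rule
    marked_coeffs = u @ np.diag(s_marked) @ vt
    marked_block = C.T @ marked_coeffs @ C  # inverse DCT
    return marked_block, s[0]               # s[0] is kept for extraction

def extract_bit(marked_block, s0_original, alpha):
    """Recover the bit by comparing the largest singular value with the original."""
    coeffs = C @ np.asarray(marked_block, dtype=np.float64) @ C.T
    s0 = np.linalg.svd(coeffs, compute_uv=False)[0]
    return int(s0 - s0_original > alpha / 2)
```

Because the DCT matrix is orthonormal, embedding a bit equal to 1 changes the block by exactly `alpha` in the Frobenius norm in this sketch, which illustrates how a larger scaling factor increases robustness at the cost of imperceptibility.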

To test the effectiveness of this method, we applied the insertion and extraction process to 49 different digital images, evaluated its robustness against attacks, and compared it with other methods. To assess the quality of our algorithm, we used typical metrics employed in this kind of application (MSE, PSNR, SSIM, MSSIM, NCC, and BER). For the original image, the watermarked image, the original watermark (LOGO), and the extracted watermark, we employed the metrics that indicated if an image had suffered visual alterations or if two images were equal, and in the case of the MetaData, we calculated the BER to measure how many bits were modified in the recovered watermark. The metrics’ values in Table 2 demonstrated that the watermarked images did not have visual modifications and that the extracted watermarks (LOGO and MetaData) were the same as the originals. Furthermore, we presented the results of six representative images (Table 3). In all cases, the extracted watermark (LOGO) and MetaData were equal to the original. The watermarked image did not have visual modifications, although the worst MSE was obtained with the Cameraman image (MSE = 9.9479). Therefore, this algorithm guarantees imperceptibility and perfect extraction.

To evaluate the robustness of the algorithm, we tested it with the majority of attacks that are common in watermarking applications. In total, we applied 11 attacks (common processing and geometric operations). From Table 4, we can see that, for four attacks (Gaussian noise, SP noise, scaling, and contrast enhancement), the watermark could be recovered perfectly without errors, while for the rest of the attacks, the metrics’ values indicated that the extracted watermark could differ from the original watermark. This difference did not prevent the identification of the LOGO, although the modification of the bits in the MetaData did change its meaning. Nevertheless, if one of the two watermarks is recovered clearly, the method can be validated. The worst cases for recovering the watermark were the cropping and rotation attacks.
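Two of the attacks mentioned here, Gaussian noise and SP noise, can be simulated with the following minimal sketch. It assumes 8-bit grayscale images, a Gaussian variance specified on the normalized [0, 1] intensity scale, and an SP density equal to the fraction of corrupted pixels split evenly between black and white. These conventions and the function names are assumptions for illustration; the parameters actually used in the experiments are those listed in Table 4.

```python
import numpy as np

def gaussian_noise_attack(image, variance, rng):
    """Add zero-mean Gaussian noise on the [0, 1] scale and return uint8."""
    norm = np.asarray(image, dtype=np.float64) / 255.0
    noisy = norm + rng.normal(0.0, np.sqrt(variance), size=norm.shape)
    return np.rint(np.clip(noisy, 0.0, 1.0) * 255.0).astype(np.uint8)

def salt_and_pepper_attack(image, density, rng):
    """Set about density/2 of the pixels to 0 and about density/2 to 255."""
    out = np.array(image, dtype=np.uint8)  # copy, the input stays untouched
    draw = rng.random(out.shape)
    out[draw < density / 2] = 0            # pepper
    out[draw >= 1.0 - density / 2] = 255   # salt
    return out
```

A robustness test would apply one of these functions to the watermarked image, run the extraction, and then compare the extracted watermarks with the originals using the metrics listed above.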

Finally, in comparison with other similar algorithms, it is clear that our proposal presented equal or higher values for all metrics (Table 6). It is important to note that it was difficult to compare with other proposals because, in some cases, we used stronger attacks. Therefore, we presented the outcomes of the algorithms that employed parameters identical to ours (Table 8, Table 9, Table 10, Table 11, Table 12, Table 13 and Table 14). It is clear that our method had better robustness and watermark capacity. Another difficulty was comparing with other methods on different images, because this depended on the results published in each research work. Therefore, from the state-of-the-art, we selected the works that presented tests using images other than Lena.

7. Conclusions and Future Work

We presented a robust and invisible hybrid watermarking algorithm for digital images in the transformed domain. We proposed a combination of the HT, DCT, and SVD techniques to give the watermarked images more robustness and imperceptibility. With our proposal, it was possible to use as a watermark both the digital image information (LOGO) and information about the owner (MetaData) and insert them at the same time. The state-of-the-art algorithms that we reviewed use as a watermark only digital images or only information about the owner, and their robustness is better because the watermark has less information. Therefore, we integrated different mathematical tools to insert two different watermarks without compromising imperceptibility and robustness. In addition, we included an encryption process for greater security, whose performance could be analyzed thoroughly in future work.

With tests and results, we demonstrated that our technique is robust to the majority of the attacks used to test it. The parameters that we considered to apply the attacks, in some cases, were stronger than the parameters employed by other proposals. In Table 4, we present each attack that we applied and its parameters, indicating the value of each metric obtained after applying the algorithm. The results showed that, on the one hand, with Gaussian and SP noises, the scale attack, and contrast enhancement, our proposal had excellent performance because, in all cases, the extracted watermark did not have errors and the watermarked image did not present visual modifications. On the other hand, the worst results were obtained when we applied the rotation and cropping attacks, because it was not possible to extract the watermark in some cases.

In terms of the comparison with other proposals, as we explained in Section 5.6, our results were better in all cases (Table 6). It is important to note that, despite the fact that some papers [6,29,32] reported PSNR values above 60 dB, this factor does not ensure robustness. In our case, all metrics showed that our method was robust, secure, and ensured high imperceptibility, making it suitable for effective copyright protection.

As future work, we believe that it is necessary to improve the algorithm against the rotation and cropping attacks, because these were the only attacks that it did not overcome. In addition, we will carry out a thorough analysis of the JST with the ECA for the encryption of the image watermark, and we will explore a combined watermarking/encryption approach to insert information into a host image and encrypt it in the frequency domain.

Author Contributions

Conceptualization, S.L.G.-C., E.M.-A., J.B. and A.R.-A.; methodology, S.L.G.-C., E.M.-A., J.B. and A.R.-A.; software, S.L.G.-C., E.M.-A. and A.R.-A.; validation, S.L.G.-C., E.M.-A., J.B. and A.R.-A.; formal analysis, S.L.G.-C., E.M.-A. and J.B.; investigation, S.L.G.-C., E.M.-A., J.B. and A.R.-A.; resources, S.L.G.-C., E.M.-A. and J.B.; data curation, S.L.G.-C. and E.M.-A.; writing—original draft preparation, S.L.G.-C., E.M.-A., J.B. and A.R.-A.; writing—review and editing, S.L.G.-C., E.M.-A. and J.B.; visualization, S.L.G.-C. and E.M.-A.; supervision, S.L.G.-C., E.M.-A. and J.B.; project administration, S.L.G.-C. and E.M.-A. All authors have read and agreed to the published version of the manuscript.

Funding

The APC was funded by Instituto Politécnico Nacional and by Universidad Panamericana through the Institutional Program “Fondo Open Access” of the Vicerrectoría General de Investigación.

Data Availability Statement

The complete dataset is publicly available on our website: https://sites.google.com/up.edu.mx/invico-en/resources/image-dataset-watermarking.

Acknowledgments

Sandra L. Gomez-Coronel thanks the financial support from Instituto Politecnico Nacional IPN (COFFA, EDI, and SIP). Ernesto Moya-Albor, Jorge Brieva, and Andrés Romero-Arellano thank the School of Engineering of the Universidad Panamericana for all the support in this work.

Conflicts of Interest

The authors affirm that they have no conflict of interest.

Abbreviations

The manuscript employs the following abbreviations:

| AES | Advanced Encryption Standard |

| AT | Arnold Transform |

| Bit error | |

| BER | Bit Error Rate |

| CAE | Convolutional Autoencoder |

| CNN | Deep Convolutional Neural Network |

| DCT | Discrete Cosine Transform |

| DWT | Discrete Wavelet Transform |

| ECA | Elementary Cellular Automata |

| ECC | Elliptic Curve Cryptography |

| FDCuT | Fast Discrete Curvelet Transform |

| FRFT | Fractional Fourier Transform |

| HEVC | High-Efficiency Video Coding |

| HT | Hermite Transform |

| HVS | Human Vision System |

| IDCT | Inverse Discrete Cosine Transform |

| JST | Jigsaw Transform |

| LWT | Lifting Wavelet Transform |

| MRI | Magnetic Resonance Imaging |

| MSE | Mean-Squared Error |

| MSF | Multiple Scaling Factors |

| MSSIM | Mean Structural Similarity Index |

| NBP | Normalized Block Processing |

| NCC | Normalized Cross-Correlation |

| SP | Salt and Pepper |

| PSNR | Peak Signal To Noise Ratio |

| PSO | Particle Swarm Optimization |

| QIM | Quantization Index Modulation |

| RIDWT | Redistributed Invariant Wavelet Transform |

| RSA | Rivest–Shamir–Adleman |