A Stereo-Vision-Based Spatial-Positioning and Postural-Estimation Method for Miniature Circuit Breaker Components

Abstract

Featured Application


1. Introduction

2. Prior Work

3. Scheme of the Proposed Method

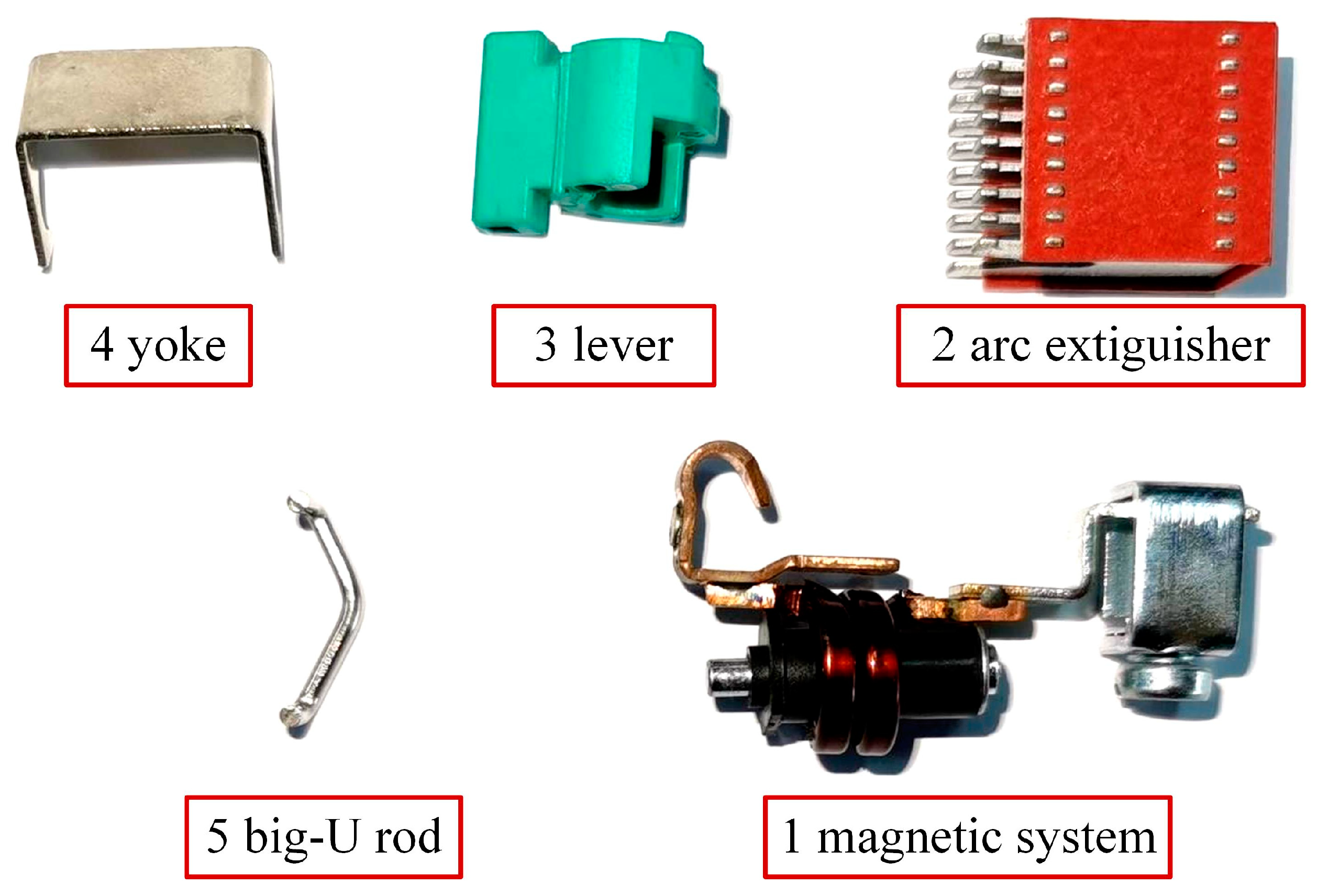

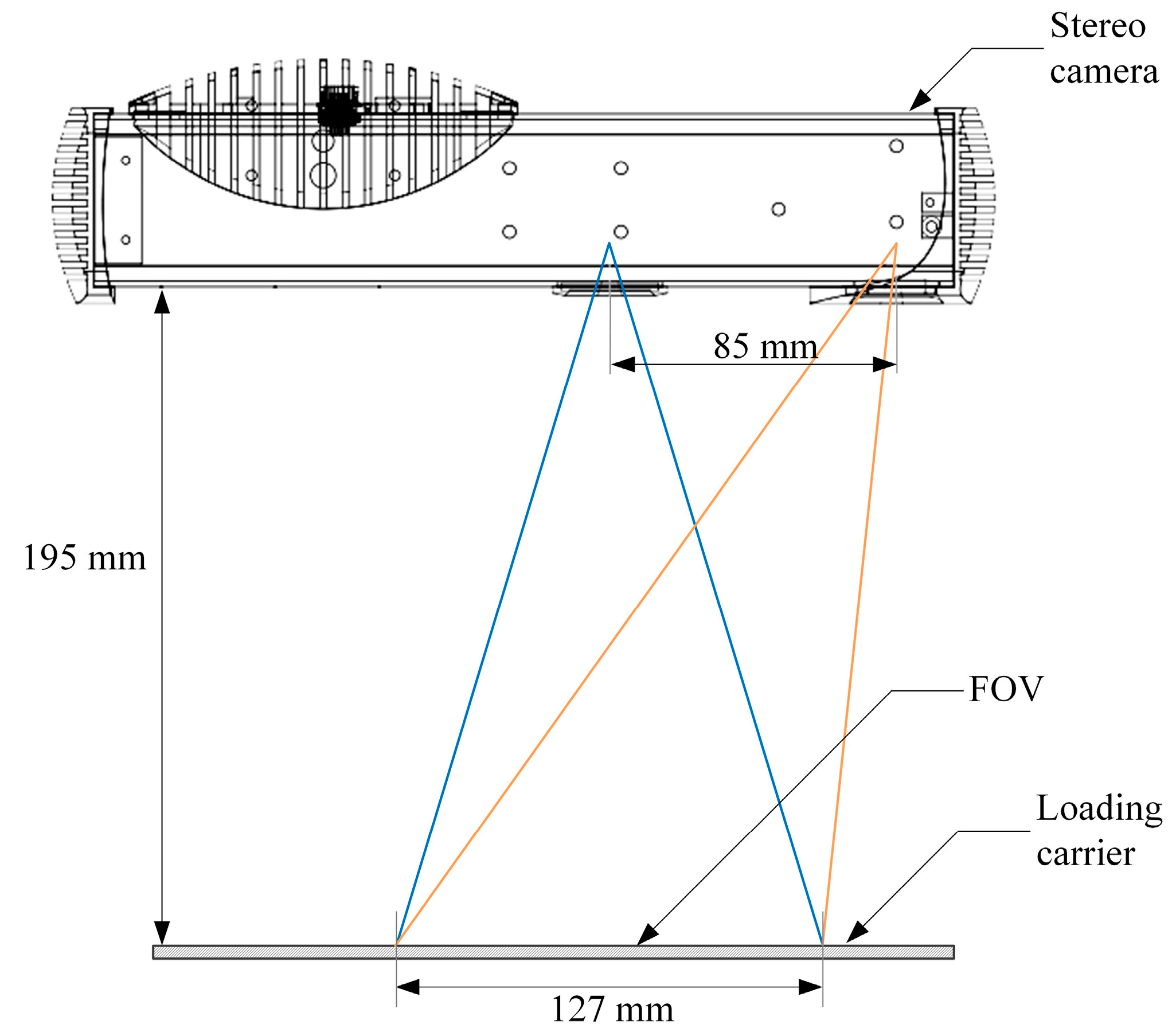

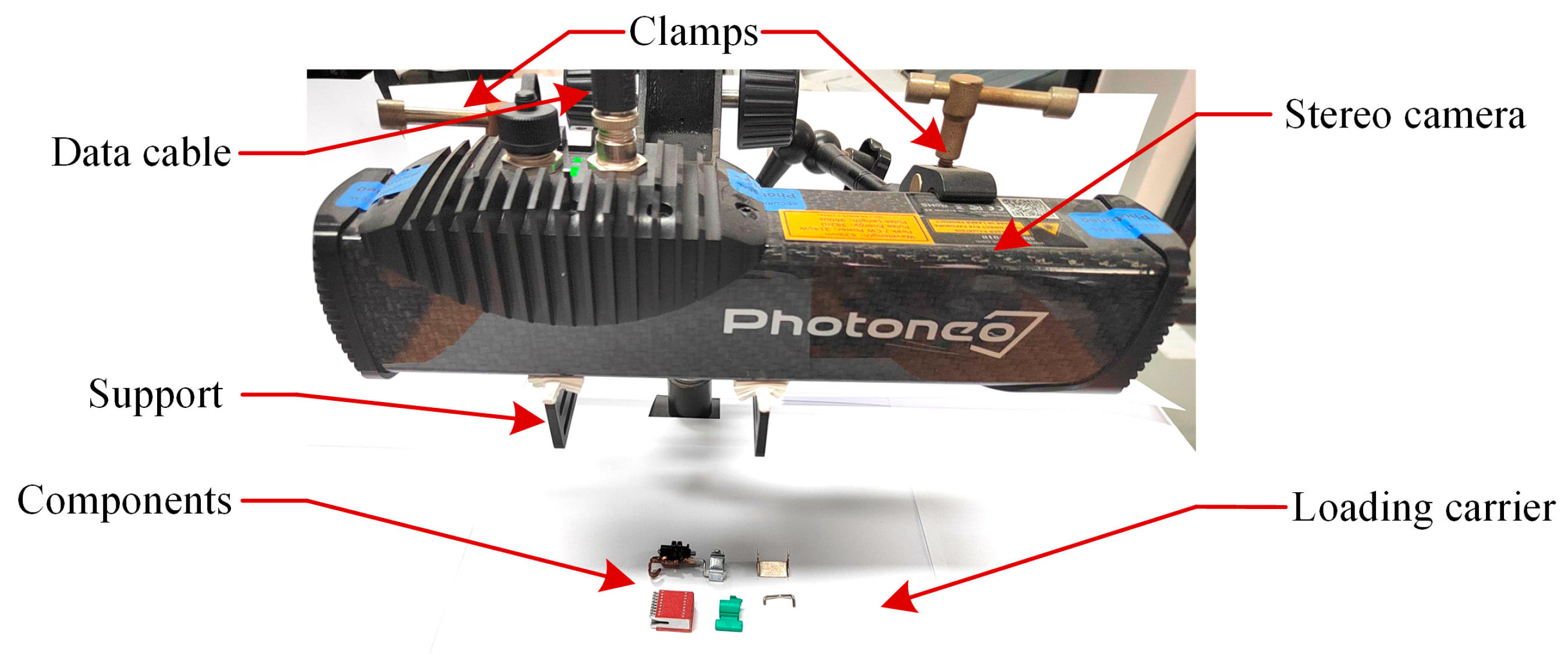

3.1. Hardware of the Vision System

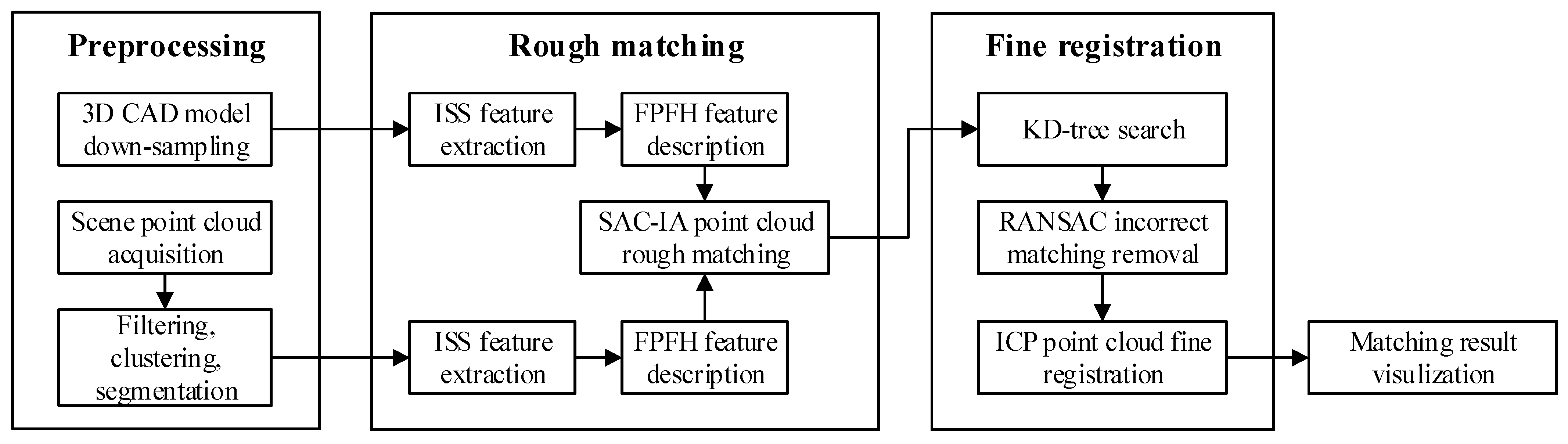

3.2. Overview of the Method

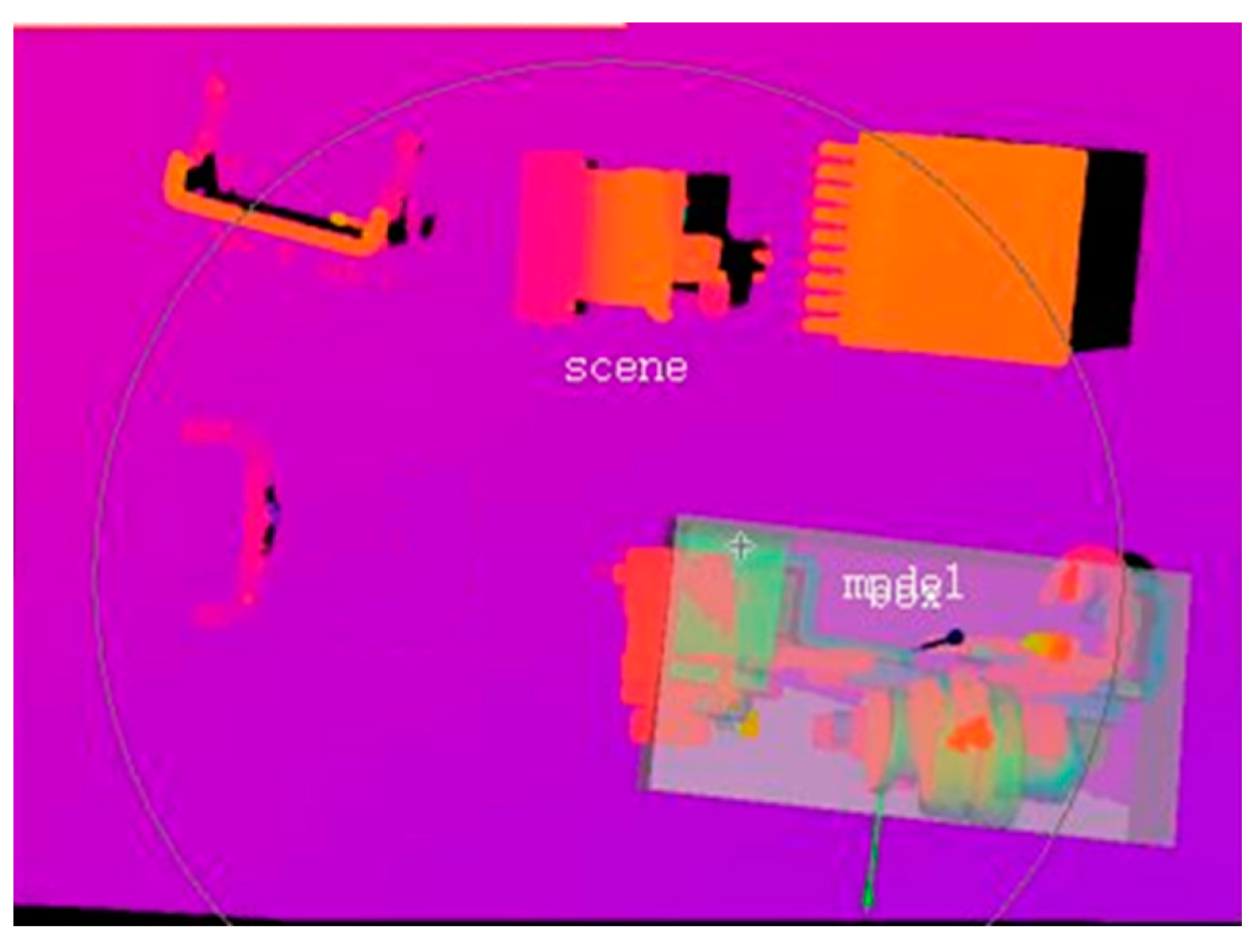

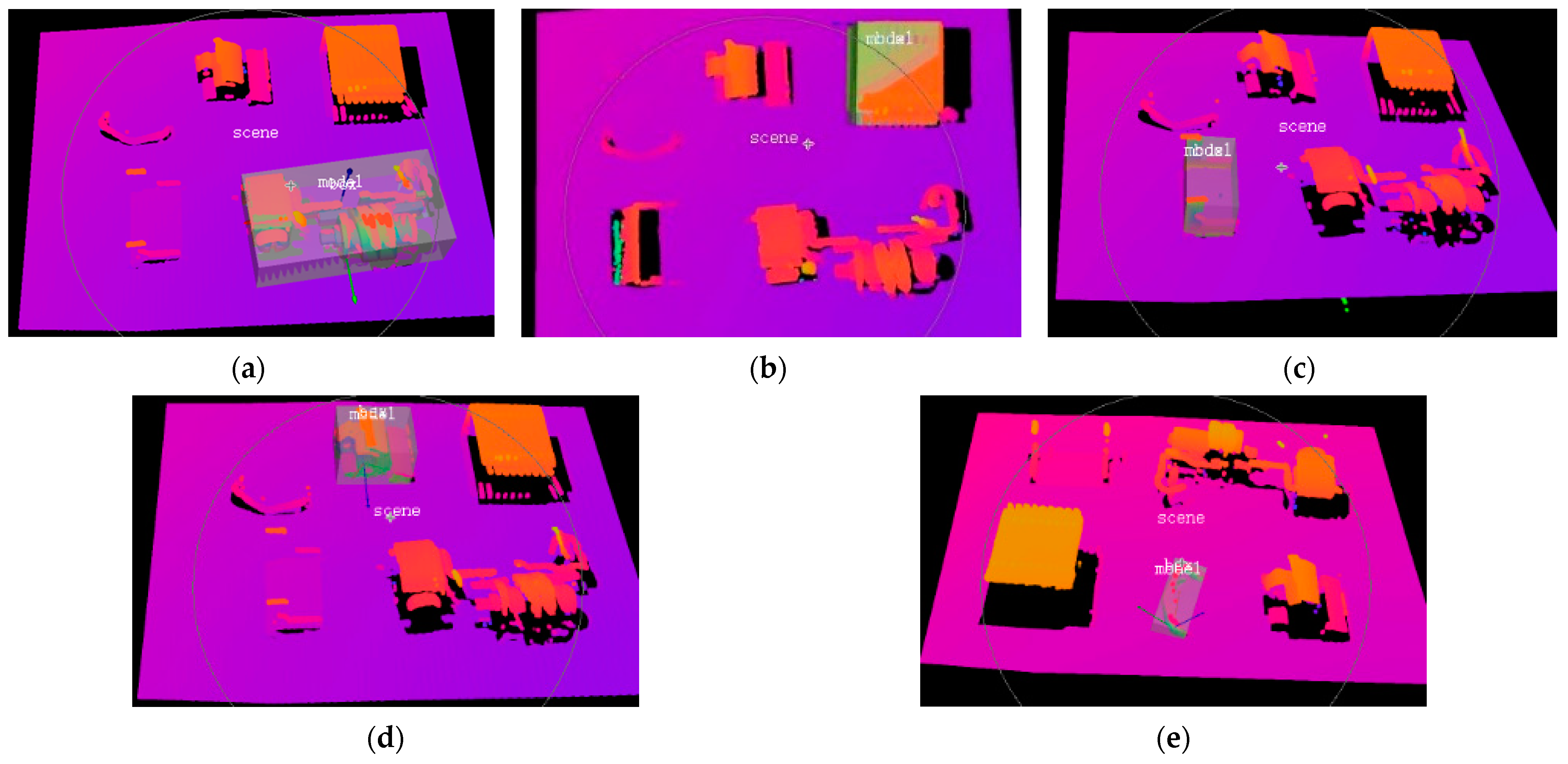

- The computer-aided design (CAD) models of the components are sampled to obtain surface point clouds rich in feature information, which improves the precision of point cloud registration.

- The scene point cloud is filtered, clustered, and segmented to obtain candidate-matching regions.

- Fine registration refines the result obtained by rough matching. For fast correspondence search, it combines Iterative Closest Point (ICP) [23,26] with a K-dimensional tree (KD-tree) [27] to pair each point with its nearest neighbor, by Euclidean distance, between the source and target point clouds. Random Sample Consensus (RANSAC) [28] then removes incorrectly matched point pairs, further improving the performance of the ICP algorithm.

- Finally, the result is visualized by moving a design model to the registered position and posture.
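The first step above samples the CAD models into surface point clouds, but this section does not state which sampling scheme is used. A common choice, assumed here, is area-weighted uniform sampling of the triangle mesh: triangles are picked with probability proportional to their area, and a uniformly distributed barycentric point is drawn inside each one. The function name `sample_mesh_surface` is hypothetical, and NumPy is assumed to be available.

```python
import numpy as np

def sample_mesh_surface(vertices, faces, n_points, seed=0):
    """Draw n_points uniformly distributed over a triangle-mesh surface.

    vertices: (V, 3) float array; faces: (F, 3) integer array of vertex indices.
    """
    rng = np.random.default_rng(seed)
    v = np.asarray(vertices, dtype=float)
    f = np.asarray(faces, dtype=int)
    a, b, c = v[f[:, 0]], v[f[:, 1]], v[f[:, 2]]
    # Triangle areas from the cross product of two edge vectors.
    areas = 0.5 * np.linalg.norm(np.cross(b - a, c - a), axis=1)
    # Pick triangles with probability proportional to their area.
    tri = rng.choice(len(f), size=n_points, p=areas / areas.sum())
    # Uniform barycentric coordinates; reflect samples that fall outside the triangle.
    u = rng.random(n_points)
    w = rng.random(n_points)
    outside = u + w > 1.0
    u[outside], w[outside] = 1.0 - u[outside], 1.0 - w[outside]
    a, b, c = a[tri], b[tri], c[tri]
    return a + u[:, None] * (b - a) + w[:, None] * (c - a)
```

For example, sampling a unit square built from two equal triangles yields points spread over both triangles in roughly equal numbers, because the triangles have equal areas.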
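The second step filters, clusters, and segments the scene point cloud, but the specific filters are not given here. As an illustration only, the sketch below uses two common stand-ins: voxel-grid downsampling (one centroid per occupied voxel) and Euclidean clustering (region growing over neighbors closer than a radius). The names `voxel_downsample` and `euclidean_clusters`, the voxel size, and the radius are hypothetical; the brute-force neighbor search is O(n²) and suits only small clouds.

```python
import numpy as np
from collections import deque

def voxel_downsample(points, voxel_size):
    """Replace all points that fall in the same voxel by their centroid."""
    keys = np.floor(points / voxel_size).astype(np.int64)
    _, inverse, counts = np.unique(keys, axis=0, return_inverse=True, return_counts=True)
    inverse = inverse.reshape(-1)  # inverse shape differs across NumPy versions
    sums = np.zeros((len(counts), 3))
    np.add.at(sums, inverse, points)  # accumulate the points of each voxel
    return sums / counts[:, None]

def euclidean_clusters(points, radius, min_size=1):
    """Group points connected by chains of neighbors closer than `radius`."""
    n = len(points)
    labels = np.full(n, -1)
    clusters = []
    for seed in range(n):
        if labels[seed] != -1:
            continue
        labels[seed] = len(clusters)
        members, queue = [seed], deque([seed])
        while queue:  # breadth-first region growing
            i = queue.popleft()
            near = np.flatnonzero(np.linalg.norm(points - points[i], axis=1) < radius)
            for j in near:
                if labels[j] == -1:
                    labels[j] = len(clusters)
                    members.append(j)
                    queue.append(j)
        clusters.append(members)
    # Discard clusters that are too small to be a component.
    return [np.array(c) for c in clusters if len(c) >= min_size]
```

Each returned cluster is an index array into the input cloud and could serve as one candidate-matching region.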

4. Spatial Positioning and Postural Estimation Method

4.1. Preprocessing

4.2. Rough-Matching Process

1. Select sampling points: n sampling points are selected from the candidate point cloud (CPC). To keep the FPFH features of the sampling points distinctive, the distance between any two sampling points must exceed a set threshold.

2. Search for the corresponding point-pair set: based on the FPFH features of the sampled points, a nearest-neighbor search over the model surface point cloud (MSPC) finds the point pairs whose MSPC and CPC FPFH features differ least. RANSAC [28] then removes incorrectly matched point pairs, leaving an optimized set of point pairs with similar features.

3. Obtain the optimal transformation parameters: the MSPC is first transformed according to the matched point-pair set obtained in Step 2, and the quality of the match is then evaluated as the sum of the distance errors between the transformed MSPC and the CPC, with the Huber function used as the penalty on each distance error.
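Step 1 can be sketched as rejection sampling: draw random candidate points and keep one only if it lies farther than the threshold from every point already kept. The function `select_spread_samples`, the retry limit, and the seed are illustrative assumptions, not parameters given in the source.

```python
import numpy as np

def select_spread_samples(points, n, min_dist, max_tries=1000, seed=0):
    """Randomly pick up to n indices whose pairwise distances all exceed min_dist."""
    rng = np.random.default_rng(seed)
    chosen = []
    for _ in range(max_tries):
        if len(chosen) == n:
            break
        idx = int(rng.integers(len(points)))
        # Accept the candidate only if it is far enough from every kept sample.
        if all(np.linalg.norm(points[idx] - points[j]) > min_dist for j in chosen):
            chosen.append(idx)
    return chosen
```

If the cloud is too small or the threshold too large, fewer than n indices may be returned, so a caller would check the length of the result.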
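Step 3 scores a candidate transformation by applying it to the MSPC and summing Huber-penalized distance errors to the CPC. The sketch below assumes that each model point's error is its distance to the nearest CPC point, and it uses the standard Huber form, quadratic up to a threshold δ (`delta`) and linear beyond it, so that a few large outliers cannot dominate the sum. The exact error definition and δ are not given in this section; `huber` and `transform_score` are hypothetical names.

```python
import numpy as np

def huber(e, delta):
    """Huber penalty: 0.5*e^2 for |e| <= delta, delta*(|e| - 0.5*delta) beyond it."""
    a = np.abs(e)
    return np.where(a <= delta, 0.5 * a**2, delta * (a - 0.5 * delta))

def transform_score(model, scene, R, t, delta):
    """Sum of Huber penalties of nearest-neighbor distances after moving model by (R, t).

    Brute-force nearest neighbors keep the sketch short; a KD-tree would be used at scale.
    """
    moved = model @ R.T + t
    d = np.linalg.norm(moved[:, None, :] - scene[None, :, :], axis=2).min(axis=1)
    return float(huber(d, delta).sum())
```

Among several candidate (R, t) pairs, the one with the smallest score would be kept as the rough-matching result.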
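RANSAC is used in Step 2 here, and again in fine registration, to discard wrong correspondences. A minimal sketch, assuming the correspondences are already paired row by row in `P` and `Q`: repeatedly fit a rigid motion to three random pairs with the SVD-based Kabsch method (an assumed choice of minimal solver) and keep the largest set of pairs whose residual falls below a tolerance. The names `rigid_fit` and `ransac_pairs`, the tolerance, and the iteration count are illustrative.

```python
import numpy as np

def rigid_fit(P, Q):
    """Least-squares R, t with Q ≈ P @ R.T + t (Kabsch / SVD)."""
    cp, cq = P.mean(axis=0), Q.mean(axis=0)
    H = (P - cp).T @ (Q - cq)
    U, _, Vt = np.linalg.svd(H)
    # Correct the sign so that R is a proper rotation, not a reflection.
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    return R, cq - R @ cp

def ransac_pairs(P, Q, inlier_tol, iters=200, seed=0):
    """Boolean mask of pairs (P[i], Q[i]) consistent with a single rigid motion."""
    rng = np.random.default_rng(seed)
    best = np.zeros(len(P), dtype=bool)
    for _ in range(iters):
        pick = rng.choice(len(P), size=3, replace=False)  # minimal sample
        R, t = rigid_fit(P[pick], Q[pick])
        resid = np.linalg.norm(P @ R.T + t - Q, axis=1)
        mask = resid < inlier_tol
        if mask.sum() > best.sum():  # keep the largest consensus set
            best = mask
    return best
```

The surviving pairs, `P[mask]` and `Q[mask]`, would then be passed on as the optimized point-pair set.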

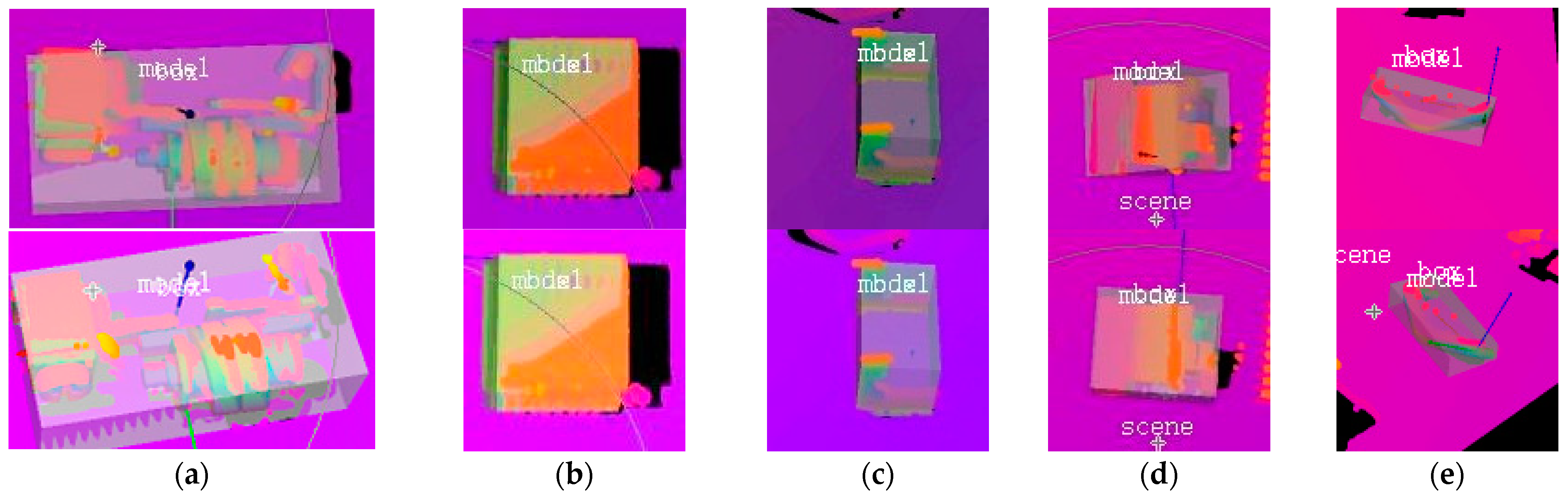

4.3. Fine Registration Process

1. Obtain the optimized set of corresponding point pairs: for each point gi in G, the KD-tree search algorithm finds the point in S at the minimum Euclidean distance, giving a preliminary set of corresponding point pairs. The RANSAC algorithm then removes point pairs with large matching errors, which yields the optimized corresponding point-pair set {(si, gi) | i < n}.

2. Obtain the rotation matrix R and translation vector T from the point-pair set obtained in Step 1: R and T are found by minimizing the target function f(R, T):

   $$f(R, T) = \frac{1}{n}\sum_{i}\left\lVert g_i - \left(R s_i + T\right)\right\rVert^2$$

3. Update the MSPC with the R and T obtained in Step 2, where k is the iteration number, Sk is the point cloud before the update, and Sk+1 is the point cloud after this update:

   $$S_{k+1} = R S_k + T$$

4. Iterate and check the stopping criteria: the mean squared errors dk and dk+1 of this iteration are calculated by Equations (5) and (6), and the algorithm checks whether any of the threshold conditions dk < E, |dk+1 − dk| < e, or k ≥ kmax is met. If any condition is met, the iteration stops; otherwise, it returns to Step 1.

5. Perform point cloud registration after iterative optimization: the result of the last iteration is taken as the optimal match, which gives the optimal R and T. Fine registration results are shown in Figure 14.
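Step 1 relies on a KD-tree to find minimum-Euclidean-distance neighbors quickly. The pure-Python sketch below shows the idea for 3-D points: the tree splits on x, y, and z in turn, and the search skips any subtree whose splitting plane is farther away than the best distance found so far. `build_kdtree` and `nearest` are hypothetical names; a production system would use an optimized library implementation.

```python
import math

def build_kdtree(points, depth=0):
    """Recursively build a 3-D tree node (point, axis, left, right), or None."""
    if not points:
        return None
    axis = depth % 3  # cycle through x, y, z
    points = sorted(points, key=lambda p: p[axis])
    mid = len(points) // 2  # median point becomes the splitting node
    return (points[mid], axis,
            build_kdtree(points[:mid], depth + 1),
            build_kdtree(points[mid + 1:], depth + 1))

def nearest(node, query, best=None):
    """Return (point, distance) of the stored point closest to query."""
    if node is None:
        return best
    point, axis, left, right = node
    d = math.dist(point, query)
    if best is None or d < best[1]:
        best = (point, d)
    diff = query[axis] - point[axis]
    near_side, far_side = (left, right) if diff < 0 else (right, left)
    best = nearest(near_side, query, best)
    # Visit the far side only if the splitting plane is closer than the current best.
    if abs(diff) < best[1]:
        best = nearest(far_side, query, best)
    return best
```

Building the tree once over S lets each query for a point gi run in roughly logarithmic time on well-spread data, instead of scanning every point of S.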
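The five steps can be sketched as the loop below, under several assumptions: correspondences follow Step 1's direction (each gi in G is matched to its nearest point in S), the RANSAC rejection is omitted for brevity, nearest neighbors are found by brute force instead of a KD-tree, dk is taken to be the mean squared nearest-neighbor distance, and R and T are solved in closed form with SVD, which is the standard minimizer of f(R, T) but is not confirmed as the authors' solver. The function names and threshold defaults are hypothetical.

```python
import numpy as np

def best_rigid(P, Q):
    """Closed-form R, T minimizing sum ||Q_i - (R P_i + T)||^2 (SVD / Kabsch)."""
    cp, cq = P.mean(axis=0), Q.mean(axis=0)
    U, _, Vt = np.linalg.svd((P - cp).T @ (Q - cq))
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])  # avoid reflections
    R = Vt.T @ D @ U.T
    return R, cq - R @ cp

def icp(S, G, E=1e-10, e=1e-12, k_max=50):
    """Move model cloud S onto scene cloud G; return accumulated R, T and last error."""
    R_total, T_total = np.eye(3), np.zeros(3)
    d_prev = None
    for _ in range(k_max):  # stopping condition k >= k_max
        # Step 1: for each g_i in G, its nearest point in the current S (brute force).
        dists = np.linalg.norm(G[:, None, :] - S[None, :, :], axis=2)
        nn = dists.argmin(axis=1)
        d = float(np.mean(dists.min(axis=1) ** 2))  # assumed mean squared error d_k
        # Step 4: stop if the error is small enough or has stopped changing.
        if d < E or (d_prev is not None and abs(d_prev - d) < e):
            break
        # Step 2: R, T for the current pairs (s_i, g_i).
        R, T = best_rigid(S[nn], G)
        # Step 3: S_{k+1} = R S_k + T, and compose the overall transform.
        S = S @ R.T + T
        R_total, T_total = R @ R_total, R @ T_total + T
        d_prev = d
    return R_total, T_total, d
```

The accumulated transform maps the original model cloud directly onto the scene, S_final = R_total S_0 + T_total, which plays the role of the optimal R and T in Step 5.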

5. Experiment and Analysis

5.1. Experiments and Evaluation

5.2. Results and Analysis

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Sun, S.; Wen, Z.; Du, T.; Wang, J.; Tang, Y.; Gao, H. Remaining Life Prediction of Conventional Low-Voltage Circuit Breaker Contact System Based on Effective Vibration Signal Segment Detection and MCCAE-LSTM. IEEE Sens. J. 2021, 21, 21862–21871.

2. Shi, X.; Zhang, F.; Qu, X.; Liu, B. An online real-time path compensation system for industrial robots based on laser tracker. Int. J. Adv. Robot. Syst. 2016, 13, 1729881416663366.

3. He, M.; Wu, X.; Shao, G.; Wen, Y.; Liu, T. A Semiparametric Model-Based Friction Compensation Method for Multi-Joint Industrial Robot. J. Dyn. Syst. Meas. Control 2021, 144, 034501.

4. Han, Y.; Shu, L.; Wu, Z.; Chen, X.; Zhang, G.; Cai, Z. Research of Flexible Assembly of Miniature Circuit Breakers Based on Robot Trajectory Optimization. Algorithms 2022, 15, 269.

5. XB4_ROKAE Robotics. Leading Robots Expert in Industrial, Commercial Scenarios. Available online: http://www.rokae.com/en/product/show/240/XB4.html (accessed on 8 June 2023).

6. Suresh, V.; Liu, W.; Zheng, M.; Li, B. High-resolution structured light 3D vision for fine-scale characterization to assist robotic assembly. In Proceedings of the Dimensional Optical Metrology and Inspection for Practical Applications X, Online, 12 April 2021; p. 1.

7. Erdős, G.; Horváth, D.; Horváth, G. Visual servo guided cyber-physical robotic assembly cell. IFAC-PapersOnLine 2021, 54, 595–600.

8. Hoffmann, A. On the Benefits of Color Information for Feature Matching in Outdoor Environments. Robotics 2020, 9, 85.

9. Zhou, Q.; Chen, R.; Bin, H.; Liu, C.; Yu, J.; Yu, X. An Automatic Surface Defect Inspection System for Automobiles Using Machine Vision Methods. Sensors 2019, 19, 644.

10. Lorenz, C.; Carlsen, I.-C.; Buzug, T.M.; Fassnacht, C.; Weese, J. A Multi-Scale Line Filter with Automatic Scale Selection Based on the Hessian Matrix for Medical Image Segmentation. In Scale-Space Theory in Computer Vision; Haar Romeny, B., Florack, L., Koenderink, J., Viergever, M., Eds.; Springer: Berlin/Heidelberg, Germany, 1997; Volume 1252, pp. 152–163. ISBN 978-3-540-63167-5.

11. Birdal, T.; Ilic, S. Point Pair Features Based Object Detection and Pose Estimation Revisited. In Proceedings of the 2015 International Conference on 3D Vision, Lyon, France, 19–22 October 2015; pp. 527–535.

12. Wu, P.; Li, W.; Yan, M. 3D scene reconstruction based on improved ICP algorithm. Microprocess. Microsyst. 2020, 75, 103064.

13. Liu, D.; Arai, S.; Miao, J.; Kinugawa, J.; Wang, Z.; Kosuge, K. Point Pair Feature-Based Pose Estimation with Multiple Edge Appearance Models (PPF-MEAM) for Robotic Bin Picking. Sensors 2018, 18, 2719.

14. Yu, J.; Yu, C.; Lin, C.; Wei, F. Improved Iterative Closest Point (ICP) Point Cloud Registration Algorithm based on Matching Point Pair Quadratic Filtering. In Proceedings of the 2021 International Conference on Computer, Internet of Things and Control Engineering (CITCE), Guangzhou, China, 12–14 November 2021; pp. 1–5.

15. Biber, P. The Normal Distributions Transform: A New Approach to Laser Scan Matching. In Proceedings of the 2003 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2003) (Cat. No.03CH37453), Las Vegas, NV, USA, 27–31 October 2003.

16. Zhang, R.; Zhang, Y.; Fu, D.; Liu, K. Scan Denoising and Normal Distribution Transform for Accurate Radar Odometry and Positioning. IEEE Robot. Autom. Lett. 2023, 8, 1199–1206.

17. Liu, L.; Xiao, J.; Wang, Y.; Lu, Z.; Wang, Y. A Novel Rock-Mass Point Cloud Registration Method Based on Feature Line Extraction and Feature Point Matching. IEEE Trans. Geosci. Remote Sens. 2022, 60, 21497545.

18. Efraim, A.; Francos, J.M. 3D Matched Manifold Detection for Optimizing Point Cloud Registration. In Proceedings of the 2022 International Conference on Electrical, Computer, Communications and Mechatronics Engineering (ICECCME), Malé, Maldives, 16–18 November 2022; pp. 1–5.

19. Zhang, X.; Wang, Z.; Yu, H.; Liu, M.; Xing, B. Research on Visual Inspection Technology in Automatic Assembly for Manhole Cover of Rocket Fuel Tank. In Proceedings of the 2022 4th International Conference on Advances in Computer Technology, Information Science and Communications (CTISC), Virtual, 22–24 April 2022; pp. 1–5.

20. Cao, H.; Chen, D.; Zheng, Z.; Zhang, Y.; Zhou, H.; Ju, J. Fast Point Cloud Registration Method with Incorporation of RGB Image Information. Appl. Sci. 2023, 13, 5161.

21. Photoneo Localization SDK 1.3 Instruction Manual and Installation Instructions. Available online: https://www.photoneo.com/downloads/localization-sdk/ (accessed on 8 June 2023).

22. 3D Scanner for Scanning Small Objects | PhoXi XS. Available online: https://www.photoneo.com/products/phoxi-scan-xs/ (accessed on 8 June 2023).

23. Senin, N.; Colosimo, B.M.; Pacella, M. Point set augmentation through fitting for enhanced ICP registration of point clouds in multisensor coordinate metrology. Robot. Comput. Integr. Manuf. 2013, 29, 39–52.

24. Rusu, R.B.; Blodow, N.; Beetz, M. Fast Point Feature Histograms (FPFH) for 3D registration. In Proceedings of the 2009 IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009; pp. 3212–3217.

25. Rusu, R.B.; Blodow, N.; Marton, Z.C.; Beetz, M. Aligning point cloud views using persistent feature histograms. In Proceedings of the 2008 IEEE/RSJ International Conference on Intelligent Robots and Systems, Nice, France, 22–26 September 2008; pp. 3384–3391.

26. Besl, P.J. A method for registration of 3d shapes. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 239–256.

27. Kalpitha, N.; Murali, S. Object Classification using SVM and KD-Tree. Int. J. Recent Technol. Eng. 2020, 8.

28. Fischler, M.A.; Bolles, R.C. Random Sample Consensus: A Paradigm for Model Fitting with Applications to Image Analysis and Automated Cartography. In Readings in Computer Vision; Fischler, M.A., Firschein, O., Eds.; Morgan Kaufmann: San Francisco, CA, USA, 1987; pp. 726–740.

29. Muja, M.; Lowe, D.G. Fast Approximate Nearest Neighbors with Automatic Algorithm Configuration. In Proceedings of the International Conference on Computer Vision Theory and Applications, Lisboa, Portugal, 5–8 February 2009.

| Parameter | Value |
|---|---|
| Brand | Photoneo |
| Model | PhoXi 3D Scanner XS |
| Imaging method | Structured light |
| Point-to-point distance (sweet spot) | 0.055 mm |
| Scanning range | 161–205 mm |
| Optimal scanning distance (sweet spot) | 181 mm |
| Scanning area (sweet spot) | 118 × 78 mm |
| 3D points throughput | 16 million points per second |
| GPU | NVIDIA Pascal architecture with 256 CUDA cores |

| Component Label | Posture Ground Truth (α, β, γ) (°) | Posture Result (α, β, γ) (°) | Posture Error (α, β, γ) (°) | Position Ground Truth (x, y, z) (mm) | Position Result (x, y, z) (mm) | Position Error (x, y, z) (mm) |
|---|---|---|---|---|---|---|
| 1 | 1.23, 2.73, 40.58 | 0.82, 2.34, 40.15 | 0.41, 0.39, 0.43 | −15.21, 18.34, 192.3 | −15.08, 18.20, 192.4 | −0.13, 0.14, −0.10 |
| 2 | 88.45, −3.76, 13.61 | 88.03, −4.02, 13.85 | 0.42, 0.26, −0.24 | 20.55, 30.72, 186.05 | 20.63, 30.56, 185.94 | −0.08, 0.16, 0.11 |
| 3 | 64.70, 307.19, 67.25 | 64.98, 306.78, 67.6 | −0.28, 0.41, −0.35 | −9.34, −2.20, 187.53 | −9.47, −2.05, 187.38 | 0.13, −0.15, 0.15 |
| 4 | 92.56, 42.68, 25.77 | 92.93, 42.34, 25.51 | −0.37, 0.34, 0.26 | −25.50, −4.97, 187.62 | −25.61, −4.89, 187.73 | 0.11, −0.08, −0.11 |
| 5 | 22.40, 56.52, 7.83 | 22.17, 56.66, 7.67 | 0.23, −0.14, 0.16 | 15.06, −6.43, 193.57 | 14.89, −6.57, 193.44 | 0.17, 0.14, 0.13 |
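The table above reports posture as three angles (α, β, γ), whereas registration produces a rotation matrix R. The angle convention is not stated in this section; the sketch below assumes the common Z-Y-X composition R = Rz(γ)·Ry(β)·Rx(α) with angles in degrees. It also computes the single rotation angle separating two postures, a convention-independent way to compare a result with its ground truth. All function names are hypothetical.

```python
import numpy as np

def euler_to_matrix(alpha, beta, gamma):
    """R = Rz(gamma) @ Ry(beta) @ Rx(alpha), angles in degrees (assumed convention)."""
    a, b, g = np.radians([alpha, beta, gamma])
    Rx = np.array([[1, 0, 0], [0, np.cos(a), -np.sin(a)], [0, np.sin(a), np.cos(a)]])
    Ry = np.array([[np.cos(b), 0, np.sin(b)], [0, 1, 0], [-np.sin(b), 0, np.cos(b)]])
    Rz = np.array([[np.cos(g), -np.sin(g), 0], [np.sin(g), np.cos(g), 0], [0, 0, 1]])
    return Rz @ Ry @ Rx

def matrix_to_euler(R):
    """Inverse of euler_to_matrix, valid for |beta| < 90 degrees; returns degrees."""
    beta = -np.arcsin(np.clip(R[2, 0], -1.0, 1.0))
    alpha = np.arctan2(R[2, 1], R[2, 2])
    gamma = np.arctan2(R[1, 0], R[0, 0])
    return np.degrees([alpha, beta, gamma])

def rotation_angle_between(R1, R2):
    """Angle in degrees of the relative rotation R1^T R2."""
    cos_theta = (np.trace(R1.T @ R2) - 1.0) / 2.0
    return float(np.degrees(np.arccos(np.clip(cos_theta, -1.0, 1.0))))
```

Under a different convention (for example, intrinsic X-Y-Z), the same three numbers describe a different rotation, so the convention would need to be confirmed before converting the tabulated angles.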

| Component Label | Posture MAE: NDT (°) | Posture MAE: Photoneo (°) | Posture MAE: 2D (°) | Posture MAE: Proposed (°) | Position MAE: NDT (mm) | Position MAE: Photoneo (mm) | Position MAE: 2D (mm) | Position MAE: Proposed (mm) |
|---|---|---|---|---|---|---|---|---|
| 1 | 2.79 | 1.22 | 0.47 | 0.48 | 3.26 | 1.53 | 0.28 | 0.20 |
| 2 | 2.54 | 1.09 | 0.37 | 0.32 | 1.88 | 1.10 | 0.34 | 0.13 |
| 3 | 2.22 | 1.41 | 0.41 | 0.45 | 2.86 | 1.15 | 0.33 | 0.16 |
| 4 | 3.63 | 1.14 | 0.40 | 0.39 | 2.11 | 0.72 | 0.25 | 0.17 |
| 5 | 4.03 | 1.85 | 0.44 | 0.33 | 1.94 | 1.35 | 0.28 | 0.14 |
| Average | 3.14 | 1.45 | 0.42 | 0.38 | 2.31 | 1.29 | 0.30 | 0.15 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wu, Z.; Bao, Z.; Wang, J.; Yan, J.; Xu, H. A Stereo-Vision-Based Spatial-Positioning and Postural-Estimation Method for Miniature Circuit Breaker Components. Appl. Sci. 2023, 13, 8432. https://doi.org/10.3390/app13148432

Wu Z, Bao Z, Wang J, Yan J, Xu H. A Stereo-Vision-Based Spatial-Positioning and Postural-Estimation Method for Miniature Circuit Breaker Components. Applied Sciences. 2023; 13(14):8432. https://doi.org/10.3390/app13148432

Chicago/Turabian Style: Wu, Ziran, Zhizhou Bao, Jingqin Wang, Juntao Yan, and Haibo Xu. 2023. "A Stereo-Vision-Based Spatial-Positioning and Postural-Estimation Method for Miniature Circuit Breaker Components" Applied Sciences 13, no. 14: 8432. https://doi.org/10.3390/app13148432

APA Style: Wu, Z., Bao, Z., Wang, J., Yan, J., & Xu, H. (2023). A Stereo-Vision-Based Spatial-Positioning and Postural-Estimation Method for Miniature Circuit Breaker Components. Applied Sciences, 13(14), 8432. https://doi.org/10.3390/app13148432