Predicting the Quality of Tangerines Using the GCNN-LSTM-AT Network Based on Vis–NIR Spectroscopy

Abstract

1. Introduction

2. Materials and Methods

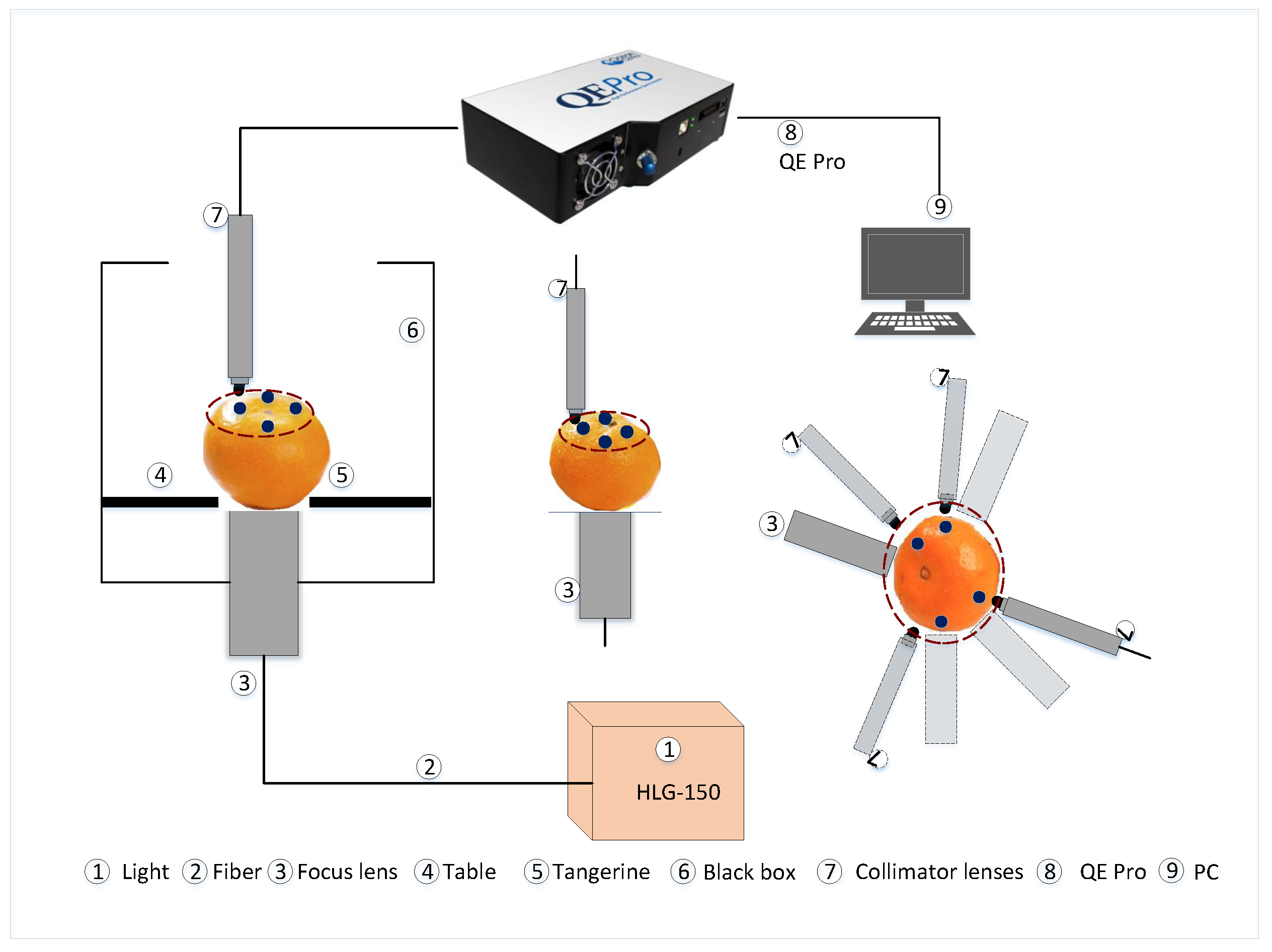

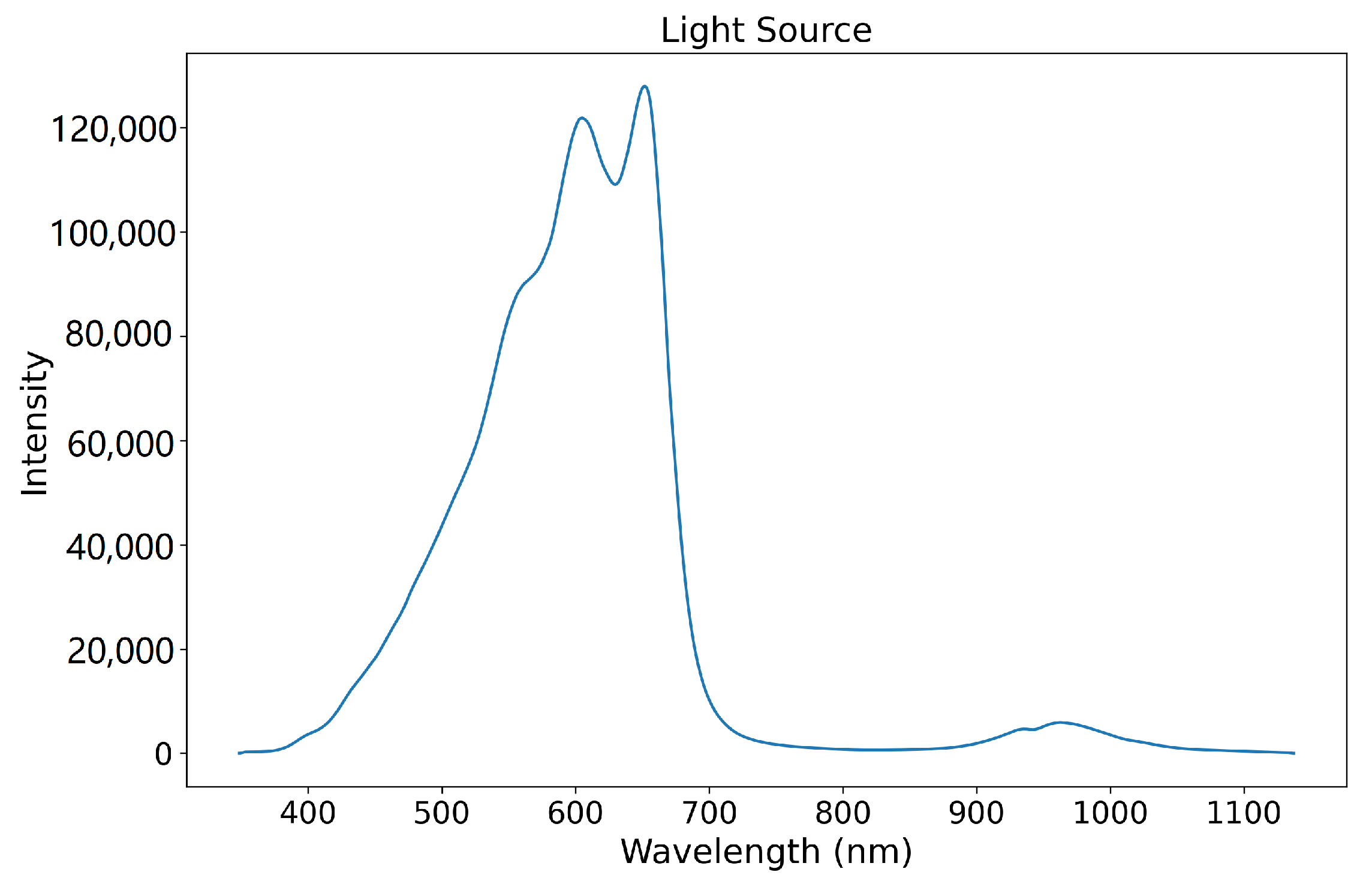

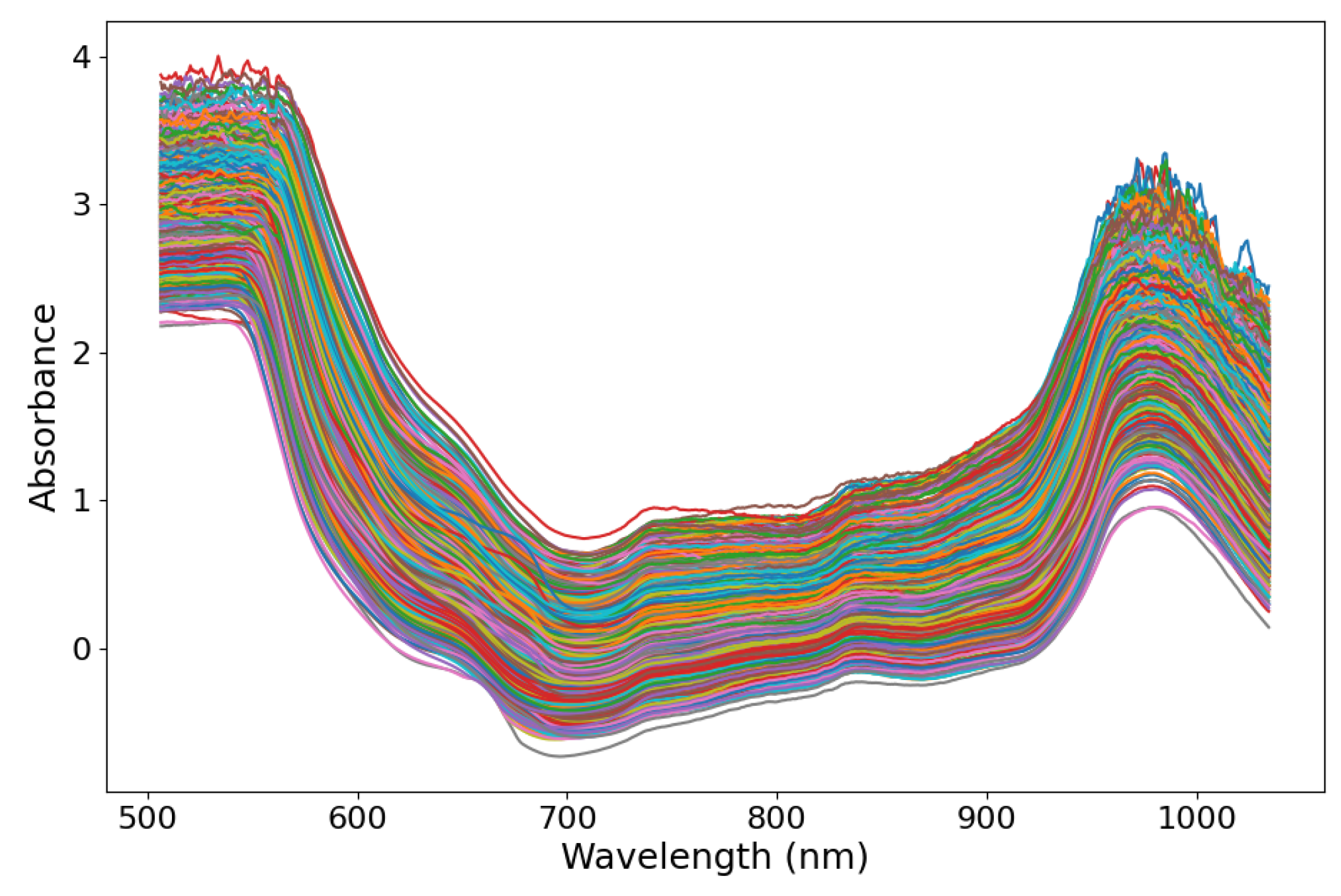

2.1. Spectra Collection and Processing

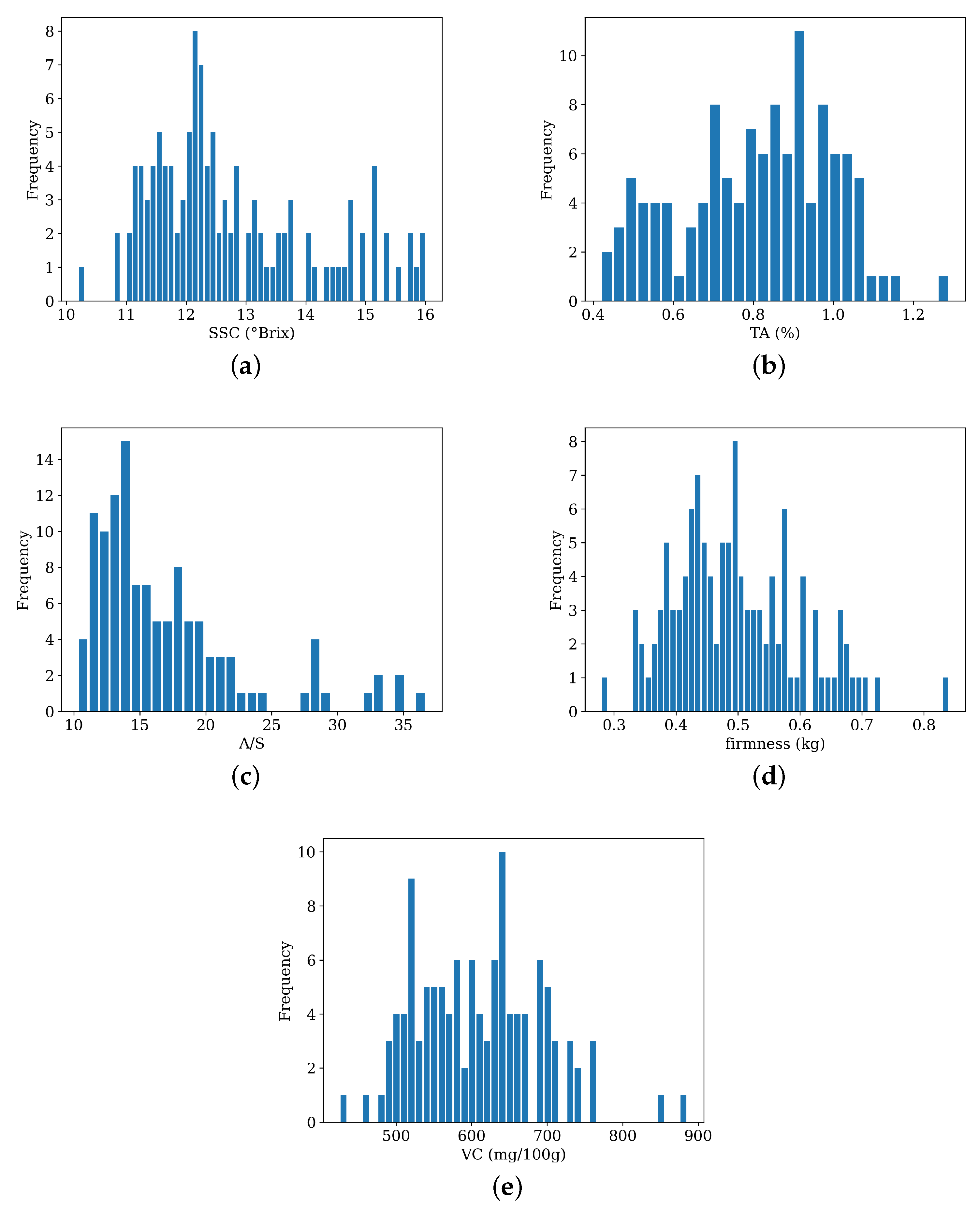

2.2. Internal Quality Attributes Assessment

2.3. Preprocessing Methods

3. Neural Networks

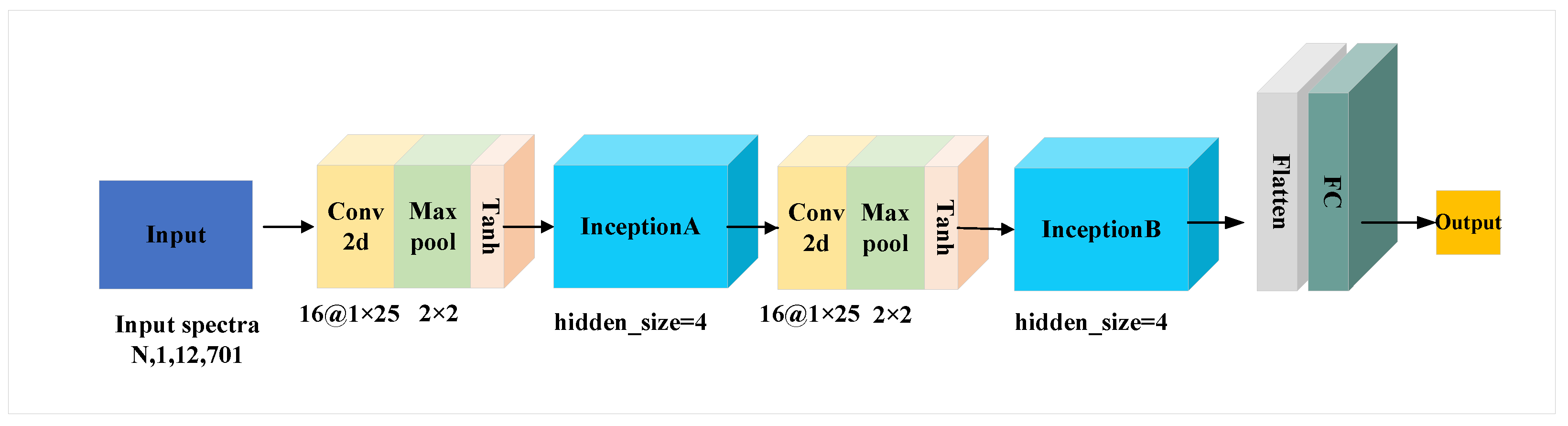

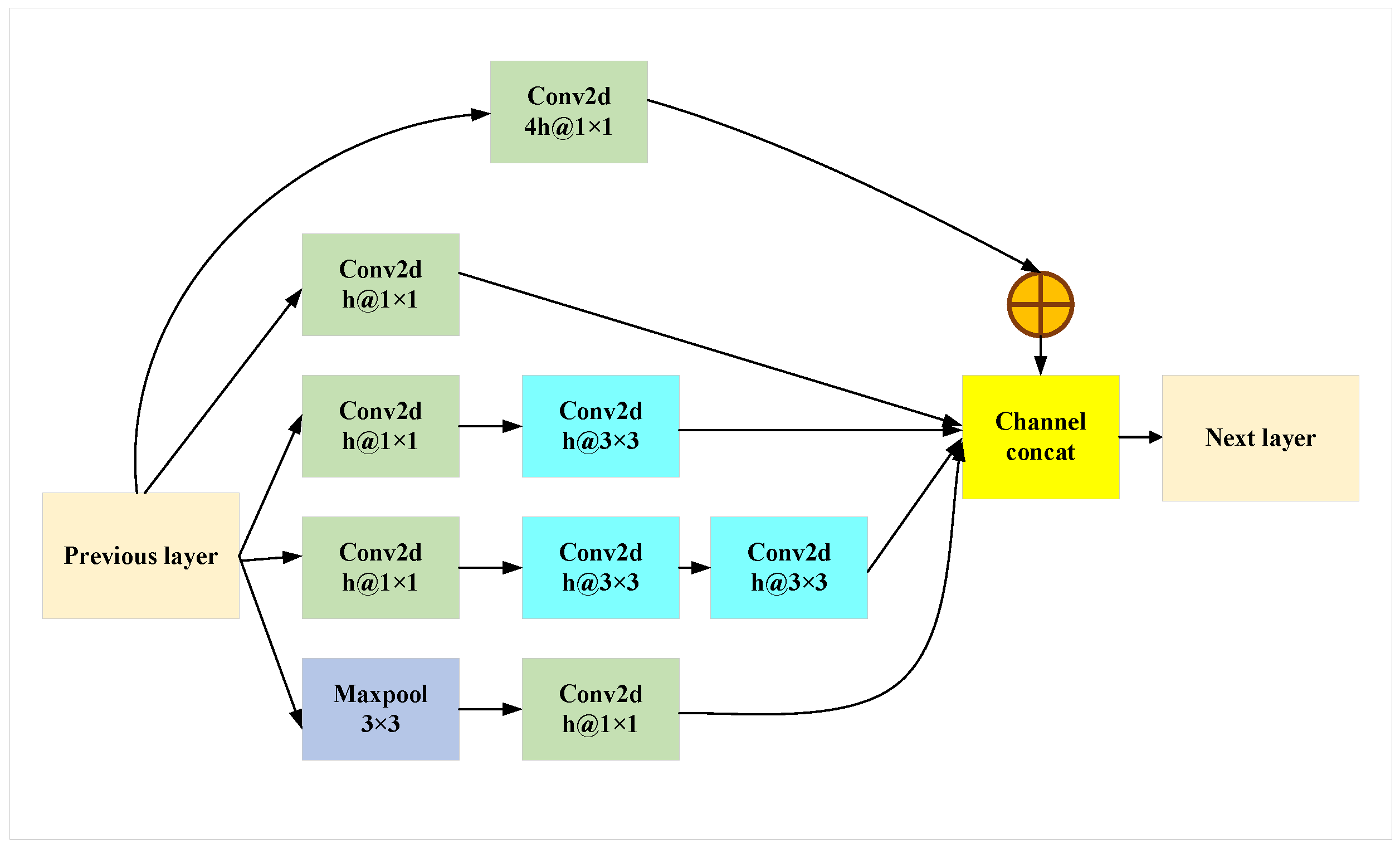

3.1. DeepSpectra2D

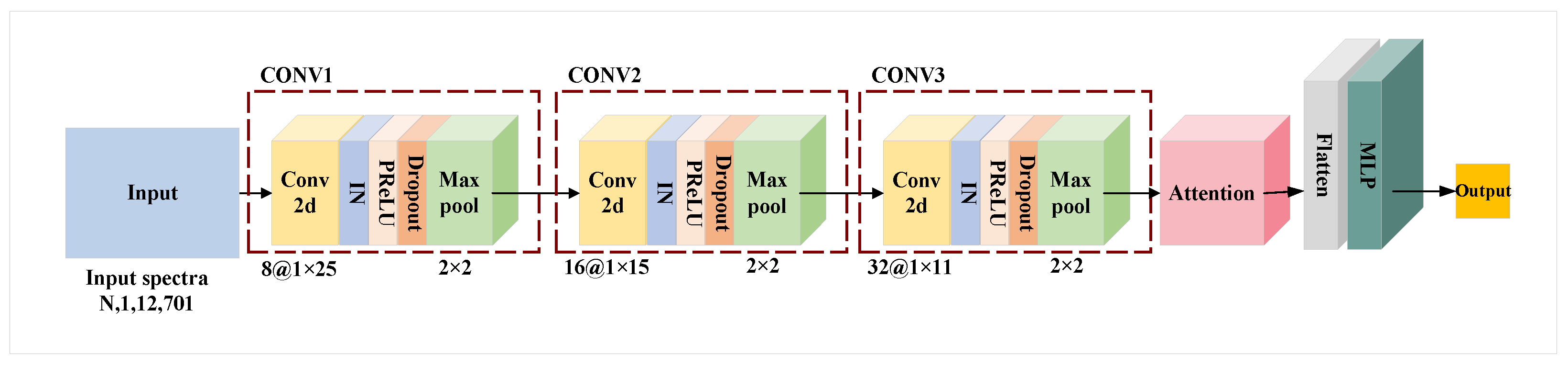

3.2. CNN-AT

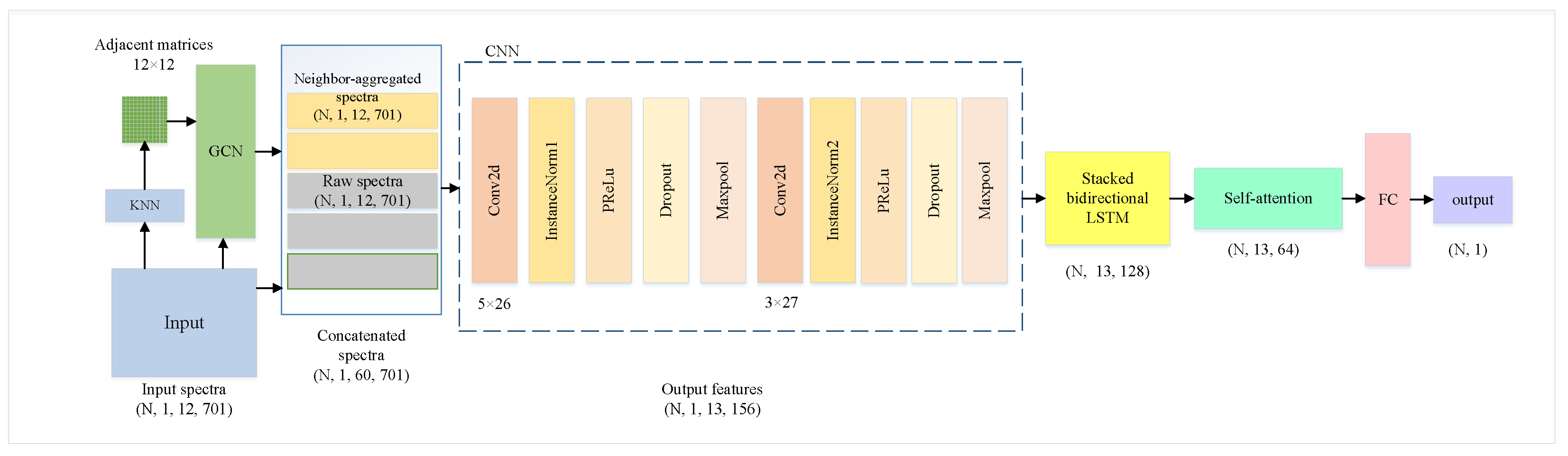

3.3. GCNN-LSTM-AT Network

3.3.1. Graph Convolutional Network (GCN)

3.3.2. Feature Extraction with CNNs

3.3.3. Sequential Modeling with LSTM

3.3.4. Attention Mechanism

4. Results and Discussion

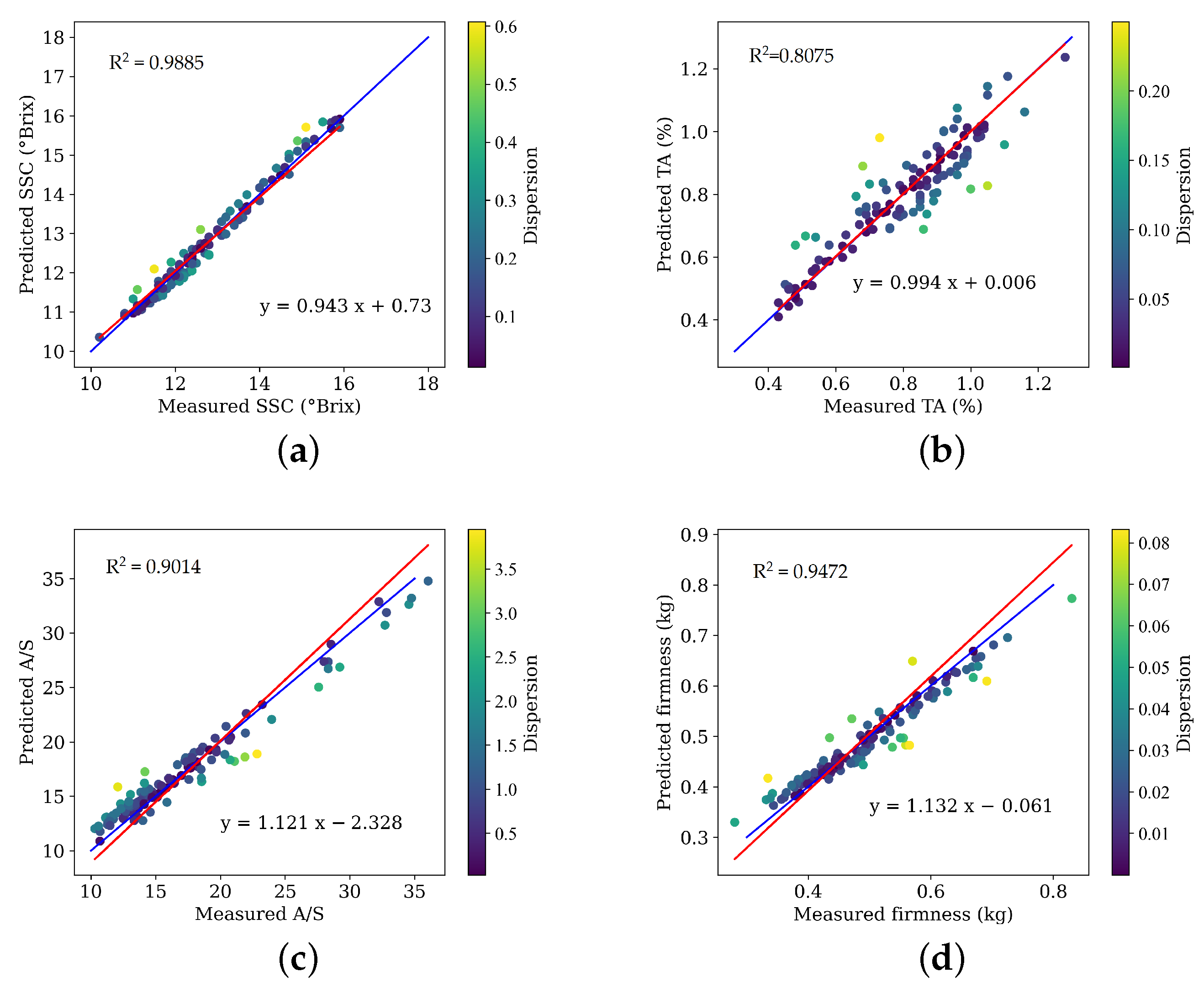

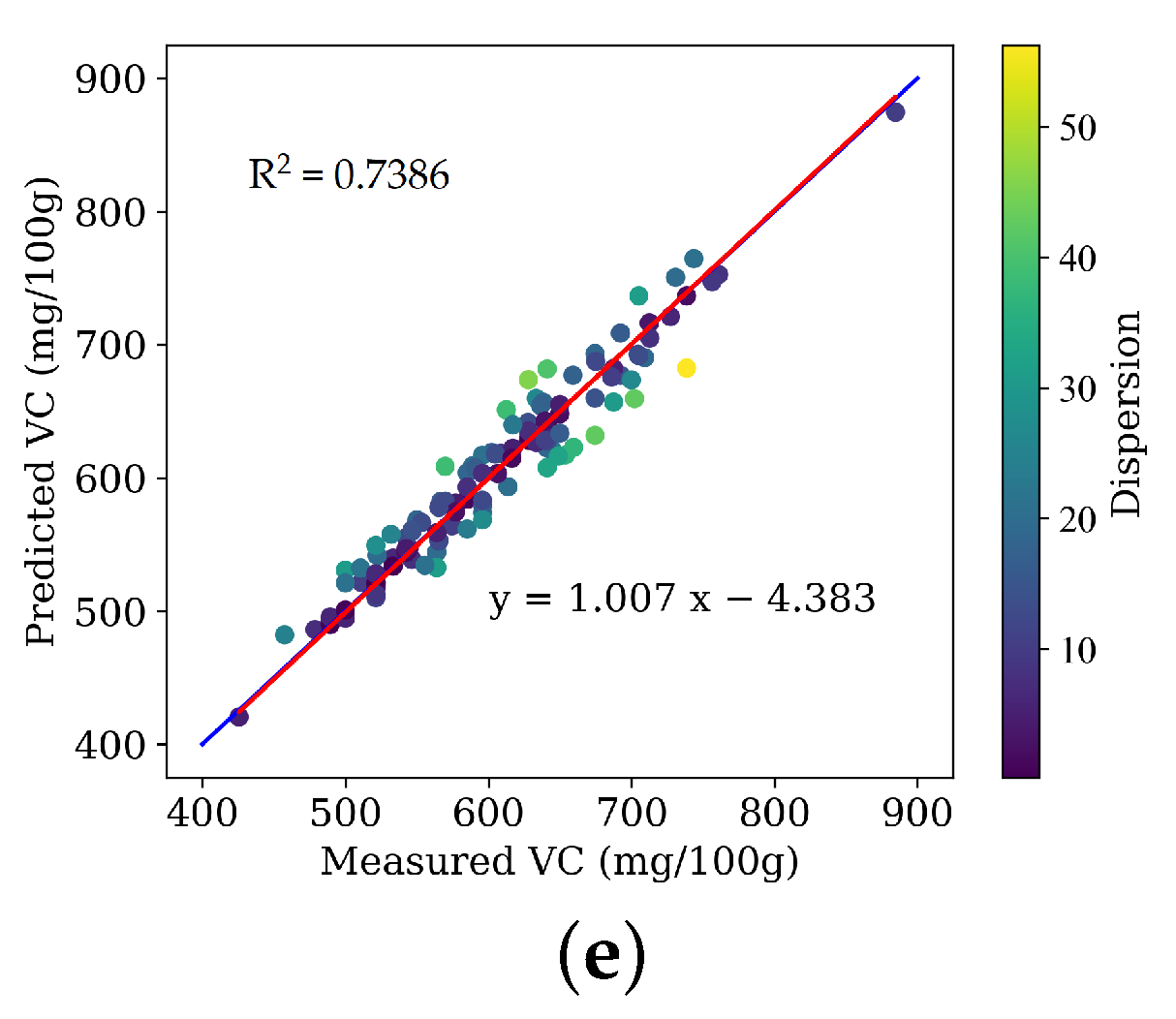

4.1. Model Evaluation

4.2. Comparison with Conventional Machine-Learning Approaches and Two Deep Networks

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Target | Min | Max | Mean | STD | Measurement Accuracy |
|---|---|---|---|---|---|
| SSC (Brix) | 10.2 | 15.9 | 12.697 | 1.3615 | ±0.2% |
| TA (%) | 0.42 | 1.28 | 0.8037 | 0.1866 | ±0.1% |
| A/S | 10.3 | 36.05 | 16.974 | 5.7591 | ±0.3% |
| Firmness (kg) | 0.2802 | 0.8304 | 0.4958 | 0.1003 | ±1% |
| VC (mg/100 g) | 425.5319 | 884.6154 | 608.9775 | 81.9140 | ±2% |

| Target | Method | RMSECV | R² | MAE |
|---|---|---|---|---|
| SSC (Brix) | GCNN-LSTM-AT | 0.143019 | 0.988546 | 0.119733 |
| | CNN-AT | 0.441304 | 0.930002 | 0.403427 |
| | MWPLS | 0.630621 | 0.857062 | 0.564087 |
| | DeepSpectra2D | 0.633972 | 0.855539 | 0.523082 |
| | SVR | 0.635088 | 0.855030 | 0.550726 |
| | RF | 0.637721 | 0.853826 | 0.545897 |
| TA (%) | GCNN-LSTM-AT | 0.086817 | 0.807487 | 0.072121 |
| | RF | 0.106826 | 0.708527 | 0.095840 |
| | CNN-AT | 0.11266 | 0.67582 | 0.080944 |
| | DeepSpectra2D | 0.116552 | 0.653038 | 0.097312 |
| | SVR | 0.128481 | 0.578378 | 0.115217 |
| | MWPLS | 0.132757 | 0.549845 | 0.110507 |
| A/S | GCNN-LSTM-AT | 1.998381 | 0.901365 | 1.600419 |
| | CNN-AT | 3.621092 | 0.669026 | 2.897637 |
| | RF | 3.66037 | 0.661807 | 3.025368 |
| | SVR | 3.787508 | 0.637906 | 2.619833 |
| | DeepSpectra2D | 4.172031 | 0.560651 | 3.241931 |
| | MWPLS | 4.234571 | 0.547381 | 3.200423 |
| Firmness (kg) | GCNN-LSTM-AT | 0.029408 | 0.947206 | 0.020084 |
| | DeepSpectra2D | 0.038597 | 0.820301 | 0.027363 |
| | MWPLS | 0.043178 | 0.775109 | 0.035490 |
| | CNN-AT | 0.048934 | 0.711151 | 0.038188 |
| | RF | 0.064057 | 0.505032 | 0.052835 |
| | SVR | 0.090967 | 0.19752 | 0.073538 |
| VC (mg/100 g) | DeepSpectra2D | 28.941088 | 0.746859 | 27.861427 |
| | GCNN-LSTM-AT | 29.410427 | 0.738583 | 23.131868 |
| | MWPLS | 35.492973 | 0.619271 | 25.987210 |
| | CNN-AT | 36.66847 | 0.593635 | 30.667585 |
| | RF | 43.01874 | 0.440698 | 31.808692 |
| | SVR | 45.583222 | 0.372027 | 33.289951 |
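The comparison table above reports three scores per model: RMSECV, MAE, and a value between 0 and 1 that appears to be the coefficient of determination (R²). As a reading aid, the following minimal sketch shows the standard textbook definitions of these three metrics. It is not the authors' evaluation code: the function names and the toy numbers are hypothetical, and the cross-validation procedure that produces the predictions is not described in this section, so it is left out.

```python
import math


def rmse(y_true, y_pred):
    """Root-mean-square error; called RMSECV when y_pred comes from cross-validation."""
    squared = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Toy values invented for illustration only (not data from the study).
measured = [10.0, 12.0, 14.0]
predicted = [11.0, 12.0, 13.0]

print(rmse(measured, predicted))
print(mae(measured, predicted))
print(r2(measured, predicted))
```

Lower RMSECV and MAE indicate smaller prediction errors in the units of the target, while an R² closer to 1 indicates that the model explains more of the variance in the measured values.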

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wu, Y.; Zhu, X.; Huang, Q.; Zhang, Y.; Evans, J.; He, S. Predicting the Quality of Tangerines Using the GCNN-LSTM-AT Network Based on Vis–NIR Spectroscopy. Appl. Sci. 2023, 13, 8221. https://doi.org/10.3390/app13148221
