Multi-Attribute NMS: An Enhanced Non-Maximum Suppression Algorithm for Pedestrian Detection in Crowded Scenes

Abstract

Featured Application

1. Introduction

- For each pedestrian, only one threshold, either rigid or dynamic, is used to define a single suppression interval, and all proposals within that interval are regarded as duplicates;

- A uniform suppression operation, either discarding the proposals or re-scoring them with a suppression weight function, is applied across the whole interval, which makes it difficult to remove highly similar duplicate proposals of a pedestrian while preserving the proposals of occluded pedestrians.
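To make these two limitations concrete, the Python sketch below writes out the weight that three existing schemes apply to a proposal overlapping the highest-scoring box (called M here): the rigid discard of greedy NMS, the linear and Gaussian re-scoring of Soft-NMS [16], and the density-raised threshold of Adaptive NMS [17]. It follows the published formulations of those methods, but the function names and the default σ = 0.5 are our own choices. Each scheme uses one interval and one operation per box M, which is the behavior criticized above.

```python
import math


def greedy_weight(iou: float, nt: float) -> float:
    """Greedy NMS: a proposal inside the interval IoU >= nt is discarded outright."""
    return 0.0 if iou >= nt else 1.0


def soft_linear_weight(iou: float, nt: float) -> float:
    """Linear Soft-NMS: inside the interval the score is scaled by (1 - IoU)."""
    return 1.0 - iou if iou >= nt else 1.0


def soft_gaussian_weight(iou: float, sigma: float = 0.5) -> float:
    """Gaussian Soft-NMS: every overlapping score is scaled by exp(-IoU^2 / sigma)."""
    return math.exp(-(iou ** 2) / sigma)


def adaptive_threshold(nt: float, density_m: float) -> float:
    """Adaptive NMS: the threshold used for box M becomes max(nt, d_M)."""
    return max(nt, density_m)


if __name__ == "__main__":
    overlap, nt = 0.6, 0.5
    print(greedy_weight(overlap, nt))            # 0.0, i.e. discarded
    print(soft_linear_weight(overlap, nt))       # score scaled by about 0.4
    print(round(soft_gaussian_weight(overlap), 4))
    # With a predicted density of 0.7 the interval starts at 0.7, so IoU 0.6 survives.
    print(overlap >= adaptive_threshold(nt, 0.7))  # False
```

Whichever weight is chosen, the same operation is applied to every proposal inside the interval, regardless of whether it is a true duplicate or an occluded neighbor.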

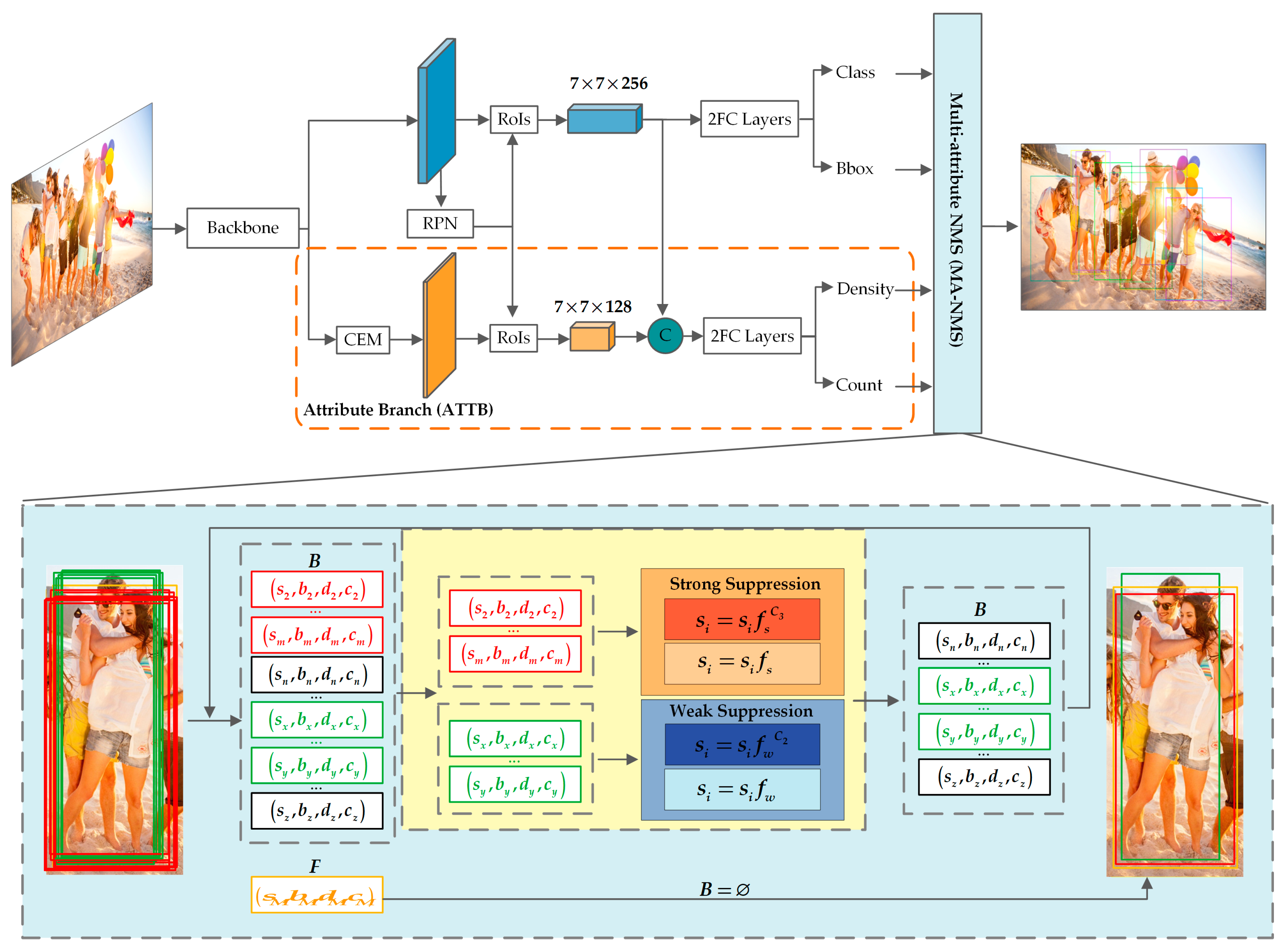

- To remove duplicate detections accurately, a Multi-Attribute NMS (MA-NMS) is proposed. Rather than using a uniform suppression interval, it refines the suppression intervals according to density attributes and performs adaptive suppression, which preserves potentially occluded pedestrians while substantially removing duplicate proposals. In addition, the suppression intensity is adjusted according to count attributes, which further reduces false positives.

- To obtain the density and count attributes of pedestrians, an attribute branch (ATTB) is proposed. In ATTB, a context extraction module (CME) is designed to capture the context of pedestrians. This context is then concatenated with the pedestrian features from the generic detection branch to obtain a more representative feature, so that each pedestrian and the occluded pedestrians around it are considered jointly.

- Based on the proposed ATTB and MA-NMS, a pedestrian detector for crowded scenes is constructed. It considers the density and count attributes of pedestrians simultaneously and adjusts NMS according to both attributes to produce more accurate predictions in crowded scenes.
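Both attributes need ground-truth targets for training. The Python sketch below assumes the density definition introduced by Adaptive NMS [17], in which a pedestrian's density is the maximum IoU between its box and any other ground-truth box. The count definition used here (the number of other ground-truth boxes whose IoU with this one exceeds a small threshold, `min_iou = 0.1`) is purely a hypothetical stand-in for illustration and is not necessarily the definition used in this paper; the function names are likewise our own.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def density_targets(gts: Sequence[Box]) -> List[float]:
    """Density of each ground truth: its maximum IoU with any other ground truth
    (the Adaptive NMS definition); 0.0 for an isolated pedestrian."""
    return [
        max((iou(g, h) for j, h in enumerate(gts) if j != i), default=0.0)
        for i, g in enumerate(gts)
    ]


def count_targets(gts: Sequence[Box], min_iou: float = 0.1) -> List[int]:
    """Hypothetical count attribute: number of other ground truths whose IoU
    with this one exceeds min_iou (an assumption, not the paper's definition)."""
    return [
        sum(1 for j, h in enumerate(gts) if j != i and iou(g, h) > min_iou)
        for i, g in enumerate(gts)
    ]


if __name__ == "__main__":
    gts = [(0, 0, 10, 10), (5, 0, 15, 10), (100, 100, 110, 110)]
    print(density_targets(gts))  # first two about 0.333, third 0.0
    print(count_targets(gts))    # [1, 1, 0]
```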

2. Related Works

2.1. Pedestrian Detection

2.2. Intra-Class Occlusion Handling

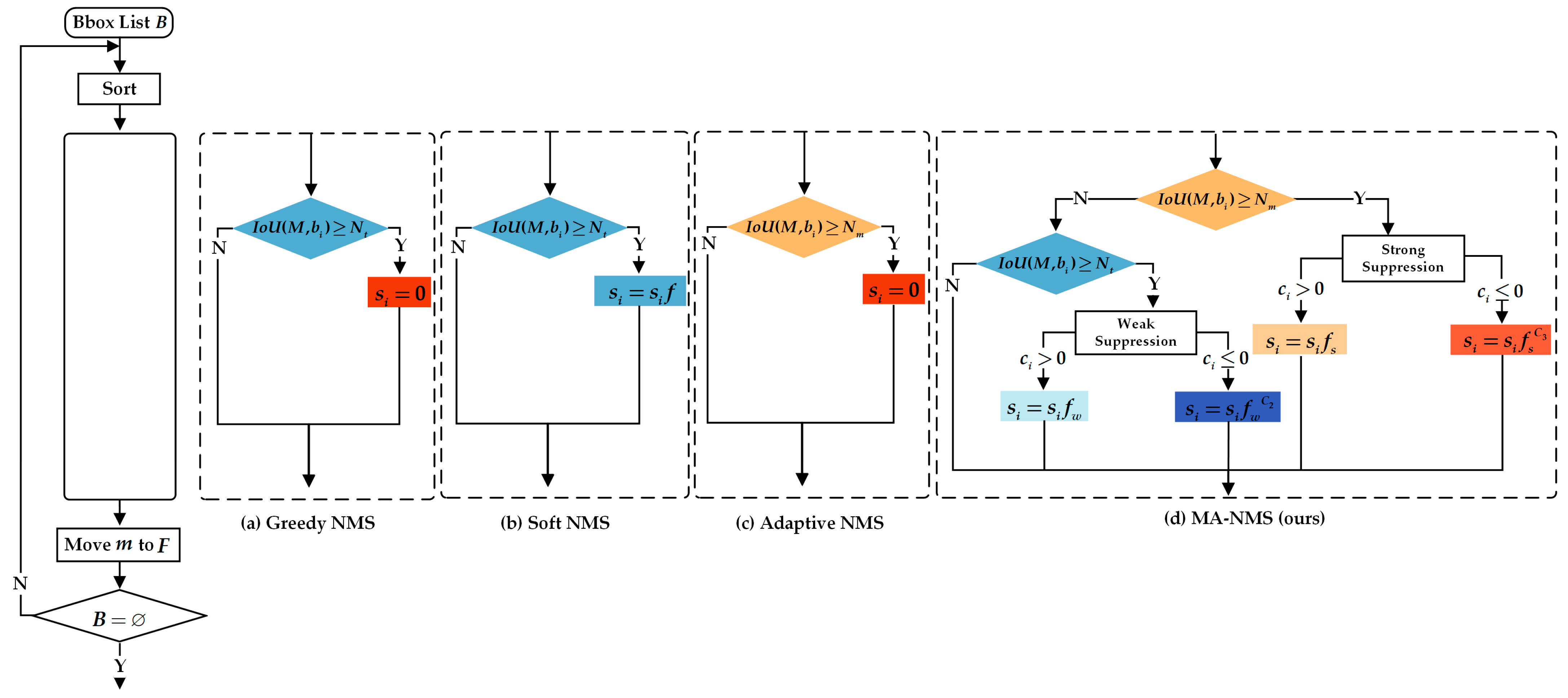

2.3. NMS

3. The Proposed Method

3.1. Greedy NMS

- Sort all the bounding boxes in the input set in descending order of their confidence scores.

- Calculate the intersection-over-union (IoU) between the first bounding box, which has the highest confidence score, and each of the remaining sorted bounding boxes. If the IoU exceeds the rigid threshold, the confidence score of that box is set to zero.

- Move the proposal with the highest-scoring bounding box into the output set, which is initialized as an empty set.

- Repeat the above three steps for the remaining bounding boxes in the input set until all of them have been traversed.
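The four steps above can be sketched in Python as follows. This is a minimal illustration assuming axis-aligned boxes given as `(x1, y1, x2, y2)` tuples; the names `greedy_nms`, `iou` and `nt` (the rigid threshold) are our own. Suppressed boxes are dropped from the candidate list instead of having their scores set to zero, which yields the same retained set because a zero-score box is never selected again.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes: Sequence[Box], scores: Sequence[float], nt: float = 0.5) -> List[int]:
    """Return the indices of the retained boxes, highest score first."""
    # Step 1: sort candidate indices by confidence score, in descending order.
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:  # Step 4: repeat until every box has been traversed.
        # Step 3: the current top-scoring box moves into the output set.
        m = order.pop(0)
        keep.append(m)
        # Step 2: drop every remaining box whose IoU with the top box exceeds nt.
        order = [i for i in order if iou(boxes[m], boxes[i]) <= nt]
    return keep


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 0, 11, 10), (20, 20, 30, 30)]
    print(greedy_nms(boxes, [0.9, 0.8, 0.7]))  # [0, 2]
```

In the usage example the second box overlaps the first with IoU 90/110 ≈ 0.82, so it is suppressed, while the distant third box survives.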

3.2. Multi-Attribute NMS

| Algorithm 1: The procedure of Multi-Attribute NMS. |
|---|
| Input: the list of initial bounding boxes; the list of corresponding confidence scores; the list of corresponding density attributes; the list of corresponding count attributes; the rigid NMS threshold. Output: the list of retained detections. |
| 1: begin: 2: 3: While do 4: 5: 6: 7: 8: for in do 9: if then 10: if then 11: 12: else 13: 14: 15: else if then 16: if then 17: 18: else 19: 20: 21: end for 22: end while 23: return 24: end |
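The exact conditions and re-scoring functions of Algorithm 1 are not reproduced here. As a hypothetical illustration of the idea described in the Introduction, the Python sketch below lets the density of the top-scoring box M split the region above the rigid threshold into two intervals with different operations, and lets the count of M scale the suppression intensity. The interval boundary `max(nt, d_M)`, the exponential decay with constant `alpha`, the `1 / (1 + count)` intensity and the `score_floor` cut-off are all our own assumptions chosen for illustration; they are not the authors' formulas.

```python
import math
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def attribute_aware_nms(
    boxes: Sequence[Box],
    scores: Sequence[float],
    densities: Sequence[float],
    counts: Sequence[int],
    nt: float = 0.5,
    alpha: float = 2.0,
    score_floor: float = 0.05,
) -> Tuple[List[int], List[float]]:
    """Hypothetical density/count-aware NMS; NOT the authors' Algorithm 1."""
    scores = list(scores)
    remaining = [i for i in range(len(boxes)) if scores[i] >= score_floor]
    keep: List[int] = []
    while remaining:
        m = max(remaining, key=lambda i: scores[i])
        remaining.remove(m)
        keep.append(m)
        # Assumed density-refined boundary: proposals above it count as duplicates.
        upper = max(nt, densities[m])
        # Assumed count effect: more expected neighbours -> gentler decay.
        intensity = 1.0 / (1.0 + counts[m])
        for i in remaining:
            overlap = iou(boxes[m], boxes[i])
            if overlap >= upper:
                scores[i] = 0.0  # upper interval: discard as a duplicate
            elif overlap >= nt:
                # lower interval: possibly an occluded neighbour, so only decay
                scores[i] *= math.exp(-alpha * intensity * overlap ** 2)
        remaining = [i for i in remaining if scores[i] >= score_floor]
    return keep, scores


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (3, 0, 13, 10), (1, 0, 11, 10)]
    keep, new_scores = attribute_aware_nms(boxes, [0.9, 0.8, 0.85], [0.7, 0.7, 0.8], [1, 1, 1])
    print(keep)  # [0, 1]
```

In the usage example the third box (IoU ≈ 0.82 with the first) lies above the density boundary 0.7 and is discarded, whereas the second box (IoU ≈ 0.54) falls in the lower interval and is only down-weighted, so it survives even though greedy NMS with the same threshold of 0.5 would remove it. When the density of M does not exceed `nt`, the lower interval is empty and the sketch reduces to greedy NMS.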

3.3. Attribute Branch

3.4. Pedestrian Detector for Crowded Scenes

3.5. Ground Truth for Pedestrian Density and Count Attributes

4. Experiments

4.1. Datasets and Evaluation Metrics

4.1.1. Datasets

4.1.2. Evaluation Metrics

- AP: Average precision, which summarizes the precision–recall curve of the detection results, is one of the most popular evaluation metrics in generic object detection. In the subsequent experiments, we follow the PASCAL VOC protocol [44] and treat proposals whose IoU with a ground truth exceeds 0.5 as positives. AP measures the accuracy of a detector; larger values are better.

- Recall: The maximum recall under a fixed number of proposals, i.e., the proportion of ground truths that are detected as true positives. It reflects the ability of a detector to find all ground-truth pedestrians; larger values indicate better performance.

- MR$^{-2}$: The log-average miss rate, computed over false positives per image (FPPI) in the range $[10^{-2}, 10^{0}]$, is a commonly used evaluation metric in pedestrian detection. It is particularly sensitive to false positives, especially those with high confidence scores. Smaller values of MR$^{-2}$ indicate better performance.

- FPS: Frames per second, the number of frames processed per second, is a commonly used metric of detector speed. Larger values indicate faster processing.
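The MR$^{-2}$ definition can be made concrete with a short Python sketch. It follows the common convention of sampling nine FPPI reference values evenly spaced in log space over $[10^{-2}, 10^{0}]$ and taking the geometric mean of the miss rates at those points. The function name and the treatment of reference values the curve never reaches (counted as a full miss) are our assumptions; official evaluation toolkits, for example the one used for CityPersons, may differ in such details.

```python
import math
from typing import Sequence


def log_average_miss_rate(
    fppi: Sequence[float], miss_rate: Sequence[float], num_refs: int = 9
) -> float:
    """Geometric mean of the miss rate sampled at FPPI values evenly spaced
    in log space over [1e-2, 1e0]. Both sequences describe one curve,
    sorted by increasing FPPI."""
    refs = [10 ** (-2 + 2 * k / (num_refs - 1)) for k in range(num_refs)]
    sampled = []
    for ref in refs:
        # Operating points whose FPPI does not exceed this reference value.
        hits = [i for i, f in enumerate(fppi) if f <= ref]
        # Assumption: if the curve never reaches this FPPI, count a full miss (1.0).
        sampled.append(miss_rate[hits[-1]] if hits else 1.0)
    # The 1e-10 floor avoids log(0) when the miss rate reaches zero.
    return math.exp(sum(math.log(max(m, 1e-10)) for m in sampled) / num_refs)


if __name__ == "__main__":
    fppi = [0.005, 0.05, 0.5, 2.0]
    miss = [0.60, 0.45, 0.30, 0.20]
    print(round(log_average_miss_rate(fppi, miss), 4))
```

Four of the nine reference values lie below FPPI 0.1, so high-confidence false positives, which appear first along the curve, weigh heavily in the result; this is why the metric is sensitive to them.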

4.2. Implementation Details

4.3. Ablation Study

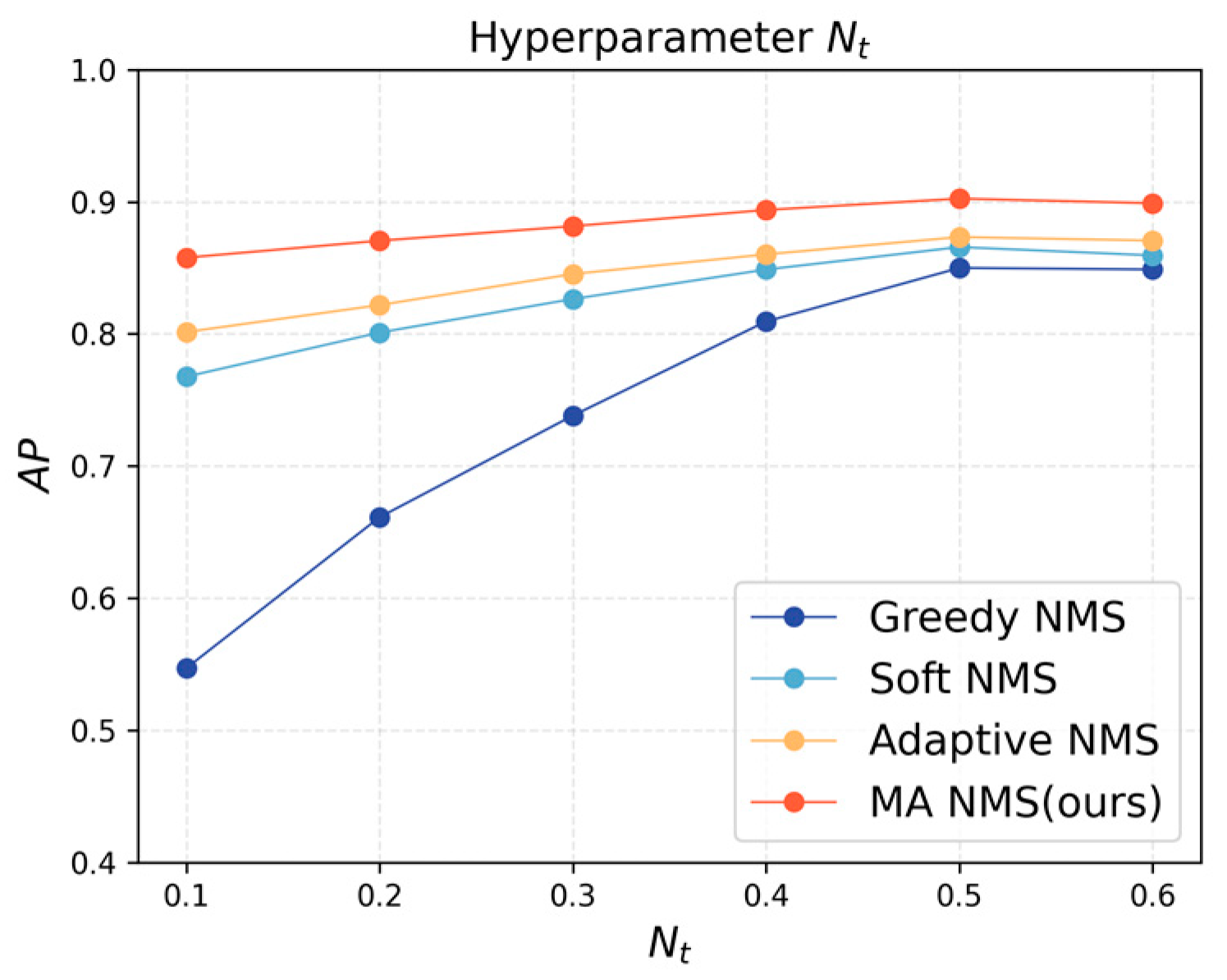

4.4. Hyperparameters

4.4.1. Rigid Threshold

4.4.2. Exponential Constants

4.5. Speed

4.6. Comparison

4.6.1. Results of CrowdHuman

4.6.2. Results of CityPersons

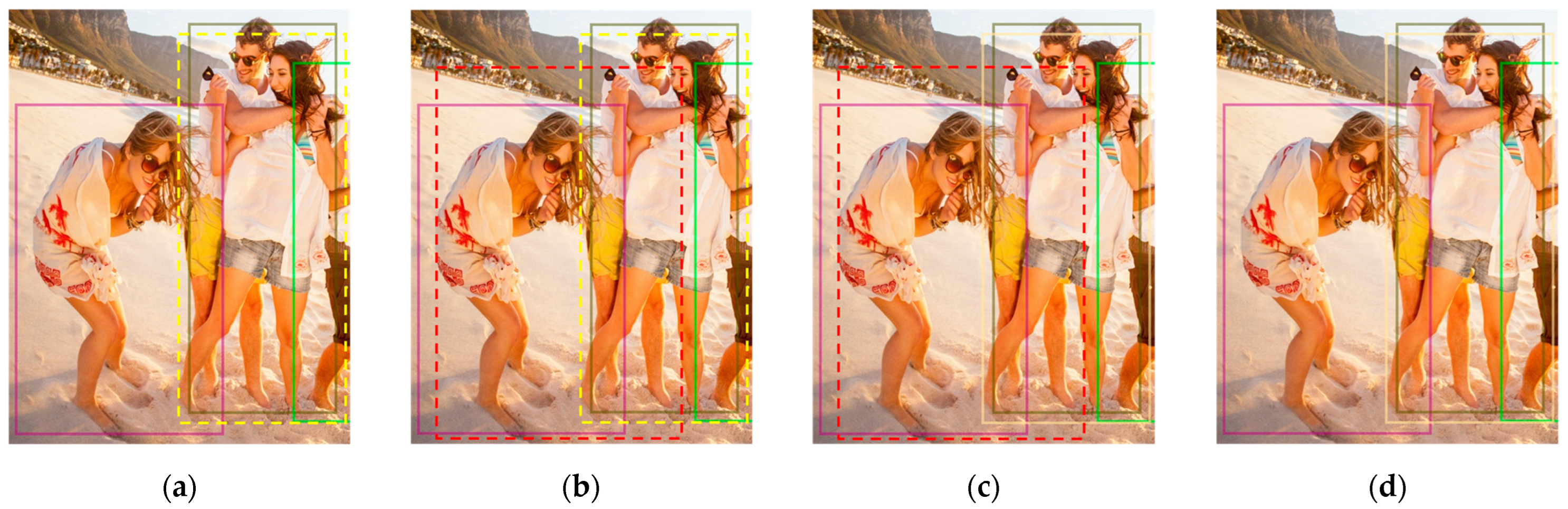

4.7. Visualization

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Cao, J.; Pang, Y.; Xie, J.; Khan, F.S.; Shao, L. From Handcrafted to Deep Features for Pedestrian Detection: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 4913–4934.

2. Claussmann, L.; Revilloud, M.; Gruyer, D.; Glaser, S. A Review of Motion Planning for Highway Autonomous Driving. IEEE Trans. Intell. Transp. Syst. 2020, 21, 1826–1848.

3. Sikandar, T.; Ghazali, K.H.; Rabbi, M.F. ATM Crime Detection Using Image Processing Integrated Video Surveillance: A Systematic Review. Multimed. Syst. 2019, 25, 229–251.

4. Lee, I. Service Robots: A Systematic Literature Review. Electronics 2021, 10, 2658.

5. Sepas-Moghaddam, A.; Pereira, F.M.; Correia, P.L. Face Recognition: A Novel Multi-Level Taxonomy Based Survey. IET Biom. 2020, 9, 58–67.

6. Wu, D.; Huang, H.; Zhao, Q.; Zhang, S.; Qi, J.; Hu, J. Overview of Deep Learning Based Pedestrian Attribute Recognition and Re-Identification. Heliyon 2022, 8, e12086.

7. Harris, E.J.; Khoo, I.-H.; Demircan, E. A Survey of Human Gait-Based Artificial Intelligence Applications. Front. Robot. AI 2021, 8, 749274.

8. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. In Proceedings of the Advances in Neural Information Processing Systems 28 (NIPS 2015), Montreal, QC, Canada, 7–12 December 2015; Cortes, C., Lawrence, N.D., Lee, D.D., Sugiyama, M., Garnett, R., Eds.; Neural Information Processing Systems (NIPS): La Jolla, CA, USA, 2015; Volume 28.

9. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; IEEE: New York, NY, USA, 2016; pp. 779–788.

10. Li, J.; Liang, X.; Shen, S.; Xu, T.; Feng, J.; Yan, S. Scale-Aware Fast R-CNN for Pedestrian Detection. IEEE Trans. Multimed. 2018, 20, 985–996.

11. Liu, W.; Liao, S.; Ren, W.; Hu, W.; Yu, Y. High-Level Semantic Feature Detection: A New Perspective for Pedestrian Detection. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; IEEE: Long Beach, CA, USA, 2019; pp. 5182–5191.

12. Cai, J.; Lee, F.; Yang, S.; Lin, C.; Chen, H.; Kotani, K.; Chen, Q. Pedestrian as Points: An Improved Anchor-Free Method for Center-Based Pedestrian Detection. IEEE Access 2020, 8, 179666–179677.

13. Liu, W.; Hasan, I.; Liao, S. Center and Scale Prediction: Anchor-Free Approach for Pedestrian and Face Detection. Pattern Recognit. 2023, 135, 109071.

14. Li, X.; Xu, F.; Lyu, X.; Gao, H.; Tong, Y.; Cai, S.; Li, S.; Liu, D. Dual Attention Deep Fusion Semantic Segmentation Networks of Large-Scale Satellite Remote-Sensing Images. Int. J. Remote Sens. 2021, 42, 3583–3610.

15. Li, X.; Li, T.; Chen, Z.; Zhang, K.; Xia, R. Attentively Learning Edge Distributions for Semantic Segmentation of Remote Sensing Imagery. Remote Sens. 2022, 14, 102.

16. Bodla, N.; Singh, B.; Chellappa, R.; Davis, L.S. Soft-NMS—Improving Object Detection with One Line of Code. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 5562–5570.

17. Liu, S.; Huang, D.; Wang, Y. Adaptive NMS: Refining Pedestrian Detection in a Crowd. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 6452–6461.

18. Ma, W.; Zhou, T.; Qin, J.; Zhou, Q.; Cai, Z. Joint-Attention Feature Fusion Network and Dual-Adaptive NMS for Object Detection. Knowl. Based Syst. 2022, 241, 108213.

19. Wang, Y.; Han, C.; Yao, G.; Zhou, W. MAPD: An Improved Multi-Attribute Pedestrian Detection in a Crowd. Neurocomputing 2021, 432, 101–110.

20. Zhang, J.; Lin, L.; Zhu, J.; Li, Y.; Chen, Y.; Hu, Y.; Hoi, S.C.H. Attribute-Aware Pedestrian Detection in a Crowd. IEEE Trans. Multimed. 2021, 23, 3085–3097.

21. Zhang, H.; Yan, C.; Li, X.; Yang, Y.; Yuan, D. MSAGNet: Multi-Stream Attribute-Guided Network for Occluded Pedestrian Detection. IEEE Signal Process. Lett. 2022, 29, 2163–2167.

22. Shao, S.; Zhao, Z.; Li, B.; Xiao, T.; Yu, G.; Zhang, X.; Sun, J. CrowdHuman: A Benchmark for Detecting Human in a Crowd. arXiv 2018, arXiv:1805.00123.

23. Zhang, S.; Benenson, R.; Schiele, B. CityPersons: A Diverse Dataset for Pedestrian Detection. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2017), Honolulu, HI, USA, 21–26 July 2017; IEEE: New York, NY, USA, 2017; pp. 4457–4465.

24. Dalal, N.; Triggs, B. Histograms of Oriented Gradients for Human Detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 886–893.

25. Ojala, T.; Pietikainen, M.; Maenpaa, T. Multiresolution Gray-Scale and Rotation Invariant Texture Classification with Local Binary Patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 971–987.

26. Lowe, D.G. Object Recognition from Local Scale-Invariant Features. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Corfu, Greece, 20–27 September 1999; Volume 2, pp. 1150–1157.

27. Viola, P.; Jones, M. Rapid Object Detection Using a Boosted Cascade of Simple Features. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2001), Kauai, HI, USA, 8–14 December 2001; Volume 1, p. I.

28. Zhang, H.; Zhao, L. Integral Channel Features for Particle Filter Based Object Tracking. In Proceedings of the 2013 5th International Conference on Intelligent Human-Machine Systems and Cybernetics, Hangzhou, China, 26–27 August 2013; Volume 2, pp. 190–193.

29. Bouwmans, T.; Javed, S.; Sultana, M.; Jung, S.K. Deep Neural Network Concepts for Background Subtraction: A Systematic Review and Comparative Evaluation. Neural Netw. 2019, 117, 8–66.

30. Wang, X. Intelligent Multi-Camera Video Surveillance: A Review. Pattern Recognit. Lett. 2013, 34, 3–19.

31. Li, X.; Xu, F.; Liu, F.; Xia, R.; Tong, Y.; Li, L.; Xu, Z.; Lyu, X. Hybridizing Euclidean and Hyperbolic Similarities for Attentively Refining Representations in Semantic Segmentation of Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2022, 19, 5003605.

32. Li, X.; Xu, F.; Liu, F.; Lyu, X.; Tong, Y.; Xu, Z.; Zhou, J. A Synergistical Attention Model for Semantic Segmentation of Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5400916.

33. Zhang, Y.; Yi, P.; Zhou, D.; Yang, X.; Yang, D.; Zhang, Q.; Wei, X. CSANet: Channel and Spatial Mixed Attention CNN for Pedestrian Detection. IEEE Access 2020, 8, 76243–76252.

34. Liu, Z.; Song, X.; Feng, Z.; Xu, T.; Wu, X.; Kittler, J. Global Context-Aware Feature Extraction and Visible Feature Enhancement for Occlusion-Invariant Pedestrian Detection in Crowded Scenes. Neural Process. Lett. 2022, 55, 803–817.

35. Li, X.; Xu, F.; Xia, R.; Lyu, X.; Gao, H.; Tong, Y. Hybridizing Cross-Level Contextual and Attentive Representations for Remote Sensing Imagery Semantic Segmentation. Remote Sens. 2021, 13, 2986.

36. Li, X.; Xu, F.; Xia, R.; Li, T.; Chen, Z.; Wang, X.; Xu, Z.; Lyu, X. Encoding Contextual Information by Interlacing Transformer and Convolution for Remote Sensing Imagery Semantic Segmentation. Remote Sens. 2022, 14, 4065.

37. Xie, J.; Pang, Y.; Khan, M.H.; Anwer, R.M.; Khan, F.S.; Shao, L. Mask-Guided Attention Network and Occlusion-Sensitive Hard Example Mining for Occluded Pedestrian Detection. IEEE Trans. Image Process. 2021, 30, 3872–3884.

38. Zhang, S.; Chen, D.; Yang, J.; Schiele, B. Guided Attention in CNNs for Occluded Pedestrian Detection and Re-Identification. Int. J. Comput. Vis. 2021, 129, 1875–1892.

39. Wang, X.; Xiao, T.; Jiang, Y.; Shao, S.; Sun, J.; Shen, C. Repulsion Loss: Detecting Pedestrians in a Crowd. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7774–7783.

40. Zhou, P.; Zhou, C.; Peng, P.; Du, J.; Sun, X.; Guo, X.; Huang, F. NOH-NMS: Improving Pedestrian Detection by Nearby Objects Hallucination. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 1967–1975.

41. Chu, X.; Zheng, A.; Zhang, X.; Sun, J. Detection in Crowded Scenes: One Proposal, Multiple Predictions. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 12211–12220.

42. Abdelmutalab, A.; Wang, C. Pedestrian Detection Using MB-CSP Model and Boosted Identity Aware Non-Maximum Suppression. IEEE Trans. Intell. Transp. Syst. 2022, 23, 24454–24463.

43. Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes Dataset for Semantic Urban Scene Understanding. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; IEEE: New York, NY, USA, 2016; pp. 3213–3223.

44. Everingham, M.; Eslami, S.M.A.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The PASCAL Visual Object Classes Challenge: A Retrospective. Int. J. Comput. Vis. 2015, 111, 98–136.

45. He, K.; Gkioxari, G.; Dollar, P.; Girshick, R. Mask R-CNN. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; IEEE: New York, NY, USA, 2017; pp. 2980–2988.

46. Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

47. He, K.; Zhang, X.; Ren, S.; Sun, J. Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; IEEE: New York, NY, USA, 2015; pp. 1026–1034.

48. Huang, X.; Ge, Z.; Jie, Z.; Yoshie, O. NMS by Representative Region: Towards Crowded Pedestrian Detection by Proposal Pairing. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10747–10756.

49. Zhou, S.; Tang, Y.; Liu, M.; Wang, Y.; Wen, H. Impartial Differentiable Automatic Data Augmentation Based on Finite Difference Approximation for Pedestrian Detection. IEEE Trans. Instrum. Meas. 2022, 71, 2510611.

50. Tang, Y.; Li, B.; Liu, M.; Chen, B.; Wang, Y.; Ouyang, W. AutoPedestrian: An Automatic Data Augmentation and Loss Function Search Scheme for Pedestrian Detection. IEEE Trans. Image Process. 2021, 30, 8483–8496.

51. Ge, Z.; Wang, J.; Huang, X.; Liu, S.; Yoshie, O. LLA: Loss-Aware Label Assignment for Dense Pedestrian Detection. Neurocomputing 2021, 462, 272–281.

52. Wang, Z.; Wang, J.; Yang, Y.; Xing, J. A Coulomb Force Inspired Loss Function for High-Performance Pedestrian Detection. IEEE Signal Process. Lett. 2022, 29, 2318–2322.

53. Song, T.; Sun, L.; Xie, D.; Sun, H.; Pu, S. Small-Scale Pedestrian Detection Based on Topological Line Localization and Temporal Feature Aggregation. In Proceedings of the Computer Vision—ECCV 2018, Part VII, Munich, Germany, 8–14 September 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer International Publishing AG: Cham, Switzerland, 2018; Volume 11211, pp. 554–569.

54. Liu, W.; Liao, S.; Hu, W.; Liang, X.; Chen, X. Learning Efficient Single-Stage Pedestrian Detectors by Asymptotic Localization Fitting. In Proceedings of the Computer Vision—ECCV 2018, Munich, Germany, 8–14 September 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 643–659.

55. Li, F.; Li, X.; Liu, Q.; Li, Z. Occlusion Handling and Multi-Scale Pedestrian Detection Based on Deep Learning: A Review. IEEE Access 2022, 10, 19937–19957.

| Objects | CrowdHuman | CityPersons |
|---|---|---|
| Images | 15,000 | 2975 |
| Persons | 339,565 | 19,238 |
| Ignore regions | 99,227 | 6768 |
| Person/image | 22.64 | 6.47 |
| Unique persons | 339,565 | 19,238 |

| Subsets | Visibility |
|---|---|
| Reasonable (R) | |
| Bare (B) | |
| Partial (P) | |
| Heavy (H) | |

| Methods | SS | WS | SF | AP | Recall | MR$^{-2}$ |

|---|---|---|---|---|---|---|

| Baseline | | | | 85.0 | 88.1 | 44.8 |

| MA-NMS(ours) | √ | 88.6 | 92.7 | 45.1 | ||

| √ | √ | 89.1 | 94.2 | 43.7 | ||

| √ | √ | 89.3 | 93.5 | 42.5 | ||

| √ | √ | √ | 90.2 | 94.6 | 42.0 |

| Methods | Threshold | AP | Recall | MR$^{-2}$ | FPS |
|---|---|---|---|---|---|
| Greedy NMS | 0.5 | 85.0 | 88.1 | 44.8 | 10.75 |
| Soft NMS [16] | 0.5 | 86.6 | 90.8 | 44.5 | 10.62 |
| Adaptive NMS [17] | 0.5 | 87.3 | 90.0 | 45.2 | 10.45 |
| MA-NMS (ours) | 0.5 | 90.2 | 94.6 | 42.0 | 10.33 |
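The FPS column can also be read as per-image latency. Using only the Greedy NMS and MA-NMS rows above, and assuming both were timed under the same conditions for the whole detector, the extra cost works out as:

$$
\Delta t = \frac{1}{10.33\ \text{FPS}} - \frac{1}{10.75\ \text{FPS}} \approx 96.81\ \text{ms} - 93.02\ \text{ms} \approx 3.8\ \text{ms per image},
\qquad
\frac{10.75 - 10.33}{10.75} \approx 3.9\%.
$$

In exchange, AP rises from 85.0 to 90.2 and MR$^{-2}$ falls from 44.8 to 42.0 in the same rows.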

| Method | Backbone | AP | Recall | MR$^{-2}$ |
|---|---|---|---|---|
| PBM + R2NMS [48] | ResNet-50 | 89.3 | 93.3 | 43.4 |
| NOH-NMS [40] | ResNet-50 | 89.0 | 92.9 | 43.9 |
| RepLoss [39] | ResNet-50 | 85.6 | 88.4 | 45.7 |
| AutoPedestrian [50] | ResNet-50 | 87.7 | 93.0 | 46.9 |
| LLA.FCOS [51] | ResNet-50 | 88.1 | 93.4 | 47.9 |
| JointDet [18] | DarkNet-53 | 88.8 | - | 43.4 |
| IDADA [49] | ResNet-50 | 88.0 | 93.6 | 45.3 |
| CouLoss [52] | ResNet-50 | 89.8 | 91.0 | 42.4 |
| MA-NMS (w/o) | ResNet-50 | 89.1 | 94.2 | 43.7 |
| MA-NMS (w) | ResNet-50 | 90.2 | 94.6 | 42.0 |

| Methods | Backbone | R | H | P | B |
|---|---|---|---|---|---|
| RepLoss [39] | ResNet-50 | 13.2 | 56.9 | 16.8 | 7.6 |
| TLL [53] | ResNet-50 | 15.5 | 53.6 | 17.2 | 10.0 |
| TLL + MRF [53] | ResNet-50 | 14.4 | 52.0 | 15.9 | 9.2 |
| ALFNet [54] | ResNet-50 | 12.0 | 51.9 | 11.4 | 8.4 |
| PBM + R2NMS [48] | VGG16 | 11.1 | 53.3 | - | - |
| NOH NMS [40] | ResNet-50 | 10.8 | 53.0 | 11.2 | 6.6 |
| AutoPedestrian [50] | ResNet-50 | 11.5 | 56.7 | - | - |
| CSP [13] | ResNet-50 | 11.0 | 49.4 | 10.4 | 7.3 |
| MA-NMS (w/o) | ResNet-50 | 10.6 | 49.5 | 9.9 | 6.5 |
| MA-NMS (w) | ResNet-50 | 10.0 | 48.9 | 9.0 | 6.3 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, W.; Li, X.; Lyu, X.; Zeng, T.; Chen, J.; Chen, S. Multi-Attribute NMS: An Enhanced Non-Maximum Suppression Algorithm for Pedestrian Detection in Crowded Scenes. Appl. Sci. 2023, 13, 8073. https://doi.org/10.3390/app13148073
