Abstract

Spiking neural P systems (SNP systems), computational models abstracted from the biological nervous system, are a major research topic in biological computing. In conventional SNP systems, the rules in a neuron remain unchanged during the computation. In the biological nervous system, however, the biochemical reactions in a neuron are also influenced by factors such as the substances it contains. Motivated by this, this paper proposes SNP systems with rules dynamic generation and removal (RDGRSNP systems). In RDGRSNP systems, applying a rule changes the substances in a neuron, which in turn changes the rules in that neuron. The Turing universality of RDGRSNP systems is demonstrated when they are used as a number-generating device and as a number-accepting device. Finally, a small universal RDGRSNP system for function computation using 68 neurons is given. A comparison with five variants of SNP systems shows that the proposed variant requires fewer neurons.

1. Introduction

Membrane computing (MC) [1], proposed in 1998, is a distributed parallel computational method inspired by the working process of biological cells. The computational models of MC are also known as membrane systems or P systems. P systems can be roughly classified into three groups according to the biological cells they imitate: cell-like P systems, tissue-like P systems, and neural-like P systems.

In neural-like P systems, spikes are used to represent information. Two classes of neural-like P systems have been proposed: axon P systems (AP systems) [2] and spiking neural P systems (SNP systems) [3]. An AP system has a linear structure, so that each node of this system can only send spikes to its neighboring left and right nodes. An SNP system has a directed graph structure, where neurons are represented as the nodes in the directed graph and the synapses between neurons are represented by directed arcs.

SNP systems, which also belong to the family of spiking neural networks [4], became a major research topic as soon as they were proposed. Research on SNP systems mainly covers four aspects: variant model design, computational power proof, algorithm design (application), and implementation. Theoretical research mainly includes variant model design and computational power proof, while algorithmic research mainly includes algorithm design (application) and implementation. Through the sustained work of many scholars, several variants of SNP systems have been proposed. Numerical SNP (NSNP) systems [5,6,7] are a variant of SNP systems inspired by numerical P systems, in which information is encoded by the values of variables and processed by continuous functions. Compared to the original SNP systems, NSNP systems are no longer discrete but have a continuous numerical nature, which is useful for solving real-world problems. Homogeneous SNP (HSNP) systems [8,9,10,11] are a restricted variant of SNP systems in which each neuron has the same set of rules, making HSNP systems simpler than the original SNP systems. SNP systems with communication on request (SNQP systems) [12,13,14,15,16] add a communication strategy to the original SNP systems in which a neuron requests spikes from neighboring neurons according to the number of spikes it contains, and no spikes are consumed or produced during the computation. SNP systems with anti-spikes [17,18,19,20] introduce anti-spikes and annihilation rules into the original SNP systems: the usual spikes and anti-spikes annihilate each other when they meet in the same neuron. SNP systems with weights [21,22,23,24,25] introduce synaptic weights into the original SNP systems, so that the number of spikes received by a neuron depends not only on the number of spikes sent by its presynaptic neurons, but also on the synaptic weights between neurons.

In addition, as bio-inspired computational models, SNP systems have several useful characteristics. First, neurons are not fully connected to each other, so the topological structure of SNP systems is sparse and simple. Second, information is transmitted only when a specific value is reached, so information transmission is characterized by low power consumption. Third, because SNP systems are parallel computational models, tasks can be processed in parallel, which significantly reduces processing time. Finally, the working mechanism of SNP systems is distinctive: spikes are used to represent information, and the information is processed and transmitted by spiking rules. A neuron can contain multiple rules and uses regular expressions as control conditions for them, allowing the neuron to select which rule to use depending on the number of spikes. Because of these characteristics, SNP systems perform well in many kinds of applications [26,27], such as time series forecasting [28,29,30,31], pattern recognition [32,33], and image processing [34,35,36,37].

Generally, the rules of neurons in SNP systems are set in the initial state and cannot be changed during the computation process. However, in biological neurons, biochemical reactions usually change according to factors such as the substances in the neuron. Motivated by this, spiking neural P systems with rules dynamic generation and removal (RDGRSNP systems) are proposed. In RDGRSNP systems, rules can be added to and removed from the rule set of a neuron during the computation process.

Our contribution is to propose a new variant of SNP systems called RDGRSNP systems. This new variant enriches the research on SNP systems. In RDGRSNP systems, the application of rules causes the number of spikes in the neuron to change, which causes the rules in the neuron to change. This makes the rules in RDGRSNP systems no longer constant like the traditional SNP systems, but dynamically changing during the computation. This is not considered by previous theoretical studies on SNP systems. Therefore, our proposed RDGRSNP systems are closer to biological nervous systems and fit better with biological facts than the original SNP systems. In addition, our proposed RDGRSNP systems can improve the ability of adaptive regulation of SNP systems based on dynamical change of rules according to the different substances in neurons.

This paper is structured as follows. In Section 2, we introduce the proposed RDGRSNP systems and an illustrative example. In Section 3, we demonstrate the Turing universality of RDGRSNP systems when used as a number-generating device and a number-accepting device, respectively. In Section 4, a small universal RDGRSNP system for simulating function computation is constructed. In Section 5, the work of this paper is summarized.

2. SNP Systems with Rules Dynamic Generation and Removal

This section presents the definition of SNP systems with rules dynamic generation and removal and explains them with an illustrative example.

2.1. Definition and Description

In this subsection, we introduce the formal definition of RDGRSNP systems using abstract notation and a detailed description of the definition.

2.1.1. Definition

An RDGRSNP system of degree m consists of the following tuples:

where

- (1) O is a single alphabet with only one element a used to represent a spike.

- (2) denote m neurons in , and is represented by tuple , where

  - (a) denotes the number of spikes in neuron .

  - (b) denotes the rule set contained in neuron , where .

- (3) R is the rule set of , which contains rules of the following two forms:

  - (a) . A rule of this form is called the spiking rule with rules dynamic generation and removal, where E is a regular expression defined on the single alphabet O, and c and p denote the number of spikes consumed and produced with , denoting the l rules in R, and are the labels. The spiking rule with rules dynamic generation and removal can be applied when the number of spikes in the neuron satisfies conditions and . Furthermore, the spiking rule with rules dynamic generation and removal can be abbreviated as when .

  - (b) . A rule of this form is called the forgetting rule with rules dynamic generation and removal, where denotes the number of spikes consumed, with the additional restriction that for all the spiking rules with rules dynamic generation and removal , there is . Similarly, denotes the l rules R, and are the labels.

- (4) denotes the set of synapses, where and .

- (5) denotes the input and output neurons, respectively.

2.1.2. Description

The definition of RDGRSNP systems proposed above is described here in detail. We mainly describe the process of applying rules in RDGRSNP systems, how the rules are executed in RDGRSNP systems, and the state and graphical definition of RDGRSNP systems.

When neuron applies a spiking rule with rules dynamic generation and removal, , c spikes in are consumed, and p spikes are sent along synapse to postsynaptic neuron . Meanwhile, neuron adjusts its rule set as follows. When , can be abbreviated to , indicating that rule is added to the rule set of neuron . If rule already exists , no rule is repeatedly added. When , it means that rule is removed from the rule set of neuron . If rule does not exist in , no rule is removed. When , can be omitted and not written, and it means that rule is neither added to nor removed from the rule set of neuron .

When neuron applies a forgetting rule with rules dynamic generation and removal , s spikes in are removed and no spikes are sent. Meanwhile, adjusts its rule set, operating in the same way as when applying the spiking rule with rules dynamic generation and removal.

Note that an RDGRSNP system is parallel on the system level and sequential on the neuron level. There is a global clock used to mark steps throughout the system. When a neuron contains only one applicable rule, the neuron must apply it. When a neuron contains two or more applicable rules, the neuron can only non-deterministically choose one to apply. When no rule is applicable in the neurons of , the system stops computing.

In general, the state of the system at each step is represented by the number of spikes in each neuron at that step. However, in the proposed RDGRSNP system , since rules can be generated and removed dynamically, the rules contained in each neuron should also be included in the state of system . That is, the state of is represented by the number of spikes and the rules in each neuron, symbolized as . The initial state of is represented by . When the number of spikes and the rule sets contained in the neurons of do not change, the system stops computing.
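The rule-application semantics described above can be sketched in Python. This is a minimal illustration, not the authors' implementation: the `Rule` and `Neuron` classes, their field names, and the helper functions are hypothetical, and the applicability test assumes the standard SNP convention that a rule fires when the neuron's spike string matches the regular expression E and the neuron holds at least c spikes.

```python
import re
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Rule:
    label: str          # hypothetical rule label
    regex: str          # regular expression E over the alphabet {a}
    consume: int        # c: number of spikes consumed
    produce: int        # p: number of spikes sent (0 models a forgetting rule)
    add: tuple = ()     # labels of rules added to the neuron's rule set
    remove: tuple = ()  # labels of rules removed from the neuron's rule set


@dataclass
class Neuron:
    spikes: int
    rules: set = field(default_factory=set)  # labels of the rules currently held


def applicable(neuron, rule):
    # Assumed condition: the rule is in the rule set, a^n matches E, and n >= c.
    n = neuron.spikes
    return (rule.label in neuron.rules
            and n >= rule.consume
            and re.fullmatch(rule.regex, "a" * n) is not None)


def apply_rule(neuron, rule):
    """Consume spikes, update the rule set, and return the spikes emitted."""
    neuron.spikes -= rule.consume
    neuron.rules |= set(rule.add)     # adding a rule that already exists changes nothing
    neuron.rules -= set(rule.remove)  # removing a rule that is absent changes nothing
    return rule.produce


def state(neurons):
    # The state records both the spike count and the rule set of every neuron.
    return tuple((n.spikes, frozenset(n.rules)) for n in neurons)
```

For example, a neuron holding two spikes and a single rule `Rule("r1", "a{2}", 2, 1, add=("r2",), remove=("r1",))` emits one spike when the rule is applied and is left with zero spikes and the rule set `{"r2"}`, so the system state changes even in its rule component.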

Regarding the graphical definition, rounded rectangles are used to represent neurons, and directed line segments are used to represent synapses between neurons. In an RDGRSNP system, the result of a computation is defined by the time interval between the first two spikes sent to the environment by the output neuron .

2.2. An Illustrative Example

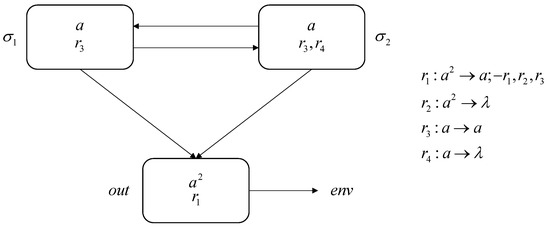

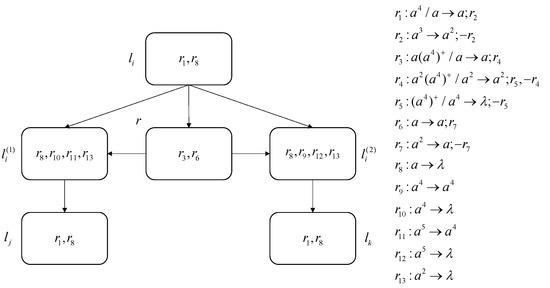

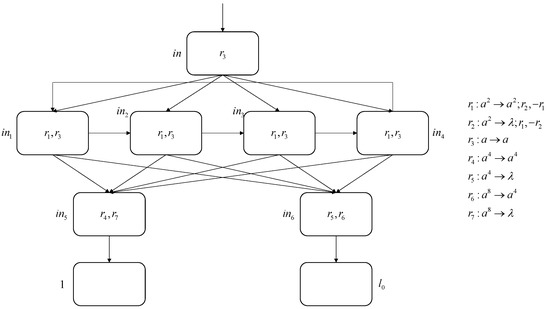

In Figure 1, an illustrative example is given to elaborate the definition of RDGRSNP systems. In this example, both and initially have one spike, and initially has two spikes, so the initial state is .

Figure 1.

An Illustrative Example.

At step 1, neuron applies to send one spike to and . The output neuron applies the spiking rule with rules dynamic generation and removal , consumes two spikes, sends the first spike to , removes , and adds and . As for neuron , the number of spikes contained can satisfy the two rules and , so it will non-deterministically choose one rule to apply.

Suppose at step 1, neuron applies and sends one spike to and . At step 2, applies again and sends one spike to and , respectively. As for the output neuron , it contains two spikes and rules and , so it applies rule , forgetting the two included spikes. Thereafter, if neuron continues to apply rule , the system will continue to repeat the cycle until neuron chooses rule to apply.

Suppose at step n, where , neuron chooses rule to apply, so it does not send spikes outward. In addition, neuron sends one spike to . Therefore, at step , has only one spike, applies rule , and sends the second spike to .

Thus, sends the first two spikes with a time interval of , where , i.e., all positive integers.

3. The Turing Universality of SNP Systems with Rules Dynamic Generation and Removal

This section is used to prove the Turing universality of RDGRSNP systems when used as a number-generating device and a number-accepting device, respectively. It has been shown that register machines can be used to characterize Turing computable sets of numbers (NRE), so we demonstrate the Turing universality of RDGRSNP systems by simulating register machines.

For a register machine , m denotes the number of registers r in the register machine M, H is a label set in which each label corresponds to an instruction in the instruction set I, denotes the start instruction, denotes the halt instruction, and I denotes an instruction set. The instructions in I have the following three forms:

- (1) , the instruction indicates that: add one to the number in r and then jump non-deterministically to or ;

- (2) , the instruction indicates that: if the number in r is not zero, subtract one from it and jump to ; if the number in r is zero, jump directly to ;

- (3) , the instruction is the halt instruction, indicating that M stops computing.

In the number-generating mode, all registers in M are initially empty, and M starts from and executes until . After the computation is finished, all registers except register 1 are empty, and the number generated by M is stored in register 1. It is assumed that there is no for the instructions related to register 1, i.e., the number in register 1 will not be subtracted.

In the number-accepting mode, all registers of M except register 1 are initially empty, and the number to be accepted is stored in register 1. If M can reach the halt instruction , it proves that the number is accepted by M. Note that the instruction needs to be changed to deterministic in the number accepting mode.
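The behavior of a register machine in both modes can be sketched with a small interpreter. This is an illustrative sketch only: the tuple encoding of instructions, the function name `run_register_machine`, the label strings, and the step bound `max_steps` are assumptions introduced here and are not part of the construction in this paper.

```python
import random


def run_register_machine(program, registers, start, halt,
                         rng=random, max_steps=10_000):
    """Run a register machine.

    `program` maps a label to ("ADD", r, j, k) or ("SUB", r, j, k).
    Returns the registers on halting, or None if the step bound is reached.
    """
    label = start
    for _ in range(max_steps):
        if label == halt:
            return registers
        op, r, j, k = program[label]
        if op == "ADD":
            registers[r] += 1
            label = rng.choice((j, k))  # deterministic whenever j == k
        elif op == "SUB":
            if registers[r] > 0:
                registers[r] -= 1
                label = j
            else:
                label = k
        else:
            raise ValueError(f"unknown instruction {op!r}")
    return None


# Number-generating mode: register 1 is only incremented, never decremented.
generator = {"l0": ("ADD", 1, "l0", "lh")}

# Number-accepting mode: halts exactly when register 1 holds an even number.
# The ADD instruction is deterministic (both jump targets coincide).
acceptor = {
    "l0": ("SUB", 1, "l1", "lh"),
    "l1": ("SUB", 1, "l0", "loop"),
    "loop": ("ADD", 2, "loop", "loop"),
}
```

In this sketch, a number is accepted when the run returns a register dictionary rather than `None`; non-halting runs are cut off by the assumed step bound instead of running forever.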

In this paper, we use and to denote the sets of numbers that can be generated and accepted by an RDGRSNP system, respectively.

In the proofs of this paper, we use neuron to denote instruction , neuron to denote register r, and auxiliary neurons for module construction, where . In addition, we stipulate that the simulation of instruction starts when has four spikes.

Theorem 1.

Proof.

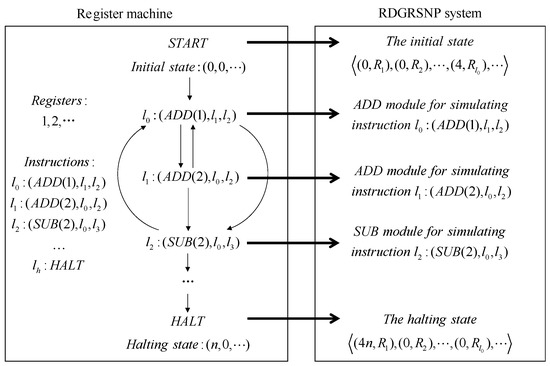

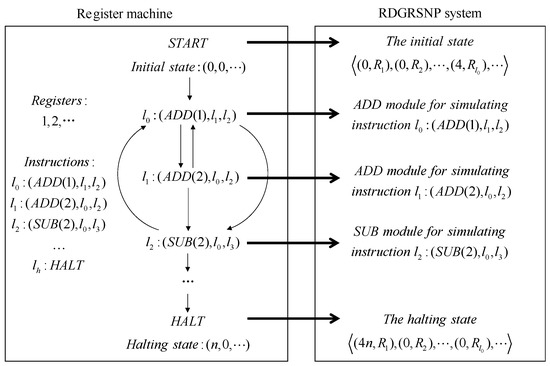

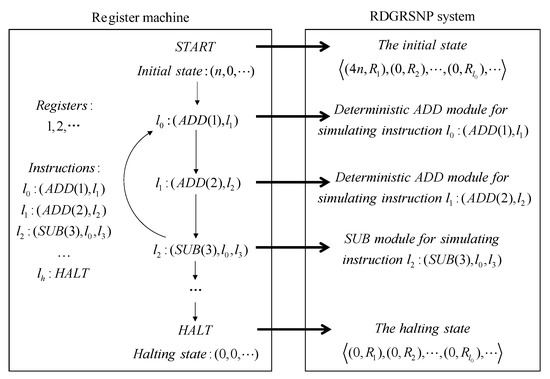

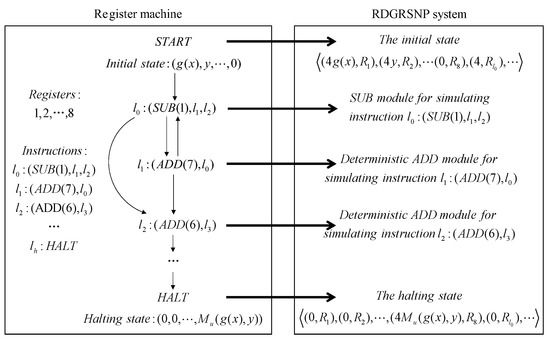

By the Church-Turing thesis, there is , so it is sufficient to prove . For , we prove it by simulating the register machine, following the process shown in Figure 2. □

Figure 2.

The flowchart of the SNP system with rules dynamic generation and removal simulating register machine in the number-generating mode.

In the initial state, all neurons are empty except neuron . Four spikes in neuron are used to trigger the simulation of computation. The simulation of computation follows the simulation of the instructions in the register machine until neuron receives four spikes. When neuron receives four spikes, it means that the computation process in the register machine is successfully simulated, and the FIN module starts to output the computation result stored in register 1.

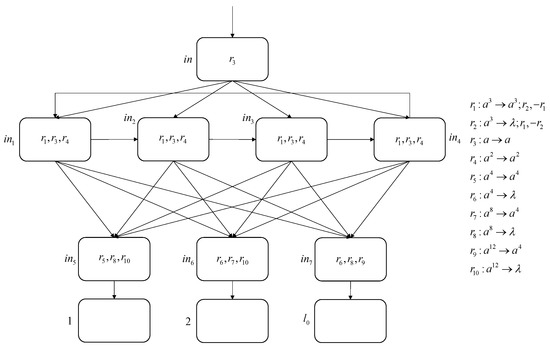

ADD module: The ADD module is used to simulate instruction , as shown in Figure 3. This module consists of six neurons and eight rules.

Figure 3.

ADD module.

Suppose that at step t, has four spikes and starts simulating instruction . In neuron , the spiking rule with rules dynamic generation and removal is applied, consuming two spikes, sending two spikes to , , and and adding . At step , has two spikes with , , and . Therefore, applies rule , sending two spikes to , , and and removing . At step , receives two spikes again, for a total of four spikes, corresponding to adding one to the number in register r. In addition, has four spikes with rules and . Since both rules can be applied, neuron non-deterministically chooses one to apply. The two cases are as follows:

- (1) When neuron chooses rule to apply, it consumes two spikes, sends two spikes to neurons and , and adds rule . At step , applies the forgetting rule with rules dynamic generation and removal , consumes the six spikes it contains, and adds rule . In neuron , since it contains two spikes with rules , , and , rule is applied, sending two spikes to neurons and , and removing rule . At step , applies , consuming two spikes and removing rule . In addition, neuron receives two spikes, containing four spikes in total and starts simulating .

- (2) When neuron chooses rule to apply, it consumes two spikes and adds rule . At step , contains two spikes with rules , , and . Thus, the spiking rule with rules dynamic generation and removal is applied, sending one spike to neurons and , respectively, and removing rule . At step , applies , forgetting the one spike from neuron . In addition, is applied in neuron , sending four spikes to . At step , contains four spikes and starts simulating .

Table 1 lists five variants of SNP systems and the number of neurons they require to construct the ADD module. From Table 1, it can be seen that DSNP, PASNP, PSNRSP, MPAIRSNP, and SNP-IR require 7, 8, 11, 9, and 8 neurons, respectively, while RDGRSNP requires 6. Therefore, the RDGRSNP system proposed in this paper requires the fewest neurons.

Table 1.

The comparison of the number of neurons required to construct the ADD module.

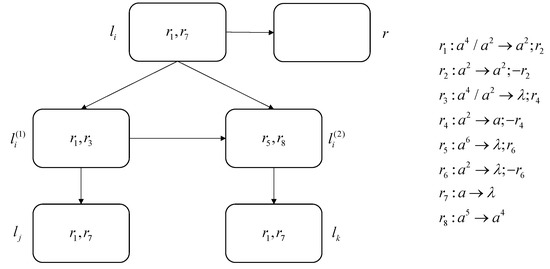

SUB module: The SUB module is used to simulate instruction , as shown in Figure 4. This module consists of six neurons and twelve rules.

Figure 4.

SUB module.

Suppose that neuron contains four spikes at step t and starts simulating instruction . Neuron applies the spiking rule with rules dynamic generation and removal , sends one spike to , , and , and adds to the rule set. At step , remains with three spikes with rules , , and , so applies the spiking rule with rules dynamic generation and removal , sends two spikes to the postsynaptic neuron, and removes the rule . In and , the forgetting rule is applied, forgetting one spike. In addition, different rules are applied in neuron depending on whether the initial state contains spikes or not in the following two cases:

- (1) If contains spikes, then at step , applies , consumes one spike, and sends it to and . Thus, at step , both and have three spikes. Additionally, neuron contains spikes with rules , and . Thus, the spiking rule with rules dynamic generation and removal is applied, sending two spikes to and , adding rule , and removing rule . At step , applies , forgetting four spikes. Thus, at step , has spikes, corresponding to the number in register r decreased by one. Additionally, at step , applies to send four spikes to . In neuron , the forgetting rule is applied. At step , contains four spikes, simulating instruction .

- (2) If neuron does not contain spikes, then at step , the spiking rule with rules dynamic generation and removal is applied, sending one spike to and and adding . At step , has , , and with two spikes, so the spiking rule with rules dynamic generation and removal is applied, removing rule . Thus, at step , the auxiliary neuron applies rule , while applies rule , sending spikes to . At step , contains four spikes, simulating instruction .

Table 2 lists five variants of SNP systems and the number of neurons they require to construct the SUB module. From Table 2, it can be seen that DSNP, PASNP, PSNRSP, MPAIRSNP, and SNP-IR require 7, 10, 15, 8, and 8 neurons, respectively, while RDGRSNP requires 6. Therefore, the RDGRSNP system proposed in this paper requires the fewest neurons.

Table 2.

The comparison of the number of neurons required to construct the SUB module.

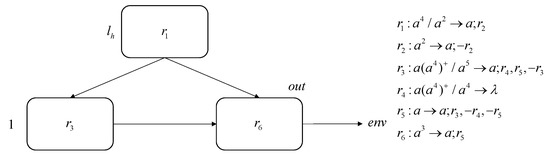

FIN module: The FIN module is used to simulate the halt instruction and output the numbers generated by system , as shown in Figure 5. The generated number n is represented by the time interval between the first two spikes sent to the environment by the output neuron. The FIN module constructed in this paper consists of three neurons and six rules.

Figure 5.

FIN module.

Suppose that at step t, has four spikes, starts simulating instruction and outputting the computation results. Neuron applies the spiking rule with rules dynamic generation and removal , sends one spike to and , and adds to it. Thus, at step , neuron applies , sends one spike to and , and removes . Neuron applies the spiking rule with rules dynamic generation and removal , consuming five spikes, sending one spike to the output neuron , adding rules and , and removing rule . At step , applies sending the first spike to and adding . So, starting from step , neuron will forget four spikes at each step until step , where .

At step , has only one spike with rules and . Therefore, it applies the spiking rule with rules dynamic generation and removal , sends one spike to , adds , and removes rules and . At step , applies to send the second spike to . Thus, the time interval between the first two spikes sent by to the environment is , i.e., the number stored in register 1 when the computation stops.

Table 3 lists five variants of SNP systems and the number of neurons they require to construct the FIN module. From Table 3, it can be seen that DSNP, PASNP, PSNRSP, MPAIRSNP, and SNP-IR require 4, 9, 8, 8, and 5 neurons, respectively, while RDGRSNP requires 3. Therefore, the RDGRSNP system proposed in this paper requires the fewest neurons.

Table 3.

The comparison of the number of neurons required to construct the FIN module.

Theorem 2.

Proof.

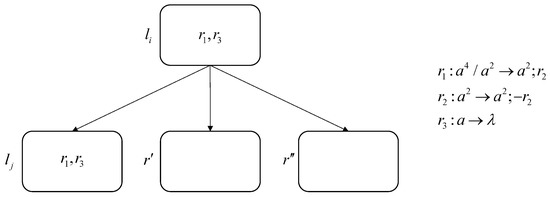

Similarly, by the Church-Turing thesis, we have , so we only need to prove . For , we prove it by simulating the register machine, following the process shown in Figure 6. □

Figure 6.

Flowchart of the RDGRSNP system simulating the register machine in number-accepting mode.

In the initial state, neuron contains spikes, corresponding to the number n to be accepted in the register machine, neuron contains four spikes for triggering the simulation of computation, and all the remaining neurons are empty. The simulation of computation follows the simulation of instructions in the register machine until neuron receives four spikes. When neuron receives four spikes, it means that the computation process in the register machine is simulated successfully, the computation stops, and the number is accepted.

First, in the number-accepting mode, we need the INPUT module to read the number to be accepted. The number n to be accepted is represented by the time interval between the first two spikes entered, i.e., the spike train entered is: .
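The interval encoding of the input can be sketched as follows. This is a minimal illustration assuming that the two input spikes arrive at steps 0 and n, which matches the step numbering used in the description of the INPUT module; the function names `encode` and `decode` and the list-of-bits representation are hypothetical.

```python
def encode(n):
    """Return a spike train with spikes at steps 0 and n (1 = spike, 0 = no spike)."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    train = [0] * (n + 1)
    train[0] = 1
    train[n] = 1
    return train


def decode(train):
    """Return the interval between the first two spikes of a spike train."""
    spike_steps = [step for step, bit in enumerate(train) if bit == 1]
    if len(spike_steps) < 2:
        raise ValueError("the train must contain at least two spikes")
    return spike_steps[1] - spike_steps[0]
```

Under these assumptions, `decode(encode(n))` recovers n for every positive integer n.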

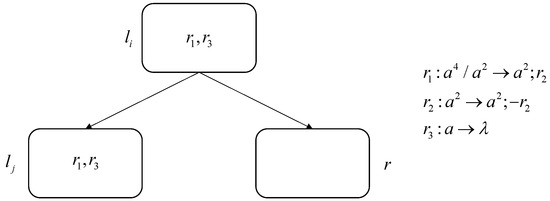

INPUT module: The INPUT module is used to read the number to be accepted, as shown in Figure 7. This module consists of nine neurons and seven rules.

Figure 7.

INPUT module.

Suppose that at step 0, receives one spike, so at step 1, applies and sends one spike to auxiliary neurons , , , and , respectively. Starting from step 2, auxiliary neurons , , , and will send spikes in a cycle and send one spike to and , respectively. For neuron , it applies to send four spikes to ; for neuron , it applies rule to forget the received four spikes. The above process will continue until the input neuron receives another spike.

At step n, receives another spike, so at step , applies again, sends one spike to , , , and . At step , , , , and all apply the spiking rule with rules dynamic generation and removal , send two spikes to neurons and , remove rule , and add rule . Thus, at step , these four auxiliary neurons apply rule to forget two spikes. At step , both neurons and receive eight spikes. Neuron applies rule to forget the spikes in it. Neuron receives four spikes at step , so it contains spikes. In , rule is applied to send four spikes to . Then, neuron contains four spikes, simulating the starting instruction .

Table 4 lists five variants of SNP systems and the number of neurons they require to construct the INPUT module. From Table 4, it can be seen that DSNP, PASNP, PSNRSP, MPAIRSNP, and SNP-IR require 6, 6, 10, 6, and 9 neurons, respectively, while RDGRSNP requires 9. However, this is acceptable because the INPUT module is used only once in the number-accepting mode and does not have a great impact on the overall number of neurons.

Table 4.

The comparison of the number of neurons required to construct the INPUT module.

In the number-accepting mode, the SUB instruction in M does not change, so we can continue to use the SUB module from Theorem 1 above. Since the system stopping means that the input number is accepted, an additional FIN module is no longer needed to output the computation result. However, in the number-accepting mode, the ADD instruction in M is no longer in the non-deterministic form of , but in the deterministic form of . Therefore, this paper provides a deterministic ADD module, as shown in Figure 8. This module consists of only three neurons and three rules.

Figure 8.

Deterministic ADD module.

In this deterministic ADD module, sends spikes to , simulating the operation of adding one to the number of register r and, at the same time, sends spikes to , causing instruction to start being simulated.

Table 5 lists five variants of SNP systems and the number of neurons they require to construct the deterministic ADD module. From Table 5, it can be seen that DSNP, PASNP, PSNRSP, MPAIRSNP, and SNP-IR require 4, 3, 5, 3, and 3 neurons, respectively, while RDGRSNP requires 3. Therefore, PASNP, MPAIRSNP, SNP-IR, and the RDGRSNP system proposed in this paper require the fewest neurons.

Table 5.

The comparison of the number of neurons required to construct the deterministic ADD module.
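The per-module neuron counts reported in the text for Tables 1 to 5 can be collected and checked with a short script. The counts are taken from the prose above; the dictionary layout and the helper name `fewest` are illustrative choices made here.

```python
# Neuron counts per module, as stated in the text for Tables 1-5.
modules = {
    "ADD": {"DSNP": 7, "PASNP": 8, "PSNRSP": 11,
            "MPAIRSNP": 9, "SNP-IR": 8, "RDGRSNP": 6},
    "SUB": {"DSNP": 7, "PASNP": 10, "PSNRSP": 15,
            "MPAIRSNP": 8, "SNP-IR": 8, "RDGRSNP": 6},
    "FIN": {"DSNP": 4, "PASNP": 9, "PSNRSP": 8,
            "MPAIRSNP": 8, "SNP-IR": 5, "RDGRSNP": 3},
    "INPUT": {"DSNP": 6, "PASNP": 6, "PSNRSP": 10,
              "MPAIRSNP": 6, "SNP-IR": 9, "RDGRSNP": 9},
    "deterministic ADD": {"DSNP": 4, "PASNP": 3, "PSNRSP": 5,
                          "MPAIRSNP": 3, "SNP-IR": 3, "RDGRSNP": 3},
}


def fewest(counts):
    """Return the sorted names of the variants that need the fewest neurons."""
    best = min(counts.values())
    return sorted(name for name, count in counts.items() if count == best)


for module, counts in modules.items():
    print(module, fewest(counts))
```

The script confirms the pattern described in the text: RDGRSNP is the sole minimum for the ADD, SUB, and FIN modules, ties for the minimum on the deterministic ADD module, and is not minimal for the INPUT module.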

4. Small Universal SNP System with Rules Dynamic Generation and Removal

This section presents a small universal RDGRSNP system used to simulate function computation and demonstrates that the RDGRSNP system we proposed requires fewer neurons by comparing it with five variants of SNP systems.

Theorem 3.

A small universal RDGRSNP system using 68 neurons can implement function simulation.

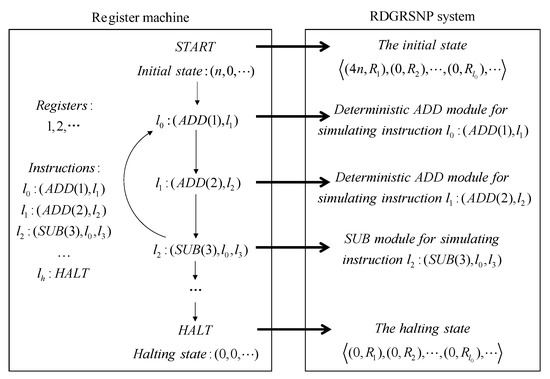

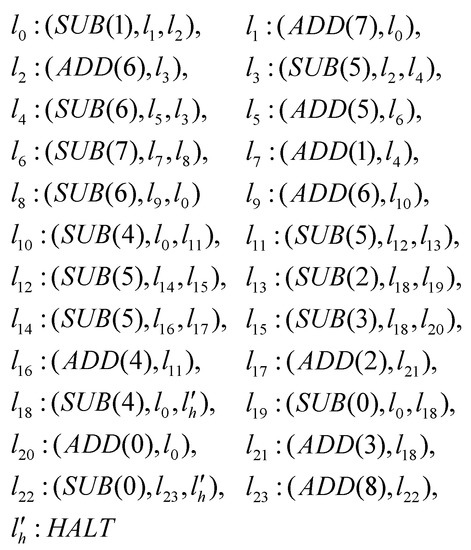

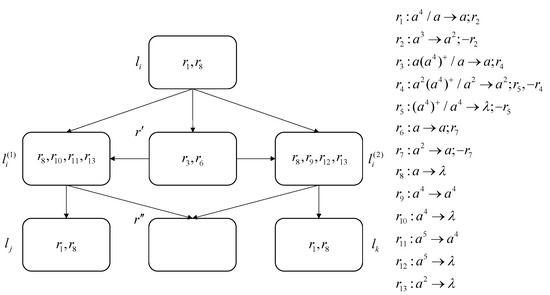

Regarding Theorem 3, we continue to prove it by simulating a register machine. In [41], for simulating the function computation shown in Figure 9, there always exists a recursive function g such that holds for any , where is any one function of the fixed enumeration of all unary partial recursive functions, denotes a universal register machine, and and y are two parameters with stored in registers 1 and 2, respectively. The register machine for the simulating function computation stops when the execution reaches . The result of the computation is stored in register 0.

Figure 9.

Register machine .

Proof.

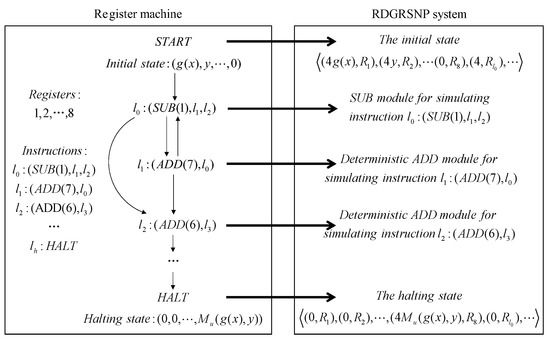

For Theorem 3, we simulate the register machine to prove it by following the process shown in Figure 10.

Figure 10.

The flowchart of a small universal RDGRSNP system simulating a register machine.

In the initial state, neuron contains spikes, corresponding to parameter in register 1, neuron contains spikes, corresponding to parameter y in register 2, neuron contains four spikes for triggering the simulation of computation, and all the remaining neurons are empty. The simulation of computation follows the simulation of instructions in the register machine until neuron receives four spikes. When neuron receives four spikes, it means that the computation process of the register machine is successfully simulated and the computation stops. Meanwhile, the FIN module is triggered and used to output the computation result.

In , the SUB instruction in the form of , the halt instruction , and the deterministic ADD instruction in the form of are included. Thus, we can continue to use the SUB, FIN, and deterministic ADD module, as proposed in Theorems 1 and 2 above. In addition, since two registers are needed to store parameters and y, respectively, this paper makes a little change to the INPUT module in Theorem 2 above, as shown in Figure 11. We still use the time interval between two spikes to represent the input number, so the input sequence is .

Figure 11.

INPUT module in register machine .

Suppose that at step 0, receives the first spike, so at step 1, applies and sends one spike to the four auxiliary neurons , , , and . Thus, from step 2, these four auxiliary neurons send spikes in a cycle by applying rule , and all send one spike to , , and . For neuron , starting at step 3, it continuously applies to send four spikes to , by which the first input number is stored in , while for neurons and , they continuously apply rule to forget four spikes. The process continues until the input neuron receives the second spike.

At step , receives the second spike, so at step , applies again, sends one spike to neurons , , , and . Thus, starting at step , these four neurons apply rule to send two spikes in a cycle, while sending two spikes to their postsynaptic neurons , , and . Similarly, for neuron , starting at step , it applies rule continuously to send four spikes to the neuron , by which the second input number is stored in . For neurons and , they apply rule to forget the spikes. The process continues until receives the third spike. In addition, receives four spikes sent from at step . Therefore, contains spikes, which corresponds to the number in register 1.

At step , receives the third spike, so at step , applies again and sends one spike to , , , and . At step , neurons , , , and all contain three spikes with rules , , and , so they all apply the spiking rule with rules dynamic generation and removal , send three spikes to neurons , , and , and add rule and remove rule . At step , neurons , , , and all apply the forgetting rule with rules dynamic generation and removal , forget three spikes, and remove rule and add rule again. Meanwhile, both neurons and contain 12 spikes and apply rule to forget these 12 spikes. Neuron applies rule to send four spikes to . In addition, receives four spikes sent by neuron at step , so it has spikes, which corresponds to the number y in register 2. At step , contains four spikes, simulating the starting instruction .

Table 6 lists five variants of SNP systems and the number of neurons they need to build the INPUT module in the register machine. From Table 6, it can be seen that DSNP, PASNP, PSNRSP, MPAIRSNP, and SNP-IR require 9, 9, 13, 9, and 9 neurons, respectively, while RDGRSNP requires 11. However, this is acceptable because the INPUT module will be used only once during the simulation of function calculation and will not have a great impact on the overall number of neurons.

Table 6.

The comparison of the number of neurons required to construct the INPUT module in register machine .

According to the INPUT, deterministic ADD, SUB, and FIN modules described above, a small universal RDGRSNP system for simulating function consists of 71 neurons, as follows:

- (1) Neurons used to start simulating instructions: 25

- (2) Neurons used to simulate registers: 9

- (3) Auxiliary neurons in the ADD module: 0

- (4) Auxiliary neurons in the SUB module: 28

- (5) The input and auxiliary neurons in the INPUT module: 8

- (6) The output neuron in the FIN module: 1

We can also further reduce the number of neurons by compound connections between modules, and the following is the specific structure of compound connections between modules.

Figure 12 represents the compound connection of a set of consecutive ADD instructions and . We put from the former module into the latter module, removing .

Figure 12. Compound connection of ADD–ADD.

Figure 13 represents the compound connection of a consecutive ADD instruction and SUB instruction . We put the neuron of the former ADD module into the latter SUB module, removing .

Figure 13. Compound connection of ADD–SUB.

Through the compound connections between modules described above, we remove three neurons , , and , so that the number of neurons required to build a small universal RDGRSNP system for simulating function is reduced from 71 to 68.

Table 7 lists several variants of SNP systems and the number of neurons they require to construct a small universal SNP system for simulating function computation. From the table, it can be seen that five variants of SNP systems, DSNP, PASNP, PSNRSP, MPAIRSNP, and SNP-IR, require 81, 121, 151, 95, and 100 neurons, respectively, while the RDGRSNP system proposed in this paper requires only 68.

Table 7. Comparison of the number of neurons required to construct a small universal SNP system for simulating function computation.
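To make the comparison concrete, the following sketch computes how many neurons the 68-neuron RDGRSNP construction saves relative to each of the five variants, using only the counts quoted in the text. The helper name `savings` and the percentage measure are illustrative choices, not part of the original analysis.

```python
# Neuron counts quoted in the text for small universal systems (function computation).
counts = {"DSNP": 81, "PASNP": 121, "PSNRSP": 151, "MPAIRSNP": 95, "SNP-IR": 100}
rdgrsnp = 68

def savings(other, ours=rdgrsnp):
    """Return (absolute saving, saving as a percentage of the other system's count)."""
    saved = other - ours
    return saved, round(100 * saved / other, 1)

for name, n in counts.items():
    saved, pct = savings(n)
    print(f"{name}: {saved} fewer neurons ({pct}% reduction)")
```

By this measure the saving ranges from 13 neurons relative to DSNP to 83 neurons relative to PSNRSP.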

In the construction of SNP systems, the number of neurons is generally used to measure the computational resources required to build a system: the fewer neurons a construction needs, the fewer resources it requires. As shown in Table 7, the proposed RDGRSNP system requires fewer neurons than the five compared variants to build a small universal SNP system for simulating function . In this respect, RDGRSNP systems have an advantage. □

5. Conclusions

In conventional SNP systems, the rules contained in neurons do not change during the computation process. However, biochemical reactions in biological neurons tend to differ depending on factors such as the substances in the neuron. Based on this motivation, RDGRSNP systems are proposed in this paper. In RDGRSNP systems, applying a rule also updates the rule set of the neuron. In Section 2, we give the definition of RDGRSNP systems and show how they work with an example.

In Section 3, we demonstrate the computational power of RDGRSNP systems by simulating register machines. Specifically, we demonstrate that RDGRSNP systems are Turing universal when used as a number-generating device and as a number-accepting device. Subsequently, in Section 4, we construct a small universal RDGRSNP system for function computation using 68 neurons. A comparison with five variants of SNP systems (DSNP, PASNP, PSNRSP, MPAIRSNP, and SNP-IR) shows that the RDGRSNP system proposed in this paper requires fewer neurons to construct a small universal system for simulating function computation.

Our future research will focus on the following areas. Although the computational power of RDGRSNP systems has been demonstrated, their potential for applications remains to be explored. SNP systems have shown excellent capabilities in solving NP problems, and we have shown that RDGRSNP systems need fewer neurons than other variants of SNP systems. Therefore, we believe that RDGRSNP systems can also perform well in solving NP problems.

The RDGRSNP systems proposed in this paper work in sequential mode. However, other modes of operation, such as asynchronous mode, have not been discussed. In asynchronous mode, each neuron in the system can choose whether or not to apply its rules at each step, although a rule can be applied only if its control condition is satisfied. Neurons therefore have more autonomy in asynchronous mode. Many variants of SNP systems have been studied in asynchronous mode, and exploring RDGRSNP systems working in asynchronous mode is another direction for our future research.
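The difference between the two modes can be illustrated with a toy sketch. It is not the formal RDGRSNP definition: `ToyNeuron`, its numeric `threshold` (standing in for a rule's control condition), and the coin flip used to model a neuron's free choice are all assumptions made for illustration, and spike transmission and rule-set updates are omitted.

```python
import random
from dataclasses import dataclass

@dataclass
class ToyNeuron:
    spikes: int     # current number of spikes
    threshold: int  # hypothetical control condition: enabled when spikes >= threshold
    consume: int    # spikes consumed when the rule is applied

    def enabled(self) -> bool:
        return self.spikes >= self.threshold

def step(neurons, asynchronous, rng):
    """Advance one step and return the indices of neurons that applied their rule."""
    fired = []
    for i, n in enumerate(neurons):
        if not n.enabled():
            continue  # control condition not met: no rule application in either mode
        if asynchronous and rng.random() < 0.5:
            continue  # asynchronous mode: an enabled neuron may choose to stay idle
        n.spikes -= n.consume
        fired.append(i)
    return fired

# Every enabled neuron must apply its rule when asynchronous=False.
neurons = [ToyNeuron(3, 2, 2), ToyNeuron(1, 2, 2)]
print(step(neurons, asynchronous=False, rng=random.Random(0)))  # [0]
```

With `asynchronous=True`, the first neuron may or may not apply its rule at a given step, while the second neuron never does because its control condition is not satisfied.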

Author Contributions

Conceptualization, Y.S. and Y.Z.; methodology, Y.S. and Y.Z.; writing—original draft preparation, Y.S.; writing—review and editing, Y.Z.; funding acquisition, Y.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by National Natural Science Foundation of China (Nos. 61806114, 61876101) and China Postdoctoral Science Foundation (Nos. 2018M642695, 2019T120607).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Păun, G. Membrane Computing. In Fundamentals of Computation Theory, Proceedings of the 14th International Symposium, FCT 2003, Malmö, Sweden, 12–15 August 2003; Lingas, A., Nilsson, B.J., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2003; Volume 2751, pp. 284–295.

- Zhang, X.; Pan, L.; Păun, A. On the Universality of Axon P Systems. IEEE Trans. Neural Netw. Learn. Syst. 2015, 26, 2816–2829.

- Ionescu, M.; Păun, G.; Yokomori, T. Spiking Neural P Systems. Fundam. Inform. 2006, 71, 279–308.

- Fortuna, L.; Buscarino, A. Spiking Neuron Mathematical Models: A Compact Overview. Bioengineering 2023, 10, 174.

- Jiang, S.; Liu, Y.; Xu, B.; Sun, J.; Wang, Y. Asynchronous Numerical Spiking Neural P Systems. Inf. Sci. 2022, 605, 1–14.

- Wu, T.; Pan, L.; Yu, Q.; Tan, K.C. Numerical Spiking Neural P Systems. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 2443–2457.

- Yin, X.; Liu, X.; Sun, M.; Ren, Q. Novel Numerical Spiking Neural P Systems with a Variable Consumption Strategy. Processes 2021, 9, 549.

- Zeng, X.; Zhang, X.; Pan, L. Homogeneous Spiking Neural P Systems. Fundam. Inform. 2009, 97, 275–294.

- Jiang, K.; Chen, W.; Zhang, Y.; Pan, L. Spiking Neural P Systems with Homogeneous Neurons and Synapses. Neurocomputing 2016, 171, 1548–1555.

- Jiang, K.; Song, T.; Chen, W.; Pan, L. Homogeneous Spiking Neural P Systems Working in Sequential Mode Induced by Maximum Spike Number. Int. J. Comput. Math. 2013, 90, 831–844.

- Wu, T.; Wang, Y.; Jiang, S.; Shi, X. Small Universal Spiking Neural P Systems with Homogenous Neurons and Synapses. Fundam. Inform. 2016, 149, 451–470.

- Pan, L.; Paun, G.; Zhang, G.; Neri, F. Spiking Neural P Systems with Communication on Request. Int. J. Neural Syst. 2017, 27, 1750042.

- Pan, L.; Wu, T.; Su, Y.; Vasilakos, A.V. Cell-Like Spiking Neural P Systems with Request Rules. IEEE Trans. Nanobiosci. 2017, 16, 513–522.

- Pan, T.; Shi, X.; Zhang, Z.; Xu, F. A Small Universal Spiking Neural P System with Communication on Request. Neurocomputing 2018, 275, 1622–1628.

- Wu, T.; Bîlbîe, F.D.; Păun, A.; Pan, L.; Neri, F. Simplified and Yet Turing Universal Spiking Neural P Systems with Communication on Request. Int. J. Neural Syst. 2018, 28, 19.

- Wu, T.; Neri, F.; Pan, L. On the Tuning of the Computation Capability of Spiking Neural Membrane Systems with Communication on Request. Int. J. Neural Syst. 2022, 32, 12.

- Pan, L.; Păun, G. Spiking Neural P Systems with Anti-Spikes. Int. J. Comput. Commun. Control 2009, 4, 273–282.

- Song, T.; Jiang, Y.; Shi, X.; Zeng, X. Small Universal Spiking Neural P Systems with Anti-Spikes. J. Comput. Theor. Nanosci. 2013, 10, 999–1006.

- Song, T.; Pan, L.; Wang, J.; Venkat, I.; Subramanian, K.G.; Abdullah, R. Normal Forms of Spiking Neural P Systems with Anti-Spikes. IEEE Trans. Nanobiosci. 2012, 11, 352–359.

- Song, T.; Liu, X.; Zeng, X. Asynchronous Spiking Neural P Systems with Anti-Spikes. Neural Process. Lett. 2015, 42, 633–647.

- Wang, J.; Hoogeboom, H.J.; Pan, L.; Paun, G.; Pérez-Jiménez, M.J. Spiking Neural P Systems with Weights. Neural Comput. 2010, 22, 2615–2646.

- Pan, L.; Zeng, X.; Zhang, X.; Jiang, Y. Spiking Neural P Systems with Weighted Synapses. Neural Process. Lett. 2012, 35, 13–27.

- Zeng, X.; Pan, L.; Pérez-Jiménez, M.J. Small Universal Simple Spiking Neural P Systems with Weights. Sci. China Inf. Sci. 2014, 57, 11.

- Zeng, X.; Xu, L.; Liu, X.; Pan, L. On Languages Generated by Spiking Neural P Systems with Weights. Inf. Sci. 2014, 278, 423–433.

- Zhang, X.; Zeng, X.; Pan, L. Weighted Spiking Neural P Systems with Rules on Synapses. Fundam. Inform. 2014, 134, 201–218.

- Zhang, X.; Zeng, X.; Luo, B.; Xu, J. Several Applications of Spiking Neural P Systems with Weights. J. Comput. Theor. Nanosci. 2012, 9, 769–777.

- Fan, S.; Paul, P.; Wu, T.; Rong, H.; Zhang, G. On Applications of Spiking Neural P Systems. Appl. Sci. 2020, 10, 7011.

- Liu, Q.; Long, L.; Peng, H.; Wang, J.; Yang, Q.; Song, X.; Riscos-Núñez, A.; Pérez-Jiménez, M.J. Gated Spiking Neural P Systems for Time Series Forecasting. IEEE Trans. Neural Netw. Learn. Syst.

- Liu, Q.; Peng, H.; Long, L.; Wang, J.; Yang, Q.; Pérez-Jiménez, M.J.; Orellana-Martín, D. Nonlinear Spiking Neural Systems with Autapses for Predicting Chaotic Time Series. IEEE Trans. Cybern.

- Long, L.; Liu, Q.; Peng, H.; Wang, J.; Yang, Q. Multivariate Time Series Forecasting Method Based on Nonlinear Spiking Neural P Systems and Non-Subsampled Shearlet Transform. Neural Netw. 2022, 152, 300–310.

- Long, L.; Liu, Q.; Peng, H.; Yang, Q.; Luo, X.; Wang, J.; Song, X. A Time Series Forecasting Approach Based on Nonlinear Spiking Neural Systems. Int. J. Neural Syst. 2022, 32, 2250020.

- Ma, T.; Hao, S.; Wang, X.; Rodríguez-Patón, A.A.; Wang, S.; Song, T. Double Layers Self-Organized Spiking Neural P Systems with Anti-Spikes for Fingerprint Recognition. IEEE Access 2019, 7, 177562–177570.

- Song, T.; Pan, L.; Wu, T.; Zheng, P.; Wong, M.L.D.; Rodríguez-Patón, A. Spiking Neural P Systems with Learning Functions. IEEE Trans. Nanobiosci. 2019, 18, 176–190.

- Peng, H.; Wang, J.; Pérez-Jiménez, M.J.; Shi, P. A Novel Image Thresholding Method Based on Membrane Computing and Fuzzy Entropy. J. Intell. Fuzzy Syst. 2013, 24, 229–237.

- Peng, H.; Yang, Y.; Zhang, J.; Huang, X.; Wang, J. A Region-based Color Image Segmentation Method Based on P Systems. Rom. J. Inf. Sci. Technol. 2014, 17, 63–75.

- Song, T.; Pang, S.; Hao, S.; Rodríguez-Patón, A.; Zheng, P. A Parallel Image Skeletonizing Method Using Spiking Neural P Systems with Weights. Neural Process. Lett. 2019, 50, 1485–1502.

- Xue, J.; Wang, Y.; Kong, D.; Wu, F.; Yin, A.; Qu, J.; Liu, X. Deep Hybrid Neural-Like P Systems for Multiorgan Segmentation in Head and Neck CT/MR Images. Expert Syst. Appl. 2021, 168, 114446.

- Ren, Q.; Liu, X. Delayed Spiking Neural P Systems with Scheduled Rules. Complexity 2021, 2021, 13.

- Wu, T.; Zhang, T.; Xu, F. Simplified and Yet Turing Universal Spiking Neural P Systems with Polarizations Optimized by Anti-Spikes. Neurocomputing 2020, 414, 255–266.

- Jiang, S.; Fan, J.; Liu, Y.; Wang, Y.; Xu, F. Spiking Neural P Systems with Polarizations and Rules on Synapses. Complexity 2020, 2020, 12.

- Liu, Y.; Zhao, Y. Spiking Neural P Systems with Membrane Potentials, Inhibitory Rules, and Anti-Spikes. Entropy 2022, 24, 834.

- Peng, H.; Li, B.; Wang, J.; Song, X.; Wang, T.; Valencia-Cabrera, L.; Perez-Hurtado, I.; Riscos-Nunez, A.; Perez-Jimenez, M.J. Spiking Neural P Systems with Inhibitory Rules. Knowl. Based Syst. 2020, 188, 105064.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).