Abstract

Relation classification tends to struggle when training data are limited or when it must adapt to unseen categories. In such scenarios, recent approaches employ the metric-learning framework to measure similarities between query and support examples and determine the relation labels of query sentences from those similarities. However, these approaches treat each support class independently of the others and never consider the task as a whole. As a result, they are constrained to a shared set of features for all meta-tasks, which hinders their ability to compose discriminative features for the task at hand. For example, if two similar relation types occur in a meta-task, the model needs to construct more detailed, task-related features instead of common features shared by all tasks. In this paper, we propose a novel task-aware relation classification model to tackle this issue. We first build a task embedding component to capture task-specific information, and then propose two mechanisms, a task-specific gate and gated feature combination, that use this information to guide feature composition dynamically for each meta-task. Experimental results show that our model improves performance considerably over high-performing baseline systems on both the FewRel 1.0 and FewRel 2.0 benchmarks. Moreover, our proposed methods can be incorporated into metric-learning-based methods and significantly improve their performance.

1. Introduction

Relation classification aims to categorize the semantic relation between two entity mentions based on the contexts within the sentence. For example, the instance “[London] is the capital of [the UK]” expresses the relation capital_of between the entity mention London and the UK.

Previous supervised methods [1,2,3,4] have achieved great success and shown promising results. However, these methods suffer from a lack of large-scale manually annotated data. Another line of approaches is based on distant supervision; these methods [5,6,7,8] automatically generate large-scale training data by aligning knowledge bases with plain texts, which alleviates the data sparsity problem to a degree. However, the resulting models still generalize poorly. By contrast, humans can rapidly learn new classes from few examples. Few-shot learning is devoted to mimicking this generalization ability. It aims to classify unseen data instances (e.g., query sentences) into a set of new categories, given just a small number of labeled instances in each class (e.g., support sentences). The key challenge in few-shot learning, therefore, is to make the best use of the limited data available in the support set.

Many methods have been proposed to address this problem, among which the prototypical network [9] is simple and effective. It iteratively samples some classes and their corresponding labeled support sentences from the whole training set to construct meta-tasks. If a meta-task contains N classes and each class has K labeled support sentences, the task is called N-way-K-shot. After constructing a meta-task, the network first uses a context encoder to map query and support sentences into distributed feature representations; the representations of the support sentences of each class are then averaged and treated as the prototype representation of that class. Finally, the labels of query sentences are determined by computing distances or matching degrees to the prototype representations. Because this method is simple and effective, many similar methods [10,11,12] have been proposed.
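The prototype-and-distance step described above can be illustrated with a minimal Python sketch. It assumes that sentences have already been encoded into fixed-length vectors and that Euclidean distance is used for matching; the function names and the toy vectors are hypothetical and are not taken from [9].

```python
import math

def mean_vector(vectors):
    """Element-wise average of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def classify(query, support):
    """support maps a class label to its list of K encoded support vectors."""
    prototypes = {label: mean_vector(vecs) for label, vecs in support.items()}
    # Predict the class whose prototype is closest to the query.
    return min(prototypes, key=lambda label: euclidean(query, prototypes[label]))

# Toy 2-way-2-shot episode with 2-dimensional "sentence representations".
support = {
    "capital_of": [[1.0, 0.0], [0.8, 0.2]],
    "father": [[0.0, 1.0], [0.2, 0.8]],
}
prediction = classify([0.9, 0.1], support)
print(prediction)  # capital_of
```

Because every prototype is computed from its own class only, no information about the other classes in the meta-task enters the representation, which is the limitation discussed next.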

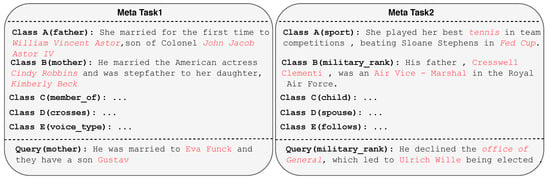

Despite the great success of these approaches, all of them treat each class in each meta-task separately, not as a whole, and do not take into account the specific characteristics of each meta-task, which results in two limitations of these approaches. First, since very few labeled data are available for each meta-task, it is imperative to make the best use of all of the information available from the full set of examples. Neglecting the information from the whole view will cause information loss. Second, the class set of each meta-task is not overlapped with each other. If models treat all meta-tasks equally without considering their specific characteristics, the models will compose a shared feature set for all tasks, which is unreasonable [13]. Take the two meta-tasks in Figure 1 as an example. In meta-task1, as the support sentence S1, S2 of class A “father” and class B “mother” share similar lexical and syntax clues, models need to pay much attention to pronouns such as “She” of S1 and “He” of S2 to make a correct prediction. However, in meta-task2, since class A “sport” and class B “military_rank” have distinct lexical and syntax clues, the models can predict correctly without considering pronouns. Using the same feature set learned from meta-task1, which always focuses on pronouns, we could reduce the weights of discriminative features, leaving potential hazards for other meta-tasks.

Figure 1.

A data example of 5-way-1-shot relation classification. The instances for other relation classes are omitted for saving space. The highlighted words are the entity mentions.

The above issues motivate the following research questions: (1) How can we extract task-specific information from each meta-task? (2) Can we design models to leverage task-specific information to guide feature composition for each meta-task dynamically?

To address these questions, we propose modeling task-specific information and dynamically leveraging it to compose new feature sets for each meta-task by introducing the Task-aware Feature Composition (TFC) structure. Specifically, we first treat all support classes of a meta-task as a whole and design a task embedding learning module to capture task-specific information. We then take inspiration from [14,15] and design two guiding modes for composing task-aware features, in which task embeddings dynamically guide feature composition. We demonstrate the effectiveness of our proposed method on the FewRel1.0 and FewRel2.0 benchmarks, where TFC outperforms all high-performing baselines. Moreover, our model can be viewed as a plug-and-play module, and we observe consistent relative gains when incorporating it into existing methods.

The contributions of this paper can be summarized as follows:

- We propose a task embedding learning module for capturing task-specific information.

- Two methods are proposed for utilizing task-specific information to guide feature composition dynamically for each meta-task.

- Our proposed method is a plug-and-play module that can be easily incorporated into existing methods and shows significant improvements.

2. Related Work

2.1. Relation Classification

Relation classification aims to classify semantic relations between two entity mentions into pre-defined categories. In recent years, neural networks have been widely used for this task. Many works [1,5,16,17,18] utilize neural networks as sentence encoders and achieve great success. However, these supervised methods rely heavily on large amounts of labeled data, and they perform poorly when labeled data are limited. To deal with this problem, Lin et al. [6] proposed selective sentence attention to alleviate the noise introduced by automatically generated training data. Shi et al. [3] tackled the data sparsity problem in another way. They proposed a cross-domain framework that trains the model only on data from a high-resource domain and applies it directly to a distinct new domain without using training data from the new domain. Although these methods have achieved great success, they suffer from noisy training data and cannot quickly adapt to a class that has never been seen. Recently, some works [19,20] have tried to mimic humans’ ability to adapt quickly to new tasks with a limited number of instances. They treat a pretrained language model (e.g., BERT) as a knowledge base and use it as the basic sentence encoder to capture context semantics. Since the pretrained language model is trained on a large-scale corpus, these models can transfer the knowledge learned from the general domain to the target domain, significantly improving few-shot relation classification performance. For example, Soares et al. [21] proposed a pretraining strategy that uses an entity linking toolkit to automatically mine training data from Wikipedia and trains a relational representation learning model by replacing entities with blanks, improving classification performance by a large margin.
Compared with these pretraining methods, our work focuses on proposing a new few-shot classification architecture rather than a new pretraining strategy. Further, our methods can be combined with these pretraining strategies by using the pretrained embeddings as inputs.

2.2. Metric-Based Few-Shot Learning

Our work is closely related to metric-based few-shot learning methods. Vinyals et al. [22] introduced the concept of meta-task training in few-shot learning, where the training procedure mimics the test scenario based on support-query metric learning. They compare feature similarity after embedding both support and query samples into a shared feature space. Snell et al. [9] further extended the work of Vinyals et al. and proposed the prototypical network. By averaging the support representations of each class into a class prototype, the model can use the centroids to reduce the effect of outliers in the support set and find dimensions common to all samples of a class.

All of the metric-based methods above were developed for computer vision tasks. For few-shot relation classification, Han et al. [23] proposed the FewRel dataset and built several baselines. Gao et al. [10] proposed instance-level and feature-level attention modules to alleviate the problem of feature sparsity and measure distances in the feature space in a more suitable way. Ye et al. [12] proposed a multi-level matching and aggregation network (MLMAN) that lets the query and support sentences interact with each other and refine their representations. Geng et al. [24] tackled few-shot relation classification in an extreme low-resource setting by aggregating cross-domain knowledge into models with open-source task enrichment. Wang et al. [25] proposed an entity-guided attention and confusion-aware training strategy to alleviate the relation confusion problem. Compared with these methods, our work goes further by treating all classes in the support set together to extract task-specific features and using them to guide model learning.

3. Task Definition

Under the few-shot configuration, we randomly sample N distinct categories and K support instances in each category from the whole training set to construct a meta-task, which consists of a support set S and a query set Q,

$$S = \{ s_k^n \mid n = 1, \ldots, N;\ k = 1, \ldots, K \}, \qquad Q = \{ q_j \}_{j=1}^{R},$$

where n is the class index, K is the number of samples in class n, and R is the number of queries. The support set has a total of $N \times K$ instances and corresponds to an N-way-K-shot problem. Each instance from the query or support set is denoted as $s = (x, e_1, e_2, r)$ (for simplicity, we discard the superscript of s here), where x represents a sentence that contains the entity pair $(e_1, e_2)$, and r indicates the semantic relation label holding between the entity mentions $e_1$ and $e_2$.
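As an illustration of how such a meta-task can be constructed, the following Python sketch samples one N-way-K-shot episode. It is a simplified sketch under stated assumptions: the corpus is a dictionary from relation label to sentences, queries are drawn from the same sampled classes, and the name `sample_episode` and the placeholder sentences are hypothetical.

```python
import random

def sample_episode(data, n_way, k_shot, n_query, rng):
    """data maps a relation label to a list of at least k_shot + n_query sentences."""
    classes = rng.sample(sorted(data), n_way)
    support, query = {}, []
    for label in classes:
        # Sampling without replacement keeps support and query instances disjoint.
        picked = rng.sample(data[label], k_shot + n_query)
        support[label] = picked[:k_shot]
        query.extend((sentence, label) for sentence in picked[k_shot:])
    return support, query

# Hypothetical toy corpus: 6 relations with 10 placeholder sentences each.
data = {f"rel_{i}": [f"rel_{i}_sent_{j}" for j in range(10)] for i in range(6)}
support, query = sample_episode(data, n_way=5, k_shot=1, n_query=2, rng=random.Random(0))
print(len(support), sum(len(v) for v in support.values()), len(query))  # 5 5 10
```

Here the support set holds N × K = 5 instances and the query set holds 10 labeled queries for the 5-way-1-shot case.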

Given a query example from Q, few-shot relation classification aims to determine its relation label by comparing it with the support sentences. Since K is typically very small (e.g., 1 or 5) and the relation label spaces of the training and testing phases are disjoint, the standard supervised training method cannot be adopted here. Therefore, we adopt the meta-training method of [9]. In each meta-training episode, the model learns from the support set S to minimize a loss over the query set Q, and the loss function at training time is defined as follows:

$$P(n \mid q, S) = \frac{\exp\big(f(q, \{s_k^n\}_{k=1}^{K})\big)}{\sum_{n'=1}^{N} \exp\big(f(q, \{s_k^{n'}\}_{k=1}^{K})\big)}, \tag{2}$$

$$J = -\frac{1}{R} \sum_{(q, r) \in Q} \log P(r \mid q, S), \tag{3}$$

where J is the overall loss function, R represents the total number of queries, and the function f calculates the matching degree between the query representation q and the support representations of class n. Previous works treat each class independently. In contrast, we build a new function f by taking the information of the whole task into consideration.
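The episode loss can be sketched in a few lines of Python. The sketch assumes that the matching scores have already been computed for every query and class, and that the loss is the average negative log-likelihood of the gold class under a softmax over these scores; the scores below are made-up numbers and the helper names are hypothetical.

```python
import math

def log_softmax(scores):
    """Numerically stable log-softmax over a list of class scores."""
    m = max(scores)
    log_z = m + math.log(sum(math.exp(s - m) for s in scores))
    return [s - log_z for s in scores]

def episode_loss(score_rows, gold):
    """score_rows[i][n] is the matching score of query i against class n;
    gold[i] is the index of the correct class of query i."""
    total = 0.0
    for scores, y in zip(score_rows, gold):
        total -= log_softmax(scores)[y]
    return total / len(gold)

# Two queries in a 3-way task.
scores = [[2.0, 0.5, 0.1], [0.2, 0.3, 1.5]]
loss = episode_loss(scores, gold=[0, 2])
# With identical scores for all classes the loss equals log(N).
uniform = episode_loss([[0.0, 0.0, 0.0]], gold=[1])
print(loss < uniform)  # True
```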

4. Methodology

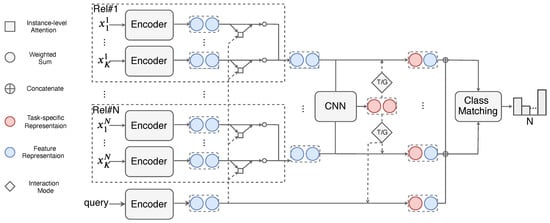

In this section, we will introduce our proposed framework for dynamically composing task-aware features. In the following sections, we use the subscript q or s combined with a symbol to indicate that the combined symbol applies to a query or support sentence. If the symbol does not have q or s as its subscript, we indicate that the symbol applies to both query and support sentences. For example, we use x to represent the sentence of a query or support instance, while we use , to indicate the sentence of a query and support instance, respectively. Furthermore, for simplicity, we discard the superscript of s in Section 4.1. As illustrated in Figure 2, our model mainly consists of four components:

Figure 2.

Overall framework for task-aware few-shot relation classification.

- Context Encoder. Given a query or support instance and its mentioned entity pair, we employ a context encoder similar to the MLMAN [12] to encode the instance into a sentence representation.

- Relation Prototypes and Task Embedding Module. Taking the query and support sentence representations as input, we compute the matching degrees between the query and support representations and use them as weights to combine the support representations into a prototype for each relation. After obtaining the relation prototypes, we treat them as a whole and place a CNN encoder on top of them to extract task-specific representations, which we refer to as task embeddings.

- Task-aware Feature Composition. Based on the task-specific representation, we further propose two different guiding modes to dynamically refine and compose task-aware feature representations for each meta-task. Our guiding modes are based on a task-gated mechanism and gated feature combination, respectively.

- Class Matching. Once the task-specific information is incorporated into the composed query representation and prototypes, these feature representations are then used as input and fed to a matching module to measure the relationship between query and class prototypes.

4.1. Context Encoder

This module extracts feature representations of query and support sentences. Given a support or query sentence mentioning two entities, we first apply an embedding layer to map its words into an embedding sequence. The MLMAN [12] is then adopted as the context encoder to encode the embedding sequence into a continuous low-dimensional sentence representation that captures the sentence semantics.

Embedding Layer. For a query or support sentence, we first map the t-th word in the sentence to a $d_w$-dimensional word embedding $w_t$ [26,27]. To incorporate the position information of the two mentioned entities, we also adopt position embeddings [5], which encode the relative distances between the current word and the two entities. Denote the position embeddings of the t-th word as $p_{1t}$ and $p_{2t}$, each of $d_p$ dimensions. By concatenating the three embeddings, we obtain the input word embedding $x_t = [w_t; p_{1t}; p_{2t}]$ of $d_w + 2d_p$ dimensions. We then gather all the input word embeddings in the sentence into an embedding sequence ready for the encoding layer:

$$x = \{x_1, x_2, \ldots, x_T\},$$

where x represents the input embedding sequence for a query or support sentence and T denotes the sentence length.
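The embedding layer can be sketched in plain Python as follows. This is an illustrative sketch only: the clipping distance `max_dist`, the random lookup tables, the toy dimensions, and the use of a single token index per entity are assumptions made for the example and are not settings reported in this paper.

```python
import random

def relative_positions(length, entity_index, max_dist):
    """Distance of every token to an entity, clipped to [-max_dist, max_dist]
    and shifted so that it can be used as a non-negative table index."""
    return [max(-max_dist, min(max_dist, t - entity_index)) + max_dist
            for t in range(length)]

def embed_sentence(tokens, head_idx, tail_idx, word_table, pos1_table, pos2_table, max_dist):
    pos1 = relative_positions(len(tokens), head_idx, max_dist)
    pos2 = relative_positions(len(tokens), tail_idx, max_dist)
    # x_t = [w_t; p_1t; p_2t]; list concatenation stands in for vector concatenation.
    return [word_table[tok] + pos1_table[a] + pos2_table[b]
            for tok, a, b in zip(tokens, pos1, pos2)]

def random_table(rows, dim, rng):
    return [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(rows)]

rng = random.Random(0)
d_w, d_p, max_dist = 4, 2, 3          # toy sizes
tokens = ["London", "is", "the", "capital", "of", "the", "UK"]
vocab = sorted(set(tokens))
word_table = dict(zip(vocab, random_table(len(vocab), d_w, rng)))
pos1_table = random_table(2 * max_dist + 1, d_p, rng)
pos2_table = random_table(2 * max_dist + 1, d_p, rng)

x = embed_sentence(tokens, 0, 6, word_table, pos1_table, pos2_table, max_dist)
print(len(x), len(x[0]))  # 7 8, i.e., T rows of d_w + 2 * d_p values
```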

Encoding Layer. In the encoding layer, we take the embedding sequence x as input and use MLMAN to encode x into the final instance representation. We first employ a convolutional neural network [28] that slides $d_c$ filters with window size m over the input embedding sequence to obtain a context representation sequence $C = \{c_1, \ldots, c_T\}$ with $c_t \in \mathbb{R}^{d_c}$.

We then adopt a local matching and aggregation mechanism to obtain the matching information between query and support sentences. Let $C_q$ and $C_s$ denote the context representation sequences of the query and support sentences, and let $T_q$ and $T_s$ denote their lengths. For a position i in the query and a position j in the support sentence, with context representations $c_{q,i}$ and $c_{s,j}$, we compute the match score between them as follows:

$$\alpha_{ij} = c_{q,i}^{\top} c_{s,j}. \tag{7}$$

After obtaining the match scores between each query context representation and each support context representation, we normalize them and use them as weights to aggregate context representations. In more detail, for the context representation $c_{q,i}$ of a query sentence, we first compute the match score between $c_{q,i}$ and every context representation in the support sentence according to Equation (7). Then, we normalize these scores with a softmax function and use the normalized weights to aggregate the context representations of the support sentence. This yields the match representation of $c_{q,i}$ and the whole match representation sequence of the query:

$$\tilde{c}_{q,i} = \sum_{j=1}^{T_s} \frac{\exp(\alpha_{ij})}{\sum_{j'=1}^{T_s} \exp(\alpha_{ij'})}\, c_{s,j}, \qquad \tilde{C}_q = \{\tilde{c}_{q,1}, \ldots, \tilde{c}_{q,T_q}\},$$

where $\tilde{c}_{q,i}$ represents the match representation at position i of the query. The same operation applies to the support sentence, which gives the match representation sequence $\tilde{C}_s$. Denote $\tilde{C}$ as the match representation sequence of a query or support sentence. We concatenate $C$ with $\tilde{C}$ and the matching features derived from them, as in MLMAN [12], and use a feed-forward layer to reduce the dimension. The result is fed into a single-layer Bi-LSTM [29] with $d_h$ hidden units. Afterwards, we apply max pooling together with average pooling over the time-step dimension to convert the results of local matching into a single vector, the final sentence representation $\hat{x}$, which can be the final representation of either the query sentence or a support sentence.
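The local matching and aggregation step and the final pooling can be sketched as follows. The sketch assumes a dot-product match score and concatenated max and average pooling, and it omits the feed-forward layer and the Bi-LSTM for brevity; all helper names and toy vectors are hypothetical.

```python
import math

def softmax(values):
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    z = sum(exps)
    return [e / z for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def match_representations(c_query, c_support):
    """For each query position, a softmax-weighted sum of the support positions."""
    dim = len(c_support[0])
    matched = []
    for cq in c_query:
        weights = softmax([dot(cq, cs) for cs in c_support])
        matched.append([sum(w * cs[d] for w, cs in zip(weights, c_support))
                        for d in range(dim)])
    return matched

def pool(sequence):
    """Concatenate max pooling and average pooling over the time dimension."""
    columns = list(zip(*sequence))
    return [max(col) for col in columns] + [sum(col) / len(col) for col in columns]

c_q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # T_q = 3 positions
c_s = [[10.0, 0.0], [0.0, 10.0]]             # T_s = 2 positions
m_q = match_representations(c_q, c_s)
vector = pool(m_q)
print(len(m_q), len(vector))  # 3 4
```

A query position that aligns with one support position (the first row of `c_q`) receives almost all of its mass from that position, whereas an ambiguous position (the third row) averages over both.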

For simplicity, we denote this instance encoding operation, including both the embedding and encoding layers, as

$$\hat{x} = \mathrm{MLMAN}(x; \theta),$$

where $\hat{x}$ is the final sentence representation and $\theta$ denotes the learnable parameters of the MLMAN encoder.

4.2. Relation Prototypes

After obtaining sentence representations, we further utilize the instance-level attention mechanism to build more query-related relation prototypes.

Given a query representation q and support sentence representations $\{\hat{s}_k^n\}$ from the context encoder, we first compute the similarities between the query and the support sentences and then combine the support instances of each class, weighted by these similarity scores, to obtain the relation prototypes:

$$p^n = \sum_{k=1}^{K} \beta_k^n \hat{s}_k^n,$$

where $p^n$ indicates the relation prototype for relation type n, and $\beta_k^n$ indicates the similarity score between the query and the k-th support instance of class n.
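A query-dependent prototype of this kind can be sketched as follows. The sketch assumes that the similarity is a dot product normalized by a softmax over the K support instances of a class; the actual similarity function may differ, and the names below are hypothetical.

```python
import math

def softmax(values):
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    z = sum(exps)
    return [e / z for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attentive_prototype(query, support_vectors):
    """Weight each support vector of one class by its similarity to the query."""
    weights = softmax([dot(query, s) for s in support_vectors])
    prototype = [sum(w * s[d] for w, s in zip(weights, support_vectors))
                 for d in range(len(query))]
    return prototype, weights

query = [1.0, 0.0]
support = [[2.0, 0.0], [0.0, 2.0], [2.0, 0.0]]   # K = 3 instances of one relation
prototype, weights = attentive_prototype(query, support)
print(weights[0] > weights[1], prototype[0] > prototype[1])  # True True
```

Support instances that resemble the query dominate the prototype, so an outlier such as the second instance contributes little.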

4.3. Task-Aware Feature Composition

Up to this point, we have assumed the feature extractor and the prototypes to be task-independent. However, a dynamic task-aware feature extractor should be better suited to finding correct associations between the relation prototypes of a given sample set and the query samples. In other words, if the relation types of a meta-task are difficult to distinguish, the model needs to construct a more finely tailored feature set rather than a feature set shared across all meta-tasks. To achieve this, we first capture task-specific information by employing a task embedding learning module on top of the relation prototypes. Then, we design two guiding modes for composing task-aware features.

Task Embedding Learning. Since each meta-task has a different set of relation types, we place a task embedding learning module on top of the relation prototypes to capture task-specific information.

Formally, given the relation prototypes $\{p^1, \ldots, p^N\}$, we first concatenate them and treat them as a sequence, and then place a CNN encoder followed by a max pooling operation on top of the sequence. This yields the task embedding o, which contains task-specific information,

$$o = \mathrm{MaxPool}\big(\mathrm{CNN}([p^1; p^2; \ldots; p^N])\big), \qquad o \in \mathbb{R}^{d_o},$$

where $d_o$ indicates the number of convolutional filters.
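The task embedding computation can be illustrated with a small pure-Python convolution. The ReLU activation, the window size, and the hand-picked filter weights are assumptions made for this sketch; they are not details given in the text.

```python
def conv1d(sequence, filters, window):
    """Valid 1-D convolution with ReLU; each filter is a flat weight list
    of length window * dim applied to `window` consecutive vectors."""
    outputs = []
    for start in range(len(sequence) - window + 1):
        patch = [v for vec in sequence[start:start + window] for v in vec]
        outputs.append([max(0.0, sum(w * v for w, v in zip(flt, patch)))
                        for flt in filters])
    return outputs

def task_embedding(prototypes, filters, window):
    feature_maps = conv1d(prototypes, filters, window)
    # Max pooling over positions leaves one value per filter.
    return [max(col) for col in zip(*feature_maps)]

prototypes = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # N = 3 prototypes of dimension 2
filters = [[1.0, 0.0, 0.0, 1.0],                    # d_o = 2 filters, window 2
           [-1.0, 0.0, 0.0, -1.0]]
o = task_embedding(prototypes, filters, window=2)
print(o)  # [2.0, 0.0]
```

The resulting vector has one entry per filter, so its dimension is independent of the number of classes N in the meta-task.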

After obtaining the task embedding, two guiding modes are proposed to utilize the embedding to guide feature composition.

Mode 1: Task-gated mechanism. A task-gated mechanism is a specially designed unit for guiding feature composition, which is inspired by [30,31]. In particular, we propose a task-gate to select task-specific representations by controlling the transformation scale of query and prototype representations.

Given a query representation q, relation prototypes $\{p^n\}$, and the task embedding o, we first employ a fully connected layer to transform q and $p^n$ into the same space as o. Then, the task-gate is placed on top of both the query and the relation prototypes to build task-specific representations. The computing equations are as follows:

where g represents the task-gate, $\sigma$ denotes the sigmoid function, and $\hat{q}$ and $\hat{p}^n$ represent the task-aware representations of the query and the relation prototypes.

In Equations (16)–(18), the task-gate controls the transformations of query representation and relation prototypes under the guidance of the learned task embedding. This enables the task embedding to guide the encoding of task-specific features and block the task-irrelevant information.
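One plausible reading of the task-gated mechanism is sketched below. It is an assumption-laden illustration rather than the exact formulation of Equations (16)–(18): the tanh projection, the gate computed from the task embedding alone, and all weight matrices are hypothetical choices.

```python
import math

def linear(matrix, vector, bias):
    return [sum(w * v for w, v in zip(row, vector)) + b
            for row, b in zip(matrix, bias)]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def task_gated(representation, task_emb, w_proj, b_proj, w_gate, b_gate):
    # Project the query or prototype into the space of the task embedding.
    projected = [math.tanh(z) for z in linear(w_proj, representation, b_proj)]
    # One gate value in (0, 1) per dimension, computed from the task embedding.
    gate = [sigmoid(z) for z in linear(w_gate, task_emb, b_gate)]
    # The gate scales each transformed feature.
    return [g * h for g, h in zip(gate, projected)], gate

q = [0.5, -1.0, 2.0]             # query representation of dimension 3
o = [10.0, -10.0]                # task embedding of dimension 2
w_proj = [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
w_gate = [[1.0, 0.0], [0.0, 1.0]]
q_task, gate = task_gated(q, o, w_proj, [0.0, 0.0], w_gate, [0.0, 0.0])
print(gate[0] > 0.99, gate[1] < 0.01)  # True True
```

In this toy case the first feature passes almost unchanged while the second is nearly blocked, which mirrors the idea of keeping task-relevant features and suppressing task-irrelevant ones.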

Mode 2: Gated Feature Combination. Gated feature combination utilizes task-specific information from another perspective. It treats the task embedding o as task-specific features and uses a gated function to selectively combine the features with query representation and relation prototypes.

The task embedding o provides a summary of the meta-task. Although this evidence is valuable to the prediction process, we must limit the influence of the task embedding, since the prediction should still be made primarily on the basis of the query representation and relation prototypes. Therefore, we apply a gating mechanism to constrain this influence and let the model decide how much of the task embedding should be incorporated into the prediction process, which is given by

where W is the learned matrix that transforms the query representation and relation prototypes into the same space as the task embedding; $g_q$ and $g_p$ are the gates that determine how much of the task-specific information should be incorporated into the model; and $\hat{q}$ and $\hat{p}^n$ represent the task-aware representations of the query and the relation prototypes, respectively.
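The gated feature combination can be sketched in the same style. The additive form below (projected representation plus a gated share of the task embedding) and the gate computed from the concatenation of both vectors are assumptions for illustration; the exact equations may differ, and all names and weights are hypothetical.

```python
import math

def linear(matrix, vector):
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def gated_combination(representation, task_emb, w_proj, w_gate):
    # Map the query or prototype into the space of the task embedding.
    h = linear(w_proj, representation)
    # The gate looks at both vectors; `h + task_emb` is list concatenation [h; o].
    gate = [sigmoid(z) for z in linear(w_gate, h + task_emb)]
    # Add a gated share of the task embedding to the projected representation.
    combined = [h_i + g_i * o_i for h_i, g_i, o_i in zip(h, gate, task_emb)]
    return combined, gate

p = [1.0, 2.0]                       # a relation prototype
o = [0.5, -0.5]                      # task embedding
w_proj = [[1.0, 0.0], [0.0, 1.0]]    # identity projection for readability
w_gate = [[0.0] * 4, [0.0] * 4]      # zero weights give a neutral gate of 0.5
p_task, gate = gated_combination(p, o, w_proj, w_gate)
print(gate, p_task)  # [0.5, 0.5] [1.25, 1.75]
```

Unlike the task gate, which rescales the transformed features, this mode keeps the projected representation intact and only controls how much of the task embedding is mixed in.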

4.4. Class Matching

After the task-aware query representation and relation prototypes have been determined, the class-level matching function in Equation (2) is defined as

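where $W$ and $v$ are the learned parameters used to measure the match score between the task-aware query representation $\hat{q}$ and the task-aware relation prototype $\hat{p}^n$.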

By combining Equation (24) with (2)–(3), we can use SGD to optimize the model.

5. Experiments

5.1. Experiments on FewRel1.0 (General Domain)

5.1.1. Dataset and Evaluation Metrics

We evaluate our methods under multiple settings on the few-shot relation classification dataset FewRel [23]. This dataset was first generated by distant supervision and then filtered by crowdsourcing to remove noisy annotations. The final dataset has 100 relation types in total, and each type has 700 instances. The relation types are split into 64, 16, and 20 for training, validation, and testing, respectively, with no overlap among the three sets. As the training and testing data both come from Wikipedia and there is no domain gap between them, this dataset is relatively simple.

Following [23], our experiments investigate four few-shot learning settings, namely, 5-way-1-shot, 5-way-5-shot, 10-way-1-shot, and 10-way-5-shot. We use the official scorer, which uses accuracy as the evaluation metric.

5.1.2. Baselines

We compare our work with the following baselines:

- Finetune(CNN). Using a convolution neural network as the basic sentence encoder and Softmax as the classifier, the model is trained with a traditional supervised learning method. During the test, the sentence encoder parameters are fixed, and the classifier’s parameters are fine-tuned with a few supporting sentences in the test data.

- Finetune(PCNN). The training method used is the same as Finetune (CNN), except that the sentence encoder is changed to the piecewise pooled convolution neural network encoder (PCNN) proposed by Zeng et al. [32].

- kNN(CNN). This baseline adopts a CNN as the basic encoder and Softmax as the classifier and trains the model using the traditional supervised method. In the test phase, the representations of support and query sentences are obtained through the CNN encoder, and each query sentence is then classified using the K-nearest neighbor algorithm.

- kNN(PCNN). The core idea is the same as kNN(CNN), except that the encoder used is PCNN.

- Meta Network. A meta-network [23] utilizes a high-level meta-learner on top of the traditional classification model.

- GNN [23]. This work considers each support sentence or query sentence as a node in the graph and employs a graph neural network to propagate the information between nodes.

- SNAIL [23]. A meta-learning model that utilizes temporal convolutional neural networks and attention modules for fast learning.

- Proto [23]. This work assumes there exists prototype embedding for each support class, and for each class, they average all support sentence representations in the class as the class prototype, then compute the distance between query and class prototypes.

- Proto-HATT [10]. Incorporating a hybrid attention mechanism into a prototypical framework.

- HAPN [33]. It proposes word-level, feature-level, and instance-level attention to obtain a more discriminative prototype of each class.

- MLMAN [12]. The basic encoder we used in our paper; it iteratively utilizes matching information between query and support sentences to refine the feature representations.

- BERT-PAIR [11]. A pretraining-based model that pairs each query instance with every supporting instance and sends the concatenated sequence to the BERT sequence classification model to score whether the two instances express the same relation.

- CTEG [25]. A model equipped with an entity-guided attention mechanism and confusion-aware training strategy to learn to decouple easily confused relations.

5.1.3. Results and Discussions

Table 1 shows the results of different models on the FewRel test set. Our approach (TFC+Mode 1, TFC+Mode 2) significantly outperforms all the baseline methods in all settings. Compared with BERT-PAIR, the best-performing baseline, our methods achieve up to 3.35% absolute gain, demonstrating the effectiveness of incorporating task-specific information into models. Moreover, to study the effect of our two guiding modes, we build the baseline TFC, which directly combines task embeddings with the query representation and relation prototypes. The performance of TFC shows that directly combining task-specific information without a well-designed guiding mechanism degrades performance. This is because task-specific information is incorporated indiscriminately, which can cause information confusion and introduce noise into the model. By adopting guiding mode 1 or 2 (TFC+Mode 1, TFC+Mode 2), we can leverage task-specific information well and improve the system performance significantly.

Table 1.

Accuracy (%) of all models on FewRel1.0 under four different settings.

Compared with the supervised training methods (Finetune (CNN), Finetune (PCNN), kNN (CNN), and kNN (PCNN)), all few-shot learning methods perform much better. This is because the number of training samples is too small to train the large number of classifier parameters, which leads to overfitting. Therefore, it is necessary to adopt a meta-training method to deal with few-shot learning problems.

5.2. Experiments on FewRel2.0 (Cross Domain)

5.2.1. Datasets

To verify the generality of our proposed methods, we adopt a more challenging dataset named FewRel2.0. FewRel2.0 has the same training set as FewRel1.0, but the validation dataset and test dataset are different. FewRel2.0 uses SemEval2010 as the validation dataset, which is taken from websites whose source is different from the training set. The test set of FewRel2.0 is constructed by aligning PubMed with a large-scale biomedical knowledge base. It contains a total of 25 relation types, each with 100 examples. For evaluation, we use accuracy as the metric.

5.2.2. Baselines

For the cross-domain few-shot relation classification, we use the following models as baselines:

- Proto-ADV (CNN) [11]. Adopting the prototypical network as the basic architecture, then using adversarial training to remedy the huge domain gap;

- Proto (BERT) [11]. Using BERT as the sentence encoder without adversarial training;

- Proto-ADV (BERT) [11]. Using BERT as the sentence encoder and employing adversarial training;

- BERT-PAIR [11]. Using BERT sequence classification model to predict whether two instances express the same relation or not.

5.2.3. Results and Discussions

Table 2 compares the proposed model with the baseline models. On FewRel2.0, our model achieves a large average gain of 12.98% over BERT-PAIR across the four settings, whereas it outperforms BERT-PAIR by 3.35% on FewRel1.0. The greater boost on FewRel2.0 arises because the huge domain gap between the training and testing datasets exacerbates the data sparsity problem. Since our proposed methods encode task-aware information as additional guidance for model training, they are more robust to data sparsity, which leads to larger improvements on FewRel2.0.

Table 2.

Accuracy (%) of all models on FewRel2.0 under four different settings.

5.3. Adapting TFC into Existing Frameworks

To verify the portability of our models, we embed them into a GNN and a Prototypical Network, which are closely related to our work. Table 3 shows the gains obtained by including TFC into each method on the validation set of FewRel.

Table 3.

Improvement after incorporating TFC into existing methods on FewRel validation dataset.

We observe that, on average, there is an approximately 3% absolute increase after adopting TFC. This shows the ability of our module to plug-and-play into multiple metric-based systems. Moreover, the gains remain consistent for each method, regardless of the starting performance level. This supports the hypothesis that our method is able to incorporate signals previously unavailable to any of these approaches, i.e., the task-specific information.

5.4. The Impact of Word Embeddings

BERT can provide richer contextual representations of words by pretraining with a large-scale corpus, and many works have shown that BERT embeddings are better than classical word embeddings, such as GLOVE [34]. In this section, we investigate whether better pretrained embeddings will further improve the effectiveness of our proposed model. Specifically, we conduct experiments by replacing BERT embeddings with GLOVE embeddings. The experimental results are shown in Table 4.

Table 4.

Accuracy (%) of all models on the FewRel validation dataset. WE means using word embeddings instead of BERT embeddings.

From Table 4, we find that the classification performance decreases dramatically when using word embeddings instead of BERT embeddings. This is because BERT is trained on a large-scale corpus, and its network structure is based on the transformer, which has a stronger semantic modeling capability. BERT also encodes more contextual semantic information through multi-task learning. This information can serve as an additional knowledge base to compensate for the limited training examples in the few-shot learning setting. This finding is consistent with the work of Petroni et al. [35].

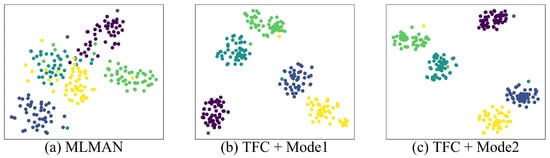

5.5. Feature Visualization Learned by TFC

To explore in more depth what impact our model has on the features, we visualize the feature variations before and after employing the TFC module using t-SNE [36]. We find that our task-feature composition module not only works well in capturing task-specific information but also helps the encoders learn more discriminative sentence representations. Figure 3 shows the visualization results of query feature distribution. The features compute in a 5-way 1-shot setting. We randomly select 5 classes and 50 sentences for each class from the validation dataset. Figure 3a shows the MLMAN without using any task-specific information, while Figure 3b,c shows the visualization results for TFC + Mode1 and TFC + Mode2, respectively. It is clear that the our TFC models have more compact and separable clusters, indicating that features are more discriminative for the task.

Figure 3.

t-SNE visualization of query features in a 5-way 1-shot setting: (a) MLMAN without task-specific information; (b) TFC + Mode1; (c) TFC + Mode2.
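The visual impression of "compact and separable" clusters can be complemented by a simple numeric check. The following is a minimal sketch and not part of the paper's evaluation: it uses synthetic vectors in place of real query features, and the `separability` score (mean distance between class centroids divided by mean distance of points to their own centroid) as well as the helper `synthetic_features` are illustrative assumptions. The 5 classes with 50 points each mirror the sampling described above.

```python
import math
import random


def centroid(points):
    """Component-wise mean of a list of equal-length vectors."""
    dim = len(points[0])
    return [sum(p[d] for p in points) / len(points) for d in range(dim)]


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def separability(features_by_class):
    """Mean inter-centroid distance divided by mean intra-class distance.

    Larger values indicate tighter, better separated clusters.
    This score is an illustrative assumption, not a metric from the paper.
    """
    centroids = {c: centroid(pts) for c, pts in features_by_class.items()}
    intra = [
        euclidean(p, centroids[c])
        for c, pts in features_by_class.items()
        for p in pts
    ]
    labels = list(centroids)
    inter = [
        euclidean(centroids[a], centroids[b])
        for i, a in enumerate(labels)
        for b in labels[i + 1:]
    ]
    return (sum(inter) / len(inter)) / (sum(intra) / len(intra))


def synthetic_features(spread, n_classes=5, per_class=50, dim=8, seed=0):
    """Hypothetical stand-in for query features: Gaussian blobs around random centers."""
    rng = random.Random(seed)
    data = {}
    for c in range(n_classes):
        center = [rng.uniform(-5.0, 5.0) for _ in range(dim)]
        data[c] = [
            [m + rng.gauss(0.0, spread) for m in center]
            for _ in range(per_class)
        ]
    return data


# Loosely scattered features versus tightly clustered ones.
loose = synthetic_features(spread=3.0)
tight = synthetic_features(spread=0.5)
print("loose:", separability(loose))
print("tight:", separability(tight))
```

With real features, the same score could be computed on the encoder outputs before and after the TFC module; a higher value after TFC would agree with the qualitative picture in Figure 3.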

6. Conclusions

In this paper, we propose a task-aware feature composition model that learns task-specific features by taking the whole meta-task into consideration. To utilize the task-specific information effectively, we propose two task-guided feature composition methods: one uses a task-gated mechanism to guide the scale of the non-linear transformation, and the other uses a gate mechanism to selectively incorporate task-specific features into the model. Experiments on the FewRel benchmark show the effectiveness of the proposed method. Moreover, our method can be viewed as a plug-and-play module that can be easily incorporated into existing frameworks to improve performance. In the future, we will extend our approach to a noisy few-shot learning setting for information extraction. In addition, because our models belong to the family of metric-based few-shot learning methods, an open question is how strongly the choice of distance metric affects few-shot learning performance.
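To make the two gating ideas summarized above concrete, the following is a minimal, dependency-free sketch. It is a generic illustration under stated assumptions and not the exact formulation used in the model: the function names, the choice of `tanh` as the non-linearity, and all weight shapes are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def linear(weights, bias, vec):
    """Affine map: one output per weight row."""
    return [sum(w * x for w, x in zip(row, vec)) + b
            for row, b in zip(weights, bias)]


def task_gated_transform(feature, task, w_feat, b_feat, w_gate, b_gate):
    """Assumed form of a task-specific gate: the task embedding produces a
    gate in (0, 1) that scales a non-linear transformation of the feature."""
    gate = [sigmoid(v) for v in linear(w_gate, b_gate, task)]
    hidden = [math.tanh(v) for v in linear(w_feat, b_feat, feature)]
    return [g * h for g, h in zip(gate, hidden)]


def gated_combine(feature, task, w_gate, b_gate):
    """Assumed form of gated feature combination: an element-wise gate,
    computed from the concatenated feature and task embedding, blends the two."""
    gate = [sigmoid(v) for v in linear(w_gate, b_gate, feature + task)]
    return [g * f + (1.0 - g) * t for g, f, t in zip(gate, feature, task)]


# Toy usage with hypothetical 2-dimensional vectors.
feature = [1.0, 2.0]
task = [3.0, 4.0]

# Zero gate weights give a gate of 0.5, so the output is the plain average.
zero_gate = [[0.0] * 4, [0.0] * 4]
print(gated_combine(feature, task, zero_gate, [0.0, 0.0]))  # [2.0, 3.0]

# A strongly positive bias opens the gate, so the sentence feature dominates.
print(gated_combine(feature, task, zero_gate, [50.0, 50.0]))
```

In a trained model the gate parameters would be learned jointly with the encoder, so that the amount of task information mixed into each feature dimension adapts to the meta-task at hand.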

Author Contributions

Conceptualization, G.S. and S.D.; methodology, G.S.; software, Y.W.; validation, G.S. and Y.W.; formal analysis, Y.W.; investigation, G.S. and S.D.; resources, L.L.; data curation, Y.W.; writing—original draft preparation, G.S.; writing—review and editing, C.F.; visualization, L.L.; supervision, C.F. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China under Grant Nos. 61976010, 62106010, 62106011, and 62176011.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing is not applicable to this paper as no new data were created or analyzed in this study.

Acknowledgments

We thank the anonymous reviewers for their valuable comments and suggestions.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Yu, M.; Gormley, M.R.; Dredze, M. Combining word embeddings and feature embeddings for fine-grained relation extraction. In Proceedings of the 2015 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Denver, CO, USA, 31 May–5 June 2015; pp. 1374–1379.

2. Gormley, M.R.; Yu, M.; Dredze, M. Improved relation extraction with feature-rich compositional embedding models. arXiv 2015, arXiv:1505.02419.

3. Shi, G.; Feng, C.; Huang, L.; Zhang, B.; Ji, H.; Liao, L.; Huang, H.Y. Genre separation network with adversarial training for cross-genre relation extraction. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 1018–1023.

4. Yin, R.; Li, K.; Zhang, G.; Lu, J. A deeper graph neural network for recommender systems. Knowl.-Based Syst. 2019, 185, 105020.

5. Zeng, D.; Liu, K.; Lai, S.; Zhou, G.; Zhao, J. Relation classification via convolutional deep neural network. In Proceedings of the 25th International Conference on Computational Linguistics, COLING 2014, Dublin, Ireland, 23–29 August 2014; pp. 2335–2344.

6. Lin, Y.; Shen, S.; Liu, Z.; Luan, H.; Sun, M. Neural relation extraction with selective attention over instances. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Berlin, Germany, 7–12 August 2016; pp. 2124–2133.

7. Zhang, T.; Subburathinam, A.; Shi, G.; Huang, L.; Lu, D.; Pan, X.; Li, M.; Zhang, B.; Wang, Q.; Whitehead, S.; et al. Gaia-a multi-media multi-lingual knowledge extraction and hypothesis generation system. In Proceedings of the Text Analysis Conference Knowledge Base Population Workshop, Gaithersburg, MD, USA, 13–14 November 2018.

8. Yuan, C.; Huang, H.; Feng, C.; Liu, X.; Wei, X. Distant Supervision for Relation Extraction with Linear Attenuation Simulation and Non-IID Relevance Embedding. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 7418–7425.

9. Snell, J.; Swersky, K.; Zemel, R. Prototypical networks for few-shot learning. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 4077–4087.

10. Gao, T.; Han, X.; Liu, Z.; Sun, M. Hybrid attention-based prototypical networks for noisy few-shot relation classification. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 6407–6414.

11. Gao, T.; Han, X.; Zhu, H.; Liu, Z.; Li, P.; Sun, M.; Zhou, J. FewRel 2.0: Towards more challenging few-shot relation classification. arXiv 2019, arXiv:1910.07124.

12. Ye, Z.X.; Ling, Z.H. Multi-Level Matching and Aggregation Network for Few-Shot Relation Classification. arXiv 2019, arXiv:1906.06678.

13. Oreshkin, B.; López, P.R.; Lacoste, A. Tadam: Task dependent adaptive metric for improved few-shot learning. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 3–8 December 2018; pp. 721–731.

14. Xu, M.; Wong, D.F.; Yang, B.; Zhang, Y.; Chao, L.S. Leveraging local and global patterns for self-attention networks. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 3069–3075.

15. Liu, Y.; Meng, F.; Zhang, J.; Xu, J.; Chen, Y.; Zhou, J. Gcdt: A global context enhanced deep transition architecture for sequence labeling. arXiv 2019, arXiv:1906.02437.

16. Nguyen, T.H.; Grishman, R. Relation extraction: Perspective from convolutional neural networks. In Proceedings of the 1st Workshop on Vector Space Modeling for Natural Language Processing, Denver, CO, USA, 5 June 2015; pp. 39–48.

17. Zhang, Y.; Zhang, G.; Zhu, D.; Lu, J. Scientific evolutionary pathways: Identifying and visualizing relationships for scientific topics. J. Assoc. Inf. Sci. Technol. 2017, 68, 1925–1939.

18. Shi, G.; Feng, C.; Xu, W.; Liao, L.; Huang, H. Penalized multiple distribution selection method for imbalanced data classification. Knowl.-Based Syst. 2020, 196, 105833.

19. Bouraoui, Z.; Camacho-Collados, J.; Schockaert, S. Inducing relational knowledge from BERT. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 7456–7463.

20. Loureiro, D.; Jorge, A. Language modelling makes sense: Propagating representations through wordnet for full-coverage word sense disambiguation. arXiv 2019, arXiv:1906.10007.

21. Soares, L.B.; FitzGerald, N.; Ling, J.; Kwiatkowski, T. Matching the blanks: Distributional similarity for relation learning. arXiv 2019, arXiv:1906.03158.

22. Vinyals, O.; Blundell, C.; Lillicrap, T.; Wierstra, D. Matching networks for one shot learning. Adv. Neural Inf. Process. Syst. 2016, 26, 3630–3638.

23. Han, X.; Zhu, H.; Yu, P.; Wang, Z.; Yao, Y.; Liu, Z.; Sun, M. Fewrel: A large-scale supervised few-shot relation classification dataset with state-of-the-art evaluation. arXiv 2018, arXiv:1810.10147.

24. Geng, X.; Chen, X.; Zhu, K.Q.; Shen, L.; Zhao, Y. MICK: A Meta-Learning Framework for Few-shot Relation Classification with Small Training Data. arXiv 2020, arXiv:2004.14164.

25. Wang, Y.; Bao, J.; Liu, G.; Wu, Y.; He, X.; Zhou, B.; Zhao, T. Learning to Decouple Relations: Few-Shot Relation Classification with Entity-Guided Attention and Confusion-Aware Training. arXiv 2020, arXiv:2010.10894.

26. Deng, T.; Ye, D.; Ma, R.; Fujita, H.; Xiong, L. Low-rank local tangent space embedding for subspace clustering. Inf. Sci. 2020, 508, 1–21.

27. Esposito, M.; Damiano, E.; Minutolo, A.; De Pietro, G.; Fujita, H. Hybrid query expansion using lexical resources and word embeddings for sentence retrieval in question answering. Inf. Sci. 2020, 514, 88–105.

28. Kim, Y. Convolutional neural networks for sentence classification. arXiv 2014, arXiv:1408.5882.

29. Huang, Z.; Xu, W.; Yu, K. Bidirectional LSTM-CRF models for sequence tagging. arXiv 2015, arXiv:1508.01991.

30. Liang, Y.; Meng, F.; Zhang, J.; Xu, J.; Chen, Y.; Zhou, J. A Novel Aspect-Guided Deep Transition Model for Aspect Based Sentiment Analysis. arXiv 2019, arXiv:1909.00324.

31. Li, H.; Eigen, D.; Dodge, S.; Zeiler, M.; Wang, X. Finding task-relevant features for few-shot learning by category traversal. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1–10.

32. Zeng, D.; Liu, K.; Chen, Y.; Zhao, J. Distant supervision for relation extraction via piecewise convolutional neural networks. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015; pp. 1753–1762.

33. Sun, S.; Sun, Q.; Zhou, K.; Lv, T. Hierarchical Attention Prototypical Networks for Few-Shot Text Classification. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 476–485.

34. Pennington, J.; Socher, R.; Manning, C.D. Glove: Global vectors for word representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1532–1543.

35. Petroni, F.; Rocktäschel, T.; Lewis, P.; Bakhtin, A.; Wu, Y.; Miller, A.H.; Riedel, S. Language models as knowledge bases? arXiv 2019, arXiv:1909.01066.

36. Van der Maaten, L.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).