Abstract

As reconstruction and redevelopment accelerate, the generation of construction waste increases, and construction waste treatment technology is being developed accordingly, especially using artificial intelligence (AI). The majority of AI research projects fail because of poor learning data rather than the structure of the AI model. If data pre-processing and labeling, i.e., the processes prior to the training step, are not carried out with the development purpose in mind, the desired AI model cannot be obtained. Therefore, in this study, we analyzed the performance differences of a construction waste recognition model trained on data pre-processed and labeled by individuals with different degrees of expertise, with the goal of distinguishing construction waste accurately and increasing the recycling rate. The experimental results showed that the mean average precision (mAP) of the AI model trained on the dataset labeled by non-professionals was superior to that of the model trained on the dataset labeled by professionals, being 21.75 higher for the box and 26.47 higher for the mask, on average. We attribute this to the non-professional labeling being similar in method to the Microsoft Common Objects in Context (MS COCO) dataset used to train You Only Look at Coefficients (YOLACT), even though MS COCO objects and construction waste possess different traits. Construction waste is differentiated by texture and color; thus, we augmented the dataset by adding noise (texture) and changing the color to consider these traits. This resulted in a meaningful accuracy being achieved in 25 epochs, two fewer than with the unreinforced dataset. In order to develop an AI model that recognizes construction waste, which is an atypical object, it is necessary either to develop an explainable AI model, for example by restructuring the AI network using the model’s feature map, or to create a dataset with weights added to the texture and color of the construction waste.

1. Introduction

As the demand for housing increases due to rising real estate prices and the lack of supply, rebuilding and redevelopment have taken center stage, but on-site waste remains a serious problem [1,2,3]. According to ‘2019 Waste Occurrence by Type’, which is the result of the investigation of the current status of waste generation, construction waste comprises 44.5% of all waste in Korea, which is the largest overall portion [4]. When construction waste is neglected, environmental pollution occurs, such as underground water pollution due to waste oil and rust; thus, it is important to recycle where possible, because otherwise, waste is incinerated or landfilled. However, since construction waste of over five tons was banned in landfills in 2022, those that cannot be buried must be recycled or incinerated. Nevertheless, as a result of the toxic substances that are released during incineration, it is impossible to increase the landfill in Korea whilst adhering to the international environment standard. Because of this, waste must be recycled as much as possible, and thus this field is of increasing research interest.

Furthermore, Incheon Metropolitan City, South Korea, has announced that it will terminate the operation of its main landfill, the biggest single landfill in the world, by 2025 due to waste saturation [5]. In order to prepare for this, recycling methods for construction waste, which comprises a significant portion of the landfill, are required. Although crushed waste, such as concrete and asphalt, is already being reproduced as recycled aggregate, the quality of the recycled products is low due to the high cost of sorting different materials, such as interior and exterior materials, and this low-quality aggregate is difficult to use on construction sites. However, as described in the ‘Construction Waste Recycling Promotion Act’, the introduction of separate dismantling systems, which remove in advance materials that are difficult to recycle, such as synthetic resin and board, is planned to become mandatory for effective recycled-aggregate usage. Furthermore, according to the research results from ‘Changes in Processing Methods by Waste Type in 2019’, construction waste is 98.9% recycled; however, the Ministry of Environment requires the remaining 1.1% to be reduced, and there is thus great demand for technological development in the field of recycling methods [4]. Construction waste from the demolition or remodeling of old buildings as a consequence of housing policy is therefore inevitable in the near future, and, in the end, recycling through separate dismantling systems is the only solution when landfill capacity is insufficient.

There is research into automatic sorting techniques using ventilators in separate dismantling systems [6]. Recycled sand and aggregate are reproduced by automatically sorting mixed rock waste, sand, and soil by size, followed by a fragmenting and cleansing process [7,8]. This technique uses wind power from a ventilator to push out lighter waste and drop heavier waste onto a conveyor belt before sorting in the next step. The downside of this technique is that the wind power cannot be controlled; thus, not only light vinyl waste but also fragments of lumber, fine aggregate, and even sand are thrown out together with the other waste. Recycling is therefore ineffective, as either foreign substances are introduced into the recycled aggregate, or fine aggregates and sand, which are required on-site, are landfilled with the waste. As a result of these issues with wind power sorting equipment, AI has been utilized at construction waste landfills [9,10]. An automatic sorting robot equipped with AI differentiates waste and performs the classification task with high accuracy. Moreover, it is efficient as it is able to operate for 24 h/day [11]. Thus, in this study, we developed an AI model that classifies construction waste by applying transfer learning to YOLACT, with the goal of distinguishing construction waste accurately and increasing the recycling rate.

In recent AI-related research, the failure of AI projects was associated with the poor construction of learning datasets [12]. According to that study, there is a good probability that learning data from a non-professional, who does not have the expertise to label data, affects the performance of the AI model. Therefore, in order to increase the efficiency of the AI research and development process, the knowledge and management of a professional, who is able to identify poor learning data during data pre-processing, are needed. Hence, in this research, workers were classified based on their level of knowledge, and each of them labeled the data. The learning results of the construction waste recognition models trained on each labeled dataset through transfer learning were then compared.

2. Related Works

There is much research, in many fields, regarding the detection and classification of objects using image recognition [13,14,15,16]. Nonetheless, most research focuses on simply increasing the volume of data so as to secure the AI model’s performance [16]. For this reason, there has been no progress in terms of enhancing learning efficiency and accuracy using professionals in the data refinement process. Hereafter, we investigate the AI studies in the construction industry and the effects that refined data quality has on the AI model by analyzing previous research. In addition, since instance segmentation was utilized as an image recognition technique for developing the model in this research, the practicality of this technique is investigated in terms of it being used as an AI model and labeling method in non-preferred special fields, such as construction [16].

2.1. Artificial Intelligence in the Construction Industry

Vision-based artificial intelligence is a branch of computer science that enables computers to recognize diverse objects and people in photos and movies. Several attempts were made in the 1950s to emulate the human visual system in order to identify the edges of objects and categorize the simple shapes of objects into categories, such as circles and squares [17,18,19,20]. In the 1970s, computer vision technology was commercialized, and it reached outcomes such as identifying typeface or handwriting using optical character recognition algorithms. In recent years, the construction industry has embraced computer vision-based technologies for a variety of reasons, including construction site safety monitoring, job efficiency, and structural health inspections [20,21].

In comparison to traditional sensor-based approaches, vision-based techniques might provide non-destructive, distant, ease-of-use, and ubiquitous measurement without the need for additional measuring and receiving equipment [22,23,24,25]. Furthermore, as low-cost, high-performance digital cameras have become more common in practice, it is expected that computer vision-based technologies will broaden their applications to the construction industry, which contains many risk factors, such as working at height and loading hazardous construction materials at sites [20,25,26].

Vision-based crack detection technologies on concrete structures, for example, have been one of the most extensively used techniques in the construction sector for infrastructure and building health checks and monitoring. Koch et al. [25] conducted a thorough study of existing computer vision-based fault identification and condition assessment procedures for concrete and asphalt civil infrastructures. They observed that, while the image-based crack and spalling detection and classification system could identify such problems automatically, the process of gathering image and video data was not totally automated.

Image-based inspection approaches for big infrastructures such as bridges and tunnels have allowed for the assessment of the bending and/or displacement of structures that otherwise would be difficult and dangerous for employees to inspect in person [20,27,28,29,30]. Furthermore, because image-based measurement approaches capture image and/or video data from remote places, it is possible to increase job productivity and worker safety [31,32,33]. Machine learning, in particular, when combined with modern digital image processing algorithms and unmanned aerial vehicles (UAVs), has improved the precision and accuracy of image-based displacement measurements acquired from remote places. For example, Jahanshahi et al. [34] used a pattern recognition system to analyze visual data from UAVs.

2.2. Importance of Raw Data and Labeling

In order to obtain an AI model with high performance, it has been reported that how well the collected raw data were labeled in accordance with the developmental purpose of the AI model is paramount, as opposed to the quantity of data. In one study, in order to diagnose tumors, fractures, and eruptions in teeth, an AI model was developed utilizing panorama X-ray images as learning data [18]. However, the research results showed that there were times when certain normal conditions were perceived as diseases in the teeth. These were cases in which the sorting of patients’ data classes were performed incorrectly, which supplemented the teeth with the artifact, and recognized teeth disease hidden behind the artifact as normal. A study to differentiate between normal tissue and cancer by assessing the colonic tissue with deep multichannel side supervision was also conducted [35,36,37]. The model could not identify whether the size of cancer tissue was small. This was because the labeling was conducted to differentiate between more complicated shapes than the cancer size. A shape-robust model was designed that recognized whether the cancer was small according to whether it had a simple shape. Although hundreds of AI models have been developed to predict patient risks over the last two years in order to cope with the COVID-19 pandemic, using medical images such as chest X-rays and CT scans, no diagnosis model has yet been reported as applicable on clinical sites. The data collected could only differentiate between single species types and was unable to identify if variable species developed; they were thus considered unsuitable for use in real examinations. Moreover, there were also cases when model performance could not be trusted due to the developers’ competence, due to the introduction of biased data or user errors in the diagnosis model, which frequently occur in AI studies [38,39]. 
Previous studies verified that AI model performance is affected by pre-processing learning data and labeling quality.

2.3. AI in Specialized Fields

AI is actively being used in various industries to improve efficiency [39]. In the medical industry, a variety of diagnosis models are used to accurately and quickly diagnose diseases and lessen patient risk. For instance, it is important to diagnose melanoma in the early stage since it can be cured if detected early. However, it is difficult to identify melanoma with the naked eye and thus it has a low detection rate in its early stages. Hence, research into diagnosing melanoma through classification and segmentation in skin lesion images was conducted. The study attempted to identify pigmented skin in dermoscopic images utilizing instance segmentation [40]. As a result of segmentation with the MeanShift algorithm and classifying using kNN, Decision Tree, and SVM, high accuracy was reported, which was of assistance when self-diagnosing melanoma.

Moreover, a deep learning model was also developed to diagnose metastatic breast cancer. In order to differentiate metastatic breast cancer from the overall lymph gland, a segmentation-based automatic background detection technique that deletes the background was used. The five-year survival rate for patients with metastatic breast cancer is 34%, and it is difficult to completely recover; thus, relapse and treatment are often repeated. However, patient quality of life can be improved using an early-stage diagnosis model [41]. The livestock industry also uses segmentation-based AI models. It is difficult to frequently check the health information of animals, such as weight and activity levels, but it is crucial. The pig breeding industry developed panoptic segmentation, which combines semantic segmentation and instance segmentation, and attempted to classify pigs in the farm as objects. Datasets annotated and labeled in ellipses similar to the shape of pigs were utilized, and the pigs’ weights were calculated using the volume information from the object shapes. Using this model, livestock industry workers can assess weight gain and other health information without having to walk around the farm [42].

2.4. Instance Segmentation for Labeling

The upside of image recognition techniques is that the identification result is similar to that of human beings, i.e., instance segmentation shows not only the location but also the shape of the object in the image in detail. Since 2020, the most commonly used instance segmentation models are as follows: the fully convolutional instance-aware semantic segmentation (FCIS) technique based on the fully convolutional network (FCN) technique, which does not have image size limits, remembers the location information of the object, and has improved instance segmentation in the image to enhance accuracy [43,44,45]. In the previous FCN technique, the object area in the image is marked by a human, but it exhibits comparatively low accuracy as parts overlap as an image becomes more complex, and a pixel ends up containing information from several parts. The FCIS technique, which appeared to solve this problem, successfully resolves overlapping issues by automatically recognizing both the object area and the divided sections in data learning.

The Mask R-CNN technique improves accuracy by replacing the region-of-interest (RoI) pooling of the Faster R-CNN technique with RoIAlign, which samples features aligned to the RoI through bilinear interpolation, and greatly increases learning speed by executing classification and localization simultaneously [45]. The Mask Scoring R-CNN technique is similar to the Mask R-CNN technique in that it improves the accuracy of the AI model by enhancing the segmentation resolution from box level to pixel level, but it differs in that it improves segmentation accuracy by learning the quality of the predicted mask [46].

The path-aggregation-network-for-instance-segmentation (PA-Net) technique uses bottom-up path augmentation and adaptive feature pooling for precise localization. Bottom-up path augmentation minimizes information omission by reducing the information delivery process from a low-level feature, closely resembling the original feature, to a high-level feature. Hereafter, the RoI created in low- and high-level features are combined in one vector through adaptive feature pooling, which finally allows it to predict the class and box. That is to say, the localization accuracy is enhanced by mutually supplementing the pros and cons of low- and high-level features [47]. The RetinaMask technique predicts the object mask with single-shot detection (SSD), an object detection method, which stably modifies the loss function, learns complicated data, and enhances the accuracy in a two-stage method [48]. Moreover, instance segmentation research is performed by focusing on object detection and location recognition, and the development model can be used instantly in the field. Taking into account the previous research, it is favorable to use AI models with instance segmentation, which can verify innumerable atypical objects through masking, in the construction industry.

3. Comparison of mAP According to Data Pre-Processing Proficiency

The research method was ordered as follows: model selection, data pre-processing, data labeling, data quality verification, and learning-model quality verification [49]. Following this sequence, we composed learning datasets that differed by labeler, applied transfer learning to YOLACT with each dataset, and compared the resulting mAP values. Each step is explained below.

3.1. AI Model Selection

The construction waste studied in this paper was dirty in the original images. This was because many of the materials, such as concrete, timber, and plastic, had lost their original shape and were mixed in a broken state; in many cases, they could not be differentiated even by a human. Therefore, the objective of this research was to assist in accurately and efficiently differentiating construction waste using AI. Generally, there are four methods for image recognition and object verification in AI: classification, localization, object detection, and instance segmentation. The factors considered when choosing an image recognition method for the construction waste recognition model are as follows.

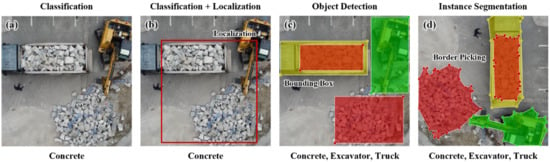

Classification is a method to simply classify objects in an image (Figure 1a). An additional coding process is required in order to check the location of the object found in classification. Therefore, even if a model is trained with well-formed learning data, it is difficult to instantly check where an error occurred if there is a failure. Although a technique to detect object type and location information by introducing classification and localization methods was attempted (Figure 1b), it could only identify the rough location, and many times it could not specify the location if many objects were mixed. Furthermore, since classification is designed to categorize one object at a time, it has to go through many filtering steps to identify many objects, which leads to increasing computation; thus, it would be difficult to apply in fields that require instant responses, such as waste categorization. For the above reasons, on-site classification is considered difficult when developing a construction waste model.

Figure 1.

Image recognition methods: (a) classification; (b) classification + localization; (c) object detection; (d) instance segmentation.

Object detection is a method to detect several objects in an image and show the object’s location with a bounding box (Figure 1c). It is possible to advance this method by increasing the intersection-over-union (IoU), which can generate more object proposals and distinguish object rotation, within-class diversity, and between-class similarity [50,51]. A function to differentiate several objects simultaneously was added to this method, making it suitable to classify waste; however, since it shows the location in a box shape, a human may find it difficult to assess if several objects are complexly mixed. In addition, the method aims to identify fixed set objects around human beings, making it inadequate to recognize atypical construction waste. Lastly, instance segmentation identifies several objects detected through object detection in the pixel unit according to the boundaries of each shape and indicates this by masking (Figure 1d). The masking effect makes this suitable for this study since construction waste that is tangled and overlapped can be identified, and the user can identify the object location and type intuitively.

We designed a model to classify construction waste by restructuring the YOLACT model, which has the fastest calculation among all instance segmentation models, using transfer learning. Previous instance segmentation models operate in two stages: they first localize objects in an image with bounding boxes and then output a predicted mask for each class within the localized regions. YOLACT, in contrast, skips this explicit localization step and executes the following two processes in parallel, which profoundly increases the calculation speed and reduces computation [17]: generating a dictionary of prototype masks, which forms the set of candidate regions to be closely examined in the image, and predicting a vector of mask coefficients for each instance. The prototype masks are then linearly combined using the coefficients to generate an optimal mask per instance.
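The linear combination of prototype masks and coefficients described above can be illustrated with a minimal sketch. It assumes the commonly described YOLACT assembly step (a weighted sum of prototypes followed by a sigmoid and a threshold); the function name `assemble_mask`, the tiny 2 × 3 prototypes, and the coefficient values are hypothetical and chosen only for illustration. The cropping of each mask to its predicted box is omitted.

```python
import math

def assemble_mask(prototypes, coefficients, threshold=0.5):
    """Combine k prototype masks (each H x W) with k coefficients into one binary mask."""
    height = len(prototypes[0])
    width = len(prototypes[0][0])
    mask = []
    for y in range(height):
        row = []
        for x in range(width):
            # Linear combination of the prototype values at this pixel.
            logit = sum(c * p[y][x] for c, p in zip(coefficients, prototypes))
            # The sigmoid maps the logit to (0, 1); thresholding gives a binary mask.
            prob = 1.0 / (1.0 + math.exp(-logit))
            row.append(1 if prob > threshold else 0)
        mask.append(row)
    return mask

# Two hypothetical prototypes: one responds to the left columns, one to the right column.
prototypes = [
    [[4.0, 4.0, -4.0], [4.0, 4.0, -4.0]],
    [[-4.0, -4.0, 4.0], [-4.0, -4.0, 4.0]],
]

# Each instance has its own coefficient vector; the prototypes are shared.
left_instance = assemble_mask(prototypes, [1.0, -1.0])
right_instance = assemble_mask(prototypes, [-1.0, 1.0])
```

Because the prototypes are shared by all instances and only a short coefficient vector is predicted per instance, the per-instance cost is a small weighted sum rather than a separate mask-prediction pass.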

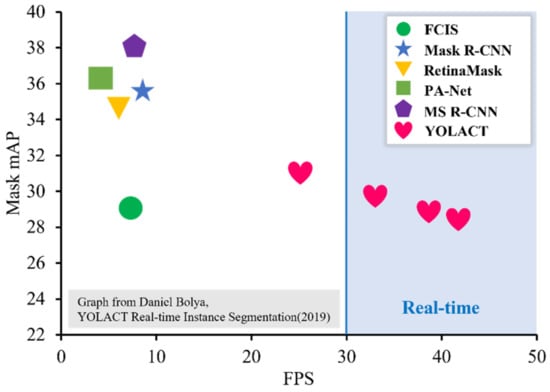

As indicated in Figure 2, a comparison of the two-stage instance segmentation models and YOLACT shows that the processing speed of YOLACT, which predicts masks in parallel as a one-stage instance segmentation model, was much faster. Moreover, with the YOLACT model, the masks were predicted in more detail than with the other instance segmentation models [17]. Thus, YOLACT was considered suitable for real-time operation with precise object prediction and was chosen as the development model in this study.

Figure 2.

Speed–performance trade-off for instance segmentation methods using the MS COCO dataset: Mask mAP means the mAP for the predicted mask, and frames per second (FPS) means the calculation speed.

The dataset used in this research was made up of 5 out of the 18 types of construction waste, which were board, brick, concrete, mixed waste, and lumber. A total of 599 images were used for learning. Data were photographed on site, collected on the web, modified to 512 × 512 pixels, pre-processed to within 100 KB each, and those inadequate for learning were removed. In order to clearly divide the boundaries of the construction waste in an image, shapes were extracted as polygons.

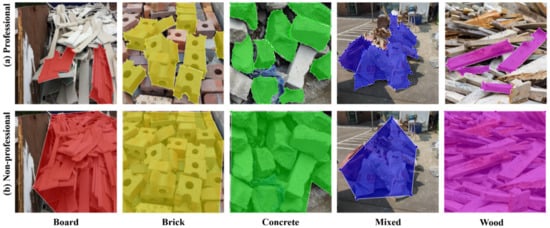

3.2. Criteria for Classification as Professional and Non-Professional

Labeling was performed, as shown in Figure 3, in order to test the hypothesis that the data labeling method differs depending on the labeler’s level of knowledge, and that this affects the AI model performance. LabelMe was used as the labeling tool [35]. Figure 3a is the result of labeling by a graduate student majoring in construction AI, and the labeling in Figure 3b was performed by an undergraduate freshman majoring in construction. Although the Figure 3b labeler was a construction major, the person had yet to acquire basic knowledge and was thus considered to be a non-professional with the same level of knowledge as an ordinary person. Both students identified the waste types well, but the non-professional did not label and categorize each object individually, and thus unrelated objects were labeled together. The professional, on the other hand, precisely and accurately labeled and categorized each object.

Figure 3.

Labeling by users: (a) labeling by professional for five types of construction waste; (b) labeling by non-professional for five types of construction waste.

3.3. Learning Method

The AI research was conducted in Python using Google Colaboratory, which provides a development environment similar to Jupyter Notebook. Python is frequently used for AI development since it has many commonly utilized machine learning libraries. Google Colaboratory is equipped with basic scientific libraries and is convenient since the code can be written and run online. In this development environment, YOLACT was reorganized to be suitable for a construction waste recognition model. Two datasets, one labeled by a professional and the other by a non-professional, were utilized as variables. The batch size was 16 in each case, and a total of 10,000 iterations were carried out. The GPU used for the computation was an Nvidia Tesla P100 provided by Google as standard; approximately 18 h was required for one model to perform transfer learning.
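As a rough check on the training length, the iteration count can be converted into epochs. The sketch below assumes that every iteration processes one full batch of 16 images and that the same batch size was kept for the augmented run described in the Discussion (the batch size of that run is not stated, so this is an assumption); the helper name `epochs_from_iterations` is hypothetical.

```python
def epochs_from_iterations(iterations, batch_size, num_images):
    """Approximate number of passes over the dataset made during training."""
    return iterations * batch_size / num_images

# Baseline run: 599 images, batch size 16, 10,000 iterations.
baseline_epochs = epochs_from_iterations(10_000, 16, 599)

# Augmented run: 599 original + 1198 additional images, 30,000 iterations
# (batch size of 16 assumed to be unchanged).
augmented_epochs = epochs_from_iterations(30_000, 16, 599 + 1198)

print(f"baseline:  {baseline_epochs:.1f} epochs")
print(f"augmented: {augmented_epochs:.1f} epochs")
```

Under these assumptions, both runs correspond to roughly 267 epochs, which is consistent with tripling the iterations when the dataset is tripled.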

3.4. Learning Results Analysis

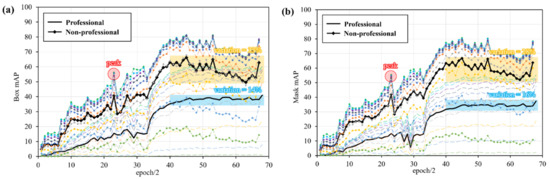

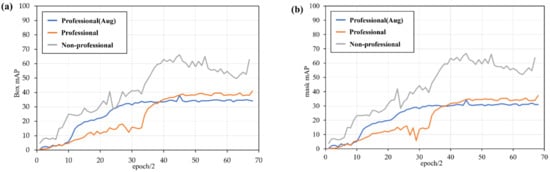

Figure 4 shows the learning results, expressed as mAP, for the construction waste images labeled according to each labeler’s level of knowledge. For graph visualization, the mAP was plotted every two epochs, where an epoch is one pass through the entire training data. The graph shows the mAP calculated over the 10,000 iterations and its estimated tendency as an average value. Contrary to the initial hypothesis that the model trained with the dataset labeled by the professional would perform better, the non-professional’s learning model was superior. As shown in Table 1, the mAP was 21.75 higher for the box and 26.47 higher for the mask on average. There are two hypotheses for this result. Firstly, it was surmised that the non-professional’s labeling method was similar to that of the COCO dataset, which is the YOLACT model’s learning dataset. Secondly, non-professionals tended to annotate a much larger area in images, as shown in Figure 3, and due to this, the parts that overlapped with the predicted mask increased, which led to an overall surge in the mAP. Section 4 further explores this. Moreover, the non-professional’s mAP, which had been consistently increasing, reached a peak at 20~30 epoch/2 before falling again, and this tendency was only observed in the non-professional’s learning model. This may reflect a general problem with gradient descent in the learning process, in which the mAP rises along with the trend without influencing the model performance. Furthermore, the mAP of the model trained on the non-professional’s labels exhibited much larger variation and was unstable after 40 epoch/2, as compared with that of the professional. The unstable mAP tendency may have been due to annotation errors resulting from the non-professional’s lack of knowledge, but we could not confirm a cause.
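The second hypothesis can be illustrated with a toy intersection-over-union (IoU) calculation, since a predicted mask counts toward mAP only when its IoU with the labeled mask reaches a threshold. The 4 × 4 masks, the 0.5 threshold, and the helper names below are hypothetical and are not taken from the study's data; the sketch only shows how a coarse label can overlap more with a coarse prediction than a tight label does.

```python
def rect_mask(height, width, rows, cols):
    """Binary mask with ones inside the given row and column ranges."""
    return [[1 if (r in rows and c in cols) else 0 for c in range(width)]
            for r in range(height)]

def mask_iou(mask_a, mask_b):
    """Intersection-over-union of two equal-sized binary masks."""
    intersection = 0
    union = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            intersection += 1 if (a and b) else 0
            union += 1 if (a or b) else 0
    return intersection / union if union else 0.0

def is_true_positive(pred, truth, iou_threshold=0.5):
    """A prediction is counted as correct when its IoU reaches the threshold."""
    return mask_iou(pred, truth) >= iou_threshold

predicted = rect_mask(4, 4, range(0, 3), range(0, 3))     # coarse 3 x 3 prediction
tight_label = rect_mask(4, 4, range(0, 2), range(0, 2))   # tight 2 x 2 annotation
coarse_label = rect_mask(4, 4, range(0, 3), range(0, 4))  # large 3 x 4 annotation

iou_tight = mask_iou(predicted, tight_label)    # 4 shared pixels / 9 in the union
iou_coarse = mask_iou(predicted, coarse_label)  # 9 shared pixels / 12 in the union
```

In this toy case the same prediction is rejected against the tight label and accepted against the coarse one, which is the kind of effect the hypothesis describes; it does not by itself show that this happened in the experiment.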

Figure 4.

mAP by users: (a) mAP of box; (b) mAP of mask.

Table 1.

mAP by user when the actual value and the predicted value match by the given percentage.

4. Discussion

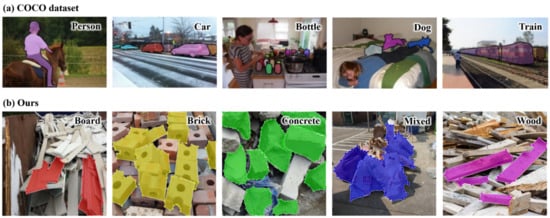

In Section 3.4, the non-professional learning model’s superior performance was hypothesized to be due to the similarity in labeling methods between the MS COCO dataset, on which YOLACT was previously trained, and the construction waste recognition model’s dataset. Therefore, the two datasets’ labeling methods were compared and analyzed to verify this [52].

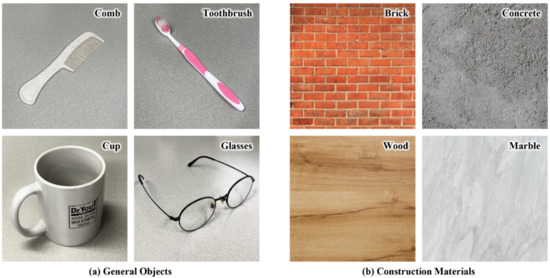

As shown in Figure 5, both the MS COCO and construction waste datasets label each object to be differentiated in an image with a single class; objects are not labeled as a mixture of several object classes (categories). The number of classes per label was therefore the same in both datasets, but there were differences in the type of objects to be recognized in the image. Table 2 lists the class categories of the MS COCO dataset. The MS COCO dataset contains perfectly regular objects (Table 2a), which have a set shape, as well as semi-regular objects (Table 2b), whose shape can vary but follows a general form. On the other hand, the source of construction waste is construction material (Figure 6b). Construction waste does not have a set shape; it may be rectangular or polygonal and is differentiated by texture and color. Thus, it has different object characteristics from the regularly shaped objects of the MS COCO dataset. Consequently, the professional’s learning model exhibited lower performance than that of the non-professional, as two different kinds of objects were annotated using the same labeling method. The construction waste recognition model has to apply a labeling method different from the one previously used, by putting weight on texture and color. Furthermore, looking at the comparison of the total image quantities of the two learning datasets in Table 3, the dog and train categories in the MS COCO dataset achieved a comparatively high accuracy despite learning with a similar quantity of data to the construction waste dataset. Hence, one can see that creating a learning dataset in accordance with the AI model’s development purpose is more important for enhancing AI model performance than focusing on the quantity of learning data.

Figure 5.

Comparison of labeling for each dataset: (a) the MS COCO dataset; (b) the construction waste dataset.

Table 2.

Class labels in the MS COCO dataset.

Figure 6.

General objects have typical shapes, while construction materials are atypical: (a) general objects; (b) construction materials.

Table 3.

Quantity of training images for various categories from the MS COCO dataset and the construction waste dataset: (a) the MS COCO dataset; (b) the construction waste dataset.

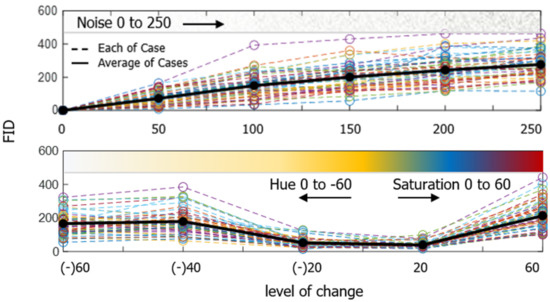

We increased the quantity of learning data in two ways, by adding noise and by changing the hue, based on the following considerations: firstly, it was thought that surface texture would differ depending on noise; secondly, it was considered that the color would change depending on the sunlight outdoors or the lighting indoors, which would lead to different waste categorizations. The level of image adjustment was compared numerically using the Fréchet inception distance (FID) between the original and generated data after deforming the images in steps, as shown in Figure 7 [37]. In order to assess the optimal level, 50 images of the wood class were deformed at each step and compared; as a result, the optimal level was under 100 for Gaussian noise and under −20 for hue. On the basis of these results, augmentation was conducted, and a total of 1198 additional images were acquired. After augmentation, the dataset was three times the size of the existing data, so the number of iterations was also tripled for transfer learning (30,000 iterations), allowing the model to learn for the same number of epochs as in the previous cases. Figure 8 shows the results of additional transfer learning with the same model on the dataset augmented in color and texture, which considered the characteristics of construction waste and increased the data quantity, in order to verify the argument stated above.
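The two augmentations can be sketched in plain Python as follows. This is only an illustration under stated assumptions: the paper does not specify the units of its noise and hue levels, so `sigma` (standard deviation in 0 to 255 intensity units) and `degrees` (hue rotation angle) are hypothetical parameters, as are the function names and the three sample pixels. One noisy copy and one recolored copy per original image would give the 1198 additional images (2 × 599) mentioned above.

```python
import colorsys
import random

def add_gaussian_noise(pixels, sigma, seed=0):
    """Add zero-mean Gaussian noise to every channel of a list of (R, G, B) pixels."""
    rng = random.Random(seed)
    noisy = []
    for pixel in pixels:
        noisy.append(tuple(
            # Clip to the valid 0..255 range after adding noise.
            min(255, max(0, round(channel + rng.gauss(0.0, sigma))))
            for channel in pixel
        ))
    return noisy

def shift_hue(pixels, degrees):
    """Rotate the hue of every (R, G, B) pixel by the given angle in degrees."""
    shifted = []
    for r, g, b in pixels:
        h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
        h = (h + degrees / 360.0) % 1.0  # hue is cyclic, so wrap around
        nr, ng, nb = colorsys.hsv_to_rgb(h, s, v)
        shifted.append((round(nr * 255), round(ng * 255), round(nb * 255)))
    return shifted

# A hypothetical three-pixel "image": brownish, gray, and bluish pixels.
original = [(200, 120, 60), (90, 90, 90), (30, 160, 220)]
noisy = add_gaussian_noise(original, sigma=10.0)   # texture variation
recolored = shift_hue(original, degrees=-20.0)     # lighting/color variation

# Original plus two augmented copies: three times the original data.
augmented_dataset = [original, noisy, recolored]
```

A gray pixel has no saturation, so the hue shift leaves it unchanged, while saturated pixels change color at constant brightness.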

Figure 7.

Result of calculating the Fréchet inception distance (FID) when augmenting 50 images per category at each step by adding noise and changing color.

Figure 8.

mAP of the AI model trained with the augmented dataset: (a) mAP of box; (b) mAP of mask.

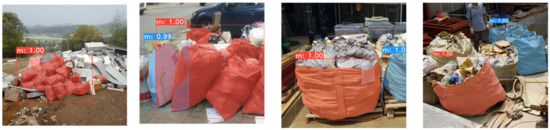

As shown in Figure 8, the final mAP of the model trained with the augmented dataset was poorer than that of the non-professional. However, a more meaningful accuracy was acquired since it converged in fewer epochs than with the unreinforced dataset. It would be hard to commercialize a model with an mAP at the 30~40 level, as was the case in this research. The research team surmised that it would be very difficult to analyze hundreds of evaluation images and perceive all the boundaries of atypical objects. Although the model trained with the dataset reinforced by the professional categorized all classes existing in the image, as shown in Figure 9, it did not perfectly mask the boundaries. Nonetheless, the masking level did not affect counting or finding the location of a class. Therefore, although the mAP of the model that could not find all class boundaries was low, it was considered that the model could potentially be commercialized. However, it is necessary to find a way to enhance the accuracy using an explainable AI model in future research.

Figure 9.

Class recognition result of the AI model trained with the augmented dataset.

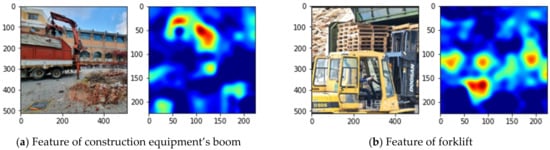

'Grad-CAM' is a technique that has recently become popular in explainable AI research. It shows the region of an image that the AI attends to as a heat map, enabling a human to infer the reason for a prediction [28]. Figure 10 shows heat maps for a construction equipment recognition model that is under development by the research team and was obtained by transfer learning based on ResNet50. The heat maps make it possible to check intuitively whether the model identifies regularly shaped objects, such as construction equipment, by looking at their distinctive parts. A similar verification method may also help to enhance the reliability of models for atypical objects such as construction waste.
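The core computation of Grad-CAM can be sketched as follows. Each feature map of a convolutional layer is weighted by the spatial mean of the gradient of the class score with respect to that map, the weighted maps are summed, and negative values are removed. The sketch assumes that the activations and gradients have already been obtained from the network (for example, with framework hooks, which are omitted), and the function name is hypothetical; it is not the code used to produce Figure 10.

```python
import numpy as np


def grad_cam(activations, gradients):
    """Grad-CAM heat map from feature maps and their gradients.

    activations, gradients: arrays of shape (K, H, W) for K feature maps.
    Returns an (H, W) map scaled to [0, 1].
    """
    weights = gradients.mean(axis=(1, 2))              # one weight per map
    cam = np.tensordot(weights, activations, axes=1)   # weighted sum, (H, W)
    cam = np.maximum(cam, 0.0)                         # keep positive evidence
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Toy input: two 4 x 4 feature maps, each responding at a single location.
acts = np.zeros((2, 4, 4))
acts[0, 1, 1] = 1.0
acts[1, 2, 2] = 1.0
grads = np.stack([np.ones((4, 4)), -np.ones((4, 4))])  # map 0 supports the class
heat = grad_cam(acts, grads)
```

In practice the resulting map is upsampled to the input image size and overlaid on the image, which gives the kind of heat map described above.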

Figure 10.

An example of Grad-CAM, in which the feature range used by an AI model that recognizes construction equipment can be checked visually: (a) Grad-CAM result for the boom of a piece of construction equipment, showing that the model identified the boom; (b) Grad-CAM result for a forklift, showing that the model identified the features of the forklift.

5. Conclusions

The aim of this study was to increase the recycling rate through construction waste sorting by developing a construction waste recognition model. Because previous AI-related research indicated that poor data were central to the failure of learning model development, this study was conducted under the hypothesis that the accuracy of the AI model would differ depending on whether the data were refined by a professional or a non-professional, each with a different level of knowledge concerning construction. Contrary to the hypothesis, the mAP of the model trained on the dataset labeled by the non-professional was superior to that of the professional. We believe this was because the non-professional's labeling method was similar to that of the MS COCO datasets used for YOLACT, the chosen learning model, even though the objects in MS COCO have different traits from the construction waste used in this research. However, after a particular training section, the professional's mAP fluctuation rate was lower and more stable than that of the non-professional; thus, data quality management by a professional is considered necessary. Construction waste does not have a fixed shape and is characterized by varying textures and colors. Thus, by augmenting the training data with added noise (texture) and color changes to reflect these traits, and increasing the dataset size threefold, we were able to reach a meaningful accuracy in 25 epochs, two fewer than with the unreinforced dataset. The model assessment showed a low mAP because not all boundaries were perfectly masked, even though the model categorized all classes present in the image. Therefore, in order to develop an AI model that recognizes atypical construction waste, it is necessary to develop an explainable AI model that restructures the AI network through the model's feature map or creates a dataset that places importance on the color and texture of the waste.

Author Contributions

Conceptualization, S.N. and S.H.; methodology, H.S., W.C., and S.H.; software, H.S. and W.C.; validation, S.N., C.K., and S.H.; formal analysis S.N., C.K., and S.H.; investigation, H.S.; resources, S.N. and S.H.; data curation, H.S., W.C., and S.H.; writing—original draft preparation, H.S.; writing—review and editing, H.S., S.N., and S.H.; visualization, H.S.; supervision, C.K.; project administration, S.N.; funding acquisition, S.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government Ministry of Education (No. NRF-2018R1A6A1A07025819 and NRF-2020R1C1C1005406).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used to support the results of this study are included within the article. In addition, some of the data in this research are supported by the references mentioned in the manuscript. The data of this research are available from the corresponding author upon request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Kim, H.K. Apartment Prices in the Metropolitan Area Rise at an All-Time High for 8 Weeks. Available online: https://www.donga.com/news/Economy/article/all/20210909/109181926/1 (accessed on 10 December 2021).

- Korea Development Institute. KDI, Results of ‘Real Estate Forum’. Available online: https://www.kdi.re.kr/news/coverage_view.jsp?idx=11003 (accessed on 13 December 2021).

- Seoul Metropolitan Government. Seoul City’s ‘Relaxation of Redevelopment Regulations’ Will Be Applied in Earnes. First Nomination Contest. Available online: https://mediahub.seoul.go.kr/archives/2002616 (accessed on 5 January 2022).

- Korea Resource Recirculation Information System. National Waste Generation and Treatment Status. Available online: https://www.recycling-info.or.kr/rrs/stat/envStatDetail.do?menuNo=M13020201&pageIndex=1&bbsId=BBSMSTR_000000000002&s_nttSj=KEC005&nttId=1090&searchBgnDe=&searchEndDe= (accessed on 31 December 2020).

- Ko, D.-H. Gov’t seeks land to operate new waste treatment facilities. In The Korea Times; The Korea Times: Seoul, Korea, 2021.

- Kim, S.K. A Study on Separation Analysis of Woods from Construction Waste by the Double-Cyclone Windy Separator. In Proceedings of the Korean Society for Renewable Energy Conference, Jeju, Korea, 19–21 November 2018; p. 267.

- Davis, P.; Aziz, F.; Newaz, M.T.; Sher, W.; Simon, L. The classification of construction waste material using a deep convolutional neural network. Autom. Constr. 2021, 122, 103481.

- Na, S.; Heo, S.-J.; Han, S. Construction Waste Reduction through Application of Different Structural Systems for the Slab in a Commercial Building: A South Korean Case. Appl. Sci. 2021, 11, 5870.

- Ali, T.H.; Akhund, M.A.; Memon, N.A.; Memon, A.H.; Imad, H.U.; Khahro, S.H. Application of Artifical Intelligence in Construction Waste Management. In Proceedings of the 2019 8th International Conference on Industrial Technology and Management (ICITM), Cambridge, UK, 2–4 March 2019; IEEE: Piscataway, NJ, USA, 2019.

- Lu, W.; Chen, J.; Xue, F. Using computer vision to recognize composition of construction waste mixtures: A semantic segmentation approach. Resour. Conserv. Recycl. 2021, 178, 106022.

- Raptopoulos, F.; Koskinopoulou, M.; Maniadakis, M. Robotic pick-and-toss facilitates urban waste sorting. In Proceedings of the 2020 IEEE 16th International Conference on Automation Science and Engineering (CASE), Hong Kong, China, 20–21 August 2020; IEEE: Piscataway, NJ, USA, 2020.

- Felderer, M.; Ramler, R. Quality Assurance for AI-Based Systems: Overview and Challenges (Introduction to Interactive Session). In Proceedings of the International Conference on Software Quality, Vienna, Austria, 19–21 January 2021; Springer: Cham, Switzerland, 2021.

- Adedeji, O.; Wang, Z. Intelligent Waste Classification System Using Deep Learning Convolutional Neural Network. Procedia Manuf. 2019, 35, 607–612.

- Ahmad, K.; Khan, K.; Al-Fuqaha, A. Intelligent Fusion of Deep Features for Improved Waste Classification. IEEE Access 2020, 8, 96495–96504.

- Aishwarya, A.; Wadhwa, P.; Owais, O.; Vashisht, V. A Waste Management Technique to detect and separate Non-Biodegradable Waste using Machine Learning and YOLO algorithm. In Proceedings of the 2021 11th International Conference on Cloud Computing, Data Science & Engineering (Confluence), Noida, India, 28–29 January 2021; IEEE: Piscataway, NJ, USA, 2021.

- Aral, R.A.; Keskin, S.R.; Kaya, M.; Haciomeroglu, M. Classification of trashnet dataset based on deep learning models. In Proceedings of the 2018 IEEE International Conference on Big Data (Big Data), Seattle, WA, USA, 10–13 December 2018; IEEE: Piscataway, NJ, USA, 2018.

- Bolya, D.; Zhou, C.; Xiao, F.; Lee, Y.J. Yolact: Real-time instance segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019.

- Lecun, Y.; Haffner, P.; Bottou, L.; Bengio, Y. Object Recognition with Gradient-Based Learning. In Shape, Contour and Grouping in Computer Vision; Springer: Berlin/Heidelberg, Germany, 1999; Volume 1681, pp. 319–345.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Spencer, B.F., Jr.; Hoskere, V.; Narazaki, Y. Advances in computer vision-based civil infrastructure inspection and monitoring. Engineering 2019, 5, 199–222.

- Shin, Y.; Heo, S.; Han, S.; Kim, J.; Na, S. An Image-Based Steel Rebar Size Estimation and Counting Method Using a Convolutional Neural Network Combined with Homography. Buildings 2021, 11, 463.

- Tejesh, B.S.S.; Neeraja, S. Warehouse inventory management system using IoT and open source framework. Alex. Eng. J. 2018, 57, 3817–3823.

- Na, S.; Paik, I. Application of Thermal Image Data to Detect Rebar Corrosion in Concrete Structures. Appl. Sci. 2019, 9, 4700.

- Bulut, A.; Singh, A.K.; Shin, P.; Fountain, T.; Jasso, H.; Yan, L.; Elgamal, A. Real-time nondestructive structural health monitoring using support vector machines and wavelets. In Advanced Sensor Technologies for Nondestructive Evaluation and Structural Health Monitoring; International Society for Optics and Photonics: Bellingham, WA, USA, 2005.

- Koch, C.; Georgieva, K.; Kasireddy, V.; Akinci, B.; Fieguth, P. A review on computer vision based defect detection and condition assessment of concrete and asphalt civil infrastructure. Adv. Eng. Inform. 2015, 29, 196–210.

- Son, H.; Seong, H.; Choi, H.; Kim, C. Real-Time Vision-Based Warning System for Prevention of Collisions between Workers and Heavy Equipment. J. Comput. Civ. Eng. 2019, 33, 04019029.

- Jeong, E.; Seo, J.; Wacker, J. Literature Review and Technical Survey on Bridge Inspection Using Unmanned Aerial Vehicles. J. Perform. Constr. Facil. 2020, 34, 04020113.

- Chen, G.; Liang, Q.; Zhong, W.; Gao, X.; Cui, F. Homography-based measurement of bridge vibration using UAV and DIC method. Measurement 2021, 170, 108683.

- Reagan, D.; Sabato, A.; Niezrecki, C. Feasibility of using digital image correlation for unmanned aerial vehicle structural health monitoring of bridges. Struct. Health Monit. 2018, 17, 1056–1072.

- Weng, Y.; Shan, J.; Lu, Z.; Lu, X.; Spencer, B.F. Homography-based structural displacement measurement for large structures using unmanned aerial vehicles. Comput.-Aided Civ. Infrastruct. Eng. 2021, 36, 1114–1128.

- Xu, Y.; Zhou, Y.; Sekula, P.; Ding, L. Machine learning in construction: From shallow to deep learning. Dev. Built Environ. 2021, 6, 100045.

- Yang, Q.; Shi, W.; Chen, J.; Lin, W. Deep convolution neural network-based transfer learning method for civil infrastructure crack detection. Autom. Constr. 2020, 116, 103199.

- Akinosho, T.D.; Oyedele, L.O.; Bilal, M.; Ajayi, A.O.; Delgado, M.D.; Akinade, O.O.; Ahmed, A.A. Deep learning in the construction industry: A review of present status and future innovations. J. Build. Eng. 2020, 32, 101827.

- Jahanshahi, M.R.; Masri, S.F.; Padgett, C.W.; Sukhatme, G.S. An innovative methodology for detection and quantification of cracks through incorporation of depth perception. Mach. Vis. Appl. 2013, 24, 227–241.

- Xu, Y.; Li, Y.; Liu, M.; Wang, Y.; Lai, M.; Chang, E.I. Gland Instance Segmentation by Deep Multichannel Side Supervision. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Athens, Greece, 17–21 October 2016.

- Yang, L.; Zhang, Y.; Chen, J.; Zhang, S.; Chen, D.Z. Suggestive Annotation: A Deep Active Learning Framework for Biomedical Image Segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Quebec City, QC, Canada, 10–14 September 2017.

- Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; van der Laak, J.A.W.M.; van Ginneken, B.; Sánchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88.

- Wynants, L.; Van Calster, B.; Collins, G.S.; Riley, R.D.; Heinze, G.; Schuit, E.; Bonten, M.M.J.; Dahly, D.L.; Damen, J.A.; Debray, T.P.A.; et al. Prediction models for diagnosis and prognosis of Covid-19: Systematic review and critical appraisal. BMJ 2020, 369, m1328.

- Roberts, M.; Covnet, A.; Driggs, D.; Thorpe, M.; Gilbey, J.; Yeung, M.; Ursprung, S.; Aviles-Rivero, A.I.; Etmann, C.; McCague, C.; et al. Common pitfalls and recommendations for using machine learning to detect and prognosticate for COVID-19 using chest radiographs and CT scans. Nat. Mach. Intell. 2021, 3, 199–217.

- Lynn, N.C.; Kyu, Z.M. Segmentation and Classification of Skin Cancer Melanoma from Skin Lesion Images. In Proceedings of the 2017 18th International Conference on Parallel and Distributed Computing, Applications and Technologies (PDCAT), Taipei, Taiwan, 18–20 December 2017; IEEE: Piscataway, NJ, USA, 2017.

- Zeebaree, D.Q.; Haron, H.; Abdulazeez, A.M.; Zebari, D.A. Machine Learning and Region Growing for Breast Cancer Segmentation. In Proceedings of the 2019 International Conference on Advanced Science and Engineering (ICOASE), Zakho-Duhok, Iraq, 2–4 April 2019; IEEE: Piscataway, NJ, USA, 2019.

- Brünger, J.; Gentz, M.; Traulsen, I.; Koch, R. Panoptic instance segmentation on pigs. arXiv 2020, arXiv:2005.10499.

- Li, Y.; Qi, H.; Dai, J.; Ji, X.; Wei, Y. Fully Convolutional Instance-Aware Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Zhao, Z.Q.; Zheng, P.; Xu, S.T.; Wu, X. Object detection with deep learning: A review. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3212–3232.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Honolulu, HI, USA, 21–26 July 2017.

- Zhaojin, H. Mask Scoring R-CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

- Fu, C.-Y.; Shvets, M.; Berg, A.C. RetinaMask: Learning to predict masks improves state-of-the-art single-shot detection for free. arXiv 2019, arXiv:1901.03353.

- Heo, S.; Han, S.; Shin, Y.; Na, S. Challenges of Data Refining Process during the Artificial Intelligence Development Projects in the Architecture, Engineering and Construction Industry. Appl. Sci. 2021, 11, 10919.

- Cheng, G.; Yang, J.; Gao, D.; Guo, L.; Han, J. High-Quality Proposals for Weakly Supervised Object Detection. IEEE Trans. Image Process. 2020, 29, 5794–5804.

- Cheng, G.; Han, J.; Zhou, P.; Xu, D. Learning Rotation-Invariant and Fisher Discriminative Convolutional Neural Networks for Object Detection. IEEE Trans. Image Process. 2018, 28, 265–278.

- Lin, T.Y.; Maire, M.; Belongie, S.; Bourdev, L.; Girshick, R.; Hays, J.; Perona, P.; Zitnick, C.L.; Dollár, P. Microsoft Coco: Common Objects in Context. In European Conference on Computer Vision 2014; Springer: Cham, Switzerland, 2014.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).