Abstract

This research proposes a novel strategy for constructing a knowledge-based recommender system (RS) based on both structured data and unstructured text data. We present its application to improving the services of heavy equipment repair companies so that they can better adjust to their customers’ needs. The ultimate outcome of this work is a web-based interactive recommendation dashboard that visualizes options predicted to improve the customer loyalty metric known as the Net Promoter Score (NPS). We also present a number of techniques that improve the performance of action rule mining so that the system’s knowledge base can be conveniently updated on a periodic basis. We describe the preprocessing-based and distributed-processing-based methods and present the results of testing their performance within the RS framework. The proposed modifications to the actionable knowledge miner were implemented and compared with the original method in terms of mining results, mining times, and generated recommendations. Preprocessing-based methods decreased mining times by 10–20×, while the distributed mining implementation decreased mining times by 300–400×, with negligible knowledge loss. The article concludes with future directions for the scalability of the NPS recommender system and the remaining challenges in its big data processing.

1. Introduction

Nowadays, most companies collect feedback data from their customers. Improving the customer experience results in stronger customer loyalty, more referrals, and better overall business results [1]. To improve the customer experience, companies need to understand and act on what customers say in their feedback, which is usually challenging given the volume of data.

1.1. Net Promoter Score

One important business metric for measuring customer experience is the Net Promoter Score (NPS®, Net Promoter®, and Net Promoter® Score are registered trademarks of Satmetrix Systems, Inc., Redwood City, CA, USA, (Bain and Company and Fred Reichheld)), which shows how likely a customer is to recommend a company. Customers with a strong likelihood to recommend (scores of 9 or 10 on a 0–10 scale) are called promoters, while those who score 6 or less are called detractors. The remaining customers, who score 7 or 8, are called passives, since they express neither a strong likelihood to recommend nor dislike.
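The score bands above, combined with the NPS definition used later in this article (the percentage of promoters minus the percentage of detractors), can be written as a small function. This is a minimal illustrative sketch; the function names are hypothetical and it is not part of the described system.

```python
def promoter_status(score: int) -> str:
    """Map a 0-10 likelihood-to-recommend score to a Net Promoter status."""
    if not 0 <= score <= 10:
        raise ValueError(f"score must be between 0 and 10, got {score}")
    if score >= 9:
        return "Promoter"   # 9 or 10
    if score <= 6:
        return "Detractor"  # 0 to 6
    return "Passive"        # 7 or 8


def net_promoter_score(scores: list) -> float:
    """NPS = percentage of promoters minus percentage of detractors."""
    if not scores:
        raise ValueError("at least one score is required")
    statuses = [promoter_status(s) for s in scores]
    promoters = statuses.count("Promoter")
    detractors = statuses.count("Detractor")
    return 100.0 * (promoters - detractors) / len(statuses)


# Five hypothetical survey answers: 3 promoters, 1 passive, 1 detractor.
print(net_promoter_score([10, 10, 9, 7, 2]))  # 40.0
```

Passives count toward the total but toward neither percentage, so they dilute the score without moving it in either direction.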

1.2. Customer Relationship Management and Decision Support

The information systems that traditionally support customer relationship management are CRM systems. CRM is described as “managerial efforts to manage business interactions with customers by combining business processes and technologies that seek to understand a company’s customers” [2], i.e., structuring and managing the relationship with customers. CRM covers all the processes related to customer acquisition, customer cultivation, and customer retention. CRM systems are sometimes augmented with decision support systems (DSSs). A DSS is an interactive computer-based system designed to help in decision-making situations by utilizing data and models to solve unstructured problems [2,3].

1.3. Recommender Systems

Recommender systems (RSs) are considered a new generation of intelligent systems and are applied mostly in e-commerce settings, supporting customers in online purchases of commodity products such as books, movies, or CDs. The goal of a recommender system is to predict the preferences of an individual (user/customer) and provide suggestions for further resources or items that are likely to be of interest. Formally, an RS attempts to recommend the most suitable items (products or services) to particular users (individuals or businesses) by predicting a user’s interest in an item based on information about similar items, the users, and the interactions between items and users [4]. RSs are usually part of e-commerce sites and offer several important business benefits: increasing the number of items sold, selling more diverse items, increasing user satisfaction and loyalty, and helping to understand what the user wants [5,6,7,8]. Papers [8,9,10,11,12] provide comprehensive reviews of RSs. There is also application-specific research; for example, [11] describes explainable recommendations in the hotel and restaurant service domains. There are also various less traditional applications of RSs: works such as [13,14] describe applying data mining methods to build recommender systems in healthcare.

1.3.1. Recommender System for B2B

The idea of applying a recommender system to strategic business planning is quite novel and currently under-researched. Zhang and Wang proposed a definition of a recommender system for B2B e-commerce in [15]: “a software agent that can learn the interests, needs, and other important business characteristics of the business dealers and then make recommendations accordingly”. Such systems use product/service knowledge, either hard-coded knowledge provided by experts or knowledge learned from the behavior of consumers, to guide business dealers through the often overwhelming task of identifying actions that will maximize the companies’ business metrics. Applying RS technology to B2B can bring numerous advantages: it can streamline the business transaction process, improve customer relationship management and customer satisfaction, and help dealers understand each other better. B2B participants can receive various useful suggestions from the system that help them do business better.

1.3.2. NPS-Based Recommender System

The idea of an NPS-driven recommender system is to provide actionable recommendations that would be expected to improve the business goal of customer satisfaction, measured by the NPS score [16].

1.4. Distributed Data Mining

Data mining is the practice of analyzing datasets to extract interesting knowledge or patterns hidden in them. In the past decade, new algorithms have been proposed to find specific actions based on the discovered patterns, in the form of action rules. Action rules propose actionable knowledge that users can act on to their advantage. An action rule extracted from a decision system describes a possible transition of an object from one state to another with respect to a distinguished attribute called the decision attribute [17]. The authors of [17,18,19,20,21,22,23] proposed a variety of algorithms to extract action rules from a given dataset. However, due to the massive increase in the volume of data, these algorithms struggle to produce action rules within an acceptable timeframe. Therefore, there is a need for scalable, distributed methods that generate action rules from such datasets at a faster pace. In the era of big data and cloud computing, many frameworks, such as Hadoop MapReduce [24] and Spark RDD [25], have been proposed to efficiently distribute massive data over multiple nodes or machines and perform computations in parallel and in a fault-tolerant way. So far, there is limited research on applying distributed methods to build intelligent systems, but progress has been made in recent years on different applications [26].

2. Methods

We propose to apply action rule mining as the underlying algorithm for generating recommendations. Action rules have been applied in various areas [13,27,28], but not in the customer satisfaction area. Since a substantial amount of customer feedback has been collected over the years 2011–2016 (over 400,000 records), and there are limitations to manual data analysis, we propose a data-driven NPS-based recommender system [16,29]. The recommendation algorithm utilizes data mining techniques for actionable knowledge, as well as natural language understanding techniques on text data. The system recommends areas of service to be addressed by businesses to improve their customer loyalty metric (NPS), and it also quantifies the predicted improvement.

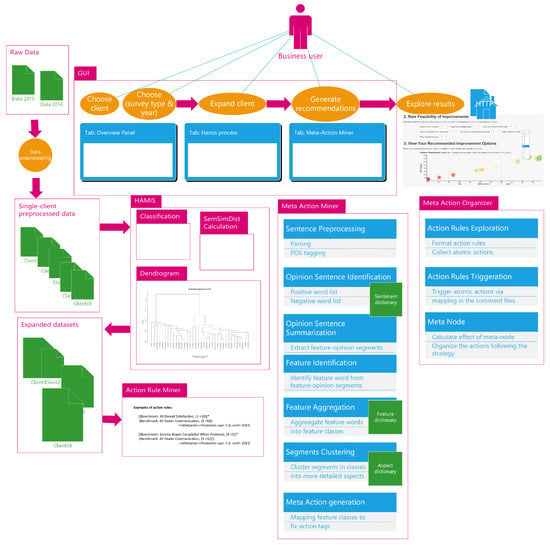

The high-level system architecture of the Customer Loyalty Improvement Recommender System (CLIRS) is presented in Figure 1.

Figure 1.

Modules and data flow within the data-driven recommender system for customer loyalty improvement. The presented diagram color-codes different modules within the system.

2.1. Scalability Issues

One important aspect of knowledge-based recommender systems is high-performance data preprocessing and a knowledge base that scales as new data become available. Knowledge updates and the generation of the corresponding recommendations should ideally happen in real time. However, updating the data-driven recommendation algorithm is a complex process, and the model needs to be rebuilt based on all data from the past 5 years. The system should scale to new companies beyond the currently supported 38. Additionally, in the future, it could be expanded to support recommendations generated at the branch/shop level beyond the currently supported company level. The system should also be extensible to mining knowledge on how to change “Passive to Promoter” and “Detractor to Passive”, beyond the currently supported “Detractor to Promoter”. Action rule mining algorithms run on large datasets are computationally intensive and therefore time-consuming. The action rule mining process is the critical part of the NPS system. There are many datasets to mine rules from, as the datasets are divided by company, survey type (i.e., service, parts, rentals), and chosen year, and each combination of these choices generates a different set of recommendations. From the business perspective, the update should complete within a day, while these algorithms may take even weeks when run on a single CPU. Therefore, an important aspect of this research is tackling the performance of the Action Rule Miner, with the goal of making the RS usable in a real business setting. We identified this aspect as the bottleneck in the scalability of the system. In this work, we propose, implement, and evaluate a series of methods that aim to reduce action rule mining times and therefore the time needed to update the proposed system.
Based on the evaluation experiments, we propose incorporating the chosen set of methods that reduce action rule mining times.

Business Requirements

From the business perspective, it is important to ensure the system meets the following requirements:

- It should be scalable to add more than the current number of 38 companies.

- Up to date—the system’s models should be updated regularly once new data become available, typically once every six months.

- Time-constrained updates: updating the models should take no more than a day (around half an hour per company).

- Sources: a user can choose the type of survey—whether service, parts, rentals, etc.—that would be used for the recommender system algorithm.

- Timeframe—a user should be able to choose the timeframe for the recommender algorithm’s input: for example, a yearly timeframe, bi-yearly, etc.
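The requirements above imply some simple arithmetic about the update workload. The sketch below assumes yearly timeframes for 2011–2016, the three survey types named in the text, 38 companies, and companies being updated one after another; the client labels and function names are hypothetical.

```python
from itertools import product

# Dimensions taken from the text; the client labels are hypothetical.
COMPANIES = [f"Client #{i}" for i in range(1, 39)]    # 38 companies
SURVEY_TYPES = ["service", "parts", "rentals"]
YEARS = list(range(2011, 2017))                       # 2011-2016


def dataset_keys():
    """One mining input per (company, survey type, year) choice."""
    return list(product(COMPANIES, SURVEY_TYPES, YEARS))


def fits_daily_budget(n_companies: int, hours_per_company: float,
                      budget_hours: float = 24.0) -> bool:
    """True if sequential per-company updates finish within the budget."""
    return n_companies * hours_per_company <= budget_hours


print(len(dataset_keys()))          # 684 yearly datasets
print(fits_daily_budget(38, 0.5))   # True: 19 hours
print(fits_daily_budget(49, 0.5))   # False: 24.5 hours
```

Under these assumptions, the half-hour-per-company target leaves room for at most 48 companies in a one-day sequential update; bi-yearly or other timeframes would add further datasets.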

The above choices determine the type of input to the recommender algorithm. Since many rules are extracted from each dataset, some customers are covered by more than one rule, which makes certain action rules “redundant”; in other words, there are different ways in which a detractor customer can be “converted”, as indicated by the matched action rules. On the other hand, some customers are not “covered”, or matched, by any of the extracted rules. To address this issue, we propose a measure that tracks the number of distinct customers covered by the rules. This count translates into the predicted change in NPS, which is calculated as the percentage of promoters minus the percentage of detractors.
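The difference between summing rule matches and counting distinct covered customers can be shown with a short sketch. The rule matches below are hypothetical, and the NPS helper assumes, as an optimistic upper bound, that every covered detractor converts to a promoter (ignoring rule confidence); each such conversion raises NPS by 200/N points for N surveyed customers.

```python
def covered_customers(rule_matches: dict) -> set:
    """Distinct customer ids matched by at least one rule."""
    covered = set()
    for customer_ids in rule_matches.values():
        covered |= customer_ids
    return covered


def nps_after_conversion(n_promoters: int, n_detractors: int, n_total: int,
                         converted: int) -> float:
    """NPS (% promoters - % detractors) if `converted` detractors become promoters."""
    if converted > n_detractors:
        raise ValueError("cannot convert more detractors than exist")
    promoters = n_promoters + converted
    detractors = n_detractors - converted
    return 100.0 * (promoters - detractors) / n_total


# Hypothetical rule matches: detractor ids covered by each action rule.
matches = {"r1": {1, 2, 3}, "r2": {3, 4}, "r3": {2, 5}}
print(sum(len(ids) for ids in matches.values()))  # 7 matches in total
print(len(covered_customers(matches)))            # but only 5 distinct customers
print(nps_after_conversion(50, 20, 100, 5))       # 40.0, up from 30.0
```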

Within this article, we propose a series of methods to speed up the mining process of action rules, which include the following:

- Data preprocessing measures, including handling NULL values and binning the Promoter Status attribute.

- Limiting the number of semantic neighbors in the hierarchical clustering procedure to limit the size of the datasets.

- Setting constraints on the patterns to be mined, by modifying the action rule mining algorithm.

- Implementing distributed action rule mining.

2.2. Dataset

Within our research, B2B customer feedback was collected through structured phone surveys conducted by The Daniel Group in Charlotte, NC. Thirty-eight companies from the heavy equipment repair industry were involved in the survey program. The dataset consists of attributes called “benchmarks”, which represent questions asked in the surveys, e.g., “How do you rate the overall quality of XYZ Company’s products?”. Each customer survey is characterized by service-related attributes and by information about the company and its end customer, and is labeled with the Net Promoter Status (Promoter/Passive/Detractor). The business goal is to find patterns and insights for improving customer loyalty, as measured by NPS. The collected dataset includes the following:

- Quantitative data (structured data)—numbers/scores for questions (benchmarks) that ask customers to “On a scale of 1 to 10, rate…”.

- Qualitative data (unstructured data)—text comments, where customers express their opinions, feelings, and thoughts in a free form.

The scores give insights into what customers are saying, while text comments give insights into why they assign these scores. Traditional customer relationship management software typically applies machine learning to numerical data, while customized text analytics solutions are under-researched.

2.3. Action Rules

The concept of an action rule was first proposed by Ras and Wieczorkowska in [17]. Data analysis using action rules is expected not only to bring a better understanding of the data but also to recommend specific actions that aid decision-making. By action, we understand changing certain values of attributes in a given information/decision system. Action rules are extracted from an information system, and a single action rule describes a possible transition of an object from one state to another with respect to the desired change in the so-called decision attribute [17]. We split attributes into stable attributes, flexible attributes, and one distinguished attribute called the decision attribute. A single action rule recommends minimal changes in the values of flexible attributes, given a fixed stable state, to achieve a change of the decision attribute from one state to another. The formal definition of an action rule is as follows:

Definition 1.

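An action rule is a term $r = [\omega \wedge (\alpha \rightarrow \beta)] \Rightarrow (\phi \rightarrow \psi)$, with $\omega$ denoting a conjunction of fixed stable attribute values, $(\alpha \rightarrow \beta)$ denoting the proposed changes in values of flexible attributes, and $(\phi \rightarrow \psi)$ denoting a change of the decision attribute, or an action effect.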

Application of Action Rules to Improve NPS

Within this research, we propose applying the action rule method to improve the Net Promoter Score metric. The mined actionable patterns should indicate which specific aspects of customer service or products should be addressed so that a predicted “Detractor” of a company changes into a predicted satisfied “Promoter” or, at the minimum, a neutral “Passive”. We define the PromoterStatus attribute as the decision attribute. This attribute can take three values: satisfied Promoter, neutral Passive, or unsatisfied Detractor. Setting $\phi$ to “Detractor” and $\psi$ to “Promoter”, the extracted action rules suggest changes in the flexible attributes that lead to a change in the promoter status, with a certain confidence and under certain defined stable conditions. The flexible attributes here are any benchmark areas that can be addressed, and the goal is higher overall customer satisfaction, as measured by NPS. Listing 1 presents a sample actionable pattern extracted from customer survey data:

| Listing 1. An example of an action rule applied to customer survey data. |

| ((Benchmark: Repair Completed When Promised, (3->8)) |

| AND (Benchmark: Dealer Communication, (1->10))) => |

| (Detractor->Promoter) sup=4.0, conf=95.0 |

There are two characteristics of each action rule: so-called support and confidence. Support, in application to customer survey data, indicates the number of customers that matched the rule’s antecedent. On the other hand, confidence would be interpreted as the probability of changing the customer’s status from Detractor to Promoter.
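The rule in Listing 1 and the support interpretation above can be mirrored by a small data structure. This is a hypothetical sketch: the class, field names, and the `PromoterStatus` record key are assumptions, Listing 1 shows no stable part so the stable conditions are empty, and support is counted exactly as interpreted in the text (customers matching the rule's antecedent). Confidence is not recomputed; it is carried as reported by the miner.

```python
from dataclasses import dataclass, field


@dataclass
class ActionRule:
    """Hypothetical in-memory form of an action rule such as Listing 1."""
    flexible: dict                  # benchmark -> (from_value, to_value)
    decision: tuple = ("Detractor", "Promoter")
    stable: dict = field(default_factory=dict)  # attribute -> fixed value (omega)
    confidence: float = 0.0         # as reported by the miner, in percent


def matches_antecedent(rule: ActionRule, record: dict) -> bool:
    """True if the customer record is in the rule's starting state."""
    if record.get("PromoterStatus") != rule.decision[0]:
        return False
    if any(record.get(attr) != value for attr, value in rule.stable.items()):
        return False
    return all(record.get(attr) == old for attr, (old, _new) in rule.flexible.items())


def support(rule: ActionRule, records: list) -> int:
    """Number of customers matching the rule's antecedent."""
    return sum(matches_antecedent(rule, r) for r in records)


listing_1 = ActionRule(
    flexible={"Repair Completed When Promised": (3, 8), "Dealer Communication": (1, 10)},
    confidence=95.0,
)
customers = [
    {"PromoterStatus": "Detractor", "Repair Completed When Promised": 3, "Dealer Communication": 1},
    {"PromoterStatus": "Detractor", "Repair Completed When Promised": 3, "Dealer Communication": 2},
    {"PromoterStatus": "Passive", "Repair Completed When Promised": 3, "Dealer Communication": 1},
]
print(support(listing_1, customers))  # 1
```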

2.4. Data Preprocessing

Starting from the top left of Figure 1, the input to the system is the raw customer feedback data collected over the past 5 years. In the next step, the datasets are preprocessed before being mined for knowledge. The preprocessing step includes feature extraction and feature selection, as well as data cleansing and transformation, as described in more detail in [16]. The datasets are split per individual company.

2.5. User Interface

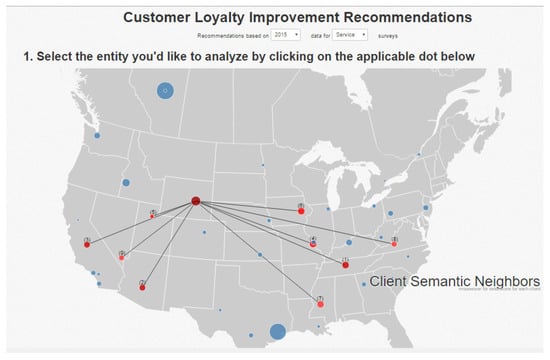

The user interface of the system guides the user through the recommendation process (see the top of Figure 1). In the Overview panel, the user chooses a company to analyze, the year, and the category (Parts/Service), which determine the source data for the recommendation algorithm. An alternative graphical user interface was implemented as a web-based dashboard (see Figure 2).

Figure 2.

Web-based UI to the RS: an interactive map for choosing the company to run the recommendation algorithm for. Blue dots denote the locations of the companies, while red dots highlight the semantic neighbors of the chosen company. The numbers rank the semantic neighbors by closeness, starting with 1 for the closest.

2.6. Hierarchical Clustering

The goal of clustering in data mining is to group similar objects together. Here, we cluster similar companies, using a semantic similarity distance as the similarity metric. The user chooses the “HAMIS” tab (see the top of Figure 1) and a company to analyze, and the procedure for expanding the current company’s dataset is run. The procedure is implemented within the HAMIS module (“Hierarchically Agglomerative Method for Improving NPS”) and is described in more detail in [30]. First, HAMIS trains the Net Promoter Status classification models on the companies’ datasets. Second, the semantic similarity distance between each pair of the companies’ classification models is calculated [29]; that is, semantic similarity is defined in terms of the similarity of the NPS classifiers. An additional matrix stores the semantic similarity scores between each pair of companies, and the dendrogram is built from this distance matrix. The HAMIS procedure then crawls through the dendrogram and expands the current company’s dataset only with data from semantically similar companies that have a higher NPS score. The dendrogram also visualizes the output of the hierarchical clustering algorithm, so users can track which companies are “close” (or similar) to each other.
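How a dendrogram arises from a pairwise distance matrix can be illustrated with a plain agglomerative procedure. This is a generic sketch, not the HAMIS implementation: it takes any precomputed symmetric distance matrix (in the paper, the distances come from comparing NPS classifiers [29]), and single linkage is an assumption, since the linkage criterion is not stated here. The company labels and distances are hypothetical.

```python
def agglomerate(names, dist):
    """Single-linkage agglomerative clustering.

    names: list of company labels; dist: symmetric matrix (list of lists) of
    pairwise distances. Returns the merge history, a flat encoding of the
    dendrogram: (left members, right members, merge distance) per step.
    """
    clusters = [[i] for i in range(len(names))]
    merges = []
    while len(clusters) > 1:
        best = None
        for x in range(len(clusters)):
            for y in range(x + 1, len(clusters)):
                # single linkage: distance of the closest pair of members
                d = min(dist[i][j] for i in clusters[x] for j in clusters[y])
                if best is None or d < best[0]:
                    best = (d, x, y)
        d, x, y = best
        merges.append((sorted(names[i] for i in clusters[x]),
                       sorted(names[i] for i in clusters[y]), d))
        merged = clusters[x] + clusters[y]
        clusters = [c for k, c in enumerate(clusters) if k not in (x, y)] + [merged]
    return merges


# Hypothetical distances between four companies A-D.
names = ["A", "B", "C", "D"]
dist = [
    [0.0, 0.1, 0.6, 0.9],
    [0.1, 0.0, 0.5, 0.8],
    [0.6, 0.5, 0.0, 0.3],
    [0.9, 0.8, 0.3, 0.0],
]
for step in agglomerate(names, dist):
    print(step)
# (['A'], ['B'], 0.1)
# (['C'], ['D'], 0.3)
# (['A', 'B'], ['C', 'D'], 0.5)
```

This naive version rescans all cluster pairs at every step, which is adequate for a few dozen companies.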

2.7. Actionable Knowledge Miner

Once expanded datasets are created from the “climbing the dendrogram” procedure, they are mined for actionable knowledge. The extracted actionable patterns propose minimal changes to “convert” the Promoter Status from negative Detractor/neutral Passive to a positive Promoter. The reason behind mining from the expanded datasets is that low performers can learn from their high-achieving counterparts. Extracted action rules are precise in indicating the type of change necessary down to specific benchmarks (e.g., Timeliness) and the needed changes in their scoring (e.g., the rating needs to change from 5 to 8). However, to trigger those changes, we need external events, which we call meta-actions.

2.8. Text Mining

The meta-action mining process is run from the Meta-Action Miner tab in the user interface. Within the system, this procedure is part of the Meta-Action Miner package (see Figure 1). The algorithm is based on natural language processing and text mining techniques; specifically, it uses the aspect-based sentiment analysis approach described in more detail in [31]. It involves text preprocessing (such as parsing and POS tagging), opinion mining, aspect taxonomy, and text summarization. The Meta-Action Organizer package of the system contains the triggering procedure, which associates mined meta-actions with the atomic actions in the rules.

2.8.1. Meta-Actions

In the proposed method, meta-actions act as triggers for action rules and are used to generate recommendations [29]. Wang et al. first proposed the concept of meta-action in [32], which was later specified by Ras and Wieczorkowska in [27]. In our method, meta-actions are external triggers that “execute” action rules, which are composed of atomic actions. The triggering mechanism is based on associating meta-actions with the corresponding atomic actions. The initial triggering procedure is illustrated in Algorithm 1.

| Algorithm 1 Initial procedure for action rule triggering. |

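The triggering idea described in this section can be sketched in a few lines: each meta-action invokes a set of atomic actions, and a rule fires only when the meta-actions together invoke all of its atomic actions. This is an assumption-laden illustration, not a reconstruction of Algorithm 1; the influence matrix, rule contents, and function name are hypothetical.

```python
# An atomic action is modelled as (benchmark, from_value, to_value).
def triggered_rules(meta_actions, influence, rules):
    """Return the ids of rules whose atomic actions are all invoked by the
    given set of meta-actions.

    influence: meta-action -> set of atomic actions it can invoke (a row of
               the influence matrix); rules: rule id -> set of atomic actions.
    """
    invoked = set()
    for m in meta_actions:
        invoked |= influence.get(m, set())
    # A rule fires only if every one of its atomic actions is covered.
    return [rule_id for rule_id, atoms in rules.items() if atoms <= invoked]


# Hypothetical influence matrix and rules.
influence = {
    "M1": {("Timeliness", 5, 8)},
    "M2": {("Dealer Communication", 1, 10)},
    "M3": {("Timeliness", 5, 8), ("Dealer Communication", 1, 10)},
}
rules = {
    "r1": {("Timeliness", 5, 8), ("Dealer Communication", 1, 10)},
    "r2": {("Timeliness", 5, 8)},
}
print(triggered_rules({"M1"}, influence, rules))        # ['r2']
print(triggered_rules({"M1", "M2"}, influence, rules))  # ['r1', 'r2']
print(triggered_rules({"M3"}, influence, rules))        # ['r1', 'r2']
```

In this example, the same atomic action is linked to both M1 and M3, and rule r1 can be triggered either by {M1, M2} or by {M3} alone.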

2.8.2. Triggering

The triggering mechanism can be depicted using the so-called “influence matrix”, which lists meta-actions and the changes in atomic actions that they trigger. A sample influence matrix is shown in Table 1. In the table, attribute a denotes a stable attribute, while attribute b denotes a flexible one. The rows of the matrix correspond to the set of meta-actions that can trigger action rules, and each row lists the changes that can be invoked by that meta-action. For example, the meta-action in the first row activates the atomic action listed in that row.

Table 1.

An example of the meta-action influence matrix.

Here, we assume that one atomic action can be associated with more than one meta-action. An action rule is triggered only when a set of meta-actions triggers all of its atomic actions. In general, one action rule can be triggered by different sets of meta-actions. As an example, consider an action rule of the form $r = [a \wedge (b_1 \rightarrow b_2)] \Rightarrow (d_1 \rightarrow d_2)$, where $a$ denotes a value of the stable attribute and $b_1, b_2$ denote values of the flexible attribute $b$. This rule consists of two association rules, $r_1 = [a \wedge b_1] \rightarrow d_1$ and $r_2 = [a \wedge b_2] \rightarrow d_2$ [22]. In Table 1, such a rule r can be triggered by either of two different meta-actions, each of which covers the rule’s atomic actions. In addition, one set of meta-actions can trigger many action rules. Within this method, we aim to create sets of meta-actions that maximize the number of rules triggered and therefore have the greatest effect on the improvement of NPS. The NPS effect is calculated as follows. Let us assume that a set of meta-actions $M$ triggers a set of action rules $R_M$. We define the coverage (support) of M as the sum of the support measures of all covered action rules; this equals the number of customers affected by the triggering by M. We define the confidence of M as the average of the confidence measures of all action rules that it covers. Support and confidence of M are calculated as follows:

$$\mathrm{sup}(M) = \sum_{r \in R_M} \mathrm{sup}(r), \qquad \mathrm{conf}(M) = \frac{1}{|R_M|} \sum_{r \in R_M} \mathrm{conf}(r)$$

The overall impact of M equals the product of the support and confidence metrics: $\mathrm{impact}(M) = \mathrm{sup}(M) \cdot \mathrm{conf}(M)$. This is further used for computing the predicted increase in NPS.
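The coverage, confidence, and impact of a meta-action set, as defined in the text (sum of rule supports, average rule confidence, and their product), can be computed directly. This minimal sketch assumes confidences are given as fractions; the Listings report percentages, which would first be divided by 100. The function name and the example values are hypothetical.

```python
def meta_action_metrics(triggered):
    """Coverage, confidence and impact of a meta-action set M.

    triggered: (support, confidence) pairs of the action rules triggered by M,
    with confidence as a fraction in [0, 1].
    Returns (coverage, confidence, impact) where coverage is the sum of the
    rule supports, confidence is their average confidence, and impact is
    coverage * confidence.
    """
    if not triggered:
        return 0, 0.0, 0.0
    coverage = sum(sup for sup, _ in triggered)
    confidence = sum(conf for _, conf in triggered) / len(triggered)
    return coverage, confidence, coverage * confidence


# Hypothetical: M triggers two rules with supports 4 and 2.
print(meta_action_metrics([(4, 0.75), (2, 0.5)]))  # (6, 0.625, 3.75)
```

With fractional confidences, the impact can be read loosely as an expected number of converted customers, although the text does not spell out how the impact is mapped to the predicted NPS increase.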

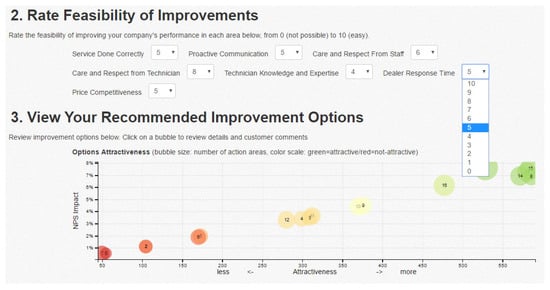

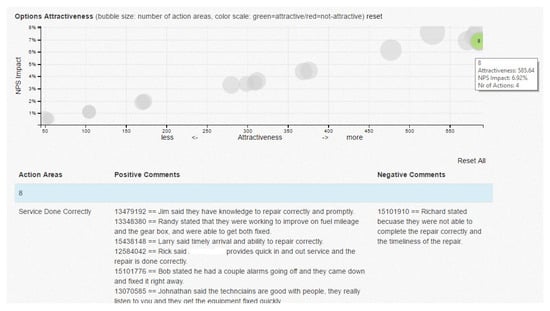

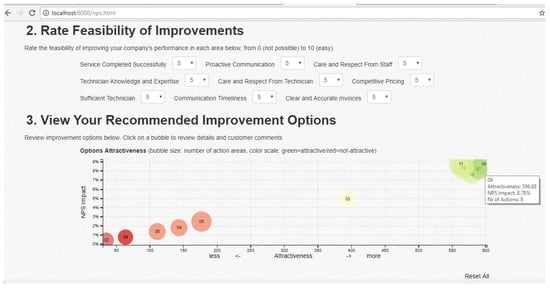

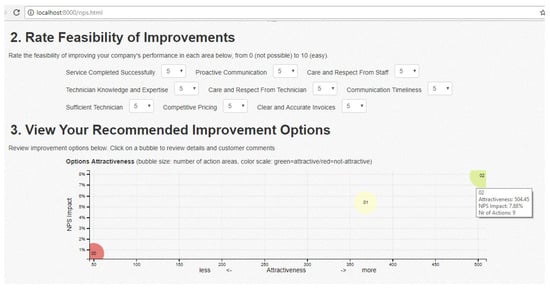

2.9. Recommendation Generation

The end recommendations of the system are called meta-nodes, which are generated by checking possible meta-action combinations to optimize the NPS impact. For each recommendation (meta-node), the predicted % impact on NPS is calculated from the triggering. The impact of triggering by meta-actions forming the meta-node depends on the number of action rules triggered, as well as their characteristics: confidence and support. The system presents to the user the meta-nodes with the highest predicted effect on NPS. The web-based visualization that supports recommendations analysis is presented in Figure 3. The set of recommendable items is visualized as a bubble chart, which color-codes each recommendation item based on its NPS impact and attractiveness. The user can perform sensitivity analysis by changing the feasibility scores for service areas. The visualization updates accordingly after recalculating the attractiveness based on the assigned feasibility scores. Each color-coded item can be analyzed further in terms of action areas analysis and related raw text comments, as seen in Figure 4.
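One straightforward way to generate candidate meta-nodes is to enumerate small combinations of meta-actions, compute the impact of the rules each combination triggers, and keep the best ones. The sketch below is hypothetical: it uses exhaustive enumeration (which grows combinatorially with the number of meta-actions), placeholder strings for atomic actions, and a tie-breaking rule that prefers fewer meta-actions. None of these details are confirmed by the text, and attractiveness and feasibility scores are not modelled.

```python
from itertools import combinations


def best_meta_nodes(influence, rules, max_size=2, top=3):
    """Rank combinations of up to `max_size` meta-actions by predicted impact.

    influence: meta-action -> set of atomic actions it invokes.
    rules: rule id -> (set of atomic actions, support, confidence in [0, 1]).
    Returns up to `top` (impact, meta-action tuple) pairs, best first.
    """
    scored = []
    for size in range(1, max_size + 1):
        for combo in combinations(sorted(influence), size):
            invoked = set().union(*(influence[m] for m in combo))
            hits = [(s, c) for atoms, s, c in rules.values() if atoms <= invoked]
            if not hits:
                continue  # this combination triggers no rule
            coverage = sum(s for s, _ in hits)
            confidence = sum(c for _, c in hits) / len(hits)
            scored.append((coverage * confidence, combo))
    # Highest impact first; on ties prefer fewer meta-actions.
    scored.sort(key=lambda item: (-item[0], len(item[1]), item[1]))
    return scored[:top]


influence = {"M1": {"a"}, "M2": {"b"}, "M3": {"a", "b"}}
rules = {"r1": ({"a", "b"}, 4, 0.75), "r2": ({"a"}, 2, 0.5)}
for impact, combo in best_meta_nodes(influence, rules):
    print(impact, combo)
# 3.75 ('M3',)
# 3.75 ('M1', 'M2')
# 3.75 ('M1', 'M3')
```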

Figure 3.

Web-based interface that produces interactive recommendations for improving NPS. The recommendation nodes are color-coded from non-attractive (red) to attractive (green) in terms of impact on NPS.

Figure 4.

The visualization of the mined text related to the analyzed recommendation.

2.10. Improved Data Preprocessing

We introduced additional data preprocessing measures to speed up the mining process. For example, the NULL value, which had first been imputed for missing values, was removed so that the data mining program does not treat it as a separate value. Another preprocessing method is based on binning numerical variables [33], that is, converting distinct numerical values into ranges with categories such as low, medium, and high. The proposed mapping of benchmark scores from the discrete 0–10 scale to categorical labels is as follows:

- Low: <0,4>;

- Medium: <5,6>;

- High: <7,8>;

- Very high: <9,10>.

This binning was derived from business experts’ interpretation and understanding of such categories and was implemented as a PL/SQL script. Certain benchmarks were not binned because they already had low cardinality, such as those on 1–2 and 0–1 (yes/no) scales, or on a 0–6 scale.
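The authors implemented this mapping in PL/SQL. The Python sketch below only illustrates the same bins and the NULL handling described above: missing answers are dropped rather than mined as a separate value, and low-cardinality benchmarks are left unbinned. The record fields and function names are hypothetical.

```python
def bin_benchmark(score):
    """Map a 0-10 benchmark score to the Low/Medium/High/Very high bins."""
    if not 0 <= score <= 10:
        raise ValueError(f"benchmark score out of range: {score}")
    if score <= 4:
        return "Low"
    if score <= 6:
        return "Medium"
    if score <= 8:
        return "High"
    return "Very high"


def preprocess_record(record, keep_raw=frozenset()):
    """Drop missing (NULL) answers instead of mining them as a separate value,
    and bin every benchmark except the low-cardinality ones in `keep_raw`."""
    return {name: (value if name in keep_raw else bin_benchmark(value))
            for name, value in record.items() if value is not None}


# Hypothetical survey record; "Parts Available" stands in for a yes/no benchmark.
record = {"Timeliness": 5, "Ease of Contact": None, "Repair Timely": 9, "Parts Available": 1}
print(preprocess_record(record, keep_raw={"Parts Available"}))
# {'Timeliness': 'Medium', 'Repair Timely': 'Very high', 'Parts Available': 1}
```

Reducing each benchmark from eleven possible values to four shrinks the number of distinct atomic actions the miner has to consider.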

2.11. Improved HAMIS Strategy

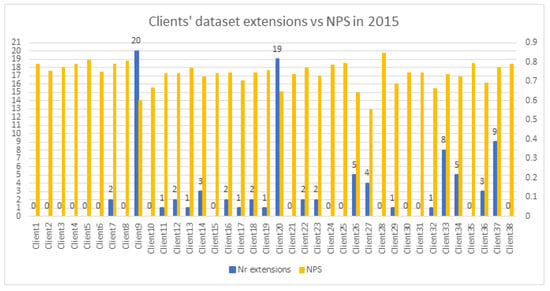

The second method for improving data mining performance is limiting the number of semantically similar extensions within the HAMIS procedure. As described in Section 2.6, the data-driven approach of the recommender system is based on extending a company’s dataset and extracting knowledge from the datasets of its semantic neighbors. The purpose of this step is for a company to learn from other companies that have a higher NPS and are similar in terms of their Net Promoter Status classification models. Figure 5 presents the number of semantic extensions for each of the 38 companies (blue bars), as well as the resulting predicted percentage NPS improvement (yellow bars). For example, for the year 2015, Client #20 was extended with 19 other companies, and Client #9 with 20. Consequently, the original datasets of these companies grew from 479 to 16,848 records and from 2527 to 22,328 records, respectively. Larger datasets increase the time of the action rule mining process.

Figure 5.

The resulting number of extensions after applying the HAMIS strategy for 38 client companies (blue bars) and the corresponding predicted improvements in NPS (%) (yellow bars) based on datasets from 2015.

On average over the 38 companies, NPS is predicted to increase from 76% to 78%. The HAMIS-based dataset extension procedure increases dataset sizes from an average of 873 rows to 2159 rows. When Parts is the chosen survey type, NPS is predicted to increase from the initial 81% to up to 84%, and the Parts survey datasets grow from an initial average of 559 rows to 2064 rows. The extension process within HAMIS is time-consuming, the extended datasets take more time to mine, and the process occasionally causes an “out of memory” error. Many larger datasets take weeks or even months to mine, which is unacceptable from the business perspective and with respect to the update requirements. Within this work, we propose modifying the HAMIS extension strategy by limiting the number of extensions to a maximum of three.
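The capped extension strategy can be sketched as a selection step followed by dataset concatenation. HAMIS itself climbs the dendrogram [30]; here that is approximated, as an assumption, by ranking candidate companies nearest-first by a precomputed semantic distance. Only companies with a higher NPS are eligible, and at most three are kept, as in the text. All names and numbers are hypothetical.

```python
def select_extensions(company, nps, distance, max_extensions=3):
    """Choose up to `max_extensions` neighbours with a higher NPS, nearest first.

    nps: company -> NPS; distance: company -> {other company -> distance}.
    """
    better = [c for c in nps if c != company and nps[c] > nps[company]]
    better.sort(key=lambda c: (distance[company][c], c))
    return better[:max_extensions]


def extend_dataset(company, datasets, neighbours):
    """The company's own records followed by its selected neighbours' records."""
    rows = list(datasets[company])
    for other in neighbours:
        rows.extend(datasets[other])
    return rows


nps = {"A": 40, "B": 60, "C": 70, "D": 30, "E": 80, "F": 90}
distance = {"A": {"B": 0.2, "C": 0.1, "D": 0.05, "E": 0.4, "F": 0.3}}
print(select_extensions("A", nps, distance))  # ['C', 'B', 'F']
```

Company D is the closest neighbor but is skipped because its NPS is lower, and E is dropped by the cap. This cap is what bounds the growth seen with 19 or 20 extensions.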

2.12. Improved Pattern Mining

Another method we propose for improving mining efficiency is based on additional constraints on the mined patterns themselves. The initial mining process runs through all possible values of the atomic actions, including undesirable changes in benchmark values from higher to lower. However, from the business perspective, such changes “for the worse” do not make sense: businesses strive to improve their benchmark scores and will not follow suggestions that recommend worsening them. To exclude such counterintuitive mining results, the definitions of the mined patterns were changed to include only upward changes (e.g., from 7 to 9) and to exclude downward changes (e.g., from 5 to 2). In the experiments, rules that suggest only changes “for the better” are denoted as Rule—Type 0. An example of Rule—Type 0 is presented in Listing 2.

| Listing 2. An example of “Rule—Type 0” |

| ((Benchmark: Likelihood Repeated Customer, 2->10) AND |

| (Benchmark: Ease of Contact, 4->5)) => (Detractor->Promoter) |

| sup = 3, conf = 98.0 |

A further modification of the mined patterns allows the benchmark value to change to any higher value; that is, the atomic actions recommend any upward change. In the experiments, “higher” denotes any value higher than the original one. Therefore, the resulting action rules contain atomic actions such as “2→ higher” (meaning 2→ 3 or 4 or … or 10), “9→ higher” (meaning 9→ 10), etc. In further discussion, we call this modification Rule—Type 1. An example of Rule—Type 1 is given in Listing 3:

| Listing 3. An example of “Rule—Type 1”. |

| ((Benchmark: Tech Equipped to do Job, 3->higher) AND |

| (Benchmark: Repair Timely, 3->higher)) |

| => (Detractor->Promoter) sup = 3, conf = 100.0 |

Changing the format of the mined patterns required adapting the RS to process such rules in the recommendation engine. Such modifications are expected to reduce action rule mining times. As a downside, they might cause some loss of precision and accuracy due to “generalizing” the mined actionable patterns.
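The difference between the two rule types can be illustrated as a post-hoc transformation of already-mined atomic actions. In the paper, the constraint is built into the pattern definitions used by the miner, which is where the speedup comes from; the filter below is only a hypothetical illustration of what the constraint keeps and how Type 1 generalization merges patterns. The second rule is invented for the example.

```python
def is_type0(atomic_actions):
    """Type 0: every atomic action (benchmark, from, to) moves upward."""
    return all(new > old for _, old, new in atomic_actions)


def to_type1(atomic_actions):
    """Generalize a Type 0 rule's targets to 'higher'; None if any change is downward."""
    if not is_type0(atomic_actions):
        return None
    return tuple((bench, old, "higher") for bench, old, _ in atomic_actions)


listing_2 = [("Likelihood Repeated Customer", 2, 10), ("Ease of Contact", 4, 5)]
similar = [("Likelihood Repeated Customer", 2, 9), ("Ease of Contact", 4, 7)]
print(is_type0(listing_2))                       # True
print(to_type1([("Repair Timely", 5, 2)]))        # None
print(to_type1(listing_2) == to_type1(similar))   # True: both collapse to one pattern
```

The collapse in the last line is the trade-off described above: fewer distinct patterns to mine, but the exact target score is lost.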

2.12.1. Modifications in the Recommender System

The transition to “Rule—Type 1” required modifying the triggering procedure of the system. In the original implementation, the triggering mechanism was based on associating benchmark values with meta-actions. Another adaptation was related to binning the benchmark values. The modified version of Algorithm 1 is depicted in Algorithm 2.

| Algorithm 2 The modified triggering procedure to process Rule—Type 1. |


As can be seen from the listing, introducing Rule—Type 1 required changing how meta-actions related to the atomic actions of an action rule are collected. In this new rule type, the numerical value on the right-hand side of an atomic action was replaced by the value “higher”, while the original datasets used for triggering still consist of numerical values. In the modified version, we therefore need to associate a meta-action with all benchmark values higher than the left-hand-side value. The binned and original datasets had to be treated differently; to distinguish them, the binned version used the following codes for its categories: low (10), medium (20), high (30), and very high (40).
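The expansion of a “higher” target can be sketched as follows, using the 0–10 raw scale and the 10/20/30/40 codes of the binned datasets. As an assumption, the sketch treats a meta-action as covering a Type 1 atomic action when it is associated with at least one of the concrete upward changes the action stands for; this is an illustration, not Algorithm 2 itself, and the names are hypothetical.

```python
RAW_DOMAIN = list(range(0, 11))   # original 0-10 benchmark scores
BINNED_DOMAIN = [10, 20, 30, 40]  # codes for low, medium, high, very high


def expand_atomic(old, new, binned=False):
    """Concrete (from, to) changes an atomic action stands for.

    A Rule-Type 1 target 'higher' expands to every larger value of the domain.
    """
    domain = BINNED_DOMAIN if binned else RAW_DOMAIN
    if new == "higher":
        return [(old, v) for v in domain if v > old]
    return [(old, new)]


def meta_action_covers(known_changes, benchmark, old, new, binned=False):
    """True if the meta-action was associated with at least one concrete change
    that the atomic action (benchmark, old -> new) stands for.

    known_changes: benchmark -> set of (from, to) changes linked to the meta-action.
    """
    linked = known_changes.get(benchmark, set())
    return any(change in linked for change in expand_atomic(old, new, binned))


print(expand_atomic(9, "higher"))                 # [(9, 10)]
print(expand_atomic(20, "higher", binned=True))   # [(20, 30), (20, 40)]
print(meta_action_covers({"Repair Timely": {(3, 7)}}, "Repair Timely", 3, "higher"))  # True
```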

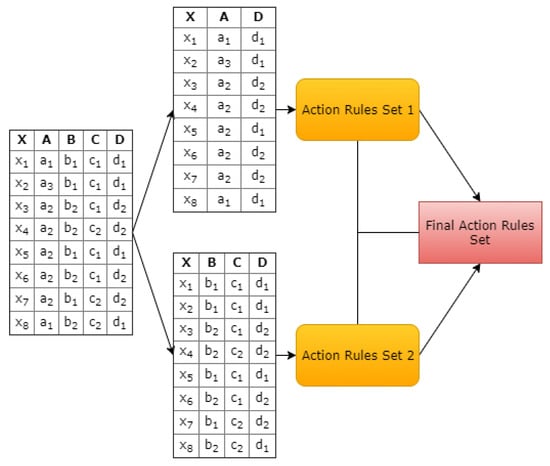

2.13. A Distributed Algorithm for the Mining of Action Rules

Another method we propose within this research to reduce action rule mining times is based on a parallel version of the mining algorithm, implemented in a distributed manner in Spark [25]. Most existing approaches split large datasets horizontally into chunks containing a small number of data records [34,35]. These methods prove to be inefficient as the dimensionality of the dataset grows. We propose a new data distribution strategy that splits the datasets vertically (that is, by attributes) and extracts action rules from multiple partitions, using Spark as the distributed framework. Once all parallel processes are complete, the action rules from each partition are combined to yield the final set of recommended action rules.

The proposed distributed action rule extraction algorithm is primarily designed for association action rules extraction, introduced by Ras et al. [22], which retrieves all possible action rules from a given decision system. Association action rules extraction is one of the most complex and computationally expensive action rule extraction algorithms because of its Apriori-based [36,37] nature. The Apriori algorithm starts with two-element patterns and continues for up to n iterations until it finds n-element patterns, where n is the number of attributes in the given data. A sample association rule, meaning that when the items of a pattern X occur together in the data, the items of a pattern Y also occur in the same data, is given in the equation below:

$$X \rightarrow Y$$

Action rules can also be related to association rules: an actionable pattern, that is, a conjunction of atomic actions, occurs together with the decision action with at least a minimum support and a minimum confidence. The association action rules algorithm starts by extracting an atomic action on the left-hand side of the rule and the decision action on the right-hand side. The algorithm continues for a maximum of n iterations, where n is the number of attributes in the data, and returns all actionable patterns in the data. We propose a method that provides very broad recommendations using the association action rules method and runs faster than existing action rules extraction approaches [34,35]. The method suits data with a large attribute space, such as the business data used in our experiments. In this method, we split the data vertically into two or more partitions, and each partition can be further split horizontally by the default settings of the Spark framework [25]. Figure 6 presents an example of vertical data partitioning with a sample decision table.

Figure 6.

Example Vertical Distribution Strategy. The table on the left side of the image is an example decision table.
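A minimal sketch of the vertical split in plain Python follows. It assumes that every partition must keep the decision attribute so that action rules can still be mined within it; the round-robin assignment of the remaining attributes and the function name `vertical_partitions` are our own illustrative choices, not the authors' partitioning rule. In Spark, each resulting partition would additionally be split horizontally by the framework.

```python
from typing import Dict, List


def vertical_partitions(
    table: List[Dict[str, str]], decision: str, num_parts: int
) -> List[List[Dict[str, str]]]:
    """Split a decision table by attributes (columns) into num_parts tables.

    Each partition keeps the decision attribute; the remaining attributes are
    assigned round-robin (an illustrative assumption).
    """
    attributes = [a for a in table[0] if a != decision]
    groups: List[List[str]] = [[] for _ in range(num_parts)]
    for i, attr in enumerate(attributes):
        groups[i % num_parts].append(attr)
    # Project every row onto the attributes of each group plus the decision.
    return [
        [{a: row[a] for a in group + [decision]} for row in table]
        for group in groups
    ]


if __name__ == "__main__":
    sample = [
        {"A": "a1", "B": "b1", "C": "c1", "D": "d2", "NPS": "detractor"},
        {"A": "a2", "B": "b1", "C": "c2", "D": "d1", "NPS": "promoter"},
    ]
    for part in vertical_partitions(sample, "NPS", 2):
        print(part)
```

Keeping the decision column in every partition is what allows each partition to be mined independently before the partial rule sets are merged.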

The distributed action rule extraction algorithm, given in Algorithm 3, runs separately on each partition, performs transformations such as map() and flatMap(), and combines the results with join() and groupBy() operations.
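The map/groupByKey pattern of Step 2 can be imitated on a single machine to show what the resulting RDD holds: for each distinct attribute value, the set of row ids that contain it. The helpers `flat_map_values` and `group_by_key` are hypothetical stand-ins for Spark's `flatMap()` and `groupByKey()`, and encoding values as `"attribute=value"` strings (so that equal values of different attributes stay distinct) is our assumption.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple

Record = Tuple[int, List[str]]  # (row id, list of attribute values)


def flat_map_values(records: Iterable[Record]) -> Iterable[Tuple[str, int]]:
    """Emit one (attribute value, row id) pair per value, as flatMap() would."""
    for row_id, values in records:
        for value in values:
            yield value, row_id


def group_by_key(pairs: Iterable[Tuple[str, int]]) -> Dict[str, Set[int]]:
    """Collect the row ids of each attribute value, as groupByKey() would."""
    grouped: Dict[str, Set[int]] = defaultdict(set)
    for key, row_id in pairs:
        grouped[key].add(row_id)
    return dict(grouped)


if __name__ == "__main__":
    data: List[Record] = [
        (0, ["A=a1", "B=b1", "NPS=detractor"]),
        (1, ["A=a2", "B=b1", "NPS=promoter"]),
        (2, ["A=a2", "B=b2", "NPS=promoter"]),
    ]
    index = group_by_key(flat_map_values(data))
    for value in sorted(index):
        print(value, sorted(index[value]))
```

Storing these row-index sets once means that the support of any later pattern can be obtained by intersecting sets instead of rescanning all records.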

Algorithm 3 takes as input the preprocessed data as (row id, values) pairs, where the row id identifies a record and values is the list of attribute values of that record. The algorithm also takes the decision-from (DF) and decision-to (DT) values as parameters. Step 2 of the algorithm obtains all distinct attribute values and their corresponding data row indexes; this step involves a Map phase and a groupByKey() phase of the Spark framework. We collect the data row indexes to find the support and confidence of action rules. Finding support and confidence is an iterative procedure that takes O(nd) time for all action rules, where n is the number of action rules and d is the number of data records. To reduce this time complexity, we store the set of data row indexes of each attribute value in a Spark RDD. In Step 3, we assign the distinct attribute values to the old-combinations RDD to start the iterative procedure, so this RDD acts as the seed for all subsequent transformations. The proposed algorithm takes at most n iterations, where n is the number of attributes in the data. During the ith iteration, the algorithm extracts action rules with i − 1 action set pairs on the left-hand side of the rule. Step 8 applies a flatMap() transformation to the data to collect all possible combinations of i attribute values from each data record. We sort the attribute values within each combination, since the combinations act as keys for the subsequent join() and groupBy() operations. We also attach the row id to every combination, so that Spark’s groupByKey() method in Step 9 yields the support of each combination, that is, the data records that contain that pattern. We then perform sequential filtering. In Step 10, we filter out combinations whose index count is lower than the given support threshold. From the remaining combinations, we obtain in Step 11 and Step 12 the combinations that contain the decision-from value and the decision-to value, respectively.
In Step 14, we join the decision-from and decision-to combinations on their attribute names and drop the DF and DT values themselves, since the decision action is already known and is not needed to compute the confidence of action rules. This yields action rules of the form (attributes, (fromValues, fromIndexes), (toValues, toIndexes)). In Step 15, we calculate the actual support of the resulting action rules and keep only the rules whose support is at least the given support threshold. The index sets obtained in Step 14 provide the numerators of the confidence formula. To find the denominators, we again use the from-value indexes and the to-value indexes in Step 17 and Step 18, respectively. We perform a join() with the old combinations to obtain the from-side and to-side denominators in Step 19 and Step 20, respectively, and carry out the division of the confidence formula in the same steps. In Step 21, we join the from-side and to-side confidences. We then join the action rules with support from Step 15 and the action rules with confidence from Step 21 to obtain the final set of action rules. In Step 23, we assign the new combinations to the old combinations and pass them to the next iteration.
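Given the row-index sets described above, the support and confidence of a candidate action rule reduce to set intersections and a few divisions. The sketch below assumes the definitions commonly used in the action rules literature: support is the smaller of the from-side and to-side numerators, and confidence is the product of the from-side and to-side confidences. The authors' exact formulas may differ, and the function names and toy index are hypothetical.

```python
from typing import Dict, Iterable, Set, Tuple

Index = Dict[str, Set[int]]  # attribute value -> ids of rows containing it


def rows_matching(index: Index, pattern: Iterable[str]) -> Set[int]:
    """Rows that contain every value of the pattern (intersection of index sets)."""
    sets = [index.get(value, set()) for value in pattern]
    return set.intersection(*sets) if sets else set()


def action_rule_measures(
    index: Index,
    from_pattern: Tuple[str, ...],
    to_pattern: Tuple[str, ...],
    df: str,
    dt: str,
) -> Tuple[int, float]:
    """Support and confidence of [from_pattern -> to_pattern] => [df -> dt].

    Assumed definitions: support = min of the two numerators,
    confidence = product of the from-side and to-side confidences.
    """
    from_rows = rows_matching(index, from_pattern)     # from-side denominator
    to_rows = rows_matching(index, to_pattern)         # to-side denominator
    from_hits = from_rows & index.get(df, set())       # from-side numerator
    to_hits = to_rows & index.get(dt, set())           # to-side numerator
    support = min(len(from_hits), len(to_hits))
    if not from_rows or not to_rows:
        return support, 0.0
    confidence = (len(from_hits) / len(from_rows)) * (len(to_hits) / len(to_rows))
    return support, confidence


if __name__ == "__main__":
    toy_index: Index = {
        "A=a1": {0, 1, 2},
        "A=a2": {3, 4, 5},
        "NPS=detractor": {0, 1, 5},
        "NPS=promoter": {2, 3, 4},
    }
    sup, conf = action_rule_measures(
        toy_index, ("A=a1",), ("A=a2",), "NPS=detractor", "NPS=promoter"
    )
    print(sup, round(conf, 3))  # 2 0.444
```

In the toy example, two of the three rows with A=a1 are detractors and two of the three rows with A=a2 are promoters, so the support is 2 and the confidence is (2/3)(2/3) ≈ 0.444.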

Algorithm 3. Algorithm for distributed action rules mining: ARE(A, DF, DT).

Input: A is the data of type (row id, values), DF is the decision-from value, and DT is the decision-to value.
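Steps 8–12 described above can be imitated on a single machine as follows. This is a sketch, not the authors' Spark code: `frequent_combinations` stands in for the flatMap()/groupByKey()/filter sequence and `split_by_decision` for the selection of combinations containing DF or DT; both names are hypothetical, and the support threshold is taken as an absolute record count.

```python
from collections import defaultdict
from itertools import combinations
from typing import Dict, List, Set, Tuple

Record = Tuple[int, List[str]]   # (row id, list of attribute values)
Combos = Dict[Tuple[str, ...], Set[int]]


def frequent_combinations(records: List[Record], i: int, min_support: int) -> Combos:
    """Steps 8-10: i-value combinations per record, grouped by key, then filtered."""
    grouped: Combos = defaultdict(set)
    for row_id, values in records:
        # Sorting makes each combination a canonical key for grouping and joining.
        for combo in combinations(sorted(values), i):
            grouped[combo].add(row_id)
    return {c: rows for c, rows in grouped.items() if len(rows) >= min_support}


def split_by_decision(combos: Combos, df: str, dt: str) -> Tuple[Combos, Combos]:
    """Steps 11-12: combinations containing the decision-from / decision-to value."""
    from_side = {c: rows for c, rows in combos.items() if df in c}
    to_side = {c: rows for c, rows in combos.items() if dt in c}
    return from_side, to_side


if __name__ == "__main__":
    data: List[Record] = [
        (0, ["A=a1", "B=b1", "NPS=detractor"]),
        (1, ["A=a1", "B=b2", "NPS=detractor"]),
        (2, ["A=a2", "B=b1", "NPS=promoter"]),
        (3, ["A=a2", "B=b1", "NPS=promoter"]),
    ]
    frequent = frequent_combinations(data, 2, min_support=2)
    from_side, to_side = split_by_decision(frequent, "NPS=detractor", "NPS=promoter")
    print(sorted(from_side))
    print(sorted(to_side))
```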

3. Results

We performed a series of experiments using the above-described methods. We measured the original and the improved mining times, as well as the impact on the generated recommendations.

3.1. Experimental Setup

The metrics collected for evaluation included the following:

- Mining times.

- The number of action rules extracted.

- The number of distinct customers covered by the rules.

We measured the number of distinct customers because it directly affects the NPS impact of the recommendations; in this system, the goal is to maximize that metric. The testing procedure consisted of the following steps and assumptions:

- We read in the company’s action rule output file and processed it with the recommendation algorithm, keeping track of the triggering process and recording the set of recommendations that produced the best NPS effect.

- We defined the base performance as the performance of the algorithm without any of the modifications described above.

- We performed the initial testing on a dataset from one year, and then the primary testing on the dataset from the following year.
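Coverage, as reported in the experiments (for example, 71.4% in Table 2), can be read as the share of target customers, such as detractors or passives, that are matched by at least one extracted rule. A minimal sketch of that computation follows, assuming each rule is represented by the set of customer ids it matches (a hypothetical representation).

```python
from typing import Iterable, Set, Tuple


def coverage(rule_covers: Iterable[Set[int]], targets: Set[int]) -> Tuple[int, float]:
    """Distinct target customers covered by at least one rule, as count and percentage."""
    covered: Set[int] = set()
    for customers in rule_covers:
        covered |= customers & targets   # ignore customers outside the target class
    pct = 100.0 * len(covered) / len(targets) if targets else 0.0
    return len(covered), pct


if __name__ == "__main__":
    passives = set(range(10))
    rules = [{0, 1, 2}, {2, 3}, {3, 4, 42}]  # customer 42 is not a passive
    count, pct = coverage(rules, passives)
    print(count, f"{pct:.1f}%")  # 5 50.0%
```

Because coverage counts distinct customers, additional rules that only match already-covered customers leave it unchanged, which is consistent with many more base-type rules not yielding higher coverage.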

3.2. Test Cases

We chose datasets from different types of companies for testing and compared the performance of recommendations before and after the changes. The following test dimensions were considered:

- Size:
    - Small datasets (200–300 rows);
    - Medium datasets (1100 rows);
    - Large datasets (6000 rows);
    - The largest datasets (10,000 rows).

- Modification 1 (binning benchmark values into categories):
    - Original benchmark values (numerical);
    - Categorized ranges of benchmark values.

- Type of the rule’s right-hand-side definition:
    - Detractor → Promoter;
    - Passive → Promoter.

- Modification 3 (rule type):
    - Base rule type: downward changes allowed;
    - Rule—Type 0: only upward changes allowed;
    - Rule—Type 1: atomic actions can suggest changes to any higher benchmark value.

- Modification 4 (original vs. distributed implementation):
    - Nonparallel (original) implementation, written in Java;
    - Parallel action rule mining, reimplemented using Spark.

3.3. Experiments

The experimental results on test cases defined above and the corresponding metrics are presented below.

3.3.1. Small Datasets

From the pool of 2016 service survey datasets, the smallest dataset belonged to Company Client #5 (213 records), and it was too small to extract any “Detractor → Promoter” action patterns. The next test among the smallest datasets was for Client #24 (306 surveys); the results for different rule types and benchmark value types are presented in Table 2.

Table 2.

Running time and coverage of actionable knowledge miner on Client #24 dataset (306 surveys).

When the rule type was switched to “Type 0”, the mining process took half the time of the original, and it was halved again when we changed the rule type to Rule—Type 1. Using categories of values instead of distinct values increased the mining time to 17 s from the original 10 s. With both benchmark categories and upward-only changes, mining took only 4.3 s, and with “any higher” changes (Rule—Type 1), it took 3 s. In all tested cases, the coverage was 71.4%. More rules were generated of the base type (1342) than of “Type 0” (329) or “Type 1” (141). We conclude that many of the rules of the original type were redundant, as they did not provide better coverage than the modified rule types.

Passive to Promoter

In another experimental setup, the smaller datasets were mined for “Passive to Promoter” action rules. This time, results were also obtained for the smallest dataset, with 213 records (see Table 3).

Table 3.

Running time and coverage of actionable knowledge miner on Client #5 dataset (213 surveys), “Passive to Promoter” rules.

In the tested dataset, there were 31 customers labeled as passive. The absolute coverage of customers by Rule—Type 0 and Rule—Type 1 decreased to 23. Using categories of benchmark values also reduced coverage, to 24 (with base rules) and 18 (with Rule—Type 0 and Rule—Type 1). With Rule—Type 0, we shortened the mining time by 4×, and with Rule—Type 1, even further. A similar time gain was observed when testing datasets with categorized benchmark values. Binning shortened the mining time by about 2× compared with the nonprocessed datasets. Similar observations were drawn from the second small dataset, for Company Client #24 (see Table 4).

Table 4.

Running time and coverage of actionable knowledge miner on Client #24 dataset (306 surveys), “Passive to Promoter” rules.

A significant speedup was observed with Rule—Type 0 (down to 1 min from about 10 min) and with Rule—Type 1 (down to around 30 s). However, these changes affected coverage negatively: it decreased from 76.5% in the base case to 70.6% with Rule—Type 0 and to 67.6% with Rule—Type 1. In addition, categorizing the benchmark values worsened the mining times.

3.3.2. Medium Datasets

For medium-sized cases, Company Client #16 (see Table 5 and Table 6) and Company Client #15 were chosen.

Table 5.

Running time and coverage of actionable knowledge miner on Client #16 dataset (1192 surveys), “Detractor to Promoter” rules.

Table 6.

Running time and coverage of actionable knowledge miner on Client #16 dataset (1192 surveys), “Passive to Promoter” rules.

Detractor to Promoter

Compared with the base case, mining time was reduced by around 1.5 h with Rule—Type 0 and by around 2 h with Rule—Type 1 (see Table 5).

Passive to Promoter

The base case required 28 h to mine this dataset, which was shortened to 18 h with categorized benchmark values (see Table 6). Changing the rule format shortened the times further, to 2 h for Rule—Type 0 and 0.5 h for Rule—Type 1. These times were even shorter for the binned datasets: 19 min and 11 min, respectively. Coverage was slightly worse, dropping to 143 and 138 passives, respectively, from the original coverage of 153 passives.

When testing datasets with categorized benchmarks, the coverage was 157/180, but it dropped to 110/180 with Rule—Type 0 and Rule—Type 1.

Another medium dataset tested was for Client #15, with 1240 survey entries. The mining time decreased from the original 10 min to 2 min with Rule—Type 0, and to half a minute with Rule—Type 1. For binned datasets, the mining time decreased to about a minute from the original 8 min, and coverage was better with all tested rule types. For “Passive to Promoter” rules, mining took around 11 h in the base case, which was reduced to 30 min with Rule—Type 0 and further to 10 min with Rule—Type 1. With categorized benchmark values, these times were 6 h for the base case, and 4 min and 3 min for Rule—Type 0 and Rule—Type 1, respectively.

3.3.3. Distributed Algorithm for Mining Rules

The experiments with the distributed implementation were conducted on the UNC-Charlotte academic research cluster, which has 73 nodes, each with 32 GB of memory. A set of test cases was chosen to run action rule mining with the distributed implementation in Spark. Table 7 and Table 8 present the running times and coverage, respectively, obtained with the distributed algorithm and Rule—Type 0.

Table 7.

Running times using the distributed and nondistributed algorithm for action rule mining. Parts Survey 2015.

Table 8.

Coverage of customers using the distributed and nondistributed algorithm for action rule mining. Parts Survey 2015.

3.3.4. Recommendation Algorithm Testing

Besides testing performance (running times and coverage), we tested the impact of the changes on the generated recommendations. We first had to modify the triggering mechanism to handle the new rule format (Section 2.12.1). We measured the impact by collecting different metrics calculated within the recommendation algorithm, such as the number of meta-actions and meta-nodes.

Evaluation Metrics

The following metrics were used to test the recommender algorithm:

- (a) Action rules extracted: the total number of action rules extracted with the Miner (including redundant ones).

- (b) Action rules read into RS: the action rules actually used in the knowledge base of the system (after removing redundant ones and transformation).

- (c) Redundancy ratio (a/b): the number of action rules extracted versus the number actually used in the system.

- (d) Atomic actions: the total number of distinct atomic actions across all action rules.

- (e) Atomic actions triggered: atomic actions that have associated meta-actions.

- (f) Triggered action rules: action rules for which all atomic actions have been triggered.

- (g) Ratio of the rules triggered to the rules read into the system (f/b).

- (h) Meta-actions extracted: the total number of distinct meta-actions extracted from the customers’ comments.

- (i) Effective meta-nodes: meta-nodes created by combining different meta-actions to obtain the highest effect on NPS.

- (j) Max NPS impact: the maximal impact on the Net Promoter Score, that is, the NPS impact of the optimal meta-node.
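Several of these metrics are simple set conditions or ratios. The sketch below computes metrics (c) through (g), assuming that each rule read into the system is given as a list of atomic-action identifiers and that triggering is given as a mapping from atomic actions to the meta-actions mined from customer comments; both representations and the function name `recommender_metrics` are hypothetical.

```python
from typing import Dict, List, Set


def recommender_metrics(
    extracted_count: int,
    rules_read: List[List[str]],
    atomic_to_meta: Dict[str, Set[str]],
) -> Dict[str, float]:
    """Compute metrics (c)-(g) from the list above.

    extracted_count -- (a) number of rules produced by the miner
    rules_read      -- (b) rules kept in the knowledge base, as atomic-action lists
    atomic_to_meta  -- atomic action -> meta-actions mined from comments
    """
    b = len(rules_read)
    atomics = {a for rule in rules_read for a in rule}                      # (d)
    triggered_atomics = {a for a in atomics if atomic_to_meta.get(a)}      # (e)
    # (f) a rule is triggered only if every one of its atomic actions is triggered
    triggered_rules = [r for r in rules_read if all(a in triggered_atomics for a in r)]
    return {
        "redundancy_ratio": extracted_count / b if b else 0.0,            # (c) a/b
        "atomic_actions": len(atomics),
        "atomic_actions_triggered": len(triggered_atomics),
        "triggered_rules": len(triggered_rules),
        "triggered_ratio": len(triggered_rules) / b if b else 0.0,        # (g) f/b
    }


if __name__ == "__main__":
    rules = [["A:1->2"], ["A:1->2", "B:3->4"], ["C:5->6"]]
    meta = {"A:1->2": {"m1"}, "B:3->4": {"m2", "m3"}, "C:5->6": set()}
    print(recommender_metrics(12, rules, meta))
```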

These metrics are presented in Table 9 (small dataset), Table 10 (medium dataset), and Table 11 (large dataset). Three test cases were chosen: Rule—Type 0, Rule—Type 1, and Rule—Type 1 with categorized benchmark values, since these three cases proved the most effective at improving mining performance in the experiments described above.

Table 9.

Comparison of meta-action mining, triggering, and meta-node creation processes for a small dataset with different modifications for the rule types.

Table 10.

Comparison of meta-action mining, triggering, and meta-node creation processes for a medium dataset with different modifications for the rule types.

Table 11.

Comparison of meta-action mining, triggering, and meta-node creation processes for a large dataset with different rule types.

As Table 9 shows, a correspondingly smaller number of action rules was extracted for the small dataset. When changing the format from Type 0 to Type 1, that number was halved. The atomic actions of all action rules read into the system were triggered, and seven meta-actions were mined in total. There were four resulting meta-nodes with Rule—Type 0 and one with Rule—Type 1, while the binned versions of the datasets produced no meta-node. The overall impact of the recommendations on NPS was 3.21% for Rule—Type 0 and 2.89% for Rule—Type 1.

The same metrics were collected for a medium dataset, and the results are presented in Table 10. Five times as many Rule—Type 0 rules were extracted as Rule—Type 1 rules. Binning the datasets resulted in extracting three times as many Rule—Type 1 rules. All action rules read into the system were triggered; 9 meta-actions were extracted, and 18 meta-nodes were created with Rule—Type 0, 5 with Rule—Type 1, and 6 with the binned datasets. The maximal NPS impact of the generated recommendations was 8.58% for Rule—Type 0 and slightly lower, 7.37%, for Rule—Type 1. With the binned datasets, the maximal NPS impact was much lower (1.9%).

The recommendation algorithm’s metrics for a large dataset are presented in Table 11. About one-third of the action rules read into the system were triggered. There were 11 meta-actions extracted, and 49 meta-nodes resulted from Rule—Type 0, 6 from Rule—Type 1, and 4 from the binned datasets. The maximal NPS impact was 3.7% with Rule—Type 0, 3.45% with Rule—Type 1, and 1.65% with the binned datasets.

Figure 7 and Figure 8 visualize the recommendations generated from the Company #32 dataset using Rule—Type 0 and Rule—Type 1, respectively.

Figure 7.

Recommendations generated for Client 32, Category Service, 2016, based on extracted rules of “Type 0”.

Figure 8.

Recommendations generated for Client 32, Category Service, 2016, based on extracted rules of “Type 1”.

4. Discussion

4.1. Small Datasets

On small datasets, we achieved significantly faster rule mining by changing the rule type; however, using categories of values did not have the same effect. The coverage of customers was not affected by the changes. For small datasets, mining usually completes within seconds, but these preliminary experiments gave insight into the magnitude of the potential performance improvement.

4.2. Medium Datasets

Medium datasets caused performance issues at their original size, and the proposed modifications brought significant improvements. In this case, categorizing benchmark values also shortened mining times.

4.3. Distributed Algorithm

The presented results show that the distributed algorithm runs much faster. This improvement matters most for cases that originally ran for hours or even days, because it shortens them to minutes. In addition, the coverage was no worse than that of the nondistributed algorithm.

4.4. Impact on Recommendations

Figure 7 and Figure 8 show that switching to Rule—Type 1 resulted in fewer recommendations. Tests conducted for other companies with Rule—Type 1 also generated fewer meta-nodes, and fewer meta-actions per meta-node, than the original rule type. This follows from the way the triggering procedure was implemented: Rule—Type 1 rules target a greater number of matched customers, with less differentiation between them. Since the target value of flexible attributes in action patterns was changed to “higher”, rules of the new type aggregate more customers. More customers are therefore associated with a single action rule and, accordingly, with more meta-actions. In fact, most extracted action rules are associated with all extracted meta-actions. As a result, the meta-node generation procedure tends to produce nodes that encompass all extracted meta-actions, which reduces the usefulness of the recommender system: meta-nodes (recommendations) that involve all meta-actions require businesses to change every area of their services. As Figure 8 shows, the recommendable items, depicted as bubbles, contain either all meta-actions or only one or two, with nothing in between. For smaller datasets, either no recommendation or a single one is created.

5. Conclusions

In this work, we proposed, implemented, and evaluated a number of methods to improve action rule mining performance. In the NPS recommender system, Rule—Type 1 was ultimately not incorporated because it produced far fewer recommendation options. This modification can, however, serve as an alternative when mining takes too long on larger datasets. The distributed implementation appears promising given the large reductions in action rule mining times, and it is expected to greatly improve the scalability of the system.

5.1. Limitations

The remaining challenges and limitations in this research include the following:

- Modifying the procedure to generate a greater variety of recommendations with Rule—Type 1. One solution to investigate is a “relaxed” triggering strategy.

- More comprehensive testing: this work did not thoroughly measure mining times for the large and very large company datasets, because mining runs for days or weeks and is not fault-tolerant to machine failures.

- Further modifications of the algorithm for distributed action rule mining.

- Testing alternative mining tools such as LISp-Miner, which is known to extract rules efficiently thanks to its algorithm based on the so-called GUHA procedure [38].

5.2. Contribution and Future Work

The proposed approach to building a knowledge-based actionable recommender system can be applied to improve business metrics in various areas, not only the Net Promoter Score. The system can be customized to the different types of datasets specific to an organization. This work enables building a scalable data-driven recommender system, since the proposed methods for efficient actionable data mining were developed within this methodology, implemented, and tested on industrial data from customer surveys in a B2B environment. This is an important milestone, as building the knowledge base of an intelligent system is often the most time-consuming task, especially when working with big data.

Author Contributions

Conceptualization, Z.W.R.; Methodology, Z.W.R.; Software, K.A.T. and A.B.; Investigation, Z.W.R.; Resources, Z.W.R.; Writing—original draft, K.A.T.; Writing—review & editing, A.B. and Z.W.R.; Supervision, Z.W.R.; Project administration, Z.W.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data are confidential and the property of The Daniel Group.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. The Daniel Group. Using Customer Feedback to Improve Customer Experience. Available online: http://info.thedanielgroup.com/using-customer-feedback-improve-experience (accessed on 20 November 2022).

2. Hausman, A.; Noori, B.; Hossein, S.M. A decision-support system for business-to-business marketing. J. Bus. Ind. Mark. 2005, 20, 226–236.

3. Hosseini, S. A decision support system based on machined learned Bayesian network for predicting successful direct sales marketing. J. Manag. Anal. 2021, 8, 295–315.

4. Bobadilla, J.; Ortega, F.; Hernando, A.; Gutierrez, A. Recommender systems survey. Knowl. Based Syst. 2013, 46, 109–132.

5. Guo, L.; Liang, J.; Zhu, Y.; Luo, Y.; Sun, L.; Zheng, X. Collaborative filtering recommendation based on trust and emotion. J. Intell. Inf. Syst. 2019, 53, 113–135.

6. Mesas, R.M.; Bellogin, A. Exploiting recommendation confidence in decision-aware recommender systems. J. Intell. Inf. Syst. 2020, 54, 45–78.

7. Ricci, F.; Rokach, L.; Shapira, B. Recommender Systems Handbook, 2nd ed.; Springer: Boston, MA, USA, 2015.

8. Shokeen, J.; Rana, C. Social recommender systems: Techniques, domains, metrics, datasets and future scope. J. Intell. Inf. Syst. 2020, 54, 633–667.

9. Dara, S.; Chowdary, C.R.; Kumar, C. A survey on group recommender systems. J. Intell. Inf. Syst. 2020, 54, 271–295.

10. Li, L.; Chen, L.; Dong, R. CAESAR: Context-aware explanation based on supervised attention for service recommendations. J. Intell. Inf. Syst. 2021, 57, 147–170.

11. Felfernig, A.; Polat-Erdeniz, S.; Uran, C. An overview of recommender systems in the internet of things. J. Intell. Inf. Syst. 2019, 52, 285–309.

12. Stratigi, M.; Pitoura, E.; Nummenmaa, J. Sequential group recommendations based on satisfaction and disagreement scores. J. Intell. Inf. Syst. 2022, 58, 227–254.

13. Tarnowska, K.A.; Ras, Z.W.; Jastreboff, P.J. Decision Support System for Diagnosis and Treatment of Hearing Disorders—The Case of Tinnitus; Studies in Computational Intelligence; Springer: Berlin/Heidelberg, Germany, 2017.

14. Duan, L.; Street, W.N.; Xu, E. Healthcare information systems: Data mining methods in the creation of a clinical recommender system. Enterp. Inf. Syst. 2011, 5, 169–181.

15. Zhang, X.; Wang, H. Study on recommender systems for business-to-business electronic commerce. Commun. IIMA 2005, 5, 46–48.

16. Tarnowska, K.; Ras, Z.W.; Daniel, L. Recommender System for Improving Customer Loyalty; Studies in Big Data; Springer: Berlin/Heidelberg, Germany, 2020; Volume 55.

17. Ras, Z.W.; Wieczorkowska, A. Action Rules: How to Increase Profit of a Company. In Proceedings of the PKDD’00, Lyon, France, 13–16 September 2000; LNAI, No. 1910. Springer: Berlin/Heidelberg, Germany, 2000; pp. 587–592.

18. Dardzinska, A.; Ras, Z.W. Extracting Rules from Incomplete Decision Systems: System ERID. In Foundations and Novel Approaches in Data Mining; Lin, T.Y., Ohsuga, S., Liau, C.J., Hu, X., Eds.; Advances in Soft Computing; Springer: Berlin/Heidelberg, Germany, 2006; Volume 9, pp. 143–154.

19. Ras, Z.W.; Tsay, L.S. Discovering Extended Action-Rules (System DEAR). In Proceedings of the Intelligent Information Processing and Web Mining, Zakopane, Poland, 2–5 June 2003; Advances in Soft Computing. Springer: Berlin/Heidelberg, Germany, 2003; Volume 22.

20. Ras, Z.W.; Dardzinska, A. Action Rules Discovery, a New Simplified Strategy. In Proceedings of the ISMIS 2006, Foundations of Intelligent Systems, Bari, Italy, 27–29 September 2006; Lecture Notes in Computer Science. Springer: Berlin/Heidelberg, Germany, 2006; Volume 4203.

21. Ras, Z.W.; Wyrzykowska, E.; Wasyluk, H. ARAS: Action Rules Discovery Based on Agglomerative Strategy. In Proceedings of the MCD 2007, Mining Complex Data, Warsaw, Poland, 17–21 September 2007; Lecture Notes in Computer Science. Springer: Berlin/Heidelberg, Germany, 2007; Volume 4944.

22. Ras, Z.W.; Dardzinska, A.; Tsay, L.-S.; Wasyluk, H. Association Action Rules. In Proceedings of the MCD 2008, IEEE/ICDM Workshop on Mining Complex Data, Pisa, Italy, 15–19 December 2008; pp. 283–290.

23. Im, S.; Ras, Z.W. Action rule extraction from a decision table: ARED. In Foundations of Intelligent Systems, Proceedings of the ISMIS’08, Toronto, ON, Canada, 20–23 May 2008; An, A., Matwin, S., Ras, Z.W., Slezak, D., Eds.; LNCS; Springer: Berlin/Heidelberg, Germany, 2008; Volume 4994, pp. 160–168.

24. Dean, J.; Ghemawat, S. MapReduce: Simplified Data Processing on Large Clusters. In Proceedings of the 6th Conference on Symposium on Operating Systems Design and Implementation, Berkeley, CA, USA, 3 October 2004; USENIX Association: Berkeley, CA, USA, 2004; Volume 6.

25. Zaharia, M.; Chowdhury, M.; Das, T.; Dave, A.; Ma, J.; McCauley, M.; Franklin, M.J.; Shenker, S.; Stoica, I. Resilient distributed datasets: A fault-tolerant abstraction for in-memory cluster computing. In Proceedings of the 9th USENIX Conference on Networked Systems Design and Implementation, San Jose, CA, USA, 25–27 April 2012; USENIX Association: Berkeley, CA, USA, 2012.

26. Gong, L.; Yan, J.; Chen, Y.; An, J.; He, L.; Zheng, L.; Zou, Z. An IoT-based intelligent irrigation system with data fusion and a self-powered wide-area network. J. Ind. Inf. Integr. 2022, 29, 100367.

27. Ras, Z.W.; Wieczorkowska, A. Advances in Music Information Retrieval; Studies in Computational Intelligence; Springer: Berlin/Heidelberg, Germany, 2010.

28. Ras, Z.W.; Wieczorkowska, A.; Tsumoto, S. Recommender Systems for Medicine and Music; Studies in Computational Intelligence; Springer: Berlin/Heidelberg, Germany, 2021; Volume 946.

29. Ras, Z.W.; Tarnowska, K.; Kuang, J.; Daniel, L.; Fowler, D. User Friendly NPS-based Recommender System for driving Business Revenue. In Proceedings of the 2017 International Joint Conference on Rough Sets (IJCRS’17), Olsztyn, Poland, 3–7 July 2017; LNCS. Springer: Berlin/Heidelberg, Germany, 2017; Volume 10313, pp. 34–48.

30. Kuang, J.; Ras, Z.W.; Daniel, A. Hierarchical agglomerative method for improving NPS. In PReMI 2015; Kryszkiewicz, M., Bandyopadhyay, S., Rybinski, H., Pal, S.K., Eds.; LNCS; Springer: Cham, Switzerland, 2015; Volume 9124, pp. 54–64.

31. Tarnowska, K.A.; Ras, Z.W. Sentiment Analysis of Customer Data. Web Intell. J. 2019, 17, 343–363.

32. Wang, K.; Jiang, Y.; Tuzhilin, A. Mining actionable patterns by role models. In Proceedings of the 22nd International Conference on Data Engineering (ICDE’06), Atlanta, GA, USA, 3–7 April 2006; Liu, L., Reuter, A., Whang, K.-Y., Zhang, J., Eds.; IEEE Computer Society: New York, NY, USA, 2006; p. 16.

33. Larose, D.T.; Larose, C.D. Data Mining and Predictive Analytics, 2nd ed.; Wiley Publishing: Hoboken, NJ, USA, 2015.

34. Bagavathi, A.; Mummoju, P.; Tarnowska, K.; Tzacheva, A.A.; Ras, Z.W. SARGS method for distributed actionable pattern mining using spark. In Proceedings of the 4th International Workshop on Pattern Mining and Applications of Big Data (BigPMA 2017) at IEEE International Conference of Big Data (IEEE Big Data’17), Boston, MA, USA, 11–14 December 2017; pp. 4272–4281.

35. Tzacheva, A.A.; Bagavathi, A.; Ganesan, P.D. MR-Random Forest Algorithm for Distributed Action Rules Discovery. Int. J. Data Min. Knowl. Manag. Process. IJDKP 2016, 6, 15–30.

36. Rathee, S.; Kaul, M.; Kashyap, A. R-Apriori: An efficient apriori based algorithm on spark. In Proceedings of the 8th Workshop on Ph.D. Workshop in Information and Knowledge Management, Melbourne, Australia, 19 October 2015; pp. 27–34.

37. Hahsler, M.; Karpienko, R. Visualizing association rules in hierarchical groups. J. Bus. Econ. 2017, 87, 317–335.

38. Simunek, M. Academic KDD Project LISp-Miner; Springer: Berlin/Heidelberg, Germany, 2003; pp. 263–272.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).