Abstract

Haze and mist caused by air quality, weather, and other factors can reduce the clarity and contrast of images captured by cameras, which limits the applications of automatic driving, satellite remote sensing, traffic monitoring, etc. Therefore, the study of image dehazing is of great significance. Most existing unsupervised image-dehazing algorithms rely on a priori knowledge and simplified atmospheric scattering models, but the physical causes of haze in the real world are complex, resulting in inaccurate atmospheric scattering models that affect the dehazing effect. Unsupervised generative adversarial networks can be used for image-dehazing algorithm research; however, due to the information inequality between haze and haze-free images, the existing bi-directional mapping domain translation model often used in unsupervised generative adversarial networks is not suitable for image-dehazing tasks, and it also does not make good use of extracted features, which results in distortion, loss of image details, and poor retention of image features in the haze-free images. To address these problems, this paper proposes an end-to-end one-sided unsupervised image-dehazing network based on a generative adversarial network that directly learns the mapping between haze and haze-free images. The proposed feature-fusion module and multi-scale skip connection based on residual network consider the loss of feature information caused by convolution operation and the fusion of different scale features, and achieve adaptive fusion between low-level features and high-level features, to better preserve the features of the original image. Meanwhile, multiple loss functions are used to train the network, where the adversarial loss ensures that the network generates more realistic images and the contrastive loss ensures a meaningful one-sided mapping from the haze image to the haze-free image, resulting in haze-free images with good quantitative metrics and visual effects. 
The experiments demonstrate that, compared with existing dehazing algorithms, our method achieved better quantitative metrics and better visual effects on both synthetic haze image datasets and real-world haze image datasets.

1. Introduction

In hazy conditions, suspended particles in the air refract and scatter light, attenuating the light reflected from the observed target. The attenuated reflected light also mixes with the light refracted and scattered by the particles. As a result, the clarity and contrast of captured images decrease substantially, much of the detailed information about the target is lost, and the colors of the target are shifted. This makes it impossible to accurately extract the geometric and color characteristics of the target [1,2] and limits applications such as automatic driving, satellite remote sensing, and traffic monitoring [3,4]. Therefore, studying methods for removing haze from images is of great significance and practical value.

In recent years, image dehazing has become a popular research topic in academia and industry. Early dehazing algorithms were mainly based on the atmospheric scattering model [5], which can be expressed as

$$I(x) = J(x)\,t(x) + A\,(1 - t(x))$$

where $I(x)$ denotes the haze image, $J(x)$ is the corresponding haze-free image, $t(x)$ denotes the medium transmission map, and $A$ denotes the global atmospheric light. By estimating the parameters $t(x)$ and $A$, the haze-free image $J(x)$ can be recovered from the haze image. However, because the physical factors that cause haze are complex, the atmospheric scattering model cannot be established accurately enough to restore haze images in complex scenes.
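To illustrate how the atmospheric scattering model is inverted once its two parameters are known, the following minimal Python sketch synthesizes one hazy pixel and restores it. The names `I`, `J`, `t`, and `A` follow the conventional notation for this model. The clamp `t_min` is an assumption added here to keep the division stable and is not taken from the text.

```python
def add_haze(J, t, A):
    """Apply the atmospheric scattering model to one pixel: I = J*t + A*(1 - t)."""
    return [j * t + a * (1.0 - t) for j, a in zip(J, A)]


def remove_haze(I, t, A, t_min=0.1):
    """Invert the model for one pixel: J = (I - A) / max(t, t_min) + A.

    t_min is a hypothetical lower bound that keeps the division stable
    where the estimated transmission is close to zero.
    """
    t = max(t, t_min)
    return [(i - a) / t + a for i, a in zip(I, A)]


# One RGB pixel with values in [0, 1]. Here t and A are simply given;
# in practice they must be estimated from the hazy image.
clear = [0.2, 0.5, 0.8]
airlight = [0.9, 0.9, 0.9]
hazy = add_haze(clear, t=0.6, A=airlight)
restored = remove_haze(hazy, t=0.6, A=airlight)
```

The round trip is exact only because the true `t` and `A` are reused. With estimated parameters, any error in `t` is amplified by the division, which is one reason an inaccurate model degrades the result.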

With the rapid development of deep learning, convolutional neural networks (CNNs) have been widely applied in image processing [6,7,8,9,10], including image dehazing. Existing deep-learning-based dehazing algorithms follow two main approaches. In the first, deep learning is used to estimate the parameters of the atmospheric scattering model, such as transmittance and global atmospheric light [11,12,13,14], and the haze-free image is then derived from the haze image. In the second, the atmospheric scattering model is set aside entirely and dehazing is treated as an image-to-image translation task, in which the mapping between haze and haze-free images is learned to achieve end-to-end dehazing [15,16]. However, most existing CNN-based dehazing algorithms rely on supervised learning [17,18,19,20,21,22], which requires a large amount of paired data, and a lack of data degrades their performance. Meanwhile, because light intensity changes continuously, it is difficult to capture corresponding haze and haze-free images of the same scene, so paired image data are hard to obtain; research on unsupervised dehazing algorithms is therefore very meaningful. The introduction of Generative Adversarial Networks (GANs) [23] has greatly advanced unsupervised learning. In the field of unsupervised image-to-image translation, Cycle-Consistent Adversarial Networks (CycleGAN) [24] showed the potential of GANs by introducing a cycle consistency constraint, and many CycleGAN-based image-to-image translation algorithms [25,26,27] have subsequently been proposed.

However, applying CycleGAN directly to image dehazing does not give good results, for the following main reasons: (i) CycleGAN assumes a bidirectional mapping between two image domains, which implies that the two domains carry equal information. In image dehazing, the haze-free image domain carries more information than the haze image domain, so the information in the two domains is unequal; (ii) the feature extraction part of CycleGAN is poorly suited to dehazing, because for general image-to-image translation it focuses on extracting the low-frequency features of the image, while its extraction of high-frequency features is somewhat insufficient. In image dehazing, the haze-free image should contain rich high-frequency features, so enough high-frequency features must be extracted from the haze image to reconstruct it well; (iii) image-to-image translation requires the exchange of image feature information, and the encoding–residual–decoding structure without cross-layer skip connections can achieve this exchange, translating between the source domain image and the target domain image based on the extracted source domain features. Image dehazing, however, requires the recovery of image feature information, for which a structure without cross-layer skip connections is unsuitable, because the features obtained by the encoding module are not provided directly to the decoding module for recovering clear images. Therefore, cross-layer skip connections are needed to pass the features of different scales obtained by the encoding module directly to the decoding module, in order to avoid distortion, loss of image details, and poor retention of image features in the haze-free images.

To solve the above problems, this paper builds on CycleGAN in two respects, network structure and loss function, and proposes CL-GAN, which retains the low-frequency features of the original image and better recovers the high-frequency features of the haze-free image, thus improving the quality of the haze-free image.

The main contributions of this paper are as follows:

- We propose CL-GAN based on CycleGAN, which achieves one-sided image translation by introducing contrastive learning [28,29,30,31], to address the adverse effects of information inequality between haze and haze-free images in the image-dehazing task in order to achieve a visually better image-dehazing algorithm;

- We innovatively propose the feature-fusion module (FFM) based on multi-scale skip connection (MSC) to achieve the fusion between different-scale features, which enables the continuous fusion of the features of different scales extracted by the feature-extraction module when they are passed in the decoding module, thus effectively improving the utilization of feature information;

- Compared with existing methods, the method proposed in this paper achieves better image-dehazing results in both synthetic and real-world haze image datasets.

2. Related Work

This paper focuses on image-dehazing research based on generative adversarial networks and provides an overview of image dehazing and generative adversarial networks in this section.

2.1. Image Dehazing

Existing image-dehazing algorithms can be divided into three categories: image-enhancement-based dehazing algorithms, physical model-based dehazing algorithms, and deep-learning-based dehazing algorithms. Although these three types of algorithms have achieved certain results, they still each have certain limitations, resulting in poor dehazing results.

Image enhancement-based algorithms achieve dehazing by enhancing the contrast and saturation of the image, using techniques such as histogram equalization [32], Retinex [33], and wavelet transformation [34]. These methods are simple in principle and low in computational complexity; however, because they do not consider the physical factors behind haze degradation, they easily lead to problems such as excessive enhancement of local areas, color distortion, and halo artifacts in the haze-free image.

Physical model-based dehazing algorithms are mainly based on the atmospheric scattering model; they derive mapping relationships from the observation and summary of a large number of haze and haze-free images, and then invert the formation process of haze images to restore clear images. The atmospheric scattering model was developed from atmospheric scattering theory [5]; the model was formally proposed by McCartney and then further summarized by Narasimhan et al. [5,35]. The model considers incident light attenuation and scattering medium effects as the main factors degrading image quality; therefore, only the atmospheric light coefficient and transmittance need to be estimated to calculate the clear image. He et al. [36] proposed the Dark Channel Prior (DCP) theory based on a large number of statistical studies of haze-free images. According to this theory, the parameters of the atmospheric scattering model can be estimated to obtain a haze-free image. This method is simple, effective, and widely used, but it is not effective in high-brightness regions, such as the sky. Zhu et al. [37] proposed the Color Attenuation Prior (CAP) based on statistical experiments, establishing a model relating luminance, saturation, and depth, whose parameters are determined by supervised learning to recover the haze-free image. Berman et al. [38] proposed non-local dehazing based on statistical experiments, where the RGB values in a haze-free image are grouped into distinct clusters; under the influence of haze, these clusters become “haze lines” with atmospheric light as the origin, and the transmittance is estimated from the distance between an image point and the origin along its haze line. Although physical model-based image-dehazing algorithms take into account the physical factors that affect the dehazing effect, the physical model is inaccurate because the physical causes of haze are complex, which degrades the dehazing effect.

Deep-learning-based dehazing algorithms learn the mapping between haze and haze-free images from the training data by building a neural network model, thus overcoming the difficulty of physically modeling haze and obtaining better results. Two main types of deep-learning-based dehazing algorithms are widely used. The first estimates the parameters of the atmospheric scattering model with a neural network and then uses the estimated parameters to achieve image dehazing. Cai et al. [13] introduced convolutional neural networks to the image-dehazing task; they proposed the DehazeNet model to learn the mapping between the haze image and transmittance, and then estimate the parameters in the atmospheric scattering model in order to obtain the haze-free image. This method takes the haze image as input, outputs the transmittance estimation map, and then recovers the haze-free image through the atmospheric scattering model, while using a new nonlinear activation function to improve the quality of the recovered haze-free image. On this basis, Zhao et al. [39] proposed the Deep Fully Convolutional Regression Network (DFCRN) to estimate the transmittance in atmospheric scattering models, using the ReLU activation function and batch normalization layers to accelerate network convergence and prevent overfitting. Li et al. [14] proposed a lightweight end-to-end convolutional neural network, AOD-Net, which combines the transmittance and atmospheric light parameters of the atmospheric scattering model into a single parameter estimated by the network; this effectively reduces the reconstruction error of the atmospheric scattering model and improves the quality of the haze-free image. Zhang et al. [40] proposed a Densely Connected Pyramid Dehazing Network (DCPDN) based on generative adversarial networks to estimate the transmittance parameters of the atmospheric scattering model using a densely connected module with a multi-layer pyramid structure, and then derived the recovered haze-free images. Deng et al. [41] proposed a multi-model fusion image-dehazing network, which generates multi-modal attention features from different layers of convolutional features and incorporates them into a fusion model combined with the atmospheric scattering model to derive haze-free images, effectively improving the overall performance of image dehazing.

The second type uses neural network models, such as convolutional neural networks and generative adversarial networks, to directly learn the mapping between the haze image and the haze-free image. Li et al. [42] proposed an image-dehazing network based on a conditional generative adversarial network (cGAN) that generates recovered haze-free images directly from the input haze images without using an atmospheric scattering model, by introducing a VGG feature extraction module and an L1 regularized gradient prior. Ren et al. [43] proposed a Gated Fusion Network (GFN) that first derives white balance, contrast enhancement, and gamma correction feature maps from the haze image, then obtains the confidence coefficient of each feature map by training the network, and finally recovers the haze-free image by fusing the most important feature information among them. Engin et al. [15] proposed Cycle-Dehaze based on cyclic generative adversarial networks; by combining cycle consistency loss and perceptual loss to train the network, they obtained visually better haze-free images. Chen et al. [44] proposed an end-to-end Gated Context Aggregation Network (GCANet), which uses a gated sub-network to fuse image features from different levels and solves the artifact problem in dehazing tasks by using smoothed dilated convolution instead of standard dilated convolution, effectively improving the dehazing effect. Qin et al. [45] proposed an end-to-end Feature Fusion Attention Network (FFA-Net), which contains a feature attention module that extends the feature representation capability of deep neural networks by combining the channel attention mechanism and the pixel attention mechanism, making it more flexible in processing different types of information and thus improving the visual quality of recovered haze-free images. Jiang et al. [46] proposed an end-to-end trainable, densely connected residual spatial and channel attention network based on the conditional generative adversarial framework, which directly restores a haze-free image from an input hazy image and addresses color distortion, artifacts, and insufficient haze removal. Zhao et al. [47] proposed a generative adversarial network based on a deep symmetric Encoder–Decoder architecture for removing dense haze, addressing the uneven distribution of dense haze across images and the heavy reliance of deep convolutional dehazing networks on large-scale datasets. Gao et al. [48] proposed an image-dehazing model built with a convolutional neural network and a transformer, called Transformer for Image Dehazing (TID), to effectively improve the quality of the restored image.

2.2. Generative Adversarial Network

The core idea of GAN is a two-player zero-sum game between a generator G and a discriminator D, which are trained adversarially to achieve the best generation effect. The generator receives random noise and outputs a generated image, while the discriminator receives the generated image and the real image and judges the authenticity of each input. During training, the generator tries to produce images that are as similar as possible to those of the target image domain in order to deceive the discriminator, while the discriminator tries to distinguish real images from generated images as well as possible; this is the adversarial learning between them. Optimization ends when the discriminator can no longer distinguish real images from generated images. The loss function of GAN is

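$$\min_{G}\max_{D} V(D, G) = \mathbb{E}_{x \sim p_{data}(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_{z}(z)}\left[\log\left(1 - D(G(z))\right)\right]$$

where $p_{data}(x)$ denotes the data distribution of the haze-free images and $p_{z}(z)$ denotes the prior distribution of the input noise.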
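As a small numerical illustration of the standard GAN objective, the sketch below estimates the value function from lists of discriminator outputs. The function name `gan_value` and the sample values are hypothetical. The outputs are assumed to be probabilities strictly between 0 and 1.

```python
import math


def gan_value(d_real, d_fake):
    """Monte Carlo estimate of V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))].

    d_real: discriminator outputs D(x) on real samples, each in (0, 1).
    d_fake: discriminator outputs D(G(z)) on generated samples, each in (0, 1).
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


# A discriminator that separates the two sets well yields a value near 0.
confident = gan_value(d_real=[0.99, 0.98], d_fake=[0.01, 0.02])
# A discriminator that outputs 0.5 everywhere yields -log(4).
confused = gan_value(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])
```

The second case corresponds to the end point of training described above, where the discriminator can no longer tell real images from generated ones.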

Theoretically, generative adversarial networks are capable of fitting the target image domain distribution, but GANs are prone to the mode collapse problem during training, leading to unstable training processes and suboptimal generated images. Many studies are addressing this problem; for example, Arjovsky et al. [49] proposed WGAN to stabilize the training process by introducing the Wasserstein distance while providing a metric that can indicate the training process. Miyato et al. [50] proposed SNGAN to stabilize the training process by introducing spectral normalization without increasing the computational complexity. Mao et al. [51] proposed LSGAN to stabilize the training process by introducing a least-squares loss function while improving the quality of the generated image. These improvements are widely used in image-generation tasks and are also heavily used in image-restoration tasks.

3. Proposed Method

In this section, we present the detailed structure of the image-dehazing framework, feature fusion module, and cross-layer feature connection based on contrastive learning. Section 3.1 presents the overall structure of the approach in this paper, Section 3.2 and Section 3.3 detail the generator, feature fusion module, and multi-scale skip connection based on the residual network, Section 3.4 provides the structure of the discriminator, and Section 3.5 presents the loss functions used to train the network, including adversarial loss and contrastive loss.

3.1. Overview of the Proposed Method

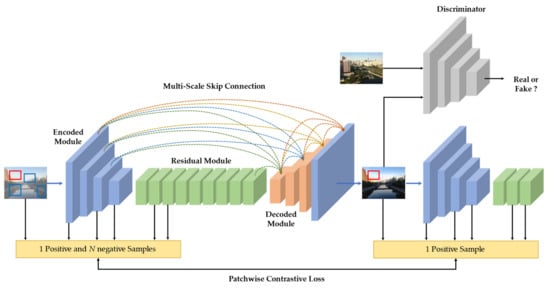

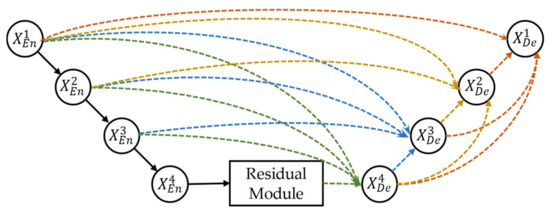

Contrastive learning has achieved great success in the field of unsupervised learning. In this paper, we learn the mapping function between haze and haze-free images by combining GAN and contrastive learning. As shown in Figure 1, the proposed network consists of a generator and a discriminator, where the generator consists of three parts: an encoding module, a residual module, and a decoding module. The generator uses the proposed feature fusion module based on the multi-scale skip connection and aims to restore the haze image to a clear, haze-free image, while the discriminator uses the widely used PatchGAN [52] and aims to distinguish the generated haze-free image from real haze-free images. Given a haze image, the encoding module first encodes it into a low-resolution feature map; the residual module then extracts more complex and deeper features from this feature map while removing the haze; finally, the decoding module outputs the restored image. The residual module is essential because it not only extracts deeper features and improves feature representation, but also stabilizes the training process by mitigating vanishing and exploding gradients.

Figure 1.

Framework structure of the proposed method. The red boxed area in the bottom-right image indicates the query sample, while the red boxed area in the bottom-left image indicates the positive sample, and the remaining blue boxes indicate negative samples.

3.2. Architecture of the Generator

The primary goal of the generator is to remove the haze from haze images while preserving as many detailed features of the input as possible, restoring the input haze image to a clear, haze-free image. Previous work [53] has shown that the residual connection offers two advantages: (i) it alleviates the vanishing gradient problem as the network becomes deeper and (ii) it facilitates the flow of feature information, which improves the representational capability of the network and speeds up its convergence. Therefore, this paper designs a generator using the residual connection and deepens both the encoding module and the decoding module of the generator, so that features in haze images are extracted better and the recovered haze-free images have clearer features and better image quality.

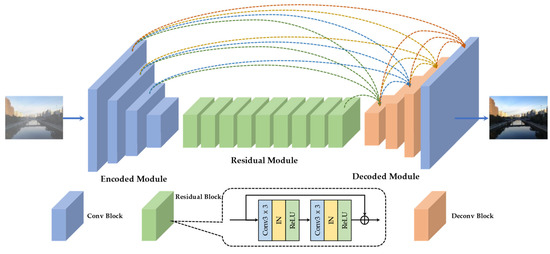

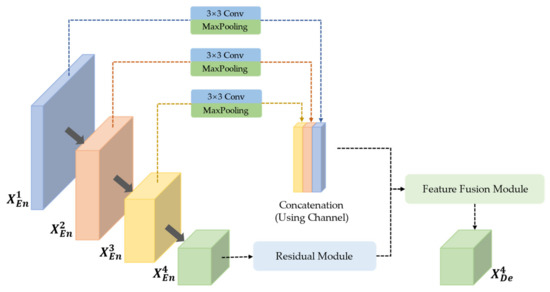

As shown in Figure 2, the input of the generator is a haze image, which is sequentially passed through the encoding module, the residual module, and the decoding module to obtain a clear and haze-free image. The encoding module consists of four convolution units, and each convolution unit consists of a convolutional layer, an Instance Normalization (IN) layer, and a ReLU activation function layer, in which the convolutional layer is a 3 × 3 convolutional layer with a step size of 2. The residual module consists of nine residual blocks, and each residual block includes a 3 × 3 convolutional layer with a step size of 1, an Instance Normalization layer, and a ReLU activation function layer. The decoding module consists of three deconvolution units and one convolution unit. Each deconvolution unit consists of a 3 × 3 deconvolution layer with a step size of 2, an instance normalization layer, and a ReLU activation function layer. The encoding module performs feature extraction on the input haze image, the residual module converts the extracted features into features that match the data distribution of the haze-free image, and the decoding module expands the converted features and inverts them to obtain the haze-free image.

Figure 2.

The specific structure of the generator.

The specific network structure of the generator is shown in Table 1. The nomenclature in Table 1 is as follows: ReflectionPad denotes reflection padding, Conv denotes the convolutional layer, N denotes the number of channels of the output, K denotes the size of the convolutional kernel, S denotes the size of the step, P denotes zero padding, IN denotes the instance normalization layer, ReLU and Tanh are activation functions, and Deconv denotes the deconvolution layer. For the generator network, we used Instance Normalization in all network layers except for the last layer, as well as reflection padding to avoid artifacts; in the last layer, we used the Tanh activation function to constrain the values of the output tensor to a range of pixel values.

Table 1.

Generator network architecture.

3.3. Feature Fusion Module and Multi-Scale Skip Connection Based on Residual Network

CycleGAN is usually used for image-to-image translation tasks [26,27], where the focus is on extracting the low-frequency features of the image, while the extraction of high-frequency features is somewhat insufficient. For image-dehazing tasks, however, the goal is a sufficiently detailed reproduction of the haze-free image; hence, enough high-frequency features must be extracted from the haze image to reconstruct it well. To make better use of the features extracted by the encoding module of the generator, this paper proposes a feature fusion module and a multi-scale skip connection based on the residual network. Together, they compensate for the feature information lost through convolution operations and fuse the features extracted at different scales, which addresses the distortion, loss of details, and poor retention of image features in the generated haze-free images and thereby improves image quality.

3.3.1. Feature Fusion Module

Feature fusion between different scales is usually achieved by simple operations, such as summation or concatenation. However, these operations apply only fixed linear combinations, and it is not known whether such a combination suits a specific target, so they do not fuse features of different scales adequately. Therefore, this paper proposes a feature fusion module that fuses features of different scales and resolves the inconsistency in semantics and scale between them.

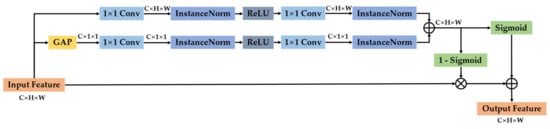

Firstly, the channel attention module is introduced in detail. The usual channel attention module uses global average pooling to map the feature map of each channel to a single value. However, this operation is not applicable for recovery tasks with high image detail requirements, and it tends to ignore the detailed features of images. Therefore, this paper combines image features with channel attention, such that the channel attention module focuses on global features, as well as local detail features of the image. As shown in Figure 3, the local context is combined with the global context inside the channel attention module. In the local context branch, it successively goes through 1 × 1 convolution, instance normalization, the ReLU activation function, 1 × 1 convolution, and instance normalization. In the global context branch, it successively goes through a global average pooling, then 1 × 1 convolution, instance normalization, the ReLU activation function, 1 × 1 convolution, and instance normalization. It is worth noting that 1 × 1 convolution preserves the size of the input features, which can preserve and highlight the fine details in the lower-level features. The tensor obtained from the local context branch and the global context branch is summed and then passed through a Sigmoid function to obtain a tensor with values in the range [0, 1], and this tensor is combined with the input tensor passed through a (1-Sigmoid) function to obtain the final output. The formulae are shown below

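$$L(X) = \mathrm{IN}(\mathrm{Conv}(\mathrm{ReLU}(\mathrm{IN}(\mathrm{Conv}(X)))))$$

$$G(X) = \mathrm{IN}(\mathrm{Conv}(\mathrm{ReLU}(\mathrm{IN}(\mathrm{Conv}(\mathrm{GAP}(X))))))$$

$$X' = X \otimes \mathrm{Sigmoid}(L(X) + G(X))$$

where $X$ is the input feature, $L(X)$ and $G(X)$ are the output features of the local context branch and global context branch, respectively, $X'$ denotes the output of the whole channel attention module, Conv denotes 1 × 1 convolution, IN denotes instance normalization, ReLU and Sigmoid denote activation functions, GAP denotes Global Average Pooling, and $\otimes$ denotes the element-wise product.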

Figure 3.

Channel attention module.

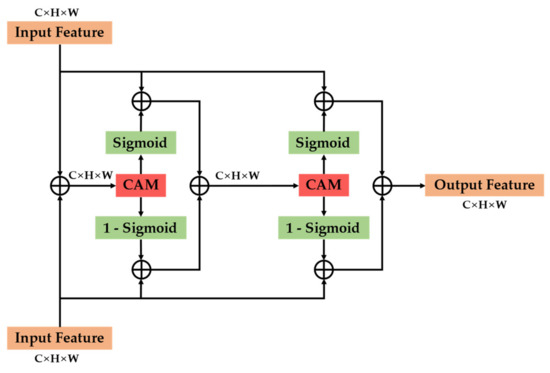

Based on the channel attention module described above, the feature fusion module is shown in Figure 4. The two input features are first summed and passed through the channel attention module, which yields the features after importance selection. One input feature is then multiplied by the result of the Sigmoid function and the other by the result of the (1-Sigmoid) function, so that the proportion of the two input features in the fused features is adjusted adaptively. To fuse features of different semantics and different scales more deeply, the fused output is passed through the same process again.
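The weighting scheme can be sketched in a few lines of Python. This is a simplified illustration on flat feature vectors, not the network implementation. The `attention` argument is a hypothetical stand-in for the channel attention module and is assumed to return raw scores to which the Sigmoid is then applied. The number of `rounds` is also an assumption.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def fuse(x, y, attention, rounds=2):
    """Adaptively blend two equally sized feature vectors.

    attention: stand-in for the channel attention module; it maps a feature
    vector to one raw (pre-sigmoid) score per element. How the real module
    is wired is an assumption of this sketch.
    rounds: how many times the weights are re-estimated from the fused result.
    """
    fused = [a + b for a, b in zip(x, y)]  # initial integration: element-wise sum
    for _ in range(rounds):
        weights = [sigmoid(s) for s in attention(fused)]
        # The Sigmoid weight scales x; the (1 - Sigmoid) weight scales y.
        fused = [w * a + (1.0 - w) * b for w, a, b in zip(weights, x, y)]
    return fused


# Toy attention functions, for illustration only.
def neutral(feats):
    return [0.0 for _ in feats]   # sigmoid(0) = 0.5, equal weighting


def favor_x(feats):
    return [10.0 for _ in feats]  # sigmoid(10) is close to 1, favors x


low_level = [1.0, 2.0, 3.0]
high_level = [3.0, 2.0, 1.0]
balanced = fuse(low_level, high_level, neutral)
mostly_low = fuse(low_level, high_level, favor_x)
```

Because the two weights always sum to one, the fused feature is a convex combination of the inputs at every position, which is what distinguishes this scheme from a fixed sum or concatenation.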

Figure 4.

The proposed feature fusion module.

3.3.2. Multi-Scale Skip Connection Based on Residual Network

Skip connection usually connects each layer in the down-sampling module to the corresponding network layer in the up-sampling module, but such skip connection does not make use of the feature information at different scales. Many studies have shown that feature maps at different scales yield different information, with low-level feature maps capturing rich spatial information and highlighting the edge details of the target, while high-level feature maps capture positional information and locate the position of the target. However, as they pass through the up-sampling and down-sampling modules in turn, this information is diluted and faded. Inspired by the UNet3+ [54] network, this paper proposes a multi-scale skip connection structure based on a residual network, which uses the residual network to supplement the loss of feature information caused by convolution operation, thus enhancing the feature representation, and uses multi-scale skip connection to make full use of multi-scale features, combining low-level and high-level feature maps at different scales, in order to reduce the semantic differences between encoding and decoding modules; based on this, we can effectively achieve the transfer of feature information and make full use of multi-scale features.

Figure 5 gives an overview of the multi-scale skip connection based on a residual network. Each layer of the decoding module receives small-scale and same-scale feature maps from the encoding module and large-scale feature maps from the decoding module, so that low-level detail features and high-level semantic features are captured at multiple scales.

Figure 5.

The proposed multi-scale skip connection based on the residual network, showing four features of different scales in the encoding module and four features of different scales in the decoding module. Features with the same superscript number are features of the same scale in the encoding and decoding modules.

Figure 6 illustrates how the feature map of the fourth layer in the decoding module is constructed. For a given layer in the decoding module, three groups of feature maps are concatenated along the channel dimension while redundant information is reduced: the feature maps from the same scale of the encoding module, the feature maps from the smaller scale of the encoding module after a max pooling operation, and the feature maps from the larger scale of the decoding module after a bilinear interpolation operation. The concatenated result and the output of the previous layer are then fed together into the feature fusion module to obtain the final fused feature map.
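The resampling needed before concatenation can be made concrete with a small planning helper. The sketch below only decides which operation aligns each feature map to the resolution of the decoder layer; it does not perform the pooling or interpolation. The function names and the example sizes are hypothetical, and sizes are assumed to differ by powers of two.

```python
def align_op(source_size, target_size):
    """Return the resampling step that brings a feature map to target_size.

    Sizes are spatial side lengths in pixels and are assumed to differ by a
    power-of-two factor, as produced by stride-2 layers.
    """
    if source_size == target_size:
        return ("identity", 1)
    if source_size > target_size:
        # Higher-resolution encoder features are reduced by max pooling.
        return ("max_pool", source_size // target_size)
    # Lower-resolution decoder features are enlarged by bilinear interpolation.
    return ("bilinear_upsample", target_size // source_size)


def plan_decoder_inputs(encoder_sizes, deeper_decoder_sizes, target_size):
    """List the operation applied to every map before channel-wise concatenation."""
    plan = [("encoder", s) + align_op(s, target_size) for s in encoder_sizes]
    plan += [("decoder", s) + align_op(s, target_size) for s in deeper_decoder_sizes]
    return plan


# Hypothetical sizes: a decoder layer working at 64 x 64 receives encoder maps
# at 128 and 64 pixels and one deeper decoder map at 32 pixels.
plan = plan_decoder_inputs(encoder_sizes=[128, 64],
                           deeper_decoder_sizes=[32],
                           target_size=64)
```

After these steps every map has the same spatial size, so concatenation along the channel dimension is well defined.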

Figure 6.

Illustration of how to construct a feature map of the fourth decoder layer.

3.4. Architecture of the Discriminator

The goal of the discriminator is to determine whether the input image is a real image or a fake image generated by the generator, thus guiding the generator to generate a more realistic image. In our approach, PatchGAN [52] was used as a discriminator, which could reduce the model parameters and better determine the authenticity of the input image. Unlike a traditional discriminator, the output of PatchGAN is a 30 × 30 tensor, where each element represents a 70 × 70 image patch in the original image, which helps to preserve texture information and remove artifacts from the image, and the final output is the average authenticity of all image patches, which determines the truth or falsity of the whole image.
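The 70 × 70 patch size and the 30 × 30 output can be checked with simple arithmetic. The sketch below assumes the commonly used PatchGAN layout of five 4 × 4 convolutions with strides 2, 2, 2, 1, 1 and padding 1, applied to a 256 × 256 input. This layout and the input size are assumptions, not values stated in the text.

```python
def receptive_field(layers):
    """Receptive field of one output element for a stack of (kernel, stride) convs."""
    rf = 1
    for kernel, stride in reversed(layers):
        rf = (rf - 1) * stride + kernel
    return rf


def output_size(size, layers, padding=1):
    """Spatial size after the stack, using floor((n + 2p - k) / s) + 1 per layer."""
    for kernel, stride in layers:
        size = (size + 2 * padding - kernel) // stride + 1
    return size


# Assumed layout: five 4 x 4 convolutions with strides 2, 2, 2, 1, 1 and padding 1.
PATCHGAN_LAYERS = [(4, 2), (4, 2), (4, 2), (4, 1), (4, 1)]

rf = receptive_field(PATCHGAN_LAYERS)    # 70
out = output_size(256, PATCHGAN_LAYERS)  # 30
```

Each of the 30 × 30 output elements therefore judges one 70 × 70 region of the input, and neighboring regions overlap.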

The detailed structure of the discriminator is shown in Table 2. For the discriminator, the Leaky-ReLU activation function with a slope of 0.2 is used for all network layers, except for the last layer, and we used instance normalization for all network layers, except for the first and last layers.

Table 2.

Discriminator network architecture.
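The 30 × 30 output and the 70 × 70 patch size quoted above can be checked with a short calculation. The sketch below assumes the layer layout commonly used for the 70 × 70 PatchGAN (five 4 × 4 convolutions with padding 1, the first three with stride 2); this layout is an assumption and may differ from Table 2. The helper names are hypothetical.

```python
# Assumed (kernel, stride, padding) per convolution; not taken from Table 2.
PATCHGAN_LAYERS = [(4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 1, 1), (4, 1, 1)]


def output_size(size, layers):
    """Spatial size of the output map for a square input of the given size."""
    for kernel, stride, padding in layers:
        size = (size + 2 * padding - kernel) // stride + 1
    return size


def receptive_field(layers):
    """Side length of the input patch seen by one output element."""
    field, jump = 1, 1
    for kernel, stride, _ in layers:
        field += (kernel - 1) * jump
        jump *= stride
    return field


def image_score(patch_scores):
    """Average the per-patch scores into a single score for the whole image."""
    flat = [score for row in patch_scores for score in row]
    return sum(flat) / len(flat)


print(output_size(256, PATCHGAN_LAYERS), receptive_field(PATCHGAN_LAYERS))
```

Under these assumptions, a 256 × 256 input yields a 30 × 30 score map whose elements each cover a 70 × 70 region, which matches the figures given in the text.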

3.5. Loss Function

A GAN uses the adversarial loss to ensure that the distribution of the generated images matches the distribution of the target domain images. To achieve image-to-image translation, the cycle consistency loss in CycleGAN is usually needed to ensure a meaningful translation. However, CycleGAN assumes a bi-directional mapping between the source and target domain images. This assumption is too stringent for image-dehazing tasks, because the haze-free image domain contains more information than the haze image domain, so there is information inequality between the two domains; one-sided translation is therefore more suitable for image dehazing. To achieve one-sided image translation, additional regularization terms need to be introduced to ensure a meaningful translation. This paper achieves one-sided image domain translation by introducing a contrastive loss.

3.5.1. Adversarial Loss

The adversarial loss used in the standard GAN leads to an unstable training process, whereas LSGAN introduces a least-squares loss function that stabilizes training and improves the quality of the generated images. Therefore, in this study, we used the least-squares adversarial loss instead of the standard adversarial loss. The least-squares adversarial loss can be expressed as

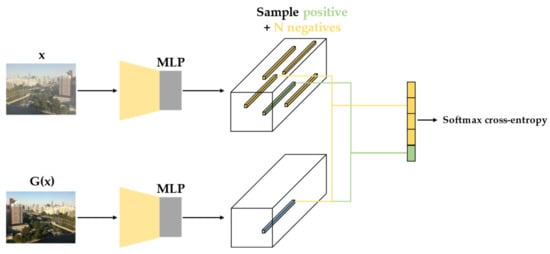
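As a rough illustration of the least-squares objective, the sketch below uses the common LSGAN convention of target label 1 for real images and 0 for generated images. The labels, the absence of a 1/2 scaling factor, and the function names are assumptions and may not match the paper's equation exactly.

```python
def mean(values):
    return sum(values) / len(values)


def lsgan_discriminator_loss(real_scores, fake_scores, real_label=1.0, fake_label=0.0):
    """Push discriminator scores toward real_label on real images and fake_label on fakes."""
    real_term = mean([(s - real_label) ** 2 for s in real_scores])
    fake_term = mean([(s - fake_label) ** 2 for s in fake_scores])
    return real_term + fake_term


def lsgan_generator_loss(fake_scores, real_label=1.0):
    """Push discriminator scores on generated images toward real_label."""
    return mean([(s - real_label) ** 2 for s in fake_scores])


# Toy discriminator outputs (for PatchGAN these would be the per-patch scores).
print(lsgan_discriminator_loss([0.9, 0.8], [0.2, 0.1]))
print(lsgan_generator_loss([0.2, 0.1]))
```

Because the penalty grows quadratically with the distance from the target label, samples that are far from the decision boundary still produce useful gradients, which is the usual explanation for the improved training stability.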

3.5.2. Patch-Wise Contrastive Loss

The main idea of contrastive loss is to pull positive samples closer together while pushing negative samples further apart. An image patch in the generated image is called a query sample; the corresponding image patch in the original image forms a positive pair with it, while the other image patches in the original image form negative pairs with it. Specifically, we picked a query sample $v$, found its corresponding patch in the original image as the positive sample $v^{+}$, and then found $N$ negative samples $v^{-}$, all mapped to $K$-dimensional vectors, where $v \in \mathbb{R}^{K}$, $v^{+} \in \mathbb{R}^{K}$, and $v^{-} \in \mathbb{R}^{N \times K}$. We then built an $(N+1)$-way classification problem and used the cross-entropy loss to compute the probability of selecting the positive sample over the negative samples:

$$\ell(v, v^{+}, v^{-}) = -\log\left[\frac{\exp(v \cdot v^{+}/\tau)}{\exp(v \cdot v^{+}/\tau) + \sum_{n=1}^{N}\exp(v \cdot v_{n}^{-}/\tau)}\right]$$

where $\tau$ is the temperature coefficient that adjusts the distance between the query sample and the other samples, with a default value of 0.07, and $v_{n}^{-}$ denotes the $n$-th negative sample.
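The (N + 1)-way cross-entropy described above can be written out in a few lines. The following is a minimal sketch, assuming feature vectors are plain Python lists and similarity is the dot product; the function names are hypothetical, and the log-sum-exp form is used only for numerical stability.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def patch_contrastive_loss(query, positive, negatives, tau=0.07):
    """Cross-entropy of picking the positive among 1 positive and N negatives."""
    logits = [dot(query, positive) / tau]
    logits += [dot(query, negative) / tau for negative in negatives]
    largest = max(logits)
    log_sum = largest + math.log(sum(math.exp(z - largest) for z in logits))
    return log_sum - logits[0]  # equals -log softmax(logits)[0]


# A query that matches its positive and is orthogonal to the negative gives a tiny loss.
print(patch_contrastive_loss([1.0, 0.0], [1.0, 0.0], [[0.0, 1.0]]))
```

A small temperature such as 0.07 sharpens the softmax, so even moderate differences in similarity between the positive and the negatives translate into large differences in the loss.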

We used the encoding module of the generator and a two-layer MLP to extract features from the input image, where an element in the output feature map of each layer represents an image patch in the input image, and the deeper layer represents the larger image patch. We selected feature maps from L of these network layers, inputted them into the two-layer MLP, and obtained different-scale feature samples.

We aimed to make each image region of the input image match the corresponding image region of the generated image, which means that each image patch in the generated image should reflect the content of the corresponding image patch in the input image, as shown in Figure 7. At the same time, we also used the contrastive loss for the haze-free image to prevent the generator from making unnecessary changes.

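$$\mathcal{L}_{\mathrm{con}}(X) = \mathbb{E}_{x \sim X}\sum_{l=1}^{L}\sum_{s=1}^{S_{l}} \ell\left(\hat{z}_{l}^{s}, z_{l}^{s}, z_{l}^{S \setminus s}\right)$$

where $l$ represents the selected $l$-th layer, $s$ represents one of the $S_{l}$ image patches in the $l$-th layer, $\hat{z}_{l}^{s}$ represents the query sample, and $z_{l}^{s}$ and $z_{l}^{S \setminus s}$ represent the positive sample and the negative samples, respectively. Here, $\ell$ denotes the $(N+1)$-way cross-entropy loss of a query sample against its positive and negative samples, and $X$ denotes the haze image domain; the same loss computed on the haze-free image domain $Y$ is written $\mathcal{L}_{\mathrm{con}}(Y)$.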

Figure 7.

Patch-wise contrastive loss.

3.5.3. Total Loss

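We combined the adversarial loss and the contrastive loss using weight coefficients to obtain the total loss function:

$$\mathcal{L}_{\mathrm{total}} = \lambda_{\mathrm{adv}}\mathcal{L}_{\mathrm{adv}} + \lambda_{X}\mathcal{L}_{\mathrm{con}}(X) + \lambda_{Y}\mathcal{L}_{\mathrm{con}}(Y)$$

where $\mathcal{L}_{\mathrm{adv}}$ is the adversarial loss, $\mathcal{L}_{\mathrm{con}}$ is the contrastive loss, computed on the haze images $X$ and on the haze-free images $Y$, $\lambda_{\mathrm{adv}}$ is the weight coefficient of the adversarial loss, and $\lambda_{X}$ and $\lambda_{Y}$ are the weight coefficients of the contrastive loss.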

4. Experiments and Results

In this section, we describe the experiments on synthetic and real datasets to evaluate the effectiveness of the proposed method, as well as ablation experiments to verify the effectiveness of the proposed feature fusion module and multi-scale skip connection based on a residual network. We also compare the proposed method with several state-of-the-art dehazing algorithms.

4.1. Dataset

For comparison with other models, the benchmark dataset RESIDE [55] was used for the experiments. It consists of four subsets: two training sets, i.e., the indoor training set (ITS) and the outdoor training set (OTS), and two testing sets, i.e., the synthetic objective testing set (SOTS) and the hybrid subjective testing set (HSTS). Our experiments drew the training data from OTS, which consists of 8447 clear images and 296,695 haze images; 1500 clear images and haze images were randomly selected from it as the training set. SOTS, which consists of 500 indoor images and 500 outdoor images, was used as the test set, and the synthetic images in HSTS were used as a supplementary test set. To verify the dehazing effect of the proposed model in real scenes, the real images from HSTS were used as the real haze image test set.

4.2. Implementation Details

The algorithm was implemented in the PyTorch framework on the Ubuntu 18.04 operating system, with an NVIDIA GeForce RTX 2080 Ti graphics card. During training, the input image size was fixed at 256 × 256, and the network was optimized using the Adam optimizer. The initial learning rate was 0.0002 and the total number of iterations was 200; the learning rate was held constant for the first 100 iterations and decreased linearly to 0 over the last 100 iterations. One weight coefficient in the loss function was kept at its default value of 10 from CycleGAN, and experiments showed that the best results were obtained when the other two weight coefficients were set to 1 and 0.5, respectively. The parameter settings of the other dehazing algorithms were kept the same as those in the original papers.
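The learning-rate schedule described above (constant, then linear decay to zero) can be sketched as a simple function of the iteration index. The exact endpoints of the decay are an assumption, and the function name is hypothetical.

```python
def learning_rate(iteration, base_lr=0.0002, constant_iters=100, decay_iters=100):
    """Constant rate for the first phase, then linear decay to 0 over the second phase."""
    if iteration < constant_iters:
        return base_lr
    remaining = constant_iters + decay_iters - iteration
    return base_lr * max(remaining, 0) / decay_iters


for step in (0, 99, 100, 150, 200):
    print(step, learning_rate(step))
```

In PyTorch, a function of this shape would typically be expressed as a multiplicative factor and passed to a lambda-based scheduler, but the plain function is enough to show the intended behavior.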

4.3. Quantitative and Qualitative Results on the Synthetic Images

The proposed model was evaluated both subjectively and objectively. The peak signal-to-noise ratio (PSNR) and structural similarity (SSIM) were used for the objective evaluation of the dehazing effect [56]. A larger PSNR value indicates a better dehazing effect, while the SSIM value lies in the range [0, 1], with values closer to 1 indicating a better dehazing effect. Given a clear image $x$ and the corresponding dehazed image $y$, PSNR is computed as shown in Equation (12), where MSE denotes the mean squared error between $x$ and $y$, and $n$ denotes the number of bits per pixel.

The SSIM metric measures the similarity of images in terms of brightness, contrast, and structure. It is calculated as shown in Equation (13), where $\mu_x$ and $\mu_y$ are the mean values of images $x$ and $y$, respectively, $\sigma_x$ and $\sigma_y$ are the standard deviations of images $x$ and $y$, respectively, and $\sigma_{xy}$ is the covariance of images $x$ and $y$.

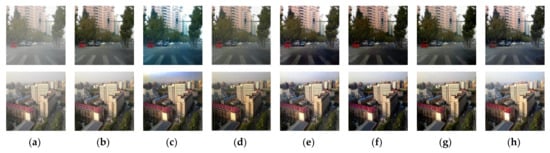

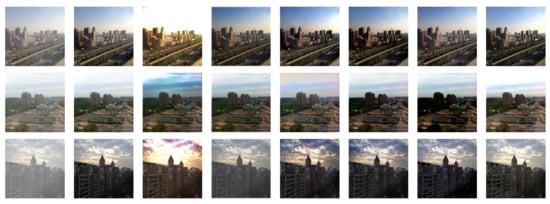
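Both metrics can be illustrated on toy data. The sketch below is a simplification under stated assumptions: images are flat lists of pixel intensities, SSIM is computed once over the whole image rather than over local windows, and the stabilizing constants use the commonly chosen values k1 = 0.01 and k2 = 0.03, which are not stated in the text. The function names are hypothetical.

```python
import math


def psnr(reference, restored, bits=8):
    """Peak signal-to-noise ratio for pixel values with the given bit depth."""
    mse = sum((a - b) ** 2 for a, b in zip(reference, restored)) / len(reference)
    if mse == 0:
        return math.inf
    peak = 2 ** bits - 1
    return 10 * math.log10(peak ** 2 / mse)


def global_ssim(x, y, bits=8, k1=0.01, k2=0.03):
    """Single-window SSIM from means, variances, and covariance of two images."""
    n = len(x)
    peak = 2 ** bits - 1
    c1, c2 = (k1 * peak) ** 2, (k2 * peak) ** 2  # assumed stabilizing constants
    mu_x, mu_y = sum(x) / n, sum(y) / n
    var_x = sum((a - mu_x) * (a - mu_x) for a in x) / n
    var_y = sum((b - mu_y) * (b - mu_y) for b in y) / n
    cov = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


clear = [100, 100, 120, 140]
dehazed = [110, 90, 120, 140]
print(psnr(clear, dehazed), global_ssim(clear, dehazed))
```

Published SSIM figures are normally obtained with a sliding-window implementation averaged over the image, so values from this single-window version are only indicative.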

To verify the effectiveness of the proposed algorithm, we compared it with classical traditional image-dehazing algorithms and deep-learning-based image-dehazing algorithms, including DCP [36], AOD-Net [14], GCANet [44], EPDN [57], and YOLY [58]. As can be seen from Table 3, the PSNR and SSIM metrics obtained by the proposed method on the SOTS test set were higher than those of the other algorithms. Compared with GCANet, the PSNR and SSIM metrics were improved by 2.54 and 0.048, respectively.

Figure 8 shows some of the dehazed images obtained by the proposed algorithm and the other algorithms on the SOTS test set. The dehazed images obtained by the DCP algorithm had very serious color distortion and an overall brighter color, which shows that DCP is not suitable for dehazing high-brightness areas. The dehazed images obtained by the AOD-Net algorithm still had a small amount of residual haze, which led to a slight decrease in the overall brightness of the image. The GCANet algorithm removed the haze well but damaged image details. The EPDN algorithm left some details of the dehazed image unclear. The YOLY algorithm produced dehazed images with partial color distortion. The proposed algorithm showed a better dehazing effect without color distortion and better preserved the color and texture of the image, so that the color and details of the dehazed image were closer to those of the real image and the visual effect was better. It therefore improved the dehazing effect in both qualitative and quantitative terms. However, as can be seen from the fourth column of Table 3, this improvement came with a larger number of model parameters than the other methods, and hence a larger amount of computation.

Table 3.

Quantitative results on the SOTS.

Figure 8.

Visual results on the SOTS dataset: (a) hazy images; (b) corresponding haze-free images; (c) DCP; (d) AOD-Net; (e) GCANet; (f) EPDN; (g) YOLY; (h) proposed method.

As can be seen from Table 4, the PSNR and SSIM metrics obtained by the proposed method on the HSTS test set were higher than those of the other algorithms. Compared with GCANet, PSNR and SSIM were improved by 2.69 and 0.038, respectively. Figure 9 shows some of the dehazed images obtained by the proposed algorithm and the other algorithms on the HSTS test set. As shown in Figure 9, the DCP algorithm led to serious color distortion in the dehazed image. The AOD-Net algorithm did not completely remove the haze, and the overall brightness of its dehazed image was low. GCANet led to a lack of image details, EPDN did not recover the image details well, and YOLY produced partial color distortion. The results obtained by the proposed algorithm had good visual effects, showed no color distortion, and retained the detailed features of the haze images well.

Table 4.

Quantitative results on the HSTS.

Figure 9.

Visual results on the HSTS dataset: (a) hazy images; (b) corresponding haze-free images; (c) DCP; (d) AOD-Net; (e) GCANet; (f) EPDN; (g) YOLY; (h) proposed method.

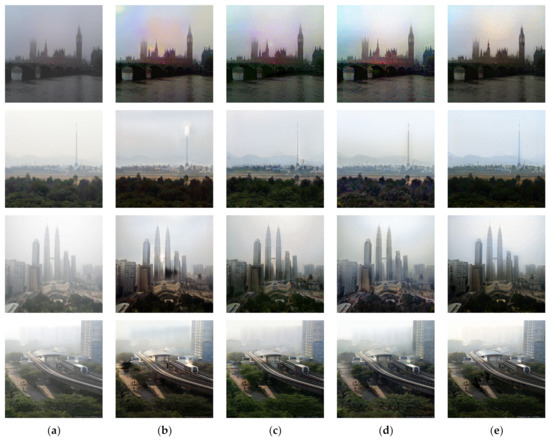

4.4. Qualitative Results on the Real-World Images

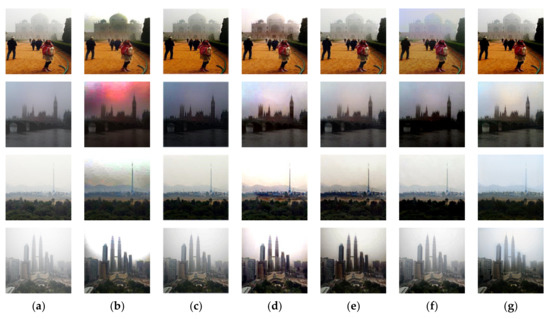

To further verify the effectiveness of the proposed algorithm, we applied it and the other algorithms to real haze images. As shown in Figure 10, the DCP algorithm produced serious color distortion, for example in the sky area of the second image in Figure 10b. The AOD-Net algorithm not only removed haze incompletely but also changed the brightness of the image; for example, the second image in Figure 10c is very dark overall. The GCANet algorithm could remove haze, but it tended to remove haze unevenly and to produce uneven color blocks; for example, the haze over the distant woods in the third image in Figure 10d was not removed. The dehazed images obtained by the EPDN algorithm had unclear details; for example, some areas of the fourth image in Figure 10e are blurred. The YOLY algorithm caused color distortion in the dehazed image, as in the sixth image in Figure 10f. The proposed algorithm achieved a better dehazing effect than the other algorithms, both removing haze more thoroughly and better recovering the detailed features of the original image. For the eighth image, none of the other methods could recover the detailed features of the original image well, whereas the proposed algorithm could, which is an improvement over the other algorithms.

Figure 10.

Visual results on real-world images: (a) hazy images; (b) DCP; (c) AOD-Net; (d) GCANet; (e) EPDN; (f) YOLY; (g) proposed method.

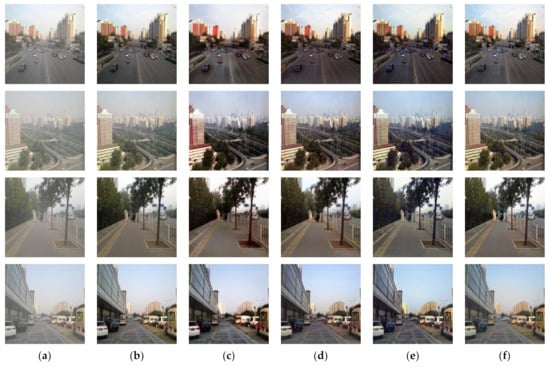

4.5. Ablation Study

To further investigate the effectiveness of the proposed method, we conducted ablation experiments. Table 5 and Table 6 show the quantitative results on the SOTS test set and the HSTS test set, respectively, and Figure 11 and Figure 12 show the corresponding visual results. As can be seen in Figure 11 and Figure 12, combining the feature fusion module with the multi-scale skip connection based on the residual network produced clear, haze-free images with better visual effects. The multi-scale skip connection based on the residual network feeds features from different scales in the encoding module to different layers in the decoding module, providing the decoding module with richer features of the original image. The feature fusion module combines the rich features extracted by the encoding module with the features in the decoding module, so that the haze-free image retains the original image features as far as possible. Together, they address the problems of color distortion and missing image detail features, yielding clear, haze-free images with good quantitative results and visual effects.

Table 5.

Quantitative results on the SOTS. FFM and MSC denote the feature fusion module and the multi-scale skip connection based on the residual network, respectively. √ indicates the presence of a structure and × indicates its absence.

Table 6.

Quantitative results on the HSTS.

Figure 11.

Visual results on the SOTS dataset: (a) hazy images; (b) corresponding haze-free images; (c) no FFM and MSC; (d) FFM; (e) MSC; (f) FFM + MSC.

Figure 12.

Visual results on the HSTS dataset: (a) hazy images; (b) corresponding haze-free images; (c) no FFM and MSC; (d) FFM; (e) MSC; (f) FFM + MSC.

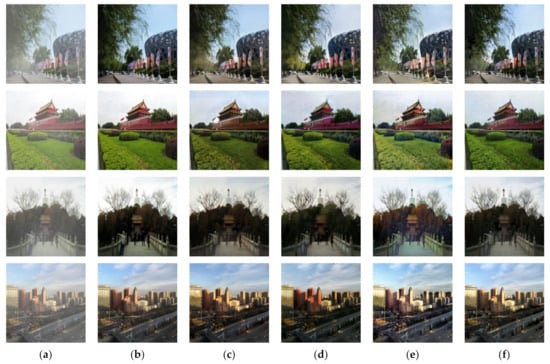

Figure 13 shows the visualization results on the real-world haze image dataset. As can be seen from the first panel of Figure 13e, combining the feature fusion module and the multi-scale skip connection based on the residual network could effectively solve the color distortion problem. From the second panel of Figure 13e, we can see that the combination of the feature fusion module and multi-scale skip connection based on the residual network could effectively solve the problem of image detail feature loss. Thus, it can be seen that the proposed structure in this paper not only performed well on synthetic datasets, but it also achieved good visualization results on real-world datasets.

Figure 13.

Visual results on real-world images: (a) hazy images; (b) no FFM and MSC; (c) FFM; (d) MSC; (e) FFM + MSC.

5. Conclusions

Inspired by contrastive learning methods, an end-to-end image-dehazing model was proposed in this paper. Specifically, a one-sided image-dehazing model was proposed that uses a contrastive loss to ensure a meaningful one-sided mapping from haze images to haze-free images, together with a feature fusion module and a multi-scale skip connection based on the residual network. The feature fusion module considers the connection between features of different scales and adaptively fuses them. The multi-scale skip connection based on the residual network addresses two issues: it uses the residual network to compensate for the feature information lost in convolution operations, and it connects features across layers to combine the texture detail information represented by low-level features with the semantic information represented by high-level features, thereby better preserving the detailed features of the original image. The quantitative and visual results on both synthetic and real-world haze image datasets showed that the proposed method achieved better dehazing results and better visual quality of the haze-free images than existing dehazing algorithms.

Compared with other dehazing algorithms, the unsupervised dehazing algorithm proposed in this paper has a relatively large number of parameters, which puts it at a disadvantage in engineering applications and indicates that the number of parameters needs to be reduced. Therefore, in future work, we will study pruning methods for this algorithm to reduce its computational complexity without degrading its performance and to further enhance its value in engineering applications.

Author Contributions

Conceptualization, Y.Y. and Z.T.; methodology, Y.Y.; software, Y.Y.; validation, Y.Y.; formal analysis, Y.Y. and B.Z.; investigation, Y.Y., B.Z., X.S. and Y.Z.; writing—original draft preparation, Y.Y.; writing—review and editing, Y.Y., Q.L. and Z.T.; project administration, Q.L.; funding acquisition, Z.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Key Program Project of Science and Technology Innovation of the Chinese Academy of Sciences (No. KGFZD-135-20-03-02) and the Strategic Priority Research Program of the Chinese Academy of Sciences (Grant No. XDA28050401).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this paper:

| Abbreviation | Definition |
| --- | --- |
| CNN | Convolutional Neural Network |
| GAN | Generative Adversarial Network |
| CycleGAN | Cycle-Consistent Adversarial Network |
| FFM | Feature Fusion Module |
| MSC | Multi-scale Skip Connection Structure Based on Residual Network |
| DCP | Dark Channel Prior |
| CAP | Color Attenuation Prior |
| cGAN | Conditional Generative Adversarial Network |
| WGAN | Wasserstein GAN |
| SNGAN | Spectral Normalization GAN |
| LSGAN | Least Squares GAN |
| IN | Instance Normalization |
| ReLU | Rectified Linear Unit |
| CAM | Channel Attention Module |
| MLP | Multilayer Perceptron |
| PSNR | Peak Signal-to-Noise Ratio |
| SSIM | Structural Similarity |

References

1. Chen, B.-H.; Huang, S.-C.; Li, C.-Y.; Kuo, S.-Y. Haze Removal Using Radial Basis Function Networks for Visibility Restoration Applications. IEEE Trans. Neural Netw. Learn. Syst. 2017, 29, 3828–3838.

2. Qian, R.; Tan, R.T.; Yang, W.; Su, J.; Liu, J. Attentive Generative Adversarial Network for Raindrop Removal from a Single Image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2482–2491.

3. Wang, G.; Luo, C.; Xiong, Z.; Zeng, W. Spm-Tracker: Series-Parallel Matching for Real-Time Visual Object Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 3643–3652.

4. Zhao, Z.-Q.; Zheng, P.; Xu, S.; Wu, X. Object Detection with Deep Learning: A Review. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3212–3232.

5. McCartney, E.J. Optics of the Atmosphere: Scattering by Molecules and Particles. Phys. Bull. 1977, 30, 76.

6. Tang, H.; Li, Y.; Huang, Z.; Zhang, L.; Xie, W. Fusion of Multidimensional CNN and Handcrafted Features for Small-Sample Hyperspectral Image Classification. Remote Sens. 2022, 14, 3796.

7. Xia, Y.; Xu, X.; Pu, F. PCBA-Net: Pyramidal Convolutional Block Attention Network for Synthetic Aperture Radar Image Change Detection. Remote Sens. 2022, 14, 5762.

8. Yang, W.; Gao, H.; Jiang, Y.; Zhang, X. A Novel Approach to Maritime Image Dehazing Based on a Large Kernel Encoder–Decoder Network with Multihead Pyramids. Electronics 2022, 11, 3351.

9. Guo, C.; Chen, X.; Chen, Y.; Yu, C. Multi-Stage Attentive Network for Motion Deblurring via Binary Cross-Entropy Loss. Entropy 2022, 24, 1414.

10. ter Burg, K.; Kaya, H. Comparing Approaches for Explaining DNN-Based Facial Expression Classifications. Algorithms 2022, 15, 367.

11. Tang, K.; Yang, J.; Wang, J. Investigating Haze-Relevant Features in a Learning Framework for Image Dehazing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 2995–3000.

12. Ren, W.; Liu, S.; Zhang, H.; Pan, J.; Cao, X.; Yang, M.-H. Single Image Dehazing via Multi-Scale Convolutional Neural Networks. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 154–169.

13. Cai, B.; Xu, X.; Jia, K.; Qing, C.; Tao, D. Dehazenet: An End-to-End System for Single Image Haze Removal. IEEE Trans. Image Process. 2016, 25, 5187–5198.

14. Li, B.; Peng, X.; Wang, Z.; Xu, J.; Feng, D. An All-in-One Network for Dehazing and Beyond. arXiv 2017, arXiv:1707.06543.

15. Engin, D.; Genç, A.; Kemal Ekenel, H. Cycle-Dehaze: Enhanced Cyclegan for Single Image Dehazing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 825–833.

16. Dong, H.; Pan, J.; Xiang, L.; Hu, Z.; Zhang, X.; Wang, F.; Yang, M.-H. Multi-Scale Boosted Dehazing Network with Dense Feature Fusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 2157–2167.

17. Miao, Y.; Zhao, X.; Kan, J. An End-to-End Single Image Dehazing Network Based on U-Net. Signal Image Video Process. 2022, 16, 1739–1746.

18. Zhang, S.; Zhang, J.; He, F.; Hou, N. DRDDN: Dense Residual and Dilated Dehazing Network. Vis. Comput. 2022, 1–17.

19. Yi, Q.; Li, J.; Fang, F.; Jiang, A.; Zhang, G. Efficient and Accurate Multi-Scale Topological Network for Single Image Dehazing. IEEE Trans. Multimed. 2021, 24, 3114–3128.

20. Han, W.; Zhu, H.; Qi, C.; Li, J.; Zhang, D. High-Resolution Representations Network for Single Image Dehazing. Sensors 2022, 22, 2257.

21. Jiao, Q.; Liu, M.; Ning, B.; Zhao, F.; Dong, L.; Kong, L.; Hui, M.; Zhao, Y. Image Dehazing Based on Local and Non-Local Features. Fractal Fract. 2022, 6, 262.

22. Wang, J.; Ding, C.; Wu, M.; Liu, Y.; Chen, G. Lightweight Multiple Scale-Patch Dehazing Network for Real-World Hazy Image. KSII Trans. Internet Inf. Syst. 2021, 15, 4420–4438.

23. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Networks. Commun. ACM 2020, 63, 139–144.

24. Zhu, J.-Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks. In Proceedings of the International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

25. Yang, S.; Jiang, L.; Liu, Z.; Loy, C.C. Unsupervised Image-to-Image Translation with Generative Prior. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 18332–18341.

26. Chen, R.; Huang, W.; Huang, B.; Sun, F.; Fang, B. Reusing Discriminators for Encoding: Towards Unsupervised Image-to-Image Translation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 8168–8177.

27. Huang, X.; Liu, M.-Y.; Belongie, S.; Kautz, J. Multimodal Unsupervised Image-to-Image Translation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 172–189.

28. Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A Simple Framework for Contrastive Learning of Visual Representations. In Proceedings of the International Conference on Machine Learning, PMLR, Vienna, Austria, 13–18 July 2020; pp. 1597–1607.

29. He, K.; Fan, H.; Wu, Y.; Xie, S.; Girshick, R. Momentum Contrast for Unsupervised Visual Representation Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 July 2020; pp. 9729–9738.

30. Chen, X.; Fan, H.; Girshick, R.; He, K. Improved Baselines with Momentum Contrastive Learning. arXiv 2020, arXiv:2003.04297.

31. Park, T.; Efros, A.A.; Zhang, R.; Zhu, J.-Y. Contrastive Learning for Unpaired Image-to-Image Translation. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 319–345.

32. Xu, Z.; Liu, X.; Chen, X. Fog Removal from Video Sequences Using Contrast Limited Adaptive Histogram Equalization. In Proceedings of the 2009 International Conference on Computational Intelligence and Software Engineering, Washington, DC, USA, 11–14 December 2009; pp. 1–4.

33. Land, E.H. The Retinex Theory of Color Vision. Sci. Am. 1977, 237, 108–129.

34. Khan, H.; Sharif, M.; Bibi, N.; Usman, M.; Haider, S.A.; Zainab, S.; Shah, J.H.; Bashir, Y.; Muhammad, N. Localization of Radiance Transformation for Image Dehazing in Wavelet Domain. Neurocomputing 2020, 381, 141–151.

35. Nayar, S.K.; Narasimhan, S.G. Vision in Bad Weather. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Corfu, Greece, 20–27 September 1999; Volume 2, pp. 820–827.

36. He, K.; Sun, J.; Tang, X. Single Image Haze Removal Using Dark Channel Prior. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 2341–2353.

37. Zhu, Q.; Mai, J.; Shao, L. A Fast Single Image Haze Removal Algorithm Using Color Attenuation Prior. IEEE Trans. Image Process. 2015, 24, 3522–3533.

38. Berman, D.; Avidan, S. Non-Local Image Dehazing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 1674–1682.

39. Zhao, X.; Wang, K.; Li, Y.; Li, J. Deep Fully Convolutional Regression Networks for Single Image Haze Removal. In Proceedings of the 2017 IEEE Visual Communications and Image Processing (VCIP), St. Petersburg, FL, USA, 10–13 December 2017; pp. 1–4.

40. Zhang, H.; Patel, V.M. Densely Connected Pyramid Dehazing Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 3194–3203.

41. Deng, Z.; Zhu, L.; Hu, X.; Fu, C.-W.; Xu, X.; Zhang, Q.; Qin, J.; Heng, P.-A. Deep Multi-Model Fusion for Single-Image Dehazing. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 2453–2462.

42. Li, R.; Pan, J.; Li, Z.; Tang, J. Single Image Dehazing via Conditional Generative Adversarial Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8202–8211.

43. Ren, W.; Ma, L.; Zhang, J.; Pan, J.; Cao, X.; Liu, W.; Yang, M.-H. Gated Fusion Network for Single Image Dehazing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 3253–3261.

44. Chen, D.; He, M.; Fan, Q.; Liao, J.; Zhang, L.; Hou, D.; Yuan, L.; Hua, G. Gated Context Aggregation Network for Image Dehazing and Deraining. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa Village, HI, USA, 7–11 January 2019; pp. 1375–1383.

45. Qin, X.; Wang, Z.; Bai, Y.; Xie, X.; Jia, H. FFA-Net: Feature Fusion Attention Network for Single Image Dehazing. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11908–11915.

46. Jiang, X.; Zhao, C.; Zhu, M.; Hao, Z.; Gao, W. Residual Spatial and Channel Attention Networks for Single Image Dehazing. Sensors 2021, 21, 7922.

47. Zhao, W.; Zhao, Y.; Feng, L.; Tang, J. Attention Optimized Deep Generative Adversarial Network for Removing Uneven Dense Haze. Symmetry 2021, 14, 1.

48. Gao, G.; Cao, J.; Bao, C.; Hao, Q.; Ma, A.; Li, G. A Novel Transformer-Based Attention Network for Image Dehazing. Sensors 2022, 22, 3428.

49. Arjovsky, M.; Chintala, S.; Bottou, L. Wasserstein Generative Adversarial Networks. In Proceedings of the International Conference on Machine Learning, PMLR, Sydney, Australia, 6–11 August 2017; pp. 214–223.

50. Miyato, T.; Kataoka, T.; Koyama, M.; Yoshida, Y. Spectral Normalization for Generative Adversarial Networks. arXiv 2018, arXiv:1802.05957.

51. Mao, X.; Li, Q.; Xie, H.; Lau, R.Y.; Wang, Z.; Paul Smolley, S. Least Squares Generative Adversarial Networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2794–2802.

52. Isola, P.; Zhu, J.-Y.; Zhou, T.; Efros, A.A. Image-to-Image Translation with Conditional Adversarial Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134.

53. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

54. Huang, H.; Lin, L.; Tong, R.; Hu, H.; Zhang, Q.; Iwamoto, Y.; Han, X.; Chen, Y.-W.; Wu, J. Unet 3+: A Full-Scale Connected Unet for Medical Image Segmentation. In Proceedings of the ICASSP 2020–2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 1055–1059.

55. Li, B.; Ren, W.; Fu, D.; Tao, D.; Feng, D.; Zeng, W.; Wang, Z. Benchmarking Single-Image Dehazing and Beyond. IEEE Trans. Image Process. 2018, 28, 492–505.

56. Hore, A.; Ziou, D. Image Quality Metrics: PSNR vs. SSIM. In Proceedings of the 2010 20th International Conference on Pattern Recognition, Istanbul, Turkey, 23–26 August 2010; pp. 2366–2369.

57. Qu, Y.; Chen, Y.; Huang, J.; Xie, Y. Enhanced Pix2pix Dehazing Network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 8160–8168.

58. Li, B.; Gou, Y.; Gu, S.; Liu, J.Z.; Zhou, J.T.; Peng, X. You Only Look Yourself: Unsupervised and Untrained Single Image Dehazing Neural Network. Int. J. Comput. Vis. 2021, 129, 1754–1767.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).