Abstract

Moth-flame optimization is a typical meta-heuristic algorithm, but it suffers from low optimization accuracy and a high risk of falling into local optima. Therefore, this paper proposes an enhanced moth-flame optimization algorithm named HMCMMFO, which combines the mechanisms of hybrid mutation and chemotaxis motion. The hybrid-mutation mechanism enhances population diversity and reduces the risk of stagnation, while the chemotaxis-motion strategy makes better use of the local-search space to explore more potential solutions and thus improves the optimization accuracy of the algorithm. The effectiveness of these strategies is verified from several perspectives on the IEEE CEC2017 functions, including an analysis of the balance and diversity of the improved algorithm and a comparison of its optimization performance with advanced algorithms. The experimental results show that the improved moth-flame optimization algorithm can jump out of the local-optimal space and improve optimization accuracy. Moreover, the algorithm achieves good results on five engineering-design problems, which demonstrates its ability to deal with constrained problems effectively.

1. Introduction

Optimization problems are classified into many groups, such as single-objective, multi-objective, and many-objective models [1,2], and each category requires a different mathematical model for the optimization core [3]. In recent years, meta-heuristic algorithms have become an effective class of methods for solving real-world problems due to their excellent performance in dealing with high-dimensional optimization problems [4], such as resource allocation [5,6], task-deployment optimization [7], and supply-chain planning [8]. Numerous algorithms combine simple principles with high optimization performance, such as particle swarm optimizer (PSO) [9,10], differential evolution (DE) [11], Equilibrium Optimizer (IEO) [12], Runge Kutta optimizer (RUN) [13], hunger games search (HGS) [14], slime mould algorithm (SMA) [15], Harris hawks optimization (HHO) [16], colony predation algorithm (CPA) [17], the weighted mean of vectors (INFO) [18], and seagull optimization algorithm (SOA) [19]. Owing to this performance, they have achieved very positive results in many fields, such as image segmentation [20,21], complex optimization problems [22], feature selection [23,24], resource allocation [25], medical diagnosis [26,27], bankruptcy prediction [28,29], gate-resource allocation [30,31], fault diagnosis [32], airport-taxiway planning [33], train scheduling [34], multi-objective problems [35,36], scheduling problems [37,38,39], and solar-cell-parameter identification [40]. Although these algorithms are good at obtaining the optimal solution, in practical applications they often become trapped in deceptive (local) optima. Therefore, researchers have aimed to further enhance the ability of the algorithms to jump out of the local optimal space.

Moth-flame optimization (MFO) [41] is a metaheuristic algorithm proposed by Mirjalili in 2015 and inspired by the flight characteristics of moths in nature. It quickly gained favor among researchers because it has few parameters and is easy to implement, and within a few years it appeared frequently in practical applications. For example, Yang et al. [42] developed a deep neural network to predict gas production from urban solid waste; MFO was used to optimize the deep-neural-network model, which effectively improved its prediction accuracy and helped to reduce environmental pollution.

In order to find the optimal-economic dispatch of the hybrid-energy system, Wang et al. [43] constructed an operation model based on the moth-flame algorithm to optimize the schedule of each unit, effectively reducing the system’s operating cost. Singh et al. [44] used the MFO algorithm to reasonably arrange the placement of multiple optical-network units in WiFi and simplify the network design. After using this algorithm, it was proved that the layout method could promote the reduction of deployment costs and improve network performance. Said et al. [45] proposed a segmentation method based on the MFO algorithm to solve the difficult segmentation problem of the liver image caused by factors such as liver volume in computer-medical-image segmentation. The structural-similarity index verified the method’s effectiveness, and the segmentation method’s accuracy was as high as 95.66%.

Yamany et al. [46] applied MFO to train a multilayer perceptron by searching for its biases and weights, which reduced the error and improved the classification rate. Hassanien et al. [47] used an improved MFO for feature selection in a study on the automatic detection of tomato diseases, exploiting its exploration ability to significantly improve the classification accuracy of the support-vector-machine evaluation. Allam et al. [48] chose MFO to estimate the parameters of a three-diode model so that accurate parameters could be found quickly in experiments, achieving the minimum root-mean-square error and average bias error. Singh et al. [49] proposed a moth-flame-optimization-guided method to solve the data-clustering problem and verified the method’s effectiveness using Shape and UCI benchmark datasets. The cases above show that MFO has been widely used in practical problems and has achieved good results in different fields.

As problem scale rises, the simple original MFO algorithm can no longer cope with complex high-dimensional and multimodal problems; thus, a large number of improved MFO variants have been proposed. Kaur et al. [50] adopted the Cauchy-distribution function to enhance the algorithm’s exploration ability and also improved its exploitation ability. Experiments showed that the improved algorithm achieved better convergence speed and solution quality. Wang et al. [26] added chaotic-population initialization and chaotic-disturbance mechanisms to further balance the algorithm’s exploration and exploitation performance and enhance its ability to jump out of the local optimum. Yu et al. [51] proposed an enhanced MFO that added a simulated-annealing strategy to enhance local exploitation and introduced the idea of a quantum-revolving gate to enhance global exploration. Ma et al. [52] added an inertia weight to balance the algorithm’s performance and introduced a small-probability mutation in the position-update stage to enhance the ability of the algorithm to escape from the local optimal space.

Pelusi et al. [53] defined a hybrid phase in the MFO algorithm to balance exploration and exploitation, and applied a dynamic-crossover mechanism to the flames to enhance population diversity. Li et al. [54] proposed a novel variant of MFO whose main idea was to apply differential evolution and opposition-learning strategies to the generation of flames. An improved shuffled-frog-leaping algorithm was used as the local-search algorithm, and individuals with poor fitness were eliminated through a death mechanism. Li et al. [55] proposed a dual-evolutionary-learning MFO. Its two optimization strategies, differential-evolutionary flame generation and dynamic flame guidance, aimed to generate high-quality flames that guide the moths toward the optimal direction.

Li et al. [56] initialized the moth population by chaotic mapping, processed the cross-border individuals, and adjusted the distance parameters to improve the performance of MFO. The statistical results showed that chaotic mapping promotes the algorithm’s performance. Xu et al. [57] added a cultural-learning mechanism to MFO and performed a Gaussian mutation of the optimal flame, which increased the population diversity and enhanced the search ability of the algorithm. In order to overcome the problem of premature convergence of MFO, Sapre et al. [58] used opposition learning, Cauchy mutation and evolutionary-boundary-constraint-processing technology. Experiments showed that the enhanced MFO improved both in convergence and escape from local stagnation. Although many scholars have proposed optimization strategies for MFO from different perspectives, it still needs to be strengthened in terms of jumping out of the local optimal space and optimization accuracy.

To overcome the tendency of the traditional MFO to fall into local optima and its poor optimization accuracy, this paper proposes a novel MFO variant called HMCMMFO. To reduce the vulnerability of MFO to deceptive optima, a hybrid-mutation strategy is proposed that assigns dynamic weights to Cauchy and Gaussian mutation, which are applied to the individuals adaptively as the optimization process advances. At the same time, to improve the optimization accuracy of the algorithm, a chemotaxis-motion mechanism is proposed to better mine the solutions in the local space and improve the search efficiency. Based on the classical IEEE CEC2017 functions, experiments including mechanism combination, balance-and-diversity analysis, and comparison with novel and advanced algorithms were conducted to verify the effectiveness and superiority of HMCMMFO, which was then applied to engineering problems to demonstrate its practical value. The main contributions of this study are as follows.

- (1) A hybrid-mutation strategy is proposed to enhance the exploration ability of the algorithm and its ability to jump out of the local optimum.

- (2) A chemotaxis-motion mechanism is proposed to guide the population toward the optimal solution and improve the algorithm’s local-exploitation capability.

- (3) HMCMMFO obtains high-quality solutions on the CEC 2017 test functions.

- (4) HMCMMFO is applied to five typical engineering cases and solves the constrained problems effectively.

The next part of the paper is structured into five sections, where Section 2 provides an overview of the MFO algorithm, Section 3 introduces two optimization strategies, Section 4 discusses the comparative experimental results, Section 5 presents applications of HMCMMFO to engineering problems, and Section 6 summarizes the full paper and introduces future work.

2. The Principle of MFO

MFO is a population-based stochastic metaheuristic search algorithm inspired by the transverse orientation that moths use for navigation [41]. A moth flies while keeping a fixed angle to a light source. When the light source is a nearby flame, the moth must continuously adjust its heading to maintain that angle as the distance to the flame decreases, so its flight trajectory becomes a spiral around the flame. The mathematical model of this flight characteristic is introduced as follows.

In the position matrix X of Equation (1), n represents the number of moths, d denotes the problem dimension, and each row of X is the position of one of the n moths flying in the d-dimensional space.

Equation (2) is the fitness-value matrix of the moth population: the fitness function is applied to the moth in each row of X, and the resulting value is stored in the corresponding row of the fitness matrix.

Each moth in the population needs a flame to correspond to it, so the matrix representing the flames and matrix X should be of the same size. The flame position matrix P and its fitness matrix FP are given by Equations (3) and (4).
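Written out with the symbols of Algorithm 1 (X, FX, P, FP), the four matrices of Equations (1) to (4) take the following form. This is a sketch of the usual MFO layout [41]; the element names $x_{i,j}$ and $p_{i,j}$ and the name $f$ for the fitness function are notational assumptions.

$$
X = \begin{bmatrix} x_{1,1} & \cdots & x_{1,d} \\ \vdots & \ddots & \vdots \\ x_{n,1} & \cdots & x_{n,d} \end{bmatrix}, \qquad
FX = \begin{bmatrix} f(X_1) \\ \vdots \\ f(X_n) \end{bmatrix}
$$

$$
P = \begin{bmatrix} p_{1,1} & \cdots & p_{1,d} \\ \vdots & \ddots & \vdots \\ p_{n,1} & \cdots & p_{n,d} \end{bmatrix}, \qquad
FP = \begin{bmatrix} f(P_1) \\ \vdots \\ f(P_n) \end{bmatrix}
$$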

The spiral flight of a moth toward the flame is modeled next. Since the position update of a moth is determined by the position of its flame, it is expressed by Equations (5) and (6).

where X_i denotes the i-th moth, P_j represents the j-th flame, and S is the path function of moths flying towards the flame. In Equation (6), D_i is the distance between the j-th flame and the i-th moth, b is the logarithmic-spiral-shape constant, and t is a random number in the range of [−1,1]. After each update, the positions of moths and flames are merged and sorted by fitness, and the positions with the best fitness are kept as the flames of the next generation.
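For reference, the spiral update of the standard MFO formulation [41] can be written as below. The symbols $X_i$, $P_j$, and $D_i$ follow the matrices X and P defined above and are assumptions about the notation, not a verbatim copy of Equations (5) and (6).

$$
X_i = S(X_i, P_j)
$$

$$
S(X_i, P_j) = D_i \cdot e^{bt} \cdot \cos(2\pi t) + P_j, \qquad D_i = \lvert P_j - X_i \rvert
$$

With $t$ close to $-1$ the factor $e^{bt}$ shrinks the distance term and the moth lands near the flame, whereas $t$ close to $1$ moves it farther along the spiral.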

Meanwhile, the number of flames is adaptively reduced to balance the global-exploration and local-exploitation ability of the algorithm, as shown in Equation (7).

where l is the number of flames, it denotes the current iteration, and Maxit denotes the maximum number of iterations. As the number of flames decreases, the moths whose flames have been removed update their positions with respect to the last remaining flame (the l-th one).
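The two update rules described above can be sketched in a few lines of Python. This is a minimal illustration, assuming the flame-count rule of the standard MFO, l = round(n − it · (n − 1) / Maxit), and the logarithmic spiral with distance |flame − moth|; the function names `flame_count` and `spiral_update` are hypothetical.

```python
import math


def flame_count(it, max_it, n):
    """Number of flames kept at iteration `it` (assumed linear decrease from n to 1)."""
    return round(n - it * (n - 1) / max_it)


def spiral_update(moth, flame, b=1.0, t=0.0):
    """Move one moth along a logarithmic spiral around one flame, dimension by dimension."""
    return [
        abs(f - m) * math.exp(b * t) * math.cos(2 * math.pi * t) + f
        for m, f in zip(moth, flame)
    ]
```

At the last iteration `flame_count` returns 1, so every moth spirals around the single best flame, which is what shifts the search from exploration to exploitation.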

The pseudocode of the traditional MFO is shown in Algorithm 1.

| Algorithm 1 Pseudocode of MFO |

| Input: The size of the population, n; the dimensionality of the data, dim; the maximum iterations, Maxit; |

| Output: The position of the best flame; |

| Initialize moth-population positions X; |

| it = 1; |

| while (it <= Maxit) do |

| if it == 1 |

| P = sort(Xit); |

| FP = sort(FXit); |

| else |

| P = sort(Xit−1, Xit); |

| FP = sort(FXit−1, FXit); |

| end if |

| Update l according to Equation (7); |

| Update the best flame; |

| for i = 1: size(X,1) do |

| for j = 1: size(X,2) do |

| Update parameters a, t; |

| if i <= l |

| Update X(i,j) according to Equation (6) with respect to the i-th flame; |

| else |

| Update X(i,j) according to Equation (6) with respect to the l-th flame; |

| end if |

| end for |

| end for |

| it = it + 1; |

| end while |

| return the best flame |
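Algorithm 1 can be turned into a short runnable sketch. The version below is an illustration under stated assumptions, not the authors' implementation: flames are the n best positions among the previous flames and the current moths, the parameter a falls linearly from −1 to −2 and t is drawn from [a, 1] as in the MFO reference code, out-of-range moths are clamped to the bounds, and the `sphere` objective and all default values are placeholders.

```python
import math
import random


def sphere(x):
    """Toy objective (assumption): sum of squares, minimum 0 at the origin."""
    return sum(v * v for v in x)


def mfo(obj, n=20, dim=5, max_it=200, lb=-10.0, ub=10.0, b=1.0, seed=0):
    """Minimal moth-flame optimization for minimization; returns (fitness, position)."""
    rng = random.Random(seed)
    moths = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(n)]
    flames = []  # list of (fitness, position), best first
    for it in range(1, max_it + 1):
        # Clamp moths to the search bounds, then evaluate them.
        moths = [[min(max(v, lb), ub) for v in m] for m in moths]
        scored = [(obj(m), m[:]) for m in moths]
        # Flames: the n best positions among previous flames and current moths.
        flames = sorted(flames + scored, key=lambda p: p[0])[:n]
        # The number of flames shrinks linearly from n to 1.
        n_flames = round(n - it * (n - 1) / max_it)
        # a falls linearly from -1 to -2, so t is drawn from [a, 1].
        a = -1.0 - it / max_it
        for i in range(n):
            # Moths beyond the flame count all follow the last kept flame.
            flame = flames[min(i, n_flames - 1)][1]
            for j in range(dim):
                t = (a - 1.0) * rng.random() + 1.0
                dist = abs(flame[j] - moths[i][j])
                moths[i][j] = (
                    dist * math.exp(b * t) * math.cos(2 * math.pi * t) + flame[j]
                )
    return flames[0]


best_fit, best_pos = mfo(sphere)
```

Because the flame list is elitist, the returned fitness can never be worse than the best fitness in the initial population.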

3. Improvement Methods Based on MFO

3.1. Hybrid Mutation

MFO is prone to local stagnation in the process of finding the optimal solution. A certain degree of mutation can increase the population’s diversity and improve the algorithm’s solution accuracy. Common mutation operations are Gaussian mutation and Cauchy mutation. Gaussian mutation produces weaker disturbances and therefore has a stronger local-exploitation ability, whereas Cauchy mutation produces stronger disturbances and therefore has a strong global-exploration ability [59]. For this reason, this paper uses a hybrid of Cauchy and Gaussian mutation [60] and adjusts the weights of the two mutation methods dynamically as the number of iterations increases. The formula for updating the position of a moth using hybrid mutation is given in Equation (8).

where the equation maps the current position of the moth to its updated position using a Gaussian-distributed random number and a Cauchy-distributed random number; δ is the inertia constant, which is taken as 0.3 in this paper, and the dynamic weight is the ratio of the current iteration to the maximum iteration. In the early stage of the algorithm, a larger weight is given to Cauchy mutation to promote global exploration, while in the later stage Gaussian mutation receives the larger weight, which is more beneficial to the exploitation of the solution space. By combining the two mutation methods, hybrid mutation can increase the population’s diversity and enhance the algorithm’s ability to jump out of the local optimum.
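The weighting scheme described above can be illustrated with a short sketch. It assumes one common multiplicative form of hybrid Cauchy-Gaussian mutation, x · (1 + δ · ((1 − w) · cauchy + w · gauss)) with w = it / Maxit, and draws fresh random numbers for every dimension; the exact form of Equation (8) may differ, and the function name `hybrid_mutation` is hypothetical.

```python
import math
import random


def hybrid_mutation(x, it, max_it, delta=0.3, rng=random):
    """Perturb position x with a Cauchy/Gaussian mix whose weights shift over time."""
    w = it / max_it  # dynamic weight: ratio of current to maximum iteration
    mutated = []
    for v in x:
        gauss = rng.gauss(0.0, 1.0)
        # Standard Cauchy sample via the inverse CDF.
        cauchy = math.tan(math.pi * (rng.random() - 0.5))
        # Early on (small w) the heavy-tailed Cauchy term dominates;
        # later (w near 1) the Gaussian term dominates.
        mutated.append(v * (1.0 + delta * ((1.0 - w) * cauchy + w * gauss)))
    return mutated
```

The heavy tails of the Cauchy distribution occasionally produce very large jumps, which is why a boundary check after the mutation, as in Algorithm 2, is needed.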

3.2. Chemotaxis Motion

During the iterations of MFO, the current individual may have moved away from the optimal solution or may still be some distance from it, so it is necessary to enhance the local-search ability of the algorithm. This paper draws on the chemotaxis motion of the bacterial-foraging-optimization algorithm [61], in which E. coli has two movement modes: flipping and forwarding. Flipping motion is a unit-step movement of the bacterium in an arbitrary direction. Forwarding motion means that if the fitness of the individual improves after the bacterium moves in a specific direction, it continues to move in this direction until the fitness no longer improves or a predetermined number of steps is reached. Based on this idea, this paper adds the chemotaxis-motion strategy to the optimization process of MFO. The mathematical model of this strategy is given in Equation (9).

where C is the step size of the chemotaxis motion, and the direction is a random vector whose components lie in the range [−1,1]. When the fitness of Xnew is less than the fitness of X, moving in the current direction brings the individual closer to the optimal solution, so the movement in this direction continues. A cross-border check is made at each movement, and the individual’s fitness before and after the chemotaxis motion is compared. If the updated position crosses the boundary or its fitness is worse than that of the previous-generation individual, the current movement deviates from the optimal solution, and the previous-generation individual is kept. If the fitness of Xnew is better than that of X, the updated solution is adopted.
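The forwarding rule above can be sketched as a greedy walk along one random direction. The sketch assumes the direction is normalized to unit length, as in the bacterial-foraging-optimization algorithm, and uses the step size 0.05 and the limit of 10 steps reported later in the paper as defaults; the function name `chemotaxis` and scalar bounds `lb` and `ub` are hypothetical simplifications.

```python
import math
import random


def chemotaxis(x, obj, lb, ub, step=0.05, max_steps=10, rng=random):
    """Greedy run along one random unit direction (minimization)."""
    direction = [rng.uniform(-1.0, 1.0) for _ in x]
    norm = math.sqrt(sum(d * d for d in direction)) or 1.0
    best, best_fit = list(x), obj(x)
    for _ in range(max_steps):
        cand = [v + step * d / norm for v, d in zip(best, direction)]
        if any(c < lb or c > ub for c in cand):
            break  # crossed the boundary: keep the previous position
        cand_fit = obj(cand)
        if cand_fit > best_fit:
            break  # fitness got worse: stop moving in this direction
        best, best_fit = cand, cand_fit
    return best, best_fit
```

Because a candidate is accepted only when it is inside the bounds and not worse than the current position, the returned fitness is never worse than the fitness of the starting point.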

To address the characteristics of the two optimization strategies, this paper adopts hybrid mutations in the first half of the optimization process to increase population diversity and promote solution-space exploration. In the second half, chemotaxis-motion strategy is adopted to increase the possibility of an individual approaching the optimal solution and improve the algorithm’s search efficiency. The improved MFO pseudocode is given in Algorithm 2.

| Algorithm 2 Pseudocode of HMCMMFO |

| Input: The size of the population, n; the dimensionality of the data, dim; the maximum iterations, Maxit; |

| the inertia constant, δ; the chemotaxis-motion-step size, C; |

| Output: The position of the best flame; |

| Initialize moth population positions X; |

| it = 1; |

| while (it <= Maxit) do |

| if it == 1 |

| P = sort(Xit); |

| FP = sort(FXit); |

| else |

| P = sort(Xit-1, Xit); |

| FP = sort(FXit-1, FXit); |

| end if |

| Update the best flame; |

| for i = 1: size(X,1) do |

| if it/Maxit < 0.5 |

| Update X according to Equation (8); |

| Judge whether X is out of bounds and deal with it; |

| else |

| Generate a random direction vector; |

| Set k = 1; |

| while (k <= 10) |

| Update Xnew according to Equation (9); |

| If Xnew is out of bounds, the search will jump out of the loop; |

| Calculate the fitness before and after updating X; |

| If the fitness of Xnew is worse than that of X, the search will jump out of the loop, otherwise, it will be copied to X; |

| k = k + 1; |

| end while |

| end if |

| end for |

| Update l according to Equation (7); |

| for i = 1: size(X,1) do |

| for j = 1: size(X,2) do |

| Update parameters a, t; |

| if i <= l |

| Update X(i,j) according to Equation (6) with respect to the i-th flame; |

| else |

| Update X(i,j) according to Equation (6) with respect to the l-th flame; |

| end if |

| end for |

| end for |

| it = it + 1; |

| end while |

| return the best flame |

The time complexity of HMCMMFO mainly depends on the number of evaluations (S), the number of moths (n), the problem dimension (d), and the maximum number of forwarding steps (m) for the chemotaxis motion. The time complexity of traditional MFO comprises three parts: population initialization, updating the flame positions, and updating the moth positions, whose complexities are O(n × d), O((2n)²), and O(n × d), respectively. HMCMMFO adds two parts to the original MFO: hybrid mutation and chemotaxis motion. The time complexity of hybrid mutation is O(n). Chemotaxis motion is assumed to move forward m steps each time the strategy is executed, so its time complexity is O(n × m). The hybrid-mutation strategy is adopted in the first half of the evaluations, and the chemotaxis-motion mechanism in the second half. Therefore, the time complexity of HMCMMFO is O(HMCMMFO) = O(population initialization) + S × (O(updating flame positions) + 0.5 × O(hybrid mutation) + 0.5 × O(chemotaxis motion) + O(updating moth positions)). That is, the total time complexity is O(HMCMMFO) = (S + 1) × O(n × d) + S × (O(4n²) + 0.5 × O(n) + 0.5 × O(n × m)).

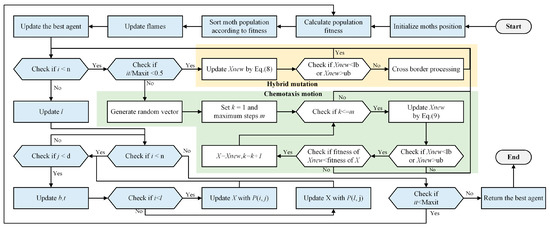

For a clear understanding of the algorithm flow of the improved MFO in this paper, the flow of HMCMMFO is shown in Figure 1 below.

Figure 1.

Flow chart of HMCMMFO.

4. Discussion of Experimental Results

In this section, a series of comparative experiments is used to verify the superiority of HMCMMFO and the effectiveness of the optimization strategies proposed in this paper. A total of 30 benchmark functions of IEEE CEC 2017 are selected for the experimental test, and the function details are shown in Table 1, where NO. denotes the serial number of the function, Functions is the function name, Bound is the search range of the function, and F(min) is the optimal value of the function. The experimental settings are listed in Table S1 in the Supplementary Material, where N is the population size, set to 30, and dim is the dimension of the function; the dimension of the IEEE CEC2017 functions is set to 30 in this paper. To ensure that the algorithms in the comparison experiments can give full play to their search performance, the maximum number of evaluations (Maxit) is set to 300,000. Meanwhile, because random initialization may produce an over-concentrated or over-dispersed population and thus compromise the fairness of the experiment [62], every test function is run in 30 independent repetitions (Fold), and the average value is taken to represent the optimization effect of the algorithm on each function objectively.

Table 1.

Description of the CEC2017 functions.

4.1. Parameters Sensitivity Analyses

4.1.1. Determination of the Parameter δ

To examine the influence of δ in the hybrid Cauchy-and-Gaussian mutation on the algorithm’s accuracy, a parameter-sensitivity experiment on δ is carried out. If the value of δ is too large, the updated individual will exceed the space boundary. Therefore, this experiment applies boundary control to the updated agent and reinitializes the individuals that fall outside the search range. Meanwhile, to ensure the experiment’s fairness, as in other AI work [63,64,65], this experiment follows the single-variable principle and varies only δ; the tested values are those compared in Table 2. All other experimental settings are as described above.

On the 30 test functions, the average value of the optimal solution is obtained for each function by HMCMMFO with each value of δ, and the most suitable δ is determined according to the average ranking value of each setting. In Table 2, ARV is the average ranking of a setting over the 30 functions, and Rank is its final ranking obtained from ARV. The data show that values of δ in the range from 0.1 to 0.5 give better results on the 30 functions, and their ARV and Rank are better than those of the other values. This is because a larger δ causes individuals to cross the boundary, where out-of-bounds processing takes over and the hybrid Cauchy-and-Gaussian mutation loses its effect. It can therefore be concluded from the data that the strategy performs best when δ is 0.3.
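The ARV and Rank columns can be reproduced from a table of mean results with a few lines of code. The sketch below assumes minimization, gives tied results the average of the ranks they occupy, and breaks ties between equal ARVs by position; how the paper handles ties is not stated, and the function names are hypothetical.

```python
def average_ranking_values(results):
    """results[f][k] = mean best value of setting k on function f (lower is better)."""
    n_cfg = len(results[0])
    totals = [0.0] * n_cfg
    for row in results:
        order = sorted(range(n_cfg), key=lambda k: row[k])
        pos = 0
        while pos < n_cfg:
            # Group tied values so that they share the average of their ranks.
            end = pos
            while end + 1 < n_cfg and row[order[end + 1]] == row[order[pos]]:
                end += 1
            shared = (pos + end) / 2 + 1
            for k in order[pos:end + 1]:
                totals[k] += shared
            pos = end + 1
    return [t / len(results) for t in totals]


def final_rank(arv):
    """Rank 1 goes to the smallest ARV; equal ARVs are ordered by position."""
    order = sorted(range(len(arv)), key=lambda k: arv[k])
    ranks = [0] * len(arv)
    for r, k in enumerate(order, start=1):
        ranks[k] = r
    return ranks
```

For example, with three settings and three functions, `average_ranking_values([[1.0, 2.0, 3.0], [2.0, 1.0, 3.0], [1.0, 1.0, 5.0]])` ranks the third setting last on every function, so its ARV is 3.0.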

Table 2.

Comparison of HMCMMFO results with different δ.

4.1.2. Determination of Chemotaxis Motion Step Size

To select the most suitable step size, this experiment again follows the single-variable principle: only the step size was varied (the tested values are those compared in Table 3), and all other settings followed the experimental setup described above. The maximum number of motion steps in a direction that helps the individual move toward the optimal solution is limited to 10, since too many motion steps would increase the overhead of the algorithm. Table 3 shows the solutions obtained with the different step sizes.

Table 3.

Comparison of the results of HMCMMFO using different step sizes.

From the above table, it can be seen that HMCMMFO using different step lengths obtained the optimal solution with a similar magnitude in most functions. This indicates that under the limitation of the maximum number of chemotaxis motion steps, the algorithms using different step sizes facilitate the moth’s approach to the optimal solution. Moreover, HMCMMFO using a step size of 0.05 has the smallest average-ranking value among the compared algorithms, which is more beneficial to enhance the search efficiency of the algorithm.

4.2. Impact of Optimization Strategies on MFO

HMCMMFO uses two optimization mechanisms, namely hybrid mutation and chemotaxis motion. To demonstrate the effect of the two strategies on the MFO, three different MFOs were developed to compare with the original algorithm by different combinations of the two strategies. As shown in Table 4 below, hybrid-mutation strategy is denoted by “HM,” and chemotaxis motion is represented by “CM”, with “1” indicating that the MFO uses this strategy and “0” indicating that it does not. For example, CMMFO indicates that only chemotaxis-motion strategy is adopted, and HMCMMFO indicates that both hybrid-mutation and chemotaxis-motion optimization strategies are used.

Table 4.

Performance of the two strategies on MFO.

4.2.1. Comparison between Mechanisms

Table 5 shows the average value and standard deviation of the optimal solution obtained by the four MFO versions on the test functions. The symbols “+”, “−”, and “=” indicate that the optimization accuracy of HMCMMFO is better than, worse than, or equal to that of the compared algorithm, respectively. AVG is the average value of the optimal solution obtained by the algorithm on a function, and STD is the standard deviation. The data show that the average value and standard deviation obtained by HMCMMFO are better than those of the other algorithms on most functions, and its search performance is stable. The average ranking values of the MFO variants with either strategy are better (lower) than that of the original MFO, which supports the effectiveness of the two optimization strategies. HMCMMFO, with both strategies, is better than the traditional algorithm on 29 functions and equal to it on only one. The ARV of HMCMMFO is only about one-third of that of MFO. This shows that the two optimization strategies proposed in this paper are essential to improving the performance of the algorithm.

Table 5.

Comparison of MFO results with different strategies.

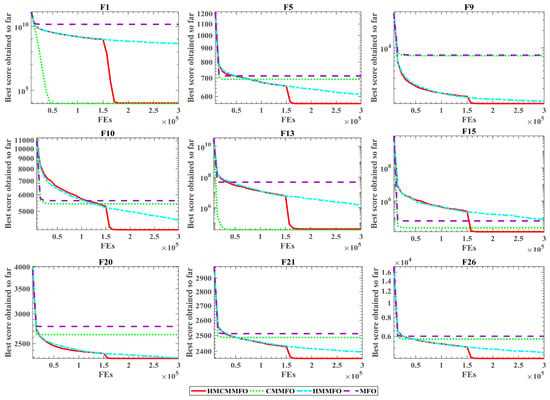

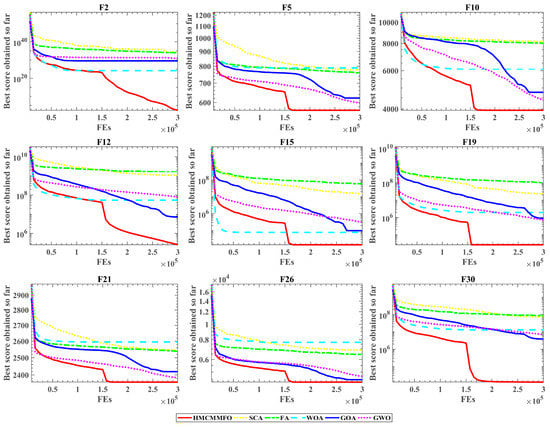

To visualize the impact of the two optimization mechanisms on the performance of MFO, convergence plots of nine test functions are shown in Figure 2. HMMFO, which uses the hybrid-mutation strategy, achieves better optimization accuracy than the traditional MFO on eight functions. CMMFO, which uses the chemotaxis-motion mechanism, has a significant optimization effect on F1 and F13, where it finds the optimal solution faster, and it also improves on MFO on the other functions to varying degrees. In all nine plots, the curve of HMCMMFO drops sharply in the middle of the evaluations and then finds the optimal solution quickly. This is because hybrid mutation is used in the early stage of the evaluations to mutate individuals and keep the algorithm from falling into the local optimal space; the comparison of the convergence curves of HMMFO and MFO shows that this strategy helps to avoid stagnation. The chemotaxis-motion strategy is adopted in the later stage to help individuals approach the optimal solution. Its effectiveness is illustrated by comparing HMCMMFO with HMMFO: HMCMMFO outperforms HMMFO in optimization accuracy. Overall, the two strategies complement each other and improve the optimization performance of MFO.

Figure 2.

MFO convergence curves with different strategies.

4.2.2. Balance and Diversity Analysis of HMCMMFO and MFO

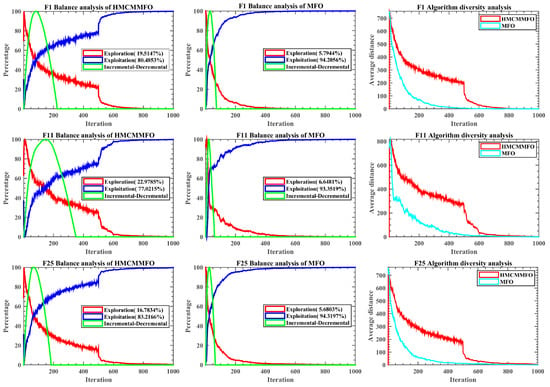

To further analyze the changes produced by the strategies proposed in this paper on the optimization performance of MFO, this section analyzes the diversity and balance of HMCMMFO and MFO. As shown in Figure 3 below, this section selects three functions from the 30 test functions for discussion, namely F1, F11, and F25. In the figure, the first column is the balance plots of HMCMMFO, the second column is the balance plots of MFO, and the third column is the diversity plots, including HMCMMFO and MFO.

Figure 3.

Balance-and-diversity-analysis plots.

The balance plots in the first and second columns contain three curves: exploration, exploitation, and incremental-decremental. The exploration curve represents the proportion of the global-search process of the algorithm, the exploitation curve represents the proportion of the local-exploitation process, and the incremental-decremental curve changes dynamically with the exploration and exploitation curves. When the global-exploration ability of the algorithm is greater than its local-exploitation ability, the incremental-decremental curve increases, and otherwise it decreases; it reaches its vertex when the two abilities are equal. In the F1, F11, and F25 balance plots, the global-exploration ability of traditional MFO is weak, but its local-exploitation ability is strong. Although the stronger local-exploitation ability helps MFO to search the solution space finely, a global-exploration phase that is too short harms the population’s optimal solution and reduces optimization accuracy. By contrast, the HMCMMFO proposed in this paper effectively improves the global-exploration ability of the algorithm. For example, the global-exploration stage of MFO on F1 accounts for only 5.7944%, while that of HMCMMFO accounts for 19.5147% and still leaves a large proportion for exploitation. Before HMCMMFO reaches half of the maximum number of evaluations, its exploration curve decreases slowly compared with that of MFO and oscillates strongly. After half of the maximum number of evaluations, the exploitation curve increases rapidly, and then its slope slowly approaches 0. The reason is that the algorithm applies hybrid mutation to individuals in the first half of the evaluations, which increases its exploration performance, and adopts chemotaxis motion in the second half to guide individuals toward the optimal solution, which increases its local-exploitation ability.

In the diversity plots, the horizontal coordinate indicates the number of iterations, and the vertical coordinate represents the diversity metric. The algorithm population is affected by random initialization, which leads to good population diversity at the beginning of the algorithm and gradually decreases as it keeps approaching the optimal solution. In F1, F11, and F25, the diversity curve of MFO decreases rapidly and approaches 0 in the middle of the iteration. A too-fast decline in diversity will lead the algorithm to local optimum, while too-fast convergence is not conducive to finding higher-quality optimal solutions. In the early stage of the iteration, HMCMMFO has a slow decline in diversity curve, rich diversity, and large fluctuation, which means that the hybrid mutation adopted in the early stage is helpful for the algorithm to explore the solution space comprehensively. HMCMMFO diversity drops fast when the algorithm just enters the second half of the iteration, which indicates that the chemotaxis-motion strategy can help an individual to approach the optimal solution effectively.
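The diversity metric and the exploration and exploitation percentages are not defined in this section. A minimal sketch, assuming the dimension-wise measure often used for such balance analyses (mean absolute deviation from the per-dimension median, with exploration taken as the diversity relative to its maximum over the run), could look as follows; the function names are hypothetical.

```python
from statistics import median


def diversity(population):
    """Mean absolute deviation from the per-dimension median, averaged over dimensions."""
    n, d = len(population), len(population[0])
    total = 0.0
    for j in range(d):
        column = [ind[j] for ind in population]
        med = median(column)
        total += sum(abs(med - v) for v in column) / n
    return total / d


def exploration_exploitation(div_history):
    """Per-iteration (exploration %, exploitation %) from a diversity history."""
    div_max = max(div_history)
    if div_max == 0:
        return [(0.0, 100.0) for _ in div_history]
    return [
        (100.0 * div / div_max, 100.0 * abs(div - div_max) / div_max)
        for div in div_history
    ]
```

Under this definition the two percentages always sum to 100, so a diversity curve that collapses early, as described for MFO, translates directly into a short exploration phase.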

4.3. Impact of Optimization Strategies on MFO

In order to verify the strength of the optimization performance of HMCMMFO, six well-known MFO-variant algorithms are selected for comparison in this experiment. These algorithms are: CLSGMFO [28], LGCMFO [66], NMSOLMFO [67], QSMFO [51], CMFO [56], and WEMFO [68]. Table S2 in the Supplementary Material shows the parameter settings of these algorithms.

By comparing the average value and standard deviation of the optimal solutions obtained by these algorithms on the IEEE CEC2017 functions, the differences in their optimization performance were explored. Table 6 shows the optimization results of the MFO variants on the test functions. ARV indicates an algorithm’s average ranking value across all functions, and Rank is the final ranking obtained from ARV. According to the table, the average optimum found by HMCMMFO is superior to those of the other techniques on most functions. The standard deviations show that the optimization performance of HMCMMFO is relatively stable on most functions and is at an upper-middle level. Comparing ARV and Rank shows more directly that the ARV of HMCMMFO is only 2.2, which ranks first among these algorithms. HMCMMFO retains an advantage even over the strongly performing NMSOLMFO.
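The ARV and Rank columns described above can be derived from the per-function mean results. The sketch below is one plausible way to do this, assuming minimization and assuming that tied algorithms share the average of the ranks they occupy; the tie rule used in the paper is not stated here, and the function name and toy numbers are hypothetical.

```python
def average_ranking_values(mean_results):
    """mean_results maps algorithm name -> list of mean best values, one per function."""
    names = list(mean_results)
    n_functions = len(mean_results[names[0]])
    rank_sums = {name: 0.0 for name in names}
    for f in range(n_functions):
        ordered = sorted(names, key=lambda name: mean_results[name][f])
        i = 0
        while i < len(ordered):
            j = i
            # Group algorithms that tie on this function so they share one rank.
            while (j + 1 < len(ordered)
                   and mean_results[ordered[j + 1]][f] == mean_results[ordered[i]][f]):
                j += 1
            shared_rank = (i + j) / 2 + 1
            for k in range(i, j + 1):
                rank_sums[ordered[k]] += shared_rank
            i = j + 1
    arv = {name: rank_sums[name] / n_functions for name in names}
    final_rank = {name: pos + 1 for pos, name in enumerate(sorted(names, key=arv.get))}
    return arv, final_rank


# Made-up numbers for three hypothetical algorithms on four functions.
toy = {
    "A": [1.0, 5.0, 2.0, 3.0],
    "B": [2.0, 4.0, 2.0, 9.0],
    "C": [3.0, 6.0, 7.0, 1.0],
}
arv, final_rank = average_ranking_values(toy)
```

A lower ARV means the algorithm ranks near the top on more functions, which is how the reported value of 2.2 for HMCMMFO should be read.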

Table 6.

Comparison of MFO-variant algorithms.

To further verify the difference in optimization performance between HMCMMFO and the other MFO variants, the experiment in this section uses the nonparametric Wilcoxon signed-rank test at a 5% significance level. A p-value below 0.05 indicates a statistically significant difference, which, together with the better mean results, shows an advantage for HMCMMFO. As can be seen from Table S3 in the Supplementary Material, the p-values are below 0.05 on most functions. Compared with CMFO and WEMFO, HMCMMFO obtains a better optimal solution on 28 functions, and the optimization effect on the remaining two functions is the same. Compared with CLSGMFO and LGCMFO, HMCMMFO obtains optimal values on 21 functions that are closer to the theoretically optimal solutions. Compared with the powerful NMSOLMFO, HMCMMFO still holds a slight advantage. Overall, the improved algorithm performs well among these MFO variants, which indicates that the optimization strategies adopted in this paper are effective in improving MFO performance.
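For readers unfamiliar with the test used above, the following is a minimal, self-contained sketch of a two-sided Wilcoxon signed-rank test on paired samples. It assumes the normal approximation without tie or continuity corrections and discards zero differences, so it is only illustrative; in practice a library routine such as SciPy's `scipy.stats.wilcoxon` would be the safer choice. The function name and sample data are hypothetical.

```python
import math


def wilcoxon_signed_rank(x, y):
    """Two-sided Wilcoxon signed-rank test for paired samples (normal approximation)."""
    diffs = [a - b for a, b in zip(x, y) if a != b]  # zero differences are discarded
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0  # identical samples: no evidence of a difference
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        shared_rank = (i + j) / 2 + 1  # tied magnitudes share the average rank
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    std = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w_plus - mean) / std
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail of the standard normal
    return w_plus, p_value


# Made-up paired results: algorithm A is consistently lower (better) than B.
a_runs = [0.10 * i for i in range(30)]
b_runs = [value + 1.0 + 0.01 * i for i, value in enumerate(a_runs)]
_, p_different = wilcoxon_signed_rank(a_runs, b_runs)

# Made-up paired results whose differences are perfectly balanced around zero.
c_runs = [0.0] * 10
d_runs = [1.0, -1.0, 2.0, -2.0, 3.0, -3.0, 4.0, -4.0, 5.0, -5.0]
_, p_similar = wilcoxon_signed_rank(c_runs, d_runs)
```

In the first toy case the p-value falls well below 0.05, while in the second it does not, which mirrors how the entries of the Wilcoxon tables are interpreted.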

Figure 4 below shows the convergence plots of the algorithms on nine selected functions. In F1, although the optimal solution found by HMCMMFO in the early stage of evaluation is not as good as those of the other algorithms, it continues to converge downward after 1.5 × 10⁵ evaluations, and the optimal solution found in the later stage is close to that of NMSOLMFO. In F9, the optimal solution found by HMCMMFO in the early stage of evaluation is better than those found by the other algorithms at the end of the evaluation. The nine convergence plots share a common feature: before 1.5 × 10⁵ evaluations, the convergence curve of HMCMMFO keeps a rapid and continuous downward trend, while the curves of the other MFO variants converge early in the evaluation. This is because the hybrid-mutation strategy is adopted in the early stage of evaluation, which enhances the algorithm’s global-exploration ability and its ability to jump out of local optima. In general, although HMCMMFO is not as fast as the other algorithms in convergence speed, it performs better in optimization accuracy.

Figure 4.

Convergence plots of the MFO variants.

4.4. Comparison with Original Algorithms

To strengthen the reliability of the optimization performance of HMCMMFO in this paper, the experiment in this section selected the popular and highly recognized meta-heuristic algorithms for comparison. These algorithms are sine cosine algorithm (SCA) [69], firefly algorithm (FA) [70], whale optimization algorithm (WOA) [71], grasshopper optimization algorithm (GOA) [72], and grey wolf optimizer (GWO) [73]. The parameter settings in these algorithms are shown in Table S4 in the Supplementary Material.

Table 7 below shows the optimization results of HMCMMFO and the original algorithms on the 30 test functions. Among the six algorithms, the average value of the optimal solution found by HMCMMFO is closer to the theoretical optimal values of the functions than those of the other algorithms. For example, in F2 the average optimal solutions obtained by the other five algorithms are not as good as that of HMCMMFO, and the numerical gap between them is large. The standard deviations show that the optimization ability of HMCMMFO is more stable on most functions. The final ranking shows clearly that HMCMMFO is in first place, with a significant gap in average ranking value between it and the second-ranked GOA, demonstrating that HMCMMFO has a significant advantage.

Table 7.

Comparison of original algorithms.

Table S5 in the Supplementary Material shows the results of the Wilcoxon signed-rank test comparing HMCMMFO with the other original metaheuristic algorithms. Most of the p-values in Table S5 are far below 0.05, so the optimization ability of HMCMMFO differs significantly from that of the other algorithms on most functions. The “+/−/=” counts show that HMCMMFO outperforms both SCA and WOA on all 30 functions and is inferior to FA on only one function. For GOA and GWO, only four functions show results that are not significantly different from those of HMCMMFO; GOA is inferior to HMCMMFO on 25 functions and GWO on 26 functions. In general, the improved algorithm proposed in this paper has a clear advantage in optimization ability over these original algorithms.
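The “+/−/=” summary can be tallied from per-function p-values and mean results. The sketch below assumes one common convention: a function counts as “+” when the difference is significant and the proposed method has the lower mean, “−” when it is significant and the rival has the lower mean, and “=” otherwise. The exact rule used in the paper is not stated here, and the function name and numbers are hypothetical.

```python
def tally_outcomes(p_values, proposed_means, rival_means, alpha=0.05):
    """Count functions where the proposed method is better (+), worse (-), or similar (=)."""
    better = worse = similar = 0
    for p, ours, theirs in zip(p_values, proposed_means, rival_means):
        if p >= alpha or ours == theirs:
            similar += 1
        elif ours < theirs:  # minimization: a lower mean is better
            better += 1
        else:
            worse += 1
    return f"{better}/{worse}/{similar}"


# Made-up values for four functions.
summary = tally_outcomes(
    p_values=[0.001, 0.30, 0.02, 0.04],
    proposed_means=[1.0, 2.0, 5.0, 3.0],
    rival_means=[2.0, 2.1, 4.0, 6.0],
)
```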

The convergence behavior of HMCMMFO and the other original algorithms is shown in Figure 5 below. In F2, the original algorithms find their best values in the early stage of evaluation and then stagnate, whereas HMCMMFO jumps out of the local-optimum space and continues to search downward in the later stage, reaching a much better solution than the other algorithms. In F12, although GOA, GWO, and HMCMMFO all show a downward trend throughout the evaluation, GWO declines significantly less, and more slowly, than HMCMMFO and GOA. At the end of the evaluation, the curve of HMCMMFO still remains some distance from the curve of GOA. It can be seen that HMCMMFO has advantages in global-exploration ability. In F19 and F30, the solution found by HMCMMFO in the middle of the evaluation is already better than those found by the other algorithms after the whole evaluation. At the same time, HMCMMFO converges quickly and can find the optimal value quickly and accurately in the later stage of evaluation. In the other functions, the optimal solution obtained by HMCMMFO is closer to the theoretical optimum than those obtained by the other algorithms, which confirms its strong optimization performance.

Figure 5.

Convergence effect of original algorithms.

4.5. Comparison with Advanced Algorithms

This section compares HMCMMFO with typical advanced algorithms to further verify its effectiveness and superiority. The advanced algorithms are: FSTPSO [74], ALCPSO [75], CDLOBA [76], BSSFOA [77], SCADE [78], CGPSO [79], and OBLGWO [80]. Table S6 in the Supplementary Material shows the parameter settings of these advanced algorithms.

Table 8 below shows the average and standard-deviation results of the optimal solutions obtained by the eight algorithms (HMCMMFO and the seven advanced algorithms) on the IEEE CEC2017 functions. The average ranking value of HMCMMFO is 1.466667, which differs significantly from that of the second-ranked ALCPSO. PSO is a classical swarm-intelligence algorithm with powerful optimization ability, and its variants FSTPSO, ALCPSO, and CGPSO are enhanced algorithms based on PSO. Nevertheless, compared with these three PSO variants, HMCMMFO shows better averages and smaller standard deviations on most functions. Taken as a whole, the data in the table show that the optimization ability of HMCMMFO is better than that of these advanced algorithms.

Table 8.

Comparison results of advanced algorithms.

To clarify the difference in optimization performance between the advanced algorithms and HMCMMFO, the nonparametric Wilcoxon signed-rank test at a 5% significance level is used in this experiment. As shown in Table S7 in the Supplementary Material, the p-values are below 0.05 on most functions, which shows that HMCMMFO has obvious advantages over these algorithms. Among the typical PSO variants, HMCMMFO outperforms FSTPSO on 30 functions and CGPSO on 26 functions. Compared with the powerful ALCPSO, HMCMMFO performs better on 20 functions and obtains the same search results on 10 functions.

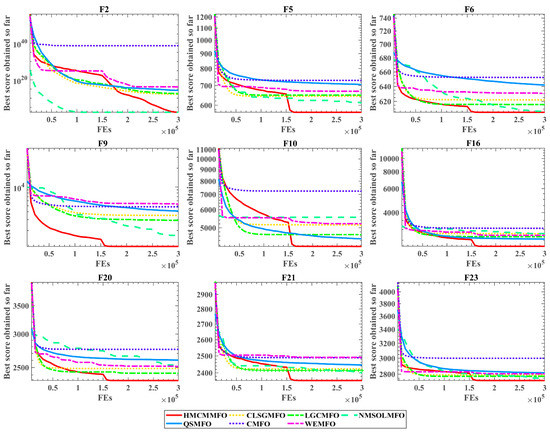

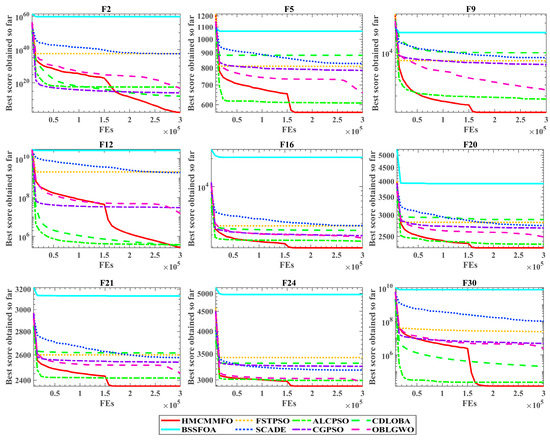

Figure 6 below shows the convergence behavior of the different advanced algorithms on nine test functions. In F2 and F12, HMCMMFO shows a downward exploration trend throughout the evaluation, while the other algorithms converge early. In F5, F9, F16, F20, and F21, the accuracy of the optimal solution found by HMCMMFO in the early stage of evaluation is already better than the accuracy obtained at the end of the evaluation by the other algorithms, except for ALCPSO. Although HMCMMFO is inferior to ALCPSO in the early stage, the accuracy of its solution exceeds that of ALCPSO by the middle of the evaluation. These convergence plots show that the hybrid-mutation strategy effectively reduces the risk of the algorithm falling into deceptive optima, and the chemotaxis-motion mechanism improves the search efficiency of the MFO algorithm. With the support of these two strategies, HMCMMFO has clear advantages in optimization performance over the other advanced algorithms.

Figure 6.

Convergence effect of advanced algorithms.

5. Engineering Design Problems

In this section, five classical engineering design problems are used to study the real-life application value of HMCMMFO’s optimization performance: the tension-compression spring, three-bar truss, pressure vessel, I-beam, and speed-reducer design problems.

5.1. Tension-Compression Spring Problem

This is a typical constrained engineering problem that minimizes the weight of the spring subject to constraints on the model parameters. The parameters involved are the wire diameter (d), the average coil diameter (D), and the number of active coils (N). Specific model details are shown below.

Table 9 below shows the parameters and minimum weight obtained by the 11 methods for solving this problem. The comparison shows that the improved algorithm proposed in this paper obtains the smallest spring weight, 0.012665, among the traditional mathematical methods and well-known metaheuristic algorithms. The minimum weight obtained by HMCMMFO is also much better than that of WEMFO, which is likewise an improved MFO algorithm. It can be seen that HMCMMFO solves this problem effectively.

Table 9.

Comparison results of the tension-compression spring problem.

5.2. Three-Bar Truss Design Problem

This engineering model contains three constraint functions and two parameters. The effective parameters are applied to the constraints to find the optimal cost. The specific details of this model are described below.

As shown in Table 10 below, the cost of HMCMMFO for this problem is 263.895843, with x1 = 0.788673 and x2 = 0.408253. Compared with other mature algorithms, HMCMMFO ranks first with the smallest optimum cost. It shows that the method proposed in this paper has advantages in solving the optimization problem, which can effectively reduce engineering consumption and help to solve practical problems in the real world.
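The reported design can be checked against the three-bar truss model. The sketch below assumes the standard benchmark statement of this problem from the literature (bar length l = 100 cm, load P = 2 kN/cm², stress limit σ = 2 kN/cm²); these constants and the function names are assumptions, not values confirmed by this paper.

```python
import math

L_BAR = 100.0   # assumed bar length l (cm) from the standard benchmark statement
P_LOAD = 2.0    # assumed load P (kN/cm^2)
SIGMA = 2.0     # assumed stress limit (kN/cm^2)


def truss_cost(x1, x2):
    """Objective: structural volume (2*sqrt(2)*x1 + x2) * l."""
    return (2.0 * math.sqrt(2.0) * x1 + x2) * L_BAR


def truss_constraints(x1, x2):
    """Stress constraints g1..g3; a design is feasible when every value is <= 0."""
    root2 = math.sqrt(2.0)
    denom = root2 * x1 ** 2 + 2.0 * x1 * x2
    g1 = (root2 * x1 + x2) / denom * P_LOAD - SIGMA
    g2 = x2 / denom * P_LOAD - SIGMA
    g3 = 1.0 / (root2 * x2 + x1) * P_LOAD - SIGMA
    return g1, g2, g3


# Design variables reported for HMCMMFO in the text above.
cost = truss_cost(0.788673, 0.408253)
g1, g2, g3 = truss_constraints(0.788673, 0.408253)
```

Under these assumptions the reported x1 and x2 evaluate to a cost of about 263.896, in line with the reported 263.895843 up to rounding of the printed variables, and the first stress constraint is essentially active (close to zero).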

Table 10.

Comparison results of the three-bar truss design problem.

5.3. Pressure Vessel Design Problem

The cylindrical-pressure-vessel-design problem aims to minimize the manufacturing cost while satisfying the parameter ranges and constraint functions. The model involves four parameters and four constraint functions, and its mathematical model is shown as follows.

As Table 11 below shows, HMCMMFO further reduces the manufacturing cost of the pressure vessel compared with the G-QPSO and CDE algorithms, which have strong optimization performance and whose costs also do not exceed 6060. For this problem, the cost of HMCMMFO is 6059.714, the cost of G-QPSO is 6059.721, and the cost of CDE is 6059.734, so HMCMMFO is superior to the latter two algorithms. Furthermore, compared with the costs obtained by other typical algorithms, HMCMMFO has a clear advantage and shows more competitive performance.
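As a rough cross-check of the cost level discussed above, the sketch below evaluates the pressure-vessel model as it is usually stated in the benchmark literature, with shell thickness `ts`, head thickness `th`, inner radius `r`, and cylinder length `length`. The coefficients, the constraint forms, and the example design are assumptions taken from that common statement rather than from this paper, whose own model and design variables may differ.

```python
import math


def vessel_cost(ts, th, r, length):
    """Material, forming, and welding cost in the commonly used benchmark statement."""
    return (0.6224 * ts * r * length
            + 1.7781 * th * r ** 2
            + 3.1661 * ts ** 2 * length
            + 19.84 * ts ** 2 * r)


def vessel_constraints(ts, th, r, length):
    """Constraints g1..g4; a design is feasible when every value is <= 0."""
    g1 = -ts + 0.0193 * r
    g2 = -th + 0.00954 * r
    g3 = -math.pi * r ** 2 * length - (4.0 / 3.0) * math.pi * r ** 3 + 1296000.0
    g4 = length - 240.0
    return g1, g2, g3, g4


# ASSUMPTION: a near-optimal design often quoted in the literature, not taken from this paper.
design = (0.8125, 0.4375, 42.0984456, 176.6365958)
cost = vessel_cost(*design)
g1, g2, g3, g4 = vessel_constraints(*design)
```

With this assumed design the cost evaluates to roughly 6059.714, the same level as the value reported for HMCMMFO, and the first and third constraints sit very close to their bounds.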

Table 11.

Comparison results of pressure-vessel-design problem.

5.4. I-Beam Design Problem

The fourth engineering example aims to obtain the minimum vertical deflection in the I-beam structure. The model contains four parameters: the structure’s length, two thicknesses, and height. The details of the model are shown below.

The minimum vertical deflection obtained by different algorithms in this model is shown in Table 12 below, where the result obtained by HMCMMFO is 0.013074. HMCMMFO obtains the same optimal value as IDARSOA and SOS, and the three algorithms obtain the same values for most design variables, with only a small difference in one of them. It can be seen that HMCMMFO can effectively solve this problem and achieve the desired results.

Table 12.

Comparison results of the I-beam design.

5.5. Speed Reducer Design Problem

The model involves seven parameters: the width of the end face (b), the tooth module (m), the number of teeth in the pinion (z), the length of the first shaft between the bearings (l1), the length of the second shaft between the bearings (l2), the diameter of the first shaft (d1), and the diameter of the second shaft (d2). The weight of the reducer is minimized subject to the constraints below, within the valid ranges of these parameters. The specific details of this model are shown below.

Table 13 below shows the target values obtained by eight algorithms, including HMCMMFO, when solving this problem. These algorithms include novel metaheuristics and advanced algorithms. According to the table, the weight obtained by HMCMMFO is 2994.4711, which is smaller than that obtained by the other algorithms and satisfies the goal of the model. The difference in reducer weight is larger when HMCMMFO is compared with SCA and GSA. It can be seen that HMCMMFO achieves good results on this problem and can satisfactorily meet the requirements of industrial problems.

Table 13.

Comparison results of speed-reducer-design problem.

To sum up, the five engineering examples above show that the HMCMMFO proposed in this paper is an effective method for solving constrained engineering problems and performs well on practical problems. It also has the potential to perform well when applied to other fields in the future, such as recommender systems [101,102], power-flow optimization [103], location-based services [104,105], road-network planning [106], human-activity recognition [107], information-retrieval services [108,109], image denoising [110], and colorectal-polyp-region extraction [111].

6. Conclusions and Future Work

The HMCMMFO proposed in this paper substantially improves optimization performance over the traditional MFO. The hybrid-mutation strategy increases the diversity of the algorithm and enhances its ability to jump out of the local-optimal space. The chemotaxis-motion strategy guides individuals toward the optimal solution and improves the optimization accuracy of the algorithm. On the CEC2017 test functions, the most suitable parameter settings for the optimization strategies are determined through parameter-sensitivity analysis. MFO variants using different mechanisms are compared to demonstrate the effectiveness of the improved strategies. Comparisons with MFO variants, original metaheuristics, and advanced algorithms show that HMCMMFO has superior optimization performance. Moreover, five typical engineering design examples are used to discuss the application value of HMCMMFO in solving practical problems in the real world. The results show that HMCMMFO has significant advantages in solving constrained problems.

Because HMCMMFO is time-consuming when dealing with large-scale and complex problems, future research will combine HMCMMFO with distributed platforms to handle such problems in parallel. In addition, balancing the global-exploration and local-exploitation capabilities of HMCMMFO is crucial to improving the overall optimization performance of the algorithm. Therefore, future work will also investigate the flame-number formula of HMCMMFO and adopt a dynamic-adaptive mechanism based on the number of evaluations to balance exploration and exploitation. Given the strong optimization ability of HMCMMFO, its applications in image segmentation [21] and dynamic-landscape processing are also worthy research directions. Finally, future research can extend the method to a multi-objective optimization algorithm to solve more complex issues in real industrial environments.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/app122312179/s1, Table S1: The relevant parameters involved in experiments; Table S2: Parameter settings of MFO variants; Table S3: Wilcoxon sign rank test comparison results of MFO variants; Table S4: Parameter settings of original algorithms; Table S5: Comparison results of Wilcoxon sign rank test for original algorithms; Table S6: Parameter settings of advanced algorithms; Table S7: Comparison results of Wilcoxon sign rank test for advanced algorithms.

Author Contributions

Conceptualization, methodology, software, validation, formal analysis, investigation, data curation, resources, writing, writing—review and editing, visualization, H.Y., S.Q., A.A.H., L.S. and H.C.; supervision, L.S. and H.C.; funding acquisition, project administration, L.S. and H.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research study was supported by the Science and Technology Development Program of Jilin Province (20200301047RQ).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Cao, B.; Li, M.; Liu, X.; Zhao, J.; Cao, W.; Lv, Z. Many-Objective Deployment Optimization for a Drone-Assisted Camera Network. IEEE Trans. Netw. Sci. Eng. 2021, 8, 2756–2764. [Google Scholar] [CrossRef]

- Cao, B.; Fan, S.; Zhao, J.; Tian, S.; Zheng, Z.; Yan, Y.; Yang, P. Large-scale many-objective deployment optimization of edge servers. IEEE Trans. Intell. Transp. Syst. 2021, 22, 3841–3849. [Google Scholar] [CrossRef]

- Zhang, K.; Wang, Z.; Chen, G.; Zhang, L.; Yang, Y.; Yao, C.; Wang, J.; Yao, J. Training effective deep reinforcement learning agents for real-time life-cycle production optimization. J. Pet. Sci. Eng. 2022, 208, 109766. [Google Scholar] [CrossRef]

- Beheshti, Z.; Shamsuddin, S.M.H. A review of population-based meta-heuristic algorithms. Int. J. Adv. Soft Comput. Appl. 2013, 5, 1–35. [Google Scholar]

- Li, W.K.; Wang, W.L.; Li, L. Optimization of water resources utilization by multi-objective moth-flame algorithm. Water Resour. Manag. 2018, 32, 3303–3316. [Google Scholar] [CrossRef]

- Al-Shourbaji, I.; Zogaan, W. A new method for human resource allocation in cloud-based e-commerce using a meta-heuristic algorithm. Kybernetes 2021, 51, 2109–2126. [Google Scholar] [CrossRef]

- Adhikari, M.; Nandy, S.; Amgoth, T. Meta heuristic-based task deployment mechanism for load balancing in IaaS cloud. J. Netw. Comput. Appl. 2019, 128, 64–77. [Google Scholar] [CrossRef]

- Fahimnia, B.; Davarzani, H.; Eshragh, A. Planning of complex supply chains: A performance comparison of three meta-heuristic algorithms. Comput. Oper. Res. 2018, 89, 241–252. [Google Scholar] [CrossRef]

- Chen, H.; Li, S. Multi-Sensor Fusion by CWT-PARAFAC-IPSO-SVM for Intelligent Mechanical Fault Diagnosis. Sensors 2022, 22, 3647. [Google Scholar] [CrossRef]

- Cao, B.; Gu, Y.; Lv, Z.; Yang, S.; Zhao, J.; Li, Y. RFID Reader Anticollision Based on Distributed Parallel Particle Swarm Optimization. IEEE Internet Things J. 2021, 8, 3099–3107. [Google Scholar] [CrossRef]

- Sun, G.; Li, C.; Deng, L. An adaptive regeneration framework based on search space adjustment for differential evolution. Neural Comput. Appl. 2021, 33, 9503–9519. [Google Scholar] [CrossRef]

- Zhu, B.; Zhong, Q.; Chen, Y.; Liao, S.; Li, Z.; Shi, K.; Sotelo, M.A. A Novel Reconstruction Method for Temperature Distribution Measurement Based on Ultrasonic Tomography. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 2022, 69, 2352–2370. [Google Scholar] [CrossRef]

- Ahmadianfar, I.; Heidari, A.A.; Gandomi, A.H.; Chu, X.; Chen, H. RUN beyond the metaphor: An efficient optimization algorithm based on Runge Kutta method. Expert Syst. Appl. 2021, 181, 115079. [Google Scholar] [CrossRef]

- Yang, Y.; Chen, H.; Heidari, A.A.; Gandomi, A.H. Hunger games search: Visions, conception, implementation, deep analysis, perspectives, and towards performance shifts. Expert Syst. Appl. 2021, 177, 114864. [Google Scholar] [CrossRef]

- Li, S.; Chen, H.; Wang, M.; Heidari, A.A.; Mirjalili, S. Slime mould algorithm: A new method for stochastic optimization. Future Gener. Comput. Syst. 2020, 111, 300–323. [Google Scholar] [CrossRef]

- Heidari, A.A.; Mirjalili, S.; Faris, H.; Aljarah, I.; Mafarja, M.; Chen, H. Harris hawks optimization: Algorithm and applications. Future Gener. Comput. Syst. 2019, 97, 849–872. [Google Scholar] [CrossRef]

- Tu, J.; Chen, H.; Wang, M.; Gandomi, A.H. The Colony Predation Algorithm. J. Bionic Eng. 2021, 18, 674–710. [Google Scholar] [CrossRef]

- Ahmadianfar, I.; Asghar Heidari, A.; Noshadian, S.; Chen, H.; Gandomi, A.H. INFO: An Efficient Optimization Algorithm based on Weighted Mean of Vectors. Expert Syst. Appl. 2022, 195, 116516. [Google Scholar] [CrossRef]

- Dhiman, G.; Kumar, V. Seagull optimization algorithm: Theory and its applications for large-scale industrial engineering problems. Knowl.-Based Syst. 2019, 165, 169–196. [Google Scholar] [CrossRef]

- Hussien, A.G.; Heidari, A.A.; Ye, X.; Liang, G.; Chen, H.; Pan, Z. Boosting whale optimization with evolution strategy and Gaussian random walks: An image segmentation method. Eng. Comput. 2022, 1–45. [Google Scholar] [CrossRef]

- Yu, H.; Song, J.; Chen, C.; Heidari, A.A.; Liu, J.; Chen, H.; Zaguia, A.; Mafarja, M. Image segmentation of Leaf Spot Diseases on Maize using multi-stage Cauchy-enabled grey wolf algorithm. Eng. Appl. Artif. Intell. 2022, 109, 104653. [Google Scholar] [CrossRef]

- Deng, W.; Xu, J.; Gao, X.Z.; Zhao, H. An Enhanced MSIQDE Algorithm With Novel Multiple Strategies for Global Optimization Problems. IEEE Trans. Syst. Man Cybern. Syst. 2022, 52, 1578–1587. [Google Scholar] [CrossRef]

- Hu, J.; Gui, W.; Heidari, A.A.; Cai, Z.; Liang, G.; Chen, H.; Pan, Z. Dispersed foraging slime mould algorithm: Continuous and binary variants for global optimization and wrapper-based feature selection. Knowl.-Based Syst. 2022, 237, 107761. [Google Scholar] [CrossRef]

- Liu, Y.; Heidari, A.A.; Cai, Z.; Liang, G.; Chen, H.; Pan, Z.; Alsufyani, A.; Bourouis, S. Simulated annealing-based dynamic step shuffled frog leaping algorithm: Optimal performance design and feature selection. Neurocomputing 2022, 503, 325–362. [Google Scholar] [CrossRef]

- Deng, W.; Ni, H.; Liu, Y.; Chen, H.; Zhao, H. An adaptive differential evolution algorithm based on belief space and generalized opposition-based learning for resource allocation. Appl. Soft Comput. 2022, 127, 109419. [Google Scholar] [CrossRef]

- Wang, M.; Chen, H.; Yang, B.; Zhao, X.; Hu, L.; Cai, Z.; Huang, H.; Tong, C. Toward an optimal kernel extreme learning machine using a chaotic moth-flame optimization strategy with applications in medical diagnoses. Neurocomputing 2017, 267, 69–84. [Google Scholar] [CrossRef]

- Chen, H.-L.; Wang, G.; Ma, C.; Cai, Z.-N.; Liu, W.-B.; Wang, S.-J. An efficient hybrid kernel extreme learning machine approach for early diagnosis of Parkinson’s disease. Neurocomputing 2016, 184, 131–144. [Google Scholar] [CrossRef]

- Xu, Y.; Chen, H.; Heidari, A.A.; Luo, J.; Zhang, Q.; Zhao, X.; Li, C. An Efficient Chaotic Mutative Moth-flame-inspired Optimizer for Global Optimization Tasks. Expert Syst. Appl. 2019, 129, 135–155. [Google Scholar] [CrossRef]

- Zhang, Y.; Liu, R.; Heidari, A.A.; Wang, X.; Chen, Y.; Wang, M.; Chen, H. Towards augmented kernel extreme learning models for bankruptcy prediction: Algorithmic behavior and comprehensive analysis. Neurocomputing 2021, 430, 185–212. [Google Scholar] [CrossRef]

- Deng, W.; Xu, J.; Zhao, H.; Song, Y. A Novel Gate Resource Allocation Method Using Improved PSO-Based QEA. IEEE Trans. Intell. Transp. Syst. 2020, 23, 1737–1745. [Google Scholar] [CrossRef]

- Deng, W.; Xu, J.; Song, Y.; Zhao, H. An Effective Improved Co-evolution Ant Colony Optimization Algorithm with Multi-Strategies and Its Application. Int. J. Bio-Inspired Comput. 2020, 16, 158–170. [Google Scholar] [CrossRef]

- Yu, H.; Yuan, K.; Li, W.; Zhao, N.; Chen, W.; Huang, C.; Chen, H.; Wang, M. Improved Butterfly Optimizer-Configured Extreme Learning Machine for Fault Diagnosis. Complexity 2021, 2021, 6315010. [Google Scholar] [CrossRef]

- Deng, W.; Zhang, L.; Zhou, X.; Zhou, Y.; Sun, Y.; Zhu, W.; Chen, H.; Deng, W.; Chen, H.; Zhao, H. Multi-strategy particle swarm and ant colony hybrid optimization for airport taxiway planning problem. Inf. Sci. 2022, 612, 576–593. [Google Scholar] [CrossRef]

- Song, Y.; Cai, X.; Zhou, X.; Zhang, B.; Chen, H.; Li, Y.; Deng, W.; Deng, W. Dynamic hybrid mechanism-based differential evolution algorithm and its application. Expert Syst. Appl. 2023, 213, 118834. [Google Scholar] [CrossRef]

- Deng, W.; Zhang, X.; Zhou, Y.; Liu, Y.; Zhou, X.; Chen, H.; Zhao, H. An enhanced fast non-dominated solution sorting genetic algorithm for multi-objective problems. Inf. Sci. 2022, 585, 441–453. [Google Scholar] [CrossRef]

- Hua, Y.; Liu, Q.; Hao, K.; Jin, Y. A Survey of Evolutionary Algorithms for Multi-Objective Optimization Problems With Irregular Pareto Fronts. IEEE/CAA J. Autom. Sin. 2021, 8, 303–318. [Google Scholar] [CrossRef]

- Han, X.; Han, Y.; Chen, Q.; Li, J.; Sang, H.; Liu, Y.; Pan, Q.; Nojima, Y. Distributed Flow Shop Scheduling with Sequence-Dependent Setup Times Using an Improved Iterated Greedy Algorithm. Complex Syst. Modeling Simul. 2021, 1, 198–217. [Google Scholar] [CrossRef]

- Gao, D.; Wang, G.-G.; Pedrycz, W. Solving fuzzy job-shop scheduling problem using DE algorithm improved by a selection mechanism. IEEE Trans. Fuzzy Syst. 2020, 28, 3265–3275. [Google Scholar] [CrossRef]

- Wang, G.-G.; Gao, D.; Pedrycz, W. Solving multi-objective fuzzy job-shop scheduling problem by a hybrid adaptive differential evolution algorithm. IEEE Trans. Ind. Inform. 2022, 18, 8519–8528. [Google Scholar] [CrossRef]

- Ye, X.; Liu, W.; Li, H.; Wang, M.; Chi, C.; Liang, G.; Chen, H.; Huang, H. Modified Whale Optimization Algorithm for Solar Cell and PV Module Parameter Identification. Complexity 2021, 2021, 8878686. [Google Scholar] [CrossRef]

- Mirjalili, S. Moth-flame optimization algorithm: A novel nature-inspired heuristic paradigm. Knowl.-Based Syst. 2015, 89, 228–249. [Google Scholar] [CrossRef]

- Yang, L.; Nguyen, H.; Bui, X.-N.; Nguyen-Thoi, T.; Zhou, J.; Huang, J. Prediction of gas yield generated by energy recovery from municipal solid waste using deep neural network and moth-flame optimization algorithm. J. Clean. Prod. 2021, 311, 127672. [Google Scholar] [CrossRef]

- Jiao, S.; Chong, G.; Huang, C.; Hu, H.; Wang, M.; Heidari, A.A.; Chen, H.; Zhao, X.J.E. Orthogonally adapted Harris hawks optimization for parameter estimation of photovoltaic models. Energy 2020, 203, 117804. [Google Scholar] [CrossRef]

- Singh, P.; Prakash, S. Optical network unit placement in Fiber-Wireless (FiWi) access network by Moth-Flame optimization algorithm. Opt. Fiber Technol. 2017, 36, 403–411. [Google Scholar] [CrossRef]

- Said, S.; Mostafa, A.; Houssein, E.H.; Hassanien, A.E.; Hefny, H. Moth-flame optimization based segmentation for MRI liver images. In Proceedings of the International Conference on Advanced Intelligent Systems and Informatics 2017, Cairo, Egypt, 9–11 September 2017. [Google Scholar]

- Yamany, W.; Fawzy, M.; Tharwat, A.; Hassanien, A.E. Moth-flame optimization for training multi-layer perceptrons. In Proceedings of the 2015 11th International Computer Engineering Conference (ICENCO), Cairo, Egypt, 29–30 December 2015. [Google Scholar]

- Hassanien, A.E.; Gaber, T.; Mokhtar, U.; Hefny, H. An improved moth flame optimization algorithm based on rough sets for tomato diseases detection. Comput. Electron. Agric. 2017, 136, 86–96. [Google Scholar] [CrossRef]

- Allam, D.; Yousri, D.; Eteiba, M. Parameters extraction of the three diode model for the multi-crystalline solar cell/module using Moth-Flame Optimization Algorithm. Energy Convers. Manag. 2016, 123, 535–548. [Google Scholar] [CrossRef]

- Singh, T.; Saxena, N.; Khurana, M.; Singh, D.; Abdalla, M.; Alshazly, H. Data clustering using moth-flame optimization algorithm. Sensors 2021, 21, 4086. [Google Scholar] [CrossRef]

- Kaur, K.; Singh, U.; Salgotra, R. An enhanced moth flame optimization. Neural Comput. Appl. 2020, 32, 2315–2349. [Google Scholar] [CrossRef]

- Yu, C.; Heidari, A.A.; Chen, H. A quantum-behaved simulated annealing algorithm-based moth-flame optimization method. Appl. Math. Model. 2020, 87, 1–19. [Google Scholar] [CrossRef]

- Ma, L.; Wang, C.; Xie, N.-g.; Shi, M.; Ye, Y.; Wang, L. Moth-flame optimization algorithm based on diversity and mutation strategy. Appl. Intell. 2021, 51, 5836–5872. [Google Scholar] [CrossRef]

- Pelusi, D.; Mascella, R.; Tallini, L.; Nayak, J.; Naik, B.; Deng, Y. An Improved Moth-Flame Optimization algorithm with hybrid search phase. Knowl.-Based Syst. 2020, 191, 105277. [Google Scholar] [CrossRef]

- Li, Z.; Zeng, J.; Chen, Y.; Ma, G.; Liu, G. Death mechanism-based moth–flame optimization with improved flame generation mechanism for global optimization tasks. Expert Syst. Appl. 2021, 183, 115436. [Google Scholar] [CrossRef]

- Li, C.; Niu, Z.; Song, Z.; Li, B.; Fan, J.; Liu, P.X. A double evolutionary learning moth-flame optimization for real-parameter global optimization problems. IEEE Access 2018, 6, 76700–76727. [Google Scholar] [CrossRef]

- Hongwei, L.; Jianyong, L.; Liang, C.; Jingbo, B.; Yangyang, S.; Kai, L. Chaos-enhanced moth-flame optimization algorithm for global optimization. J. Syst. Eng. Electron. 2019, 30, 1144–1159.

- Xu, L.; Li, Y.; Li, K.; Beng, G.H.; Jiang, Z.; Wang, C.; Liu, N. Enhanced moth-flame optimization based on cultural learning and Gaussian mutation. J. Bionic Eng. 2018, 15, 751–763.

- Sapre, S.; Mini, S. Opposition-based moth flame optimization with Cauchy mutation and evolutionary boundary constraint handling for global optimization. Soft Comput. 2019, 23, 6023–6041.

- Lan, K.-T.; Lan, C.-H. Notes on the distinction of Gaussian and Cauchy mutations. In Proceedings of the 2008 Eighth International Conference on Intelligent Systems Design and Applications, Kaohsiung, Taiwan, 26–28 November 2008.

- Taneja, I.; Reddy, B.; Damhorst, G.; Dave Zhao, S.; Hassan, U.; Price, Z.; Jensen, T.; Ghonge, T.; Patel, M.; Wachspress, S.; et al. Combining Biomarkers with EMR Data to Identify Patients in Different Phases of Sepsis. Sci. Rep. 2017, 7, 1–12.

- Passino, K.M. Biomimicry of bacterial foraging for distributed optimization and control. IEEE Control Syst. Mag. 2002, 22, 52–67.

- Zheng, W.; Xun, Y.; Wu, X.; Deng, Z.; Chen, X.; Sui, Y. A Comparative Study of Class Rebalancing Methods for Security Bug Report Classification. IEEE Trans. Reliab. 2021, 70, 1658–1670.

- Dang, W.; Guo, J.; Liu, M.; Liu, S.; Yang, B.; Yin, L.; Zheng, W. A Semi-Supervised Extreme Learning Machine Algorithm Based on the New Weighted Kernel for Machine Smell. Appl. Sci. 2022, 12, 9213.

- Lu, S.; Guo, J.; Liu, S.; Yang, B.; Liu, M.; Yin, L.; Zheng, W. An Improved Algorithm of Drift Compensation for Olfactory Sensors. Appl. Sci. 2022, 12, 9529.

- Zhong, T.; Cheng, M.; Lu, S.; Dong, X.; Li, Y. RCEN: A Deep-Learning-Based Background Noise Suppression Method for DAS-VSP Records. IEEE Geosci. Remote Sens. Lett. 2021, 19, 1–5.

- Xu, Y.; Chen, H.; Luo, J.; Zhang, Q.; Jiao, S.; Zhang, X. Enhanced Moth-flame optimizer with mutation strategy for global optimization. Inf. Sci. 2019, 492, 181–203.

- Zhang, H.; Heidari, A.A.; Wang, M.; Zhang, L.; Chen, H.; Li, C. Orthogonal Nelder-Mead moth flame method for parameters identification of photovoltaic modules. Energy Convers. Manag. 2020, 211, 112764.

- Shan, W.; Qiao, Z.; Heidari, A.A.; Chen, H.; Turabieh, H.; Teng, Y. Double adaptive weights for stabilization of moth flame optimizer: Balance analysis, engineering cases, and medical diagnosis. Knowl.-Based Syst. 2021, 214, 106728.

- Mirjalili, S. SCA: A Sine Cosine Algorithm for solving optimization problems. Knowl.-Based Syst. 2016, 96, 120–133.

- Yang, X.-S. Firefly algorithms for multimodal optimization. In Proceedings of the International Symposium on Stochastic Algorithms, Sapporo, Japan, 26–28 October 2009.

- Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Saremi, S.; Mirjalili, S.; Lewis, A. Grasshopper optimisation algorithm: Theory and application. Adv. Eng. Softw. 2017, 105, 30–47.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Nobile, M.S.; Cazzaniga, P.; Besozzi, D.; Colombo, R.; Mauri, G.; Pasi, G. Fuzzy Self-Tuning PSO: A settings-free algorithm for global optimization. Swarm Evol. Comput. 2018, 39, 70–85.

- Chen, W.-N.; Zhang, J.; Lin, Y.; Chen, N.; Zhan, Z.-H.; Chung, H.S.-H.; Li, Y.; Shi, Y.-H. Particle swarm optimization with an aging leader and challengers. IEEE Trans. Evol. Comput. 2012, 17, 241–258.

- Yong, J.; He, F.; Li, H.; Zhou, W. A novel bat algorithm based on collaborative and dynamic learning of opposite population. In Proceedings of the 2018 IEEE 22nd International Conference on Computer Supported Cooperative Work in Design (CSCWD), Nanjing, China, 9–11 May 2018.

- Fan, Y.; Wang, P.; Mafarja, M.; Wang, M.; Zhao, X.; Chen, H. A bioinformatic variant fruit fly optimizer for tackling optimization problems. Knowl.-Based Syst. 2021, 213, 106704.

- Nenavath, H.; Jatoth, R.K. Hybridizing sine cosine algorithm with differential evolution for global optimization and object tracking. Appl. Soft Comput. 2018, 62, 1019–1043.

- Jia, D.; Zheng, G.; Qu, B.; Khan, M.K. A hybrid particle swarm optimization algorithm for high-dimensional problems. Comput. Ind. Eng. 2011, 61, 1117–1122.

- Heidari, A.A.; Abbaspour, R.A.; Chen, H. Efficient boosted grey wolf optimizers for global search and kernel extreme learning machine training. Appl. Soft Comput. 2019, 81, 105521.

- Tu, J.; Chen, H.; Liu, J.; Heidari, A.A.; Zhang, X.; Wang, M.; Ruby, R.; Pham, Q.-V. Evolutionary biogeography-based whale optimization methods with communication structure: Towards measuring the balance. Knowl.-Based Syst. 2021, 212, 106642.

- Yu, H.; Qiao, S.; Heidari, A.A.; Bi, C.; Chen, H. Individual Disturbance and Attraction Repulsion Strategy Enhanced Seagull Optimization for Engineering Design. Mathematics 2022, 10, 276.

- Mahdavi, M.; Fesanghary, M.; Damangir, E. An improved harmony search algorithm for solving optimization problems. Appl. Math. Comput. 2007, 188, 1567–1579.

- Kaveh, A.; Khayatazad, M. A new meta-heuristic method: Ray optimization. Comput. Struct. 2012, 112, 283–294.

- Mezura-Montes, E.; Coello, C.A.C. An empirical study about the usefulness of evolution strategies to solve constrained optimization problems. Int. J. Gen. Syst. 2008, 37, 443–473.

- Rashedi, E.; Nezamabadi-Pour, H.; Saryazdi, S. GSA: A gravitational search algorithm. Inf. Sci. 2009, 179, 2232–2248.

- Belegundu, A.D.; Arora, J.S. A study of mathematical programming methods for structural optimization. Part I: Theory. Int. J. Numer. Methods Eng. 1985, 21, 1583–1599.

- Mirjalili, S.; Gandomi, A.H.; Mirjalili, S.Z.; Saremi, S.; Faris, H.; Mirjalili, S.M. Salp Swarm Algorithm: A bio-inspired optimizer for engineering design problems. Adv. Eng. Softw. 2017, 114, 163–191.

- Wang, G.; Heidari, A.A.; Wang, M.; Kuang, F.; Zhu, W.; Chen, H. Chaotic arc adaptive grasshopper optimization. IEEE Access 2021, 9, 17672–17706.

- Sadollah, A.; Bahreininejad, A.; Eskandar, H.; Hamdi, M. Mine blast algorithm: A new population based algorithm for solving constrained engineering optimization problems. Appl. Soft Comput. 2013, 13, 2592–2612.

- Huang, H.; Heidari, A.A.; Xu, Y.; Wang, M.; Liang, G.; Chen, H.; Cai, X. Rationalized Sine Cosine Optimization With Efficient Searching Patterns. IEEE Access 2020, 8, 61471–61490.

- Gandomi, A.H.; Yang, X.-S.; Alavi, A.H. Cuckoo search algorithm: A metaheuristic approach to solve structural optimization problems. Eng. Comput. 2013, 29, 17–35.

- Pan, W.-T. A new Fruit Fly Optimization Algorithm: Taking the financial distress model as an example. Knowl.-Based Syst. 2012, 26, 69–74.

- Gandomi, A.H.; Yang, X.-S. Chaotic bat algorithm. J. Comput. Sci. 2014, 5, 224–232.

- dos Santos Coelho, L. Gaussian quantum-behaved particle swarm optimization approaches for constrained engineering design problems. Expert Syst. Appl. 2010, 37, 1676–1683.

- Huang, F.-z.; Wang, L.; He, Q. An effective co-evolutionary differential evolution for constrained optimization. Appl. Math. Comput. 2007, 186, 340–356.

- Sandgren, E. Nonlinear integer and discrete programming in mechanical design. In Proceedings of the International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, Kissimmee, FL, USA, 25–28 September 1988.

- Cheng, M.-Y.; Prayogo, D. Symbiotic organisms search: A new metaheuristic optimization algorithm. Comput. Struct. 2014, 139, 98–112.

- Wang, G.G. Adaptive response surface method using inherited latin hypercube design points. J. Mech. Des. 2003, 125, 210–220.

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95-International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; pp. 1942–1948.

- Li, J.; Chen, C.; Chen, H.; Tong, C. Towards Context-aware Social Recommendation via Individual Trust. Knowl.-Based Syst. 2017, 127, 58–66.

- Li, J.; Zheng, X.-L.; Chen, S.-T.; Song, W.-W.; Chen, D.-r. An efficient and reliable approach for quality-of-service-aware service composition. Inf. Sci. 2014, 269, 238–254.

- Cao, X.; Wang, J.; Zeng, B. A Study on the Strong Duality of Second-Order Conic Relaxation of AC Optimal Power Flow in Radial Networks. IEEE Trans. Power Syst. 2022, 37, 443–455.

- Wu, Z.; Li, G.; Shen, S.; Cui, Z.; Lian, X.; Xu, G. Constructing dummy query sequences to protect location privacy and query privacy in location-based services. World Wide Web 2021, 24, 25–49.

- Wu, Z.; Wang, R.; Li, Q.; Lian, X.; Xu, G. A location privacy-preserving system based on query range cover-up for location-based services. IEEE Trans. Veh. Technol. 2020, 69, 5244–5254.

- Huang, L.; Yang, Y.; Chen, H.; Zhang, Y.; Wang, Z.; He, L. Context-aware road travel time estimation by coupled tensor decomposition based on trajectory data. Knowl.-Based Syst. 2022, 245, 108596.

- Qiu, S.; Zhao, H.; Jiang, N.; Wang, Z.; Liu, L.; An, Y.; Zhao, H.; Miao, X.; Liu, R.; Fortino, G. Multi-sensor information fusion based on machine learning for real applications in human activity recognition: State-of-the-art and research challenges. Inf. Fusion 2022, 80, 241–265.

- Wu, Z.; Li, R.; Xie, J.; Zhou, Z.; Guo, J.; Xu, X. A user sensitive subject protection approach for book search service. J. Assoc. Inf. Sci. Technol. 2020, 71, 183–195.

- Wu, Z.; Shen, S.; Zhou, H.; Li, H.; Lu, C.; Zou, D. An effective approach for the protection of user commodity viewing privacy in e-commerce website. Knowl.-Based Syst. 2021, 220, 106952.

- Zhang, X.; Zheng, J.; Wang, D.; Zhao, L. Exemplar-Based Denoising: A Unified Low-Rank Recovery Framework. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 2538–2549.

- Hu, K.; Zhao, L.; Feng, S.; Zhang, S.; Zhou, Q.; Gao, X.; Guo, Y. Colorectal polyp region extraction using saliency detection network with neutrosophic enhancement. Comput. Biol. Med. 2022, 147, 105760.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).