Abstract

Due to the hardware limitations of satellite sensors, the spatial resolution of multispectral (MS) images is still lower than that of panchromatic (PAN) images. Obtaining MS images with high spatial resolution is therefore especially important in the field of remote sensing image fusion. In order to obtain MS images with high spatial and spectral resolutions, a novel MS and PAN image fusion method based on weighted mean curvature filter (WMCF) decomposition is proposed in this paper. Firstly, a weighted local spatial frequency-based (WLSF) fusion method is utilized to fuse all the bands of an MS image to generate an intensity component IC. In accordance with an image matting model, IC is taken as the original α channel for spectral estimation to obtain foreground and background images. Secondly, a PAN image is decomposed into small-scale (SS), large-scale (LS), and basic images by the WMCF and a Gaussian filter (GF). The multi-scale morphological detail measure (MSMDM) values are used as the inputs of the Parameters Automatic Calculation Pulse Coupled Neural Network (PAC-PCNN) model. With the MSMDM-guided PAC-PCNN model, the basic image and IC are effectively fused. The fused image and the LS and SS images are linearly combined to construct the final α channel. Finally, in accordance with the image matting model, the foreground image, the background image, and the final α channel are combined to reconstruct the final fused image. The experimental results on four image pairs show that the proposed method achieves superior results in terms of subjective and objective evaluations. In particular, the proposed method can fuse MS and PAN images with different spatial and spectral resolutions with higher operational efficiency, making it an effective means of obtaining images with higher spatial and spectral resolution.

1. Introduction

Nowadays, remote sensing images with many different resolutions can be obtained. Panchromatic (PAN) images have high spatial resolution and reflect the spatial structure of the target region. Multispectral (MS) images contain rich spectral information, which is suitable for recognizing and interpreting the target region, but their spatial resolution is low. Fusing MS and PAN images yields images that represent spatial detail better while retaining the spectral features of the MS images, thus providing a richer description of the target. The fusion of MS and PAN images is also known as pan-sharpening. With the improvement of remote sensing satellite sensor technology and of information integration and processing technology, pan-sharpening has been widely applied in military reconnaissance, remote sensing measurement, forest protection, mineral detection, image classification, and computer vision.

Pan-sharpening methods can be divided into several categories according to their fusion strategies. The component substitution-based (CS) methods mainly include the intensity hue saturation (IHS) transform-based method [1], the principal component analysis-based (PCA) method [2], the adaptive Gram–Schmidt-based (AGS) method [3], etc. Moreover, Choi et al. [4] proposed a partial replacement-based adaptive component substitution (PRACS) method, which generates high-resolution fused components by partial substitution and injects high-frequency details based on statistical ratios. Vivone et al. [5] proposed a band-dependent spatial-detail with physical constraints (BDSD-PC) method, which adds physical constraints to the optimization problem to guide the band-dependent spatial-detail method toward a more robust solution. In general, the CS-based methods can effectively enhance the spatial resolution of the fused image, but they may cause spectral distortion to some extent.

The multi-resolution analysis-based (MRA) methods mainly include the Wavelet Transform-based (WT) [6], Curvelet Transform-based [7], and Non-Subsampled Shearlet Transform-based (NSST) [8] methods. In the MRA-based methods, the source image is decomposed into several sub-images at different scales by a multi-scale decomposition method. Then, different fusion rules are designed for the sub-images at different scales, and the sub-images are fused accordingly. Finally, the fused image is obtained by the inverse transformation. In general, the MRA-based methods can obtain higher spectral resolution, but their spatial resolution is inferior to that of the CS-based methods.

Moreover, Fu et al. [9] proposed a variational local gradient constraints-based (VLGC) method. This method first calculates the gradient differences between the PAN and MS images in different regions and bands, and then adds a local gradient constraint to the optimization objective so as to fully utilize the spatial information contained in the PAN image. Wu et al. [10] proposed a multi-objective decision-based (MOD) method, which designs a fusion model based on multi-objective decision making and then performs a generalized sharpening operation by spectral modulation. Khan et al. [11] proposed a Brovey transform-based method that integrates the Brovey transform and a Laplacian filter to improve pan-sharpening performance.

In recent years, edge-preserving filters (EPF) have been extensively applied in image processing. Li et al. [12] proposed a pan-sharpening method based on a guided filter, which is used to enhance the spectral resolution of the fused image. Because an EPF smooths an image while preserving its edges, it can fully utilize the spatial information contained in the original images; thus, EPFs are often used for image decomposition. Another important tool is the Pulse Coupled Neural Network (PCNN) model [13]. After several iterations, the PCNN model can accurately extract the features contained in an image, which makes it suitable for image fusion [14,15]. The primary obstacle for the traditional PCNN model is how to set its free parameters appropriately. Thus, the Parameters Automatic Calculation Pulse Coupled Neural Network (PAC-PCNN) model [16] is introduced into the pan-sharpening process. All parameters of the PAC-PCNN model are calculated automatically from its inputs, and the model converges quickly.

In general, the CS-based methods produce fused images with higher spatial resolution but lose much spectral information, whereas the MRA-based methods retain the spectral information but lose many spatial details. In other words, the MRA-based methods tend to cause spatial distortion, while the CS-based methods tend to cause spectral distortion, so it is important to balance the two. Thus, a novel MS and PAN image fusion method based on weighted mean curvature filter (WMCF) decomposition is proposed. The proposed method combines the advantages of EPF decomposition and the PAC-PCNN model, and focuses on solving the spatial and spectral distortion problems. Firstly, a weighted local spatial frequency-based (WLSF) method is used to fuse all the bands of the MS image to generate an intensity component IC. According to an image matting model, IC is used as the original α channel for spectral estimation to obtain foreground and background images. Secondly, a PAN image is decomposed into a small-scale (SS) image, a large-scale (LS) image, and a basic image by the WMCF and a Gaussian filter (GF). The multi-scale morphological detail measure (MSMDM) values are used as the inputs of the PAC-PCNN model, which then fuses the basic image and IC. The fused image and the LS and SS images are linearly combined to construct the final α channel. Finally, the final α channel and the foreground and background images are combined in accordance with the image matting model to acquire the final fused image.

The five primary contributions of this paper are listed below:

- (1) An image matting model is introduced to effectively enhance the spectral resolution of the fused image; the spectral information contained in the MS image is preserved mainly through this model;

- (2) An MSMDM method is used to measure spatial detail information within a local region. The multi-scale morphological gradient operator extracts the gradient information of the original image at different scales, and summing the multi-scale morphological gradients within a local region both measures clarity and suppresses noise;

- (3) A PAC-PCNN model is introduced into the fusion process, with the MSMDM values as its inputs. All parameters of the PAC-PCNN model are calculated automatically from the inputs, and the model converges quickly;

- (4) The WLSF method improves the spatial frequency calculation by adding the diagonal directions to the original row and column directions. In addition, the weighting factor for the row and column frequencies is determined from the Euclidean distance to the central pixel;

- (5) A WMCF method is used for multi-resolution image decomposition; it is robust to scale and contrast changes, fast to compute, and edge-preserving.

The remainder of the paper is organized as follows. Section 2 introduces the related methods, including the image matting model, the MSMDM method, the PAC-PCNN model, the WLSF method, and the WMCF method. Section 3 describes the fusion process in detail. Section 4 presents comparison experiments on four image pairs and analyzes the results. Section 5 concludes the paper and outlines future work.

2. Related Methods

2.1. Image Matting Model

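On the basis of an image matting model [17], an input image D can be separated into a foreground image F and a background image B by a linear synthesis model, i.e., the color $D_i$ of the i-th pixel is a linear combination of the corresponding foreground color $F_i$ and background color $B_i$, as shown below:

$$D_i = \alpha_i F_i + (1 - \alpha_i) B_i \tag{1}$$

where $F_i$ is the foreground color of the i-th pixel, $B_i$ is the background color of the i-th pixel, and $\alpha_i$ is the opacity of the foreground image F at that pixel. Once the input D and the original α channel are determined, the foreground image F and the background image B are estimated by solving:

$$\min_{F,B} \sum_{i} \sum_{c} \Big[ \big(\alpha_i F_i^c + (1-\alpha_i) B_i^c - D_i^c\big)^2 + |\alpha_{i_x}| \big( (F_{i_x}^c)^2 + (B_{i_x}^c)^2 \big) + |\alpha_{i_y}| \big( (F_{i_y}^c)^2 + (B_{i_y}^c)^2 \big) \Big] \tag{2}$$

where c indexes the spectral channels, and $F_{i_x}^c$, $F_{i_y}^c$, $B_{i_x}^c$, $B_{i_y}^c$, $\alpha_{i_x}$, and $\alpha_{i_y}$ are the horizontal and vertical derivatives of the foreground color, background color, and α channel, respectively.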
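The linear synthesis model of Formula (1) can be illustrated with a few lines of code. The sketch below is written in Python with NumPy (an assumption, since no implementation is given here) and only composites an image from given α, F, and B; it does not solve the least-squares problem of Formula (2), which in practice needs a sparse linear solver.

```python
import numpy as np

def matting_composite(alpha, fg, bg):
    """Composite D = alpha * F + (1 - alpha) * B, band by band (Formula (1)).

    alpha : (H, W) opacity map with values in [0, 1]
    fg, bg: (H, W, C) foreground and background images with C bands
    """
    a = np.asarray(alpha, dtype=float)[..., None]  # one alpha shared by all bands
    return a * fg + (1.0 - a) * bg


rng = np.random.default_rng(0)
F = rng.random((4, 4, 3))
B = rng.random((4, 4, 3))
D = matting_composite(np.full((4, 4), 0.25), F, B)
print(D.shape)
```

With α = 1 the composite reduces to F and with α = 0 it reduces to B. In the proposed method, F and B are kept fixed while the original α (IC) is later replaced by a sharper α channel built from the PAN image.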

2.2. Multi-Scale Morphological Detail Measure

A multi-scale morphological detail measure (MSMDM) method [18] is used to measure spatial detail information within a local region. The multi-scale morphological gradient operator extracts the gradient information of an original image at different scales, and summing the multi-scale morphological gradients of a local region helps both to measure clarity and to suppress noise within that region. MSMDM is implemented as follows:

Firstly, the multi-scale structural elements should be constructed, as detailed below:

where the basic structural element has radius r, and n is the number of scales.

Structural elements of different shapes extract different kinds of features from the original image, and they can be extended to several scales by changing their size. Using these structural elements, comprehensive gradient features can then be extracted from the original image.

Then, a multi-scale morphological gradient operator is used to extract the gradient features at scale t from an image g.

where ⊕ and ⊖ denote the morphological dilation and erosion operators, respectively.

The gradient expresses the local pixel value differences in the original image. In particular, dilation and erosion yield the maximum and minimum pixel values within a local area of the original image, respectively, and the morphological gradient is the difference between the dilation and erosion results. Thus, the morphological gradient fully captures the local gradient information of the original image, and multi-scale structural elements allow the gradients to be extracted at different scales.

Moreover, the gradients at all scales are integrated into the multi-scale morphological gradient (MSMG).

where the weight of the gradient at scale t is given by:

Weighted summation is an effective method for fusing multi-scale morphological features: the gradient at each scale is multiplied by its weight, and the weighted gradients of all scales are summed to obtain the multi-scale morphological gradient values.

In this paper, larger weights are assigned to the gradients at smaller scales and smaller weights to the gradients at larger scales. In smooth parts of the image, the pixel values vary little and the corresponding gradient values are small, whereas detailed regions produce large gradients. Thus, the weighted sum of the gradients over different scales reflects the spatial detail information.

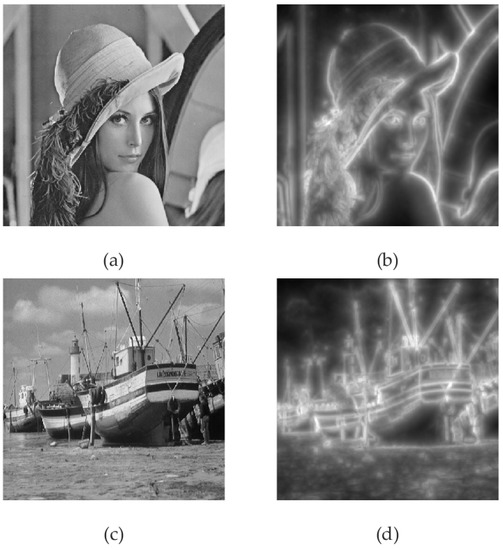

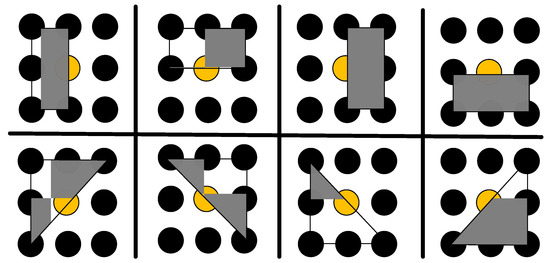

Finally, the multi-scale morphological gradients within a local region are summed to calculate the MSMDM values, as given in Formula (9). Two examples of MSMDM are shown in Figure 1.

Figure 1.

Two examples of MSMDM. (a) Input image of the first example; (b) MSMDM result of the first example; (c) Input image of the second example; and (d) MSMDM result of the second example.

Three parameters need to be set in MSMDM: the shape of the structural element, the size of the basic structural element, and the number of scales. In this paper, a flat structural element is chosen. To suppress noise, the radius of the basic structural element is set to 4, and the radius of the structural element at the n-th scale is (2n + 1). Finally, the number of scales n was tested experimentally from 4 to 9, and the best results were obtained with n = 6.
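The MSMDM steps above (multi-scale flat structuring elements, morphological gradient as dilation minus erosion, weighted sum over scales, and a local window sum) can be sketched in Python with NumPy. Two details are assumptions because the corresponding formulas are not reproduced in this text: the scale-t element is taken as a flat disk of radius t·r, and the scale weight is taken as w_t = 1/(2t + 1), which decreases with scale as described. The defaults are kept small for speed and are not the paper's settings.

```python
import numpy as np

def disk(radius):
    """Flat, disk-shaped structuring element as a boolean mask."""
    y, x = np.mgrid[-radius:radius + 1, -radius:radius + 1]
    return x * x + y * y <= radius * radius

def _flat_morph(img, se, reduce_fn, pad_value):
    """Grey-scale dilation (np.maximum) or erosion (np.minimum) with a flat SE."""
    r = se.shape[0] // 2
    padded = np.pad(img, r, mode="constant", constant_values=pad_value)
    h, w = img.shape
    out = np.full((h, w), pad_value)
    for dy, dx in zip(*np.nonzero(se)):  # visit every offset inside the SE
        out = reduce_fn(out, padded[dy:dy + h, dx:dx + w])
    return out

def msmdm(img, base_radius=1, n_scales=3, window=1):
    """Multi-scale morphological detail measure (sketch under stated assumptions)."""
    img = np.asarray(img, dtype=float)
    msmg = np.zeros_like(img)
    for t in range(1, n_scales + 1):
        se = disk(t * base_radius)                        # ASSUMPTION: scale-t SE
        dil = _flat_morph(img, se, np.maximum, -np.inf)   # local maximum
        ero = _flat_morph(img, se, np.minimum, np.inf)    # local minimum
        msmg += (dil - ero) / (2 * t + 1)                 # ASSUMPTION: w_t = 1/(2t+1)
    # MSMDM: sum of MSMG over a (2*window+1) x (2*window+1) neighbourhood
    k = 2 * window + 1
    padded = np.pad(msmg, window, mode="constant")
    h, w = img.shape
    return sum(padded[dy:dy + h, dx:dx + w] for dy in range(k) for dx in range(k))
```

A flat region gives MSMDM values of zero, while pixels near an edge receive large values; the radius of the largest element controls how far from an edge the response extends.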

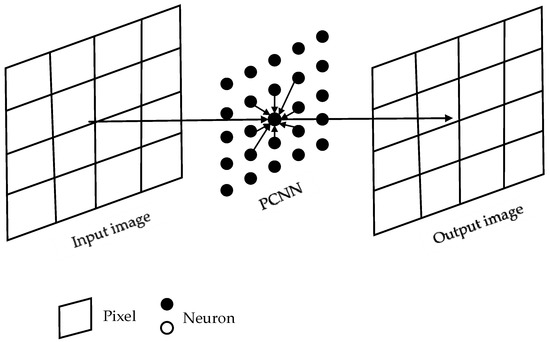

2.3. Parameters Automatic Calculation Pulse Coupled Neural Network

The Pulse Coupled Neural Network (PCNN) model was proposed by Johnson et al. by improving and optimizing the Eckhorn and Rybak models [13]. A single neuron consists of three parts: the input part, the linking part, and the pulse generator. Unlike conventional artificial neural networks, the PCNN model does not require any training. In the PCNN model, each pixel of an image corresponds one-to-one to a neuron. The connection model of the PCNN neurons is shown in Figure 2.

Figure 2.

Connection model of the PCNN neurons.

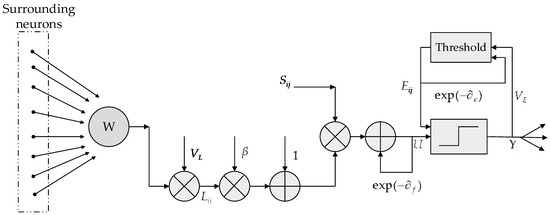

The primary obstacle for the traditional PCNN model is how to set its free parameters appropriately. To overcome this, the Parameters Automatic Calculation Pulse Coupled Neural Network (PAC-PCNN) model [16] is introduced into the pan-sharpening process. All parameters of the PAC-PCNN model are calculated automatically from its inputs, and the model converges quickly. In this paper, the MSMDM values of the original image are taken as the inputs of the PAC-PCNN model. Figure 3 shows the structure of the PAC-PCNN model.

Figure 3.

The structure of the PAC-PCNN model.

In the above PAC-PCNN model, $L_{ij}[n]$ denotes the connection input at position (i, j) in the n-th iteration, $S_{ij}$ denotes the input information at position (i, j), $V_L$ denotes the amplitude of the connection input, and $U_{ij}[n]$ denotes the internal activity item. $\alpha_f$ denotes the exponential attenuation coefficient of the internal activity, and $\beta$ denotes the connection strength. The output $Y_{ij}[n]$ has two states: ignition ($Y_{ij}[n] = 1$) and non-ignition ($Y_{ij}[n] = 0$). Its state depends on two inputs: the current internal activity $U_{ij}[n]$ and the previous dynamic threshold $E_{ij}[n-1]$. Moreover, $\alpha_e$ and $V_E$ denote the exponential attenuation coefficient and the amplification coefficient of $E_{ij}[n]$, respectively. $W_{ijkl}$ denotes the connection matrix, whose value is generally determined by experience.

The PAC-PCNN model is mainly used for image segmentation, but it is also an effective tool for image fusion. PAC-PCNN-based segmentation groups pixels mainly according to their intensity, so the PAC-PCNN-based image fusion problem is closely related to image segmentation. Thus, the PAC-PCNN model is introduced into the fusion process.
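The neuron described above can be sketched as the usual simplified PCNN iteration in Python with NumPy. The update order (linking input from the previous outputs, internal activity, binary output compared with the previous threshold, threshold update) follows the symbol descriptions in the text; the 3 × 3 linking kernel and the zero initial state are assumptions. The function returns how many times each neuron fires, the quantity used later by the fusion rule.

```python
import numpy as np

# ASSUMPTION: a commonly used 3x3 linking kernel W_ijkl; the text only says
# that its value is determined by experience.
W = np.array([[0.5, 1.0, 0.5],
              [1.0, 0.0, 1.0],
              [0.5, 1.0, 0.5]])

def _link(y):
    """Weighted sum of the previous outputs of the 8 neighbours."""
    p = np.pad(y, 1)
    h, w = y.shape
    return sum(W[a, b] * p[a:a + h, b:b + w] for a in range(3) for b in range(3))

def pcnn_fire_counts(S, alpha_f, beta, V_L, alpha_e, V_E, n_iter):
    """Run a simplified PCNN on stimulus S and return the firing count per neuron."""
    S = np.asarray(S, dtype=float)
    U = np.zeros_like(S)   # internal activity U_ij
    E = np.zeros_like(S)   # dynamic threshold E_ij (ASSUMPTION: starts at 0)
    Y = np.zeros_like(S)   # output Y_ij (1 = ignition, 0 = non-ignition)
    T = np.zeros_like(S)   # accumulated number of ignitions
    for _ in range(n_iter):
        L = V_L * _link(Y)                               # linking input from Y[n-1]
        U = np.exp(-alpha_f) * U + S * (1.0 + beta * L)  # internal activity
        Y = (U > E).astype(float)                        # compare with E[n-1]
        E = np.exp(-alpha_e) * E + V_E * Y               # decay, then boost after firing
        T += Y
    return T
```

Because the threshold jumps by V_E after each ignition and then decays, strongly stimulated neurons fire again sooner, so the firing count acts as an activity measure of the input.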

2.4. Weighted Local Spatial Frequency

The traditional spatial frequency (SF) [19] describes spatial information only in the horizontal and vertical directions and lacks diagonal information, so some texture and detail information is lost. However, this detail information is crucial for image fusion. SF is calculated as follows:

$$SF(i,j) = \sqrt{RF(i,j)^2 + CF(i,j)^2}$$

where $RF(i,j)$ and $CF(i,j)$ denote the row and column frequencies at position (i, j), respectively.

In this paper, the spatial frequency calculation is improved by adding the diagonal directions to the original one. In addition, the weighting factor for the row and column frequencies is determined from the Euclidean distance. The improved spatial frequency, called the weighted local spatial frequency (WLSF), covers the row, column, and diagonal directions; the spatial frequencies in the eight directions are weighted and summed. WLSF reflects the activity of each pixel within its neighborhood, and a larger value indicates that the local area contains more spatial detail information. WLSF is calculated as follows:

where $RF(i,j)$, $CF(i,j)$, and $DF(i,j)$ denote the row, column, and diagonal frequencies at position (i, j), respectively. RF and CF cover the horizontal and vertical directions, and DF covers the main and anti-diagonal directions. They are defined as follows:

where (2M + 1) × (2N + 1) denotes the size of the local area, and $f(i,j)$ denotes the pixel value at position (i, j).
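The following Python/NumPy sketch shows one possible way to compute a WLSF map over a (2M + 1) × (2N + 1) window using the eight neighbour directions. The directional weights are hypothetical: `w_rc` for the row and column terms and `w_diag = 1/√2` for the diagonal terms (the inverse Euclidean distance of a diagonal neighbour), and combining the squared directional frequencies under a single square root is also an assumption, since the exact formulas are not reproduced here.

```python
import numpy as np

def _box_mean(a, M, N):
    """Mean of `a` over a (2M+1) x (2N+1) window centred on each pixel (zero padding)."""
    p = np.pad(a, ((M, M), (N, N)))
    h, w = a.shape
    total = sum(p[u:u + h, v:v + w] for u in range(2 * M + 1) for v in range(2 * N + 1))
    return total / ((2 * M + 1) * (2 * N + 1))

def wlsf(img, M=1, N=1, w_rc=1.0, w_diag=1.0 / np.sqrt(2.0)):
    """Hypothetical weighted local spatial frequency over 8 neighbour directions."""
    f = np.asarray(img, dtype=float)
    p = np.pad(f, 1, mode="edge")
    c = p[1:-1, 1:-1]
    # squared differences to horizontal (row), vertical (column) and diagonal neighbours
    horiz = (c - p[1:-1, :-2]) ** 2 + (c - p[1:-1, 2:]) ** 2
    vert = (c - p[:-2, 1:-1]) ** 2 + (c - p[2:, 1:-1]) ** 2
    diag = ((c - p[:-2, :-2]) ** 2 + (c - p[2:, 2:]) ** 2      # main diagonal
            + (c - p[:-2, 2:]) ** 2 + (c - p[2:, :-2]) ** 2)   # anti-diagonal
    rf2 = _box_mean(horiz, M, N)   # squared row frequency
    cf2 = _box_mean(vert, M, N)    # squared column frequency
    df2 = _box_mean(diag, M, N)    # squared diagonal frequency
    return np.sqrt(w_rc * (rf2 + cf2) + w_diag * df2)
```

The diagonal differences are down-weighted because diagonal neighbours lie farther from the central pixel than horizontal and vertical ones.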

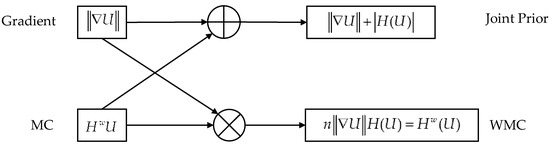

2.5. Weighted Mean Curvature

The weighted mean curvature (WMC) [20] offers sampling invariance, scale invariance, contrast invariance, and computational efficiency. For a given image U, WMC is calculated as follows:

where ∇ and ∇· denote the gradient and divergence operators, respectively. In particular, for a two-dimensional image, i.e., n = 2, Formula (21) can be simplified as follows:

where Δ denotes the isotropic Laplace operator, $U_x$ and $U_y$ denote the partial derivatives in the x and y directions, and $U_{xx}$, $U_{xy}$, and $U_{yy}$ denote the corresponding second-order partial derivatives. The detailed derivation can be found in [20]. WMC can be regarded both as the gradient weighted by the mean curvature (MC) and as the mean curvature weighted by the gradient. The relationship between WMC, the gradient, and MC is shown in Figure 4.

Figure 4.

The relationship between the gradient, H(U), and H_w(U).

Formula (22) defines WMC in continuous form. Since the pixels of a real image are discrete, a discrete form of WMC is required. For a 3 × 3 window, eight normal directions are considered. Figure 5 shows the eight possible normal directions.

Figure 5.

Eight possible normal directions.

The eight distances can be calculated from the above eight kernels as follows:

where ∗ denotes the convolution operation. The discrete form of Formula (22) is shown below:

For convenience, the WMC filtering process can be written compactly as a single WMC filtering operation that maps an input image to its filtered image.

3. Methodology

3.1. Fusion Steps

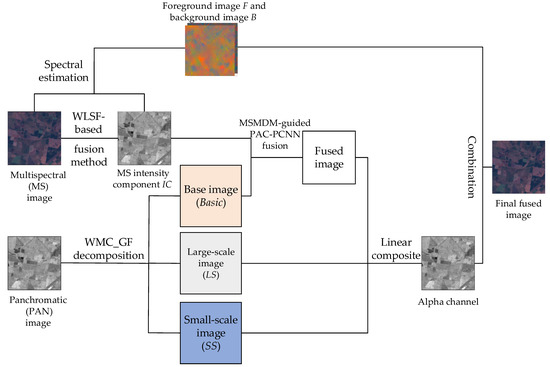

Figure 6 shows the steps of the proposed MS and PAN image fusion method based on weighted mean curvature filter (WMCF) decomposition, and the detailed fusion process is described below:

Figure 6.

The fusion steps of the proposed method.

- (1) Calculation of the Intensity Component.

The main purpose of this step is to fuse all bands of the MS image in accordance with a WLSF-based fusion method, thus generating an intensity component.

If a simple averaging rule is used, some details and texture information contained in the original image will be lost. Before fusion, the different regions of the image should therefore be weighted according to the importance of the information they contain, and the choice of weighting factors directly affects the fusion result. Thus, a WLSF-based fusion method is utilized to fuse all the bands of an MS image to generate an intensity component IC. More details about WLSF can be found in Section 2.4.

A single pixel cannot accurately represent all the features within a local area. WLSF makes full use of the pixels in a local area, including the eight neighbors in the horizontal, vertical, main-diagonal, and anti-diagonal directions; different weights are assigned according to the Euclidean distance from the center pixel and then used in the weighted calculation. WLSF can thus serve as a quantitative index of important information such as details and textures. Pixels with larger WLSF values carry more spatial detail information, such as details and textures, and are therefore given larger weights in the fusion process. Thus, an adaptive weighted-averaging coefficient is designed based on the WLSF value, as shown below:

where n denotes the number of bands in the MS image, $\mathrm{WLSF}_d(i,j)$ denotes the WLSF value of the d-th band at position (i, j), $MS_d(i,j)$ denotes the pixel value of the d-th band at position (i, j), $w_d(i,j)$ denotes the weighting factor of the d-th band at position (i, j), and $IC(i,j)$ denotes the pixel value at position (i, j) in IC.
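A minimal Python/NumPy sketch of the adaptive weighted average, assuming the weight of each band is its WLSF value normalised by the sum over all bands, i.e. w_d = WLSF_d / Σ_k WLSF_k (a hypothetical reading, since the formula is not reproduced here). The WLSF maps are taken as precomputed inputs, and the fallback to a plain average where every WLSF value is zero is also an assumption.

```python
import numpy as np

def intensity_component(ms, wlsf_maps, eps=1e-12):
    """WLSF-weighted average of the MS bands (hypothetical normalised weights).

    ms, wlsf_maps: arrays of shape (n, H, W) with the n bands and their WLSF maps.
    """
    ms = np.asarray(ms, dtype=float)
    s = np.asarray(wlsf_maps, dtype=float)
    total = s.sum(axis=0, keepdims=True)
    n = ms.shape[0]
    # w_d = WLSF_d / sum_k WLSF_k; plain average where all WLSF values are zero
    weights = np.where(total > eps, s / np.maximum(total, eps), 1.0 / n)
    return (weights * ms).sum(axis=0)
```

Since the weights at every pixel sum to one, IC stays within the range of the band values, and bands with more local detail dominate wherever their WLSF is larger.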

- (2) Spectral Estimation.

The main purpose of this step is to extract the foreground and background colors in accordance with an image matting model, thus preserving the spectral information contained in the MS image.

Setting IC as the original α channel, a foreground image F and a background image B are obtained in accordance with Formula (2) in Section 2.1 to facilitate subsequent image reconstruction operations using F and B.

- (3) Multi-scale Decomposition.

The main purpose of this step is to perform multi-scale decomposition for the PAN image on the basis of WMCF and Gaussian filter (GF), so as to sufficiently extract the spatial detail information contained in the PAN image.

The multi-scale decomposition is based on WMCF and GF. By Formulas (30)–(34) in Section 3.2, a PAN image can be decomposed into three sub-images with different scales, which are a large scale image LS, a small scale image SS and a basic image, respectively. More specific details about multi-scale decomposition can be found in Section 3.2.

- (4) Component Fusion.

The main purpose of this step is to fuse the primary spatial information contained in the MS and PAN images in accordance with a MSMDM-guided PAC-PCNN fusion strategy, thus improving the spatial resolution of the final fused image.

The MSMDM values of the basic image and of IC are used as the two inputs of the PAC-PCNN model. All parameters of the PAC-PCNN model are calculated automatically from these inputs, and the model converges quickly. In this paper, the maximum number of iterations of the PAC-PCNN model is set to 2000. When the maximum number of iterations is reached, the iteration stops and a fused image FA is obtained. More details about component fusion can be found in Section 3.3.

- (5) Image Reconstruction.

The main purpose of this step is to substitute the final α channel, F and B, into an image matting model to obtain the final fused image.

A fused image FB is reconstructed through a linear combination of SS, LS, and FA, as shown in Formula (28), and FB is then used as the final α channel. According to Formula (1) in Section 2.1, the final fusion result HM is calculated by combining the final α channel, F, and B, as shown in Formula (29).
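The reconstruction step can be sketched in Python with NumPy as follows. The coefficients of the linear combination in Formula (28) are not reproduced here, so the `weights` argument is hypothetical; unit weights are used by default because SS + LS + Basic reproduces the PAN image, so unit weights amount to replacing the basic image by FA. Clipping the α channel to [0, 1] is also an assumption.

```python
import numpy as np

def reconstruct(ss, ls, fa, fg, bg, weights=(1.0, 1.0, 1.0)):
    """Build the final alpha (FB) from SS, LS and FA, then recombine F and B.

    ASSUMPTION: hypothetical linear weights (unit by default) and alpha
    clipped to [0, 1]; fg and bg have shape (H, W, C).
    """
    w_ss, w_ls, w_fa = weights
    alpha = np.clip(w_ss * ss + w_ls * ls + w_fa * fa, 0.0, 1.0)  # FB
    a = alpha[..., None]                 # the same alpha for every band
    return a * fg + (1.0 - a) * bg       # matting model with the final alpha
```

Only the α channel changes between spectral estimation and reconstruction, which is how the method injects PAN detail while keeping the spectral content carried by F and B.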

Finally, the proposed method is summarized in Algorithm 1.

| Algorithm 1: A WMCF-based pan-sharpening method |
| --- |
| Input: low-resolution MS image and high-resolution PAN image |
| Output: high-resolution MS image |
| 1: Calculation of the Intensity Component. A WLSF-based fusion method is utilized to fuse all the bands of the MS image to generate an intensity component IC. |
| 2: Spectral Estimation. Setting IC as the original α channel, a foreground image F and a background image B are obtained in accordance with Formula (2). |
| 3: Multi-scale Decomposition. Based on WMCF and GF, PAN is decomposed by Formulas (30)–(34) into three images at different scales: a large-scale image LS, a small-scale image SS, and a basic image. |
| 4: Component Fusion. An MSMDM-guided PAC-PCNN fusion strategy is used to fuse the basic image and IC, yielding a fused image FA. |
| 5: Image Reconstruction. A fused image FB is reconstructed through Formula (28) and used as the final α channel. According to Formula (1), the final fusion result HM is calculated by combining the final α channel, F, and B. |
| 6: Return HM |

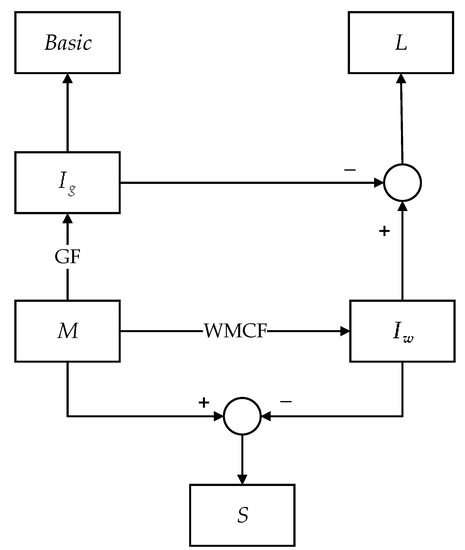

3.2. Multi-scale Decomposition Steps

WMCF can be used for image decomposition because it preserves edge information while smoothing the image. In addition, the Gaussian filter (GF) is a widely used image smoothing operator. In this paper, the multi-scale decomposition of the PAN image is based on WMCF and GF, which decompose the input image into three sub-images at different scales: a large-scale image LS, a small-scale image SS, and a basic image Basic. The specific decomposition process is as follows:

Firstly, the input image M is processed using WMCF and GF to obtain the filtered images Iw and Ig, respectively.

Secondly, SS is obtained by the difference of M and Iw. LS is obtained by the difference of Iw and Ig.

Finally, Ig is used as the basic image, i.e., Basic. Formulas (30)–(34) show the specific computational procedure. The flow chart of the image decomposition method based on WMCF and GF is shown in Figure 7.

where ρ and δ represent the radius and the variance of the GF, respectively, M represents the PAN image, and GF(·) and WMCF(·) denote the GF and WMCF operations, respectively.
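The three-scale decomposition can be sketched in Python with NumPy. Two assumptions are made: the GF is applied to the WMCF output Iw (the text does not state whether it is applied to M or to Iw), and, because the discrete WMC filter is not reproduced here, a 3 × 3 median filter is used as a hypothetical stand-in for WMCF. The Gaussian width is given as a standard deviation `sigma`, whereas the text describes δ as a variance. Whatever smoothers are used, SS + LS + Basic = M holds exactly by construction.

```python
import numpy as np

def gaussian_filter(img, radius, sigma):
    """Separable Gaussian smoothing with reflective borders (window 2*radius+1)."""
    x = np.arange(-radius, radius + 1)
    k = np.exp(-x ** 2 / (2.0 * sigma ** 2))
    k /= k.sum()
    p = np.pad(img, radius, mode="reflect")
    rows = np.apply_along_axis(np.convolve, 1, p, k, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, k, mode="valid")

def median3(img):
    """Hypothetical stand-in for WMCF: a 3x3 median filter (NOT the WMC filter)."""
    p = np.pad(img, 1, mode="edge")
    h, w = img.shape
    return np.median(np.stack([p[a:a + h, b:b + w] for a in range(3) for b in range(3)]), axis=0)

def decompose(M, smooth=median3, radius=2, sigma=1.0):
    """Split M into small-scale, large-scale and basic images."""
    M = np.asarray(M, dtype=float)
    Iw = smooth(M)                           # WMCF(M) in the paper
    Ig = gaussian_filter(Iw, radius, sigma)  # ASSUMPTION: GF applied to Iw
    SS = M - Iw                              # small-scale detail
    LS = Iw - Ig                             # large-scale detail
    basic = Ig                               # basic image
    return SS, LS, basic
```

The exact reconstruction property is what allows the basic image to be swapped for the fused image FA later without losing the SS and LS detail layers.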

Figure 7.

The flow chart of the multi-scale decomposition method based on WMCF and GF.

3.3. Component Fusion Steps

The PAC-PCNN model is introduced into the image fusion process. The MSMDM values of the basic image and of the MS intensity component IC are used as the two inputs of the PAC-PCNN model. The detailed design of MSMDM is given in Section 2.2.

There are five free parameters in the PAC-PCNN model: $\alpha_f$, $\beta$, $V_L$, $\alpha_e$, and $V_E$. Since $\beta$ and $V_L$ appear only as a product, the weighted connection strength is denoted by $\lambda = \beta V_L$. According to the analysis in [16], all the free parameters can be calculated adaptively from the input information, which resolves the difficulty of setting free parameters in the traditional PCNN model. They are calculated automatically by Formulas (35)–(38):

$$\alpha_f = \log\left(\frac{1}{\sigma(S)}\right) \tag{35}$$

$$\lambda = \frac{S_{\max}/S' - 1}{6} \tag{36}$$

$$V_E = e^{-\alpha_f} + 1 + 6\lambda \tag{37}$$

$$\alpha_e = \ln\left(\frac{V_E}{S'\,\dfrac{1 - e^{-3\alpha_f}}{1 - e^{-\alpha_f}} + 6\lambda e^{-\alpha_f}}\right) \tag{38}$$

where $\sigma(S)$ is the standard deviation of the normalized input image S, and $S'$ and $S_{\max}$ indicate the normalized Otsu threshold and the maximum intensity of the input image, respectively.
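The automatic parameter calculation can be sketched in Python with NumPy. The code follows the standard automatic-setting rules of the simplified PCNN (α_f = ln(1/σ), λ = (S_max/S′ − 1)/6, V_E = e^(−α_f) + 1 + 6λ, and the α_e expression), which we assume correspond to Formulas (35)–(38). The histogram-based Otsu helper is our own minimal implementation, not a library call, and the input is assumed to be non-constant.

```python
import numpy as np

def otsu_threshold(x, bins=256):
    """Otsu threshold of values in [0, 1] (maximises between-class variance)."""
    hist, edges = np.histogram(x, bins=bins, range=(0.0, 1.0))
    p = hist / hist.sum()
    centers = (edges[:-1] + edges[1:]) / 2.0
    w0 = np.cumsum(p)            # probability of the lower class
    mu = np.cumsum(p * centers)  # first moment of the lower class
    with np.errstate(divide="ignore", invalid="ignore"):
        var_b = (mu[-1] * w0 - mu) ** 2 / (w0 * (1.0 - w0))
    return centers[np.argmax(np.nan_to_num(var_b, nan=0.0, posinf=0.0))]

def pac_pcnn_params(S):
    """Return alpha_f, lambda (= beta * V_L), V_E and alpha_e for input S."""
    S = np.asarray(S, dtype=float)
    S = (S - S.min()) / (S.max() - S.min())   # normalise to [0, 1]
    sigma = S.std()
    s_otsu = otsu_threshold(S)                # S' (normalised Otsu threshold)
    s_max = S.max()                           # S_max (1 after normalisation)
    alpha_f = np.log(1.0 / sigma)
    lam = (s_max / s_otsu - 1.0) / 6.0
    V_E = np.exp(-alpha_f) + 1.0 + 6.0 * lam
    denom = (s_otsu * (1.0 - np.exp(-3.0 * alpha_f)) / (1.0 - np.exp(-alpha_f))
             + 6.0 * lam * np.exp(-alpha_f))
    alpha_e = np.log(V_E / denom)
    return alpha_f, lam, V_E, alpha_e
```

Because only λ = βV_L enters the dynamics, any split of λ into β and V_L gives the same firing behaviour.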

When the maximum number of iterations is reached, the iteration stops. The ignitions of each neuron are then accumulated, giving the total numbers of ignitions $T_{Basic}(x,y)$ and $T_{IC}(x,y)$ for the basic image and IC, respectively. At each position, the pixel whose input fired more often is selected, according to the following fusion rule:

$$FA(x,y) = \begin{cases} IC(x,y), & T_{IC}(x,y) \ge T_{Basic}(x,y) \\ Basic(x,y), & \text{otherwise} \end{cases}$$

where $FA(x,y)$ represents the fused value at position (x, y) in the fused image, $IC(x,y)$ represents the pixel value at position (x, y) in IC, and $Basic(x,y)$ represents the pixel value at position (x, y) in the basic image.
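The selection rule amounts to a pixel-wise choice driven by the firing counts. A minimal Python/NumPy sketch, assuming that ties are resolved in favour of IC (the tie-breaking is not stated explicitly in the text):

```python
import numpy as np

def fuse_by_firing(ic, basic, t_ic, t_basic):
    """Pixel-wise choice between IC and the basic image by PCNN firing counts.

    ASSUMPTION: ties are resolved in favour of IC.
    """
    return np.where(np.asarray(t_ic) >= np.asarray(t_basic), ic, basic)


ic = np.array([[1.0, 2.0]])
basic = np.array([[10.0, 20.0]])
fa = fuse_by_firing(ic, basic, np.array([[5, 1]]), np.array([[3, 4]]))
```

Here the first pixel comes from IC (5 ignitions against 3) and the second from the basic image (1 against 4).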

4. Experiments and Analysis

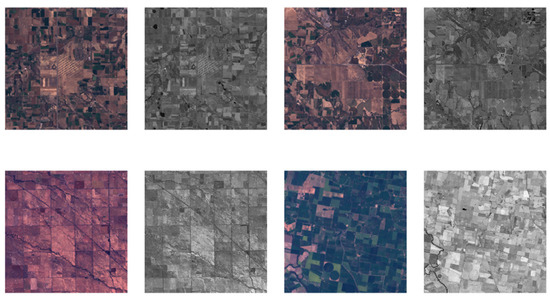

4.1. Datasets

We used a dataset containing 36 image pairs [21]. Each pair contains an MS image of 200 × 200 pixels and a PAN image of 400 × 400 pixels.

Firstly, the original MS image was up-sampled to 400 × 400 pixels. Then, the MS and PAN images were down-sampled to 200 × 200 pixels. The down-sampled images are used as the experimental images, and the original image is used as the reference image.

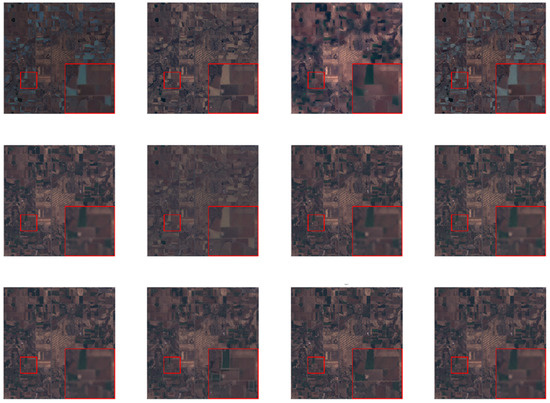

Four image pairs from different scenes were chosen for the comparison experiments. Figure 8 shows these four image pairs, each containing an MS image and a PAN image.

Figure 8.

Four image pairs.

4.2. Comparison Methods

In this paper, ten existing pan-sharpening methods are compared with the proposed method: BL [11], AGS [3], GFD [12], IHST [1], MOD [10], MPCA [2], PRACS [4], VLGC [9], BDSD-PC [5], and WTSR [6].

4.3. Objective Evaluation Indices

There are two ways to evaluate a pan-sharpening method: subjective visual assessment and objective evaluation. Each objective evaluation index considers a different aspect of the problem; taken together, the indices are generally consistent with each other, reflect the true fusion quality, and agree with the subjective evaluation. To objectively evaluate the quality of the fused images obtained by each method, five widely used quantitative indices are adopted in this paper. Each index is briefly introduced below:

- (1) Correlation Coefficient (CC) [22]. It measures the correlation between the reference MS image and a fused image. Its optimum value is 1;

- (2) Erreur Relative Globale Adimensionnelle de Synthèse (ERGAS) [23]. It reflects the overall quality of a fused image, and its optimum value is 0;

- (3) Relative Average Spectral Error (RASE) [24]. It reflects the average performance on spectral errors, and its optimum value is 0;

- (4) Spectral Information Divergence (SID) [25]. It evaluates the divergence between spectra, and its optimum value is 0. For more details about the SID index, please refer to [25];

- (5) No Reference Quality Evaluation (QNR) [26]. It reflects the overall quality of a fused image without requiring a reference MS image. QNR is composed of two parts: a spectral distortion index Dλ and a spatial distortion index Ds. A higher QNR value indicates a better fusion effect, and its optimum value is 1;

- (6) Dλ reflects the spectral distortion [26]. A lower Dλ value indicates a better fusion effect, and its optimum value is 0;

- (7) Ds reflects the spatial distortion [26]. A lower Ds value indicates a better fusion effect, and its optimum value is 0.
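The reference-based indices above have standard textbook definitions, sketched here in Python with NumPy for images of shape (H, W, C). This is an illustration, not the evaluation code used for the tables: CC is averaged over bands, ERGAS takes the PAN-to-MS pixel-size ratio (1/2 for a 2× resolution gap), RASE uses the mean of the reference image, and SID is the symmetric Kullback–Leibler divergence between per-pixel spectra averaged over pixels. For QNR only the final combination (1 − Dλ)^α(1 − Ds)^β with α = β = 1 is shown; computing Dλ and Ds themselves is omitted.

```python
import numpy as np

def cc(ref, fus):
    """Mean Pearson correlation over bands. Optimum 1."""
    return float(np.mean([np.corrcoef(ref[..., k].ravel(), fus[..., k].ravel())[0, 1]
                          for k in range(ref.shape[-1])]))

def _band_rmse(ref, fus):
    return np.sqrt(np.mean((ref - fus) ** 2, axis=(0, 1)))

def ergas(ref, fus, ratio):
    """ratio = PAN pixel size / MS pixel size. Optimum 0."""
    rel = _band_rmse(ref, fus) / ref.mean(axis=(0, 1))
    return float(100.0 * ratio * np.sqrt(np.mean(rel ** 2)))

def rase(ref, fus):
    """Relative average spectral error in percent. Optimum 0."""
    return float(100.0 / ref.mean() * np.sqrt(np.mean(_band_rmse(ref, fus) ** 2)))

def sid(ref, fus, eps=1e-12):
    """Mean symmetric KL divergence between per-pixel spectra. Optimum 0."""
    p = ref / (ref.sum(axis=-1, keepdims=True) + eps) + eps
    q = fus / (fus.sum(axis=-1, keepdims=True) + eps) + eps
    return float(np.mean(np.sum((p - q) * np.log(p / q), axis=-1)))

def qnr(d_lambda, d_s, alpha=1.0, beta=1.0):
    """QNR = (1 - D_lambda)^alpha * (1 - D_s)^beta. Optimum 1."""
    return (1.0 - d_lambda) ** alpha * (1.0 - d_s) ** beta
```

Comparing a reference image with itself returns the optimum value of every index, which is a quick sanity check before evaluating real fusion results.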

4.4. Experimental Results and Analysis

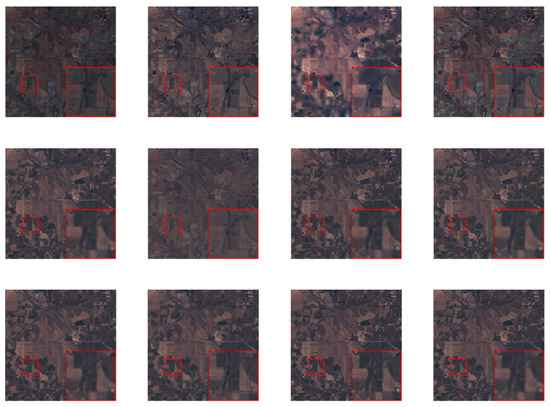

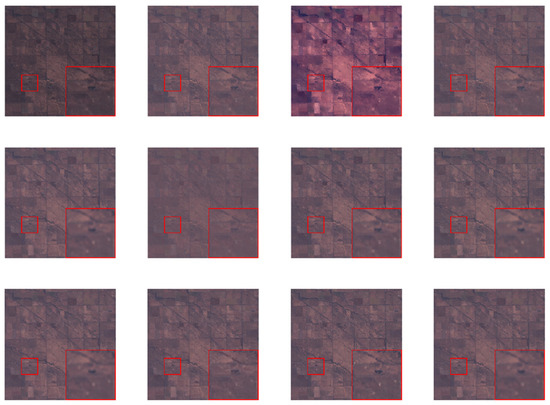

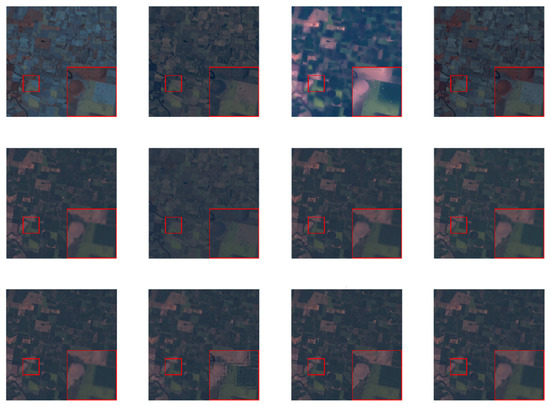

Figures 9–12 show four groups of visualization results obtained by BL, AGS, GFD, IHST, MOD, MPCA, PRACS, VLGC, BDSD-PC, WTSR, and the proposed method on the four image pairs, respectively. In each figure, the last image is the reference MS image. To compare the differences between the fusion results more clearly, all fusion results are locally enlarged.

Figure 9. The first group of visualization results.

Figure 10. The second group of visualization results.

Figure 11. The third group of visualization results.

Figure 12. The fourth group of visualization results.

Tables 1, 2, 3 and 4 show four groups of quantitative results for the fused images obtained by eleven pan-sharpening methods on the four image pairs, respectively. Five quantitative indices are used in total, covering both spectral and spatial quality: Correlation Coefficient (CC), Erreur Relative Globale Adimensionnelle de Synthèse (ERGAS), Relative Average Spectral Error (RASE), Spectral Information Divergence (SID) and No Reference Quality Evaluation (QNR). In each table, the best value of each index is shown in bold red, the second best in bold green and the third best in bold blue.
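Because CC and QNR are better when larger, while ERGAS, RASE, SID, Dλ and Ds are better when smaller, the three highlighted values in each table column have to be chosen according to the direction of each index. The sketch below shows one way to make that selection. It assumes that tied values share the same highlight (dense ranking), which may differ from how the tables were actually prepared; the dictionary and function names are hypothetical.

```python
# True if a larger value is better for that index (from the index definitions).
HIGHER_IS_BETTER = {
    "CC": True, "QNR": True,
    "ERGAS": False, "RASE": False, "SID": False, "D_lambda": False, "D_s": False,
}
HIGHLIGHTS = ("bold red", "bold green", "bold blue")  # best, second, third


def highlight_top3(scores, index):
    """Return {method: highlight} for the three best distinct values of one index.

    `scores` maps a method name to its value on `index`; tied values share
    the same highlight (dense ranking), an assumption of this sketch.
    """
    best_first = sorted(set(scores.values()), reverse=HIGHER_IS_BETTER[index])
    top = best_first[:3]
    return {m: HIGHLIGHTS[top.index(v)] for m, v in scores.items() if v in top}
```

Methods whose values fall outside the three best distinct values are simply absent from the returned dictionary and stay unhighlighted.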

Table 1. The first group of quantitative results.

Table 2. The second group of quantitative results.

Table 3. The third group of quantitative results.

Table 4. The fourth group of quantitative results.

In Figure 9, compared with the reference MS image, the BL, AGS, IHST and MPCA methods exhibit varying degrees of spectral distortion over the whole scene. In the enlarged region, for example, the green area becomes dark blue for the BL method and yellowish for the AGS and MPCA methods. Moreover, the WTSR and GFD methods show varying degrees of spatial detail distortion. The MOD, PRACS, VLGC and BDSD-PC methods preserve the spectral information contained in the MS image well. However, compared with these methods, the proposed method produces sharper spatial details, especially in the enlarged region. Overall, the subjective comparison in Figure 9 indicates that the proposed method improves spatial details while retaining more of the spectral information contained in the MS image.

In Table 1, the proposed method achieves the best values on all quantitative indices. The VLGC method obtains the second best values on the CC and ERGAS indices and the third best values on the SID and QNR indices. The MOD method obtains the second best values on the RASE and QNR indices and the third best values on the CC, ERGAS and SID indices. The BDSD-PC method obtains the second best value on the SID index and the third best values on the CC and RASE indices. Thus, the objective results in Table 1 indicate that the proposed method preserves both spatial details and spectral information better than the compared methods and gives the best overall performance.

In Figure 10, compared with the reference MS image, the BL, AGS, IHST and MPCA methods exhibit varying degrees of spectral distortion over the whole scene. In the enlarged region, the dark green area becomes dark blue for the BL method, yellowish for the AGS and MPCA methods, and light green for the IHST method. Moreover, the WTSR and GFD methods show severe spatial detail distortion. The MOD, PRACS, VLGC and BDSD-PC methods preserve the spectral information contained in the MS image well. However, compared with these methods, the proposed method produces sharper spatial details, especially in the enlarged region. Overall, the subjective comparison in Figure 10 indicates that the proposed method improves spatial details while retaining more of the spectral information contained in the MS image.

In Table 2, the proposed method achieves the best values on all quantitative indices. In particular, its QNR value is 0.984, which is close to the optimum value of 1. The VLGC method obtains the second best values on all indices. The MOD method obtains the second best value on the SID index and the third best values on the CC, ERGAS and QNR indices. The BDSD-PC method obtains the second best value on the SID index and the third best values on the CC and RASE indices. The PRACS method obtains the third best value on the SID index. Thus, the objective results in Table 2 indicate that the proposed method preserves both spatial details and spectral information better than the compared methods and gives the best overall performance.

In Figure 11, compared with the reference MS image, the BL and GFD methods exhibit varying degrees of spectral distortion. In the enlarged region, the light gray area becomes dark gray for the BL method, and the yellowish area becomes pink for the GFD method. Moreover, the WTSR and GFD methods show severe spatial detail distortion. The MOD, PRACS, VLGC and BDSD-PC methods preserve the spectral information contained in the MS image well. However, compared with these methods, the proposed method produces sharper spatial details, especially in the enlarged region. Overall, the subjective comparison in Figure 11 indicates that the proposed method improves spatial details while retaining more of the spectral information contained in the MS image.

In Table 3, the proposed method achieves the best values on all quantitative indices. The VLGC method obtains the second best values on the ERGAS and QNR indices and the third best values on the CC, SID and RASE indices. The MOD method obtains the second best values on the CC, SID and RASE indices and the third best values on the ERGAS and QNR indices. Thus, the objective results in Table 3 indicate that the proposed method preserves both spatial details and spectral information better than the compared methods and gives the best overall performance.

In Figure 12, compared with the reference MS image, the BL, AGS, IHST and MPCA methods exhibit varying degrees of spectral distortion over the whole scene. In the enlarged region, for the BL and IHST methods, the pink area becomes dark red and the dark green area becomes light blue. For the AGS and MPCA methods, the pink area becomes dark green and the dark green area becomes light pink. Moreover, the WTSR and GFD methods show varying degrees of spatial detail distortion. The MOD, PRACS, VLGC and BDSD-PC methods preserve the spectral information contained in the MS image well. However, compared with these methods, the proposed method produces sharper spatial details, especially in the enlarged region. Overall, the subjective comparison in Figure 12 indicates that the proposed method improves spatial details while retaining more of the spectral information contained in the MS image.

In Table 4, the proposed method achieves the best values on all quantitative indices. The VLGC method obtains the second best values on the ERGAS and CC indices and the third best values on the SID, QNR and RASE indices. The MOD method obtains the second best values on the QNR, SID and RASE indices and the third best values on the ERGAS and CC indices. The BDSD-PC method obtains the second best value on the SID index, and the PRACS method obtains the third best values on the CC and SID indices. Thus, the objective results in Table 4 indicate that the proposed method preserves both spatial details and spectral information better than the compared methods and gives the best overall performance.

Subjectively, the fusion results obtained by the proposed method show clearer spatial details and better spectral preservation. Objectively, the proposed method performs best on all quantitative indices. Taken together, the subjective and objective evaluations show that the proposed method retains both spatial details and spectral information better than the compared methods.

5. Conclusions

In this paper, a novel MS and PAN image fusion method based on WMCF decomposition is proposed. A WLSF-based method fuses all the bands of the MS image to generate the intensity component IC. In accordance with an image matting model, IC is used as the original α channel for spectral estimation, which yields a foreground image F and a background image B. WMCF and GF decompose the PAN image into three scales, i.e., a small-scale (SS) image, a large-scale (LS) image and a basic image. An MSMDM-guided PAC-PCNN fusion strategy then fuses IC with the basic image obtained from the PAN image. All parameters of the PAC-PCNN model are calculated automatically from its inputs, and the model converges quickly. Finally, the fused image is combined with the LS and SS images to form the last α channel, and the foreground image F, the background image B and the last α channel are reconstructed according to the image matting model to obtain the final fused image. The experimental results show that the proposed method outperforms several representative pan-sharpening methods and effectively alleviates both spatial and spectral distortion.
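The reconstruction steps summarized above can be illustrated on a one-dimensional toy signal. This is a hedged sketch under several assumptions that are not taken from the paper: simple moving-average filters stand in for WMCF and GF, the scales are formed as successive residuals (SS is the PAN signal minus the first smoothed signal, LS is that smoothed signal minus the basic signal), the linear combination forming the last α channel uses unit weights, and the MSMDM-guided PAC-PCNN fusion of IC and the basic image is not implemented. Only the matting relation, band = αF + (1 − α)B, follows the image matting model itself. All function names are hypothetical.

```python
# Hypothetical 1D illustration; moving-average filters stand in for WMCF and GF.


def box_filter(signal, radius):
    """Moving average with edge replication (a stand-in, not WMCF or a Gaussian)."""
    n = len(signal)
    out = []
    for i in range(n):
        window = [signal[min(max(j, 0), n - 1)] for j in range(i - radius, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out


def three_scale_decompose(pan):
    """Split PAN into small-scale, large-scale and basic parts that sum back to PAN."""
    smoothed = box_filter(pan, 1)                  # stand-in for the WMCF output
    basic = box_filter(smoothed, 3)                # stand-in for the GF output
    ss = [p - s for p, s in zip(pan, smoothed)]    # small-scale details
    ls = [s - b for s, b in zip(smoothed, basic)]  # large-scale details
    return ss, ls, basic


def last_alpha(fused_basic, ls, ss):
    """Linear combination with unit weights (an assumption) forming the last alpha."""
    return [f + l + s for f, l, s in zip(fused_basic, ls, ss)]


def matting_reconstruct(alpha, foreground, background):
    """Image matting model per band: band_k = alpha * F_k + (1 - alpha) * B_k."""
    return [
        [a * f + (1.0 - a) * b for a, f, b in zip(alpha, f_band, b_band)]
        for f_band, b_band in zip(foreground, background)
    ]
```

If the basic image is passed to `last_alpha` without being fused with IC, the result simply reproduces the PAN signal, because the three scales sum back to it. In the proposed method, the PAC-PCNN output replaces the basic image, so the last α channel carries the PAN details on top of the fused base layer.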

Experiments on four different image pairs compared the proposed method with ten representative pan-sharpening methods. The proposed method achieved superior results in terms of both visual effects and objective evaluation: it extracts more spatial details from the PAN image with higher efficiency while retaining more of the spectral information contained in the MS image. The resulting fused images therefore combine strong spatial detail representation with the spectral features of the MS images and provide a richer description of the target. As remote sensing sensors and information processing technologies continue to improve, the proposed method can be applied in areas such as military reconnaissance, remote sensing measurement, forest protection, vegetation cover, image classification and machine vision.

In future research, we will develop more effective fusion strategies and explore the application of our method in other fields.

Author Contributions

Conceptualization, Y.P.; methodology, Y.P.; software, S.X.; validation, S.X.; formal analysis, Y.P.; investigation, S.X.; resources, Y.P.; data curation, S.X.; writing—original draft preparation, Y.P.; writing—review and editing, Y.P., D.L. and J.A.B.; visualization, Y.P.; supervision, D.L. and J.A.B.; project administration, D.L.; funding acquisition, L.W. All authors have read and agreed to the published version of the manuscript.

Funding

This paper was supported by the Leading Talents Project of the State Ethnic Affairs Commission and the National Natural Science Foundation of China (No. 62071084).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Liu, C.; Qi, X.; Zhang, W.; Huang, X. Research of improved Gram-Schmidt image fusion algorithm based on IHS transform. Eng. Surv. Mapp. 2018, 27, 9–14.

2. Jelének, J.; Kopačková, V.; Koucká, L.; Mišurec, J. Testing a modified PCA-based sharpening approach for image fusion. Remote Sens. 2016, 8, 794.

3. Aiazzi, B.; Baronti, S.; Selva, M. Improving component substitution pansharpening through multivariate regression of MS + Pan data. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3230–3239.

4. Choi, J.; Yu, K.; Kim, Y. A new adaptive component-substitution based satellite image fusion by using partial replacement. IEEE Trans. Geosci. Remote Sens. 2011, 49, 295–309.

5. Vivone, G. Robust band-dependent spatial-detail approaches for panchromatic sharpening. IEEE Trans. Geosci. Remote Sens. 2019, 57, 6421–6433.

6. Cheng, J.; Liu, H.; Liu, T.; Wang, F.; Li, H. Remote sensing image fusion via wavelet transform and sparse representation. ISPRS J. Photogramm. Remote Sens. 2015, 104, 158–173.

7. Dong, L.; Yang, Q.; Wu, H.; Xiao, H.; Xue, M. High quality multi-spectral and panchromatic image fusion technologies based on curvelet transform. Neurocomputing 2015, 159, 268–274.

8. Pan, Y.; Liu, D.; Wang, L.; Benediktsson, J.A.; Xing, S. A Pan-Sharpening Method with Beta-Divergence Non-Negative Matrix Factorization in Non-Subsampled Shear Transform Domain. Remote Sens. 2022, 14, 2921.

9. Fu, X.; Lin, Z.; Huang, Y.; Ding, X. A variational pan-sharpening with local gradient constraints. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

10. Wu, L.; Yin, Y.; Jiang, X.; Cheng, T. Pan-sharpening based on multi-objective decision for multi-band remote sensing images. Pattern Recognit. 2021, 118, 108022.

11. Khan, S.S.; Ran, Q.; Khan, M.; Ji, Z. Pan-sharpening framework based on Laplacian sharpening with Brovey. In Proceedings of the 2019 IEEE International Conference on Signal, Information and Data Processing (ICSIDP), Chongqing, China, 11–13 December 2019.

12. Li, Q.; Yang, X.; Wu, W.; Liu, K.; Jeon, G. Pansharpening multispectral remote-sensing images with guided filter for monitoring impact of human behavior on environment. Concurr. Comput. Pract. Exp. 2021, 32, e5074.

13. Wang, Z.; Ma, Y.; Cheng, F.; Yang, L. Review of pulse-coupled neural networks. Image Vis. Comput. 2010, 28, 5–13.

14. Tan, W.; Xiang, P.; Zhang, J.; Zhou, H.; Qin, H. Remote sensing image fusion via boundary measured dual-channel PCNN in multi-scale morphological gradient domain. IEEE Access 2020, 8, 42540–42549.

15. Zhang, J.; Zhou, H.; Wei, S.; Tan, W. Infrared polarization image fusion via multi-scale sparse representation and pulse coupled neural network. In Proceedings of the AOPC 2019: Optical Sensing and Imaging Technology, Beijing, China, 7–9 July 2019; Volume 11338, p. 113382.

16. Chen, Y.; Park, S.K.; Ma, Y.; Ala, R. A new automatic parameter setting method of a simplified PCNN for image segmentation. IEEE Trans. Neural Netw. 2011, 22, 880–892.

17. Levin, A.; Lischinski, D.; Weiss, Y. A Closed-Form Solution to Natural Image Matting. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 228–242.

18. Zhang, Y.; Bai, X.; Wang, T. Boundary finding based multi-focus image fusion through multi-scale morphological focus-measure. Inf. Fusion 2017, 35, 81–101.

19. Eskicioglu, A.M.; Fisher, P.S. Image quality measures and their performance. IEEE Trans. Commun. 1995, 43, 2959–2965.

20. Gong, Y.; Goksel, O. Weighted mean curvature. Signal Process. 2019, 164, 329–339.

21. US Gov. Available online: https://earthexplorer.usgs.gov/ (accessed on 24 October 2019).

22. Alparone, L.; Wald, L.; Chanussot, J.; Thomas, C.; Gamba, P.; Bruce, L. Comparison of pansharpening algorithms: Outcome of the 2006 GRS-S data-fusion contest. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3012–3021.

23. Zhang, L.; Zhang, L.; Tao, D.; Huang, X. On combining multiple features for hyperspectral remote sensing image classification. IEEE Trans. Geosci. Remote Sens. 2012, 50, 879–893.

24. Ranchin, T.; Wald, L. Fusion of high spatial and spectral resolution images: The ARSIS concept and its implementation. Photogramm. Eng. Remote Sens. 2000, 66, 49–61.

25. Chang, C.-I. Spectral information divergence for hyperspectral image analysis. In Proceedings of the IEEE 1999 International Geoscience and Remote Sensing Symposium (IGARSS'99), Hamburg, Germany, 28 June–2 July 1999; Volume 1, pp. 509–511.

26. Alparone, L.; Aiazzi, B.; Baronti, S.; Garzelli, A.; Nencini, F.; Selva, M. Multispectral and panchromatic data fusion assessment without reference. Photogramm. Eng. Remote Sens. 2008, 74, 193–200.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).