Abstract

Augmented reality (AR) technology has been applied in the industrial field; however, most industrial AR applications are currently developed for specific application scenarios, which makes them difficult to develop, slow to deliver, and hard to reuse. To this end, this paper proposes a quick development toolkit for augmented reality visualization (QDARV) of a factory based on a script configuration and parsing approach. With QDARV, designers can quickly develop industrial AR applications, achieve AR registration based on quick response (QR) codes and simultaneous localization and mapping (SLAM), and superimpose information such as disassembly animations, industrial instruments, pictures, and texts on real scenes. In QDARV, an AR registration method based on SLAM map reloading is proposed. By saving and reloading the map and by configuring and parsing the SLAM-AR display content script, the AR scene configured by the designer is displayed. Meanwhile, objects detected by YOLOv5 are used as landmarks to assist the SLAM system in positioning. QDARV can be applied to realize AR visualization of factories with a large area.

1. Introduction

At present, there are a large number of human–machine display interfaces in industrial applications (such as mechanical instrument panels, Light-Emitting Diode display screens, Personal Computer monitors, etc.). However, these human–machine display interfaces are all “equipment-centric”, that is, these interfaces are fixed and can only be used for specific devices. With the rapid development of intelligent manufacturing and big data technology, a large amount of data information needs to be displayed in the workshop, which increases the integration complexity and cost of the traditional device-centric human–machine interface system [1,2]. Meanwhile, in the inspection and maintenance of complex equipment in the workshop, the relevant guidance information needs to be quickly displayed to the operator to guide the operator to perform complex operations [3]. Therefore, a workshop auxiliary system is urgently needed to assist and guide the operator to conduct complex inspection and maintenance tasks.

Augmented reality (AR) technology can merge computer-generated virtual information with the physical world. It can display real scenes and virtual ones simultaneously and provide a type of human–computer interaction [4]. As such, AR is a human-centered human–computer interaction and display technology. One of its main application areas is visualization. Unlike traditional visualization methods, AR inserts virtual objects and information directly into digital representations of the real world, which makes these objects and data easier to understand and more interactive [5]. Schall [6] presented an AR-based underground visualization system for aiding field workers of utility companies in outdoor tasks such as maintenance, planning, or surveying of underground infrastructure. Ma et al. [5] designed a method for fusing AR views and indoor maps in changing environments during AR-GIS visualization. Recently, Hoang et al. [7] proposed an AR visualization platform that can display any Robot Operating System message data type in AR. Zheng et al. [8] proposed an AR decision support framework to support immediate decisions within a smart environment by augmenting the user’s focal objects with assemblies of semantically relevant IoT data and corresponding suggestions. Tu et al. [9] designed a mixed reality (MR) application for digital twin-based services. Taking an industrial crane platform as a case study, the application allowed crane operators to monitor the crane status and control its movement.

With the help of augmented reality technology, virtual models can be placed in real scenes and interact with them, which reduces the need to produce physical models and improves industrial production efficiency. This research applies augmented reality to factory visualization and designs and implements a human-centered industrial human–machine display interface: the operator uses AR glasses or a handheld mobile device to query and display workshop information with the assistance of identification and AR registration technology, thereby reducing system integration complexity and cost. Although augmented reality technology has been applied to the industrial field, most existing AR industrial display systems are developed for specific application scenarios and therefore have poor versatility and a long development cycle. For example, an AR-based disassembly and assembly process guidance system is only applicable to specific dedicated products. This research proposes a quick development toolkit for augmented reality visualization of a factory (QDARV). With this tool, AR programs for different application objects can be quickly designed, and a human-centered AR display interface can be established.

The main contributions of this paper are summarized as follows. (1) A quick development toolkit called QDARV is proposed for augmented reality visualization of a factory. This tool can be used to quickly develop industrial AR applications, achieve AR registration based on quick response (QR) code and simultaneous localization and mapping (SLAM), and display information such as disassembly animations, industrial instruments, pictures, and texts in real scenes. (2) An AR registration method based on SLAM map reloading is proposed. Meanwhile, the map saving and map loading functions are extended based on ORB_SLAM2 [10]. Through saving and reloading the map and the configuration and analysis of the SLAM-AR display content script, the reloaded display of the AR scene is achieved. (3) With ORB_SLAM2 based on the feature point method, the object is used as a landmark to assist the SLAM system in positioning, thereby establishing a SLAM system that can build object-oriented semantic maps and improve the tracking performance.

This paper is organized as follows. Section 2 reviews the related studies. Section 3 introduces the methods used in this study, including design of the QDARV system, AR configuration software, script parsing software, and AR display software. Section 4 conducts experiments to prove the validity of the method proposed in this study. Section 5 presents the discussion and future work of this study.

2. Related Work

2.1. Augmented Reality in Manufacturing

Augmented reality developed from virtual reality (VR). VR technology fully immerses users in a virtual environment, whereas AR technology emphasizes the information enhancement of the real environment and focuses on the seamless combination of virtual objects and the real world [11]. The concept of AR was first proposed by Boeing in the early 1990s. They developed a head-mounted display system that enabled engineers to assemble complex wire bundles on circuit boards with the help of digital diagrams superimposed on the boards [12]. Since then, augmented reality technology has become a distinct research field and has been regarded as a powerful technical tool to improve efficiency in the manufacturing industry [13]. George [14] coined the term “industrial augmented reality” (IAR) to describe the application of augmented reality technology in industrial production, and identified product design, maintenance, mechanical assembly, and training as the key areas of IAR application.

In recent years, augmented reality visualization technology for manufacturing has become a research hotspot in the field of intelligent manufacturing [15,16]. Augmented reality technology is applied to factory layout planning [17], mechanical assembly tasks [18,19], data visualization of factories [20], and remote support for maintenance operations and training [21]. Laviola et al. [22] proposed a novel authoring approach called “minimal AR”, which can optimize the visual assets used in AR interfaces to convey work instructions in manufacturing. Chen et al. proposed a projection-based augmented reality system for assembly guidance and monitoring [23]. Erkek et al. [24] developed a mobile Finite Element Analysis (AR-FEA)-based system for visualization of modal analysis results. With the system, finite-element analysis results can be viewed on the physical part, which offers designers and engineers a new way to visualize such simulation results. In recent years, the development of AR frameworks made AR application development widely accessible to developers without augmented reality background knowledge [25]. However, at present, most industrial AR applications are developed for specific application scenarios, which are difficult to develop and have a long development cycle, and lack universality.

A key problem in AR application development is the creation of augmented reality display content. At present, there are some popular AR application development libraries, such as ARToolKit [26], ARToolKitPlus [27], and OSGART [28], which use OpenGL or OpenSceneGraph [29] to display virtual animations on real markers in real time. However, these AR development libraries require developers to have strong programming skills, resulting in high development costs and a lack of universality. In recent years, major companies have launched commercial AR development libraries, such as ARCore by Google and Vuforia by PTC, which allow developers to create AR applications in a few minutes. However, these libraries require developers to be proficient with the software, and the AR applications created in this way are limited to simple animation displays. To achieve complex AR interaction (such as mechanical assembly), developers still need programming ability.

To this end, we propose the QDARV. Compared with the above AR development tools, the main advantage of the QDARV development tool is that it does not rely on time-consuming and costly recompilation steps, and developers can quickly and efficiently create AR applications without a programming foundation. In addition, this tool provides disassembly and assembly animation configuration and virtual instrument configuration functions. Developers only need to configure AR registration parameters and generate intermediate script files to realize complex AR interactions such as mechanical assembly and instrument display.

2.2. AR Registration

The main characteristic of augmented reality visualization is that visual information has spatial relationships with the physical world. Therefore, AR registration is a key technology in AR applications: by tracking and locating the images or objects in the real scene, it enables virtual objects to be superimposed onto the real scene according to the spatial perspective relationship. AR registration methods can be divided into three categories: sensor-based, vision-based, and hybrid methods. Among them, vision-based methods, which include marker-based and marker-less methods, are widely used.

The marker-based AR registration method is the earliest practical and commercialized technology. The most representative work is the artificial marker-based ARToolKit [26]. The tool uses a camera to capture pre-placed markers in the scene for real-time tracking. This method has the advantages of fast tracking speed and high accuracy, and is widely used in industrial augmented reality. Yin et al. [30] provided a universal calibration tool of the marker-object offset matrix for marker-based industrial augmented reality applications. The method can automatically calculate the offset matrix between the Computer Aided Design (CAD) coordinate system and the marker coordinate system to achieve the globally optimal AR registration visual effect. Motoyama et al. [31] developed an AR marker-tracking method that is applicable to actual casting processes and applied the method to conduct contactless measurement of the crucible motion during actual aluminum alloy casting processes. However, the marker-based tracking method requires marker patterns to be placed in the real scene in advance, which is inconvenient for the user, and tracking registration cannot continue once the marker leaves the field of view. Therefore, the tracking registration method based on SLAM has gradually become the mainstream research and development direction.

The Parallel Tracking and Mapping (PTAM) algorithm proposed by Klein et al. [32] is a classic simultaneous localization and mapping algorithm based on monocular feature points. With SLAM technology, a moving object in an unknown environment can acquire the characteristic information of the real-world environment via sensors (e.g., a camera) and construct a map consistent with this environment during movement. SLAM technology was first used in the field of robotics. In recent years, it has been widely used in the field of augmented reality, especially industrial augmented reality. Liu et al. [33] proposed a SLAM-based marker-less mobile AR tracking registration method that can handle virtual image instability caused by fast and irregular camera motion. Yu et al. [34] developed a vSLAM-based AR layout detection system. While the inspectors scan the fab floor using smartphones, a discrepancy check is automatically made using the floor map generated through vSLAM. Zhang et al. [35] proposed a map recovery and fusion strategy based on visual-inertial simultaneous localization and mapping. This strategy is used to solve the problem of map restoration and fusion in collaborative AR systems.

However, most current SLAM-based industrial AR applications are oriented to specific environments and are only suitable for small-scale positioning. In a large-scale and complex scene, tracking is easily lost or drifts. In addition, most industrial AR applications cannot save the constructed virtual information, whereas in actual industrial AR development, such as factory layout planning and mechanical parts assembly, it is often necessary to save the planned virtual scenes or part assembly animations. The QDARV development tool proposed in this paper can quickly create marker-based and SLAM-based industrial AR applications. This tool combines ORB_SLAM2 with YOLOv5 [36] and uses objects as landmarks to assist system positioning, with the aim of improving tracking performance in the large-scale and complex scenes of a factory. Meanwhile, this study also extends ORB_SLAM2 with map saving and loading functions, so that virtual information can be saved and reloaded.

3. Methods

3.1. System Design

This study proposes a quick development toolkit called QDARV for augmented reality visualization of a factory. The main goal of QDARV is to assist developers in quickly developing industrial AR systems for augmented reality visualization of a factory. Developers only need to configure augmented reality display content and augmented reality registration parameters to realize assembly disassembly animation display, industrial instrument display, tracking, and AR registration for large plant scenes in real scenes, thereby reducing code writing, improving development efficiency, and quickly customizing AR visualization content.

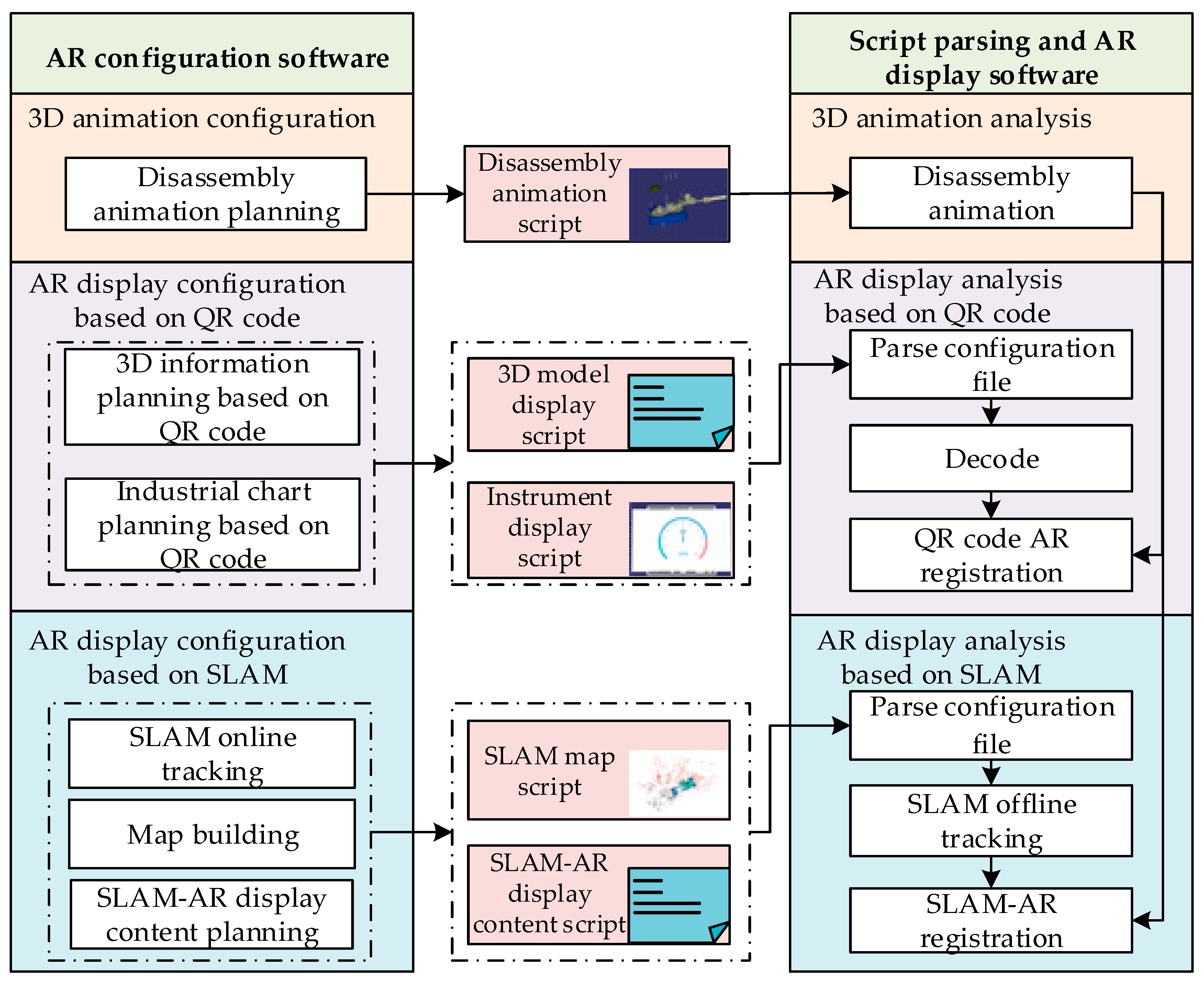

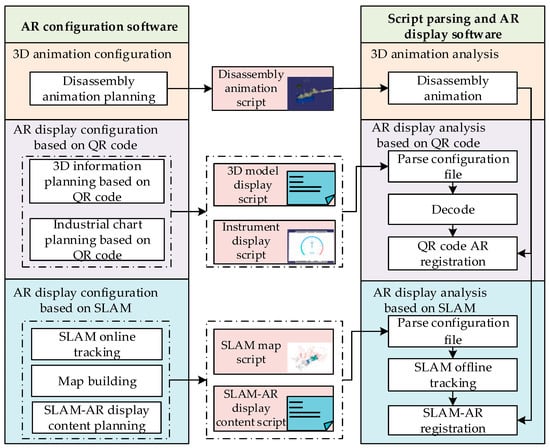

The QDARV toolkit includes the AR configuration software and the script parsing and AR display software. Figure 1 shows the overall framework of the QDARV system, in which the AR configuration software is composed of three parts: the 3D animation configuration module, the AR display configuration module based on QR code, and the AR display configuration module based on SLAM. Correspondingly, the script parsing and AR display software consists of three parts: the 3D animation analysis module, the AR display analysis module based on QR code, and the AR display analysis module based on SLAM. The modules of the AR configuration software and those of the script parsing and AR display software exchange data through intermediate script files. By using this toolkit, users can quickly plan the augmented reality display content of the factory and realize augmented reality display of disassembly animations, industrial instruments, pictures, and texts. Developers do not need a deep understanding of augmented reality technology or extensive programming experience. Instead, they only need to use the AR configuration software to configure the augmented reality display content and augmented reality registration parameters and to generate intermediate script files. The script parsing and AR display software then loads and parses these intermediate script files to generate the augmented reality display content, so that industrial AR applications can be designed quickly and the displayed content can be customized.

Figure 1. Overall frame diagram of the QDARV system.

As shown in Figure 1, the QDARV toolkit supports two AR working modes, namely the AR display mode based on QR code and the AR display mode based on SLAM. In the QR code-based mode, users scan the QR code in the scene with the script parsing and AR display software, which uses AR registration technology to superimpose the configured content onto the real scene. In the SLAM-based mode, the script parsing and AR display software uses the scene positioning function of the SLAM algorithm to accurately superimpose virtual information onto the physical scene, thereby achieving AR display. This study mainly designs the workflow of each module in the AR configuration software and in the script parsing and AR display software of the QDARV system, and investigates data exchange between the modules through the intermediate script files to quickly achieve augmented reality display.

3.2. AR Configuration Software

3.2.1. Three-Dimensional Animation Configuration Module Design

The 3D animation configuration module is mainly used to plan the disassembly animation of the assembly and guide workers to complete disassembly operations. This module includes two parts: the assembly structure design unit and the assembly disassembly animation planning unit.

Before a 3D animation is configured, the 3D animation model needs to be made lightweight to improve the rendering speed. Lightweight processing takes the assembly CAD model as input and outputs a lightweight assembly mesh model. CAD models generally contain a large amount of information, massive redundant data, and complex structural relationships, which are not conducive to achieving rapid AR display on mobile devices. Therefore, lightweight processing of CAD models is necessary. In this study, the main steps of making the assembly model lightweight are as follows:

- (1) Model format conversion: The CAD models of mechanical assemblies are mostly established by 3D modeling software, and most of the parts are solid models. In this study, the CAD models are converted into triangular mesh files and stored in the STL format;

- (2) Mesh model simplification: under the premise of not affecting the appearance characteristics and display accuracy of the assembly model, the mesh model in the STL format is simplified, redundant patches are eliminated, and the accuracy of relatively smooth areas is reduced, thereby reducing the number of patches in the model and improving model rendering speed.
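The paper does not state which simplification algorithm is used. As a minimal sketch of the idea in step (2), the following Python example applies vertex clustering, one common and simple technique chosen here only for illustration: all vertices that fall into the same grid cell are merged into their average, and triangles that collapse or become duplicates are dropped. The function name and the `cell_size` parameter are assumptions, not part of QDARV.

```python
import math


def simplify_by_vertex_clustering(triangles, cell_size):
    """Merge vertices per grid cell and drop collapsed or duplicated triangles.

    triangles: iterable of three (x, y, z) tuples (an STL-style triangle soup).
    Returns (vertices, faces): one averaged vertex per occupied grid cell, and
    faces given as index triples into the vertex list.
    """
    cell_index = {}   # grid cell -> vertex index
    sums = []         # per vertex: [sum_x, sum_y, sum_z, count]
    raw_faces = []
    for tri in triangles:
        face = []
        for x, y, z in tri:
            cell = (math.floor(x / cell_size),
                    math.floor(y / cell_size),
                    math.floor(z / cell_size))
            if cell not in cell_index:
                cell_index[cell] = len(sums)
                sums.append([0.0, 0.0, 0.0, 0])
            acc = sums[cell_index[cell]]
            acc[0] += x
            acc[1] += y
            acc[2] += z
            acc[3] += 1
            face.append(cell_index[cell])
        raw_faces.append(tuple(face))

    vertices = [(sx / n, sy / n, sz / n) for sx, sy, sz, n in sums]
    faces, seen = [], set()
    for face in raw_faces:
        if len(set(face)) < 3:      # triangle collapsed to an edge or a point
            continue
        key = tuple(sorted(face))
        if key in seen:             # the same triangle has already been kept
            continue
        seen.add(key)
        faces.append(face)
    return vertices, faces


# Illustrative input: a unit square (two triangles) plus one tiny sliver triangle.
demo_triangles = [
    ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)),
    ((1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)),
    ((1.0, 1.0, 0.0), (1.01, 1.0, 0.0), (1.0, 1.01, 0.0)),
]
demo_vertices, demo_faces = simplify_by_vertex_clustering(demo_triangles, 0.5)
```

A larger `cell_size` removes more patches at the cost of display accuracy, which mirrors the trade-off described in step (2). Methods that preserve shape better, such as edge collapse with an error metric, would follow the same input and output structure.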

The assembly structure design unit adds Degree of Freedom (DOF) nodes to the assembly model for motion planning of each part in the assembly model. This study converts the mesh model in the STL format into the OpenFlight format, adds DOF nodes to each part of the assembly in this file format, and establishes a local logical coordinate system in which the motion of each DOF node and its sub-nodes is defined.

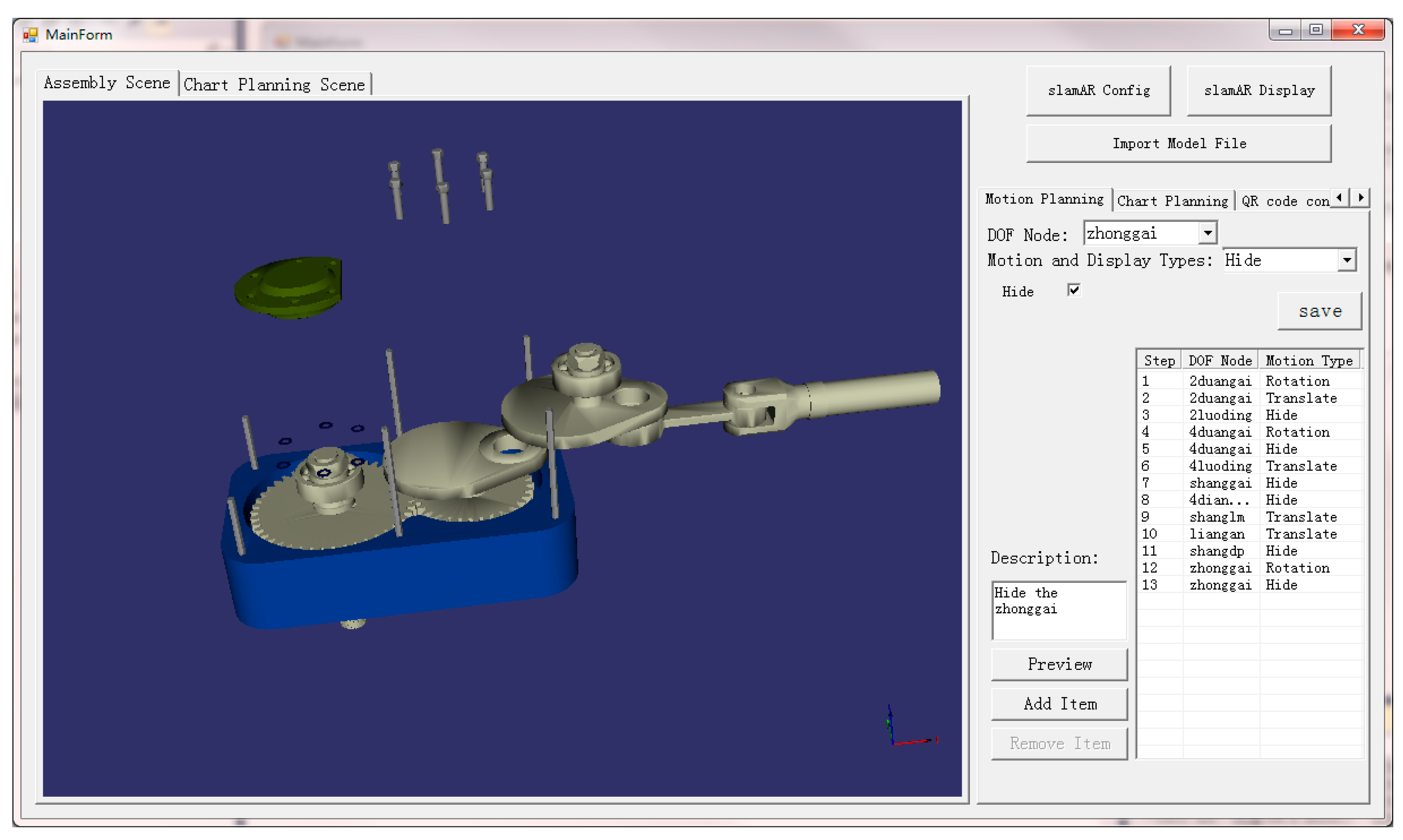

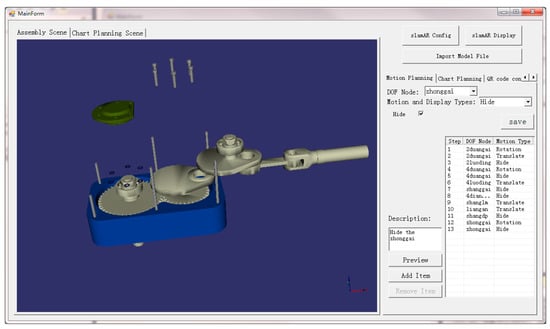

The assembly disassembly animation planning unit is used to interactively plan the disassembly steps and the disassembly motion route of each part of the assembly in the virtual scene. Figure 2 illustrates the assembly disassembly animation planning interface designed in this study. The planning unit loads the assembly file in the OpenFlight format, then extracts and displays the DOF nodes in the assembly file. Each node is used as the logical coordinate system of the local motion of the model. The planning of disassembly steps can be completed by setting the motion type, motion direction, and motion speed of different nodes and their sub-nodes. Each step consists of multiple operations, and each operation involves motion planning for multiple parts.

Figure 2. Disassembly animation planning interface of assembly.

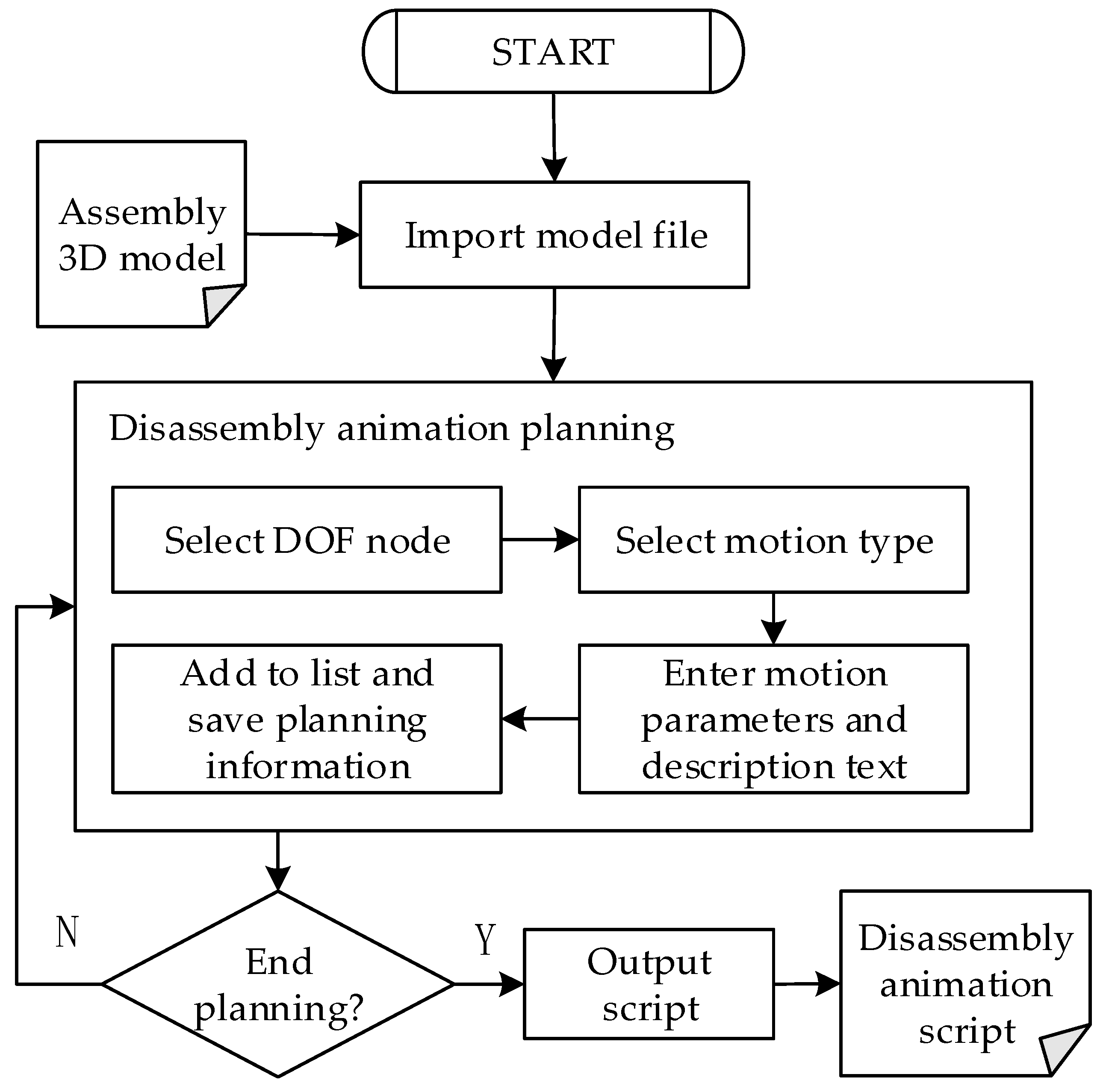

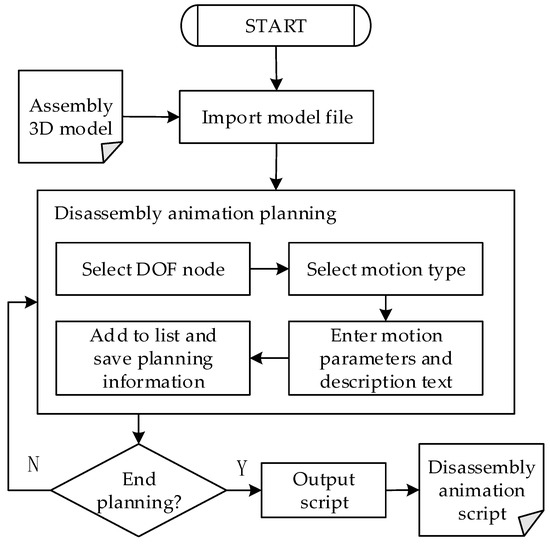

Figure 3 shows the flow chart of the assembly disassembly animation planning unit. The planning process of specific disassembly steps can be divided into the following steps.

Figure 3. The flow chart of the assembly disassembly animation planning unit.

- (1) The assembly model file in OpenFlight format is imported into the virtual scene for display. All DOF nodes in the assembly model are extracted and displayed;

- (2) Planning assembly animation or planning disassembly animation is selected according to actual needs. When the former is selected, the display state of all nodes is “hidden” by default; when the latter is selected, the display state of all nodes is “displayed” by default;

- (3) According to the disassembly steps, the corresponding DOF node is selected. This node is used as the local coordinate system. Meanwhile, the motion types, motion parameters (speed, range, etc.), and description text of the node and its sub-nodes are set. The motion types include translation, rotation, and scaling. The display state properties of nodes include highlighted display, outline display, hidden, semi-transparent display, and normal display. After a node is set up, its planning information is saved and displayed in the disassembly step planning list on the right side of the software;

- (4) To continue to plan the disassembly animation, step (3) is repeated. When the planning ends, the above planning information is saved as an assembly disassembly animation script file.
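The format of the disassembly animation script file is not given in the paper. The following Python sketch shows one way the planning information from steps (1) to (4) could be organized and serialized. The class names, field names, the example file name `gearbox.flt`, and the use of JSON are all assumptions made for illustration; only the motion types, display states, and the step/operation/part hierarchy come from the text.

```python
import json
from dataclasses import dataclass, field, asdict
from typing import List, Tuple

MOTION_TYPES = {"translation", "rotation", "scaling"}
DISPLAY_STATES = {"highlighted", "outline", "hidden", "semi-transparent", "normal"}


@dataclass
class NodeMotion:
    """Motion planned for one DOF node in its local coordinate system."""
    dof_node: str
    motion_type: str
    direction: Tuple[float, float, float]
    speed: float
    motion_range: float
    display_state: str = "normal"
    description: str = ""

    def __post_init__(self):
        if self.motion_type not in MOTION_TYPES:
            raise ValueError(f"unknown motion type: {self.motion_type}")
        if self.display_state not in DISPLAY_STATES:
            raise ValueError(f"unknown display state: {self.display_state}")


@dataclass
class Operation:
    """One operation moves several parts at once."""
    motions: List[NodeMotion] = field(default_factory=list)


@dataclass
class Step:
    """One disassembly step consists of several operations."""
    name: str
    operations: List[Operation] = field(default_factory=list)


@dataclass
class AnimationScript:
    model_file: str
    mode: str                      # "assembly" or "disassembly"
    steps: List[Step] = field(default_factory=list)

    def default_node_state(self) -> str:
        # Step (2): nodes start hidden for assembly, displayed for disassembly.
        return "hidden" if self.mode == "assembly" else "displayed"

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2)


# Hypothetical example: remove a cover by sliding it along the local z axis.
script = AnimationScript(
    model_file="gearbox.flt",
    mode="disassembly",
    steps=[Step("Remove cover", [Operation([
        NodeMotion("cover", "translation", (0.0, 0.0, 1.0), speed=0.05,
                   motion_range=0.2, display_state="highlighted",
                   description="Lift the cover upward"),
    ])])],
)
script_text = script.to_json()
```

Validating the motion type and display state at construction time keeps malformed entries out of the script file, so the parsing side can assume well-formed input.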

3.2.2. AR Display Configuration Module Based on QR Code

The AR display configuration module based on the QR code is adopted to configure the parameter information of the QR code and the augmented reality display content after scanning the QR code. This module consists of two parts: the 3D information display planning unit based on QR code, and the instrument display planning unit based on QR code.

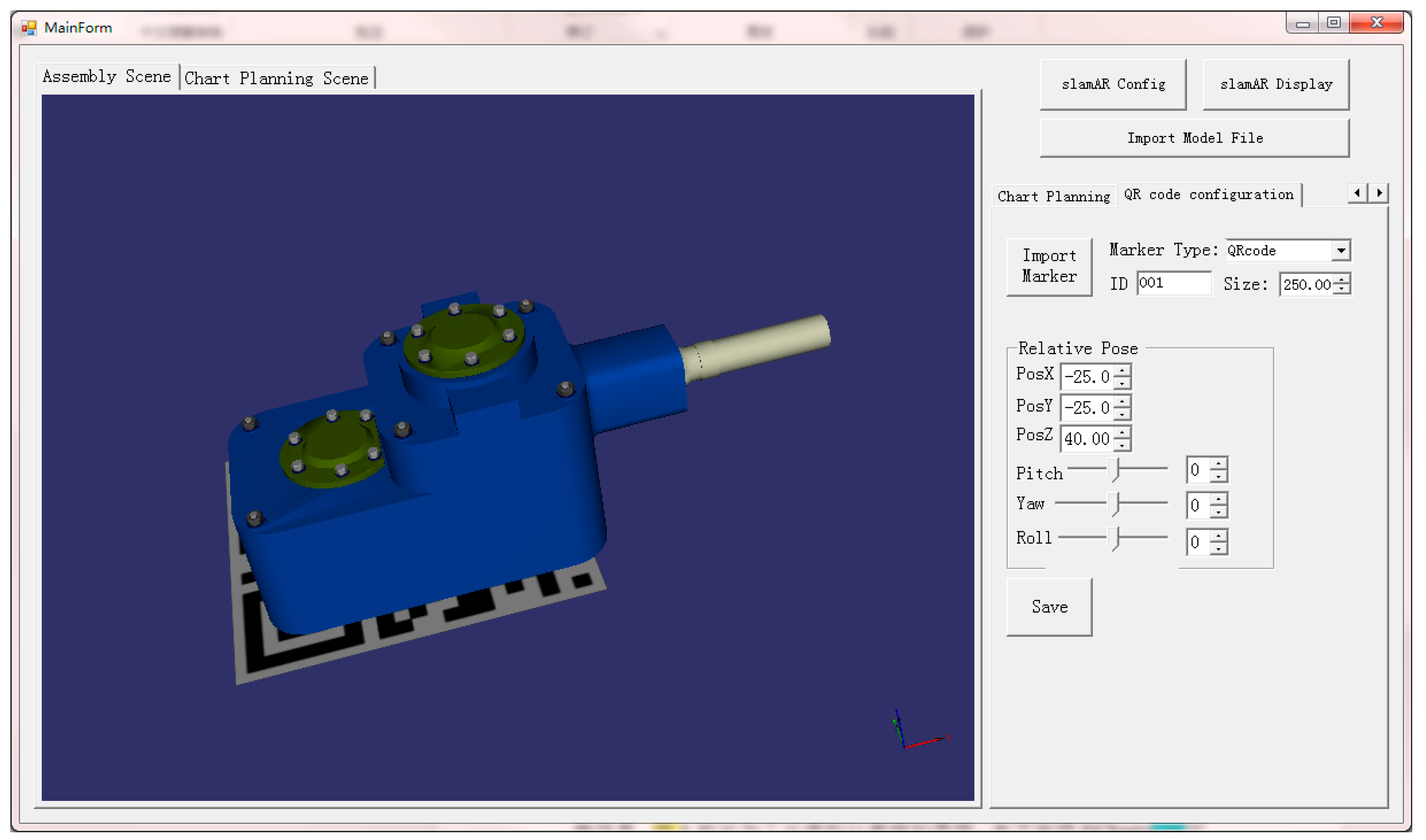

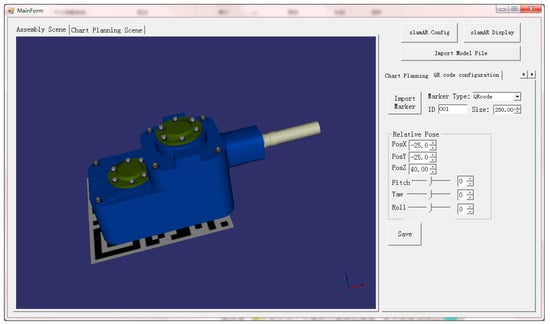

The 3D information display planning unit based on QR code is employed to bind the virtual model or animation with the QR code and set the pose relationship between the displayed content and the QR code. Figure 4 shows the interface of the QR code information planning software; it imports the virtual model into the virtual scene. By configuring the size and number of the QR code and the relative pose with the virtual model, the configuration operation of the QR code and the virtual model is completed, thereby generating the script file for virtual model display.

Figure 4. QR code information configuration interface.

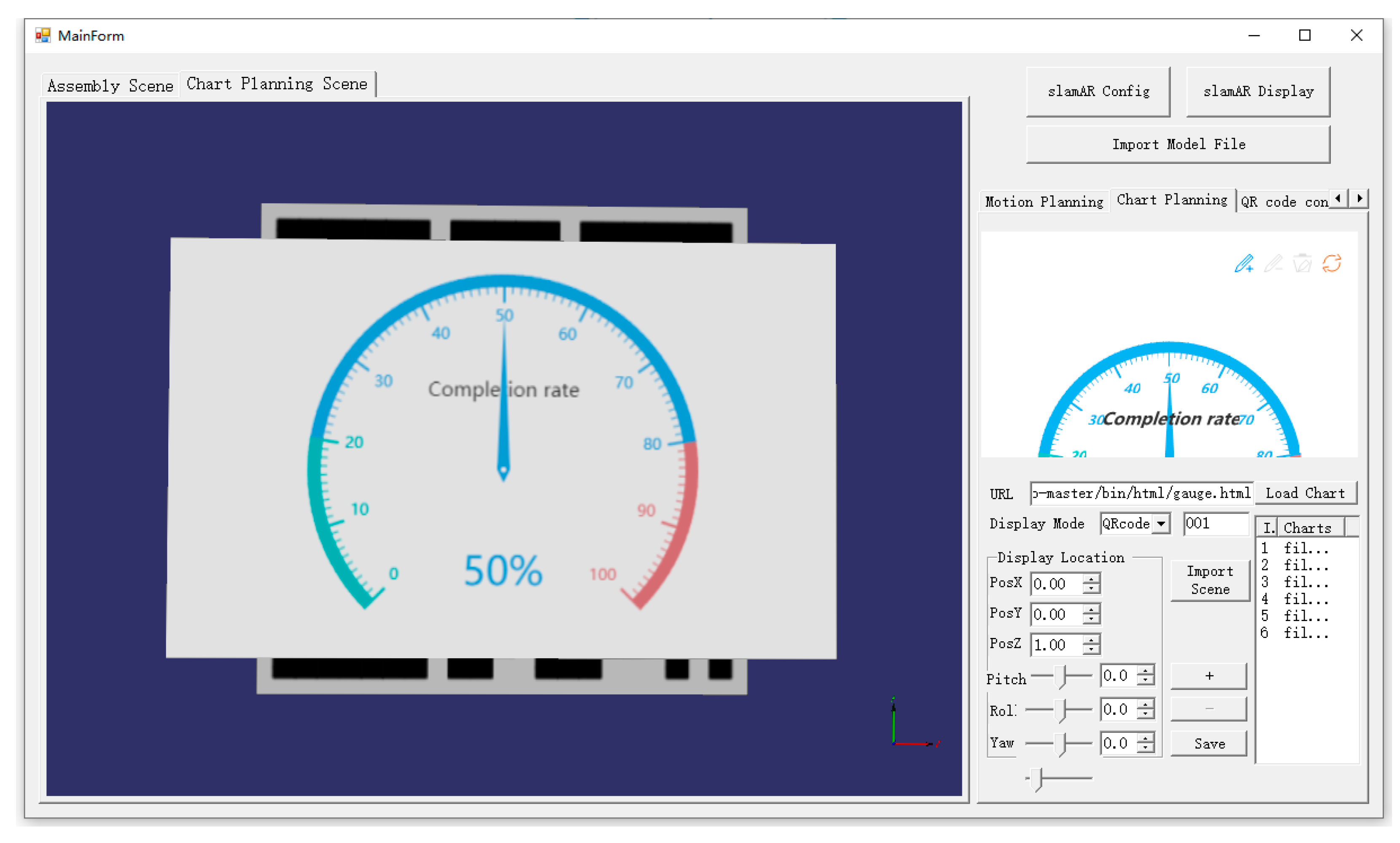

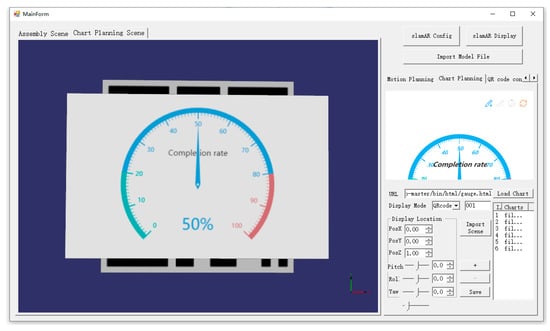

The instrument display planning unit based on QR code is used to plan information such as the instrument interfaces corresponding to different QR codes, the data labels, and the relative display positions of the QR code and the virtual instrument in a virtual scene. Figure 5 illustrates the industrial virtual instrument planning interface. This study adopts ECharts [37], a visualization library, and WebSocket to build a virtual instrument library. WebSocket is a full-duplex communication protocol based on the Transmission Control Protocol (TCP), and it is used here for data exchange between the script parsing and AR display software and the server. By using WebSocket, data can be retrieved from the data source in real time, thus realizing real-time refresh of industrial virtual instruments.

Figure 5. Industrial virtual instrument planning interface.

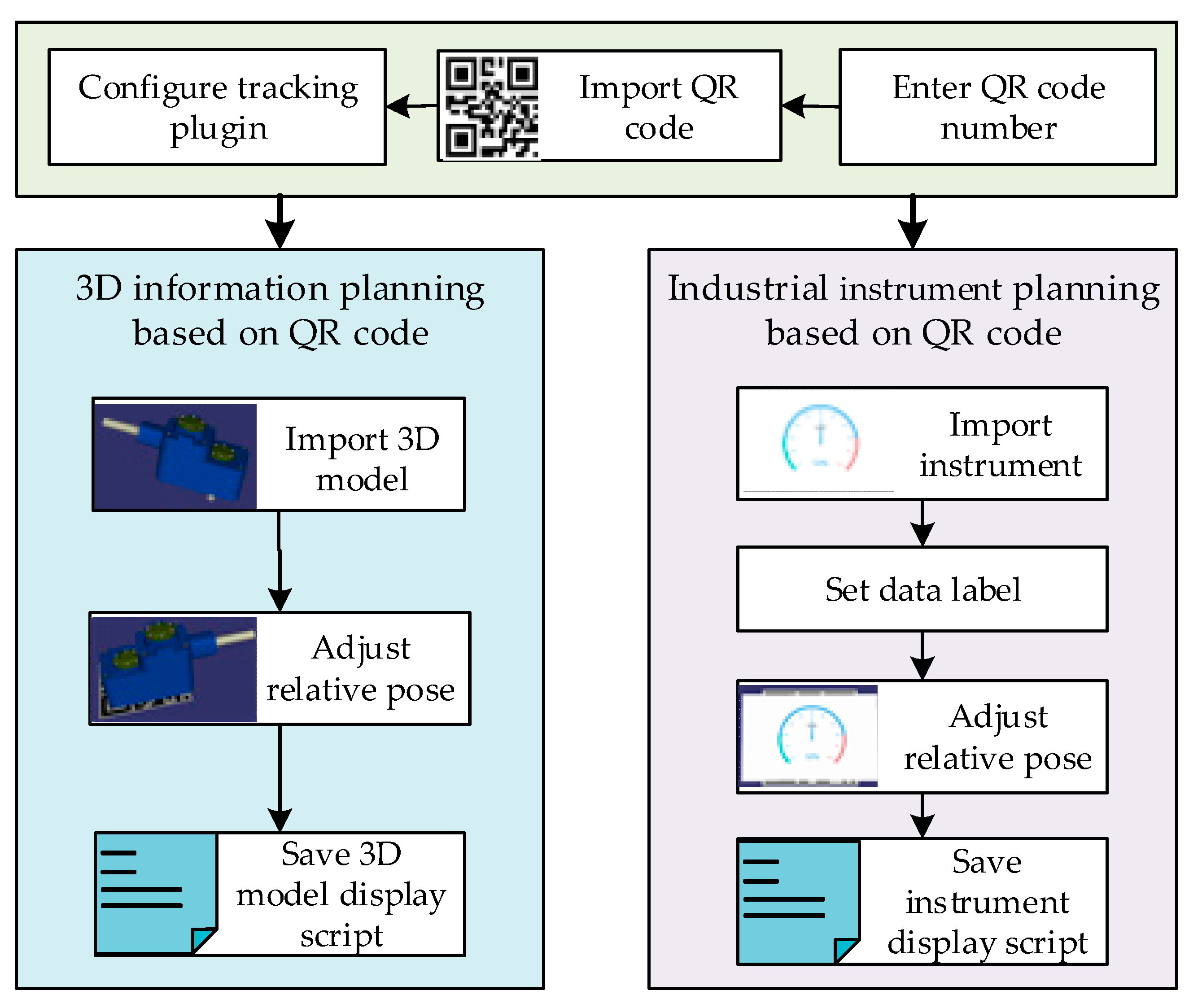

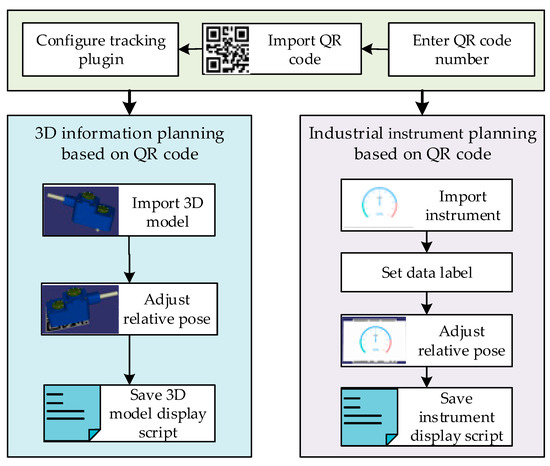

The configuration process of the 3D information display planning unit based on QR code and the instrument display planning unit based on QR code is illustrated in Figure 6. The planning process consists of the following steps.

Figure 6. The flow chart of the QR code information configuration module.

- (1) Configuration of QR code parameters: First, a QR code generator is used to generate a QR code, and each QR code corresponds to a different number. Then, in the planning interface, the number and size of the QR code are configured, and the corresponding QR code is imported into the virtual scene. Finally, the tracking plugin is configured according to the size of the QR code. The tracking plugin is employed to decode and track the position and attitude of the camera. The size information of the QR code is adopted to display the 3D animation model in a suitable size on the QR code;

- (2) Configuration of the display content for scanning the QR code: The 3D animation model or virtual instrument file that needs to be displayed after scanning the QR code is imported. If a virtual instrument is imported, the data tags required by the instrument need to be set. Then, the instrument file requests data from the data forwarding interface through these data tags;

- (3) Setting display parameters: The relative pose of the QR code and the 3D animation model or virtual instrument is adjusted. Meanwhile, the translation, rotation, and scaling parameters relative to the center point of the QR code are set;

- (4) After completing the planning, the above configuration information is saved as a 3D model display script file or an instrument display script file.
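To make step (3) concrete, the following Python sketch composes the translation, rotation, and scaling parameters of one configuration entry into a single 4×4 transform from model coordinates to QR code coordinates. The entry layout, the field names, the file name, and the restriction to a rotation about the marker's z axis are simplifying assumptions for illustration and do not describe the actual QDARV script format.

```python
import math


def matmul(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def translation(tx, ty, tz):
    return [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]]


def scaling(s):
    return [[s, 0, 0, 0], [0, s, 0, 0], [0, 0, s, 0], [0, 0, 0, 1]]


def rotation_z(deg):
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0, 0], [s, c, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]


def model_to_marker(entry):
    """Scale first, then rotate, then translate relative to the QR code center."""
    return matmul(translation(*entry["translation_m"]),
                  matmul(rotation_z(entry["rotation_z_deg"]),
                         scaling(entry["scale"])))


def transform_point(m, p):
    v = (p[0], p[1], p[2], 1.0)
    return tuple(sum(m[i][k] * v[k] for k in range(4)) for i in range(3))


# Hypothetical configuration entry for QR code number 7.
entry = {
    "qr_number": 7,
    "qr_size_m": 0.10,
    "content": {"type": "model", "file": "gearbox.flt"},
    "translation_m": (0.1, 0.0, 0.0),
    "rotation_z_deg": 90.0,
    "scale": 2.0,
}
placed = transform_point(model_to_marker(entry), (1.0, 0.0, 0.0))
```

Storing the three parameter groups separately, rather than only the composed matrix, keeps the script human-readable and lets the configuration interface edit each parameter independently.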

3.2.3. AR Display Configuration Module Based on SLAM

The AR display configuration module based on SLAM is used to save the SLAM map of the scene and configure the parameters of the virtual model in the scene. This module is developed based on ORB_SLAM2 and OpenSceneGraph (OSG) [29], where the former is used for scene localization and mapping, and the latter is used for real-time rendering of 3D models in the scene. OSG has advantages of high performance, scalability, portability, and rapid development. This paper is the first to combine OSG with ORB_SLAM2 for rendering virtual models in marker-less AR.

ORB_SLAM2 can locate and map unknown scenes and realize AR registration. However, ORB_SLAM2 positioning must be run online. Once the system is shut down, the scene map built by the system and the virtual models placed in the scene are lost. Thus, to save the scene map and display the virtual model in the scene when the map is loaded again, this study designed an AR display configuration module based on SLAM. This module builds a scene map under the online positioning mode of ORB_SLAM2 and places a virtual model on the map. After the mapping is completed, the system saves the scene map as a binary SLAM map script and the virtual model parameter configuration as a SLAM-AR display content script.

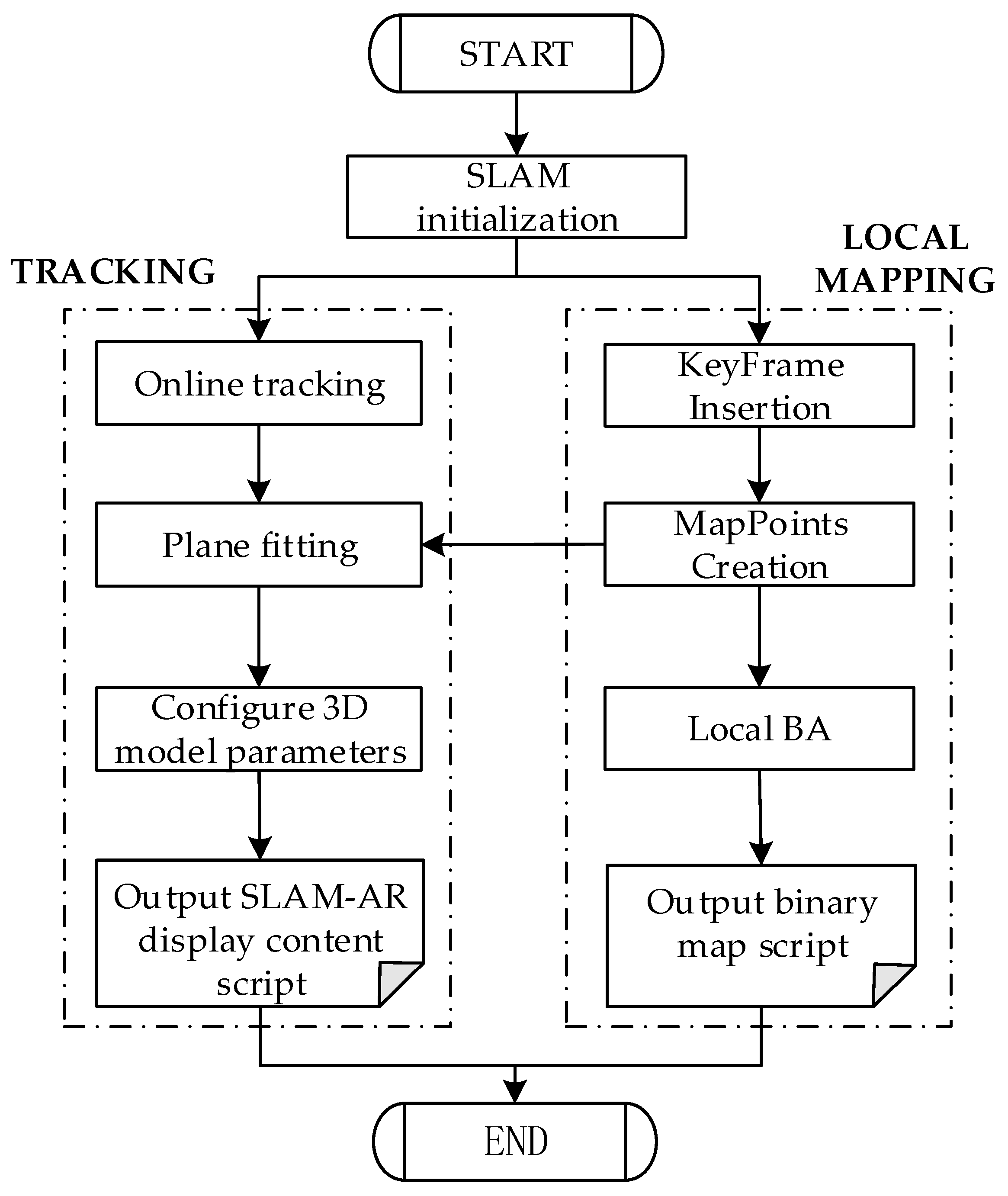

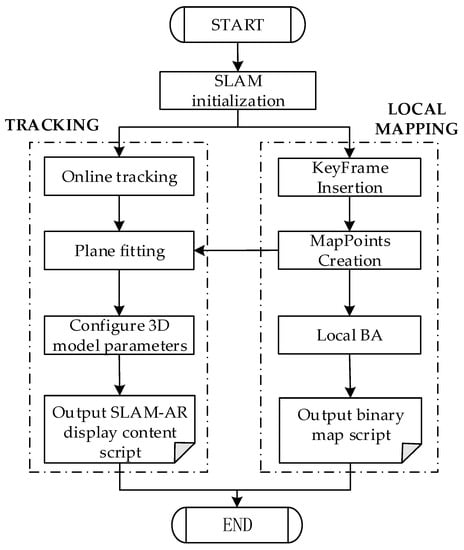

Figure 7 shows the flow chart of the SLAM-based AR display configuration module designed in this study. In the AR configuration software, the SLAM system runs in the online positioning working mode, in which the local mapping thread, tracking thread, and loop detection thread run in parallel. Developers can initialize the SLAM system by scanning the industrial scene with a mobile camera.

Figure 7. The flow chart of the AR display configuration module based on SLAM.

In the tracking thread, the system performs feature matching with map points by finding feature points in the scene and minimizes the reprojection error of these features to the previous frame to estimate the current camera pose. Different from AR registration based on QR code, AR registration based on SLAM does not rely on prior markers. The system adopts the Random Sample Consensus (RANSAC) [38] algorithm to fit the optimal plane from the map points in the current frame. Based on this, developers can click the graphic interaction button in the system interface to configure the 3D virtual model on the plane, and set the translation, rotation, and scaling parameters of the model. After the system is shut down, the parameter configuration of the 3D model is saved as a SLAM-AR display content script file.
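The plane fitting step can be sketched as follows. This is a minimal pure-Python RANSAC plane fit under assumed parameters (`threshold`, `iterations`, `seed`), not the implementation used in QDARV or ORB_SLAM2: it repeatedly samples three map points, builds the plane through them, and keeps the plane supported by the most inliers.

```python
import math
import random


def plane_through(p, q, r):
    """Return (unit normal n, offset d) with n.x + d = 0, or None if collinear."""
    a = [q[i] - p[i] for i in range(3)]
    b = [r[i] - p[i] for i in range(3)]
    n = (a[1] * b[2] - a[2] * b[1],
         a[2] * b[0] - a[0] * b[2],
         a[0] * b[1] - a[1] * b[0])
    length = math.sqrt(sum(c * c for c in n))
    if length < 1e-12:
        return None
    n = tuple(c / length for c in n)
    d = -sum(n[i] * p[i] for i in range(3))
    return n, d


def ransac_plane(points, threshold=0.01, iterations=200, seed=0):
    """Fit the dominant plane to a list of (x, y, z) points.

    Returns ((n, d), inliers) for the candidate plane with the most points
    closer than `threshold`, or (None, []) if no valid sample was drawn.
    """
    rng = random.Random(seed)
    best_plane, best_inliers = None, []
    for _ in range(iterations):
        plane = plane_through(*rng.sample(points, 3))
        if plane is None:
            continue
        n, d = plane
        inliers = [p for p in points
                   if abs(n[0] * p[0] + n[1] * p[1] + n[2] * p[2] + d) < threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers


# Synthetic map points: a 5 x 5 patch on the plane z = 0.5 plus five outliers.
map_points = [(0.1 * i, 0.1 * j, 0.5) for i in range(5) for j in range(5)]
map_points += [(0.2, 0.2, 0.9), (0.1, 0.3, 0.1), (0.4, 0.0, 0.8),
               (0.0, 0.4, 0.2), (0.3, 0.1, 1.2)]
plane, inliers = ransac_plane(map_points)
```

In practice the winning plane would usually be refined by a least-squares fit over its inliers, and the virtual model would then be placed in a coordinate frame whose z axis is aligned with the plane normal. Both refinements are omitted here for brevity.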

In the local mapping thread, the system processes the image frame data and inserts information such as keyframes and map points into the map. Meanwhile, local Bundle Adjustment (BA) is required to optimize the entire local map. After the mapping thread ends, the keyframes, 3D map points, covisibility graph, and spanning tree in the map are saved as binary SLAM map script files.
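The paper does not describe the binary layout of the SLAM map script. Purely as an illustration of what has to be saved and restored, the following Python sketch stores the four map components named above in a binary stream and reads them back. The `SlamMap` class, its field types, and the use of `pickle` are assumptions; ORB_SLAM2 itself is a C++ system and would need its own serialization code.

```python
import io
import pickle
from dataclasses import dataclass, asdict
from typing import Dict, List, Tuple


@dataclass
class SlamMap:
    keyframe_poses: Dict[int, List[float]]             # keyframe id -> flattened 4x4 pose
    map_points: Dict[int, Tuple[float, float, float]]  # map point id -> 3D position
    covisibility: Dict[int, Dict[int, int]]            # keyframe -> {neighbor: shared points}
    spanning_tree: Dict[int, int]                      # child keyframe -> parent keyframe


def save_map(slam_map: SlamMap, stream) -> None:
    # Only built-in containers are pickled, so the file does not depend on this class.
    pickle.dump(asdict(slam_map), stream, protocol=pickle.HIGHEST_PROTOCOL)


def load_map(stream) -> SlamMap:
    return SlamMap(**pickle.load(stream))


identity = [1.0, 0.0, 0.0, 0.0,
            0.0, 1.0, 0.0, 0.0,
            0.0, 0.0, 1.0, 0.0,
            0.0, 0.0, 0.0, 1.0]
original = SlamMap(
    keyframe_poses={0: identity, 1: identity},
    map_points={10: (0.1, 0.2, 1.5), 11: (0.3, -0.1, 2.0)},
    covisibility={0: {1: 2}, 1: {0: 2}},
    spanning_tree={1: 0},
)
buffer = io.BytesIO()
save_map(original, buffer)
buffer.seek(0)
restored = load_map(buffer)
```

A real map file would also have to carry the feature descriptors of each keyframe, since relocalization against a reloaded map depends on matching them.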

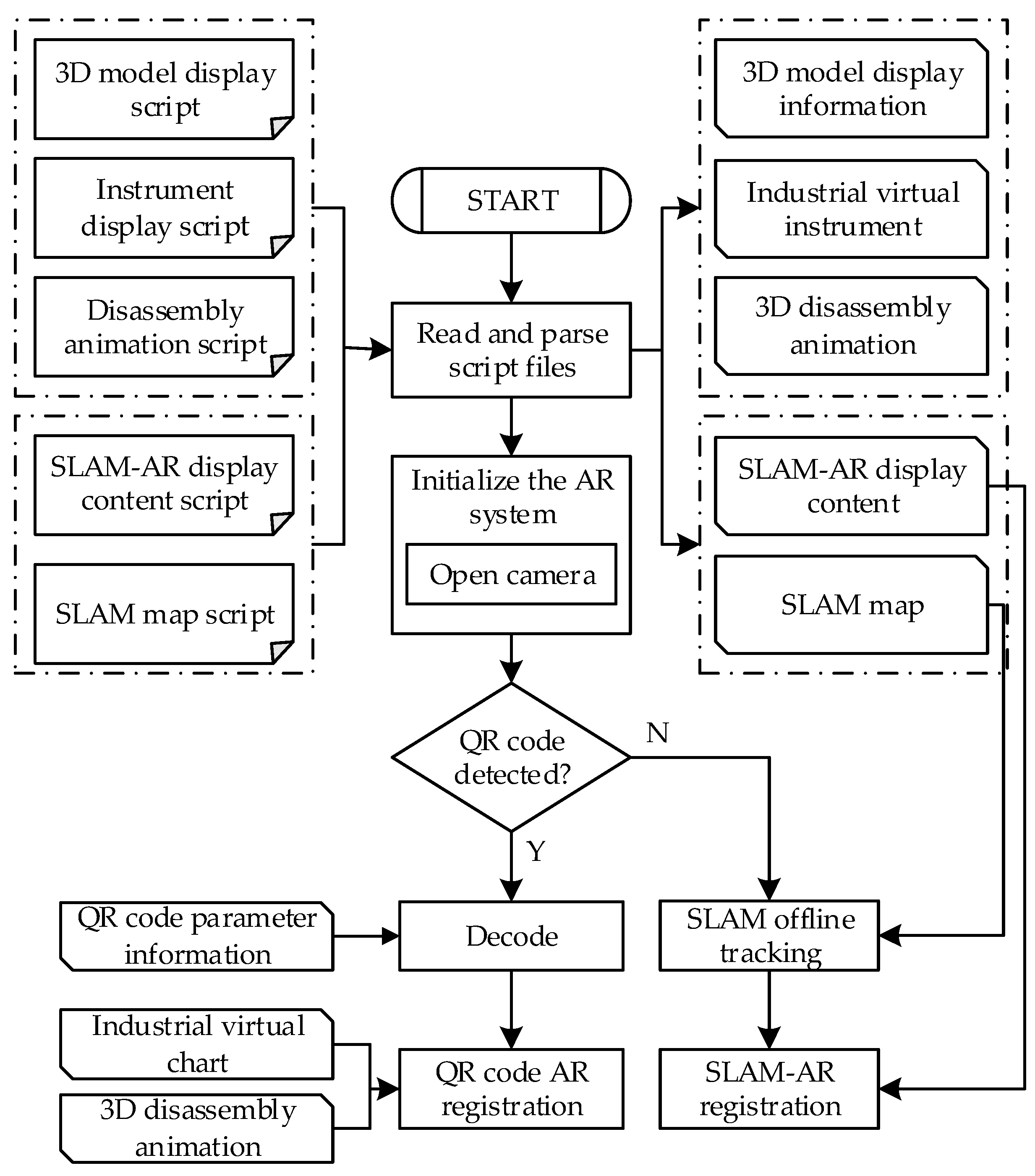

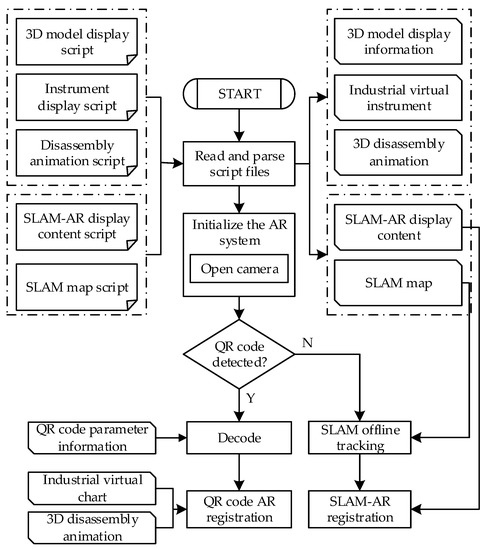

3.3. Script Parsing and AR Display Software

Three-dimensional registration is the core technology of the augmented reality system. It embeds virtual objects into the real scene according to the spatial perspective relationship by capturing the camera image frame and tracking the position and attitude of the camera. To parse the intermediate script files generated in the AR configuration software and quickly realize 3D registration, this study designed the script parsing and AR display software, whose flow chart is illustrated in Figure 8. The system reads the 3D model display script file, instrument display script file, disassembly animation script, SLAM-AR display content script (.yaml), and SLAM map script (.bin) generated in the AR configuration software. Then, it parses the scripts and obtains 3D model display information, industrial virtual instrument, motion parameters of disassembly animation, SLAM-AR display content information, and binary SLAM map. This data information is used to initialize the AR system and realize AR registration.

Figure 8. The flow chart of the script parsing and AR display software.

By using the above-mentioned intermediate script files, the software supports two AR registration modes: AR registration based on QR code and AR registration based on SLAM map reloading. Moreover, the software integrates the two modes into the script parsing and AR display software. The two AR registration modes can switch freely in the actual scene. When the system detects that the image contains a QR code, based on the recognized QR code, it reads the number of the QR code, parses the display content (animation, instrument, etc.) corresponding to the number from the script file, and locates the pose between the QR code and the camera for AR registration based on the QR code. Otherwise, it loads the built SLAM scene map, scans the built scene, and tracks the pose of the camera through feature matching. Meanwhile, it parses the AR display content in the scene from the SLAM-AR display content script file and performs AR registration based on SLAM.
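The switching rule described above can be summarized in a few lines. The following Python sketch is only a schematic of the decision logic. The function name, the dictionary of parsed QR scripts, and the fallback to SLAM when a decoded number has no configured content are assumptions that the paper does not specify.

```python
def choose_registration(qr_number, qr_scripts, slam_map_loaded):
    """Pick the registration mode for the current frame.

    qr_number: number decoded from a QR code in the image, or None if none is visible.
    qr_scripts: mapping from QR code number to its parsed display content.
    slam_map_loaded: True once the saved SLAM map has been loaded.
    Returns (mode, content), where mode is "qr", "slam", or "none".
    """
    if qr_number is not None and qr_number in qr_scripts:
        return "qr", qr_scripts[qr_number]
    if slam_map_loaded:
        return "slam", None      # content comes from the SLAM-AR display script
    return "none", None


# Hypothetical parsed scripts: QR code 7 shows a model, QR code 8 shows a gauge.
parsed = {7: {"type": "model", "file": "gearbox.flt"},
          8: {"type": "instrument", "data_tags": ["spindle_speed"]}}
mode_a = choose_registration(7, parsed, slam_map_loaded=True)
mode_b = choose_registration(None, parsed, slam_map_loaded=True)
mode_c = choose_registration(None, parsed, slam_map_loaded=False)
```

Evaluating the rule once per frame gives the free switching between the two modes that the text describes, since the mode follows whatever is currently visible to the camera.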

3.3.1. AR Registration Based on QR Code

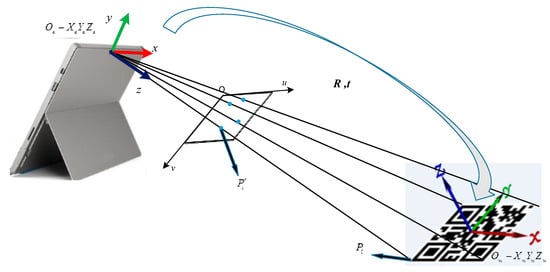

This study uses QR codes for AR registration. The image of the real scene is captured by the camera, and the QR code in the image is positioned and decoded. According to the 3D model display script generated in the AR configuration module, AR technology is employed to display the 3D animation model on the QR code.

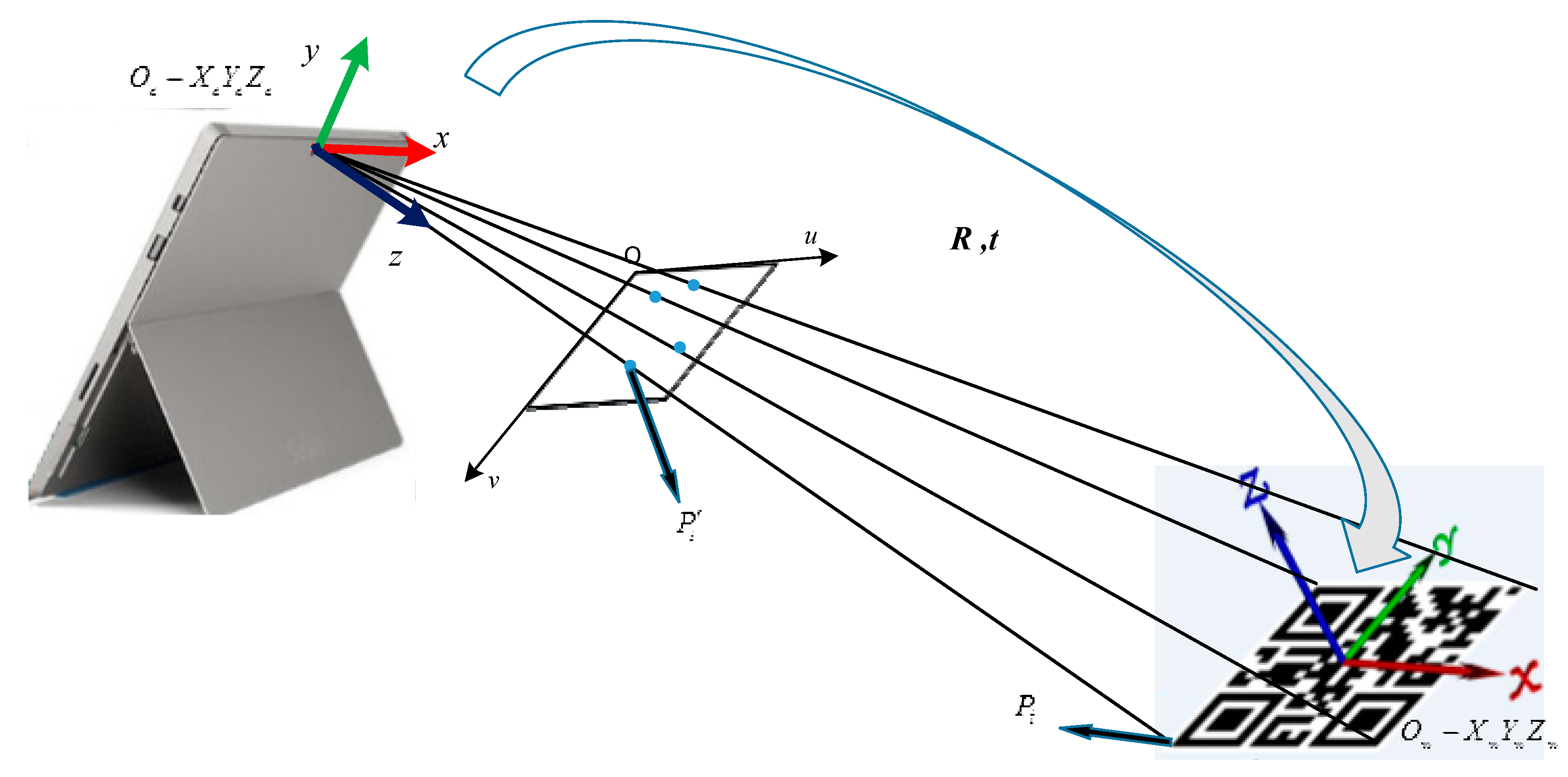

To superimpose information content on the QR code, AR registration is required, i.e., to locate the pose relationship between the QR code and the camera. AR registration method based on QR code can be regarded as a Perspective-n-Point (PnP) problem. PnP is an algorithm for estimating camera pose by the known coordinates of several 3D feature points and their 2D projection coordinates. As shown in Figure 9, the center point of the QR code is the origin of the world coordinate system; $P_w = (X_w, Y_w, Z_w)^T$ is the corner point coordinates of the QR code in the world coordinate system; $p = (u, v)^T$ is the pixel coordinates of the projection of this point in the image. According to the camera imaging model, the following formula is obtained:

$$
s \begin{bmatrix} u \\ v \\ 1 \end{bmatrix}
= K \begin{bmatrix} R & t \end{bmatrix}
\begin{bmatrix} X_w \\ Y_w \\ Z_w \\ 1 \end{bmatrix},
\qquad
K = \begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix}
$$

where $P_w$ is the point in the world coordinate system; $p$ is the projection of $P_w$ on the image plane; $K$ is the internal parameter matrix of the camera (where $f_x$ and $f_y$ are the zoomed focal length; $(c_x, c_y)$ is the optical center); $s$ is the scale factor of the image point; $R$ and $t$ are camera external parameters, i.e., the rotation and translation of the camera coordinate system relative to the world coordinate system. In this study, the PnP solution is performed on the four corner points of the QR code to obtain the 6-DOF pose of the camera in the QR code coordinate system.
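As a worked illustration of the camera imaging model, the sketch below projects the four corner points of a QR code into the image. All numeric values (focal length, optical center, QR code size, camera distance) are assumptions chosen for the example. A PnP solver performs the inverse operation: it recovers the rotation and translation from such 3D–2D correspondences (for instance with OpenCV's `cv2.solvePnP`, which is not necessarily what the authors used).

```python
def project(K, R, t, Pw):
    """Project a 3D world point to pixels using s * [u, v, 1]^T = K (R Pw + t)."""
    # Transform the point from the world (QR code) frame to the camera frame.
    Pc = [sum(R[i][j] * Pw[j] for j in range(3)) + t[i] for i in range(3)]
    # Apply the intrinsic matrix; the third component is the scale factor s.
    uvw = [sum(K[i][j] * Pc[j] for j in range(3)) for i in range(3)]
    s = uvw[2]
    return uvw[0] / s, uvw[1] / s


# Assumed intrinsics: 800 px focal length, optical center at (320, 240).
K = [[800.0, 0.0, 320.0],
     [0.0, 800.0, 240.0],
     [0.0, 0.0, 1.0]]

# Assumed extrinsics: camera looks straight at the QR code from 0.5 m away.
R = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0],
     [0.0, 0.0, 1.0]]
t = [0.0, 0.0, 0.5]

# Corners of an assumed 10 cm QR code centered at the world origin (Z = 0 plane).
half = 0.05
corners = [(-half, -half, 0.0), (half, -half, 0.0),
           (half, half, 0.0), (-half, half, 0.0)]

for corner in corners:
    print(corner, "->", project(K, R, t, corner))
```

Because the world origin is the QR code center and all corners lie in the Z = 0 plane, the four correspondences are sufficient to constrain the 6-DOF pose.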

Figure 9. Schematic diagram of AR registration based on QR code.

In the script parsing and AR display software, the pose relationship between the QR code and the camera is determined when the camera recognizes the QR code in the real scene. Meanwhile, the 3D model to be displayed and the relative pose between the 3D model and the QR code are determined from the 3D model display information obtained by parsing the script file, thereby realizing AR display based on the QR code. If an assembly disassembly animation is displayed, the motion process between different parts of the assembly is played according to the disassembly animation motion parameters, and the user can click the ‘Previous’ or ‘Next’ button to step through the animation. If an industrial virtual instrument is displayed, the instrument interface file is imported through the instrument display script, and data are requested from the data forwarding interface through the data tag.
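The two poses mentioned above combine by matrix multiplication: the pose of the model in the camera frame is the QR-code-to-camera pose multiplied by the model-to-QR-code pose. The following minimal sketch uses 4 × 4 homogeneous transforms; the helper names and all numeric values are hypothetical.

```python
import math


def matmul4(A, B):
    """Multiply two 4x4 matrices stored as nested lists (row-major)."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def translation(x, y, z):
    """Homogeneous transform for a pure translation."""
    return [[1.0, 0.0, 0.0, x],
            [0.0, 1.0, 0.0, y],
            [0.0, 0.0, 1.0, z],
            [0.0, 0.0, 0.0, 1.0]]


def rot_z(deg):
    """Homogeneous transform for a rotation about the Z axis."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0.0, 0.0],
            [s, c, 0.0, 0.0],
            [0.0, 0.0, 1.0, 0.0],
            [0.0, 0.0, 0.0, 1.0]]


# Assumed result of the PnP step: QR code 0.5 m in front of the camera.
T_cam_qr = translation(0.0, 0.0, 0.5)

# Assumed script content: model raised 0.1 m above the code and turned 90 degrees.
T_qr_model = matmul4(translation(0.0, 0.0, 0.1), rot_z(90.0))

# Pose of the model in the camera frame, as needed by the renderer.
T_cam_model = matmul4(T_cam_qr, T_qr_model)
for row in T_cam_model:
    print([round(value, 3) for value in row])
```

Keeping the model-to-code pose in the script means it can be edited without touching the tracking code; only the first factor changes from frame to frame.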

3.3.2. AR Registration Based on SLAM Map Reloading

In ORB_SLAM2, AR registration scans an unknown scene with a camera, estimates the camera pose following the multi-view geometry principle, identifies the plane, and places a virtual model on the plane to achieve AR display. Based on this, the QDARV system provides an AR registration method based on SLAM map reloading. This method loads the scene map, uses the camera to scan the mapped scene, applies feature matching to estimate the camera pose, parses the SLAM-AR display content script, and places the virtual model to achieve AR registration.

In the online positioning mode of ORB_SLAM2, the tracking thread, local mapping thread, loop detection thread, and AR display thread of the system are synchronized with the optimization task. The tracking thread extracts Oriented FAST and Rotated BRIEF (ORB) feature points from the image and estimates the camera pose between frames. The local mapping thread adopts the bundle adjustment (BA) method to optimize keyframes and map points. The loop detection thread detects whether the camera returns to a previously visited position and calls the g2o optimization library [39] to eliminate accumulated errors. The AR display thread realizes the AR display by detecting the plane in the real scene on which the 3D model is placed.

After loading the map, the system runs in the offline positioning mode. It stops the local mapping thread, the loop detection thread, and the optimization tasks, and retains only the tracking thread and the AR display thread for pose estimation and AR registration. The system tracks the camera pose through relocation. First, the tracking thread obtains the image frame captured by the camera and finds candidate keyframes similar to the current frame in the keyframe library. Then, Bag of Words (BoW) is used for feature matching between the current frame and each candidate keyframe. If the number of matching points is less than 15, the current candidate frame is discarded. Finally, the number of inliers obtained after BA optimization is used to determine whether the relocation is successful: if the number of inlier matching points between the candidate frame and the current frame is greater than 50, the relocation is considered successful. Furthermore, the Efficient Perspective-n-Point (EPnP) [40] algorithm is adopted to estimate the current camera pose. Meanwhile, the AR display thread obtains the model matrix and the translation, rotation, and scaling parameters of the 3D model from the parsed SLAM-AR display content information, and it renders the virtual model into the real scene through the OSG 3D rendering engine, thereby achieving augmented reality display of the planned scene.
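The relocation decision can be summarized as two threshold checks per candidate keyframe. The sketch below captures only that decision logic with the thresholds quoted in the text; the `Candidate` class and its fields are hypothetical, and the match and inlier counts that a real system would compute through BoW matching and pose optimization are passed in as plain numbers.

```python
from dataclasses import dataclass
from typing import List, Optional

MIN_BOW_MATCHES = 15   # candidates with fewer BoW matches are discarded
MIN_INLIERS = 50       # relocation succeeds with more inliers than this


@dataclass
class Candidate:
    keyframe_id: int
    bow_matches: int   # matches from BoW-guided feature matching
    inliers: int       # inliers remaining after pose optimization


def relocalize(candidates: List[Candidate]) -> Optional[int]:
    """Return the id of the first keyframe that passes both checks, else None."""
    for cand in candidates:
        if cand.bow_matches < MIN_BOW_MATCHES:
            continue  # too few matches: discard this candidate keyframe
        if cand.inliers > MIN_INLIERS:
            return cand.keyframe_id  # relocation is considered successful
    return None


candidates = [
    Candidate(keyframe_id=1, bow_matches=10, inliers=80),  # rejected by first check
    Candidate(keyframe_id=2, bow_matches=30, inliers=40),  # rejected by second check
    Candidate(keyframe_id=3, bow_matches=25, inliers=60),  # accepted
]
print(relocalize(candidates))
```

In practice the inlier count would be computed lazily, only for candidates that survive the first check, since pose optimization is the expensive step.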

The AR registration method based on SLAM map reloading proposed in this study can re-display previously configured AR scenes. The real-time pose estimation results have a small accumulated error. Moreover, keeping only the tracking thread and the AR display thread reduces the computational resources required for pose estimation, which improves the running efficiency of the system (in Section 4.2, the frame rate rises from about 22 Hz in online positioning to about 26 Hz in offline positioning).

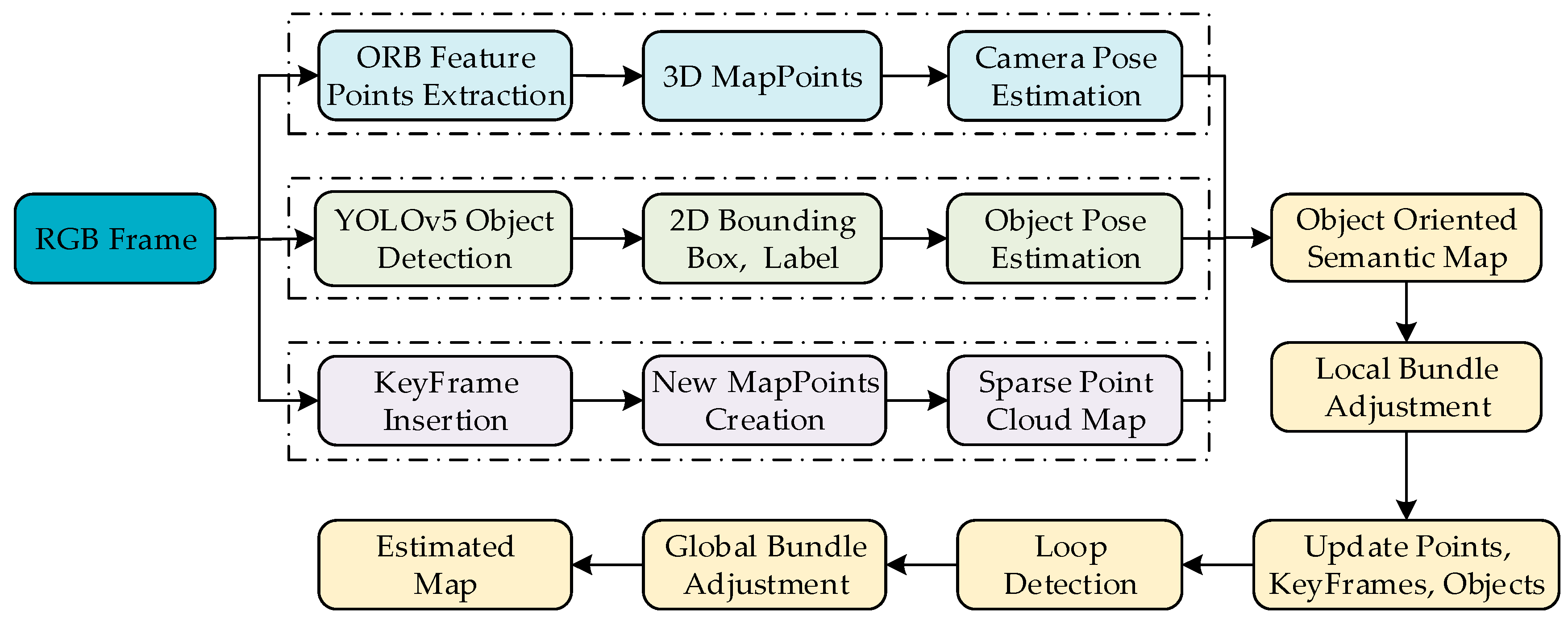

3.4. Object SLAM AR

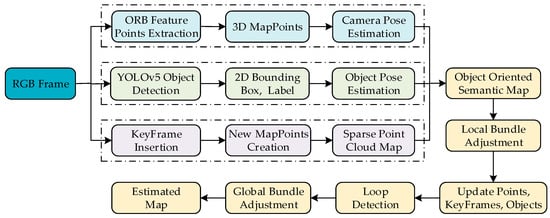

In this paper, the AR registration based on SLAM uses ORB_SLAM2 to locate and map the scene. However, ORB_SLAM2 uses feature points as geometric features to estimate the camera pose between frames, and the environment map it builds is only a sparse representation of the scene, so the system may fail in low-texture or no-texture environments. In a real environment, objects carry important semantic information about the scene. They can be used as landmarks in the SLAM system to assist system localization and improve the robustness of the system. As shown in Figure 10, to implement an object-oriented SLAM system and build an object-oriented semantic map, this study adds a semantic thread to ORB_SLAM2. The semantic thread applies the YOLOv5 object detector to each frame of the real-time video stream, obtains the 2D bounding boxes and semantic labels of the objects, estimates the pose of each object, and finally maps this information to the sparse point cloud map of ORB_SLAM2, thereby constructing an object-oriented semantic map.

Figure 10. Object-oriented SLAM system framework.

3.4.1. Object Detection

This system uses the YOLOv5 object detector to process monocular image frames in real time. The object detection model is trained on the COCO data set [41], so it can detect 80 types of targets in the real scene. The training platform is PyTorch. The model input is RGB images with a size of 640 × 384 pixels.

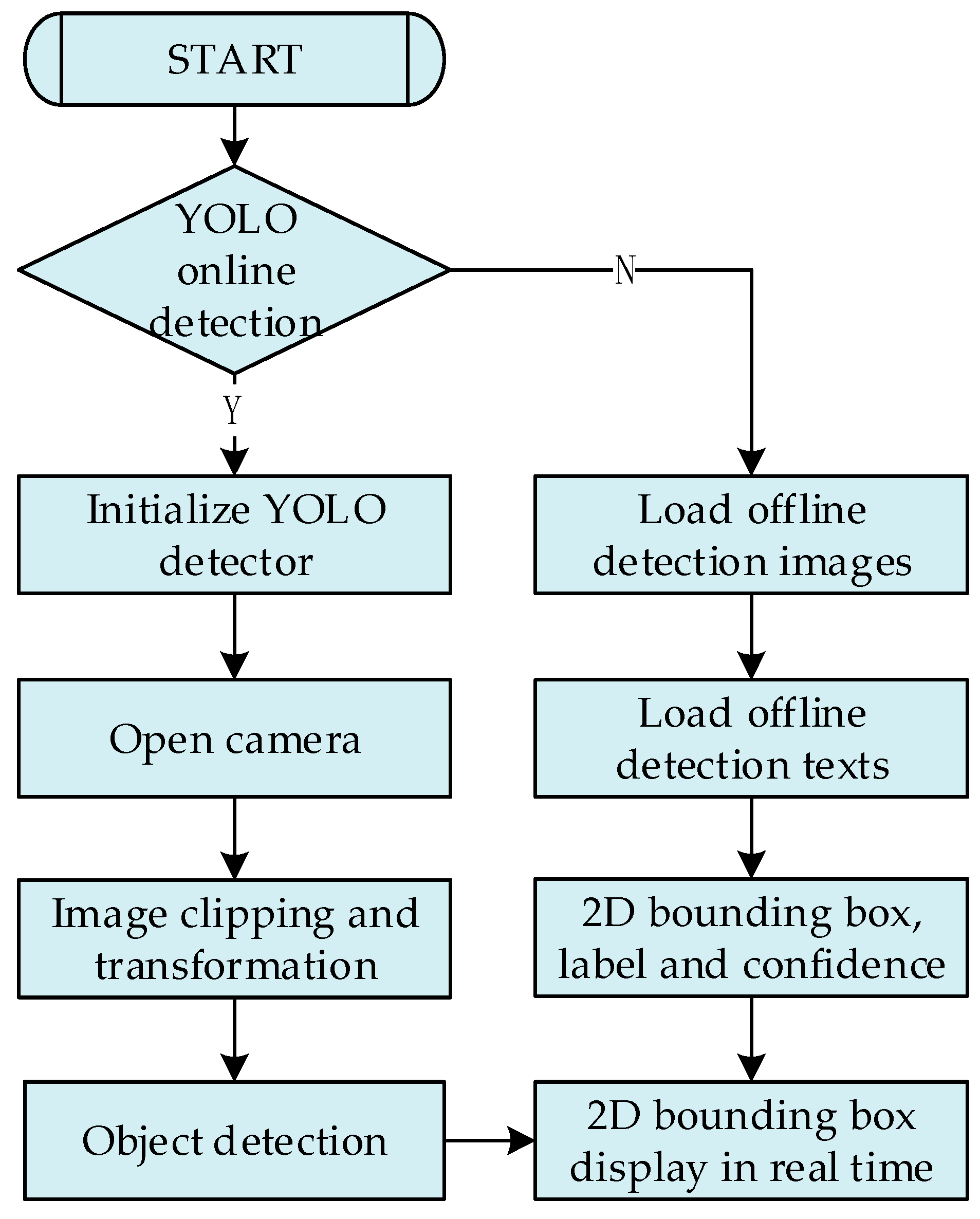

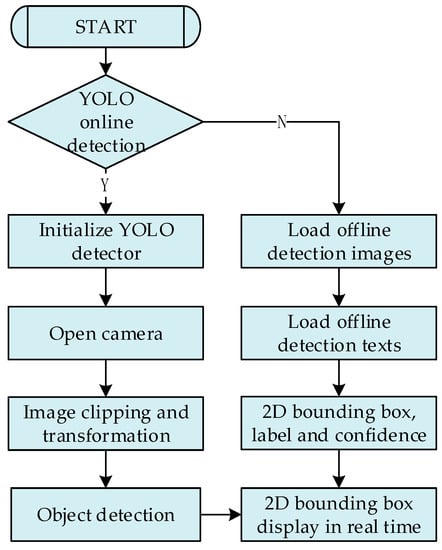

Figure 11 shows the flow chart of the object detection module of the system, which has two working modes: online detection and offline detection. In the online detection mode, the object detection module is initialized after the system starts, including loading the trained YOLOv5 object detection model and setting the clipping and transformation matrix of the image. After the object detection module is successfully initialized, the monocular camera is turned on to obtain real-time image frames. After the image is clipped and transformed, the object detection model is used for 2D detection, and the detection results are transmitted to the main thread for display. In the offline detection mode, object detection is performed in advance on the preloaded images to generate offline detection texts. After the system is launched, the offline detection images and offline detection texts are loaded. Then, the 2D bounding boxes, semantic labels, and detection confidences of the objects in each image are obtained, and they are transmitted to the main thread for real-time display. The offline mode has higher real-time performance because it does not require online inference. However, it accepts only a fixed set of images, which greatly limits its applicability.
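To make the offline mode concrete, the sketch below parses one offline detection text into bounding boxes, labels, and confidences. The paper does not specify the text format, so the one-object-per-line layout `label confidence x_min y_min x_max y_max` used here is purely an assumption, as are the `Detection` and `parse_detection_text` names.

```python
from typing import List, NamedTuple, Tuple


class Detection(NamedTuple):
    label: str
    confidence: float
    box: Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels


def parse_detection_text(text: str) -> List[Detection]:
    """Parse an assumed 'label confidence x_min y_min x_max y_max' line format."""
    detections = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        # Split from the right so labels containing spaces stay intact.
        label, conf, x1, y1, x2, y2 = line.rsplit(maxsplit=5)
        detections.append(
            Detection(label, float(conf), (int(x1), int(y1), int(x2), int(y2)))
        )
    return detections


# Hypothetical content of one offline detection text.
sample = """
# label confidence x_min y_min x_max y_max
cup 0.88 120 200 180 290
cell phone 0.91 300 150 360 260
"""

for det in parse_detection_text(sample):
    print(det)
```

Since no network inference is needed at run time, loading such a file is only a matter of text parsing, which is consistent with the higher real-time performance reported for the offline mode.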

Figure 11. The flow chart of the object detection module.

3.4.2. Construction of Object-Oriented Level Semantic Maps

The local mapping thread follows the map construction strategy of ORB_SLAM2. This strategy inserts new map points and keyframes into the map and eliminates redundant map points and keyframes to construct a sparse point cloud map of the scene. Based on this, the system inserts objects into the map as new landmarks. This study combines the methods in refs. [42,43] to represent objects in the scene in a lightweight way of dual quadric surfaces and cubes instead of complex CAD models. In this study, irregular objects such as cups and balls are represented as dual quadric surfaces, and regular objects such as keyboards and books are represented as cubes.

The main workflow of constructing object-oriented semantic maps is as follows: First, the objects in each frame of an image are detected by the YOLOv5 object detector, and then the 2D bounding box, semantic label, and label confidence of these objects are obtained. This study only retains the objects with more than 70% detection confidence. Subsequently, the methods in refs. [42,43] are employed to estimate the pose of the objects, and two lightweight methods of dual quadric surfaces and cubes are used to model the objects. Finally, these objects are mapped to a sparse point cloud map to construct object-oriented semantic maps.
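The filtering and modeling choices in this workflow can be sketched as follows. The 70% threshold and the example categories come from the text; the label sets use COCO class names, and the fallback to a dual quadric for categories that the text does not mention is an assumption made only for this illustration.

```python
CONFIDENCE_THRESHOLD = 0.70

# Example categories from the text, written as COCO class names.
CUBE_LABELS = {"keyboard", "book"}          # regular objects
QUADRIC_LABELS = {"cup", "sports ball"}     # irregular objects


def select_landmarks(detections):
    """Keep confident detections and choose a lightweight model for each one.

    detections: iterable of (label, confidence) pairs.
    Returns a list of (label, representation) pairs.
    """
    landmarks = []
    for label, confidence in detections:
        if confidence <= CONFIDENCE_THRESHOLD:
            continue  # only objects above 70% confidence are retained
        if label in CUBE_LABELS:
            representation = "cube"
        elif label in QUADRIC_LABELS:
            representation = "dual_quadric"
        else:
            # Assumption: categories not listed in the text default to a quadric.
            representation = "dual_quadric"
        landmarks.append((label, representation))
    return landmarks


detections = [("keyboard", 0.92), ("cup", 0.65), ("sports ball", 0.81), ("book", 0.74)]
print(select_landmarks(detections))
```

Pose estimation and insertion into the sparse point cloud map would follow this step and are not shown here.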

3.4.3. Data Association

In ORB_SLAM2, which is based on the feature point method, feature matching is the most important method for data association. The system can effectively match ORB feature points in different views through ORB descriptor matching and an epipolar geometric search. Meanwhile, object SLAM needs to build the data association between objects. Based on this, this study adopts a data association method that combines regional semantic categories with local feature point descriptors. At the image level, the semantic labels of objects are used to associate the object detection frame areas between frames. The descriptors of the ORB feature points inside a detection frame are regarded as the regional pixel descriptors of that detection frame, which resolves the semantic matching ambiguity caused by multiple objects of the same category.
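A minimal sketch of this two-level association is given below: detections are first restricted to the same semantic label and then disambiguated by the Hamming distance between the binary descriptors inside each detection frame. The scoring rule (mean nearest-descriptor distance) and the function names are assumptions for illustration, and the toy descriptors are 8 bits long instead of the 256 bits of real ORB descriptors.

```python
def hamming(a: int, b: int) -> int:
    """Hamming distance between two binary descriptors stored as integers."""
    return bin(a ^ b).count("1")


def region_distance(desc_a, desc_b) -> float:
    """Mean distance from each descriptor in region A to its closest one in region B."""
    return sum(min(hamming(a, b) for b in desc_b) for a in desc_a) / len(desc_a)


def associate(prev_objects, curr_objects):
    """Match current detections to previous ones; returns {curr_index: prev_index}.

    Each object is a (label, descriptors) pair. Only objects with the same
    label are compared; the descriptor distance breaks ties between several
    objects of the same category. One-to-one matching is not enforced here.
    """
    matches = {}
    for ci, (c_label, c_desc) in enumerate(curr_objects):
        best = None
        for pi, (p_label, p_desc) in enumerate(prev_objects):
            if p_label != c_label or not c_desc or not p_desc:
                continue
            distance = region_distance(c_desc, p_desc)
            if best is None or distance < best[0]:
                best = (distance, pi)
        if best is not None:
            matches[ci] = best[1]
    return matches


# Two cups in each frame: labels alone are ambiguous, descriptors are not.
prev_objects = [("cup", [0b11110000, 0b11001100]), ("cup", [0b00001111, 0b00110011])]
curr_objects = [("cup", [0b00001110, 0b00110111]), ("cup", [0b11110001])]
print(associate(prev_objects, curr_objects))
```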

When the system is in a low-texture or no-texture environment, ORB_SLAM2 cannot track the camera pose due to the lack of feature points, which makes the system fail to work. The object-oriented SLAM system can construct semantic data association between objects, so it achieves superior performance even in low-texture or no-texture environments.

4. Experimental Results

In this study, a QDARV toolkit is designed. Experiments are conducted to verify the validity and reliability of this toolkit. The specific experimental contents are as follows: (1) AR registration test based on QR code; the QR code is located in the real scene to achieve the assembly disassembly animation display and the industrial virtual instrument display. (2) AR registration test based on SLAM map reloading; the scene map is saved in the AR configuration software, and the SLAM-AR display content script is configured. The constructed scene map is loaded to the script parsing and AR display software for SLAM offline positioning. The SLAM-AR display content script is parsed to realize AR registration. (3) Object SLAM system test; the functions of ORB feature point extraction, YOLOv5 object detection, object pose estimation, and object-oriented level semantic map construction in the object-oriented SLAM system are tested in real scenes. (4) The QDARV tool is compared with other AR development tools. (5) The feasibility of the QDARV is analyzed by user evaluation using heuristic evaluation techniques.

4.1. AR Registration Based on QR Code

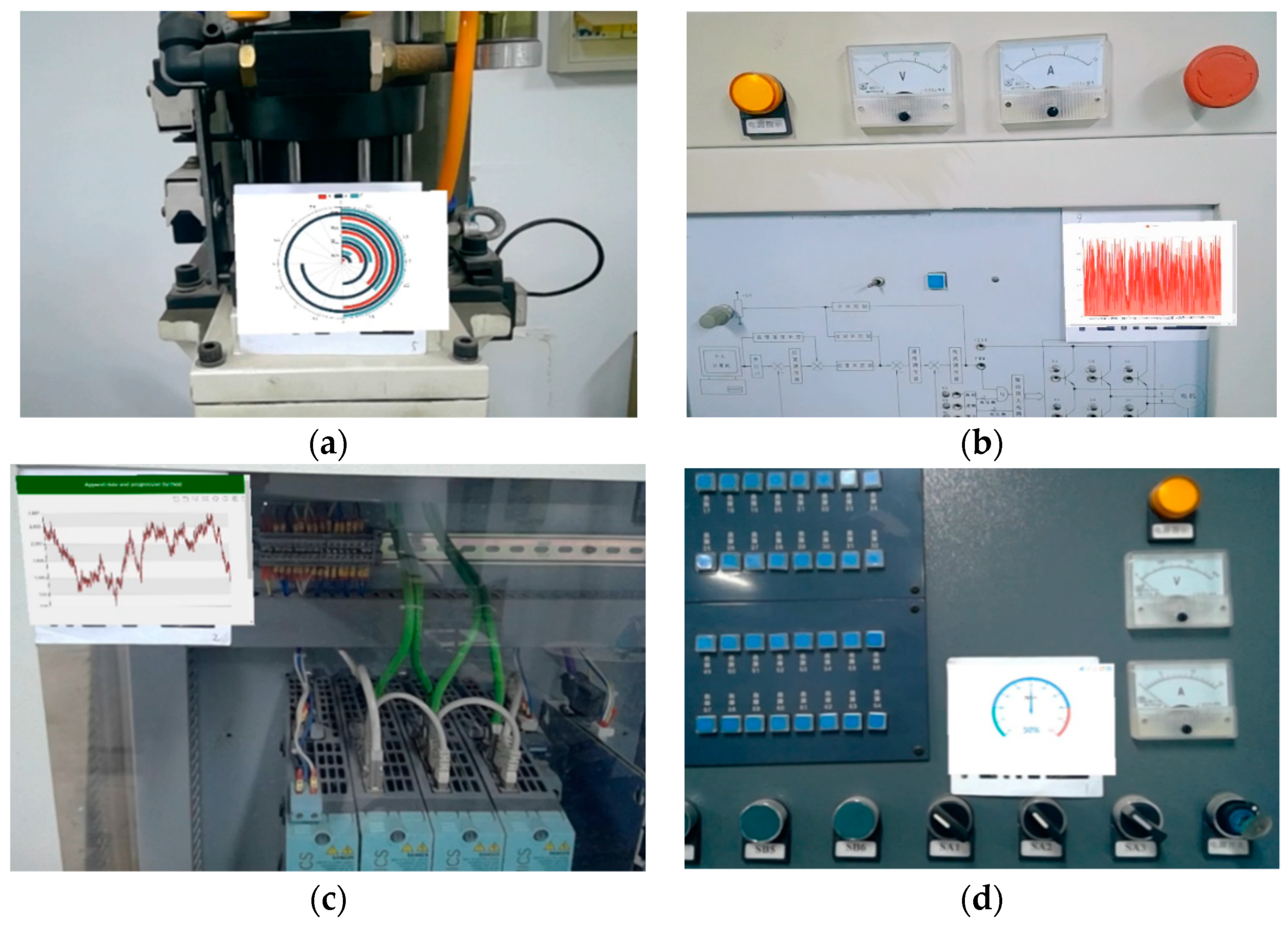

This experiment mainly takes the assembly disassembly animation and industrial virtual instrument as examples to demonstrate the function of AR registration based on QR code.

- (1)

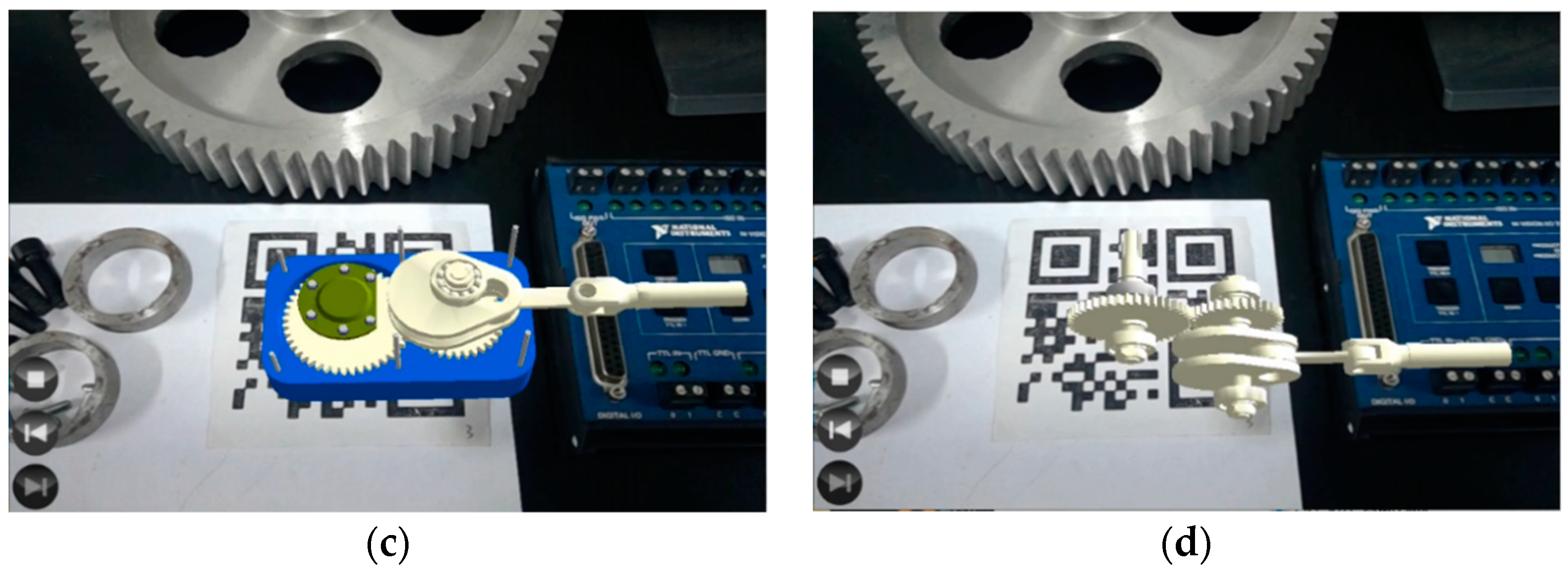

- Assembly disassembly animation demonstration

In this study, the assembly disassembly animation steps are configured in the AR configuration software. The assembly disassembly animation AR demonstration is realized by recognizing the QR code in the scene with the script parsing and AR display software. Figure 12 illustrates the assembly disassembly animation demonstration in the real scene. To better realize AR interaction and show the assembly disassembly steps more intuitively, the system designs three graphical interaction buttons to control the demonstration of the disassembly animation, including the “next step”, the “previous step”, and the “stop” buttons. Developers can achieve the animation demonstration of the entire disassembly process by clicking these buttons.

Figure 12. Assembly disassembly animation demonstration: (a) initial page; (b) top cover is hidden; (c) middle shell is hidden; (d) operation demonstration.

- (2)

- Industrial instrument AR Display

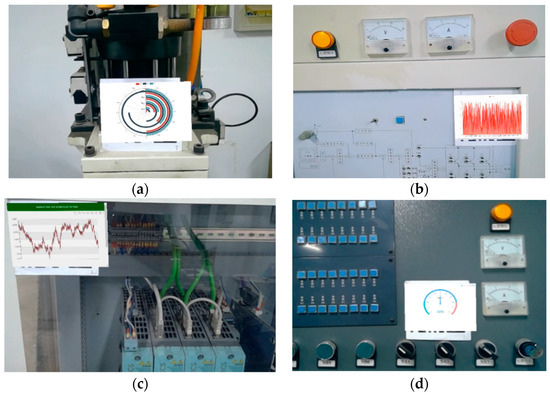

In this study, the instrument display information is configured in the AR configuration software. The AR display function of the industrial virtual instrument is realized by recognizing the QR code in the scene with the script parsing and AR display software. Figure 13 shows the AR display interface of the industrial virtual instrument in the real scene. This interface can display data interfaces such as pie charts, bar charts, line charts, and dashboards according to actual needs. The display content of the instrument interface can be customized according to the actual information of the factory equipment. Factory staff only need to be equipped with AR glasses or a mobile device to acquire factory information in real time through virtual instruments, thus realizing human-centered industrial data visualization.

Figure 13. Industrial virtual instrument AR display: (a) display pie chart; (b) display bar chart; (c) display line graph; (d) display dashboard.

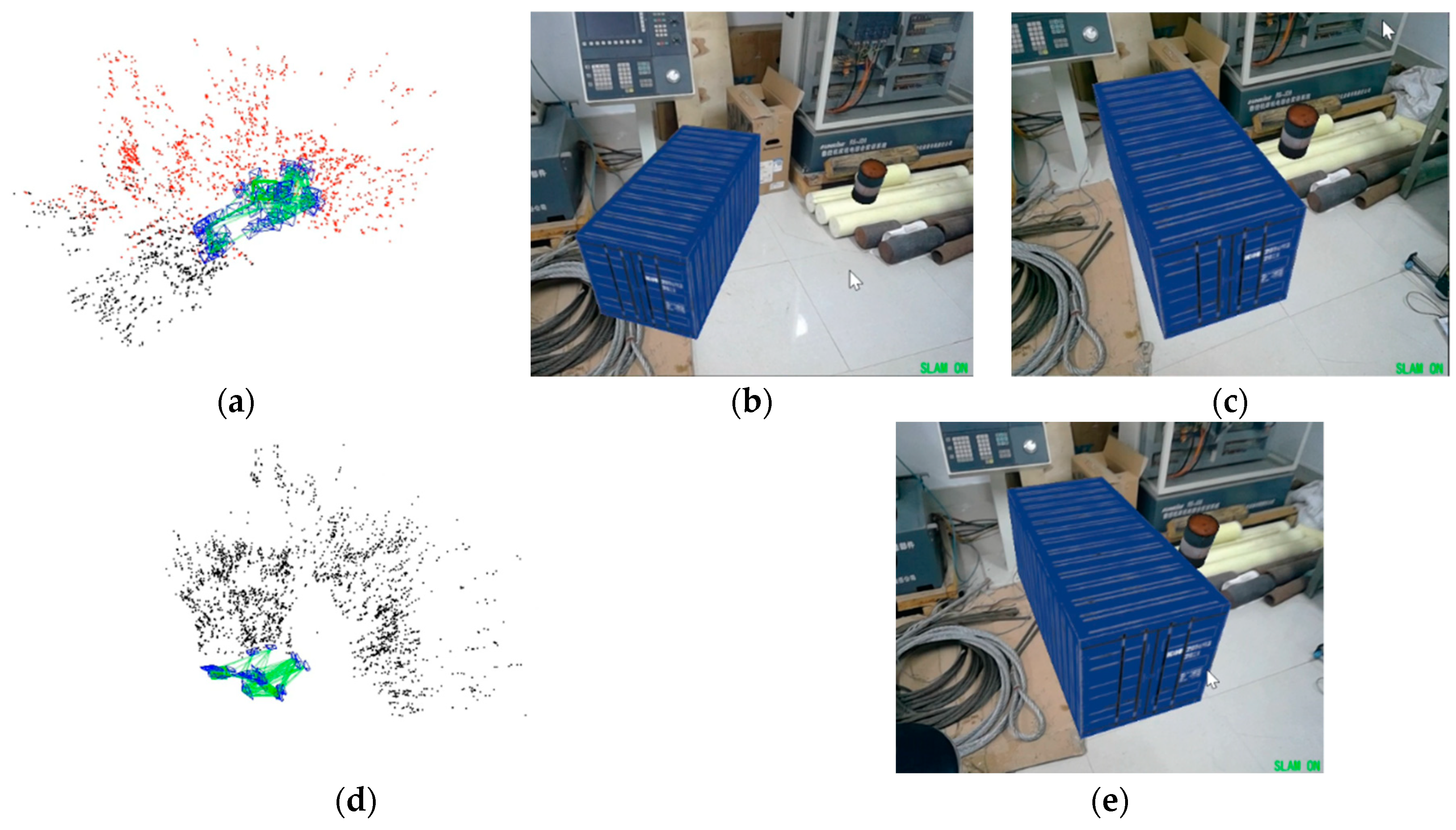

4.2. AR Registration Based on SLAM Map Reloading

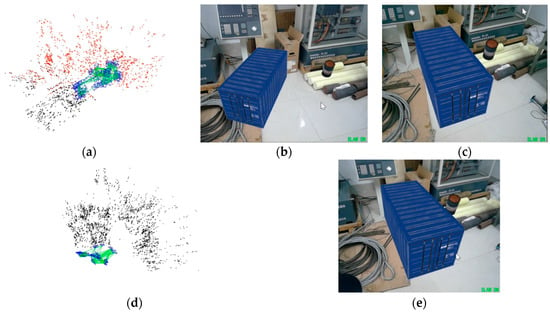

Figure 14 shows a schematic diagram of AR registration based on SLAM map reloading. In the AR configuration software, the user first scans the factory scene through the camera to build a local map. The working mode of the SLAM system is online positioning, and the running frame rate is about 22 Hz. As shown in Figure 14a, the points in the map are displayed in two colors, black and red, where the red color represents the map points observed by the camera in the current frame, and the black color represents the map points that cannot be observed in the current frame. As shown in Figure 14b,c, by recognizing the plane to place the 3D model of the factory equipment, the user can interactively control the rotation, translation, and zoom of the 3D model through the keyboard or mouse according to the actual need. After the mapping is completed, the SLAM map and 3D model parameter configuration are saved as an intermediate script file. In the script parsing and AR display software, the system reads the above intermediate script file to initialize the AR system. As shown in Figure 14d, the system reloads the constructed scene map. At this time, the camera does not observe any points, and all feature points on the map are shown in black. As shown in Figure 14e, the user scans the constructed scene again. The working mode of the SLAM system is offline positioning, and the system runs at a higher frame rate of about 26 Hz. The system relocates through feature matching while parsing the SLAM-AR display script to display the constructed 3D virtual model again.
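The save-and-reload cycle for the 3D model configuration can be illustrated with a small round trip. The actual QDARV script format is not reproduced here: the JSON layout, the field names, the file name `factory_map.bin`, and the restriction to a single rotation about the Z axis are all assumptions made to keep the sketch short.

```python
import json
import math


def model_matrix(translation, yaw_deg, scale):
    """Build a 4x4 model matrix T * Rz(yaw) * S with uniform scale (row-major)."""
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    tx, ty, tz = translation
    return [[scale * c, -scale * s, 0.0, tx],
            [scale * s, scale * c, 0.0, ty],
            [0.0, 0.0, scale, tz],
            [0.0, 0.0, 0.0, 1.0]]


# Configuration step: the user places and adjusts a model, then saves the script.
config = {
    "map_file": "factory_map.bin",  # hypothetical path of the saved SLAM map
    "models": [
        {"name": "lathe", "translation": [1.0, 0.5, 0.0], "yaw_deg": 90.0, "scale": 2.0}
    ],
}
script_text = json.dumps(config, indent=2)

# Display step: the script is parsed and the model matrix is rebuilt.
reloaded = json.loads(script_text)
for entry in reloaded["models"]:
    matrix = model_matrix(entry["translation"], entry["yaw_deg"], entry["scale"])
    print(entry["name"])
    for row in matrix:
        print([round(value, 3) for value in row])
```

Because the model parameters are stored relative to the map, the virtual model reappears at the same place once the camera has been relocated in the reloaded map.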

Figure 14. AR registration based on SLAM map reloading: (a) SLAM map in online positioning mode; (b) 3D model of initial placement; (c) 3D model after rotation, translation, and scaling; (d) SLAM map in offline positioning mode; (e) 3D model after loading the map.

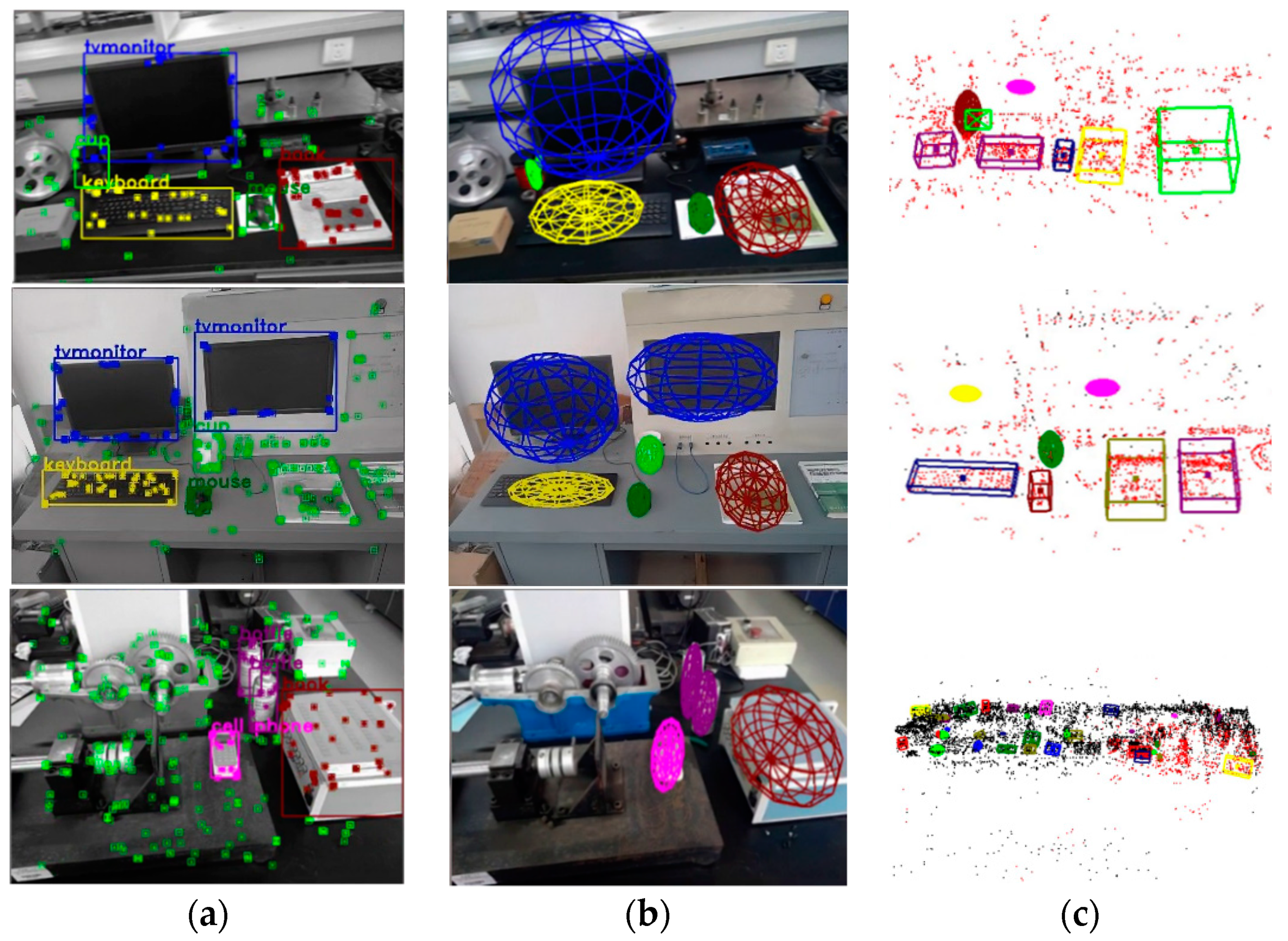

4.3. Object-Oriented Level SLAM System Test

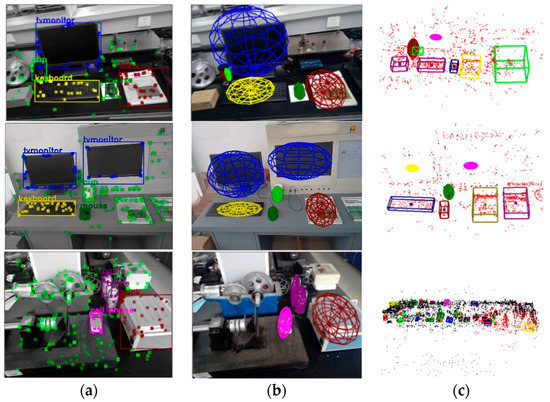

In this study, based on a robust object pose estimation algorithm, two lightweight ways of cubes and ellipsoids are used to express objects in the scene, and an object-oriented 3D semantic map is constructed. Compared with the sparse point cloud map constructed with feature points as geometric features in ORB_SLAM2, the object-oriented semantic map constructed in this study can better express the semantic information in the scene.

Figure 15 shows an example of the running results of the object-oriented SLAM system in a real scene. Specifically, Figure 15a shows the ORB feature points recognized in the image, the 2D bounding boxes of objects, and the semantic labels. The system displays the ORB feature points inside the 2D bounding boxes of different types of objects in different colors, while the feature points outside the bounding boxes of objects are displayed in green. Figure 15b shows the pose estimation of objects in the image. In the visualization interface, the system represents the object in the form of an ellipsoid. Figure 15c shows the object-oriented 3D semantic map constructed by the system, in which the semantic information of the objects is integrated into the ORB_SLAM2 sparse point cloud map. It can be seen that the poses of the objects in the scene are accurately estimated and that these objects are mapped into the 3D semantic map, which verifies the accuracy and robustness of the system.

Figure 15. Running results of the object-oriented SLAM system in a real scene: (a) extraction of ORB feature points and YOLOv5 object detection; (b) ellipsoid representation of objects; (c) object-oriented level semantic maps.

4.4. Comparison with Other AR Tools

The comparison between QDARV and other AR development tools is presented in Table 1. Among them, ARToolKit is an open-source AR Software Development Kit (SDK), while Spark AR, ARCore, and Vuforia are commercial-grade AR development tools, which are widely used in industrial production and other fields. Compared with the above AR development tools, the QDARV development tool developed in this paper provides disassembly and assembly animation configuration and virtual instrument configuration functions. Based on these functions, developers can quickly realize disassembly and assembly animation AR display and industrial instrument AR display in real scenes. Meanwhile, this paper expands ORB_SLAM2 with map saving and map loading functions, which can save and reload world data. This function is not supported in ARToolKit and Spark AR. Although ARCore and Vuforia support this function, their saved map contains only discrete point clouds of the scene, while QDARV saves both the map points and the keyframes in the binary map script. This keyframe information can help the system better match the real world with the map. Moreover, this paper establishes an object-oriented SLAM system based on ORB_SLAM2, which can track the pose of 3D objects in the scene in real time. This function is not supported by many AR development tools.

Table 1. Comparison with other AR development tools.

4.5. User Evaluation

User acceptance is critical for assessing the usability of the QDARV development tool. Therefore, a user evaluation was conducted in this study. The participants were 10 graduate students majoring in mechanical engineering. They were divided into two groups according to their research backgrounds: a researcher group and an operator group. The researchers have a certain programming foundation or knowledge of AR technology, while the operators are not familiar with programming or AR technology. This study adopted the heuristic evaluation [44,45] technique to evaluate the QDARV development tool, which uses user satisfaction surveys to analyze the usability of the system. Table 2 shows the evaluation questionnaire of the QDARV development tool, which was used to obtain the feedback of participants.

Table 2. Evaluation questionnaire of QDARV development tool.

The participants used the QDARV tool to perform AR registration based on QR code and AR registration based on SLAM map reloading, respectively. In order to verify the effectiveness and quality of the proposed development tools, qualitative and quantitative usability studies were conducted, and participants were asked to perform specific tasks as shown in Table 3 and Table 4.

Table 3. AR registration task based on QR code.

Table 4. AR registration task based on SLAM map reloading.

There were five possible answers: very dissatisfied (2 points), dissatisfied (4 points), ordinary system (6 points), satisfied (8 points), and very satisfied (10 points). According to these options, Table 5 shows the user evaluation results of the QDARV development tool. Overall, QDARV received positive feedback from participants. Participants noted that the functions designed in the toolkit are easy to understand and useful for beginners who do not know programming but want to develop AR applications, and that the entire development process is efficient and flexible. Participants also pointed out aspects that need improvement: the interface design could be clearer, and hints and fixes should be given when operation errors occur.

Table 5. The user evaluation results of the QDARV development tool.

5. Discussion and Future Work

Currently, augmented reality technology is widely used in industry. However, most existing AR development tools rely on time-consuming and expensive recompilation steps and lack versatility. This study proposes a quick development toolkit named QDARV for AR visualization of factories, which can help developers quickly develop AR applications for factory visualization to improve industrial production efficiency. The QDARV tool consists of the AR configuration software and the script parsing and AR display software. The AR configuration software generates intermediate script files through the 3D animation configuration module, the AR display configuration module based on QR code, and the AR display configuration module based on SLAM. The script parsing and AR display software reads and parses the intermediate script files to generate AR display content. On this basis, AR registration based on QR code and AR registration based on SLAM map reloading are realized. With the QDARV toolkit, developers do not need a deep understanding of AR technology or extensive programming experience. They only need to configure the augmented reality display content and the augmented reality registration parameters to generate an intermediate script file, which carries the data from the AR configuration software to the script parsing and AR display software. This helps to design industrial AR applications, customize the display of augmented reality content, and achieve a human-centered display.

Compared with other AR tools, the QDARV tool developed in this paper does not rely on time-consuming and costly recompilation steps, and therefore AR display content and AR applications can be created quickly and efficiently. The QDARV tool provides disassembly and assembly animation configuration and virtual instrument configuration functions. With these functions, developers can quickly realize disassembly and assembly animation AR display and industrial instrument AR display in real scenes. In addition, the AR registration method is improved based on ORB_SLAM2. In this improved version, the functions of map saving and map loading are added, which can save and reload world data. Meanwhile, a semantic thread is added in this improved version to track the pose of 3D objects in the scene and establish an object-oriented semantic map, thereby improving the robustness and tracking performance of the system.

Section 4 validates the effectiveness, feasibility, and advantages of the proposed tool through experiments and analysis. Nonetheless, the QDARV tool has some limitations that need to be addressed in future studies. This study only uses a monocular camera for pose estimation, so the tracking of the system can be easily lost when the AR device moves or rotates rapidly. By contrast, an IMU sensor can estimate fast motion over a short time and estimate the scale through its inertial measurements. The fusion of the monocular camera and an IMU sensor will be considered in future work to improve the robustness and localization performance of the system under fast motion. In addition, at present, the QDARV tool is only applicable to the Windows development platform and has not been integrated with other industrial software. In future work, we will continue to improve the functions of the QDARV tool to support cross-platform development and integration with industrial software (SCADA, databases, cloud platforms, etc.).

Author Contributions

Conceptualization, C.C., Y.P. and D.L.; Data curation, Z.Z.; Funding acquisition, C.C.; Methodology, C.C., R.L. and D.L.; Software, R.L., Y.P., Y.G. and Q.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant number 52175471.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Egger, J.; Masood, T. Augmented reality in support of intelligent manufacturing—A systematic literature review. Comput. Ind. Eng. 2020, 140, 106195. [Google Scholar] [CrossRef]

- Sharma, L.; Anand, S.; Sharma, N.; Routry, S.K. Visualization of Big Data with Augmented Reality. In Proceedings of the 2021 5th International Conference on Intelligent Computing and Control Systems (ICICCS), Madurai, India, 6–8 May 2021. [Google Scholar]

- May, K.W.; KC, C.; Ochoa, J.J.; Gu, N.; Walsh, J.; Smith, R.T.; Thomas, B.H. The Identification, Development, and Evaluation of BIM-ARDM: A BIM-Based AR Defect Management System for Construction Inspections. Buildings 2022, 12, 140. [Google Scholar] [CrossRef]

- Chen, C.J.; Hong, J.; Wang, S.F. Automated positioning of 3D virtual scene in AR-based assembly and disassembly guiding system. Int. J. Adv. Manuf. Technol. 2014, 76, 753–764. [Google Scholar] [CrossRef]

- Ma, W.; Zhang, S.; Huang, J. Mobile augmented reality based indoor map for improving geo-visualization. PeerJ Comput. Sci. 2021, 7, e704. [Google Scholar] [CrossRef] [PubMed]

- Schall, G.; Mendez, E.; Kruijff, E.; Veas, E.; Junghanns, S.; Reitinger, B.; Schmalstieg, D. Handheld Augmented Reality for underground infrastructure visualization. Pers. Ubiquitous Comput. 2008, 13, 281–291. [Google Scholar] [CrossRef]

- Hoang, K.C.; Chan, W.P.; Lay, S.; Cosgun, A.; Croft, E.A. ARviz: An Augmented Reality-Enabled Visualization Platform for ROS Applications. IEEE Robot. Autom. Mag. 2022, 29, 58–67. [Google Scholar] [CrossRef]

- Zheng, M.; Pan, X.; Bermeo, N.V.; Thomas, R.J.; Coyle, D.; O’Hare, G.M.P.; Campbell, A.G. STARE: Augmented Reality Data Visualization for Explainable Decision Support in Smart Environments. IEEE Access 2022, 10, 29543–29557. [Google Scholar] [CrossRef]

- Tu, X.; Autiosalo, J.; Jadid, A.; Tammi, K.; Klinker, G. A Mixed Reality Interface for a Digital Twin Based Crane. Appl. Sci. 2021, 11, 9480. [Google Scholar] [CrossRef]

- Mur-Artal, R.; Tardos, J.D. ORB-SLAM2: An Open-Source SLAM System for Monocular, Stereo, and RGB-D Cameras. IEEE Trans. Robot. 2017, 33, 1255–1262. [Google Scholar] [CrossRef] [Green Version]

- Azuma, R.T. A Survey of Augmented Reality. Presence Teleoperator Virtual Environ. 1997, 6, 355–385. [Google Scholar] [CrossRef]

- Caudell, T.P.; Mizell, D.W. Augmented reality: An application of heads-up display technology to manual manufacturing processes. In Proceedings of the Twenty-Fifth Hawaii International Conference on System Sciences, Kauai, HI, USA, 7–10 January 1992; pp. 659–669. [Google Scholar] [CrossRef]

- Hiran Gabriel, D.J.; Ramesh Babu, A. Design and Development of Augmented Reality Application for Manufacturing Industry. In Materials, Design, and Manufacturing for Sustainable Environment; Mohan, S., Shankar, S., Rajeshkumar, G., Eds.; Lecture Notes in Mechanical Engineering; Springer: Singapore, 2021; pp. 207–213. [Google Scholar] [CrossRef]

- Georgel, P.F. Is there a reality in industrial augmented reality? In Proceedings of the 10th IEEE International Symposium on Mixed and Augmented Reality (ISMAR 2011), Basel, Switzerland, 26–29 October 2011; pp. 201–210. [Google Scholar]

- Nee, A.Y.C.; Ong, S.K.; Chryssolouris, G.; Mourtzis, D. Augmented reality applications in design and manufacturing. CIRP Ann. 2012, 61, 657–679. [Google Scholar] [CrossRef]

- Baroroh, D.K.; Chu, C.-H.; Wang, L. Systematic literature review on augmented reality in smart manufacturing: Collaboration between human and computational intelligence. J. Manuf. Syst. 2020, 61, 696–711. [Google Scholar] [CrossRef]

- Jiang, S.; Nee, A. A novel facility layout planning and optimization methodology. CIRP Ann. 2013, 62, 483–486. [Google Scholar] [CrossRef]

- Wang, Z.; Bai, X.; Zhang, S.; Wang, Y.; Han, S.; Zhang, X.; Yan, Y.; Xiong, Z. User-oriented AR assembly guideline: A new classification method of assembly instruction for user cognition. Int. J. Adv. Manuf. Technol. 2020, 112, 41–59. [Google Scholar] [CrossRef]

- Drouot, M.; Le Bigot, N.; Bolloc’h, J.; Bricard, E.; de Bougrenet, J.-L.; Nourrit, V. The visual impact of augmented reality during an assembly task. Displays 2021, 66, 101987. [Google Scholar] [CrossRef]

- Zhang, Y.; Omrani, A.; Yadav, R.; Fjeld, M. Supporting Visualization Analysis in Industrial Process Tomography by Using Augmented Reality—A Case Study of an Industrial Microwave Drying System. Sensors 2021, 21, 6515. [Google Scholar] [CrossRef]

- Bun, P.; Grajewski, D.; Gorski, F. Using augmented reality devices for remote support in manufacturing: A case study and analysis. Adv. Prod. Eng. Manag. 2021, 16, 418–430. [Google Scholar] [CrossRef]

- Laviola, E.; Gattullo, M.; Manghisi, V.M.; Fiorentino, M.; Uva, A.E. Minimal AR: Visual asset optimization for the authoring of augmented reality work instructions in manufacturing. Int. J. Adv. Manuf. Technol. 2021, 119, 1769–1784. [Google Scholar] [CrossRef]

- Chen, C.; Tian, Z.; Li, D.; Pang, L.; Wang, T.; Hong, J. Projection-based augmented reality system for assembly guidance and monitoring. Assem. Autom. 2020, 41, 10–23. [Google Scholar] [CrossRef]

- Erkek, M.Y.; Erkek, S.; Jamei, E.; Seyedmahmoudian, M.; Stojcevski, A.; Horan, B. Augmented Reality Visualization of Modal Analysis Using the Finite Element Method. Appl. Sci. 2021, 11, 1310. [Google Scholar] [CrossRef]

- Zollmann, S.; Langlotz, T.; Grasset, R.; Lo, W.H.; Mori, S.; Regenbrecht, H. Visualization Techniques in Augmented Reality: A Taxonomy, Methods and Patterns. IEEE Trans. Vis. Comput. Graph. 2020, 27, 3808–3825. [Google Scholar] [CrossRef] [PubMed]

- Kato, H.; Billinghurst, M. Marker tracking and HMD calibration for a video-based augmented reality conferencing system. In Proceedings of the 2nd IEEE and ACM International Workshop on Augmented Reality (IWAR’99), San Francisco, CA, USA, 20–21 October 1999; pp. 85–94. [Google Scholar] [CrossRef] [Green Version]

- Wagner, D.; Schmalstieg, D. Artoolkitplus for pose tracking on mobile devices. In Proceedings of the 12th Computer Vision Winter Workshop (CVWW’07), St. Lambrecht, Austria, 6–8 February 2007; pp. 139–146. [Google Scholar]

- Looser, J. AR Magic Lenses: Addressing the Challenge of Focus and Context in Augmented Reality. Ph.D. Thesis, University of Canterbury, Christchurch, New Zealand, 2007. [Google Scholar]

- Burns, D.; Osfield, R. Open scene graph a: Introduction, b: Examples and applications. In Proceedings of the IEEE Virtual Reality Conference 2004 (VR 2004), Chicago, IL, USA, 27–31 March 2004; p. 265. [Google Scholar]

- Yin, X.; Fan, X.; Yang, X.; Qiu, S.; Zhang, Z. An Automatic Marker–Object Offset Calibration Method for Precise 3D Augmented Reality Registration in Industrial Applications. Appl. Sci. 2019, 9, 4464. [Google Scholar] [CrossRef] [Green Version]

- Motoyama, Y.; Iwamoto, K.; Tokunaga, H.; Toshimitsu, O. Measuring hand-pouring motion in casting process using augmented reality marker tracking. Int. J. Adv. Manuf. Technol. 2020, 106, 5333–5343. [Google Scholar] [CrossRef]

- Klein, G.; Murray, D. Parallel tracking and mapping for small AR workspaces. In Proceedings of the 6th IEEE and ACM International Symposium on Mixed and Augmented Reality, Nara, Japan, 13–16 November 2007; pp. 225–234.

- Liu, J.; Xie, Y.; Gu, S.; Chen, X. A SLAM-Based Mobile Augmented Reality Tracking Registration Algorithm. Int. J. Pattern Recognit. Artif. Intell. 2019, 34, 2054005.

- Yu, K.; Ahn, J.H.; Lee, J.; Kim, M.; Han, J. Collaborative SLAM and AR-guided navigation for floor layout inspection. Vis. Comput. 2020, 36, 2051–2063.

- Zhang, J.; Liu, J.; Chen, K.; Pan, Z.; Liu, R.; Wang, Y.; Yang, T.; Chen, S. Map Recovery and Fusion for Collaborative AR of Multiple Mobile Devices. IEEE Trans. Ind. Inform. 2020, 17, 2081–2089.

- Ultralytics. YOLOv5. Available online: https://github.com/ultralytics/yolov5 (accessed on 18 November 2021).

- Li, D.; Mei, H.; Shen, Y.; Su, S.; Zhang, W.; Wang, J.; Zu, M.; Chen, M. ECharts: A declarative framework for rapid construction of web-based visualization. Vis. Inform. 2018, 2, 136–146.

- Fischler, M.A.; Bolles, R.C. Random Sample Consensus: A Paradigm for Model Fitting with Applications to Image Analysis and Automated Cartography. Commun. ACM 1981, 24, 381–395.

- Kümmerle, R.; Grisetti, G.; Strasdat, H.; Konolige, K.; Burgard, W. G2o: A general framework for graph optimization. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 3607–3613.

- Lepetit, V.; Moreno-Noguer, F.; Fua, P. EPnP: An Accurate O(n) Solution to the PnP Problem. Int. J. Comput. Vis. 2009, 81, 155–166.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Lawrence Zitnick, C. Microsoft COCO: Common Objects in Context. In Computer Vision—ECCV 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer: Cham, Switzerland, 2014; Volume 8693.

- Nicholson, L.J.; Milford, M.J.; Sunderhauf, N. QuadricSLAM: Dual Quadrics From Object Detections as Landmarks in Object-Oriented SLAM. IEEE Robot. Autom. Lett. 2018, 4, 1–8.

- Yang, S.; Scherer, S. CubeSLAM: Monocular 3-D Object SLAM. IEEE Trans. Robot. 2019, 35, 925–938.

- Nielsen, J.; Molich, R. Heuristic Evaluation of User Interfaces. In Proceedings of the Conference on Human Factors in Computing Systems, Seattle, WA, USA, 1–5 April 1990; pp. 249–256.

- Lewis, J.R. IBM computer usability satisfaction questionnaires: Psychometric evaluation and instructions for use. Int. J. Hum.-Comput. Interact. 1995, 7, 57–78.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).