Abstract

An artificial neural network (ANN) model for predicting the compressive strength of concrete is established in this study. The Back Propagation (BP) network with one hidden layer is chosen as the structure of the ANN. The database of real concrete mix proportioning listed in earlier research by another author is used for training and testing the ANN. The proper number of neurons in the hidden layer is determined by checking for signs of over-fitting, while the synaptic weights and the thresholds are finalized by checking for signs of over-training. After that, we use experimental data from other papers to verify and validate our ANN model. The final synaptic weights and thresholds of the ANN are all listed. Therefore, with them, and using the formulae expressed in this article, anyone can predict the compressive strength of concrete from the mix proportioning on his/her own.

1. Introduction

Due to its high compressive strength and its capability of being cast into any shape and size, concrete is the most used construction material in the world. The compressive strength is related to the proportioning of its ingredients, such as water, cement, coarse aggregates, sand, and other admixtures. How to predict the compressive strength from the proportioning design is a very practical topic. For example, with a prediction model one can estimate the compressive strengths of dozens of mix designs and choose only those that achieve the required strength for further physical tests. This can reduce the number of trials needed for a specific compressive strength requirement and can save a lot of money and time. Furthermore, one can determine the least expensive choice among those that achieve the required compressive strength.

Conventionally, statistics are a useful tool for evaluating the results of strength tests. For instance, one can obtain a regression equation using data of water–cement ratio versus the compressive strengths [1]. However, there are not just one or two factors that affect compressive strength. The predicted results by just using the water–cement ratio could be very poor in many cases.

In recent decades, artificial neural networks (ANNs) have drawn more and more attention because of their capability of dealing with multivariable analysis. Several authors have used ANNs to determine the compressive strength of concrete [2,3,4,5,6,7,8,9,10,11,12,13,14,15,16]. An ANN is like a black box. After training the ANN, one can input several numerical values and the ANN will return another numerical value which represents the predicted compressive strength of the concrete. The components of the input can be factors such as the proportioning of water, cement, sand, etc. Recently, more and more new types of ANN structures, such as Support Vector Machines [17,18], Deep Learning Neural Networks [19,20,21,22,23], and Radial Basis Function Networks [24,25,26,27,28,29], have emerged in various research areas, and other algorithms such as tabu search and genetic algorithms [15,19,26,28,29,30] have been newly applied for training the ANN.

In [16], data of real concrete mix proportioning listed in [12] were used to establish a database. With that database, a back-propagation (BP) ANN with a single hidden layer was employed to establish prediction models for the strength and slump of concrete. Cylindrical test samples of 12 additional mix designs were also cast, and their compressive strengths were examined in the laboratory to verify the established ANN model. In this study, we use the same database for establishing our new ANN model. However, we think the data for establishing a concrete slump prediction model are insufficient because 39% of the slump records are missing from the database. Therefore, we focus only on establishing a prediction model for the compressive strength of concrete in this study.

Typically, the database has to be divided into two parts, one for training and the other for testing. This is for the sake of avoiding over-training and over-fitting. In [16], most of the data were used for training the ANN while only 20 pieces of data were used in the testing process. There are 482 pieces of data in the database, so the data for testing were about 4% of the whole. In our opinion, that is inadequate, though the results of verification were still acceptable. Consequently, we use fewer data for training and more data for testing so that over-fitting can be alleviated.

There are many choices for establishing the ANN model, but for comparison with [16] we still use the BP-ANN with a single hidden layer. The final synaptic weights and thresholds of the ANN are all listed in this paper. Therefore, anyone can use them together with the formulae expressed in the article to predict the concrete compressive strength from the mix proportioning on his/her own.

Our new ANN model outperforms the ANN model of [16] as we check the result of verification. Further validation is implemented by using the data of [15]. A good agreement is also found. This implies our ANN model really works for practical uses.

2. The Concrete Mix Proportioning

According to [16], the 28-day compressive strength is related to seven factors. They are the mass of water, cement, fine aggregate (or sand), coarse aggregate, blast furnace slag, fly ash, and superplasticizer mixed in 1 m³ of concrete. Therefore, the compressive strength of concrete can be expressed as a mathematical function with seven variables:

$$y = f(x_1, x_2, \ldots, x_7)$$

where $x_1$ to $x_7$ are the proportioning factors just mentioned while $y$ is the compressive strength of concrete. For the input and output of the ANN, all the data have to be normalized into the range of 0 to 1. A linear transformation is applied. The ranges of $x_1$ to $x_7$ and $y$ are listed in Table 1.

Table 1.

The ranges of the inputs and the output in the raw data [12].

Printing all the raw data would make this paper unnecessarily long. Those who need the digital file of the raw data of the database may contact the authors of [16]. The data can also be found in the tables of [12], which has been openly published and is downloadable from the NCTU library website (https://ir.nctu.edu.tw/handle/11536/71533 (accessed on 12 March 2021)). For this research, we downloaded [12] and copied the data from the tables in that thesis. The normalized data are listed in Appendix A. Together with the ranges listed in Table 1, they can be converted back to their original values.
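The linear transformation into the range of 0 to 1, and the way back to the original values, can be sketched as follows. This is a minimal illustration that assumes plain min-max scaling; the function names are hypothetical, the only range used is the compressive-strength range of 5.66 to 95.3 MPa quoted in Section 4, and the last value of each record in Appendix A is assumed to be the normalized strength.

```python
def normalize(value, lower, upper):
    """Map a raw value linearly from [lower, upper] onto [0, 1]."""
    return (value - lower) / (upper - lower)


def denormalize(scaled, lower, upper):
    """Invert normalize(): map a value in [0, 1] back to [lower, upper]."""
    return lower + scaled * (upper - lower)


# Range of the compressive strength in the database (MPa), as quoted in Section 4.
STRENGTH_MIN, STRENGTH_MAX = 5.66, 95.3

# Last value of record 1 in Appendix A (assumed to be the normalized strength).
strength_mpa = denormalize(0.506, STRENGTH_MIN, STRENGTH_MAX)
print(f"{strength_mpa:.2f} MPa")
```

The same two functions apply to each of the seven inputs once the corresponding range from Table 1 is supplied.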

3. The Artificial Neural Network

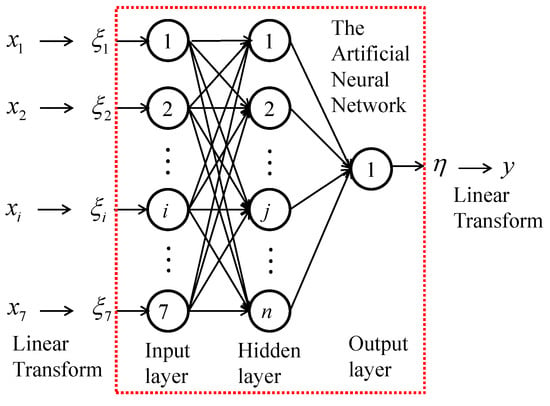

The artificial neural network used in this study is the Back Propagation (BP) network, which was proposed in [31]. Following [16], we also use a single-hidden-layer network. According to the database of real concrete mix proportioning, we have 7 neurons in the input layer and only 1 neuron in the output layer. The structure of the BP-ANN employed in this study is shown in Figure 1.

Figure 1.

The input-output relation for predicting the compressive strength of concrete and the structure of the ANN model.

The formula for the normalized input-output relation can be expressed mathematically:

in which;

where, in Equations (5) and (6), $\theta$ and $\theta_j$ are the so-called thresholds, $w_j$ and $w_{ij}$ are the synaptic weights, $\alpha$ is the shape parameter of the activating function, $i$ is the index of a neuron in the input layer, $j$ is the index of a neuron in the hidden layer, and $N$ is the total number of neurons in the hidden layer. The suitable number of neurons in the hidden layer is to be determined.
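The input-output relation of such a network can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' VBA program: the activating function is assumed to be the logistic function with a shape parameter, the same function is assumed for the output neuron, and the thresholds are assumed to be added to the weighted sums. All function and argument names are hypothetical.

```python
import math


def sigmoid(v, alpha=1.0):
    """Logistic activating function with shape parameter alpha (assumed form)."""
    return 1.0 / (1.0 + math.exp(-alpha * v))


def predict(x, w_hidden, theta_hidden, w_out, theta_out, alpha=1.0):
    """Forward pass of a 7-N-1 network on one normalized input vector.

    x            : list of 7 normalized inputs
    w_hidden[j]  : list of 7 weights feeding hidden neuron j
    theta_hidden : list of N hidden-neuron thresholds
    w_out        : list of N weights feeding the output neuron
    theta_out    : threshold of the output neuron
    Assumption: thresholds are added to the weighted sums.
    """
    hidden = [
        sigmoid(theta_j + sum(w * xi for w, xi in zip(w_j, x)), alpha)
        for w_j, theta_j in zip(w_hidden, theta_hidden)
    ]
    net = theta_out + sum(w * h for w, h in zip(w_out, hidden))
    return sigmoid(net, alpha)


# Toy check with all-zero weights and thresholds: the output is sigmoid(0) = 0.5.
x = [0.119, 0.442, 0.663, 0.544, 0.043, 0.579, 0.758]
n_hidden = 7
y = predict(x, [[0.0] * 7 for _ in range(n_hidden)], [0.0] * n_hidden,
            [0.0] * n_hidden, 0.0)
print(y)  # 0.5
```

With the thresholds and synaptic weights of a trained network in place of the zeros, the returned value would be the normalized compressive strength.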

From the database, records are randomly chosen for training the ANN. The error residual is defined as:

where $d_{\underline{n}}$ is the desired value, which represents the normalized compressive strength in the data, $y_{\underline{n}}$ is the output of the ANN, and $\underline{n}$ is the sequential number. We underline $n$ as a reminder that the samples have been re-numbered because we picked them randomly from the database. The training process is to find a set of suitable values for the thresholds and the synaptic weights. In the beginning, all the thresholds and the synaptic weights are set to small random numbers.

Now the error residual is a function of the thresholds and the synaptic weights. We can use the steepest descent method to update their current estimates and move them toward a better solution:

where $\eta$ is the step parameter of the correction. The basic (standard) backpropagation algorithm is employed for training. The detailed training procedure can be found in [32] (Section 3.3 of that book) or traced back to [31]. We coded the programs ourselves in VBA, which is embedded in Microsoft Excel®.

In this study, the value of $\alpha$ is chosen as 1. A constant step parameter is employed, and the value of $\eta$ is chosen as 0.02. It should be kept in mind that in Equations (5) and (6) the indices $i$ and $j$ both start from 1, but in Equations (8) and (9) they both start from 0. Random numbers in the range of −1 to 1 are chosen as the initial values of the thresholds and the synaptic weights. Accordingly, the results will not be exactly the same in each training run. Even so, the tendency of convergence will be very similar.
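A steepest-descent update of this kind can be sketched as below. This is an illustration under several assumptions that the text does not confirm: the error residual of one sample is taken as half the squared difference between the desired value and the output, the update is applied sample by sample, the activating function is logistic, and thresholds are added to the weighted sums. The names `init_network`, `forward`, and `train_step` are hypothetical; only the shape parameter of 1, the step parameter of 0.02, and the initial range of −1 to 1 come from the text.

```python
import math
import random


def sigmoid(v, alpha):
    return 1.0 / (1.0 + math.exp(-alpha * v))


def init_network(n_in, n_hidden, rng):
    """Thresholds and weights start as random numbers in [-1, 1]."""
    return {
        "w_hidden": [[rng.uniform(-1.0, 1.0) for _ in range(n_in)]
                     for _ in range(n_hidden)],
        "theta_hidden": [rng.uniform(-1.0, 1.0) for _ in range(n_hidden)],
        "w_out": [rng.uniform(-1.0, 1.0) for _ in range(n_hidden)],
        "theta_out": rng.uniform(-1.0, 1.0),
    }


def forward(net, x, alpha):
    """Return the hidden-layer outputs and the network output."""
    hidden = [sigmoid(t + sum(w * xi for w, xi in zip(ws, x)), alpha)
              for ws, t in zip(net["w_hidden"], net["theta_hidden"])]
    net_in = net["theta_out"] + sum(w * h for w, h in zip(net["w_out"], hidden))
    return hidden, sigmoid(net_in, alpha)


def train_step(net, x, d, alpha=1.0, eta=0.02):
    """One steepest-descent update on E = 0.5 * (d - y)**2 for one sample."""
    hidden, y = forward(net, x, alpha)
    # Derivative of E with respect to the net input of the output neuron.
    delta_out = (y - d) * alpha * y * (1.0 - y)
    for j, h in enumerate(hidden):
        # Back-propagated derivative for hidden neuron j (uses the old w_out[j]).
        delta_j = delta_out * net["w_out"][j] * alpha * h * (1.0 - h)
        net["w_out"][j] -= eta * delta_out * h
        for i, xi in enumerate(x):
            net["w_hidden"][j][i] -= eta * delta_j * xi
        net["theta_hidden"][j] -= eta * delta_j
    net["theta_out"] -= eta * delta_out
    return 0.5 * (d - y) ** 2  # error residual before the update


rng = random.Random(0)
net = init_network(7, 7, rng)
sample_x = [0.119, 0.442, 0.663, 0.544, 0.043, 0.579, 0.758]
errors = [train_step(net, sample_x, 0.506) for _ in range(2000)]
print(errors[0], errors[-1])
```

Repeating the step on a single sample, as in the usage lines, only shows that the error residual shrinks; an actual training run would loop over all training samples in every iteration.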

4. The Results

There are 482 records in the database. We separate the database into two sets, the training set and the testing set. The training set includes 85% of the data. Therefore, the training set comprises 410 samples and the testing set comprises 72 samples. Samples included in the testing set are marked in Appendix A.
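The arithmetic of the split can be checked with a short sketch. The function name, the seed, and the rounding rule are assumptions made for illustration; the samples actually used for testing are those marked in Appendix A, which a random draw like this does not reproduce.

```python
import random


def split_database(n_records, train_fraction, seed=0):
    """Randomly split record numbers 1..n_records into training and testing sets."""
    indices = list(range(1, n_records + 1))
    random.Random(seed).shuffle(indices)
    n_train = round(train_fraction * n_records)
    return sorted(indices[:n_train]), sorted(indices[n_train:])


train_ids, test_ids = split_database(482, 0.85)
print(len(train_ids), len(test_ids))  # 410 72
```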

Artificial neural networks with 3–12 neurons in the hidden layer are tested. More neurons in the hidden layer make the ANN more complex, so it can memorize more detailed features. However, this also makes the ANN more prone to over-fitting. Furthermore, too many training iterations will also make the ANN memorize too many detailed features and perform poorly in practical use. One iteration means that all the synaptic weights and the thresholds are updated according to the error residual in the training set.

To help us detect whether over-fitting or over-training happens, the root-mean-square errors of both the training set and the testing set are calculated after each iteration. The values of the thresholds and the synaptic weights are also temporarily saved so that we can return to them once we have selected a suitable ANN structure and determined the proper number of iterations. The root-mean-square error is defined as:

where the overbar represents the average in the data set. Note that the numbers of samples in the two data sets are different.
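A root-mean-square error of this kind can be computed as in the sketch below. It assumes that the error is taken between the desired normalized value and the ANN output and that the average is taken separately within each data set; the function name and the sample numbers are illustrative only.

```python
import math


def rmse(desired, outputs):
    """Square root of the average squared difference within one data set."""
    if len(desired) != len(outputs):
        raise ValueError("desired and outputs must have the same length")
    squared = [(d - y) ** 2 for d, y in zip(desired, outputs)]
    return math.sqrt(sum(squared) / len(squared))


# Illustrative numbers only, not results from the study.
print(rmse([0.5, 0.6, 0.7], [0.4, 0.6, 0.9]))
```

Because the average divides by the number of samples in the set, values for the 410-sample training set and the 72-sample testing set remain directly comparable.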

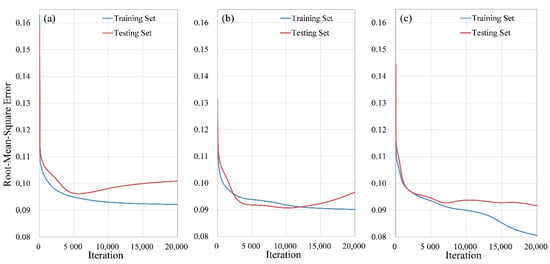

Among all the training results, we chose the results of 3, 7, and 12 neurons in the hidden layer to demonstrate the features of over-fitting and over-training. They are shown in Figure 2. It can be observed that the root-mean-square error of the training set decreases as more iterations are processed, but the same does not happen to the testing set. This demonstrates the effect of over-training. One can also find that setting more neurons in the ANN helps reduce the root-mean-square error of the training set. However, the root-mean-square error of the testing set does not follow the same trend as more neurons are added to the hidden layer. This indicates that setting more neurons in the hidden layer tends to cause over-fitting. With the results shown in Figure 2, we suggest that 7 neurons in the hidden layer are sufficient for the ANN in this study. It is worth noting that the ANN in [16] has 14 neurons in the hidden layer.

Figure 2.

The result of the training: (a) 3 neurons in the hidden layer, (b) 7 neurons in the hidden layer, (c) 12 neurons in the hidden layer.

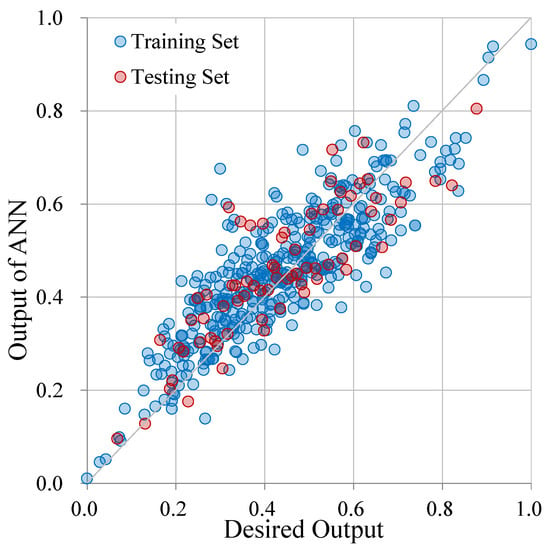

The thresholds and the synaptic weights of the 7-hidden-neuron ANN after 12,040 training iterations are used for further investigations. This state is chosen because the root-mean-square errors of the training set and the testing set are nearly equal at that point. The synaptic weights from the input layer to the hidden layer and the thresholds of the hidden neurons are listed in Table 2. The synaptic weights from the hidden layer to the output neuron and the threshold of the output neuron are shown in Table 3. With these thresholds and synaptic weights, the predicted outputs are calculated and compared with their desired values. The comparison is shown in Figure 3. It can be seen that the results of the two data sets have similar scatter. That is because the root-mean-square errors of the two sets are very close. It is also worth noting that in [16] the result of such a comparison lies very close to the central inclined line, which represents predicted values that exactly match their targets.
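One possible way to automate the choice of the number of iterations is sketched below. It assumes that the two root-mean-square errors were recorded during training and simply picks the record where they are closest; the text does not state that the choice was made programmatically, the function name is hypothetical, and the numbers are illustrative rather than results from the study.

```python
def pick_iteration(history):
    """Return the record whose training and testing RMSE are closest.

    history: list of (iteration, rmse_train, rmse_test) tuples.
    """
    return min(history, key=lambda rec: abs(rec[1] - rec[2]))


# Illustrative numbers only, not results from the study.
history = [
    (1000, 0.120, 0.110),
    (5000, 0.095, 0.093),
    (20000, 0.070, 0.098),
]
best = pick_iteration(history)
print(best[0])  # 5000
```

A criterion like this is only meaningful once both errors have already dropped to an acceptable level, since the two curves can also be close to each other early in training.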

Table 2.

The thresholds of the hidden neurons and the synaptic weights from the input layer to the hidden layer.

Table 3.

The threshold of the output neuron and the synaptic weights from the hidden layer to the output neuron.

Figure 3.

The comparison of the outputs of the ANN with their target values, in which the ANN has seven neurons in the hidden layer and is trained for 12,040 iterations.

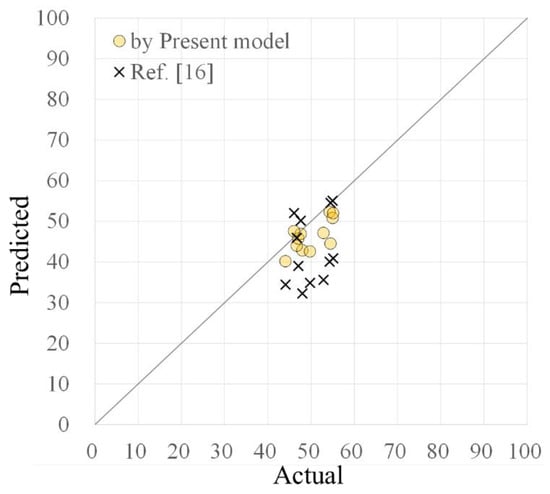

After the number of neurons in the hidden layer and all the thresholds and synaptic weights are determined, we use our ANN to predict the compressive strengths of the 12 mix designs in [16] and compare the results with the data observed in their laboratory. The results are listed in Table 4. The predicted compressive strengths in [16] are also listed in the same table. The comparisons of the predicted and the actual compressive strengths are plotted in Figure 4. Note that the range shown in this figure is from 0 to 100 because the range of the compressive strength in the database is from 5.66 to 95.3 MPa. It is found in Figure 4 that the circular dots cluster more closely around the central inclined line. This implies that our ANN model outperforms the ANN model of [16] in the verification.

Table 4.

The predicted and actual compressive strengths of the experimental concrete mixtures in [16].

Figure 4.

Comparison of predicted compressive strength with the actual strength of the 12 experimental concrete mixtures in [16] (Unit: MPa).

Now the results are no longer in the range of 0 to 1. We use the coefficient of efficiency (CE) to evaluate performance:

in which

where the quantities involved are the actual compressive strength, the predicted compressive strength, and the relative root-mean-square error (RRMSE). The CE of the predicted results in [16] is 0.955. The high CE means the predicted results in [16] are quite acceptable indeed. However, the CE of the results predicted by the present model is 0.991. The improvement is significant, for the relative root-mean-square error is reduced from 21.2% to 9.49%.
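The reported values of the coefficient of efficiency and of the relative root-mean-square error can be cross-checked with a short calculation. The relation used below, one minus the square of the relative root-mean-square error, is an assumption inferred from the reported number pairs rather than a formula quoted from the text, and the function name is hypothetical.

```python
def coefficient_of_efficiency(rrmse):
    """Assumed relation CE = 1 - RRMSE**2, with RRMSE as a fraction (0.212 for 21.2%)."""
    return 1.0 - rrmse ** 2


# (RRMSE, CE) pairs reported for the 12 mix designs of [16].
reported = {
    "model of [16]": (0.212, 0.955),
    "present model": (0.0949, 0.991),
}
for label, (rrmse, ce) in reported.items():
    print(label, round(coefficient_of_efficiency(rrmse), 3), ce)
```

Under this assumed relation, both reported pairs agree to three decimal places.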

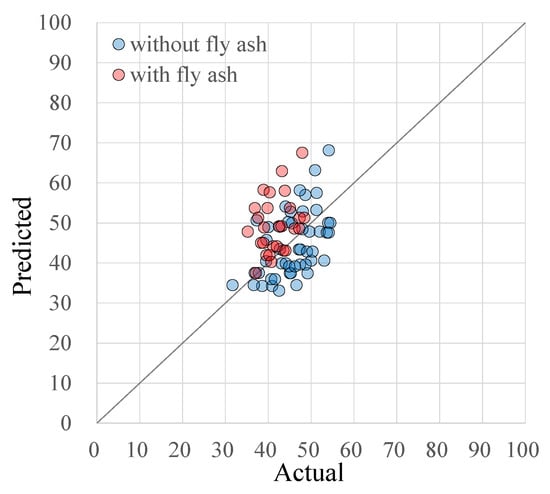

In [15], the artificial neural network and genetic programming were employed to predict the 28-day, 56-day, and 91-day compressive strengths of concrete with or without fly ash. The proportions of 76 concrete mixes were listed as well as their compressive strengths. Forty-nine of them contain no fly ash while the other 27 contain fly ash. Blast furnace slag and superplasticizer are not used in any of them. These data are good for further validation of our prediction model. The predicted results of [15] were not listed in their paper, so a further comparison is unavailable.

With our model, the predicted compressive strengths of concrete are calculated and listed in Table 5. The comparisons of the predicted and the actual compressive strengths are plotted in Figure 5. In this figure, it can be seen that the predicted compressive strengths of the mixes without fly ash are rather close to the actual values. The CE is 0.975 and the relative root-mean-square error is 15.8%. The mixes with fly ash are all slightly overpredicted. The CE is 0.940 and the relative root-mean-square error is 24.5%. The high CE in both sets indicates that our prediction model works at a good level. The predictions for the mixes without fly ash are more credible. We could expand the database for further improvement in the future.

Table 5.

The predicted and actual compressive strengths of the experimental concrete mixtures in [15].

Figure 5.

Comparison of predicted compressive strength with the actual strength of the 76 experimental concrete mixtures in [15] (Unit: MPa).

5. Conclusions

In this study, the database of real concrete mix proportioning listed in [12] is used to establish an ANN prediction model for the concrete compressive strength. The ANN structure chosen in this study is the Back Propagation network with 1 hidden layer. The same database was also used in [16], and the number of hidden layers in the ANN is also the same. The database is divided into two sets, the training set and the testing set. In comparison with [16], we use fewer data for training and more data for testing. The testing set comprises 15% of the data while in [16] it was just 4%. Considering the possible effect of over-fitting, the number of neurons in the hidden layer is finally chosen as 7, which is half the number used in [16]. The present ANN model outperforms the model in [16] in the verification, in which we compare the predicted results with the experimental data. To corroborate that our model performs well in further practical use, we use experimental data from [15] for validation. A good agreement has also been found.

The synaptic weights and the thresholds are all listed in this article. With these numbers and using Equations (1)–(6), anyone can predict the compressive strength of concrete according to the concrete mix proportioning on his/her own.

Author Contributions

Conceptualization, N.-J.W.; methodology, N.-J.W.; coding, N.-J.W.; investigation, C.-J.L.; data curation, C.-J.L.; formal analysis, C.-J.L. and N.-J.W.; writing—original draft preparation, C.-J.L.; writing—review and editing, N.-J.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki, and approved by the Institutional Review Board.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

All the data are available in the tables and can be traced back to references cited in this paper.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Table A1.

The Normalized Data for Training and Testing the ANN. Marked with * at the Serial Numbers Are Those for Testing.

| S.N. | $x_1$ | $x_2$ | $x_3$ | $x_4$ | $x_5$ | $x_6$ | $x_7$ | $y$ | S.N. | $x_1$ | $x_2$ | $x_3$ | $x_4$ | $x_5$ | $x_6$ | $x_7$ | $y$ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 * | 0.119 | 0.442 | 0.663 | 0.544 | 0.043 | 0.579 | 0.758 | 0.506 | 242 | 0.473 | 0.651 | 0.625 | 0.481 | 0.000 | 0.315 | 0.320 | 0.589 |
| 2 | 0.285 | 0.577 | 0.624 | 0.481 | 0.053 | 0.545 | 0.607 | 0.550 | 243 | 0.473 | 0.651 | 0.649 | 0.465 | 0.277 | 0.000 | 0.276 | 0.637 |
| 3 | 0.458 | 0.710 | 0.584 | 0.418 | 0.063 | 0.512 | 0.431 | 0.506 | 244 | 0.473 | 0.453 | 0.665 | 0.427 | 0.555 | 0.000 | 0.250 | 0.671 |
| 4 * | 0.177 | 0.413 | 0.663 | 0.544 | 0.041 | 0.579 | 0.674 | 0.439 | 245 | 0.355 | 0.602 | 0.726 | 0.469 | 0.000 | 0.295 | 0.180 | 0.394 |
| 5 | 0.343 | 0.545 | 0.624 | 0.481 | 0.050 | 0.545 | 0.519 | 0.517 | 246 | 0.528 | 0.508 | 0.725 | 0.468 | 0.000 | 0.258 | 0.077 | 0.305 |
| 6 | 0.523 | 0.674 | 0.584 | 0.418 | 0.060 | 0.512 | 0.346 | 0.528 | 247 | 0.662 | 0.436 | 0.725 | 0.468 | 0.000 | 0.229 | 0.000 | 0.252 |
| 7 | 0.256 | 0.253 | 0.683 | 0.576 | 0.029 | 0.594 | 0.501 | 0.249 | 248 | 0.757 | 0.378 | 0.725 | 0.468 | 0.000 | 0.206 | 0.000 | 0.156 |
| 8 | 0.350 | 0.312 | 0.663 | 0.544 | 0.033 | 0.579 | 0.431 | 0.305 | 249 | 0.415 | 0.427 | 0.725 | 0.468 | 0.530 | 0.000 | 0.000 | 0.521 |
| 9 * | 0.538 | 0.432 | 0.624 | 0.481 | 0.042 | 0.545 | 0.276 | 0.327 | 250 | 0.568 | 0.355 | 0.725 | 0.468 | 0.463 | 0.000 | 0.000 | 0.451 |
| 10 | 0.726 | 0.550 | 0.584 | 0.418 | 0.051 | 0.512 | 0.107 | 0.361 | 251 | 0.687 | 0.299 | 0.725 | 0.468 | 0.410 | 0.000 | 0.000 | 0.312 |
| 11 | 0.158 | 0.423 | 0.679 | 0.543 | 0.042 | 0.512 | 0.568 | 0.583 | 252 * | 0.781 | 0.254 | 0.725 | 0.468 | 0.369 | 0.000 | 0.000 | 0.217 |
| 12 | 0.334 | 0.551 | 0.639 | 0.481 | 0.051 | 0.483 | 0.394 | 0.677 | 253 | 0.365 | 0.517 | 0.726 | 0.469 | 0.276 | 0.135 | 0.206 | 0.461 |
| 13 | 0.476 | 0.679 | 0.600 | 0.419 | 0.060 | 0.454 | 0.392 | 0.681 | 254 * | 0.540 | 0.433 | 0.725 | 0.468 | 0.241 | 0.118 | 0.088 | 0.360 |
| 14 | 0.158 | 0.423 | 0.679 | 0.543 | 0.042 | 0.512 | 0.568 | 0.580 | 255 | 0.676 | 0.368 | 0.725 | 0.468 | 0.214 | 0.104 | 0.000 | 0.279 |
| 15 | 0.334 | 0.551 | 0.639 | 0.481 | 0.051 | 0.483 | 0.394 | 0.619 | 256 | 0.770 | 0.317 | 0.725 | 0.468 | 0.192 | 0.094 | 0.000 | 0.204 |
| 16 * | 0.476 | 0.679 | 0.600 | 0.419 | 0.060 | 0.454 | 0.392 | 0.640 | 257 | 0.869 | 0.979 | 0.446 | 0.377 | 0.000 | 0.267 | 0.000 | 0.606 |
| 17 | 0.158 | 0.423 | 0.679 | 0.543 | 0.042 | 0.512 | 0.568 | 0.317 | 258 * | 0.826 | 0.790 | 0.446 | 0.377 | 0.000 | 0.518 | 0.000 | 0.651 |
| 18 | 0.334 | 0.551 | 0.639 | 0.481 | 0.051 | 0.483 | 0.394 | 0.448 | 259 | 0.809 | 0.717 | 0.446 | 0.377 | 0.000 | 0.669 | 0.000 | 0.584 |
| 19 | 0.476 | 0.679 | 0.600 | 0.419 | 0.060 | 0.454 | 0.392 | 0.501 | 260 | 0.874 | 0.733 | 0.550 | 0.377 | 0.000 | 0.209 | 0.000 | 0.472 |
| 20 | 0.285 | 0.623 | 0.808 | 0.229 | 0.056 | 0.439 | 0.504 | 0.638 | 261 | 0.837 | 0.588 | 0.550 | 0.377 | 0.000 | 0.406 | 0.000 | 0.495 |
| 21 | 0.213 | 0.554 | 0.834 | 0.254 | 0.051 | 0.455 | 0.498 | 0.615 | 262 | 0.822 | 0.532 | 0.550 | 0.377 | 0.000 | 0.482 | 0.000 | 0.405 |
| 22 | 0.047 | 0.484 | 0.861 | 0.278 | 0.045 | 0.467 | 1.000 | 0.573 | 263 | 0.879 | 0.576 | 0.616 | 0.377 | 0.000 | 0.174 | 0.000 | 0.417 |
| 23 | 0.329 | 0.349 | 0.865 | 0.284 | 0.037 | 0.470 | 0.283 | 0.441 | 264 * | 0.845 | 0.458 | 0.616 | 0.377 | 0.000 | 0.334 | 0.000 | 0.394 |
| 24 | 0.264 | 0.295 | 0.889 | 0.305 | 0.032 | 0.482 | 0.267 | 0.395 | 265 | 0.832 | 0.413 | 0.616 | 0.377 | 0.000 | 0.396 | 0.000 | 0.327 |
| 25 | 0.199 | 0.242 | 0.912 | 0.327 | 0.029 | 0.494 | 0.235 | 0.299 | 266 * | 0.882 | 0.467 | 0.665 | 0.377 | 0.000 | 0.145 | 0.000 | 0.294 |
| 26 | 0.422 | 0.225 | 0.880 | 0.296 | 0.419 | 0.030 | 0.195 | 0.185 | 267 | 0.852 | 0.368 | 0.665 | 0.377 | 0.000 | 0.289 | 0.000 | 0.283 |
| 27 | 0.350 | 0.183 | 0.900 | 0.316 | 0.429 | 0.027 | 0.189 | 0.172 | 268 | 0.840 | 0.330 | 0.665 | 0.377 | 0.000 | 0.337 | 0.000 | 0.216 |
| 28 | 0.278 | 0.139 | 0.922 | 0.335 | 0.440 | 0.024 | 0.171 | 0.174 | 269 | 0.890 | 0.825 | 0.446 | 0.377 | 0.473 | 0.000 | 0.000 | 0.684 |
| 29 | 0.523 | 0.556 | 0.594 | 0.505 | 0.141 | 0.318 | 0.287 | 0.656 | 270 | 0.884 | 0.756 | 0.446 | 0.377 | 0.565 | 0.000 | 0.000 | 0.673 |
| 30 * | 0.523 | 0.488 | 0.631 | 0.505 | 0.125 | 0.285 | 0.312 | 0.544 | 271 | 0.880 | 0.696 | 0.446 | 0.377 | 0.645 | 0.000 | 0.000 | 0.562 |
| 31 * | 0.560 | 0.446 | 0.675 | 0.457 | 0.117 | 0.264 | 0.259 | 0.489 | 272 | 0.892 | 0.612 | 0.550 | 0.377 | 0.369 | 0.000 | 0.000 | 0.584 |
| 32 | 0.410 | 0.288 | 0.689 | 0.430 | 0.360 | 0.273 | 0.215 | 0.609 | 273 | 0.888 | 0.558 | 0.550 | 0.377 | 0.441 | 0.000 | 0.000 | 0.573 |
| 33 | 0.410 | 0.416 | 0.689 | 0.465 | 0.360 | 0.068 | 0.215 | 0.590 | 274 | 0.884 | 0.512 | 0.550 | 0.377 | 0.503 | 0.000 | 0.000 | 0.517 |
| 34 | 0.410 | 0.159 | 0.689 | 0.394 | 0.360 | 0.477 | 0.215 | 0.575 | 275 * | 0.895 | 0.476 | 0.615 | 0.377 | 0.302 | 0.000 | 0.000 | 0.472 |
| 35 * | 0.410 | 0.459 | 0.689 | 0.438 | 0.120 | 0.273 | 0.215 | 0.605 | 276 | 0.891 | 0.432 | 0.615 | 0.377 | 0.361 | 0.000 | 0.000 | 0.506 |
| 36 | 0.410 | 0.116 | 0.689 | 0.421 | 0.600 | 0.273 | 0.215 | 0.471 | 277 | 0.888 | 0.394 | 0.615 | 0.377 | 0.412 | 0.000 | 0.000 | 0.450 |
| 37 | 0.329 | 0.288 | 0.689 | 0.467 | 0.360 | 0.273 | 0.215 | 0.739 | 278 | 0.897 | 0.382 | 0.661 | 0.377 | 0.256 | 0.000 | 0.000 | 0.361 |
| 38 | 0.491 | 0.288 | 0.689 | 0.392 | 0.360 | 0.273 | 0.215 | 0.490 | 279 | 0.893 | 0.345 | 0.661 | 0.377 | 0.306 | 0.000 | 0.000 | 0.394 |
| 39 | 0.410 | 0.288 | 0.689 | 0.430 | 0.360 | 0.273 | 0.182 | 0.685 | 280 | 0.890 | 0.313 | 0.661 | 0.377 | 0.349 | 0.000 | 0.000 | 0.294 |
| 40 | 0.410 | 0.288 | 0.689 | 0.430 | 0.360 | 0.273 | 0.248 | 0.502 | 281 | 0.473 | 0.484 | 0.652 | 0.661 | 0.000 | 0.112 | 0.000 | 0.316 |
| 41 | 0.372 | 0.240 | 0.721 | 0.456 | 0.320 | 0.242 | 0.177 | 0.542 | 282 | 0.473 | 0.413 | 0.647 | 0.652 | 0.000 | 0.224 | 0.000 | 0.301 |
| 42 | 0.372 | 0.354 | 0.721 | 0.488 | 0.320 | 0.061 | 0.177 | 0.497 | 283 | 0.473 | 0.347 | 0.643 | 0.643 | 0.000 | 0.333 | 0.000 | 0.287 |
| 43 | 0.372 | 0.126 | 0.721 | 0.424 | 0.320 | 0.424 | 0.177 | 0.483 | 284 | 0.473 | 0.276 | 0.638 | 0.634 | 0.000 | 0.442 | 0.000 | 0.219 |
| 44 | 0.372 | 0.392 | 0.721 | 0.463 | 0.107 | 0.242 | 0.177 | 0.533 | 285 | 0.675 | 0.789 | 0.381 | 0.729 | 0.325 | 0.000 | 0.114 | 0.515 |
| 45 | 0.372 | 0.088 | 0.721 | 0.449 | 0.533 | 0.242 | 0.177 | 0.483 | 286 * | 0.675 | 0.556 | 0.374 | 0.729 | 0.651 | 0.000 | 0.114 | 0.532 |
| 46 | 0.285 | 0.240 | 0.721 | 0.496 | 0.320 | 0.242 | 0.177 | 0.639 | 287 | 0.675 | 0.324 | 0.367 | 0.729 | 0.976 | 0.000 | 0.114 | 0.497 |
| 47 | 0.458 | 0.240 | 0.721 | 0.416 | 0.320 | 0.242 | 0.177 | 0.437 | 288 | 0.690 | 0.581 | 0.472 | 0.729 | 0.253 | 0.000 | 0.044 | 0.403 |
| 48 | 0.372 | 0.240 | 0.721 | 0.456 | 0.320 | 0.242 | 0.147 | 0.549 | 289 * | 0.690 | 0.402 | 0.466 | 0.729 | 0.507 | 0.000 | 0.044 | 0.418 |
| 49 * | 0.372 | 0.240 | 0.721 | 0.456 | 0.320 | 0.242 | 0.206 | 0.514 | 290 * | 0.690 | 0.221 | 0.460 | 0.729 | 0.760 | 0.000 | 0.044 | 0.390 |
| 50 | 0.432 | 0.216 | 0.721 | 0.458 | 0.300 | 0.227 | 0.152 | 0.354 | 291 | 0.697 | 0.450 | 0.530 | 0.729 | 0.208 | 0.000 | 0.015 | 0.315 |
| 51 | 0.432 | 0.323 | 0.721 | 0.489 | 0.300 | 0.057 | 0.152 | 0.476 | 292 * | 0.697 | 0.301 | 0.525 | 0.729 | 0.413 | 0.000 | 0.015 | 0.307 |
| 52 | 0.432 | 0.109 | 0.721 | 0.429 | 0.300 | 0.398 | 0.152 | 0.269 | 293 | 0.697 | 0.154 | 0.520 | 0.729 | 0.621 | 0.000 | 0.015 | 0.284 |
| 53 | 0.432 | 0.359 | 0.721 | 0.466 | 0.100 | 0.227 | 0.152 | 0.358 | 294 * | 0.314 | 0.594 | 0.661 | 0.475 | 0.133 | 0.352 | 0.221 | 0.785 |
| 54 | 0.432 | 0.073 | 0.721 | 0.452 | 0.500 | 0.227 | 0.152 | 0.232 | 295 | 0.314 | 0.486 | 0.723 | 0.475 | 0.112 | 0.300 | 0.184 | 0.662 |
| 55 | 0.350 | 0.216 | 0.721 | 0.496 | 0.300 | 0.227 | 0.152 | 0.429 | 296 | 0.314 | 0.406 | 0.768 | 0.475 | 0.099 | 0.261 | 0.147 | 0.606 |
| 56 | 0.513 | 0.216 | 0.721 | 0.420 | 0.300 | 0.227 | 0.152 | 0.289 | 297 | 0.314 | 0.345 | 0.804 | 0.475 | 0.088 | 0.230 | 0.147 | 0.573 |
| 57 | 0.432 | 0.216 | 0.721 | 0.458 | 0.300 | 0.227 | 0.124 | 0.459 | 298 | 0.314 | 0.594 | 0.556 | 0.647 | 0.133 | 0.352 | 0.221 | 0.796 |
| 58 | 0.432 | 0.216 | 0.721 | 0.458 | 0.300 | 0.227 | 0.180 | 0.402 | 299 | 0.314 | 0.486 | 0.617 | 0.647 | 0.112 | 0.300 | 0.184 | 0.707 |
| 59 * | 0.300 | 0.526 | 0.625 | 0.577 | 0.213 | 0.212 | 0.059 | 0.549 | 300 | 0.314 | 0.406 | 0.662 | 0.647 | 0.099 | 0.261 | 0.147 | 0.640 |
| 60 | 0.477 | 0.270 | 0.745 | 0.541 | 0.025 | 0.255 | 0.103 | 0.263 | 301 | 0.314 | 0.345 | 0.698 | 0.647 | 0.088 | 0.230 | 0.147 | 0.629 |
| 61 * | 0.266 | 0.207 | 0.716 | 0.561 | 0.049 | 0.498 | 0.173 | 0.263 | 302 | 0.314 | 0.594 | 0.450 | 0.819 | 0.133 | 0.352 | 0.221 | 0.818 |
| 62 | 0.365 | 0.430 | 0.375 | 0.947 | 0.000 | 0.388 | 0.110 | 0.370 | 303 * | 0.314 | 0.486 | 0.511 | 0.819 | 0.112 | 0.300 | 0.184 | 0.707 |
| 63 | 0.473 | 0.430 | 0.496 | 0.706 | 0.000 | 0.388 | 0.081 | 0.249 | 304 | 0.314 | 0.406 | 0.557 | 0.819 | 0.099 | 0.261 | 0.147 | 0.651 |
| 64 * | 0.639 | 0.430 | 0.541 | 0.561 | 0.000 | 0.388 | 0.074 | 0.249 | 305 * | 0.314 | 0.345 | 0.591 | 0.819 | 0.088 | 0.230 | 0.147 | 0.584 |
| 65 | 0.466 | 0.285 | 0.730 | 0.562 | 0.000 | 0.290 | 0.147 | 0.272 | 306 | 0.314 | 0.385 | 0.533 | 0.647 | 0.221 | 0.585 | 0.221 | 0.796 |
| 66 | 0.264 | 0.335 | 0.580 | 0.600 | 0.525 | 0.303 | 0.497 | 0.630 | 307 | 0.314 | 0.307 | 0.597 | 0.647 | 0.189 | 0.500 | 0.184 | 0.707 |
| 67 | 0.264 | 0.526 | 0.409 | 0.929 | 0.349 | 0.200 | 0.497 | 0.735 | 308 | 0.314 | 0.250 | 0.645 | 0.647 | 0.165 | 0.436 | 0.147 | 0.673 |
| 68 | 0.538 | 0.417 | 0.625 | 0.438 | 0.131 | 0.445 | 0.252 | 0.427 | 309 | 0.314 | 0.206 | 0.683 | 0.647 | 0.147 | 0.385 | 0.147 | 0.517 |
| 69 | 0.372 | 0.354 | 0.705 | 0.385 | 0.533 | 0.000 | 0.239 | 0.545 | 310 | 0.350 | 0.341 | 0.648 | 0.542 | 0.552 | 0.000 | 0.220 | 0.689 |
| 70 | 0.386 | 0.469 | 0.568 | 0.475 | 0.499 | 0.288 | 0.397 | 0.715 | 311 | 0.350 | 0.362 | 0.633 | 0.542 | 0.576 | 0.000 | 0.230 | 0.783 |
| 71 | 0.567 | 0.579 | 0.649 | 0.447 | 0.347 | 0.097 | 0.313 | 0.620 | 312 | 0.814 | 0.644 | 0.643 | 0.374 | 0.000 | 0.139 | 0.000 | 0.321 |
| 72 | 0.377 | 0.240 | 0.633 | 0.448 | 0.293 | 0.485 | 0.147 | 0.327 | 313 | 0.814 | 0.557 | 0.628 | 0.374 | 0.000 | 0.278 | 0.000 | 0.289 |
| 73 | 0.495 | 0.217 | 0.656 | 0.591 | 0.299 | 0.227 | 0.138 | 0.338 | 314 | 0.814 | 0.470 | 0.613 | 0.374 | 0.000 | 0.416 | 0.000 | 0.255 |
| 74 | 0.422 | 0.202 | 0.624 | 0.677 | 0.192 | 0.327 | 0.132 | 0.316 | 315 | 0.814 | 0.470 | 0.625 | 0.374 | 0.122 | 0.278 | 0.000 | 0.267 |
| 75 * | 0.374 | 0.341 | 0.608 | 0.489 | 0.243 | 0.491 | 0.138 | 0.320 | 316 | 0.814 | 0.470 | 0.631 | 0.374 | 0.183 | 0.208 | 0.000 | 0.278 |
| 76 * | 0.495 | 0.579 | 0.649 | 0.447 | 0.347 | 0.097 | 0.313 | 0.594 | 317 | 0.814 | 0.470 | 0.637 | 0.374 | 0.244 | 0.139 | 0.000 | 0.305 |
| 77 | 0.538 | 0.417 | 0.625 | 0.438 | 0.131 | 0.445 | 0.248 | 0.427 | 318 * | 0.339 | 0.269 | 0.709 | 0.594 | 0.000 | 0.652 | 0.055 | 0.164 |
| 78 | 0.567 | 0.417 | 0.623 | 0.435 | 0.104 | 0.476 | 0.293 | 0.396 | 319 | 0.325 | 0.269 | 0.705 | 0.594 | 0.280 | 0.318 | 0.048 | 0.349 |
| 79 | 0.567 | 0.411 | 0.671 | 0.432 | 0.107 | 0.364 | 0.267 | 0.340 | 320 | 0.069 | 0.630 | 0.511 | 0.872 | 0.000 | 0.136 | 0.515 | 0.893 |
| 80 | 0.495 | 0.335 | 0.658 | 0.522 | 0.320 | 0.242 | 0.184 | 0.465 | 321 * | 0.069 | 0.545 | 0.511 | 0.872 | 0.000 | 0.273 | 0.515 | 0.877 |
| 81 | 0.495 | 0.240 | 0.770 | 0.387 | 0.267 | 0.303 | 0.162 | 0.349 | 322 | 0.069 | 0.459 | 0.511 | 0.872 | 0.000 | 0.409 | 0.515 | 0.853 |
| 82 | 0.372 | 0.430 | 0.705 | 0.397 | 0.613 | 0.000 | 0.234 | 0.647 | 323 | 0.069 | 0.545 | 0.511 | 0.872 | 0.240 | 0.000 | 0.515 | 1.000 |
| 83 | 0.372 | 0.430 | 0.705 | 0.397 | 0.491 | 0.139 | 0.234 | 0.578 | 324 | 0.069 | 0.373 | 0.511 | 0.872 | 0.480 | 0.000 | 0.515 | 0.914 |
| 84 | 0.372 | 0.430 | 0.705 | 0.397 | 0.368 | 0.279 | 0.234 | 0.554 | 325 | 0.069 | 0.202 | 0.511 | 0.872 | 0.720 | 0.000 | 0.515 | 0.904 |
| 85 | 0.372 | 0.430 | 0.705 | 0.397 | 0.307 | 0.348 | 0.234 | 0.544 | 326 | 0.430 | 0.240 | 0.737 | 0.389 | 0.179 | 0.476 | 0.280 | 0.244 |
| 86 | 0.372 | 0.430 | 0.705 | 0.397 | 0.245 | 0.418 | 0.234 | 0.478 | 327 | 0.408 | 0.335 | 0.724 | 0.373 | 0.176 | 0.467 | 0.313 | 0.320 |
| 87 | 0.372 | 0.430 | 0.705 | 0.397 | 0.123 | 0.558 | 0.234 | 0.552 | 328 | 0.401 | 0.430 | 0.711 | 0.357 | 0.168 | 0.448 | 0.302 | 0.396 |
| 88 * | 0.372 | 0.430 | 0.705 | 0.397 | 0.000 | 0.697 | 0.234 | 0.422 | 329 | 0.386 | 0.526 | 0.698 | 0.342 | 0.163 | 0.430 | 0.324 | 0.472 |
| 89 | 0.220 | 0.560 | 0.686 | 0.525 | 0.107 | 0.285 | 0.314 | 0.583 | 330 | 0.430 | 0.697 | 0.541 | 0.609 | 0.000 | 0.333 | 0.273 | 0.716 |
| 90 | 0.220 | 0.560 | 0.686 | 0.525 | 0.149 | 0.248 | 0.317 | 0.549 | 331 | 0.430 | 0.488 | 0.530 | 0.586 | 0.000 | 0.667 | 0.273 | 0.616 |
| 91 | 0.220 | 0.560 | 0.686 | 0.525 | 0.187 | 0.212 | 0.318 | 0.526 | 332 | 0.430 | 0.278 | 0.519 | 0.563 | 0.000 | 1.000 | 0.245 | 0.467 |
| 92 | 0.531 | 0.534 | 0.638 | 0.431 | 0.407 | 0.116 | 0.280 | 0.727 | 333 | 0.430 | 0.697 | 0.550 | 0.627 | 0.293 | 0.000 | 0.384 | 0.775 |
| 93 | 0.567 | 0.421 | 0.638 | 0.431 | 0.486 | 0.138 | 0.346 | 0.625 | 334 | 0.430 | 0.488 | 0.548 | 0.622 | 0.587 | 0.000 | 0.368 | 0.830 |
| 94 | 0.567 | 0.421 | 0.638 | 0.431 | 0.486 | 0.138 | 0.308 | 0.513 | 335 | 0.430 | 0.278 | 0.546 | 0.618 | 0.880 | 0.000 | 0.327 | 0.808 |
| 95 | 0.567 | 0.335 | 0.653 | 0.431 | 0.527 | 0.150 | 0.293 | 0.603 | 336 | 0.430 | 0.697 | 0.546 | 0.618 | 0.147 | 0.167 | 0.294 | 0.796 |
| 96 | 0.552 | 0.345 | 0.653 | 0.431 | 0.530 | 0.151 | 0.296 | 0.623 | 337 * | 0.430 | 0.488 | 0.539 | 0.604 | 0.293 | 0.333 | 0.276 | 0.632 |
| 97 | 0.538 | 0.345 | 0.653 | 0.431 | 0.541 | 0.154 | 0.300 | 0.586 | 338 | 0.430 | 0.278 | 0.532 | 0.591 | 0.440 | 0.500 | 0.163 | 0.711 |
| 98 | 0.567 | 0.278 | 0.653 | 0.431 | 0.581 | 0.165 | 0.290 | 0.501 | 339 * | 0.588 | 0.545 | 0.620 | 0.531 | 0.000 | 0.273 | 0.118 | 0.518 |
| 99 | 0.531 | 0.250 | 0.653 | 0.431 | 0.637 | 0.181 | 0.296 | 0.588 | 340 | 0.588 | 0.373 | 0.610 | 0.514 | 0.000 | 0.545 | 0.109 | 0.436 |
| 100 | 0.567 | 0.250 | 0.667 | 0.431 | 0.569 | 0.162 | 0.278 | 0.491 | 341 | 0.588 | 0.202 | 0.600 | 0.497 | 0.000 | 0.818 | 0.110 | 0.275 |
| 101 | 0.531 | 0.240 | 0.667 | 0.431 | 0.606 | 0.172 | 0.285 | 0.560 | 342 | 0.588 | 0.545 | 0.628 | 0.544 | 0.240 | 0.000 | 0.136 | 0.595 |
| 102 * | 0.531 | 0.240 | 0.668 | 0.450 | 0.569 | 0.162 | 0.275 | 0.468 | 343 | 0.588 | 0.373 | 0.626 | 0.541 | 0.480 | 0.000 | 0.127 | 0.584 |
| 103 | 0.531 | 0.240 | 0.662 | 0.470 | 0.552 | 0.157 | 0.270 | 0.470 | 344 | 0.588 | 0.202 | 0.624 | 0.537 | 0.720 | 0.000 | 0.103 | 0.564 |
| 104 | 0.567 | 0.240 | 0.662 | 0.470 | 0.524 | 0.149 | 0.262 | 0.489 | 345 | 0.588 | 0.545 | 0.624 | 0.537 | 0.120 | 0.136 | 0.118 | 0.633 |
| 105 | 0.567 | 0.307 | 0.656 | 0.490 | 0.149 | 0.395 | 0.248 | 0.256 | 346 | 0.588 | 0.373 | 0.618 | 0.527 | 0.240 | 0.273 | 0.118 | 0.535 |

| 106 | 0.567 | 0.297 | 0.644 | 0.529 | 0.140 | 0.372 | 0.239 | 0.228 | 347 | 0.588 | 0.202 | 0.612 | 0.517 | 0.360 | 0.409 | 0.103 | 0.449 |

| 107 | 0.588 | 0.686 | 0.668 | 0.390 | 0.000 | 0.145 | 0.178 | 0.368 | 348 | 0.803 | 0.745 | 0.697 | 0.195 | 0.000 | 0.258 | 0.395 | 0.329 |

| 108 | 0.552 | 0.575 | 0.668 | 0.390 | 0.000 | 0.285 | 0.173 | 0.321 | 349 | 0.809 | 0.697 | 0.697 | 0.195 | 0.000 | 0.333 | 0.405 | 0.307 |

| 109 | 0.516 | 0.470 | 0.668 | 0.390 | 0.000 | 0.415 | 0.169 | 0.290 | 350 | 0.843 | 0.650 | 0.697 | 0.195 | 0.000 | 0.409 | 0.365 | 0.288 |

| 110 | 0.480 | 0.370 | 0.668 | 0.390 | 0.000 | 0.542 | 0.165 | 0.264 | 351 * | 0.851 | 0.592 | 0.697 | 0.195 | 0.000 | 0.500 | 0.365 | 0.279 |

| 111 | 0.617 | 0.705 | 0.668 | 0.390 | 0.131 | 0.000 | 0.181 | 0.381 | 352 | 0.903 | 0.535 | 0.697 | 0.195 | 0.000 | 0.591 | 0.365 | 0.267 |

| 112 * | 0.610 | 0.606 | 0.668 | 0.390 | 0.261 | 0.000 | 0.180 | 0.368 | 353 | 0.718 | 0.240 | 1.000 | 0.154 | 0.000 | 0.091 | 0.000 | 0.174 |

| 113 * | 0.603 | 0.509 | 0.668 | 0.390 | 0.389 | 0.000 | 0.180 | 0.346 | 354 * | 0.740 | 0.240 | 0.979 | 0.141 | 0.000 | 0.152 | 0.000 | 0.187 |

| 114 | 0.596 | 0.413 | 0.668 | 0.390 | 0.517 | 0.000 | 0.179 | 0.332 | 355 * | 0.755 | 0.240 | 0.963 | 0.129 | 0.000 | 0.197 | 0.000 | 0.192 |

| 115 | 0.610 | 0.268 | 0.594 | 0.765 | 0.082 | 0.186 | 0.110 | 0.187 | 356 | 0.776 | 0.240 | 0.946 | 0.118 | 0.000 | 0.258 | 0.000 | 0.190 |

| 116 | 0.574 | 0.287 | 0.594 | 0.765 | 0.086 | 0.195 | 0.136 | 0.255 | 357 | 0.812 | 0.240 | 0.929 | 0.105 | 0.000 | 0.303 | 0.000 | 0.176 |

| 117 | 0.433 | 0.363 | 0.594 | 0.765 | 0.101 | 0.229 | 0.264 | 0.483 | 358 | 0.834 | 0.240 | 0.914 | 0.095 | 0.000 | 0.348 | 0.000 | 0.160 |

| 118 * | 0.495 | 0.777 | 0.591 | 0.492 | 0.321 | 0.000 | 0.244 | 0.622 | 359 | 0.769 | 0.316 | 0.967 | 0.132 | 0.000 | 0.106 | 0.000 | 0.239 |

| 119 | 0.495 | 0.536 | 0.591 | 0.492 | 0.632 | 0.000 | 0.240 | 0.662 | 360 | 0.783 | 0.316 | 0.943 | 0.115 | 0.000 | 0.182 | 0.000 | 0.263 |

| 120 | 0.386 | 0.259 | 0.757 | 0.449 | 0.373 | 0.152 | 0.162 | 0.369 | 361 | 0.805 | 0.316 | 0.922 | 0.101 | 0.000 | 0.242 | 0.000 | 0.267 |

| 121 | 0.415 | 0.297 | 0.750 | 0.449 | 0.320 | 0.152 | 0.162 | 0.385 | 362 | 0.827 | 0.316 | 0.907 | 0.091 | 0.000 | 0.303 | 0.000 | 0.269 |

| 122 | 0.422 | 0.335 | 0.749 | 0.449 | 0.267 | 0.152 | 0.162 | 0.440 | 363 * | 0.863 | 0.316 | 0.884 | 0.075 | 0.000 | 0.364 | 0.000 | 0.255 |

| 123 | 0.422 | 0.392 | 0.751 | 0.449 | 0.187 | 0.152 | 0.162 | 0.468 | 364 | 0.892 | 0.316 | 0.865 | 0.061 | 0.000 | 0.424 | 0.000 | 0.237 |

| 124 | 0.372 | 0.202 | 0.732 | 0.449 | 0.373 | 0.303 | 0.170 | 0.422 | 365 * | 0.819 | 0.392 | 0.933 | 0.109 | 0.000 | 0.121 | 0.000 | 0.305 |

| 125 | 0.422 | 0.240 | 0.724 | 0.449 | 0.373 | 0.242 | 0.170 | 0.384 | 366 | 0.834 | 0.392 | 0.906 | 0.090 | 0.000 | 0.212 | 0.000 | 0.334 |

| 126 | 0.386 | 0.278 | 0.735 | 0.449 | 0.320 | 0.242 | 0.170 | 0.395 | 367 | 0.863 | 0.392 | 0.880 | 0.072 | 0.000 | 0.288 | 0.000 | 0.341 |

| 127 | 0.422 | 0.335 | 0.726 | 0.449 | 0.240 | 0.242 | 0.170 | 0.438 | 368 | 0.892 | 0.392 | 0.858 | 0.057 | 0.000 | 0.364 | 0.000 | 0.344 |

| 128 | 0.401 | 0.240 | 0.717 | 0.449 | 0.533 | 0.152 | 0.182 | 0.449 | 369 | 0.928 | 0.392 | 0.838 | 0.043 | 0.000 | 0.424 | 0.000 | 0.333 |

| 129 | 0.422 | 0.088 | 0.705 | 0.449 | 0.320 | 0.242 | 0.182 | 0.465 | 370 | 0.957 | 0.392 | 0.814 | 0.027 | 0.000 | 0.500 | 0.000 | 0.312 |

| 130 | 0.386 | 0.621 | 0.709 | 0.449 | 0.267 | 0.000 | 0.184 | 0.569 | 371 | 0.870 | 0.469 | 0.894 | 0.082 | 0.000 | 0.152 | 0.000 | 0.375 |

| 131 * | 0.372 | 0.383 | 0.707 | 0.449 | 0.600 | 0.000 | 0.184 | 0.571 | 372 * | 0.892 | 0.469 | 0.865 | 0.061 | 0.000 | 0.242 | 0.000 | 0.399 |

| 132 | 0.386 | 0.383 | 0.675 | 0.449 | 0.333 | 0.303 | 0.184 | 0.440 | 373 | 0.913 | 0.469 | 0.844 | 0.047 | 0.000 | 0.318 | 0.000 | 0.411 |

| 133 | 0.473 | 0.453 | 0.586 | 0.524 | 0.555 | 0.000 | 0.383 | 0.751 | 374 | 0.942 | 0.469 | 0.817 | 0.028 | 0.000 | 0.409 | 0.000 | 0.413 |

| 134 * | 0.473 | 0.453 | 0.586 | 0.503 | 0.416 | 0.158 | 0.364 | 0.718 | 375 | 0.971 | 0.469 | 0.793 | 0.013 | 0.000 | 0.485 | 0.000 | 0.396 |

| 135 | 0.473 | 0.453 | 0.586 | 0.482 | 0.277 | 0.315 | 0.346 | 0.618 | 376 | 1.000 | 0.469 | 0.775 | 0.000 | 0.000 | 0.561 | 0.000 | 0.378 |

| 136 | 0.473 | 0.453 | 0.586 | 0.461 | 0.139 | 0.473 | 0.346 | 0.568 | 377 * | 0.242 | 0.621 | 0.533 | 0.900 | 0.000 | 0.303 | 0.276 | 0.822 |

| 137 | 0.473 | 0.453 | 0.586 | 0.439 | 0.000 | 0.630 | 0.364 | 0.484 | 378 | 0.242 | 0.621 | 0.533 | 0.900 | 0.267 | 0.000 | 0.276 | 0.828 |

| 138 | 0.336 | 0.248 | 0.572 | 0.735 | 0.000 | 0.412 | 0.063 | 0.215 | 379 | 0.242 | 0.621 | 0.533 | 0.900 | 0.133 | 0.152 | 0.276 | 0.837 |

| 139 | 0.161 | 0.217 | 0.721 | 0.556 | 0.000 | 0.570 | 0.235 | 0.242 | 380 | 0.314 | 0.316 | 0.462 | 0.997 | 0.000 | 0.485 | 0.092 | 0.235 |

| 140 | 0.235 | 0.288 | 0.657 | 0.537 | 0.000 | 0.682 | 0.215 | 0.337 | 381 | 0.343 | 0.278 | 0.459 | 1.000 | 0.000 | 0.545 | 0.096 | 0.212 |

| 141 | 0.372 | 0.373 | 0.681 | 0.500 | 0.240 | 0.273 | 0.141 | 0.469 | 382 | 0.314 | 0.240 | 0.464 | 0.999 | 0.000 | 0.606 | 0.099 | 0.195 |

| 142 | 0.386 | 0.339 | 0.698 | 0.503 | 0.224 | 0.255 | 0.124 | 0.421 | 383 * | 0.170 | 0.430 | 0.486 | 0.885 | 0.000 | 0.606 | 0.055 | 0.664 |

| 143 | 0.314 | 0.255 | 0.712 | 0.497 | 0.277 | 0.312 | 0.140 | 0.331 | 384 | 0.170 | 0.430 | 0.489 | 0.891 | 0.200 | 0.379 | 0.074 | 0.836 |

| 144 | 0.458 | 0.446 | 0.583 | 0.692 | 0.192 | 0.094 | 0.166 | 0.357 | 385 | 0.170 | 0.335 | 0.486 | 0.885 | 0.000 | 0.758 | 0.055 | 0.544 |

| 145 * | 0.372 | 0.328 | 0.672 | 0.489 | 0.365 | 0.221 | 0.201 | 0.396 | 386 | 0.386 | 0.295 | 0.502 | 0.871 | 0.501 | 0.000 | 0.107 | 0.303 |

| 146 | 0.296 | 0.288 | 0.721 | 0.518 | 0.000 | 0.455 | 0.179 | 0.435 | 387 | 0.386 | 0.341 | 0.492 | 0.839 | 0.552 | 0.000 | 0.119 | 0.408 |

| 147 | 0.296 | 0.373 | 0.689 | 0.473 | 0.000 | 0.545 | 0.215 | 0.495 | 388 | 0.386 | 0.621 | 0.496 | 0.854 | 0.267 | 0.000 | 0.129 | 0.427 |

| 148 | 0.166 | 0.288 | 0.689 | 0.499 | 0.000 | 0.682 | 0.248 | 0.507 | 389 | 0.386 | 0.383 | 0.481 | 0.814 | 0.600 | 0.000 | 0.129 | 0.512 |

| 149 | 0.623 | 0.872 | 0.023 | 0.671 | 0.000 | 0.179 | 0.151 | 0.625 | 390 * | 0.386 | 0.097 | 0.489 | 0.837 | 1.000 | 0.000 | 0.129 | 0.375 |

| 150 * | 0.623 | 0.760 | 0.070 | 0.671 | 0.000 | 0.358 | 0.151 | 0.565 | 391 | 0.386 | 0.469 | 0.467 | 0.776 | 0.677 | 0.000 | 0.148 | 0.636 |

| 151 * | 0.623 | 0.646 | 0.116 | 0.671 | 0.000 | 0.536 | 0.151 | 0.501 | 392 | 0.386 | 0.514 | 0.458 | 0.751 | 0.749 | 0.000 | 0.161 | 0.667 |

| 152 | 0.653 | 0.568 | 0.009 | 0.671 | 0.000 | 0.124 | 0.000 | 0.367 | 393 | 0.502 | 0.392 | 0.495 | 0.923 | 0.245 | 0.000 | 0.000 | 0.395 |

| 153 * | 0.653 | 0.490 | 0.042 | 0.671 | 0.000 | 0.252 | 0.000 | 0.316 | 394 | 0.502 | 0.213 | 0.495 | 0.923 | 0.496 | 0.000 | 0.000 | 0.331 |

| 154 | 0.653 | 0.221 | 0.074 | 0.671 | 0.000 | 0.376 | 0.000 | 0.266 | 395 * | 0.502 | 0.392 | 0.495 | 0.923 | 0.000 | 0.279 | 0.000 | 0.288 |

| 155 | 0.653 | 0.366 | 0.000 | 0.671 | 0.000 | 0.091 | 0.000 | 0.168 | 396 | 0.097 | 0.156 | 0.515 | 0.978 | 0.000 | 0.655 | 0.132 | 0.295 |

| 156 | 0.653 | 0.310 | 0.023 | 0.671 | 0.000 | 0.179 | 0.000 | 0.128 | 397 | 0.841 | 0.874 | 0.446 | 0.376 | 0.000 | 0.403 | 0.000 | 0.695 |

| 157 | 0.653 | 0.253 | 0.047 | 0.671 | 0.000 | 0.270 | 0.000 | 0.085 | 398 | 0.848 | 0.653 | 0.549 | 0.376 | 0.000 | 0.315 | 0.000 | 0.532 |

| 158 | 0.653 | 0.872 | 0.417 | 0.671 | 0.157 | 0.000 | 0.000 | 0.554 | 399 | 0.856 | 0.510 | 0.615 | 0.376 | 0.000 | 0.258 | 0.000 | 0.413 |

| 159 | 0.653 | 0.760 | 0.414 | 0.671 | 0.315 | 0.000 | 0.000 | 0.614 | 400 | 0.863 | 0.413 | 0.664 | 0.376 | 0.000 | 0.218 | 0.000 | 0.337 |

| 160 | 0.653 | 0.535 | 0.406 | 0.671 | 0.632 | 0.000 | 0.000 | 0.604 | 401 | 0.899 | 1.000 | 0.446 | 0.376 | 0.237 | 0.000 | 0.000 | 0.652 |

| 161 | 0.653 | 0.568 | 0.535 | 0.671 | 0.109 | 0.000 | 0.000 | 0.419 | 402 | 0.892 | 0.905 | 0.446 | 0.376 | 0.365 | 0.000 | 0.000 | 0.674 |

| 162 | 0.653 | 0.490 | 0.532 | 0.671 | 0.221 | 0.000 | 0.000 | 0.442 | 403 | 0.899 | 0.747 | 0.549 | 0.376 | 0.187 | 0.000 | 0.000 | 0.539 |

| 163 | 0.653 | 0.331 | 0.527 | 0.671 | 0.443 | 0.000 | 0.000 | 0.436 | 404 | 0.892 | 0.674 | 0.549 | 0.376 | 0.285 | 0.000 | 0.000 | 0.533 |

| 164 | 0.653 | 0.366 | 0.622 | 0.671 | 0.080 | 0.000 | 0.000 | 0.220 | 405 * | 0.899 | 0.589 | 0.615 | 0.376 | 0.152 | 0.000 | 0.000 | 0.427 |

| 165 | 0.653 | 0.310 | 0.619 | 0.671 | 0.157 | 0.000 | 0.000 | 0.219 | 406 | 0.899 | 0.526 | 0.615 | 0.376 | 0.232 | 0.000 | 0.000 | 0.457 |

| 166 | 0.653 | 0.196 | 0.615 | 0.671 | 0.315 | 0.000 | 0.000 | 0.218 | 407 | 0.899 | 0.423 | 0.660 | 0.376 | 0.197 | 0.000 | 0.000 | 0.311 |

| 167 | 0.650 | 0.760 | 0.407 | 0.671 | 0.157 | 0.179 | 0.014 | 0.549 | 408 | 0.260 | 0.692 | 0.622 | 0.532 | 0.061 | 0.337 | 0.276 | 0.485 |

| 168 | 0.650 | 0.648 | 0.393 | 0.671 | 0.157 | 0.358 | 0.016 | 0.502 | 409 | 0.134 | 0.550 | 0.664 | 0.602 | 0.051 | 0.359 | 0.184 | 0.300 |

| 169 | 0.649 | 0.423 | 0.395 | 0.671 | 0.632 | 0.179 | 0.017 | 0.512 | 410 | 0.000 | 0.413 | 0.705 | 0.670 | 0.041 | 0.380 | 0.151 | 0.280 |

| 170 | 0.649 | 0.310 | 0.381 | 0.671 | 0.632 | 0.358 | 0.017 | 0.463 | 411 | 0.295 | 0.555 | 0.645 | 0.569 | 0.051 | 0.349 | 0.099 | 0.314 |

| 171 | 0.653 | 0.490 | 0.530 | 0.671 | 0.109 | 0.124 | 0.000 | 0.449 | 412 | 0.300 | 0.365 | 0.676 | 0.622 | 0.037 | 0.365 | 0.074 | 0.243 |

| 172 | 0.653 | 0.411 | 0.520 | 0.671 | 0.109 | 0.252 | 0.000 | 0.396 | 413 | 0.329 | 0.621 | 0.645 | 0.518 | 0.427 | 0.000 | 0.453 | 0.717 |

| 173 * | 0.653 | 0.253 | 0.522 | 0.671 | 0.443 | 0.124 | 0.000 | 0.408 | 414 | 0.514 | 0.530 | 0.637 | 0.606 | 0.626 | 0.000 | 0.304 | 0.642 |

| 174 * | 0.653 | 0.175 | 0.511 | 0.671 | 0.443 | 0.252 | 0.000 | 0.354 | 415 | 0.514 | 0.530 | 0.637 | 0.606 | 0.269 | 0.405 | 0.387 | 0.466 |

| 175 | 0.653 | 0.310 | 0.649 | 0.671 | 0.080 | 0.091 | 0.000 | 0.229 | 416 | 0.514 | 0.530 | 0.637 | 0.606 | 0.000 | 0.711 | 0.566 | 0.404 |

| 176 | 0.653 | 0.253 | 0.669 | 0.671 | 0.080 | 0.179 | 0.000 | 0.191 | 417 | 0.514 | 0.362 | 0.641 | 0.600 | 0.861 | 0.000 | 0.287 | 0.561 |

| 177 | 0.653 | 0.141 | 0.707 | 0.671 | 0.315 | 0.091 | 0.000 | 0.207 | 418 * | 0.514 | 0.362 | 0.641 | 0.600 | 0.370 | 0.558 | 0.431 | 0.462 |

| 178 | 0.653 | 0.084 | 0.726 | 0.671 | 0.315 | 0.179 | 0.000 | 0.191 | 419 | 0.444 | 0.495 | 0.657 | 0.627 | 0.255 | 0.384 | 0.595 | 0.394 |

| 179 | 0.278 | 0.556 | 0.626 | 0.643 | 0.000 | 0.276 | 0.168 | 0.452 | 420 | 0.444 | 0.495 | 0.657 | 0.627 | 0.000 | 0.674 | 0.198 | 0.566 |

| 180 * | 0.278 | 0.469 | 0.614 | 0.643 | 0.000 | 0.415 | 0.168 | 0.445 | 421 | 0.444 | 0.336 | 0.657 | 0.627 | 0.816 | 0.000 | 0.216 | 0.586 |

| 181 | 0.278 | 0.381 | 0.603 | 0.643 | 0.000 | 0.555 | 0.168 | 0.432 | 422 | 0.444 | 0.336 | 0.657 | 0.627 | 0.351 | 0.528 | 0.275 | 0.442 |

| 182 | 0.278 | 0.295 | 0.591 | 0.643 | 0.000 | 0.694 | 0.168 | 0.299 | 423 | 0.444 | 0.336 | 0.657 | 0.627 | 0.000 | 0.927 | 0.403 | 0.359 |

| 183 * | 0.278 | 0.208 | 0.580 | 0.643 | 0.000 | 0.830 | 0.168 | 0.235 | 424 | 0.375 | 0.461 | 0.676 | 0.646 | 0.562 | 0.000 | 0.516 | 0.422 |

| 184 | 0.278 | 0.120 | 0.568 | 0.643 | 0.000 | 0.970 | 0.168 | 0.141 | 425 | 0.375 | 0.461 | 0.676 | 0.646 | 0.258 | 0.345 | 0.563 | 0.350 |

| 185 | 0.314 | 0.400 | 0.709 | 0.643 | 0.000 | 0.215 | 0.000 | 0.373 | 426 | 0.375 | 0.461 | 0.676 | 0.646 | 0.000 | 0.638 | 0.187 | 0.549 |

| 186 | 0.314 | 0.333 | 0.699 | 0.643 | 0.000 | 0.324 | 0.000 | 0.329 | 427 | 0.375 | 0.310 | 0.676 | 0.646 | 0.772 | 0.000 | 0.205 | 0.569 |

| 187 | 0.314 | 0.265 | 0.690 | 0.643 | 0.000 | 0.430 | 0.000 | 0.291 | 428 | 0.375 | 0.310 | 0.676 | 0.646 | 0.355 | 0.474 | 0.261 | 0.344 |

| 188 | 0.314 | 0.198 | 0.681 | 0.643 | 0.000 | 0.539 | 0.000 | 0.213 | 429 | 0.375 | 0.310 | 0.676 | 0.646 | 0.000 | 0.878 | 0.381 | 0.331 |

| 189 | 0.314 | 0.130 | 0.672 | 0.643 | 0.000 | 0.645 | 0.000 | 0.154 | 430 | 0.516 | 0.530 | 0.637 | 0.606 | 0.627 | 0.000 | 0.259 | 0.662 |

| 190 * | 0.314 | 0.063 | 0.662 | 0.643 | 0.000 | 0.755 | 0.000 | 0.068 | 431 * | 0.254 | 0.457 | 0.494 | 0.831 | 0.279 | 0.317 | 0.328 | 0.552 |

| 191 | 0.314 | 0.303 | 0.755 | 0.643 | 0.000 | 0.176 | 0.000 | 0.284 | 432 | 0.250 | 0.458 | 0.495 | 0.831 | 0.280 | 0.318 | 0.386 | 0.572 |

| 192 | 0.314 | 0.248 | 0.747 | 0.643 | 0.000 | 0.264 | 0.000 | 0.224 | 433 | 0.230 | 0.449 | 0.487 | 0.810 | 0.276 | 0.313 | 0.420 | 0.603 |

| 193 | 0.314 | 0.192 | 0.740 | 0.643 | 0.000 | 0.352 | 0.000 | 0.193 | 434 | 0.408 | 0.288 | 0.689 | 0.429 | 0.360 | 0.273 | 0.215 | 0.610 |

| 194 | 0.314 | 0.135 | 0.732 | 0.643 | 0.000 | 0.439 | 0.000 | 0.130 | 435 * | 0.408 | 0.159 | 0.689 | 0.394 | 0.360 | 0.477 | 0.215 | 0.575 |

| 195 | 0.314 | 0.080 | 0.724 | 0.643 | 0.000 | 0.530 | 0.000 | 0.073 | 436 | 0.408 | 0.459 | 0.689 | 0.438 | 0.120 | 0.273 | 0.215 | 0.606 |

| 196 | 0.314 | 0.025 | 0.717 | 0.643 | 0.000 | 0.618 | 0.000 | 0.029 | 437 | 0.329 | 0.288 | 0.689 | 0.467 | 0.360 | 0.273 | 0.215 | 0.739 |

| 197 | 0.314 | 0.234 | 0.787 | 0.643 | 0.000 | 0.148 | 0.000 | 0.197 | 438 | 0.495 | 0.288 | 0.689 | 0.392 | 0.360 | 0.273 | 0.215 | 0.491 |

| 198 | 0.314 | 0.187 | 0.781 | 0.643 | 0.000 | 0.224 | 0.000 | 0.191 | 439 * | 0.365 | 0.466 | 0.689 | 0.478 | 0.217 | 0.151 | 0.195 | 0.684 |

| 199 * | 0.314 | 0.141 | 0.774 | 0.643 | 0.000 | 0.297 | 0.000 | 0.131 | 440 | 0.365 | 0.109 | 0.689 | 0.425 | 0.503 | 0.395 | 0.195 | 0.647 |

| 200 | 0.314 | 0.093 | 0.768 | 0.643 | 0.000 | 0.373 | 0.000 | 0.076 | 441 | 0.458 | 0.313 | 0.689 | 0.391 | 0.217 | 0.395 | 0.195 | 0.558 |

| 201 | 0.314 | 0.046 | 0.762 | 0.643 | 0.000 | 0.448 | 0.000 | 0.042 | 442 | 0.458 | 0.262 | 0.689 | 0.424 | 0.503 | 0.151 | 0.195 | 0.545 |

| 202 | 0.314 | 0.000 | 0.755 | 0.643 | 0.000 | 0.521 | 0.000 | 0.000 | 443 | 0.372 | 0.240 | 0.721 | 0.456 | 0.320 | 0.242 | 0.177 | 0.529 |

| 203 | 0.430 | 0.430 | 0.721 | 0.486 | 0.000 | 0.227 | 0.138 | 0.373 | 444 | 0.372 | 0.354 | 0.721 | 0.487 | 0.320 | 0.061 | 0.177 | 0.497 |

| 204 * | 0.292 | 0.288 | 0.721 | 0.518 | 0.000 | 0.455 | 0.180 | 0.435 | 445 * | 0.372 | 0.126 | 0.721 | 0.424 | 0.320 | 0.424 | 0.177 | 0.483 |

| 205 | 0.155 | 0.217 | 0.721 | 0.556 | 0.000 | 0.570 | 0.208 | 0.488 | 446 | 0.372 | 0.392 | 0.721 | 0.463 | 0.107 | 0.242 | 0.177 | 0.533 |

| 206 | 0.047 | 0.145 | 0.721 | 0.587 | 0.000 | 0.682 | 0.248 | 0.339 | 447 | 0.372 | 0.088 | 0.721 | 0.449 | 0.533 | 0.242 | 0.177 | 0.483 |

| 207 | 0.422 | 0.545 | 0.689 | 0.461 | 0.000 | 0.273 | 0.182 | 0.502 | 448 | 0.285 | 0.240 | 0.721 | 0.496 | 0.320 | 0.242 | 0.177 | 0.639 |

| 208 | 0.292 | 0.373 | 0.689 | 0.473 | 0.000 | 0.545 | 0.215 | 0.495 | 449 | 0.458 | 0.240 | 0.721 | 0.416 | 0.320 | 0.242 | 0.177 | 0.437 |

| 209 | 0.162 | 0.288 | 0.689 | 0.499 | 0.000 | 0.682 | 0.248 | 0.507 | 450 | 0.321 | 0.398 | 0.721 | 0.504 | 0.192 | 0.133 | 0.159 | 0.608 |

| 210 | 0.069 | 0.202 | 0.689 | 0.524 | 0.000 | 0.818 | 0.298 | 0.428 | 451 | 0.321 | 0.082 | 0.721 | 0.457 | 0.448 | 0.352 | 0.159 | 0.518 |

| 211 | 0.432 | 0.430 | 0.721 | 0.486 | 0.000 | 0.227 | 0.142 | 0.373 | 452 | 0.422 | 0.263 | 0.721 | 0.418 | 0.192 | 0.352 | 0.159 | 0.412 |

| 212 | 0.296 | 0.288 | 0.721 | 0.518 | 0.000 | 0.455 | 0.196 | 0.435 | 453 | 0.422 | 0.217 | 0.721 | 0.447 | 0.448 | 0.133 | 0.159 | 0.528 |

| 213 | 0.161 | 0.217 | 0.721 | 0.556 | 0.000 | 0.570 | 0.208 | 0.488 | 454 | 0.432 | 0.216 | 0.721 | 0.458 | 0.300 | 0.227 | 0.152 | 0.347 |

| 214 * | 0.053 | 0.145 | 0.721 | 0.587 | 0.000 | 0.682 | 0.273 | 0.339 | 455 | 0.432 | 0.323 | 0.721 | 0.489 | 0.300 | 0.057 | 0.152 | 0.391 |

| 215 | 0.426 | 0.545 | 0.689 | 0.461 | 0.000 | 0.273 | 0.158 | 0.502 | 456 | 0.432 | 0.109 | 0.721 | 0.429 | 0.300 | 0.398 | 0.152 | 0.270 |

| 216 * | 0.296 | 0.373 | 0.689 | 0.473 | 0.000 | 0.545 | 0.182 | 0.495 | 457 | 0.432 | 0.359 | 0.721 | 0.466 | 0.100 | 0.227 | 0.152 | 0.358 |

| 217 | 0.166 | 0.288 | 0.689 | 0.499 | 0.000 | 0.682 | 0.238 | 0.507 | 458 | 0.432 | 0.073 | 0.721 | 0.452 | 0.500 | 0.227 | 0.152 | 0.293 |

| 218 | 0.069 | 0.202 | 0.689 | 0.524 | 0.000 | 0.818 | 0.272 | 0.428 | 459 * | 0.350 | 0.216 | 0.721 | 0.496 | 0.300 | 0.227 | 0.152 | 0.452 |

| 219 | 0.856 | 0.494 | 0.426 | 0.770 | 0.000 | 0.178 | 0.000 | 0.422 | 460 | 0.513 | 0.216 | 0.721 | 0.420 | 0.300 | 0.227 | 0.152 | 0.289 |

| 220 | 0.856 | 0.382 | 0.408 | 0.770 | 0.000 | 0.356 | 0.000 | 0.321 | 461 | 0.383 | 0.365 | 0.721 | 0.503 | 0.181 | 0.126 | 0.169 | 0.392 |

| 221 | 0.856 | 0.492 | 0.440 | 0.770 | 0.157 | 0.000 | 0.000 | 0.461 | 462 | 0.383 | 0.067 | 0.721 | 0.459 | 0.419 | 0.329 | 0.169 | 0.253 |

| 222 | 0.856 | 0.382 | 0.436 | 0.770 | 0.314 | 0.000 | 0.000 | 0.406 | 463 | 0.479 | 0.238 | 0.721 | 0.423 | 0.181 | 0.329 | 0.169 | 0.254 |

| 223 | 0.675 | 0.905 | 0.381 | 0.729 | 0.000 | 0.185 | 0.114 | 0.417 | 464 | 0.479 | 0.195 | 0.721 | 0.449 | 0.419 | 0.126 | 0.135 | 0.314 |

| 224 | 0.675 | 0.789 | 0.373 | 0.729 | 0.000 | 0.370 | 0.114 | 0.396 | 465 * | 0.342 | 0.173 | 0.690 | 0.721 | 0.000 | 0.435 | 0.000 | 0.228 |

| 225 | 0.675 | 0.672 | 0.366 | 0.729 | 0.000 | 0.555 | 0.114 | 0.392 | 466 | 0.423 | 0.173 | 0.567 | 0.721 | 0.343 | 0.400 | 0.297 | 0.452 |

| 226 | 0.690 | 0.672 | 0.472 | 0.729 | 0.000 | 0.142 | 0.044 | 0.340 | 467 | 0.458 | 0.198 | 0.568 | 0.723 | 0.346 | 0.359 | 0.131 | 0.372 |

| 227 * | 0.690 | 0.581 | 0.466 | 0.729 | 0.000 | 0.288 | 0.044 | 0.334 | 468 | 0.426 | 0.178 | 0.567 | 0.722 | 0.346 | 0.390 | 0.287 | 0.394 |

| 228 | 0.690 | 0.491 | 0.461 | 0.729 | 0.000 | 0.430 | 0.044 | 0.320 | 469 | 0.291 | 0.187 | 0.654 | 0.722 | 0.036 | 0.522 | 0.162 | 0.311 |

| 229 | 0.697 | 0.524 | 0.529 | 0.729 | 0.000 | 0.118 | 0.015 | 0.347 | 470 | 0.349 | 0.190 | 0.605 | 0.722 | 0.133 | 0.526 | 0.238 | 0.361 |

| 230 | 0.697 | 0.450 | 0.525 | 0.729 | 0.000 | 0.236 | 0.015 | 0.382 | 471 | 0.343 | 0.177 | 0.586 | 0.723 | 0.201 | 0.506 | 0.291 | 0.397 |

| 231 | 0.697 | 0.377 | 0.520 | 0.729 | 0.000 | 0.352 | 0.015 | 0.392 | 472 | 0.403 | 0.190 | 0.567 | 0.723 | 0.249 | 0.485 | 0.358 | 0.357 |

| 232 * | 0.623 | 0.872 | 0.424 | 0.680 | 0.158 | 0.000 | 0.152 | 0.614 | 473 | 0.362 | 0.222 | 0.609 | 0.814 | 0.000 | 0.379 | 0.364 | 0.213 |

| 233 | 0.623 | 0.760 | 0.420 | 0.680 | 0.315 | 0.000 | 0.152 | 0.598 | 474 | 0.544 | 0.335 | 0.655 | 0.649 | 0.000 | 0.290 | 0.196 | 0.239 |

| 234 | 0.623 | 0.535 | 0.413 | 0.680 | 0.631 | 0.000 | 0.152 | 0.569 | 475 | 0.308 | 0.266 | 0.587 | 0.769 | 0.000 | 0.528 | 0.429 | 0.433 |

| 235 | 0.650 | 0.568 | 0.542 | 0.680 | 0.110 | 0.000 | 0.015 | 0.419 | 476 | 0.388 | 0.230 | 0.690 | 0.711 | 0.000 | 0.305 | 0.275 | 0.351 |

| 236 | 0.650 | 0.490 | 0.540 | 0.680 | 0.221 | 0.000 | 0.015 | 0.472 | 477 | 0.552 | 0.338 | 0.573 | 0.769 | 0.000 | 0.358 | 0.212 | 0.306 |

| 237 | 0.650 | 0.332 | 0.535 | 0.680 | 0.441 | 0.000 | 0.015 | 0.423 | 478 | 0.355 | 0.230 | 0.694 | 0.722 | 0.000 | 0.305 | 0.275 | 0.137 |

| 238 | 0.653 | 0.366 | 0.622 | 0.680 | 0.079 | 0.000 | 0.000 | 0.210 | 479 | 0.519 | 0.338 | 0.576 | 0.750 | 0.000 | 0.358 | 0.234 | 0.242 |

| 239 * | 0.653 | 0.310 | 0.619 | 0.680 | 0.158 | 0.000 | 0.000 | 0.207 | 480 | 0.455 | 0.193 | 0.567 | 0.725 | 0.282 | 0.428 | 0.082 | 0.213 |

| 240 | 0.653 | 0.197 | 0.615 | 0.680 | 0.315 | 0.000 | 0.000 | 0.228 | 481 | 0.581 | 0.347 | 0.608 | 0.717 | 0.014 | 0.236 | 0.262 | 0.182 |

| 241 | 0.473 | 0.750 | 0.625 | 0.500 | 0.000 | 0.158 | 0.287 | 0.647 | 482 * | 0.482 | 0.309 | 0.616 | 0.716 | 0.169 | 0.215 | 0.205 | 0.270 |

References

- Abrams, D. Design of Concrete Mixtures; Bulletin No. 1; Structural Materials Laboratory, Lewis Institute: Chicago, IL, USA, 1918.

- Lin, T.-K.; Lin, C.-C.J.; Chang, K.-C. Neural network based methodology for estimating bridge damage after major earthquakes. J. Chin. Inst. Eng. 2002, 25, 415–424.

- Lee, S.-C. Prediction of concrete strength using artificial neural networks. Eng. Struct. 2003, 25, 849–857.

- Hola, J.; Schabowicz, K. Methodology of neural identification of strength of concrete. ACI Mater. J. 2005, 102, 459–464.

- Kewalramani, M.A.; Gupta, R. Concrete compressive strength prediction using ultrasonic pulse velocity through artificial neural networks. Autom. Constr. 2006, 15, 374–379.

- Öztaş, A.; Pala, M.; Özbay, E.; Kanca, E.; Çaglar, N.; Bhatti, M.A. Predicting the compressive strength and slump of high strength concrete using neural network. Constr. Build. Mater. 2006, 20, 769–775.

- Topçu, I.B.; Sarıdemir, M. Prediction of compressive strength of concrete containing fly ash using artificial neural networks and fuzzy logic. Comput. Mater. Sci. 2008, 41, 305–311.

- Bilim, C.; Atiş, C.D.; Tanyildizi, H.; Karahan, O. Predicting the compressive strength of ground granulated blast furnace slag concrete using artificial neural network. Adv. Eng. Softw. 2009, 40, 334–340.

- Trtnik, G.; Kavčič, F.; Turk, G. Prediction of concrete strength using ultrasonic pulse velocity and artificial neural networks. Ultrasonics 2009, 49, 53–60.

- Atici, U. Prediction of the strength of mineral admixture concrete using multivariable regression analysis and an artificial neural network. Expert Syst. Appl. 2011, 38, 9609–9618.

- Alexandridis, A.; Triantis, D.; Stavrakas, I.; Stergiopoulos, C. A neural network approach for compressive strength prediction in cement-based materials through the study of pressure stimulated electrical signals. Constr. Build. Mater. 2012, 30, 294–300.

- Shen, C.-H. Application of Neural Networks and ACI Code in Pozzolanic Concrete Mix Design. Master’s Thesis, Department of Civil Engineering, National Chiao Tung University, Hsinchu City, Taiwan, 2013. (In Chinese).

- Chopra, P.; Sharma, R.K.; Kumar, M. Artificial Neural Networks for the Prediction of Compressive Strength of Concrete. Int. J. Appl. Sci. Eng. 2015, 13, 187–204.

- Nikoo, M.; Moghadam, F.T.; Sadowski, L. Prediction of Concrete Compressive Strength by Evolutionary Artificial Neural Networks. Adv. Mater. Sci. Eng. 2015, 2015, 849126.

- Chopra, P.; Sharma, R.K.; Kumar, M. Prediction of Compressive Strength of Concrete Using Artificial Neural Network and Genetic Programming. Adv. Mater. Sci. Eng. 2016, 2016, 7648467.

- Hao, C.-Y.; Shen, C.-H.; Jan, J.-C.; Hung, S.-K. A Computer-Aided Approach to Pozzolanic Concrete Mix Design. Adv. Civ. Eng. 2018, 2018, 4398017.

- Cuingnet, R.; Rosso, C.; Chupin, M.; Lehéricy, S.; Dormont, D.; Benali, H.; Samson, Y.; Colliot, O. Spatial regularization of SVM for the detection of diffusion alterations associated with stroke outcome. Med. Image Anal. 2011, 15, 729–737.

- Shalev-Shwartz, S.; Singer, Y.; Srebro, N.; Cotter, A. Pegasos: Primal estimated sub-gradient solver for SVM. Math. Program. 2011, 127, 3–30.

- Hinton, G.; Osindero, S.; Teh, Y.-W. A fast learning algorithm for deep belief nets. Neural Comput. 2006, 18, 1527–1554.

- Hinton, G.; Deng, L.; Yu, D.; Dahl, G.E.; Mohamed, A.; Jaitly, N.; Senior, A.; Vanhoucke, V.; Nguyen, P.; Sainath, T.N.; et al. Deep Neural Networks for Acoustic Modeling in Speech Recognition: The Shared Views of Four Research Groups. IEEE Signal Process. Mag. 2012, 29, 82–97.

- Sainath, T.N.; Mohamed, A.; Kingsbury, B.; Ramabhadran, B. Deep convolutional neural networks for LVCSR. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; pp. 8614–8618.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- He, K.; Sun, J. Convolutional neural networks at constrained time cost. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 5353–5360.

- Segal, R.; Kothari, M.L.; Madnani, S. Radial basis function (RBF) network adaptive power system stabilizer. IEEE Trans. Power Syst. 2000, 15, 722–727.

- Mai-Duy, N.; Tran-Cong, T. Numerical solution of differential equations using multiquadric radial basis function networks. Neural Netw. 2001, 14, 185–199.

- Ding, S.; Xu, L.; Su, C.; Jin, F. An optimizing method of RBF neural network based on genetic algorithm. Neural Comput. Appl. 2012, 21, 333–336.

- Li, Y.; Wang, X.; Sun, S.; Ma, X.; Lu, G. Forecasting short-term subway passenger flow under special events scenarios using multiscale radial basis function networks. Transp. Res. Part C Emerg. Technol. 2017, 77, 306–328.

- Aljarah, C.I.; Faris, H.; Mirjalili, S.; Al-Madi, N. Training radial basis function networks using biogeography-based optimizer. Neural Comput. Appl. 2018, 29, 529–553.

- Karamichailidou, D.; Kaloutsa, V.; Alexandridis, A. Wind turbine power curve modeling using radial basis function neural networks and tabu search. Renew. Energy 2021, 163, 2137–2152.

- Winiczenko, R.; Górnicki, K.; Kaleta, A.; Janaszek-Mańkowska, M. Optimisation of ANN topology for predicting the rehydrated apple cubes colour change using RSM and GA. Neural Comput. Appl. 2018, 30, 1795–1809.

- Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536.

- Ham, F.M.; Kostanic, I. Principles of Neurocomputing for Science & Engineering; McGraw-Hill Higher Education: New York, NY, USA, 2001.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).