Abstract

The spread of COVID-19 has taken on pandemic proportions and has reached over 200 countries in a few months. In this emergency, when precautions are still needed and the developed vaccines are not available to all developing countries in the first phase of vaccine distribution, the virus is spreading rapidly through direct and indirect contact. The World Health Organization (WHO) provides standard recommendations on preventing the spread of COVID-19 and stresses the importance of face masks for protection from the virus. The excessive use of manual disinfection systems has also become a source of infection. This research therefore aims to design and develop a low-cost, rapid, scalable, and effective virus spread control and screening system to minimize the risk of spread of COVID-19. We propose an IoT-based Smart Screening and Disinfection Walkthrough Gate (SSDWG) for the entrances of all public places. The SSDWG is designed to perform rapid screening, including temperature measurement using a contact-free sensor, and to store the record of each suspected individual for further control and monitoring. Our proposed IoT-based screening system also implements real-time deep learning models for face mask detection and classification. This module classifies individuals as wearing a face mask properly, wearing it improperly, or not wearing one, using VGG-16, MobileNetV2, Inception v3, ResNet-50, and CNN with a transfer learning approach. We achieved the highest accuracy of 99.81% with VGG-16 and the second highest accuracy of 99.6% with MobileNetV2 in the mask detection and classification module. We also implemented a classifier for the type of face mask worn, either N-95 or surgical. We also compared the results of our proposed system with state-of-the-art methods, and we suggest that our system could be used to prevent local transmission and reduce the chances of human carriers spreading COVID-19.

1. Introduction

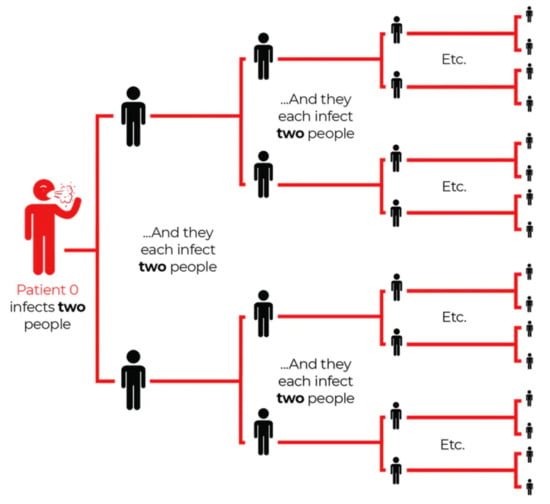

The primary healthcare challenge of COVID-19 [1] is that the virus is highly infectious and spreads rapidly among humans through close contact with people who are suspected or confirmed COVID-19 positive. This novel infection and illness were unknown before their emergence in December 2019. COVID-19 is now a pandemic affecting all countries around the world. People who have COVID-19 can have a wide range of symptoms, from mild to severe illness. Symptoms can appear from two to 14 days after exposure to the virus. Fever, dry cough, sore throat, headache, muscle or body aches, congestion or runny nose, nausea or vomiting, diarrhea, and sleepiness are the most common signs of COVID-19. In severe cases, difficulty in breathing can lead to death. Some infected individuals have minor or no symptoms; these are asymptomatic carriers. Asymptomatic carriers are hazardous, and it is hard to trace these silent carriers. According to the WHO [2], 80% of infections are mild or asymptomatic, 15% are severe, and 5% require oxygen or ventilation [3]. COVID-19 can spread in three possible ways: contact (direct or indirect), droplet spray in short-range transmission, and aerosols in long-range transmission, also known as airborne transmission. In short-range transmission, the virus spreads from human to human through droplets expelled from the nose or mouth when a person infected with COVID-19 coughs or sneezes. These droplets can travel 1.8 m (6 feet) and soon land on the ground. They also stay on objects and surfaces, for example, tables, handles, or handrails. People can be infected by touching such items and then touching their eyes, nose, or mouth. According to [3], the primary source of COVID-19 spread is close person-to-person contact. Asymptomatic people are more vulnerable than people who have symptoms of COVID-19. Figure 1 shows the COVID-19 spread model.
Another route of transmission is touching a surface or object that has the virus on it and then touching the mouth, nose, or eyes, which can cause COVID-19 infection [3]. The same source also reported that the virus can spread from animals to people and vice versa, but the chances of spread from animals to people are low. There is also evidence of the virus spreading from people to pets: pets have been infected with COVID-19 by people in close contact with them [4]. To disinfect against COVID-19, China uses drones to spray disinfectant around public areas and on vehicles moving through infected zones. The Internet of Things (IoT) and Artificial Intelligence (AI) have changed living standards and brought a paradigm shift to healthcare. IoT- and AI-based healthcare systems differ from traditional healthcare systems; they have reduced the cost of healthcare services and made them more reliable. IoT applications are used in different systems and technical fields, such as smart cities, smart security systems, and smart grids. There is a long history of healthcare systems and technology. The model in [5] uses different IoT sensors, such as heartbeat, blood pressure, and ECG sensors, to monitor patients and store the data in a database, where the raw data are further analyzed to assess the patient.

Figure 1.

COVID-19 spreading model.

1.1. Worldwide Situation of COVID-19

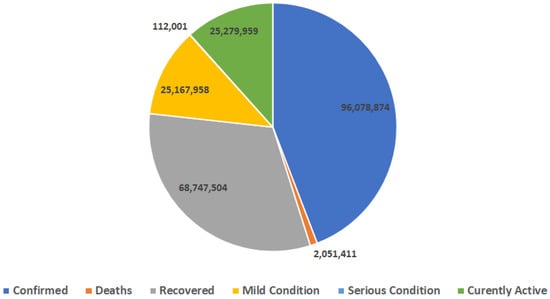

The unanticipated difficulties brought by the COVID-19 pandemic have negatively affected almost all of humanity. According to the WHO [6], there were 96,078,874 confirmed cases, 2,051,411 deaths, and 68,747,504 recovered cases across the world until 8 January. Currently, there are 25,279,959 active cases, of which 25,167,958 are in mild condition and 112,001 are in critical condition; the percentages are shown in Figure 2.

Figure 2.

Global statistics of COVID-19 till 9 November.

1.2. Pakistan’s Situation of COVID-19

In Pakistan, there is no full lockdown [7]; the Government of Pakistan, in the National Coordination Committee (NCC), decided and announced the lifting of the countrywide lockdown and applied a smart lockdown instead.

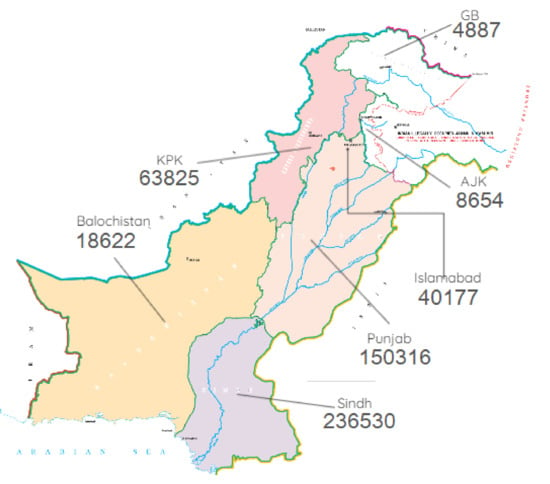

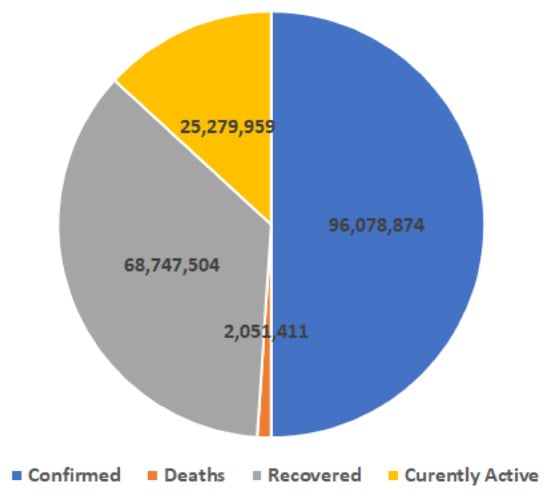

Hence, this is a very challenging decision for the Government, given the daily surge in Pakistan’s coronavirus cases. The virus can easily spread when people touch an object or surface touched by a person infected with COVID-19. In Pakistan, the first case of COVID-19 was reported on 26 February 2020. According to the National Command and Operation Center (NCOC) of Pakistan [6], there are 523,011 confirmed cases of COVID-19. The death toll has risen to 11,055, and 476,471 recovered patients have been reported until 19 January. Figure 3 shows the COVID-19 spread and total cases in Pakistan until 9 January in a map view. Figure 4 shows the numbers of confirmed cases, active cases, deaths, and recoveries as a pie chart.

Figure 3.

Map view of COVID-19 situation in Pakistan.

Figure 4.

COVID-19 statistics in Pakistan.

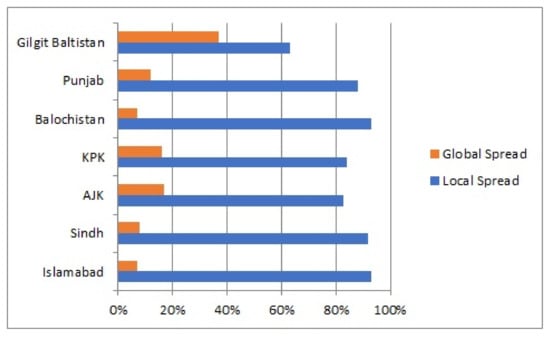

1.3. Local Transmission in Pakistan

In Pakistan, when the virus was first detected, most of the recorded cases were suspected to come from pilgrims returning from Iran and other countries, but the scenario has now changed, and local spread has increased all over Pakistan. Until 24 August 2020, locally infected cases in Pakistan were 90% of the total and foreign travel cases were 10%, according to [7]. Figure 5 shows the detailed breakdown of local and foreign transmission of COVID-19 in Pakistan by region, including Gilgit Baltistan, Punjab, KPK (Khyber Pakhtunkhwa), AJK (Azad Jammu and Kashmir), Sindh, and Islamabad.

Figure 5.

Global vs. Local spread of COVID-19 in Pakistan.

1.4. Motivation and Contribution

The motivation for this research is to contribute to and serve the community in this crucial time of emergency. Other countries have to learn from the countries that have controlled the COVID-19 situation well. The objective of our proposed SSDWG is to disinfect a large number of people to protect them from COVID-19. The SSDWG can reduce the chances of spread by COVID-19 carriers, and it can help to break the chain of local transmission if it is installed at the entrance of every populated place. There is still a need to adopt precautions because COVID-19 vaccines are still under trial, and the vaccines that have been developed and matured are not available to developing countries in the first stage. Accordingly, the only way to control and minimize the spread is to adopt the SOPs given by the World Health Organization (WHO), such as social distancing and wearing a mask. The United States Centers for Disease Control and Prevention (CDC) advised the public to wear a face mask or any cloth covering over the nose and mouth, noting that people can spread COVID-19 even if they do not feel sick. A mask is reported to reduce the chances of spread of COVID-19 by 90% [8]. The contributions and research outcomes of our proposed model are as follows.

- Our proposed SSDWG combines multiple features to disinfect many people against COVID-19 without using a manual disinfection system.

- Our proposed system can help to keep records of vulnerable individuals for further tracing and control.

- The proposed model also includes a module that checks an individual’s temperature using a contact-free temperature sensor, without any human intervention, and stores the temperature in the database.

- According to the WHO, wearing a face mask in public places can protect an individual from COVID-19, as evidenced by experiments. The second module of the SSDWG detects whether individuals are wearing a mask and classifies them into three classes: face with proper mask (FWPM), face with improper mask (FWIPM), and face without a mask (FWOM). In this module, we trained our machine learning models using three datasets and attained significant accuracy.

- The sub-module of our face mask identification system is to identify the types of face masks, either N-95 or surgical masks.

Our proposed Smart Screening and Disinfection Walkthrough Gate (SSDWG) has two main modules; the architecture of our model is shown in the Materials and Methods section. The first module disinfects individuals and measures their temperature in a contact-free manner using the MLX90614 sensor. This module also includes motion and ultrasonic sensors that detect the presence of an individual and activate the disinfection spray system; the whole architecture of the first module is shown in the Materials and Methods section. The second module is designed to detect and classify masked faces into different classes using deep learning algorithms. In the mask detection module, we used different datasets for training and testing, obtained from Kaggle, GitHub, and Bing; the detailed statistics of these datasets are given in the Dataset section. The datasets consist of images of masked faces, including properly masked and improperly masked faces. We also classified the type of mask as surgical or N-95. We pre-processed the datasets to remove noise and then fed them into the models for training and testing. We used transfer learning, reusing the layers of five different pre-trained models, to achieve our objectives; the transfer learning process is described in the Materials and Methods section. We attained significant accuracy in the face mask detection module: the highest accuracy rates were 99.8% and 99.6%, using VGG-16 and MobileNetV2, respectively; the detailed accuracy results can be found in the Experimental Results section. We also compared the results of our mask detection module with previous work by different authors, which can be found in the Discussion section. The rest of the paper is organized as follows. Section 2 reviews related work. The proposed methodology and materials are presented in Section 3. Section 4 describes the experimental results. Section 5 discusses the proposed methodology and results. Section 6 presents the conclusion, Section 7 the limitations, and Section 8 the future work.

2. Related Work

IoT is used in various fields, such as the control and monitoring of mechanical, electronic, smart security, and healthcare systems. In this section, we describe various IoT-based systems in which the authors proposed different techniques for different fields of life to improve living standards.

2.1. IoT Healthcare Systems

In [9], the authors introduced a cane and shoe for visually impaired persons that use an Arduino and an ultrasonic sensor to detect obstacles. Similarly, we used an Arduino and ultrasonic sensors in our proposed SSDWG model to detect the presence of persons. Another study [10] proposed a healthcare monitoring system that uses various IoT sensors to check a patient’s status and stores this basic information in cloud storage. We used a similar approach to store the data of vulnerable individuals who pass through the SSDWG in a database. The availability of healthcare staff is important for patients, but staff cannot be present at all times. Hence, the system proposed in [11] uses an IR-based heartbeat sensor and an Arduino Uno for heartbeat monitoring, and a GSM module to send real-time information directly to medical staff by SMS. Healthcare authorities can use such systems to remotely track the health status of COVID-19 patients. Another study [12] integrates medical sensors woven into a shirt to monitor cardiac frequency, electrodermal activity, and temperature. From these data, the authors determined the patient’s critical condition; the same approach can be used to measure the temperature of COVID-19 suspects. Similarly, the authors of [13] used piezo sensors to detect footsteps on a 9 m square of floor in order to track the movement of persons in large production plants and buildings for safety in emergencies. This kind of system can be used in quarantine centers. Although the development of the IoT in healthcare is significant, the safety and integrity of health data remain a big issue. Hence, the authors of [14] proposed a cross safety structure system for securing the diagnostic information of patients. The authors of [15] proposed an IoT-based health monitoring system that is programmed to monitor a sick person’s health for 24 h. Their system can monitor and control the patient’s health parameters, such as blood pressure, hemoglobin, and irregular cellular development in the body. From this review of the literature on IoT-based healthcare systems, we conclude that such systems could play a vital role in the COVID-19 pandemic.

2.2. Role of Artificial Intelligence (AI) in COVID-19 Pandemic

COVID-19 is a new infectious disease, and scientists are still learning and experimenting to understand how it spreads. According to the US Centers for Disease Control and Prevention (CDC) [3], there are three possible ways in which COVID-19 spreads. The first is direct or indirect contact, the second is droplet spray at short range, and the third is long-range airborne transmission by aerosols [16]. Individuals can contract COVID-19 from other people who have the infection. The disease spreads from person to person through small droplets from the nose or mouth, which are expelled when an infected person coughs, sneezes, or speaks. These droplets are relatively heavy, do not travel far, and quickly settle on surfaces. People can be infected if they inhale these droplets, which is why it is necessary to stay at least 1 m away from others. Individuals can also be infected by touching contaminated objects or surfaces and then touching the mouth, nose, or eyes. According to the WHO [17], airborne transmission is not the same as droplet transmission: large respiratory droplets are greater than five micrometers in size, remain in the air for only a short time, and travel only short distances, usually less than 1 m. Airborne particles, on the other hand, can remain in the air for a long time and travel long distances, greater than 1 m (more than 3.3 feet). Hence, after the results of its experiments, the WHO declared it mandatory to wear a face mask in its proposed SOPs to control the transmission of COVID-19. The authors of [18] proposed a model that uses machine learning algorithms to identify possible COVID-19 cases in a short time through a mobile phone-based network survey. This can also decrease the spread in vulnerable populations.
There is an extreme lack of awareness and of correct information for preventing outbreaks of COVID-19. Therefore, the authors of [19] developed an application that uses NLP and machine learning to find accurate information regarding COVID-19 from authentic sources, such as WHO daily reports, and delivers the content in Hindi using text-to-speech. To prevent the spread of COVID-19 among people, an automatic detection system offers a fast prediction option. Hence, the authors of [20] proposed three different convolutional neural network-based models to detect COVID-19 patients from their chest X-ray radiographs. Similarly, the authors of [21] implemented a back-propagation neural network in Java to solve a difficult image classification problem on the CIFAR-10 image dataset and to evaluate the functionality of the dropout layer and the rectified linear neuron. A study [22] was conducted on the propagation of droplets in a restaurant where people were seated more than 1 m away from each other. The authors observed that the strong airflow from the air conditioner became the source of droplet propagation. Further experiments in [23] indicated that temperature and humidity modulate viruses by affecting their properties, such as the viral surface proteins and lipid membrane. The study also showed a striking correlation between the stability of winter viruses and low RH (20–50%), while the stability of summer or all-year viruses was enhanced at higher RH (80%). In hospitals, COVID-19 patient wards are among the more difficult sources of aerosol spread. A paper published by the CDC [24] found that COVID-19 was distributed in the air and on object surfaces in the ICU and general wards (GW), which poses a high infection risk for medical staff and other close contacts. Further studies are needed to determine whether samples from patients’ rooms can be used for the detection of COVID-19. The study reported COVID-19 positivity rates for objects in the ICU and GW, which are shown in Table 1.

Table 1.

Objects and Surfaces found positive of COVID-19 [24].

The virus can remain viable for up to 3 h in aerosols [25]; in these experiments, the aerosols were produced with an artificial model under controlled laboratory conditions. Hence, individuals need to protect themselves and stay clean and safe from COVID-19. From this review of different studies, we conclude that the virus can spread from different sources at different rates, as shown in Table 1. Hence, we need to strictly follow the SOPs provided by the WHO.

According to the WHO, one of the best ways to protect against COVID-19 is to wear a face mask in public places. It is not easy to monitor people manually in public places. The authors of [26] proposed a hybrid model for face mask detection. They used three datasets: the Simulated Masked Face Dataset (SMFD), the Real-World Masked Face Dataset (RMFD), and Labeled Faces in the Wild (LFW). Their model consists of two parts. The first part is based on ResNet-50 and extracts features from the image, while the second part classifies the face as with or without a mask. Three algorithms were used for classification: Support Vector Machine (SVM), decision tree, and an ensemble algorithm. The SVM achieved the highest accuracy, 99%, on the LFW dataset. In another study [27], the authors used the Simulated Masked Face Dataset (SMFD) to fine-tune the pre-trained deep learning model InceptionV3 and achieved 99.8% accuracy. Similarly, in our face mask detection module, we used a fine-tuning approach for the transfer learning of five pre-trained deep learning models, including Inception V3, for face mask detection and classification.

Face mask detection has seen significant progress in the domains of image processing and computer vision since the rise of the COVID-19 pandemic. The authors of [28] used the Single Shot Multibox Detector as a face detector and MobileNetV2 for face mask detection, achieving 92.64% accuracy. Another study [29] used MobileNetV2 with a global pooling block for face mask detection. The authors used a publicly available simulated masked face dataset and achieved 99% accuracy. Similarly, we used the pre-trained MobileNetV2 model in our face mask detection and classification module. The authors of [30] proposed a model in which ResNet-50 extracts features from the image while YOLO v2 classifies the image as mask or no mask. Their model achieved 81% accuracy using the Adam optimizer. We also used ResNet-50 for face mask detection and classification. In another study [27], the authors used a fine-tuned Inception V3 model for face mask detection. They used the Simulated Masked Face Dataset for training and testing and achieved 99.9% accuracy. We also used Inception V3 for face mask detection and classification, but with a large dataset of real-world images consisting of 149,806 images in total. Similarly, the authors of [31] used a fine-tuned VGG-16 model to identify facial expressions on the KDEF dataset and achieved significant accuracy. We used these pre-trained models in our approach for mask detection and classification. In this module, we used a hybrid approach: the first part uses the Haar cascade classifier [32], which performs feature extraction with the Haar wavelet technique, to extract frontal face images from the noisy dataset containing multiple kinds of images. After selecting frontal faces, we performed mask detection and classification using five popular pre-trained classifiers. We have also compared our model’s features and results with models previously proposed by different authors; the comparison can be found in the Experimental Results section.

3. Materials and Methods

In developing countries, where people are not strictly following the Government’s standard operating procedures (SOPs), the number of suspected cases is rising rapidly. To prevent the spread of COVID-19, we propose an IoT-based rapid screening model that can be placed in public locations to disinfect people and to classify face mask wearing into the three categories mentioned before. Our proposed model can further identify and classify the type of face mask, either N-95 or surgical.

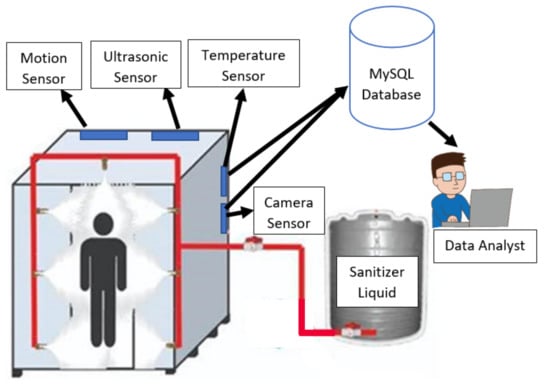

3.1. IoT Based Smart Screening and Disinfection Walkthrough Gate (SSDWG)

Our proposed system is an IoT-based Smart Screening and Disinfection Walkthrough Gate (SSDWG), which consists of different modules. The SSDWG is a multi-sensor, multi-purpose smart device designed to reduce the chances of spread by COVID-19 carriers. In countries such as Pakistan and other developing countries, where governments did not apply a lockdown and economic activities have not stopped entirely, people are allowed to go to the market and can travel according to certain standard operating procedures (SOPs). In this scenario, people may carry the virus on their clothes, shoes, hands, or other surfaces. Moreover, people do not bother to follow the Government’s SOPs and do not maintain social distancing. The framework of our proposed SSDWG is shown in detail in Figure 6. When a person walks through the SSDWG, the temperature checking module first measures the person’s temperature in a contact-free manner. If the temperature is 99 Fahrenheit or higher, a picture of that person is captured and stored in a database together with the temperature and the health status, marked as suspected. Simultaneously, the mask detection module detects whether the person is wearing a mask. Meanwhile, the person controlling the entrance can divert the suspected person for proper COVID-19 testing. The SSDWG can be placed at the entrance of all kinds of public places, hospitals, or any crowded area where COVID-19 may be present.

Figure 6.

Smart Screening and Disinfection Walkthrough Gate (SSDWG).

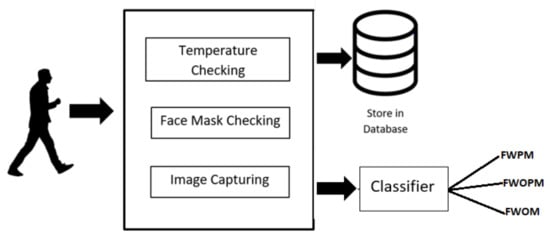

3.2. Body Temperature Detection

Our proposed IoT-based SSDWG has multiple features and is divided into two modules. The most prominent part of the SSDWG measures human body temperature in real time in a contact-free manner and stores the body temperature, along with a picture of the suspected person, in our system, as shown in Figure 7. We can track the most vulnerable persons through the recorded temperature data because fever is one of the most common symptoms of COVID-19 [6].

Figure 7.

Temperature measuring and mask detection module.

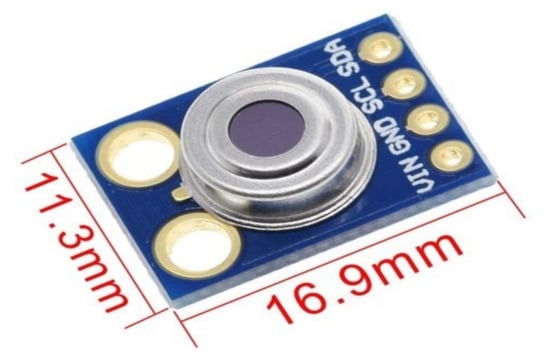

The MLX90614 temperature sensor is used in our proposed SSDWG [33]. It is a non-contact wireless infrared thermometer used for human body temperature measurement, and its micro-controller is capable of wireless communication. We propose a contact-free temperature checking mechanism because it does not violate the social distancing SOPs. An image processing module captures a photo of any suspected person whose body temperature is not normal. In our model, the temperature sensor and image capturing module are placed on the front side of the SSDWG. A person who wants to pass through the SSDWG must stand in front of the temperature sensor for 3 s so that the sensor can measure the body temperature; the contact-free sensor is shown in Figure 8. If the detected body temperature is 100.4 Fahrenheit (38.0 Celsius) or higher, the temperature sensor signals the alarm, and the body temperature and the photo captured from CCTV are stored in the database. The authors of [34] proposed a complete system to automatically detect routine examinations in a teleophthalmology network. They used pathological pattern mining with lesion detection methods to obtain information from the image.

Figure 8.

MLX90614 contact-free temperature measuring sensor.

3.3. Mask Detection Module

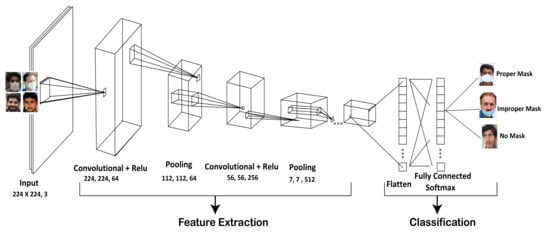

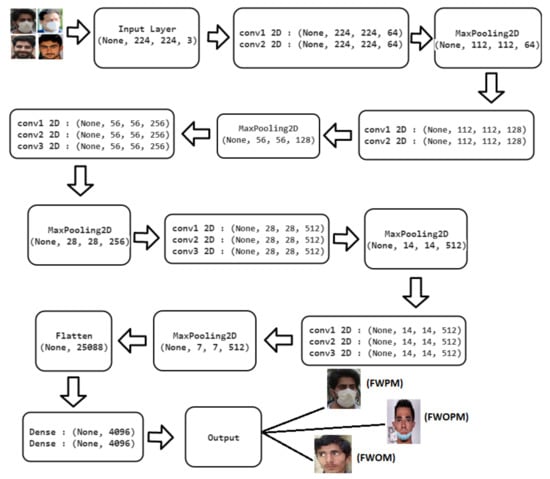

The second module of the SSDWG detects whether people passing through it are wearing a mask. We used five pre-trained deep learning models, VGG-16, MobileNetV2, Inception V3, ResNet-50, and a Convolutional Neural Network (CNN), to classify face mask wearing into three classes: face with proper mask (FWPM), face with improper mask (FWIPM), and face without a mask (FWOM). Our model further identifies and classifies the type of face mask into two classes, N-95 and surgical. We fine-tuned these five pre-trained models with transfer learning, which is much faster and easier than training a model from scratch with randomly initialized weights. In a similar way, the authors of [13] used a deep neural network to analyze the vibration signals caused by persons walking on the floor in order to localize persons in large buildings. We set the existing layers of these models as non-trainable by freezing the weights and other trainable parameters in each layer so that they are not trained or updated when we feed in our dataset. We then added an output layer to train on our dataset; this output layer is the only trainable layer in the new model. We used the Adam optimizer with a learning rate of 0.0001, cross-entropy as the loss, and accuracy as the metric. Figure 9 shows the architecture of the CNN model.

Figure 9.

Architecture of Convolutional Neural Network (CNN) model.

The architecture of the CNN consists of an input layer, an output layer, and multiple hidden layers. The hidden layers typically consist of convolutional layers, pooling layers, fully connected layers, and normalization layers (ReLU). The network’s input is an image of a face with a mask (proper or improper) or without a mask, with an input size of 224 by 224 and three RGB channels. The first layer is a convolutional layer with the ReLU function, as shown in Equation (1), with a kernel size of 224 by 224 and 64 output channels.

$$f(x) = \max(0, x) \qquad (1)$$

The ReLU function returns 0 for any negative input and returns the input value itself for any positive value of x. The next layer of the architecture is max pooling, which shrinks the image stack. To pool an image, the window size is defined as (112 × 112, 64 output channels). The window is then moved across the image in strides, and the maximum value is recorded for each window. Together with the max-pooling layer, we have a normalization layer known as the Rectified Linear Unit (ReLU), shown in Equation (1), which changes all negative values in the filtered image to 0. This step is repeated on all of the filtered images, and the ReLU layer increases the non-linear properties of the model. The third layer, like the first, is a convolutional layer that processes images with a kernel size of (56 × 56) and 256 output channels. There is also a pooling layer with a kernel size of 7 × 7 before the fully connected layer.
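The ReLU and max-pooling steps described above can be illustrated on a small feature map. This is a pure-Python sketch for intuition only; the 2 × 2 window with stride 2 is an assumption (it halves each spatial dimension, which matches the 224 to 112 reduction in the text), and a real model would use a deep learning framework instead.

```python
from typing import List

Matrix = List[List[float]]


def relu(x: float) -> float:
    """Return 0 for negative inputs and the input itself otherwise."""
    return max(0.0, x)


def relu_map(feature_map: Matrix) -> Matrix:
    """Apply ReLU to every value of a 2D feature map."""
    return [[relu(v) for v in row] for row in feature_map]


def max_pool(feature_map: Matrix, window: int = 2, stride: int = 2) -> Matrix:
    """Slide a window over the map and keep the maximum of each window."""
    rows, cols = len(feature_map), len(feature_map[0])
    pooled = []
    for r in range(0, rows - window + 1, stride):
        pooled_row = []
        for c in range(0, cols - window + 1, stride):
            pooled_row.append(
                max(
                    feature_map[r + dr][c + dc]
                    for dr in range(window)
                    for dc in range(window)
                )
            )
        pooled.append(pooled_row)
    return pooled


# A hypothetical 4 x 4 filtered image with some negative responses.
example = [
    [-1, 2, 0, -3],
    [4, -5, 6, 1],
    [0, -2, -7, -1],
    [3, 1, -4, -8],
]
activated = relu_map(example)   # negatives become 0
pooled = max_pool(activated)    # 4 x 4 shrinks to 2 x 2
```

Here the negative responses are zeroed first and the 4 × 4 map shrinks to 2 × 2, which is the same shrinking effect the pooling layer has on the image stack.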

A flatten layer is followed by a fully connected layer with the softmax function shown in Equation (2).

$$p_i = \frac{e^{x_i}}{\sum_{j} e^{x_j}} \qquad (2)$$

where $x_i$ is the total input received by unit i and $p_i$ is the prediction probability of the image belonging to class i.
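As a small worked example of the softmax step, the sketch below turns the total inputs of the three output units into class probabilities. The class order and the helper names are assumptions made for illustration; subtracting the maximum input before exponentiating is a standard numerical-stability trick that does not change the result.

```python
import math
from typing import List, Sequence

# Assumed order of the three output units.
CLASSES = ("FWPM", "FWIPM", "FWOM")


def softmax(inputs: Sequence[float]) -> List[float]:
    """Map the total inputs of the output units to probabilities that sum to 1."""
    shift = max(inputs)  # stabilizes exp() for large inputs
    exps = [math.exp(x - shift) for x in inputs]
    total = sum(exps)
    return [e / total for e in exps]


def predict_label(inputs: Sequence[float]) -> str:
    """Return the class whose output unit has the highest probability."""
    probs = softmax(inputs)
    best = max(range(len(probs)), key=probs.__getitem__)
    return CLASSES[best]
```

For example, `predict_label([2.0, 1.0, 0.1])` selects the first class because its unit receives the largest input.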

In this layer, every value gets a vote to predict the class of image. The fully connected layers are often stacked together, with each intermediate layer voting on phantom hidden categories. In effect, each additional layer allows for the network to learn even more sophisticated combinations of features towards better decision making [35]. Finally, we have an output, which is a label of proper face mask, improper face mask, and without a face mask.

3.3.1. Dataset

There is no proper dataset available for mask detection and classification. The available datasets mostly contain noisy data or are created artificially, which makes them unsuitable for building a real-time mask detection system. We did not use simulated face mask image datasets, which do not represent real-world conditions. Instead, we used a large dataset of 149,806 images in total, gathered from different sources. For the face mask detection and classification module, we used three datasets: MAFA and MaskedFace-Net, which are available on GitHub, and a third dataset collected from Bing. The MaskedFace-Net dataset [36] contains 133,783 images in total, of which 67,049 show individuals who wear the face mask properly and 66,734 show individuals who do not wear it properly. From MaskedFace-Net, we used only 34,456 frontal face images of properly masked faces and 33,106 frontal face images of improperly masked faces. We also used another large dataset from GitHub (FMLD) [37] and the MAFA dataset [38], which contains 30,811 images in total with different orientations and occlusion degrees. We used the Haar cascade classifier [32], which performs feature extraction using the Haar wavelet technique with a 24 × 24 window size, to select only 12,000 frontal face images from the MAFA dataset, and split them into 9600 images for training and 2400 images for testing. The Bing dataset contains 4039 images, which we split into 3232 images for training and 807 for testing. We gathered the face mask images from Bing using the bing-images library [39] for Python, which can fetch images directly by URL. Table 2 gives the overall dataset description. For the categorical classification shown in Figure 9, we combined the GitHub dataset [37] with the Bing dataset.

Table 2.

Datasets Description.

Figure 10 shows the sample of the dataset, which contains the images of a face with proper mask (FWPM), face with improper mask (FWIPM), and face without a mask (FWOM).

Figure 10.

Sample of dataset Images.

3.3.2. Fine-Tuning with Transfer Learning

Deep neural networks (DNNs) have driven significant progress in image classification because they outperform other algorithms. Developing and training a DNN from scratch is a lengthy task that needs huge computational power and resources. Transfer learning reduces this cost by transferring the knowledge of a trained neural network, in the form of its parametric weights, to a new model. We used five pre-trained models in our proposed framework, and the Inception V3 and VGG-16 achieved a higher accuracy than other models. The pre-trained models (VGG-16, Inception V3) can classify 1000 different classes, but we need only three: FWPM, FWIPM, and FWOM. We therefore replaced the last fully connected layer of the models with a new one that has three output features instead of 1000. We accessed the VGG-16 and Inception V3 classifiers through the ’model.classifier’ attribute, a six-layer array. We also disabled training for the convolutional layers, so that only the fully connected layer was trained. Figure 11 shows the fine-tuned model architecture.

Figure 11.

Fine-tuned model architecture.

We also defined our loss function (cross-entropy) and the optimizer. The learning rate started at 0.001, and a StepLR scheduler multiplied it by 0.1 every seven epochs. For each epoch, we iterated over all of the training batches, computed the loss, and adjusted the network weights with the loss.backward() and optimizer.step() methods. Subsequently, we evaluated the performance on the test dataset. At the end of each epoch, we reported the network progress (loss and accuracy). The accuracy is the fraction of predictions that were correct.
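As a rough illustration of the quantities in this training loop, the sketch below computes the step-decay learning rate, the cross-entropy loss for a single sample, and the accuracy in plain Python. It is not the authors' training code: the function names are hypothetical, and the formulas are the standard textbook definitions, assuming the learning rate is multiplied by 0.1 once per completed block of seven epochs.

```python
import math


def step_lr(epoch, base_lr=0.001, step_size=7, gamma=0.1):
    """Learning rate at a given epoch under step decay."""
    return base_lr * gamma ** (epoch // step_size)


def cross_entropy(logits, target):
    """Cross-entropy loss for one sample, given raw class scores."""
    peak = max(logits)  # subtract the maximum for numerical stability
    log_sum_exp = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_sum_exp - logits[target]


def accuracy(predictions, labels):
    """Fraction of predictions that match the labels."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


# Learning rate over the first 15 epochs: constant within each block of seven.
for epoch in (0, 6, 7, 13, 14):
    print(epoch, step_lr(epoch))

# Three classes (FWPM, FWIPM, FWOM); the scores below are made up.
print(cross_entropy([2.0, 0.5, -1.0], target=0))
print(accuracy([0, 1, 2, 2], [0, 1, 1, 2]))  # 0.75
```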

3.3.3. The VGG-16

The VGG-16 [41] is a pre-trained model that we used after fine-tuning it with Keras. The fine-tuned model is used only to classify images according to whether the individual wears a mask. We replicated the entire VGG-16 model (excluding the output layer) in a new Sequential model. We set the replicated layers as non-trainable by freezing their weights and other trainable parameters, so that they are not updated when we feed in our dataset. Further, we added our new output layer, consisting of only three nodes corresponding to FWPM, FWIPM, and FWOM. This output layer is the only trainable layer in our new model.

3.3.4. ResNet-50

The ResNet-50 [42] is also a type of convolutional neural network that requires input images of size 224-by-224-by-3, but the images in our dataset have different sizes. We used an augmented image dataset to automatically resize the training images. Data augmentation helps to prevent the network from over-fitting and memorizing the exact details of the training images. Further, we added our new output layer, consisting of only three nodes that correspond to FWPM, FWIPM, and FWOM. This output layer is the only trainable layer in our new model.

3.3.5. Inception V3

We have also used the Inception v3 model [43] for our experiments. We flattened its output to one dimension and added a fully connected layer with 1024 hidden units and a ReLU activation function, dropout with a rate of 0.4, and a sigmoid layer for classification. We also used data augmentation on our training images to prevent over-fitting. This data augmentation works directly in memory.

3.3.6. MobileNetV2

For our experiments, we have also used the pre-trained MobileNetV2 [44] model, which is also a type of convolutional neural network. We followed the same approach that was used for the other models, replicating the entire process. We added a new output layer that contains only three nodes corresponding to FWPM, FWIPM, and FWOM.

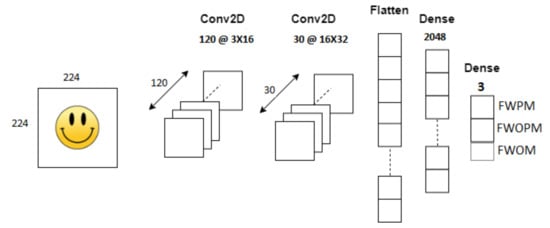

3.3.7. Convolutional Neural Network (CNN)

We also used a similar data augmentation technique to avoid model over-fitting in CNN.

The CNN architecture, shown in Figure 12, comprises two convolutional layers: the first has 200 kernels of size 3 × 3 and the second has 100 kernels of size 3 × 3. A flattening layer then transforms the two-dimensional feature maps into a vector that is fed to the fully connected classifier, linking the convolutional layers to the fully connected layers. Next comes a dense layer of 50 neurons, in which each neuron is connected to all outputs of the previous layer and passes one output to the next layer. Finally, a dense layer of two neurons provides the classification output: a person wearing a mask or a person without a mask.

Figure 12.

CNN work flow to detect faces with mask and without mask.

SSDWG consists of a frame shield shaped like a walk-through gate and a tank containing sanitizer liquid. The entrance is surrounded by a pipeline with shower nozzles that is attached to the sanitizer liquid tank. Ultrasonic sensors and motion sensors are connected to the pipe inside the gate. Finally, a motor is attached between the pipeline and the tank to bring the sanitizer liquid from the tank to the pipeline, from where it showers through the nozzles. Whenever a person passes through this sanitizer gate, the motion sensor and ultrasonic sensor detect the person and send a signal to the Arduino, which activates the DC motor. The motor pumps the sanitizer liquid from the tank to the nozzles for 10 s, after which the Arduino stops the motor. This task repeats whenever anyone passes through the gate. Whenever a person passes through the SSDWG for disinfection, the body temperature is also checked. If it is higher than the normal temperature, an alarm alerts the person who controls the entrance, who diverts the suspected person to the infirmary for further COVID-19 testing and instructs him to wear a mask. Our system stores the status of the suspected person in the database and, in this way, we can easily monitor and track individuals suspected of COVID-19. Moreover, we recommend that suspected persons who fail this early screening stage at the entrance go for COVID-19 testing.
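The decision logic of the gate can be summarized in a few lines. The sketch below is a simplified Python model of that logic, not the Arduino firmware: the names are hypothetical, the 100 °F threshold is taken from the experimental section, and both the ultrasonic trigger distance and the rule that the two sensors must agree are assumptions made for illustration.

```python
from dataclasses import dataclass

SPRAY_SECONDS = 10          # pump run time stated in the text
FEVER_THRESHOLD_F = 100.0   # threshold from the experimental section
DETECT_RANGE_CM = 100.0     # hypothetical ultrasonic trigger distance


@dataclass
class GateDecision:
    spray_seconds: int  # how long the pump should run
    alarm: bool         # whether to alert the entrance controller
    status: str         # value stored in the database


def evaluate_passage(motion, distance_cm, temperature_f):
    """Decide what the gate does for one reading of its sensors."""
    # Assumption: a person counts as present only if both sensors agree.
    person_present = motion and distance_cm <= DETECT_RANGE_CM
    if not person_present:
        return GateDecision(0, False, "idle")
    suspected = temperature_f >= FEVER_THRESHOLD_F
    status = "suspected" if suspected else "normal"
    return GateDecision(SPRAY_SECONDS, suspected, status)


print(evaluate_passage(True, 40.0, 98.6))
print(evaluate_passage(True, 40.0, 101.3))
```

Keeping the decision in a pure function, separate from sensor and motor I/O, makes the thresholds easy to test without hardware.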

4. Experimental Results

This study deals with controlling the spread of COVID-19 according to the WHO’s instructions for public safety and awareness. As discussed in the methodology section, our proposed model consists of two modules. The first module deals with contact-free temperature detection, record keeping for individuals with abnormal temperature, and disinfection using sanitizing liquid. In the second module, we implemented a mask detection and classification system that is trained to detect and classify individuals’ face masks in three classes, face with proper mask (FWPM), face with improper mask (FWIPM), and face without mask (FWOM), while they are passing through the SSDWG. The system is trained using different machine learning models, and all of them achieved accuracies of 99.0% or higher on the test data in our experiments.

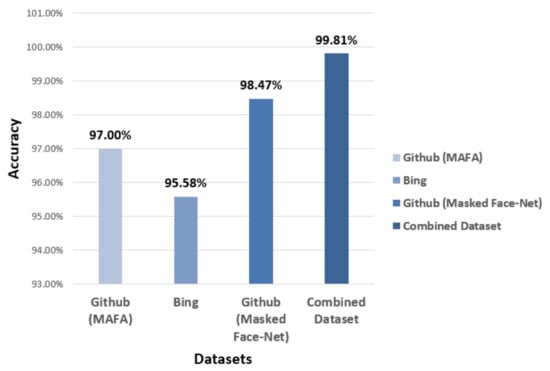

Our proposed SSDWG is designed for all kinds of public places, where we want to ensure that individuals pass through the SSDWG. When a person enters, the sensors attached to the gate are activated, and a contact-free temperature sensor measures the person’s temperature. The CCTV module captures an image from the surveillance video of any person whose temperature is 100 °F or higher, and the system stores the data in the database with the status of suspected. As mentioned before, the face mask detection module detects and classifies individuals’ face masks in three classes: FWPM, FWIPM, and FWOM. The disinfection module showers sanitizing liquid on the person for 10 s while passing through the SSDWG. The person controlling the entrance can divert suspected persons for COVID-19 testing. For the experiments, we used the three datasets described in Section 3.3.1. Our proposed model achieved a high accuracy on the GitHub (MaskedFace-Net) dataset. We fine-tuned five pre-trained models and re-trained them before testing on the test dataset. Our proposed model achieved a high accuracy on the combined dataset using the VGG-16. Figure 13 shows the comparative accuracy of VGG-16 on all datasets (MAFA and MaskedFace-Net, which are available on GitHub, and the third dataset from Bing).

Figure 13.

Comparative accuracy of VGG-16 on different datasets.

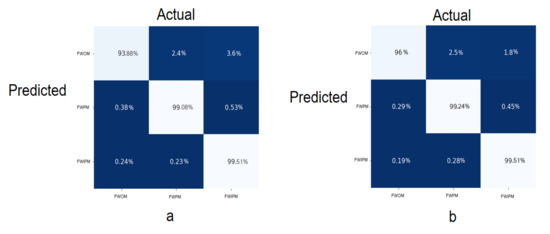

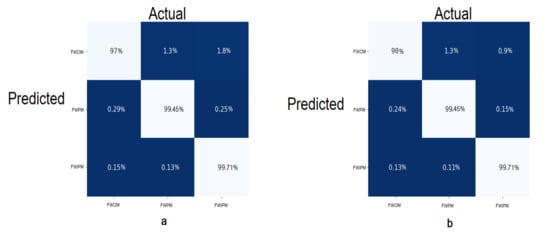

By using the VGG-16, MobileNetV2, Inception V3, ResNet-50, and CNN models, we achieved 99.8%, 99.6%, 99.4%, 99.2%, and 99.0% accuracies, respectively, in the face mask detection and classification module of our proposed SSDWG system. We plotted the confusion matrices to depict the performance of our five models on the test data. The VGG-16 and MobileNetV2 achieved the highest accuracy. Figure 14, Figure 15 and Figure 16 show the confusion matrices of these five fine-tuned models.

Figure 14.

The confusion matrices of two models: (a,b) show the confusion matrices for the identification and classification of face masks in three classes, FWOM (Face Without Mask), FWPM (Face with Proper Mask), and FWIPM (Face With Improper Mask), by using the CNN and ResNet-50 models, respectively.

Figure 15.

The confusion matrices of two models: (a,b) show the confusion matrices for the identification and classification of face masks in three classes, FWOM (Face Without Mask), FWPM (Face with Proper Mask), and FWIPM (Face With Improper Mask), by using the Inception V3 and MobileNetV2 models, respectively.

Figure 16.

The confusion matrix for the identification and classification of face mask in three classes FWOM (Face Without Mask), FWPM (Face with Proper Mask), and FWIPM (Face With Improper Mask) by using VGG-16 model.

We also compared the results of these five models in terms of their accuracy and kappa values, as shown in Table 3.

Table 3.

Comparative results of models.
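Accuracy and Cohen's kappa can both be derived from a confusion matrix. The sketch below shows the computation for a three-class matrix. The counts are made up for illustration and are not results from this study, and the function name is hypothetical.

```python
def accuracy_and_kappa(cm):
    """Return (accuracy, Cohen's kappa) for a square confusion matrix.

    cm[i][j] is the number of samples of true class i predicted as class j.
    """
    total = sum(sum(row) for row in cm)
    observed = sum(cm[i][i] for i in range(len(cm))) / total
    row_totals = [sum(row) for row in cm]
    col_totals = [sum(col) for col in zip(*cm)]
    # Agreement expected by chance, from the marginal totals.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    kappa = (observed - expected) / (1 - expected)
    return observed, kappa


# Illustrative counts only (rows and columns: FWOM, FWPM, FWIPM).
example_cm = [
    [48, 1, 1],
    [2, 47, 1],
    [0, 2, 48],
]
acc, kappa = accuracy_and_kappa(example_cm)
print(f"{acc:.4f} {kappa:.2f}")  # 0.9533 0.93
```

Kappa corrects the raw accuracy for the agreement that would occur by chance, which is why it is reported alongside accuracy when comparing classifiers.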

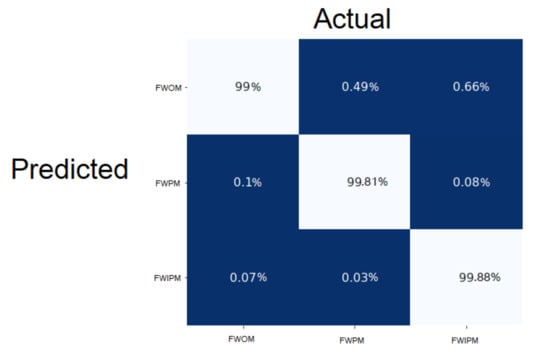

To evaluate each model individually, we computed the accuracy, precision, recall, and f1-score with respect to each face mask class (FWOM, FWPM, and FWIPM). The results for the VGG-16, MobileNetV2, Inception V3, ResNet-50, and CNN models are shown in Table 4, Table 5, Table 6, Table 7 and Table 8, respectively.

Table 4.

Statistics using VGG-16 by classes.

Table 5.

Statistics using MobileNetV2 by classes.

Table 6.

Statistics using Inception V3 by classes.

Table 7.

Statistics using ResNet-50 by classes.

Table 8.

Statistics using CNN by classes.
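Per-class statistics of the kind listed in Tables 4 to 8 can be derived from a confusion matrix by treating each class in turn as the positive class. The sketch below illustrates this under stated assumptions: the counts are made up, the function name is hypothetical, and class-wise accuracy is taken to be the one-vs-rest accuracy, which the paper does not define explicitly.

```python
def per_class_metrics(cm, labels):
    """One-vs-rest accuracy, precision, recall and f1-score per class.

    cm[i][j] is the number of samples of true class i predicted as class j.
    """
    total = sum(sum(row) for row in cm)
    metrics = {}
    for k, label in enumerate(labels):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                  # class k predicted as another class
        fp = sum(row[k] for row in cm) - tp   # other classes predicted as class k
        tn = total - tp - fn - fp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        metrics[label] = {
            "accuracy": (tp + tn) / total,
            "precision": precision,
            "recall": recall,
            "f1": f1,
        }
    return metrics


# Illustrative counts only, not results from the study.
example_cm = [
    [48, 1, 1],
    [2, 47, 1],
    [0, 2, 48],
]
stats = per_class_metrics(example_cm, ["FWOM", "FWPM", "FWIPM"])
for name, values in stats.items():
    print(name, {key: round(value, 4) for key, value in values.items()})
```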

Further, we also plotted the accuracy, precision, recall, and f1-score of the five models with respect to each face mask class (FWOM, FWPM, and FWIPM), as shown in Figure 17.

Figure 17.

Statistics of five models by classes. Panel (a) shows the performance metrics for the identification and classification of face masks in three classes, FWOM (Face Without Mask), FWPM (Face with Proper Mask), and FWIPM (Face With Improper Mask), by using the VGG-16 model. Similarly, panels (b–e) show the performance metrics for the same three classes by using the MobileNetV2, Inception V3, ResNet-50, and CNN models, respectively.

In our proposed system, the VGG-16 model achieved the highest accuracy in the face mask detection and classification module. Figure 18 shows the face mask detection and classification in real time.

Figure 18.

VGG-16 face mask detection and classification results in real time.

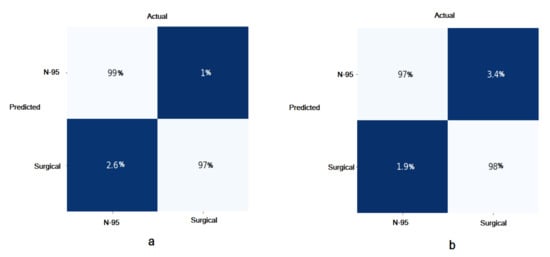

Identification and Classification for the Types of Face Masks

As mentioned before, the second part of our face mask detection and classification module detects and classifies the type of face mask, either an N-95 mask or a surgical mask. For this task, we combined the datasets described in Section 3.3.1, excluding the face without mask images. The new combined dataset consists of 28,717 images in total. We used the two pre-trained models (VGG-16 and MobileNetV2) that achieved the highest accuracy in the face mask detection and classification module, as described before.

For this task, we split our dataset into 22,973 images for training and 5743 images for testing and fed them to the two fine-tuned models, VGG-16 and MobileNetV2. The process of fine-tuning both models for transfer learning is the same as described before. We computed the accuracy and kappa values of both models for face mask type identification and classification. Table 9 reports the comparative accuracy and kappa values of both models, and we conclude that the VGG-16 achieved higher accuracy than MobileNetV2. We also report the confusion matrices of both models in Figure 19: panel (a) shows the confusion matrix of VGG-16 on the test data, and panel (b) shows that of MobileNetV2.

Table 9.

Comparative results of the models for types of face masks detection and classification.

Figure 19.

Confusion matrix of two models for face mask type identification and classification.

To evaluate each model individually, we computed the class-wise accuracy, precision, recall, and f1-score for face mask type identification and classification. The results for the VGG-16 and MobileNetV2 models are shown in Table 10 and Table 11, respectively.

Table 10.

Statistics using VGG-16 for types of face masks detection and classification.

Table 11.

Statistics using MobileNetV2 for types of face masks detection and classification.

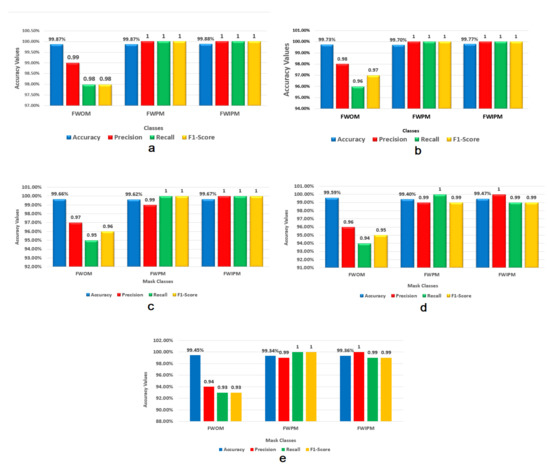

Further, we also plotted the accuracy, precision, recall, and f1-score of the two models with respect to each face mask class (Surgical and N-95), as shown in Figure 20.

Figure 20.

Statistics of two models in mask type detection and classification. Panel (a) shows the performance metrics for face mask type identification and classification (N-95 or Surgical) using VGG-16, and panel (b) shows the same metrics using MobileNetV2.

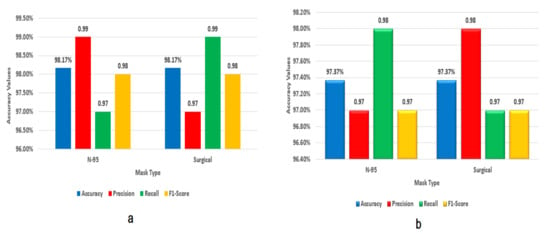

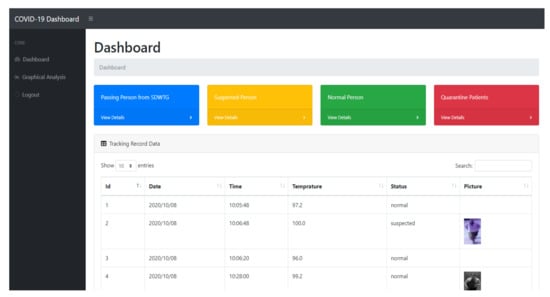

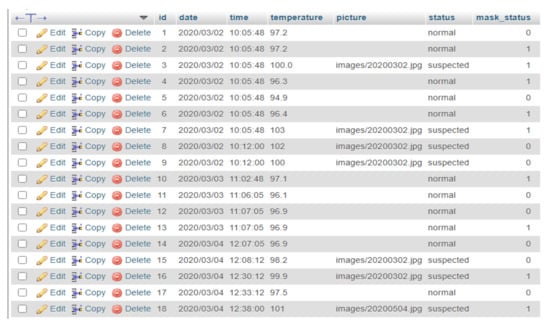

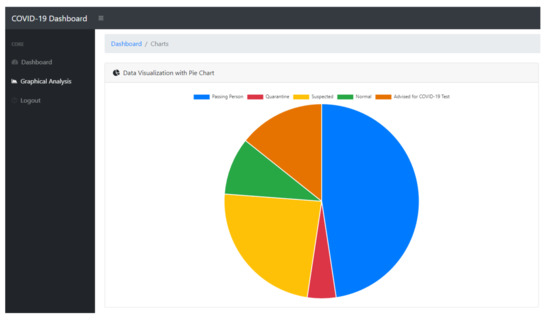

We have also designed and developed a dashboard to handle the data that the SSDWG stores in the database. The dashboard presents the data in a meaningful way, so that a data analyst can perform any needed operation. The following figures demonstrate our IoT-based web portal. After logging in to our COVID-19 spread control portal, an analyst can perform different types of analysis. The login page is shown in Figure 21.

Figure 21.

Login form of web portal for COVID-19 data analysis.

All of the data, including time, temperature, status, photo of the suspected person, and whether a mask is worn, are stored in our database, as shown in Figure 22 and Figure 23. An analyst can analyze all of the information from the portal in different charts or graphs, as shown in Figure 24, and can then confirm the details of each suspected COVID-19 case with the relevant department. An analyst can also generate daily or weekly reports from our web portal and send the information shown in Figure 24 to the higher authorities of the concerned department. Many walk-through gates for sanitization have been developed and installed in various places and cities. Still, those gates are not automated, and people have to press a shower button for sanitization. The surface of the button can itself carry and spread COVID-19, and these manual sanitization gates serve disinfection purposes only. In contrast, our proposed model is an autonomous system that works without any human intervention. It measures the temperature of every passing person, since fever is one of the main symptoms of COVID-19. It also classifies individuals’ face masks in three classes: FWPM, FWIPM, and FWOM. It also identifies and classifies the type of face mask in two classes (N-95 and surgical mask), as explained in the above section. It also stores all the necessary information about each suspected individual and sounds an alarm when it detects a person whose temperature is abnormal and who is not wearing a mask. In this way, we can easily trace the suspects and rapidly reduce the spread of COVID-19.

Figure 22.

Smart Screening and Disinfection Walkthrough Gate (SSDWG) stored information about suspects.

Figure 23.

Database view of stored data.

Figure 24.

Graph generated by Internet of Things (IoT) web portal.
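The record keeping behind the portal can be sketched with a small relational table. The example below uses SQLite from the Python standard library purely for illustration: the paper does not name the database engine, and the table name, column names, and helper functions are hypothetical. The fields mirror those listed above (time, temperature, status, photo, and mask class).

```python
import sqlite3
from datetime import datetime, timezone


def open_store(path=":memory:"):
    """Open the database and create the (hypothetical) screenings table."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS screenings (
               id INTEGER PRIMARY KEY AUTOINCREMENT,
               recorded_at TEXT NOT NULL,
               temperature_f REAL NOT NULL,
               status TEXT NOT NULL,
               photo_path TEXT,
               mask_class TEXT NOT NULL
           )"""
    )
    return conn


def record_screening(conn, temperature_f, mask_class, photo_path=None,
                     threshold_f=100.0):
    """Store one gate passage and return the status that was assigned."""
    status = "suspected" if temperature_f >= threshold_f else "normal"
    conn.execute(
        "INSERT INTO screenings "
        "(recorded_at, temperature_f, status, photo_path, mask_class) "
        "VALUES (?, ?, ?, ?, ?)",
        (datetime.now(timezone.utc).isoformat(), temperature_f, status,
         photo_path, mask_class),
    )
    conn.commit()
    return status


def count_by_status(conn):
    """Aggregate for a dashboard chart: number of passages per status."""
    rows = conn.execute(
        "SELECT status, COUNT(*) FROM screenings GROUP BY status"
    )
    return dict(rows)


conn = open_store()
record_screening(conn, 101.2, "FWOM", "photos/0001.jpg")
record_screening(conn, 98.4, "FWPM")
print(count_by_status(conn))
```

A grouped count like `count_by_status` is the kind of query a daily or weekly report could be built on.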

5. Discussion

Body temperature measurement is an adequate means of preventing an outbreak of COVID-19. Fever, dry cough, sore throat, headache, muscle or body aches, congestion or runny nose, nausea or vomiting, and diarrhea are the most common symptoms of COVID-19. Measuring individuals’ temperature manually consumes a considerable amount of time and of human and administrative resources. The authors of [45] introduced a prototype system based on a contact-free temperature sensor. Their system combines contact-free temperature measurement with attendance taking at the entrances of school campuses in Hong Kong. Their experiments showed that the system could measure body temperature with adequate accuracy for screening purposes.

A flu-like pandemic requires rapid temperature measurement. The authors of [46] designed a low-cost, scalable device for measuring temperature to control the spread of a flu pandemic. Their temperature measuring module is built around a medical-grade version of the Melexis MLX90614 series of smart infrared temperature sensors. When the temperature is high, an alarm alerts the authorized person at the entrance to find the suspected student. We also used the Melexis MLX90614 contact-free temperature sensor in our proposed model. We also compared the features of our model with previous work by different authors; Table 12 shows this comparison.

Table 12.

Result comparison with prior study and proposed approach.

We have also compared the results of our mask detection and classification module with previous work by different researchers. The authors of [30] used ResNet-50 for feature extraction from images and YOLO v2 for face mask detection and classification. They used two datasets: the Medical Masks Dataset (MMD), published by Mikolaj Witkowski, which consists of 682 images, and the public Face Mask Dataset (FMD), which consists of 853 images. The authors combined these two datasets into a merged dataset of 1415 images after removing bad-quality and redundant images, and they achieved 81% accuracy for mask detection. We also used a pre-trained ResNet-50 model for face mask detection and achieved 99.2% accuracy. We also used the Inception V3 model and achieved 99.46% accuracy. Similarly, the authors of [27] fine-tuned the Inception v3 model through transfer learning to classify images as mask or no mask and achieved 99.2% accuracy. They used the Simulated Masked Face dataset, which consists of 1570 images: 785 masked and 785 unmasked facial images. Simulated face mask images are images in which face masks have been added to individuals’ faces by photo editing techniques. Further, we used the fine-tuned VGG-16 model, with which we achieved 99.81% accuracy. A similar model was used by the authors of [50] for face mask detection and classification. Their dataset contains 25,000 images at a resolution of 224 × 224 pixels; 80% of the data is used for training and 20% for testing, and they achieved an accuracy of 96%.

6. Conclusions

COVID-19 has become a pandemic and is now spreading rapidly through direct and indirect contact among individuals. Manual systems for measuring temperature and disinfecting are being used in homes and public places, but these systems may themselves become a source of COVID-19 infection. This virus will stay in our lives, and we have to live with it, but we need to adopt precautions strictly to break its chain of transmission. This research aims to control the spread of COVID-19 by preventing and minimizing local transmission carriers. Our proposed model is a practical approach for rapid screening and disinfecting of numerous people with an automated system. The modules of our proposed SSDWG measure temperature in a contact-free manner and detect whether a person is wearing a face mask in three classes, face with proper mask (FWPM), face with improper mask (FWIPM), and face without a mask (FWOM), which can play a pivotal role in controlling and tracing persons who may be suspected of having COVID-19. Both modules of our proposed SSDWG gave very good results and showed that the Smart Screening and Disinfection Walkthrough Gate (SSDWG) can help to control local transmission and defeat the novel COVID-19 pandemic. Our mask detection and classification module achieved accuracies of 99.81%, 99.6%, 99.46%, 99.22%, and 99.07% by using the VGG-16, MobileNetV2, Inception V3, ResNet-50, and CNN, respectively, for face mask detection and classification in three classes (FWPM, FWIPM, and FWOM). We also implemented classification of the type of face mask in two classes (N-95 and Surgical), achieving 98.17% and 97.37% accuracy with the VGG-16 and MobileNetV2 models, respectively.
We highly suggest implementing both modules in public places, such as at the entrances of housing communities, shopping malls, mosques, schools, colleges, universities, hospitals, and hotels.

7. Limitations

No well-curated dataset is available for mask detection and classification. The available datasets are mostly noisy or artificially created, which makes them unsuitable for building a real-time mask detection system. As a result, we spent a lot of time collecting and pre-processing suitable face mask images. Further, we also used the Haar Wavelet technique to select only frontal face images from the noisy images.

8. Future Work

In future work, we will propose a deep learning framework that uses pre-trained deep learning models to monitor the physical interaction (social distancing) between individuals in a real-time environment as a precautionary step against the spread of COVID-19. The CDC (Centers for Disease Control and Prevention) also states that anyone with a hearing impairment should consider a clear, transparent face mask. Therefore, we will also focus on the detection and classification of transparent face masks. According to the WHO guidelines, sneezing and coughing are major symptoms of COVID-19. In the future, we will therefore work on identifying individuals who are coughing and sneezing by using deep learning models, which will be helpful in controlling the spread of COVID-19.

Author Contributions

Conceptualization, S.H., M.A., J.A.W. and W.H.; Data curation, S.H.; Investigation, S.H., Y.Y., M.A. and A.K.; Methodology, S.H. and M.A.; Resources, Y.Y., M.A., A.K. and W.H.; Supervision, S.H. and W.H.; Visualization, S.H., Y.Y., M.A. and A.K.; Writing—original draft, S.H. and W.H.; Writing—review & editing, S.H., Y.Y., M.A., A.K., R.R., J.A.W. and W.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- CDC COVID-19 Response Team. Preliminary estimates of the prevalence of selected underlying health conditions among patients with coronavirus disease 2019—United States, February 12–March 28, 2020. Morb. Mortal. Wkly. Rep. 2020, 69, 382–386. [Google Scholar] [CrossRef]

- CDC COVID-19 Response Team. Severe Outcomes among Patients with Coronavirus Disease 2019 (COVID-19)-United States, February 12-March 16, 2020. MMWR Morb. Mortal Wkly. Rep. 2020, 69, 343–346. [Google Scholar] [CrossRef]

- Moriyama, M.; Hugentobler, W.J.; Iwasaki, A. Seasonality of respiratory viral infections. Annu. Rev. Virol. 2020, 7, 83–101. [Google Scholar] [CrossRef] [PubMed]

- Yang, J.; Reuter, T. Three Ways China Is Using Drones to Fight Coronavirus; World Economic Forum: Geneva, Switzerland, 2020; Volume 16. [Google Scholar]

- Gupta, P.; Agrawal, D.; Chhabra, J.; Dhir, P.K. IoT based smart healthcare kit. In Proceedings of the IEEE 2016 International Conference on Computational Techniques in Information and Communication Technologies (ICCTICT), New Delhi, India, 11–13 March 2016; pp. 237–242. [Google Scholar]

- Dong, E.; Du, H.; Gardner, L. An interactive web-based dashboard to track COVID-19 in real time. Lancet Infect. Dis. 2020, 20, 533–534. [Google Scholar] [CrossRef]

- Tribune. Govt to End Lockdown from 9th in Phases. The Express Tribune, 7 May 2020. [Google Scholar]

- Greenhalgh, T.; Schmid, M.B.; Czypionka, T.; Bassler, D.; Gruer, L. Face masks for the public during the covid-19 crisis. BMJ 2020, 369, m1435. [Google Scholar] [CrossRef] [PubMed]

- Aruna, A.; Mol, Y.B.; Delcy, G.; Muthukumaran, N. Arduino Powered Obstacles Avoidance for Visually Impaired Person. Asian J. Appl. Sci. Technol. 2018, 2, 101–106. [Google Scholar]

- Jayapradha, S.; Vincent, P.D.R. An IOT based Human healthcare system using Arduino Uno board. In Proceedings of the IEEE 2017 International Conference on Intelligent Computing, Instrumentation and Control Technologies (ICICICT), Kerala, India, 6–7 July 2017; pp. 880–885. [Google Scholar]

- Pawar, P.A. Heart rate monitoring system using IR base sensor & Arduino Uno. In Proceedings of the IEEE 2014 Conference on IT in Business, Industry and Government (CSIBIG), Indore, India, 8–9 March 2014; pp. 1–3. [Google Scholar]

- Sugathan, A.; Roy, G.G.; Kirthyvijay, G.; Thomson, J. Application of Arduino based platform for wearable health monitoring system. In Proceedings of the 2013 IEEE 1st International Conference on Condition Assessment Techniques in Electrical Systems (CATCON), Kolkata, India, 6–8 December 2013; pp. 1–5. [Google Scholar]

- Yu, Y.; Waltereit, M.; Matkovic, V.; Hou, W.; Weis, T. Deep Learning-based Vibration Signal Personnel Positioning System. IEEE Access 2020, 8, 226108–226118. [Google Scholar] [CrossRef]

- Elhoseny, M.; Ramírez-González, G.; Abu-Elnasr, O.M.; Shawkat, S.A.; Arunkumar, N.; Farouk, A. Secure medical data transmission model for IoT-based healthcare systems. IEEE Access 2018, 6, 20596–20608. [Google Scholar] [CrossRef]

- Rohokale, V.M.; Prasad, N.R.; Prasad, R. A cooperative Internet of Things (IoT) for rural healthcare monitoring and control. In Proceedings of the IEEE 2011 2nd International Conference on Wireless Communication, Vehicular Technology, Information Theory and Aerospace & Electronic Systems Technology (Wireless VITAE), Chennai, India, 28 February–3 March 2011; pp. 1–6. [Google Scholar]

- Q&A on Coronaviruses (COVID-19). Available online: https://www.who.int/emergencies/diseases/novel-coronavirus-2019/question-and-answers-hub/q-a-detail/q-a-coronaviruses (accessed on 7 September 2020).

- World Health Organization. Modes of Transmission of Virus Causing COVID-19: Implications for IPC Precaution Recommendations: Scientific Brief, 27 March 2020; Technical Report; World Health Organization: Geneva, Switzerland, 2020. [Google Scholar]

- Rao, A.S.S.; Vazquez, J.A. Identification of COVID-19 can be quicker through artificial intelligence framework using a mobile phone–based survey when cities and towns are under quarantine. Infect. Control Hosp. Epidemiol. 2020, 41, 826–830. [Google Scholar] [CrossRef] [PubMed]

- Pandey, R.; Gautam, V.; Bhagat, K.; Sethi, T. A machine learning application for raising wash awareness in the times of COVID-19 pandemic. arXiv 2020, arXiv:2003.07074. [Google Scholar]

- Narin, A.; Kaya, C.; Pamuk, Z. Automatic detection of coronavirus disease (COVID-19) using X-ray images and deep convolutional neural networks. arXiv 2020, arXiv:2003.10849. [Google Scholar]

- Decencière, E.; Cazuguel, G.; Zhang, X.; Thibault, G.; Klein, J.C.; Meyer, F.; Marcotegui, B.; Quellec, G.; Lamard, M.; Danno, R.; et al. TeleOphta: Machine learning and image processing methods for teleophthalmology. Irbm 2013, 34, 196–203. [Google Scholar] [CrossRef]

- Lu, J.; Gu, J.; Li, K.; Xu, C.; Su, W.; Lai, Z.; Zhou, D.; Yu, C.; Xu, B.; Yang, Z. COVID-19 outbreak associated with air conditioning in restaurant, Guangzhou, China, 2020. Emerg. Infect. Dis. 2020, 26, 1628. [Google Scholar] [CrossRef] [PubMed]

- Sajadi, M.M.; Habibzadeh, P.; Vintzileos, A.; Shokouhi, S.; Miralles-Wilhelm, F.; Amoroso, A. Temperature and Latitude Analysis to Predict Potential Spread and Seasonality for COVID-19. Available at SSRN 3550308. 2020. Available online: https://www.ecplanet.org/sites/ecplanet.com/files/SSRN-id3553027.pdf (accessed on 12 April 2021).

- Guo, Z.D.; Wang, Z.Y.; Zhang, S.F.; Li, X.; Li, L.; Li, C.; Cui, Y.; Fu, R.B.; Dong, Y.Z.; Chi, X.Y.; et al. Aerosol and surface distribution of severe acute respiratory syndrome coronavirus 2 in hospital wards, Wuhan, China, 2020. Emerg. Infect. Dis. 2020, 26, 1583–1591. [Google Scholar] [CrossRef]

- Van Doremalen, N.; Bushmaker, T.; Morris, D.H.; Holbrook, M.G.; Gamble, A.; Williamson, B.N.; Tamin, A.; Harcourt, J.L.; Thornburg, N.J.; Gerber, S.I.; et al. Aerosol and surface stability of SARS-CoV-2 as compared with SARS-CoV-1. N. Engl. J. Med. 2020, 382, 1564–1567. [Google Scholar] [CrossRef]

- Loey, M.; Manogaran, G.; Taha, M.H.N.; Khalifa, N.E.M. A hybrid deep transfer learning model with machine learning methods for face mask detection in the era of the COVID-19 pandemic. Measurement 2021, 167, 108288. [Google Scholar] [CrossRef]

- Chowdary, G.J.; Punn, N.S.; Sonbhadra, S.K.; Agarwal, S. Face mask detection using transfer learning of inceptionv3. In International Conference on Big Data Analytics; Springer: Berlin/Heidelberg, Germany, 2020; pp. 81–90. [Google Scholar]

- Nagrath, P.; Jain, R.; Madan, A.; Arora, R.; Kataria, P.; Hemanth, J. SSDMNV2: A real time DNN-based face mask detection system using single shot multibox detector and MobileNetV2. Sustain. Cities Soc. 2021, 66, 102692. [Google Scholar] [CrossRef]

- Venkateswarlu, I.B.; Kakarla, J.; Prakash, S. Face mask detection using MobileNet and Global Pooling Block. In Proceedings of the 2020 IEEE 4th Conference on Information & Communication Technology (CICT), Chennai, India, 3–5 December 2020; pp. 1–5. [Google Scholar]

- Loey, M.; Manogaran, G.; Taha, M.H.N.; Khalifa, N.E.M. Fighting against COVID-19: A novel deep learning model based on YOLO-v2 with ResNet-50 for medical face mask detection. Sustain. Cities Soc. 2020, 65, 102600. [Google Scholar] [CrossRef]

- Hussain, S.A.; Al Balushi, A.S.A. A real time face emotion classification and recognition using deep learning model. J. Phys. Conf. Ser. 2020, 1432, 012087. [Google Scholar] [CrossRef]

- Viola, P.; Jones, M. Rapid object detection using a boosted cascade of simple features. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2001), Kauai, HI, USA, 8–14 December 2001; Volume 1. [Google Scholar]

- Digital Non-Contact Infrared Thermometer (MLX90614) #Melexis. Available online: https://www.melexis.com/en/product/MLX90614/Digital-Plug-Play-Infrared-Thermometer-TO-Can (accessed on 23 January 2021).

- Wang, C.; Xi, Y. Convolutional Neural Network for Image Classification; The Johns Hopkins University Press: Baltimore, MD, USA, 1997.

- Lafiosca, P.; Fan, I.S. Review of non-contact methods for automated aircraft inspections. Insight-Non Test. Cond. Monit. 2020, 62, 692–701.

- GitHub—Cabani/MaskedFace-Net: MaskedFace-Net Is a Dataset of Human Faces with a Correctly and Incorrectly Worn Mask Based on the Dataset Flickr-Faces-HQ (FFHQ). Available online: https://github.com/cabani/MaskedFace-Net (accessed on 25 February 2021).

- GitHub—Borutb-Fri/FMLD: A Challenging, in the Wild Dataset for Experimentation with Face Masks with 63,072 Face Images. Available online: https://github.com/borutb-fri/FMLD (accessed on 4 April 2021).

- Ge, S.; Li, J.; Ye, Q.; Luo, Z. Detecting Masked Faces in the Wild with LLE-CNNs. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 426–434.

- Bing-Images · PyPI. Available online: https://pypi.org/project/bing-images/ (accessed on 4 April 2021).

- Extracting Covid-19 Insights from Bing Search Data|Bing Search Blog. Available online: https://blogs.bing.com/search/2020_07/Extracting-Covid-19-insights-from-Bing-search-data (accessed on 23 February 2021).

- Kaur, T.; Gandhi, T.K. Automated brain image classification based on VGG-16 and transfer learning. In Proceedings of the IEEE 2019 International Conference on Information Technology (ICIT), Bhubaneswar, India, 19–21 December 2019; pp. 94–98.

- Reddy, A.S.B.; Juliet, D.S. Transfer learning with ResNet-50 for malaria cell-image classification. In Proceedings of the IEEE 2019 International Conference on Communication and Signal Processing (ICCSP), Chennai, India, 4–6 April 2019; pp. 0945–0949.

- Hussain, M.; Bird, J.J.; Faria, D.R. A study on cnn transfer learning for image classification. In UK Workshop on Computational Intelligence; Springer: Berlin/Heidelberg, Germany, 2018; pp. 191–202.

- Hashmi, M.F.; Katiyar, S.; Keskar, A.G.; Bokde, N.D.; Geem, Z.W. Efficient pneumonia detection in chest xray images using deep transfer learning. Diagnostics 2020, 10, 417.

- Tang, H.F.; Hung, K. Design of a non-contact body temperature measurement system for smart campus. In Proceedings of the 2016 IEEE International Conference on Consumer Electronics-China (ICCE-China), Guangzhou, China, 19–21 December 2016; pp. 1–4.

- Kaplan, R.B.; Johnson, T.M.; Schneider, R.; Krishnan, S.M. A design for low cost and scalable non-contact fever screening system. In Proceedings of the ASEE Annual Conference and Exposition, Vancouver, BC, Canada, 26–29 June 2011.

- Agrawal, A.; Singh, S.G. PREFACE on the Special Issue ‘Technologies for Fighting COVID-19’. Trans. Indian Natl. Acad. Eng. 2020, 5, 91–95.

- Hussain, S.; Cheema, M.J.M.; Motahhir, S.; Iqbal, M.M.; Arshad, A.; Waqas, M.S.; Usman Khalid, M.; Malik, S. Proposed Design of Walk-Through Gate (WTG): Mitigating the Effect of COVID-19. Appl. Syst. Innov. 2020, 3, 41.

- Qin, B.; Li, D. Identifying Facemask-Wearing Condition Using Image Super-Resolution with Classification Network to Prevent COVID-19. Sensors 2020, 20, 5236.

- Militante, S.V.; Dionisio, N.V. Real-Time Facemask Recognition with Alarm System using Deep Learning. In Proceedings of the 2020 11th IEEE Control and System Graduate Research Colloquium (ICSGRC), Shah Alam, Malaysia, 8 August 2020; pp. 106–110.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).