End-to-End Deep Reinforcement Learning for Decentralized Task Allocation and Navigation for a Multi-Robot System

Abstract

1. Introduction

- The introduction of a novel method that performs multi-robot task allocation (MRTA) and navigation end-to-end for a homogeneous multi-robot system using deep reinforcement learning.

- The introduction of a new metric, the Task Allocation Index (), which measures the performance of navigation in MRTA.

2. Related Works

3. Problem Formulation

4. Methodology

4.1. Deep Reinforcement Learning Setup

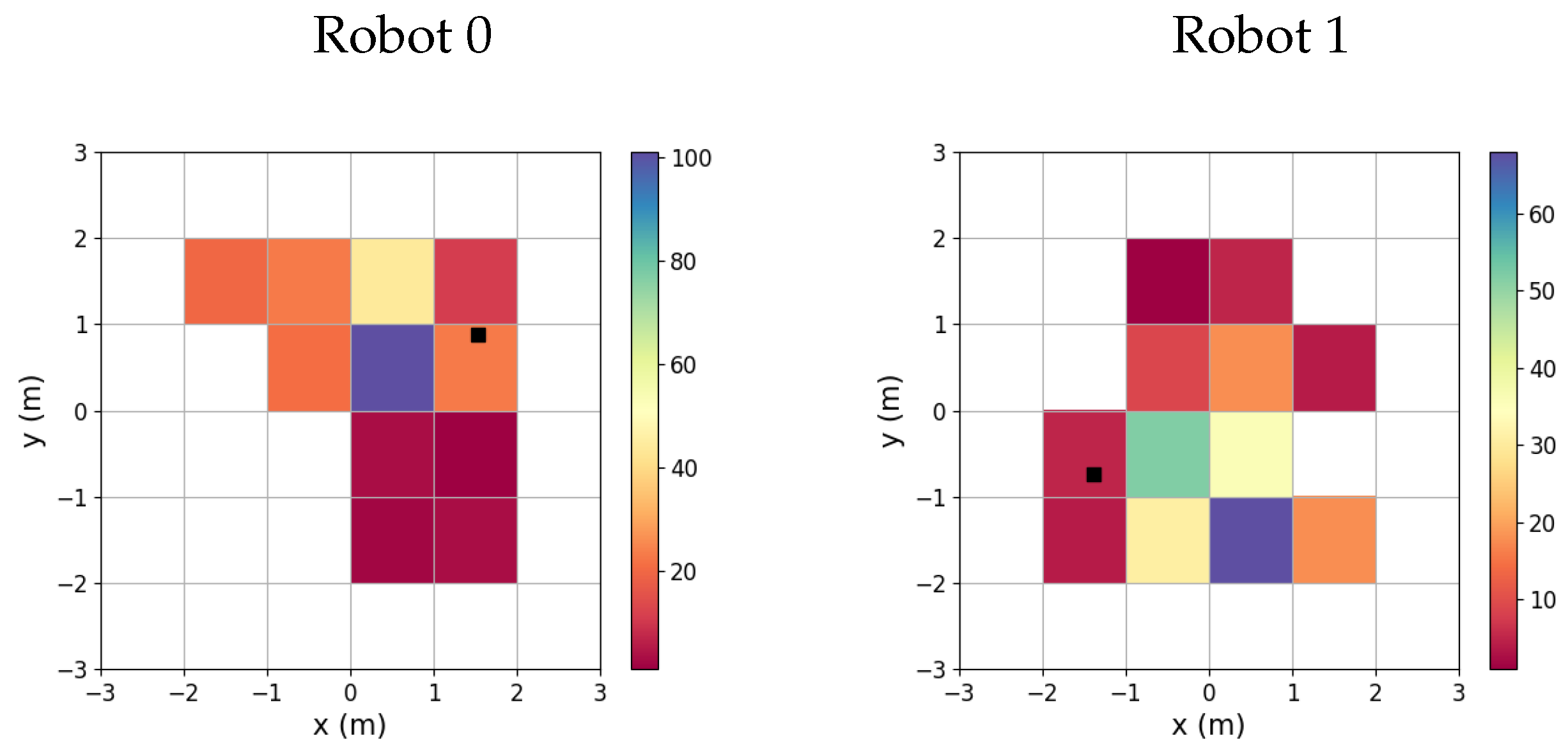

4.1.1. Observation Description

4.1.2. Action Description

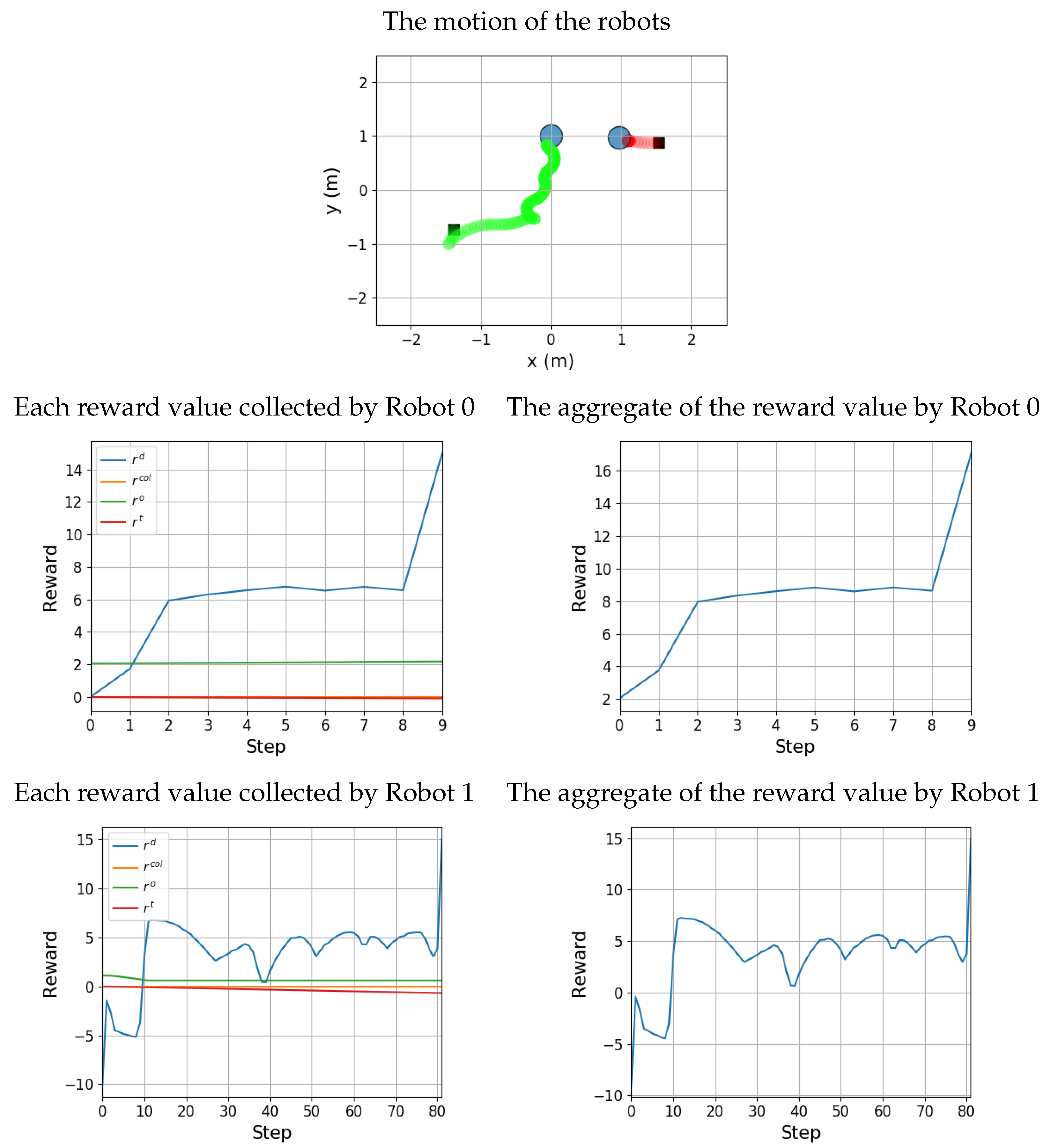

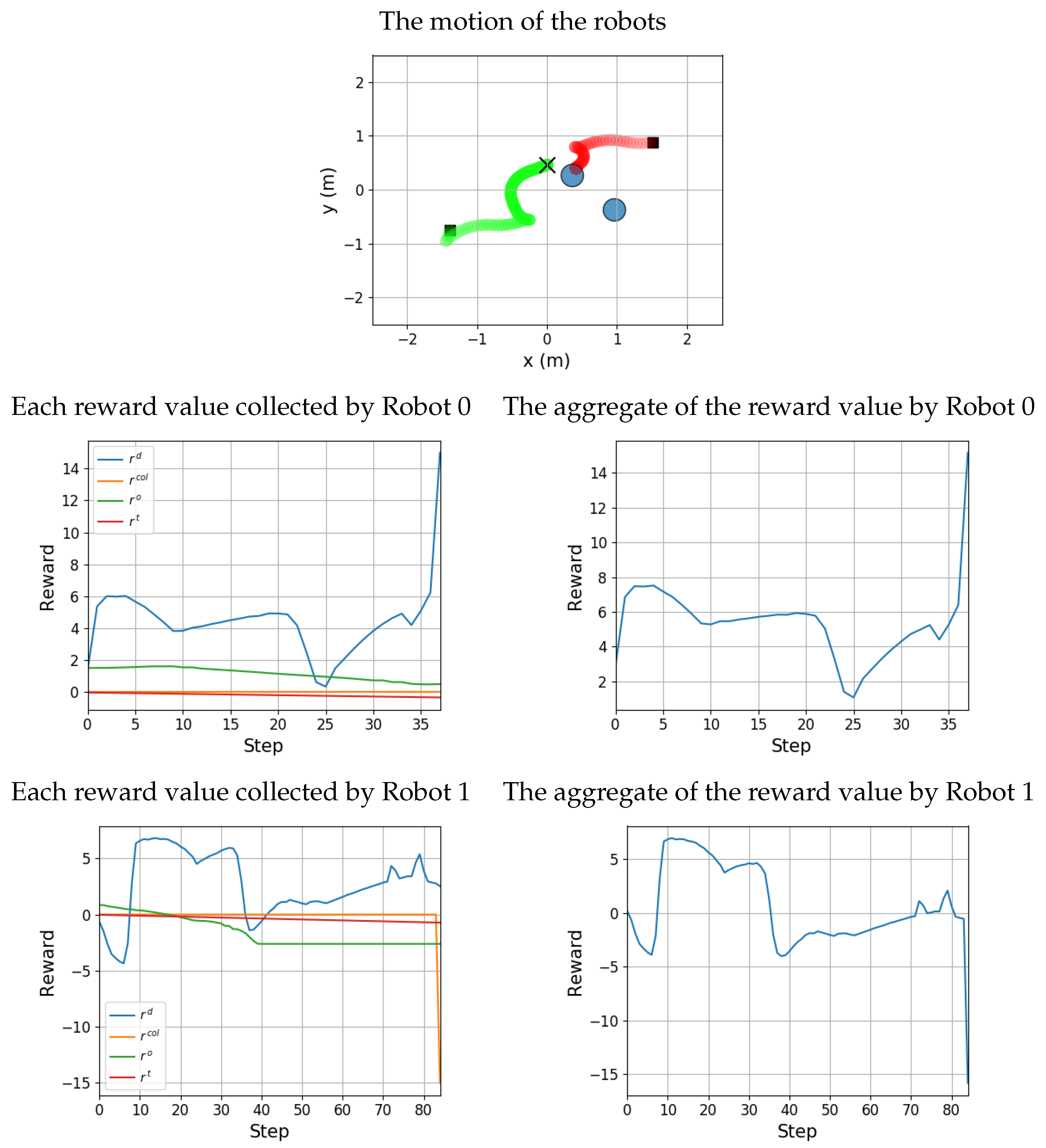

4.1.3. Reward Function

4.2. Training Algorithm

Algorithm 1. PPO with a Multi-Robot System

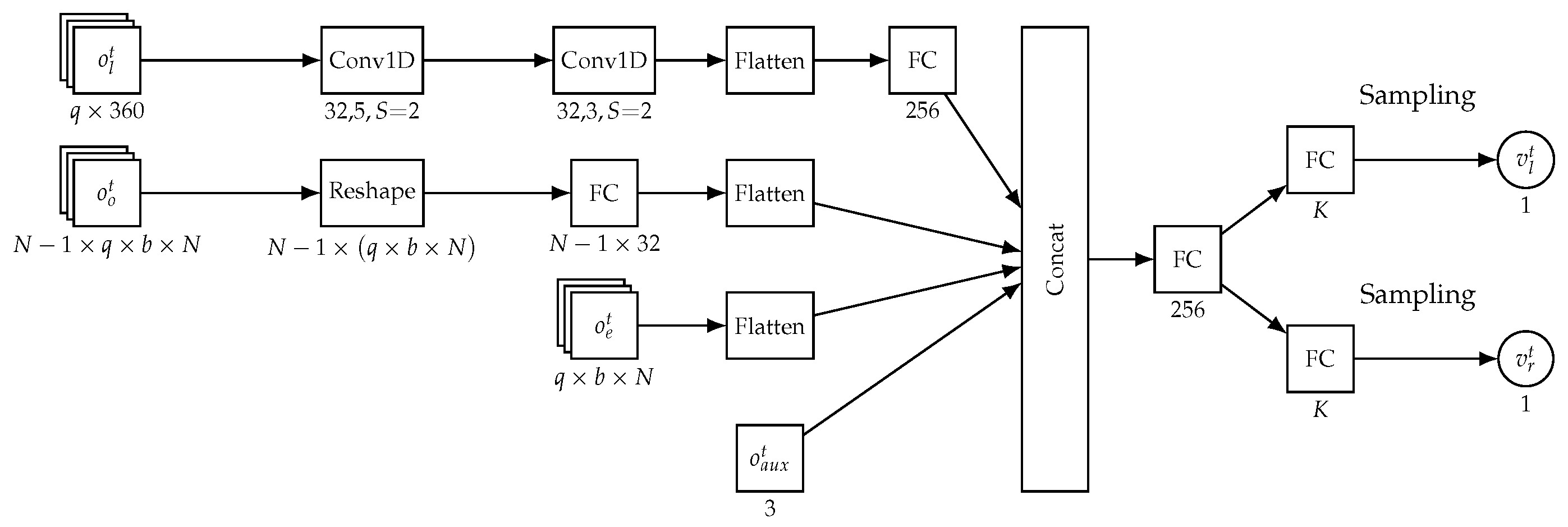

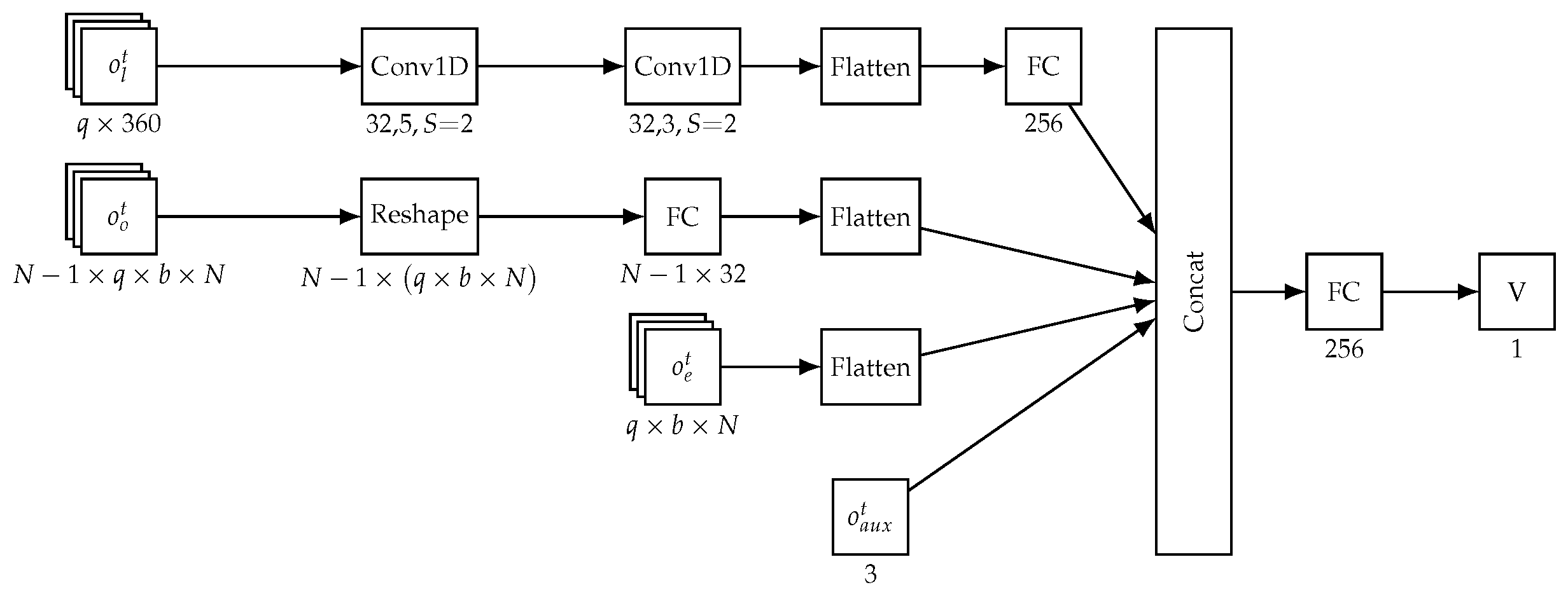
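The PPO update in Algorithm 1 builds on two standard components: the clipped surrogate objective of Schulman et al. [16] and generalized advantage estimation (GAE) [19]. The sketch below implements both in NumPy, using the values from the training-parameter table. It is not the authors' implementation. Three choices are our own assumptions: how the table entries map onto PPO terms, the standard "policy + value − entropy" loss combination, and the idea that each robot's trajectory is processed separately before the samples are pooled for one shared-policy update. The helper `pool_robot_batches` is hypothetical.

```python
import numpy as np

# Values from the training-parameter table; mapping them onto PPO terms is our assumption.
GAMMA = 0.98          # discount factor
GAE_LAMBDA = 0.92     # GAE decay factor
CLIP_EPS = 0.2        # clipping parameter of the surrogate objective
VALUE_COEF = 5.0      # value loss factor
ENTROPY_COEF = 0.01   # entropy loss factor


def compute_gae(rewards, values, dones, last_value, gamma=GAMMA, lam=GAE_LAMBDA):
    """Generalized Advantage Estimation for one robot's trajectory of length T.

    dones[t] is 1.0 if the episode terminated at step t, else 0.0;
    last_value is the critic's estimate for the state after the final step.
    Returns (advantages, value targets), both arrays of length T.
    """
    rewards = np.asarray(rewards, dtype=np.float64)
    values = np.asarray(values, dtype=np.float64)
    dones = np.asarray(dones, dtype=np.float64)
    T = len(rewards)
    advantages = np.zeros(T)
    gae = 0.0
    for t in reversed(range(T)):
        next_value = last_value if t == T - 1 else values[t + 1]
        not_done = 1.0 - dones[t]
        # TD residual; no bootstrapping past a terminal step.
        delta = rewards[t] + gamma * next_value * not_done - values[t]
        gae = delta + gamma * lam * not_done * gae
        advantages[t] = gae
    return advantages, advantages + values


def ppo_loss(new_logp, old_logp, advantages, returns, values, entropy,
             clip_eps=CLIP_EPS, value_coef=VALUE_COEF, entropy_coef=ENTROPY_COEF):
    """Loss to minimize: clipped policy loss + weighted value loss - entropy bonus."""
    ratio = np.exp(np.asarray(new_logp) - np.asarray(old_logp))
    adv = np.asarray(advantages)
    # Pessimistic (element-wise minimum) of the unclipped and clipped objectives.
    surrogate = np.minimum(ratio * adv,
                           np.clip(ratio, 1.0 - clip_eps, 1.0 + clip_eps) * adv)
    policy_loss = -np.mean(surrogate)
    value_loss = np.mean((np.asarray(returns) - np.asarray(values)) ** 2)
    return policy_loss + value_coef * value_loss - entropy_coef * np.mean(entropy)


def pool_robot_batches(per_robot):
    """Hypothetical helper: concatenate per-robot arrays field by field
    (e.g. advantages, returns) into one batch for the shared policy."""
    return tuple(np.concatenate(parts) for parts in zip(*per_robot))
```

The NumPy version only evaluates the loss. An actual update would compute its gradient with an automatic-differentiation framework and repeat the step for E = 10 epochs over the pooled batch.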

4.3. Network Architecture

5. Experiments and Results

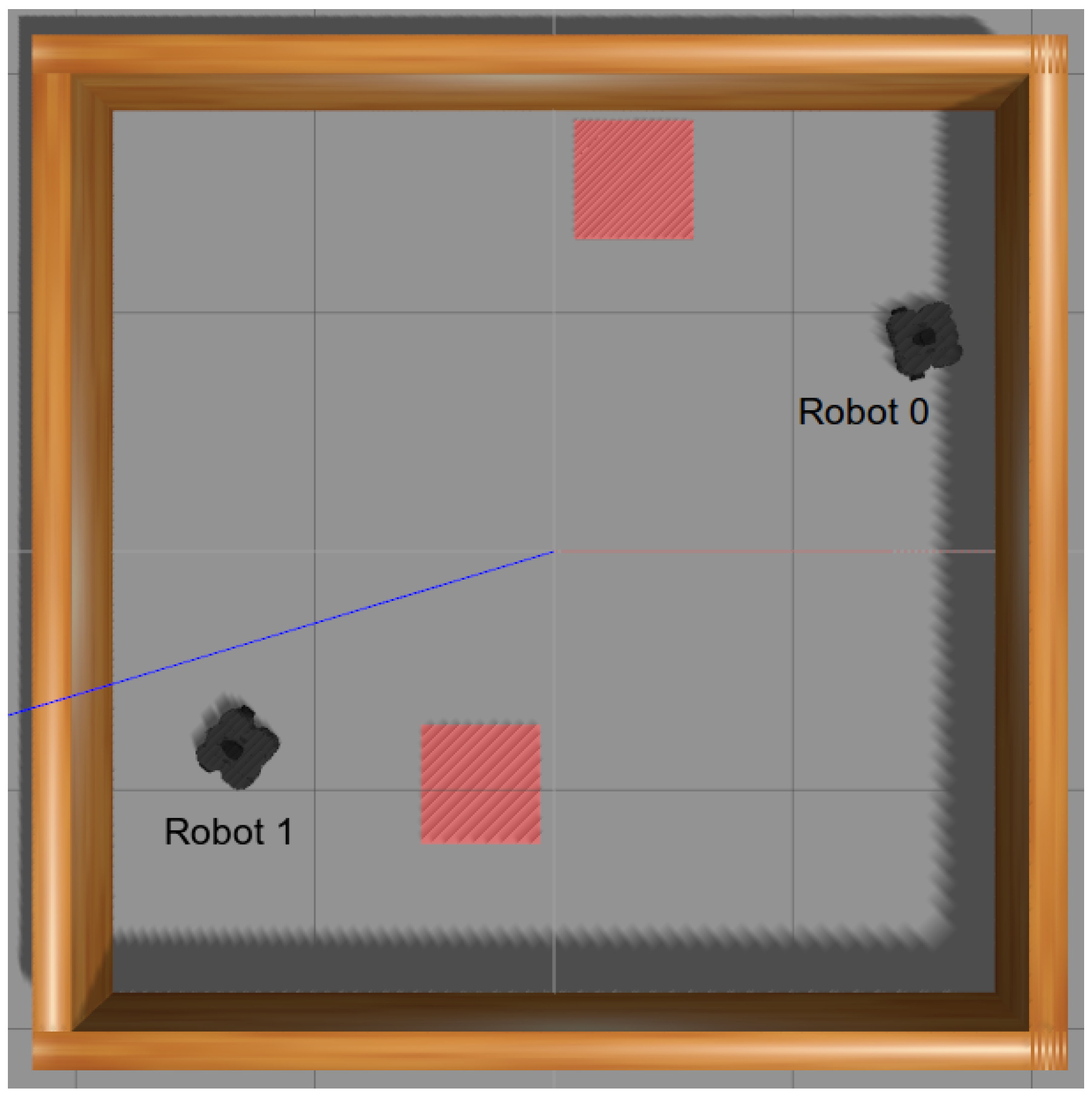

5.1. Simulation Environment

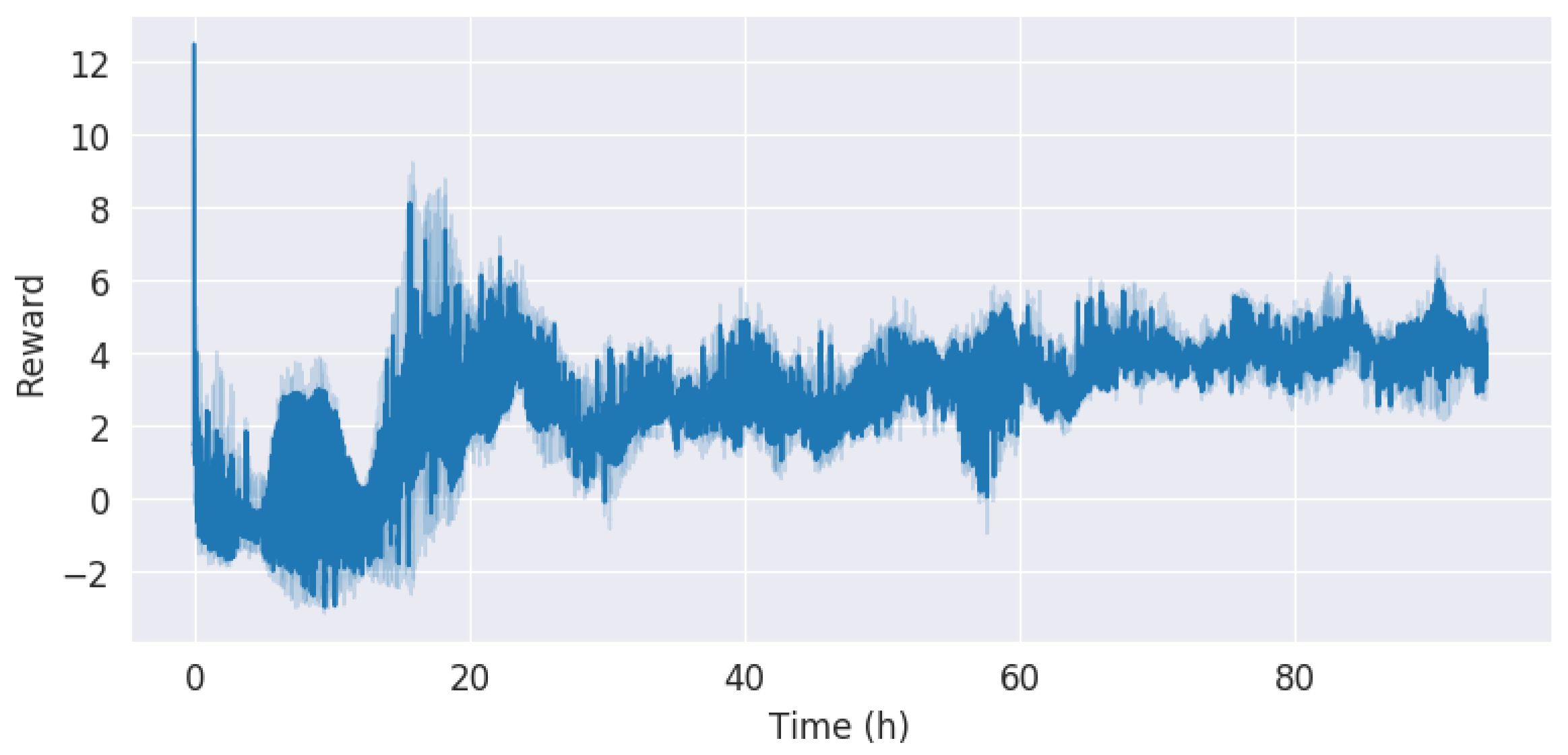

5.2. Training Configuration

5.3. Evaluation and Testing

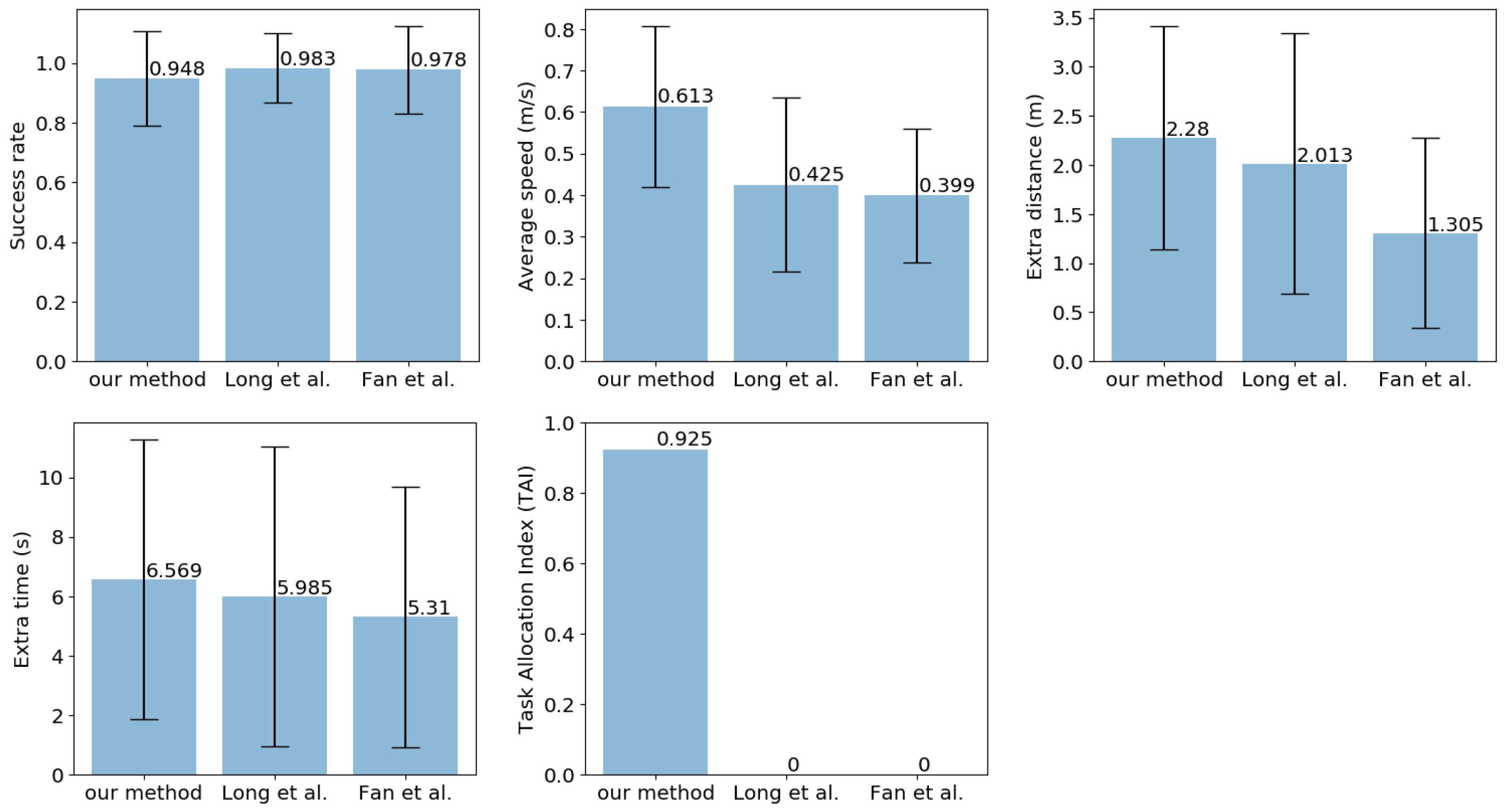

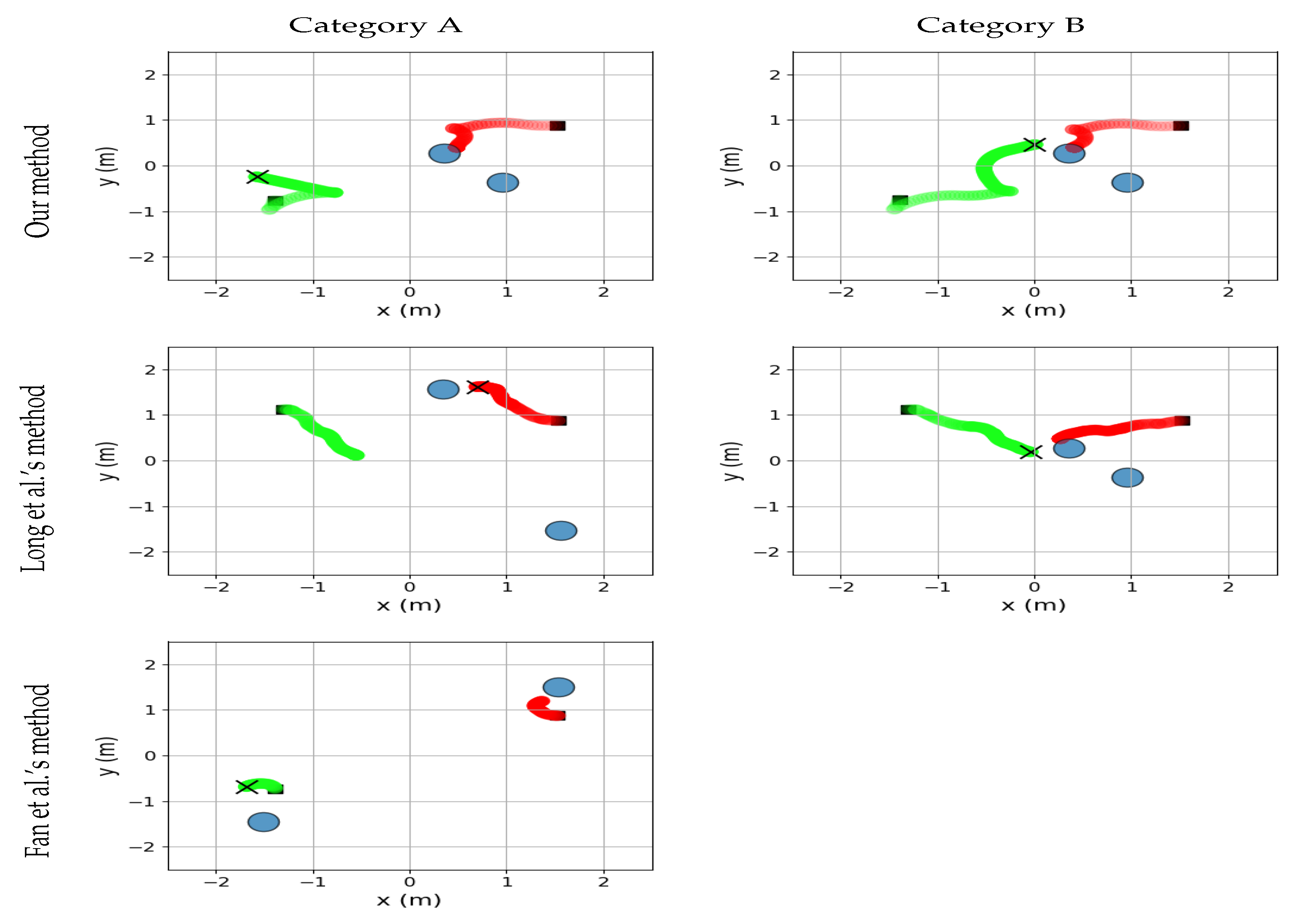

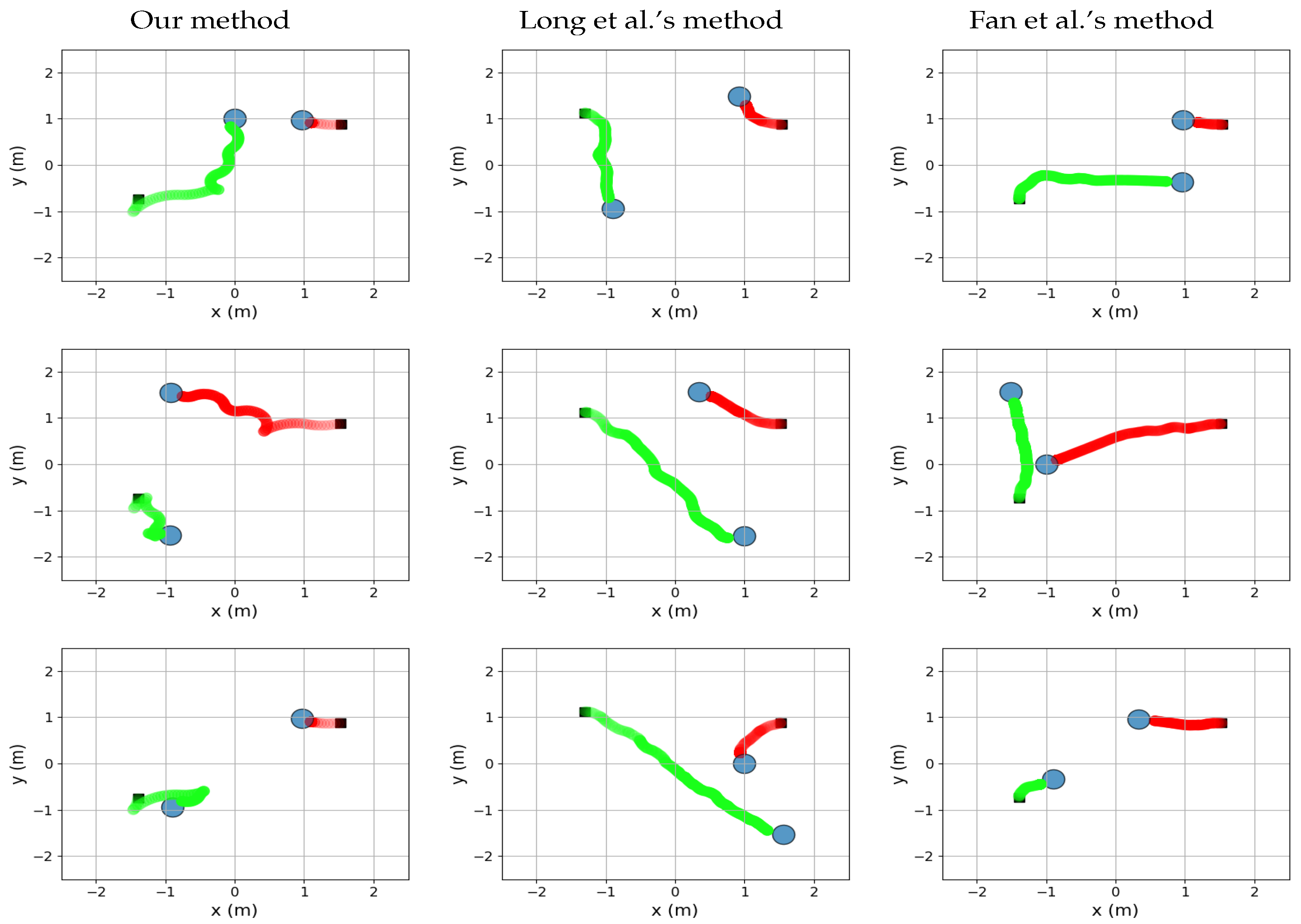

- Success rate: The number of robots that successfully reach unique goals during the episode, divided by the total number of robots (the higher the better).

- Extra time: The difference between a robot's actual travel time to its goal position and the time the robot would need to reach the same goal position in a straight line at maximum speed (the lower the better).

- Extra distance: The difference between the actual distance a robot traveled to reach its goal position and the Euclidean distance between the robot's start position and that goal position (the lower the better).

- Average speed: The average, over the episode, of the robot team's speed relative to the maximum speed of a robot.
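The four metrics above can be computed from logged trajectories. The following is a minimal sketch under our own assumptions:

- each trajectory is a list of (x, y) positions;
- a goal reached by several robots counts as one success;
- the team speed at a time step is the mean of the robots' speeds;
- the maximum robot speed `v_max` is passed in.

All function names are hypothetical.

```python
import math
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]


def path_length(traj: Sequence[Point]) -> float:
    """Length of the polyline through one robot's sampled positions."""
    return sum(math.dist(a, b) for a, b in zip(traj, traj[1:]))


def success_rate(reached_goals: Sequence[Optional[int]], num_robots: int) -> float:
    """Distinct goals reached divided by the number of robots.

    reached_goals[i] is the id of the goal robot i reached, or None.
    Assumption: a goal reached by several robots is counted once.
    """
    distinct = {g for g in reached_goals if g is not None}
    return len(distinct) / num_robots


def extra_time(travel_time: float, start: Point, goal: Point, v_max: float) -> float:
    """Actual travel time minus the straight-line time at maximum speed."""
    return travel_time - math.dist(start, goal) / v_max


def extra_distance(traj: Sequence[Point], goal: Point) -> float:
    """Distance actually traveled minus the start-to-goal Euclidean distance."""
    return path_length(traj) - math.dist(traj[0], goal)


def average_speed(speeds: Sequence[Sequence[float]], v_max: float) -> float:
    """Mean over time steps of (team speed at that step) / v_max.

    speeds[t][i] is the speed of robot i at time step t.
    Assumption: the team speed at a step is the mean of the robots' speeds.
    """
    ratios = [sum(step) / len(step) / v_max for step in speeds]
    return sum(ratios) / len(ratios)
```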

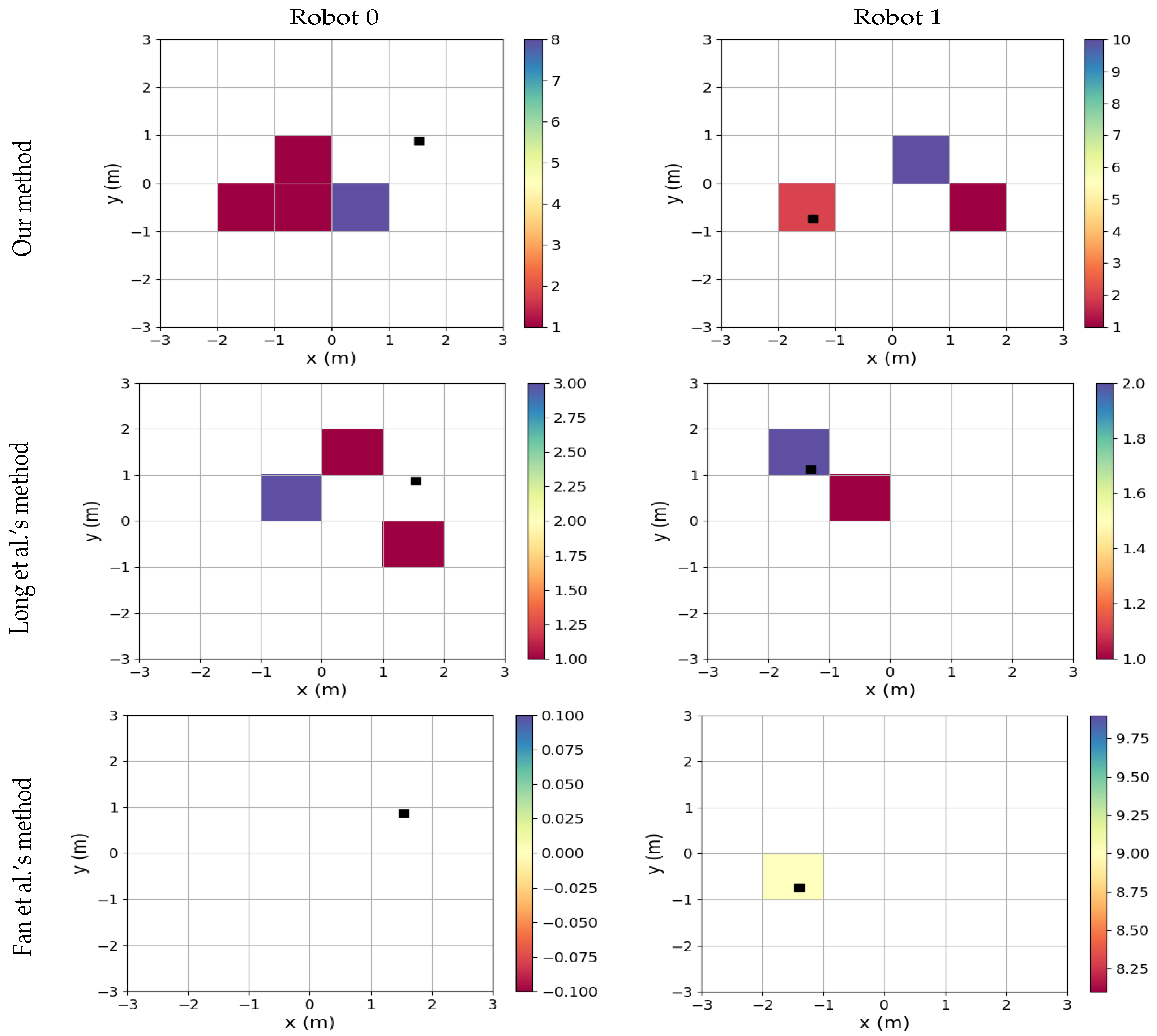

- : number of goals whose information is input to the policy.

- : total number of goals.

- : number of episodes ended by Category B collisions.

- : total number of episodes.

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Gerkey, B.P.; Matarić, M.J. A formal analysis and taxonomy of task allocation in multi-robot systems. Int. J. Robot. Res. 2004, 23, 939–954.

- Yu, J.; LaValle, S.M. Optimal Multirobot Path Planning on Graphs: Complete Algorithms and Effective Heuristics. IEEE Trans. Robot. 2016, 32, 1163–1177.

- Woosley, B.; Dasgupta, P. Multirobot Task Allocation with Real-Time Path Planning. In Proceedings of the Twenty-Sixth International Florida Artificial Intelligence Research Society Conference, St. Pete Beach, FL, USA, 22–24 May 2013; pp. 574–579.

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction, 2nd ed.; The MIT Press: Cambridge, MA, USA, 2018.

- Arulkumaran, K.; Deisenroth, M.P.; Brundage, M.; Bharath, A.A. A Brief Survey of Deep Reinforcement Learning. IEEE Signal Process. Mag. 2017, 34, 26–38.

- Nguyen, T.T.; Nguyen, N.D.; Nahavandi, S. Deep Reinforcement Learning for Multiagent Systems: A Review of Challenges, Solutions, and Applications. IEEE Trans. Cybern. 2020, 50, 3826–3839.

- Buşoniu, L.; de Bruin, T.; Tolić, D.; Kober, J.; Palunko, I. Reinforcement learning for control: Performance, stability, and deep approximators. Annu. Rev. Control. 2018, 46, 8–28.

- Zhu, Q.; Oh, J. Deep Reinforcement Learning for Fairness in Distributed Robotic Multi-type Resource Allocation. In Proceedings of the 17th IEEE International Conference on Machine Learning and Applications, ICMLA, Orlando, FL, USA, 17–20 December 2018; pp. 460–466.

- Dai, W.; Lu, H.; Xiao, J.; Zeng, Z.; Zheng, Z. Multi-Robot Dynamic Task Allocation for Exploration and Destruction. J. Intell. Robot. Syst. Theory Appl. 2019, 1–25.

- Chen, Y.F.; Liu, M.; Everett, M.; How, J.P. Decentralized non-communicating multiagent collision avoidance with deep reinforcement learning. In Proceedings of the IEEE International Conference on Robotics and Automation, Singapore, 29 May–3 June 2017; pp. 285–292.

- Lin, J.; Yang, X.; Zheng, P.; Cheng, H. End-to-end Decentralized Multi-robot Navigation in Unknown Complex Environments via Deep Reinforcement Learning. In Proceedings of the IEEE International Conference on Mechatronics and Automation (ICMA), Tianjin, China, 2019; pp. 2493–2500.

- Long, P.; Fan, T.; Liao, X.; Liu, W.; Zhang, H.; Pan, J. Towards Optimally Decentralized Multi-Robot Collision Avoidance via Deep Reinforcement Learning. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 6252–6259.

- Fan, T.; Long, P.; Liu, W.; Pan, J. Distributed multi-robot collision avoidance via deep reinforcement learning for navigation in complex scenarios. Int. J. Robot. Res. 2020, 39, 856–892.

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533.

- Van Den Berg, J.; Guy, S.J.; Lin, M.; Manocha, D. Reciprocal n-body collision avoidance. In Springer Tracts in Advanced Robotics; Springer: Berlin/Heidelberg, Germany, 2011; Volume 70, pp. 3–19.

- Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal Policy Optimization Algorithms. arXiv 2017, arXiv:1707.06347.

- Spaan, M.T.J. Partially Observable Markov Decision Processes. In Reinforcement Learning; Wiering, M., van Otterlo, M., Eds.; Springer: Berlin/Heidelberg, Germany, 2012; Chapter 12; pp. 387–414.

- Kingma, D.P.; Ba, J.L. Adam: A Method for Stochastic Optimization. In Proceedings of the 3rd International Conference on Learning Representations, ICLR, San Diego, CA, USA, 7–9 May 2015; pp. 1–15.

- Schulman, J.; Moritz, P.; Levine, S.; Jordan, M.I.; Abbeel, P. High-Dimensional Continuous Control Using Generalized Advantage Estimation. In Proceedings of the 4th International Conference on Learning Representations (ICLR 2016), San Juan, Puerto Rico, 2–4 May 2016.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Nair, V.; Hinton, G.E. Rectified Linear Units Improve Restricted Boltzmann Machines. In Proceedings of the 27th International Conference on Machine Learning, Haifa, Israel, 21–24 June 2010; pp. 807–814.

- Martins, A.; Astudillo, R. From softmax to sparsemax: A sparse model of attention and multi-label classification. In Proceedings of the 33rd International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; Volume 48, pp. 1614–1623.

- Kim, A.; Seon, D.; Lim, D.; Cho, H.; Jin, J.; Jung, L.; Will Son, M.Y.; Pyo, Y. TurtleBot3 e-Manual. Available online: http://emanual.robotis.com/docs/en/platform/turtlebot3/overview/ (accessed on 15 March 2020).

- Koenig, N.; Howard, A. Design and use paradigms for Gazebo, an open-source multi-robot simulator. In Proceedings of the 2004 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Sendai, Japan, 28 September–2 October 2004; Volume 3, pp. 2149–2154.

- Abadi, M.; Barham, P.; Chen, J.; Chen, Z.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M.; et al. TensorFlow: A System for Large-Scale Machine Learning. In Proceedings of the 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI ’16), Savannah, GA, USA, 2–4 November 2016; pp. 265–283.

| Paper | MRTA | Navigation |
|---|---|---|
| Zhu et al. [8] | √ | X |
| Chen et al. [10] | X | √ |
| Lin et al. [11] | X | √ |
| Long et al. [12] | X | √ |
| Fan et al. [13] | X | √ |
| Our proposed method | √ | √ |

| Parameter | Description | Value |
|---|---|---|
| q | Number of consecutive frames used in each observation | 5 |
| | Data collection threshold | 1100 |
| | GAE decay factor | 0.92 |
| | Discount factor | 0.98 |
| | Max. episode time | 120 s |
| E | Number of epochs | 10 |
| | Clipping parameter used in the surrogate objective function | 0.2 |
| | Value loss factor | 5.0 |
| | Entropy loss factor | 0.01 |
| | Learning rate | 5 × 10⁻⁵ |
| | Number of episodes before the goal positions are changed | 50 |
| | Terminal reward | 15 |
| | Goal’s distance factor | 200 |
| | Occupied goal reward factor 1 | 3.5 |
| | Occupied goal reward factor 2 | 3.409 |
| | Time reward factor | −5 |
| R | Robot radius | 0.2 m |
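For reference, the parameter table can be collected into one configuration object. The sketch below also includes a buffer that stacks the last q frames into a single observation. Field and class names are hypothetical. The table does not say what a "frame" contains (for example, a laser scan or relative goal positions), so the buffer treats each frame as an arbitrary numeric vector. Padding the buffer with copies of the first frame at reset is also our assumption.

```python
from collections import deque
from dataclasses import dataclass

import numpy as np


@dataclass(frozen=True)
class TrainingConfig:
    """Values from the parameter table (field names are hypothetical)."""
    q: int = 5                              # consecutive frames per observation
    data_collection_threshold: int = 1100
    gae_lambda: float = 0.92                # GAE decay factor
    gamma: float = 0.98                     # discount factor
    max_episode_time_s: float = 120.0
    epochs: int = 10                        # E
    clip_eps: float = 0.2                   # surrogate-objective clipping
    value_loss_coef: float = 5.0
    entropy_coef: float = 0.01
    learning_rate: float = 5e-5
    episodes_per_goal_change: int = 50
    terminal_reward: float = 15.0
    goal_distance_factor: float = 200.0
    occupied_goal_factor_1: float = 3.5
    occupied_goal_factor_2: float = 3.409
    time_reward_factor: float = -5.0
    robot_radius_m: float = 0.2             # R


class FrameStack:
    """Keeps the most recent q frames and returns them stacked as one observation."""

    def __init__(self, q: int):
        self.q = q
        self.frames = deque(maxlen=q)  # oldest frame is dropped automatically

    def reset(self, first_frame):
        # Assumption: pad with the first frame so the observation always has q frames.
        self.frames.clear()
        for _ in range(self.q):
            self.frames.append(np.asarray(first_frame, dtype=np.float32))
        return self.observation()

    def step(self, frame):
        self.frames.append(np.asarray(frame, dtype=np.float32))
        return self.observation()

    def observation(self):
        # Shape (q, *frame_shape), oldest frame first.
        return np.stack(list(self.frames), axis=0)
```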

| Metric | Our Method | Long et al. | Fan et al. |
|---|---|---|---|
| Number of episodes | 268 | 402 | 425 |
| % of episodes ending by collision | 8.955% | 2.239% | 2.118% |
| % of episodes ending by Category A collision | 1.522% | 1.254% | 2.118% |
| % of episodes ending by Category B collision | 7.433% | 0.985% | 0.0% |
| % of episodes ending by Category C collision | 0.0% | 0.0% | 0.0% |
| % of episodes ending by exhausting episode time | 0.746% | 0.0% | 0.0% |
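In the table above, the three collision-category rows sum to the overall collision row:

- 1.522% + 7.433% + 0.0% = 8.955% for the proposed method;
- 1.254% + 0.985% + 0.0% = 2.239% for Long et al.;
- 2.118% + 0.0% + 0.0% = 2.118% for Fan et al.

The following minimal sketch shows how such percentages follow from a per-episode log of termination causes. The outcome labels (`"collision_A"`, `"timeout"`, and so on) and the function names are hypothetical.

```python
from collections import Counter
from typing import Dict, Sequence


def outcome_percentages(outcomes: Sequence[str]) -> Dict[str, float]:
    """Percentage of episodes ending with each termination cause."""
    counts = Counter(outcomes)
    n = len(outcomes)
    return {cause: 100.0 * c / n for cause, c in counts.items()}


def collision_percentage(pcts: Dict[str, float]) -> float:
    """Overall collision rate: sum of the per-category collision percentages.
    Assumption: collision labels share the hypothetical prefix 'collision'."""
    return sum(v for k, v in pcts.items() if k.startswith("collision"))
```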


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Elfakharany, A.; Ismail, Z.H. End-to-End Deep Reinforcement Learning for Decentralized Task Allocation and Navigation for a Multi-Robot System. Appl. Sci. 2021, 11, 2895. https://doi.org/10.3390/app11072895
