Abstract

In recent years, many countries have introduced policies promoting renewable energy to take advantage of abundant sunlight. However, integrating solar energy into the power grid also has drawbacks. The most obvious is the variability of power output, which puts pressure on the system: because more grid reserves are needed to compensate for fluctuations in output, the variable nature of solar power may hinder further deployment. A related issue is the variability and unpredictability of sunlight itself. When the sky is cloudy or overcast during the day, photovoltaic cells cannot produce satisfactory amounts of electricity. How to collect the relevant factors (variables) and data and use them to make predictions that help solar power plants increase their generation is therefore an important question for every solar supplier. In this work, we used historical monitoring data collected at a grid-connected solar power plant to predict power generation, taking daily characteristics (24 h) rather than the usual seasonal characteristics (365 days) as the experimental basis. We further implemented daily numerical prediction of hourly power generation. Preliminary experimental evaluations indicate that the developed method is feasible and provides a basis for exploring the performance of solar power prediction.

1. Introduction

The global installed capacity of renewable power generation is undergoing gradual structural change. Grid-connected solar capacity has recently increased significantly, and wind and solar energy will continue to dominate the renewable energy industry, a development that countries around the world continue to pursue and follow closely [1,2,3]. However, integrating solar energy into the power grid also has drawbacks. The most obvious is the variability of power output, which puts pressure on the system. Because more grid reserves are needed to compensate for fluctuations in output, the variable nature of solar power may hinder further deployment or reduce its potential for cutting carbon emissions.

Solar power plants are often built over short construction periods with insufficient technical support, which can result in poorly skilled on-site construction personnel and inaccurate equipment installation. The operation and maintenance of solar power plants, and the associated system management decisions, therefore rely on monitoring systems and regular inspections to detect potential problems. The monitoring system also records data that help improve equipment operating efficiency and reduce failure and shutdown rates, ensuring stable plant operation. During operation, factors such as the environment, climate, and sunshine intensity directly affect power generation. How to collect the relevant data and make predictions that help solar power plants increase their generation is an important question for every solar supplier.

All sources of solar power are sensitive to weather and environmental changes, but the existing literature focuses mainly on photovoltaic (PV) power generation and changes in solar radiation, because PV is the most relevant source [4]. For instance, temperature influences cell efficiency and power output [5,6,7,8,9,10]: for every 1 °C increase in temperature, the efficiency of PV modules decreases by about 0.5% [11]. Temperature changes also affect the capacity of underground conductors and soil temperature [12], and temperature may influence operating costs and equipment efficiency [13]. For solar energy, cloud cover, which directly affects surface downwelling shortwave radiation, is the most important climate factor to consider, and solar irradiation and cloudiness both affect solar power output [10,14,15,16,17,18,19,20]. Dirt, dust, snow, atmospheric particles, and other factors also influence energy output [7,10,14,19,21,22,23,24], and wind speed affects PV production as well [15,16]. For all of these factors (climate, haze, environmental dust, bird droppings, etc.), long-term data tracking can be used to determine the rate of performance degradation, which is one of the key observation items in the daily maintenance of solar energy systems. If the cause of a system abnormality can be determined quickly and accurately and acted on immediately, the plant's revenue from bulk electricity sales will increase. A 25% improvement in the accuracy of solar power prediction could reduce generation costs by 1.56%, or about US$46.5 million [25]. Solar power generation prediction is therefore an important part of grid integration in solar management systems [26,27].

Solar power generation is difficult to predict in advance and varies with the weather and site conditions at specific locations. This study therefore assumes that the solar inverters and field equipment operate normally, so that complete power monitoring data for the solar power station can be obtained through the monitoring system. We can then calculate the efficiency of the solar power system from the total sunshine intensity accumulated over the day and the plant's power generation, in order to understand how climate and environmental factors affect the generation performance of the solar system.
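
One common way to express such a daily efficiency is the performance ratio (PR): the energy actually produced divided by the energy the plant would deliver at its rated efficiency under the day's irradiation. The sketch below assumes this PR definition with a 1 kW/m² reference irradiance; the text does not state which efficiency measure is used, and the daily values in the example are hypothetical.

```python
def performance_ratio(energy_kwh, irradiation_kwh_m2, capacity_kwp, g_ref_kw_m2=1.0):
    """Daily performance ratio = final yield / reference yield.

    final yield     = energy_kwh / capacity_kwp          (kWh per kWp)
    reference yield = irradiation_kwh_m2 / g_ref_kw_m2  (equivalent full-sun hours)
    """
    final_yield = energy_kwh / capacity_kwp
    reference_yield = irradiation_kwh_m2 / g_ref_kw_m2
    return final_yield / reference_yield

# Hypothetical day for a 6388 kWp plant receiving 5.5 kWh/m^2 of irradiation.
pr = performance_ratio(energy_kwh=30000.0, irradiation_kwh_m2=5.5, capacity_kwp=6388.0)
print(f"PR = {pr:.3f}")
```

A PR that drops from one day to the next under similar irradiation is the kind of signal that points to soiling, faults, or temperature losses rather than weather.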

The motivation for this work can be divided into the following two parts:

- Exploring effective methods for building accurate prediction models of solar power generation, so that power generation and consumption can be planned in advance.

- Supporting the maintenance of solar power plants: predictive models can help identify the factors most likely to affect generation performance, so that the causes of system abnormalities can be determined quickly and accurately and appropriate troubleshooting measures taken immediately.

Accordingly, in this work we used the historical data generated by the monitoring system to build power generation prediction models with deep learning and statistical methods, and performed statistical analysis to select the relevant feature variables. Different algorithms were then used to compare the resulting prediction models and their performance indicators. We expect that the proposed prediction method can be applied to more solar power plants to improve the accuracy of generation forecasts and the generation performance of the system.

2. Related Work

In recent years, data mining and artificial intelligence techniques have been applied to energy applications [28,29,30], especially to the prediction of renewable solar energy [31,32,33,34,35]. Many renewable energy forecasting problems have been addressed with multilayer perceptron neural networks, radial basis function neural networks, and support vector machines [31,32,33,34,35]. In addition, deep learning improves on earlier AI models in its ability to learn hierarchical features, providing an efficient representation of the data set and improving generalization [36,37,38]. According to the survey in [39], the forecast models applied to solar power generation in recent years, whether built with machine learning or deep learning, can be roughly divided into physical models [40], statistical models [41], and hybrid models [42,43].

The physical model is based mainly on analyzing and constructing a mathematical model of the energy conversion process in the solar cell module. Generally, module temperature and solar irradiance, related linearly to output, serve as the main parameters, and forecasts from a local weather station are fed into mathematical formulas to calculate and predict power generation. A certain understanding of the physical characteristics of solar cell modules is therefore required to achieve accurate predictions.
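
As an illustration of this kind of physical model, the sketch below scales rated power linearly with plane-of-array irradiance and corrects it linearly for cell temperature. It assumes a temperature coefficient of −0.5%/°C (the figure quoted in the Introduction [11]) relative to the standard test conditions of 1000 W/m² and 25 °C; practical physical models usually add further loss terms, and the inputs here are hypothetical.

```python
G_STC = 1000.0   # W/m^2, standard test condition irradiance
T_STC = 25.0     # deg C, standard test condition cell temperature

def pv_power_kw(p_rated_kw, irradiance_w_m2, cell_temp_c, gamma_per_c=-0.005):
    """Rated power scaled linearly by irradiance, with a linear cell-temperature correction."""
    irradiance_factor = irradiance_w_m2 / G_STC
    temperature_factor = 1.0 + gamma_per_c * (cell_temp_c - T_STC)
    return p_rated_kw * irradiance_factor * temperature_factor

# Hypothetical: 6388 kW rated, 800 W/m^2 on the array, cells at 45 deg C.
print(pv_power_kw(6388.0, 800.0, 45.0))
```

The 20 °C rise above 25 °C costs 10% of output here, which is why module temperature appears alongside irradiance as a main input.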

Statistical models fall into two main categories: time series [44] and statistical learning. Time-series models are built by collecting and studying historical monitoring data and then identifying suitable data structures (such as autocorrelation, trend, or seasonality); commonly used methods include SLT, TLSM, ARMA, and ARIMA. Statistical learning prediction models include MLR, SVM [45], and artificial neural networks (ANNs), which can take multiple feature parameters as input and can be validated over the passage of time. An ANN can improve prediction accuracy through pre-processing and post-processing of historical data. Models developed from ANNs include ENN, RNN, GRNN, RBFNN, DRNN, GRU, and LSTM; among them, LSTM is one of the most widely used.
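
To make "autocorrelation" and "seasonality" concrete for hourly daylight data like that used in this study, the sketch below computes the sample autocorrelation function. The series is synthetic and hypothetical: 30 identical days of 12 daylight records, so the lag-12 autocorrelation (same hour, next day) comes out at exactly 29/30.

```python
import numpy as np

def acf(x, lag):
    """Sample autocorrelation at `lag`, normalised by the lag-0 sum of squares."""
    x = np.asarray(x, dtype=float)
    d = x - x.mean()
    return float(np.sum(d[:len(d) - lag] * d[lag:]) / np.sum(d * d))

# Hypothetical: 30 days x 12 daylight hours with the same bell-shaped daily profile.
hours = np.arange(12)
day_profile = np.sin(np.pi * (hours + 0.5) / 12)
series = np.tile(day_profile, 30)

print(acf(series, 12))   # strong daily (12-record) periodicity
```

A time-series model such as ARIMA would exploit exactly this kind of structure; real data would show a lower, weather-dependent value.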

Finally, hybrid models (such as PHANN) are constructed from combinations of physical and statistical models. They may combine physical and statistical methods, such as GA or CSRM combined with an ANN, or purely statistical methods, such as ARIMA with SVM, ARMA with KNN, or FKM with RBFNN, in an attempt to obtain the best predictive performance.

Having reviewed these categories of solar power prediction models, and considering that the output power in photovoltaic energy conversion varies mainly with the passage of time, we further surveyed studies from 2017 to 2019 that applied deep neural network techniques to power generation prediction for solar power plants. Their experimental results are summarized in Table 1.

Table 1.

2017–2019 Experimental results based on deep learning and applied to solar power prediction.

Karar Mahmoud et al. [46] designed five recurrent neural network models based on historical power generation data and LSTM to predict hourly PV output power. Although their experiments did not use any weather data, the deep learning models built on historical data still reduced the prediction error compared with other methods (MLR, BRT).

Junseo Son et al. [47] designed a six-layer feed-forward deep neural network (DNN), applied it to a grid-connected solar power plant in Seoul, South Korea, and predicted daily power generation from the previous day's weather information. The method needs no on-site sensor information, yet its predictions were comparable to or better than those of traditional prediction methods, and it also outperformed an ANN model.

Finally, Donghun Lee et al. [48] used generation data from a solar power plant in Gumi City, South Korea, and compared different models with solar irradiance, ambient temperature, and a turbidity index as inputs. Their results show that the LSTM model outperforms models based on DNN, ANN, and ARIMA, especially when the output power is unstable. Because LSTM builds on Rumelhart's research on recurrent networks [49] and its structure performs recursion along the time axis, it is the main prediction method used in this study.

In this work, weather parameters are used to predict the output of the solar power system at the Xinying site in Tainan City. All input parameters are selected systematically based on Pearson correlation coefficient analysis. Different combinations of weather metrics are fed to the LSTM model to evaluate and compare performance metrics. Finally, the weather-parameter set with the best performance is used with different artificial neural network models to derive prediction models and analyze the prediction accuracy of each. Section 3 analyzes the forecast metrics, describes the research methods, and introduces the deep learning models evaluated; Section 4 uses the performance metrics to compare accuracy between models.

3. Method and Model

Because the selection of key feature variables has the most obvious impact on a model's prediction performance, variables with low correlation were eliminated at the start of the experiment. However, a single experiment using only the initially selected variables cannot show whether the prediction, or the chosen algorithm, is good. To make the model's predictions more accurate, subsequent experiments therefore continued to try different algorithms, with the aim of obtaining optimized results and using the feature variables of the optimized model in further research. The study is accordingly divided into two stages of experiments: stage 1 compares model performance across different feature variables, and stage 2 tests prediction performance with different algorithms.

We first analyzed a large amount of historical data from the monitoring system at the solar research site, added data from relevant government agencies and weather stations, and correlated the candidate feature variables with solar generation. The correlation analysis quantifies how each forecast parameter relates to the others and to solar power generation. We then applied multiple deep learning techniques to derive prediction models for solar generation from various forecast metrics and analyzed the prediction accuracy of each model.

3.1. Research Process

In this work, we mainly used data recorded by the Environmental Protection Administration (Taiwan), the Central Weather Bureau (Taiwan), and the monitoring system installed at the research site. As mentioned previously, stage 1 compares model performance across different feature variables, and stage 2 tests prediction performance with different algorithms. Neural networks are the core technique for predicting generated electricity. The research process first pre-processes the historical data, including deleting abnormal or missing data and normalizing the data, and then analyzes and tests the correlation coefficients of the feature variables. The selected feature variables are then fed into the neural network model to predict and validate solar generation. Finally, we compare the key performance indicators obtained with different feature variables on LSTM and discuss the results alongside the SimpleRNN, GRU, and bidirectional models.

3.2. Data Collection

The monitoring data used in this paper come mainly from the monitoring system we installed at the research site, which connects numerous sensors distributed across the site through a communication network. The main on-site equipment comprises a real-time power generation data collector for the solar cell modules; a pyranometer measuring solar irradiance, the main factor affecting module maximum power, together with temperature measurement for the array modules; and a hygro-thermograph and an anemometer. With this equipment, we can analyze the correlation between atmospheric environmental factors and power generation.

To supplement the environmental and climate factors not measured on site, we collected historical monitoring data from the environmental observation station of the Environmental Protection Administration and from the Central Weather Bureau, including wind direction, ozone, particulate matter, sulphur dioxide, and rainfall. By supplementing the original monitoring data in this way, we aim to find potential factors for solar power generation prediction and thereby improve the prediction results.

Installation Situation of the Solar Power Plant

The site selected for this study is a solar power plant in southern Taiwan (Tainan City). The total land area is about 7.1 hectares, and the plant, built on idle vacant land, has an installed capacity of 6388 kWp. A central solar inverter converts the DC output into AC, and a transformer then steps the voltage up to 11.4 kV for connection to the Taipower grid. The average annual generation is estimated at approximately 9.02 million kWh, equivalent to the basic annual electricity consumption of about 2580 households.
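
As a quick consistency check on these plant figures, the sketch below derives the specific yield, the capacity factor, and the implied consumption per household. The inputs are the capacity, annual-generation, and household figures given above; the 8760 h year and the derived quantities are our own arithmetic, not values reported by the plant.

```python
CAPACITY_KWP = 6388     # installed capacity (kWp)
ANNUAL_KWH = 9.02e6     # estimated annual generation (kWh)
HOUSEHOLDS = 2580       # households supplied for one year
HOURS_PER_YEAR = 8760   # assumption: non-leap year

specific_yield = ANNUAL_KWH / CAPACITY_KWP          # kWh generated per kWp per year
capacity_factor = specific_yield / HOURS_PER_YEAR   # average output as a share of nameplate
kwh_per_household = ANNUAL_KWH / HOUSEHOLDS         # implied annual use per household

print(f"specific yield  : {specific_yield:.0f} kWh/kWp/yr")
print(f"capacity factor : {capacity_factor:.1%}")
print(f"per household   : {kwh_per_household:.0f} kWh/yr")
```

The figures work out to roughly 1412 kWh/kWp per year, a capacity factor of about 16.1%, and about 3496 kWh per household per year.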

3.3. Normalization and Feature Data Analysis

The data set collected in this research covers 1 November 2018 to 31 January 2020, a total of 457 days. Because the monitoring system and sensors occasionally malfunction or fail during data collection, interrupted and abnormal data often appear. During normalization, we therefore rearranged the data fields from the Environmental Protection Administration and the weather station using matrix transformations, combined them with the monitoring data from the research site, and finally deleted abnormal feature data and incomplete columns. Because solar generation requires daylight, we kept only the data collected between 06:00 and 17:00, and then analyzed the associations between the features using the Pearson correlation coefficient. After the weakly correlated features were discarded, the remaining feature data were used as the training, testing, and prediction samples for power generation prediction.
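
A minimal sketch of three of the steps above, assuming hourly records with an hour-of-day field: keeping the 06:00 to 17:00 records (12 per day, matching the 12 h per day reported in Section 4), dropping rows with missing values, and normalizing each feature. The text does not name the normalization method, so min-max scaling fitted on the training portion only is an assumption here, and the data are synthetic.

```python
import numpy as np

def daylight_mask(hours, start=6, end=17):
    """True for records whose hour of day lies in [start, end], inclusive."""
    hours = np.asarray(hours)
    return (hours >= start) & (hours <= end)

def fit_min_max(X):
    """Column-wise minimum and range; fit on training data only to avoid leakage."""
    X = np.asarray(X, dtype=float)
    lo = X.min(axis=0)
    span = X.max(axis=0) - lo
    span[span == 0] = 1.0   # constant columns map to 0 instead of dividing by zero
    return lo, span

def apply_min_max(X, lo, span):
    return (np.asarray(X, dtype=float) - lo) / span

# One synthetic day: column 0 is an irradiance-like feature, column 1 has a dropout at 09:00.
hours = np.arange(24)
X = np.column_stack([hours * 10.0, np.where(hours == 9, np.nan, hours * 1.0)])

keep = daylight_mask(hours) & ~np.isnan(X).any(axis=1)   # daylight rows with no missing values
X_day = X[keep]
lo, span = fit_min_max(X_day)
X_norm = apply_min_max(X_day, lo, span)
```

In a real pipeline `fit_min_max` would be called on the training split and `apply_min_max` reused, unchanged, on the test split.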

In statistics, the correlation coefficient, denoted r, measures the strength of the linear association between two variables. The solar power prediction data set is a continuous time series that records the feature data every hour. This work uses the Pearson product-moment correlation coefficient to measure the linear correlation between two variables X and Y: the covariance of the two variables divided by the product of their standard deviations, which always lies between −1 and +1. For the population it is defined as follows:

$$\rho_{X,Y} = \frac{\operatorname{cov}(X,Y)}{\sigma_X \sigma_Y} = \frac{E\left[(X-\mu_X)(Y-\mu_Y)\right]}{\sigma_X \sigma_Y}$$

Estimating the same quantity from n paired samples $(x_i, y_i)$, with sample means $\bar{x}$ and $\bar{y}$ and sample standard deviations $s_X$ and $s_Y$, gives the sample correlation coefficient r. Equivalently, r is the mean of the products of the standard scores of X and Y:

$$r = \frac{\sum_{i=1}^{n}(x_i-\bar{x})(y_i-\bar{y})}{\sqrt{\sum_{i=1}^{n}(x_i-\bar{x})^2}\,\sqrt{\sum_{i=1}^{n}(y_i-\bar{y})^2}} = \frac{1}{n-1}\sum_{i=1}^{n}\left(\frac{x_i-\bar{x}}{s_X}\right)\left(\frac{y_i-\bar{y}}{s_Y}\right)$$

The Pearson product-moment correlation coefficient therefore shows whether the data are approximately linear or scattered. When the data points are widely scattered with no linear trend, the coefficient approaches 0; when they lie close to a straight line with a positive slope, it approaches +1.0; and when they lie close to a straight line with a negative slope, it approaches −1.0.
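
The two forms of r above can be checked directly. The sketch below implements both the covariance form and the standard-score form; the irradiance and power readings are hypothetical, chosen to lie exactly on a line so the result is ±1.

```python
import numpy as np

def pearson_r(x, y):
    """Sum of co-deviations divided by the product of root sums of squared deviations."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    dx, dy = x - x.mean(), y - y.mean()
    return float((dx * dy).sum() / np.sqrt((dx ** 2).sum() * (dy ** 2).sum()))

def pearson_r_zscores(x, y):
    """Equivalent form: standard scores (n - 1 denominators) multiplied and averaged over n - 1."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    zx = (x - x.mean()) / x.std(ddof=1)
    zy = (y - y.mean()) / y.std(ddof=1)
    return float((zx * zy).sum() / (len(x) - 1))

# Hypothetical irradiance (W/m^2) and power (kW) readings on a perfect line.
irradiance = np.array([0.0, 200.0, 400.0, 600.0, 800.0])
power = 0.15 * irradiance + 2.0
print(pearson_r(irradiance, power))    # positive slope
print(pearson_r(irradiance, -power))   # negative slope
```

For real data, `numpy.corrcoef` returns the same value and is the simpler choice.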

As shown in Table 2, the sign of the correlation coefficient indicates a positive or negative correlation, and its magnitude indicates the strength of the correlation. Ignoring the sign, the absolute value can be divided into bands: below 0.1 indicates almost no correlation, 0.1–0.29 low correlation, 0.3–0.69 medium correlation, 0.7–0.89 high correlation, and 0.9–1 almost complete correlation.

Table 2.

Pearson correlation coefficient comparison table.
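
The bands in Table 2 translate into a simple lookup. The sketch assumes each band is half-open (for example, 0.1 ≤ |r| < 0.3 counts as low), which is one reasonable reading of the 0.1–0.29 style ranges in the text.

```python
def correlation_strength(r):
    """Label the strength of a correlation coefficient using the bands of Table 2 (sign ignored)."""
    a = abs(r)
    if a < 0.1:
        return "almost none"
    if a < 0.3:
        return "low"
    if a < 0.7:
        return "medium"
    if a < 0.9:
        return "high"
    return "almost complete"

for r in (0.05, -0.25, 0.5, -0.75, 0.95):
    print(r, correlation_strength(r))
```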

3.4. Time-Sequential Predictive Algorithm (RNN, LSTM, GRU, and Related Models)

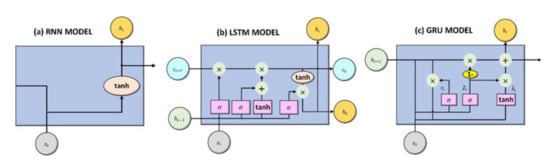

Different time-sequential prediction models are used to compare their ability to predict generated solar power. In this study, RNN, LSTM, and GRU are applied to explore efficiency and accuracy, and bidirectional training combined with these sequential models is also evaluated. The general structure of RNN, LSTM, and GRU is shown in Figure 1.

Figure 1.

The topological structure of RNN, LSTM, and GRU for short-term prediction: (a) the recurrent neural network (RNN), (b) the long short-term memory (LSTM), and (c) the gated recurrent unit (GRU).

The recurrent neural network (RNN), which can be regarded as a special case of the more general recursive neural network, builds mainly on Rumelhart's research [49]. Because it recurses along the time axis, the gradient at step t propagates back to t−1, t−2, and so on. Traditional RNNs struggle to capture long-term memory: the backpropagated gradient is a product of many repeated factors, so inputs early in the sequence have only a weak influence on the result. This is the vanishing gradient problem, which causes long-term memory to be overwhelmed by short-term memory. Consequently, although RNNs are designed to support sequences of arbitrary length, in practice they can often handle only fairly short sequences [50].
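
The vanishing-gradient effect can be seen in a one-unit RNN. The sketch below is a toy scalar example with hypothetical weights, not one of the models used in this study: it applies the chain rule through time, where each step multiplies the gradient by w·(1 − h_t²), so with |w| < 1 the influence of the initial state shrinks geometrically with sequence length.

```python
import math

def grad_through_time(w, u, xs, h0=0.0):
    """Scalar RNN h_t = tanh(w*h_{t-1} + u*x_t); returns dh_T/dh_0 by the chain rule."""
    h, grad = h0, 1.0
    for x in xs:
        h = math.tanh(w * h + u * x)
        grad *= w * (1.0 - h * h)   # dh_t/dh_{t-1} = w * tanh'(.) = w * (1 - h_t^2)
    return grad

for T in (5, 20, 50):
    print(T, grad_through_time(w=0.5, u=1.0, xs=[1.0] * T))
```

Because every factor here is at most 0.5 in magnitude, the gradient after 50 steps is bounded by 0.5^50, far too small to drive learning from the start of the sequence.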

The long short-term memory (LSTM) network, a special type of RNN, has a significant advantage in capturing dependencies between related events separated by long time intervals [51], and it has performed well in many renewable energy prediction applications [52,53]. The input, output, and forget gates of an LSTM memory block control how information enters, leaves, and persists in the memory, allowing the network to update its state continuously as new data arrive, which is advantageous for short-term renewable energy prediction.
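
The gating described above can be written out for a single time step. The sketch below uses the standard LSTM formulation in NumPy (gate order i, f, g, o, as in common frameworks); the sizes (14 features, 8 units, 12 hourly steps) and random weights are hypothetical choices for illustration only.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM step. W: (4H, D), U: (4H, H), b: (4H,); row blocks are i, f, g, o."""
    H = h_prev.shape[0]
    a = W @ x + U @ h_prev + b
    i = sigmoid(a[:H])            # input gate: how much new content to write
    f = sigmoid(a[H:2 * H])       # forget gate: how much old memory to keep
    g = np.tanh(a[2 * H:3 * H])   # candidate content
    o = sigmoid(a[3 * H:])        # output gate: how much memory to expose
    c = f * c_prev + i * g        # updated cell (memory) state
    h = o * np.tanh(c)            # updated hidden state
    return h, c

rng = np.random.default_rng(0)
D, H, T = 14, 8, 12               # hypothetical sizes
W = rng.normal(scale=0.1, size=(4 * H, D))
U = rng.normal(scale=0.1, size=(4 * H, H))
b = np.zeros(4 * H)
h, c = np.zeros(H), np.zeros(H)
for x in rng.normal(size=(T, D)):
    h, c = lstm_step(x, h, c, W, U, b)
```

When the forget gate saturates near 1 and the input gate near 0, the cell state passes through unchanged. This additive path is what lets the LSTM keep information over many steps.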

In addition, Chung et al. [54] studied the gated recurrent unit (GRU), which merges the input and forget gates of the LSTM into a single update gate. This lighter-weight variant of the LSTM can speed up execution and save computational resources. On a range of data sets and tasks, both LSTM and GRU learn significantly better than the traditional RNN.
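
The sketch below shows one GRU step in NumPy and counts recurrent parameters to make "lighter-weight" concrete. It follows one common convention (new state = (1 − z)·h_prev + z·candidate; some papers and frameworks swap z and 1 − z), and the layer sizes are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def gru_step(x, h_prev, W, U, b):
    """One GRU step. W: (3H, D), U: (3H, H), b: (3H,); row blocks are z, r, n."""
    H = h_prev.shape[0]
    a = W @ x + b
    z = sigmoid(a[:H] + U[:H] @ h_prev)                 # update gate (replaces LSTM input + forget)
    r = sigmoid(a[H:2 * H] + U[H:2 * H] @ h_prev)       # reset gate
    n = np.tanh(a[2 * H:] + U[2 * H:] @ (r * h_prev))   # candidate state
    return (1.0 - z) * h_prev + z * n                   # blend old state and candidate

def gated_cell_params(n_blocks, D, H):
    """Input weights + recurrent weights + one bias vector per gate block."""
    return n_blocks * (D * H + H * H + H)

D, H = 14, 64
lstm_params = gated_cell_params(4, D, H)   # i, f, g, o blocks
gru_params = gated_cell_params(3, D, H)    # z, r, n blocks
print(lstm_params, gru_params)

rng = np.random.default_rng(0)
W = rng.normal(scale=0.1, size=(3 * H, D))
U = rng.normal(scale=0.1, size=(3 * H, H))
b = np.zeros(3 * H)
h = np.zeros(H)
for x in rng.normal(size=(12, D)):
    h = gru_step(x, h, W, U, b)
```

With three gate blocks instead of four, the GRU carries three quarters of the LSTM's recurrent parameters. Framework variants differ slightly; for example, the Keras `GRU` default (`reset_after=True`) keeps a second bias vector, giving 15360 parameters for these sizes.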

In addition to the three models described above, this study uses the bidirectional RNN proposed by Schuster and Paliwal in 1997 [55], which combines two regular recurrent neural networks. A bidirectional RNN extends training to both the positive and negative time directions, and the results of the two directions jointly determine the network's output. An ordinary recurrent network predicts in one direction only, from front to back, so each prediction can use only earlier information, whereas some computations may benefit from information at later time steps. A bidirectional RNN therefore runs two one-way recurrent networks at the same time and combines their operations: the input is fed to both directions simultaneously, and the final output is determined jointly by the two unidirectional networks. In this study, the Bidirectional-SimpleRNN, Bidirectional-LSTM, and Bidirectional-GRU are used to predict generated solar power, and their efficiency and accuracy are evaluated and compared.
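
The bidirectional idea reduces to running one RNN over the sequence and a second RNN over the time-reversed sequence, then re-aligning and concatenating their states. The NumPy sketch below uses simple tanh RNNs with hypothetical random weights; concatenation is the same default merge used by, for example, Keras's `Bidirectional` wrapper.

```python
import numpy as np

def simple_rnn(xs, W, U, b):
    """Run h_t = tanh(W x_t + U h_{t-1} + b) over a (T, D) sequence; returns (T, H) states."""
    h = np.zeros(U.shape[0])
    states = []
    for x in xs:
        h = np.tanh(W @ x + U @ h + b)
        states.append(h)
    return np.stack(states)

def bidirectional_rnn(xs, fwd, bwd):
    """Concatenate forward states with backward states re-aligned to the same time index."""
    h_fwd = simple_rnn(xs, *fwd)
    h_bwd = simple_rnn(xs[::-1], *bwd)[::-1]
    return np.concatenate([h_fwd, h_bwd], axis=1)

rng = np.random.default_rng(0)
T, D, H = 12, 14, 8   # hypothetical: 12 hourly steps, 14 features, 8 units per direction

def init_params():
    return (rng.normal(scale=0.3, size=(H, D)), rng.normal(scale=0.3, size=(H, H)), np.zeros(H))

fwd, bwd = init_params(), init_params()
xs = rng.normal(size=(T, D))
out = bidirectional_rnn(xs, fwd, bwd)   # shape (T, 2H)
```

Changing only the last input leaves every earlier forward state untouched but alters the backward state at t = 0, which is exactly the "information from the future" that the bidirectional model adds.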

3.5. Predictive Model Parameters and Cross Validation

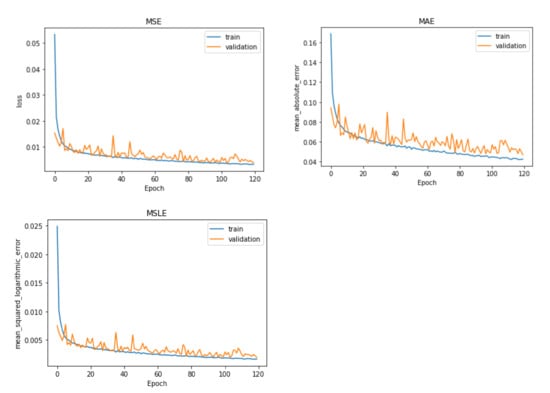

Most regression problems use the mean squared error (MSE), the average of the squared differences between the actual and predicted values. We also use other functions, such as the mean absolute error (MAE), the average of the absolute differences between the actual and predicted values, and the mean squared logarithmic error (MSLE), the average of the squared differences between the log-transformed actual and predicted values.

Let n be the number of samples, $y_i$ the actual value, and $\hat{y}_i$ the predicted value. Each of the following indicators is non-negative, and a larger value means larger errors:

$$\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i-\hat{y}_i\right)^2$$

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i-\hat{y}_i\right|$$

$$\mathrm{MSLE} = \frac{1}{n}\sum_{i=1}^{n}\left(\log(1+y_i)-\log(1+\hat{y}_i)\right)^2$$
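
The three error measures translate directly into NumPy. The sketch follows the formulas above (MSLE uses log(1 + y), the usual convention, so values must exceed −1); the short series in the example is hypothetical.

```python
import numpy as np

def mse(y, y_hat):
    y, y_hat = np.asarray(y, dtype=float), np.asarray(y_hat, dtype=float)
    return float(np.mean((y - y_hat) ** 2))

def mae(y, y_hat):
    y, y_hat = np.asarray(y, dtype=float), np.asarray(y_hat, dtype=float)
    return float(np.mean(np.abs(y - y_hat)))

def msle(y, y_hat):
    """Mean squared error of log(1 + value); np.log1p(v) computes log(1 + v)."""
    y, y_hat = np.asarray(y, dtype=float), np.asarray(y_hat, dtype=float)
    return float(np.mean((np.log1p(y) - np.log1p(y_hat)) ** 2))

y_true, y_pred = [1.0, 2.0, 3.0], [1.0, 2.0, 5.0]   # one prediction off by 2
print(mse(y_true, y_pred), mae(y_true, y_pred), msle(y_true, y_pred))
```

MSE penalizes the single large miss more heavily than MAE does, while MSLE measures relative rather than absolute error.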

4. Experiments and Discussion

The research data come from three main sources: ambient air quality data from the Environmental Protection Administration, weather-station observations from the Central Weather Bureau, and the on-site monitoring system of the solar power plant. During data collection, we found that the three sources store and present their original data in different ways. The first step was therefore to convert each source into arrays and stack them, then merge all the data on a common timeline and cross-check them manually. Finally, missing or abnormal records were logged and eliminated, and the remaining data were consolidated into a database.
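
A minimal pandas sketch of the merge step, assuming each source can be exported as an hourly table with a timestamp column. The column names and values are hypothetical; an outer join on the shared timeline keeps every timestamp, so gaps show up as missing values that can be inspected manually before the incomplete rows are dropped.

```python
import pandas as pd

# Hypothetical hourly extracts from the three sources; column names are illustrative only.
site = pd.DataFrame({"time": pd.date_range("2019-01-01 06:00", periods=4, freq="60min"),
                     "power_kw": [10.0, 250.0, 900.0, 1500.0],
                     "module_temp_c": [18.0, 22.0, 31.0, 38.0]})
weather = pd.DataFrame({"time": pd.date_range("2019-01-01 06:00", periods=4, freq="60min"),
                        "rh_pct": [88.0, 80.0, 71.0, None]})        # missing reading at 09:00
air = pd.DataFrame({"time": pd.date_range("2019-01-01 07:00", periods=3, freq="60min"),
                    "ozone_ppb": [21.0, 27.0, 33.0]})               # no record at 06:00

merged = (site.merge(weather, on="time", how="outer")
              .merge(air, on="time", how="outer")
              .sort_values("time"))
incomplete = merged[merged.isna().any(axis=1)]    # rows to cross-check by hand
clean = merged.dropna().reset_index(drop=True)    # rows kept for modelling
```

Keeping the `incomplete` frame mirrors the text's step of recording lost or abnormal situations before eliminating them.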

At the beginning of this study, we encountered many difficulties in data collection. For example, the data fields were incomplete, and multiple data sources had to be compared with one another to fill in the missing fields. Because of the missing fields, we tried many data sources, including government agencies and other providers of real-time and historical climate queries. Although these sources appeared relatively complete, most presented their data graphically, which made data conversion somewhat time-consuming. Only temperature and rainfall were provided as explicit values; the rest had to be found in other data sources or calculated manually, so we had to collect data from additional sources.

The data used in this research are therefore the historical data collected from these three sources from 7 November 2018 to 28 January 2020 (448 days), between 06:00 and 17:00 each day (12 h), and they serve as the data for the neural network models. Manual checks found abnormal data on 39 days during the collection period; after these were eliminated, 409 days of data remained.

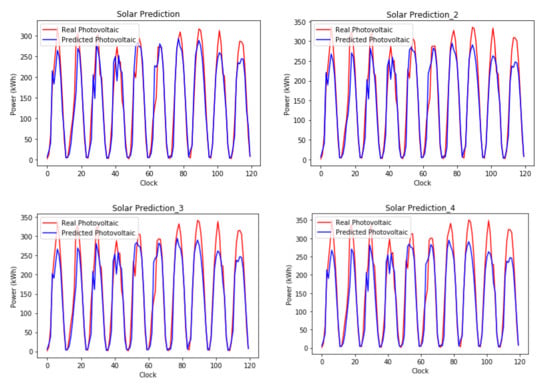

From the consolidated data, the earliest records, roughly 80% (7 November 2018 to 31 October 2019, 3948 records), were used as training data, and the remaining 20% (1 November 2019 to 28 January 2020, 960 records) were used for testing. Ten days of the testing data (1–10 January 2020, 120 records) were then used to visualize the forecast results.
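
The split above is chronological rather than random, so no future data leaks into training. The sketch below shows such a split for time-stamped records and checks the reported counts against 12 records per retained day; `chronological_split` is a hypothetical helper, and the two records in the example are synthetic.

```python
from datetime import datetime

def chronological_split(records, split_time):
    """Split (timestamp, value) records at split_time without shuffling."""
    train = [r for r in records if r[0] < split_time]
    test = [r for r in records if r[0] >= split_time]
    return train, test

HOURS_PER_DAY = 12                        # 06:00 to 17:00 hourly records per retained day
TRAIN_RECORDS, TEST_RECORDS = 3948, 960   # counts reported in the text
train_days = TRAIN_RECORDS // HOURS_PER_DAY
test_days = TEST_RECORDS // HOURS_PER_DAY
train_share = TRAIN_RECORDS / (TRAIN_RECORDS + TEST_RECORDS)
print(train_days, test_days, f"{train_share:.1%}")

# Synthetic example of the split itself, at the 1 November 2019 boundary.
records = [(datetime(2019, 10, 31, 17), 1.0), (datetime(2019, 11, 1, 6), 2.0)]
train, test = chronological_split(records, datetime(2019, 11, 1))
```

The counts correspond to 329 training days and 80 test days, matching the 409 retained days, and the training share is about 80.4% of the 4908 records.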

Since the study is divided into two stages of experiments, stage 1 compares the effect of different feature variables on the model, and stage 2 tests how the choice of model affects the performance indicator. All experimental results use the coefficient of determination (R-squared, R²) as the performance indicator to judge how well a model fits. Its numerator is the sum of squared differences between the actual and predicted values, and its denominator is the sum of squared differences between the actual values and their mean $\bar{y}$:

$$R^2 = 1 - \frac{\sum_{i=1}^{n}\left(y_i-\hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i-\bar{y}\right)^2}$$

R² is at most 1, and values close to 1 indicate a good fit. Values normally fall in [0, 1], although R² becomes negative when a model fits worse than simply predicting the mean.
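
The R² definition can be checked on three small cases: a perfect prediction, a constant prediction equal to the mean, and a prediction worse than the mean. The values are hypothetical.

```python
import numpy as np

def r_squared(y, y_hat):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y = np.asarray(y, dtype=float)
    y_hat = np.asarray(y_hat, dtype=float)
    ss_res = np.sum((y - y_hat) ** 2)      # numerator: actual vs predicted
    ss_tot = np.sum((y - y.mean()) ** 2)   # denominator: actual vs their mean
    return float(1.0 - ss_res / ss_tot)

y = [1.0, 2.0, 3.0]
print(r_squared(y, y))                 # -> 1.0 (perfect fit)
print(r_squared(y, [2.0, 2.0, 2.0]))   # -> 0.0 (always predicting the mean)
print(r_squared(y, [3.0, 2.0, 1.0]))   # -> -3.0 (worse than the mean)
```

scikit-learn's `r2_score` implements the same definition and can serve as a cross-check.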

4.1. Result of Feature Selection

Because the selection of feature variables has the most obvious impact on prediction performance, items whose correlation coefficients lay between −0.1 and +0.1 were eliminated at the start of the experiment, and the on-site monitoring records were used for the first experimental program. Program A-1 achieved R² = 0.849865, which shows that using only the on-site monitoring records as the experimental sample gives predictions that still need improvement. To make the model's predictions more accurate, subsequent programs therefore tried different feature data samples, with the aim of obtaining the optimal result and identifying the feature variables of the optimal model for further research.

To improve on this program, we first combined external meteorological data with the on-site monitoring information; after training, program A-2 achieved R² = 0.853013, a modest improvement over A-1, which used only on-site monitoring data. Second, combining external environmental data with the on-site monitoring information gave R² = 0.860999 for program A-3. Third, combining both the external meteorological and environmental data with the on-site monitoring information gave R² = 0.865523 for program A-4. Comparing these results shows that adding feature categories effectively improves the model's predictions.

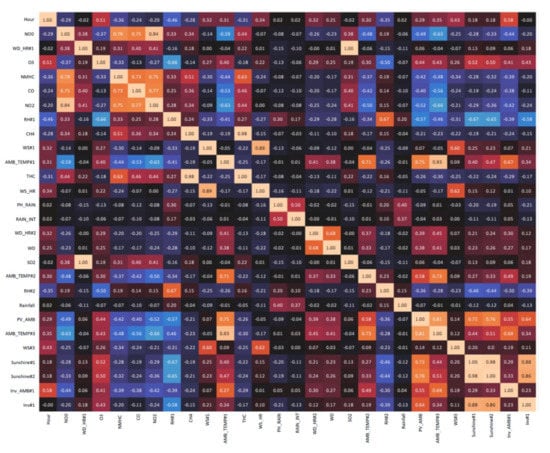

Because program A-4 still left room for improvement, the results of the earlier Pearson product-moment correlation analysis were used for screening, with six screening ranges defined by correlation thresholds of ±0.2 and ±0.3. Figure 2 shows the resulting map of the Pearson correlation coefficient analysis, and Table 3 lists the feature variables of the data set after the analysis. Among the six programs (A-5 to A-10), program A-5, which deletes all feature variables with correlation coefficients between −0.2 and +0.2, achieved R² = 0.880833. When the screening range was widened to ±0.3, only program A-10, which deletes all feature variables with correlation coefficients between 0 and +0.3, obtained a comparatively better result, R² = 0.845699. Based on these results, program A-11 deletes the feature variables with correlation coefficients between −0.2 and +0.3; after training and prediction, it achieved the best performance indicator of this stage, R² = 0.890179. Its 14 feature variables are recording time, inverter device temperature, solar cell module temperature, atmospheric temperature, horizontal insolation, insolation at the module's tilt angle, and relative humidity from the external weather information, together with nitrogen oxides, ozone, non-methane hydrocarbons, nitrogen dioxide, relative humidity, temperature, and acid rain value from the external environment information.
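
The screening rule behind programs A-5 and A-11 can be sketched as "drop every feature whose correlation with generated power falls inside a band". The correlations below are hypothetical placeholders, not the values behind Table 3, and treating the band limits as inclusive is an assumption.

```python
def screen_features(correlations, low, high):
    """Keep features whose correlation r with power lies outside the band [low, high]."""
    return [name for name, r in correlations.items() if not (low <= r <= high)]

# Hypothetical correlations with generated power (NOT the values in Table 3).
corr = {"horizontal_insolation": 0.92, "module_temp": 0.65, "wind_speed": 0.12,
        "so2": -0.05, "relative_humidity": -0.45, "pm10": 0.25}

a5_like = screen_features(corr, -0.2, 0.2)    # A-5 style: drop -0.2 <= r <= +0.2
a11_like = screen_features(corr, -0.2, 0.3)   # A-11 style: drop -0.2 <= r <= +0.3
print(a5_like)
print(a11_like)
```

The asymmetric A-11 band removes weak positive correlations more aggressively than weak negative ones, which is why `pm10` survives the first screen but not the second.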

Figure 2.

The resulting map of the Pearson correlation coefficient analysis.

Table 3.

List of feature variables of data set used after Pearson correlation coefficient analysis.

After multiple experiments, A-11 was identified as the best program of this stage, and its remaining feature variables were reviewed again. Some of the items were repetitive in nature, so program A-12 removes two redundant fields from the A-11 feature set: the relative humidity of the weather information and the temperature of the environmental information. Training and prediction with program A-12 gave R² = 0.88494, which still did not exceed the performance indicator of program A-11.
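Repetitive items of this kind can be spotted by correlating the selected features with each other rather than with the output: a pair whose mutual Pearson coefficient is close to ±1 carries nearly the same, linearly dependent information. A minimal sketch, assuming a 0.95 threshold and made-up humidity and temperature series; the paper does not state which test or threshold was used.

```python
import math
from itertools import combinations


def pearson(x, y):
    """Pearson product-moment correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def redundant_pairs(features, threshold=0.95):
    """Return feature pairs whose absolute mutual correlation is at least threshold."""
    pairs = []
    for (name_a, a), (name_b, b) in combinations(features.items(), 2):
        r = pearson(a, b)
        if abs(r) >= threshold:
            pairs.append((name_a, name_b, round(r, 3)))
    return pairs


# Hypothetical series: the same humidity measured by two sources, plus module temperature.
features = {
    "weather_humidity": [60, 65, 70, 72, 80],
    "env_humidity": [61, 66, 69, 73, 81],
    "module_temp": [30, 42, 51, 47, 35],
}
print(redundant_pairs(features))
```

As the A-12 result shows, a high mutual correlation flags a candidate for removal but does not guarantee that dropping it will improve R².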

We therefore reassembled the equipment data of the remaining 7 on-site inverters, together with the external environmental and weather information and the on-site monitoring data, into 8 training datasets. The best program of this stage, A-11, was adopted as the feature template for the Stage 2 model experiments, in which we sought better solar power predictions by changing the prediction model.
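The dataset sizes reported in the Conclusions fit this layout: 8 inverter datasets of 3948 training samples each give 8 × 3948 = 31584 samples. A minimal sketch of stacking the per-inverter datasets into one training set; the list-of-rows layout, the placeholder rows, and tagging each row with an inverter index are assumptions for illustration.

```python
SAMPLES_PER_INVERTER = 3948  # training samples per inverter dataset (from the paper)
N_INVERTERS = 8


def stack_inverter_datasets(datasets):
    """Concatenate per-inverter row lists, tagging each row with its inverter index."""
    combined = []
    for inverter_id, rows in enumerate(datasets):
        for row in rows:
            combined.append((inverter_id, row))
    return combined


# Placeholder rows standing in for the A-11 feature vectors of each inverter (hypothetical).
datasets = [[None] * SAMPLES_PER_INVERTER for _ in range(N_INVERTERS)]
combined = stack_inverter_datasets(datasets)
print(len(combined))  # prints 31584
```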

The detailed Stage 1 performance indicators are presented in Table 4, and Table 5 summarizes the Stage 1 performance measurement indicators.

Table 4.

Pearson correlation coefficient comparison table.

Table 5.

Summary of experimental performance measurement indicators (Stage 1).

4.2. Experimental Results and Discussion

Besides aggregating feature variables that correlate strongly with solar power generation, the choice of prediction model also affects performance. The most accurate and relevant feature variables are therefore used to compare six neural network models: SimpleRNN, LSTM, GRU, Bi-SimpleRNN, Bi-LSTM, and Bi-GRU. Experimental program A-11, which achieved the best Stage 1 performance indicators, is used to evaluate the effectiveness and accuracy of each model during training. All models started from identical initial configurations, and the differences between their results are discussed below. Table 6 summarizes the Stage 2 performance measurement indicators of the different models across the experimental programs.
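The six models differ only in the recurrent cell and in whether it is wrapped bidirectionally. Below is a minimal NumPy sketch of the forward pass of a GRU, following the update-gate/reset-gate formulation of Chung et al. listed in the references, and of a bidirectional wrapper in the sense of Schuster and Paliwal: a second GRU with its own weights reads the time-reversed sequence, and the two final states are concatenated. The hidden size, the random initialisation, the 24-step window, and the concatenation merge are illustrative assumptions; the paper does not report these settings here, and no training is shown.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def init_gru(input_dim, hidden_dim, rng):
    """Random GRU parameters (illustrative initialisation, not a trained model)."""
    params = {}
    for gate in ("z", "r", "h"):
        params["W_" + gate] = rng.normal(0.0, 0.1, size=(hidden_dim, input_dim))
        params["U_" + gate] = rng.normal(0.0, 0.1, size=(hidden_dim, hidden_dim))
        params["b_" + gate] = np.zeros(hidden_dim)
    return params


def gru_forward(xs, p):
    """Run a GRU over xs (shape: time x features) and return the final hidden state."""
    h = np.zeros(p["b_z"].shape[0])
    for x in xs:
        z = sigmoid(p["W_z"] @ x + p["U_z"] @ h + p["b_z"])  # update gate
        r = sigmoid(p["W_r"] @ x + p["U_r"] @ h + p["b_r"])  # reset gate
        h_cand = np.tanh(p["W_h"] @ x + p["U_h"] @ (r * h) + p["b_h"])  # candidate state
        h = (1.0 - z) * h + z * h_cand  # blend previous and candidate states
    return h


def bi_gru_forward(xs, p_fwd, p_bwd):
    """Bidirectional wrapper: forward pass plus a pass over the reversed sequence."""
    h_fwd = gru_forward(xs, p_fwd)
    h_bwd = gru_forward(xs[::-1], p_bwd)
    return np.concatenate([h_fwd, h_bwd])


rng = np.random.default_rng(0)
n_features, hidden = 14, 8  # 14 A-11 features; the hidden size is hypothetical
window = rng.normal(size=(24, n_features))  # one hypothetical 24-step input window
p_f = init_gru(n_features, hidden, rng)
p_b = init_gru(n_features, hidden, rng)
state = bi_gru_forward(window, p_f, p_b)
print(state.shape)  # prints (16,)
```

Swapping `gru_forward` for a plain RNN or LSTM step gives the other variants; a dense output layer (not shown) would map `state` to the predicted power.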

Table 6.

Summary of experimental performance among different models with measurement indicators (Stage 2).

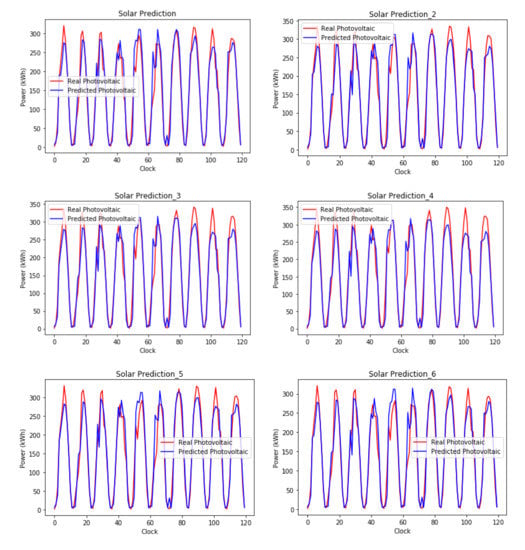

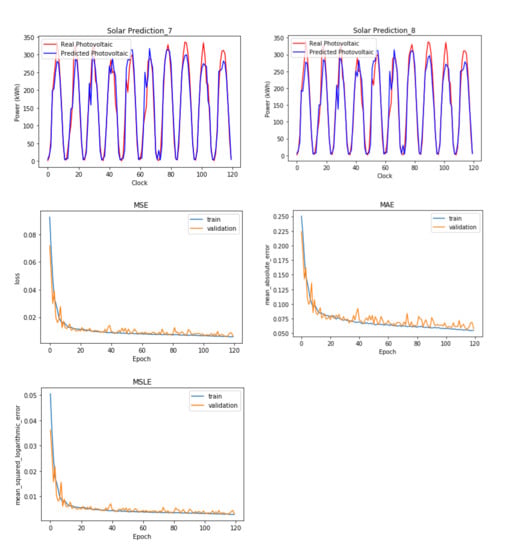

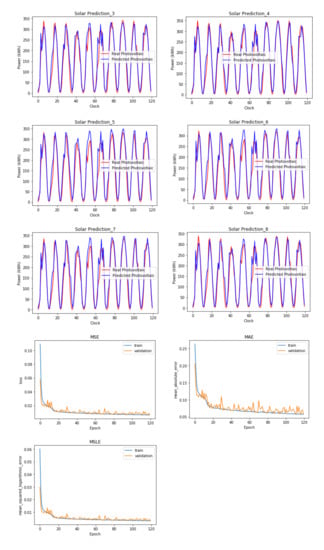

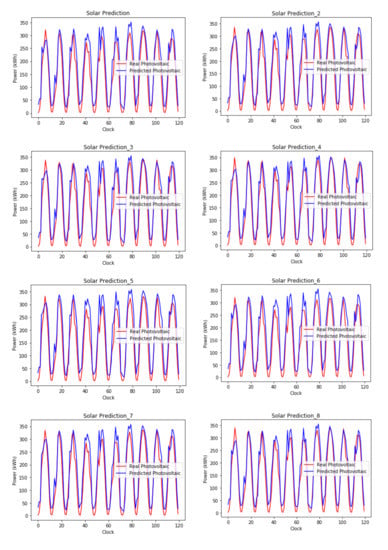

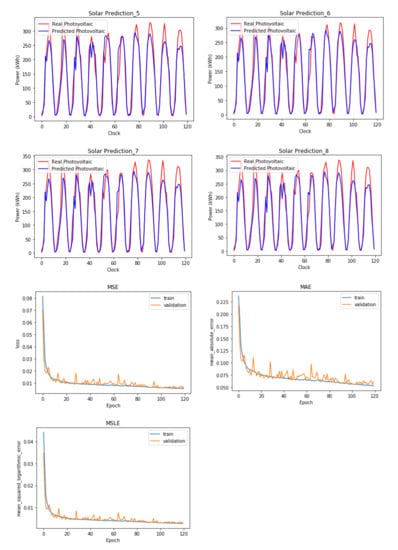

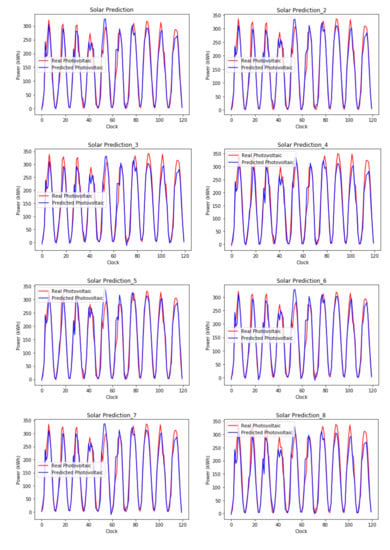

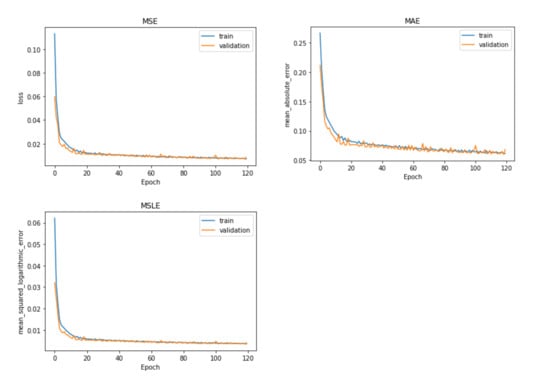

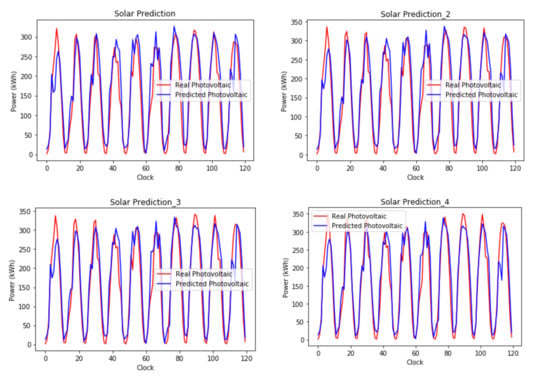

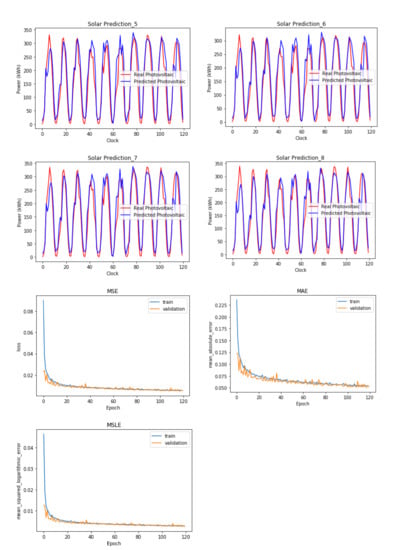

Each neural network model was first run several times, and the best-performing run was selected for discussion. The training processes and solar generation predictions for the different scenarios are shown in Figure 3, Figure 4, Figure 5, Figure 6, Figure 7 and Figure 8.
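The selection step, keeping the best of several runs, can be sketched as follows. The sketch also reports the mean and standard deviation across runs, which quantify the run-to-run variation that the discussion below attributes to Bi-LSTM. The R² values are hypothetical and do not come from Table 6.

```python
import statistics


def summarize_runs(r2_by_run):
    """Best run (the selection used for discussion) plus mean and spread across runs."""
    best_run = max(range(len(r2_by_run)), key=lambda i: r2_by_run[i])
    return {
        "best_run": best_run,
        "best_r2": r2_by_run[best_run],
        "mean_r2": statistics.mean(r2_by_run),
        "stdev_r2": statistics.stdev(r2_by_run),
    }


# Hypothetical R^2 values from five repeated trainings of one model.
runs = [0.912, 0.925, 0.918, 0.931, 0.909]
print(summarize_runs(runs))
```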

Figure 3.

Multiple inverters and model predictions with the same A-11 feature variable information (LSTM).

Figure 4.

Multiple inverters and model predictions with the same A-11 feature variable information (GRU).

Figure 5.

Multiple inverters and model predictions with the same A-11 feature variable information (SimpleRNN).

Figure 6.

Multiple inverters and model predictions with the same A-11 feature variable information (BiLSTM).

Figure 7.

Multiple inverters and model predictions with the same A-11 feature variable information (BiGRU).

Figure 8.

Multiple inverters and model predictions with the same A-11 feature variable information (BiRNN).

As Table 6 shows, SimpleRNN, the most intuitive and basic of the recurrent models, performs worse than the improved LSTM and GRU models. In addition, although GRU is a streamlined variant of LSTM and does require slightly less training time, its predictions generally do not match or exceed those of LSTM. From the differences among these three algorithms we draw the following recommendations. SimpleRNN is suitable for basic, simple calculations. If the development process must handle a very large dataset to obtain preliminary evaluation results, GRU is the first choice. If an accurate, in-depth prediction model is required, LSTM is recommended as the main algorithm: although it requires more system resources and training time, its overall behaviour is relatively stable, reliable, and accurate. Since these three models are all unidirectional, the final verification wrapped each of them in a bidirectional model, which processes the input sequence in both directions during training, in an attempt to obtain better results. According to Table 6, Bi-LSTM does reduce the variation between training runs, but its R² is no better. Bi-SimpleRNN effectively improves gradient descent during training, but because the underlying SimpleRNN predicts poorly, its final results remain poor. Finally, the bidirectional wrapper widened the performance advantage of GRU: combining the GRU's streamlined version of the LSTM computation with bidirectional processing produced the best performance indicator of this research, R² = 0.934156, in experimental program B-5.
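The training-time remark is consistent with a simple parameter count. In the standard formulations, one gate or transform with d inputs and h units holds h·d input weights, h·h recurrent weights, and h biases; SimpleRNN has one such block, GRU three, and LSTM four, and a bidirectional wrapper doubles the total. The sketch assumes d = 14 (the A-11 feature variables) and a hypothetical width of h = 64; framework implementations can differ slightly, for example Keras's GRU with its default `reset_after=True` keeps an extra recurrent bias vector per gate.

```python
BLOCKS_PER_CELL = {"SimpleRNN": 1, "GRU": 3, "LSTM": 4}  # gate/transform blocks per cell


def block_params(d, h):
    """One block: input matrix (h x d), recurrent matrix (h x h), bias vector (h)."""
    return h * d + h * h + h


def param_count(cell, d, h, bidirectional=False):
    """Trainable weights of one recurrent layer in the standard formulation."""
    n = BLOCKS_PER_CELL[cell] * block_params(d, h)
    return 2 * n if bidirectional else n


d, h = 14, 64  # 14 A-11 features; 64 units is a hypothetical layer width
for cell in ("SimpleRNN", "GRU", "LSTM"):
    print(cell, param_count(cell, d, h), param_count(cell, d, h, bidirectional=True))
```

At any width a GRU layer carries three quarters of an LSTM layer's weights, which fits the slightly shorter GRU training times; the count alone says nothing about which model predicts better.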

5. Conclusions

The main purpose of this research is to explore methods for predicting and evaluating solar power generation. To this end, we conducted extensive experiments with deep learning models to predict power generation. Experiment A-1 confirmed that the data from the on-site monitoring system installed in the solar power plant are not sufficient on their own to build an accurate prediction model. Practical applications therefore still need to collect other potentially related feature data, with which the prediction model can be improved step by step through experiments with different models.

Because the Stage 1 experiments trained only on the selected features and the dataset of a single inverter (3948 training samples in total), the performance indicator could be raised only to R² = 0.890179. We therefore drew on the concept of a generative adversarial network (GAN) to extend the training data to multiple inverters (8 training datasets, 31584 samples in total), and aggregated and reshaped the dataset using the inverter temperature and the feature variables. Finally, experiment B-5 obtained the best performance indicator, R² = 0.934156, with the Bidirectional-GRU model. The feature variables used in this best result improve the prediction of power generation, most likely because of the correlation between the greenhouse effect and solar thermal radiation. To further improve prediction accuracy, we therefore recommend collecting additional feature variables related to solar irradiance or the greenhouse effect. Many new and improved neural networks could also be applied to this problem; in future work, hybrid deep learning models may be incorporated into the system to continue improving the prediction performance of solar power generation.

Author Contributions

Conceptualization, C.-H.L.; Formal analysis, H.-C.Y.; Investigation, H.-C.Y. and G.-B.Y.; Methodology, C.-H.L. and G.-B.Y.; Software, H.-C.Y. and G.-B.Y.; Supervision, C.-H.L.; Validation, H.-C.Y.; Visualization, G.-B.Y.; Writing—original draft, C.-H.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The study did not report any data.

Conflicts of Interest

The authors declare no conflict of interest.

Acronyms Used Frequently in this Work

| Acronym | Definition |
|---|---|
| DL | Deep learning |
| ANN | Artificial neural networks |
| RNN | Recurrent neural network |
| ENN | Edited nearest neighbor |
| LSTM | Long short-term memory |
| GRU | Gated recurrent unit |
| BiRNN | Bidirectional recurrent neural network |
| BiLSTM | Bidirectional long short-term memory |
| BiGRU | Bidirectional gated recurrent unit |
| STL | Standard template library |
| TLSM | Table lookup substitution method |
| ARMA | Autoregressive moving average |
| ARIMA | Autoregressive integrated moving average |
| MLR | Multiple linear regression |
| SVM | Support vector machines |
| GRNN | Genetic reinforcement neural network |
| RBFNN | Radial basis function neural network |
| DRNN | Dynamic recurrent neural network |
| PHANN | Physical hybrid artificial neural network |
| GA | Genetic algorithm |
| CSRM | Clear-sky radiation models |
| KNN | K nearest neighbor |
| FKM | Fuzzy k-modes |
| MSE | Mean square error |
| MAE | Mean absolute error |
| MSLE | Mean squared logarithmic error |
| R² | Coefficient of determination |

References

- Alessandrini, S.; Delle Monache, L.; Sperati, S.; Cervone, G. An analog ensemble for short-term probabilistic solar power forecast. Appl. Energy 2015, 157, 95–110.

- Qing, X.; Niu, Y. Hourly day-ahead solar irradiance prediction using weather forecasts by LSTM. Energy 2018, 148, 461–468.

- Voyant, C.; Notton, G.; Kalogirou, S.; Nivet, M.-L.; Paoli, C.; Motte, F.; Fouilloy, A. Machine learning methods for solar radiation forecasting: A review. Renew. Energy 2017, 105, 569–582.

- Solaun, K.; Cerdá, E. Climate change impacts on renewable energy generation. A review of quantitative projections. Renew. Sustain. Energy Rev. 2019, 116, 109415.

- Huld, T.; Šúri, M.; Dunlop, E.D. Geographical variation of the conversion efficiency of crystalline silicon photovoltaic modules in Europe. Prog. Photovolt. Res. Appl. 2008, 16, 595–607.

- Amelia, A.R.; Irwan, Y.M.; Leow, W.Z.; Irwanto, M.; Safwati, I.; Zhafarina, M. Investigation of the effect temperature on photovoltaic (PV) panel output performance. Int. J. Adv. Sci. Eng. Inf. Technol. 2016, 6, 682–688.

- Thevenard, D.; Pelland, S. Estimating the uncertainty in long-term photovoltaic yield predictions. Sol. Energy 2013, 91, 432–445.

- Fesharaki, V.J.; Dehghani, M.; Fesharaki, J.J.; Tavasoli, H. The effect of temperature on photovoltaic cell efficiency. In Proceedings of the 1st International Conference on Emerging Trends in Energy Conservation—ETEC, Tehran, Iran, 20–21 November 2011.

- Jerez, S.; Tobin, I.; Vautard, R.; Montávez, J.P.; López-Romero, J.M.; Thais, F.; Bartok, B.; Christensen, B.O.; Colette, A.; Déqué, M.; et al. The impact of climate change on photovoltaic power generation in Europe. Nat. Commun. 2015, 6, 1–8.

- Pérez, J.C.; González, A.; Díaz, J.P.; Expósito, F.J.; Felipe, J. Climate change impact on future photovoltaic resource potential in an orographically complex archipelago, the Canary Islands. Renew. Energy 2019, 133, 749–759.

- Patt, A.; Pfenninger, S.; Lilliestam, J. Vulnerability of solar energy infrastructure and output to climate change. Clim. Chang. 2013, 121, 93–102.

- Johnston, P.C. Climate Risk and Adaptation in the Electric Power Sector; ADB: Mandaluyong City, Philippines, 2012.

- Daniel, T.; Joanna, L.; Matt, K.; Clephane, C.; David, B.; Jon, B.; Cathy, P.; Graham, B.; Gavin, J.; John, J.; et al. Building Business Resilience to Inevitable Climate Change. In Carbon Disclosure Project Report; Global Electric Utilities: Oxford, UK, 2009.

- Gaetani, M.; Vignati, E.; Monforti, F.; Huld, T.; Dosio, A.; Raes, F. Climate modelling and renewable energy resource assessment. In JRC Scientific and Policy Report; European Environment Agency: Copenhagen, Denmark, 2015.

- Bazyomo, S.D.Y.B.; Agnidé Lawin, E.; Coulibaly, O.; Ouedraogo, A. Forecasted changes in west africa photovoltaic energy output by 2045. Climate 2016, 4, 53.

- Bartók, B.; Wild, M.; Folini, D.; Lüthi, D.; Kotlarski, S.; Schär, C.; Vautard, R.; Jerez, S.; Imecs, Z. Projected changes in surface solar radiation in CMIP5 global climate models and in EURO-CORDEX regional climate models for Europe. Clim. Dyn. 2017, 49, 2665–2683.

- Crook, J.A.; Jones, L.A.; Forster, P.M.; Crook, R. Climate change impacts on future photovoltaic and concentrated solar power energy output. J. Energy Environ. Sci. 2011, 4, 3101–3109.

- Fenger, J. Impacts of Climate Change on Renewable Energy Sources. Their Role in the Nordic Energy System; Nordisk Ministerraad: Copenhagen, Denmark, 2007.

- Huber, I.; Bugliaro, L.; Ponater, M.; Garny, H.; Emde, C.; Mayer, B. Do climate models project changes in solar resources? Sol. Energy 2016, 129, 65–84.

- Wild, M.; Folini, D.; Henschel, F.; Fischer, N.; Müller, B. Projections of long-term changes in solar radiation based on CMIP5 climate models and their influence on energy yields of photovoltaic systems. Sol. Energy 2015, 116, 12–24.

- Mani, M.; Pillai, R. Impact of dust on solar photovoltaic (PV) performance: Research status, challenges and recommendations. Renew. Sustain. Energy Rev. 2010, 14, 3124–3131.

- Gaetani, M.; Huld, T.; Vignati, E.; Monforti-Ferrario, F.; Dosio, A.; Raes, F. The near future availability of photovoltaic energy in Europe and Africa in climate-aerosol modeling experiments. Renew. Sustain. Energy Rev. 2014, 38, 706–716.

- Panagea, I.S.; Tsanis, I.K.; Koutroulis, A.G. Climate change impact on photovoltaic energy output: The case of Greece. In Climate Change and the Future of Sustainability; Apple Academic Press: New York, NY, USA, 2017; pp. 85–106.

- Burnett, D.; Barbour, E.; Harrison, G.P. The UK solar energy resource and the impact of climate change. Renew. Energy 2014, 71, 333–343.

- Yagli, G.M.; Yang, D.; Srinivasan, D. Reconciling solar forecasts: Sequential reconciliation. Sol. Energy 2019, 179, 391–397.

- Yang, D. A universal benchmarking method for probabilistic solar irradiance forecasting. Sol. Energy 2019, 184, 410–416.

- Trapero, J.R.; Kourentzes, N.; Martin, A. Short-term solar irradiation forecasting based on Dynamic Harmonic Regression. Energy 2015, 84, 289–295.

- Gomez-Romero, J.; Fernandez-Basso, C.J.; Cambronero, M.V.; Molina-Solana, M.; Campana, J.R.; Ruiz, M.D.; Martin-Bautista, M.J. A probabilistic algorithm for predictive control with full-complexity models in non-residential buildings. IEEE Access. 2019, 7, 38748–38765.

- Gómez-Romero, J.; Molina-Solana, M. Towards Data-Driven Simulation Models for Building Energy Management. In International Conference on Computational Science; Springer: Cham, Switzerland, 2021; pp. 401–407.

- Yang, L.; Nagy, Z.; Goffin, P.; Schlueter, A. Reinforcement learning for optimal control of low exergy buildings. Appl. Energy 2015, 156, 577–586.

- Das, U.K.; Tey, K.S.; Seyedmahmoudian, M.; Mekhilef, S.; Idris, M.Y.I.; VanDeventer, W.; Horan, B.; Stojcevski, A. Forecasting of photovoltaic power generation and model optimization: A review. Renew. Sustain. Energy Rev. 2018, 81, 912–928.

- Chu, Y.; Li, M.; Coimbra, C.F.M. Sun-tracking imaging system for intra-hour DNI forecasts. Renew. Energy 2016, 96, 792–799.

- Awad, M.; Qasrawi, I. Enhanced RBF neural network model for time series prediction of solar cells panel depending on climate conditions (temperature and irradiance). Neural Comput. Appl. 2018, 30, 1757–1768.

- Belaid, S.; Mellit, A. Prediction of daily and mean monthly global solar radiation using support vector machine in an arid climate. Energy Convers. Manag. 2016, 118, 105–118.

- Zeng, J.; Qiao, W. Short-term solar power prediction using a support vector machine. Renew. Energy 2013, 52, 118–127.

- Moncada, A.; Richardson, W.; Vega-Avila, R. Deep Learning to Forecast Solar Irradiance Using a Six-Month UTSA SkyImager Dataset. Energies 2018, 11, 1988.

- Kaba, K.; Sarıgül, M.; Avcı, M.; Kandırmaz, H.M. Estimation of daily global solar radiation using deep learning model. Energy 2018, 162, 126–135.

- Ahmad, T.; Chen, H. Deep learning for multi-scale smart energy forecasting. Energy 2019, 175, 98–112.

- Mellit, A.; Massi Pavan, A.; Ogliari, E.; Leva, S.; Lughi, V. Advanced Methods for Photovoltaic Output Power Forecasting: A Review. J. Appl. Sci. 2020, 10, 487.

- Monteiro, C.; Fernandez-Jimenez, L.; Ramirez-Rosado, I.; Muñoz Jiménez, A.; Lara-Santillan, P. Short-Term Forecasting Models for Photovoltaic Plants: Analytical versus Soft-Computing Techniques. J. Math. Probl. Eng. 2013, 2013, 1–9.

- Fentis, A.; Bahatti, L.; Tabaa, M.; Mestari, M. Short-term nonlinear autoregressive photovoltaic power forecasting using statistical learning approaches and in-situ observations. Int. J. Energy Environ. Eng. 2019, 10, 189–206.

- Ogliari, E.; Grimaccia, F.; Leva, S.; Mussetta, M. Hybrid Predictive Models for Accurate Forecasting in PV Systems. Energies 2013, 6, 1918–1929.

- Dolara, A.; Grimaccia, F.; Leva, S.; Mussetta, M.; Ogliari, E. A Physical Hybrid Artificial Neural Network for Short Term Forecasting of PV Plant Power Output. Energies 2015, 8, 1138–1153.

- Alet, P.-J.; Efthymiou, V.; Graditi, G.; Juel, D.; Nemac, F.; Pierro, M.; Rikos, D.; Tselepis, S.; Yang, G.; Moser, D.; et al. The case for better PV forecasting. PV Tech. Power 2016, 8, 94–98.

- Malvoni, M.; DeGiorgi, M.G.; Congedo, P.M. Data on Support Vector Machines (SVM) model to forecast photovoltaic power. J. Data Brief 2016, 9, 13–16.

- Abdel-Nasser, M.; Mahmoud, K. Accurate photovoltaic power forecasting models using deep LSTM-RNN. J. Neural Comput. Appl. 2019, 31, 2727–2740.

- Son, J.; Park, Y.; Lee, J.; Kim, H. Sensorless PV Power Forecasting in Grid-Connected Buildings through Deep Learning. Sensors 2018, 18, 2529.

- Lee, D.; Kim, K. Recurrent Neural Network-Based Hourly Prediction of Photovoltaic Power Output Using Meteorological Information. Energies 2019, 12, 215.

- Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning Internal Representations by Error Propagation. In Parallel Distributed Processing: Explorations in the Microstructure of Cognition; MIT Press: Cambridge, MA, USA, 1986; pp. 318–362.

- Elsheikh, A.H.; Sharshir, S.W.; Abd Elaziz, M.; Kabeel, A.E.; Guilan, W.; Haiou, Z. Modeling of solar energy systems using artificial neural network: A comprehensive review. Sol. Energy 2019, 180, 622–639.

- Hochreiter, S.; Schmidhuber, J. Long Short-term Memory. Neural Comput. 1997, 9, 1735–1780.

- Wang, Y.; Shen, Y.; Mao, S.; Chen, X.; Zou, H. LASSO and LSTM Integrated Temporal Model for Short-Term Solar Intensity Forecasting. IEEE Internet Things J. 2019, 6, 2933–2944.

- Zhang, J.; Yan, J.; Infield, D.; Liu, Y.; Lien, F. Short-term forecasting and uncertainty analysis of wind turbine power based on long short-term memory network and Gaussian mixture model. Appl. Energy 2019, 241, 229–244.

- Chung, J.; Gulcehre, C.; Cho, K.; Bengio, Y. Empirical Evaluation of Gated Recurrent Neural Networks on Sequence Modeling. arXiv 2014, arXiv:1412.3555.

- Schuster, M.; Paliwal, K.K. Bidirectional recurrent neural networks. IEEE Trans. Signal Process. 1997, 45, 2673–2681.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).