1. Introduction

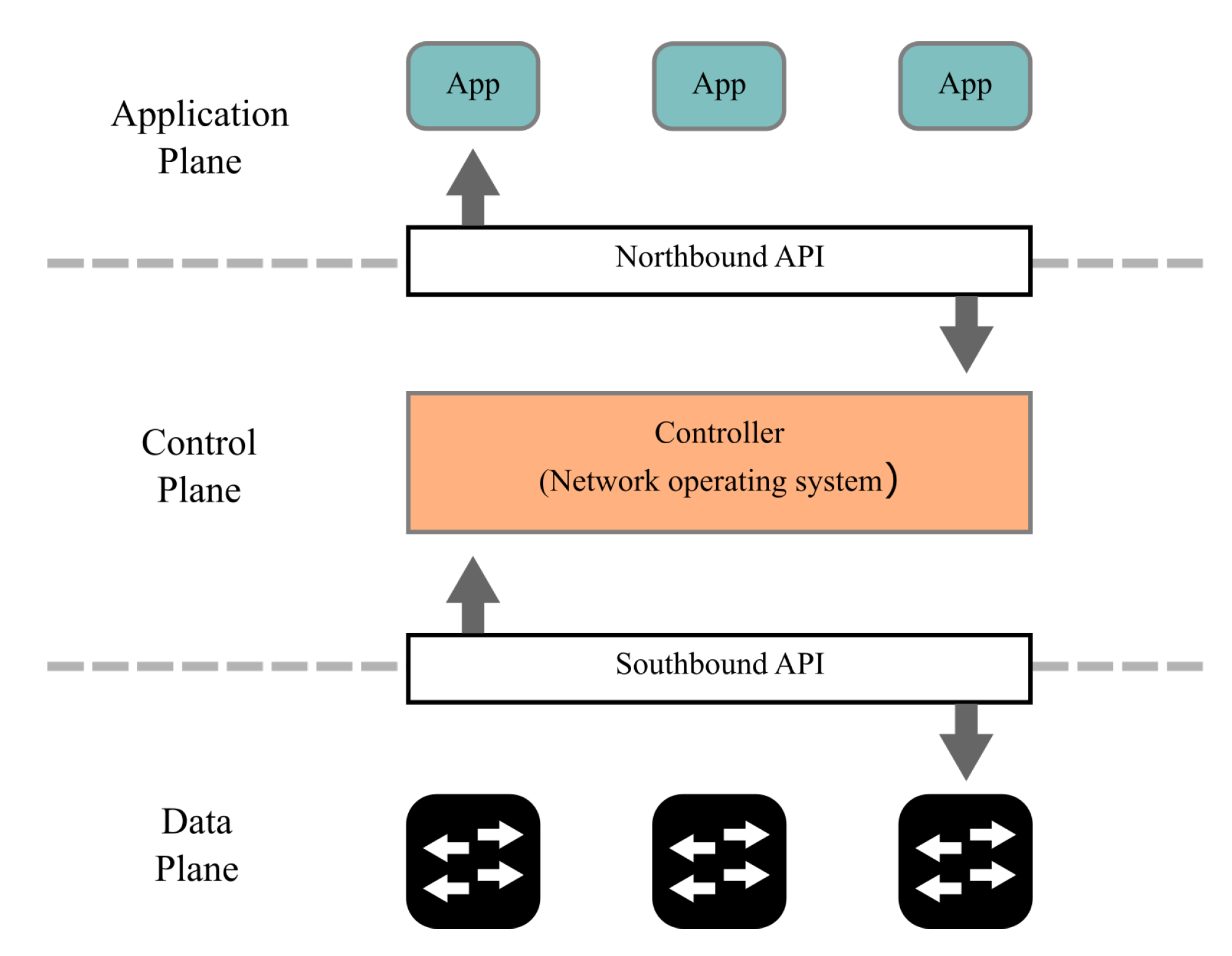

Currently, Software-Defined Networks (SDNs) present a revolution in the field of computer networks since they have reshaped several concepts of IP networks [

1]. An important concept is that the network control logic is decoupled from forwarding devices. Indeed, the control logic is moved from forwarding devices, and it is encapsulated into an external entity called the controller (control plane). Through the latter, and by leveraging a logically centralized network view and standardized communication protocols (e.g., OpenFlow [

2] and ForCES [

3]), forwarding devices (data plane) become programmable. Despite the concept of a logically centralized controller, SDN remains a distributed system wherein the control and data planes cooperate and communicate via an asynchronous communication (asynchronous communication implies arbitrary and unpredictable transmission delays) interface to establish networking. Furthermore, an out-of-order execution of events may occur since no global temporal reference is shared between network entities and message delays are arbitrary. This leads to the following problem: when updating a network while packet flows are taking routes to their destination, an out-of-order execution could give rise to non-deterministic behavior that temporarily deviates from network properties, which in turn may result in an inconsistent network update. Moreover and as a result, the network-wide view from the controller can transitorily be inconsistent with the current data plane state, which could affect the consistency of future network updates. To ensure consistent updates, depending on the network application, the network should align with some properties, such as no Transient Forwarding Loop (TFL) and no forwarding black hole, among others. The no TFL is one of the essential network properties desired by several network applications, including traffic engineering, virtual machine migration, and planned maintenance [

4]. Informally, it ensures that a packet is never forwarded along a loop back during an arbitrary time interval to a forwarding device in the network where it was previously processed. To the best of our knowledge, (i) no study formally specifies under which conditions TFLs may occur in the context of SDNs. Furthermore, (ii) no solution to this problem aligns with the distributed and asynchronous nature of the SDNs. Indeed, the proposed solutions are centralized or synchronized. In fact, a centralized-based solution is associated with memory overhead: to perform updates, it makes use of the centralized controller with full knowledge of the network, i.e., the complete network forwarding graph. In addition, calculating consistent updates based on full knowledge of the network in large-scale networks becomes costly in terms of performance overhead. On the other hand, a synchronized-based solution comes with bandwidth overhead: it relies on synchronizing switch clocks to perform updating operations simultaneously. Apart from the control information overhead, such a solution presents a risk of inconsistency during the transition phases of updates since synchronization protocols do not perfectly synchronize switch clocks. We argue that achieving updates in a loop-free manner with a trade-off between ensuring consistent updates and performing efficient updates is still a challenging open problem.

In this paper, we introduce the first of a family of patterns: TFL is defined to capture asynchronously and avoid preventively it at run-time during SDN updates. The main contributions are the following:

OpenFlow-based SDN updates are modeled at the event level according to the distributed and asynchronous nature of SDNs.

A formal model of the TFL based on temporal and causal dependencies (the causal dependencies are based on the happened-before relation defined by Lamport in [

5] (see

Section 5 for more details) that capture the conditions under which it may occur is presented. We highlight that this study is a key contribution in this work since it defines the root cause behind the triggering of TFLs and allows us to define how to achieve a TFL-free property when updating SDNs.

A causal consistent update algorithm oriented to ensure the TFL-free property is presented. This algorithm is based on the work of Prakash et al. [

6].

A proof of correctness that shows that the algorithm is TFL-free is provided.

The proposed algorithm is analytically evaluated, concluding that it enhances the update performance overhead.

The rest of this paper is structured as follows.

Section 2 presents the preliminaries for the rest of this paper.

Section 3 motivates the importance of the problem. A frame and a discussion of related work are presented in

Section 4.

Section 5 describes the network model. A study of the problem from a temporal perspective is described in

Section 6. The proposed solution is presented in

Section 7. An example scenario is described in

Section 8. We evaluate and discuss the proposed approach in

Section 9. Conclusions and future work are discussed in

Section 10.

3. Problem Description: TFL as an Inconsistent Network Update Problem

Despite the centralization of the logic control, SDN remains a distributed and an asynchronous system. Indeed, the data plane is managed by a logically-centralized controller that communicates with the data plane only by OpenFlow message passing via asynchronous communication channels. In the real world, during updates, applications running on the controller may compile several entries, requiring the controller to disseminate OpenFlow messages to install entries on switches. Therefore, the switches may deliver OpenFlow messages that are injected by the controller and in-fly packets, which interleave with update messages in any order, leading to inconsistent updates.

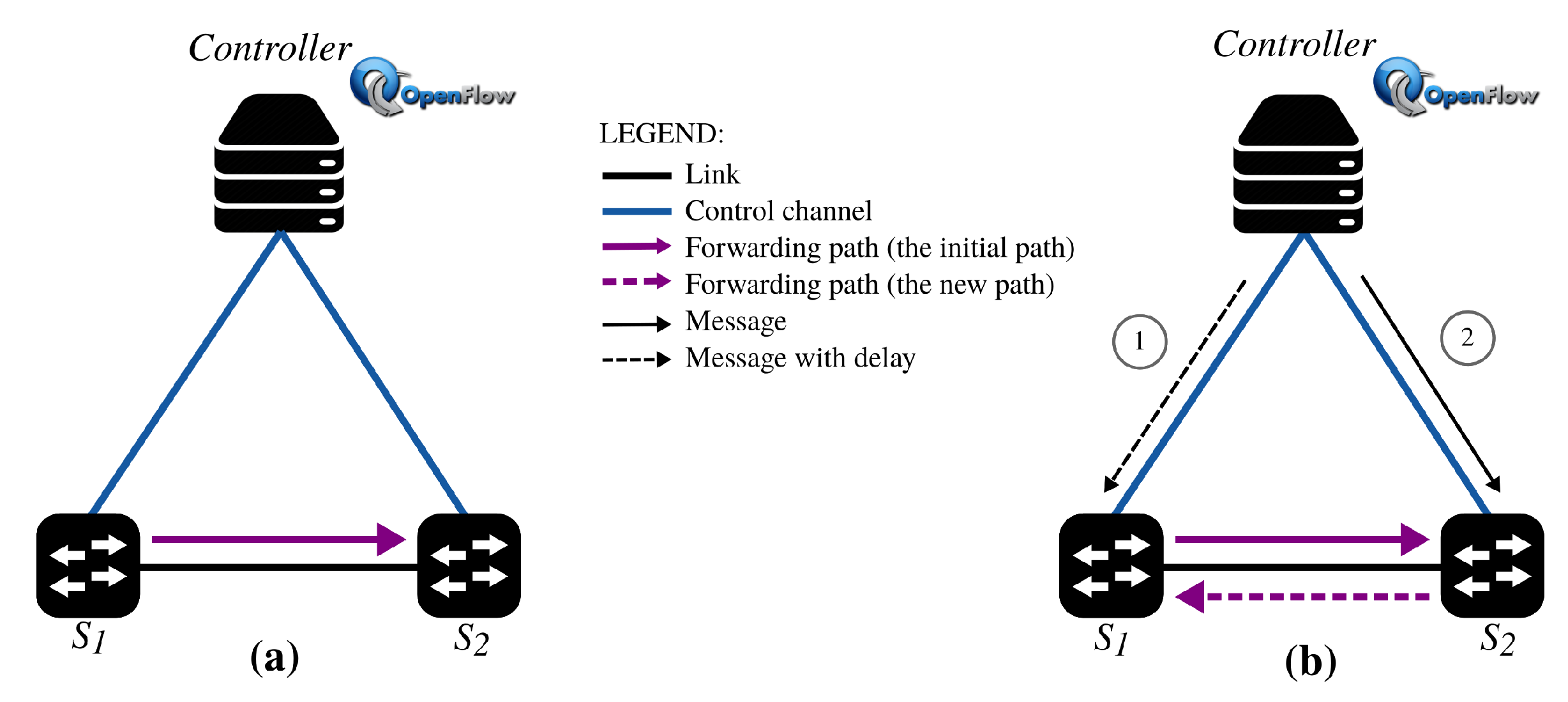

Flow swaps: a motivating scenario. In order to analyze the TFL phenomenon in SDNs, we use a common and inevitable network update scenario known as flow swaps.

Figure 2 illustrates an example of a basic flow swap scenario. In this scenario, the topology is composed of an OpenFlow controller and two switches. Initially, Switch 1 (

) contains an entry that forwards a specific packet flow

to Switch 2 (

) (see

Figure 2a). Due to a network policy change,

should be forwarded from

to

. To this end, the OpenFlow controller starts by instructing, based on two controller-to-switch OpenFlow messages of type

FlowMod, (1)

to delete the entry directing

from

to

(see

Figure 2b) and, then, (2)

to install a new entry to forward

from

to

. While the controller sends Operation (1) before Operation (2), the second operation finishes before the first one. In this case, an in-fly packet flow

may interleave with the installation of the rule in

, forwarding network traffic to

, and then

enters into a TFL between

and

.

Based on the previous example, an SDN update may result in a broad combinatorial range space of messages and packets ordering, and thus, the controller may not assume anything about the data plane state. Therefore, the network wide-view from the controller may be temporarily or permanently inconsistent with the current data plane state. Intuitively, this affects the consistency of network updates. Indeed, three of eleven bugs reported in [

10] were caused by inconsistent views from the controller. Alternatively, an OpenFlow controller can explicitly request acknowledgments by sending a barrier request message (

BarrierReq) after sending a message, that is it sets a synchronization point between messages. An OpenFlow switch of a destination should then respond with a barrier response message (

BarrierRes) once the message and all other messages received before the message have been processed [

2]. However, in which case and time should the controller explicitly request to install a BarrierReqmessage? What about the overhead of messages in large-scale networks? On the other hand, such a solution forces the system to stop processing any new requests until all messages sent before the

BarrierReq message are completely processed. Indeed, this solution can harm performance by increasing the number of control messages, controllers, switch operations, and delays.

4. Related Work

Current network requirements have changed, and even more after the introduction of SDNs, which support and facilitate network updates.

As SDNs have facilitated the updating of the networks, the problems related to them have been studied intensively. This scope has many axes that are relevant, including updating techniques and performance goals/objectives. That is why there is more than one classification of works that have addressed the problem, such as the proposal of [

11]. The work consists of a profound review of the consistent updating approaches in SDNs. This overview generalizes the network updating problem and proposes a taxonomy of consistent network updating problems in which they are classified by the type of consistency network update (connectivity, policy, and capacity consistency) and by objectives (when link-based, to make a new links available as soon as possible; when round-based, to minimize the number of makespan; and when cross-flow, to minimize the number of interactions between network entities during updates). Indeed, this taxonomy provides an orthogonal view in terms of the attacked network update problems and the works’ objectives. However, in this section, we focus on classifying works that attack the forwarding loop problem by these techniques. These techniques contribute to the prevention of the mentioned problem while measuring their impact on the network performance. For more details about this, please refer to

Section 9.

In the literature, works that have been proposed to tackle the problem fall into one of the following approaches: either the ordered update [

12,

13,

14,

15], the n-phase commit update [

4,

15,

16,

17,

18], the timed update [

19,

20], or the causal update [

21] approach. On the other hand, another set of works focuses on studying optimization problems [

22,

23,

24,

25,

26,

27] of consistent updates in SDNs. These two kinds of works are briefly discussed in this section.

Ordered updating approaches [

12,

13,

14,

15] use a sequence of steps to execute reconfigurations (controller commands) on switches, where the order of execution ensures that no inconsistent behavior appears throughout the transition from one configuration to another. During the transition at each step, the controller should wait until all the underlying switches finish their updates (referred to as rule replacements) and then inform it (through acknowledgment messages) in order to be able to initiate the next step of the updating sequence. An update finishes after the achievement of all the updating steps.

As for the n-phase commit update approaches, we distinguish between the two-phase commit and the one-commit update approaches. Works like [

4,

16], which were based on the two-phase commit, provided a strong per-packet consistency guarantee: packets are forwarded to their destinations based on the initial or the final configuration and not both, covering a wide range of consistent updating properties that include connectivity and policy consistencies. These works are based on a packet tag-match mechanism. Initially, all in-fly packets are tagged by switches with the current forwarding configuration

k. The controller starts by disseminating all updating commands of configuration

and then waits for acknowledgments from the underlying switches. Once all switches acknowledge the execution of commands (adding forwarding rules) of configuration

, then the controller instructs them to tag all incoming packets with

to the match forwarding rules of the new configuration. During the update transition phase, all switches should maintain the forwarding rules of both configurations (referred by rule additions) until all packets tagged with

k leave the network. When they leave, the controller instructs the switches to remove commands of version

k.

On the other hand, ez-Segway [

17] guarantees the following network properties: loop-freedom, black hole-freedom, and congestion-freedom. The scope of this work consists of having the controller delegate the task of performing network update operations to the switches implicated in the updates. In fact, the controller computes and sends the information needed to the switches once per update, which qualifies it as a one-phase commit approach. This is based on the basic-update technique. Once the first switch is involved in the new path, a message is sent from it towards their successors and until the destination switch to acknowledge that no packets will be forwarded anymore along the old path. Therefore, every switch that receives this new message removes the old forwarding entry of the old path and afterwards forwards it to its successor on the old path. To speed up the flow update, a segmentation of basic-update is proposed. The idea here is to split the update of a flow path of the old and the new paths into subpaths and, at the same time, perform their updates. Taking the forwarding loop case, the controller classifies segments into two categories, called InLoop and NotInLoop segments, and calculates a dependency mapping that assigns each InLoop segment to a NotInLoop segment. On the other hand, switches should install the new path and remove the old path based on the dependency mappings established by the controller and exchange messages among them to establish a selection of update operations to be executed.

Recently, the authors of [

15] proposed FLIP, an updating approach built on the dualism between the ordered update and the two-phase commit update approaches. FLIP combines the rule replacements and rule additions for matching packets with their forwarding rules in all switches, preserving the per-packet consistency property. The main contribution of this work involves reducing the rule additions during the transition update phase in the two-phase commit update approaches. This is based on two core procedures: constraint extraction and constraint swapping. The former consists of identifying the constraints (replacement and/or addition constraints) that ensure a safe update. Through the identified constraints, alternative constraints to the extracted ones are also inferred. After having extracted all the constraints, FLIP tries to calculate a sequence of update steps that satisfy all active constraints by applying a linear program where the objective is to minimize the number of update steps. If a solution can be found, FLIP outputs the operational sequences, otherwise it jumps to apply the constraint swapping procedure. This consists of considering some alternative constraints to replace some active constraints to calculate the operation steps. Based on these procedures, FLIP eventually reaches a combination between the two approaches for which a solution exists.

A timed consistent network update approach [

19,

20] has been proposed. This approach is based on establishing an accurate time to trigger network updates, that is synchronizing clocks of switches to simultaneously perform the executions of controller commands. An important advantage is that timed updates quickly become available for the in-fly packets as controller commands (adding and removing rules) are executed on the switches at the same time. Furthermore, it prevents switch congestion during the updates. In terms of consistency, a timed update achieves the per-packet consistency; however, it does not compromise the consistency during updates due to the risk that switch clocks may not effectively be synchronized.

Recently, the authors of [

21] proposed a new update approach based on the suffix causal consistency property. This property ensures, during routing policy updates, that a packet traverses the most recent path specified for it and for which it encounters forwarding rules, ensuring consistency properties, like bounded looping, among others. The authors of this work proposed to adopt the Lamport timestamp to tag packets in order to reflect the rules that correspond to each switch. This ensures that an in-fly packet is forwarded based on rules with a timestamp at least equal to the timestamps of rules where it has been processed in upstream switches. To do so, a controller module operation and switch module operation are designed to manage and maintain the required timestamps. Regarding the proposed update algorithm, there are four important steps: the first is the backward closure step whose role is to include the new forwarding rules that precede those already included. This step permits propagating the installation of new rules backward along routing paths. The same technique is applied to include the new rules that follow those already included, ensuring the second step named forward closure. The third step is tagging timestamps, which sets the new added rule timestamps that have not been set in the preceding steps. The send-back rules is the fourth step, in which a new and temporary rule is added if a packet takes its old path and encounters a switch at which the rule that would have matched in the old configuration has been already deleted. In this case, this temporary rule allows forwarding the packet in the direction of the new path from where it can continue traveling to its destination.

Works like [

22,

23,

24,

25,

26,

27] proposed studying optimization problems on consistent updates in SDNs. The authors of [

22] had as an objective to update as many switches as possible at a time, in what is known as node-based, in a loop-free manner. The authors of [

23] showed that the node-based optimization problem is NP-hard. In the same context, the authors of [

24] demonstrated that scheduling consistent updates for two destinations is NP-hard for a sublinear number of update rounds. In [

25], the authors presented an approach that aims to minimize the number of messages needed between the controller and the switches to update networks, known as round-based, in a loop-free manner. In the same context, the authors in [

26] distinguished between two loop-free network updates, the strong loop-freedom and the relaxed loop-freedom. The strong loop-freedom requires that the entries (i.e., forwarding rules) stored at the switches be loop-free at all points of time, whereas the relaxed loop-freedom requires entries stored by switches along a forwarding path to be loop-free. The problem was shown to be difficult in the strong loop-freedom case. In the worst case,

rounds may be required, where

n is the DSdefinition. On the other hand,

rounds always exist in the relaxed loop-freedom case. In [

27], the authors studied local checkability properties like s-treachability (i.e., whether the network is acyclic or contains a cycle), introduced in [

28]. This work studies the applicability of such theory in the context of SDNs by considering the case of updating spanning trees in a loop-free manner. Unlike previous works, the scope of this work is to determine a round-based update with a constant number of rounds, in such a way that every switch should be able to locally decide if it can change its forwarding behavior.

5. System Model

We consider a network represented through a set of nodes (defined later as a set of processes) and a generic (random) forwarding path, leading messages and packet flows, between a node and another (represented later as a set of forwarding paths), which is subject to being updated.

To formalize this system, we introduce a model of executions of message- and packet-passing between OpenFlow-based SDN entities during updates. First of all, in

Section 5.1, we present the model related to a typical distributed system. Then, in the next subsection, we define relevant concepts for this paper, basically the Happened-Before Relation (HBR) and the Immediate Dependency Relation (IDR) definitions. After that, in

Section 5.3, we introduce our SDN model by adapting the typical distributed system model to the SDN context and extending it to cover other sets specific to SDNs. Finally, in

Section 5.4, we discuss the causal order delivery, which is a fundamental property for our proposed solution.

5.1. Distributed System Model

To introduce our SDN model, we start by presenting the model of a typical distributed system as a point of departure. A typical distributed system is composed of different separated entities that communicate with each other by exchanging messages. It is assumed that no global time reference exists and the message transmission delay is arbitrary and unpredictable. Furthermore, for simplicity in this work, it is assumed that no message is lost.

At a high level of abstraction, a distributed system can be described based on the following sets: P, M, and E, which correspond, respectively, to the set of processes, the set of messages, and the set of events.

Processes: programs, instances of programs, or threads running simultaneously and communicating with other programs, instances of programs or threads. Each process belongs to the set of processes P. A process can only communicate with other processes by message passing over an asynchronous communication network.

Messages: Abstractions of any type of messages, which can contain either arbitrarily simple or complex data structures. Each message in the system belongs to the set of messages M. A message is sent considering an asynchronous and reliable network.

Events: An event e is an action or occurrence performed by a process . Each event e in the system belongs to the set E. There are two types of events under consideration: internal events and external events. An internal event is an action that occurs at a process in a local manner. An external event is an action that occurs in a process, but it is seen by other processes and affects the global system state. The external events are the send and delivery events. A send event identifies the emission event of a message executed by a process, whereas a delivery event identifies the execution performed or the consumption of an ingoing message m by a recipient process.

5.2. Time and Causal Order

Time is an important theoretical construct to understand how the transactions are executed in distributed systems. Indeed, it is difficult to determine if an event takes place before another one in the absence of a global physical time. However, the logical time introduces an agreed time between all processes based on which one can establish the execution order relation between any two events in a distributed system. This order relation was defined by Lamport [

5] by means of the Happened-Before Relation (HBR). The HBR is a strict partial order, and it establishes precedence dependencies between events. The HBR is also known as the relation of causal order or causality.

Definition 1. The Happened-Before Relation (HBR) [5] “→”is the smallest relation on a set of events E satisfying the following conditions: If a and b are events that belong to the same process and a occurred before b, then .

If a is the sending of a message by one process and b is the receipt of the same message by another process, then .

If and , then .

In practice, the use of HBR to maintain the causality is expensive since the relation between each pair of events should be considered, including the transitively Happened-before relationships between events (defined in the third condition of the HBR definition). To obliterate the notion that causality is expensive to set up in distributed systems, the author of [

29] proposed the Immediate Dependency Relation (IDR). The IDR is the minimal binary relation of the HBR that has the same transitive closure. We use the IDR to send the necessary and sufficient amount of control information sent per message to ensure causal order.

Definition 2. The Immediate Dependency Relation (IDR) [29] “↓”is the transitive reduction of the HBR, and it is defined as follows: 5.3. SDN Model

We developed the SDN model by adapting the sets

P,

M (also extended to the set

), and

E, presented in the distributed system model of

Section 5.1, to the SDN context and by adding the sets

,

,

, and

, which correspond, respectively, to the set of matches, the set of messages and data packets, the set of packet flows, and the set of forwarding paths, which are specific to the SDN model.

Processes: The system under consideration is composed of a set of processes | ∪ where represents the controller process and represents the set of routing processes of OpenFlow switches.

Matches: We consider a finite set of matches . A match is a field value that identifies a forwarding path in the network.

It is important to mention that the match is a key attribute in establishing the proposed updating algorithm (see

Section 7.2). In fact, each update is performed based on a match. This is because an update makes reference to a forwarding path

, a packet flow

, which is taking route to its destination according to

, and to any OpenFlow message

disseminated by the controller to update any routing process

that shares the same match

.

Messages and data packets: The system includes a set of messages and data packets | where represents the OpenFlow messages and represents the data packets.

In addition to the set of messages

M, we consider the set of OpenFlow message types

[

2] where

. Furthermore, we note that each message

corresponds to a

, denoted by

. Furthermore, a

may also correspond to a subset of OpenFlow messages. We denote a message

as

where a controller

sends an

to a

at

(the logical clock of

). Note that the tuple (

,

) represents the identifier of a message

.

In this paper, we consider controller-to-switch messages, and in particular the

FlowMod message. This message allows the controller to modify the state of OpenFlow switches either by adding, modifying, or deleting entries. The

FlowMod message is composed of various structures (see the details in [

2]). In this work, we consider

and

as the relevant

FlowMod message structures. In fact, the former specifies to which packet flow an entry corresponds, whereas the latter specifies the action to be performed on the matched packet flow. We consider

FlowMod as a relevant message, where

and

represent the “

” structure in the specification of a message

.

A packet in the subset mentioned above is denoted as , where an forwards at the logical clock a data packet, composed of a and , to an such that and . Note that the tuple (, ) represents the identifier of a data packet . The header of each data packet piggybacks a that corresponds to all of packets belonging to the same packet flow. The data consist of the payload (the current intended message).

Packet flow: We consider a finite set of packet flows . A (also ) is a sequence of packets between a source and a destination , (. Furthermore, we consider the bijection , that is each corresponds to a denoted by .

Forwarding paths: A finite set of forwarding paths is considered. An is a subset of routing processes between an source and an destination, where and . Furthermore, we take into consideration the bijection , that is each corresponds to a denoted by .

Events: As mentioned in

Section 5.1, there are two types of events: internal and external ones. We note that the internal events are not relevant to the rest of the paper. However, we define them for the completeness of the formal specification. The set of finite internal events

is the following:

- -

denotes that at , an processes a message sent by the .

- -

denotes that at , an processes a data packet forwarded by an ().

The external events considered are the send, the receive, and the delivery events. The set of external events is represented as a finite set . The set of send events is the following:

- -

denotes that the sends a message to an .

- -

: denotes that an forwards a data packet to an ().

is composed of one event:

- -

: denotes that an receives a message or a data packet . Such an event only notifies the reception of an by .

Furthermore, is composed of a unique event:

- -

: denotes that an delivered a message or a data packet . A delivery event identifies the execution performed or the consumption of an ingoing by .

The set of events associated with

is the following:

The whole set of events in the system is the finite set:

The order of occurrence of events can be collected based on the causal dependencies between them. The representation

expresses the causality between events

E in

P, using the happened-before relation

(see Definition 1) where:

5.4. Causal Order Delivery

Causal order delivery is a fundamental property in the field of distributed systems, and it is required for various distributed applications (e.g., distributed simulation). In fact, it is useful for synchronizing distributed protocols by inducing the causal order of events. Indeed, this property means that if two send messages are causally related and are sent to the same process , then their delivery should be held according to the causal order, that is by respecting the send order.

The author of [

29] showed that in order to ensure a causal order delivery for a multicast communication, it suffices to ensure the causal delivery of immediately related send events. Therefore, to ensure message-data packet causal order delivery in SDNs, the set of events is determined to be

. For simplicity, we represent the two send events

as

. Formally, the message causal delivery based on the IDR (see Definition 2) is defined for the SDN context as follows:

Definition 3. Causal order delivery in the SDN context:.

Therefore, causal order delivery establishes that if the diffusion of a message-data packet causally precedes the diffusion of message , then the delivery of should precede the delivery of at a common destination process.

6. Modeling the TFL Pattern from a Temporal Perspective

In this section, we study the use of physical time as a tool to ensure consistent network updates, and we analyze if physical time will be sufficient to deal with the TFL inconsistency problem.

We return to the flow swap update scenario of

Figure 2. In this scenario, the topology is composed of an OpenFlow controller and two switches (

and

). According to our network model (see

Section 5), the set of processes for this scenario is

, where

is the OpenFlow controller and

,

represent the switches

and

. During the update, the OpenFlow controller

sends to

a

FlowMod message

where a

, and then, it sends to

a

FlowMod message

where also

. The routing process

receives

and installs the entry directing all

to

. Accordingly,

directs

to

(see

Figure 3). Subsequently,

enters

before

is delivered to

due to a delay of the reception. Finally, as

matches the entry already installed in

, the former ends by redirecting

to

, generating a TFL between

and

.

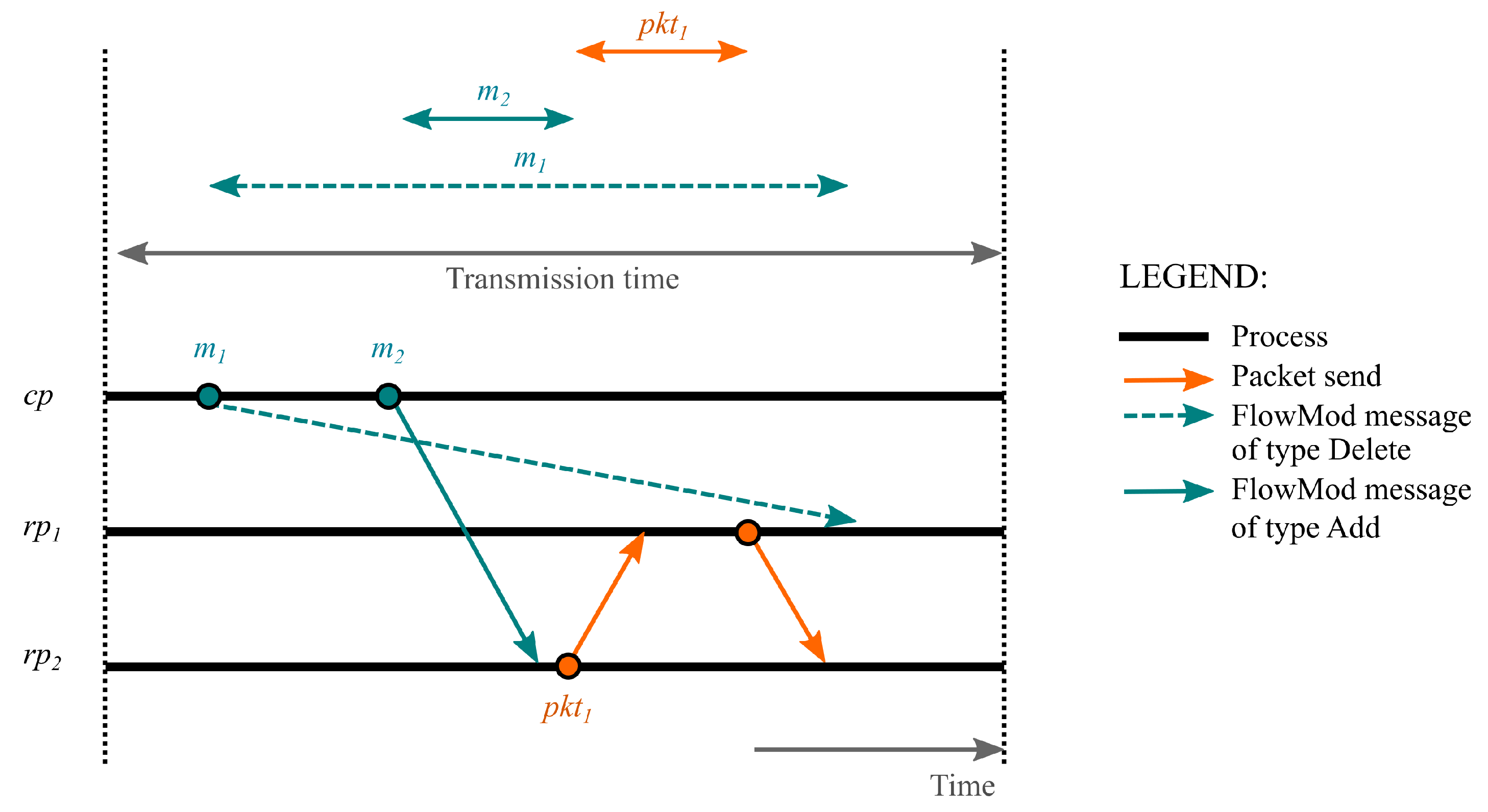

Upon analyzing the communication diagram corresponding to the execution diagram of

Figure 3, we can observe that the TFL between

and

is created when the transmission time interval of

is greater than the transmission time interval of

plus the packet forwarding time of

to

. We formally define the TFL pattern from a temporal perspective.

Definition 4. We have a and two OpenFlow messages m and , where and , such that (i) , (ii) and (iii) ∃ a forwarding path from to , such that intermediates may exist where . A TFL pattern from to exists iff there is a data packet flow , where , such that:where is a function that returns the transmission time of an OpenFlow message or a data packet from a to a with , and gives the local physical time at the moment a message is sent. Based on Definition 4, a possible solution to avoid TFLs in the data plane is to establish temporal references and perform timed execution of updating operations. However, and as was discussed in

Section 4, such a solution is not effective since it is quite difficult to perfectly synchronize clocks across network entities. Indeed, any clock synchronization mechanism (e.g., Network Time Protocol (NTP) [

30]) presents a clock synchronization accuracy. In this case, we will not be able to find out or to force the execution order of any pair of events, which may harm the update consistency.

7. The TFL-Free Property

As studied in

Section 6, a TFL occurs during an update due to the no ordered execution of events. This is because networks are not able to reason about the global real-time ordering of events across entities. In this section, we explore the use of the causality (more detail in

Section 5) as a theoretical construction, firstly, to define the TFL pattern (see

Section 7.1), and secondly, to perform coordinated network updates, ensuring the TFL-free property (see

Section 7.2).

7.1. Modeling the TFL Pattern from a Causal Perspective

In this subsection, we analyze the TFL phenomenon during OpenFlow-based SDN updates by using the scheme of the happened-before relation (See Definition 1).

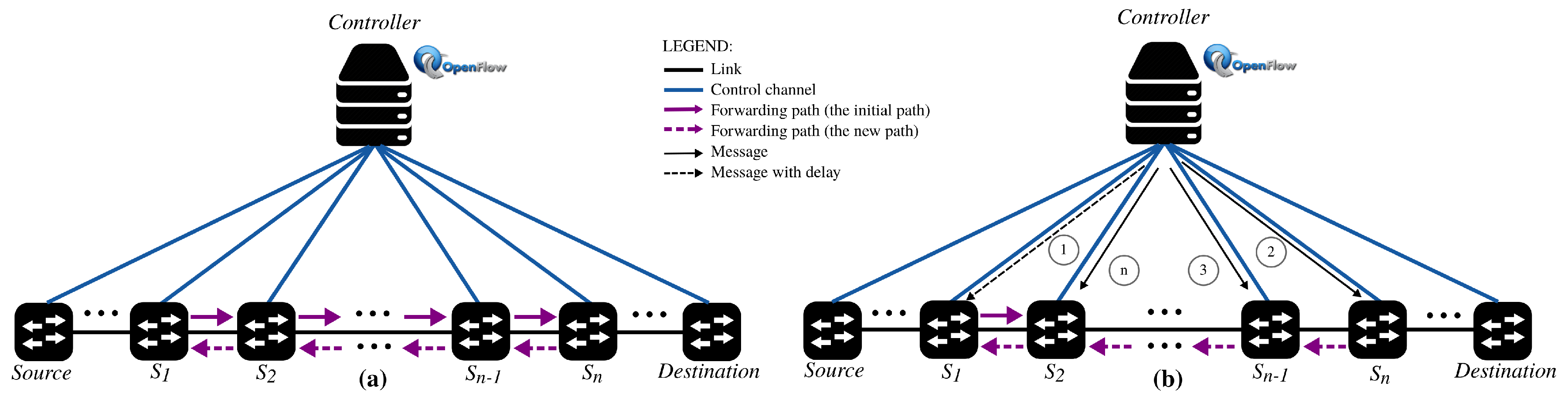

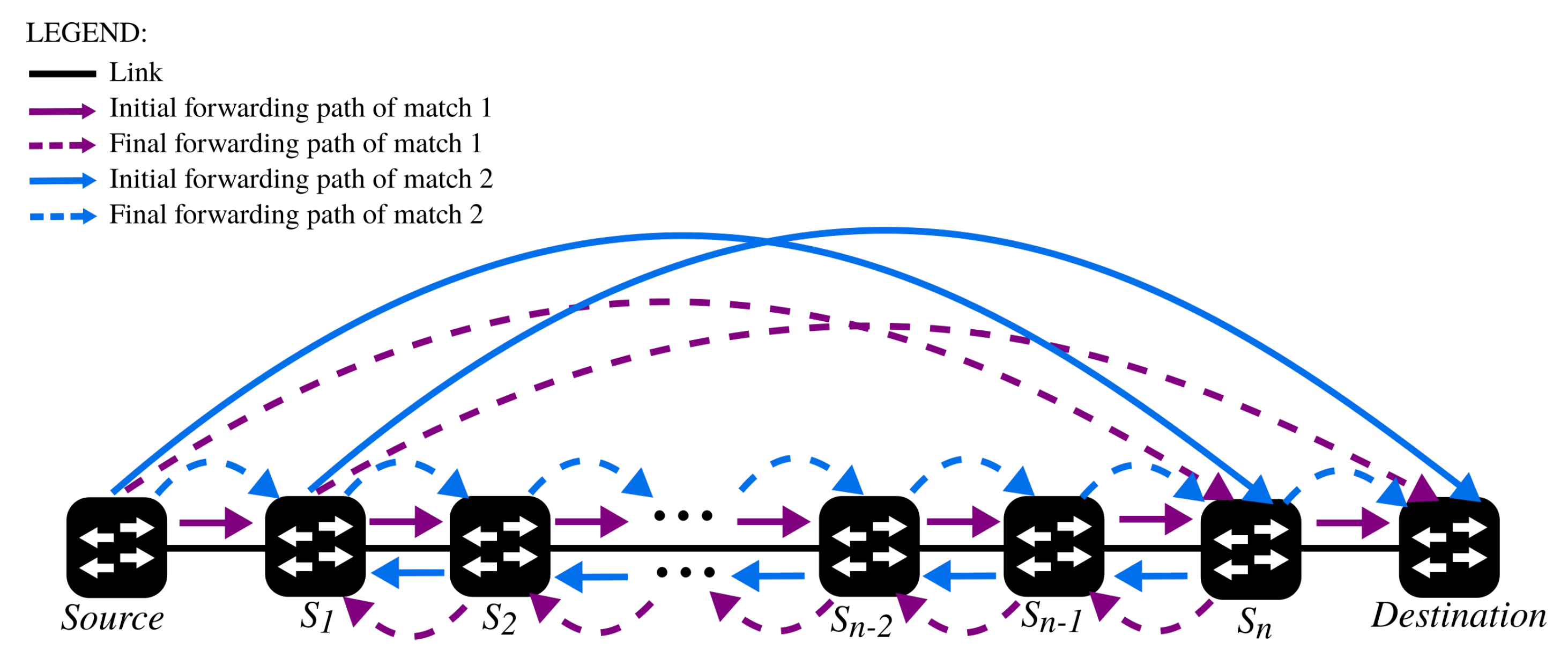

Figure 4 illustrates the generic scenario in which an out-of-order execution of messages/packets leads to a TFL during updates. In this scenario, the topology is composed of an OpenFlow controller and

n OpenFlow switches. According to our network model (see

Section 5), the set of processes for this scenario is

where

is the OpenFlow controller,

and

represent, respectively, the

and the

switches, and

,

,

represent the intermediateswitches

. Initially, each intermediate routing process

, except

, contains an entry

r directing a

to its

(see the solid lines in

Figure 4a). Due to a network policy change,

should be forwarded from

to

(see the dashed lines in

Figure 4a). To this end, the

starts by instructing each

, except

, to delete the entry directing

to its

and then instructs again each

, except

, to install an entry directing

to its

. In an OpenFlow-based SDN, the

has to send

controller-to-switch OpenFlow messages of type

FlowMod. For brevity, and as it is sufficient to express the generality of the phenomenon,

Figure 4b depicts only one message (Message (1) depicted in the dashed line) for deleting the rule in

and all the other messages (from (2) to (n)) for adding the new forwarding path from

to

.

The communication diagram of

Figure 5, which corresponds to the generic scenario of

Figure 4b, is used to characterize the phenomenon. As shown, the

starts by sending to

a

FlowMod message

of command type

and

as a match, where

, and then, it sends to

a

FlowMod message

of command type

and also to

,

as a match, as it is about forwarding the same packet flow

. The rest of the OpenFlow messages from

to

(represented in

Figure 4b by Messages (3), …, (n)) are sent to their corresponding

to add the entries directing

from

to

. Upon the reception of the messages, and due to the asynchronous communication between the controller and all the underlying switches,

,

, …and

receive their messages and install the entries while packets

hit

, directing them to

(see

Figure 5). Hence,

enters

before

is delivered to

(see

Figure 5). Consequently,

matches the entry

r already installed in

directing all

to

. Finally,

ends by redirecting

to

(see the solid line between

and

in

Figure 4b), which generates a TFL. Indeed, the fact that

is delivered to

and went back through

means that

has already entered into a TFL.

We define below an abstraction of the TFL pattern in SDN, as a specification of Lamport’s happened-before relation to express the phenomenon from a causal perspective.

Definition 5. We have a and two FlowMod messages where and , such that (i) , (ii) , and (iii) ∃ a forwarding path from to , such that intermediates may exist where . A TFL from to exists iff there is a data packet flow , where , such that:

is sent by after the delivery of ,

if is delivered by , then is the next data packet sent by , and

is delivered by before the delivery of m.

It can be interpreted that a TFL occurs due to the violation of the causal order delivery of the OpenFlow update message(s) of type deleteand matched packets/packet flows (see the three conditions in Definition 5). Based on the abstraction defined in Definition 5, we present how to capture and avoid the occurrence of the defined pattern during updates, ensuring the TFL-free property.

7.2. Algorithm for TFL-Free SDN Updating

An update algorithm based on the algorithm of [

6] is presented in this subsection. Based on the study provided in

Section 7.1, we show how the presented algorithm allows capturing asynchronously and avoiding preventively the occurrence of TFLs during updates, referred to as TFL-free updates.

7.2.1. Algorithm Overview

Throughout an update, an OpenFlow-based SDN expresses message/packet-passing between processes P, specifically, between the controller process and the set of routing processes , or between themselves. Therefore, we distributed the algorithm over the and the set of . It can be summarized as follows:

Input: The algorithm takes the list of all OpenFlow update messages and ingoing data packets as input. As described in

Section 5, updates are

match-based performed, i.e., update messages and in-fly data packets are grouped and processed by match. This allows updates of different matches to be executed concurrently without the risk of harming the TFL-free property.

Condition: Let be a match. All matched OpenFlow messages of type delete should be disseminated from the to the before all OpenFlow messages of type add.

Execution model: All OpenFlow update messages are asynchronously disseminated from to . No upper bound on messages/data packets transmission delay is required. never waits for an acknowledgment message from once a message is delivered, and clocks of all entities are not in synchronization with each other, that is the execution model is fully asynchronous.

Data structures: At a process-level, each process maintains a vector of control information to store direct dependency information between the set of messages/packets with respect to the corresponding match. Furthermore, each maintains a matrix to track dependency information (see the data structures’ subsection below for more details) when an receives an . We will also make reference to the state of the delivery matrix structure when a process sends an , denoted as .

Functionally: The algorithm is implemented at the time of sending an OpenFlow message from the , and/or at the time of forwarding a data packet from an , and/or at the time of receiving an by a routing process . At the sending of an OpenFlow message from the , and besides the message m, the algorithm encapsulates into the message a vector containing control information on the send message events that directly depend on it (see Algorithm 1). Similarly, an outgoing packet in the data plane piggybacks a vector that carries control information of the OpenFlow message/packet send events that directly depend on it (see Algorithm 2). At the reception of an , and based on the control information encapsulated into , the receiver decides if it can deliver or if it should wait for the reception of another/other (s) to then be able to deliver (see Algorithm 3).

Ensured properties: Based on the algorithm functionality, neither an OpenFlow message nor a packet, which share the same match, will be delivered out of causal order, ensuring the per-match causal order delivery property (see Definition 3). In

Section 7.3, we prove that the presented algorithm ensures the TFL-free property.

| Algorithm 1: Controller-to-switch message sending. |

| Input: The set of OpenFlow update messages |

| Condition: |

| 1: 0, |

| 2: for all OpenFlow messages do |

| 3: |

| 4: |

| 5: |

| 6: |

| Algorithm 2: Switch-to-switch packet forwarding. |

| Input: Ingoing data packets |

| 1: 0, |

| 2: |

| 3: |

| 4: |

| 5: |

| Algorithm 3: Switch message/packet reception. |

| Input: OpenFlow messages sent from the controller and in-fly data packets |

| 1: , |

| 2: (the content may be an OpenFlow message or a packet) |

| 3: wait (|) |

| 4: |

| 5: |

| 6: |: |

| 7: |

| 8: |: |

| 9: |: |

| 10: |

| 11: if then |

| 12: delete from |

7.2.2. Data Structures

In the algorithm, each process maintains a vector of control information of length N to store direct dependency information (N is the number of processes). Each element of is a set of tuples of the form . For instance, let be the vector of a process such that . This implies that any message sent by a process should be delivered to after the message of sequence number t sent by has been delivered to . Furthermore, each process maintains an integer matrix to track dependency information. Each matrix records the messages of the last sequence number delivered to other processes. For instance, denoted that knows that messages sent by process to , whose sequence numbers are less than or equal to t, have been delivered to .

7.2.3. Algorithm Details

Controller-to-switch message sending: This algorithm (see Algorithm 1) takes all update messages calculated by the controller that should be communicated to the routing processes as input. As a condition, all messages of type delete should be disseminated by the controller before all messages of type add. Before sending the messages, they should be grouped by match (see Line 2). In each message send event, the logical clock of (denoted by ) is incremented (see Line 3) to associate a timestamp with m (see Line 4). Upon sending m, is encapsulated into the send event (see Line 5). contains information about the direct predecessors of m with respect to messages sent to . After sending m, is updated by adding as a potential direct predecessor of future messages sent to after m (see Line 6). In general, contains delivery constraints of messages sent to by .

Switch-to-switch packet forwarding: This algorithm (see Algorithm 2) takes ingoing data packets as input. Before forwarding a packet to an , the logical clock of (denoted by ) is incremented (see Line 2) to associate a timestamp with (see Line 3). Upon forwarding a to its next hop, is encapsulated into the forwarding event (see Line 4). contains information about the direct predecessors of with respect to OpenFlow messages/packets sent to . After forwarding , is updated by adding as a potential direct predecessor of future packets sent to after (see Line 5). The instructions of this algorithm are similar to the previous one. The difference is at the level of the communicating processes and of the type of data for sending.

Switch message/packet reception: From the point of view of the receiver

(see Algorithm 3), an

piggybacks delivery constraints encapsulated into

, that is messages that must be delivered to

before

. Note that there is a distinction between the reception of a message

(see Line 2) and its delivery (see Line 4). The delivery of

to a process

implies that the message in question was received and all previous delivery constraints on

were satisfied (see Line 3). Thus, once the delivery constraints are satisfied,

is delivered to

(see Line 4), and what follows is updating the control information of the receiver process

. Then,

updates its

matrix, indicating that the message sent from

, whose sequence number is equal to

, was already delivered to

(see Line 5). The

matrix is also updated with respect to the messages

delivered to process

(see Line 6). After the delivery of

,

updates a new delivery constraint for future messages sent from

. Therefore,

is updated by adding

, using the

operator as a new potential direct dependency on subsequent messages sent from

and by deleting older direct dependencies (transitive dependencies), using the

operator (see Line 7), which were already satisfied. The operator

(see Algorithm 4) ensures that if there are multiple constraints corresponding to a sender process, the most recent constraint is selected [

6]. The

operator (see Algorithm 5) deletes the delivery constraints already known to be satisfied (T2) from the current set of message delivery constraints (T1) [

6]. Furthermore, and to maintain the causality property, a message sent by a process

to

, whose send event is causally dependent on messages sent by

to

, should be delivered to

only after the

message sent by

to

. Thus, for all processes

, except the ones for the sender and receiver,

is updated by adding the delivery constraints piggybacked by

(see Line 8). As we have mentioned, the

matrix is used for garbage collection to reduce the communication overhead when ensuring the causal ordering of messages. Therefore, based on the

matrix of process

,

is updated in such a way that it contains only the recent delivery constraint needed for the delivery of future messages (see Lines 9 to 12).

| Algorithm 4: The operator : (). |

| 1: Boolean change, set of tuples , set of tuples , set of tuples T |

| 2: change:= true |

| 3: ( and contain the delivery constraints) |

| 4: while (change) { |

| 5: change:= false |

| 6: if and and |

| 7: { T:= |

| 8: change:= true } } |

| 9: return |

| Algorithm 5: The operator : (). |

| 1: Boolean change, set of tuples , set of tuples , set of tuples T |

| 2: change:= true |

| 3: |

| 4: while (change) { |

| 5: change:= false |

| 6: if and and |

| 7: {T:= |

| 8: change:= true } } |

| 9: return |

7.3. Proof of Correctness

In the previous subsection, we presented an adaptation of a causal ordering algorithm as a solution for the TFL problem. We now demonstrate that the proposed algorithm is TFL-free.

When analyzing Definition 5, we can interpret that a TFL occurs in the data plane due to the violation of the causal order delivery of the relevant OpenFlow message FlowMod, and consequently, a packet or a flow of packets starts to loop between the underlying switches. Thus, and in order to prove that the algorithm is TFL-free, it is sufficient to demonstrate that there is no data packet flow that holds with the conditions specified in the TFL pattern of Definition 5. To accomplish the proof, we focus on the packet that triggers the TFL pattern. The following theorem proves this observation.

Theorem 1. The algorithm guarantees that ∄ a data packet , such that (i) and (ii) where and .

Let us assume that the Algorithm 1 and the Algorithm 2 store knowledge of the latest message/packet sent from the same process to another process, through a local matrix named that has the same structure as the delivery matrix (for more details, see the structure description subsection). Therefore, for this proof, we consider this additional instruction in both mentioned algorithms: (6). This hypothetical instruction allows building a matrix that considers the message(s)/packet(s) that belong(s) to the causal history of a message/packet and that has (have to be delivered before to a process . Thus, if is less than or equal to , this means that all message(s)/packet(s) that causally depend(s) on has (have) already been delivered to . On the other hand, if is greater than , this means that some of the message(s)/packet(s) that causally depend(s) on has (have) not been delivered to .

Proof. This is proven by contradiction. Let us assume that there is a packet , such that:

According to Definition 5, this is by transitivity since and , such that m and have as a common destination.

The existence of a

under the mentioned Conditions 1 and 2 implies that

when

receives

. However, and based on the proof of [

6], this cannot occur as the algorithm allows the delivery of

to

only when

, which contradicts the initial assumption. □

8. Scenario Description

We present the example of

Figure 6 to illustrate how our algorithm works. This example shows two independent updating processes within the same topology. Note that updating processes in OpenFlow-based SDNs are independent as forwarding decisions are flow-based: each packet flow is determined by match fields, as well as by update messages disseminated by the controller to update the forwarding tables of switches. The two updating processes shown in

Figure 6 are distinguished by means of two different colors, corresponding to a

in purple and a

in blue. In both examples, data packets that interleave with the update messages sent from the controller (

) (the

is not depicted in

Figure 6) and installed into the switches

(

) may enter into TFLs between all intermediate switches

. For brevity, only the update forwarding process corresponding to

is explored. However, and for completeness,

Table 1 and

Table A1 and

Table A2 in

Appendix A include the information related to both matches. Update messages are illustrated in

Table 1.

Table A1 shows piggybacked control information of update messages, and

Table A2 depicts control information for future update messages.

TFLs that occur when updating forwarding path related to : We will follow a packet from the origin to its destination taking into consideration the routing tables of switches corresponding to the initial policy of (colored with purple). Assuming that and update their flow tables, then forwards an ingoing matched data packet to (see the dashed purple arrow between and ). After delivering , forwards it to , and the latter delivers before updating its flow table. As a result, ends in a TFL between and (see the dashed purple arrow between and and the solid purple arrow between and ). If the last assumption is not the case, i.e., delivers after having updated its flow table, then should forward to . The same risk that enters into a TFL between and exists if delivers before updating its flow tables. may indeed enter into TFLs between all the subsequent pairs, i.e., until reaches , and then, the risk may arise between and .

How the update algorithm proceeds to capture and avoid TFLs: As already mentioned, in each hop, there is a risk of falling into a TFL during the update of the forwarding path. We will review how the initial condition and the algorithm prevent this from happening.

Table 2 illustrates the behavior of the data packet

, which interleaves with the disseminated update messages corresponding to

. Note that in each hop,

gets a different identifier. In the first row, for example,

must travel from the

switch to the switch

. Later, in the following hop,

is forwarded from

to

(second row). This continues until

reaches the

switch.

As mentioned above, before performing the update, the algorithm (see

Section 7.2) begins with the initial condition by sorting update messages by their match fields and arranging the dissemination of messages as follows: all delete messages are disseminated before all add messages. We describe now the algorithmic part. Each disseminated message (see

Table 1) piggybacks direct dependency information related to message(s), which directly depend(s) on it and correspond(s) only to their match fields.

Table A1 shows the control information (

) encapsulated into each message when sending it from the controller, and

Table A2 illustrates the control information needed to be piggybacked for future messages. In

Table A1, the vectors

of tuples encapsulated into update messages are presented and referred to the messages that causally depend on the message, i.e., messages that should be delivered to a switch before the message in question, while

Table A2 presents the vector of the controller

and the tuples stored just after sending each message and that should be encapsulated with the future messages. On the other hand, the control information piggybacked by

is built after delivering it in each hop.

Upon receiving messages/packets (see

Table 3), switches also receive the control information

related to messages and packets. After each delivery, new potential direct dependencies on subsequent messages/packets are added to a receipt switch

. In this scenario, all add update messages sent to the intermediate routing processes

are delivered without any restriction as no message or packet sent before them with a common destination exists. However, for example, the delivery of

with identifier

is left on standby (wait) by

as

piggybacked the tuple

, which means that the message disseminated from the controller

with sequence number

should be delivered before

to

(see

Table 3). Once the message sent by

with sequence number

is delivered,

can be delivered to

. The algorithm treats all

in the same way in the subsequent hops, i.e.,

, if they reach them before the delivery of the delete messages. Therefore, any forwarding of

generated by an installation of an add update message will not be delivered by the next hop switch before the delivery of the corresponding delete message (if it exists), preventing any

from going back to a switch from which it was forwarded, avoiding having them enter TFLs during the update.

9. Discussion

The problem of TFL during updates was intensively attacked by using different techniques. The proposed solutions can be classified into four approaches: ordered update, n-phase commit update, timed update, and the causal update (see

Section 4 for more details). Although previous works have reached loop-free updates, they have not achieved effective and efficient solutions. We refer to the trade-off between ensuring TFL-free updates and performing updates with tolerable computational cost. To achieve a better trade-off, an important consideration is how to reason about the modeling of TFL-free updates. In this context, while all previous works set up synchronous execution models to perform updates, our solution was based on an asynchronous model. This allowed getting rid of the strong assumptions and certain constraints that come with a synchronous execution model. Indeed, solutions based on strong assumptions tend to be less applicable.

In regards to the ordered update approaches [

12,

13,

14], such as the loop-free routing update algorithms proposed by [

14], it has to be noticed that algorithms are centralized into the controller side to calculate updates. During each routing policy update, they perform updates in a free-loop manner by calculating the possible edges that can be updated without generating loops. To do so, the algorithms take forwarding graph properties (set of nodes, set of edges to be added, set of edges to be removed, etc.) as input. In the worst case, the algorithms require |number of new edge to be added| loop-free update steps to perform one routing policy update [

14], which is very expensive in terms of update time and bandwidth. The fact that the controller should stop disseminating update messages at each k-step of an update until receiving acknowledgment messages from all involved switches means that the update requires extra time to be completed. Furthermore, acknowledgment messages result in bandwidth overhead. In contrast, our algorithm proposed to disseminate update messages in one-shot. As shown in

Section 7.2, the only required treatment is to sort update messages by their match fields and arranging the dissemination of messages following a predefined order (see Algorithm 1). In addition, switches are not required to send acknowledgment messages to the controller as the proposed algorithm works with one-step update, and unlike [

14], it does not need acknowledgment messages that confirm the reception of the disseminated messages in order to start another update step.

Regarding the solutions based on the two-phase commit update [

4,

16], they require that each switch maintains the entries of both policies (the initial and the final ones) during the transition synchronous phase. This may end by congesting the memories of switches. Even worse, this approach may not be feasible when the number of entries bypasses the memory limit size of the switches. Our proposed solution avoids such a problem since the update algorithm is based on the replacement of entries. Firstly, it consists of computing an order in which the switches should consume the delete entry update message prior to the add entry update message, instructed by the controller. Secondly, by ensuring the computed order of message execution through causal order delivery of messages/packets, no switch will have entries of both policies at the same time.

As far as the one-phase commit update approach ez-Segway [

17] is concerned, updating tasks are delegated to the switches, qualifying it as a decentralized approach. However, consistent updates are ensured through centralized calculus performed by the controller. This is based on a forwarding graph that contains the global network state at each moment. Therefore, even when the updating process is decentralized among the switches, the process to make decisions is centralized in the controller side and carried out in a synchronous manner. Furthermore, no switch can participate in a new update until the previous updating process has finished. As already mentioned, our proposal avoids the use of a global graph since it is based on the causal order delivery of messages/packets.

The timed update approach [

19,

20] ensures consistent updates. However, the consistency during the intervals of time of updates is not guaranteed. This is due to the adaptation of a synchronous execution model to tackle an asynchronous problem, i.e., the inconsistent update problem. In fact, updates on involved switches are synchronized to be processed simultaneously, assuming an upper bound on message delivery. Actually, each switch receives messages/packet flows at an interval time

where

represents the clock synchronization error. In this case, two periods of events may overlap where the execution order is not trivial in order to ensure consistency. Indeed, as analyzed in

Section 6, the reception of in-fly packets that interleave with the reception of update messages can be a question of inconsistency during the interval time when the delivery of messages may take place, leading to TFLs. Hence, one cannot find out or force the execution order of events by using mechanisms for synchronizing physical time.

The consistency update approach based on Suffix Causal Consistency (SCC) [

21] introduced an update algorithm based on the Lamport timestamp, tackling the forwarding loop problem. We should highlight that this approach and ours are quite different. In fact, SCC works based on timestamps tagging packets to reflect the rules that correspond to each switch. On the other hand, our work is based on establishing a causal order between update messages and in-fly packets. The idea behind SCC is to ensure that an in-fly packet is routed based on its recently installed forwarding path, ensuring bounded looping. As mentioned in

Section 4, the fourth step of the proposed algorithm requires the controller to calculate and install the extra temporarily forwarding rule, redirecting an in-fly packet to the recent forwarding path to reach its destination. The question here is how many extra rules would be required per policy update? Indeed, this generates extra overhead related to controller and switch memory, as well bandwidth as this requires message exchange between the controller and switches. On the other hand, our work proposes an OpenFlow-based SDN model where the relevant update events and an abstraction of the TFL phenomenon are defined. Based on this model, we demonstrated that ensuring causal order delivery of the defined relevant update events, based on our SDN model, was sufficient to ensure the TFL-free property. Contrary to the SCC, ensuring causal order delivery does not need any extra rule installation. Furthermore, packets should not only traverse the most recent path to avoid the occurrence of such phenomenon. As shown in the scenario of

Section 8, a packet may start flying from the old path and then be forwarded to the destination based on the new path without the need for an extra rule to direct it to the new path. Thus, only the original calculated rules are required to perform updates.

Instead, our proposal established a message/packet causal order delivery to detect and avoid any TFL (refer to the correctness proof in

Section 7.3). Obviously, this resulted in memory and bandwidth overhead. However, the causal order message/packet algorithm was designed based on the IDR (see Definition 2). In fact, only direct dependency information between messages/packets with respect to the destination process(es) was needed to be piggybacked with them. In the worst case, each component of a

(vector of the control information of the controller process) could have at most one tuple for each process. This was because there was no concurrent message sent from the controller to a switch, i.e., each update message was sent to a specific switch and not to others. Furthermore, each message only piggybacked control information corresponding to their match fields. Let us go back to the scenario example of

Section 8, in

Table A1, of control information

presented in

Appendix A. Messages of

do not piggyback control information of messages of

. On the other hand, a component of a

(vector of the control information of a routing process) can have at most N tuples for each process, i.e., for each vector component. This occurs when a routing process should, for example, flood a data packet to all outgoing links. Therefore,

control information is required to be piggybacked with each message/packet. Concerning memory overhead, a routing process

needs to store a vector

and a matrix

of

N X

N, which then requires

integers to be stored.

In this work, we introduced a new approach to tackle the TFL SDN update problem based on distributed system principles. By means of this approach, we outperformed the-state-of-the-art by proposing a solution that was more suitable for the characteristics of the SDNs. Indeed, the solution was totally distributed, where the execution model was asynchronous, no global references were required, and it did not assume a message upper bound delivery. The aspects mentioned above favored the following points: (1) the system configuration with the proposed update mechanism aligned with the pure features of a distributed and asynchronous system; (2) the proposed update mechanism minimized the interaction of the controller with the switches during an update (update messages were sent in one shot per updating flow); (3) the controller was not the only network entity that calculated the update operations as the switches contributed in the calculation (based on the exchanged control information and the messages/packets delivery constraints ensured by the update mechanism).

)

)