Abstract

Recently, two evolutionary algorithms (EAs), glowworm swarm optimization (GSO) and the firefly algorithm (FA), have been proposed. Both were inspired by the bioluminescence process that enables light-mediated swarming behavior for mating or foraging. Our literature survey offers considerable evidence that EAs become more effective when appropriate responsive strategies from the adaptive memory programming (AMP) domain are incorporated into their execution. This paper follows this line and proposes the Cyber Firefly Algorithm (CFA), which integrates key elements of the GSO and the FA and extends their advantages with AMP responsive strategies, including multiple guiding solutions, pattern search, multi-start search, swarm rebuilding, and objective landscape analysis. The robustness of the CFA is compared against the GSO, the FA, and several state-of-the-art metaheuristic methods. Experimental results supported by intensive statistical analyses show that the CFA performs better than the other algorithms for global optimization of benchmark functions.

1. Introduction

Many challenging problems in modern engineering and business domains defy the design of satisfactory algorithms. Traditionally, researchers resort to either mathematical programming approaches or heuristic algorithms. However, mathematical programming approaches are plagued by the curse of problem size, and heuristic algorithms offer no guarantee of reaching near-optimal solutions. Recently, metaheuristic approaches have emerged as an alternative between these two extremes. They can be classified into two classes, evolution-based and memory-based algorithms. The evolutionary algorithms (EAs) iteratively improve solution quality through operations inspired by nature metaphors, giving rise to algorithms such as genetic algorithms, artificial immune systems, ant colony optimization, and particle swarm optimization. The memory-based metaheuristic approaches guide the search beyond local optimality by taking full advantage of adaptive memory manipulations. Renowned methods in this class include tabu search, path relinking, scatter search, variable neighborhood search, and greedy randomized adaptive search procedures (GRASP).

The metaheuristic approaches in the two classes have developed rather independently, and only a few works investigate the possible synergy between them [1]. Talbi and Bachelet [2] proposed the COSEARCH approach, which combines tabu search and a genetic algorithm for solving the quadratic assignment problem. Shen et al. [3] proposed an approach called HPSOTS, which enables particle swarm optimization to circumvent local optima by restraining the particle movement with a tabu memory. Marinakis et al. [4] proposed a hybrid of ant colony optimization and GRASP for cluster analysis; however, the two metaheuristics are used separately to tackle the feature selection and clustering problems, respectively. Fuksz and Pop [5] proposed a hybrid genetic algorithm (GA) with variable neighborhood search (VNS) for the number partitioning problem. Their hybrid GA-VNS runs the GA as the main algorithm and uses the VNS procedure to improve individuals within the population. Yin et al. [6] introduced the cyber swarm algorithm (CSA), which gives more substance to particle swarm optimization (PSO) by incorporating the adaptive memory programming (AMP) strategies introduced in the scatter search and path-relinking (SS/PR) template. The adjective “cyber” emphasizes the connection between the evolutionary swarm metaheuristics and the AMP metaheuristics. The cyber swarm algorithm outperforms several state-of-the-art metaheuristics on complex benchmark functions. The experimental results of these works point to a promising research area: combining approaches from the two classes of metaheuristics can create significant benefits that are hard to obtain with approaches from either class alone.

More recently, two evolution-based algorithms [7,8], namely glowworm swarm optimization (GSO) and the firefly algorithm (FA), were proposed. The two algorithms were inspired by the bioluminescence process that enables light-mediated swarming behavior for mating or foraging. The intensity of the light and the distance between the light source and the observer determine the degree of attractiveness that causes the movement. This metaphor can be used to develop a swarm-based optimization algorithm in which a swarm of glowworms/fireflies represents a set of candidate solutions. The light intensity is evaluated by the objective value of the light source, and the distance between the glowworms/fireflies implicitly defines the eligible neighbors since the light observed by an agent decays with distance. The glowworms/fireflies are attracted by visible light sources and fly towards them. Therefore, the solutions represented by the glowworms/fireflies improve their objective value through the swarming behavior. Several improvements of the FA have been proposed. Yang proposed the LFA [9] by combining his original FA with Levy flight. Yu et al. employed a variable step size strategy to create the VESSFA [10] variant. A dynamic adaptive inertia weight was adopted in WFA [11] to use the short-term memory of the previous moving velocity. Kaveh et al. developed CLFA [12], which applies chaos theory and logistic mapping to determine the optimal FA parameters. The tidal force formula was used in FAtidal [13] to improve the balance between exploitation and exploration. GDAFA [14] uses a global-oriented positional update and dynamically adjusts the step size and attractiveness to avoid being trapped in local optima. DsFA [15] employs a dynamic step change strategy to balance the global and local search capabilities, so that the search is less likely to be trapped in a local optimum.

From a long-term perspective of metaheuristic development, we anticipate that the GSO and the FA can be made more effective by incorporating notions from the memory-based approaches, as validated in many previous attempts. Based on the framework of the cyber swarm algorithm [6], which integrates key elements of the two types of metaheuristic methods, we propose the Cyber Firefly Algorithm (CFA) to extend the advantages of the original forms of the GSO and the FA. The CFA conceptions include the employment of multiple guiding fireflies, the embedding of the pattern search, firefly swarm rebuilding by the multi-start search, and responsive strategies based on objective landscape analysis. The robustness of the CFA has been compared against the GSO, the FA, and several state-of-the-art metaheuristic methods. Our statistical analyses and comprehensive experiments show that the CFA is a more effective form of the GSO and the FA. Most FA variants aim to improve the position update mechanism, for example by using Levy flight [9], a variable step size strategy [10], an adaptive inertia weight [11], or a dynamic adjustment strategy [14]. Our CFA differs from these variants by providing a more intelligent step size control mechanism, which performs landscape analysis in the objective space to adaptively tune the step size according to the profile of the incumbent fitness landscape.

The novelty of this paper stems from creating a more effective form of the GSO and FA approaches by bridging the advantages of evolution-based and memory-based metaheuristics. In particular, this paper investigates whether the cyber swarm algorithm (CSA) template is as viable for improving the GSO and the FA as it was shown in [6] to be for improving PSO. Our experimental results show that the proposed CFA outperforms the GSO, the FA, and several state-of-the-art metaheuristics on benchmark datasets. This supports the generalization capability of the CSA template, and one can follow the template to create an effective version of other metaheuristics of interest.

2. Related Work

2.1. Glowworm Swarm Optimization (GSO)

Krishnanand and Ghose [7] developed the GSO algorithm. It is assumed that each glowworm has a luciferin level and a local visibility range. The luciferin level of a glowworm determines its light intensity, and the local visibility range identifies the neighboring glowworms which are visible to it. The glowworm probabilistically chooses a neighbor which has a higher luciferin level than itself and flies towards this neighbor due to light attraction. The local visibility range is dynamic in order to maintain an ideal number of neighbors. The GSO simulates the glowworm behaviors and consists of three phases, described as follows.

The luciferin update phase evaluates the luciferin level of every glowworm according to the decay of its luminescence and the merit of its new position after performing the movement within the evolution cycle t. The luciferin level $\ell_i(t)$ of glowworm i is updated by the following equation.

$$\ell_i(t) = (1-\rho)\,\ell_i(t-1) + \tau f(\mathbf{x}_i(t))$$

where ρ is the decay ratio of the glowworm’s luminescence and τ is an enhancement constant. The first term is the luminescence that persists after decay with time, and the second term is the additive luminescence as a function of $f(\mathbf{x}_i(t))$, which indicates the objective value measured at the glowworm’s new position $\mathbf{x}_i(t)$ (here, without loss of generality, we assume the objective function is to be maximized).

In the movement phase of each evolution cycle, every glowworm in the swarm must perform a movement by flying towards a neighbor which has a higher luciferin level than the incumbent glowworm and is located within the local visibility neighborhood defined by a radius $r_i(t)$. The probability $p_{ij}(t)$ with which glowworm i is attracted to a brighter glowworm j at evolution cycle t is given by:

$$p_{ij}(t) = \frac{\ell_j(t) - \ell_i(t)}{\sum_{k \in N_i(t)} \left(\ell_k(t) - \ell_i(t)\right)}$$

where $\ell_i(t)$ is the luciferin level of glowworm i and $N_i(t)$ is the set of brighter glowworms within the visibility neighborhood of glowworm i at evolution cycle t. Once a neighbor is selected, say glowworm j, the current glowworm i performs a movement to update its position as follows.

$$\mathbf{x}_i(t+1) = \mathbf{x}_i(t) + s\,\frac{\mathbf{x}_j(t) - \mathbf{x}_i(t)}{\left\lVert \mathbf{x}_j(t) - \mathbf{x}_i(t) \right\rVert}$$

where s is the movement distance and $\lVert\cdot\rVert$ indicates the length of the referred vector. Precisely speaking, glowworm i moves s units of distance towards glowworm j.

The visibility range update phase dynamically tunes the visibility radius $r_i(t)$ of each glowworm to maintain an ideal number of neighbors, $n^{*}$. So, the current number of neighbors, $|N_i(t)|$, is compared to $n^{*}$ and the visibility radius is tuned by the following equation.

$$r_i(t+1) = \min\left\{ r_{\max},\ \max\left\{ 0,\ r_i(t) + \eta\left(n^{*} - |N_i(t)|\right) \right\} \right\}$$

where $r_{\max}$ is the maximum visibility radius and η is a scaling parameter for tuning $r_i(t)$. Therefore, the value of $r_i(t)$ is increased if $|N_i(t)| < n^{*}$, and it is decreased if $|N_i(t)| > n^{*}$. The feasible range of $r_i(t)$ is bounded within $[0, r_{\max}]$. The case $r_i(t) = 0$ indicates that many glowworms are crowding around the position of the current glowworm, while $r_i(t) = r_{\max}$ discloses the situation that the current glowworm is far from most of the other glowworms.
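The three GSO phases can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: it assumes the standard GSO update rules from the literature (decayed luciferin plus a reward, luciferin-gap-proportional neighbor choice, a fixed-length step, and a clamped radius update), the function name `gso_step` is hypothetical, and the default parameter values are placeholders rather than the settings used in this paper.

```python
import math
import random


def gso_step(positions, luciferin, radii, objective, rho=0.4, tau=0.6,
             s=0.03, eta=0.08, r_max=3.0, n_ideal=5, rng=random):
    """One GSO evolution cycle for a maximization problem (illustrative sketch)."""
    n = len(positions)
    # Phase 1: luciferin update = persisted luminescence + reward for position.
    luciferin = [(1.0 - rho) * luciferin[i] + tau * objective(positions[i])
                 for i in range(n)]
    new_positions = [list(p) for p in positions]
    new_radii = list(radii)
    for i in range(n):
        # Neighbors are brighter glowworms inside the visibility radius.
        nbrs = [j for j in range(n)
                if j != i and luciferin[j] > luciferin[i]
                and math.dist(positions[i], positions[j]) < radii[i]]
        if nbrs:
            # Phase 2: choose a neighbor with probability proportional to
            # the luciferin gap, then move s units towards it.
            gaps = [luciferin[j] - luciferin[i] for j in nbrs]
            j = rng.choices(nbrs, weights=gaps, k=1)[0]
            d = math.dist(positions[i], positions[j])
            if d > 0.0:
                new_positions[i] = [xi + s * (xj - xi) / d
                                    for xi, xj in zip(positions[i], positions[j])]
        # Phase 3: widen the radius when neighbors are scarce, shrink it
        # when they are abundant, and clamp to [0, r_max].
        new_radii[i] = min(r_max, max(0.0, radii[i] + eta * (n_ideal - len(nbrs))))
    return new_positions, luciferin, new_radii
```

Calling `gso_step` repeatedly on the returned triple yields successive evolution cycles; the brightest glowworm never moves within a cycle because it has no brighter neighbor.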

2.2. Firefly Algorithm (FA)

Yang [8] introduced the FA. The FA explores the solution space with a population of fireflies. Each firefly emits flashing light which attracts its mates with a strength that diminishes with the squared distance and the light absorption. By using this metaphor, a firefly (representing a candidate solution) can improve its light intensity (a merit function of the objective value) by flying toward a more attractive firefly. In particular, the attractiveness $\beta_{ij}$ of firefly j observed by firefly i is defined as follows.

$$\beta_{ij} = I_j\, e^{-\gamma r^{2}}$$

where γ is the light absorption coefficient, r is the Euclidean distance between the two fireflies, and $I_j$ is the light intensity of firefly j.

The movement of firefly i, attracted to a more attractive firefly j, is given by the following equation,

$$\mathbf{x}_i \leftarrow \mathbf{x}_i + \beta_{ij}\left(\mathbf{x}_j - \mathbf{x}_i\right) + \alpha\,\boldsymbol{\varepsilon}_i$$

where $\beta_{ij}$ is the attractiveness of firefly j observed by firefly i, the second term is due to the light attraction, and the third term is a random perturbation, with α being the randomization parameter and $\boldsymbol{\varepsilon}_i$ a vector of small random numbers drawn from a Gaussian or uniform distribution. After the movement, the light intensity of firefly i is re-evaluated and the relative attractiveness of every other firefly to firefly i is re-calculated. The FA conducts a maximum number of evolution cycles, and within each cycle every pair of fireflies is examined for possible movement due to attraction and randomization.
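The pairwise examination described above can be illustrated with a short Python sketch of one FA evolution cycle. It is only a sketch under stated assumptions: the attractiveness is taken to be the light intensity of the brighter firefly damped by an exponential of the squared distance (one common form in the FA literature), the perturbation is Gaussian, and the function name `firefly_sweep` and default values are hypothetical.

```python
import math
import random


def firefly_sweep(positions, intensity_fn, gamma=1.0, alpha=0.1, rng=random):
    """One FA cycle: each firefly i is compared with every firefly j."""
    pos = [list(p) for p in positions]
    light = [intensity_fn(p) for p in pos]
    n = len(pos)
    for i in range(n):
        for j in range(n):
            if light[j] > light[i]:
                r2 = sum((a - b) ** 2 for a, b in zip(pos[i], pos[j]))
                # Assumed attractiveness form: intensity damped by distance.
                beta = light[j] * math.exp(-gamma * r2)
                # Attraction term plus a small random perturbation.
                pos[i] = [xi + beta * (xj - xi) + alpha * rng.gauss(0.0, 1.0)
                          for xi, xj in zip(pos[i], pos[j])]
                # Re-evaluate the moved firefly before the next comparison.
                light[i] = intensity_fn(pos[i])
    return pos, light
```

Setting `alpha=0.0` removes the randomization, which makes the attraction term easy to inspect in isolation.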

2.3. Adaptive Memory Programming (AMP)

The AMP comprises a broader spectrum than its more popularly accepted branch, the tabu search [16]. In what follows, we focus our discussion on the use of the SS/PR template [17] which we found very effective in creating benefits for marrying with evolutionary-based metaheuristics.

2.3.1. Scatter Search (SS)

The scatter search (SS) [18] operates on a common reference set consisting of diverse and elite solutions observed throughout the evolution history. The SS method dynamically updates the reference set and systematically selects subsets of the reference set to generate new solutions. These new solutions are improved until local optima are obtained, and the reference set is updated by comparing its current members to these local optima. Some important features of the SS are as follows. (1) A diversification-generation method is designed to identify under-explored regions in the solution space such that a set of diverse trial solutions can be produced. (2) An improvement method is used to enhance the quality of the solutions being kept. This process usually involves a local search operation which brings the trial solution to a local optimum. (3) A reference set update method is adopted to ensure that the reference set maintains a set of high-quality and mutually diverse solutions observed over the whole search history. (4) The multi-start search strategy iteratively restarts a new search session when the current search loses its efficacy. To lead the search towards under-explored regions of the solution space, the multi-start strategy works with a rebuilt reference set produced by the diversification-generation method. (5) Interactions between multiple reference solutions are systematically considered. The simplest implementation is to generate all 2-element subsets consisting of exactly two reference solutions. Various search courses are conducted between and beyond the selected reference solutions.
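Features (3) and (5) can be illustrated with a small Python sketch. It is deliberately simplified and is not the SS template itself: the update rule below keeps the lowest-cost solutions and only rejects near-duplicates, whereas a full scatter search also applies explicit diversity criteria. The function names and the `min_dist` parameter are hypothetical.

```python
import math
from itertools import combinations


def update_reference_set(ref_set, candidate, cost, capacity, min_dist=1e-6):
    """Admit a candidate (minimization) unless it duplicates a member."""
    if any(math.dist(candidate, r) < min_dist for r in ref_set):
        return ref_set  # near-duplicate: the reference set is unchanged
    # Keep the `capacity` lowest-cost solutions among members and candidate.
    merged = sorted(ref_set + [candidate], key=cost)
    return merged[:capacity]


def two_element_subsets(ref_set):
    """All pairs of reference solutions used to generate new trial solutions."""
    return list(combinations(ref_set, 2))
```

A reference set of b solutions yields b(b-1)/2 pairs, so the subset-generation cost grows quadratically with the reference set size.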

2.3.2. Path Relinking (PR)

There is a common hypothesis accepted by most of the metaheuristics that elite solutions often lie on trajectories from good solutions to other good solutions. In a broader sense, the crossover of chromosomes, the sociocognition learning of particles, and the pheromone trail searching are all effective operators following the noted hypothesis. PR therefore creates a search path between elite solutions. An initiating solution and a guiding solution are selected from the repository of elite solutions. PR then transforms the initiating solution into the guiding solution by generating a succession of moves that introduce attributes from the guiding solution into the initiating solution. The relinked path can go beyond the guiding solution to extend the search trajectory. PR works in the neighborhood space instead of the Euclidean space and variable neighborhoods are usually considered in performing successive moves. Therefore, PR is well fitted for use as a diversification strategy.
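The relinking process can be sketched on solutions encoded as attribute lists. The following Python function is a minimal illustration, assuming a minimization problem and a greedy choice of which guiding attribute to introduce next; it stops at the guiding solution and does not extend the path beyond it. The function name `path_relink` is hypothetical.

```python
def path_relink(initiating, guiding, cost):
    """Walk from the initiating to the guiding solution, one attribute at a time."""
    current = list(initiating)
    best, best_cost = list(current), cost(current)
    # Positions where the two solutions still differ.
    diff = [k for k in range(len(current)) if current[k] != guiding[k]]
    while diff:
        def cost_after(k):
            trial = list(current)
            trial[k] = guiding[k]
            return cost(trial)
        # Greedy move: introduce the guiding attribute that costs least.
        k = min(diff, key=cost_after)
        current[k] = guiding[k]
        diff.remove(k)
        c = cost(current)
        if c < best_cost:
            best, best_cost = list(current), c
    return best, best_cost
```

The returned solution is the best one met along the path, which may be strictly better than both end points.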

3. Proposed Methods

Our proposed CFA synergizes the strength from three domains, namely the GSO, the FA, and the AMP, to create a more effective global optimization metaheuristic algorithm. We articulate the new features of the CFA as follows.

3.1. Multiple Guiding Points

Both the GSO and the FA conduct the firefly movement by referring to a guiding point, which is a nearby elite firefly with a higher fitness than that of the moving firefly. Using a single guiding point raises the risk of being misled by the selection of a false peak. Our proposed CFA instead employs a two-guiding-point mechanism to enhance the exploration capability of the algorithm. More precisely, the neighboring fireflies of the incumbent firefly i are identified by reference to the current value of the visibility radius, $r_i(t)$, where t indicates the index of the evolution iteration. Among the neighbors, the elite fireflies with a higher fitness than that of the incumbent firefly are eligible for guiding-point selection. We employ the rank selection strategy to select two guiding points from the eligible neighbors. According to our preliminary experiments, the rank selection strategy is more robust against a prickly fitness landscape than the roulette-wheel selection strategy because the former works in the ranking value space instead of the fitness space.
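A minimal Python sketch of rank selection for two guiding points follows. The paper does not specify the rank-to-probability mapping, so linear rank weights (1 for the worst eligible neighbor, up to the number of eligible neighbors for the best) are an assumption here, as is drawing the two guides without replacement. The function name `select_two_guides` is hypothetical.

```python
import random


def select_two_guides(eligible, fitness, rng=random):
    """Pick two distinct guiding fireflies by linear rank (maximization)."""
    ranked = sorted(eligible, key=lambda j: fitness[j])  # worst first
    if len(ranked) <= 2:
        return list(ranked)
    weights = list(range(1, len(ranked) + 1))  # rank weights, not raw fitness
    first = rng.choices(ranked, weights=weights, k=1)[0]
    # Draw the second guide from the remaining candidates.
    rest = [(j, w) for j, w in zip(ranked, weights) if j != first]
    second = rng.choices([j for j, _ in rest],
                         weights=[w for _, w in rest], k=1)[0]
    return [first, second]
```

Because only ranks enter the weights, a neighbor whose fitness is orders of magnitude larger than the others does not monopolize the selection, which is the property the text attributes to rank selection.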

3.2. Luciferin-Proportional Movement

In the GSO and the FA, the luciferin of a firefly is used only for determining the single guiding point. As we deploy the two-guiding-point mechanism, the relative contribution of each of the two guiding points can be further elaborated. We propose to convert the luciferin of each guiding point into a weighting value for its individual contribution. Given two guiding points $\mathbf{x}_j(t)$ and $\mathbf{x}_k(t)$ observed at iteration t, with luciferin levels $\ell_j(t)$ and $\ell_k(t)$, their weighting values $w_j$ and $w_k$ are set as follows.

$$w_j = \frac{\ell_j(t)}{\ell_j(t) + \ell_k(t)}, \qquad w_k = \frac{\ell_k(t)}{\ell_j(t) + \ell_k(t)}$$

We then propose the firefly movement formula as follows.

$$\mathbf{x}_i(t+1) = \mathbf{x}_i(t) + w_j \varphi_1 \left(\mathbf{x}_j(t) - \mathbf{x}_i(t)\right) + w_k \varphi_2 \left(\mathbf{x}_k(t) - \mathbf{x}_i(t)\right)$$

where $\varphi_1$ and $\varphi_2$ are random values drawn from a uniform distribution U(lb, ub). Hence, firefly i is drawn by firefly j and firefly k with a driving force proportional to their respective luciferin levels. Parameters lb and ub control the feasible range of the step size for every firefly movement, and their values are dynamically tuned in accordance with the landscape analysis described in Section 3.5.
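The luciferin-proportional movement can be sketched in Python as follows. This is a hedged illustration rather than the authors' exact formula: it assumes that each weight is the guiding point's share of the two luciferin levels (both assumed positive), that each random step factor scales the displacement towards one guiding point, and that no additional perturbation term is applied. The function name `guided_move` is hypothetical.

```python
import random


def guided_move(x_i, x_j, x_k, luc_j, luc_k, lb, ub, rng=random):
    """Move firefly i towards guides j and k, weighted by their luciferin."""
    # Assumed weighting: each guide's share of the combined luciferin.
    w_j = luc_j / (luc_j + luc_k)
    w_k = 1.0 - w_j
    # Independent random step factors drawn from U(lb, ub).
    phi_j = rng.uniform(lb, ub)
    phi_k = rng.uniform(lb, ub)
    return [xi + w_j * phi_j * (xj - xi) + w_k * phi_k * (xk - xi)
            for xi, xj, xk in zip(x_i, x_j, x_k)]
```

With `lb = ub = 1` and equal luciferin levels the firefly lands on the midpoint of the two guides, which gives a quick sanity check of the weighting.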

3.3. Adaptive Local Search

Local search is a rudimentary component of most successful metaheuristics, such as memetic algorithms [19], scatter search [18], and GRASP [20], to name a few. Our CFA employs the pattern search method [21,22], which iteratively performs a two-step trajectory search. The explorative search step tentatively looks for an improving neighbor, while the pattern search step aggressively expands the improving move by doubling the execution of the move. The proposed CFA embodies the pattern search method as a local search but performs it in an adaptive way. The local search is performed on all fireflies only after every t1 fitness function evaluations have been consumed. To balance search efficiency and effectiveness, we adaptively vary the frequency parameter (t1) of the local search. The adaptive local search strategy is based on the analysis of the landscape observed in the objective space, as described in Section 3.5.
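The two-step search can be sketched with a simplified Hooke-Jeeves style routine in Python. The sketch assumes a minimization problem, a coordinate-wise explorative step, and a pattern step that repeats the successful displacement once; the step-halving rule, the function name `pattern_search`, and all default values are assumptions rather than the configuration used in the CFA.

```python
def pattern_search(x, cost, step=0.5, shrink=0.5, tol=1e-6, max_evals=10_000):
    """Simplified pattern search: explorative step, then a doubled move."""
    base, base_cost = list(x), cost(x)
    evals = 1
    while step > tol and evals < max_evals:
        # Explorative step: probe +step and -step along each coordinate.
        trial, trial_cost = list(base), base_cost
        for k in range(len(trial)):
            for delta in (step, -step):
                cand = list(trial)
                cand[k] += delta
                c = cost(cand)
                evals += 1
                if c < trial_cost:
                    trial, trial_cost = cand, c
                    break
        if trial_cost < base_cost:
            # Pattern step: repeat the improving displacement (double it).
            pattern = [2.0 * t - b for t, b in zip(trial, base)]
            pc = cost(pattern)
            evals += 1
            if pc < trial_cost:
                base, base_cost = pattern, pc
            else:
                base, base_cost = trial, trial_cost
        else:
            step *= shrink  # no improving neighbor: refine the mesh
    return base, base_cost
```

In an adaptive scheme such as the one described above, this routine would be invoked on each firefly only once every t1 fitness function evaluations.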

3.4. Multi-Start with PR for Firefly Swarm Rebuilding

Multi-start is a mechanism for reinitiating the search with an under-explored region when the trajectory search loses its efficacy. The multi-start search strategy thus works with two functions. The function for critical event detection emits a signal upon the moment when the search stagnation behavior is observed. The diversification-generation function identifies an under-explored region in the solution space to generate a starting solution for the new trajectory search. Our CFA approach facilitates the critical event detection by monitoring the number of enduring iterations since the last improvement of the best-so-far firefly. If the number of no-improvement iterations for the best-so-far firefly has exceeded a parameter t2, a signal for detection of a critical event is returned. The parameter t2 is also made adaptive by the landscape analysis approach.

The diversification-generation function of the CFA approach is implemented with the path-relinking technique, which has been found very useful in identifying a promising solution within an under-explored region and has been adopted as a diversification strategy, for example in Yin et al. [6]. When a critical event signal is activated, a fraction δ of the fireflies in the swarm is rebuilt, and each new firefly is placed on the best solution along a relinked path connecting a random solution and the best-so-far firefly. The path-relinking technique creates the path by dividing the subspace between the two end points of the path into n equal-size hyper-grids, where n is the number of decision variables of the addressed optimization problem. A solution is sampled within each hyper-grid, so in total n solutions are obtained along the relinked path. The best of the n sampled solutions is designated as the rebuilt firefly.
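The rebuilding procedure can be sketched in Python under one interpretation of the description above: the straight segment between a random solution and the best-so-far firefly is split into n equal parts, one point is sampled uniformly inside each part, and the lowest-cost sample becomes the rebuilt firefly. Treating the hyper-grids as segments of a line, choosing the fireflies to rebuild at random, and assuming minimization are all assumptions; the function names are hypothetical.

```python
import random


def relinked_rebuild(best, bounds, cost, rng=random):
    """Return the best of n samples on the path from a random point to `best`."""
    n = len(best)
    start = [rng.uniform(lo, hi) for lo, hi in bounds]  # random end point
    samples = []
    for g in range(n):
        # Relative position drawn uniformly inside the g-th of n equal parts.
        t = (g + rng.random()) / n
        samples.append([s + t * (b - s) for s, b in zip(start, best)])
    return min(samples, key=cost)


def rebuild_swarm(swarm, best, bounds, cost, delta, rng=random):
    """Replace a fraction delta of the swarm (chosen at random) in place."""
    k = int(delta * len(swarm))
    for idx in rng.sample(range(len(swarm)), k):
        swarm[idx] = relinked_rebuild(best, bounds, cost, rng)
    return swarm
```

In the CFA this routine would be triggered only when the number of no-improvement iterations of the best-so-far firefly exceeds t2.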

3.5. Responsive Strategies Based on Landscape Analysis

The previous features of the CFA can be made more effective by incorporating the notion of a responsive strategy, which adaptively tunes the search strategy when status transitions are observed. The CFA adapts the strategy parameters when the search encounters a transition between the smooth and prickly landscapes manifested by the objective function. The measure of fitness distance correlation (FDC) proposed by Jones and Forrest [23] has proved useful for judging the suitability of a fitness landscape for various search algorithms. The FDC measures the correlation between the solution cost and the distance to the closest global optimum. Let the set of observed cost-distance pairs be {(c1, d1), …, (cm, dm)}; the correlation coefficient is defined as

$$\mathrm{FDC} = \frac{\frac{1}{m}\sum_{i=1}^{m}\left(c_i - \bar{c}\right)\left(d_i - \bar{d}\right)}{\sigma_c\,\sigma_d}$$

where $\bar{c}$ and $\bar{d}$ are the mean cost and the mean distance, and $\sigma_c$ and $\sigma_d$ are the standard deviations of the costs and distances. Hence, a significant FDC value implies that, on average, the better the solution quality, the closer the solution is to an optimal solution. A single-modal objective function can be expected to produce a high FDC value, in contrast to a multi-modal objective function, whose FDC value is closer to zero because the fitness and the distance are unrelated.
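The FDC of a set of cost-distance pairs is simply their Pearson correlation coefficient, which can be computed as in the following Python sketch. The function name is hypothetical, and returning 0 when either standard deviation vanishes is a convention chosen here to avoid division by zero, not something stated in the paper.

```python
import math


def fitness_distance_correlation(costs, distances):
    """Pearson correlation between solution costs and distances to the optimum."""
    m = len(costs)
    mean_c = sum(costs) / m
    mean_d = sum(distances) / m
    # Covariance and standard deviations, all normalized by m.
    cov = sum((c - mean_c) * (d - mean_d)
              for c, d in zip(costs, distances)) / m
    sd_c = math.sqrt(sum((c - mean_c) ** 2 for c in costs) / m)
    sd_d = math.sqrt(sum((d - mean_d) ** 2 for d in distances) / m)
    if sd_c == 0.0 or sd_d == 0.0:
        return 0.0  # degenerate sample: treat as uncorrelated
    return cov / (sd_c * sd_d)
```

For example, costs that grow linearly with the distance to the best-known solution give an FDC of 1, the signature of a single-modal region.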

Most existing research adopts the FDC technique as an off-line analysis for characterizing the fitness landscape, while our CFA exploits the fitness landscape analysis as an online responsive strategy. We conduct the fitness landscape analysis once every 1000n fitness function evaluations, where n is the number of problem variables. All the visited solutions identified as local optima in the trajectory of a firefly within this time period are eligible for the FDC computation because these local optima are representative solutions produced in the evolution. As the global optimum is unknown, the best solution obtained at the end of this period is taken as the best-known solution. The FDC value is computed from these local optima and the best solution identified in the current time period of the previous 1000n fitness function evaluations. Consequently, our CFA performs an adaptive landscape analysis which can predict the objective landscape within the region currently explored by the firefly swarm and activate appropriate responsive strategies to make the search more effective.

If the derived FDC value for the current time period is greater than a threshold (FDC > h1), the local objective landscape is considered to be asymptotically single-modal, and we perform two responsive strategies as follows: (1) increasing the distance, on average, of the firefly movement by lb = lb/λ and ub = ub/λ (0 < λ < 1); (2) increasing the elapsed iterations for checking the feasibility of executing the local search and multi-start search by t1 = t1/λ and t2 = t2/λ. The implication of the two responsive strategies is that when the local objective landscape is single-modal, the search may be more effective if the firefly movement distance is greater and the local search and the multi-start search are conducted less frequently.

On the contrary, if the absolute FDC value for the current time period is less than a threshold (|FDC| < h2), the local objective landscape is considered to be asymptotically multi-modal, and we perform two responsive strategies as follows: (1) decreasing the distance, on average, of the firefly movement by lb = lb × λ and ub = ub × λ; (2) decreasing the elapsed iterations for checking the feasibility of executing the local search and multi-start search by t1 = t1 × λ and t2 = t2 × λ.
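The two responsive rules can be summarized in a short Python sketch. The update formulas follow the text; the dictionary layout, the function name `adapt_parameters`, and the assumption that h2 does not exceed h1 (so the two cases cannot both fire) are illustrative choices.

```python
def adapt_parameters(fdc, params, h1, h2, lam):
    """Return updated copies of lb, ub, t1, t2 given the latest FDC value."""
    assert 0.0 < lam < 1.0
    keys = ("lb", "ub", "t1", "t2")
    updated = dict(params)
    if fdc > h1:
        # Single-modal region: longer moves, rarer local search and restarts.
        for key in keys:
            updated[key] = params[key] / lam
    elif abs(fdc) < h2:
        # Multi-modal region: shorter moves, more frequent local search
        # and restarts.
        for key in keys:
            updated[key] = params[key] * lam
    return updated
```

FDC values that satisfy neither condition leave the parameters unchanged, so the search keeps its current settings in ambiguous regions.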

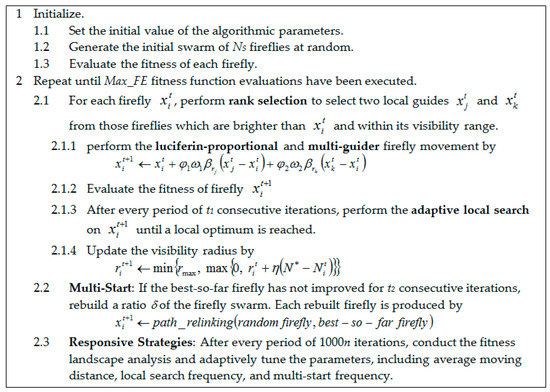

3.6. Pseudo-Code of CFA

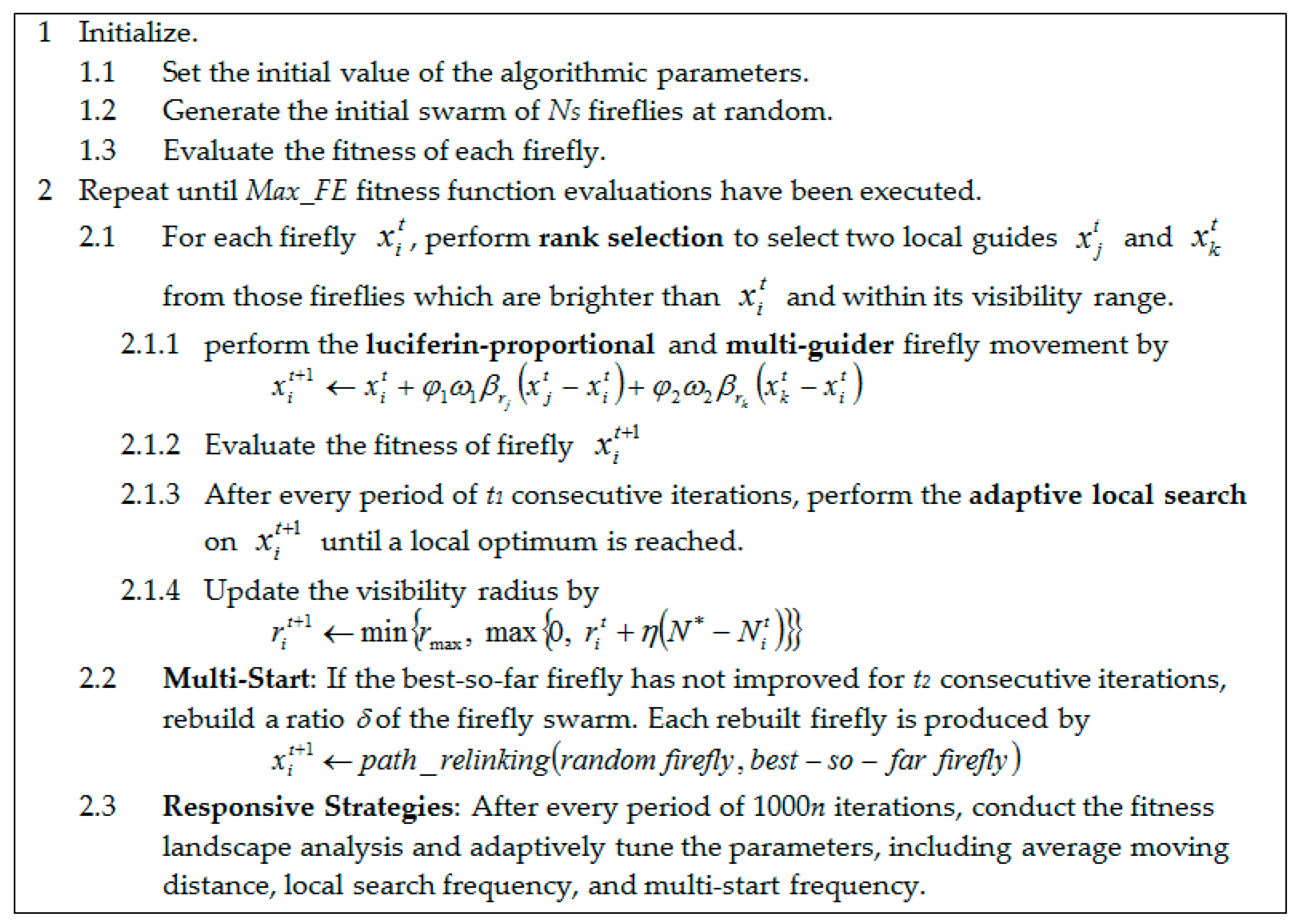

The pseudo-code of our CFA is elaborated in Figure 1. The new features of the CFA are emphasized in boldface for comprehensive descriptions.

Figure 1. Pseudo-code of the CFA.

4. Experimental Results and Discussions

We have conducted intensive experiments and statistical tests to compare the performance of the proposed CFA and its counterparts. The experimental results disclose several interesting outcomes in addition to establishing the effectiveness of the proposed method. The platform for conducting the experiments is a PC with an Intel Core i5 CPU and 8.0 GB RAM. All programs are coded in C#.

4.1. Benchmark Test Functions and Algorithm Parameter Settings

We have chosen two benchmark datasets. (1) The standard benchmark dataset contains 23 test functions that are widely used in the nonlinear global optimization literature [6,18,24,25]. The detailed function formulas can be found in the relevant literature; the functions cover a wide variety of landscapes and present a significant challenge to optimization methods. The number of variables, domain, and optimal value of these benchmark test functions are listed in Table 1. Performance evaluation on this dataset is reported in terms of the mean best function value obtained from 30 repetitive runs. For each run, the tested algorithm is allowed 160,000 function evaluations (FEs), which makes it very likely that the algorithm has converged. (2) The IEEE CEC 2005 benchmark dataset is designed for unconstrained real-parameter optimization. We selected the most challenging functions and compared our CFA with the best methods reported in [26] under the same evaluation criteria described in the original paper. Implementations of the benchmark functions from both datasets are available in a public library [27] in programming languages such as MATLAB and Java. As we used C# for all the experiments, we ported the public code to C# and embedded it into our main program.

Table 1. The number of variables, domain, and optimal value of the benchmark functions.

To obtain the parameter settings of the CFA, GSO, and FA, various values for their parameters were tested with the standard benchmark dataset and the values that resulted in the best mean function value were used as the parameter settings as tabulated in Table 2. The parameter settings used by other compared metaheuristics will be presented in Section 4.3.2 and Section 4.3.3 where the comparative experiments are presented.

Table 2. Parameter settings of the CFA, GSO, and FA.

4.2. Analysis of CFA Strategies

To understand the influence on performance of using various CFA strategies, we conduct experiments on the benchmark functions with several CFA variants as in the following subsections.

4.2.1. Selection Strategy for Multiple Guiding Solutions

In contrast to the GSO and the FA, the CFA selects multiple guiding solutions for performing the firefly movement. The firefly can circumvent false peaks because it is no longer constrained to move toward the single best solution within its neighborhood. To investigate the influence on performance of the selection strategy for guiding solutions, we compare CFA variants that employ the roulette-wheel selection, the tournament selection, and the rank selection, respectively. The comparative performance of the CFA variants is listed in Table 3. For the test functions with fewer than 10 variables and the relatively simple functions (Sphere and Zakharov), all of the CFA variants work well and the mean best value obtained is very near the optimal value. For the harder and larger functions (Rosenbrock, Rastrigin, and Griewank with 10 or more variables), the CFA with the rank selection strategy finds a better solution for most of the functions than the CFA with the other two selection strategies. The rank selection outperforms the other selection strategies on harder problems because it eliminates the fitness-scaling problem by working in the rank-value space instead of the function-value space, such that the firefly is not severely misled by false but higher peaks.

Table 3. The mean best function value and the standard deviation obtained by using various selection strategies.

4.2.2. Adaptive Strategy for Local Search

Local search is a rudimentary component contained in most of the modern metaheuristic algorithms. The local search procedure exploits the regional function profiles and makes the master metaheuristic algorithm more effective than the original form which does not embed this procedure. However, the local search procedure can be computationally expensive if it is performed within each iteration of the master metaheuristic algorithm. As previously noted, the CFA applies the adaptive local search strategy which dynamically varies the frequency of the local search execution according to the result of the landscape analysis. Table 4 tabulates the mean best function value and the standard deviation obtained by CFA with or without adaptive local search. We observe that for all the benchmark test functions the CFA with adaptive local search obtains better or equivalent mean function value than its counterpart without adaptive local search.

Table 4. The mean best function value and the standard deviation obtained by CFA with or without adaptive local search.

4.2.3. Multi-Start Strategy for Swarm Rebuilding

Multi-start is a diversification strategy which terminates an ineffective trajectory search and reinitiates a new one from an under-explored region. Our CFA applies the adaptive multi-start strategy which monitors the performance of the incumbent firefly swarm. If the program stops improving the performance for a threshold number of consecutive iterations, part of the swarm is rebuilt by positioning some fireflies on diversified locations using the path-relinking technique. The performance stagnation threshold is made adaptive according to the result of the landscape analysis such that the frequency of the multi-start activation depends on the regional function profiles under exploration. It can be seen from Table 5 that the multi-start strategy effectively improves the mean best function value and the standard deviation obtained by CFA.

Table 5. The mean best function value and the standard deviation obtained by CFA with or without multi-start strategy.

4.2.4. Responsive Strategies Based on Landscape Analysis

As noted in Section 3.5, the responsive strategies employed by the CFA are made adaptive based on the analysis of the function landscape. The landscape analysis distinguishes two classic function forms: single-modal and multi-modal. The responsive strategies then vary the movement step size and the frequency of the local search and the multi-start activations to make the CFA search more effective. Table 6 lists the comparative performance of the CFA with and without the landscape analysis. Note that the version of the CFA without adaptive landscape analysis still embeds the local search and the multi-start procedures as rudimentary components, which, however, are executed with fixed parameter values. From the tabulated results, we conclude that the landscape analysis amplifies the performance gains obtained by the local search and the multi-start strategies.

Table 6. The mean best function value and the standard deviation obtained by CFA with or without landscape analysis.

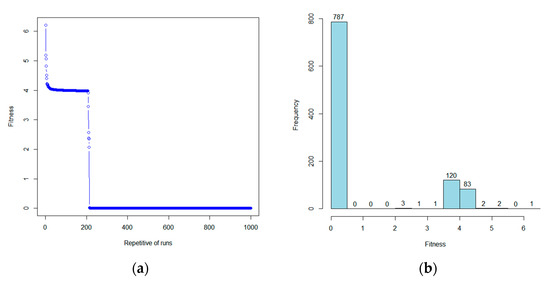

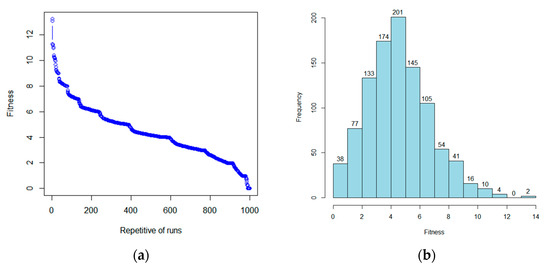

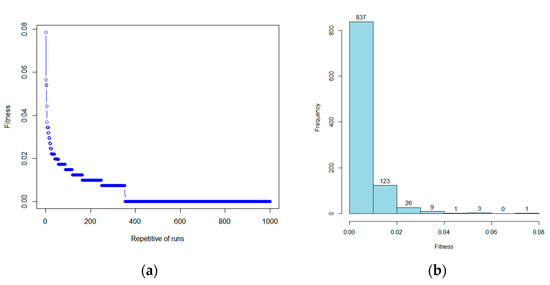

4.2.5. Worst-Case Analysis

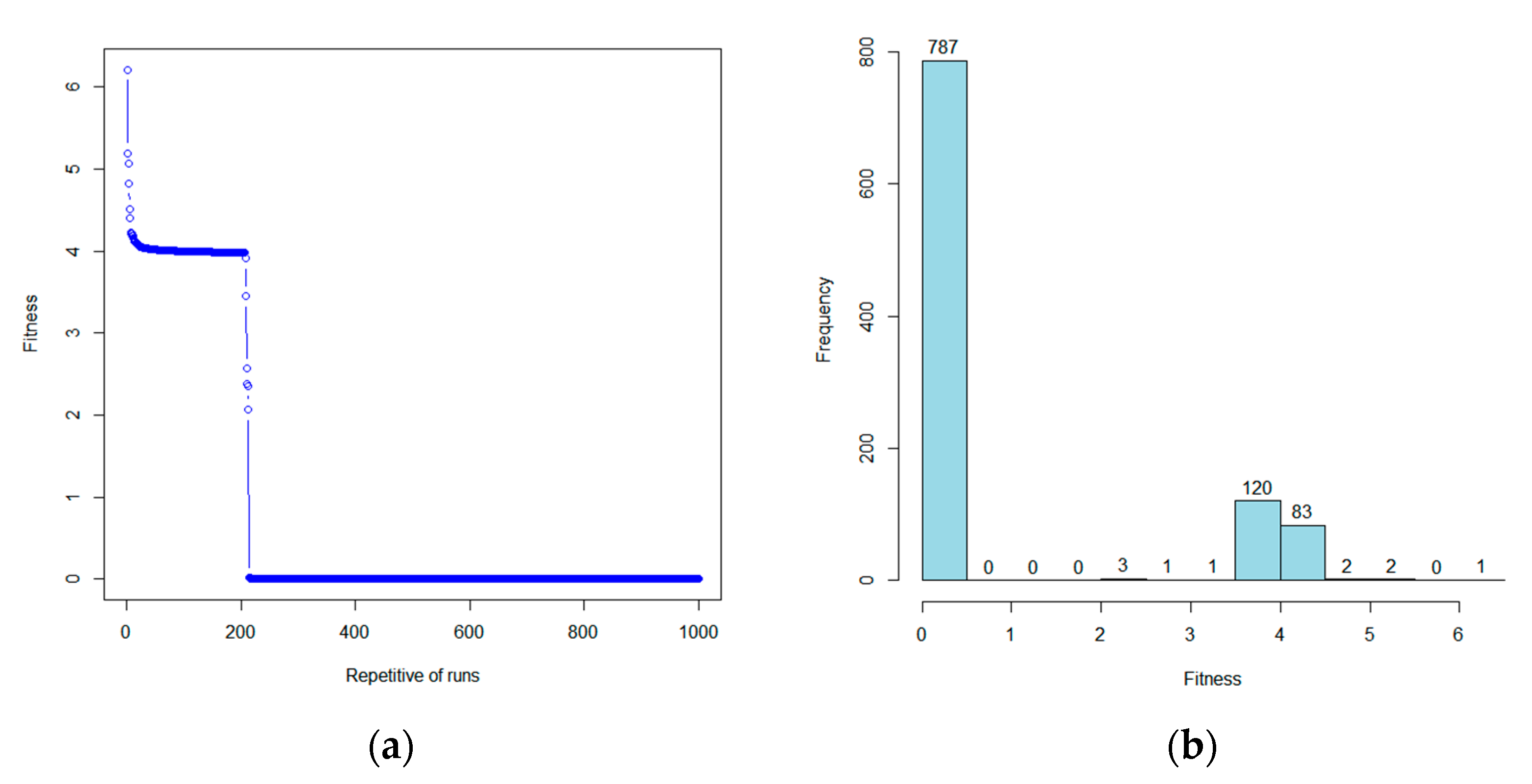

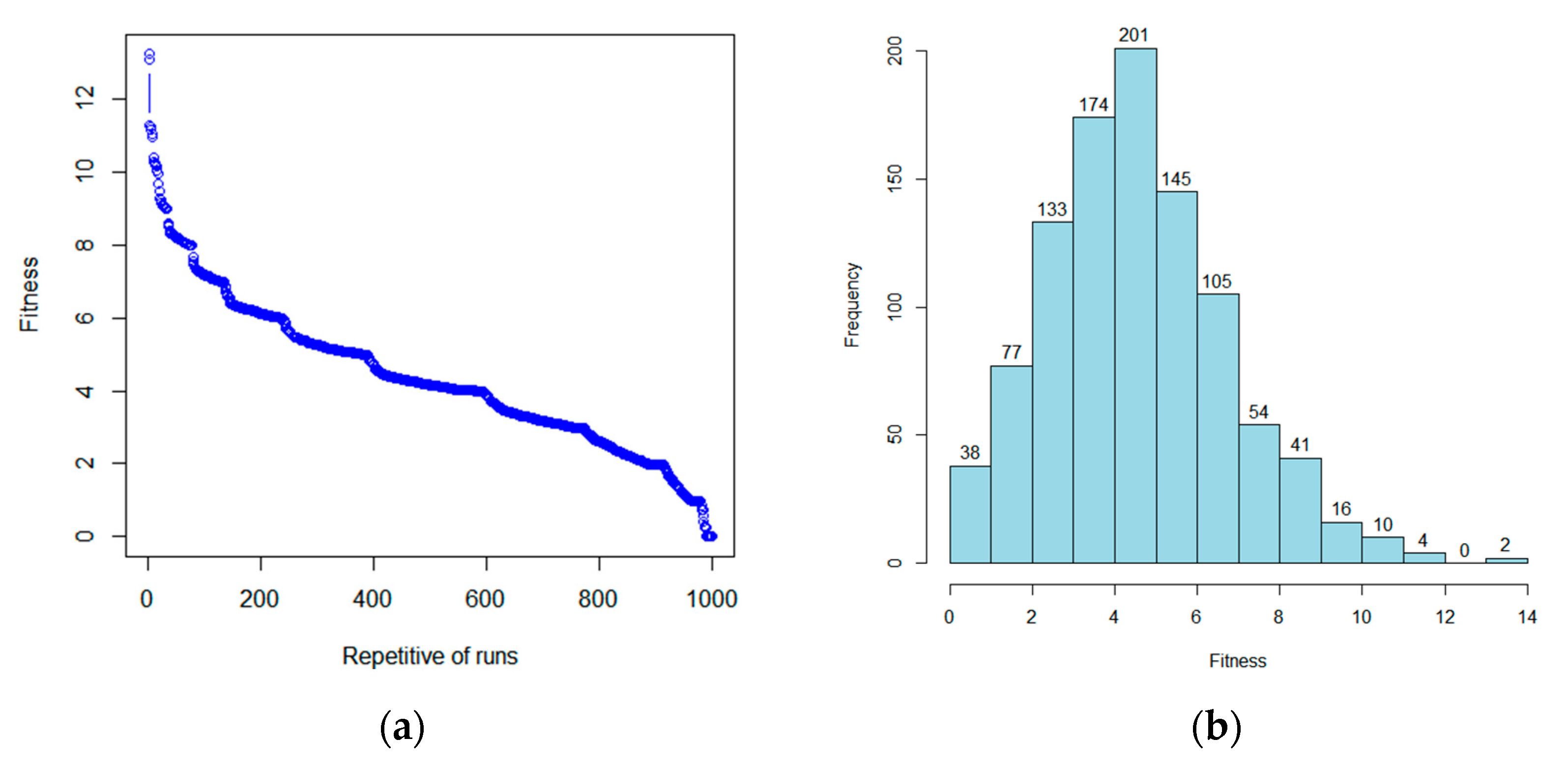

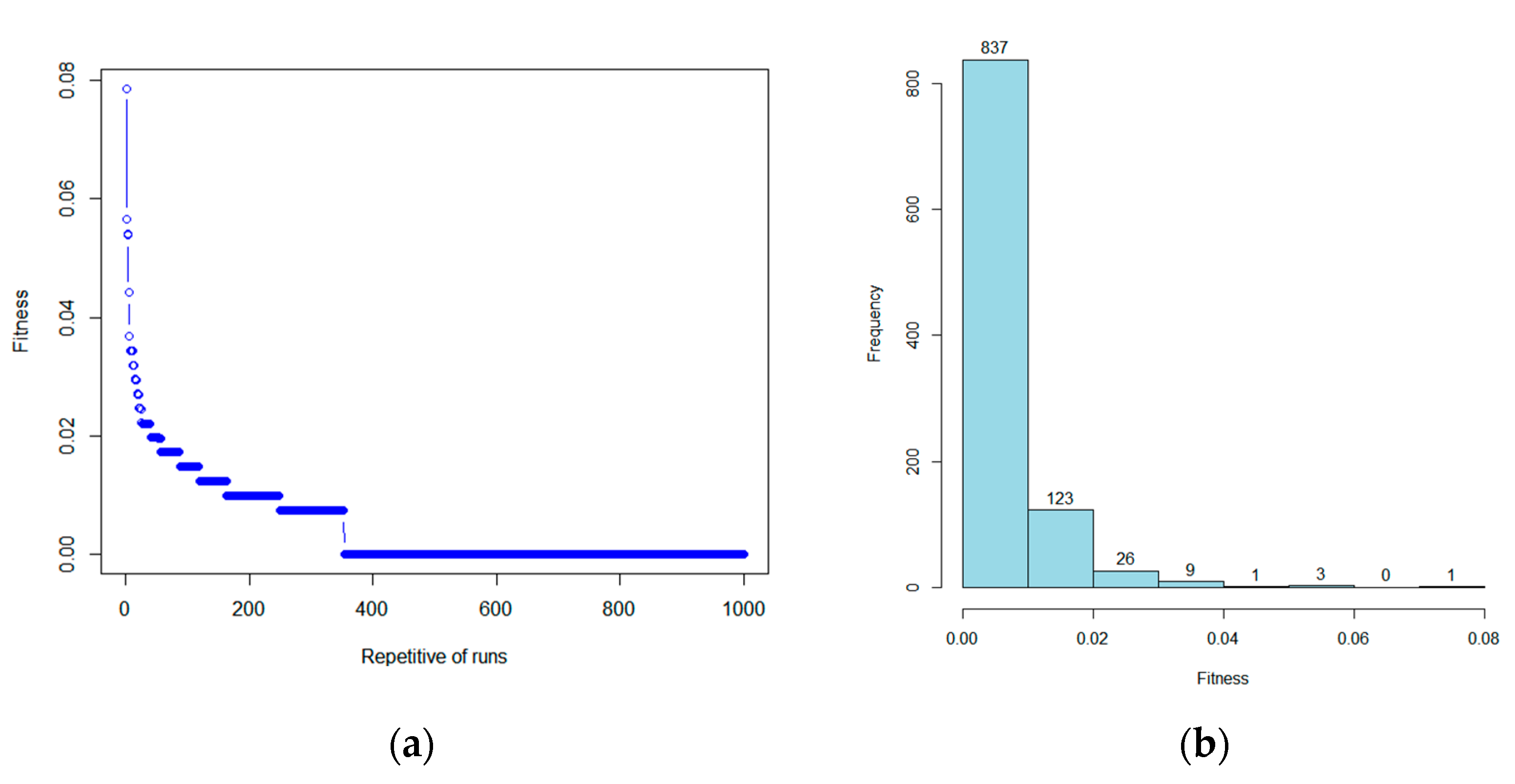

As the proposed CFA is a stochastic optimization algorithm, every execution of the program may produce a different solution. It is therefore important to measure the variation of the worst-case solution obtained by the CFA. We conduct the worst-case analysis on the three most challenging functions, namely Rosenbrock (30), Rastrigin (30), and Griewank (30), as follows. One thousand independent runs of the CFA program are performed, and we record the worst function value (fitness) that could be obtained when different numbers of repetitive runs are allowed in the experiment.

It is seen from Figure 2 that a Rosenbrock (30) fitness value of less than 5 can be obtained with 99.7% confidence, because only three out of the one thousand runs report a fitness value greater than 5. It also indicates that the worst fitness value we could obtain is no more than 5 if we can run the CFA program at least three times. In fact, the fitness distribution has two major components. One is close to 4 (a local optimum) and accounts for about 20% of the runs; the other is near zero (the global optimum) and accounts for about 79% of the runs. The worst-case analysis with Rastrigin (30) is illustrated in Figure 3. We observe a Gaussian-like distribution whose major component falls between the fitness values 0 and 12. The Gaussian-like distribution provides a reliable guarantee that the mean performance value can be obtained with high confidence. Figure 4 shows the worst-case analysis for Griewank (30). The distribution has a long tail, and the major component concentrates at the high-quality end. This is a favorable property: there is only one major outcome, which is near the global optimum, and a near-optimal solution can be obtained within a few runs even in the worst case.

Figure 2.

Worst-case analysis with the Rosenbrock (30) function. (a) The worst fitness obtained by the CFA program as the number of repetitive runs increases. (b) The number of program runs with which each performance level is reached.

Figure 3.

Worst-case analysis with the Rastrigin (30) function. (a) The worst fitness obtained by the CFA program as the number of repetitive runs increases. (b) The number of program runs with which each performance level is reached.

Figure 4.

Worst-case analysis with the Griewank (30) function. (a) The worst fitness obtained by the CFA program as the number of repetitive runs increases. (b) The number of program runs with which each performance level is reached.
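The two quantities read from these figures can be computed from the recorded final fitness values as sketched below. This is one plausible reading of the analysis rather than the authors' procedure: `confidence_below` is the empirical fraction of runs reaching a fitness level, and `worst_best_of_k` assumes that the k repetitive runs are distinct recorded runs and that the best of them is reported. Both function names are introduced here for illustration.

```python
def confidence_below(results, level):
    """Empirical fraction of runs whose final fitness does not exceed `level`."""
    return sum(r <= level for r in results) / len(results)

def worst_best_of_k(results, k):
    """Worst value that the best of k distinct recorded runs can take.

    In the worst case the k runs are the k poorest ones, so the best of
    them equals the k-th largest recorded value (minimization)."""
    return sorted(results, reverse=True)[k - 1]

# Synthetic final fitness values of ten runs (not data from the paper).
runs = [0.02, 3.9, 0.01, 0.03, 6.2, 0.02, 4.1, 0.01, 0.05, 0.02]
print(confidence_below(runs, 5.0))
print([worst_best_of_k(runs, k) for k in range(1, 5)])
```

Plotting `worst_best_of_k` against k gives a curve of the kind shown in panel (a), and a histogram of the recorded values corresponds to panel (b).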

4.3. Performance Evaluation

In this section, the performance of the CFA is evaluated in three ways. First, the CFA is compared against its counterparts, the GSO and the FA. Second, the CFA is compared to other kinds of metaheuristics. Third, the performance of the CFA is further validated on the CEC 2005 dataset. For the first two experiments, all compared algorithms were executed 30 times for each test function, and each run was allowed 160,000 FEs. For the third experiment, however, the evaluation criteria of the original paper are respected: each competing method is executed in 25 independent runs, and in each run the method is evaluated at different numbers of consumed FEs. To compare the performance of two competing algorithms, we employ the performance index defined by Yin et al. [6] as follows. Given two competing algorithms, p and q, the performance merit of p against q on a test function is defined by the formula,

$$\mathrm{Merit}(p, q) = \frac{f_p - f^* + \varepsilon}{f_q - f^* + \varepsilon}$$

where $\varepsilon$ is a small constant equal to $5 \times 10^{-7}$, $f_p$ and $f_q$ are the mean best function values obtained by the competing algorithms p and q, and $f^*$ is the global minimum of the test function. As all the test functions involve minimization, p outperforms q if Merit(p, q) < 1.0, p is inferior to q if Merit(p, q) > 1.0, and p and q perform equally well if Merit(p, q) = 1.0.
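The merit index and the merit product used in the following comparisons can be computed as in this short sketch. The function names and the numeric inputs are illustrative assumptions; only the formula and the value of ε come from the text.

```python
import math

EPSILON = 5e-7  # small constant from the merit definition

def merit(f_p, f_q, f_star, eps=EPSILON):
    """Merit of algorithm p against q on one minimization test function."""
    return (f_p - f_star + eps) / (f_q - f_star + eps)

def merit_product(merits):
    """Product of per-function merits; values below 1 favor p overall."""
    return math.prod(merits)

# Hypothetical mean best values of p and q on three functions with f* = 0.
pairs = [(1e-6, 1e-6), (2e-3, 4e-1), (5e-5, 3e-2)]
merits = [merit(fp, fq, 0.0) for fp, fq in pairs]
print(merits, merit_product(merits))
```

The constant ε keeps the ratio finite when q reaches the global minimum exactly, and it bounds the merit when both algorithms are already within roughly ε of the optimum.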

4.3.1. Comparison with CFA Counterparts

Our CFA enhances the GSO and the FA by incorporating the responsive strategies from the adaptive memory programming domain. Thus, it is important to validate our idea by comparing the performance of the CFA against its counterparts, the GSO and the FA. The results shown in the second to the fifth columns of Table 7 are the mean best function values obtained by the competing algorithms, and those in the last three columns are the corresponding relative merit values. We observe that for the test functions with fewer than ten variables, the CFA has a unit merit or a merit value within one order of magnitude below one, indicating that all the competing algorithms perform nearly equally well. For the test functions with ten or more variables, the merit value of the CFA in relation to the GSO and the FA becomes significantly smaller, implying a superior performance in favor of the CFA. The product of the merit values gives an overview of the comparative performance on all test functions. It is seen at the bottom of Table 7 that the CFA has a merit product of 7.32 × 10^−43 and 9.51 × 10^−32 in relation to the GSO and the FA, respectively. We further compare the CFA to the better of the best function values obtained by the GSO and the FA, giving a merit product of 8.99 × 10^−29 as shown in the last column of Table 7. The result suggests that the CFA significantly outperforms its counterparts over the benchmark dataset, and that the CFA also beats a hybrid which takes the better of the best function values obtained by the two counterparts.

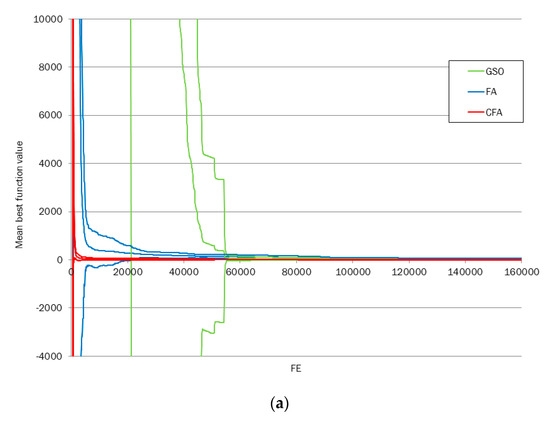

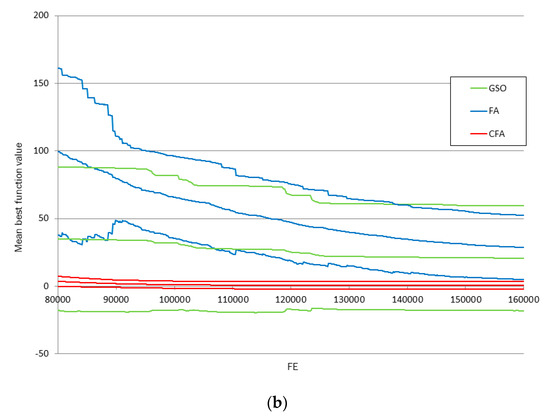

Table 7.

The performance comparison between the CFA and its counterparts.

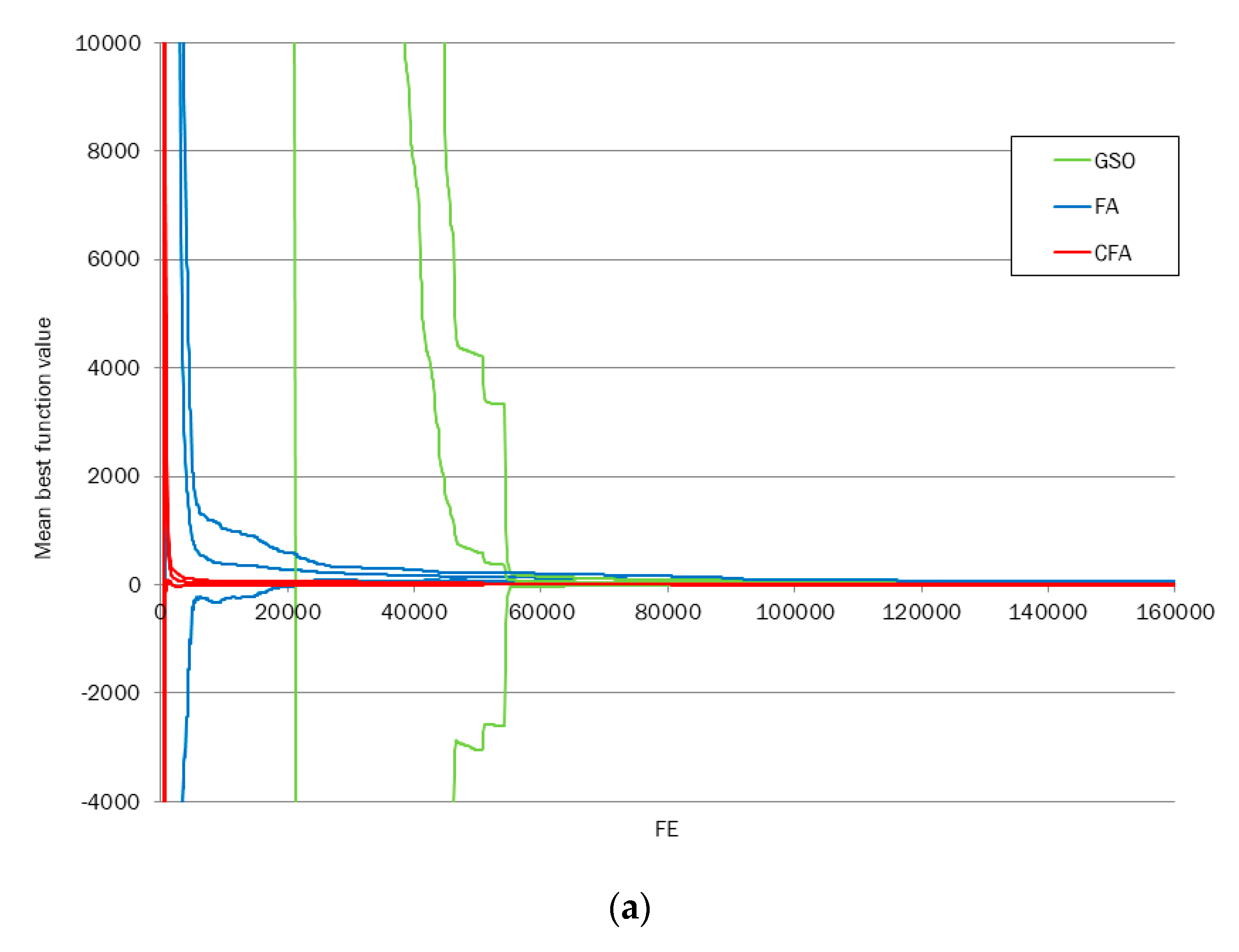

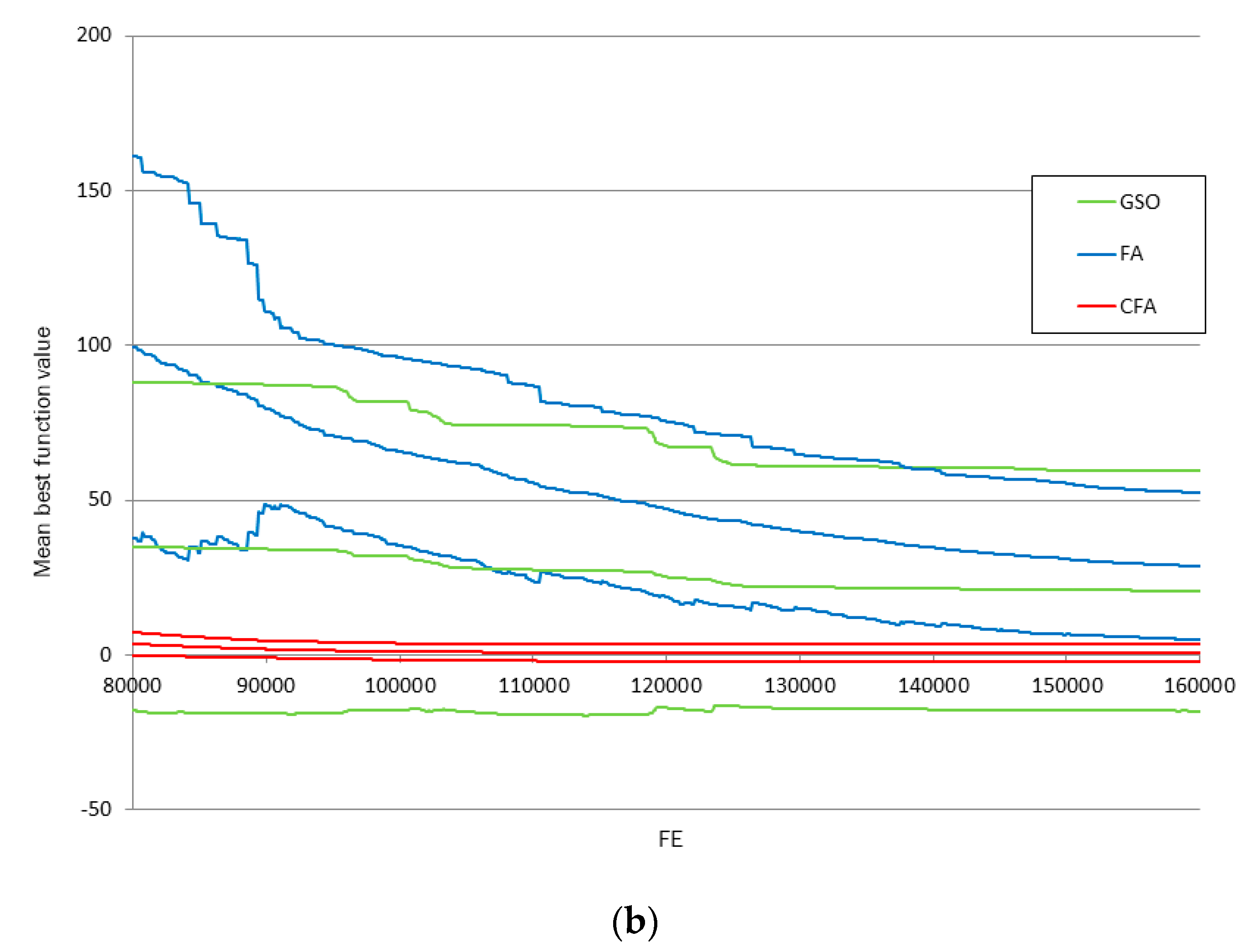

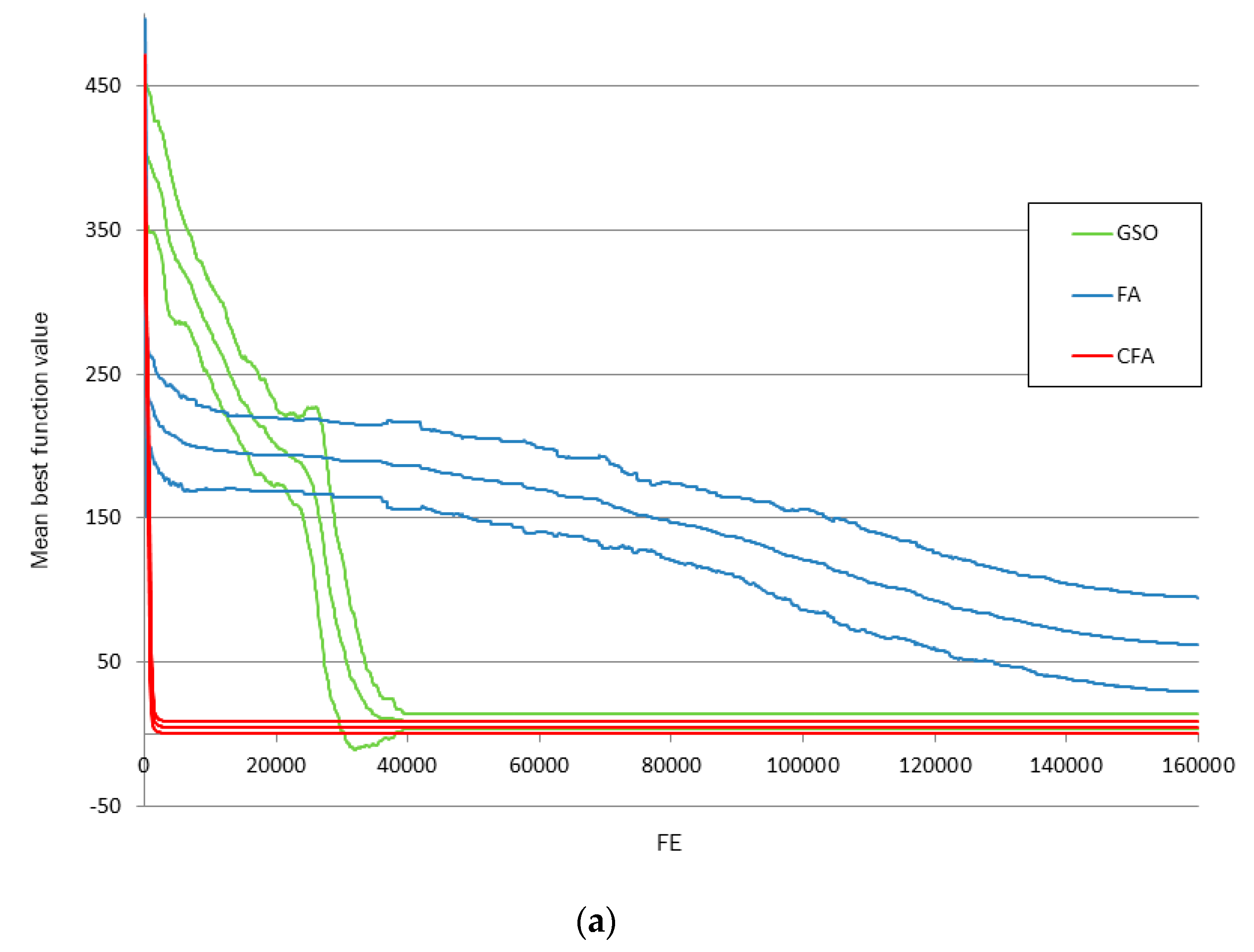

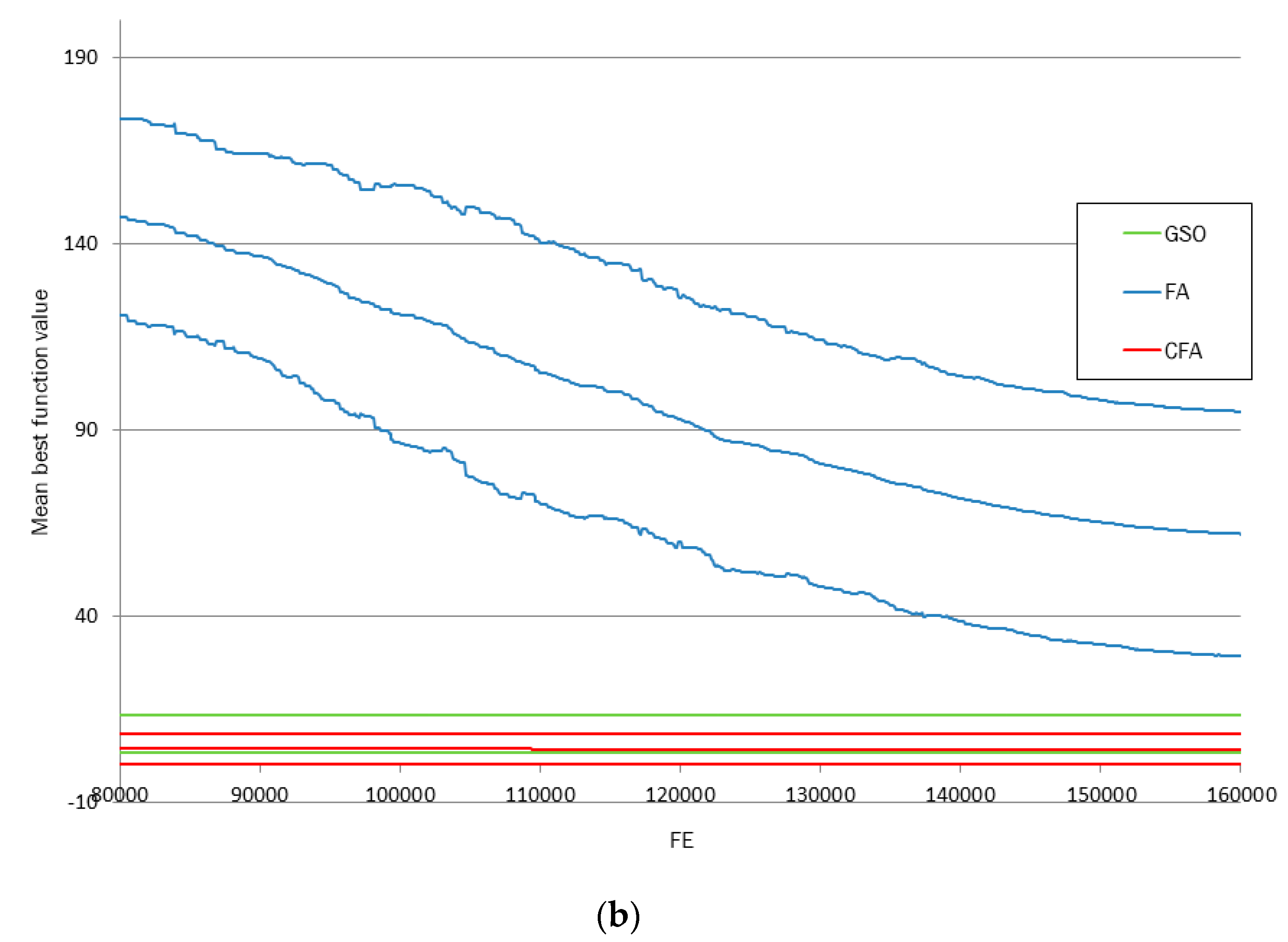

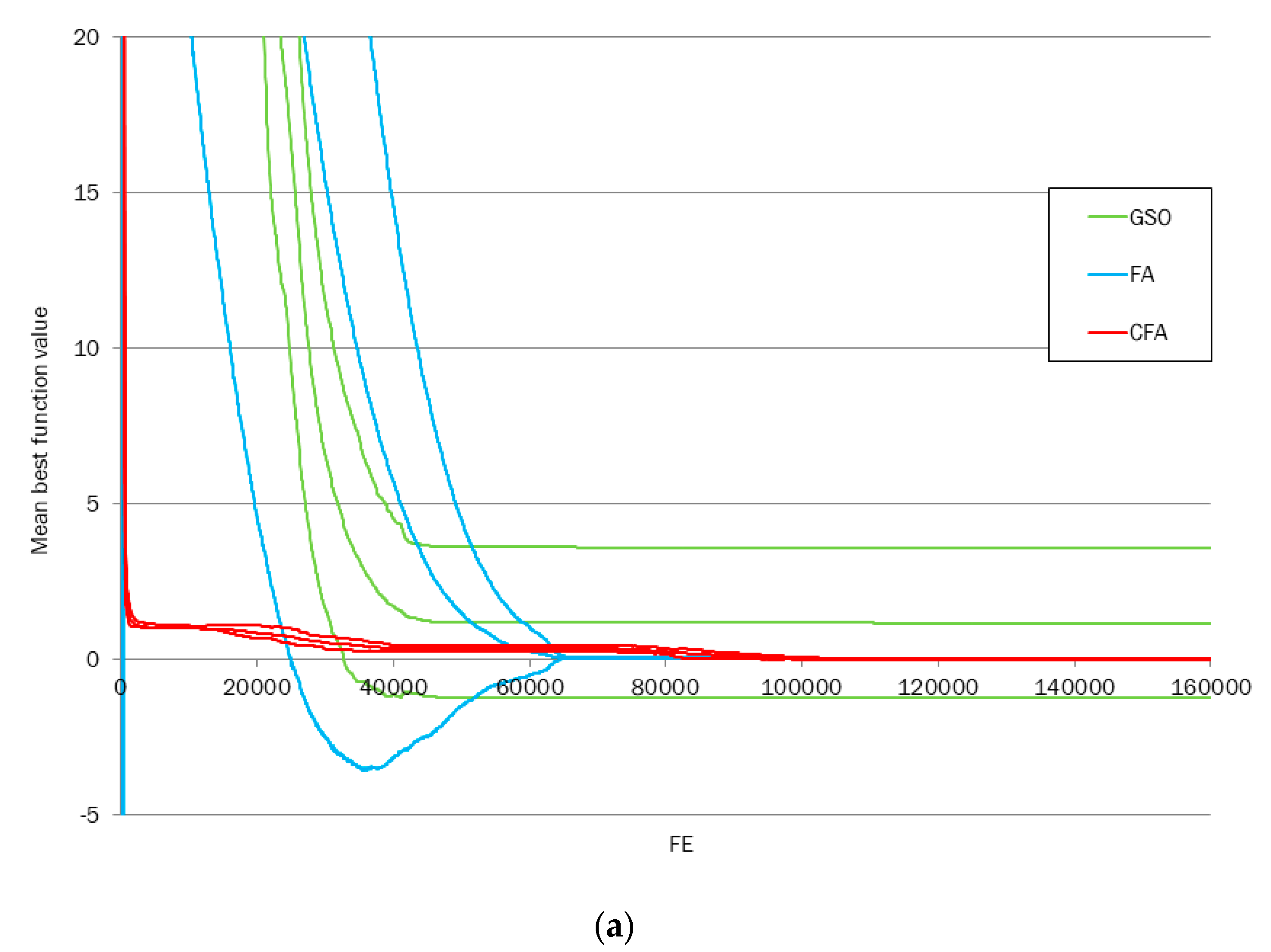

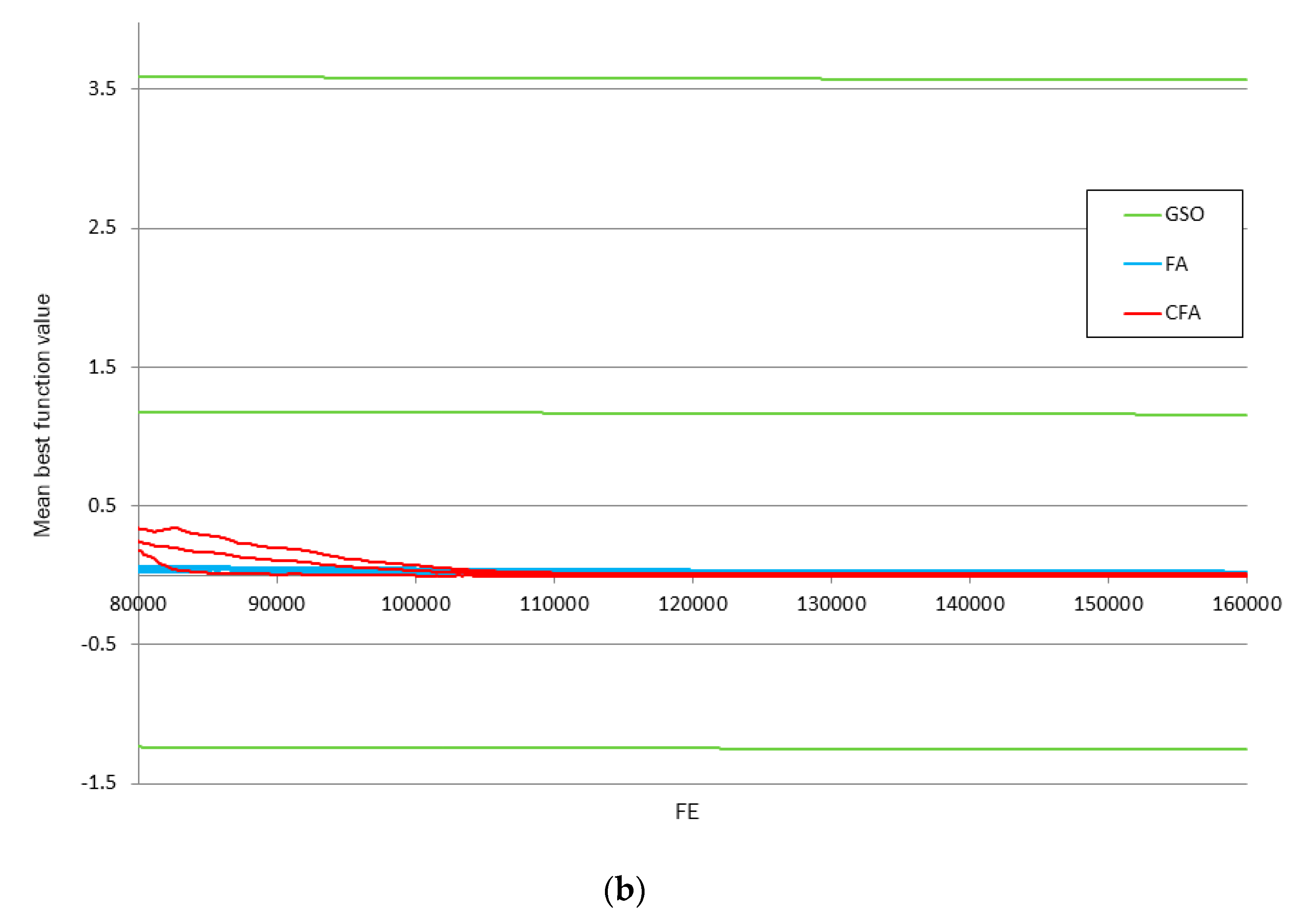

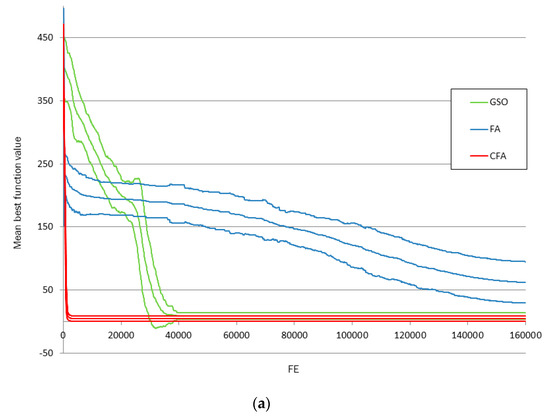

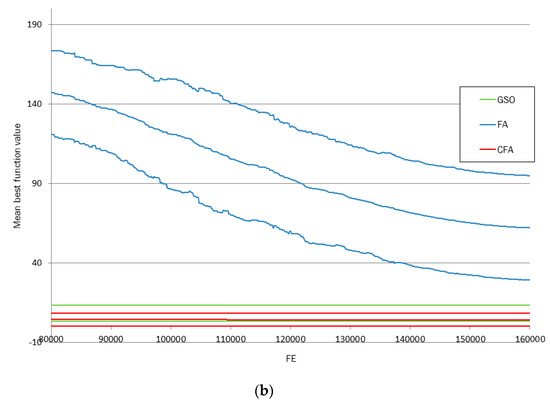

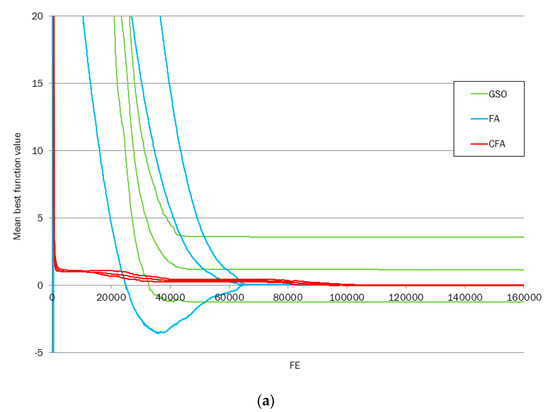

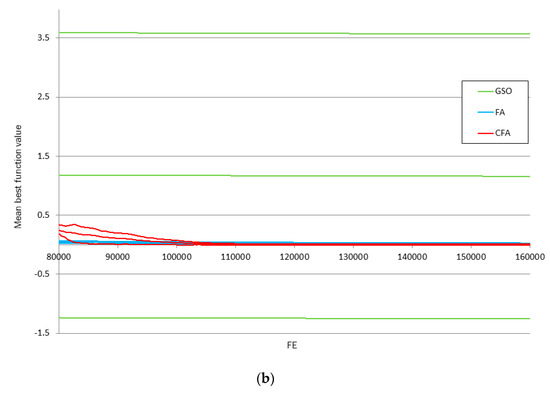

We further verify the online performance advantage of our CFA over its counterparts using a statistical test suggested by Taillard et al. [28]. Because the best objective value produced by a metaheuristic approach is non-deterministic, we model the result obtained from multiple runs of method A (and method B) as a random variable Xa (Xb), and we want to test the confidence with which Xa is less than Xb. A classic statistical test for comparing two proportions, based on the central limit theorem, approximates the mean of Xa − Xb by a normal distribution when the number of collected samples is sufficiently large. Therefore, 30 independent runs of each competing algorithm were conducted. For each run, the online best function value obtained at a particular FE, say e, is a sample of Xa(e), the random variable for the result obtained by method A at FE e. We tally the samples at every FE over the whole duration of the execution. By examining the mean curves of Xa(e) and Xb(e) as e increases, we can test whether method A significantly outperforms method B. For clear illustration, the boundary and mean curves over the 30 runs are plotted.

Figure 5a shows the online performance analysis with 95% confidence interval for the Rosenbrock (30) function. It is seen that the 95% confidence intervals of the best function value obtained by the three competing algorithms (GSO, FA, and CFA) converge at different speeds. Our CFA converges much faster and reaches a better function value than its two counterparts. The FA is the second-best performer, followed by the GSO. To investigate the detailed performance during the second half of the execution, we enlarge the plot for this period in Figure 5b. It can be seen that the CFA significantly outperforms the other two algorithms at the 95% confidence level during the second half of the execution. We also found that the GSO performs better than the FA after 80,000 FEs, although the GSO may not beat the FA at the early stage of the execution, as previously noted.

The online performance comparison at the 95% confidence level for the Rastrigin (30) function is shown in Figure 6. We observe that the CFA significantly outperforms the GSO and the FA during the whole execution period. The FA performs better than the GSO when fewer than 20,000 FEs are consumed, but the FA is far surpassed by the GSO if more FEs are allowed.

Figure 7 shows the online performance variation at the 95% confidence level for the Griewank (30) function. Again, the CFA is the best performer among the three algorithms throughout the whole execution. However, the GSO and the FA behave differently here than on the two previous test functions. The GSO and the FA perform about equally well before consuming 50,000 FEs, although the former is the more stable performer because it has a narrower confidence interval. After this critical period, however, the FA becomes very effective in both convergence speed and function value. As shown in Figure 7b, the FA significantly surpasses the GSO and reaches a performance comparable to that of the CFA.

Figure 5.

Online performance analysis with 95% confidence interval for the Rosenbrock (30) function. (a) The convergence of the best function value during the whole duration of the execution. (b) The convergence of the best function value during the second half duration of the execution.

Figure 6.

Online performance analysis with 95% confidence interval for the Rastrigin (30) function. (a) The convergence of the best function value during the whole duration of the execution. (b) The convergence of the best function value during the second half duration of the execution.

Figure 7.

Online performance analysis with 95% confidence interval for the Griewank (30) function. (a) The convergence of the best function value during the whole duration of the execution. (b) The convergence of the best function value during the second half duration of the execution.
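The mean and boundary curves in these figures can be obtained from the per-run convergence histories as sketched below. The sketch uses the normal approximation (z = 1.96) for the 95% confidence interval of the mean at each FE checkpoint; treating non-overlapping intervals as a significant difference is a conservative simplification assumed here, not necessarily the exact test of [28], and all function names are illustrative.

```python
import math
import statistics

def mean_and_ci(samples, z=1.96):
    """Mean and normal-approximation confidence bounds of the mean."""
    m = statistics.mean(samples)
    half = z * statistics.stdev(samples) / math.sqrt(len(samples))
    return m, m - half, m + half

def online_curves(runs):
    """runs[r][e] is the best-so-far value of run r at FE checkpoint e.
    Returns one (mean, lower, upper) triple per checkpoint."""
    return [mean_and_ci(column) for column in zip(*runs)]

def significantly_better(ci_a, ci_b):
    """True when the interval of A lies entirely below that of B (minimization)."""
    return ci_a[2] < ci_b[1]
```

Applying `online_curves` to the 30 histories of each algorithm and comparing the triples checkpoint by checkpoint with `significantly_better` reproduces the kind of statement made above, for example that one algorithm is ahead throughout the second half of the execution.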

4.3.2. Comparison against Other Metaheuristics

We now compare the CFA against other metaheuristics inspired by different nature metaphors: the PSO, the GA, and the cyber swarm algorithm (CSA). The compared PSO is the constriction factor version proposed by Clerc and Kennedy [29], which has been shown to be one of the best PSO implementations. The implemented GA employs real-value chromosome coding, tournament selection (with k = 2, i.e., two competitors in each instance of selection), arithmetic crossover, and Gaussian mutation. The GA is generational without population gap, i.e., the whole parent population is replaced by the offspring population. The implementation and parameter setting of the CSA follow the original paper [6]. All the compared algorithms have the same population size of 60 individuals and are executed until 160,000 FEs have been consumed. Table 8 tabulates the mean best function value obtained by the compared algorithms over 30 runs and the merit values among the competitors.

For the comparison of the CFA against the PSO and the GA, we observe that the CFA clearly surpasses the other two algorithms on most of the benchmark functions. The merit product in relation to the PSO and the GA is 8.80 × 10^−21 and 2.11 × 10^−36, respectively. When we compare the CFA to the CSA, the merit product is 1.94 × 10^18. The result seems to suggest that the CSA performs better on the dataset. However, a closer look shows that the CSA is very effective in solving small functions with fewer than ten variables, and thus the merit values for these functions are significantly greater than one. For the test functions with ten or more variables, the merit value turns in favor of the CFA, revealing that the CFA is more effective than the CSA in tackling larger-sized functions. It is worth noting that both the CFA and the CSA take advantage of features from the AMP domain, and the two algorithms substantially outperform the other compared algorithms in our experiments. This phenomenon reveals the potential of future research in the direction of marrying the AMP with other types of metaheuristics.

Table 8.

The performance comparison between the CFA and other metaheuristics.
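For readers unfamiliar with the baseline GA configuration named above, one generation of such a GA can be sketched as follows. The operators match the description (binary tournament, arithmetic crossover, Gaussian mutation, full generational replacement), but the mutation rate, the mutation standard deviation, and all function names are assumptions, since the text does not report them.

```python
import random

def tournament(pop, fit, rng, k=2):
    """Pick k random competitors and return the one with the lowest objective."""
    contenders = rng.sample(range(len(pop)), k)
    return pop[min(contenders, key=fit.__getitem__)]

def arithmetic_crossover(a, b, rng):
    """Two children formed as complementary convex combinations of the parents."""
    w = rng.random()
    child1 = [w * x + (1 - w) * y for x, y in zip(a, b)]
    child2 = [(1 - w) * x + w * y for x, y in zip(a, b)]
    return child1, child2

def gaussian_mutation(x, rng, rate=0.1, sigma=0.1):
    """Perturb each gene with probability `rate` (hypothetical settings)."""
    return [v + rng.gauss(0.0, sigma) if rng.random() < rate else v for v in x]

def next_generation(pop, objective, rng):
    """Generational GA step: the offspring replace the whole parent population."""
    fit = [objective(x) for x in pop]       # one FE per individual
    offspring = []
    while len(offspring) < len(pop):
        p1, p2 = tournament(pop, fit, rng), tournament(pop, fit, rng)
        for child in arithmetic_crossover(p1, p2, rng):
            offspring.append(gaussian_mutation(child, rng))
    return offspring[:len(pop)]
```

With a population of 60 individuals, each call consumes 60 FEs, so a budget of 160,000 FEs corresponds to roughly 2666 generations under this sketch.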

To compare the CFA with the state-of-the-art variants of the FA, we quote the results (mean objective value over 30 runs) from the original papers for LFA [9], VESSFA [10], WFA [11], CLFA [12], FAtidal [13], and GDAFA [14]. The best mean objective value obtained for each function among all compared methods is printed in bold. As can be seen in Table 9, our CFA most often obtains the best mean objective value. GDAFA seems to perform better as the dimensionality increases. Both the CFA and GDAFA attain objective values very close to the optimum, while the other competing methods may produce objective values far from the optimum on some challenging functions.

Table 9.

The performance comparison between the CFA and the state-of-the-art variants of FA.

4.3.3. Comparison on the CEC 2005 Dataset

To further validate the performance of the CFA, we compare it with the methods investigated in [26] on 12 CEC 2005 benchmark functions [30]. The IEEE CEC Repository [27] provides a rich collection of benchmark datasets for optimization problems of various kinds, such as unconstrained, constrained, and multi-objective optimization. The CEC 2005 dataset is designed for unconstrained real-parameter optimization, which is the problem addressed in this paper. We selected 12 CEC 2005 functions which are very challenging and have never been solved to optimality by any known method [26]. We executed all compared algorithms with the same evaluation criteria and parameter settings as described in the original paper [26]. Each algorithm is executed for 25 independent runs on each test function with n = 10 and n = 30, respectively. All compared methods are evaluated after consuming 1000, 10,000, and 100,000 FEs.

We adopt the GAP performance measure proposed in the original paper [26], defined as GAP = |f − f*|, where f is the function value obtained by an evaluated method and f* is the global optimum value of the test function. Table 10 shows the mean minimum (Min.) and average (Avg.) of GAP and Merit of all compared methods over the 12 functions for n = 10. We observe that our CFA is less exploitative on small-size CEC problems than the leading methods such as G-CMA-ES and L-CMA-ES, both of which are based on the covariance matrix adaptation evolution strategy (CMA-ES) [31], which updates the covariance of the multivariate distribution to better handle the dependency between variables. Although the CFA is less competitive in the mean Avg. GAP, it can deliver a high-quality Min. value out of 25 independent runs. This suggests that, when tackling small yet complex problems, the CFA can be executed multiple times and the best value from those runs reported. This phenomenon is also revealed in the geometric mean of the merits (GMM). The GMM of the Min. function value is 1.099, 0.995, and 0.878 at 1000, 10,000, and 100,000 FEs, respectively, whereas the GMM of the Avg. function value gradually deteriorates from 0.963 to 1.167 and then to 1.211 at the same FEs.

Table 10.

Min./Avg. GAP and Merit over the CEC dataset with n = 10.
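The GAP measure and the geometric mean of the merits (GMM) can be computed as in the following sketch. It assumes that the merit on the CEC functions is formed from the GAP values with the same ε as in Section 4.3; the function names and the sample numbers are illustrative and are not taken from Table 10.

```python
import math

def gap(f, f_star):
    """Absolute distance between the obtained value and the global optimum."""
    return abs(f - f_star)

def gap_merit(f_p, f_q, f_star, eps=5e-7):
    """Merit of method p against q expressed through their GAP values
    (assumed form, mirroring the merit definition of Section 4.3)."""
    return (gap(f_p, f_star) + eps) / (gap(f_q, f_star) + eps)

def geometric_mean(values):
    """Geometric mean computed in log space to avoid overflow or underflow."""
    return math.exp(sum(math.log(v) for v in values) / len(values))

# Hypothetical merits of one method against another on four functions.
merits = [0.8, 1.3, 0.6, 1.1]
print(geometric_mean(merits))
```

Unlike the merit product, the geometric mean does not shrink or grow with the number of test functions, so a GMM below 1 can be read directly as an average advantage per function.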

As for the high-dimensional and complex CEC problems with n = 30, the mean Min. and Avg. of GAP and Merit of all compared methods are tabulated in Table 11. It is seen from the GMM that our CFA compares favorably with the other methods in both the Min. and the Avg. function value at all FE checkpoints. The effective exploratory search conducted by the CFA is attributable to its AMP-responsive strategies, which are more effective when the problem is more complex and is posed in a higher-dimensional space. The G-CMA-ES is again the best method, since it excels in terms of GAP in most cases compared to the other competing methods. It is worth studying further the possibility of including the CMA technique in the CFA to resolve the dependency between variables.

Table 11.

Min./Avg. GAP and Merit over the CEC dataset with n = 30.

5. Concluding Remarks and Future Research

We have proposed the CFA, which is a more effective form of the GSO and the FA for global optimization. The CFA incorporates several AMP strategies, including multiple guiding solutions, pattern search as a local improvement method, solution set rebuilding in a multi-start search template, and the responsive strategies. The experimental results on benchmark functions for global optimization have shown that the CFA performs significantly better in terms of both solution quality and robustness than the GSO, the FA, and several state-of-the-art metaheuristic methods, as demonstrated in our statistical analyses and comprehensive experiments. It is worth noting that a sophisticated method such as the CFA, which incorporates advanced components, certainly incurs a higher computational cost per iteration than an algorithm that does not. However, because metaheuristic approaches can stop at any iteration and output the best-so-far result, we conduct a fair performance comparison between two metaheuristic approaches at the same number of FEs rather than at the same number of evolution iterations. All our experiments follow this convention.

Our findings strengthen the motivation for marrying approaches selected from each side of the metaheuristic dichotomy. The CFA template gives general ideas for creating effective hybrid metaheuristics of this sort. Inspired by the promising results of the CFA, it is worth investigating in future research the application of the CFA template to other metaheuristic approaches in various problem domains.

Author Contributions

Conceptualization, P.-Y.Y.; methodology, P.-Y.Y. and P.-Y.C.; software, P.-Y.C.; validation, Y.-C.W. and R.-F.D.; writing—original draft preparation, P.-Y.Y.; writing—review and editing, P.-Y.Y., Y.-C.W. and R.-F.D.; visualization, P.-Y.C.; funding acquisition, P.-Y.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by MOST Taiwan, grant number 107-2410-H-260-015-MY3. The APC was funded by 107-2410-H-260-015-MY3.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

Nomenclature

The list of the acronyms referenced in this paper is tabulated as follows.

| Acronym | Definition |
| --- | --- |
| EA | evolutionary algorithm |
| GSO | glowworm swarm optimization |
| FA | firefly algorithm |
| AMP | adaptive memory programming |
| CSA | cyber swarm algorithm |
| CFA | Cyber Firefly Algorithm |
| GRASP | greedy randomized adaptive search procedures |
| GA | genetic algorithm |
| PSO | particle swarm optimization |
| VNS | variable neighborhood search |
| SS | scatter search |
| SS/PR | path relinking |
| FDC | fitness distance correlation |
| FE | function evaluation |
| CMA-ES | covariance matrix adaptation evolution strategy |
| GMM | geometric mean of the merits |

References

1. Yin, P.Y. Towards more effective metaheuristic computing. In Modeling, Analysis, and Applications in Metaheuristic Computing: Advancements and Trends; IGI-Global Publishing: Hershey, PA, USA, 2012.

2. Talbi, E.G.; Bachelet, V. COSEARCH: A parallel cooperative metaheuristic. J. Math. Model. Algorithms 2006, 5, 5–22.

3. Shen, Q.; Shi, W.M.; Kong, W. Hybrid particle swarm optimization and tabu search approach for selecting genes for tumor classification using gene expression data. Comput. Biol. Chem. 2008, 32, 52–59.

4. Marinakis, Y.; Marinaki, M.; Doumpos, M.; Matsatsinis, N.F.; Zopounidis, C. A hybrid ACO-GRASP algorithm for clustering analysis. Ann. Oper. Res. 2011, 188, 343–358.

5. Fuksz, L.; Pop, P.C. A hybrid genetic algorithm with variable neighborhood search approach to the number partitioning problem. Lect. Notes Comput. Sci. 2013, 8073, 649–658.

6. Yin, P.Y.; Glover, F.; Laguna, M.; Zhu, J.S. Cyber swarm algorithms: Improving particle swarm optimization using adaptive memory strategies. Eur. J. Oper. Res. 2010, 201, 377–389.

7. Krishnanand, K.N.; Ghose, D. Detection of multiple source locations using a glowworm metaphor with applications to collective robotics. In Proceedings of the IEEE Swarm Intelligence Symposium, Pasadena, CA, USA, 8–10 June 2005; pp. 84–91.

8. Yang, X.S. Firefly algorithm. Nat. Inspired Metaheuristic Algorithms 2008, 20, 79–90.

9. Yang, X.S. Firefly algorithm, Lévy flights and global optimization. In Research and Development in Intelligent Systems XXVI; Springer: London, UK, 2010; pp. 209–218.

10. Yu, S.; Zhu, S.; Ma, Y.; Mao, D. A variable step size firefly algorithm for numerical optimization. Appl. Math. Comput. 2015, 263, 214–220.

11. Zhu, Q.G.; Xiao, Y.K.; Chen, W.D.; Ni, C.X.; Chen, Y. Research on the improved mobile robot localization approach based on firefly algorithm. Chin. J. Sci. Instrum. 2016, 37, 323–329.

12. Kaveh, A.; Javadi, S.M. Chaos-based firefly algorithms for optimization of cyclically large-size braced steel domes with multiple frequency constraints. Comput. Struct. 2019, 214, 28–39.

13. Yelghi, A.; Köse, C. A modified firefly algorithm for global minimum optimization. Appl. Soft Comput. 2018, 62, 29–44.

14. Liu, J.; Mao, Y.; Liu, X.; Li, Y. A dynamic adaptive firefly algorithm with globally orientation. Math. Comput. Simul. 2020, 174, 76–101.

15. Wang, J.; Song, F.; Yin, A.; Chen, H. Firefly algorithm based on dynamic step change strategy. In Machine Learning for Cyber Security; Chen, X., Yan, H., Yan, Q., Zhang, X., Eds.; Lecture Notes in Computer Science 12487; Springer: Cham, Switzerland, 2020.

16. Glover, F. Tabu search and adaptive memory programming - Advances, applications and challenges. In Interfaces in Computer Science and Operations Research; Kluwer Academic Publishers: London, UK, 1996; pp. 1–75.

17. Glover, F. A template for scatter search and path relinking. Lect. Notes Comput. Sci. 1998, 1363, 13–54.

18. Laguna, M.; Marti, R. Scatter Search: Methodology and Implementation in C; Kluwer Academic Publishers: London, UK, 2003.

19. Chen, X.S.; Ong, Y.S.; Lim, M.H.; Tan, K.C. A Multi-Facet Survey on Memetic Computation. IEEE Trans. Evol. Comput. 2011, 15, 591–607.

20. Feo, T.A.; Resende, M.G.C. Greedy randomized adaptive search procedures. J. Glob. Optim. 1995, 6, 109–133.

21. Hooke, R.; Jeeves, T.A. Direct search solution of numerical and statistical problems. J. Assoc. Comput. Mach. 1961, 8, 212–229.

22. Dolan, E.D.; Lewis, R.M.; Torczon, V.J. On the local convergence of pattern search. SIAM J. Optim. 2003, 14, 567–583.

23. Jones, T.; Forrest, S. Fitness distance correlation as a measure of problem difficulty for genetic algorithms. In Proceedings of the International Conference on Genetic Algorithms, Morgan Kaufmann, Santa Fe, NM, USA, 15–19 July 1995; pp. 184–192.

24. Hedar, A.R.; Fukushima, M. Tabu search directed by direct search methods for nonlinear global optimization. Eur. J. Oper. Res. 2006, 170, 329–349.

25. Hirsch, M.J.; Meneses, C.N.; Pardalos, P.M.; Resende, M.G.C. Global optimization by continuous GRASP. Optim. Lett. 2007, 1, 201–212.

26. Duarte, A.; Marti, R.; Glover, F.; Gortazar, F. Hybrid scatter-tabu search for unconstrained global optimization. Ann. Oper. Res. 2011, 183, 95–123.

27. Al-Roomi, A.R. IEEE Congresses on Evolutionary Computation Repository; Dalhousie University, Electrical and Computer Engineering: Halifax, NS, Canada, 2015; Available online: https://www.al-roomi.org/benchmarks/cec-database (accessed on 15 November 2020).

28. Taillard, E.D.; Waelti, P.; Zuber, J. Few statistical tests for proportions comparison. Eur. J. Oper. Res. 2008, 185, 1336–1350.

29. Clerc, M.; Kennedy, J. The particle swarm explosion, stability, and convergence in a multidimensional complex space. IEEE Trans. Evol. Comput. 2002, 6, 58–73.

30. Suganthan, P.N.; Hansen, N.; Liang, J.J.; Deb, K.; Chen, Y.P.; Auger, A.; Tiwari, S. Problem Definitions and Evaluation Criteria for the CEC 2005 Special Session on Real-Parameter Optimization; Technical Report; Nanyang Technological University: Singapore, 2005.

31. Hansen, N. The CMA evolution strategy: A comparing review. In Towards a New Evolutionary Computation. Advances on Estimation of Distribution Algorithms; Springer: Berlin/Heidelberg, Germany, 2006; pp. 1769–1776.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).