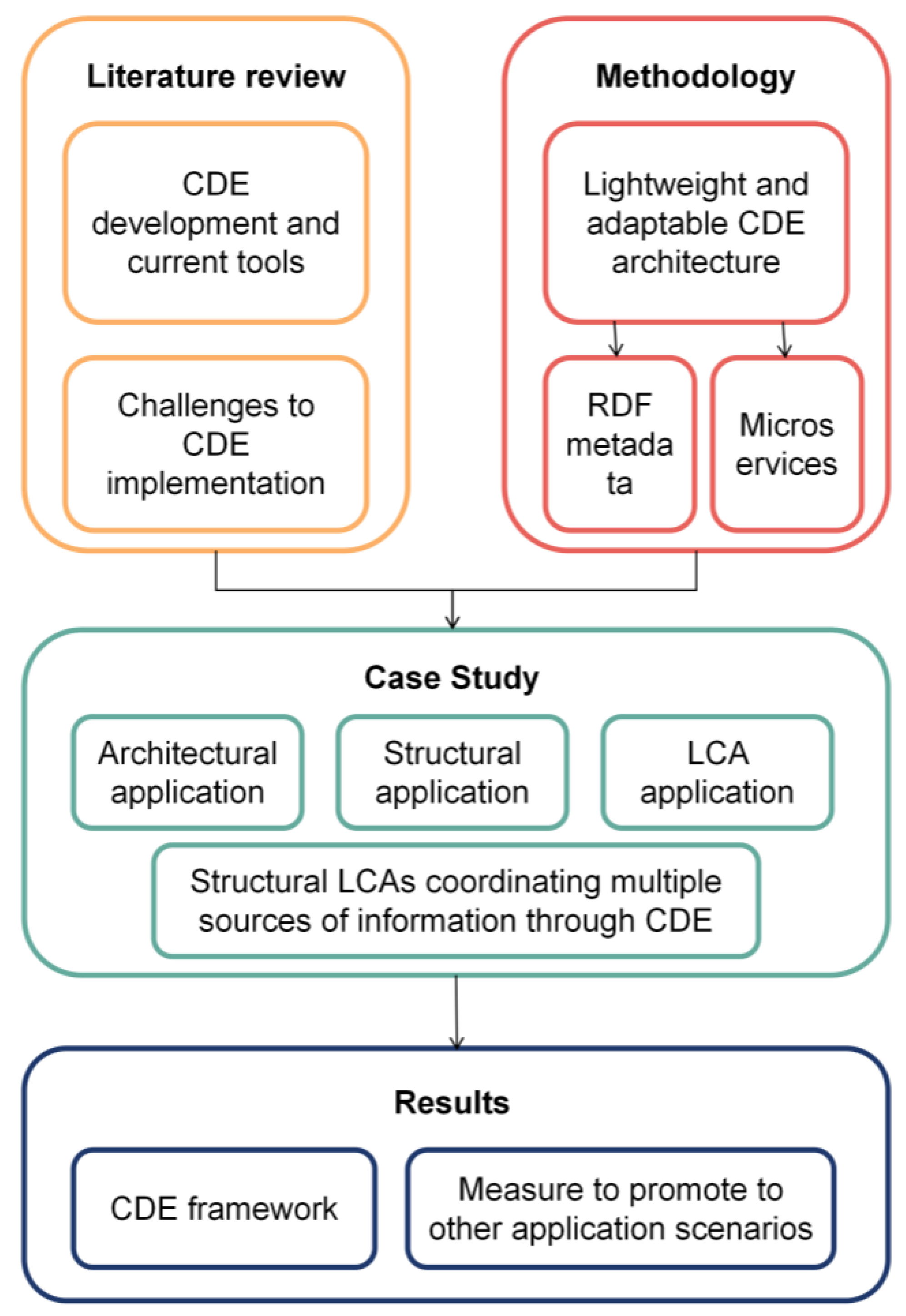

A Common Data Environment Framework Applied to Structural Life Cycle Assessment: Coordinating Multiple Sources of Information

Abstract

1. Introduction

2. Literature Review

2.1. RDF in CDE

2.2. Microservices in CDE

2.3. Challenges for LCA Implementations

2.4. State-of-the-Art CDE Development

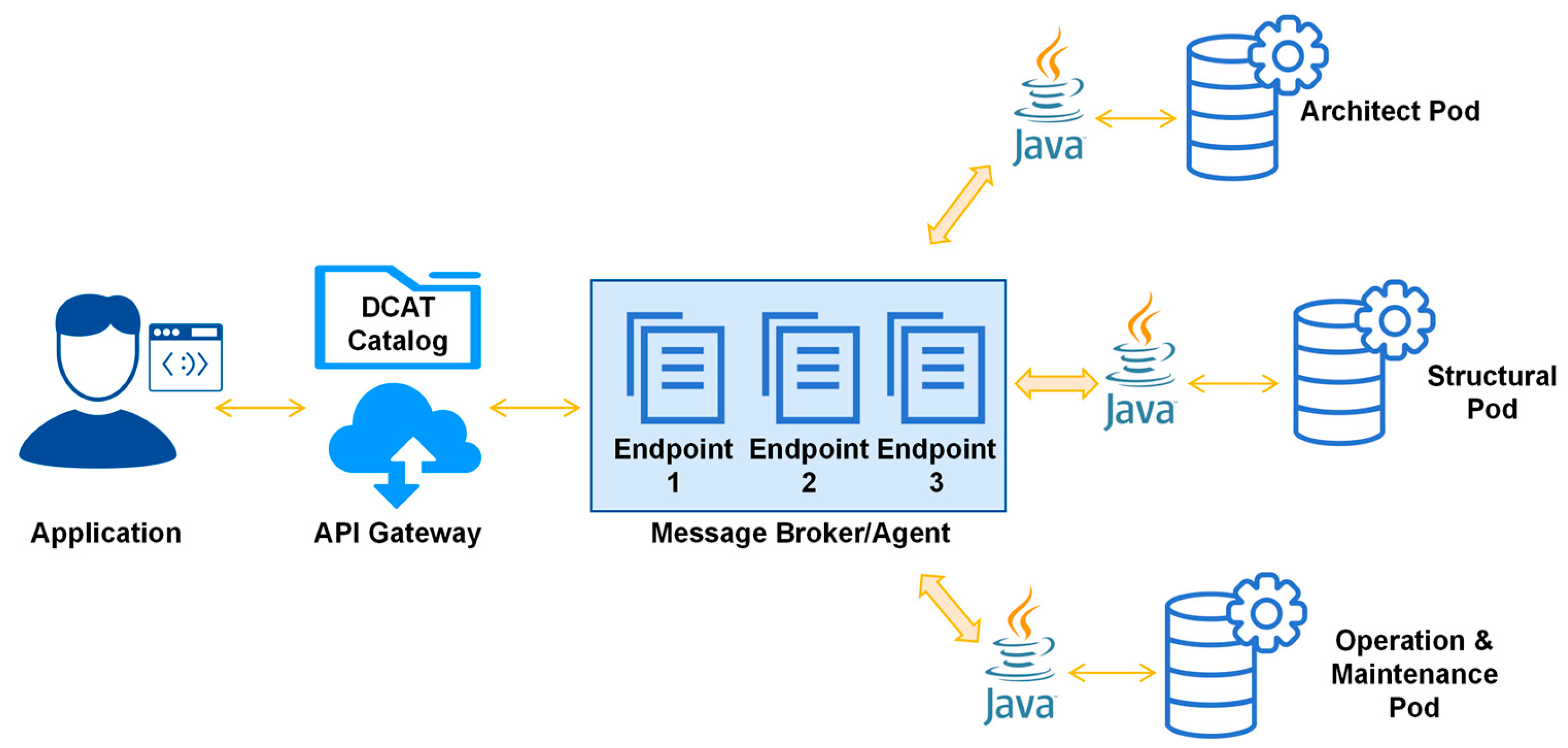

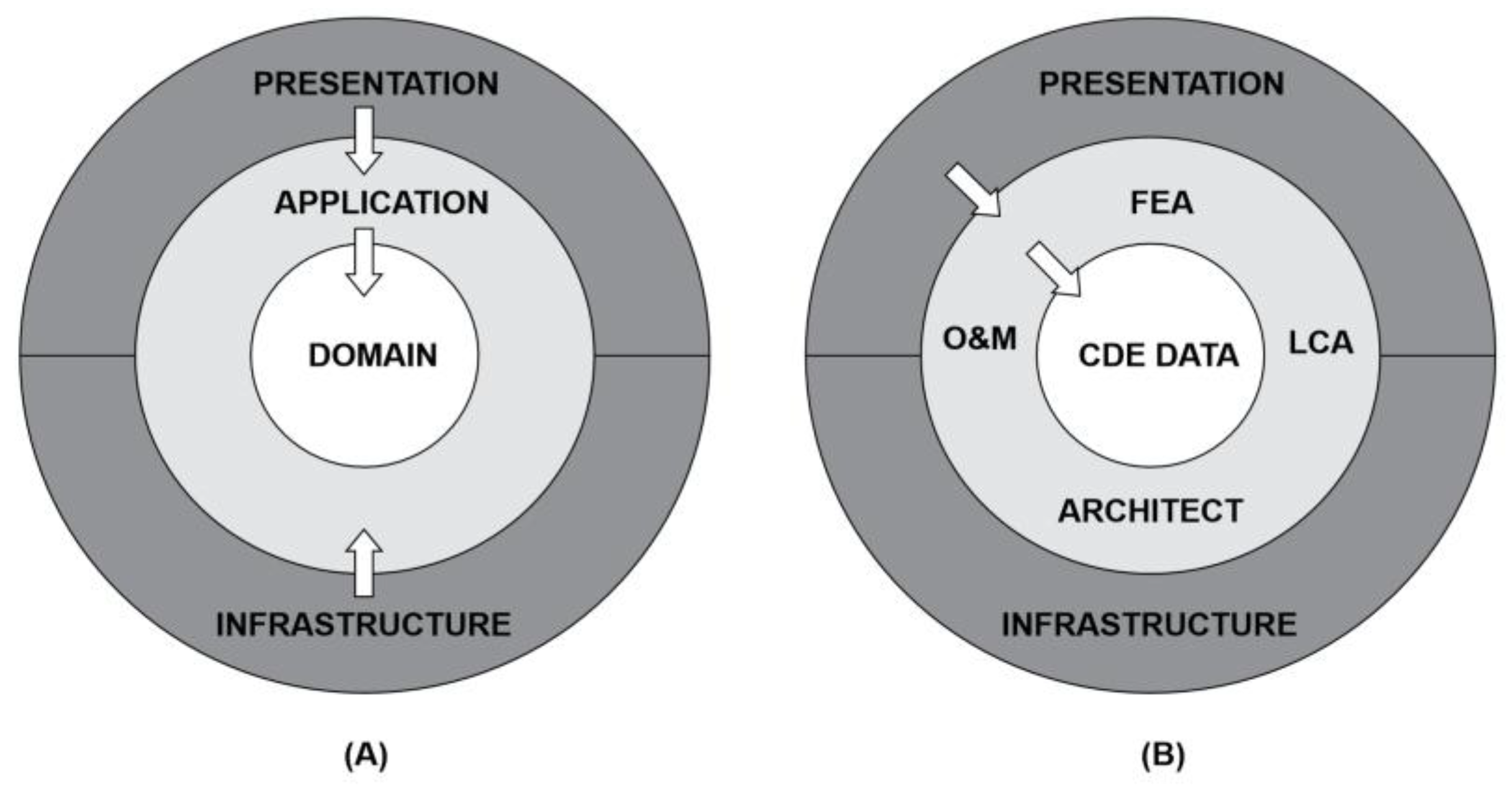

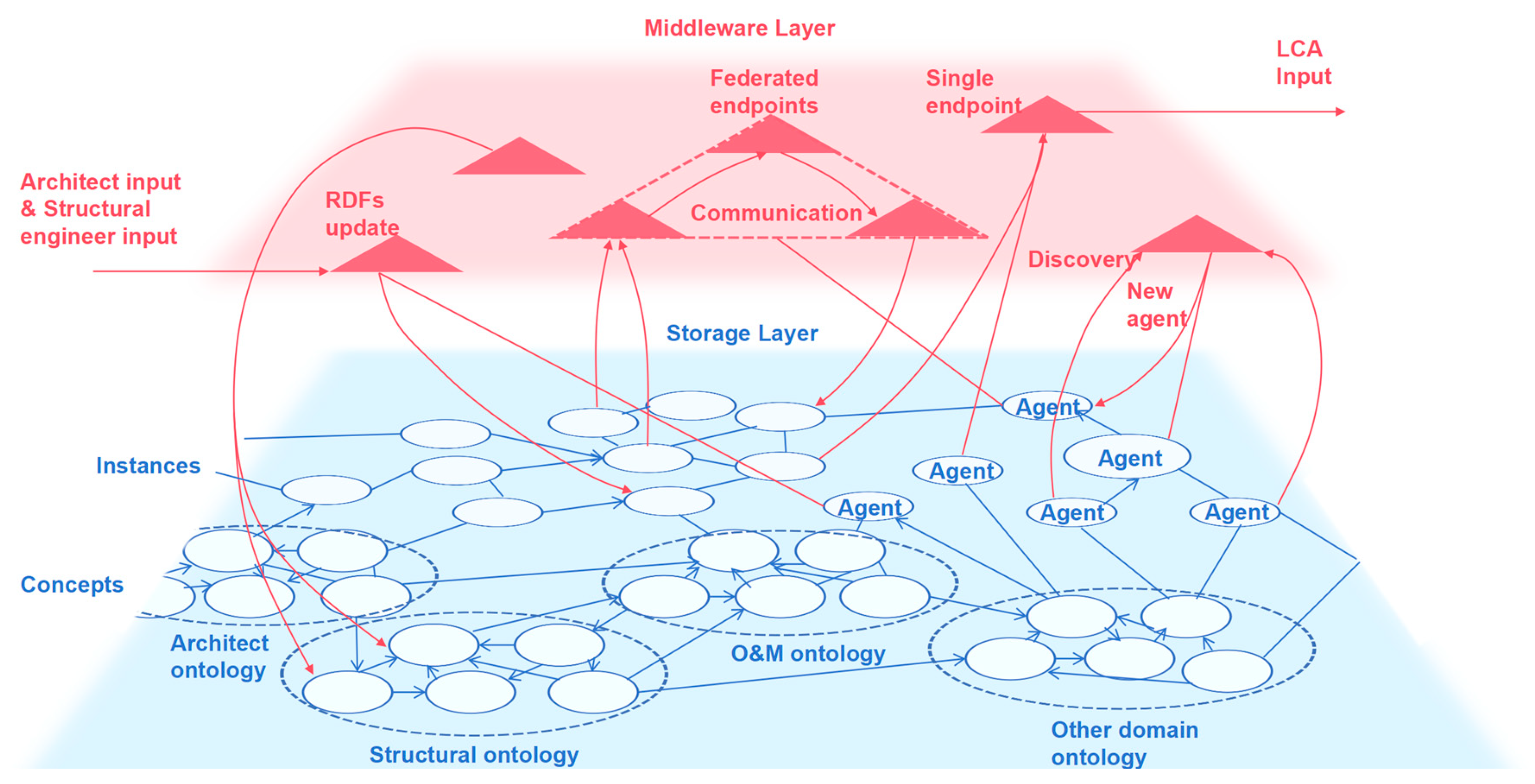

3. Proposed Framework

- Application Layer. In this layer, native applications or standalone software (e.g., the finite element analysis solver and the LCA tool) are local clients of a decentralized, hosted data storage system.

- Middleware Layer. The middleware layer includes two types of microservices, aggregators and adapters, which communicate with the decentrally stored data and obtain the inputs requested by local applications.

- Storage Layer. This layer stores the pertinent datasets of the building LCA project. The datasets are decentralized across disparate repositories, yet they adhere to the RDF open data format, which permits their discovery and filtering via SPARQL queries.
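As a rough illustration of how the middleware layer might mediate between local applications and decentralized repositories, the following Python sketch pairs each repository with an adapter and lets an aggregator merge the results. All names (`DatasetRecord`, `Aggregator`, the two adapter functions, the raw field names, and the example.org locations) are hypothetical, and the in-memory lists merely stand in for remote repositories; this is not the framework's actual implementation.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class DatasetRecord:
    """Repository-neutral view of a dataset, as a local application would consume it."""
    title: str
    media_type: str
    location: str


RawRecord = Dict[str, str]
Adapter = Callable[[RawRecord], DatasetRecord]

# Hypothetical raw records: each repository uses its own field names.
ARCHITECTURE_REPO: List[RawRecord] = [
    {"name": "Building model", "mime": "application/x-step",
     "href": "https://example.org/arch/model.ifc"},
]
STRUCTURAL_REPO: List[RawRecord] = [
    {"label": "Frame analysis model", "format": "text/x-python",
     "url": "https://example.org/struct/frame.py"},
]


def architecture_adapter(raw: RawRecord) -> DatasetRecord:
    """Adapter: convert the architecture repository's format to the internal one."""
    return DatasetRecord(raw["name"], raw["mime"], raw["href"])


def structural_adapter(raw: RawRecord) -> DatasetRecord:
    """Adapter: convert the structural repository's format to the internal one."""
    return DatasetRecord(raw["label"], raw["format"], raw["url"])


class Aggregator:
    """Aggregator: queries every registered repository and merges the answers."""

    def __init__(self) -> None:
        self._sources: List[Tuple[List[RawRecord], Adapter]] = []

    def register(self, repository: List[RawRecord], adapter: Adapter) -> None:
        self._sources.append((repository, adapter))

    def collect(self, keyword: str) -> List[DatasetRecord]:
        """Return all records whose title contains the keyword (case-insensitive)."""
        keyword = keyword.lower()
        records = [adapter(raw) for repo, adapter in self._sources for raw in repo]
        return [r for r in records if keyword in r.title.lower()]


aggregator = Aggregator()
aggregator.register(ARCHITECTURE_REPO, architecture_adapter)
aggregator.register(STRUCTURAL_REPO, structural_adapter)
matches = aggregator.collect("model")  # one record from each repository
```

The local application only ever sees `DatasetRecord` objects, so a new repository can be added by registering one more adapter without touching the client code.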

3.1. Application Layer: Project Scope

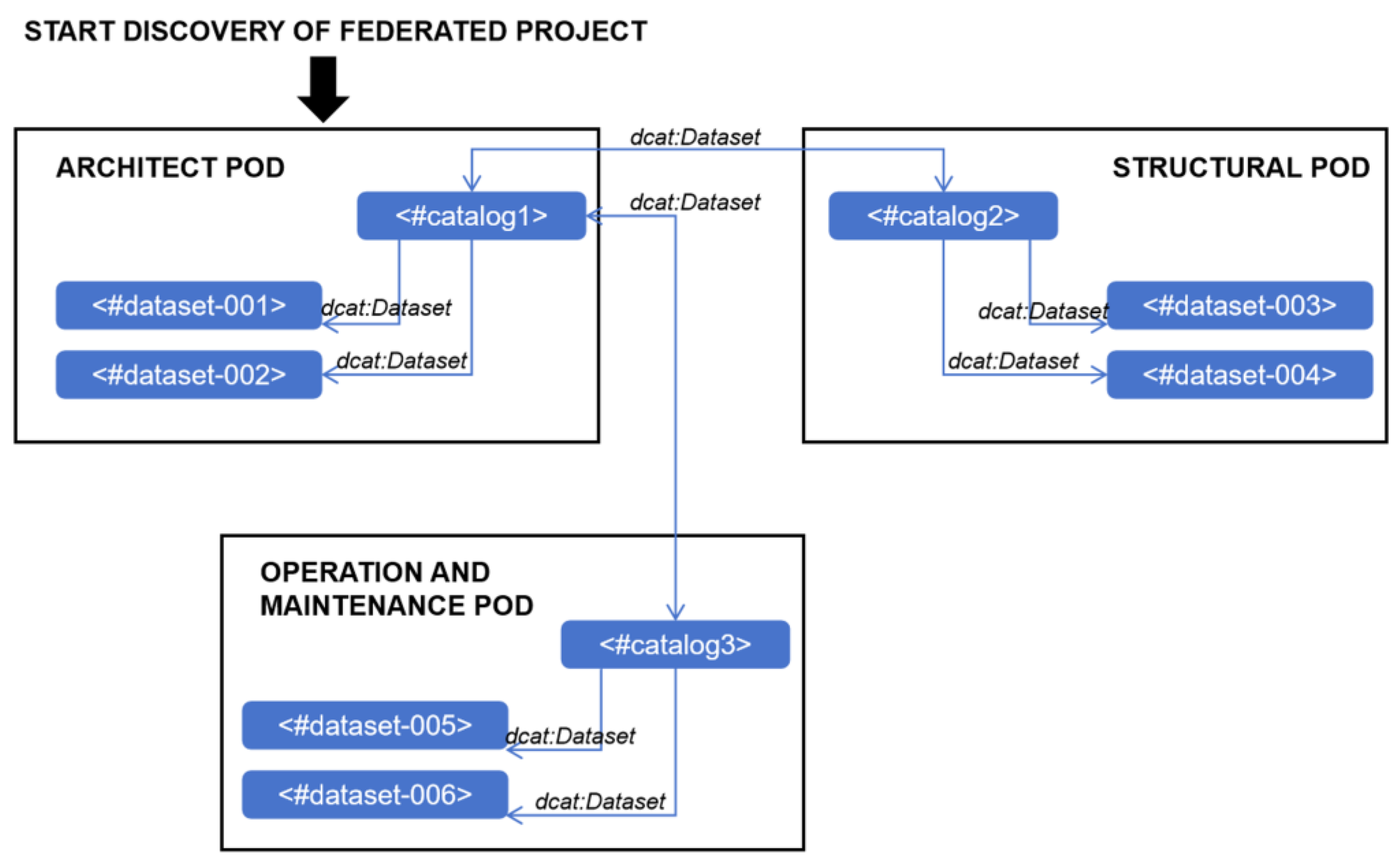

3.2. Storage Layer: Reusable Data

- Heterogeneous data (i.e., images, documents, models, and results) are stored in their native, often proprietary, formats and subsequently annotated with metadata structured in RDF. Once the other registered endpoints of the federated project have been retrieved using the DCAT vocabulary, the specific dataset can be looked up on a third-party repository and its download location obtained.

- Three repositories contain architecture, structural analysis, and hazard evaluation model datasets. The term “model” does not imply the RDF serialization of domain-specific knowledge, i.e., hard-coding BIM information, finite element matrices, and performance-based consequence functions into RDF. Instead, the objective is to facilitate the interoperability of data types by automatically identifying relevant datasets through metadata, so that engineers can continue working in their preferred professional environment.

- Project catalogs (i.e., metadata descriptions) are created from the context of the communication between the local applications and the various data repositories. SPARQL query interfaces subsequently use these catalogs as link sets for information retrieval and exchange.
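To make the metadata-driven lookup more concrete, the sketch below walks a small DCAT catalog from a dataset to its distribution and download location. It uses only the Python standard library: the triples are held in memory and matched by hand, as a stand-in for a real SPARQL endpoint, and the query string shows what an equivalent SPARQL request could look like (it is not executed here). The catalog contents and the example.org IRIs are invented for illustration.

```python
from typing import Iterable, List, Tuple

Triple = Tuple[str, str, str]

RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
DCAT = "http://www.w3.org/ns/dcat#"
DCT = "http://purl.org/dc/terms/"

# An equivalent SPARQL query for a real endpoint (illustrative, not executed here).
DISCOVERY_QUERY = """
PREFIX dcat: <http://www.w3.org/ns/dcat#>
PREFIX dct:  <http://purl.org/dc/terms/>
SELECT ?dataset ?url WHERE {
  ?dataset a dcat:Dataset ;
           dct:title ?title ;
           dcat:distribution ?dist .
  ?dist dcat:downloadURL ?url .
  FILTER(CONTAINS(LCASE(STR(?title)), "structural"))
}
"""

# Hypothetical catalog: two datasets, each with one distribution.
CATALOG: List[Triple] = [
    ("https://example.org/ds/1", RDF_TYPE, DCAT + "Dataset"),
    ("https://example.org/ds/1", DCT + "title", "Structural analysis model"),
    ("https://example.org/ds/1", DCAT + "distribution", "https://example.org/dist/1"),
    ("https://example.org/dist/1", DCAT + "downloadURL", "https://example.org/files/frame.py"),
    ("https://example.org/ds/2", RDF_TYPE, DCAT + "Dataset"),
    ("https://example.org/ds/2", DCT + "title", "Architecture model"),
    ("https://example.org/ds/2", DCAT + "distribution", "https://example.org/dist/2"),
    ("https://example.org/dist/2", DCAT + "downloadURL", "https://example.org/files/model.ifc"),
]


def objects(triples: List[Triple], subject: str, predicate: str) -> List[str]:
    """Return every object of (subject, predicate, ?o)."""
    return [o for s, p, o in triples if s == subject and p == predicate]


def find_download_urls(triples: Iterable[Triple], keyword: str) -> List[Tuple[str, str]]:
    """Return (dataset, download URL) pairs for datasets whose title contains keyword."""
    triples = list(triples)
    keyword = keyword.lower()
    hits: List[Tuple[str, str]] = []
    for s, p, o in triples:
        if p != RDF_TYPE or o != DCAT + "Dataset":
            continue
        titles = objects(triples, s, DCT + "title")
        if not any(keyword in title.lower() for title in titles):
            continue
        for dist in objects(triples, s, DCAT + "distribution"):
            for url in objects(triples, dist, DCAT + "downloadURL"):
                hits.append((s, url))
    return hits


structural_hits = find_download_urls(CATALOG, "structural")
```

Only the metadata is inspected; the dataset itself stays in its native format at the returned location.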

3.3. Middleware Layer: Aggregators and Adapters

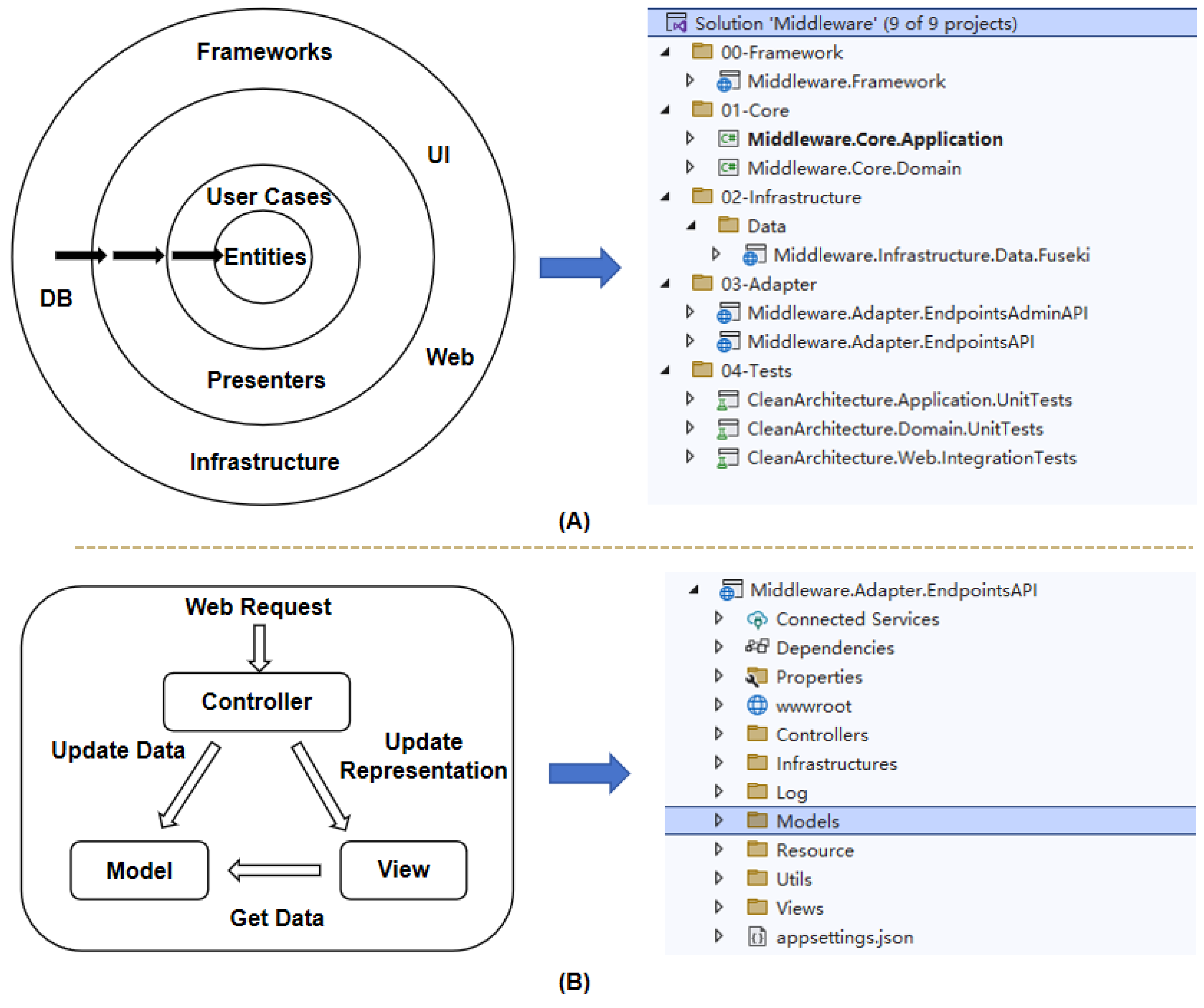

3.3.1. Middleware Architecture

3.3.2. Middleware Logic

3.3.3. Middleware Programming

- Presentation: It comprises two application projects, an ASP.NET Web API project and a Blazor UI implementation.

- Frameworks and Drivers: A shared class library containing external tools and frameworks. It integrates a set of reusable classes, extensions, and base classes that other layers can reference to reduce development effort.

- Infrastructure: It defines the database technology stack. An Apache Jena Fuseki server backed by a TDB database provides persistent RDF storage.

- Interface Adapters: It contains two .NET 6.0 class libraries, EndpointsAPI and EndpointsAdminAPI, which convert data from external to internal formats.

- Core: It contains two .NET 6.0 class libraries, Application and Domain.
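The layering above follows the usual clean-architecture dependency rule: the Core knows nothing about storage or transport, and outer layers plug into abstractions that the Core defines. The sketch below illustrates that rule in Python rather than in the .NET stack named above. The class and function names (`Endpoint`, `EndpointStore`, `RegisterEndpoint`, `endpoint_from_payload`, `InMemoryEndpointStore`) and the localhost URL are hypothetical, and the in-memory store is only a stand-in for a persistent RDF store.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Protocol


# --- Core / Domain: plain entity with no outward dependencies ---
@dataclass(frozen=True)
class Endpoint:
    name: str
    sparql_url: str


# --- Core / Application: the use case depends only on an abstraction ---
class EndpointStore(Protocol):
    def save(self, endpoint: Endpoint) -> None: ...
    def get(self, name: str) -> Optional[Endpoint]: ...


class RegisterEndpoint:
    """Use case: register a repository endpoint exactly once."""

    def __init__(self, store: EndpointStore) -> None:
        self._store = store

    def execute(self, endpoint: Endpoint) -> Endpoint:
        if self._store.get(endpoint.name) is not None:
            raise ValueError(f"endpoint {endpoint.name!r} is already registered")
        self._store.save(endpoint)
        return endpoint


# --- Interface Adapters: external payload -> internal entity ---
def endpoint_from_payload(payload: Dict[str, str]) -> Endpoint:
    return Endpoint(name=payload["name"].strip(), sparql_url=payload["url"].strip())


# --- Infrastructure: in-memory stand-in for the persistent store ---
class InMemoryEndpointStore:
    def __init__(self) -> None:
        self._items: Dict[str, Endpoint] = {}

    def save(self, endpoint: Endpoint) -> None:
        self._items[endpoint.name] = endpoint

    def get(self, name: str) -> Optional[Endpoint]:
        return self._items.get(name)


# --- Presentation: wires the layers together for one request ---
store = InMemoryEndpointStore()
use_case = RegisterEndpoint(store)
registered = use_case.execute(
    endpoint_from_payload({"name": " structural ", "url": "http://localhost:3030/structural/sparql"})
)
```

Because `RegisterEndpoint` only depends on the `EndpointStore` protocol, the in-memory store could be swapped for a triple-store client without changing the Core.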

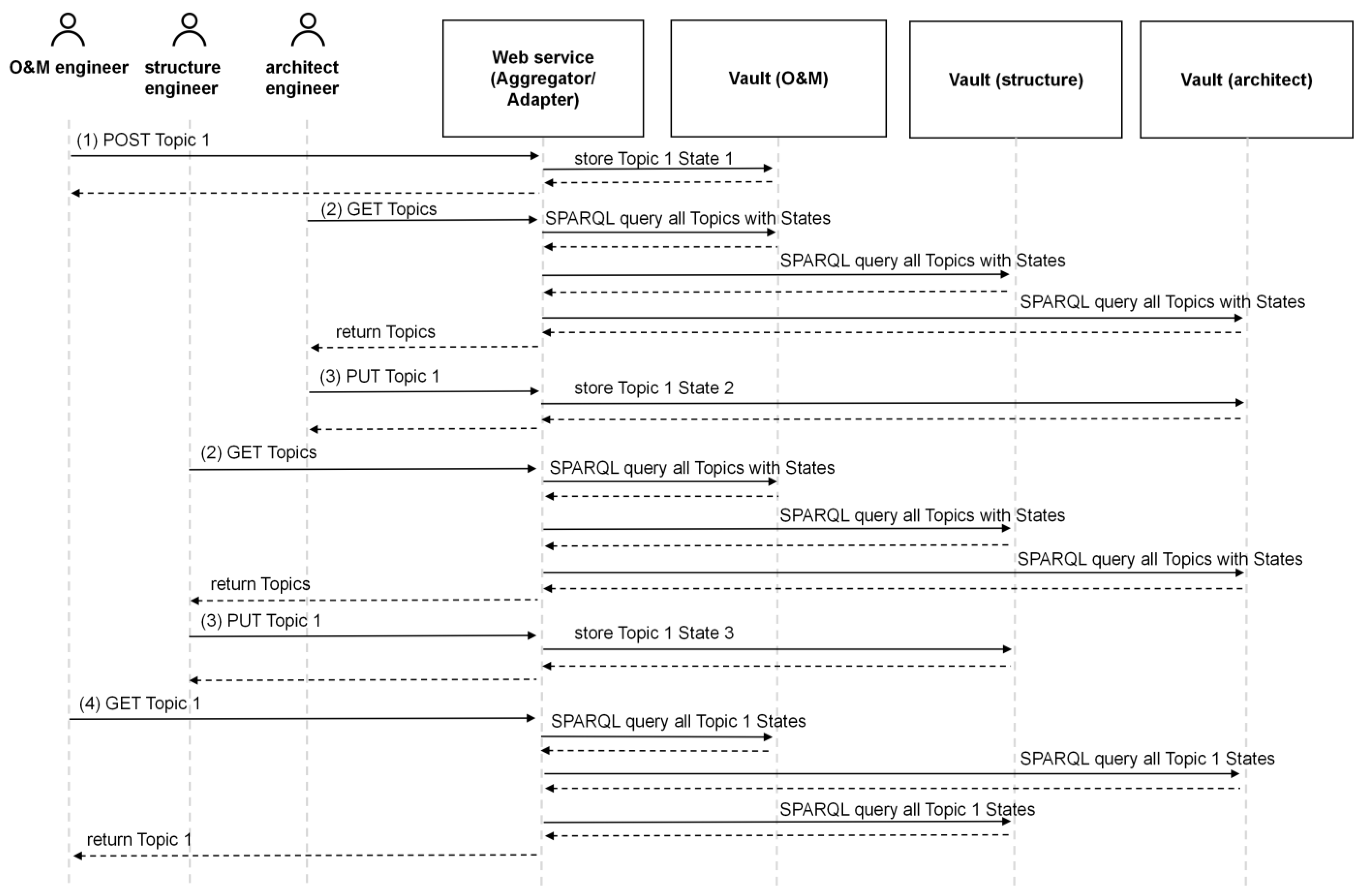

4. Case Study

4.1. Problem Definition

- The O&M staff creates a new topic, “LCA assessment for the building, please check the contributing components and responses”, and assigns it to the relevant reviewers. The topic contains information about the type of request and the status. The reviewers link views and viewpoints to the topic and define the building elements that point to the scenario.

- Once the topic assignment is complete, the other engineers review the tasks assigned to them.

- The topic status is updated based on the review results of the architectural and structural domain engineers.

- The O&M staff verifies the topic’s status to advance the design or propose disaster-proof solutions.

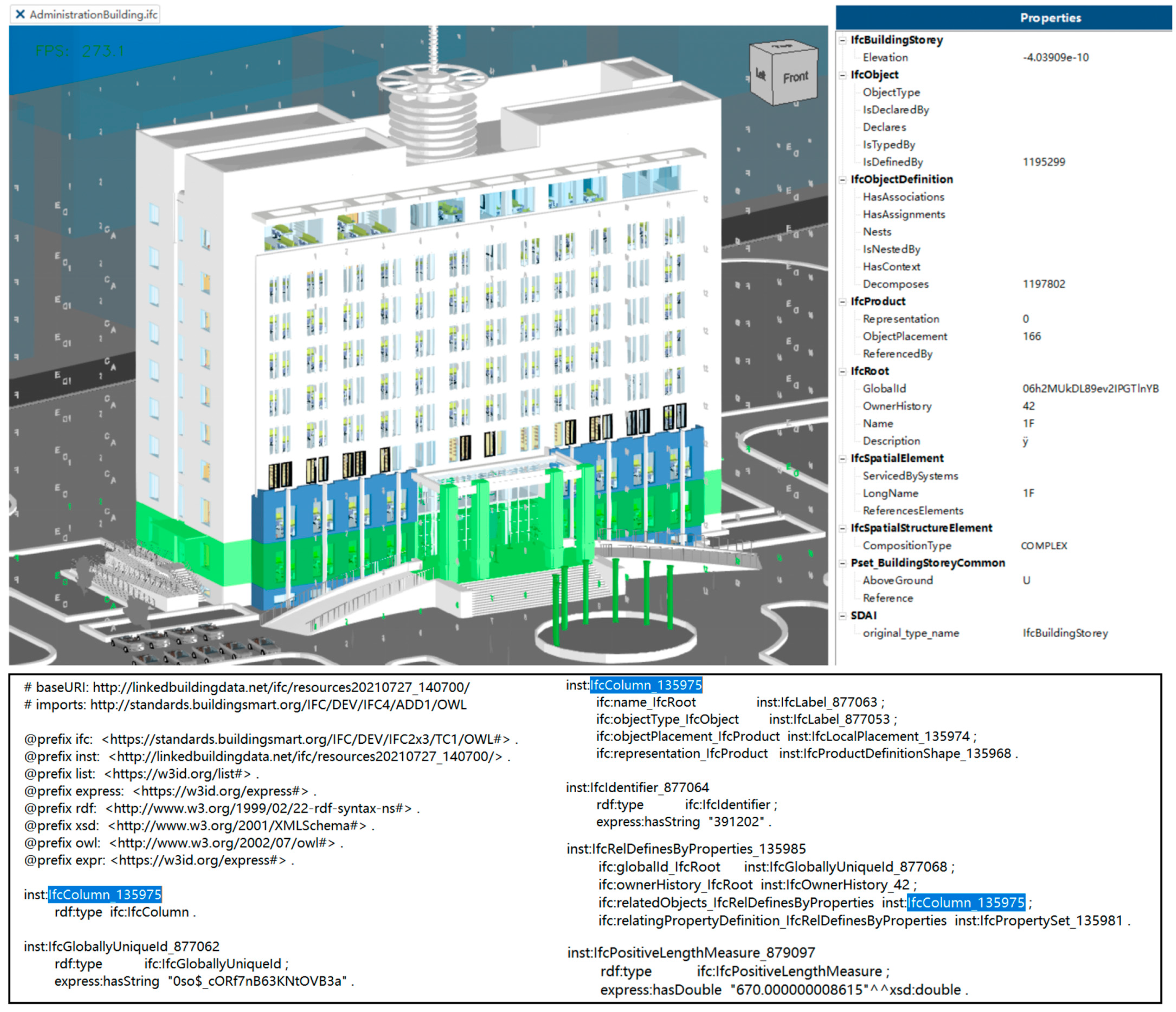

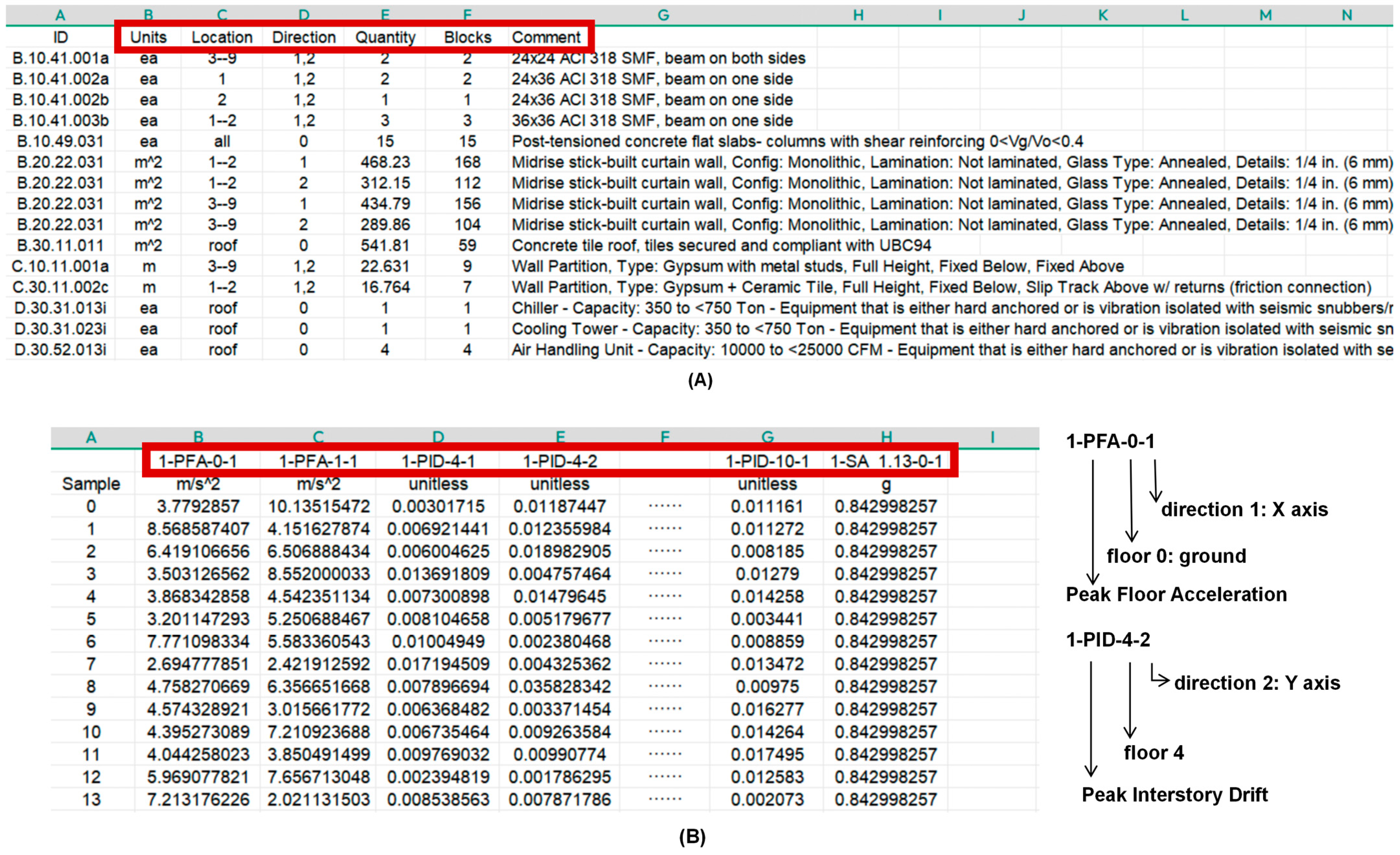

4.2. Dataset

4.3. Communication

| Listing 1. The original Topic and its bcfowl:TopicState initiated by the O&M engineer. This record is stored in <https://pod.o&m-engineer.com>. |

| <https://pod.o&m-engineer.com/Topic1> a bcfowl:Topic ; bcfowl:hasProject <https://pod.o&m-engineer.com/LCAAssessmentProject> . <https://pod.o&m-engineer.com/Topic1State1> a bcfowl:TopicState ; bcfowl:hasTopic <https://pod.o&m-engineer.com/Topic1> ; bcfowl:hasTitle "LCA assessment for the building, please check the contributing components and responses" ; bcfowl:hasTopicType <https://pod.o&m-engineer.com/LCAAssessment> ; bcfowl:hasCreationDate "2024-06-9 18:11:25" ; bcfowl:hasCreationAuthor <http://localhost:3000/o&m-engineer/profile/card#me> ; bcfowl:hasTopicStatus <https://pod.o&m-engineer.com/LCAStatusInitial> ; bcfowl:hasAssignedTo <https://pod.architect-engineer.com/profile/card#me> . |

| Listing 2. The updated bcfowl:TopicState, <https://pod.architect-engineer.com/Topic1State2>. The new state is stored in the architect’s Pod: the architect marks the end of their review and reassigns the topic to the structural engineer. |

| <https://pod.architect-engineer.com/Topic1State2> a bcfowl:TopicState ; bcfowl:hasTopic <https://pod.o&m-engineer.com/Topic1> ; bcfowl:hasCreationDate "2024-06-10 22:39:13" ; bcfowl:hasCreationAuthor <http://localhost:3000/architect-engineer/profile/card#me> ; bcfowl:hasTopicStatus <https://pod.architect-engineer.com/ArchitectStatusEnd> ; bcfowl:hasAssignedTo <https://pod.structural-engineer.com/profile/card#me> . |
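Listings 1 and 2 suggest an append-only pattern: the topic itself stays in the O&M engineer's Pod, while each reviewer writes a new TopicState into their own Pod. Under that reading, the current assignee can be found by collecting every state that points at the topic and taking the most recent one. The sketch below illustrates this in plain Python; the dictionaries are simplified stand-ins for the RDF records (keys such as `topic`, `created`, `status`, and `assigned_to` are hypothetical), and `current_state` is an illustrative helper rather than part of the BCF API or the authors' middleware.

```python
from datetime import datetime
from typing import Dict, List

TOPIC = "https://pod.o&m-engineer.com/Topic1"

# Simplified stand-ins for the TopicState records of Listings 1 and 2.
OM_POD: List[Dict[str, str]] = [{
    "topic": TOPIC,
    "created": "2024-06-9 18:11:25",
    "status": "https://pod.o&m-engineer.com/LCAStatusInitial",
    "assigned_to": "https://pod.architect-engineer.com/profile/card#me",
}]
ARCHITECT_POD: List[Dict[str, str]] = [{
    "topic": TOPIC,
    "created": "2024-06-10 22:39:13",
    "status": "https://pod.architect-engineer.com/ArchitectStatusEnd",
    "assigned_to": "https://pod.structural-engineer.com/profile/card#me",
}]


def parse_created(text: str) -> datetime:
    """Parse the creation timestamp format used in the listings."""
    return datetime.strptime(text, "%Y-%m-%d %H:%M:%S")


def current_state(topic: str, pods: List[List[Dict[str, str]]]) -> Dict[str, str]:
    """Return the most recent state of a topic across all Pods."""
    states = [state for pod in pods for state in pod if state["topic"] == topic]
    if not states:
        raise LookupError(f"no state found for topic {topic}")
    return max(states, key=lambda state: parse_created(state["created"]))


latest = current_state(TOPIC, [OM_POD, ARCHITECT_POD])
next_reviewer = latest["assigned_to"]  # the structural engineer's WebID
```

No record is ever overwritten, so the full review history remains traceable across the Pods.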

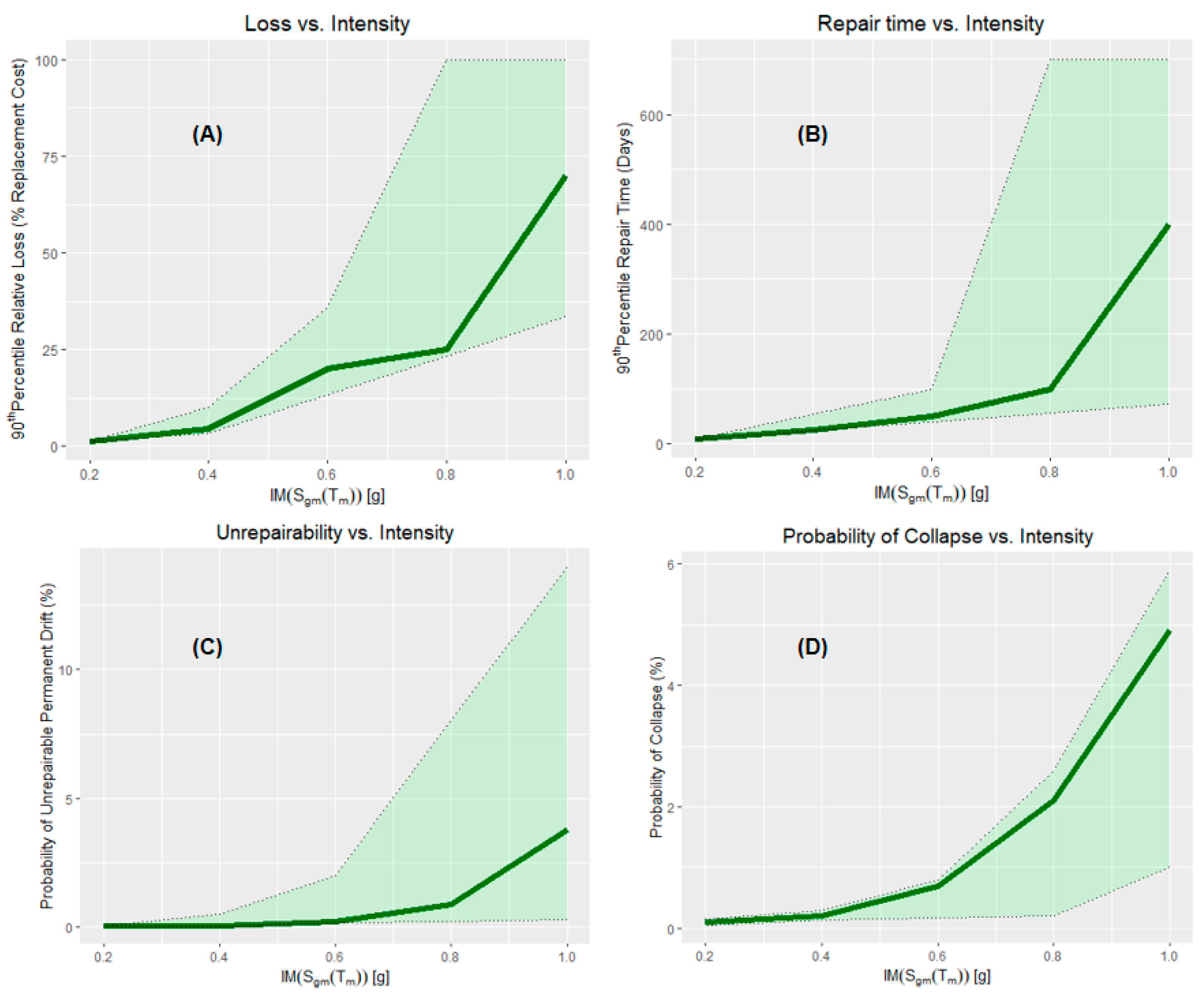

4.4. Results

5. Discussion

5.1. Main Findings

5.2. Generalized to Other Domains

5.3. Limitations and Future Directions

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. The Probability Methodological Framework of Structural LCA

| Scope | Coverage | Contains |
|---|---|---|
| IFC component | Material Categories | Concrete, Steel |
| | Environmental Indicators | Thermal transmittance |
| | Product Variety | IfcColumns, IfcBeams, IfcSlab |
| | Load Bearings | Qto_ColumnBaseQuantities, Pset_ColumnCommon, Ifc_Root, Pset_ReinforcementBarPitchofColumn… |
| Traceability | General Information | GlobalId, OwnerHistory, Notes, and Namespace |
| Interoperability | Available Outputs | XML/JSON/RDF/CSV |
| | Interoperable APIs | RDFlib, BCF API |
| | Metadata | RDF 1.2 Schema |
| | Access | HTTP request |
| Comprehensiveness | Supportive Resources | PDFs, building ontologies, the interface of external databases (FEMA P-58), OpenSeesPy models, and EDP results… |
| | Update | Update endpoints: /select-documents, /upload-documents, and /document-versions |

| LCA Performances | Probability | 16% Percentile | 50% Percentile | 84% Percentile | Confidence Interval |
|---|---|---|---|---|---|
| Repair cost (USD) | - | 7.14 × 10⁴ | 4.85 × 10⁵ | 7.69 × 10⁵ | [2.73 × 10⁴, 11.38 × 10⁵] |
| Repair time (days) | - | 20.91 | 302.14 | 418.52 | [10.36, 719.62] |
| Embodied energy (MJ) | - | 5.14 × 10⁵ | 2.23 × 10⁶ | 3.58 × 10⁶ | [1.27 × 10⁵, 5.46 × 10⁶] |
| Embodied carbon (kg) | - | 2.70 × 10⁵ | 2.28 × 10⁵ | 3.56 × 10⁵ | [1.35 × 10⁴, 5.51 × 10⁵] |
| Collapse | 0.0507 | - | - | - | - |
| Irreparable drift | 0.0392 | - | - | - | - |
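For readers unfamiliar with the percentile columns in the table above, the sketch below shows one way such 16%, 50%, and 84% percentiles can be read off a set of simulated realizations. It assumes the performance measures come from sampled realizations (for example, a Monte Carlo loss simulation); the lognormal samples and their parameters are synthetic and purely illustrative, they are not the case-study data, and `percentile_summary` is a hypothetical helper.

```python
import random
import statistics
from typing import Dict, Iterable


def percentile_summary(samples: Iterable[float]) -> Dict[str, float]:
    """Return the 16th, 50th, and 84th percentiles of the samples."""
    # quantiles(..., n=100) yields the 99 cut points between percentiles 1..99,
    # so the p-th percentile sits at index p - 1.
    cuts = statistics.quantiles(samples, n=100, method="inclusive")
    return {"p16": cuts[15], "p50": cuts[49], "p84": cuts[83]}


# Synthetic, illustrative realizations of a loss measure (not the paper's data).
rng = random.Random(42)
synthetic_losses = [rng.lognormvariate(13.0, 1.0) for _ in range(5000)]
summary = percentile_summary(synthetic_losses)
```

For a normally distributed quantity, the 16% and 84% percentiles lie roughly one standard deviation either side of the median, which is why this triple is a common way to report dispersion.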

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xiang, L.; Li, G.; Li, H. A Common Data Environment Framework Applied to Structural Life Cycle Assessment: Coordinating Multiple Sources of Information. Buildings 2025, 15, 1315. https://doi.org/10.3390/buildings15081315
