Abstract

Background/Objectives: In routine practice, colonoscopy findings are saved as unstructured free text, limiting secondary use. Accurate named-entity recognition (NER) is essential to unlock these descriptions for quality monitoring, personalized medicine and research. We compared NER models trained on real, synthetic, and mixed data to determine whether privacy-preserving synthetic reports can boost clinical information extraction. Methods: Three Spark NLP biLSTM-CRF models were trained on (i) 100 manually annotated Romanian colonoscopy reports (ModelR), (ii) 100 prompt-generated synthetic reports (ModelS), and (iii) a 1:1 mix (ModelM). Performance was tested on 40 unseen reports (20 real, 20 synthetic) for seven entities. Micro-averaged precision, recall, and F1-score values were computed; McNemar tests with Bonferroni correction assessed pairwise differences. Results: ModelM outperformed single-source models (precision 0.95, recall 0.93, F1 0.94) and was significantly superior to ModelR (F1 0.70) and ModelS (F1 0.64; p < 0.001 for both). ModelR maintained high accuracy on real text (F1 = 0.90), but its accuracy fell when tested on synthetic data (0.47); the reverse was observed for ModelS (F1 = 0.99 synthetic, 0.33 real). McNemar χ² statistics (64.6 for ModelM vs. ModelR; 147.0 for ModelM vs. ModelS) corresponded to p values far below the Bonferroni-adjusted significance threshold (α = 0.0167), indicating that the observed performance gains were unlikely to be due to chance. Conclusions: Synthetic colonoscopy descriptions are a valuable complement, but not a substitute for real annotations, while AI is helping human experts, not replacing them. Training on a balanced mix of real and synthetic data can help to obtain robust, generalizable NER models able to structure free-text colonoscopy reports, supporting large-scale, privacy-preserving colorectal cancer surveillance and personalized follow-up.

1. Introduction

Colorectal cancer (CRC) remains the third most common malignancy worldwide and the second leading cause of cancer death; recent surveillance data show more than 1 million new global cases annually, with higher incidence in people under 50 years old [1].

Colonoscopy plays an important role in early detection, surveillance, and post-polypectomy follow-up of CRC, and it is part of patient surveillance after CRC resection, enabling the detection of advanced neoplasia and recurrence [2].

The increase in biomedical data for CRC offers new research possibilities but also difficulties. Traditional CRC epidemiology relied on cancer registries, but advanced technology and healthcare digitization have led to an explosion of diverse data formats. These include structured registry data, unstructured clinical notes, medical imaging, and multi-omics profiles, creating rich but heterogeneous datasets. In clinical practice, large amounts of colonoscopy data are stored as unstructured free-text reports, limiting reuse for quality assurance, population-based surveillance, or even artificial intelligence (AI)-based decision support. Analyzing this large and varied information requires advanced methods to integrate and interpret it, so that the causes, progression, and prevention of CRC can be studied epidemiologically. Combining structured and unstructured data with natural language processing (NLP) and AI yields better-defined patient groups, improved risk assessment, and deeper clinical insight than using one type of data alone [3].

Information extraction techniques, particularly named-entity recognition (NER), an NLP method, offer a promising solution for structuring free-text colonoscopy reports by automatically identifying clinically relevant concepts such as lesion morphology, location, and intervention details. However, the development of accurate NER models in the medical domain is heavily dependent on access to high-quality, manually annotated data. These annotated resources are time-consuming to produce, require domain expertise, and are often constrained by institutional privacy concerns and limited availability, especially in low-resource settings or for underrepresented languages such as Romanian.

To overcome these limitations, the generation of synthetic clinical text has emerged as a potential alternative [4,5]. Synthetic data, produced through rule-based generation, templating, or generative AI, can reproduce the linguistic and semantic structure of real-world reports while avoiding patient privacy risks [6].

When validated properly, such datasets may serve as effective training material for machine learning models, reducing reliance on sensitive real-world data and accelerating the use of clinical NLP systems. In this context, synthetic colonoscopy reports represent a potentially cost-effective, scalable, and privacy-preserving resource for training NER models [7,8]. Despite their appeal, the efficacy of models trained on synthetic text has not been rigorously benchmarked against those trained on real clinical narratives. Thus, it remains an open question whether synthetically generated medical texts can provide enough fidelity and semantic diversity to support high-quality information extraction in real-world applications.

In the gastrointestinal endoscopy domain, researchers have begun applying deep learning to automatically process colonoscopy and pathology reports. For instance, one recent study introduced the first deep learning-based NER system for colonoscopy reports, highlighting the importance of this task for clinical utility (cancer risk prediction and follow-up recommendations). The model, used with domain-specific word embeddings, achieved promising results in identifying colonoscopy findings and attributes [9]. Similarly, others have developed NLP pipelines to extract detailed features from colonoscopy pathology reports with high accuracy. Benson et al. (2023) report an end-to-end pipeline that automatically identifies histopathologic polyp features (size, location, histology, etc.) from colonoscopy pathology reports, obtaining precision as high as 98.9% and a recall of 98.0% (F1-score 98.4%) across all entities [10]. These works demonstrate that advanced NER methods can successfully capture critical clinical entities in endoscopy texts, laying the groundwork for tools that assist in automated report summarization and decision support.

When data is scarce, researchers have started to leverage large language models (LLMs) to generate artificial clinical text for NER training. In a 2024 study, researchers created a synthetic annotated corpus of radiology (CT scan) reports using GPT-3.5 and used it to fine-tune a biomedical NER model. Notably, even though the LLM-generated reports cannot perfectly mimic real clinical prose, combining synthetic data with authentic data led to marked improvements in NER performance [11].

Developing effective NER in minor languages poses additional challenges due to scarce annotated data. The recent literature shows a strong interest in improving NER model performance under low-resource conditions through means such as data augmentation. A 2025 study explored synthetically generated training samples for multilingual NER in 11 low-resource languages; its findings suggest that synthetic augmentation holds promise for improving low-resource NER [12].

In our prior research, we demonstrated the application of Spark NLP (John Snow Labs, Lewes, DE, USA) to annotate endoscopy reports and train models capable of automatically extracting relevant medical labels [13]. This approach not only enhanced the structuring of medical data but also enabled its integration with other structured datasets, paving the way for complex patient profiling.

The current study aims to further investigate the potential use of NLP and synthetic data in clinical practice and to evaluate whether synthetic colonoscopy reports can improve the clinical information extraction capabilities achieved with models trained only on real medical texts. By training and comparing three NER models, one on real endoscopy reports, one on synthetic reports, and one on an equal mix, this work aims to demonstrate that synthetic data not only provides a privacy-preserving, scalable alternative to real document annotations, but also substantially improves the robustness and generalization of clinical NLP models.

Quantifying the capabilities to extract clinically relevant information will clarify the feasibility of synthetic documentation for applications such as automated colorectal cancer surveillance and advanced patient profiling, reducing the dependence on manual annotation of sensitive patient data.

2. Materials and Methods

The real texts consisted of 100 de-identified colonoscopy reports from the Gastroenterology Department, Mureș County Clinical Hospital (Târgu Mureș, Romania), recorded between January 2021 and December 2024, each describing at least one colorectal polyp. All personal health identifiers were removed before export to Generative AI Lab (John Snow Labs, Lewes, DE, USA) [14].

For the synthetic texts, two gastroenterologists applied prompt-engineering techniques to reproduce the narrative style, terminology, and entity frequencies observed in the real reports. Prompts were executed within John Snow Labs Generative AI Lab, which provides access to the GPT-4 family of large language models that are optimized for clinical text generation (the specific model used was GPT-4o). We drafted several prompt templates, each instructing the model to produce de-identified Romanian colonoscopy reports that follow the same clinical structure but with explicit lexical variation, ultimately obtaining 100 entirely artificial but realistic colonoscopy descriptions [14]. Prompt templates are detailed in the Supplementary Materials (Section S1, Table S1), and annotation rules in Section S2. The same experts reviewed a subset of 50 real and 50 synthetic texts for overall plausibility and style matching. Inter-rater agreement was quantified with Cohen’s κ, and the resulting statistics are presented in Section 3 [15].
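As an illustration of the agreement statistic, the following minimal sketch computes Cohen’s κ for two raters from paired categorical judgments. The ratings are made-up placeholder values, not study data, and the function name `cohen_kappa` is hypothetical; the study itself may have relied on a library routine.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters' categorical labels on the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of items given identical labels.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from each rater's marginal label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[k] * freq_b[k] for k in freq_a) / (n * n)
    if p_e == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (p_o - p_e) / (1.0 - p_e)


# Placeholder ratings (1 = plausible, 0 = not plausible); NOT study data.
rater_1 = [1, 1, 1, 0, 1, 0, 1, 1, 0, 1]
rater_2 = [1, 1, 0, 0, 1, 0, 1, 1, 1, 1]
kappa = cohen_kappa(rater_1, rater_2)
print(f"kappa = {kappa:.2f}")
```

Because κ subtracts the agreement expected by chance, it is lower than raw percent agreement whenever one label dominates the ratings.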

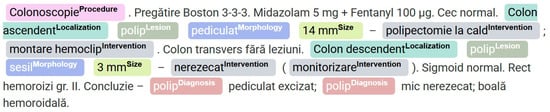

Both datasets were manually annotated by two gastroenterologists for seven entities (Intervention, Lesion, Morphology, Localization, Procedure, Diagnosis, and Size) using the Generative AI Lab web interface, following predefined annotation guidelines based on the BIO (Begin-Inside-Outside) tagging scheme [16]. The annotation process via the web interface is shown in Figure 1.
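To make the BIO scheme concrete, the sketch below converts token-level span annotations into BIO tags. The helper `spans_to_bio`, the tokenization, and the example spans are illustrative assumptions rather than the study’s actual export format.

```python
def spans_to_bio(tokens, spans):
    """Convert (start, end, label) token spans, end exclusive, to BIO tags."""
    tags = ["O"] * len(tokens)
    for start, end, label in spans:
        if not (0 <= start < end <= len(tokens)):
            raise ValueError(f"span out of range: {(start, end, label)}")
        if any(tag != "O" for tag in tags[start:end]):
            raise ValueError("overlapping spans are not supported")
        tags[start] = f"B-{label}"          # first token of the entity
        for i in range(start + 1, end):
            tags[i] = f"I-{label}"          # continuation tokens
    return tags


# Hypothetical example phrase and spans, for illustration only.
tokens = ["polip", "sesil", "5", "mm", "colonul", "ascendent"]
spans = [(0, 2, "Lesion"), (2, 4, "Size"), (4, 6, "Localization")]
bio_tags = spans_to_bio(tokens, spans)
for token, tag in zip(tokens, bio_tags):
    print(f"{token}\t{tag}")
```

Tokens outside any annotated span keep the “O” tag, which is the class excluded from the entity-level metrics.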

Figure 1.

Color-coded annotation of clinical concepts in a colorectal procedure report in GenAI Lab.

An unseen evaluation set of 20 additional real reports and 20 newly generated synthetic reports was reserved exclusively for external validation.

Three NER models were trained: ModelR (trained solely on real data), ModelS (trained only on synthetic data), and ModelM (trained on an equal mixture of real and synthetic reports). All models were implemented and trained using the no-code Generative AI Lab platform (John Snow Labs) [14,17,18]. Each model used 300-dimensional GloVe embeddings (glove_6B_300) [19], concatenated with 20-dimensional character-level embeddings for each token, as supported by the Spark NLP NER architecture [17,20].

The underlying model consisted of a bidirectional Long Short-Term Memory (biLSTM) layer for sequential feature extraction, followed by a Conditional Random Field (CRF) output layer for optimal sequence tagging, as established in prior biomedical NER studies [17,18]. Hyperparameters were set as follows: LSTM hidden size = 200, batch size = 16, learning rate = 0.001, maximum epochs = 20, and early stopping if no validation loss improvement was observed over five epochs. Default dropout and optimization settings from Spark NLP were used. All models were trained from scratch on their respective training sets using the BIO-annotated token sequences, with the validation set used for monitoring convergence.
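The stopping rule described above can be sketched as follows. This is a generic illustration of patience-based early stopping with the stated settings (patience of five epochs, at most 20 epochs); it is an assumption about the rule’s logic, not the platform’s internal implementation, and the loss values are invented.

```python
def early_stopping_epoch(val_losses, patience=5, max_epochs=20):
    """Return the 1-based epoch after which training would stop."""
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        if loss < best:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch  # patience exhausted
    # No early stop: training ran to the end of the available epochs.
    return min(len(val_losses), max_epochs)


# Invented validation losses: the best value occurs at epoch 3.
losses = [0.90, 0.70, 0.60, 0.61, 0.62, 0.60, 0.63, 0.64, 0.65]
stop_epoch = early_stopping_epoch(losses)
print(stop_epoch)
```

A loss that merely equals the best value does not reset the counter in this sketch; only a strict improvement does.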

Each model was evaluated on a reserved, unseen test set comprising 20 real and 20 synthetic reports, all manually annotated. Both token-level and span-level metrics were calculated; however, the primary evaluation metric was the micro-averaged precision, recall, and F1-score at the entity (span, exact-match) level, excluding the “O” (non-entity) class. This approach aligns with best practices established in recent clinical NLP research [21,22,23,24].
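A minimal sketch of the entity-level, exact-match scoring described above is given below. It extracts spans from BIO sequences and pools true positives, false positives, and false negatives across documents before computing micro-averaged metrics. The function names and the toy tag sequences are assumptions for illustration.

```python
def bio_to_spans(tags):
    """Extract (start, end, label) spans, end exclusive, from BIO tags."""
    spans, start, label = [], None, None
    for i, tag in enumerate(list(tags) + ["O"]):  # sentinel closes a trailing span
        continues = tag.startswith("I-") and label == tag[2:]
        if start is not None and not continues:
            spans.append((start, i, label))
            start, label = None, None
        if tag.startswith("B-") or (tag.startswith("I-") and start is None):
            start, label = i, tag[2:]
    return spans


def micro_prf(gold_docs, pred_docs):
    """Micro-averaged precision, recall, F1 over exact-match entity spans."""
    tp = fp = fn = 0
    for gold_tags, pred_tags in zip(gold_docs, pred_docs):
        gold = set(bio_to_spans(gold_tags))
        pred = set(bio_to_spans(pred_tags))
        tp += len(gold & pred)   # same boundaries and same label
        fp += len(pred - gold)
        fn += len(gold - pred)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Toy example: one document, three gold entities, two predictions.
gold_docs = [["B-Lesion", "I-Lesion", "B-Size", "I-Size", "B-Localization", "I-Localization"]]
pred_docs = [["B-Lesion", "I-Lesion", "B-Size", "O", "O", "O"]]
precision, recall, f1 = micro_prf(gold_docs, pred_docs)
print(f"P={precision:.2f} R={recall:.2f} F1={f1:.2f}")
```

In the toy example the truncated Size span counts as both a false positive and a false negative, which is why exact-match scores are stricter than token-level ones.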

For comparative evaluation, three NER models, trained on real, synthetic, and mixed (real plus synthetic) colonoscopy reports, respectively, were assessed on a common test set consisting of 40 unseen documents (20 real and 20 synthetic). Each model’s predictions were evaluated both on the full set and separately by domain, with performance summarized using micro-averaged precision, recall, and F1-score at the entity (span-level, exact-match) and token levels.

For statistical comparison of the three NER models, McNemar’s test was applied on the combined evaluation set (40 documents: 20 real, 20 synthetic). Evaluation was conducted at the entity–span level: a span was scored as correct only when it exactly matched the gold annotation. For every pair of models, we built a 2 × 2 contingency table, counting spans that only one model, the other model, both, or neither identified correctly. The significance threshold was established at 0.05. p values from the three McNemar pairwise comparisons (M vs. R, M vs. S, R vs. S) were adjusted with a Bonferroni correction [25,26].
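The pairwise comparison can be sketched as below. The code computes the McNemar χ² statistic from the two discordant counts of the 2 × 2 table and compares each p value with the Bonferroni-adjusted threshold. The continuity correction is an assumption (the text does not state whether it was applied), the counts are invented, and `mcnemar_chi2` is a hypothetical helper rather than the study’s code.

```python
import math


def mcnemar_chi2(b, c, correction=True):
    """McNemar chi-square test from the discordant counts.

    b: spans correct for the first model only
    c: spans correct for the second model only
    """
    if b + c == 0:
        return 0.0, 1.0
    diff = abs(b - c) - (1.0 if correction else 0.0)
    stat = max(diff, 0.0) ** 2 / (b + c)
    # Survival function of the chi-square distribution with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(stat / 2.0))
    return stat, p_value


alpha = 0.05
# Invented discordant counts for illustration; NOT the study's tables.
comparisons = {"M vs. R": (40, 10), "M vs. S": (55, 5), "R vs. S": (20, 18)}
alpha_adjusted = alpha / len(comparisons)  # Bonferroni: 0.05 / 3

results = {}
for name, (b, c) in comparisons.items():
    stat, p_value = mcnemar_chi2(b, c)
    results[name] = (stat, p_value, p_value < alpha_adjusted)
    print(f"{name}: chi2={stat:.2f}, p={p_value:.3g}, significant={results[name][2]}")
```

Only the discordant cells enter the statistic; spans that both models get right or both get wrong carry no information about which model is better.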

All statistical analyses, metric computations, and model-evaluation pipelines were implemented in Python 3, using the scikit-learn, statsmodels, and pandas libraries [27,28,29].

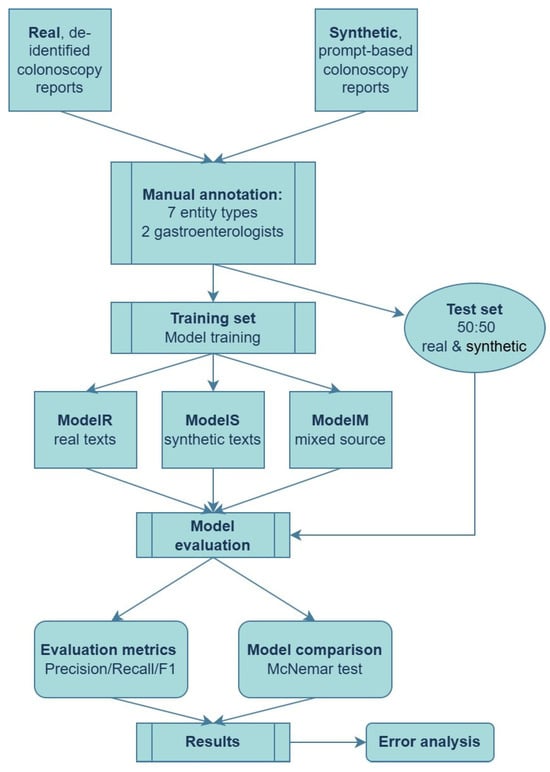

A representative flowchart of the proposed pipeline is presented in Figure 2.

Figure 2.

End-to-end pipeline for training and evaluating NER models on real and synthetic colonoscopy reports.

3. Results

Inter-rater agreement was high, with Cohen’s κ = 0.85 (95% CI 0.78–0.92) for plausibility and κ = 0.81 (95% CI 0.73–0.89) for similarity, meaning that the synthetic documents closely resembled real endoscopy reports.

ModelR was trained exclusively on real colonoscopy reports and evaluated on both real and synthetic test sets. Table 1 presents ModelR’s precision, recall, and F1-score at the entity level for both domains, along with true positive, false positive, and false negative rates.

Table 1.

Evaluation metrics for ModelR on real and synthetic test sets.

ModelS was trained only on synthetic colonoscopy reports. Table 2 summarizes its entity-level performance on real and synthetic test sets.

Table 2.

Evaluation metrics for ModelS on real and synthetic test sets.

ModelM was trained on an equal mix of real and synthetic reports. Table 3 shows its entity-level metrics on real texts and synthetic texts, respectively.

Table 3.

Evaluation metrics for ModelM on real and synthetic test sets.

Table 4 presents the global (combined real + synthetic) entity-level results for all models. These results reflect performance when models are evaluated across all test documents.

Table 4.

Global entity-level metrics for all models.

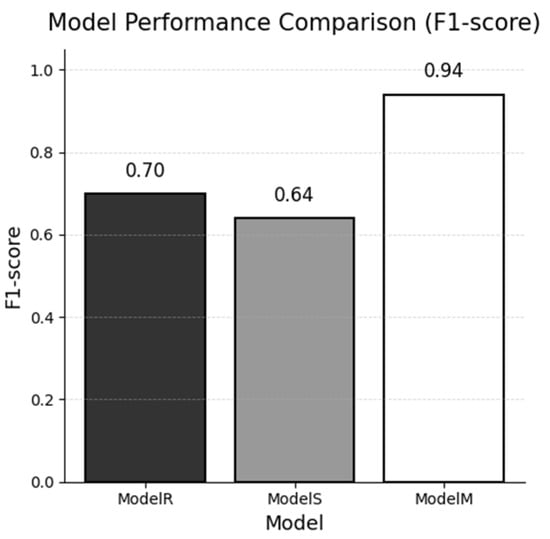

Figure 3 visualizes the global F1 gap, highlighting ModelM’s advantage.

Figure 3.

Global F1-score of each named-entity recognition model evaluated on the combined real and synthetic test sets.

Table 5 lists the per-entity F1-scores (span-level, “O” excluded) on the real test set.

Table 5.

Per-entity evaluation of models on the real test set.

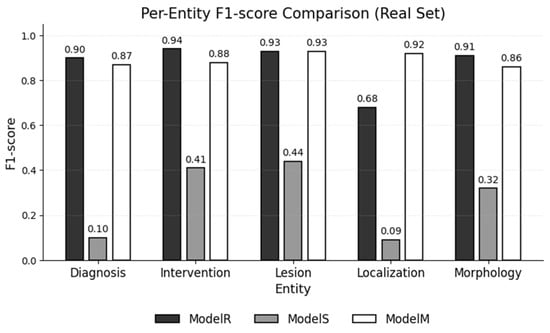

Figure 4 illustrates the per-entity differences, showing that the Localization label benefits most from mixed training.

Figure 4.

F1-scores for each entity type, as achieved by each NER model on the real test set.

Next, we calculated the per-entity F1-scores for each model on the synthetic test set (Table 6).

Table 6.

Per-entity evaluation of models on the synthetic test set.

The Bonferroni-adjusted significance threshold was 0.0167 (0.05/3), and the p values for the pairwise McNemar tests used to compare the models are reported in Table 7.

Table 7.

McNemar test results when comparing models.

In addition to the quantitative evaluation, we performed a qualitative error analysis. Errors were grouped into three categories: (i) entity-type confusions (misclassifying one biomedical concept as another), (ii) omissions (false negatives), and (iii) hallucinated entities (false positives). Examples include the following:

- Type confusion by ModelS, which merged the Morphology [serat] and the Lesion [polip] into a single Lesion span [“polip serat”];

- Omission by ModelR, which detected the lesion [polip sesil] but missed the accompanying size [5 mm] and localization [colonul ascendent];

- Hallucinated lesion produced by ModelS on real text [mucoasă], where the gold annotation contained no entities.

The mixed model detected 35 localization spans that the real model missed, which raised the recall to 0.93, with an F1-score increase from 0.68 (ModelR) to 0.92 (ModelM). Representative examples include the following:

- “Colon transvers polip pediculat 8 mm—nerezecat”
  - Gold annotations/ModelM: Colon transvers [Localization]
  - ModelR: missed

- “Colon descendent polip sesil 4 mm—polipectomie la rece.”
  - Gold annotations/ModelM: Colon descendent [Localization]
  - ModelR: missed

- “Sigmoid polip pediculat 19 mm—polipectomie la rece.”
  - Gold annotations/ModelM: Sigmoid [Localization]
  - ModelR: missed

Conversely, when no polyp is recorded (“Colon ascendent fără leziuni.”), both real and mixed models skip the site, respecting the annotation guidelines.

4. Discussion

Entity-level (span-level) evaluation is preferred for clinical NER, meaning a predicted entity is counted as correct only if the entire span and type match the gold standard exactly [30].

This exact-match criterion aligns with the BIO tagging scheme and clinical concept extraction requirements. By contrast, token-level evaluation (treating NER as word-by-word classification) is now rarely reported as a primary metric because it can be misleading: token-level scores often look high due to the abundance of non-entity tokens. Instead, studies focus on whole-entity extraction performance. If a more forgiving metric is desired, authors sometimes include a “lenient” or overlap-based F1, which gives partial credit when a predicted span overlaps the true span [31].

For example, a study reported both exact-match and overlap-based scores, noting that exact-match F1 varied by entity and dataset, while lenient F1 was consistently high (often >0.9) when partial overlaps were allowed [32].
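The difference between exact and lenient matching can be illustrated with a short sketch. Definitions of lenient matching vary between studies; the variant below (same label, at least one shared token) is one common choice and is an assumption here, as are the helper name and the toy spans.

```python
def count_matches(gold, pred, lenient=False):
    """Count predicted spans matching a gold span of the same label.

    Spans are (start, end, label) tuples with end exclusive.
    """
    def matches(g, p):
        if g[2] != p[2]:
            return False
        if lenient:
            # Any token overlap between the two spans counts as a match.
            return max(g[0], p[0]) < min(g[1], p[1])
        return g[0] == p[0] and g[1] == p[1]

    return sum(any(matches(g, p) for g in gold) for p in pred)


# Toy spans: the predicted Lesion span covers only the first of two tokens.
gold = [(0, 2, "Lesion"), (2, 4, "Size")]
pred = [(0, 1, "Lesion"), (2, 4, "Size")]
exact_hits = count_matches(gold, pred)
lenient_hits = count_matches(gold, pred, lenient=True)
print(exact_hits, lenient_hits)
```

The truncated Lesion prediction is rejected under exact matching but accepted under the lenient rule, which is why lenient scores are never lower than exact-match scores.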

Globally, the model trained on mixed data significantly outperformed both single-domain models, whereas the latter two both suffered marked reductions in F1 when evaluated outside their training domain. Per-entity-type F1-scores showed that the mixed model was the most consistent across all entity types. Its errors on real data consisted primarily of minor misclassification between similar entities (e.g., Lesion vs. Morphology). The model trained on synthetic data, when applied to real text, produced a high rate of false positives. The model trained on real data, conversely, showed a tendency to under-predict on synthetic reports, missing true entities.

Adding synthetic sentences expanded the lexical and syntactic coverage of “site + polyp” expressions, enabling the mixed model to capture many anatomical locations that the real-only model failed to recognize, without increasing the incidence of erroneous localization annotations. An additional contributing factor to the lower metrics of ModelS (trained exclusively on synthetic data) relates to subtle but systematic linguistic differences between the synthetic and real reports. Although the synthetic texts were reviewed by domain experts and deemed viable, qualitative inspection revealed that the synthetic reports tend to employ more standardized, consistent phrasing and a reduced range of abbreviations. Their sentence structures are also generally simpler and less variable than those found in authentic clinical documentation, which shows greater linguistic diversity, more varied expressions, and occasional irregularities. We believe these factors limited ModelS’s ability to recognize entities in real-world text, where greater complexity is present.

Recent studies in other clinical domains have shown that NER models trained entirely or partly on synthetic notes can approach the accuracy of models trained on real text, suggesting a viable route to overcome privacy barriers and data scarcity issues [33].

A case study on clinical entity recognition investigated training NER models on synthetic clinical notes generated by GPT-2 versus real notes. An NER model trained on a purely synthetic set of 500 notes performed similarly or slightly better than a model trained on the same number of real notes in one evaluation scenario. The authors suggest that the synthetic data contained a higher density of entities, which increased model performance. Similarly to our current research, by using a mixed training set, the authors obtained the best results, outperforming single-source-trained models [34].

In line with our study, wherein we experimented with models trained on texts in an underrepresented language (Romanian), a 2025 paper focused on a low-resource language (Estonian), generating a fully synthetic clinical text set to train NER models for medical entities. With no real data used for training, the resulting NER model achieved an F1 of 0.69 for drug name recognition and an F1 of 0.38 for procedure names when tested on real clinical notes. This suggests that clinical NLP is practical in languages or institutions with no sharable data when synthetic data generation is used, and that privacy-preserving synthetic data can train usable clinical NER models [35].

A consistent finding across studies is that in-domain training data (from the same institution or context as the application data) yields the best model performance, whereas cross-domain or out-of-domain data can degrade accuracy. For example, a comparative NER study on clinical trial texts showed that, even when using the same model architecture, performance varied widely (over 20 percentage points in F1) when evaluated on different datasets from other medical institutions [36]. This highlights that models tend to overfit to site-specific text styles and terminology. In practice, NER models trained on reports from a single source often show decreases in precision and recall when applied to another set, due to differences in hospital documentation conventions. Incorporating diverse training data or domain adaptation techniques can mitigate this. Wherever possible, external validation on a dataset from a different source is recommended to assess generalizability.

Colonoscopy documentation varies between medical facilities, and these discrepancies are likely to diminish cross-site NER accuracy. First, the report layout ranges from free-text narratives to structured templates, and the presence of headings and checklist phrases affects sentence boundaries and punctuation, which can shift entity spans and lower recall [37]. Second, abbreviation conventions are inconsistent: the Boston Bowel Preparation Scale may appear as “BBPS 3-3-2”, “prep 332”, or “3/3/2”, and some hospitals adopt alternative scales such as OBPS, introducing unfamiliar tokens that reduce precision [38]. Third, terminological preferences differ: while many centers record polyp morphology with Paris or NICE classifications, others note only “adenom” or “polip”, and bowel-prep quality can be expressed with BBPS, OBPS or narrative adjectives [39]. Finally, regional style guides influence spelling and abbreviation (e.g., “colon ascendent” vs. “asc.”), adding to the lexical inconsistency. Multicenter evaluations of clinical NER consistently report 10–25-point F1 declines when models are transferred across institutions, underscoring the need for abbreviation expansion, vocabulary normalization, and domain fine-tuning before wider deployment [40].

This study used a relatively small dataset selected from a single clinical center in Romania, which may limit the generalizability of findings to broader or more diverse populations. Additionally, all annotations were performed by two experts; further validation with a larger group of annotators may improve reliability. Future studies should evaluate these models on larger and more heterogeneous datasets, including colonoscopy reports from multiple institutions and geographies, to test robustness and support wider clinical adoption. Because no inter-institutional data sharing agreement was in force during the study period, additional external validation could not be undertaken; future work will seek multicenter collaborations to assess generalizability across differing reporting conventions.

While our training set of 100 real reports is small, an important question is how the model scales with larger or differently composed datasets. Synthetic data generation offers a way to rapidly scale the training corpus, but the literature suggests there is a minimum real-data proportion needed to anchor the model’s performance. Even a small fraction of real examples can greatly enhance generalization. For example, Kamath & Vajjala (2025) demonstrate, across 11 low-resource languages, that a small amount of carefully annotated data yields better performance than a large amount of synthetic data [12]. Additionally, a 2024 study by Ashok et al. found that adding just 125 human-labeled data points to a synthetic-only dataset substantially improved model metrics [41]. Similarly, in a wearable-sensor classification task, using at least 20–25% real data was necessary to reach F1-scores comparable to an all-real training scenario. When the real portion dropped to only 10%, the model’s F1 fell to 73%, even after augmenting with plentiful synthetic examples [42]. In other words, performance declines as the real-data share decreases: synthetic data alone may not compensate for a very small amount of real training data. The scalability of our proposed method appears promising, with the caveat that completely eliminating real data is not advisable. Our experiment with a 50/50 mix (ModelM) suggests that if more real reports beyond 100 can be obtained, even in small proportions, they could further improve the model when combined with an expanded synthetic set. Conversely, if we were to scale up the synthetic data to a 1:5 or 1:10 real-to-synthetic ratio, we would expect diminishing but still positive returns. Our future work will empirically determine the “tipping point” for this task, for example by evaluating 1:5 vs. 1:10 real-to-synthetic training mixes.
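One way such ratio experiments could be set up is sketched below: all real documents are kept and synthetic documents are sampled at a chosen real-to-synthetic ratio. The function `build_mix`, its parameters, and the placeholder document identifiers are hypothetical; the text does not describe how the 1:1 mix for ModelM was assembled.

```python
import random


def build_mix(real_docs, synthetic_docs, synthetic_per_real=1, seed=0):
    """Keep all real documents and sample synthetic ones at the given ratio."""
    needed = len(real_docs) * synthetic_per_real
    if needed > len(synthetic_docs):
        raise ValueError("not enough synthetic documents for this ratio")
    rng = random.Random(seed)  # fixed seed keeps the sample reproducible
    mix = list(real_docs) + rng.sample(list(synthetic_docs), needed)
    rng.shuffle(mix)
    return mix


# Placeholder identifiers standing in for annotated reports.
real = [f"real_{i}" for i in range(10)]
synthetic = [f"syn_{i}" for i in range(100)]

mix_1_1 = build_mix(real, synthetic, synthetic_per_real=1)
mix_1_5 = build_mix(real, synthetic, synthetic_per_real=5)
print(len(mix_1_1), len(mix_1_5))
```

Holding the real subset fixed while varying only the synthetic share keeps the comparison between ratios focused on the effect of added synthetic text.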

5. Conclusions

The research demonstrates that training NER models exclusively on synthetic colonoscopy reports can facilitate high performance on synthetic data, but these models do not generalize well to real clinical reports. On the other hand, models trained only on real data maintain high accuracy on similar real texts but fail to recognize entities in synthetic or out-of-domain cases. This highlights a clear limitation of relying on a single data source for clinical information extraction.

The most effective strategy is to combine both real and synthetic data for model training. Our mixed-data model achieved high, balanced performance on both real and synthetic test sets, demonstrating robust generalizability and practical utility. This mixed approach preserves patient privacy and allows for scalable model development, especially where real annotated data is scarce. Extrapolating our findings, it seems AI tools are being developed to help doctors, not replace them.

Our study is among the first to validate such approaches for Romanian-language colonoscopy reports, addressing the lack of structured data resources and advancing NLP for underrepresented languages.

Integrating synthetic data with real-world annotations increases the accuracy and reliability of structured data extraction from unstructured colonoscopy reports. This methodology supports the development of practical, privacy-conscious NLP tools for automated clinical surveillance in colorectal cancer care. The resulting structured data can enable individualized surveillance planning, contributing to a shift toward more personalized management strategies in patient care.

Supplementary Materials

The following supporting information can be downloaded at https://www.mdpi.com/article/10.3390/jpm15080334/s1: prompt templates, annotation guidelines, training and inference times, and additional training data.

Author Contributions

Conceptualization, M.-Ș.M. and A.-C.I.; methodology, A.-M.F. and A.-C.I.; software, A.-C.I. and M.-Ș.M.; validation, M.-Ș.M.; formal analysis, M.-Ș.M.; data curation, A.-M.F. and A.-D.T.-M.; writing—original draft preparation, A.-C.I. and A.-M.F.; writing—review and editing, A.-D.T.-M.; supervision, M.-Ș.M. and D.-E.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

This study was conducted in accordance with the new Declaration of Helsinki. Ethical approval was waived by UMFST G.E. Palade Tg. Mureș and its Ethical Committee because this analysis did not include any personal information identifiers (code: 28/09112020).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The original data presented in the study are openly available in the public repository accessible at https://doi.org/10.5281/zenodo.16020981 (accessed on 17 July 2025). Prompt templates and annotation guidelines are duplicated in the Supplementary Materials. De-identified excerpts can be obtained from the corresponding author upon reasonable request and subject to an institutional data use agreement, in accordance with MDPI’s research data policy.

Conflicts of Interest

Author Alina-Dia Trâmbițaș-Miron was employed by the company John Snow Labs (Lewes, DE, USA). The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest. The company was not involved in the design and interpretation part of the study.

References

- Siegel, R.L.; Giaquinto, A.N.; Jemal, A. Cancer statistics, 2024. CA Cancer J. Clin. 2024, 74, 12–49.

- Kim, S.; Shin, J.K.; Park, Y.A.; Huh, J.W.; Kim, H.C.; Yun, S.H.; Lee, W.Y.; Cho, Y.B. Is Colonoscopy Alone Adequate for Surveillance in Stage I Colorectal Cancer? Cancer Res. Treat. 2024, 57, 507–518.

- Cheung, K.S. Big data approach in the field of gastric and colorectal cancer research. J. Gastroenterol. Hepatol. 2024, 39, 1027–1032.

- Doste, R.; Lozano, M.; Jimenez-Perez, G.; Mont, L.; Berruezo, A.; Penela, D.; Camara, O.; Sebastian, R. Training machine learning models with synthetic data improves the prediction of ventricular origin in outflow tract ventricular arrhythmias. Front. Physiol. 2022, 13, 909372.

- Gallos, P.; Matragkas, N.; Islam, S.U.; Epiphaniou, G.; Hansen, S.; Harrison, S.; van Dijk, B.; Haas, M.; Pappous, G.; Brouwer, S.; et al. INSAFEDARE Project: Innovative Applications of Assessment and Assurance of Data and Synthetic Data for Regulatory Decision Support. Stud. Health Technol. Inform. 2024, 316, 1193–1197.

- Torfi, A.; Fox, E.A.; Reddy, C.K. Differentially private synthetic medical data generation using convolutional GANs. Inf. Sci. 2022, 586, 485–500.

- Nicora, G.; Buonocore, T.M.; Parimbelli, E. A synthetic dataset of liver disorder patients. Data Brief 2023, 47, 108921.

- Rashidi, H.H.; Khan, I.H.; Dang, L.T.; Albahra, S.; Ratan, U.; Chadderwala, N.; To, W.; Srinivas, P.; Wajda, J.; Tran, N.K. Prediction of Tuberculosis Using an Automated Machine Learning Platform for Models Trained on Synthetic Data. J. Pathol. Inform. 2022, 13, 10.

- Seong, D.; Choi, Y.H.; Shin, S.Y.; Yi, B.K. Deep learning approach to detection of colonoscopic information from unstructured reports. BMC Med. Inform. Decis. Mak. 2023, 23, 28.

- Benson, R.; Winterton, C.; Winn, M.; Krick, B.; Liu, M.; Abu-El-Rub, N.; Conway, M.; Del Fiol, G.; Gawron, A.; Hardikar, S. Leveraging Natural Language Processing to Extract Features of Colorectal Polyps From Pathology Reports for Epidemiologic Study. JCO Clin. Cancer Inform. 2023, 7, e2200131.

- Platas, A.; Zotova, E.; Martínez-Arias, P.; López-Linares, K.; Cuadros, M. Synthetic annotated data for named entity recognition in computed tomography scan reports. In CEUR Workshop Proceedings, Proceedings of the 40th Annual Conference of the Spanish Association for Natural Language Processing 2024 (SEPLN-P 2024), Valladolid, Spain, 24–27 September 2024; Payo, V.C., Mancebo, D.E., Ferreras, C.G., Eds.; CEUR: Aachen, Germany, 2024; Volume 3846, pp. 69–78.

- Kamath, G.; Vajjala, S. Does synthetic data help named entity recognition for low-resource languages? arXiv 2025.

- Ioanovici, A.C.; Măruşteri, S.M.; Feier, A.M.; Trambitas-Miron, A.D. Spark NLP: A Versatile Solution for Structuring Data from Endoscopy Reports. Appl. Med. Inform. 2021, 43 (Suppl. S1), 26.

- John Snow Labs. Annotation Lab Documentation. Available online: https://nlp.johnsnowlabs.com/docs/en/alab/quickstart (accessed on 24 April 2025).

- McHugh, M.L. Interrater reliability: The kappa statistic. Biochem. Med. 2012, 22, 276–282.

- Tjong Kim Sang, E.F.; De Meulder, F. Introduction to the CoNLL-2003 Shared Task: Language-Independent Named Entity Recognition. Proc. Conf. Nat. Lang. Learn. HLT-NAACL 2003, 2003, 142–147.

- Kocaman, V.; Talby, D. Spark NLP: Natural language understanding at scale. Softw. Impacts 2021, 8, 100058.

- John Snow Labs. Spark NLP Documentation. Available online: https://sparknlp.org/docs/en/quickstart (accessed on 15 May 2025).

- Pennington, J.; Socher, R.; Manning, C.D. GloVe: Global vectors for word representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1532–1543.

- Lample, G.; Ballesteros, M.; Subramanian, S.; Kawakami, K.; Dyer, C. Neural Architectures for Named Entity Recognition. In Proceedings of the NAACL-HLT, San Diego, CA, USA, 12–17 June 2016; pp. 260–270.

- Huang, Z.; Xu, W.; Yu, K. Bidirectional LSTM-CRF Models for Sequence Tagging. arXiv 2015, arXiv:1508.01991.

- Kim, D.; Lee, J.; So, C.H.; Jeon, H.; Jeong, M.; Choi, Y.; et al. A neural named entity recognition and multi-type normalization tool for biomedical text mining. IEEE Access 2019, 7, 73729–73740.

- Jain, S.; Agrawal, A.; Saporta, A.; Truong, S.Q.H.; Duong, D.N.; Bui, T.; Chambon, P.; Zhang, Y.; Lungren, M.P.; Ng, A.Y.; et al. RadGraph: Extracting Clinical Entities and Relations from Radiology Reports. In Proceedings of the 35th Conference on Neural Information Processing Systems (NeurIPS 2021)—Datasets and Benchmarks Track, Online, 6–14 December 2021.

- Powers, D.M. Evaluation: From precision, recall and F-measure to ROC, informedness, markedness and correlation. arXiv 2020, arXiv:2010.16061.

- McNemar, Q. Note on the sampling error of the difference between correlated proportions or percentages. Psychometrika 1947, 12, 153–157.

- Dietterich, T.G. Approximate Statistical Tests for Comparing Supervised Classification Learning Algorithms. Neural Comput. 1998, 10, 1895–1923.

- Van Rossum, G.; Drake, F.L. Python 3 Reference Manual; CreateSpace: Scotts Valley, CA, USA, 2009.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- McKinney, W. pandas: A foundational Python library for data analysis and statistics. Python High Perform. Sci. Comput. 2011, 14, 1–9.

- Bose, P.; Srinivasan, S.; Sleeman, W.C., IV; Palta, J.; Kapoor, R.; Ghosh, P. A Survey on Recent Named Entity Recognition and Relationship Extraction Techniques on Clinical Texts. Appl. Sci. 2021, 11, 8319.

- Mahajan, D.; Liang, J.J.; Tsou, C.H.; Uzuner, Ö. Overview of the 2022 n2c2 shared task on contextualized medication event extraction in clinical notes. J. Biomed. Inform. 2023, 144, 104432.

- Ren, Y.; Caiani, E.G. Leveraging natural language processing to aggregate field safety notices of medical devices across the EU. npj Digit. Med. 2024, 7, 352.

- Hiebel, N.; Ferret, O.; Fort, K.; Névéol, A. Can synthetic text help clinical named entity recognition? a study of electronic health records in French. In Proceedings of the 17th Conference of the European Chapter of the Association for Computational Linguistics (EACL 2023), Dubrovnik, Croatia, 2–6 May 2023.

- Li, J.; Zhou, Y.; Jiang, X.; Natarajan, K.; Pakhomov, S.V.; Liu, H.; Xu, H. Are synthetic clinical notes useful for real natural language processing tasks: A case study on clinical entity recognition. J. Am. Med. Inform. Assoc. JAMIA 2021, 28, 2193–2201.

- Šuvalov, H.; Lepson, M.; Kukk, V.; Malk, M.; Ilves, N.; Kuulmets, H.A.; Kolde, R. Using Synthetic Health Care Data to Leverage Large Language Models for Named Entity Recognition: Development and Validation Study. J. Med. Internet Res. 2025, 27, e66279.

- Li, J.; Wei, Q.; Ghiasvand, O.; Chen, M.; Lobanov, V.; Weng, C.; Xu, H. A comparative study of pre-trained language models for named entity recognition in clinical trial eligibility criteria from multiple corpora. BMC Med. Inform. Decis. Mak. 2022, 22 (Suppl. S3), 235.

- Sharma, R.S.; Rossos, P.G. A Review on the Quality of Colonoscopy Reporting. Can. J. Gastroenterol. Hepatol. 2016, 2016, 9423142.

- Kastenberg, D.; Bertiger, G.; Brogadir, S. Bowel preparation quality scales for colonoscopy. World J. Gastroenterol. 2018, 24, 2833–2843.

- Johnson, G.G.R.J.; Helewa, R.; Moffatt, D.C.; Coneys, J.G.; Park, J.; Hyun, E. Colorectal polyp classification and management of complex polyps for surgeon endoscopists. Can. J. Surg. J. Can. De Chir. 2023, 66, E491–E498.

- Chuang, Y.S.; Lee, C.T.; Lin, G.H.; Brandon, R.; Jiang, X.; Walji, M.F.; Tokede, O. Cross-institutional dental electronic health record entity extraction via generative artificial intelligence and synthetic notes. JAMIA Open 2025, 8, ooaf061.

- Ashok, D.; May, J. A Little Human Data Goes A Long Way. arXiv 2024, arXiv:2410.13098.

- Tu, Y.C.; Lin, C.Y.; Liu, C.P.; Chan, C.T. Performance Analysis of Data Augmentation Approaches for Improving Wrist-Based Fall Detection System. Sensors 2025, 25, 2168.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).