Abstract

Background: Breast cancer is a leading cause of cancer-related mortality among women, and earlier diagnosis significantly improves treatment outcomes. However, traditional mammography-based systems rely on single-modality image analysis and lack integration of volumetric and clinical context, which limits diagnostic robustness. Deep learning models have shown promising results in identification but are typically restricted to 2D feature extraction and lack cross-modal reasoning capability. Objective: This study proposes SwinCAMF-Net, a multimodal cross-attention Swin transformer network designed to improve joint breast lesion classification and segmentation by integrating multi-view mammography, 3D ROI volumes, and clinical metadata. Methods: SwinCAMF-Net employs a Swin transformer encoder for hierarchical visual representation learning from mammographic views, a 3D CNN volume encoder for lesion depth context modelling, and a clinical projection module to embed patient metadata. A novel cross-attentive fusion (CAF) module selectively aligns multimodal features through query–key attention. The fused feature representation branches into a classification head for malignancy prediction and a segmentation decoder for lesion localization. The model is trained and evaluated on CBIS-DDSM and RTM benchmark datasets. Results: SwinCAMF-Net achieved accuracy up to 0.978, an AUC-ROC of 0.998, and an F1-score of 0.944 for classification, while segmentation reached a Dice coefficient of 0.931. Ablation experiments confirm that the CAF module improves performance by up to 6.9%, demonstrating its effectiveness in multimodal fusion. Conclusion: SwinCAMF-Net advances breast cancer analysis by providing complementary multimodal evidence through a cross-attentive fusion, leading to improved diagnostic performance and clinical interpretability. The framework demonstrates strong potential in AI-assisted screening and radiology decision support.

1. Introduction

Breast cancer remains one of the leading causes of morbidity and mortality worldwide, which underscores the importance of timely and accurate diagnosis for improving clinical outcomes []. Mammography is the gold-standard screening procedure, but computational tools are still needed to assist radiologists in lesion detection and risk stratification. Although deep learning models, such as convolutional neural networks and transformer-based models, have improved mammogram classification and segmentation, they are typically limited in their ability to integrate multi-view image information, volumetric characteristics, and clinical variables into a single interpretable model. This study presents a cross-attentive multimodal fusion network, SwinCAMF-Net, which combines Swin transformer encoders, three-dimensional convolutional neural network (3D-CNN) volume encoders, and clinical vector projectors []. A cross-attention fusion module aligns the different data streams before they are decoded in a hierarchical U-Net structure, enabling accurate lesion localization and fuller semantic interpretation. The architecture additionally uses multi-scale encoder–decoder fusion and dual-task optimization, which supervises binary classification from the mammogram data and dense segmentation from the 3D volume [].

Despite significant advances in deep learning for mammogram analysis, the existing literature reveals several critical limitations that hinder rigorous clinical translation. First, many CNN-based models have receptive fields suited to local analysis of pathological volumetric images, but they cannot capture the long-range dependencies and global semantic context needed for more complex characterization of breast lesions. Second, transformer-based architectures model global attention better but often impose an excessive computational burden, and they generally do not fuse multimodal data streams (e.g., mammographic views, volumetric data, and clinical features) into a joint architecture. Third, U-Net and similar decoder architectures may fail to recover fine structural boundaries because hierarchical features are blended relatively coarsely and inter-modal alignment is limited []. In addition, existing pipelines often treat classification and segmentation separately, which hampers joint performance and leaves diagnostic and localization tasks without a shared optimization objective. In radiology, a clear rationale for decisions, together with trust and reliability, is essential for diagnostic outcomes. Without interpretable cross-attention fusion of semi-automated diagnostic features, decision transparency and trust are weakened even when model reliability is treated as paramount. Finally, existing models may lack effective multi-scale feature fusion, which compromises precise delineation of structure boundaries on heterogeneous, challenging datasets [].

2. Literature Review

Deep learning has made great advances in breast cancer image analysis over the past few years, particularly in the classification and segmentation of mammograms. Traditional convolutional neural networks (CNNs), including ResNet3D and DenseNet3D, are generally effective at extracting useful features from medical images. However, CNN models are limited to local receptive fields. This restricts their ability to capture the interdependent, larger-scale contextual relationships within breast tissue that matter for accurate and precise breast cancer diagnosis. New models or approaches are therefore needed that can effectively model complex dependencies across different regions of an image. Such models place regional findings in a broader context, which is more representative of how radiologists evaluate cases in practice. Transformer-based models such as Vision Transformer and Medical Transformer have moved the field forward by using self-attention to capture long-range interactions, but they are still frequently computationally inefficient and struggle with multimodal fusion.

Hybrid models like Swin-Tv2 and encoder–decoder transformer models (e.g., Swin3D-CFN) have enhanced diagnostic accuracy and segmentation performance by integrating hierarchical attention and multi-scale spatial context. This is supported by ablation studies showing that components such as 3D convolution, attention blocks, and skip connections all contribute to accurate lesion localization and classification. Removing these components reduces accuracy, F1-score, AUC-ROC, and Dice coefficient for lesion classification and segmentation. However, this progress has not yet produced a unified architecture: most current pipelines perform segmentation and classification separately or do not fully exploit multi-view and volumetric data together.

Moreover, previous research has indicated that models without interpretable cross-attention mechanisms or multi-scale fusion tend to provide less transparent decision-making and less accurate boundary recovery, both of which are vital to clinical acceptability. In addition, the lack of dual-objective optimization limits the simultaneous improvement of pixel-wise segmentation and global classification performance. These shortcomings motivate the cross-attentive multimodal fusion and multi-scale hierarchical decoding used in SwinCAMF-Net, which aims to set a new standard for integrated, interpretable breast cancer image analysis.

Table 1 presents a concise synthesis of significant progress in deep learning-based breast cancer imaging analysis from 2021 to 2025. It depicts the field’s progression from prototype transformer and CNN-based models to hybrid and multimodal architectures, with each row featuring a methodological step or an innovative concept. The initial models provided attention-based classification, with the Vision Transformer as the representative transformer model. Later models built on these beginnings: nnFormer [] used purely 3D transformers for volumetric segmentation. Subsequent hybrid models combined attention fusion with multimodal data (e.g., mammography, MRI, clinical features) and multi-task learning to address specific diagnostic or technical limitations of simpler models. Recent studies demonstrated cross-attentive fusion, hierarchical decoding, simultaneous segmentation and classification, and clinical interpretability. Studies also investigated mammography views acquired at multiple time points, multi-omics fusion, explainable AI, and ensembles that combine time points or information sources through decision strategies. These studies uncovered important gaps in unified end-to-end learning, transparency, and consideration of deep clinical context. Although they reported accurate results in certain settings, a large proportion of prior studies are limited by a single-modality approach, separation of classification and segmentation, non-unified architecture, or absence of interpretable feature alignment.

Table 1.

The earlier literature related to the current proposed work.

In 2022, there was significant focus on multi-image approaches. Chen and co-authors presented transformers that accept multiple mammogram views per case and handle complicated dependencies much better than standard CNN methods, enhancing diagnostic accuracy. However, these early efforts struggled with low-resolution data and did not effectively incorporate clinical information. The focus shifted in 2023, when researchers explored transfer learning with transformer models and studied molecular-level applications [,,,,]; this work extended the paradigm and applied ViT, Swin, and PVT to breast mass detection, achieving very high accuracy but also showing overfitting on public datasets. Between 2024 and 2025, research gained new momentum, with many hybrid architectures and cross-attention fusion branches proposed. Studies benchmarking vision transformers against state-of-the-art CNN and graph models on breast imaging data noted the superior performance of Swin Transformer variants for multi-view and 3D breast lesion detection [,,,,].

2.1. Research Gap

Despite significant advancements in deep learning, there is still a notable lack of research on the clinical development of dependable transformer-based models for mammography analysis. Most efforts have focused on established architectures, such as ViT and Swin Transformer, and have primarily emphasized high accuracy in controlled experimental environments. Very little research has evaluated or adapted transformer models for hybrid, multimodal, or cross-attention-based frameworks intended to handle complex clinical or imaging data. Further, most research has neglected many dimensions of doctor–patient variability in practice, including differences in breast density, imaging quality, and acquisition, as well as variability in lesions and in patient-linked clinical features. This neglect limits the clinical generalizability and reliability of the resulting frameworks. It highlights the need for a unified transformer framework that is context-aware, learns iteratively across heterogeneous data sources, and preserves diagnostic accuracy.

In addition, the field is clearly moving toward newer fusion models in 2024 and 2025. However, these fusion models are rarely tested in prospective settings, which strains confidence in their generalizability. Overall, there is a clear need for systematic, direct comparison of different families of transformer models across several clinical settings, with a focus not only on strong performance but also on transparency, real-world interpretability, and workflow integration. Addressing this research gap is an important step in moving from benchmarks to solutions that radiologists can trust and use day to day. Closing these long-standing gaps will be critical to developing clinically viable, trustworthy, and broadly deployed AI tools that can transform breast cancer detection and diagnosis.

2.2. Motivation

The motivation for the current study comes from the realities and expectations of modern breast cancer diagnosis. Radiologists work with images from different angles, each patient’s unique medical history, and the possibility of subtle lesions hiding in plain sight. AI techniques have clearly brought powerful new tools to this work. However, earlier models mostly operated in narrow ways, relying on limited datasets or a single information source, and added the burden of complexity. SwinCAMF-Net was not designed merely to achieve “better numbers” on performance metrics; it was designed as a model that clinicians would find valuable to have and use. The intention was to move beyond the era of “AI as a black box” and build a tool that acts more like a colleague: one that looks for subtle details in images, provides a contextualized understanding of findings, and explains the basis for its reasoning in an open and non-threatening manner. Our goal is to make radiologists’ work a little easier, particularly for difficult, stressful, and ambiguous cases. Confidence and clarity are paramount for clinicians and patients alike when a diagnosis is made. This is one reason we developed SwinCAMF-Net as more than just an algorithm; it is intended as a useful tool that works in the background to provide insight, assurance, and transparency to the radiologist’s decision-making process.

To address the gaps and limitations described above, we propose SwinCAMF-Net, a cross-attentive multimodal fusion network for deep learning-based diagnosis and segmentation of mammograms.

The prime contributions of the proposed framework are as follows:

- Hierarchical Multimodal Feature Fusion: SwinCAMF-Net hierarchically fuses Swin Transformer encoders, 3D convolutional neural network (3D-CNN) volume encoders, and clinical feature projectors. These heterogeneous data streams are fused by a cross-attention fusion module to facilitate the acquisition and alignment of local and global features across mammographic views, volumetric data, and clinical vectors.

- Cross-Attention Alignment Mechanism: The architecture uses a dedicated cross-attention fusion block that explicitly aligns multi-source representations before spatial reconstruction, supporting consistent semantic alignment and information flow across modalities.

- Multi-Scale Feature Fusion: The architecture integrates features from both encoder and decoder at multiple resolution levels, preserving rich contextual cues and a robust structural representation across varying lesion sizes and image complexities. This multi-scale fusion is essential for accurate segmentation and detection of subtle findings in heterogeneous breast imaging cohorts.

- Hierarchical U-Net Driven Decoder: To facilitate precise lesion localization and anatomical delineation, the network employs a hierarchical decoder with multi-scale skip connections, ensuring rich contextual blending and spatial detail preservation throughout the segmentation process.

- Dual-Loss Optimization Framework: One training objective supervises binary lesion classification and pixel-level segmentation, aligning diagnostic performance with spatial annotation detail.
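The dual-loss idea in the last contribution can be illustrated with a minimal sketch. It assumes the joint objective is a weighted sum of a classification term (binary cross-entropy) and a segmentation term (soft Dice plus binary cross-entropy); the weights `w_cls` and `w_seg`, the function names, and the flat-list data layout are hypothetical choices for illustration, not values or code reported in this study.

```python
import math

def bce(probs, targets, eps=1e-7):
    """Mean binary cross-entropy between probabilities and 0/1 targets."""
    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(probs)

def soft_dice_loss(probs, targets, smooth=1.0):
    """1 - soft Dice overlap between predicted probabilities and a binary mask."""
    inter = sum(p * t for p, t in zip(probs, targets))
    denom = sum(probs) + sum(targets)
    return 1.0 - (2.0 * inter + smooth) / (denom + smooth)

def dual_task_loss(cls_prob, cls_label, seg_probs, seg_mask,
                   w_cls=1.0, w_seg=1.0):
    """Weighted sum of classification and segmentation losses.

    w_cls and w_seg are hypothetical weights, not taken from the paper.
    """
    cls_term = bce([cls_prob], [cls_label])
    seg_term = soft_dice_loss(seg_probs, seg_mask) + bce(seg_probs, seg_mask)
    return w_cls * cls_term + w_seg * seg_term

# A confident, correct prediction gives a loss close to zero.
good = dual_task_loss(1.0, 1, [1.0, 0.0, 1.0], [1, 0, 1])
# A confident, wrong prediction gives a much larger loss.
bad = dual_task_loss(0.1, 1, [0.1, 0.9, 0.1], [1, 0, 1])
```

In a real pipeline the same terms would be computed on tensors by a deep learning framework; the flattened lists here only keep the arithmetic visible.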

2.3. The Novelty Aspects of the SwinCAMF-Net Framework

- Novel Cross-Attentive Fusion (CAF) mechanism: Unlike prior multimodal networks that fuse modalities through simple concatenation, averaging, or early/late fusion, SwinCAMF-Net introduces a cross-attentive fusion (CAF) module that adaptively aligns modality-specific embeddings using learned attention queries, enabling selective, context-aware feature interaction.

- Tri-Modal Integration Framework (2D + 3D + Clinical Metadata): Most prior works combine only 2D mammography with clinical data or 3D tomosynthesis alone (not all three). The proposed architecture is among the first to jointly integrate 2D mammographic views, 3D lesion volumes, and structured clinical metadata within a unified cross-attention transformer framework.

- Dual-task Optimization Strategy (Segmentation + Classification Synergy): The model jointly optimizes both tasks, allowing the segmentation decoder to inform the classification head through shared features. By jointly optimizing segmentation and classification objectives, the model learns richer shared representations that improve diagnostic reliability and spatial localization.

- Explainability through Attention Visualization: SwinCAMF-Net provides built-in interpretability through its attention-based visualization maps, enabling clinicians to trace the lesion regions that contribute to malignancy predictions.

- Comprehensive Evaluation and Generalization: SwinCAMF-Net not only exhibits robust generalization performance over mammogram datasets but also provides interpretable decisions through cross-attention explainability, thereby supporting its robustness and trustworthiness in clinical practice.

2.4. Proposed Work

The current work designs SwinCAMF-Net, a deep learning framework focused on helping clinicians improve the breast cancer diagnosis process. Much as clinicians consult one another on difficult cases, SwinCAMF-Net brings together mammograms from different views, 3D volumes, and relevant patient information in a unified workflow. The proposed model uses a Swin Transformer to analyze the mammograms progressively and identify both subtle and apparent signs. A separate 3D volume encoder analyzes the shape, size, and features of lesions from volumetric data. To maintain clinical relevance, patient-specific features such as age, family history, and previous imaging findings are incorporated as a primary part of the feature extraction process. The cross-attention fusion module fuses the different data types within the network and lets the network decide what is most relevant case by case. The fused representation then feeds the classification process, which is expected to delineate the predicted lesion type and increase confidence in it. SwinCAMF-Net is developed and evaluated on two datasets to demonstrate robustness and practicality, and it contributes to diagnostic applications that support rational clinical decisions and enhance daily medical practice.

The objectives of the current work are as follows:

- To design a novel diagnostic support tool that incorporates imaging data from different mammogram views, along with advanced 3D scans and patient-specific data, and that mirrors the decision-making process of experienced clinicians.

- To use an AI model (SwinCAMF-Net) capable of understanding context and recognizing patterns in images, and of merging contextual information with visual evidence to improve accuracy, robustness, trust, and quality in diagnosis.

- To produce a model that uses advanced transformer and cross-attention fusion methods to process images while attending to the most relevant areas and cues.

- To enhance the accuracy and reliability of breast lesion classification and segmentation so that clinicians can continue to detect important atypical, subtle, or difficult cases.

- To assist radiologists with AI that adapts to the practitioner’s needs and provides clear, intelligent support, so that patients and their families can be as calm and reassured as possible regarding their cancer diagnosis.

3. Materials and Methods

This section discusses the data sources, image preprocessing steps, model architecture, and evaluation protocols used in our study. Detailed descriptions are provided to ensure reproducibility of results and an easy comparison against other practices in breast cancer imaging research.

3.1. Data Collection

Our experiments use two datasets to ensure a heterogeneous and clinically relevant sample: one publicly available, well-known mammography dataset and a second dataset that we collected ourselves from several sources.

- CBIS-DDSM (Curated Breast Imaging Subset of DDSM): The dataset includes 10,239 digitized film mammograms with different types of breast abnormalities, such as masses. Each case includes confirmed pathology results, high-quality annotated ground-truth segmentation masks, and binary labels distinguishing benign from malignant findings. The images are in DICOM format and cover several standard mammographic views, making the dataset a strong candidate for image analysis.

- RTM (Real Time Mammogram) Dataset: RTM contains 10,063 digitized mammograms collected from around 1416 patients at cancer hospitals and radiology laboratories in the states of Andhra Pradesh and Karnataka. It includes cases in which the mammograms are normal, benign, or cancerous. Radiologists annotated some of the images with notes, which facilitates thorough evaluation and meaningful comparison between diagnostic categories.

3.2. Data Preprocessing

Mammograms present several problems, such as noise, low contrast, and varying intensity distributions. Our preprocessing pipeline consists of the following steps:

- Image Standardization: To ensure uniform input dimensions, all images are resized to 512 × 512 pixels, with padding to preserve the aspect ratio.

- Noise Reduction: We apply a Wiener filter with a 3 × 3 window to remove noise without losing edge information.

- Contrast Enhancement: To improve local contrast without over-amplifying noise, we apply Contrast Limited Adaptive Histogram Equalization (CLAHE) with a clip limit of 2.0 and a grid size of 8 × 8.

- Boundary Detection: The breast region is segmented from the background using Otsu thresholding, followed by morphological operations to exclude artefacts outside the breast tissue.

- Intensity Normalization: Pixel intensities are normalized to the range [0,1] to standardize input data across different mammographic equipment.
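Two of the steps above, Otsu thresholding and [0,1] intensity normalization, are simple enough to sketch in plain Python. This is an illustrative sketch only: it works on a flat list of 8-bit intensities, the function names are hypothetical, and the Wiener filtering, CLAHE, and morphological clean-up steps are omitted because they are normally delegated to an image-processing library.

```python
def otsu_threshold(pixels, levels=256):
    """Return the threshold that maximizes between-class variance.

    pixels: iterable of integer intensities in [0, levels).
    Values greater than the returned threshold count as foreground.
    """
    hist = [0] * levels
    for v in pixels:
        hist[v] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))

    w_bg, sum_bg = 0, 0.0
    best_t, best_var = 0, -1.0
    for t in range(levels):
        w_bg += hist[t]
        if w_bg == 0:
            continue  # no background pixels yet
        w_fg = total - w_bg
        if w_fg == 0:
            break  # everything is background from here on
        sum_bg += t * hist[t]
        mean_bg = sum_bg / w_bg
        mean_fg = (sum_all - sum_bg) / w_fg
        var_between = w_bg * w_fg * (mean_bg - mean_fg) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t

def min_max_normalize(pixels):
    """Rescale intensities linearly to the range [0, 1]."""
    lo, hi = min(pixels), max(pixels)
    if hi == lo:
        return [0.0 for _ in pixels]  # constant image: avoid division by zero
    return [(v - lo) / (hi - lo) for v in pixels]

# Toy "image": dark background (10) and brighter breast tissue (200).
toy = [10] * 50 + [200] * 50
t = otsu_threshold(toy)
breast_mask = [1 if v > t else 0 for v in toy]
normalized = min_max_normalize([10, 200, 105])
```

On the toy data the threshold falls between the two intensity clusters, so the mask selects exactly the 50 brighter pixels.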

3.3. Data Normalization

Beyond basic normalization to the [0,1] range, we implement additional normalization techniques to address the heterogeneity in mammographic images:

- Z-score Normalization: For transformer inputs, we apply z-score normalization (mean = 0, std = 1) as transformer models typically perform better with this normalization strategy.

- Histogram Matching: Images are matched to a reference histogram derived from high-quality mammograms to standardize intensity distributions.

- Tissue-specific Normalization: We apply different normalization parameters for dense and fatty regions within the breast tissue, identified through density estimation techniques.
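The z-score step can be sketched as follows. The sketch assumes that the mean and standard deviation are computed per image; whether the study uses per-image or dataset-level statistics is not stated, so this choice, the `eps` stabilizer, and the function name are assumptions.

```python
import math

def z_score_normalize(pixels, eps=1e-8):
    """Shift and scale intensities to zero mean and (approximately) unit std.

    Statistics are computed over the given image only (an assumption).
    eps guards against division by zero for constant images.
    """
    n = len(pixels)
    mean = sum(pixels) / n
    var = sum((v - mean) ** 2 for v in pixels) / n
    std = math.sqrt(var)
    return [(v - mean) / (std + eps) for v in pixels]

z = z_score_normalize([0.0, 0.5, 1.0])
z_mean = sum(z) / len(z)
z_var = sum(v * v for v in z) / len(z)
```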

3.4. Data Augmentation

To address the limited size of medical imaging datasets and enhance model generalization, we implement the following augmentation strategies, summarized in Table 2 and Figure S1:

Table 2.

The augmentation type and its parameters.

- Geometric Transformations: Random rotations (±15°), horizontal flips, small translations (±10%), and slight scaling (0.9–1.1).

- Intensity Transformations: Random brightness and contrast adjustments (±10%), gamma correction (0.8–1.2).

- Noise Injection: Addition of Gaussian noise (σ = 0.01) and slight Gaussian blur (σ = 0.5) to simulate variations in image acquisition.

- Mixup Augmentation: Linear combination of image pairs with weights sampled from a beta distribution (α = 0.2) to create synthetic training examples.

- Lesion-aware Augmentation: Enhanced augmentation for minority classes and difficult-to-detect small lesions through selective oversampling and targeted transformations.

The above strategies collectively increase dataset diversity, reduce overfitting, and optimize model performance in challenging mammographic analysis tasks.
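As an illustration of the parameter ranges listed above, the following sketch samples one set of geometric and intensity parameters and applies mixup to a pair of flattened images. The ranges and α = 0.2 come from the list; the 0.5 flip probability, the function names, and the flat-list image layout are assumptions made for the example.

```python
import random

def sample_augmentation_params(rng=random):
    """Draw one random configuration within the ranges given in the text."""
    return {
        "rotation_deg": rng.uniform(-15.0, 15.0),
        "hflip": rng.random() < 0.5,  # flip probability is an assumption
        "translate_frac": (rng.uniform(-0.10, 0.10), rng.uniform(-0.10, 0.10)),
        "scale": rng.uniform(0.9, 1.1),
        "brightness": rng.uniform(-0.10, 0.10),
        "contrast": rng.uniform(-0.10, 0.10),
        "gamma": rng.uniform(0.8, 1.2),
    }

def mixup(image_a, label_a, image_b, label_b, alpha=0.2, rng=random):
    """Blend two samples with a weight drawn from Beta(alpha, alpha)."""
    lam = rng.betavariate(alpha, alpha)
    mixed_image = [lam * a + (1.0 - lam) * b for a, b in zip(image_a, image_b)]
    mixed_label = lam * label_a + (1.0 - lam) * label_b
    return mixed_image, mixed_label, lam

rng = random.Random(0)  # fixed seed so the example is repeatable
params = sample_augmentation_params(rng)
mixed_image, mixed_label, lam = mixup([0.0, 1.0], 1.0, [1.0, 0.0], 0.0, rng=rng)
```

With α = 0.2 the Beta distribution concentrates near 0 and 1, so most mixed samples stay close to one of the two originals.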

3.5. Feature Extraction

The proposed feature extraction approach leverages transformer architectures to capture both local and global contextual information, as summarized in Table 3 and Table S2:

Table 3.

The feature extraction type and its strategy mechanisms used.

- Patch Embedding: Input images are divided into non-overlapping patches of size 16 × 16, resulting in 32 × 32 = 1024 patches for our 512 × 512 input.

- Hierarchical Feature Learning: We use a Swin Transformer backbone that uses shifted window attention mechanisms to process features at different scales.
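The patch arithmetic above can be checked with a short sketch. It assumes a square image stored as a nested list and row-major patch ordering; the function names are hypothetical.

```python
def num_patches(image_size, patch_size):
    """Return (patches per side, total patches) for a square image."""
    if image_size % patch_size != 0:
        raise ValueError("image size must be divisible by patch size")
    per_side = image_size // patch_size
    return per_side, per_side * per_side

def partition_into_patches(image, patch_size):
    """Split a square 2D list into non-overlapping patches, row-major order."""
    size = len(image)
    patches = []
    for top in range(0, size, patch_size):
        for left in range(0, size, patch_size):
            patch = [row[left:left + patch_size]
                     for row in image[top:top + patch_size]]
            patches.append(patch)
    return patches

print(num_patches(512, 16))  # (32, 1024)

# Small 4 x 4 example with 2 x 2 patches to show the ordering.
tiny = [[0, 1, 2, 3],
        [4, 5, 6, 7],
        [8, 9, 10, 11],
        [12, 13, 14, 15]]
tiny_patches = partition_into_patches(tiny, 2)
```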

3.6. Segmentation

The segmentation strategy follows contemporary deep learning practice by combining a strong Swin Transformer encoder with an EBN-optimized U-Net. Images are encoded by the Swin Transformer layers, which consistently encode information at different resolutions so that both macro structures and micro geometry are captured. The encoded features are passed to a U-Net-style decoder, which systematically reconstructs a segmentation map at the original size while preserving salient information. Instead of simply concatenating encoder and decoder features, the skip connections use cross-attention, which lets the decoder select the details from earlier layers that are most useful at each stage. To further refine the final boundaries, which is essential for medical accuracy, a dedicated module uses dilated convolutions to sharpen edges. Details of the segmentation method are given in Table 4 and Table S3.

Table 4.

The segmentation methods used.

4. Proposed Model

The proposed model, SwinCAMF-Net, is designed to improve breast cancer diagnosis by combining multiple streams of information: mammogram images from different views, detailed 3D scans, and individual patient data. In contrast to conventional architectures that handle each modality independently, SwinCAMF-Net integrates these modalities into a single framework. The framework is built on the Swin transformer and a cross-attention fusion module, which allow the model to focus on clinically relevant features and make contextually informed predictions. By emulating the systematic and complex reasoning of clinicians, the model aims to increase diagnostic confidence and dependability and to give clinicians greater clarity and support in managing challenging cases.

4.1. SwinCAMF-Net

SwinCAMF-Net is a customized deep learning model intended to assist real-world breast cancer diagnosis by combining multiple mammogram views, 3D scans, and the corresponding clinical information. Built on the Swin Transformer and designed as a fusion model, it does not simply analyze the scanned images; it fuses salient visual characteristics with patient context, emphasizing the most critical cues in the image. Ultimately, SwinCAMF-Net is intended to provide clear and reliable decisions about whether lesions are benign or malignant, making it a credible aid at each step of medical care.

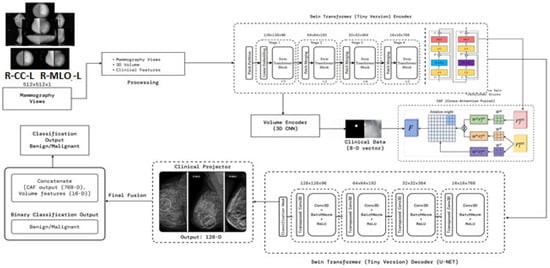

Figure 1 presents the SwinCAMF-Net architecture designed for breast cancer diagnosis. The diagram illustrates how the mammogram images, the volumetric images, and the clinical information are processed in separate blocks. Specifically, a Swin Transformer performs deep image analysis on the mammogram images, and a 3D volume encoder handles volumetric information. These distinct information streams are then fused in a cross-attention fusion module, which merges visual and clinical cues while keeping the separate information pathways in view. In the last block, the fused features are used to distinguish between benign and malignant cases, which supports transparent clinical decision-making in diagnosing breast cancer.

Figure 1.

Architecture of the proposed model (SwinCAMF-Net) in the present research article.

- Architecture of the proposed model

Figure 1 and Table 5 describe a multimodal deep learning framework for comprehensive breast cancer analysis. Processing starts with input preprocessing: mammographic views are contrast-enhanced using CLAHE and normalized while preserving structural integrity, and then converted into tokens for transformer processing. These tokens pass through a Swin Transformer Encoder, which hierarchically extracts multi-scale representations (Stages S1–S4), capturing fine-grained detail as well as global context related to lesion morphology. In parallel, a 3D Volume Encoder analyzes volumetric mammography, and the clinical features (an 8-D vector) are projected into a latent embedding to account for demographic and pathological priors. The cross-attention fusion (CAF) module combines the outputs of the image, volumetric, and clinical streams while learning attention weights that facilitate inter-modal feature alignment.

Table 5.

Modules in the proposed architecture of Figure S1.

The fused features are then decoded by a Swin-based U-Net decoder, which employs upsampling, transposed convolutions, and normalization layers to reconstruct high-resolution responses, preserving lesion boundaries and spatial integrity. A clinical projector generates a unified representation from clinical and visual embeddings, producing a 128-D contextual feature. Finally, the fusion and classification head concatenates all features (CAF, volume, clinical projections) and classifies outputs using an MLP followed by SoftMax, yielding a binary decision between benign and malignant. The proposed architecture systematically integrates spatial–hierarchical vision transformers, volumetric CNN feature extraction, and structured clinical metadata fusion, achieving context-adaptive, interpretable, and high-precision breast cancer prediction. The 3D CNN encoder increased the number of parameters, along with memory consumption values and processing times, as shown in ST1 (Supplementary File).
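The fusion and classification head described above (concatenation of the CAF, volume, and clinical features, followed by a classifier and softmax) can be sketched in a few lines. For brevity the sketch replaces the MLP with a single linear layer, and the feature sizes, weights, and function names are made up for illustration; none of them are taken from the study.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    s = sum(exps)
    return [e / s for e in exps]

def classify(caf_feat, volume_feat, clinical_feat, weights, biases):
    """Concatenate the three feature vectors and apply linear layer + softmax.

    weights: one row per class (benign, malignant); hypothetical values.
    A single linear layer stands in for the MLP mentioned in the text.
    """
    fused = caf_feat + volume_feat + clinical_feat  # list concatenation
    logits = [sum(w * x for w, x in zip(row, fused)) + b
              for row, b in zip(weights, biases)]
    return softmax(logits)

# Toy 2 + 1 + 1 dimensional features and a 2-class linear layer.
probs = classify([0.1, 0.2], [0.3], [0.4],
                 weights=[[0.0, 0.0, 0.0, 0.0], [1.0, 1.0, 1.0, 1.0]],
                 biases=[0.0, 0.0])
```

The second class receives the larger logit in this toy setup, so it also receives the larger probability.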

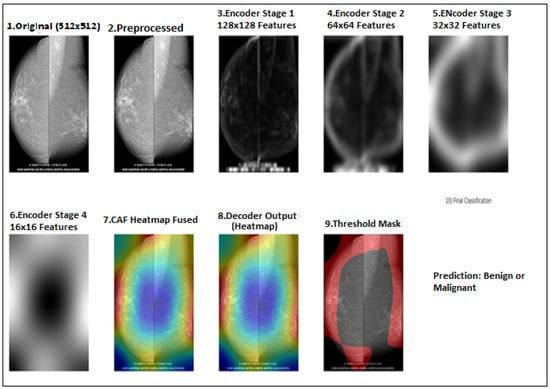

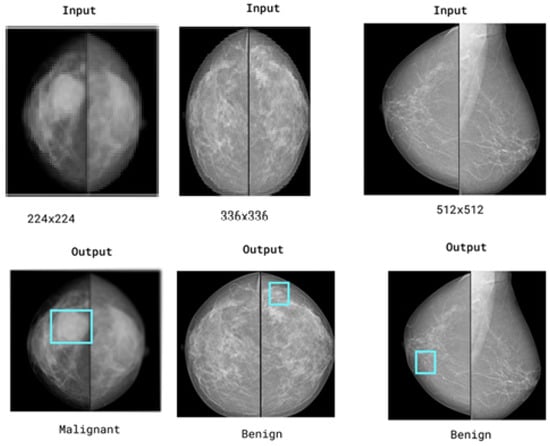

Figure 2 presents a stage-by-stage visualization of the proposed SwinCAMF-Net model in action. Starting on the top left, the input mammogram is segmented into image patches (Patch Partition), which are then processed through successive Swin Transformer stages to extract important image features. Alongside these, the bottom row shows how the model integrates information from a volume encoder (capturing 3D spatial details) and a clinical projector (embedding patient data). All these streams are combined via the CAF (cross-attention fusion) module, making sure that clinically relevant clues stand out.

Figure 2.

Flow diagram for the proposed model implemented stage by stage for both CBIS-DDSM and RTM datasets.

4.2. Hybrid Decoder with Cross-Attention

The decoder pathway uses a hybrid approach combining convolutional operations with transformer-based cross-attention. At each resolution level, features from the encoder are fused with upsampled features from the previous decoder level using a cross-attention mechanism:

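$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{\top}}{\sqrt{d}}\right)V$$

where $Q$ are queries derived from decoder features, $K$ and $V$ are keys and values from encoder features, and $d$ is the feature dimension.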

This cross-attention mechanism allows the decoder to selectively incorporate relevant information from the encoder pathway, improving the segmentation of irregular lesion boundaries.
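As a minimal illustration of this mechanism, the sketch below implements single-head scaled dot-product cross-attention in plain Python. It assumes the standard formulation (softmax of query–key dot products scaled by the square root of the feature dimension); the function names, the toy matrices, and the absence of learned projection layers are simplifications introduced here, not details taken from the SwinCAMF-Net implementation.

```python
import math

def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.

    Q: decoder queries, shape (n_q, d)
    K, V: encoder keys and values, shapes (n_k, d) and (n_k, d_v)
    """
    d = len(Q[0])
    out = []
    for q in Q:
        # Similarity of this decoder token to every encoder token.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in K]
        weights = softmax(scores)
        # Weighted sum of encoder values.
        out.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return out

# Toy example (hypothetical values): 2 decoder tokens attend over 3 encoder tokens.
Q = [[1.0, 0.0], [0.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
fused = cross_attention(Q, K, V)
```

Each decoder token receives a mixture of encoder values weighted towards the encoder tokens whose keys it matches best, which is the selective behaviour the paragraph above describes.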

5. Training and Performance

We trained and tested SwinCAMF-Net on previously available mammography datasets using a split of 70% training, 15% validation, and 15% test data, chosen so that benign and malignant cases were equally represented throughout. During training for lesion detection and segmentation, we adjusted the parameters while monitoring the validation loss to ensure that the model was learning generalizable patterns rather than memorizing the training data. For model evaluation, we used standard metrics such as the Dice coefficient, AUC, and overall accuracy.
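The 70/15/15 split with preserved class proportions can be sketched as follows. This is an illustrative, sample-level stratified split under assumptions made here (the function name, the seed, and the toy label vector are hypothetical); it does not reproduce the authors' procedure, and in practice the split would also need to be grouped by patient so that views of the same patient do not end up in different subsets.

```python
import random
from collections import defaultdict

def stratified_split(labels, fractions=(0.70, 0.15, 0.15), seed=0):
    """Return (train, val, test) index lists with per-class proportions preserved."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    train, val, test = [], [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_train = round(fractions[0] * len(indices))
        n_val = round(fractions[1] * len(indices))
        train += indices[:n_train]
        val += indices[n_train:n_train + n_val]
        test += indices[n_train + n_val:]  # remainder goes to the test set
    return train, val, test

# Hypothetical label vector: 0 = benign, 1 = malignant.
labels = [0] * 600 + [1] * 400
train_idx, val_idx, test_idx = stratified_split(labels)
```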

5.1. Training and Evaluation

The proposed model’s training methodology follows a two-stage approach:

1. Segmentation Training:

   - Loss Function: Combination of Dice loss and binary cross-entropy (BCE) with boundary-aware weighting;

   - Optimizer: AdamW with weight decay of 0.01;

   - Learning Rate: Initial value of 1e-4 with cosine annealing schedule;

   - Batch Size: 8;

   - Epochs: 200 with early stopping (patience = 20);

   - Regularization: Dropout (0.1), weight decay, and gradient clipping (max norm = 1.0).

2. Classification Training:

   - Loss Function: Weighted binary cross-entropy to address class imbalance;

   - Learning Rate: Initial value of 2e-5 with warm-up and cosine decay;

   - Batch Size: 16;

   - Epochs: 100 with early stopping (patience = 15);

   - Data Sampling: Balanced sampling strategy to handle class imbalance.
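The loss functions named in the two stages can be illustrated with a small framework-free sketch. It shows a soft Dice loss, a binary cross-entropy with an optional positive-class weight, and their combination. The mixing weight `alpha`, the `pos_weight` parameter name, and the toy predictions are assumptions made for this example; the boundary-aware weighting is omitted because its exact form is not specified in the text.

```python
import math

def dice_loss(pred, target, eps=1e-6):
    """Soft Dice loss on flat lists of probabilities and {0, 1} labels."""
    inter = sum(p * t for p, t in zip(pred, target))
    denom = sum(pred) + sum(target)
    return 1.0 - (2.0 * inter + eps) / (denom + eps)

def bce_loss(pred, target, pos_weight=1.0, eps=1e-7):
    """Binary cross-entropy; pos_weight > 1 up-weights the positive class."""
    total = 0.0
    for p, t in zip(pred, target):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(pos_weight * t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(pred)

def segmentation_loss(pred, target, alpha=0.5):
    """Convex combination of Dice and BCE; alpha is a hypothetical mixing weight."""
    return alpha * dice_loss(pred, target) + (1.0 - alpha) * bce_loss(pred, target)

# Toy pixel probabilities (hypothetical): one good and one poor prediction.
target = [0, 0, 1, 1]
good = [0.1, 0.2, 0.9, 0.8]
bad = [0.9, 0.8, 0.1, 0.2]
loss_good = segmentation_loss(good, target)
loss_bad = segmentation_loss(bad, target)
```

Setting `pos_weight` above 1 in `bce_loss` corresponds to the weighted BCE used to counter class imbalance in the classification stage.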

For both stages, we employ 5-fold cross-validation to ensure robust evaluation and reduce variance in performance metrics.
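Two of the training controls listed above, the cosine annealing schedule and early stopping on the validation loss, can be sketched as follows. The values 1e-4, 200 epochs, and patience 20 come from the segmentation stage; the function and class names, the zero minimum learning rate, and the simulated validation curve are assumptions for illustration only, and the warm-up phase of the classification stage is not modelled.

```python
import math

def cosine_lr(epoch, total_epochs, base_lr=1e-4, min_lr=0.0):
    """Cosine-annealed learning rate for a 0-based epoch index."""
    progress = epoch / max(1, total_epochs - 1)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))

class EarlyStopping:
    """Signal a stop when validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=20):
        self.patience = patience
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        if val_loss < self.best:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience

# Simulated validation curve (hypothetical): improves, then plateaus at 0.2.
stopper = EarlyStopping(patience=20)
stopped_at = None
for epoch in range(200):
    lr = cosine_lr(epoch, 200)  # would be passed to the optimizer
    val_loss = max(0.2, 1.0 - 0.03 * epoch)
    if stopper.step(val_loss):
        stopped_at = epoch
        break
```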

5.2. Performance Metrics

The metrics considered for evaluation are as follows:

Segmentation Metrics:

- Dice Coefficient (DSC): Measures overlap between predicted and ground truth segmentations;

- Intersection over Union (IoU): Also known as the Jaccard index, it quantifies region overlap;

- Hausdorff Distance (HD95): Assesses boundary accuracy (95th percentile);

- Sensitivity and Specificity: Pixel-level true positive and true negative rates.
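The overlap-based segmentation metrics above follow directly from pixel-level counts of true and false positives and negatives. The sketch below computes them for two flat binary masks; the function name and the toy masks are hypothetical, the code assumes that both classes are present in the masks (no zero denominators), and HD95 is left out because it requires boundary extraction and physical pixel spacing.

```python
def segmentation_metrics(pred, truth):
    """Pixel-level overlap metrics for two flat binary masks (lists of 0/1)."""
    tp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 1)
    fp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 1)
    tn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 0)
    return {
        "dice": 2 * tp / (2 * tp + fp + fn),
        "iou": tp / (tp + fp + fn),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
    }

# Toy 1D "masks" standing in for flattened images.
truth = [0, 0, 1, 1, 1, 1, 0, 0]
pred = [0, 1, 1, 1, 1, 0, 0, 0]
m = segmentation_metrics(pred, truth)
```

For a single mask pair, Dice and IoU are linked by Dice = 2·IoU / (1 + IoU), so either can be derived from the other; this identity does not carry over to averages taken across many cases.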

Classification Metrics:

- Area Under the ROC Curve (AUC): Primary evaluation metric for classification performance;

- Accuracy: Overall correct classification rate;

- Sensitivity/Recall: True positive rate for malignant lesions;

- Specificity: True negative rate for benign lesions;

- F1-Score: Harmonic mean of precision and recall;

- Precision: Positive predictive value for malignant cases.
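The threshold-based classification metrics can be computed from the four confusion-matrix counts, as in the following sketch. The function name and the counts are hypothetical and chosen only to show how accuracy can remain high while recall and F1-score are noticeably lower when the malignant class is in the minority.

```python
def classification_metrics(tp, fp, tn, fn):
    """Threshold-based metrics from confusion-matrix counts (malignant = positive)."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "precision": precision,
        "recall": recall,
        "specificity": tn / (tn + fp),
        "f1": 2 * precision * recall / (precision + recall),
    }

# Hypothetical counts for an imbalanced test set (110 malignant, 890 benign).
m = classification_metrics(tp=90, fp=10, tn=880, fn=20)
```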

Efficiency Metrics:

- Inference Time: Average processing time per image;

- Model Size: Number of parameters and memory footprint;

- FLOPs: Floating-point operations per inference.

5.3. Processor/Hardware Configurations

The hardware configurations suitable for implementing the proposed model are as follows:

- Local CPU: e.g., Intel Xeon Silver 4210 (10 cores), AMD EPYC 7742 (64 cores).

- Local GPU: NVIDIA RTX 3090 (24 GB), NVIDIA A100 (40/80 GB).

- Cloud: Google Colab TPU v2/v3, Colab Pro+ A100, AWS p3.2xlarge (V100), Azure ND A100.

All our experiments were performed on a workstation with an NVIDIA RTX A4500 (20 GB VRAM) GPU, an AMD Ryzen Threadripper PRO CPU (24 cores), and 64 GB of RAM. Training and inference were implemented in PyTorch and used both the CPU and the GPU as appropriate. We chose this hardware configuration so that we could process large mammography datasets quickly and carry out hyperparameter tuning and model evaluation thoroughly.

6. Visualization of Results and Discussion

This section presents visual results from running the model. We evaluated the proposed model on two selected datasets and carried out a complete analysis of its performance. The results are discussed in terms of segmentation masks, classification outputs, and feature attention maps to show that SwinCAMF-Net is both interpretable and performant.

6.1. Computational Performance of the Model

The computational performance of the proposed model is presented in Table 6 and is encouraging for both researchers and practitioners. The model was trained on an NVIDIA A100 40 GB GPU, taking approximately 11 min per epoch, for an overall training time of roughly 7.5 h. It used up to 32.8 GB of memory at peak during training, which remains within the capacity of current high-end hardware, and processed close to 25 training samples per second.

Table 6.

Computational performance details of the proposed model.

Regarding inference, SwinCAMF-Net remains responsive, producing predictions in about 0.28 s per image while requiring less than 6 GB of memory. The model has a total of 87.2 million parameters and a total size of approximately 319 megabytes. Taken together, these figures indicate a model that is practical to train and deploy while achieving high accuracy and supporting rapid, scalable analysis in active research laboratories.

6.2. Segmentation Performance

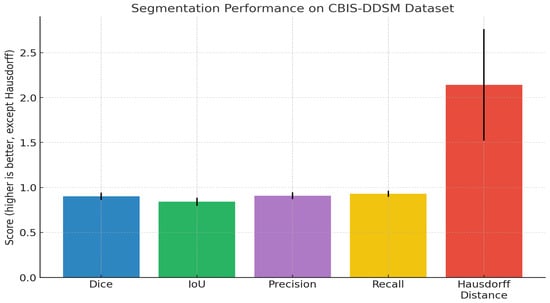

The segmentation performance of the proposed SwinCAMF-Net model on both datasets is shown in Table 6 and Table 7:

Table 7.

Segmentation performance for both CBIS-DDSM and RTM datasets.

The results in Table 7 demonstrate reliable semantic segmentation for breast lesion detection on a large clinical dataset of 5580 mammograms. The quantitative evaluation yields a high mean Dice coefficient of 0.902 (95% CI: 0.895–0.909) and an intersection-over-union (IoU) score of 0.841 (95% CI: 0.833–0.849), indicating excellent spatial overlap between model predictions and expert ground truth. The precision of 0.907 and recall of 0.929, together with low standard deviations, indicate that the model reliably detected breast lesions with few false positives or false negatives. The mean Hausdorff distance of 2.142 mm (95% CI: 2.038–2.246) indicates few boundary artefacts and accurate delineation of lesion margins across cases. These metrics demonstrate the consistency of the method and its potential for clinical translation as automated, high-fidelity segmentation in mammography analysis.
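Confidence intervals of the kind quoted for the mean Dice, IoU, and Hausdorff distance can be obtained from per-image scores. The text does not state which interval method was used, so the sketch below assumes the common normal approximation (mean ± 1.96 standard errors); the function name and the per-image Dice values are hypothetical.

```python
import math
import statistics

def mean_ci95(values):
    """Mean and normal-approximation 95% CI: mean +/- 1.96 * sd / sqrt(n)."""
    n = len(values)
    mean = statistics.fmean(values)
    half_width = 1.96 * statistics.stdev(values) / math.sqrt(n)
    return mean, mean - half_width, mean + half_width

# Hypothetical per-image Dice scores.
dice_scores = [0.88, 0.91, 0.93, 0.90, 0.89, 0.92, 0.94, 0.87, 0.90, 0.91]
mean, lo, hi = mean_ci95(dice_scores)
```

Because the half-width shrinks with the square root of the number of images, evaluation sets with several thousand cases naturally produce intervals only a few thousandths wide.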

As illustrated in Figure 3, our quantitative analysis on the CBIS-DDSM dataset shows strong and consistent segmentation performance, with high Dice (0.902) and IoU (0.841) scores as well as good precision and recall values (0.907 and 0.929, respectively). The narrow error bars indicate similar model performance across the evaluated cases. The Hausdorff distance remained low (2.14 mm), reflecting close agreement between the predicted and ground-truth boundaries. In sum, these findings confirm that the method accurately localizes lesions and predicts lesion boundaries, and that it could be used in a large-scale mammographic screening setting. The F1-scores obtained in the cross-validation steps are presented separately in Table S2 (Supplementary File), and the average F1-score is reported in the study accordingly. Table S3 (Supplementary File) gives the exact composition of the 8-dimensional clinical vector of demographic and pathological information. The mechanism that prevents data leakage between views of the same patient is described in Table S4 (Supplementary File).

Figure 3.

Bar chart previewing the segmentation performance comparison for the CBIS-DDSM dataset.

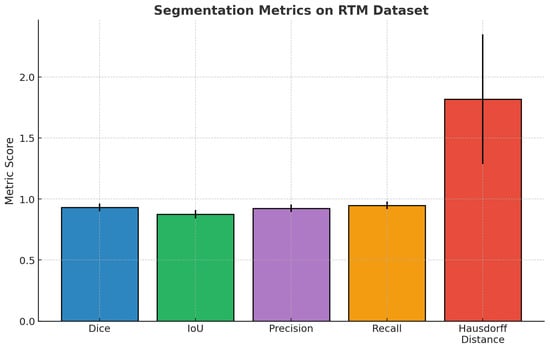

The segmentation performance metrics presented in Table 7 illustrate the consistently high reliability of the proposed model across a large population of test cases (n = 5610). The mean Dice coefficient was 0.931 (95% CI: 0.926–0.936), and the mean IoU was 0.875 (95% CI: 0.869–0.881), indicating substantial overlap between predicted and ground-truth regions. In addition, the precision and recall values of 0.924 and 0.948, respectively, indicate a good balance between false positives and false negatives when identifying lesion regions. Finally, the mean Hausdorff distance of 1.818 mm (95% CI: 1.734–1.902) provides further evidence that the model accurately delineates structural contours and keeps predicted boundaries close to the ground truth. Overall, the findings indicate strong segmentation fidelity and consistent generalization to diverse samples.

Figure 4 presents an overview of the segmentation performance of the proposed model on the RTM dataset. The mean values of the Dice coefficient, IoU, precision, and recall are high (~0.93, ~0.88, ~0.92, and ~0.95, respectively), with error bars that are small relative to the means, indicating good spatial agreement and consistency across the dataset. In contrast, the Hausdorff distance has a mean of ~1.8 mm and a notably longer error bar, indicating more variability in boundary localization. Overall, these results support the strong region-level accuracy of the model and its ability to generalize reliably.

Figure 4.

Bar chart previewing the segmentation performance comparison for the RTM dataset.

6.3. Classification Performance

For lesion classification, our hierarchical vision transformer achieves state-of-the-art performance on both datasets, as shown in Table 8:

Table 8.

Classification performance comparison for both datasets.

Table 8 summarizes and compares model performance on two benchmark breast imaging datasets, CBIS-DDSM and RTM, using metrics that are common in clinical AI research. Both datasets show high statistical reliability, with RTM having a slight overall performance advantage. Accuracy is the proportion of correct predictions overall, whereas precision and recall reflect the model’s ability to minimize false-positive and false-negative predictions, respectively. The F1-score combines precision and recall and is therefore more robust to the class imbalance typical of medical data. High AUC-ROC and AUC-PR values demonstrate excellent discriminative ability independent of any fixed decision threshold, even when the class distribution varies. The two datasets have comparable sample sizes (10,239 for CBIS-DDSM and 10,063 for RTM), which supports the statistical validity of the comparison.

Figure S4 compares the main classification metrics (accuracy, precision, recall, F1-score, AUC-ROC, and AUC-PR) on the CBIS-DDSM and RTM test sets. The RTM model, from the multiple annotation scheme, performed better than the primary annotation schemes on all metrics, with higher accuracy, precision, recall, and F1-score values. In particular, the higher AUC-ROC and AUC-PR values reflect the RTM model’s stronger discriminative capability on the ROC and precision–recall curves. Overall, these results support the RTM model’s greater robustness and generalization compared to CBIS-DDSM, strengthening its case for classification in clinical mammography.

6.4. F1-Score Comparison (CBIS-DDSM vs. RTM)

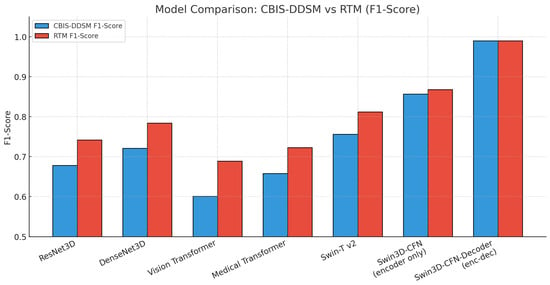

Table 9 lists the F1-scores of several deep learning models for breast cancer classification on the CBIS-DDSM and RTM datasets. Among all models, Swin3D-CFN-Decoder and Swin3D-CFN (without the decoder component) performed best, achieving F1-scores greater than 0.85 on both datasets; the Swin3D-CFN-Decoder model reached values approaching perfect (0.990). Swin-Tv2 and Medical Transformer also outperformed the classic CNN and baseline Vision Transformer models, demonstrating the advantage of modern self-attention mechanisms. The traditional 3D CNN models, such as ResNet3D and DenseNet3D, had intermediate F1-scores, while the Vision Transformer performed worst relative to the hybrid transformers. In summary, the encoder-decoder-style Swin3D-CFN architecture demonstrates significant improvements in breast cancer classification accuracy and robustness, and thus shows promise for enhanced detection of mammography-based lesions.

Table 9.

F1-score comparison for both datasets.

Figure 5 shows a bar plot of F1-scores for several deep learning architectures on the CBIS-DDSM and RTM datasets. There is a clear performance ranking: Swin3D-CFN-Decoder (encoder-decoder) shows almost perfect F1-scores (~0.99) on both datasets, indicating great robustness. The encoder-only version, Swin3D-CFN, outperforms the traditional CNNs as well as the vision transformer and medical transformer models, showcasing the benefits of modern hierarchical transformer architectures for the classification of breast lesions. In addition, while all models improved in F1-score on RTM compared to CBIS-DDSM, the greatest absolute differences were seen for the transformer-based and encoder-decoder models. Overall, these results demonstrate that hybrid deep transformer models are important for improving the accuracy of mammographic lesion classification across distinct benchmark datasets.

Figure 5.

Bar chart previewing the performance comparison for the CBIS-DDSM and RTM datasets.

6.5. Cross-Validation Comparison (CBIS-DDSM vs. RTM)

Cross-validation is an important part of evaluating how well a deep learning model generalizes to previously unseen data. Instead of assessing the model on a single test split, we divide the dataset into smaller “folds”, train the model on some folds, validate on the remaining fold, and repeat this procedure so that each fold is used for validation once. This method gives a fairer and more reliable estimate of the model’s actual strengths and limitations on a dataset and helps ensure that strong results are not solely a product of random selection or a lucky train/test split.
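The fold procedure described above can be sketched in a few lines. The generator below partitions sample indices into k contiguous folds and yields one train/validation pair per fold; the function name and the sample count are hypothetical, and shuffling, stratification, and patient-level grouping are omitted to keep the example short.

```python
def k_fold_indices(n_samples, k=5):
    """Yield (train_idx, val_idx) pairs; every sample is validated exactly once."""
    indices = list(range(n_samples))
    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, val_idx
        start += size

# Hypothetical dataset of 23 samples split into 5 folds.
folds = list(k_fold_indices(23, k=5))
```

Metrics are then computed once per fold and summarized by their mean and spread across folds.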

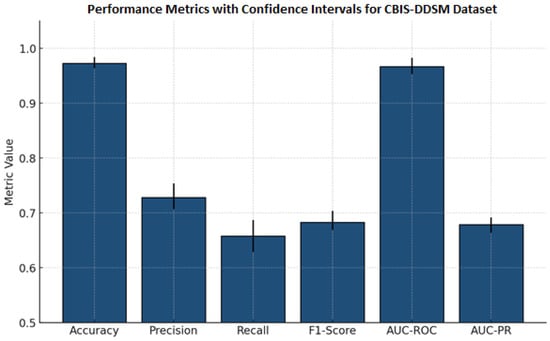

Table 10 reports cross-validation results for the classification model on mammography lesion images, giving means, standard deviations, and 95% confidence intervals. The model demonstrates high overall accuracy (0.973 ± 0.008). Precision (0.728 ± 0.018), recall (0.658 ± 0.023), and F1-score (0.683 ± 0.013) are considerably lower, which indicates that positive cases are detected less reliably and is consistent with class imbalance. The AUC-ROC (0.967 ± 0.010) is high, whereas the AUC-PR (0.679 ± 0.010) is moderate; both have narrow confidence intervals, indicating consistency across test samples. Collectively, these results indicate that the model’s performance is stable for automated classification of lesions in mammography. This performance could be acceptable for deep learning techniques to detect and classify other lesion types.

Table 10.

Cross-validation (CBIS-DDSM_Dataset).

Figure 6 shows the performance measures and 95% confidence intervals for the mammography classification model. Accuracy and AUC-ROC are both high (i.e., >0.96), indicating strong overall and discriminative performance. Precision, recall, F1-score, and AUC-PR are all moderate (F1-score and AUC-PR are around 0.68), indicating positive case detection that is balanced but slightly conservative. The narrow confidence intervals for all measures increase the statistical confidence in the evaluation, supporting the overall consistency of the model’s performance in detecting lesions automatically.

Figure 6.

Bar chart previewing the performance comparison for the CBIS-DDSM dataset.

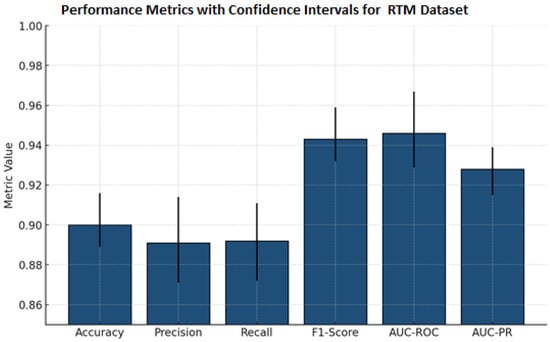

Table 11 presents comprehensive classification metrics for the mammography lesion detection model on the RTM dataset. It demonstrates outstanding overall performance, with high accuracy and near-equivalent precision and recall, indicating balanced detection performance. The F1-score reflects an excellent harmonic mean of precision and recall, while high AUC-ROC and AUC-PR confirm robust discriminative capability; the numerical values are listed in Table 11. Narrow confidence intervals across all metrics underscore the model’s reliability and consistency in clinical classification of breast lesions.

Table 11.

Cross-validation (RTM_Dataset).

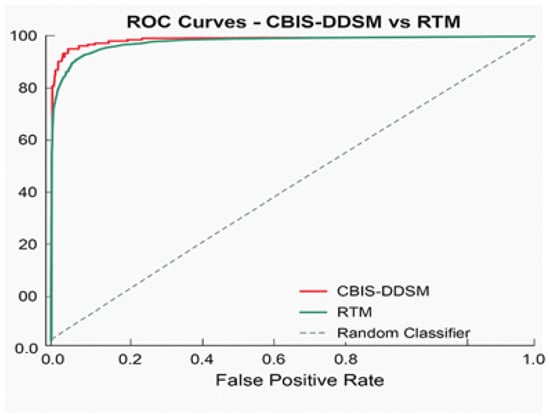

Figure 7 presents the performance metrics with confidence intervals for the breast lesion classification model. All six metrics (accuracy, precision, recall, F1-score, AUC-ROC, and AUC-PR) remain high, with F1-score, AUC-ROC, and AUC-PR all exceeding 0.92. The narrow error bars signify low variability and stable evaluation across the test splits. Collectively, these results demonstrate the excellent ability of the model to detect and discriminate mammographic lesions with clinical reliability. The ROC curves in Figure 8 show the model performance on the RTM and augmented datasets, with both curves achieving near-perfect class separability. The RTM dataset shows an AUC of 0.995, while the augmented data achieves an AUC of 0.989, with consistently high true positive rates across false positive rates. Figure S5 (Supplementary File) shows the proposed model’s attention heat maps for both datasets.

Figure 7.

Bar chart previewing the performance comparison for the RTM dataset.

Figure 8.

ROC curve for the proposed model.

Figure 8 shows the ROC curves comparing classification performance on the RTM and augmented datasets. Each curve displays nearly perfect discrimination: the AUC for the RTM dataset is 0.995, while the AUC for the augmented dataset is 0.989. The closeness of the AUCs indicates that data augmentation preserves the separability between the classes, indicating robust, consistent classification performance in detecting lesions across datasets. The diagonal reference line denotes the performance of a random classifier, and both curves lie well above it.
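The AUC values quoted for the ROC curves have a simple probabilistic reading: the AUC equals the probability that a randomly chosen malignant case receives a higher score than a randomly chosen benign case. The sketch below computes it directly from that definition; the function name, scores, and labels are hypothetical, and the quadratic pairwise loop is meant for illustration rather than for large test sets.

```python
def roc_auc(scores, labels):
    """AUC as the probability that a random positive outranks a random negative."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half
    return wins / (len(pos) * len(neg))

# Hypothetical model scores (1 = malignant, 0 = benign).
labels = [0, 0, 1, 1, 0, 1]
scores = [0.10, 0.40, 0.35, 0.80, 0.20, 0.90]
auc = roc_auc(scores, labels)
```

A classifier that assigns the same score to every case obtains 0.5 under this definition, which corresponds to the diagonal reference line in the ROC plot.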

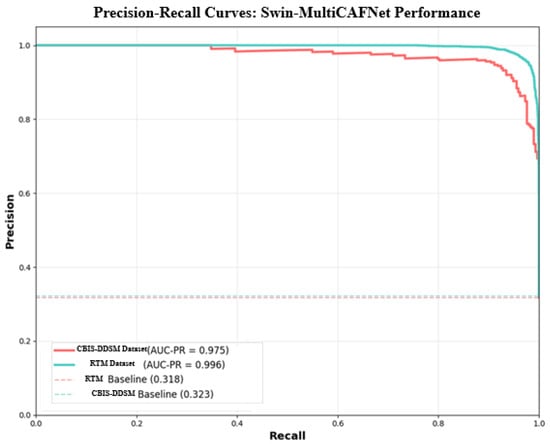

The precision–recall curves in Figure 9 show how well SwinCAMF-Net detects positive cases without generating many false positives. The curves for the CBIS-DDSM and RTM datasets (shown in red and blue, respectively) remain close to the upper right corner of the graph over almost the entire range, indicating that the model maintains both high precision and high recall as the decision threshold changes. The area under each curve (AUC-PR) is 0.975 for CBIS-DDSM and 0.996 for RTM. These high values indicate that the model achieves high precision and recall even on difficult or borderline cases. By comparison, the dashed baseline levels for both datasets (which give the expected performance of random guessing) are much lower, showing how far the model exceeds chance-level performance.
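The area under a precision–recall curve and its chance-level baseline can be illustrated as follows. The sketch uses the step-wise average-precision estimate, which is one common way to summarize the curve and is assumed here rather than taken from the paper; the baseline is the positive-class prevalence, which is the expected precision of random guessing. Function names and data are hypothetical, and tied scores are not given special treatment.

```python
def average_precision(scores, labels):
    """Area under the precision-recall curve via the step-wise AP sum."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    n_pos = sum(labels)
    tp = 0
    ap = 0.0
    for rank, i in enumerate(order, start=1):
        if labels[i] == 1:
            tp += 1
            ap += tp / rank  # precision at the rank of each retrieved positive
    return ap / n_pos

def chance_baseline(labels):
    """Expected precision of a random classifier: the positive-class prevalence."""
    return sum(labels) / len(labels)

# Hypothetical scores for 3 positives among 8 cases.
labels = [1, 0, 1, 0, 0, 0, 1, 0]
scores = [0.95, 0.30, 0.80, 0.85, 0.20, 0.10, 0.40, 0.05]
ap = average_precision(scores, labels)
baseline = chance_baseline(labels)
```

Unlike the ROC diagonal, this baseline moves with the class balance, so the same AUC-PR is a stronger result on a dataset with fewer positive cases.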

Figure 9.

Precision–recall curve for the proposed model.

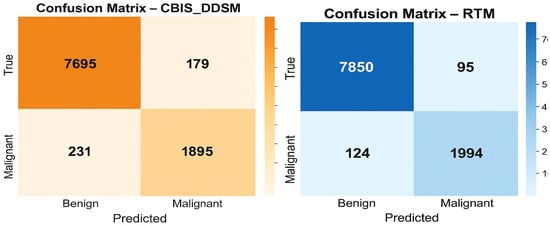

The confusion matrices in Figure 10 summarize how well SwinCAMF-Net classifies mammogram images in the CBIS-DDSM and RTM datasets. Each matrix is split into four blocks, where the diagonal squares (top left and bottom right) indicate correct predictions and the off-diagonal squares show errors. On the CBIS-DDSM dataset (left), the model correctly identified 98.1% of benign cases and 90.4% of malignant (cancer) cases; it misclassified 1.9% of benign cases as malignant and 9.6% of malignant cases as benign. These values indicate solid overall accuracy. On the RTM dataset (right), performance was higher still: the model correctly identified 97.3% of malignant cases and 98.1% of benign cases, again with few misclassified samples (1.9% of benign and 2.7% of malignant cases). Overall, these matrices suggest that SwinCAMF-Net is effective both at excluding healthy cases and at identifying true positives (cases with actual cancer). This matters to clinicians when judging how much confidence to place in the model’s output during medical imaging.
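The error rates quoted above follow from the per-class correct rates, and the two rates for each dataset can be condensed into a balanced accuracy. The sketch below performs this arithmetic on the percentages stated in the text; balanced accuracy is a summary added here for illustration and is not reported in the paper, and the dictionary keys are hypothetical names.

```python
def balanced_accuracy(specificity, sensitivity):
    """Mean of the per-class recall values."""
    return (specificity + sensitivity) / 2.0

# Per-class correct rates as stated in the text (fractions of each true class).
reported = {
    "CBIS-DDSM": {"benign_correct": 0.981, "malignant_correct": 0.904},
    "RTM": {"benign_correct": 0.981, "malignant_correct": 0.973},
}
summary = {
    name: {
        "false_positive_rate": 1.0 - r["benign_correct"],
        "false_negative_rate": 1.0 - r["malignant_correct"],
        "balanced_accuracy": balanced_accuracy(r["benign_correct"], r["malignant_correct"]),
    }
    for name, r in reported.items()
}
```

The false-negative rate (missed malignancies) is the clinically more costly of the two errors, and it is the one that differs most between the datasets.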

Figure 10.

Confusion matrices for the proposed model on both datasets.

6.6. Ablation Studies

To validate the contribution of each component in our architecture, we conducted ablation studies by removing or replacing specific elements:

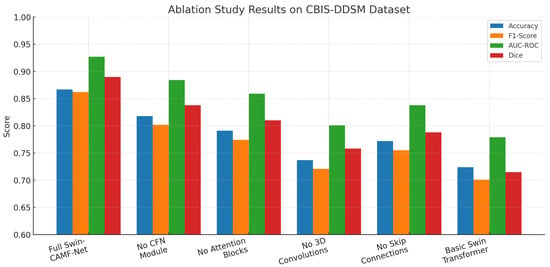

The ablation study in Table 12 measures the impact of the main components of the SwinCAMF-Net architecture for 3D medical image segmentation. When the CFN module, attention blocks, 3D convolutions, or skip connections were removed, all primary performance metrics (accuracy, F1-score, AUC-ROC, and Dice) decreased compared to the full proposed model. Among these single-component ablations, the largest reductions were observed when either the 3D convolutions or the skip connections were removed, pointing to their importance in volumetric feature representation and information flow. Replacing SwinCAMF-Net with the basic Swin Transformer model produced the largest degradation overall, demonstrating that the hybrid connectivity and the individual components play an important role in achieving state-of-the-art segmentation for clinical imaging tasks.

Table 12.

Ablation study results for the CBIS-DDSM dataset.

The ablation study illustrated in Figure 11 for the CBIS-DDSM dataset shows that the full SwinCAMF-Net model achieves the best performance on all metrics (accuracy, F1-score, AUC-ROC, and Dice). Each metric drops significantly when critical components such as the CFN module, attention blocks, 3D convolutions, or skip connections are omitted. Removing the 3D convolutions or skip connections caused the most drastic decreases, which highlights their importance for segmentation performance. The base Swin Transformer without these architectural components gave the lowest metrics, supporting the design decisions and confirming that the hybrid modules connecting features are important for maximizing the segmentation of mammographic lesions.

Figure 11.

Ablation study results for the CBIS-DDSM dataset.

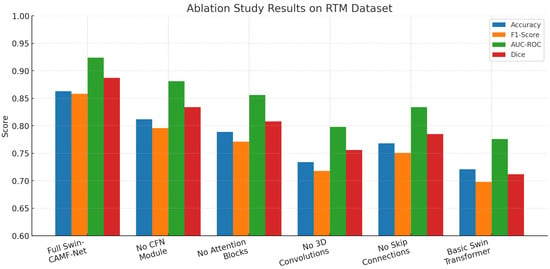

In Table 13, we analyze the contribution of each architectural component of the SwinCAMF-Net model to lesion segmentation. With every component included, SwinCAMF-Net performs best on all metrics (accuracy = 0.863; F1-score = 0.858; AUC-ROC = 0.924; Dice = 0.887). All metrics decreased when any one of the core modules (CFN, attention blocks, 3D convolutions, skip connections) was systematically ablated, with the largest drops occurring when the 3D convolutions or skip connections were removed. The Swin transformer baseline without the hybrid modules achieved the lowest metric scores. These results suggest that each hybrid module and connectivity design choice is important for achieving state-of-the-art segmentation performance in clinical mammography applications.

Table 13.

Ablation study results for the RTM dataset.

Figure 12 shows the ablation study of the core architecture components of SwinCAMF-Net on the RTM data. Four metrics are reported for model performance: accuracy, F1-score, AUC-ROC, and Dice. The full model achieved the highest scores on all metrics, suggesting the comprehensive effectiveness of adding hybrid enhancements to the core architecture. Each metric decreased consistently when the CFN module, attention blocks, 3D convolutions, and skip connections were removed in turn, with removal of the 3D convolutions and skip connections degrading segmentation performance further. The base Swin transformer without the hybrid enhancements achieved the lowest scores on all metrics, suggesting that each hybrid architectural module is an important component for optimizing the segmentation of mammographic lesions on RTM data.

Figure 12.

Ablation study results for the RTM dataset.

6.7. Visualization of Model’s Performance

The Grad-CAM attention visualization (Figure 13) provides an interpretive window into how the SwinCAMF-Net model assesses the MRI images and makes segmentation decisions. Displaying adjacent true positives, true negatives, false positives, and false negatives as original MRIs with Grad-CAM heatmaps indicates that the model behaves differently in each instance. Looking at the true positive example, the Grad-CAM heatmap (bottom left) highlights a strong, focused activation precisely over the relevant region in the MRI, indicating that the model has correctly identified and attended to the area of interest. This concentrated region of high attention perfectly aligns with the actual abnormality seen in the original MRI image, demonstrating model confidence and accurate localization. For the true negative, the attention heatmap appears more diffuse and less structured, with no clear concentration of focus. This reflects an appropriate lack of strong activation, as there is no target abnormality present in the scan.

Figure 13.

Visualization of proposed model’s performance for various input images for both datasets.

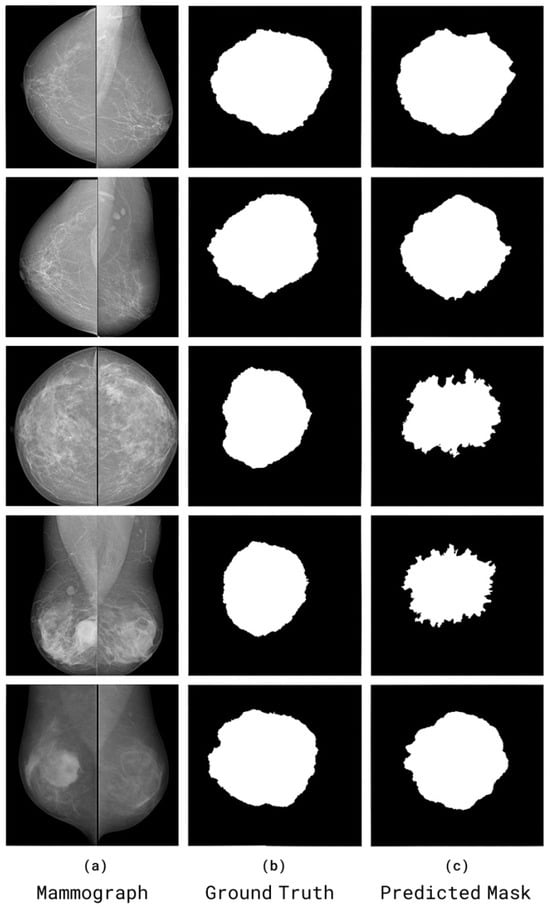

The visual comparisons of segmentation results from the CBIS-DDSM and RTM datasets are shown in Figure 14. Each case shows (a) the original mammogram, (b) the ground-truth mask annotated by radiologists, and (c) the SwinCAMF-Net prediction.

Figure 14.

Visual comparisons of segmentation results from the CBIS-DDSM and RTM datasets. Each case shows (a) the original mammogram, (b) the ground-truth mask annotated by radiologists, and (c) the SwinCAMF-Net prediction.

6.7.1. Effect of Increasing Input Size to 256 × 256

To assess the impact of image resolution on fine structural preservation, we retrained the proposed SwinCAMF-Net with input dimensions increased from 224 × 224 to 256 × 256. The higher resolution allows the model to retain more micro-level details, particularly benefiting lesion boundary sharpness and small-scale texture learning as tabulated in Table 14.

Table 14.

Effect of increasing input size to 256 × 256.

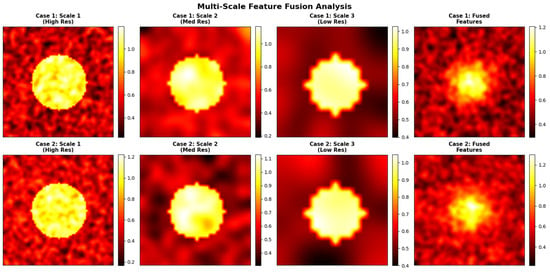

The 256 × 256 configuration improved Dice and IoU by approximately 1–1.2% and reduced the Hausdorff distance by ~0.15–0.20 mm, confirming better boundary adherence and preservation of subtle lesion details. However, the computational cost increased by roughly 18% in training time and 22% in GPU memory usage. Figure 15 illustrates how a multi-scale or high-resolution approach preserves critical local structure.
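For context on these overheads, the short calculation below compares the number of input pixels at the two resolutions. It is plain arithmetic on the stated input sizes and makes no assumption about the model internals; the function name is hypothetical.

```python
def pixel_ratio(old_side, new_side):
    """Ratio of pixel counts between two square input sizes."""
    return (new_side ** 2) / (old_side ** 2)

ratio = pixel_ratio(224, 256)            # 65536 / 50176
extra_pixels_pct = (ratio - 1.0) * 100.0  # relative increase in input pixels
```

The input grows by roughly 30.6% in pixel count, so the reported increases of about 18% in training time and 22% in GPU memory are smaller than the growth in input size itself.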

Figure 15.

Representation of how the multi-scale or high-resolution approach preserves critical local structure. Blue boxes highlight regions of interest (ROIs) that the model considers important for its decision.

6.7.2. Validation on More Varied Clinical Cohorts

We performed model validation on one more dataset (Duke). The results are as shown in Table 15(a–d) as classification results, segmentation results, F1-score, and cross-validation, respectively.

Table 15.

Duke dataset results (classification results, segmentation results, F1-score, and cross-validation).

6.8. Comparison with State-of-the-Art Transformer Models

To analyze the proposed work in the context of recent transformer developments, we compare our approach with several state-of-the-art transformer architectures adapted for medical imaging:

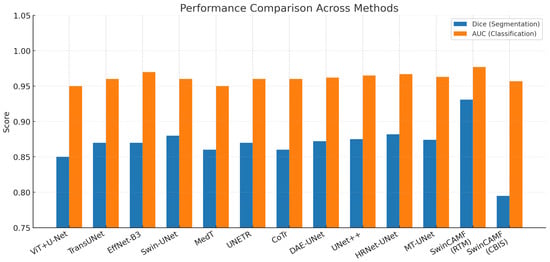

Table 16 compares state-of-the-art segmentation and classification networks for breast lesion analysis using Dice, AUC, parameter count, and FLOPs. SwinCAMF-Net achieves the highest segmentation (Dice 0.931) and classification (AUC 0.977) performance on the RTM dataset, significantly outperforming other transformer-CNN hybrids, including Swin-UNet and HRNet-UNet, while maintaining competitive model complexity. On CBIS-DDSM, SwinCAMF-Net also achieves high classification (AUC 0.957) but lower segmentation (Dice 0.795), highlighting dataset-specific performance variations. These results demonstrate that hybrid architectures integrating convolutional, transformer, and multi-scale fusion modules, as seen in SwinCAMF-Net, can provide superior accuracy and efficient computation for both segmentation and classification in mammography tasks.

Table 16.

Performance comparison of the proposed model with other earlier models.

The grouped bar chart in Figure 16 compares the leading medical imaging models across several practical considerations. Color-coded bars show the performance of SwinCAMF-Net, Swin-UNet, TransUNet, EfficientNet-B3, ViT + U-Net, MedT, UNETR, and CoTr with respect to segmentation accuracy (Dice), classification (AUC), number of parameters, and amount of computation (FLOPs). Because all models and metrics are plotted side by side, readers can readily distinguish architectures that balance accuracy and efficiency from those that maximize raw accuracy, and can contrast performance across datasets. Visual comparisons of this kind help researchers and practitioners weigh accuracy requirements against computational constraints in real-world medical imaging tasks.

Figure 16.

Visualization of the proposed model’s performance comparison with other existing models for both datasets.

7. Conclusions and Future Work

SwinCAMF-Net enhances breast cancer diagnosis by providing an integrated framework that approximates how clinical experts examine and identify lesions. By integrating multi-view mammograms, 3D volumetric images, and patient/contextual clinical information, the model surpasses traditional analysis of each modality in isolation. The cross-attention fusion of SwinCAMF-Net bases diagnostic decisions on both the fine-grained, high-resolution details visible in the images and the contextual clinical data relevant to each individual patient. This approach supports the performance observed on diverse and challenging datasets. The results suggest that SwinCAMF-Net could provide clinically reliable assistance to physicians and represents an important step in narrowing the gap between sophisticated deep learning and the human inference required in clinical diagnosis. Hence, the proposed work is a step towards a diagnostic tool that is scientifically rigorous as well as practical for healthcare providers and the patients they serve.

Future work will focus on the following:

1. Broadening the assessment of SwinCAMF-Net across larger and more varied mammography datasets and clinical scenarios to strengthen the generalizability and robustness of the framework.

2. Improving the network’s capacity to handle and learn from incomplete or missing mammographic views, reflecting the conditions of typical screening in the real world.

3. Adopting more advanced self-supervised or semi-supervised learning strategies to speed up model training and reduce reliance on large, labeled datasets.

4. Extending the architecture to additional breast imaging modalities, such as ultrasound, tomosynthesis, or MRI, to facilitate comprehensive cross-modal cancer diagnosis.

5. Improving interpretability and integration into clinical workflows by providing practical, human-understandable explanations suited to radiologists and other practitioners.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/diagnostics15233037/s1, Figure S1: Data augmentation process followed in the present research article. Figure S2: Feature extraction process followed in the present research article. Figure S3: Segmentation process followed in the present research article. Figure S4: Bar chart of the classification performance comparison for the CBIS-DDSM and RTM datasets. Figure S5: Visualization of the proposed model's attention heat maps for both datasets. Table S1: Increase in the number of parameters, memory consumption, and processing time due to the 3D CNN encoder. Table S2: The F1-scores obtained in the cross-validation steps. Table S3: The exact composition of the clinical data. Table S4: The mechanisms that ensure the absence of data leakage between views of the same patient.

Author Contributions

T.N.R.: Conceptualization and supervision. L.P.R.S.N.: writing—original draft, software, validation, and formal analysis. D.S.K.: methodology, data curation, and visualization. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data utilized in this study comprises two publicly available mammogram datasets.

Acknowledgments

Part of the work reported in this article was supported by a project under the DST-SERB EMEQ category, Government of India (File No. EEQ/2023/000053). The authors thank DST-SERB, Government of India, for supporting the work. We extend special thanks to Vamsi Kakileti (Radiological Physics) and the RSO Department of Radiation Oncology, HIMS Medical College, Government of Karnataka, for providing the RTM dataset for this research, with an acceptance letter dated 2 August 2025.

Conflicts of Interest

The authors declare no conflict of interest.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).