Interpretable Multi-Cancer Early Detection Using SHAP-Based Machine Learning on Tumor-Educated Platelet RNA

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Acquisition and Cohort Composition

2.2. Preprocessing and Feature Selection

- Statistical Filtering: One-way ANOVA was applied to a 33% subset of the full dataset (mimicking the discovery phase of the original study), and genes with a false discovery rate (FDR) below 0.001 were retained. To limit model complexity, at most 300 genes were kept.

- Correlation Filtering: Among the ANOVA-filtered genes, redundant features with a pairwise Pearson correlation of |r| > 0.8 were excluded to reduce multicollinearity.

- Standardization: All input features were scaled using z-score normalization within each cross-validation fold to avoid data leakage.
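The filtering steps above can be sketched as follows. This is a minimal NumPy/SciPy illustration, not the authors' code. The F-test is computed per gene, and p-values are adjusted with the Benjamini–Hochberg procedure; that procedure is an assumption, because the text states only that an FDR threshold was used. The correlation filter greedily keeps the more significant gene of each correlated pair, which is also an assumed rule. Function names and the synthetic data are hypothetical.

```python
import numpy as np
from scipy import stats


def anova_fdr_filter(X, y, fdr=0.001, max_genes=300):
    """One-way ANOVA F-test per gene, Benjamini-Hochberg FDR, capped at max_genes.

    X: (samples, genes) expression matrix; y: class labels.
    Returns gene column indices ordered from most to least significant.
    """
    classes = np.unique(y)
    groups = [X[y == c] for c in classes]
    n, k = X.shape[0], len(classes)
    grand_mean = X.mean(axis=0)
    # Between- and within-group sums of squares for all genes at once
    ss_between = sum(len(g) * (g.mean(axis=0) - grand_mean) ** 2 for g in groups)
    ss_within = sum(((g - g.mean(axis=0)) ** 2).sum(axis=0) for g in groups)
    f_stat = (ss_between / (k - 1)) / (ss_within / (n - k))
    p = stats.f.sf(f_stat, k - 1, n - k)

    # Benjamini-Hochberg adjusted p-values (assumed FDR procedure)
    m = p.size
    order = np.argsort(p)
    ranked = p[order] * m / np.arange(1, m + 1)
    q_sorted = np.minimum(np.minimum.accumulate(ranked[::-1])[::-1], 1.0)
    keep = order[q_sorted < fdr]          # significant genes, most significant first
    return keep[:max_genes]               # cap on the number of retained genes


def correlation_filter(X, ranked_genes, threshold=0.8):
    """Greedy redundancy filter (assumed rule): walk genes from most to least
    significant and keep one only if |Pearson r| <= threshold with every kept gene."""
    if len(ranked_genes) < 2:
        return ranked_genes
    corr = np.corrcoef(X[:, ranked_genes], rowvar=False)
    kept = []
    for i in range(len(ranked_genes)):
        if all(abs(corr[i, j]) <= threshold for j in kept):
            kept.append(i)
    return ranked_genes[kept]


# Hypothetical toy data: 120 samples, 50 genes, 3 classes
rng = np.random.default_rng(42)
n = 120
y = rng.integers(0, 3, size=n)
X = rng.normal(size=(n, 50))
X[:, 0] += 2.0 * y                                    # gene 0 differs strongly by class
X[:, 1] = X[:, 0] + rng.normal(scale=0.1, size=n)     # gene 1 is a near-copy of gene 0

# Discovery subset of about one third of the samples
disc = rng.choice(n, size=n // 3, replace=False)
ranked = anova_fdr_filter(X[disc], y[disc])
selected = correlation_filter(X[disc], ranked)
print("ANOVA/FDR genes:", ranked, "after correlation filter:", selected)
```

Z-score scaling is left out of this sketch on purpose. To avoid leakage, it belongs inside the cross-validation loop and should be fitted on each training fold.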

2.3. Machine Learning Pipeline

- Tree-based models: DT, RF, and XGB.

- Linear and non-linear models: LR and SVM.

- Neural models: Shallow NN and DNN.
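A minimal scikit-learn sketch of comparing the seven model families follows. It is not the authors' code. It scores each model by mean AUC under stratified, shuffled 5-fold cross-validation, and it keeps z-score scaling inside each training fold. Three models are stand-ins so the example needs only scikit-learn: `GradientBoostingClassifier` replaces XGBoost, and `MLPClassifier` replaces the Keras NN and DNN. The hyperparameters and the synthetic data are illustrative assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

SEED = 42

# Names marked * are scikit-learn stand-ins, not the models used in the study
models = {
    "DT": DecisionTreeClassifier(random_state=SEED),
    "RF": RandomForestClassifier(n_estimators=200, random_state=SEED),
    "XGB*": GradientBoostingClassifier(random_state=SEED),        # stand-in for XGBoost
    "LR": LogisticRegression(max_iter=1000),
    "SVM": SVC(kernel="rbf"),
    "NN*": MLPClassifier(hidden_layer_sizes=(64,), max_iter=500,
                         random_state=SEED),                       # 1 hidden layer
    "DNN*": MLPClassifier(hidden_layer_sizes=(64, 64), max_iter=500,
                          random_state=SEED),                      # 2 hidden layers
}


def compare_models(X, y, n_splits=5):
    """Mean cross-validated AUC per model with stratified, shuffled folds."""
    cv = StratifiedKFold(n_splits=n_splits, shuffle=True, random_state=SEED)
    results = {}
    for name, model in models.items():
        # The scaler sits inside the pipeline, so it is refitted on each training fold
        pipe = make_pipeline(StandardScaler(), model)
        results[name] = cross_val_score(pipe, X, y, cv=cv, scoring="roc_auc").mean()
    return results


# Synthetic, mildly imbalanced stand-in for the gene-expression matrix
X, y = make_classification(n_samples=300, n_features=30, n_informative=8,
                           weights=[0.3, 0.7], random_state=SEED)
results = compare_models(X, y)
for name, auc in sorted(results.items(), key=lambda kv: -kv[1]):
    print(f"{name:5s} mean AUC = {auc:.3f}")
```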

2.4. SHAP Explainability Framework

- TreeExplainer for tree-based models (DT, RF, XGB),

- LinearExplainer for LR,

- KernelExplainer for SVM,

- DeepExplainer for neural networks (NN and DNN).

- Global feature importance: SHAP bar plots and beeswarm plots per model.

- Local explanations: Force plots highlighting gene-level impact on individual predictions.

- SHAP dependence plots: visualization of non-linear effects and interactions between gene pairs.
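The explainers listed above approximate Shapley values; for trees, they compute them efficiently. With only a handful of features, Shapley values can be computed exactly by enumerating every feature coalition. Doing so makes concrete the quantities behind the bar, beeswarm, force, and dependence plots. The brute-force sketch below is an illustration only, not how the SHAP library works internally. It assumes that absent features are replaced by a single baseline vector (here, the background mean), which simplifies SHAP's averaging over a background distribution.

```python
import itertools
import math

import numpy as np


def exact_shapley(f, x, baseline):
    """Exact Shapley values of f at x by enumerating every feature coalition.

    Features outside a coalition are set to baseline (a single reference vector,
    a simplifying assumption). Cost grows as 2^d, so this only suits tiny d.
    """
    d = len(x)
    phi = np.zeros(d)

    def value(coalition):
        z = baseline.copy()
        idx = list(coalition)
        z[idx] = x[idx]              # coalition features take their observed values
        return f(z)

    for i in range(d):
        others = [j for j in range(d) if j != i]
        for size in range(d):
            # Shapley weight |S|! (d - |S| - 1)! / d!
            weight = math.factorial(size) * math.factorial(d - size - 1) / math.factorial(d)
            for s in itertools.combinations(others, size):
                phi[i] += weight * (value(s + (i,)) - value(s))
    return phi


# Linear model on hypothetical data
rng = np.random.default_rng(0)
background = rng.normal(size=(100, 4))
w, b = np.array([1.5, -2.0, 0.0, 0.7]), 0.3


def linear(z):
    return float(z @ w + b)


x = np.array([1.0, 0.5, -1.0, 2.0])
base = background.mean(axis=0)
phi = exact_shapley(linear, x, base)
print(np.allclose(phi, w * (x - base)))                 # True: phi_i = w_i (x_i - base_i)
print(np.isclose(phi.sum(), linear(x) - linear(base)))  # True: efficiency property

# Pure interaction: credit for z0 * z1 is split evenly between the two features
phi_int = exact_shapley(lambda z: float(z[0] * z[1]), np.array([2.0, 3.0]), np.zeros(2))
print(phi_int)                                          # [3. 3.]
```

To our understanding, the linear check mirrors what LinearExplainer returns when features are treated as independent. The product example shows an interaction effect being split between the two genes involved. This is the kind of effect that SHAP dependence plots help reveal.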

2.5. Weighted SHAP Aggregation

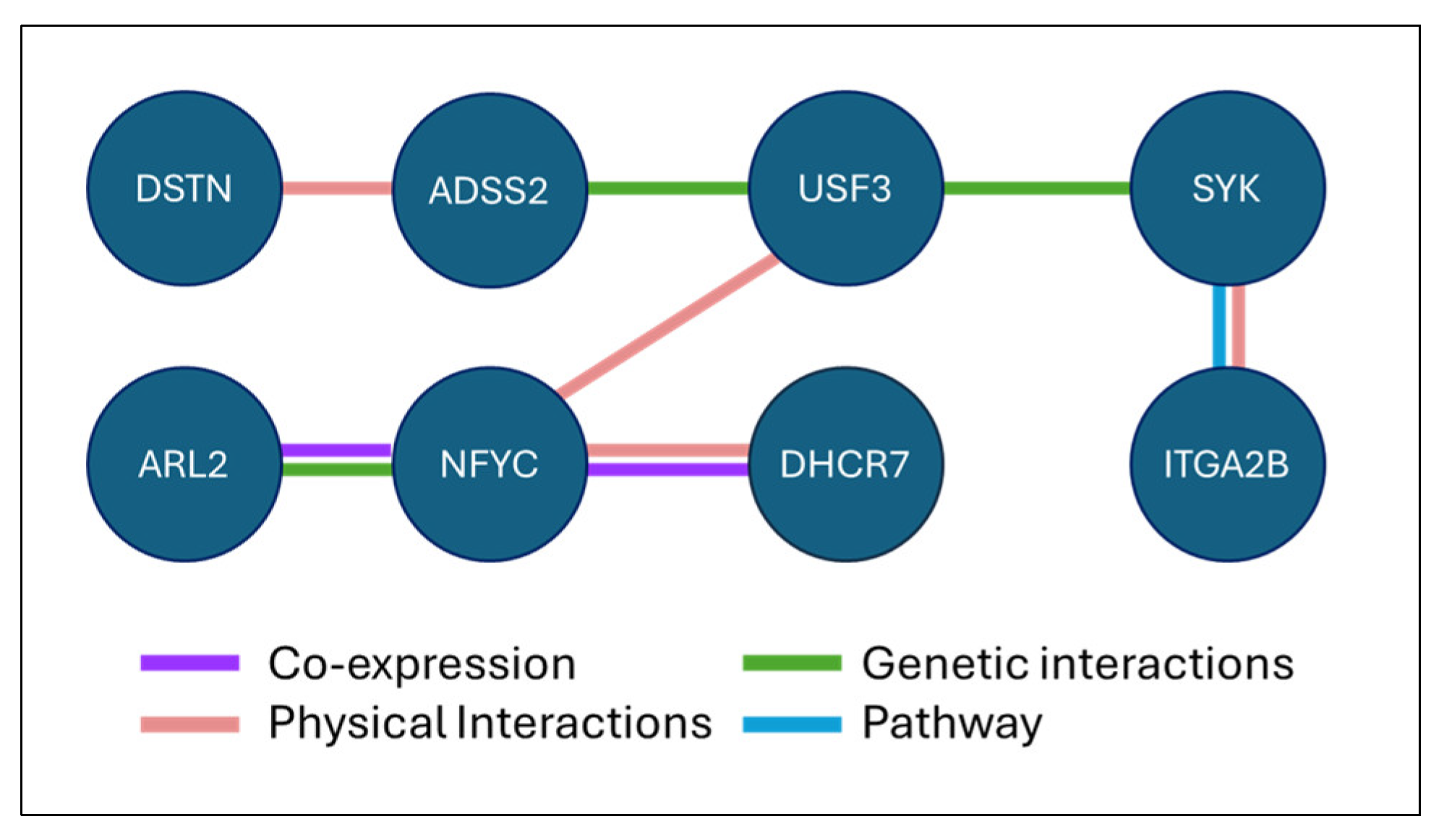
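The aggregation scheme itself appears only in the figure above. As a hedged illustration of the general idea, the sketch below makes three assumptions:

- Each model's global importance is its mean absolute SHAP value per gene.
- These vectors are normalized to sum to one, because SHAP values from different explainers can sit on different output scales (for example, log-odds versus probability).
- The per-model weights are supplied as inputs.

The sketch does not reproduce how the study derived its weights. All names are hypothetical.

```python
import numpy as np


def weighted_shap_consensus(shap_by_model, weights):
    """Weighted consensus of per-model global SHAP importance (hypothetical helper).

    shap_by_model: dict name -> (samples, genes) SHAP array, same gene order for all models
    weights:       dict name -> non-negative weight (how weights are chosen is an assumption)
    """
    names = list(shap_by_model)
    w = np.array([weights[name] for name in names], dtype=float)
    if np.any(w < 0) or w.sum() == 0:
        raise ValueError("weights must be non-negative and not all zero")
    w = w / w.sum()                                       # weights sum to one
    consensus = np.zeros(np.shape(shap_by_model[names[0]])[1])
    for wi, name in zip(w, names):
        imp = np.abs(np.asarray(shap_by_model[name])).mean(axis=0)  # mean |SHAP| per gene
        consensus += wi * imp / imp.sum()                 # common scale: each sums to one
    return consensus


# Toy example with two hypothetical models and two genes
shap_values = {
    "A": np.array([[3.0, 1.0], [-3.0, -1.0]]),   # mean |SHAP| = [3, 1]
    "B": np.array([[1.0, -1.0], [1.0, 1.0]]),    # mean |SHAP| = [1, 1]
}
consensus = weighted_shap_consensus(shap_values, {"A": 1.0, "B": 1.0})
print(consensus)                       # [0.625 0.375]
print(np.argsort(-consensus))          # consensus ranking of genes: [0 1]
```

Because each normalized vector sums to one and the weights sum to one, the consensus vector also sums to one. This makes consensus scores comparable across different weightings.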

2.6. Model Agreement Analysis and Biological Relevance Evaluation

3. Results

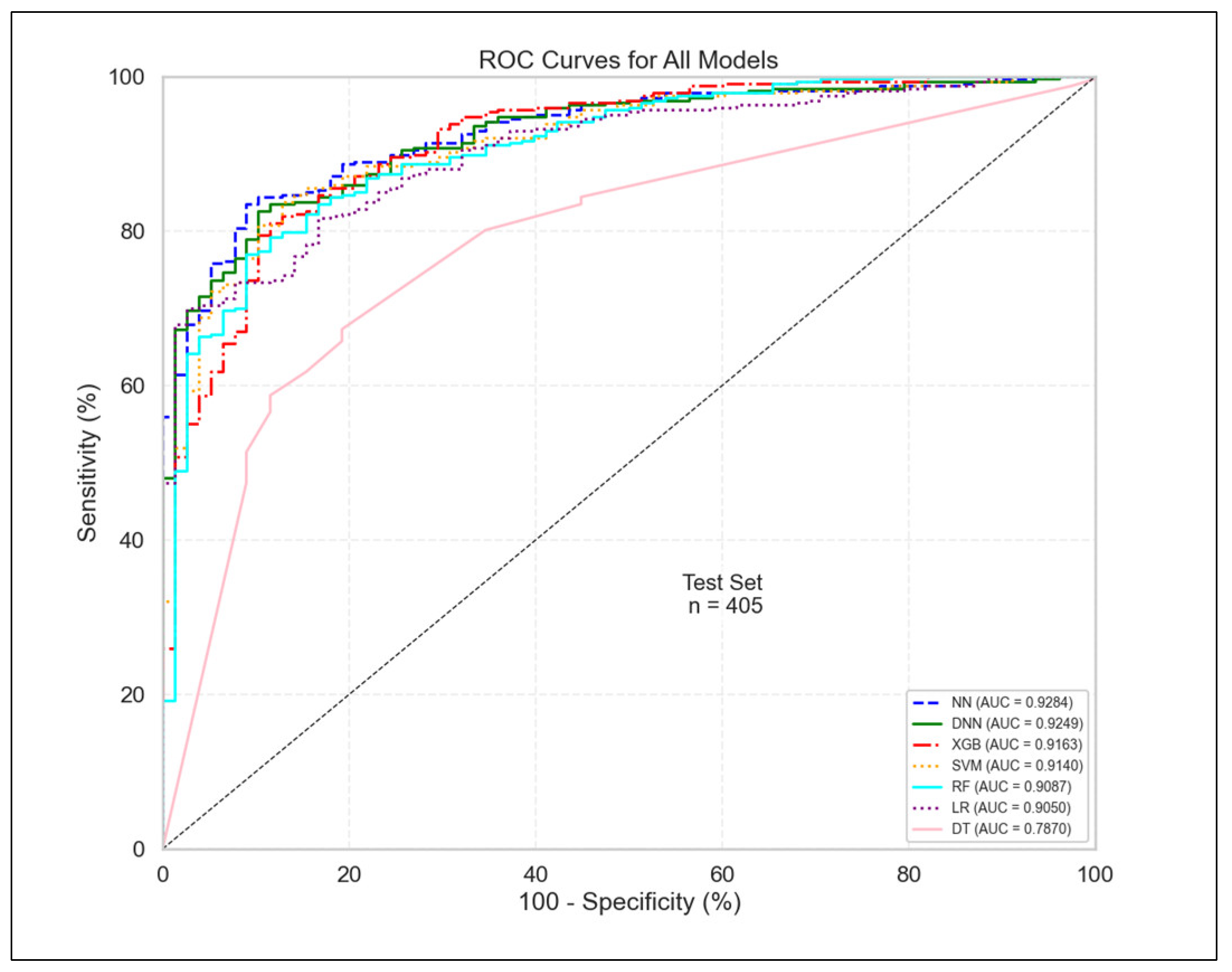

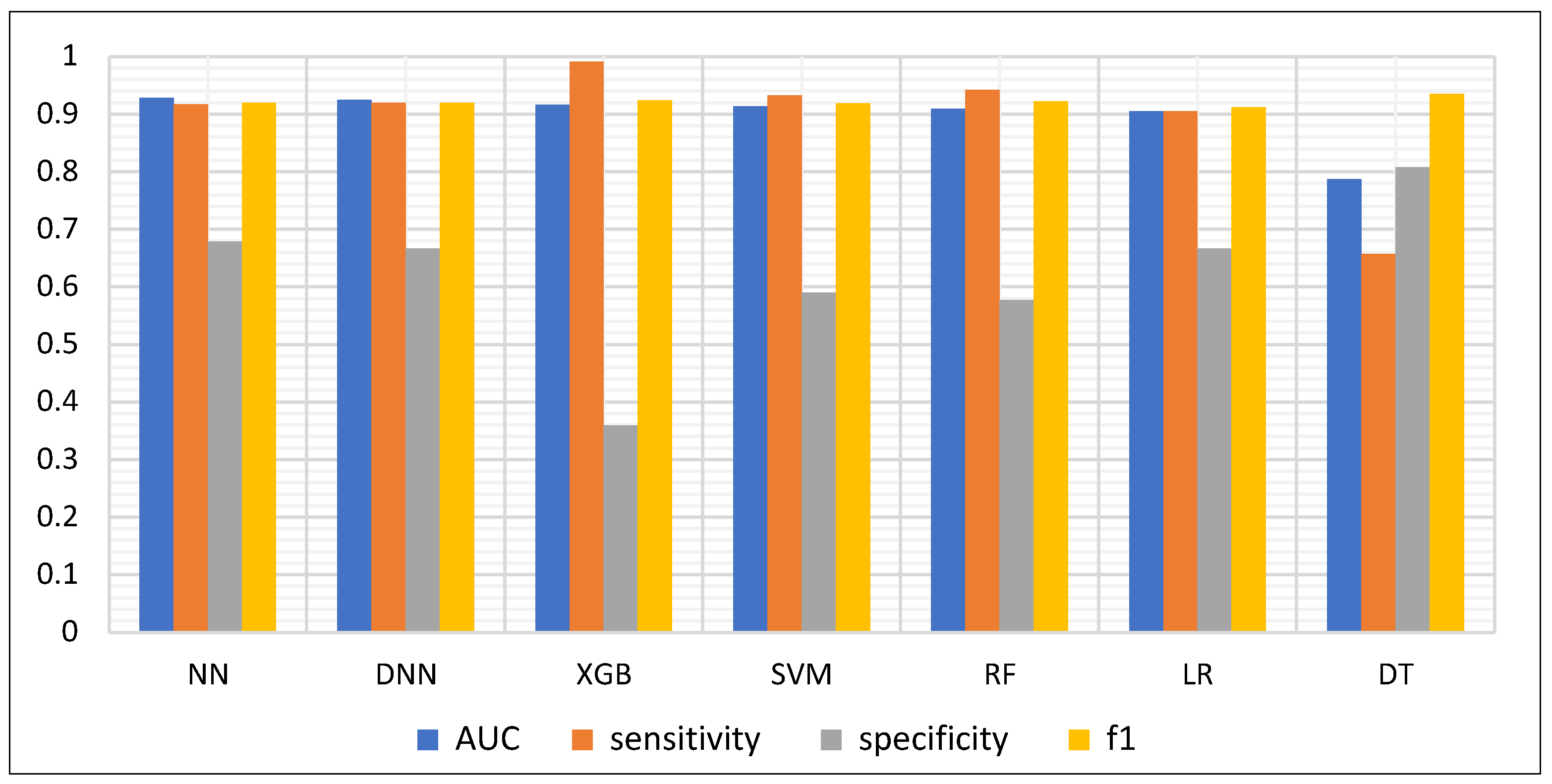

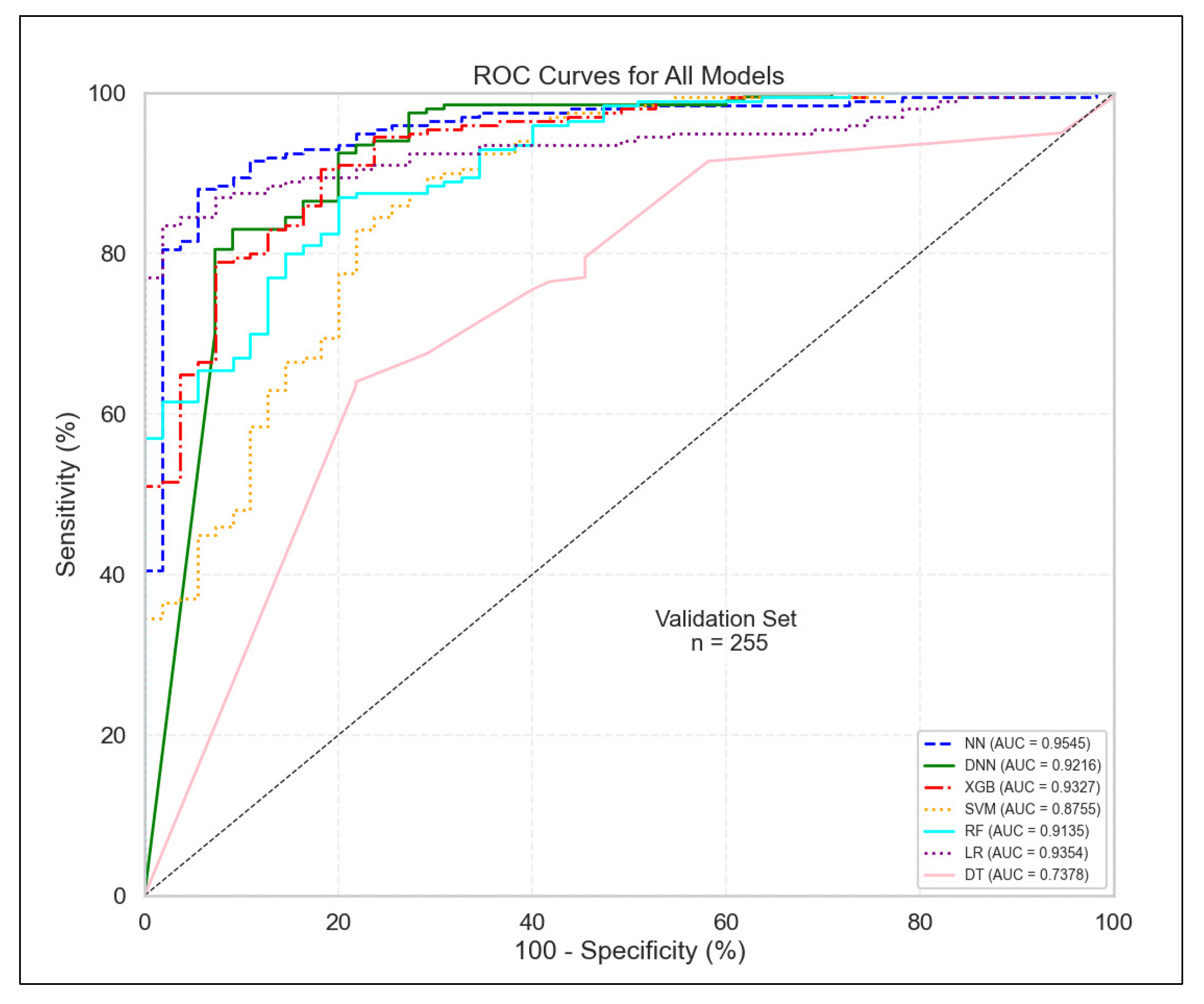

3.1. Model Performance Evaluation

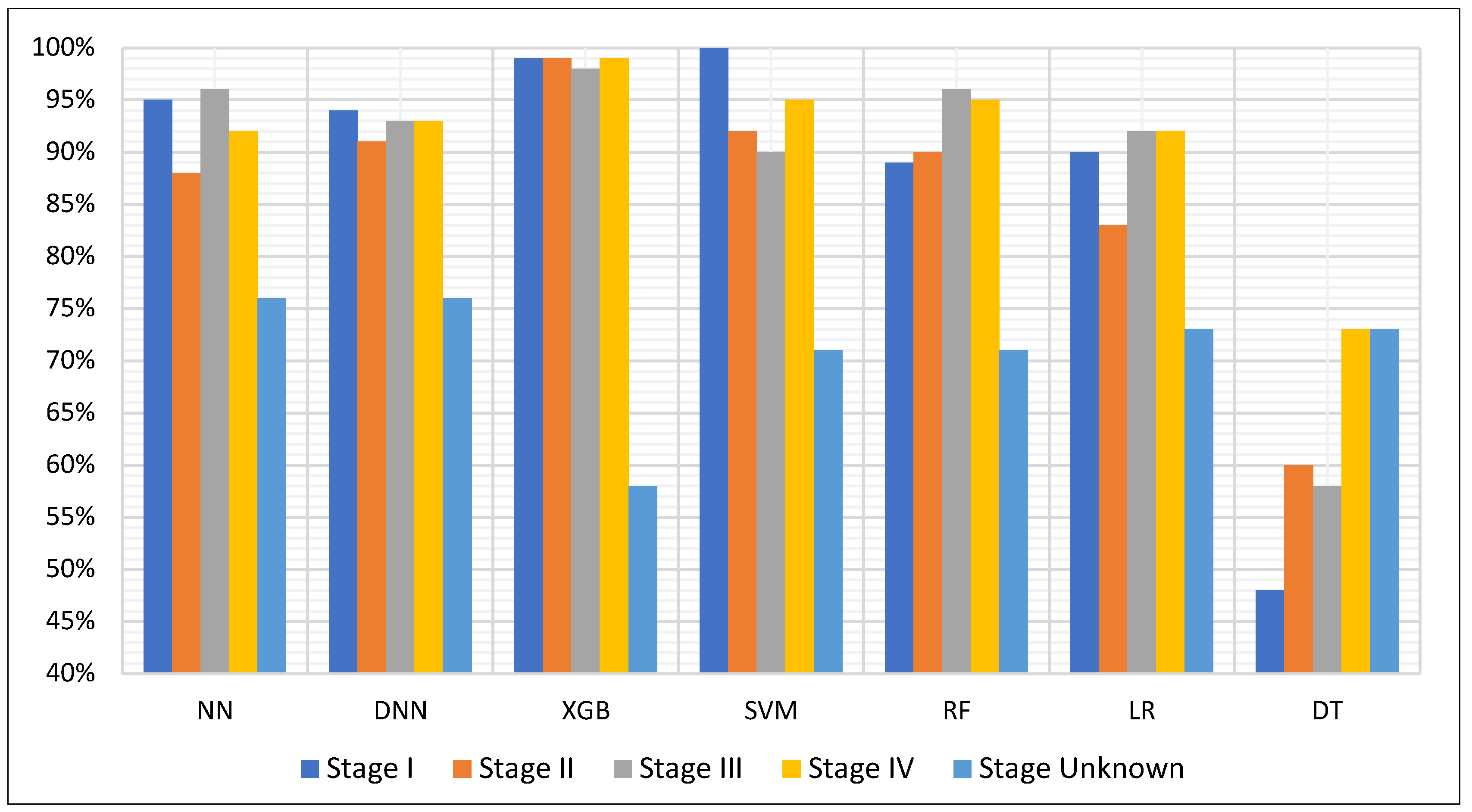

3.2. Stage-Specific Detection Performance

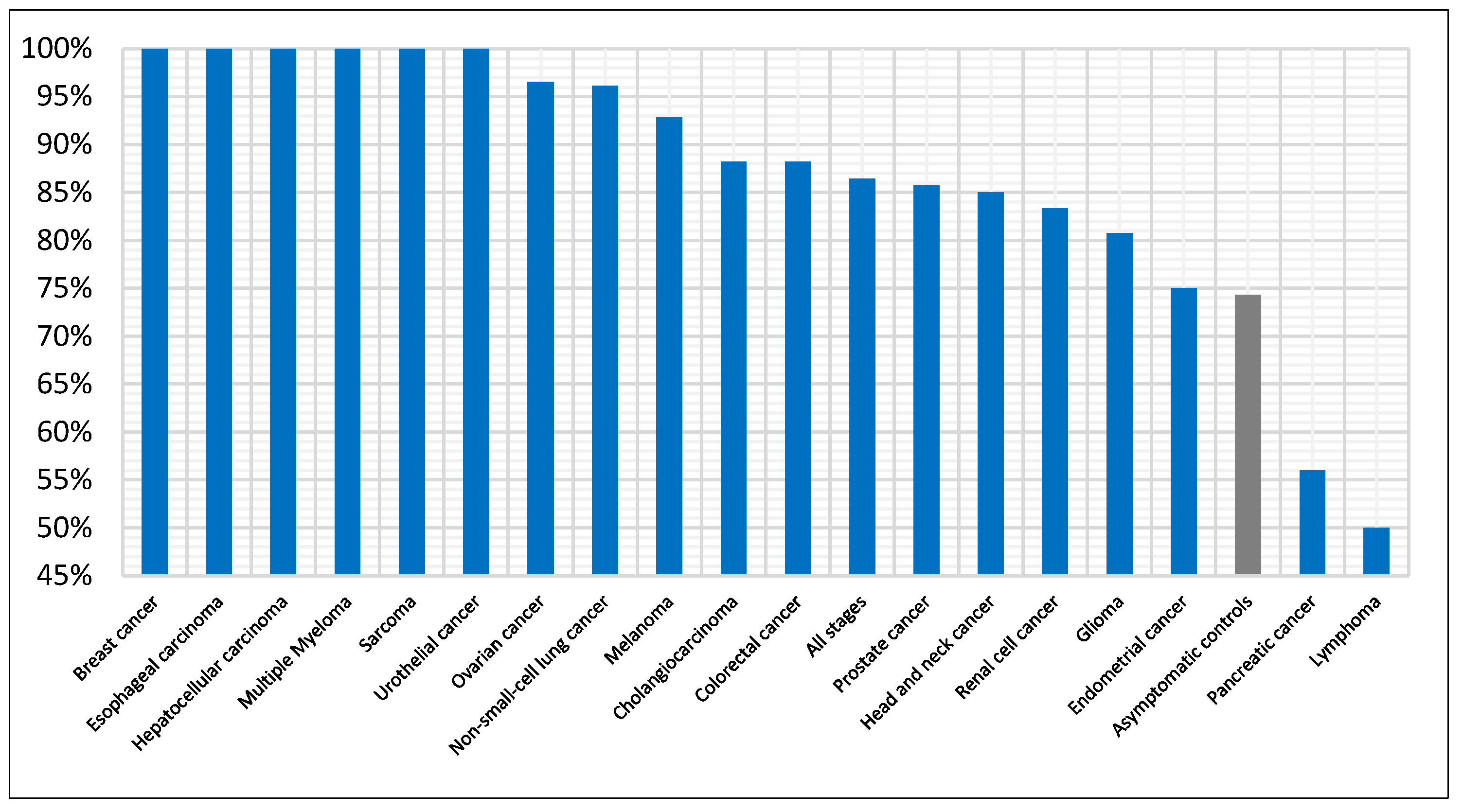

3.3. Cancer-Specific Detection Performance
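Stage-specific and cancer-specific detection performance can both be read as sensitivity computed within subgroups of the cancer cases. The sketch below makes two assumptions: labels are binary with cancer coded as 1, and each sample carries a group label (stage or tumor type). The function name and toy data are hypothetical.

```python
import numpy as np


def sensitivity_by_group(y_true, y_pred, groups, positive=1):
    """Sensitivity (share of true cancer cases detected) within each subgroup.

    groups holds a hypothetical per-sample label such as tumor stage or cancer type;
    groups without positive cases (e.g. controls) are skipped.
    """
    y_true, y_pred, groups = map(np.asarray, (y_true, y_pred, groups))
    out = {}
    for g in np.unique(groups):
        mask = (groups == g) & (y_true == positive)
        if mask.any():
            out[str(g)] = float(np.mean(y_pred[mask] == positive))
    return out


y_true = [1, 1, 1, 1, 0, 0, 1, 1]
y_pred = [1, 0, 1, 1, 0, 1, 1, 1]
stage = ["I", "I", "II", "II", "control", "control", "IV", "IV"]
print(sensitivity_by_group(y_true, y_pred, stage))
# {'I': 0.5, 'II': 1.0, 'IV': 1.0}
```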

3.4. SHAP-Based Interpretability Findings

3.4.1. Global SHAP Feature Importance

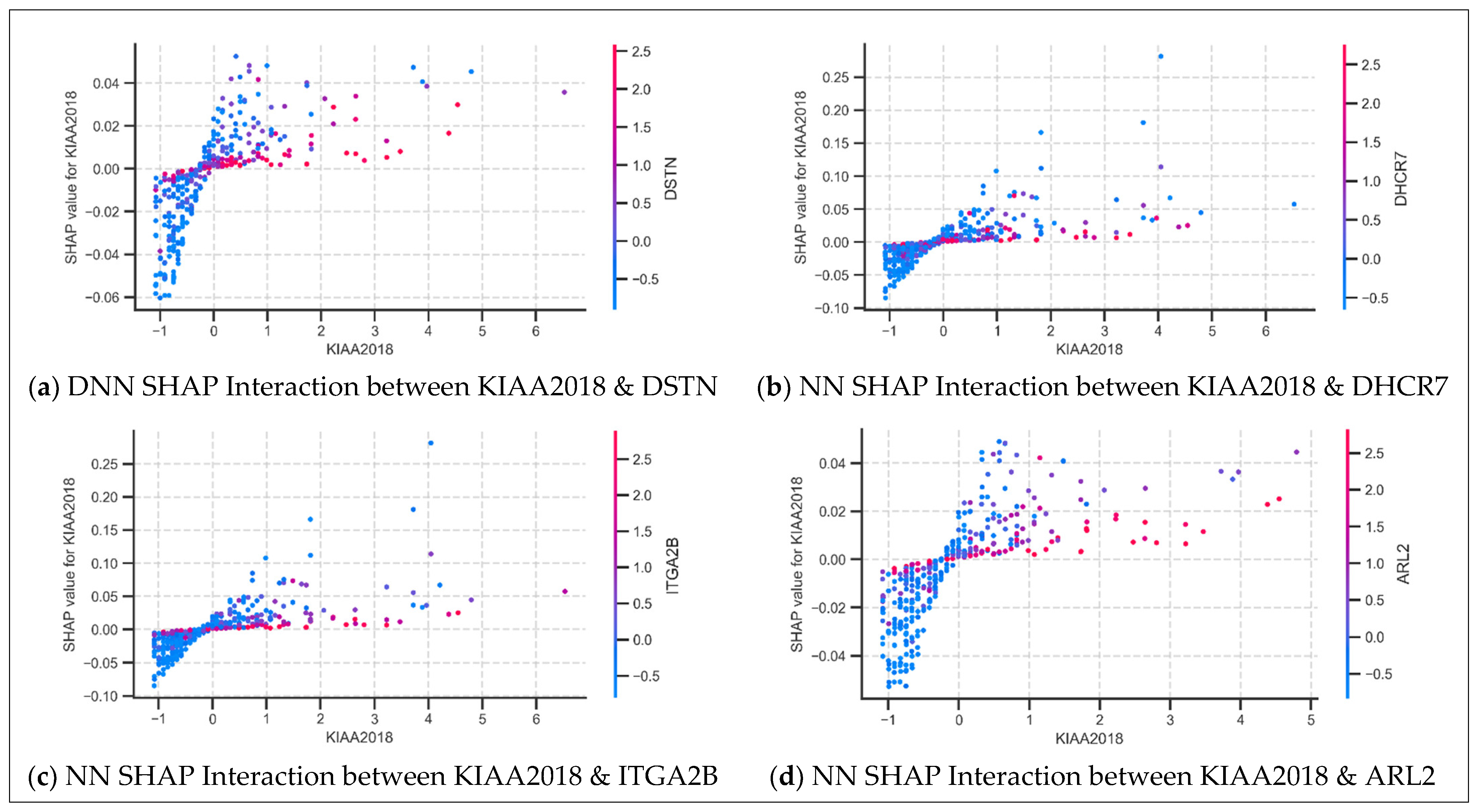

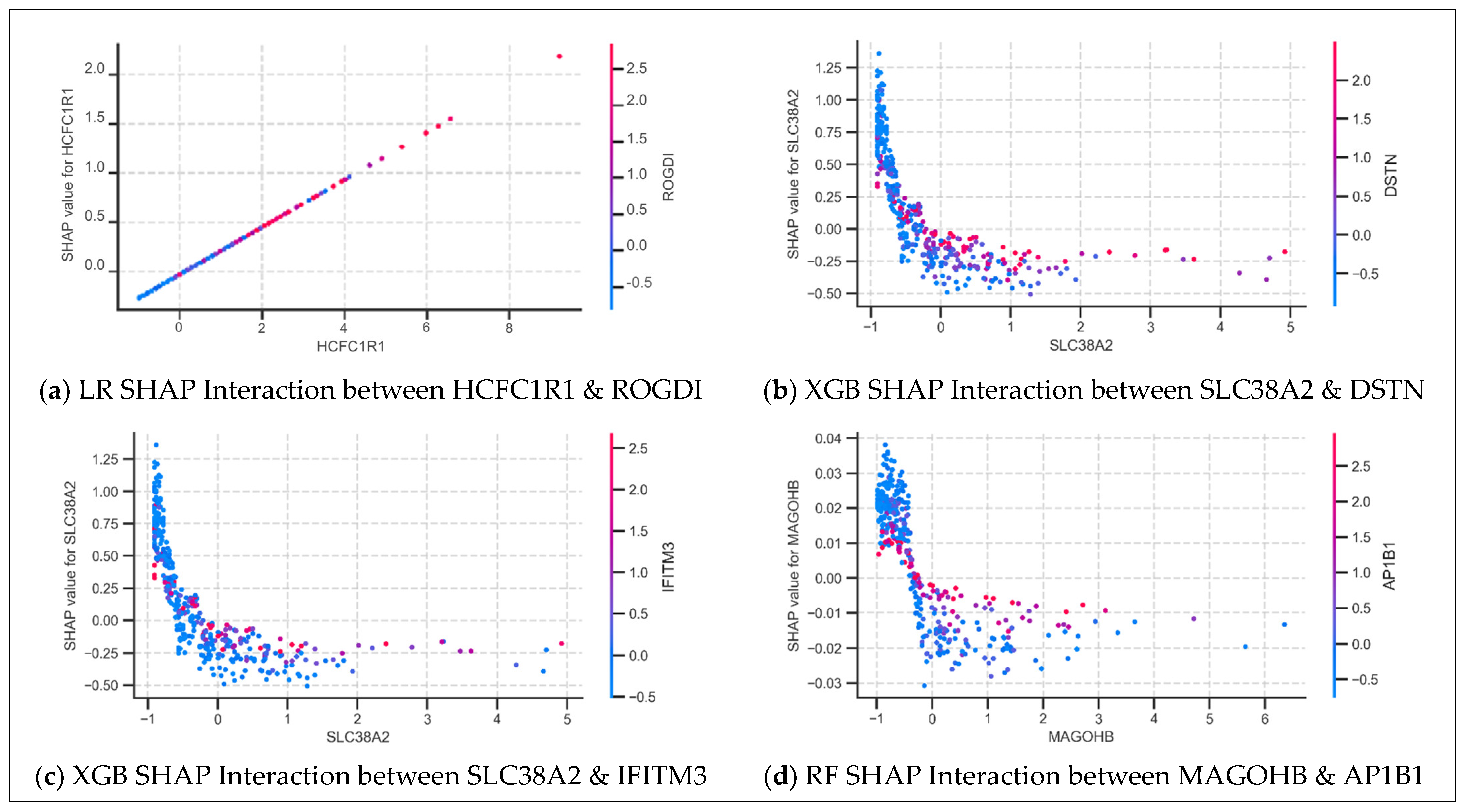

3.4.2. SHAP Dependence Plot Analysis: Feature Interactions

3.4.3. Local Interpretation Comparison Across Models

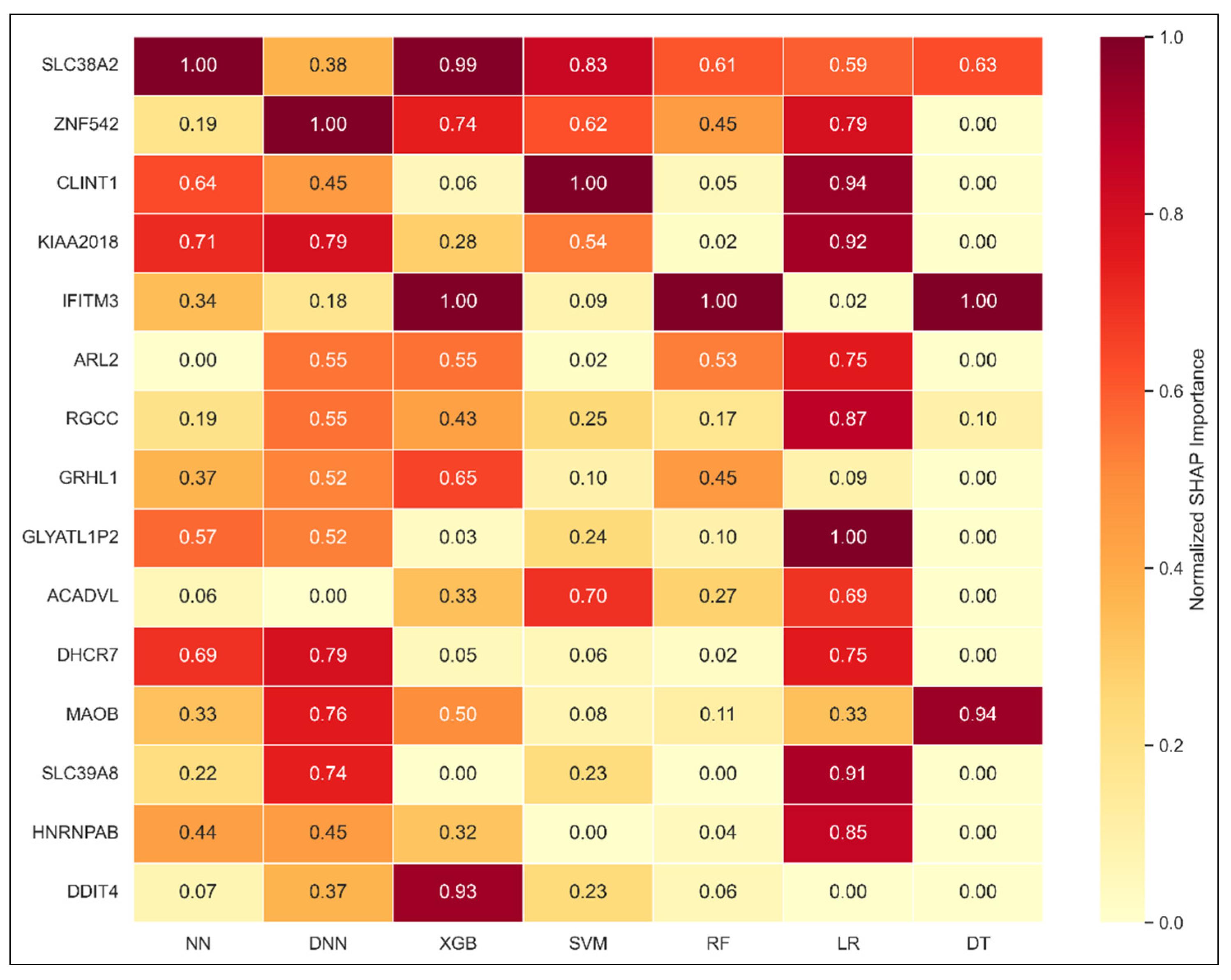

3.4.4. Weighted SHAP Aggregation: Consensus Gene Importance

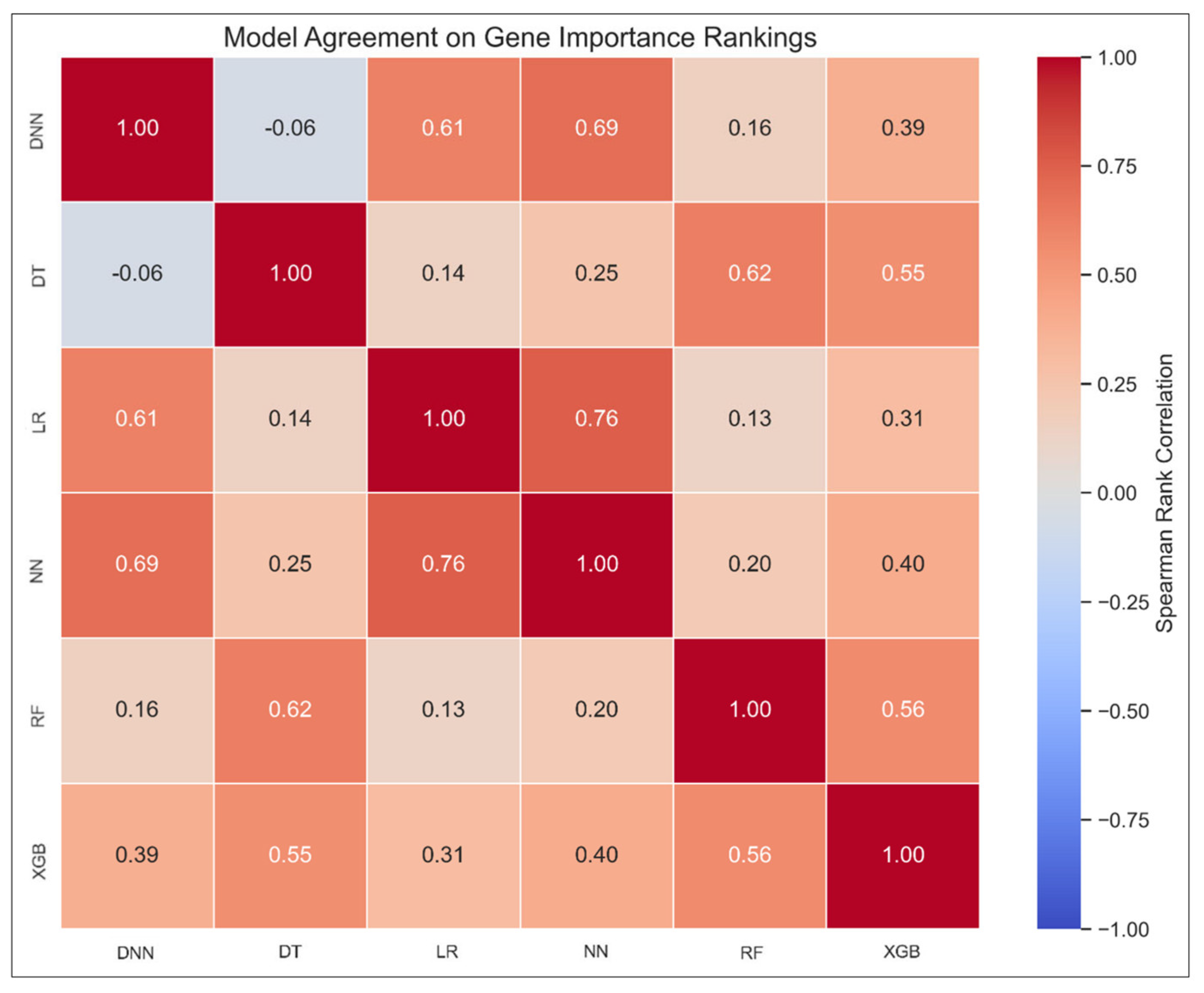

3.4.5. Model Agreement Analysis via Spearman Correlation
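Agreement between two models can be quantified by Spearman's rank correlation between their global gene-importance vectors, for example mean absolute SHAP values over the same gene order. The sketch below computes all pairwise coefficients with SciPy's `spearmanr`. The helper name is hypothetical, and the importance values are invented for illustration.

```python
import itertools

from scipy.stats import spearmanr


def model_agreement(importance_by_model):
    """Pairwise Spearman rank correlation between models' global importance vectors.

    importance_by_model: dict name -> per-gene importance, all in the same gene order.
    """
    rho = {}
    for a, b in itertools.combinations(list(importance_by_model), 2):
        r, _ = spearmanr(importance_by_model[a], importance_by_model[b])
        rho[(a, b)] = float(r)
    return rho


# Hypothetical mean |SHAP| values for four genes
importance = {
    "RF": [0.50, 0.30, 0.10, 0.05],
    "XGB": [0.40, 0.35, 0.05, 0.20],
    "LR": [0.05, 0.10, 0.30, 0.50],
}
for pair, r in model_agreement(importance).items():
    print(pair, round(r, 2))
```

A coefficient near 1 means two models rank genes similarly. A negative value means their rankings are largely reversed.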

3.5. Model Performance on External Validation

4. Discussion

4.1. Model Interpretability and Behavior

4.2. Biological Interpretation of Key Genes

4.2.1. Top Predictive Genes

4.2.2. Conditional Regulators and Modulators

4.3. Explainability and Clinical Interpretability

4.4. Methodological Contributions

4.5. Limitations and Future Directions

4.5.1. Data Scarcity, Cohort Diversity, and Early-Stage Representation

4.5.2. Domain Adaptability and Generalization

4.5.3. Model Explainability and Stratified Insights

4.5.4. Functional Validation and Network Reliability

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ANOVA | Analysis of Variance |
| AP | Average Precision |
| AUC | Area Under the Receiver Operating Characteristic Curve |
| CCAAT | Cytosine–Cytosine–Adenosine–Adenosine–Thymidine |
| cfDNA | Cell-Free Deoxyribonucleic Acid |
| cfRNA | Cell-Free Ribonucleic Acid |
| DNA | Deoxyribonucleic Acid |
| DNN | Deep Neural Network |
| DT | Decision Tree |
| EMT | Epithelial–Mesenchymal Transition |
| eQTL | Expression Quantitative Trait Locus |
| GEO | Gene Expression Omnibus |
| HPA | The Human Protein Atlas |
| IQR | Interquartile Range |
| LIME | Local Interpretable Model-Agnostic Explanations |
| LR | Logistic Regression |
| MCED | Multi-Cancer Early Detection |
| ML | Machine Learning |
| NN | Neural Network |
| PBMC | Peripheral Blood Mononuclear Cell |
| ReLU | Rectified Linear Unit |
| RF | Random Forest |
| RNA | Ribonucleic Acid |
| ROC | Receiver Operating Characteristic |
| SHAP | SHapley Additive exPlanations |
| SLSQP | Sequential Least Squares Quadratic Programming |
| SMOTE | Synthetic Minority Over-sampling Technique |
| SVM | Support Vector Machine |
| TCGA | The Cancer Genome Atlas |
| TEP | Tumor-Educated Platelets |
| XAI | Explainable Artificial Intelligence |
| XGB | Extreme Gradient Boosting |


| Parameter | NN/DNN Configuration |
|---|---|
| Random seed | 42 |
| Input layer size | One node per feature in the dataset |
| No. of hidden layers | NN: 1; DNN: 2 |
| Activation function | Hidden: ReLU; Output: sigmoid |
| Optimizer | Adam (defaults: β1 = 0.9, β2 = 0.999, ε = 1 × 10⁻⁷) |
| Loss | Binary cross-entropy |
| Metric | Area under the ROC curve (AUC) |
| Early stopping: monitored quantity | Validation loss |
| Early stopping: patience | 5 epochs |
| Early stopping: restore best weights | Yes |
| Learning rate | Tuned in {0.001, 0.0005} |
| Epochs | Tuned in {20, 30} |
| Batch size | Tuned in {32, 64} |
| Dropout rate | Tuned in {0.1, 0.3} |
| Hidden units | Tuned in {64, 128} |
| Search method | RandomizedSearchCV |
| No. of iterations | NN: 8; DNN: 5 |
| Cross-validation | StratifiedKFold |
| No. of folds | 5 |
| Shuffle | Yes |
| Weight initialization (Keras default) | Kernels: Glorot uniform; biases: zeros |
| Resampling method | SMOTE |
| Feature scaling | StandardScaler (zero mean, unit variance) |
| Classifier | KerasClassifier (SciKeras wrapper for Keras/TensorFlow models) |
| Pipeline order | (1) SMOTE; (2) StandardScaler; (3) KerasClassifier |
| Software | SciKeras, scikit-learn, imbalanced-learn |
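The pipeline in the table uses imbalanced-learn's SMOTE. To show what that resampling step does, here is a simplified NumPy re-implementation for the binary case; it is not the imbalanced-learn code. Each synthetic sample is placed at a random point on the segment between a minority-class sample and one of its k nearest minority neighbors. The function name and toy data are hypothetical.

```python
import numpy as np


def smote(X, y, minority=1, k=5, seed=42):
    """Simplified binary SMOTE (illustrative re-implementation, not imbalanced-learn).

    Adds synthetic minority samples until both classes are the same size. Each new
    sample lies on the segment between a random minority sample and one of its k
    nearest minority neighbors (Euclidean distance).
    """
    rng = np.random.default_rng(seed)
    X_min = X[y == minority]
    n_new = int(np.sum(y != minority)) - len(X_min)
    if n_new <= 0:
        return X, y
    k = min(k, len(X_min) - 1)                       # needs at least 2 minority samples
    dist = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=-1)
    np.fill_diagonal(dist, np.inf)                   # a point is not its own neighbor
    neighbors = np.argsort(dist, axis=1)[:, :k]
    base = rng.integers(0, len(X_min), size=n_new)   # seed sample for each new point
    nb = neighbors[base, rng.integers(0, k, size=n_new)]
    gap = rng.random((n_new, 1))                     # position along the segment, in [0, 1)
    X_new = X_min[base] + gap * (X_min[nb] - X_min[base])
    return np.vstack([X, X_new]), np.concatenate([y, np.full(n_new, minority)])


# Hypothetical imbalanced toy data: 20 majority (0) and 6 minority (1) samples
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 1.0, (20, 2)), rng.normal(3.0, 1.0, (6, 2))])
y = np.array([0] * 20 + [1] * 6)
X_res, y_res = smote(X, y, minority=1, k=3)
print(np.bincount(y_res))                            # [20 20]
```

In imbalanced-learn's `Pipeline`, samplers such as SMOTE run only when the pipeline is fitted. Within `StratifiedKFold`, the synthetic samples are therefore generated from the training folds only, and validation folds are scored on real samples.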

| Model | AUC | F1 | AP | Sensitivity | Specificity | Precision | Accuracy | Balanced Accuracy |
|---|---|---|---|---|---|---|---|---|
| NN | 0.928 | 0.920 | 0.983 | 0.917 | 0.679 | 0.923 | 0.872 | 0.798 |
| DNN | 0.925 | 0.920 | 0.982 | 0.920 | 0.667 | 0.920 | 0.872 | 0.794 |
| XGB | 0.916 | 0.924 | 0.977 | 0.991 | 0.359 | 0.866 | 0.869 | 0.675 |
| SVM | 0.914 | 0.919 | 0.978 | 0.933 | 0.590 | 0.905 | 0.867 | 0.761 |
| RF | 0.909 | 0.922 | 0.975 | 0.942 | 0.577 | 0.903 | 0.872 | 0.759 |
| LR | 0.905 | 0.912 | 0.977 | 0.905 | 0.667 | 0.919 | 0.859 | 0.786 |
| DT | 0.787 | 0.772 | 0.923 | 0.657 | 0.808 | 0.935 | 0.686 | 0.733 |
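The metrics in the table can be computed from predicted labels and scores with scikit-learn. The sketch below assumes binary labels with the positive (cancer) class coded as 1 and a 0.5 decision threshold; both are assumptions. Specificity has no dedicated scikit-learn function, so it is derived from the confusion matrix. The helper name and data are hypothetical.

```python
import numpy as np
from sklearn.metrics import (accuracy_score, average_precision_score,
                             balanced_accuracy_score, confusion_matrix, f1_score,
                             precision_score, recall_score, roc_auc_score)


def evaluate(y_true, y_score, threshold=0.5):
    """Table metrics for a binary classifier; positive (cancer) class assumed coded as 1."""
    y_pred = (np.asarray(y_score) >= threshold).astype(int)   # assumed 0.5 cut-off
    tn, fp, fn, tp = confusion_matrix(y_true, y_pred, labels=[0, 1]).ravel()
    return {
        "AUC": roc_auc_score(y_true, y_score),
        "F1": f1_score(y_true, y_pred),
        "AP": average_precision_score(y_true, y_score),
        "Sensitivity": recall_score(y_true, y_pred),          # tp / (tp + fn)
        "Specificity": tn / (tn + fp),
        "Precision": precision_score(y_true, y_pred),
        "Accuracy": accuracy_score(y_true, y_pred),
        "Balanced Accuracy": balanced_accuracy_score(y_true, y_pred),
    }


# Hypothetical labels and predicted probabilities
y_true = [1, 1, 1, 1, 0, 0]
y_score = [0.9, 0.8, 0.6, 0.4, 0.3, 0.7]
metrics = evaluate(y_true, y_score)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

For binary labels, balanced accuracy is the mean of sensitivity and specificity. This matches the table within rounding; for NN, (0.917 + 0.679)/2 = 0.798.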

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hajjar, M.; Aldabbagh, G.; Albaradei, S. Interpretable Multi-Cancer Early Detection Using SHAP-Based Machine Learning on Tumor-Educated Platelet RNA. Diagnostics 2025, 15, 2216. https://doi.org/10.3390/diagnostics15172216

Hajjar M, Aldabbagh G, Albaradei S. Interpretable Multi-Cancer Early Detection Using SHAP-Based Machine Learning on Tumor-Educated Platelet RNA. Diagnostics. 2025; 15(17):2216. https://doi.org/10.3390/diagnostics15172216

Chicago/Turabian Style: Hajjar, Maryam, Ghadah Aldabbagh, and Somayah Albaradei. 2025. "Interpretable Multi-Cancer Early Detection Using SHAP-Based Machine Learning on Tumor-Educated Platelet RNA" Diagnostics 15, no. 17: 2216. https://doi.org/10.3390/diagnostics15172216

APA Style: Hajjar, M., Aldabbagh, G., & Albaradei, S. (2025). Interpretable Multi-Cancer Early Detection Using SHAP-Based Machine Learning on Tumor-Educated Platelet RNA. Diagnostics, 15(17), 2216. https://doi.org/10.3390/diagnostics15172216