Abstract

This paper is concerned with the problem of state estimation of memristor neural networks with model uncertainties. Since the model uncertainties are composed of time-varying delays, floating parameters and unknown functions, an improved method based on long short term memory neural networks (LSTMs) is used to deal with them. It is proved that the improved LSTMs can approximate any continuous nonlinear model to arbitrary accuracy. On this basis, adaptive updating laws of the weights of the improved LSTMs are proposed by using the Lyapunov method. Furthermore, for the problem of state estimation of memristor neural networks, a new full-order state observer is proposed to reconstruct the states from the measurement output of the system. The state estimation error is proved to be asymptotically stable by using the Lyapunov method and linear matrix inequalities. Finally, two numerical examples are given, and the simulation results demonstrate the effectiveness of the scheme, especially for memristor neural networks with model uncertainties.

1. Introduction

Since Chua proposed the memristor in 1971 [1], memristors have been widely used in many fields. Memristor crossbar arrays realize vector–matrix multiplication, and a neural network can be implemented on them with a corresponding coding scheme. Various neural networks based on memristor hardware have developed rapidly. Because memristor neural networks can retain memorized information, they are particularly suitable for self-adaptation, nonlinear systems, self-learning and associative storage, and they are widely used in brain simulation, pattern recognition, neuromorphic computing, knowledge acquisition and various hardware applications involving neural networks [2,3,4,5,6,7,8,9,10,11,12,13,14,15]. To name a few, [16] demonstrated transistor-free metal-oxide memristor crossbars whose device variability is sufficiently low to allow the operation of integrated neural networks, using a simple network: a single-layer perceptron (an algorithm for linear classification). In [17], a structure suppressing the overshoot current was investigated to approach the conditions required of an ideal synapse in a neuromorphic system. In [18], fully memristive artificial neural networks were built by using diffusive memristors based on silver nanoparticles in a dielectric film. The electrical properties and conduction mechanism of a fabricated IGZO-based memristor device in a 10 × 10 crossbar array were analyzed in [19]. The operation of a one-hidden-layer perceptron classifier entirely in mixed-signal integrated hardware was demonstrated in [20]. Research on memristor neural networks is therefore both necessary and meaningful. Although many papers have extended memristor neural networks and solved some problems, open problems remain.
Memristor neural networks, including their various variants, therefore have broad market prospects. In particular, research on memristor neural networks with model uncertainties has become a hot topic.

In recent decades, researchers have carried out a large amount of research on memristor neural networks. The results can be broadly divided into four categories: (1) stability analysis of memristor neural networks [21,22,23,24,25]; (2) state estimation of memristor neural networks [26,27,28,29]; (3) synchronization of memristor neural networks [30,31,32]; (4) control of memristor neural networks [33,34,35]. In practice, time-varying delays inevitably exist in the hardware implementation of memristor neural networks. Because of time-varying delays, the future states of the system are affected by its past states, which can lead to instability and poor control performance. Consequently, state estimation of memristor neural networks is of great research value, and a large part of the research has focused on it. Note that the above results are generally based on known structures and parameters of memristor neural networks without model uncertainties. In practice, the hardware implementation of memristor neural networks usually fails to attain the ideal design values, and design deviations arise. In particular, model uncertainties often exist in the hardware implementation of memristor neural networks, so model uncertainties and model errors are common in hardware memristor neural networks. Affected by model uncertainties, state estimation of memristor neural networks becomes a challenging problem. In view of the above analysis, it is necessary to study state estimation of memristor neural networks with model uncertainties.

Much valuable research on state estimation of memristor neural networks with model uncertainties can be found in [26,27,28,29,36,37,38]. In [26], passivity theory was used to deal with the state estimation problem of memristor-based recurrent neural networks with time-varying delays. By using a Lyapunov–Krasovskii functional (LKF), the convex combination technique and the reciprocal convexity technique, a delay-dependent state estimation matrix was established, and the desired estimator gain matrix was obtained by solving linear matrix inequalities (LMIs). A limitation is that the system model must be fully determined and the functions in the system must be known. In [27], for memristor neural networks with randomness, the random system was transformed into an interval parameter system in the Filippov sense, and an H∞ state observer was designed on this basis. One limitation of that paper is that the system is affected by random interference rather than by model uncertainty, and such random interference is regular and bounded. In [28], for memristor-based bidirectional associative memory neural networks with additive time-varying delays, a state estimation matrix was constructed by selecting an appropriate LKF and using a Cauchy–Schwarz-based summation inequality, and the gain matrix was obtained from LMIs. That paper has the same limitation mentioned above. In [29], for a class of memristor neural networks with different types of inductance functions and uncertain time-varying delays, a state estimation matrix was constructed by selecting a suitable LKF, and the gain matrix was solved by using LMIs and a Wirtinger-type inequality. Model uncertainty is considered in that paper, but only in the time-varying delays. In [36], an extended dissipative state observer was proposed by using nonsmooth analysis and a new LKF.
In [37], based on the basic properties of quaternions, a state observer was designed for quaternion-valued memristor neural networks, and algebraic conditions were given to ensure global dissipativity. The methods proposed in [36,37] are not suitable for memristor neural networks with model uncertainties. In [38], for memristor neural networks with random sampling, the randomness was represented by two different sampling periods whose occurrence satisfies a Bernoulli distribution. The random sampling system was transformed into a system with random parameters by using an input delay method, and a state observer was then designed based on LMIs and an LKF. The above discussion shows that similar methods are used to estimate the states of memristor neural networks: an appropriate LKF is selected, the state observation matrix is constructed based on the structure of the system, and the gain matrix is obtained by solving LMIs. Most studies on state estimation of memristor neural networks therefore share the same limitation: the system must not contain model uncertainties. Some studies include model uncertainties, but only in the time-varying delays; others include model uncertainties, but only as parameter fluctuations. There are few studies on state estimation of memristor neural networks whose model uncertainties include time-varying delays, floating parameters and unknown functions, so this problem has considerable research potential.

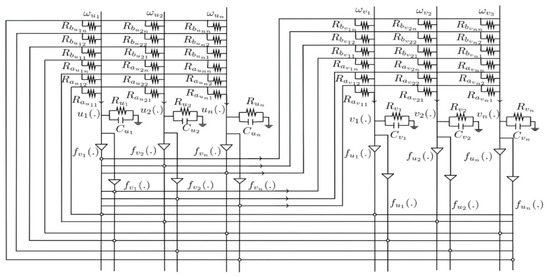

When memristor neural networks are designed and translated into hardware by the designer, the model uncertainties of the system include only time-varying delays and floating parameters. In practice, however, this is not the only situation. Sometimes it is necessary to analyze memristor neural networks designed by others, and then the model uncertainties include time-varying delays, floating parameters and unknown functions. The memristor neural networks can be realized by the circuit shown in Figure 1 [28]. Motivated by the above discussion, the main concern of this paper is to design a state observer for memristor neural networks with model uncertainties that include time-varying delays, floating parameters and unknown functions. The model uncertainties are composed of current states, past states and unknown functions. To approximate model uncertainties that contain memory information, improved long short term memory neural networks (LSTMs) are proposed. It is proved theoretically that the improved LSTMs can approximate the model uncertainties to arbitrary accuracy. Memristor neural networks with model uncertainties can then be transformed into a new system with improved LSTMs. On this basis, a full-order state observer is designed according to the output of the system. An error matrix of the states is constructed by a designed LKF, and the gain matrix is solved by LMIs. To make the new system more accurate, a new error matrix of the states is constructed by using Young’s inequality based on an LKF. On this basis, adaptive updating laws of the weights of the improved LSTMs are designed to reduce the state errors. The main contributions of this paper are as follows.

Figure 1.

Circuit of memristor neural networks [28].

- Improved LSTMs are proposed for memristor neural networks with model uncertainties, and it is proved that they can approximate the model uncertainties in memristor neural networks well. Here the model uncertainties include time-varying delays, floating parameters and unknown functions, which, to the best of our knowledge, have not been considered together in other studies.

- By utilizing LMIs and an LKF, a full-order observer based on the output of the system is presented to obtain state information and solve the state estimation problem.

- By using Young’s inequality and a designed LKF, adaptive updating laws of the weights of the improved LSTMs are given so that the new system with improved LSTMs is obtained precisely.

This paper is organized as follows. In Section 2, the problem is formulated, and several essential assumptions and lemmas are listed. Section 3 presents the primary theorems, including improved LSTMs, observer design for memristor neural networks with model uncertainties, and adaptive updating laws of the weights of improved LSTMs. In Section 4, the effectiveness of the proposed scheme is demonstrated through numerical examples. Finally, the conclusions are drawn in Section 5.

Notation: denotes the n-dimensional Euclidean space. For a given matrix or vector , and denote their transpose, and denotes its trace. indicates a negative definite matrix.

2. Preliminaries

Consider the following memristor neural networks, whose model can also be found in [26,27,28,36,37],

where represents the state variable of the memristor neural networks, and n is the system dimension; is the self-feedback coefficient, which satisfies ; and represent the activation functions of states and respectively; represents the memristive synaptic connection weight between states and , and represents the memristive synaptic connection weight between states and ; denotes the time-varying delay which satisfies , and is the upper bound constant; denotes the input of the system, and represents the measurement output of the system; is the measurement constant from state to output , and m is the output dimension.

The system (1) can be represented in vector form,

where , , , , , , , , .
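As a minimal sketch for readers who wish to reproduce simulations of this type, the code below integrates a delayed network with the forward Euler method. Because the equations of systems (1) and (2) are not reproduced in this text, it assumes the standard form common in the memristor neural network literature, `x'(t) = -D x(t) + A f(x(t)) + B g(x(t - tau(t))) + u(t)` with `y(t) = C x(t)`, uses tanh for both activations, and freezes the memristive weights at fixed values (their state-dependent switching is ignored). The function name, delay profile and all numbers are hypothetical.

```python
import numpy as np


def simulate_delayed_mnn(D, A, B, C, u, tau, tau_max, x0, dt=1e-3, t_end=10.0):
    """Forward-Euler simulation of an assumed delayed network model.

    Assumed model (weights frozen at fixed values, tanh activations):
        x'(t) = -D x(t) + A tanh(x(t)) + B tanh(x(t - tau(t))) + u(t)
        y(t)  = C x(t)
    The initial history on [-tau_max, 0] is taken to be constant and equal to x0.
    """
    n_steps = int(round(t_end / dt))
    n_hist = int(np.ceil(tau_max / dt))
    # Buffer: n_hist history samples, then the simulated trajectory from t = 0.
    x = np.zeros((n_hist + n_steps + 1, len(x0)))
    x[: n_hist + 1] = x0
    for k in range(n_steps):
        t = k * dt
        now = n_hist + k
        # Delay rounded to the time grid and clipped to the assumed bound [0, tau_max].
        lag = int(round(min(max(tau(t), 0.0), tau_max) / dt))
        x_now, x_delayed = x[now], x[now - lag]
        dx = -D @ x_now + A @ np.tanh(x_now) + B @ np.tanh(x_delayed) + u(t)
        x[now + 1] = x_now + dt * dx
    traj = x[n_hist:]          # states at t = 0, dt, ..., t_end
    return traj, traj @ C.T    # states and measured outputs


# Hypothetical 2-dimensional example (all values are illustrative only).
D = np.diag([1.0, 1.0])
A = np.array([[0.5, -0.2], [0.3, 0.4]])
B = np.array([[-0.3, 0.1], [0.2, -0.2]])
C = np.array([[1.0, 0.5]])
traj, y = simulate_delayed_mnn(
    D, A, B, C,
    u=lambda t: np.array([np.sin(t), np.cos(t)]),
    tau=lambda t: 0.5 + 0.5 * np.sin(t) ** 2,   # 0.5 <= tau(t) <= 1.0
    tau_max=1.0,
    x0=np.array([0.5, -0.5]),
)
```

Rounding the delay to the time grid keeps the sketch short; an interpolating history buffer would be preferable for accurate integration when `tau(t)` varies quickly.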

As mentioned in the introduction, in most studies the model uncertainties include only floating parameters. When neural networks are implemented in hardware as memristor neural networks, the memristive synaptic connection weights and deviate from their design values [28]. The fluctuation of the parameters and is regarded as model uncertainty. This is the starting point of much research on state estimation of memristor neural networks, such as [26,27,28,36,37,38]. Some studies regard the time-varying delay as model uncertainty and study state estimation of memristor neural networks on that basis, for example [29]. It should be noted that the model uncertainties in all the above studies do not include and . Both and must be known, and and fluctuate within the ideal range. If and are unknown and the ideal values of and are unknown, the model uncertainties include the floating parameters and , the time-varying delay and the unknown functions and , and none of the above studies is applicable. State estimation of memristor neural networks with model uncertainties including floating parameters, time-varying delays and unknown functions is the main concern of this paper.

Remark 1.

In other studies, model uncertainties only include floating parameters and or time-varying delay . Functions and must be known. In this paper, model uncertainties include floating parameters and , time-varying delay and unknown functions and .

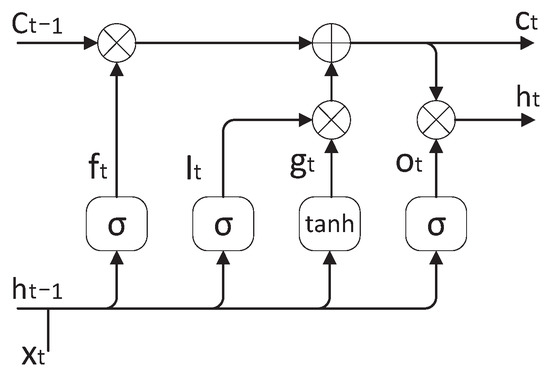

As shown in system (2), the model uncertainties contain memory information . LSTMs are therefore well suited to dealing with the model uncertainties. LSTMs are networks of basic LSTM cells, and the architecture of a conventional LSTM cell is illustrated in Figure 2. An LSTM cell consists of a memory cell, an input gate, an output gate and a forgetting gate. The forgetting gate, input gate and output gate determine whether historical information, input information and output information are retained, respectively [39]. The specific computation is shown in Equation (3).

where denotes the forgetting gate; and represent input gate and output gate, respectively; is the updating vector of the LSTM cell; is the hidden state vector; is the hidden state vector at step ; is the input vector of the LSTM cell; is the state vector of the cell; is the state vector of the cell at step ; is the weight matrix and refers to the bias vector; and are the sigmoid and tanh activation functions, respectively; ⊗ and ⊕ represent elementwise multiplication and addition, respectively.

Figure 2.

Schematic diagram of a basic LSTM cell.
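Equation (3) is not reproduced in this text. The sketch below implements one step of a conventional LSTM cell in the widely used formulation where every gate acts on the concatenation of the previous hidden state and the current input; we assume this matches Equation (3). The stacked weight layout, the function names and the random weights are illustrative choices, not taken from the paper.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_cell_step(x_t, h_prev, c_prev, W, b):
    """One step of a conventional LSTM cell.

    W maps the concatenation [h_prev, x_t] to the stacked pre-activations of the
    forgetting gate, input gate, output gate and updating vector (in that order);
    b is the matching stacked bias vector.
    """
    k = h_prev.shape[0]                      # number of hidden units
    z = W @ np.concatenate([h_prev, x_t]) + b
    f = sigmoid(z[:k])                       # forgetting gate
    i = sigmoid(z[k:2 * k])                  # input gate
    o = sigmoid(z[2 * k:3 * k])              # output gate
    c_tilde = np.tanh(z[3 * k:])             # updating vector
    c_t = f * c_prev + i * c_tilde           # elementwise product and sum
    h_t = o * np.tanh(c_t)                   # hidden state
    return h_t, c_t


# Run a random cell over a short input sequence (illustrative values only).
rng = np.random.default_rng(0)
n_in, n_hidden = 2, 3
W = rng.normal(scale=0.5, size=(4 * n_hidden, n_hidden + n_in))
b = np.zeros(4 * n_hidden)
h, c = np.zeros(n_hidden), np.zeros(n_hidden)
for x_t in np.array([[0.1, -0.2], [0.3, 0.0], [-0.1, 0.4]]):
    h, c = lstm_cell_step(x_t, h, c, W, b)
```

With all weights and biases set to zero, every gate equals 0.5 and the updating vector is zero, so the cell state is simply halved at each step; this is a convenient sanity check of the gate wiring.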

Remark 2.

A conventional LSTM cell is not entirely suitable for estimating the states of memristor neural networks with model uncertainties. It needs to be improved to reduce computation and to better suit state estimation.

Moreover, to improve the LSTMs, design the state observer of memristor neural networks with model uncertainties and derive the updating laws of the weights of the improved LSTMs, the following assumptions and lemmas are introduced for the subsequent proofs.

Assumption 1.

The functions and satisfy local Lipschitz conditions. For all , we have and , where and are Lipschitz constants that satisfy .

Since and are the activation functions of memristor neural networks, Assumption 1 generally holds.

Lemma 1

([40]). Let be a continuous function defined on a set Ω. A multilayer neural network can be defined as,

where and are the second weight matrix and the first weight vector of the multilayer neural network, respectively; is its input vector, and is its activation function.

Then, for a given desired level of accuracy , there exist ideal weights and such that the following inequality holds,
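Lemma 1 is an existence result. As a purely numerical illustration (not part of the paper's argument, and not the construction used in [40]), the sketch below fixes random first-layer weights and computes the output weights by least squares so that a one-hidden-layer tanh network approximates a continuous function on a bounded set. The target function `sin` on [−π, π], the layer width and the weight ranges are arbitrary assumptions.

```python
import numpy as np


def fit_one_hidden_layer(f, x, n_hidden=50, seed=0):
    """Fit W^T tanh(V^T [x; 1]) to f on the sample points x.

    The first-layer weights V (an input-weight row and a bias row) are drawn at
    random and kept fixed; only the output weights W are found by least squares.
    """
    rng = np.random.default_rng(seed)
    V = rng.uniform(-3.0, 3.0, size=(2, n_hidden))      # rows: input weight, bias
    X = np.column_stack([x, np.ones_like(x)])           # augmented input [x, 1]
    H = np.tanh(X @ V)                                  # hidden-layer outputs
    W, *_ = np.linalg.lstsq(H, f(x), rcond=None)        # output weights
    return lambda z: np.tanh(np.column_stack([z, np.ones_like(z)]) @ V) @ W


x_train = np.linspace(-np.pi, np.pi, 200)
f_hat = fit_one_hidden_layer(np.sin, x_train)
x_test = np.linspace(-np.pi, np.pi, 1001)
max_error = np.max(np.abs(f_hat(x_test) - np.sin(x_test)))
```

Here `max_error` plays the role of the accuracy level in Lemma 1; the lemma guarantees that suitable weights exist for any positive level, whereas this sketch only finds one set of weights numerically.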

Lemma 2.

(Young’s inequality) For all , the following inequality holds,

where , , , and .
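The precise statement of Lemma 2 is not reproduced here, so which form is meant is our assumption. The sketch below numerically checks two standard forms of Young's inequality: the scalar form `a*b <= a^p/p + b^q/q` for `a, b >= 0`, `p, q > 1`, `1/p + 1/q = 1`, and the vector form `2 x^T y <= x^T L x + y^T L^{-1} y` for a positive definite diagonal `L`, which is the kind of bound combined with a positive definite diagonal matrix in Appendix B. A random check is not a proof; the vector form follows from expanding `(L^{1/2} x - L^{-1/2} y)^T (L^{1/2} x - L^{-1/2} y) >= 0`.

```python
import random


def young_gap(a, b, p):
    """a**p/p + b**q/q - a*b with 1/p + 1/q = 1 (nonnegative for a, b >= 0, p > 1)."""
    q = p / (p - 1.0)
    return a ** p / p + b ** q / q - a * b


def vector_young_gap(x, y, lam):
    """x^T L x + y^T L^{-1} y - 2 x^T y for L = diag(lam) with all lam > 0."""
    return sum(l * xi * xi + yi * yi / l - 2.0 * xi * yi
               for xi, yi, l in zip(x, y, lam))


random.seed(1)
scalar_min = min(
    young_gap(random.uniform(0, 3), random.uniform(0, 3), random.uniform(1.5, 3.0))
    for _ in range(10000)
)
vector_min = min(
    vector_young_gap([random.uniform(-5, 5) for _ in range(3)],
                     [random.uniform(-5, 5) for _ in range(3)],
                     [random.uniform(0.1, 10) for _ in range(3)])
    for _ in range(10000)
)
# Equality holds when a**p == b**q, e.g. a = b = 2 with p = q = 2.
equality_gap = young_gap(2.0, 2.0, 2.0)
```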

3. Main Result

In this section, the improved LSTMs, the state observer design for memristor neural networks with model uncertainties, and the adaptive updating laws of the weights of the improved LSTMs are discussed.

To begin with, system (2) can be rewritten as follows,

where is a vector of functions, which can be defined as , .

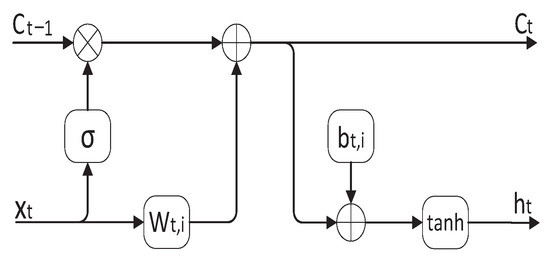

As mentioned in Remark 1, is the function vector of model uncertainties formed by the floating parameters and , the time-varying delay and the unknown functions and . To approximate the unknown function vector , improved LSTMs are proposed, and an improved LSTM cell is shown in Figure 3.

Figure 3.

Schematic diagram of an improved LSTM cell.

Comparing Figure 2 and Figure 3, it can be seen that the input gate and the hidden state vector at step have been removed. Since is part of in the form of a function vector, the input gate can be removed. should be part of the LSTM cell in the form of a tanh function. The reason why is removed is that contains , so the functions of can be merged into to save computation. The output gate is also removed, and is used as the output of the LSTM cell to simplify its structure. Therefore, the improved LSTM cell consists of the following parts: (1) the state vector of the system at time t and the weighted state vector of the system at time constitute the input of the improved LSTM cell; (2) is the vector that holds the state of the improved LSTM cell at time t; (3) is the output vector of the improved LSTM cell at time t; (4) is the forgetting function at time t, which controls whether the memory information stored by the improved LSTM cell at time is included in the computation of the improved LSTM cell at time t. The computation of the simplified, improved LSTM cell can be expressed as follows,

where denotes the weight vector of the ith cell and is a bias constant of the ith cell; represents the state vector at time t.

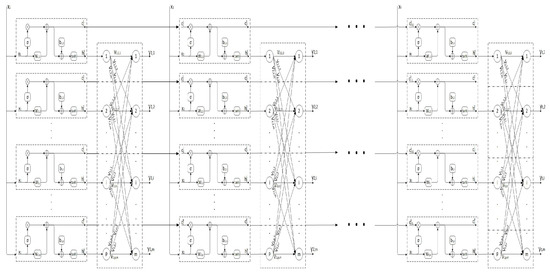

Based on the simplified, improved LSTM cell, the improved LSTMs are illustrated in Figure 4. In Figure 4, each column represents a neural network composed of p improved LSTM cells at time j. The outputs of the p improved LSTM cells pass through the weight matrix to obtain the output vector of the neural network at time j, which is used to approximate . The neural networks at different times are connected through and to form the neural networks over all times. represents the state vector at time j, and . denotes the output of the hidden state of the ith LSTM cell at time j, and p is the number of LSTM cells. represents the weight vector of the ith LSTM cell at time j. is the bias of the ith LSTM cell at time j. denotes the output of the state of the ith LSTM cell at time j. represents the weight coefficient from the output of the ith LSTM cell to the lth system output at time j, and . denotes the lth system output at time j. The following theorem shows that the improved LSTMs can approximate any continuous nonlinear function.

Figure 4.

Schematic diagram of the improved LSTMs.
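Figure 4 is described here only in words, and Equation (5) is not reproduced. As a minimal sketch of the readout stage alone, assuming for illustration that the output of the ith cell at time j is `tanh(w_i^T x(j) + b_i)` (the text states that the update enters the cell as a tanh function), the code below evaluates p cells on a sequence of state vectors and maps their outputs through an output weight matrix, one column of Figure 4 per time step. The recursive cell state and the forgetting function are deliberately omitted, the weights are taken as time-invariant although Figure 4 allows them to depend on j, and the function name and sizes are hypothetical.

```python
import numpy as np


def improved_lstm_readout(x_seq, w, b, W_out):
    """Map a state sequence through p cells and an output weight matrix.

    x_seq : (T, n) state vectors x(j), j = 0, ..., T-1
    w     : (p, n) cell weight vectors w_i (assumed time-invariant here)
    b     : (p,)   cell biases b_i
    W_out : (q, p) weight from the output of cell i to network output l
    Returns a (T, q) array holding the network outputs at every time j.
    """
    # Assumed cell output h_i(j) = tanh(w_i^T x(j) + b_i); the paper's
    # recursive cell state and forgetting function are not modeled here.
    h = np.tanh(x_seq @ w.T + b)   # shape (T, p)
    return h @ W_out.T             # shape (T, q)


rng = np.random.default_rng(1)
T, n, p = 5, 2, 4                  # hypothetical sizes
x_seq = rng.normal(size=(T, n))
out = improved_lstm_readout(x_seq, rng.normal(size=(p, n)),
                            rng.normal(size=p), rng.normal(size=(n, p)))
```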

Theorem 1.

Let be a continuous nonlinear function defined on a set Ω, and consider the improved LSTMs shown in Figure 4. Let be an approximation of based on the improved LSTMs. Then, for a given desired level of accuracy , there exist ideal weights and such that the following inequality holds,

The proof of Theorem 1 can be found in Appendix A.

Based on Theorem 1, the estimation system for system (4) can be defined as follows,

where denotes the observer gain matrix; is an estimated function vector of based on the improved LSTMs, which satisfies Theorem 1. is given in Equation (8),

where and denote the ideal weight matrices, and is the ideal bias vector.

The function is determined by the time-varying delay , which satisfies . It equals 1 in the range and 0 elsewhere, which ensures that all the data in the interval from to t are included in the calculation. Considering system (4) and estimation system (7), the error system can be obtained as follows,

Assumption 2.

For the unknown function and the estimated function , there exist Lipschitz constant vectors and , which satisfy the following inequality,

By Theorem 1, is an estimate of with finite error. Similarly, is a function of and . On this basis, and considering Assumption 1, Assumption 2 is reasonable.

Theorem 2.

Suppose that Assumption 2 holds for system (4) and estimation system (7). If there exist symmetric positive definite matrices , , , a diagonal matrix , a matrix and a real constant such that inequality (11) holds,

where , and .

then the error system (9) is asymptotically stable with the observer gain matrix calculated by . The proof of Theorem 2 can be found in Appendix B.
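Inequality (11) and the gain formula are not reproduced in this text, so the LMI itself cannot be restated. As a hedged illustration of the Lyapunov argument behind Theorem 2 only, the sketch below assumes an observer that adds the correction `L (y - C x_hat)` to a copy of `x' = -D x + ...`, so that the linear part of the error dynamics is `e' = (-D - L C) e`. For hypothetical `D`, `C` and a candidate gain `L`, it solves the Lyapunov equation `M^T P + P M = -Q` and checks that `P` is positive definite. It ignores the nonlinear, delayed and approximation-error terms that inequality (11) is designed to handle, and it verifies a given gain rather than synthesizing one, which requires an LMI solver.

```python
import numpy as np


def solve_lyapunov(M, Q):
    """Solve M^T P + P M = -Q for P using the column-major vec identity."""
    n = M.shape[0]
    I = np.eye(n)
    # vec(M^T P) = (I kron M^T) vec(P) and vec(P M) = (M^T kron I) vec(P).
    K = np.kron(I, M.T) + np.kron(M.T, I)
    p = np.linalg.solve(K, -Q.flatten(order="F"))
    return p.reshape((n, n), order="F")


D = np.diag([1.0, 2.0])            # hypothetical self-feedback coefficients
C = np.array([[1.0, 0.0]])         # hypothetical output matrix (m = 1, n = 2)
L = np.array([[2.0], [0.5]])       # hypothetical observer gain
M = -D - L @ C                     # linear part of the error dynamics
P = solve_lyapunov(M, np.eye(2))
P = 0.5 * (P + P.T)                # symmetrize against round-off
lyapunov_ok = bool(np.all(np.linalg.eigvalsh(P) > 0))
```

A positive definite `P` certifies that `V(e) = e^T P e` decreases along the linear error dynamics, which is the same mechanism the LKF in Appendix B extends to the delayed and nonlinear terms.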

Based on Theorem 2, the observer gain matrix can be obtained. In the function vector of system (7), the weight matrices and are ideal. In practice, the ideal weights are hard to select, so estimated weights must be adjusted by adaptive laws to approach the ideal weights. With reference to system (7), the estimated system can be redefined as follows,

where is an estimated function vector of .

is given in Equation (13),

where and are estimated weight matrices, and is an estimated bias vector.

With reference to the error system (9) and by using Equation (6), the error system can be obtained as follows,

where is an error vector.

For the error weight matrices and and the error weight vector , we have

Theorem 3.

For the error system (14), if the design parameters , and satisfy the following inequality

where ,

, ,

.

and the adaptive updating laws of the weights are given as follows,

then the error system (14) is asymptotically stable. The proof of Theorem 3 can be found in Appendix C.
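The adaptive laws (16) and the design quantities of Theorem 3 are not reproduced in this text. To illustrate only the general mechanism of a Lyapunov-based adaptive weight update, the sketch below uses a generic scalar example (a textbook construction, not the paper's law): a plant `x' = -a x + w* phi(x) + u` with a single unknown output weight `w*` and measured output `y = x`, an observer with gain `l`, and the update `w_hat' = gamma phi(x) (y - x_hat)`. With `e = x - x_hat` and `w~ = w* - w_hat`, the function `V = e^2/2 + w~^2/(2 gamma)` satisfies `V' = -(a + l) e^2 <= 0`, the same idea of combining the state error and the weight error in one Lyapunov function. All numbers, and the excitation `u = sin t` added so that the weight error also converges, are hypothetical.

```python
import math


def run_adaptive_observer(a=1.0, l=2.0, gamma=5.0, w_true=2.0, dt=1e-3, t_end=20.0):
    """Forward-Euler run of a generic scalar adaptive observer.

    Plant:    x'     = -a x + w_true * phi(x) + u(t),   y = x
    Observer: x_hat' = -a x_hat + w_hat * phi(x) + u(t) + l (y - x_hat)
    Law:      w_hat' = gamma * phi(x) * (y - x_hat)
    Returns the final state error, the final weight estimate and the history of
    V = e^2/2 + (w_true - w_hat)^2 / (2 gamma).
    """
    phi = math.sin
    x, x_hat, w_hat = 1.0, 0.0, 0.0
    V = []
    for k in range(int(round(t_end / dt))):
        t = k * dt
        u = math.sin(t)
        e = x - x_hat
        V.append(0.5 * e * e + (w_true - w_hat) ** 2 / (2.0 * gamma))
        dx = -a * x + w_true * phi(x) + u
        dx_hat = -a * x_hat + w_hat * phi(x) + u + l * e
        dw = gamma * phi(x) * e
        x, x_hat, w_hat = x + dt * dx, x_hat + dt * dx_hat, w_hat + dt * dw
    return x - x_hat, w_hat, V


e_final, w_hat_final, V_hist = run_adaptive_observer()
```

The weight law is chosen precisely so that the cross term `w~ phi(x) e` cancels in `V'`; the weight estimate converges to `w*` here only because `phi(x)` stays away from zero along the excited trajectory.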

Considering (16), the adaptive updating laws of the weights are determined by , which must therefore be an n-dimensional vector. According to (12), we have

If exists, can be obtained from (17) as follows,

In general, m is not equal to n, so does not exist and (18) cannot be used. To solve this problem, the following assumption is given.

Assumption 3.

and are continuously differentiable functions, and their first derivatives are bounded and measurable.

Theorem 4.

Under Assumption 3, if the given matrix is left invertible, can be obtained as follows,

where and . The proof of Theorem 4 can be found in Appendix D.
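The matrix in Theorem 4 and Equation (19) are not reproduced in this text. For reference, a matrix with at least as many rows as columns and full column rank is left invertible, and one left inverse is `(M^T M)^{-1} M^T`, which coincides with the Moore–Penrose pseudoinverse in that case. This is why a left inverse can exist even though, as noted above, an ordinary inverse does not when m is not equal to n. The sketch below computes it for a hypothetical 3 × 2 matrix.

```python
import numpy as np


def left_inverse(M):
    """Return (M^T M)^{-1} M^T, a left inverse of a full-column-rank matrix M."""
    if np.linalg.matrix_rank(M) < M.shape[1]:
        raise ValueError("matrix has no left inverse (column rank deficient)")
    # Solve (M^T M) X = M^T instead of forming the inverse explicitly.
    return np.linalg.solve(M.T @ M, M.T)


M = np.array([[1.0, 0.0], [0.0, 2.0], [1.0, 1.0]])   # hypothetical 3 x 2 matrix
M_left = left_inverse(M)
```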

Remark 3.

Based on Theorem 1, the estimated system (12) can be given. By using Theorem 2, the observer gain matrix can be obtained. By using Theorems 3 and 4, the adaptive updating laws of the weights can be obtained.

4. Simulation Analysis

In this section, two numerical examples are presented to verify the effectiveness of the above results.

4.1. Examples

Example 1. A 2-dimensional memristor neural network is considered, and the parameters of system (2) are given as follows,

Based on the system (2), the estimated system (17) can be designed as follows,

By using Theorem 2 and LMI tools, the parameters of the estimated system (17) can be obtained,

Set the sampling time and the sampling period . Considering (18), set and . Based on Theorems 3 and 4, set and to be negative unit vectors and to be a negative unit matrix.

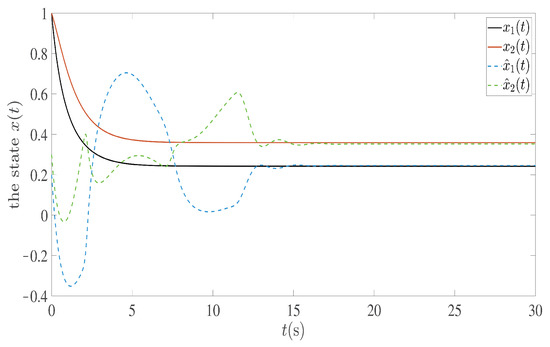

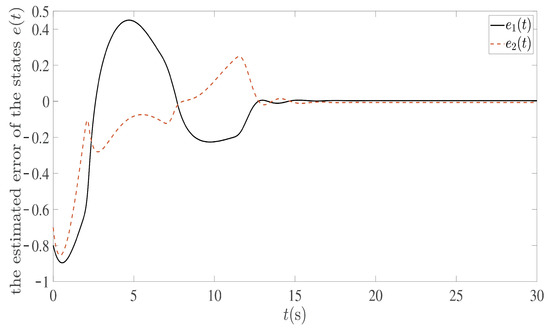

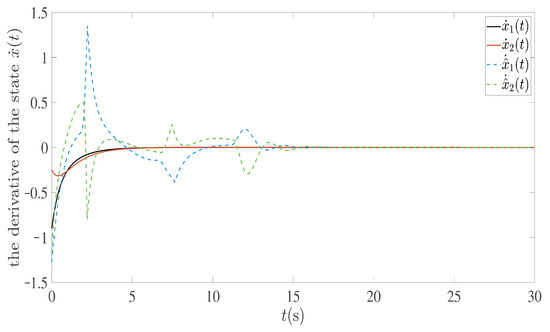

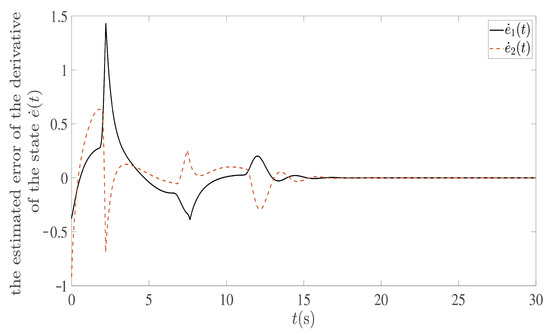

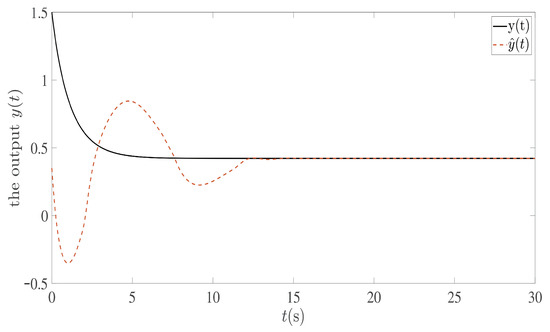

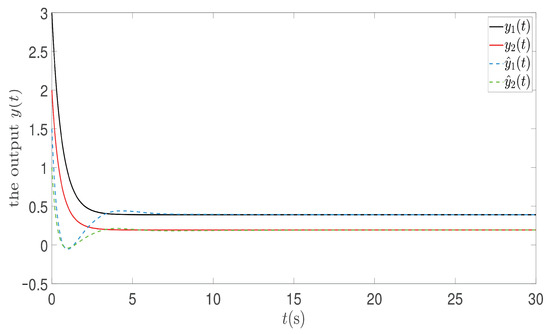

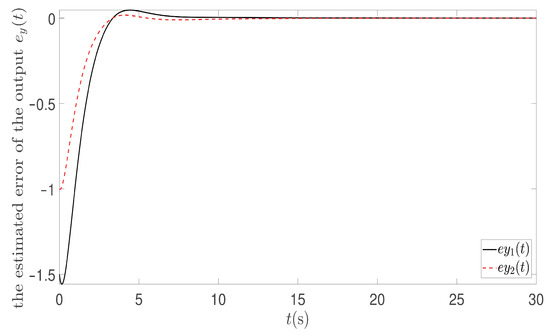

The trajectories of the state and the state observer are shown in Figure 5. Figure 6 shows the estimation error between the state and the state observer . In Figure 7, the trajectories of the derivative of the state and the derivative of the state observer are depicted. The trajectories of the error between and are given in Figure 8. In Figure 9, the output curve and the estimated output curve are given. Figure 10 shows the estimation error curve between and .

Figure 5.

The state and estimated state curves of the 2-dimensional memristor neural networks.

Figure 6.

The estimated error curves of the states.

Figure 7.

The derivative curves of the states and estimated states.

Figure 8.

The error curves of the derivative of the states.

Figure 9.

The output and estimated output curves.

Figure 10.

The error curves of the output.

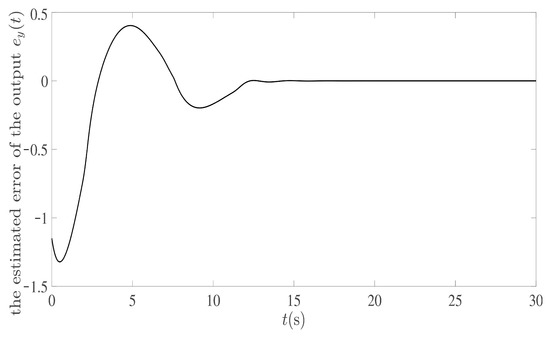

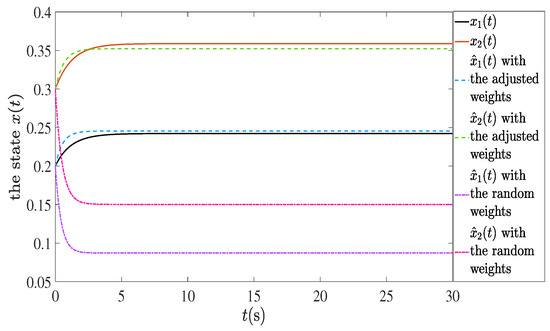

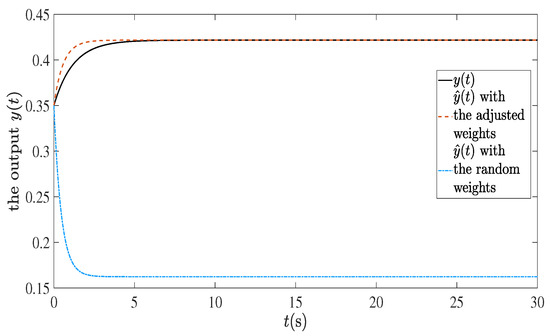

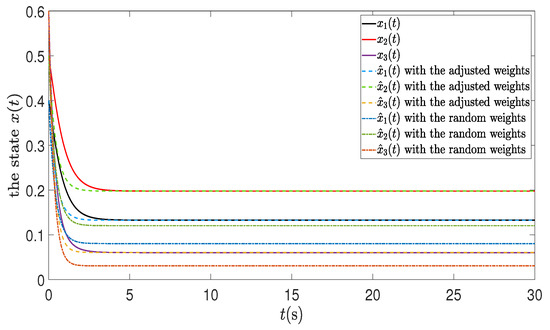

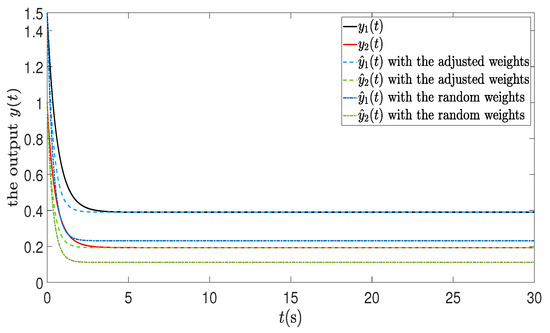

To verify the accuracy of the estimated structure, a test system is designed based on the observer gain matrix . Under the same simulation conditions as above, the effects of the adjusted weights and random weights on the system are compared. Figure 11 shows the state trajectories of the real system, the estimated system with the adjusted weights and the system with random weights. Figure 12 shows the real output curve, the estimated output curve with the adjusted weights and the output curve with random weights.

Figure 11.

Curves of the state, the estimated state with the adjusted weights and the state with random weights.

Figure 12.

Curves of the output, the estimated output with the adjusted weights and the output with random weights.

Example 2. A 3-dimensional memristor neural network is considered, and the parameters of system (2) are given as follows,

Based on the system (2), the estimated system (17) can be designed as follows,

By using Theorem 2 and LMI tools, the parameters of the estimated system (17) can be obtained,

Set the sampling time and the sampling period . Considering (18), set and . Based on Theorems 3 and 4, set and to be negative unit vectors and to be a negative unit matrix.

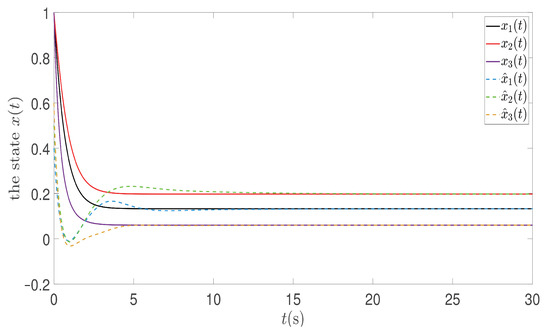

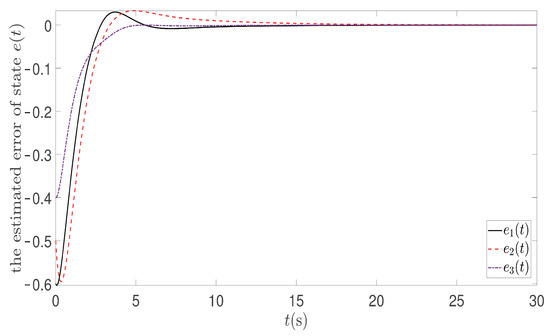

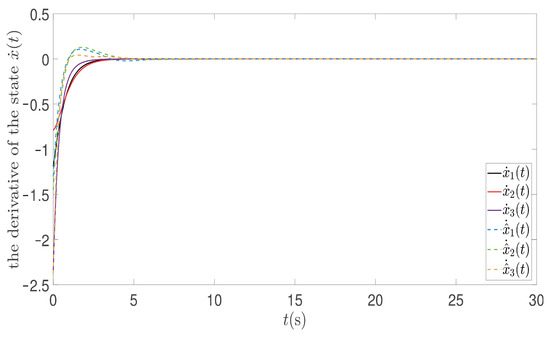

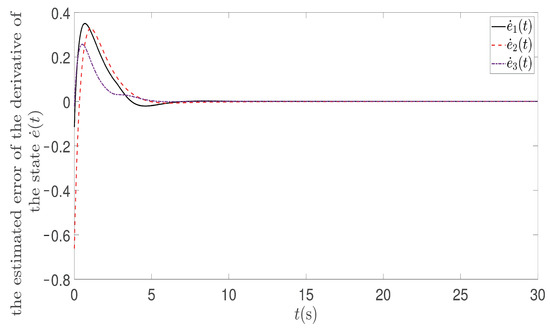

The trajectories of the state and the state observer are shown in Figure 13. Figure 14 shows the estimation error between the state and the state observer . In Figure 15, the trajectories of the derivative of the state and the derivative of the state observer are depicted. The trajectories of the error between and are given in Figure 16. In Figure 17, the output curve and the estimated output curve are given. Figure 18 shows the estimation error curve between and .

Figure 13.

The state and estimated state curves of the 3-dimensional memristor neural networks.

Figure 14.

The estimated error curves of the states.

Figure 15.

The derivative curves of the states and estimated states.

Figure 16.

The error curves of the derivative of the states.

Figure 17.

The output and estimated output curves.

Figure 18.

The error curves of the output.

To verify the accuracy of the estimated structure, a test system is designed based on the observer gain matrix . Under the same simulation conditions as above, the effects of the adjusted weights and random weights on the system are compared. Figure 19 shows the state trajectories of the real system, the estimated system with the adjusted weights and the system with random weights. Figure 20 shows the real output curve, the estimated output curve with the adjusted weights and the output curve with random weights.

Figure 19.

Curves of the state, the estimated state with the adjusted weights and the state with random weights.

Figure 20.

Curves of the output, the estimated output with the adjusted weights and the output with random weights.

4.2. Description of Simulation Results

Figures 5 and 13 show that the estimated state vector closely approximates the real state vector, and Figures 6 and 14 verify that the state estimation error converges to zero. Figures 7 and 15 show that the derivative of the estimated states closely approximates the derivative of the real states, and Figures 8 and 16 verify that the estimation error of the state derivatives converges to zero. Figures 9 and 17 show that the estimated output vector closely approximates the real output vector, and Figures 10 and 18 verify that the output estimation error converges to zero. Figures 11 and 19 show that the estimated state vector with adaptive weights is more accurate than that with random weights, and Figures 12 and 20 show the same for the output vector. The simulation results indicate that the proposed state observer adapts well to, and gives accurate estimates for, memristor neural networks with model uncertainties.

5. Conclusions

The state estimation of memristor neural networks with model uncertainties is discussed in this paper. In particular, the model uncertainties include time-varying delays, floating parameters and unknown functions. An improved approach based on LSTMs is proposed to deal with the model uncertainties, and it is proved that the improved LSTMs can approximate any continuous nonlinear function to arbitrary accuracy. On this basis, a full-order state observer is proposed to reconstruct the states from the measurement output of the system. The adaptive updating laws of the weights of the improved LSTMs are derived based on an LKF. By using LKFs and LMI tools, asymptotic stability conditions for the error systems are obtained. The simulation results show that accurate state estimates can be obtained by using the full-order state observer and the adaptive updating laws of the weights, and that the model uncertainties can be approximated accurately. Since memristor crossbar arrays can realize vector–matrix multiplication, as mentioned in the introduction, the improved LSTMs designed in this paper could also be implemented on memristor crossbar arrays, which will be our future work.

Author Contributions

Conceptualization, methodology, software, validation, formal analysis, investigation, resources, data curation, writing—original draft preparation, writing—review and editing, L.M.; visualization, supervision, M.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets generated and analysed during the current study are available from the corresponding author on reasonable request.

Conflicts of Interest

The authors declare no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Appendix A. The Proof of Theorem 1

Proof.

Considering Equation (5), the output of the improved LSTMs is derived recursively.

Taking , we have

Then,

where , , , .

And we have

Then,

where , .

Taking , we have

Then,

where , , , .

And we have

Then,

where , .

Taking , we have

Then,

where , , , .

And we have

Then,

where , .

Taking , and can be rewritten as follows,

where , .

It can be seen that this formula has the basic form of a multilayer neural network, so the improved LSTMs can be converted into a multilayer neural network. By Lemma 1, a multilayer neural network can approximate any continuous nonlinear function on Ω to arbitrary accuracy. Since the improved LSTMs can be converted into a multilayer neural network, the property in Lemma 1 also holds for the improved LSTMs. Therefore, Theorem 1 follows in the form of Lemma 1. □

Appendix B. The Proof of Theorem 2

Proof.

Consider the Lyapunov–Krasovskii functional

where and are positive definite matrices.

Based on the error system (9), the derivative of can be obtained as follows,

By using Assumption 2 and Lemma 2, for a positive definite diagonal matrix , we have

Moreover, there exists a positive definite matrix satisfying the following equation,

For a real constant , combining the above formulas gives

where , , , and .

In view of the above inequality, if

then the error system (9) is asymptotically stable, which completes the proof of Theorem 2. □
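The proof above establishes asymptotic stability by finding a positive definite Lyapunov matrix whose derivative along the error trajectories is negative definite. For intuition only, the sketch below checks the simplest instance of that idea. It uses a hypothetical two-state linear error system ė = (A − LC)e with no delays, no memristive switching and no sector-bounded nonlinearity from Assumption 2. For such a system, V(e) = eᵀPe certifies stability exactly when the Lyapunov equation (A − LC)ᵀP + P(A − LC) = −Q, with Q positive definite, has a positive definite solution P. The matrices A, C, L and Q are made-up values, and this is not the LMI of Theorem 2.

```python
def det3(m):
    """Determinant of a 3 x 3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def lyapunov_2x2(M, Q):
    """Solve M^T P + P M = -Q for a symmetric 2 x 2 matrix P (Cramer's rule)."""
    (a, b), (c, d) = M
    # Unknowns (p11, p12, p22); rows are the (1,1), (1,2) and (2,2) entries.
    K = [[2 * a, 2 * c, 0.0],
         [b, a + d, c],
         [0.0, 2 * b, 2 * d]]
    rhs = [-Q[0][0], -Q[0][1], -Q[1][1]]
    D = det3(K)
    if abs(D) < 1e-12:
        raise ValueError("Lyapunov equation has no unique solution for this M")
    sol = []
    for k in range(3):
        Kk = [row[:] for row in K]
        for r in range(3):
            Kk[r][k] = rhs[r]  # replace column k by the right-hand side
        sol.append(det3(Kk) / D)
    p11, p12, p22 = sol
    return [[p11, p12], [p12, p22]]

def is_positive_definite_2x2(P):
    """Sylvester's criterion for a symmetric 2 x 2 matrix."""
    return P[0][0] > 0 and P[0][0] * P[1][1] - P[0][1] * P[1][0] > 0

def closed_loop(A, L, C):
    """Observer error matrix A - L C for 2 x 2 A, gain L (length 2), output row C."""
    return [[A[i][j] - L[i] * C[j] for j in range(2)] for i in range(2)]

# Hypothetical example matrices (not from the paper): A - L C = [[-2, 1], [-3, -1]].
A = [[0.0, 1.0], [-2.0, -1.0]]
C = [1.0, 0.0]
L = [2.0, 1.0]
M_cl = closed_loop(A, L, C)
P = lyapunov_2x2(M_cl, [[1.0, 0.0], [0.0, 1.0]])  # approx [[0.5, -1/6], [-1/6, 1/3]]
P_is_pd = is_positive_definite_2x2(P)              # positive definite, so V certifies stability
```

A closed-form 2 × 2 solve keeps the sketch dependency-free. For larger or delayed systems one would instead pose the inequality as an LMI and hand it to a semidefinite solver.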

Appendix C. The Proof of Theorem 3

Proof.

Based on Equation (15), consider the Lyapunov–Krasovskii functional (LKF),

Using the error system (14) and Equation (15), the derivative of V(t; e(t)) is obtained as follows,

Combining (8) and (13), we obtain

where and .

Considering the above equations, we have

Then, the adaptive update laws for the weights are given as follows

where and denote the design vectors, and denotes the design matrix.

Furthermore, we have

Consequently, the derivative of V(t; e(t)) becomes

If , and are selected to satisfy

then the error system (14) is asymptotically stable, which completes the proof of Theorem 3. □
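The update laws above are chosen so that the terms involving the weight errors cancel in the derivative of the LKF. A scalar toy version of the same cancellation can be simulated in a few lines. This sketch is illustrative and rests on hypothetical choices, none of them taken from the paper: the plant ẋ = −x + w* sin(x) + u(t), the regressor sin(x) standing in for the LSTM features, full-state measurement, the observer gain k and the adaptation gain γ. With e = x − x̂, w̃ = w* − ŵ and V = e²/2 + w̃²/(2γ), the law ŵ̇ = γ e sin(x) gives V̇ = −(1 + k)e² ≤ 0.

```python
import math

def simulate(T=20.0, dt=1e-3, w_true=2.0, gamma=5.0, k=2.0):
    """Forward-Euler simulation of a hypothetical scalar adaptive observer.

    Plant:     x'     = -x + w_true*sin(x) + u(t)
    Observer:  x_hat' = -x_hat + w_hat*sin(x) + u(t) + k*(x - x_hat)
    Update:    w_hat' = gamma*e*sin(x), which cancels the cross term in V'.
    Returns the final error, the final weight estimate and the sampled V.
    """
    x, x_hat, w_hat, t = 1.0, 0.0, 0.0, 0.0
    V_log = []
    for _ in range(int(round(T / dt))):
        u = 0.5 * math.sin(t)          # known input
        phi = math.sin(x)              # regressor evaluated at the measured state
        e = x - x_hat
        V_log.append(0.5 * e * e + 0.5 * (w_true - w_hat) ** 2 / gamma)
        x_dot = -x + w_true * phi + u
        x_hat_dot = -x_hat + w_hat * phi + u + k * e
        w_hat_dot = gamma * e * phi    # Lyapunov-based adaptive law
        x += dt * x_dot
        x_hat += dt * x_hat_dot
        w_hat += dt * w_hat_dot
        t += dt
    return x - x_hat, w_hat, V_log

e_final, w_final, V = simulate()
```

The Lyapunov argument by itself only guarantees e → 0. The weight estimate also approaches w* here because the regressor sin(x) stays bounded away from zero along this trajectory, which acts as a persistent-excitation condition.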

Appendix D. The Proof of Theorem 4

Proof.

By using Assumption 3 and (14), we have

Using (17) together with Theorems 2 and 3, we have

Furthermore, we have

where and .

If is left invertible, there exists such that , which completes the proof of Theorem 4. □
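The final step needs only the existence of a left inverse. A matrix B has a left inverse exactly when it has full column rank. One standard choice is the Moore–Penrose left inverse B⁺ = (BᵀB)⁻¹Bᵀ, which satisfies B⁺B = I. The paper does not say which left inverse it intends, so this is an assumed choice. The sketch below computes it in plain Python for a hypothetical m × 2 matrix B with made-up entries.

```python
def left_inverse(B):
    """Left inverse (B^T B)^{-1} B^T of an m x 2 matrix B with full column rank."""
    m = len(B)
    # Gram matrix G = B^T B (2 x 2, symmetric)
    g11 = sum(B[r][0] * B[r][0] for r in range(m))
    g12 = sum(B[r][0] * B[r][1] for r in range(m))
    g22 = sum(B[r][1] * B[r][1] for r in range(m))
    det = g11 * g22 - g12 * g12
    if abs(det) < 1e-12:
        raise ValueError("B does not have full column rank, so no left inverse exists")
    G_inv = [[g22 / det, -g12 / det], [-g12 / det, g11 / det]]
    # Row i of G^{-1} B^T: entry r is G_inv[i][0]*B[r][0] + G_inv[i][1]*B[r][1]
    return [[G_inv[i][0] * B[r][0] + G_inv[i][1] * B[r][1] for r in range(m)]
            for i in range(2)]

def matmul(X, Y):
    """Plain nested-list matrix product X Y."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

B = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # hypothetical 3 x 2 matrix
B_left = left_inverse(B)                  # [[2/3, -1/3, 1/3], [-1/3, 2/3, 1/3]]
I2 = matmul(B_left, B)                    # identity up to rounding
```

Any other matrix X with XB = I would serve equally well in the existence argument. The Moore–Penrose form is simply the least-squares choice.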

References

- Chua, L. Memristor-the missing circuit element. IEEE Trans. Circuit Theory 1971, 18, 507–519.

- Wu, A.; Wen, S.; Zeng, Z. Synchronization control of a class of memristor-based recurrent neural networks. Inf. Sci. 2012, 183, 106–116.

- Wen, S.; Zeng, Z.; Huang, T. Exponential stability analysis of memristor-based recurrent neural networks with time-varying delays. Neurocomputing 2012, 97, 233–240.

- Chen, J.; Zeng, Z.; Jiang, P. Global Mittag-Leffler stability and synchronization of memristor-based fractional-order neural networks. Neural Netw. 2014, 51, 1–8.

- Wang, F.; Na, H.; Wu, S.; Yang, X.; Guo, Y.; Lim, G.; Rashid, M.M. Delayed switching applied to memristor neural networks. J. Appl. Phys. 2012, 111, 507–511.

- Jiang, M.; Mei, J.; Hu, J. New results on exponential synchronization of memristor-based chaotic neural networks. Neurocomputing 2015, 156, 60–67.

- Wu, H.; Li, R.; Ding, S.; Zhang, X.; Yao, R. Complete periodic adaptive antisynchronization of memristor-based neural networks with mixed time-varying delays. Can. J. Phys. 2014, 92, 1337–1349.

- Hu, M.; Chen, Y.; Yang, J.; Wang, Y.; Li, H.H. A compact memristor-based dynamic synapse for spiking neural networks. IEEE Trans. Comput. Aided Des. Integr. Circuits Syst. 2016, 36, 1353–1366.

- Zheng, M.; Li, L.; Xiao, J.; Yang, Y.; Zhao, H. Finite-time projective synchronization of memristor-based delay fractional-order neural networks. Nonlinear Dyn. 2017, 89, 2641–2655.

- Negrov, D.; Karandashev, I.; Shakirov, V.; Matveyev, Y.A. A plausible memristor implementation of deep learning neural networks. Neurocomputing 2015, 237, 193–199.

- Wang, X.; Ju, H.; Yang, H.; Zhong, S. A new settling-time estimation protocol to finite-time synchronization of impulsive memristor-based neural networks. IEEE Trans. Cybern. 2020, 52, 4312–4322.

- Chen, B.; Yang, H.; Zhuge, F.; Li, Y.; Chang, T.-C.; He, Y.-H.; Yang, W.; Xu, N.; Miao, X.-S. Optimal tuning of memristor conductance variation in spiking neural networks for online unsupervised learning. IEEE Trans. Electron Devices 2019, 66, 2844–2849.

- Liu, X.Y.; Zeng, Z.G.; Wunsch, D.C. Memristor-based LSTM network with in situ training and its applications. Neural Netw. 2020, 131, 300–311.

- Ning, L.; Wei, X. Bipartite synchronization for inertia memristor-based neural networks on coopetition networks. Neural Netw. 2020, 124, 39–49.

- Xu, C.; Wang, C.; Sun, Y.; Hong, Q.; Deng, Q.; Chen, H. Memristor-based neural network circuit with weighted sum simultaneous perturbation training and its applications. Neurocomputing 2021, 462, 581–590.

- Prezioso, M.; Bayat, F.M.; Hoskins, B.D.; Likharev, K.K.; Strukov, D.B. Training and operation of an integrated neuromorphic network based on metal-oxide memristors. Nature 2015, 521, 61–64.

- Kim, S.; Park, J.; Kim, T.H.; Hong, K.; Hwang, Y.; Park, B.g.; Kim, H. 4-bit multilevel operation in overshoot suppressed Al2O3/TiOx resistive random-access memory crossbar array. Adv. Intell. Syst. 2022, 4, 2100273.

- Wang, Z.R.; Joshi, S.; Savel’ev, S.; Song, W.; Midya, R.; Li, Y.; Rao, M.; Yan, P.; Asapu, S.; Zhuo, Y.; et al. Fully memristive neural networks for pattern classification with unsupervised learning. Nat. Electron. 2018, 1, 137–145.

- Choi, W.S.; Jang, J.T.; Kim, D.; Yang, T.J.; Kim, C.; Kim, H.; Kim, D.H. Influence of Al2O3 layer on InGaZnO memristor crossbar array for neuromorphic applications. Chaos Solitons Fractals 2022, 156, 111813.

- Bayat, F.M.; Prezioso, M.; Chakrabarti, B.; Nili, H.; Kataeva, I.; Strukov, D.B. Implementation of multilayer perceptron network with highly uniform passive memristive crossbar circuits. Nat. Commun. 2018, 9, 2331.

- Chen, Y.; Chen, G. Stability analysis of delayed neural networks based on a relaxed delay-product-type Lyapunov functional. Neurocomputing 2021, 439, 340–347.

- Wang, L.; Song, Q.; Liu, Y.; Zhao, Z.; Alsaadi, F.E. Finite-time stability analysis of fractional-order complex-valued memristor-based neural networks with both leakage and time-varying delays. Neurocomputing 2017, 245, 86–101.

- Meng, Z.; Xiang, Z. Stability analysis of stochastic memristor-based recurrent neural networks with mixed time-varying delays. Neural Comput. Appl. 2017, 28, 1787–1799.

- Chen, C.; Zhu, S.; Wei, Y. Finite-time stability of delayed memristor-based fractional-order neural networks. IEEE Trans. Cybern. 2020, 50, 1607–1616.

- Du, F.; Lu, J. New criteria for finite-time stability of fractional order memristor-based neural networks with time delays. Neurocomputing 2021, 421, 349–359.

- Rakkiyappan, R.; Chandrasekar, A.; Lakshmanan, S.; Park, J.H. State estimation of memristor-based recurrent neural networks with time-varying delays based on passivity theory. Complexity 2014, 19, 32–43.

- Bao, H.; Cao, J.; Kurths, J.; Alsaedi, A.; Ahmad, B. H∞ state estimation of stochastic memristor-based neural networks with time-varying delays. Neural Netw. 2018, 99, 79–91.

- Nagamani, G.; Rajan, G.S.; Zhu, Q. Exponential state estimation for memristor-based discrete-time BAM neural networks with additive delay components. IEEE Trans. Cybern. 2020, 5, 4281–4292.

- Sakthivel, R.; Anbuvithya, R.; Mathiyalagan, K.; Prakash, P. Combined H∞ and passivity state estimation of memristive neural networks with random gain fluctuations. Neurocomputing 2015, 168, 1111–1120.

- Wei, F.; Chen, G.; Wang, W. Finite-time synchronization of memristor neural networks via interval matrix method. Neural Netw. 2020, 127, 7–18.

- Wang, J.; Wang, Z.; Chen, X.; Qiu, J. Synchronization criteria of delayed inertial neural networks with generally Markovian jumping. Neural Netw. 2021, 139, 64–76.

- Li, L.; Xu, R.; Gan, Q.; Lin, J. Synchronization of neural networks with memristor-resistor bridge synapses and Lévy noise. Neurocomputing 2021, 432, 262–274.

- Ren, H.; Peng, Z.; Gu, Y. Fixed-time synchronization of stochastic memristor-based neural networks with adaptive control. Neural Netw. 2020, 130, 165–175.

- Zheng, C.D.; Zhang, L. On synchronization of competitive memristor-based neural networks by nonlinear control. Neurocomputing 2020, 410, 151–160.

- Pan, C.; Bao, H. Exponential synchronization of complex-valued memristor-based delayed neural networks via quantized intermittent control. Neurocomputing 2020, 404, 317–328.

- Xiao, J.; Li, Y.; Zhong, S.; Xu, F. Extended dissipative state estimation for memristive neural networks with time-varying delay. ISA Trans. 2016, 64, 113–128.

- Li, R.; Gao, X.; Cao, J.; Zhang, K. Dissipativity and exponential state estimation for quaternion-valued memristive neural networks. Neurocomputing 2019, 363, 236–245.

- Li, H. Sampled-data state estimation for complex dynamical networks with time-varying delay and stochastic sampling. Neurocomputing 2014, 138, 78–85.

- Dai, X.; Yin, H.; Jha, N. Grow and prune compact, fast, and accurate LSTMs. IEEE Trans. Comput. 2020, 69, 441–452.

- Hornik, K.; Stinchcombe, M.; White, H. Multilayer feedforward networks are universal approximators. Neural Netw. 1989, 2, 359–366.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).