Abstract

When Bayesian modeling is applied to functional data, the model error is often assumed to be normal, so the results may be sensitive to outliers and heavy-tailed data. Quantile regression is a robust alternative for such problems. This paper therefore introduces quantile regression into the partial functional linear spatial autoregressive model (PFLSAM), with the errors following an asymmetric Laplace distribution. Functional principal component analysis is then combined with a hybrid MCMC algorithm, which uses Gibbs sampling together with the Metropolis–Hastings algorithm, to generate samples from the full posterior distributions and obtain Bayesian estimates of the unknown parameters and the functional coefficient in the model. Finally, simulation studies show that the proposed Bayesian estimation method is feasible and effective.

Keywords:

partial functional linear spatial autoregressive model; quantile regression; Bayesian estimation; functional principal components analysis; Metropolis–Hastings algorithm

MSC:

62F15; 62R10; 62G08

1. Introduction

With the rapid development of data collection and storage technology, researchers often obtain data in the form of curves, surfaces or more complex structures. Data with such functional characteristics are called functional data, and they arise widely in stock data, electrocardiogram data, weather data, spectral data, etc. Over the last two decades, functional data analysis has become a useful tool for dealing with this type of data and has received increasing attention in fields such as econometrics, meteorology, biomedical research, chemometrics, as well as other applied fields. Consequently, there has been a large amount of work on functional data analysis, and various functional regression models have been proposed; see [1,2,3,4], among others. However, the collected data may contain both functional covariates and scalar covariates, in which case classical functional linear regression models are no longer sufficient. Subsequently, some methods and theories on the partial functional linear model (PFLM) have been introduced. For example, Shin [5] proposed new estimators for the partial functional linear model and established their asymptotic normality. Cui et al. [6] investigated a partially functional linear regression model based on reproducing kernel Hilbert spaces. Xiao and Wang [7] studied the partial functional linear model with autoregressive errors. Li et al. [8] applied partially functional linear regression models to the analysis of genetic, imaging, and clinical data. The above studies focus on mean regression, which typically assumes normality of the error and is very sensitive to heavy-tailed distributions or outliers.
As a feasible alternative, quantile regression (QR), first proposed by Koenker and Bassett [9], not only provides a more complete statistical analysis of the relationship between the explanatory variables and the conditional quantiles of the response variable than mean regression, but is also more robust, and it has received widespread attention. For infinite-dimensional data such as functional data, many scholars have combined quantile regression with functional data analysis and studied the related theory and applications. For example, Yang et al. [10] studied functional quantile linear regression within the framework of reproducing kernel Hilbert spaces. Zhu et al. [11] proposed an estimator of extreme conditional quantiles in partial functional linear regression models with heavy-tailed distributions and established its asymptotic normality. Xia et al. [12] studied a goodness-of-fit test for the functional linear composite quantile regression model and obtained a test statistic with an asymptotic standard normal distribution. Ling et al. [13] discussed the estimation procedure for the semi-functional partial linear quantile regression model with randomly censored responses. It is worth noting that the functional quantile regression models mentioned above do not account for spatial dependence among the response variables. To the best of our knowledge, few studies address quantile regression for spatial functional models [14].

In practice, the observed data often exhibit spatial dependence. A common method for handling spatial dependence is the spatial autoregressive model, on which extensive research has been conducted. Lee [15] investigated the asymptotic properties of quasi-maximum likelihood estimators of the parameters in spatial autoregressive models. Cheng and Chen [16] studied the cross-sectional maximum likelihood estimation of the partially linear single-index spatial autoregressive model. Wang et al. [17] considered variable selection in the semiparametric spatial autoregressive model with exponential squared loss. Combining series approximation, two-stage least squares, and Lagrange multipliers, Li and Cheng [18] developed statistical inference for partially linear spatial autoregressive models under constrained conditions. Tang et al. [19] proposed a new specification test and studied the GMM estimation method for linear spatial autoregressive models. In recent years, many researchers have considered both spatial dependence and functional variables, through models such as functional spatial autoregressive models [20], functional semiparametric spatial autoregressive models [21], and varying coefficient partial functional linear spatial autoregressive models [22]. The vast majority of the existing literature on spatial autoregressive models relies on mean regression. Because quantile regression carries more information, yields more reliable estimation results, and applies more widely, some studies on quantile regression for spatial autoregressive models have emerged. For example, Dai and Jin [23] proposed quantile regression for the spatial panel model with individual fixed effects. Gu and You [24] introduced the spatial quantile regression model to explore the relationships between driving factors and land surface temperature at different quantiles. Dai et al. [25] implemented quantile regression in partially linear spatial autoregressive models with varying coefficients. However, research on applying quantile regression to spatial autoregressive models remains relatively limited.

With the advancement of computer technology and statistics, the Bayesian method has developed rapidly and been widely applied owing to its computational advantages [26,27,28,29], and models based on quantile regression can also be established within the Bayesian framework. Yu and Moyeed [30] introduced the asymmetric Laplace distribution as a working likelihood function for inference. Bayesian quantile regression (BQR) has since received increasing attention from both theoretical and empirical viewpoints, with a wide range of applications. Yu [31] considered Bayesian quantile regression for hierarchical linear models. Wang and Tang [32] presented a Bayesian quantile regression model with mixed discrete and non-ignorable missing covariates by recasting the QR model as a hierarchical model. Zhang et al. [33] studied Bayesian quantile regression for semiparametric mixed-effects double regression models with asymmetric Laplace distribution errors. Based on the generalized asymmetric Laplace distribution, Yu and Yu [34] applied Bayesian quantile regression to nonlinear mixed effects models for longitudinal data. Chu et al. [35] studied Bayesian quantile regression for big data, variable selection and posterior prediction. Yang et al. [36] constructed Bayesian quantile regression for bivariate vector autoregressive models and developed a Gibbs sampling algorithm that introduces latent variables. Nevertheless, little attention has been paid to functional models in the context of Bayesian quantile regression, and, as far as we know, there is almost no work on Bayesian quantile regression for spatial functional models. Hence, in this paper, Bayesian quantile regression is developed for the partial functional linear spatial autoregressive model by employing a hybrid of the Gibbs sampler and the Metropolis–Hastings algorithm.

The remainder of the paper is organized as follows. In Section 2, we introduce a partial functional linear spatial autoregressive model and give the likelihood function of the model based on the asymmetric Laplace distribution for the errors. In Section 3, we specify prior distributions of the model parameters, obtain their full conditional distributions, and develop the detailed sampling algorithm by combining the Gibbs sampler and the Metropolis–Hastings algorithm. In Section 4, we conduct simulation studies to evaluate the performance of the proposed method. Finally, Section 5 concludes the paper with a brief discussion.

2. Model and Likelihood

2.1. Model

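In this paper, we consider the following partial functional linear spatial autoregressive model:

$$y_i = \rho \sum_{j=1}^{n} w_{ij} y_j + z_i^{\top}\beta + \int_0^1 \gamma(t)\, x_i(t)\,dt + \varepsilon_i, \quad i = 1, \ldots, n, \tag{1}$$

where $y_i$ is a real-valued spatially dependent response variable corresponding to the $i$th observation, and $z_i$ is a $p$-dimensional vector of related explanatory variables, for $i = 1, \ldots, n$; $w_{ij}$ is the $(i,j)$th element of a given non-stochastic spatial weighting matrix $W$, such that $w_{ii} = 0$ for all $i$; and $\rho$ is the spatial autoregressive parameter. Additionally, let $\{x_i(t)\}$ be zero mean random functions belonging to $L^2(\mathcal{T})$, and be independent and identically distributed, $i = 1, \ldots, n$. Generally, we suppose throughout that $\mathcal{T} = [0,1]$. In addition, $\beta$ is a $p$-dimensional vector of unknown parameters, $\gamma(t)$ is a square integrable unknown slope function on $[0,1]$, and $\varepsilon_i$ is the random error term.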

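For convenience, we work with matrix notation. Denote $Y = (y_1, \ldots, y_n)^{\top}$, $Z = (z_1, \ldots, z_n)^{\top}$, $X(t) = (x_1(t), \ldots, x_n(t))^{\top}$, $\varepsilon = (\varepsilon_1, \ldots, \varepsilon_n)^{\top}$. Then model (1) can be written as

$$Y = \rho W Y + Z\beta + \int_0^1 X(t)\gamma(t)\,dt + \varepsilon. \tag{2}$$

Let $\{(y_i, z_i, x_i)\}_{i=1}^{n}$ be an independent and identically distributed sample which is generated from model (1). The covariance function and the empirical covariance function are defined as $K(s,t) = \operatorname{Cov}(x_i(s), x_i(t))$ and $\hat{K}(s,t) = n^{-1}\sum_{i=1}^{n} x_i(s)x_i(t)$, respectively. The covariance function $K$ defines a linear operator which maps a function $f$ to $Kf$ given by $(Kf)(s) = \int_0^1 K(s,t) f(t)\,dt$. We assume that the linear operator with kernel $K$ is positive definite. Let $\lambda_1 > \lambda_2 > \cdots > 0$ and $\hat{\lambda}_1 \geq \hat{\lambda}_2 \geq \cdots \geq 0$ be the ordered eigenvalue sequences of the linear operators with kernels $K$ and $\hat{K}$, and $\{\phi_j\}$ and $\{\hat{\phi}_j\}$ be the corresponding orthonormal eigenfunction sequences, respectively. It is clear that the sequences $\{\phi_j\}$ and $\{\hat{\phi}_j\}$ each form an orthonormal basis in $L^2([0,1])$. Moreover, we can write the spectral decompositions of the covariance functions $K$ and $\hat{K}$ as $K(s,t) = \sum_{j=1}^{\infty} \lambda_j \phi_j(s)\phi_j(t)$ and $\hat{K}(s,t) = \sum_{j=1}^{\infty} \hat{\lambda}_j \hat{\phi}_j(s)\hat{\phi}_j(t)$, respectively.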

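According to the Karhunen–Loève representation, we have

$$x_i(t) = \sum_{j=1}^{\infty} \xi_{ij}\phi_j(t), \qquad \gamma(t) = \sum_{j=1}^{\infty} \gamma_j\phi_j(t), \tag{3}$$

where $\xi_{ij} = \langle x_i, \phi_j \rangle$ are uncorrelated random variables with mean 0 and variance $\lambda_j$, $\gamma_j = \langle \gamma, \phi_j \rangle$, and $\langle \cdot, \cdot \rangle$ is the inner product. Substituting (3) into model (2), we can obtain, for $i = 1, \ldots, n$,

$$y_i = \rho \sum_{j=1}^{n} w_{ij} y_j + z_i^{\top}\beta + \sum_{j=1}^{\infty} \gamma_j \xi_{ij} + \varepsilon_i. \tag{4}$$

Therefore, the regression model in (4) can be approximated by

$$y_i \approx \rho \sum_{j=1}^{n} w_{ij} y_j + z_i^{\top}\beta + \sum_{j=1}^{m} \gamma_j \xi_{ij} + \varepsilon_i, \tag{5}$$

where $m$ is the truncation level that trades off approximation error against variability and typically diverges with $n$. Replacing the $\phi_j$ by $\hat{\phi}_j$ for $j = 1, \ldots, m$, model (5) can be rewritten as

$$y_i = \rho \sum_{j=1}^{n} w_{ij} y_j + z_i^{\top}\beta + U_i^{\top}\gamma + \varepsilon_i, \tag{6}$$

where $U_i = (\langle x_i, \hat{\phi}_1 \rangle, \ldots, \langle x_i, \hat{\phi}_m \rangle)^{\top}$, $\gamma = (\gamma_1, \ldots, \gamma_m)^{\top}$.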

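Alternatively, with $U = (U_1, \ldots, U_n)^{\top}$, the general form of model (6) is

$$Y = \rho W Y + Z\beta + U\gamma + \varepsilon. \tag{7}$$

At a given quantile level $\tau \in (0,1)$, $\varepsilon_i$ is a random error term with $\tau$th quantile equal to zero, such that $\int_{-\infty}^{0} f_{\tau}(\varepsilon_i)\,d\varepsilon_i = \tau$, in which $f_{\tau}(\cdot)$ is the probability density function of the error. Then the estimates of the parameters $\beta$ and $\gamma$ can be obtained by minimizing the following loss function

$$\sum_{i=1}^{n} \rho_{\tau}\Big(y_i - \rho \sum_{j=1}^{n} w_{ij} y_j - z_i^{\top}\beta - U_i^{\top}\gamma\Big), \tag{8}$$

where $\rho_{\tau}(\cdot)$ is the check function defined as $\rho_{\tau}(u) = u\{\tau - I(u < 0)\}$ and $I(\cdot)$ represents the indicator function. Within the Bayesian quantile regression framework, it is generally assumed that $\varepsilon_i$ follows an asymmetric Laplace distribution (ALD) with probability density function

$$f(\varepsilon_i \mid \mu, \sigma, \tau) = \frac{\tau(1-\tau)}{\sigma} \exp\left\{-\rho_{\tau}\left(\frac{\varepsilon_i - \mu}{\sigma}\right)\right\},$$

where $\sigma$ is the scale parameter and $\mu$ is the location parameter.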
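As a quick numerical illustration of the check function and the ALD density, the following minimal Python sketch (standard library only) evaluates both. The function names `check_loss`, `ald_pdf`, `best_constant` and `ald_mass_below_location`, as well as the toy data, are illustrative assumptions and not part of the original analysis. The sketch assumes the usual forms, check loss u(τ − I(u < 0)) and ALD density τ(1 − τ)/σ · exp{−check((x − μ)/σ)}, and illustrates two standard facts: minimizing the summed check loss over a constant returns a sample quantile, and an ALD with location 0 places probability τ below 0.

```python
import math


def check_loss(u, tau):
    """Check function: u * (tau - I(u < 0))."""
    indicator = 1.0 if u < 0 else 0.0
    return u * (tau - indicator)


def ald_pdf(x, tau, mu=0.0, sigma=1.0):
    """Asymmetric Laplace density with location mu and scale sigma."""
    return tau * (1.0 - tau) / sigma * math.exp(-check_loss((x - mu) / sigma, tau))


def best_constant(ys, tau):
    """Observed value c that minimizes sum_i check_loss(y_i - c, tau)."""
    return min(ys, key=lambda c: sum(check_loss(y - c, tau) for y in ys))


def ald_mass_below_location(tau, sigma=1.0, steps=20000):
    """Midpoint-rule approximation of the ALD probability below mu = 0."""
    lower = -50.0 * sigma / (1.0 - tau)  # left tail beyond this point is negligible
    h = -lower / steps
    return h * sum(ald_pdf(lower + (k + 0.5) * h, tau, 0.0, sigma) for k in range(steps))


# Toy data (hypothetical): the minimizer of the check loss is a sample quantile.
ys = [1.0, 2.0, 3.0, 4.0, 10.0]
print("tau = 0.50 minimizer:", best_constant(ys, 0.5))
print("tau = 0.25 minimizer:", best_constant(ys, 0.25))
print("ALD mass below 0 at tau = 0.25:", ald_mass_below_location(0.25))
```

The outlier 10.0 in the toy data does not move the minimizer, which is the robustness property that motivates replacing the squared loss by the check loss.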

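Since the minimization in (8) and the maximization of the likelihood function with the ALD for the errors are equivalent, we can represent the ALD as a location–scale mixture and rewrite model (7) as

$$y_i = \rho \sum_{j=1}^{n} w_{ij} y_j + z_i^{\top}\beta + U_i^{\top}\gamma + k_1 e_i + \sqrt{k_2 \sigma e_i}\, v_i, \quad e_i \sim \mathrm{Exp}(1/\sigma), \quad v_i \sim N(0,1), \tag{9}$$

in which $e_i \sim \mathrm{Exp}(1/\sigma)$ denotes that $e_i$ follows an exponential distribution with the parameter $\sigma$, whose probability density function is $f(e_i \mid \sigma) = \sigma^{-1}\exp(-e_i/\sigma)$; $v_i$ is the standard normal random variable, whose probability density function is $f(v_i) = (2\pi)^{-1/2}\exp(-v_i^2/2)$; $e_i$ and $v_i$ are mutually independent; and $k_1 = \frac{1-2\tau}{\tau(1-\tau)}$ and $k_2 = \frac{2}{\tau(1-\tau)}$, respectively. The models defined in Equation (9) are referred to as the Bayesian quantile PFLSAM in the paper.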
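The location–scale mixture can be checked by simulation. The sketch below is a minimal illustration under stated assumptions: it uses the standard normal-exponential mixture form, error = k1·e + sqrt(k2·σ·e)·v with e exponential with mean σ, v standard normal, k1 = (1 − 2τ)/(τ(1 − τ)) and k2 = 2/(τ(1 − τ)). The names `mixture_constants` and `draw_ald_error` are hypothetical helpers. If the representation holds, the share of simulated errors at or below zero should be close to τ.

```python
import math
import random


def mixture_constants(tau):
    """Constants of the normal-exponential mixture form of the ALD."""
    k1 = (1.0 - 2.0 * tau) / (tau * (1.0 - tau))
    k2 = 2.0 / (tau * (1.0 - tau))
    return k1, k2


def draw_ald_error(tau, sigma, rng):
    """One draw of k1*e + sqrt(k2*sigma*e)*v with e ~ Exp(mean sigma), v ~ N(0, 1)."""
    k1, k2 = mixture_constants(tau)
    e = rng.expovariate(1.0 / sigma)  # expovariate takes the rate, i.e. 1 / mean
    v = rng.gauss(0.0, 1.0)
    return k1 * e + math.sqrt(k2 * sigma * e) * v


rng = random.Random(2025)
for tau in (0.25, 0.5, 0.75):
    draws = [draw_ald_error(tau, 1.0, rng) for _ in range(10000)]
    share = sum(d <= 0.0 for d in draws) / len(draws)
    print(f"tau = {tau}: share of draws <= 0 is {share:.3f}")
```

Conditional on the latent exponential variable the error is normal, which is what makes Gibbs updates for the regression coefficients available in closed form.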

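For simplicity, we use matrix notation. Then model (9) can be written as

$$Y = \rho W Y + Z\beta + U\gamma + k_1 e + \sqrt{k_2 \sigma}\, E^{1/2} v, \tag{10}$$

where $e = (e_1, \ldots, e_n)^{\top}$, $v = (v_1, \ldots, v_n)^{\top}$ and $E = \mathrm{diag}(e_1, \ldots, e_n)$.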

2.2. Likelihood

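From the model in (10), we can obtain the likelihood function

$$L(Y \mid \beta, \gamma, \rho, \sigma, e) \propto |A|\, \sigma^{-n/2} \Big(\prod_{i=1}^{n} e_i^{-1/2}\Big) \exp\left\{-\frac{1}{2 k_2 \sigma}\,(AY - Z\beta - U\gamma - k_1 e)^{\top} E^{-1} (AY - Z\beta - U\gamma - k_1 e)\right\}, \tag{11}$$

where $A = I_n - \rho W$, and $I_n$ is an $n \times n$ identity matrix.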

3. Bayesian Quantile Regression

3.1. Priors

To estimate the unknown parameters , , and through Bayesian inference, it is essential to first define the prior distributions for the parameters of the models. We assume that the parameters and are independently distributed as normal distributions, that is and , respectively, where the hyperparameters and are prespecified. In addition, the priors of parameters and are chosen as and , where and are hyperparameters to be given. The proposed procedures can also be adapted to other specific prior distributions.

Thus, the joint prior of all of the unknown quantities is given by

3.2. Posterior Inference

Let and we need to estimate the unknown parameters of . Based on the likelihood function (11) and priors (12), the joint posterior distribution of the unknown parameters is given by

To perform the Gibbs sampling algorithm, we need to derive the full conditional distributions of the unknown parameters. Considering the prior distributions, we can easily obtain the full conditional distribution of and as follows:

where and similarly,

where and

Additionally, a similar calculation gives the full conditional distribution of as follows,

where and

The full posterior of each is also tractable and its specific form is as follows,

where and Obviously, the full posterior distribution of can be recognized as a generalized inverse Gaussian distribution with the form of .

Furthermore, the full conditional distribution of is given as follows,

Based on the above conclusions, the detailed sampling scheme can be summarized as the following steps:

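- Step 1: Select initial values for all unknown parameters and set the iteration counter to zero.

- Step 2: Draw a posterior sample of each unknown quantity in turn from its full conditional distribution, given the current values of the others.

- Step 3: Increase the iteration counter by one and return to Step 2 until J iterations have been completed, where J is the number of iterations.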
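Within Step 2, the draw for the spatial parameter comes from a nonstandard conditional distribution and is handled by a Metropolis–Hastings update with a normal proposal. The following minimal sketch shows such a random-walk update in isolation. Everything specific in it is an assumption made for illustration: `log_target` is a hypothetical stand-in density on (−1, 1), built from the log-determinant of a toy 3 × 3 row-normalized weight matrix plus a Gaussian-type factor; it is not the paper's conditional density, and the step size 0.8 is not a tuned value from the original study.

```python
import math
import random


def log_target(rho):
    """Hypothetical unnormalized log density on (-1, 1) for illustration only."""
    if not -1.0 < rho < 1.0:
        return -math.inf
    # log|I - rho*W| for a toy 3x3 row-normalized W with eigenvalues 1, -0.5, -0.5
    log_det = math.log(1.0 - rho) + 2.0 * math.log(1.0 + 0.5 * rho)
    # illustrative Gaussian-type factor standing in for the data-dependent term
    return log_det - 8.0 * (rho - 0.3) ** 2


def mh_step(current, step_sd, rng):
    """One random-walk Metropolis-Hastings update; returns (new_value, accepted)."""
    candidate = rng.gauss(current, step_sd)
    log_ratio = log_target(candidate) - log_target(current)
    accept_prob = math.exp(min(0.0, log_ratio))  # symmetric proposal: ratio of targets
    if rng.random() < accept_prob:
        return candidate, True
    return current, False


def run_chain(n_iter, start=0.0, step_sd=0.8, seed=1):
    """Run the sampler and return the draws together with the acceptance rate."""
    rng = random.Random(seed)
    rho, draws, accepted = start, [], 0
    for _ in range(n_iter):
        rho, ok = mh_step(rho, step_sd, rng)
        draws.append(rho)
        accepted += ok
    return draws, accepted / n_iter


draws, rate = run_chain(5000)
kept = draws[1000:]  # discard a burn-in period before summarizing
print(f"acceptance rate: {rate:.2f}")
print(f"mean of retained draws: {sum(kept) / len(kept):.3f}")
```

In practice the step size would be adjusted in pilot runs until the acceptance rate falls in the desired range; candidates outside (−1, 1) are rejected automatically because their log density is minus infinity.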

According to the above steps, an efficient MCMC-based sampling algorithm for generating posterior samples from the full conditional distributions of the unknown parameters is constructed. In particular, except for the conditional distribution of the spatial parameter $\rho$ in (18), sampling for the other parameters is based on familiar distributions and can be easily performed. It is difficult to draw posterior samples directly from (18) because it is nonstandard. Hence, the Metropolis–Hastings algorithm is used for this step. We select the normal distribution $N(\rho^{(l)}, \sigma_{\rho}^{2})$ as the proposal distribution, where $\sigma_{\rho}^{2}$ is chosen such that the average acceptance rate is approximately between 0.25 and 0.45. Specifically, the Metropolis–Hastings algorithm is implemented as follows: at the $(l+1)$th iteration with the current value $\rho^{(l)}$, a new candidate $\rho^{*}$ is generated from $N(\rho^{(l)}, \sigma_{\rho}^{2})$ and is accepted with probability

$$\min\left\{1, \frac{p(\rho^{*} \mid \cdot)}{p(\rho^{(l)} \mid \cdot)}\right\},$$

where $p(\rho \mid \cdot)$ denotes the full conditional density of $\rho$ in (18).

Once the chain generated by the proposed algorithm has converged to the joint posterior distribution in (13), we collect M MCMC samples of the unknown parameters. The Bayesian posterior mean of each parameter is then estimated by the average of its M collected samples.

4. Simulation Study

In this section, the performance of the proposed model and Bayesian estimation method will be illustrated through Monte Carlo simulation. All calculations are performed with R 4.3.3. We generate the datasets from the following model:

where and Z follow the multivariate normal distribution with the of being , for . In addition, let the spatial parameter , which represents different spatial dependencies. Similar to Xie et al. [37], the weight matrix is set to be where is a q-dimensional vector with all elements being 1, ⊗ denotes the Kronecker product, . Referring to Shin [5], the functional coefficient and are taken, where the are independently normally distributed with mean 0 and variance and . Furthermore, noninformative priors are considered for the hyperparameter values of unknown parameters : , where is an m-dimensional vector with all elements being 0. Importantly, we determine the truncation level m such that the first m functional principal component scores explain at least 90% of the total variability of the functional predictor .
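Two ingredients of this design are easy to sketch in code: a block-structured spatial weight matrix built from a Kronecker product, and the rule that picks the truncation level m from the share of explained variability. The sketch below is a minimal illustration under assumptions that are not confirmed by the text above: it takes the weight matrix to be the Kronecker product of an R × R identity with a q × q block whose off-diagonal entries all equal 1/(q − 1), and it uses made-up eigenvalues. The names `block_weight_matrix` and `choose_truncation_level` are hypothetical.

```python
def block_weight_matrix(R, q):
    """Assumed form W = I_R (Kronecker) B_q, with B_q = (ones - identity) / (q - 1)."""
    n = R * q
    W = [[0.0] * n for _ in range(n)]
    for block in range(R):
        for a in range(q):
            for b in range(q):
                if a != b:  # zero diagonal: a unit is not its own neighbour
                    W[block * q + a][block * q + b] = 1.0 / (q - 1)
    return W


def choose_truncation_level(eigenvalues, threshold=0.90):
    """Smallest m whose leading eigenvalues explain at least `threshold` of the total."""
    total = sum(eigenvalues)
    running = 0.0
    for m, lam in enumerate(eigenvalues, start=1):
        running += lam
        if running / total >= threshold:
            return m
    return len(eigenvalues)


W = block_weight_matrix(R=2, q=3)
for row in W:
    print(row)

# Made-up eigenvalues of an empirical covariance operator, in decreasing order.
eigenvalues = [4.0, 2.0, 1.0, 0.5, 0.3, 0.2]
print("chosen truncation level m:", choose_truncation_level(eigenvalues))
```

With R groups of q units each, this construction gives n = Rq observations, and every row of W sums to one, so the spatial lag of a unit is the average response of the other units in its group.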

To examine the effect of random error distribution on parameter estimation, we consider the following three distributions for the errors with quantile being zero:

- Case I: , with such that th quantile of is 0;

- Case II: , with such that th quantile of is 0;

- Case III: , with such that th quantile of is 0.

In each case, R is selected as 25, 50, 100 and q is set to 3, and thus n = 75, 150, 300, at three different quantile levels . Based on the above settings and the generated datasets, the proposed hybrid algorithm is used to compute the Bayesian estimates of unknown parameters based on 100 replications. Furthermore, we assess the convergence of the proposed hybrid algorithm by calculating the estimated potential scale reduction (EPSR) values [38] for a number of test runs on the basis of three parallel chains started from three different starting values. In all test runs, the EPSR values are close to 1 and less than 1.2 after 3000 iterations. Therefore, after discarding the initial 3000 iterations in producing the Bayesian estimates for each replication, we can collect observations and present the posterior summary of the parameters in Tables 1–3. To evaluate the performance of the nonparametric estimator, we also check the square root of average square errors (RASE), defined as:

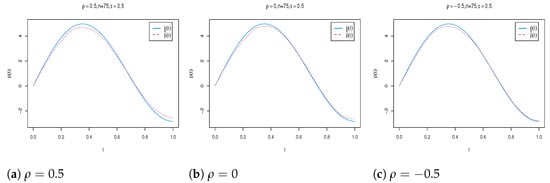

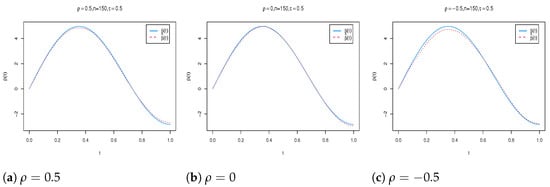

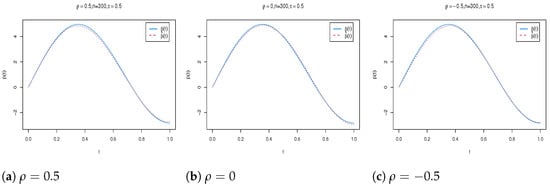

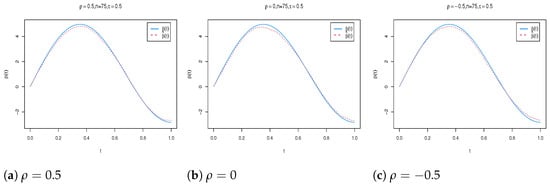

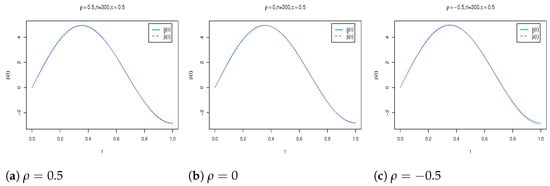

where and are the grid points at which the function is evaluated. The simulation results are reported in Table 4. Moreover, to visually assess the accuracy of the estimate of function , we plot the true value of function against its estimate under different cases. Due to space limitations, we only show some of the nonparametric estimation curves with different spatial parameters in Figures 1–9.
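The two numerical summaries used in this section, the EPSR convergence diagnostic and the RASE of the estimated function, can be sketched as follows. This is a minimal illustration under assumptions: `epsr` implements the classical potential scale reduction factor computed from the between-chain and within-chain variances of equal-length chains, and `rase` computes the square root of the average squared error over grid points. The function names, the test function and the toy chains are hypothetical, and the exact variants used in the original study may differ in detail.

```python
import math
import statistics


def rase(estimate, truth):
    """Square root of the average squared error over a common grid."""
    n = len(truth)
    return math.sqrt(sum((e - t) ** 2 for e, t in zip(estimate, truth)) / n)


def epsr(chains):
    """Potential scale reduction factor for several equal-length chains."""
    n = len(chains[0])  # draws per chain
    m = len(chains)     # number of chains
    means = [statistics.mean(c) for c in chains]
    grand = statistics.mean(means)
    between = n / (m - 1) * sum((mu - grand) ** 2 for mu in means)
    within = statistics.mean([statistics.variance(c) for c in chains])
    pooled = (n - 1) / n * within + between / n
    return math.sqrt(pooled / within)


# Hypothetical true function and a slightly perturbed "estimate" on 101 grid points.
grid = [s / 100 for s in range(101)]
truth = [math.sin(math.pi * t) for t in grid]
estimate = [value + 0.05 * math.cos(3 * t) for value, t in zip(truth, grid)]
print("RASE:", rase(estimate, truth))

# Three toy chains: values near 1 suggest that the chains agree.
agreeing = [[0.0, 1.0, 2.0, 3.0], [0.5, 1.5, 2.5, 3.5], [0.2, 1.2, 2.2, 3.2]]
shifted = [[0.0, 1.0, 2.0, 3.0], [10.0, 11.0, 12.0, 13.0], [20.0, 21.0, 22.0, 23.0]]
print("EPSR, agreeing chains:", epsr(agreeing))
print("EPSR, shifted chains:", epsr(shifted))
```

Chains started from different values that still disagree after burn-in produce a large between-chain variance and hence a value well above the 1.2 threshold.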

Table 1.

Bayesian estimates of unknown parameters under Case I.

Table 2.

Bayesian estimates of unknown parameters under Case II.

Table 3.

Bayesian estimates of unknown parameters under Case III.

Table 4.

The values of RASE for the nonparametric components under different cases.

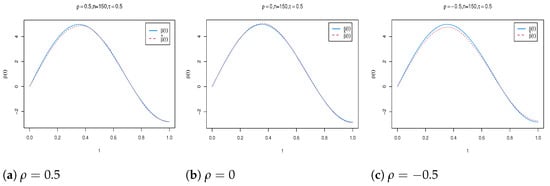

Figure 1.

The estimated functional coefficient versus true value of with different s when under Case I.

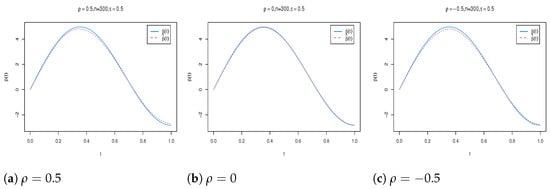

Figure 2.

The estimated functional coefficient versus true value of with different s when under Case I.

Figure 3.

The estimated functional coefficient versus true value of with different s when under Case I.

Figure 4.

The estimated functional coefficient versus true value of with different s when under Case II.

Figure 5.

The estimated functional coefficient versus true value of with different s when under Case II.

Figure 6.

The estimated functional coefficient versus true value of with different s when under Case II.

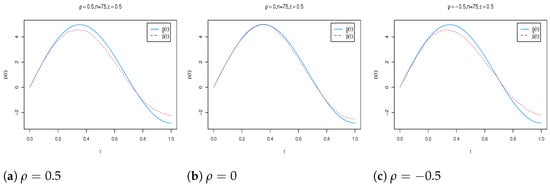

Figure 7.

The estimated functional coefficient versus true value of with different s when under Case III.

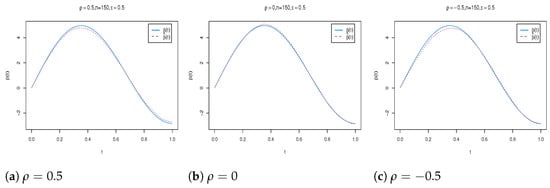

Figure 8.

The estimated functional coefficient versus true value of with different s when under Case III.

Figure 9.

The estimated functional coefficient versus true value of with different s when under Case III.

In Tables 1–3, “Bias” denotes the absolute difference between the true value and the mean of the Bayesian estimates of the parameters based on 100 replications, and “SD” represents the standard deviation of the Bayesian estimates. According to Tables 1–4, we observe the following. (1) The Bayesian estimates are reasonably accurate under all the considered settings, as shown by their relatively small Bias and SD values. The Bayesian estimates are also quite robust to different error distributions, although the performance under normal errors is slightly better than that under t and Cauchy errors. (2) Across different spatial parameters and different quantile levels, the results of Bayesian estimation are similar. (3) As the sample size increases, the SD values of all the parameters decrease noticeably at each quantile level. (4) For the functional component, the values of RASE decrease with increasing sample size, which indicates that the estimation of the functional coefficient improves. Additionally, Figures 1–9 show that the shapes of the estimated nonparametric function closely approximate the corresponding true function under all the considered settings, which confirms the results reported in Table 4. In summary, these findings demonstrate that the proposed estimation procedure effectively recovers the true information in the partial functional linear spatial autoregressive model.
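For concreteness, the "Bias" and "SD" summaries described above can be computed from the per-replication estimates as in the following minimal sketch. The function name `bias_and_sd` and the numbers are hypothetical, and the use of the sample standard deviation (divisor equal to the number of replications minus one) is an assumption, since the divisor is not stated.

```python
import statistics


def bias_and_sd(estimates, true_value):
    """Absolute bias of the mean estimate and standard deviation across replications."""
    bias = abs(true_value - statistics.mean(estimates))
    sd = statistics.stdev(estimates)  # sample SD, divisor (replications - 1)
    return bias, sd


# Hypothetical posterior-mean estimates of one parameter from four replications.
estimates = [0.9, 1.0, 1.1, 1.2]
bias, sd = bias_and_sd(estimates, true_value=1.0)
print(f"Bias = {bias:.4f}, SD = {sd:.4f}")
```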

5. Conclusions and Discussion

In this paper, we propose a partial functional linear spatial autoregressive model that can effectively study the relationship between a scalar spatial response variable and explanatory variables, including a few scalar variables and a functional random variable. Based on functional principal components analysis, combined with the Gibbs sampler and Metropolis–Hastings algorithm, we develop the Bayesian quantile regression to analyze the model. Extensive simulation studies are conducted to demonstrate the efficiency of the proposed Bayesian approach. As expected, the results show that the developed Bayesian method is satisfactory with high efficiency and fast computation.

In addition, variable selection is an important research direction of functional data analysis, so determining how to consider the robust Bayesian variable selection of the partial functional linear spatial autoregressive model combined with quantile regression is a direction worthy of further study.

Author Contributions

Conceptualization, D.X. and S.K.; methodology, D.X., R.T., J.D. and S.K.; software, S.K. and D.X.; formal analysis, D.X. and S.K.; data curation, S.K. and J.D.; writing—original draft, D.X., S.K., J.D. and R.T.; writing—review and editing, D.X., S.K., J.D. and R.T; supervision, D.X., S.K., J.D. and R.T. All authors have read and agreed to the published version of the manuscript.

Funding

Xu’s work is supported by the Zhejiang Provincial Natural Science Foundation of China under Grant No. LY23A010013, and Dong’s work is supported by the Humanities and Social Sciences Research Project of Ministry of Education under Grant No. 21YJC910002.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Yao, F.; Müller, H.; Wang, J. Functional linear regression analysis for longitudinal data. Ann. Stat. 2005, 33, 2873–2903.

2. Tang, Q.; Kong, L.; Ruppert, D.; Karunamuni, R.J. Partial functional partially linear single-index models. Stat. Sin. 2021, 31, 107–133.

3. Zhang, X.; Fang, K.; Zhang, Q. Multivariate functional generalized additive models. J. Stat. Comput. Simul. 2022, 92, 875–893.

4. Rao, A.R.; Reimherr, M. Modern non-linear function-on-function regression. Stat. Comput. 2023, 33, 130.

5. Shin, H. Partial functional linear regression. J. Stat. Plan. Inference 2009, 139, 3405–3418.

6. Cui, X.; Lin, H.; Lian, H. Partially functional linear regression in reproducing kernel Hilbert spaces. Comput. Stat. Data Anal. 2020, 150, 106978.

7. Xiao, P.; Wang, G. Partial functional linear regression with autoregressive errors. Commun. Stat.-Theory Methods 2022, 51, 4515–4536.

8. Li, T.; Yu, Y.; Marron, J.; Zhu, H. A partially functional linear regression framework for integrating genetic, imaging, and clinical data. Ann. Appl. Stat. 2024, 18, 704–728.

9. Koenker, R.; Bassett, G., Jr. Regression quantiles. Econometrica 1978, 46, 33–50.

10. Yang, G.; Liu, X.; Lian, H. Optimal prediction for high-dimensional functional quantile regression in reproducing kernel Hilbert spaces. J. Complex. 2021, 66, 101568.

11. Zhu, H.; Li, Y.; Liu, B.; Yao, W.; Zhang, R. Extreme quantile estimation for partial functional linear regression models with heavy-tailed distributions. Can. J. Stat. 2022, 50, 267–286.

12. Xia, L.; Du, J.; Zhang, Z. A Nonparametric Model Checking Test for Functional Linear Composite Quantile Regression Models. J. Syst. Sci. Complex. 2024, 37, 1714–1737.

13. Ling, N.; Yang, J.; Yu, T.; Ding, H.; Jia, Z. Semi-Functional Partial Linear Quantile Regression Model with Randomly Censored Responses. Commun. Math. Stat. 2024.

14. Liu, G.; Bai, Y. Functional Quantile Spatial Autoregressive Model and Its Application. J. Syst. Sci. Math. Sci. 2023, 43, 3361–3376.

15. Lee, L.F. Asymptotic distributions of quasi-maximum likelihood estimators for spatial autoregressive models. Econometrica 2004, 72, 1899–1925.

16. Cheng, S.; Chen, J. Estimation of partially linear single-index spatial autoregressive model. Stat. Pap. 2021, 62, 495–531.

17. Wang, X.; Shao, J.; Wu, J.; Zhao, Q. Robust variable selection with exponential squared loss for partially linear spatial autoregressive models. Ann. Inst. Stat. Math. 2023, 75, 949–977.

18. Li, T.; Cheng, Y. Statistical Inference of Partially Linear Spatial Autoregressive Model Under Constraint Conditions. J. Syst. Sci. Complex. 2023, 36, 2624–2660.

19. Tang, Y.; Du, J.; Zhang, Z. A parametric specification test for linear spatial autoregressive models. Spat. Stat. 2023, 57, 100767.

20. Xu, D.; Tian, R.; Lu, Y. Bayesian Adaptive Lasso for the Partial Functional Linear Spatial Autoregressive Model. J. Math. 2022, 2022, 1616068.

21. Liu, G.; Bai, Y. Statistical inference in functional semiparametric spatial autoregressive model. AIMS Math. 2021, 6, 10890–10906.

22. Hu, Y.; Wang, Y.; Zhang, L.; Xue, L. Statistical inference of varying-coefficient partial functional spatial autoregressive model. Commun. Stat.-Theory Methods 2023, 52, 4960–4980.

23. Dai, X.; Jin, L. Minimum distance quantile regression for spatial autoregressive panel data models with fixed effects. PLoS ONE 2021, 16, e0261144.

24. Gu, Y.; You, X.Y. A spatial quantile regression model for driving mechanism of urban heat island by considering the spatial dependence and heterogeneity: An example of Beijing, China. Sustain. Cities Soc. 2022, 79, 103692.

25. Dai, X.; Li, S.; Jin, L.; Tian, M. Quantile regression for partially linear varying coefficient spatial autoregressive models. Commun. Stat.-Simul. Comput. 2024, 53, 4396–4411.

26. Han, M. E-Bayesian estimation of the reliability derived from Binomial distribution. Appl. Math. Model. 2011, 35, 2419–2424.

27. Giordano, M.; Ray, K. Nonparametric Bayesian inference for reversible multidimensional diffusions. Ann. Stat. 2022, 50, 2872–2898.

28. Chen, Z.; Chen, J. Bayesian analysis of partially linear, single-index, spatial autoregressive models. Comput. Stat. 2022, 37, 327–353.

29. Yu, C.H.; Prado, R.; Ombao, H.; Rowe, D. Bayesian spatiotemporal modeling on complex-valued fMRI signals via kernel convolutions. Biometrics 2023, 79, 616–628.

30. Yu, K.; Moyeed, R.A. Bayesian quantile regression. Stat. Probab. Lett. 2001, 54, 437–447.

31. Yu, Y. Bayesian quantile regression for hierarchical linear models. J. Stat. Comput. Simul. 2015, 85, 3451–3467.

32. Wang, Z.Q.; Tang, N.S. Bayesian Quantile Regression with Mixed Discrete and Nonignorable Missing Covariates. Bayesian Anal. 2020, 15, 579–604.

33. Zhang, D.; Wu, L.; Ye, K.; Wang, M. Bayesian quantile semiparametric mixed-effects double regression models. Stat. Theory Relat. Fields 2021, 5, 303–315.

34. Yu, H.; Yu, L. Flexible Bayesian quantile regression for nonlinear mixed effects models based on the generalized asymmetric Laplace distribution. J. Stat. Comput. Simul. 2023, 93, 2725–2750.

35. Chu, Y.; Yin, Z.; Yu, K. Bayesian scale mixtures of normals linear regression and Bayesian quantile regression with big data and variable selection. J. Comput. Appl. Math. 2023, 428, 115192.

36. Yang, K.; Zhao, L.; Hu, Q.; Wang, W. Bayesian quantile regression analysis for bivariate vector autoregressive models with an application to financial time series. Comput. Econ. 2024, 64, 1939–1963.

37. Xie, T.; Cao, R.; Du, J. Variable selection for spatial autoregressive models with a diverging number of parameters. Stat. Pap. 2020, 61, 1125–1145.

38. Gelman, A. Inference and monitoring convergence. In Markov Chain Monte Carlo in Practice; CRC Press: Boca Raton, FL, USA, 1996; pp. 131–144.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).