Abstract

Corner cutting algorithms are important in computer-aided geometric design, and they are associated with stochastic non-singular totally positive matrices. Non-singular totally positive matrices admit a bidiagonal decomposition. For many important examples, this factorization can be obtained with high relative accuracy. From this factorization, a corner cutting algorithm can also be obtained with high relative accuracy. Illustrative examples are included.

Keywords: corner cutting algorithms; accurate computations; bidiagonal factorization; total positivity

MSC: 15A23; 65D17

1. Introduction

Bidiagonal decompositions of a matrix arise in two apparently separate fields of mathematics: in total positivity theory and in computer-aided geometric design (CAGD). In the first case, the bidiagonal decomposition is a remarkable property of a non-singular totally positive matrix. Moreover, this decomposition provides a parametrization of the matrix that has been the starting point for the construction of algorithms with high relative accuracy (see [1,2,3,4,5,6]). In fact, if one knows the bidiagonal decomposition of a non-singular totally positive matrix, then one can construct such algorithms for the computation of all eigenvalues and singular values of the matrix and also to calculate the inverse (see also [7]) and the solution of some linear systems.

High relative accuracy (HRA) is a very desirable goal in numerical analysis and it can be assured when the subtractions in the algorithm only involve initial data (see [8]). The mentioned parameters of the bidiagonal decomposition come from an elimination procedure known as Neville elimination. But this procedure uses, in fact, subtractions, so that an alternative method is usually necessary to obtain the parameters of the bidiagonal decomposition with HRA.

In CAGD, decompositions of a matrix also play a crucial role. In fact, they are associated with the main family of algorithms in this field, which are called corner cutting algorithms (see [9,10,11,12]). For instance, evaluation algorithms and degree reduction and degree elevation algorithms are corner cutting algorithms. The matrix associated with these algorithms is totally positive as well as stochastic, and all bidiagonal factors of the decomposition are also stochastic matrices.

In [13] it was shown that, if a corner cutting algorithm is known with high relative accuracy, then the bidiagonal decomposition of the corresponding matrix can also be obtained with high relative accuracy. Here, the more practical converse question is considered. Let us assume that the bidiagonal decomposition of a given stochastic matrix is known. This has been achieved with many important classes of matrices. Then, it is proved that the corresponding corner cutting algorithm can also be obtained with high relative accuracy.

The layout of this paper is as follows. In Section 2, totally positive matrices and bidiagonal decompositions are introduced and related to Neville elimination. Section 3 recalls some basic facts concerning high relative accuracy. Section 4 deals with corner cutting algorithms and relates them to stochastic non-singular totally positive matrices. Section 5 proves the result mentioned above, which provides the parameters for corner cutting algorithms with high relative accuracy. Section 6 includes some illustrative examples and shows applications to curve evaluation. Finally, Section 7 summarizes the main conclusions of this paper.

2. Total Positivity and Bidiagonal Decompositions

A matrix is called totally positive (TP) when all its minors are non-negative. Such matrices are also often called totally non-negative matrices (see [14,15]). This class of matrices is relevant in many fields, such as approximation theory, statistics, mechanics, economics, combinatorics, biology, computer-aided geometric design, Lie group theory, and graph theory (see [14,16,17,18,19]).

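One of the most computationally advantageous properties of non-singular TP matrices is the following bidiagonal decomposition, although it can be defined for more general matrices. We say that an $n \times n$ non-singular matrix $A$ has a bidiagonal decomposition, denoted by $\mathcal{BD}(A)$, when it can be expressed in the following form:

$$A = L^{(1)} \cdots L^{(n-1)} \, D \, U^{(n-1)} \cdots U^{(1)}, \qquad (1)$$

where $D = \mathrm{diag}(d_1, \ldots, d_n)$, and, for $k = 1, \ldots, n-1$, $L^{(k)}$ and $U^{(k)}$ are unit diagonal lower and upper bidiagonal matrices, respectively, with off-diagonal entries $l_i^{(k)} := (L^{(k)})_{i+1,i}$ and $u_i^{(k)} := (U^{(k)})_{i,i+1}$ ($i = 1, \ldots, n-1$), satisfying

- $d_i \neq 0$ for all $i$,

- $l_i^{(k)} = u_i^{(k)} = 0$ for $i < n-k$,

- $l_i^{(k)} = 0 \Rightarrow l_{i+s}^{(k-s)} = 0$ and $u_i^{(k)} = 0 \Rightarrow u_{i+s}^{(k-s)} = 0$, for $s = 1, \ldots, k-1$.

Therefore, the bidiagonal matrices of the bidiagonal decomposition have the following form:

$$L^{(k)} = \begin{pmatrix} 1 \\ 0 & 1 \\ & \ddots & \ddots \\ & & 0 & 1 \\ & & & l_{n-k}^{(k)} & 1 \\ & & & & \ddots & \ddots \\ & & & & & l_{n-1}^{(k)} & 1 \end{pmatrix}, \qquad U^{(k)} = \begin{pmatrix} 1 & 0 \\ & 1 & \ddots \\ & & \ddots & 0 \\ & & & 1 & u_{n-k}^{(k)} \\ & & & & 1 & \ddots \\ & & & & & \ddots & u_{n-1}^{(k)} \\ & & & & & & 1 \end{pmatrix},$$

where $k = 1, \ldots, n-1$.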

In general, a factorization of a matrix into bidiagonal factors is not unique. However, Proposition 2.2 in [20] guarantees that a bidiagonal decomposition $\mathcal{BD}(A)$ satisfying the conditions above is unique.

Proposition 1.

Let A be a non-singular matrix. If a bidiagonal decomposition exists, then it is unique.

When the matrix A is TP, its bidiagonal decomposition satisfies more specific conditions, which characterize non-singular TP matrices, as the following result shows. This result can be derived from Theorem 4.2 in [21].

Theorem 1.

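An $n \times n$ non-singular matrix $A$ is TP if and only if there exists a (unique) bidiagonal decomposition $\mathcal{BD}(A)$ whose diagonal entries $d_i$ and off-diagonal entries $l_i^{(k)}$, $u_i^{(k)}$ satisfy

- 1. $d_i > 0$ for all $i$;

- 2. $l_i^{(k)} \ge 0$, $u_i^{(k)} \ge 0$, for $1 \le k \le n-1$ and $n-k \le i \le n-1$.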

The next simple example illustrates the applications of Theorem 1.

Example 1.

Given the matrix

its bidiagonal decomposition is given by

so by Theorem 1 it is TP. Examples of higher dimensions can be seen in Section 6.
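The criterion of Theorem 1 can also be checked numerically on a small case. The following is a minimal sketch, not taken from the paper: it assumes a $3 \times 3$ matrix assembled as a product of unit bidiagonal factors around a diagonal matrix, `L1 @ L2 @ diag(d) @ U2 @ U1`, stored as dense NumPy arrays. The helper names `unit_bidiagonal`, `is_totally_positive`, and `assemble`, as well as all numerical parameter values, are illustrative assumptions. The brute-force minor test has exponential cost and is only meant for very small matrices.

```python
import itertools
import numpy as np

def unit_bidiagonal(n, sub=None, sup=None):
    """Unit-diagonal bidiagonal matrix with the given sub- or superdiagonal."""
    M = np.eye(n)
    if sub is not None:
        M += np.diag(np.asarray(sub, dtype=float), -1)
    if sup is not None:
        M += np.diag(np.asarray(sup, dtype=float), 1)
    return M

def is_totally_positive(A, tol=1e-12):
    """Brute-force check that every minor of A is >= -tol."""
    n = A.shape[0]
    for k in range(1, n + 1):
        for rows in itertools.combinations(range(n), k):
            for cols in itertools.combinations(range(n), k):
                if np.linalg.det(A[np.ix_(rows, cols)]) < -tol:
                    return False
    return True

def assemble(d):
    """Multiply hypothetical bidiagonal factors with non-negative multipliers."""
    L1 = unit_bidiagonal(3, sub=[0.0, 1.0])
    L2 = unit_bidiagonal(3, sub=[2.0, 0.5])
    U2 = unit_bidiagonal(3, sup=[1.0, 3.0])
    U1 = unit_bidiagonal(3, sup=[0.0, 2.0])
    return L1 @ L2 @ np.diag(d) @ U2 @ U1

A_tp = assemble([1.0, 2.0, 3.0])       # positive pivots: TP expected
A_not_tp = assemble([1.0, -2.0, 3.0])  # one negative pivot: not TP
print(is_totally_positive(A_tp), is_totally_positive(A_not_tp))
```

With positive diagonal entries and non-negative multipliers, every factor is TP and so is the product; making one diagonal entry negative yields a negative determinant, so the check fails.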

The representation $\mathcal{BD}(A)$ of a non-singular TP matrix A arises in the process of complete Neville elimination (CNE): the off-diagonal entries of the bidiagonal factors coincide with the multipliers of the CNE, and the entries of the diagonal factor coincide with the diagonal pivots (see [21,22]).

The following section shows that an accurate bidiagonal decomposition $\mathcal{BD}(A)$ leads to many accurate computations with non-singular TP matrices.

3. High Relative Accuracy

Let us recall that an algorithm can be performed with high relative accuracy if it does not include subtractions (except of initial data), that is, if it only includes products, divisions, sums of numbers of the same sign, and subtractions of initial data (cf. [8,23]). An algorithm without any subtraction is called a subtraction-free (SF) algorithm, and it can be performed with HRA.
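The effect of a subtraction of computed quantities can be seen in a small experiment. The following sketch is an illustration added here, not an example from the paper: the function $x/(1+x)$ and the value `x = 1e-17` are assumptions chosen so that the cancellation is visible in double precision. It evaluates the same quantity once with a subtraction and once in subtraction-free form, and compares both with an exact rational reference.

```python
from fractions import Fraction

x = 1e-17  # initial datum, x > 0 (hypothetical value)

# Subtraction of computed quantities: 1 + x rounds to 1, so every digit cancels.
with_subtraction = 1.0 - 1.0 / (1.0 + x)

# Algebraically equivalent subtraction-free form: one sum of positive numbers
# and one division.
subtraction_free = x / (1.0 + x)

# Exact reference value in rational arithmetic (Fraction(x) is the exact
# value of the floating point number x).
X = Fraction(x)
exact = X / (1 + X)

def relative_error(approx):
    """Exact relative error of a floating point result."""
    return abs(Fraction(approx) - exact) / exact

err_sub = relative_error(with_subtraction)
err_sf = relative_error(subtraction_free)
print(float(err_sub), float(err_sf))
```

The version with the subtraction returns zero, so it has no correct digits, whereas the subtraction-free version is accurate to the level of the unit roundoff.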

For a non-singular TP matrix A, the non-trivial entries of the matrices in its bidiagonal decomposition $\mathcal{BD}(A)$ (see (1)) have been considered in [4,5,6] as natural parameters associated with A in order to perform many linear algebra computations with A to HRA. In fact, if we know $\mathcal{BD}(A)$ with HRA, then we can compute with HRA the singular values of A, its eigenvalues, its inverse (using also [7]), or the solution of linear systems $Ax = b$ where b has alternating signs.

Moreover, for many subclasses of non-singular TP matrices it has been possible to obtain the bidiagonal decomposition with HRA, so that the remaining linear algebra computations mentioned above can also be carried out with HRA. Among these subclasses, we can mention (cf. [24,25,26]) the collocation matrices of the Bernstein basis of polynomials (also called Bernstein–Vandermonde matrices). Other subclasses of non-singular TP matrices for which this has also been possible are the collocation matrices of the Said–Ball basis of polynomials [27], the collocation matrices of rational bases using the Bernstein or the Said–Ball basis [28], the collocation matrices of the q-Bernstein basis [29], and the collocation matrices of the h-Bernstein basis [30]. All these bases are very useful in the field of computer-aided geometric design (see [9]). In the next section, we recall a crucial family of algorithms in this field.

4. Stochastic TP Matrices and Corner Cutting Algorithms

A matrix is called stochastic if it is non-negative and the entries of each row sum up to 1. We will pay special attention to the non-singular TP matrices that are also stochastic, because they are very important in CAGD. In fact, their bidiagonal factorization can lead to corner cutting algorithms, which form the most relevant family of algorithms in this subject. These algorithms have an important geometric interpretation. They start from a polygon and refine it by cutting its corners iteratively. Depending on how the corners are cut, these algorithms can finish at a point, like the de Casteljau evaluation algorithm, or at another polygon. In the latter case, one can have the degree elevation algorithms or some subdivision-type algorithms like the Chaikin algorithm (cf. [31]). In addition, corner cutting algorithms have very good stability properties. So let us now recall the definition of these algorithms.

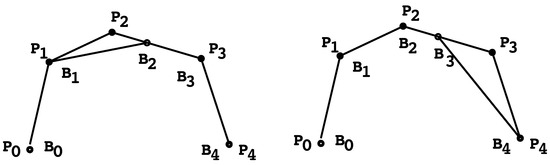

An elementary corner cutting is a transformation that maps any polygon into another polygon defined by one of the following ways:

for some , , or

for some , (see Figure 1). Then, a corner cutting algorithm is any composition of elementary corner cuttings (see [10]).

The matrix form of the elementary corner cutting given by (2) is

where is the non-singular, stochastic, bidiagonal and upper triangular matrix

Analogously to the previous case, a lower triangular matrix can also be used for the elementary corner cutting (3).
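An elementary corner cutting and its matrix form can be sketched in a few lines of Python. This is a minimal sketch under an assumed convention: one vertex $P_i$ is replaced by the convex combination $(1-\lambda) P_i + \lambda P_{i+1}$ with $0 \le \lambda < 1$ and all other vertices are kept. The function names `elementary_corner_cut` and `corner_cut_matrix` and the sample polygon are illustrative, not the paper's notation.

```python
import numpy as np

def elementary_corner_cut(P, i, lam):
    """Replace vertex i by (1 - lam) * P[i] + lam * P[i + 1]; keep the others."""
    Q = np.array(P, dtype=float)
    Q[i] = (1.0 - lam) * Q[i] + lam * Q[i + 1]
    return Q

def corner_cut_matrix(m, i, lam):
    """Upper bidiagonal stochastic matrix performing the same cut on m vertices."""
    U = np.eye(m)
    U[i, i] = 1.0 - lam
    U[i, i + 1] = lam
    return U

# Hypothetical polygon with three vertices in the plane (one vertex per row).
P = np.array([[0.0, 0.0], [2.0, 0.0], [2.0, 2.0]])
Q = elementary_corner_cut(P, 0, 0.5)
U = corner_cut_matrix(len(P), 0, 0.5)
print(Q)
print(np.allclose(U @ P, Q))
```

The matrix differs from the identity in a single row, its rows sum to 1, and applying it to the vertex array reproduces the geometric cut. The lower triangular case is analogous, with $P_{i-1}$ in place of $P_{i+1}$.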

Therefore, a corner cutting algorithm is given by a product of matrices that are all bidiagonal, non-singular, TP, and stochastic. In particular, any upper triangular bidiagonal, non-singular, TP and stochastic matrix leads to a corner cutting algorithm by the composition

Likewise, there exists an analogous factorization for the lower triangular case (3).

A corner cutting algorithm coming from a non-singular stochastic TP matrix can be expressed as a product of bidiagonal non-singular stochastic TP matrices, as shown by the following result, which corresponds to Theorem 4.5 in [21].

Theorem 2.

An $n \times n$ non-singular matrix A is TP and stochastic if and only if it can be decomposed as

with

and

where, satisfies

Under these conditions, the factorization is unique.

We now go back to the matrix of Example 1 to illustrate Theorem 2.

Example 2.

Let A be the matrix given in Example 1. Then, its decomposition associated with a corner cutting algorithm is given by

Examples of higher dimensions will be presented in Section 6.

Remark 1.

A corner cutting algorithm corresponding to a stochastic TP matrix A as in Theorem 2 can be expressed in compact form using the following matrix notation:

With a distribution of the off-diagonal entries analogous to that of the compact form of the corner cutting algorithm, we can define the compact form of the bidiagonal decomposition $\mathcal{BD}(A)$, but including in the main diagonal the diagonal pivots of the CNE of A (see Section 2 of [5]).

Observe that the restrictions on the parameters in Theorem 2 are imposed to ensure the uniqueness of the decomposition, in the same way as the restrictions on the zero entries in $\mathcal{BD}(A)$.

Many essential algorithms used in curve design, such as evaluation, subdivision, degree elevation, and knot insertion, are corner cutting algorithms (see [9,10]). In particular, it is well known that all bases mentioned in the previous section, which are useful in CAGD, have non-singular stochastic TP collocation matrices. Therefore, they satisfy the hypotheses of Theorem 2, which leads to an evaluation corner cutting algorithm for the simultaneous evaluation of points, as illustrated in Section 6.

5. Construction of Corner Cutting Algorithms with HRA

It has already been studied in [13] how to obtain an accurate bidiagonal decomposition of a matrix A from a corner cutting algorithm. In this section, we consider the converse problem, that is, how to obtain with HRA the associated corner cutting algorithm if we start with an accurate bidiagonal decomposition (which has been obtained in many important examples, as recalled in Section 3). The following result gives a constructive answer, proving that, from an accurate $\mathcal{BD}(A)$, we can construct with HRA the corner cutting algorithm of the associated non-singular stochastic TP matrix A.

Theorem 3.

Proof.

Since is non-singular and non-negative, its row sums are positive, and so we can rewrite as

where has positive diagonal entries, formed by the row sums of . In addition, is a stochastic matrix. Since for is non-singular and non-negative, the previous process can be iterated obtaining

where is the diagonal matrix whose i-th diagonal entry is the sum of the entries in the i-th row of the matrix . Since this matrix is non-negative and non-singular, the diagonal entries of are positive. Moreover, are stochastic matrices, for . After iterating this process, we obtain

Now, since is non-singular and non-negative, its row sums are positive, and it can be expressed by

where is a stochastic matrix. Iterating this procedure, the following factorization of A is obtained:

where is a diagonal non-negative matrix. Taking into account that the matrices A, and are stochastic, we have

where . From (6) and using the previous formulas, we can write

So, the diagonal matrix in (6) is stochastic. Hence, it is the identity matrix, and factorization (6) must be as follows:

where (resp., ) are stochastic and lower (resp., upper) bidiagonal matrices as in (4) of Theorem 2 (for , and ).

Algorithm 1 shows a pseudocode of the recursive algorithm introduced in the proof of the previous theorem.

Algorithm 1. Computation of a corner cutting algorithm from a bidiagonal factorization
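The normalization procedure described in the proof of Theorem 3 can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: the function name `stochastic_factors`, the argument layout (lists of factors in product order), and the dense NumPy storage are all choices made here for readability; an efficient version would store only the off-diagonal entries of each bidiagonal factor. Each step scales the columns of a factor by the diagonal carried from the right, takes its row sums, and divides each row by its sum, so only products, sums of non-negative numbers, and divisions occur.

```python
import numpy as np

def stochastic_factors(lower, d, upper):
    """Rewrite A = lower[0] @ ... @ lower[-1] @ diag(d) @ upper[0] @ ... @ upper[-1],
    with non-negative non-singular factors, as diag(c) times stochastic factors.
    Returns (c, new_lower, new_upper)."""
    c = np.ones(len(d))                   # diagonal scaling carried right to left
    new_upper = []
    for G in reversed(upper):
        N = G * c                         # G @ diag(c): column j scaled by c[j]
        c = N.sum(axis=1)                 # row sums of a non-negative matrix
        new_upper.append(N / c[:, None])  # each row divided by its sum
    new_upper.reverse()
    c = np.asarray(d, dtype=float) * c    # absorb the diagonal factor
    new_lower = []
    for F in reversed(lower):
        N = F * c
        c = N.sum(axis=1)
        new_lower.append(N / c[:, None])
    new_lower.reverse()
    return c, new_lower, new_upper

# Hypothetical 2 x 2 illustration:
# F @ diag(d) @ G = [[0.5, 0.5], [0.25, 0.75]], which is stochastic.
F = np.array([[1.0, 0.0], [0.5, 1.0]])
G = np.array([[1.0, 1.0], [0.0, 1.0]])
d = [0.5, 0.5]
c, (F_bar,), (G_bar,) = stochastic_factors([F], d, [G])
print(c)
print(F_bar)
print(G_bar)
```

For a stochastic matrix, the remaining diagonal `c` consists of ones, in agreement with the argument in the proof, and the product of the new factors reproduces the original matrix.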

6. Examples

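This section presents examples illustrating the result and the algorithm of the previous section. To this end, let us consider the basis $(b_0^n, \ldots, b_n^n)$ of the space of polynomials of degree at most n given by the Bernstein polynomials of degree n:

$$b_i^n(t) = \binom{n}{i} t^i (1-t)^{n-i}, \quad t \in [0,1], \quad i = 0, 1, \ldots, n.$$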

Example 3.

For the first example, let us consider the collocation matrix of the Bernstein basis for at the points , which is given by

This matrix is stochastic, non-singular, and totally positive. It can be checked that its bidiagonal decomposition is given in compact form by

that is,

Applying Algorithm 1 to the previous bidiagonal decomposition of , the following corner cutting algorithm is obtained:

Taking into account Remark 1, this bidiagonal representation of the corner cutting algorithm can be written in compact form as

Example 4.

In this example, we consider the collocation matrix of the Bernstein basis of the space of polynomials of degree less than or equal to 7 at the points . Let us denote it by . It can be checked that its bidiagonal decomposition can be expressed in compact form as

Applying Algorithm 1 to the previous bidiagonal decomposition, the following corner cutting algorithm is obtained:

In computer-aided geometric design, the usual representation to work with polynomial curves is the Bézier representation. A Bézier curve of degree n is given by

$$\gamma(t) = \sum_{i=0}^{n} P_i \, b_i^n(t), \quad t \in [0,1],$$

where $b_i^n$ are the Bernstein polynomials of degree n and $P_i \in \mathbb{R}^k$, $k = 2$ or 3, are called the control points of the curve. The usual algorithm in CAGD to evaluate a Bézier curve is the de Casteljau algorithm. This algorithm is a corner cutting algorithm; that is, all its steps are formed by convex combinations. Corner cutting algorithms are desirable since they are very stable from a numerical point of view. The de Casteljau algorithm evaluates a Bézier curve of degree n at a given parameter value with a computational cost of $O(n^2)$ elementary operations. So it evaluates the Bézier curve at a sequence of $n+1$ points with a computational cost of $O(n^3)$ elementary operations. Taking into account Examples 3 and 4, we can obtain another corner cutting algorithm to evaluate Bézier curves. Let us consider a Bézier function of degree n

where for . Then, we can consider the collocation matrix of the Bernstein basis of degree n at :

The previous matrix is stochastic, non-singular, and totally positive. By the results in [26], its bidiagonal decomposition can be computed to HRA. Then, using Theorem 3, from this bidiagonal decomposition, a corner cutting algorithm representation like that of Theorem 2 can be obtained for this matrix:

Then, we can deduce that

Hence, we in fact have a corner cutting algorithm to evaluate Bézier curves that is an alternative to the de Casteljau algorithm. In addition, the new algorithm has a computational cost of $O(n^2)$ elementary operations to evaluate the function at the $n+1$ points, in contrast to the computational cost of $O(n^3)$ elementary operations of the de Casteljau algorithm.
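The relation between the de Casteljau algorithm and the collocation matrix of the Bernstein basis can be checked numerically. The following is a minimal sketch with assumed names (`de_casteljau`, `bernstein_collocation`) and hypothetical coefficients and evaluation points; it multiplies by the dense collocation matrix rather than by its stochastic bidiagonal factors, so it only verifies that both routes give the same values and does not reproduce the cost of the factored algorithm.

```python
from math import comb
import numpy as np

def de_casteljau(coeffs, t):
    """Evaluate sum_i coeffs[i] * b_i^n(t) by repeated convex combinations."""
    b = np.array(coeffs, dtype=float)
    n = len(b) - 1
    for r in range(1, n + 1):
        # Each step replaces neighbouring pairs by (1 - t) * left + t * right.
        b[: n - r + 1] = (1.0 - t) * b[: n - r + 1] + t * b[1 : n - r + 2]
    return b[0]

def bernstein_collocation(n, ts):
    """Matrix with entries b_j^n(t_i); each row sums to 1 for t_i in [0, 1]."""
    return np.array(
        [[comb(n, j) * t**j * (1.0 - t) ** (n - j) for j in range(n + 1)] for t in ts]
    )

coeffs = [1.0, 3.0, 2.0]   # hypothetical Bézier coefficients, degree 2
ts = [0.1, 0.5, 0.9]       # hypothetical evaluation points
M = bernstein_collocation(2, ts)
values_matrix = M @ np.array(coeffs)
values_casteljau = np.array([de_casteljau(coeffs, t) for t in ts])
print(values_matrix)
print(values_casteljau)
```

Replacing the dense product by successive products with the bidiagonal stochastic factors of the collocation matrix gives the alternative evaluation algorithm discussed above, with each factor costing a number of operations proportional to the number of points.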

The two previous examples have shown how Algorithm 1 provides a corner cutting algorithm from the bidiagonal decomposition of a non-singular stochastic TP matrix. The next example illustrates that, when Algorithm 1 is carried out in floating-point arithmetic, the parameters of the corner cutting algorithm are obtained with high relative accuracy.

Example 5.

In Example 4, the corner cutting algorithm was obtained from the bidiagonal decomposition by applying Algorithm 1 with exact computations. Then, its parameters were computed in double-precision floating-point arithmetic with a Python (3.10.9) implementation of Algorithm 1. Finally, the component-wise relative errors were calculated, obtaining:

As can be observed, all the parameters of the corner cutting algorithm are obtained with an error of the order of the unit roundoff of the double-precision floating-point arithmetic system. So, all the parameters are obtained with HRA, as Theorem 3 states.
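A component-wise relative error of this kind can be computed exactly with rational arithmetic. The sketch below is an illustration only: the function name `componentwise_relative_errors` and the three sample parameters are assumptions, not the data of Example 5. Exact values are stored as `Fraction` objects and each floating point result is converted to its exact rational value before the comparison.

```python
from fractions import Fraction

def componentwise_relative_errors(approx, exact):
    """Exact relative error |a - e| / |e| of each float a against a rational e."""
    return [abs(Fraction(a) - e) / abs(e) for a, e in zip(approx, exact)]

# Hypothetical parameters: exact rationals and their double-precision quotients.
exact = [Fraction(1, 3), Fraction(2, 7), Fraction(5, 9)]
approx = [1 / 3, 2 / 7, 5 / 9]

errors = componentwise_relative_errors(approx, exact)
unit_roundoff = Fraction(1, 2**53)
print([float(e) for e in errors])
```

Since each quotient is a single correctly rounded division, every error is bounded by the unit roundoff $2^{-53}$ of double precision.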

7. Conclusions

The bidiagonal decomposition with high relative accuracy is known for many non-singular TP matrices. If the non-singular TP matrix is also stochastic, then it provides a corner cutting algorithm. It is proved that, if we have the bidiagonal decomposition with high relative accuracy, then we can obtain the corner cutting algorithm through an SF algorithm, and so with high relative accuracy. Hence, the method presented in this paper provides a source for constructing corner cutting algorithms with high relative accuracy. Examples of its use as curve evaluation algorithms are included.

Author Contributions

All authors contributed equally to this work. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially funded by the Spanish research grants PID2022-138569NB-I00 (MCI/AEI) and RED2022-134176-T (MCI/AEI), and by Gobierno de Aragón (E41_23R).

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| TP | Totally positive |
| CNE | Complete Neville elimination |
| HRA | High relative accuracy |
| SF | Subtraction free |
| CAGD | Computer-aided geometric design |

References

1. Demmel, J. Accurate singular value decompositions of structured matrices. SIAM J. Matrix Anal. Appl. 1999, 21, 562–580.

2. Demmel, J.; Koev, P. The accurate and efficient solution of a totally positive generalized Vandermonde linear system. SIAM J. Matrix Anal. Appl. 2005, 27, 42–52.

3. Higham, N.J. Accuracy and Stability of Numerical Algorithms, 2nd ed.; SIAM: Philadelphia, PA, USA, 2002.

4. Koev, P. Accurate eigenvalues and SVDs of totally nonnegative matrices. SIAM J. Matrix Anal. Appl. 2005, 27, 1–23.

5. Koev, P. Accurate computations with totally nonnegative matrices. SIAM J. Matrix Anal. Appl. 2007, 29, 731–751.

6. Koev, P. TNTool. Available online: https://sites.google.com/sjsu.edu/plamenkoev/home/software/tntool (accessed on 6 February 2025).

7. Marco, A.; Martínez, J.J. Accurate computation of the Moore–Penrose inverse of strictly totally positive matrices. J. Comput. Appl. Math. 2019, 350, 299–308.

8. Demmel, J.; Dumitriu, I.; Holtz, O.; Koev, P. Accurate and efficient expression evaluation and linear algebra. Acta Numer. 2008, 17, 87–145.

9. Farin, G. Curves and Surfaces for Computer-Aided Geometric Design. A Practical Guide, 4th ed.; Academic Press: San Diego, CA, USA; Computer Science and Scientific Computing, Inc.: Rockaway, NJ, USA, 1997.

10. Goodman, T.N.T.; Micchelli, C.A. Corner cutting algorithms for the Bézier representation of free form curves. Linear Algebra Appl. 1988, 99, 225–252.

11. Hoschek, J.; Lasser, D. Fundamentals of Computer Aided Geometric Design; A K Peters: Wellesley, MA, USA, 1993.

12. Micchelli, C.A.; Pinkus, A.M. Descartes systems from corner cutting. Constr. Approx. 1991, 7, 161–194.

13. Barreras, A.; Peña, J.M. Matrices with bidiagonal decomposition, accurate computations and corner cutting algorithms. In Concrete Operators, Spectral Theory, Operators in Harmonic Analysis and Approximation, Birkhauser; Operator Theory, Advances and Applications; Springer: Berlin/Heidelberg, Germany, 2013; Volume 236, pp. 43–51.

14. Fallat, S.M.; Johnson, C.R. Totally Nonnegative Matrices; Princeton Series in Applied Mathematics; Princeton University Press: Princeton, NJ, USA, 2011.

15. Gantmacher, F.P.; Krein, M.G. Oscillation Matrices and Kernels and Small Vibrations of Mechanical Systems, Revised Edition; AMS Chelsea Publishing: Providence, RI, USA, 2002.

16. Ando, T. Totally positive matrices. Linear Algebra Appl. 1987, 90, 165–219.

17. Gasca, M.; Micchelli, C.A. Total Positivity and Its Applications; Kluwer Academic Publishers: Dordrecht, The Netherlands, 1996.

18. Karlin, S. Total Positivity; Stanford University Press: Stanford, CA, USA, 1968; Volume 1.

19. Pinkus, A. Totally Positive Matrices; Cambridge Tracts in Mathematics 181; Cambridge University Press: Cambridge, UK, 2010.

20. Barreras, A.; Peña, J.M. Accurate computations of matrices with bidiagonal decomposition using methods for totally positive matrices. Numer. Linear Algebra Appl. 2013, 20, 413–424.

21. Gasca, M.; Peña, J.M. On factorizations of totally positive matrices. In Total Positivity and Its Applications; Gasca, M., Micchelli, C.A., Eds.; Kluwer Academic Publishers: Dordrecht, The Netherlands, 1996; pp. 109–130.

22. Gasca, M.; Peña, J.M. Total positivity and Neville elimination. Linear Algebra Appl. 1992, 165, 25–44.

23. Demmel, J.; Gu, M.; Eisenstat, S.; Slapnicar, I.; Veselic, K.; Drmac, Z. Computing the singular value decomposition with high relative accuracy. Linear Algebra Appl. 1999, 299, 21–80.

24. Marco, A.; Martínez, J.J. A fast and accurate algorithm for solving Bernstein-Vandermonde linear systems. Linear Algebra Appl. 2007, 422, 616–628.

25. Marco, A.; Martínez, J.J. Polynomial least squares fitting in the Bernstein basis. Linear Algebra Appl. 2010, 433, 1254–1264.

26. Marco, A.; Martínez, J.J. Accurate computations with totally positive Bernstein-Vandermonde matrices. Electron. J. Linear Algebra 2013, 26, 357–380.

27. Marco, A.; Martínez, J.J. Accurate computations with Said-Ball-Vandermonde matrices. Linear Algebra Appl. 2010, 432, 2894–2908.

28. Delgado, J.; Peña, J.M. Accurate computations with collocation matrices of rational bases. Appl. Math. Comput. 2013, 219, 4354–4364.

29. Delgado, J.; Peña, J.M. Accurate computations with collocation matrices of q-Bernstein polynomials. SIAM J. Matrix Anal. Appl. 2015, 36, 880–893.

30. Marco, A.; Martínez, J.J.; Viaña, R. Accurate bidiagonal decomposition of totally positive h-Bernstein-Vandermonde matrices and applications. Linear Algebra Appl. 2019, 579, 320–335.

31. Joy, K.I. On-Line Geometric Modeling Notes: Chaikin’s Algorithm for Curves. Visualization and Graphics Research Group, Department of Computer Science, University of California, Davis. 1999. Available online: https://www.cs.unc.edu/~dm/UNC/COMP258/LECTURES/Chaikins-Algorithm.pdf (accessed on 10 March 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).