Abstract

We study stochastic production planning in capacity-constrained manufacturing systems, where feasible operating states are restricted to a convex safe-operating region. The objective is to minimize the total cost that combines a quadratic production effort with an inventory holding cost, while automatically halting production when the state leaves the safe region. We derive the associated Hamilton–Jacobi–Bellman (HJB) equation, establish the existence and uniqueness of the value function under broad conditions, and prove a concavity property of the transformed value function that yields a robust gradient-based optimal feedback policy. From an operations perspective, the stopping mechanism encodes hard capacity and safety limits, ensuring bounded risk and finite expected costs. We complement the analysis with numerical methods based on finite differences and illustrate how the resulting policies inform real-time decisions through two application-inspired examples: a single-product case calibrated with typical process-industry parameters and a two-dimensional example motivated by semiconductor fabrication, where interacting production variables must satisfy joint safety constraints. The results bridge rigorous stochastic control with practical production planning and provide actionable guidance for operating under uncertainty and capacity limits.

Keywords:

quadratic cost functional; optimal production strategies; HJB equation; capacity constraints; feedback control

MSC:

49K20; 49K30; 90C31; 90B30

1. Introduction

Production planning under uncertainty is a central challenge in manufacturing and operations management. Planners must determine production rates that meet service targets at minimum cost while coping with fluctuating demand, process variability, and strict capacity or safety limits. In modern plants, feasible operating regimes are inherently multi-dimensional (e.g., joint limits on throughput, energy, emissions, storage, or quality indices) and cannot be captured adequately by simple per-variable bounds. Violating such joint limits can trigger shutdowns, quality failures, or regulatory penalties. Effective policies must therefore monitor and steer operations within a safe region and halt production when risk escalates. From a managerial perspective, such halts are not merely technical artifacts but represent economically rational decisions to avoid disproportionate losses.

Recent contributions highlight the breadth of stochastic and optimization approaches in production and risk management, including applications to pension schemes [1], image restoration [2], mean-field stochastic control with hybrid disturbances [3], emission-dependent production and maintenance policies [4,5], and integrated capacity planning and logistics optimization [6]. These studies underscore the growing importance of linking rigorous control theory with sustainability, financial risk, and complex manufacturing environments.

Classical stochastic production and inventory models focus on the optimal control of production or inventory processes (e.g., mean-reverting inventories and business-cycle effects [7,8]), multi-product or multi-machine settings [9], and joint price–production decisions [10,11]. These approaches typically assume simple feasible sets or constraints that do not reflect the geometry of real capacity envelopes. Moreover, they often abstract from non-negativity of production rates or from the explicit role of a reference operating point, assumptions that are crucial in practice. On the methodological side, viscosity and PDE-based control techniques (e.g., [12,13]) provide rigorous tools for the existence, uniqueness, and qualitative properties of value functions, yet have seen limited integration into production models with explicit geometric state constraints.

We bridge these strands by:

- Modeling feasible operating states as a smooth convex domain encoding joint capacity and safety limits, with an endogenous stopping rule that halts operations upon exit. This captures the economic reality that temporary shutdowns are preferable to uncontrolled excursions.

- Deriving the Hamilton–Jacobi–Bellman (HJB) equation for a quadratic-effort plus holding-cost objective and proving the existence and uniqueness of the associated value function. The quadratic effort term represents the convex marginal costs of production adjustment, while the holding cost reflects the inventory risk.

- Establishing a concavity property of a transformed value function, which implies a robust gradient-based optimal feedback control that is well-suited to noisy environments and ensures monotone responses to shocks.

- Designing finite-difference solvers for one- and two-dimensional domains and demonstrating how the numerical policies guide operations in two application-inspired settings: (i) single-product process control and (ii) a two-variable semiconductor example with a joint safety envelope.

Together, these results yield implementable policies that explicitly account for geometric capacity constraints, improving interpretability and practical relevance for plant-level decision support. The safety-triggered stopping time ensures bounded risk and finite costs, clarifying when temporary halts are economically rational. In particular, the model highlights how the non-negativity of production and the choice of a reference point affect both the mathematical structure and the operational interpretation.

Two contexts illustrate the need for geometry-aware control:

- (i) In process industries, operators must balance production rates against inventory holding risks under process noise.

- (ii) In semiconductor fabrication, chemical exposure and tool utilization must be simultaneously contained within a safety envelope to avoid defects or shutdowns.

In both cases, our framework produces feedback policies that steer operations away from risk boundaries and justify controlled halts when excursions are likely. These examples also demonstrate how the mathematical results translate into concrete managerial insights.

Compared to [7,8,9,10,11], we incorporate a multidimensional safe-operating set directly into the control problem and provide analytical guarantees (existence, uniqueness, concavity) that support numerics and implementation. Relative to PDE/control approaches such as [12,13], we tailor the analysis to production planning with clear operational interpretation (capacity envelopes, halting logic) and provide structured algorithms and experiments aligned with production and operations practice. Our contribution is therefore both methodological and applied: it extends rigorous stochastic control theory while offering tools that can be directly interpreted by practitioners.

The remainder of this article is organized as follows. Section 2 formalizes the model and cost structure, highlighting assumptions such as the non-negativity of production and the role of the reference point $x_0$. Section 3 presents the analytical results for the HJB equation, including the existence, uniqueness, and concavity of the transformed value function. Section 4 provides a verification argument and characterizes the optimal feedback control. Section 5 reports the numerical experiments and application-inspired examples, with a discussion of sensitivity and managerial interpretation. Section 6 concludes and outlines extensions. Appendix A provides links to the Python 3.13.5 code used in the experiments, which is suitable for replication or adaptation.

2. Generalized Hamilton–Jacobi–Bellman Equation

The problem formulation involves the following:

- Production configurations: $\omega \subset \mathbb{R}^N$ is a convex, open, and bounded domain representing the set of admissible production configurations, and $x_0 \in \omega$ is a chosen interior reference point. The smooth boundary $\partial\omega$ encodes the joint capacity and safety limits of the manufacturing system. Economically, $\omega$ represents the feasible region of operations, while $\partial\omega$ marks the onset of shutdowns, quality failures, or regulatory violations.

- Inventory dynamics: The inventory levels $y(t) \in \mathbb{R}^N$ evolve according to
$$dy(t) = p(t)\,dt + \sigma\,dw(t), \qquad y(0) = x \in \omega,$$
where $p(t)$ is the production-rate vector, and $\sigma\,dw(t)$, with volatility $\sigma > 0$, captures random fluctuations in the manufacturing process. In practice, $p(t) \ge 0$ (componentwise) reflects the non-negativity of production rates, a natural operational constraint.

- Cost functional: The objective is to minimize the total expected cost
$$J(p) = \mathbb{E}\left[\int_0^{\tau}\left(|p(t)|^2 + b(y(t))\right)dt + g(y(\tau))\right],$$
where $b : \mathbb{R}^N \to [0, \infty)$ is a continuous inventory holding cost, $g$ is the terminal cost paid when production halts, and $|\cdot|$ denotes the Euclidean norm. The quadratic effort term $|p|^2$ models the convex marginal costs of adjusting production, while $b$ captures the economic penalty of carrying inventory (e.g., storage, obsolescence, or capital costs).

- Stopping condition: Production halts at the stopping time
$$\tau = \inf\left\{t \ge 0 : |y(t) - x_0| \ge \operatorname{dist}(x_0, \partial\omega)\right\}, \tag{1}$$
where $\operatorname{dist}(x_0, \partial\omega)$ is the Euclidean distance between the reference point $x_0$ and the boundary $\partial\omega$. This condition ensures that operations are terminated before inventory trajectories leave the safe region. From a managerial viewpoint, $\tau$ represents the first time at which risk becomes unacceptable and a temporary halt is economically rational.
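The dynamics, running cost, and stopping rule (1) can be simulated directly. The following is a minimal Python sketch under stated assumptions, not the author's code: the helper names `simulate_cost` and `mean_cost`, the Euler–Maruyama step `dt`, the seed, and all parameter values are illustrative. As a sanity check it uses zero production ($p \equiv 0$, which respects $p \ge 0$), holding cost $b \equiv 1$, and $g = 0$, so the cost equals $\tau$; for Brownian motion started at the center of a ball of radius $d$, $\mathbb{E}[\tau] = d^2/(N\sigma^2)$.

```python
import math
import random


def simulate_cost(x, x0, dist, policy, holding_cost, sigma, dt=1e-3, rng=None):
    """One Euler-Maruyama path of dy = p dt + sigma dw, stopped by rule (1).

    Returns (tau, cost), where cost approximates the integral of
    |p|^2 + b(y) over [0, tau]; the terminal cost g is taken to be 0 here.
    """
    rng = rng or random.Random()
    y = list(x)
    t, cost = 0.0, 0.0
    sqrt_dt = math.sqrt(dt)
    # Halt as soon as |y - x0| >= dist(x0, boundary), as in (1).
    while math.dist(y, x0) < dist:
        p = policy(y)
        cost += (sum(pk * pk for pk in p) + holding_cost(y)) * dt
        y = [yk + pk * dt + sigma * sqrt_dt * rng.gauss(0.0, 1.0)
             for yk, pk in zip(y, p)]
        t += dt
    return t, cost


def mean_cost(n_paths, seed=12345, **kwargs):
    """Monte Carlo average of the running cost over n_paths independent paths."""
    rng = random.Random(seed)
    return sum(simulate_cost(rng=rng, **kwargs)[1] for _ in range(n_paths)) / n_paths


if __name__ == "__main__":
    N, sigma, d = 2, 1.0, 1.0
    est = mean_cost(
        1000, x=[0.0] * N, x0=[0.0] * N, dist=d,
        policy=lambda y: [0.0] * N,      # zero production, so p >= 0 holds
        holding_cost=lambda y: 1.0,      # b = 1, so the cost equals tau
        sigma=sigma, dt=2e-3)
    print(f"Monte Carlo: {est:.3f}, exact E[tau]: {d ** 2 / (N * sigma ** 2):.3f}")
```

Because the exit is checked only at grid times, the estimate is biased slightly upward, by an amount that shrinks roughly like $\sqrt{dt}$.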

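The process $w(t)$ is an $N$-dimensional Brownian motion on a complete probability space. Let $z : \overline{\omega} \to \mathbb{R}$ be the value function, i.e., the minimum total expected cost starting from $x \in \omega$:
$$z(x) = \inf_{p}\, \mathbb{E}\left[\int_0^{\tau}\left(|p(t)|^2 + b(y(t))\right)dt + g(y(\tau)) \,\middle|\, y(0) = x\right].$$

Under the dynamic programming principle, $z$ satisfies the Hamilton–Jacobi–Bellman (HJB) equation
$$0 = \min_{p}\left\{\frac{\sigma^2}{2}\Delta z(x) + p \cdot \nabla z(x) + |p|^2 + b(x)\right\}, \qquad x \in \omega. \tag{2}$$

Minimizing the right-hand side over $p$ yields the optimal feedback
$$p^*(x) = -\frac{1}{2}\nabla z(x),$$

and substituting in (2) gives
$$\frac{\sigma^2}{2}\Delta z(x) - \frac{1}{4}|\nabla z(x)|^2 + b(x) = 0, \qquad x \in \omega.$$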

We impose the Dirichlet boundary condition
$$z(x) = g(x), \qquad x \in \partial\omega,$$

where $g$ represents the terminal cost upon exit (e.g., shutdown or reconfiguration cost). This boundary condition formalizes the economic penalty of leaving the safe operating region.

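To linearize the equation, define
$$u(x) = e^{-z(x)/(2\sigma^2)}, \qquad \text{i.e.,} \qquad z(x) = -2\sigma^2 \ln u(x).$$

By the chain rule,
$$\nabla z = -2\sigma^2\,\frac{\nabla u}{u}, \qquad \Delta z = -2\sigma^2\left(\frac{\Delta u}{u} - \frac{|\nabla u|^2}{u^2}\right),$$
so the quadratic gradient terms cancel: $\frac{\sigma^2}{2}\Delta z - \frac{1}{4}|\nabla z|^2 = -\sigma^4\,\frac{\Delta u}{u}$.

Hence, (2) is equivalent to the linear elliptic boundary value problem
$$\Delta u(x) = \frac{b(x)}{\sigma^4}\,u(x), \qquad x \in \omega, \tag{3}$$

with boundary condition
$$u(x) = e^{-g(x)/(2\sigma^2)}, \qquad x \in \partial\omega. \tag{4}$$

The boundary data (4) encode the exit cost and make $u$ strictly positive on $\partial\omega$. When $\partial\omega$ is smooth, $\omega$ is convex, $b \ge 0$ is sufficiently regular (e.g., Hölder continuous), and $g$ is continuous, problem (3)–(4) is a uniformly elliptic linear Dirichlet problem that admits a unique classical solution $u \in C^2(\omega) \cap C(\overline{\omega})$. The optimal value and feedback are recovered via
$$z = -2\sigma^2 \ln u, \qquad p^* = -\frac{1}{2}\nabla z = \sigma^2\,\frac{\nabla u}{u}.$$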
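The cancellation behind the transform can be checked numerically. A minimal sketch under illustrative assumptions: a constant holding cost $b = k^2\sigma^4$ (the values of $\sigma$, $k$, and the constant $A$ below are hypothetical), for which $u(x) = A\cosh(kx)$ solves $u'' = (b/\sigma^4)u$ in one dimension. The residual of the reduced HJB equation for $z = -2\sigma^2\ln u$ should then vanish up to finite-difference error, and $-\tfrac{1}{2}z'$ should agree with $\sigma^2 u'/u$.

```python
import math


def hjb_residual(z, b, sigma, x, h=1e-4):
    """(sigma^2/2) z'' - (z')^2/4 + b at x, using central differences."""
    dz = (z(x + h) - z(x - h)) / (2 * h)
    d2z = (z(x + h) - 2 * z(x) + z(x - h)) / h ** 2
    return 0.5 * sigma ** 2 * d2z - 0.25 * dz ** 2 + b(x)


# Hypothetical data: with b = k^2 sigma^4, u(x) = A cosh(k x) solves u'' = (b / sigma^4) u.
sigma, k, A = 0.8, 1.5, 0.3
b = lambda x: k ** 2 * sigma ** 4
u = lambda x: A * math.cosh(k * x)
z = lambda x: -2 * sigma ** 2 * math.log(u(x))      # z = -2 sigma^2 ln u

points = [-0.9, -0.3, 0.0, 0.4, 0.8]
residuals = [hjb_residual(z, b, sigma, x) for x in points]

# Feedback computed two ways: -z'/2 (finite differences) and sigma^2 u'/u (closed form).
h = 1e-5
p_from_z = [-(z(x + h) - z(x - h)) / (4 * h) for x in points]
p_from_u = [sigma ** 2 * k * math.tanh(k * x) for x in points]

print(max(abs(r) for r in residuals))                            # finite-difference level
print(max(abs(a - c) for a, c in zip(p_from_z, p_from_u)))       # finite-difference level
```

The constant $A$ drops out of both checks, which reflects the fact that (3) is linear and homogeneous in $u$.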

Computationally, (3) is amenable to finite-difference or finite-element discretizations on general convex domains, which we exploit in Section 5. From an applied perspective, this reformulation highlights how advanced PDE methods yield implementable numerical schemes that directly inform production planning under uncertainty.

3. Results on the Value Function

We establish the existence, uniqueness, and qualitative properties of the value function via the linearized boundary value problem (3)–(4). Throughout, $\omega$ is a bounded convex domain, and $b \ge 0$ and $g$ are as in Section 2.

3.1. Preliminaries

Assume that the inventory cost $b$ is continuous, with a zero set equal to the closure of a connected subdomain $\omega_0 \subset \omega$ (i.e., $b(x) = 0$ if and only if $x \in \overline{\omega_0}$, and $b > 0$ on $\omega \setminus \overline{\omega_0}$). This structure covers settings where holding costs vanish in a target region and grow away from it. Economically, $\omega_0$ represents a “comfort zone” of inventory levels, where storage costs are negligible, while deviations outside incur increasing penalties.

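Definition 1

(Sub- and supersolutions). A function $\bar{u} \in C^2(\omega) \cap C(\overline{\omega})$ is a supersolution of (3)–(4) if
$$\Delta\bar{u} \le \frac{b}{\sigma^4}\,\bar{u} \ \text{ in } \omega, \qquad \bar{u} \ge e^{-g/(2\sigma^2)} \ \text{ on } \partial\omega, \tag{5}$$
and a function $\underline{u} \in C^2(\omega) \cap C(\overline{\omega})$ is a subsolution if
$$\Delta\underline{u} \ge \frac{b}{\sigma^4}\,\underline{u} \ \text{ in } \omega, \qquad \underline{u} \le e^{-g/(2\sigma^2)} \ \text{ on } \partial\omega. \tag{6}$$

Proposition 1

(Comparison principle). Let $c \ge 0$ be bounded on $\omega$, and let $u_1, u_2 \in C^2(\omega) \cap C(\overline{\omega})$ satisfy $\Delta u_1 - c\,u_1 \ge \Delta u_2 - c\,u_2$ in $\omega$ and $u_1 \le u_2$ on $\partial\omega$. Then, $u_1 \le u_2$ in $\omega$.

Proof Sketch.

Let $w = u_1 - u_2$. Then, $\Delta w - c\,w \ge 0$ in $\omega$, and $w \le 0$ on $\partial\omega$. If $w$ attained a positive maximum in $\omega$, the strong maximum principle (and $c \ge 0$) would be violated. Hence, $w \le 0$. □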

3.2. Existence, Uniqueness, and Positivity

The first result addresses the existence, uniqueness, and positivity for the linear elliptic problem obtained from the HJB transformation. These properties are crucial: existence ensures that the optimization problem is well-posed, uniqueness guarantees that the optimal policy is unambiguous, and positivity reflects the fact that the costs remain finite and economically interpretable.

Theorem 1.

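Let $\omega$ be a bounded convex domain, and let $b \ge 0$ and $g$ be as in Section 2. Then, the boundary value problem
$$\Delta u = \frac{b(x)}{\sigma^4}\,u \ \text{ in } \omega, \qquad u = e^{-g/(2\sigma^2)} \ \text{ on } \partial\omega, \tag{7}$$

admits a unique classical solution $u \in C^2(\omega) \cap C(\overline{\omega})$. Moreover, $u > 0$ in $\omega$, and $u$ attains the boundary value continuously on $\partial\omega$.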

Proof Sketch.

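Existence via sub-/supersolutions. The existence of the solution $u$ is established via the sub- and supersolution method described above. Define the constant function
$$\bar{u}(x) \equiv \max_{\partial\omega} e^{-g/(2\sigma^2)}, \qquad x \in \overline{\omega}.$$

Since $\bar{u}$ is constant, we immediately have $\Delta\bar{u} = 0$ in $\omega$, so that
$$\Delta\bar{u} = 0 \le \frac{b(x)}{\sigma^4}\,\bar{u}.$$

Moreover, since $\bar{u} \ge e^{-g/(2\sigma^2)}$ on $\partial\omega$, $\bar{u}$ satisfies the boundary inequality, and hence it is a supersolution of (7). Next, by classical elliptic theory (see, e.g., [14] [Theorem 4.3, p. 56]), the auxiliary problem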

has a unique positive solution . Using the maximum principle applied to (8), one checks that satisfies both the boundary condition and the differential inequality (6). In particular, one may show that

We now define . For to serve as a subsolution, we require two properties:

(i) Boundary condition:

so, satisfies the boundary condition.

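By construction, the subsolution $\underline{u}$ and the constant supersolution $\bar{u}$ satisfy
$$\underline{u} \le \bar{u} \quad \text{in } \overline{\omega},$$

ensuring that the solution exists within these bounds. Monotone iteration techniques then yield the desired positive solution $u$ of (7) satisfying
$$\underline{u} \le u \le \bar{u} \quad \text{in } \omega.$$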

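Uniqueness and positivity. If $u_1, u_2$ solve (7) with the same boundary data, their difference $w = u_1 - u_2$ satisfies
$$\Delta w = \frac{b(x)}{\sigma^4}\,w \ \text{ in } \omega, \qquad w = 0 \ \text{ on } \partial\omega.$$

By the maximum principle, $w \equiv 0$, and hence, $u_1 = u_2$. Since the boundary datum $e^{-g/(2\sigma^2)}$ is strictly positive, the strong maximum principle and Hopf lemma imply that $u > 0$ in $\omega$. □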

Corollary 1.

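The value function $z = -2\sigma^2 \ln u$ is well-defined and continuous on $\overline{\omega}$, and the optimal feedback is
$$p^*(x) = -\frac{1}{2}\nabla z(x) = \sigma^2\,\frac{\nabla u(x)}{u(x)}, \qquad x \in \omega.$$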

3.3. Monotone Iteration (Constructive View)

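For completeness, we outline a constructive scheme (inspired by [15]). Let $\lambda \ge \max_{\overline{\omega}} b/\sigma^4$. Fix $u^0 = \bar{u}$, the constant supersolution from the proof of Theorem 1, and for $k \ge 0$, solve
$$\Delta u^{k+1} - \lambda u^{k+1} = \left(\frac{b}{\sigma^4} - \lambda\right)u^k \ \text{ in } \omega, \qquad u^{k+1} = e^{-g/(2\sigma^2)} \ \text{ on } \partial\omega. \tag{9}$$

Proposition 2

(Monotone iteration). The sequence $\{u^k\}$ defined above is monotone decreasing and bounded below by a positive subsolution. Consequently, it converges pointwise to the unique solution $u$ of (7).

Proof Sketch.

By construction, $u^0 = \bar{u}$ is a supersolution. Suppose $u^k$ is a supersolution. Then, $w = u^k - u^{k+1}$ satisfies $\Delta w - \lambda w = \Delta u^k - \frac{b}{\sigma^4}u^k \le 0$ in $\omega$ and $w \ge 0$ on $\partial\omega$; so, by the comparison principle (Proposition 1), we obtain
$$u^{k+1} \le u^k \quad \text{in } \omega.$$
Moreover, $\Delta u^{k+1} - \frac{b}{\sigma^4}u^{k+1} = \left(\lambda - \frac{b}{\sigma^4}\right)(u^{k+1} - u^k) \le 0$, so $u^{k+1}$ is again a supersolution.

Thus, the sequence is monotone decreasing. Since $u^k$ is bounded below by the positive subsolution $\underline{u}$ constructed in Theorem 1, the limit
$$u(x) = \lim_{k \to \infty} u^k(x)$$

exists and is positive. Standard elliptic regularity then implies that $u$ is the unique classical solution of (7). □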

Interpretation: The monotone iteration provides a constructive view of the existence proof: starting from the constant supersolution, successive updates refine the approximation until convergence to the true solution. Computationally, this scheme can be implemented as a fixed-point iteration on a discretized domain, offering a simple and stable numerical method. From an operational perspective, the monotonicity reflects that successive policy refinements never increase the estimated cost, and the convergence guarantees that the limiting feedback control is both well-defined and economically interpretable.
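A discrete version of the monotone iteration of Section 3.3 can be sketched in one dimension. This is a minimal sketch under stated assumptions: a uniform grid on $(-L, L)$, a constant boundary value (constant $g$), and the hypothetical coefficient $c(x) = b(x)/\sigma^4 = 2x^2$, which vanishes at the center like a comfort-zone holding cost. Each iterate solves the tridiagonal system $(-D_h^2 + \lambda)\,u^{k+1} = (\lambda - c)\,u^k$ by the Thomas algorithm; because $-D_h^2 + \lambda$ is an M-matrix and $\lambda \ge c$, the iterates decrease monotonically, mirroring Proposition 2.

```python
import math


def thomas(lower, diag, upper, rhs):
    """Solve a tridiagonal system; lower[0] and upper[-1] are not used."""
    n = len(diag)
    cp, dp = [0.0] * n, [0.0] * n
    cp[0], dp[0] = upper[0] / diag[0], rhs[0] / diag[0]
    for i in range(1, n):
        m = diag[i] - lower[i] * cp[i - 1]
        cp[i] = upper[i] / m
        dp[i] = (rhs[i] - lower[i] * dp[i - 1]) / m
    x = [0.0] * n
    x[-1] = dp[-1]
    for i in range(n - 2, -1, -1):
        x[i] = dp[i] - cp[i] * x[i + 1]
    return x


def monotone_iteration(c, u_boundary, L, n, iters):
    """Iterates of a discrete monotone iteration on (-L, L) with n interior nodes.

    c(x) stands for b(x)/sigma^4 and u_boundary for exp(-g/(2 sigma^2)) (constant g).
    lam = max of c on the grid, so lam - c >= 0 and the iterates decrease.
    """
    h = 2 * L / (n + 1)
    xs = [-L + (i + 1) * h for i in range(n)]
    cv = [c(x) for x in xs]
    lam = max(cv)
    off = [-1.0 / h ** 2] * n
    diag = [2.0 / h ** 2 + lam] * n
    u = [u_boundary] * n                   # u^0: the constant supersolution
    history = [u]
    for _ in range(iters):
        # (-D2 + lam) u^{k+1} = (lam - c) u^k, Dirichlet data moved to the RHS
        rhs = [(lam - ck) * uk for ck, uk in zip(cv, u)]
        rhs[0] += u_boundary / h ** 2
        rhs[-1] += u_boundary / h ** 2
        u = thomas(off, diag, off, rhs)
        history.append(u)
    return xs, history


if __name__ == "__main__":
    xs, hist = monotone_iteration(lambda x: 2 * x * x, 0.7, 1.0, 99, 40)
    mid = len(xs) // 2
    print([round(v[mid], 6) for v in hist[:6]])   # non-increasing values at x = 0
```

The contraction factor of this splitting is roughly $\lambda/(\lambda + \lambda_1)$, with $\lambda_1$ the smallest eigenvalue of $-D_h^2$, so convergence is fast when $b/\sigma^4$ is small relative to the domain's first Dirichlet eigenvalue.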

3.4. Convexity and Concavity of the Transformed Value

Our second result provides sufficient conditions under which the value function in our production planning framework is concave. This property has direct operational relevance: it implies diminishing marginal cost savings as the state moves toward the low-cost region, leading to stable feedback controls and avoiding overreaction to random fluctuations. The proof adapts convexity and gradient-estimate techniques from [16,17,18] to our setting.

Theorem 2.

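Let $\omega \subset \mathbb{R}^N$ be a bounded convex domain (the production inventory space) with smooth boundary $\partial\omega$. Let $u \in C^2(\omega)$, $u > 0$, be a function that is convex on $\omega$. Suppose that for every $x \in \omega$ and every direction $\xi \in \mathbb{R}^N$, the one-dimensional slice
$$\varphi(t) = u(x + t\xi), \qquad t \in I = \{t \in \mathbb{R} : x + t\xi \in \omega\},$$

satisfies
$$\varphi(t)\,\varphi''(t) - \left(\varphi'(t)\right)^2 \ge 0, \qquad t \in I. \tag{10}$$

Then, $\ln u$ is convex on $\omega$, and hence, the value function
$$z = -2\sigma^2 \ln u$$

is concave on $\omega$.

Proof.

Fix $x \in \omega$ and $\xi \in \mathbb{R}^N$. Since $u > 0$ and $u$ is $C^2$, the slice $\varphi$ is positive and $C^2$ on $I$. Let $\psi(t) = \ln\varphi(t)$. Differentiating,
$$\psi'(t) = \frac{\varphi'(t)}{\varphi(t)}, \qquad \psi''(t) = \frac{\varphi(t)\,\varphi''(t) - \left(\varphi'(t)\right)^2}{\varphi(t)^2}.$$

Hypothesis (10) ensures $\psi''(t) \ge 0$ for all $t \in I$, i.e., $\ln u$ is convex along every line segment in $\omega$. By the one-dimensional characterization of convexity, $\ln u$ is convex on $\omega$. Multiplication by the negative constant $-2\sigma^2$ reverses the curvature; so, $z$ is concave on $\omega$, and equivalently, $D^2 z \le 0$ in $\omega$. □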
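Hypothesis (10) can be probed numerically along sample lines. A minimal sketch, assuming central differences and a user-chosen set of slice parameters (both are illustrative choices; a finite sample can refute (10) but cannot prove it). The product $\cosh x_1\cosh x_2$ is log-convex and passes, whereas the Gaussian $e^{-|x|^2}$, whose logarithm is concave, fails.

```python
import math


def slice_condition(u, x, xi, ts, h=1e-4, tol=1e-8):
    """Probe (10): phi*phi'' - (phi')^2 >= 0 for phi(t) = u(x + t xi).

    Derivatives are central differences at the sample parameters ts (all
    assumed to keep x + t xi inside the domain). Returns the smallest value
    found and whether it is >= -tol.
    """
    def phi(t):
        return u([xk + t * dk for xk, dk in zip(x, xi)])

    worst = math.inf
    for t in ts:
        f0, fp, fm = phi(t), phi(t + h), phi(t - h)
        d1 = (fp - fm) / (2 * h)
        d2 = (fp - 2 * f0 + fm) / h ** 2
        worst = min(worst, f0 * d2 - d1 ** 2)
    return worst, worst >= -tol


if __name__ == "__main__":
    x, xi = [0.1, -0.2], [0.6, 0.8]
    ts = [i / 10 for i in range(-5, 6)]
    print(slice_condition(lambda y: math.cosh(y[0]) * math.cosh(y[1]), x, xi, ts))
    print(slice_condition(lambda y: math.exp(-(y[0] ** 2 + y[1] ** 2)), x, xi, ts))
```

Applied to a computed solution $u$, the same probe indicates whether the concavity conclusion for $z$ can be expected on the sampled lines.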

Remark 1

(Concavity of the value function). By Theorem 2, $\ln u$ is convex under the slice condition (10); thus, $z = -2\sigma^2 \ln u$ is concave on $\omega$. This curvature property stabilizes the feedback $p^* = -\frac{1}{2}\nabla z$, particularly near $\partial\omega$, ensuring that production rates do not oscillate excessively when the system approaches its safety limits.

Remark 2

(Interpretation). The convexity of $\ln u$ corresponds to the structural stability of the multiplicative transform linking $u$ to the value function $z$. In operational terms, the concavity of $z$ means the optimal feedback control responds less aggressively as the state approaches desirable regions, aiding robustness in noisy environments. This reflects the economic intuition of diminishing returns: once the system is close to its target region, further adjustments yield smaller cost reductions; so, the optimal policy naturally smooths the production rates.

Remark 3

(Relation to prior techniques). The slice-based approach in Theorem 2 parallels methods from [16,17,18] used for convexity and gradient bounds in elliptic PDEs and aligns with the spirit of [19] for radially symmetric cases. These conditions also resonate with Brascamp–Lieb [20] and Korevaar [21], where maximum principles are applied to directional second derivatives. Our contribution is to adapt these analytic tools to a production-planning context with explicit geometric constraints, thereby linking PDE convexity theory to operational decision-making.

3.5. Boundary Behavior and Gradient Asymptotics

We quantify the size of $|\nabla z|$ near the boundary $\partial\omega$, which governs the intensity of the optimal control as exit approaches. From an operational perspective, this analysis explains how production rates react when the system approaches capacity or safety limits.

Proposition 3

Proof Sketch.

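We utilize boundary gradient techniques as in [16,17,18]. Recall
$$z = -2\sigma^2 \ln u, \qquad |\nabla z| = 2\sigma^2\,\frac{|\nabla u|}{u}.$$

The solution $u$ is smooth in a neighborhood of $\partial\omega$ and satisfies the Dirichlet condition
$$u = e^{-g/(2\sigma^2)} \quad \text{on } \partial\omega.$$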

Fix , and let . By smoothness and a first-order expansion along the outward normal direction,

By Hopf’s lemma, . Setting , we obtain

Therefore,

Taking yields

which proves (11). Equivalently,

consistent with the radial cases reported in [19,22]. □

Remark 4

(Policy implication). As the state approaches $\partial\omega$, the control magnitude $|p^*|$ grows toward the finite limit in (11). This means that corrective actions intensify near the boundary but remain bounded. In practice, this prevents both under-reaction (which would risk exit) and over-reaction (which would destabilize operations).
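Remark 4 can be illustrated with an explicit one-dimensional solution. Assuming the hypothetical constant holding cost $b = k^2\sigma^4$ on $\omega = (-L, L)$, any $u(x) \propto \cosh(kx)$ solves (3), and $|p^*(x)| = \sigma^2|u'(x)|/u(x) = \sigma^2 k\,|\tanh(kx)|$. This magnitude increases as $x \to L$ but stays below the finite boundary value $\sigma^2|u'(L)|/u(L) = \sigma^2 k\tanh(kL)$. The sketch below only tabulates this closed form; the parameter values are illustrative.

```python
import math


def feedback_magnitude(x, sigma, k):
    """|p*(x)| = sigma^2 |u'(x)| / u(x) for u(x) proportional to cosh(k x)."""
    return sigma ** 2 * k * abs(math.tanh(k * x))


sigma, k, L = 1.0, 2.0, 1.0
approach = [L - 10.0 ** (-m) for m in range(1, 7)]   # points moving toward x = L
mags = [feedback_magnitude(x, sigma, k) for x in approach]
limit = feedback_magnitude(L, sigma, k)             # sigma^2 |u'(L)| / u(L), finite

for x, m in zip(approach, mags):
    print(f"x = {x:.6f}  |p*| = {m:.6f}")
print(f"boundary value: {limit:.6f}")
```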

Remark 5

(Radial symmetry case). If b is radially symmetric, and ω is a ball, Theorem 1 implies u (and hence z) is radially symmetric; otherwise, rotation would produce a distinct solution, contradicting uniqueness. In this case, z is concave by symmetry, and the boundary-gradient behavior in (11) reduces to the simpler radial formulas in [19,22].

Remark 6

(Managerial interpretation). The boundary gradient asymptotics quantify how aggressively production controls respond as the system nears capacity or safety limits. The finite limit in (11) ensures that managers can anticipate a predictable, stable increase in corrective effort, rather than facing unbounded or erratic adjustments. This provides a clear operational rule: as inventories approach unsafe levels, the optimal policy prescribes decisive but stable corrections, justifying temporary halts when necessary.

4. Verification via Martingale Property

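In our production planning problem, the state $y(t)$ represents the production inventory level and the control $p(t)$ the production rate. The cost functional is
$$J(p; x) = \mathbb{E}\left[\int_0^{\tau}\left(|p(t)|^2 + b(y(t))\right)dt + g(y(\tau)) \,\middle|\, y(0) = x\right],$$

where $b$ is the inventory holding cost, $g$ is the terminal cost, and $\tau$ is the exit time in (1). We now verify that any smooth solution $U$ of the HJB equation indeed yields the optimal cost and characterizes the optimal feedback.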

4.1. Definition of the Process and Hamiltonian

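Let $U \in C^2(\omega) \cap C(\overline{\omega})$ satisfy
$$\frac{\sigma^2}{2}\Delta U - \frac{1}{4}|\nabla U|^2 + b(x) = 0 \ \text{ in } \omega, \qquad U = g \ \text{ on } \partial\omega. \tag{12}$$

Define the reduced Hamiltonian
$$H(q, p) = p \cdot q + |p|^2, \qquad q, p \in \mathbb{R}^N, \tag{13}$$

with minimizer
$$p^* = -\frac{1}{2}q, \qquad \min_{p} H(q, p) = -\frac{1}{4}|q|^2.$$

For an admissible control $p$, consider the stopped process (with $t \wedge \tau = \min\{t, \tau\}$)
$$M_t = U(y(t \wedge \tau)) + \int_0^{t \wedge \tau}\left(|p(s)|^2 + b(y(s))\right)ds.$$

4.2. Itô’s Formula and Verification

Applying Itô’s formula to $U(y(t))$ under $dy = p\,dt + \sigma\,dw$ gives
$$dU(y(t)) = \left(p(t) \cdot \nabla U(y(t)) + \frac{\sigma^2}{2}\Delta U(y(t))\right)dt + \sigma\,\nabla U(y(t)) \cdot dw(t).$$

Hence, for $t < \tau$, with all functions evaluated along $y(t)$,
$$dM_t = \left(H(\nabla U, p) + \frac{\sigma^2}{2}\Delta U + b\right)dt + \sigma\,\nabla U \cdot dw(t).$$

By definition of $p^*$, $H(\nabla U, p) \ge -\frac{1}{4}|\nabla U|^2$ for all $p$, with equality at $p = p^* = -\frac{1}{2}\nabla U$. Using (12), we have $\frac{\sigma^2}{2}\Delta U + b = \frac{1}{4}|\nabla U|^2$. Therefore,
$$dM_t = D_t\,dt + \sigma\,\nabla U \cdot dw(t), \qquad D_t = H(\nabla U, p) + \frac{1}{4}|\nabla U|^2 \ge 0,$$

with equality ($D_t = 0$) if $p = p^*$.

4.3. Martingale and Submartingale Properties

To make this rigorous, we stop the process at $\tau$ and localize with a sequence of bounded stopping times $\tau_n \uparrow \tau$. For each $n$, the stochastic integral
$$\int_0^{t \wedge \tau_n} \sigma\,\nabla U(y(s)) \cdot dw(s)$$

is a true martingale (square-integrable). Thus, for each $n$, $M_{t \wedge \tau_n}$ is a submartingale, and a martingale if $p = p^*$. Passing to the limit $n \to \infty$ and using monotone convergence yields the same property for $M_{t \wedge \tau}$.

- For any admissible $p$, $M_{t \wedge \tau}$ is a submartingale.

- For $p = p^*$, $M_{t \wedge \tau}$ is a martingale.

4.4. Verification Inequality

Taking expectations, and applying the optional sampling theorem at $t \wedge \tau$, then letting $t \to \infty$, gives for any $p$,
$$U(x) = M_0 \le \mathbb{E}\left[U(y(\tau)) + \int_0^{\tau}\left(|p(s)|^2 + b(y(s))\right)ds\right],$$

with equality when $p = p^*$. Using the boundary condition $U = g$ on $\partial\omega$, we obtain
$$U(x) \le \mathbb{E}\left[\int_0^{\tau}\left(|p(s)|^2 + b(y(s))\right)ds + g(y(\tau))\right],$$

and equality holds for $p = p^*$. Therefore, $U$ is the value function, and $p^*$ is optimal.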
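The pointwise minimization in (13) is completing the square: $p \cdot q + |p|^2 = |p + q/2|^2 - |q|^2/4$. A brute-force check over a grid of controls recovers $p^* = -q/2$ and the minimum value $-|q|^2/4$; the grid bounds, resolution, and the vector $q$ are arbitrary illustrative choices.

```python
import itertools


def hamiltonian(q, p):
    """Reduced Hamiltonian H(q, p) = p . q + |p|^2, as in (13)."""
    return sum(pk * qk for pk, qk in zip(p, q)) + sum(pk * pk for pk in p)


def grid_minimum(q, lo=-3.0, hi=3.0, n=121):
    """Brute-force minimum of H(q, .) over a uniform grid of controls in [lo, hi]^N."""
    step = (hi - lo) / (n - 1)
    pts = [lo + i * step for i in range(n)]
    return min((hamiltonian(q, p), p) for p in itertools.product(pts, repeat=len(q)))


if __name__ == "__main__":
    q = (1.0, -2.0)
    value, p = grid_minimum(q)
    print(value, p)                                         # grid minimizer
    print(-sum(qk * qk for qk in q) / 4, tuple(-qk / 2 for qk in q))   # closed form
```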

4.5. Optimal Feedback Control

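The minimizer of the Hamiltonian (13) is $p^* = -\frac{1}{2}\nabla U$. Since the verification step shows $U = z$, we can write the optimal feedback as
$$p^*(x) = -\frac{1}{2}\nabla z(x) = \sigma^2\,\frac{\nabla u(x)}{u(x)}, \qquad x \in \omega.$$

Under this feedback, the controlled dynamics $dy = p^*(y)\,dt + \sigma\,dw$ evolve optimally, and the cost-to-go satisfies
$$z(x) = \mathbb{E}\left[\int_0^{\tau}\left(|p^*(y(t))|^2 + b(y(t))\right)dt + g(y(\tau)) \,\middle|\, y(0) = x\right].$$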
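The verification identity can be probed by simulation in a one-dimensional example. Assume, for illustration only, $\omega = (-L, L)$, $x_0 = 0$, a constant holding cost $b = k^2\sigma^4$, and $g = 0$; then $u(x) = \cosh(kx)/\cosh(kL)$ solves (3)–(4), $z(x) = 2\sigma^2\ln\left(\cosh(kL)/\cosh(kx)\right)$, and the unconstrained feedback is $p^*(y) = \sigma^2 k\tanh(ky)$. In this minimal Monte Carlo sketch (Euler–Maruyama with an illustrative step size, seed, and path count), the average closed-loop cost should match $z(x)$ up to sampling error and a small upward bias from checking the exit only at grid times.

```python
import math
import random


def closed_loop_cost(x, sigma, k, L, dt, rng):
    """Cost of one Euler-Maruyama path under p*(y) = sigma^2 k tanh(k y).

    Holding cost b = k^2 sigma^4 (constant), exit when |y| >= L (rule (1)
    with x0 = 0 and dist = L), terminal cost g = 0.
    """
    b = k ** 2 * sigma ** 4
    y, cost = x, 0.0
    sqrt_dt = math.sqrt(dt)
    while abs(y) < L:
        p = sigma ** 2 * k * math.tanh(k * y)
        cost += (p * p + b) * dt
        y += p * dt + sigma * sqrt_dt * rng.gauss(0.0, 1.0)
    return cost


def value_exact(x, sigma, k, L):
    """z(x) = -2 sigma^2 ln u(x) with u(x) = cosh(k x) / cosh(k L), so z(+-L) = 0."""
    return 2 * sigma ** 2 * math.log(math.cosh(k * L) / math.cosh(k * x))


if __name__ == "__main__":
    rng = random.Random(2024)
    sigma, k, L, n_paths = 1.0, 1.0, 1.0, 1000
    est = sum(closed_loop_cost(0.0, sigma, k, L, 1e-3, rng) for _ in range(n_paths)) / n_paths
    print(f"Monte Carlo cost: {est:.3f}, z(0) = {value_exact(0.0, sigma, k, L):.3f}")
```

Replacing `p` inside the loop with any other admissible feedback should, up to sampling error, give an average cost no smaller than $z(x)$, which is the inequality part of the verification argument.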

Summary

5. Two Examples of Numerical Implementation by Finite Difference Method

We illustrate our framework with two finite-difference implementations of the transformed HJB problem (3)–(4). Discretization follows standard PDE techniques [23,24], in combination with stochastic simulation methods for controlled SDEs (cf. [25,26,27]). Each step is mathematically justified by a combination of numerical analysis, stochastic calculus, and optimal control theory. The computations reported here were assisted by Microsoft Copilot in Edge, streamlining code development and validation.

5.1. Example 1: 1D PDE on an Open Interval

We first solve the 1D transformed PDE (3)–(4) on an open interval $\omega$ via a central finite-difference scheme, as implemented in Appendix A (Example 1). The algorithm discretizes the interval, enforces Dirichlet conditions, and solves the resulting tridiagonal system for $u$.

5.1.1. Application Context

In production planning for a single-product manufacturing system, we fix the volatility $\sigma$, the terminal cost $g$, and the holding cost $b(x)$. These parameters have the following interpretation (Table 1):

Table 1.

Economic interpretation of parameters in Example 1.

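Solving for $u$ yields the value function
$$z(x) = -2\sigma^2 \ln u(x),$$

representing the minimal expected total cost from state $x$. Differentiating $z$ gives the optimal feedback control
$$p^*(x) = -\frac{1}{2}z'(x) = \sigma^2\,\frac{u'(x)}{u(x)},$$

which prescribes the production rate adjustment.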

5.1.2. Algorithmic Outline

- Input: N (grid points), $\sigma$, $g$, $b$.

- Discretize the domain $\omega$ with N points.

- Set the boundary condition $u = e^{-g/(2\sigma^2)}$ at both endpoints.

- Formulate and solve the tridiagonal system for interior points.

- Assemble the full u vector including boundaries; return $u$.

- Compute the value $z = -2\sigma^2 \ln u$.

- Approximate $z'$ by central differences; compute $p^* = -\frac{1}{2}z'$.

- Simulate inventory dynamics under $p^*$ using Euler–Maruyama with given initial state $y_0$, time horizon T, and step $\Delta t$; halt upon exit from $\omega$.
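The outline above, without the simulation step, can be sketched in plain Python. This is not the Appendix A.1 code; the function names, the one-sided differences at the endpoints, and the test problem are assumptions. With $\sigma = 1$, $b \equiv 1$, and $g = 0$ on $(-1, 1)$, the exact solution is $u(x) = \cosh x/\cosh 1$, which provides a check on the discretization.

```python
import math


def thomas(lower, diag, upper, rhs):
    """Solve a tridiagonal system; lower[0] and upper[-1] are not used."""
    n = len(diag)
    cp, dp = [0.0] * n, [0.0] * n
    cp[0], dp[0] = upper[0] / diag[0], rhs[0] / diag[0]
    for i in range(1, n):
        m = diag[i] - lower[i] * cp[i - 1]
        cp[i] = upper[i] / m
        dp[i] = (rhs[i] - lower[i] * dp[i - 1]) / m
    x = [0.0] * n
    x[-1] = dp[-1]
    for i in range(n - 2, -1, -1):
        x[i] = dp[i] - cp[i] * x[i + 1]
    return x


def solve_1d(b, sigma, g, x_left, x_right, n):
    """Central-difference solve of u'' = (b/sigma^4) u with u = exp(-g/(2 sigma^2)) at both ends.

    n counts all grid points, boundary nodes included. Returns the grid,
    u, z = -2 sigma^2 ln u, and p* = -z'/2.
    """
    h = (x_right - x_left) / (n - 1)
    xs = [x_left + i * h for i in range(n)]
    ub = math.exp(-g / (2 * sigma ** 2))
    m = n - 2                                    # number of interior unknowns
    off = [1.0 / h ** 2] * m
    diag = [-2.0 / h ** 2 - b(x) / sigma ** 4 for x in xs[1:-1]]
    rhs = [0.0] * m
    rhs[0] -= ub / h ** 2                        # known boundary values go to the RHS
    rhs[-1] -= ub / h ** 2
    u = [ub] + thomas(off, diag, off, rhs) + [ub]
    z = [-2 * sigma ** 2 * math.log(v) for v in u]
    # Central differences inside, one-sided differences at the two endpoints.
    dz = ([(z[1] - z[0]) / h]
          + [(z[i + 1] - z[i - 1]) / (2 * h) for i in range(1, n - 1)]
          + [(z[-1] - z[-2]) / h])
    p = [-0.5 * d for d in dz]
    return xs, u, z, p


if __name__ == "__main__":
    xs, u, z, p = solve_1d(lambda x: 1.0, sigma=1.0, g=0.0, x_left=-1.0, x_right=1.0, n=201)
    print(f"u(0) = {u[100]:.6f} (exact {1 / math.cosh(1.0):.6f}), z(0) = {z[100]:.6f}")
```

The Thomas algorithm is stable here because the matrix is diagonally dominant whenever $b \ge 0$, and it solves the system in $O(N)$ operations.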

5.1.3. Numerical Results

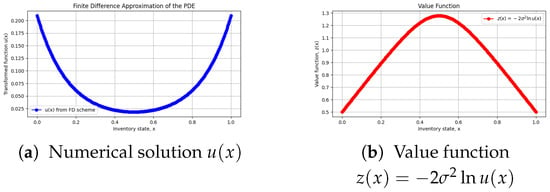

Figure 1(left) shows $u$ from the finite-difference solve, matching the boundary values exactly and exhibiting smoothness consistent with theory. Figure 1(right) shows the corresponding $z = -2\sigma^2 \ln u$, which is concave as predicted by Theorems 1 and 2.

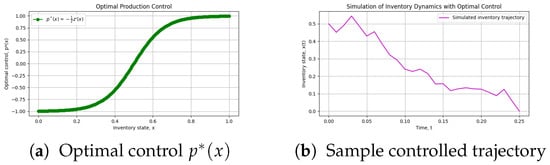

Figure 2(left) plots the optimal control $p^*$ obtained via numerical differentiation of $z$. Figure 2(right) shows a sample simulated inventory trajectory under $p^*$, remaining within the safe-operating interval until the stochastic exit time. These results confirm that the curvature and stability properties of $z$ translate into smooth, well-behaved control actions.

Figure 2.

Control and simulation. (a) Optimal feedback profile $p^*(x)$ computed from $z$. (b) Simulated state path under $p^*$, illustrating safe capacity management.

5.1.4. Discussion

The concavity of $z$ is evident in Figure 1b and matches our analytical findings. This property ensures that $p^*$ varies smoothly, avoiding excessive corrections and supporting robust performance under stochastic perturbations. From a managerial perspective, this means that production adjustments become less aggressive as the system approaches the low-cost region, while stronger but stable corrections are applied near the boundary. Together, these figures highlight the consistent interplay between analytical structure and computational outcomes, demonstrating that the finite-difference approach effectively implements the optimal control policy in a realistic production-planning scenario.

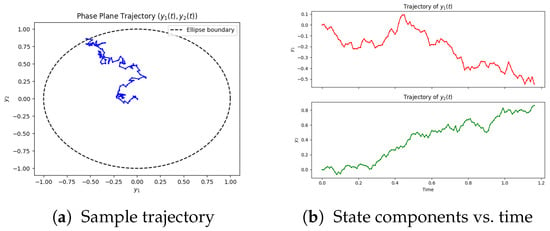

5.2. Example 2: 2D PDE on an Elliptical Domain

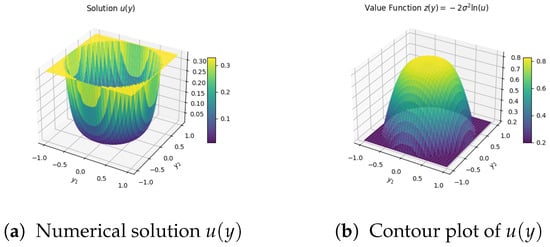

We next extend the finite-difference framework to a two-dimensional elliptical domain, as implemented in Appendix A (Example 2). The admissible region now models the safe operating envelope for two coupled production parameters that must be controlled simultaneously.

5.2.1. Application Context

In semiconductor fabrication, process safety depends on maintaining both chemical concentration and machine utilization within prescribed bounds.

Due to joint operational and quality constraints, the feasible set $\omega$ is elliptical rather than rectangular. For illustration, we take equal semi-axes, so that $\omega$ is a circle, to facilitate comparison with the exact radial solution in [22], but the method accommodates general ellipses. The volatility $\sigma$ models intrinsic process noise, and the terminal cost $g$ represents the economic impact of a forced shutdown.

In this setting, the computed value function $z$ quantifies the minimal expected cost (or risk) associated with deviations from the optimal operating point.

The derived control field
$$p^*(x) = -\frac{1}{2}\nabla z(x) = \sigma^2\,\frac{\nabla u(x)}{u(x)}$$

prescribes real-time adjustments to drive the system toward the center of $\omega$ while respecting the domain’s geometric constraints.

The economic meaning of these parameters is as follows (Table 2):

Table 2.

Interpretation of variables in the semiconductor case.

5.2.2. Algorithmic Outline

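- Input: ellipse semi-axes $r_1, r_2$, volatility $\sigma$, terminal cost $g$, grid spacing h, tolerance tol, maximum iterations max_iter.

- Output: meshgrid $(X_1, X_2)$, solution $u$, value function $z$, controls $(p_1^*, p_2^*)$.

- Discretize the bounding rectangle $[-r_1, r_1] \times [-r_2, r_2]$ and mark interior points satisfying $x_1^2/r_1^2 + x_2^2/r_2^2 < 1$.

- Impose the Dirichlet boundary condition $u = e^{-g/(2\sigma^2)}$ on $\partial\omega$.

- Initialize $u$ in the interior; define $c(x) = b(x)/\sigma^4$.

- Iterate Gauss–Seidel updates on interior points until convergence.

- Compute $z = -2\sigma^2 \ln u$.

- Approximate $\partial z/\partial x_1$ and $\partial z/\partial x_2$ by centered differences.

- Set $p_i^* = -\frac{1}{2}\,\partial z/\partial x_i$, $i = 1, 2$.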
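The steps above can be sketched in plain Python. This is an illustrative reimplementation, not the Appendix A.2 code: the staircase treatment of $\partial\omega$ (grid nodes outside the ellipse simply hold the Dirichlet value), the stopping rule on the largest update per sweep, and the test coefficient $b(x) = |x|^2$ are assumptions. Each Gauss–Seidel sweep applies the five-point update $u_{ij} \leftarrow (u_{i+1,j} + u_{i-1,j} + u_{i,j+1} + u_{i,j-1})/(4 + c_{ij}h^2)$ with $c = b/\sigma^4$.

```python
import math


def solve_ellipse(r1, r2, sigma, g, b, h, tol=1e-10, max_iter=20000):
    """Gauss-Seidel for u_x1x1 + u_x2x2 = (b / sigma^4) u on an ellipse.

    Nodes with x1^2/r1^2 + x2^2/r2^2 < 1 are unknowns; every other node of the
    bounding rectangle keeps the Dirichlet value exp(-g / (2 sigma^2)).
    """
    nx, ny = int(round(2 * r1 / h)) + 1, int(round(2 * r2 / h)) + 1
    xs = [-r1 + i * h for i in range(nx)]
    ys = [-r2 + j * h for j in range(ny)]
    ub = math.exp(-g / (2 * sigma ** 2))
    u = [[ub] * ny for _ in range(nx)]
    inside = [[(x / r1) ** 2 + (y / r2) ** 2 < 1 for y in ys] for x in xs]
    c = [[b(x, y) / sigma ** 4 for y in ys] for x in xs]
    for sweep in range(1, max_iter + 1):
        change = 0.0
        for i in range(1, nx - 1):
            for j in range(1, ny - 1):
                if inside[i][j]:
                    new = (u[i + 1][j] + u[i - 1][j] + u[i][j + 1] + u[i][j - 1]) / (4 + c[i][j] * h * h)
                    change = max(change, abs(new - u[i][j]))
                    u[i][j] = new
        if change < tol:
            break
    z = [[-2 * sigma ** 2 * math.log(v) for v in row] for row in u]
    return xs, ys, u, z, sweep


def controls(z, h):
    """p* = -grad z / 2 by centered differences at inner grid nodes (0 on the frame)."""
    nx, ny = len(z), len(z[0])
    p1 = [[0.0] * ny for _ in range(nx)]
    p2 = [[0.0] * ny for _ in range(nx)]
    for i in range(1, nx - 1):
        for j in range(1, ny - 1):
            p1[i][j] = -(z[i + 1][j] - z[i - 1][j]) / (4 * h)
            p2[i][j] = -(z[i][j + 1] - z[i][j - 1]) / (4 * h)
    return p1, p2


if __name__ == "__main__":
    xs, ys, u, z, sweeps = solve_ellipse(1.0, 1.0, sigma=1.0, g=0.5,
                                         b=lambda x1, x2: x1 ** 2 + x2 ** 2, h=0.1)
    p1, p2 = controls(z, 0.1)
    mid = len(xs) // 2
    print(f"{sweeps} sweeps, u(center) = {u[mid][mid]:.6f}, z(center) = {z[mid][mid]:.6f}")
```

The staircase boundary is first-order accurate near $\partial\omega$; a finer grid, or a boundary-fitted scheme, would be needed to resolve the boundary gradient behavior of Section 3.5 sharply.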

5.2.3. Numerical Results

Figure 3 shows the finite-difference solution $u$ for the elliptical domain. The color map reveals how the geometry influences $u$, and when $b$ is radially symmetric, the solution is correspondingly symmetric, in agreement with [19].

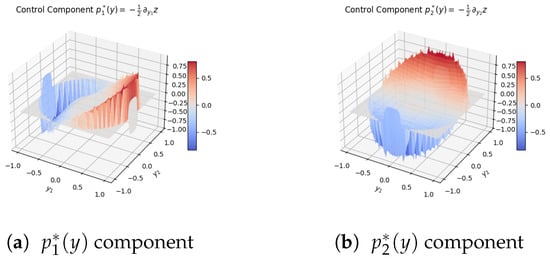

The optimal feedback controls $p^*$, computed from $z$, are shown in Figure 4 as vector fields. They point toward the domain center, with increased magnitude near the boundary, reflecting the stronger corrective actions required close to capacity limits.

Figure 4.

Optimal controls in 2D. Components of $p^*$ on the elliptical domain. Vectors direct the state toward the optimal region while respecting joint constraints.

Finally, Figure 5 shows a simulated inventory trajectory $y(t)$ under $p^*$ using the Euler–Maruyama method. The path remains within $\omega$ until the stopping time; as $y(t)$ approaches $\partial\omega$, $p^*$ increases in magnitude to avert exit.

Figure 5.

Closed-loop simulation. Evolution of $y(t)$ under optimal feedback. The trajectory stays in the safe region until exit; control intensifies near $\partial\omega$.

5.2.4. Discussion

These simulations confirm the smoothness, concavity, and optimality properties predicted in Section 3 and Section 4. The finite-difference method captures the influence of domain geometry on the value function and control laws, thus validating the practical applicability of our stochastic production planning framework to multi-parameter systems. From a managerial perspective, the results show the following:

- Joint safety constraints (ellipse) lead to different corrective patterns than independent per-variable bounds.

- Concavity of z ensures that feedback controls avoid oscillations, providing robustness against noise.

- The reference point $x_0$ acts as the nominal safe operating point; sensitivity analysis indicates that shifting $x_0$ changes the stopping time $\tau$ and total cost $J$, highlighting its importance in practice. Mathematically, the stopping condition corresponds to the inventory level $y$ exiting the admissible domain $\omega$. In our implementation, this condition is imposed primarily to ensure numerical tractability within the Python-based finite-difference scheme.

6. Conclusions and Future Directions

We presented a stochastic production planning framework that explicitly incorporates geometric capacity constraints via a smooth convex operating domain. By transforming the Hamilton–Jacobi–Bellman (HJB) equation, we established the existence, uniqueness, and strict positivity of the transformed solution $u$, the convexity of $\ln u$ under a slice condition, and the concavity of the value function $z = -2\sigma^2 \ln u$. We derived boundary gradient asymptotics that quantify the intensity of optimal corrective actions near capacity limits and verified optimality through a martingale argument, yielding the feedback control $p^* = -\frac{1}{2}\nabla z$. Finite-difference implementations in one and two dimensions demonstrated how the domain geometry shapes both the value function and the policy and showed how the feedback stabilizes inventory trajectories under stochastic fluctuations.

6.1. Managerial Interpretation

The concavity of $z$ implies diminishing marginal cost reductions near low-cost regions, making $p^*$ smoother and more robust to noise. The boundary gradient characterization explains why control intensity rises near capacity limits: sharper, yet stable, adjustments prevent risky excursions. In practice, these properties translate into implementable rules that proactively steer operations away from unsafe zones and justify temporary halts when excursions are likely. Compared to classical inventory models with separable constraints, our framework highlights how joint geometric constraints fundamentally alter both the shape of the value function and the structure of optimal policies.

6.2. Role of the Stopping Rule

The stopping rule (1) is central to both modeling realism and analytical tractability:

- Risk management. Exiting the safe-operating set triggers a halt, preventing overloads, quality failures, or regulatory breaches.

- Well-posedness and finite cost. With production halted at $\tau$, the cost $\mathbb{E}\left[\int_0^{\tau}\left(|p(t)|^2 + b(y(t))\right)dt\right]$ remains finite, enabling rigorous HJB analysis and reliable numerics.

- Geometry-aware control design. The control law accounts for joint capacity constraints encoded by $\omega$, avoiding operating regimes where the model or process becomes unreliable.

- Practical alignment. The exit policy formalizes standard operating procedures: intervene early, halt safely when necessary, and resume after corrective measures.

6.3. Limitations and Extensions

The current analysis assumes a smooth convex domain, isotropic noise, and quadratic effort costs. Demand dynamics, backlog/shortage penalties, and setup costs are not explicitly modeled, and the exit policy may be conservative relative to reflecting or soft-constraint alternatives. Several extensions can enhance both the realism and scope:

- Richer economics. Incorporate demand and backlog dynamics, service levels, and capacity adjustment or setup costs; consider risk-sensitive criteria (e.g., CVaR).

- General domains and constraints. Address nonconvex or time-varying feasible sets, soft constraints, reflecting boundaries, or state-dependent volatility and coupling.

- Partial information and learning. Study noisy state observations, parameter uncertainty, and data-driven estimation of ; integrate robust or adaptive control.

- High-dimensional numerics. Develop scalable solvers (FEM, multigrid, domain decomposition, policy iteration), and explore machine-learning PDE solvers (e.g., deep Galerkin methods, PINNs) for complex geometries.

- Model predictive control (MPC). Combine the HJB-based policy with receding-horizon optimization for disturbance rejection and constraint handling on plant-floor timescales.

6.4. Closing Statement

Overall, the proposed framework connects rigorous stochastic control with actionable production policies that respect joint capacity limits. The analytical guarantees support reliable computation and interpretation, while the numerical studies illustrate how geometry, uncertainty, and cost structure jointly determine optimal operational decisions. By bridging mathematical rigor with managerial insight, this work lays the foundation for future research on geometry-aware, risk-sensitive, and computationally scalable production planning.

Funding

This research received no external funding.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data supporting the reported results are available on request from the author.

Acknowledgments

I thank the reviewers for their support and for suggesting the reorganization of the paper, which significantly improved its clarity.

Conflicts of Interest

The author declares no conflicts of interest.

Appendix A

The Python scripts referenced in this appendix were developed with the support of Microsoft Copilot in Edge.

Appendix A.1. Python Code Example 1

The following Python code, available at https://github.com/coveidragos/Production-Bounded-Scalar/blob/main/Example1.py (accessed on 12 October 2025), implements the numerical procedure described in Example 1.

Appendix A.2. Python Code Example 2

The accompanying Python code generates visualization plots, including contour maps of the computed solution and vector fields of the optimal control, which serve to validate the numerical solution and highlight its practical relevance for managing complex manufacturing systems. The code can be consulted at https://github.com/coveidragos/Production-Bounded-Scalar/blob/main/Example2.py (accessed on 12 October 2025).
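The repository script remains the reference implementation; the following is only a minimal sketch of how such plots can be produced. It assumes that the value function V has already been computed on a rectangular grid and that the optimal control is p* = -∇V/2, which is the pointwise minimizer of |p|² + p·∇V for a quadratic effort cost with drift p (an assumption made here for illustration). The function names `feedback_field` and `plot_value_and_control` are hypothetical.

```python
import numpy as np


def feedback_field(x, y, V):
    """Gradient-based feedback p* = -grad(V) / 2 on a tensor grid.

    Assumes the quadratic-effort structure min_p [|p|^2 + p . grad V], whose
    pointwise minimiser is p* = -grad(V) / 2. V has shape (len(y), len(x)),
    i.e. the layout produced by np.meshgrid(x, y) with default indexing='xy'.
    """
    dV_dy, dV_dx = np.gradient(V, y, x)    # axis 0 varies with y, axis 1 with x
    return -0.5 * dV_dx, -0.5 * dV_dy


def plot_value_and_control(x, y, V, path="value_and_control.png", stride=4):
    """Filled contour map of V overlaid with the feedback field, saved to file."""
    import matplotlib
    matplotlib.use("Agg")                  # non-interactive backend for scripts
    import matplotlib.pyplot as plt

    X, Y = np.meshgrid(x, y)
    P1, P2 = feedback_field(x, y, V)
    fig, ax = plt.subplots(figsize=(6, 5))
    cs = ax.contourf(X, Y, V, levels=20, cmap="viridis")
    fig.colorbar(cs, ax=ax, label="V")
    s = (slice(None, None, stride), slice(None, None, stride))  # thin arrows
    ax.quiver(X[s], Y[s], P1[s], P2[s], color="white")
    ax.set_xlabel("x1")
    ax.set_ylabel("x2")
    ax.set_aspect("equal")
    fig.savefig(path, dpi=150, bbox_inches="tight")
    plt.close(fig)
```

Keeping the gradient computation separate from the plotting lets the feedback field be checked numerically without a graphics backend; matplotlib is imported only when a figure is actually drawn.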

References

- Zhang, X.; Li, Y.; Guo, J. The Role of Longevity-Indexed Bond in Risk Management of Aggregated Defined Benefit Pension Scheme. Risks 2024, 12, 49.

- Covei, D.-P. Image Restoration via Integration of Optimal Control Techniques and the Hamilton–Jacobi–Bellman Equation. Mathematics 2025, 13, 3137.

- Tang, C.; Li, X.; Wang, Q. Mean-Field Stochastic Linear Quadratic Optimal Control for Jump-Diffusion Systems with Hybrid Disturbances. Symmetry 2024, 16, 642.

- Gharbi, A.; Kenné, J.-P.; Takengny, A.L.K.; Assid, M. Joint Emission-Dependent Optimal Production and Preventive Maintenance Policies of a Deteriorating Manufacturing System. Sustainability 2024, 16, 6146.

- Kenné, J.-P.; Gharbi, A.; Takengny, A.L.K.; Assid, M. Optimal Control Policy of Unreliable Production Systems Generating Greenhouse Gas Emission. Sustainability 2024, 16, 5760.

- Kováts, P.; Skapinyecz, R. A Combined Capacity Planning and Simulation Approach for the Optimization of AGV Systems in Complex Production Logistics Environments. Logistics 2024, 8, 121.

- Cadenillas, A.; Lakner, P.; Pinedo, M. Optimal control of a mean-reverting inventory. Oper. Res. 2010, 58, 1697–1710.

- Cadenillas, A.; Lakner, P.; Pinedo, M. Optimal production management when demand depends on the business cycle. Oper. Res. 2013, 61, 1046–1062.

- Gharbi, A.; Kenne, J.P. Optimal production control problem in stochastic multiple-product multiple-machine manufacturing systems. IIE Trans. 2003, 35, 941–952.

- Sethi, S.P.; Thompson, G.L. Applied Optimal Control: Applications to Management Science; Nijhoff: Boston, MA, USA, 1981.

- Thompson, G.L.; Sethi, S.P.; Teng, J. Strong planning and forecast horizons for a model with simultaneous price and production decisions. Eur. J. Oper. Res. 1984, 16, 378–388.

- Alvarez, O. A quasilinear elliptic equation in ℝ^N. Proc. R. Soc. Edinb. Sect. A 1996, 126, 911–921.

- Lasry, J.M.; Lions, P.L. Nonlinear elliptic equations with singular boundary conditions and stochastic control with state constraints. Math. Ann. 1989, 283, 583–630.

- Gilbarg, D.; Trudinger, N.S. Elliptic Partial Differential Equations of Second Order; Springer: Berlin/Heidelberg, Germany; New York, NY, USA, 1977.

- Amann, H. Fixed Point Equations and Nonlinear Eigenvalue Problems in Ordered Banach Spaces. SIAM Rev. 1976, 18, 620–709.

- Lieberman, G.M. Asymptotic Behavior and Uniqueness of Blow-up Solutions of Quasilinear Elliptic Equations. J. Anal. Math. 2011, 115, 213–249.

- Lieberman, G.M. Gradient estimates for singular fully nonlinear elliptic equations. Nonlinear Anal. 2015, 119, 382–397.

- Lieberman, G.M. Gradient estimates for elliptic oblique derivative problems via the maximum principle. Adv. Differ. Equ. 2020, 25, 709–754.

- Covei, D.-P. Stochastic Production Planning: Optimal Control and Analytical Insights. arXiv 2025, arXiv:2505.12341.

- Brascamp, H.J.; Lieb, E.H. On extensions of the Brunn–Minkowski and Prékopa–Leindler theorems, including inequalities for log concave functions, and with an application to the diffusion equation. J. Funct. Anal. 1976, 22, 366–389.

- Korevaar, N. Convex solutions of nonlinear elliptic and parabolic boundary value problems. Indiana Univ. Math. J. 1987, 36, 687–704.

- Canepa, E.C.; Covei, D.-P.; Pirvu, T.A. A stochastic production planning problem. Fixed Point Theory 2022, 23, 179–198.

- Kloeden, P.E.; Platen, E. Numerical Solutions of Stochastic Differential Equations; Applications of Mathematics; Springer: New York, NY, USA, 1992; Volume 23.

- Talay, D.; Tubaro, L. Expansion of the Global Error for Numerical Schemes Solving Stochastic Differential Equations. Stoch. Anal. Appl. 1990, 8, 483–509.

- Bayer, C. Discretization of SDEs: Euler Methods and Beyond. 2006. Available online: https://wias-berlin.de/people/bayerc/files/euler_talk_handout.pdf (accessed on 19 August 2025).

- Bayram, M.; Partal, T.; Buyukoz, G.O. Numerical methods for simulation of stochastic differential equations. Adv. Contin. Discret. Model. 2018, 2018, 17.

- Holmes-Cerfon, M. Applied Stochastic Analysis; American Mathematical Society, Courant Institute of Mathematical Sciences at New York University: New York, NY, USA, 2024; Volume 33.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).